The right preparation can turn an interview into an opportunity to showcase your expertise. This guide to Security Analytics and Visualization interview questions is your ultimate resource, providing key insights and tips to help you ace your responses and stand out as a top candidate.

Questions Asked in Security Analytics and Visualization Interviews

Q 1. Explain the difference between SIEM and SOAR.

SIEM (Security Information and Event Management) and SOAR (Security Orchestration, Automation, and Response) are both crucial components of a robust cybersecurity infrastructure, but they serve distinct purposes. Think of SIEM as the detective and SOAR as the police officer.

SIEM collects and analyzes security logs from various sources (servers, network devices, applications) to detect security incidents. It aggregates this data, correlates events, and generates alerts based on predefined rules or machine learning models. Essentially, it identifies what happened and when.

SOAR takes over once a threat is detected. It automates incident response processes, orchestrates actions across different security tools, and facilitates collaboration among security teams. It focuses on how to respond to the identified threat efficiently and effectively. For example, SOAR might automatically quarantine a compromised machine, block malicious IP addresses, and notify the relevant team.

In short, SIEM is about detection and monitoring, while SOAR is about automated response and remediation. They often work together, with SIEM providing the alerts that trigger automated actions in SOAR.
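The handoff between the two can be sketched in a few lines of Python. This is a toy illustration, not how any particular product works: the event fields, the `brute_force_suspected` rule name, and the action strings are all assumptions made up for the example.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Alert:
    rule: str
    host: str
    source_ip: str


def siem_detect(events, threshold=5):
    """SIEM role: raise one alert per (source IP, host) pair reaching the threshold."""
    failures = Counter()
    alerts = []
    for event in events:
        if event["action"] != "login_failed":
            continue
        key = (event["source_ip"], event["host"])
        failures[key] += 1
        # Fire exactly once, at the moment the threshold is reached.
        if failures[key] == threshold:
            alerts.append(Alert("brute_force_suspected", event["host"], event["source_ip"]))
    return alerts


def soar_respond(alert):
    """SOAR role: return the ordered playbook steps for an alert.

    Steps are plain strings here; a real playbook would call firewall,
    EDR, and ticketing APIs instead.
    """
    return [
        f"block_ip:{alert.source_ip}",
        f"quarantine_host:{alert.host}",
        "notify:soc-oncall",
    ]


# Hypothetical sample data: five failed logins from one documentation-range IP.
events = [{"action": "login_failed", "source_ip": "203.0.113.7", "host": "web01"}] * 5
for alert in siem_detect(events):
    print(soar_respond(alert))
```

The point of the split is that detection logic and response logic can evolve independently: the SIEM side only decides whether something happened, and the SOAR side only decides what to do about it.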

Q 2. Describe your experience with various data visualization tools (e.g., Tableau, Splunk, Qlik Sense).

I have extensive experience with several leading data visualization tools, including Tableau, Splunk, and Qlik Sense. My experience isn’t just about creating pretty dashboards; it’s about leveraging the strengths of each tool to solve specific security analytics challenges.

Tableau excels at creating interactive and visually appealing dashboards for communicating security insights to both technical and non-technical audiences. I’ve used it to present key metrics like threat volume, attack surface, and remediation times to executive stakeholders, enabling data-driven decision-making.

Splunk, with its powerful log management capabilities, is my go-to tool for deep-dive analysis of security logs. I’ve used its Search Processing Language (SPL) extensively to develop custom dashboards and alerts for detecting advanced persistent threats (APTs) and insider threats. For instance, I developed an SPL query to flag login attempts from unusual locations.

Qlik Sense is particularly useful for its associative data analysis capabilities. Its ability to connect disparate data sources seamlessly has helped me in correlating security events across different systems and identifying previously unseen patterns. I used Qlik Sense to map network traffic patterns and pinpoint vulnerabilities.

My proficiency in these tools allows me to choose the best tool for the job, maximizing effectiveness and ensuring that security insights are communicated clearly and efficiently.

Q 3. How do you identify and prioritize security alerts in a high-volume environment?

Prioritizing security alerts in a high-volume environment is crucial to avoid alert fatigue and ensure that critical threats are addressed promptly. My approach involves a multi-layered strategy combining automated filtering, rule-based scoring, and machine learning.

- Automated Filtering: I leverage SIEM capabilities to filter out known false positives, such as alerts generated by expected system events. This significantly reduces the noise.

- Rule-Based Scoring: I define rules that assign scores to alerts based on factors like severity, source, and affected assets. High-scoring alerts, representing more critical threats, are prioritized.

- Machine Learning: I employ machine learning algorithms to identify patterns in alerts and predict the likelihood of a true positive. This helps to automatically prioritize alerts with a high probability of being a genuine threat.

- Contextual Analysis: I enrich alerts with contextual information from other sources (e.g., threat intelligence feeds) to refine the prioritization process. For example, an alert related to a known malware hash would receive a higher priority.

This layered approach allows me to focus on the most critical threats while minimizing the impact of irrelevant alerts, ensuring efficient incident response.
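As a rough sketch of the filtering, scoring, and contextual steps above (the machine learning step is omitted), the following Python assigns each alert a score and sorts the queue. The point values, asset names, rule names, and the placeholder hash are hypothetical choices for illustration, not recommended settings.

```python
# Hypothetical scoring weights; a real deployment would tune these.
SEVERITY_POINTS = {"low": 1, "medium": 3, "high": 6, "critical": 10}
CRITICAL_ASSETS = {"domain-controller", "payroll-db"}
# Placeholder indicator standing in for a threat intelligence feed.
KNOWN_BAD_HASHES = {"0123456789abcdef0123456789abcdef"}


def score_alert(alert):
    """Rule-based score: severity, plus bonuses for asset value and intel matches."""
    score = SEVERITY_POINTS.get(alert["severity"], 0)
    if alert.get("asset") in CRITICAL_ASSETS:
        score += 5
    if alert.get("file_hash") in KNOWN_BAD_HASHES:
        score += 8  # contextual enrichment: known malware hash
    return score


def prioritize(alerts, suppressed_rules=()):
    """Drop alerts from rules known to be noisy, then sort highest score first."""
    kept = [a for a in alerts if a["rule"] not in suppressed_rules]
    return sorted(kept, key=score_alert, reverse=True)


alerts = [
    {"rule": "backup_file_access", "severity": "high", "asset": "fileserver"},
    {"rule": "port_scan", "severity": "high", "asset": "workstation-17"},
    {"rule": "malware_hash", "severity": "medium", "asset": "payroll-db",
     "file_hash": "0123456789abcdef0123456789abcdef"},
]

for alert in prioritize(alerts, suppressed_rules={"backup_file_access"}):
    print(score_alert(alert), alert["rule"])
```

Note that the medium-severity malware alert outranks the high-severity port scan once asset value and the intelligence match are counted, which is the effect the contextual step is meant to produce.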

Q 4. What are the key performance indicators (KPIs) you track in security analytics?

The KPIs I track in security analytics depend on the specific organization and its objectives, but some key metrics consistently provide valuable insights. These include:

- Mean Time To Detect (MTTD): How long it takes to identify a security incident.

- Mean Time To Respond (MTTR): How long it takes to resolve a security incident.

- Number of Security Incidents: Tracks the overall frequency of security events.

- False Positive Rate: The share of alerts that turn out not to be genuine incidents, which indicates how accurate the alerting rules are.

- Security Alert Volume: Monitors the overall number of alerts generated.

- Vulnerability Remediation Rate: Measures the speed at which vulnerabilities are addressed.

- Percentage of Critical Vulnerabilities Remediated: Focuses on the most severe vulnerabilities.

By regularly monitoring these KPIs, I can identify trends, measure the effectiveness of security controls, and proactively improve the overall security posture.
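To make the first few metrics concrete, here is a small sketch that computes them from incident records. The field names and sample timestamps are invented, MTTR is measured from detection to resolution (one common convention, assumed here), and the false positive rate is taken as false positives divided by total alerts.

```python
from datetime import datetime
from statistics import mean

# Hypothetical incident records with ISO 8601 timestamps.
incidents = [
    {"occurred": "2024-03-01T08:00:00", "detected": "2024-03-01T10:00:00",
     "resolved": "2024-03-01T16:00:00"},
    {"occurred": "2024-03-05T12:00:00", "detected": "2024-03-05T13:00:00",
     "resolved": "2024-03-05T15:00:00"},
]


def hours_between(start, end):
    """Elapsed hours between two ISO 8601 timestamps."""
    delta = datetime.fromisoformat(end) - datetime.fromisoformat(start)
    return delta.total_seconds() / 3600


def mttd_hours(records):
    """Mean time from occurrence to detection."""
    return mean(hours_between(r["occurred"], r["detected"]) for r in records)


def mttr_hours(records):
    """Mean time from detection to resolution."""
    return mean(hours_between(r["detected"], r["resolved"]) for r in records)


def false_positive_rate(total_alerts, false_positives):
    """Fraction of alerts that were closed as false positives."""
    return false_positives / total_alerts if total_alerts else 0.0


print(f"MTTD: {mttd_hours(incidents):.1f} h")
print(f"MTTR: {mttr_hours(incidents):.1f} h")
print(f"False positive rate: {false_positive_rate(200, 150):.0%}")
```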

Q 5. Explain your experience with log aggregation and analysis.

Log aggregation and analysis are foundational to security analytics. My experience encompasses collecting logs from diverse sources using various methods, processing them efficiently, and extracting meaningful insights.

I’ve worked with centralized logging systems like Elasticsearch, Logstash, and Kibana (ELK stack) and Splunk to aggregate logs from various sources – servers, network devices, databases, and applications. This involves configuring agents on each source to forward logs to the central repository, ensuring proper parsing and formatting for efficient analysis.

Data analysis involves using query languages (like SPL in Splunk, or KQL and the Elasticsearch Query DSL in the ELK stack) to filter, search, and correlate log events. I regularly use regular expressions and other advanced techniques to identify patterns and anomalies that may indicate malicious activity. For example, I used log analysis to detect a data exfiltration attempt based on unusual outbound network connections.

My focus extends beyond simply analyzing individual logs; I integrate log data with other security information to provide a comprehensive picture of the security landscape. This enables a more holistic view of security events and facilitates accurate threat detection.

Q 6. Describe your experience with threat intelligence platforms and how you integrate them into your workflow.

Threat intelligence platforms (TIPs) are invaluable in proactive threat detection and response. I have experience integrating several TIPs into my workflow to enrich security data, identify emerging threats, and improve the accuracy of our security alerts.

My process typically involves subscribing to threat feeds from reputable sources, integrating them into our SIEM or SOAR platform, and using the threat intelligence data to enhance existing security rules and develop new ones. For instance, I’ve used threat intelligence feeds to identify malicious IP addresses, domains, and file hashes to improve the detection of phishing attempts and malware infections.

Furthermore, I use TIPs to gain situational awareness about emerging threats and vulnerabilities affecting our organization. This proactive approach allows us to prioritize remediation efforts and enhance our overall security posture. I actively investigate alerts flagged by the TIP, correlating them with our internal security logs to determine their relevance to our environment.

The integration of TIPs into my workflow is an ongoing process of refinement. I continually monitor the effectiveness of the integrated intelligence and adjust the integration strategy to optimize threat detection and response.
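A minimal sketch of the matching step, assuming the feed is a plain-text list of IP addresses and CIDR ranges (real feeds often arrive as STIX/TAXII or vendor-specific formats). The feed contents, the `dest_ip` field, and the hostnames are hypothetical; the addresses come from the reserved documentation ranges.

```python
import ipaddress


def load_indicators(lines):
    """Split a text feed into exact IPs and CIDR networks, skipping comments."""
    ips, networks = set(), []
    for line in lines:
        entry = line.strip()
        if not entry or entry.startswith("#"):
            continue
        if "/" in entry:
            networks.append(ipaddress.ip_network(entry))
        else:
            ips.add(ipaddress.ip_address(entry))
    return ips, networks


def match_events(events, ips, networks):
    """Return the events whose destination IP appears in the indicator set."""
    hits = []
    for event in events:
        addr = ipaddress.ip_address(event["dest_ip"])
        if addr in ips or any(addr in net for net in networks):
            hits.append(event)
    return hits


feed = ["# sample feed", "198.51.100.23", "203.0.113.0/24"]
events = [
    {"host": "ws-04", "dest_ip": "203.0.113.9"},
    {"host": "ws-11", "dest_ip": "192.0.2.1"},
    {"host": "db-02", "dest_ip": "198.51.100.23"},
]

ips, networks = load_indicators(feed)
for hit in match_events(events, ips, networks):
    print(f"{hit['host']} contacted flagged address {hit['dest_ip']}")
```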

Q 7. How do you correlate security events from different sources to identify threats?

Correlating security events from diverse sources is crucial for effective threat detection. This involves using a combination of technologies and techniques to identify relationships between seemingly unrelated events that together paint a clearer picture of a threat.

My approach typically involves using a SIEM or SOAR platform to integrate data from multiple sources (e.g., firewalls, intrusion detection systems, endpoint detection and response tools). These platforms provide the capability to correlate events based on various attributes like timestamps, IP addresses, user accounts, and process IDs.

For example, I might correlate repeated failed login attempts from an unusual location with subsequent suspicious network activity from the same IP address, potentially indicating a brute-force attack followed by data exfiltration. Neither event is conclusive on its own, but together they provide much stronger evidence of an attack.

I also use advanced techniques such as machine learning and graph databases to identify complex relationships between events, enhancing the detection of sophisticated attacks such as APT campaigns. Graph databases help visualize relationships between entities and reveal subtle connections missed by traditional rule-based correlation methods. The combination of these approaches is crucial to uncover advanced threats and ensure accurate incident response.
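The failed-login example can be expressed as a simple time-window join on source IP. This is only a sketch of rule-based correlation, with invented field names, a 30-minute window, and a three-failure minimum chosen arbitrarily; a SIEM would express the same idea in its own rule language.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def correlate(auth_events, network_events, window=timedelta(minutes=30), min_failures=3):
    """Pair suspicious network events with recent failed logins from the same IP."""
    failures_by_ip = defaultdict(list)
    for event in auth_events:
        if event["outcome"] == "failure":
            failures_by_ip[event["src_ip"]].append(event["time"])

    findings = []
    for net in network_events:
        # Count failures that happened within `window` before the network event.
        recent = [
            t for t in failures_by_ip.get(net["src_ip"], [])
            if timedelta(0) <= net["time"] - t <= window
        ]
        if len(recent) >= min_failures:
            findings.append({
                "src_ip": net["src_ip"],
                "failed_logins": len(recent),
                "network_event": net["description"],
            })
    return findings


start = datetime(2024, 3, 1, 2, 0)
auth_events = [
    {"src_ip": "203.0.113.7", "outcome": "failure", "time": start + timedelta(minutes=m)}
    for m in range(4)
]
network_events = [
    {"src_ip": "203.0.113.7", "time": start + timedelta(minutes=10),
     "description": "large outbound transfer"},
    {"src_ip": "192.0.2.50", "time": start + timedelta(minutes=12),
     "description": "large outbound transfer"},
]

print(correlate(auth_events, network_events))
```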

Q 8. Explain your understanding of different types of security logs (e.g., system logs, application logs, network logs).

Security logs are the digital bread crumbs left behind by systems and applications as they operate. Different types offer various perspectives on security events.

- System Logs: These logs record events happening at the operating system level. Think of them as a diary of the OS – login attempts, file access, system errors, and hardware changes are all documented. For example, a Windows event log might record a user’s failed login attempt, providing details like the username and the timestamp. A Linux system log (syslog) might record a disk space warning or a service restart.

- Application Logs: These logs track events specific to individual applications. They provide granular details about application performance and security events. A web server’s log might record requests from different IP addresses, access times, and any errors encountered. A database application log might track successful and failed database queries, along with any security-related events like authentication failures.

- Network Logs: These logs capture network traffic, recording details like source and destination IP addresses, port numbers, protocols, and the amount of data transferred. Firewalls, intrusion detection/prevention systems (IDS/IPS), and routers generate these logs, offering a window into network activity. For example, a firewall log might record a blocked connection attempt from a known malicious IP address.

Analyzing these different log types in conjunction provides a holistic view of security posture and facilitates more effective threat detection and response.
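Before logs of different types can be analyzed together, each line has to be parsed into fields. As one example, the sketch below parses a web server access line, assuming the widely used Common Log Format; the sample line is invented and other servers or custom formats would need a different pattern.

```python
import re

# Pattern for Common Log Format: host ident user [time] "request" status size
ACCESS_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) (?P<size>\S+)'
)


def parse_access_line(line):
    """Return a dict of fields, or None if the line does not match the format."""
    match = ACCESS_LINE.match(line)
    if match is None:
        return None
    record = match.groupdict()
    record["status"] = int(record["status"])
    return record


sample = '203.0.113.5 - - [10/Oct/2023:13:55:36 +0000] "GET /admin HTTP/1.1" 403 512'
record = parse_access_line(sample)
print(record["ip"], record["method"], record["path"], record["status"])
```

Returning `None` for unparseable lines, rather than raising, lets a pipeline count and inspect rejects separately instead of stopping on the first malformed entry.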

Q 9. How do you handle false positives in security alerts?

False positives in security alerts are like a faulty smoke alarm – they signal a potential threat when none exists. Handling them effectively is crucial to avoid alert fatigue and maintain efficient security operations.

My approach is multi-faceted:

- Fine-tuning Alert Rules: This is the first line of defense. By adjusting thresholds and refining the criteria that trigger alerts, we can reduce the number of false positives. For example, if an alert is triggered by a specific user logging in from an unusual location, we can adjust the alert to only trigger after multiple instances from that location within a short period.

- Employing Machine Learning: Modern SIEMs leverage machine learning algorithms to analyze historical data and learn what constitutes normal behavior. This allows them to distinguish between genuine threats and anomalies that simply fall outside the norm but are not necessarily malicious.

- Contextual Analysis: This is where human intelligence comes in. Carefully examining the circumstances surrounding an alert is crucial. For example, an alert for unusual file access might be a false positive if it’s related to a scheduled backup.

- Incident Response Playbook: A well-defined playbook outlines how to investigate and triage alerts, clearly defining steps for handling various scenarios, including assessing whether alerts are false positives.

- Regular Review and Optimization: The efficacy of alert rules must be continuously reviewed and adjusted based on ongoing analysis and feedback. This ensures that alerts remain relevant and accurate.

Essentially, it’s a continuous feedback loop of refinement, learning, and adaptation to minimize disruptions from irrelevant alerts.
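The threshold tuning described in the first bullet, alerting only after several occurrences within a short period, might look like this in Python. The class name, the threshold of three, and the ten-minute window are illustrative assumptions, and the sketch expects events to arrive in time order.

```python
from collections import deque
from datetime import datetime, timedelta


class ThresholdRule:
    """Fire only when `threshold` events for the same key fall inside `window`."""

    def __init__(self, threshold, window):
        self.threshold = threshold
        self.window = window
        self._seen = {}  # key -> deque of recent event times

    def observe(self, key, when):
        """Record an event and return True if the rule should alert."""
        times = self._seen.setdefault(key, deque())
        times.append(when)
        # Discard events that have aged out of the sliding window.
        while when - times[0] > self.window:
            times.popleft()
        return len(times) >= self.threshold


rule = ThresholdRule(threshold=3, window=timedelta(minutes=10))
t0 = datetime(2024, 3, 1, 9, 0)
key = ("alice", "unusual-location")
for minutes in (0, 2, 4, 30):
    fired = rule.observe(key, t0 + timedelta(minutes=minutes))
    print(minutes, fired)
```

A single login from a new location no longer pages anyone, while a cluster of them still does; the trade-off is a short delay before the first alert.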

Q 10. What are some common security threats you’ve encountered and how did you address them?

I’ve dealt with a range of security threats, from common attacks like phishing and denial-of-service (DoS) attempts to more sophisticated threats like ransomware and insider attacks.

- Phishing Attacks: I implemented comprehensive security awareness training programs for employees, emphasizing techniques for identifying phishing emails and the importance of secure password management. We also deployed email filtering and anti-spam solutions.

- Denial-of-Service (DoS) Attacks: Mitigation involved strengthening our network infrastructure with DDoS mitigation solutions and implementing robust rate-limiting and intrusion detection systems.

- Ransomware Attacks: This involved implementing a multi-layered defense, including regular data backups, strong endpoint protection, and rigorous patch management to minimize vulnerabilities. Incident response planning included protocols for containing the infection and restoring data from backups.

- Insider Threats: Addressing insider threats requires a more nuanced approach, typically involving access control measures (principle of least privilege), regular audits of user activity, and detailed monitoring of sensitive data access.

Each situation requires a tailored response, combining technical solutions with robust security policies and user education. My focus is always on prevention, detection, and effective remediation.

Q 11. Describe your experience with security information and event management (SIEM) systems.

My experience with SIEM systems is extensive. I’ve worked with several leading platforms, including Splunk, QRadar, and LogRhythm. My expertise spans the entire lifecycle, from initial deployment and configuration to ongoing maintenance and optimization.

My responsibilities have included:

- Data Ingestion and Normalization: Configuring the SIEM to collect logs from diverse sources, standardizing their format for efficient analysis.

- Rule Creation and Management: Developing and fine-tuning security rules to detect anomalies and potential threats. This includes correlating events from multiple sources to identify complex attacks.

- Alerting and Reporting: Setting up effective alerting mechanisms to notify security personnel of critical events. Generating reports to track security trends and assess the effectiveness of security controls.

- Incident Response: Utilizing the SIEM to investigate security incidents, analyze event timelines, and identify root causes. This involves employing the SIEM’s forensic capabilities to reconstruct attack paths.

- Performance Tuning and Optimization: Ensuring that the SIEM operates efficiently and effectively, optimizing data processing and reducing resource consumption.

SIEMs are the backbone of modern security operations, and I have a deep understanding of how to leverage their capabilities to enhance an organization’s security posture.

Q 12. How do you create effective visualizations to communicate security insights to technical and non-technical audiences?

Effective visualization is key to communicating security insights, especially when conveying complex information to diverse audiences. My approach depends on the audience and the specific insight being communicated.

For technical audiences, I utilize detailed dashboards with rich visualizations like:

- Time-series graphs: Show trends in security events over time, highlighting spikes in suspicious activity.

- Network diagrams: Illustrate network connections, indicating potential attack paths or compromised systems.

- Heatmaps: Visualize data density, highlighting regions with concentrated security events.

For non-technical audiences, the key is simplicity and clarity:

- High-level summaries: Present key findings in a concise and easily digestible format, avoiding technical jargon.

- Visual metaphors: Use analogies and relatable images to help non-technical audiences grasp complex concepts.

- Data storytelling: Present security insights within a narrative framework, making it easier for the audience to connect with the information.

In both cases, clear labels, consistent color schemes, and effective use of charts and graphs are essential. The goal is to make the data visually compelling and easy to understand, regardless of the audience’s technical expertise. Tools like Tableau and Power BI are extremely valuable in creating these visualizations.

Q 13. Explain your experience with scripting languages (e.g., Python, PowerShell) for security analytics.

Scripting languages are indispensable for automating security tasks and performing in-depth analysis. My experience primarily revolves around Python and PowerShell.

Python: I use Python extensively for:

- Log parsing and analysis: Python libraries like re (regular expressions) and pandas facilitate efficient parsing and manipulation of log files.

- Security automation: Automating tasks such as vulnerability scanning, malware analysis, and security report generation.

- Threat intelligence integration: Integrating threat intelligence feeds into security workflows to identify and prioritize threats.

Example: A simple Python script to count failed login attempts from a log file:

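    import re

    # Read the whole authentication log into memory.
    with open('auth.log', 'r') as f:
        log_data = f.read()

    # Count every occurrence of the "Failed password for" message.
    failed_logins = len(re.findall(r'Failed password for', log_data))
    print(f'Number of failed login attempts: {failed_logins}')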

PowerShell: I leverage PowerShell primarily for:

- Windows system administration and security: Managing security configurations, auditing system events, and deploying security updates.

- Active Directory security: Managing user accounts, groups, and permissions within Active Directory.

My proficiency in these languages empowers me to perform complex analyses, automate repetitive tasks, and enhance the overall efficiency of security operations.

Q 14. How do you use security analytics to identify and mitigate insider threats?

Identifying and mitigating insider threats requires a multi-layered approach that combines behavioral analytics with access controls and security awareness.

Behavioral Analytics: This involves establishing baselines of normal user activity and using security analytics to detect deviations. Examples include:

- Unusual access patterns: Accessing sensitive data outside of normal work hours or from unusual locations.

- Data exfiltration attempts: Large volumes of data being transferred to external servers or unusual file access patterns.

- Account compromise: Unusual login attempts, failed logins, or suspicious account modifications.

Access Control: Implementing the principle of least privilege ensures that users only have access to the resources they need. Regular audits and reviews of user privileges are essential.

Security Awareness: Educating employees about the risks of insider threats and the importance of reporting suspicious activity.

Data Loss Prevention (DLP): Deploying DLP tools to monitor and prevent sensitive data from leaving the organization’s control.

My approach involves using SIEM systems to collect and analyze user activity logs, correlating events to identify suspicious behaviors. This data is used to generate alerts and initiate investigations. The investigation involves carefully reviewing user activity, interviewing the user, and verifying the integrity of systems. This process is crucial in preventing or minimizing damage from insider threats.
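A very simple form of the baseline-and-deviation idea is a z-score check on one user's daily activity. The numbers below are invented, the threshold of three standard deviations is a common rule of thumb rather than a recommendation, and commercial UEBA tools use considerably richer models.

```python
from statistics import mean, stdev


def is_anomalous(history, today, z_threshold=3.0):
    """Flag `today` if it sits more than `z_threshold` std devs above the baseline."""
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        # No variation in the baseline: any increase is a deviation.
        return today > baseline
    return (today - baseline) / spread > z_threshold


# Hypothetical megabytes uploaded per day by one user over the past week.
upload_history_mb = [120, 95, 130, 110, 105, 125, 115]

print(is_anomalous(upload_history_mb, 130))  # within normal variation
print(is_anomalous(upload_history_mb, 900))  # far above the baseline
```

Only upward deviations are flagged here because the scenario of interest is unusually large transfers; a check for unusual login hours would need a different feature and possibly a two-sided test.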

Q 15. What are the ethical considerations when analyzing security data?

Ethical considerations in security data analysis are paramount. We’re dealing with sensitive information, and our actions have real-world consequences. Privacy is a major concern; we must ensure we only access and analyze data we are explicitly authorized to handle, adhering strictly to data privacy regulations like GDPR and CCPA. This means anonymizing or pseudonymizing data whenever possible, minimizing data retention, and implementing robust access controls.
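One way to pseudonymize identifiers before analysis is a keyed hash, sketched below with Python's standard `hmac` module. The key value and the 16-character truncation are assumptions for the example; in practice the key would come from a secrets manager, and whether this approach satisfies a given regulation is a question for legal and privacy teams.

```python
import hashlib
import hmac


def pseudonymize(value, secret_key):
    """Replace an identifier with a stable keyed hash (HMAC-SHA256, truncated)."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


# Hypothetical key for illustration only; never hard-code a real key.
key = b"example-key-do-not-use"

events = [
    {"user": "alice@example.com", "action": "file_download"},
    {"user": "bob@example.com", "action": "login"},
    {"user": "alice@example.com", "action": "login"},
]

for event in events:
    print(pseudonymize(event["user"], key), event["action"])
```

Because the same user always maps to the same token, analysts can still follow one account's behavior across events without seeing who it is, and only someone holding the key can recompute the mapping.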

Another crucial aspect is fairness and non-discrimination. Our analyses should not perpetuate or exacerbate existing biases. For example, if we’re analyzing network traffic for malicious activity, we must be cautious not to unfairly target specific user groups based on superficial characteristics. We need to ensure our algorithms are fair and objective. Transparency is key – documenting our methodologies and sharing our findings responsibly is critical to building trust and ensuring accountability.

Finally, there’s the potential for misuse of the information we gather. The insights we uncover could be used to unfairly target individuals, organizations, or even countries. We have a responsibility to ensure our work is used ethically and for the greater good, not to create harm or violate the rights of others. Regular ethical reviews and oversight are vital.

Q 16. Describe your experience with data mining and machine learning techniques for security analytics.

I have extensive experience leveraging data mining and machine learning techniques for security analytics. I’ve employed various algorithms, including anomaly detection methods like One-Class SVM and Isolation Forest to identify unusual network activity, which can signal intrusions or malware. For example, I used Isolation Forest to detect anomalies in DNS traffic, identifying a previously unknown malware variant that was exfiltrating data through DNS tunneling.

I’ve also utilized supervised learning techniques, like Support Vector Machines (SVMs) and Random Forests, for threat classification. In one project, I trained an SVM model to identify phishing emails based on features like sender address, email content, and links. This resulted in a significant improvement in our organization’s ability to filter out phishing attempts.

Furthermore, I’ve worked with unsupervised techniques, such as K-means clustering, to group similar security events. This helped us identify patterns and relationships between seemingly disparate incidents, enabling us to better understand attack campaigns and improve our overall security posture. My experience also extends to deep learning, where I’ve explored recurrent neural networks (RNNs) for analyzing time-series data like system logs, detecting intrusions or failures before they escalate into major incidents.

In all cases, careful feature engineering, model selection, and validation were crucial steps in developing robust and accurate security analytics models. Regular model retraining and monitoring are critical to maintain accuracy and adapt to evolving threats.
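For the validation step, precision and recall are usually more informative than raw accuracy, because malicious events are rare. The sketch below computes both from labeled results; the labels and predictions are made up, and any classifier (SVM, Random Forest, or otherwise) could have produced them.

```python
def precision_recall(y_true, y_pred):
    """Compute precision and recall for binary labels (1 = malicious, 0 = benign)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical held-out test set: three phishing emails among eight messages.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]

precision, recall = precision_recall(y_true, y_pred)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Low precision translates directly into analyst workload (false alarms), while low recall means missed threats, so the acceptable balance depends on the use case.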

Q 17. How do you stay up-to-date with the latest security threats and technologies?

Staying current in the ever-evolving landscape of security threats and technologies requires a multi-pronged approach. I subscribe to reputable industry publications and newsletters, such as SANS Institute’s resources and publications from various security vendors. I actively participate in online security communities and forums, engaging in discussions and learning from the experiences of other security professionals. This allows me to learn about emerging threats and techniques from a diverse range of perspectives.

Regular attendance at security conferences and workshops is crucial. These events offer valuable insights from leading experts and provide opportunities to network with colleagues and learn about new technologies. I also dedicate time to hands-on experimentation. I regularly test new security tools and technologies, and explore potential vulnerabilities in simulated environments to build practical experience and understanding. Maintaining certifications such as CISSP, which carry continuing-education requirements, also keeps me learning on a regular schedule.

Finally, I maintain a strong focus on continuous learning through online courses and training programs. Platforms like Coursera and edX offer excellent resources for staying up-to-date on the latest security trends and techniques. This combined approach ensures that I remain informed about emerging threats and have the skills necessary to effectively address them.

Q 18. Explain your understanding of different types of security visualizations (e.g., dashboards, charts, graphs).

Security visualizations are essential for communicating complex security data effectively. Dashboards provide a high-level overview of the current security state, displaying key metrics such as the number of active alerts, successful login attempts, and network traffic volume. They typically use charts and graphs to present this information concisely.

Charts, like bar charts, pie charts, and line charts, are used to show the frequency, distribution, and trends in security data. For instance, a bar chart can show the number of security incidents by severity level, while a line chart can illustrate network traffic over time. Graphs, often network graphs or dependency graphs, visualize the relationships between different security elements, such as devices, users, and applications. Network graphs, for example, can illustrate how malware spreads through a network. Heatmaps offer another compelling visualization technique, ideal for showing data density or correlations across different dimensions of data.

Choosing the right visualization depends on the specific data and the message you want to convey. A well-designed visualization makes complex information easily digestible, allowing security analysts to quickly identify patterns, anomalies, and potential threats. Poorly designed visualizations, on the other hand, can obscure important information and lead to misinterpretations.

Q 19. How do you ensure the accuracy and integrity of security data?

Ensuring the accuracy and integrity of security data is paramount. This involves implementing robust data collection processes, using validated security tools and data sources, and establishing rigorous data quality checks and validation procedures. Data validation techniques vary depending on the specific data type; we use various methods, including regular expression matching to validate format, checksums to ensure data integrity, and comparing data across multiple sources for consistency.
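Two of the checks mentioned above, format validation with a regular expression and integrity verification with a checksum, can be sketched with the standard library. The timestamp format, record fields, and sample data are assumptions for illustration.

```python
import hashlib
import hmac
import re

# Assumed record format: UTC timestamps like 2024-03-01T08:00:00Z.
TIMESTAMP = re.compile(r"\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}Z")


def has_valid_timestamp(record):
    """Format check: the timestamp field must match the expected pattern exactly."""
    return TIMESTAMP.fullmatch(record.get("timestamp", "")) is not None


def sha256_hex(data):
    """Checksum of a byte string, as a hex digest."""
    return hashlib.sha256(data).hexdigest()


def is_unmodified(data, expected_digest):
    """Integrity check: compare the current checksum with the one recorded earlier."""
    return hmac.compare_digest(sha256_hex(data), expected_digest)


log_batch = b"2024-03-01T08:00:00Z login_failed user=alice\n"
recorded_digest = sha256_hex(log_batch)  # stored when the batch was collected

print(has_valid_timestamp({"timestamp": "2024-03-01T08:00:00Z"}))
print(has_valid_timestamp({"timestamp": "03/01/2024 8am"}))
print(is_unmodified(log_batch, recorded_digest))
print(is_unmodified(log_batch + b"tampered", recorded_digest))
```

A plain checksum detects accidental corruption and casual tampering; if an attacker could also rewrite the stored digest, a keyed HMAC or signed logs would be the stronger choice.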

Data normalization is also essential to ensure consistency and comparability. Data enrichment is another critical component; it involves adding context and additional information to the raw data to improve analysis. For example, enriching IP addresses with geolocation information provides a richer context. We employ data governance strategies with clearly defined roles and responsibilities for data collection, storage, and processing. Data lineage tracking, that is, tracing data from its origin to its final use, is vital to understanding data integrity and identifying potential errors.

Regular audits and reviews of data quality are necessary to identify and address any discrepancies. The use of appropriate security controls to protect data integrity during storage and transmission is also crucial, along with regular testing of these security controls. These measures help ensure that the security data used for analysis is reliable and trustworthy, leading to accurate and informed security decisions.

Q 20. Describe your experience with incident response processes and how security analytics plays a role.

Security analytics plays a vital role in incident response processes. During the initial detection phase, security analytics tools can quickly identify potential security incidents by analyzing logs, network traffic, and other security data, raising alerts based on defined thresholds or anomalous behavior. This speed of detection is critical in minimizing the impact of an attack.

During the analysis phase, security analytics tools help investigators understand the scope and impact of the incident. By analyzing the data, we can identify the attacker’s techniques, their objectives, and the extent of the compromise. For example, by analyzing log files, we can trace the attacker’s actions, determining which systems were accessed and what data was compromised. This enables a more targeted and effective response.

The containment, eradication, and recovery phases also benefit from security analytics. The analysis provides information to isolate affected systems, remove malware, and restore systems to a secure state. Post-incident analysis, where we analyze the incident to understand what happened, why it happened, and how to prevent it from happening again, heavily relies on security analytics to identify weaknesses and improve future responses. Security analytics provides invaluable data to refine our incident response plan and improve our overall security posture.

Q 21. How do you use security analytics to support compliance requirements?

Security analytics is crucial for supporting compliance requirements. Many regulations, like PCI DSS, HIPAA, and GDPR, mandate the maintenance of detailed security logs and the ability to demonstrate compliance. Security analytics tools help collect and analyze these logs, providing evidence of compliance with various regulatory requirements.

For example, to demonstrate compliance with PCI DSS, we can use security analytics to analyze transaction logs to ensure that cardholder data is protected according to the standard. Similarly, HIPAA compliance often requires demonstrating the integrity and confidentiality of Protected Health Information (PHI). Security analytics can analyze access logs to identify unauthorized access attempts or data breaches involving PHI.

GDPR compliance requires demonstrating the ability to respond to data subject access requests. Security analytics can help locate and retrieve relevant data quickly and efficiently, demonstrating compliance with this requirement. Regular reporting and auditing functions within security analytics tools produce documented evidence of compliance for internal and external audits, demonstrating consistent adherence to regulations and standards. This not only helps organizations meet legal and contractual obligations but also contributes to building trust with customers and stakeholders.

Q 22. Explain your experience with different data formats used in security analytics (e.g., JSON, XML, CSV).

Security analytics relies on diverse data formats, each with its strengths and weaknesses. My experience encompasses working with JSON, XML, and CSV, among others.

JSON (JavaScript Object Notation) is lightweight and human-readable, making it ideal for transmitting structured data like event logs. Its key-value pair structure facilitates easy parsing and analysis. I’ve used JSON extensively in projects involving real-time log ingestion and analysis.

XML (Extensible Markup Language) provides a more robust and hierarchical structure, useful for complex data representations with nested elements. It’s less common in high-volume security analytics, due to its verbosity, but I’ve utilized it in situations where data schema consistency and validation were paramount, such as integrating with legacy systems.

CSV (Comma-Separated Values) is the simplest, offering a tabular structure suitable for importing data into spreadsheets or databases. I frequently employ CSV for quick analysis and data export, particularly when dealing with static datasets or performing preliminary data exploration.

Choosing the right format depends heavily on the data source, the analytics tools used, and the overall efficiency requirements of the process.

For example, during an incident response investigation, we received alerts in JSON format which were easily parsed and enriched with data from a CSV file containing threat intelligence indicators. This streamlined our process, allowing for rapid correlation and analysis.
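
A minimal sketch of that kind of enrichment, using only the Python standard library, might look like the following. The alert fields, the CSV column names, and the sample IP addresses (taken from documentation ranges) are assumptions for illustration.

```python
import csv
import io
import json

# Hypothetical alerts as they might arrive in JSON.
ALERTS_JSON = """[
  {"id": 1, "src_ip": "203.0.113.5", "signature": "SSH brute force"},
  {"id": 2, "src_ip": "198.51.100.7", "signature": "Port scan"},
  {"id": 3, "src_ip": "192.0.2.44", "signature": "Suspicious DNS"}
]"""

# Hypothetical threat-intelligence indicators exported as CSV.
THREAT_INTEL_CSV = """indicator,category,confidence
203.0.113.5,botnet,90
192.0.2.44,c2,75
"""


def load_indicators(csv_text):
    """Index CSV rows by indicator value for constant-time lookups."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row["indicator"]: row for row in reader}


def enrich_alerts(alerts, indicators):
    """Attach threat-intel context to each alert whose source IP is a known indicator."""
    enriched = []
    for alert in alerts:
        match = indicators.get(alert["src_ip"])
        enriched.append({
            **alert,
            "known_bad": match is not None,
            "intel_category": match["category"] if match else None,
        })
    return enriched


alerts = json.loads(ALERTS_JSON)
indicators = load_indicators(THREAT_INTEL_CSV)
enriched = enrich_alerts(alerts, indicators)

for alert in enriched:
    print(alert["id"], alert["src_ip"], alert["known_bad"], alert["intel_category"])
```

Loading the indicators into a dictionary keeps each lookup cheap, which matters once the alert volume grows.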

Q 23. How do you handle large datasets in security analytics?

Handling large datasets in security analytics requires a multifaceted strategy. The sheer volume of security data necessitates efficient data processing techniques. My experience focuses on leveraging distributed computing frameworks like Apache Spark and Hadoop to process massive datasets in parallel. These frameworks distribute the computational load across multiple machines, dramatically reducing processing time. Furthermore, I’m proficient in techniques such as data sampling and aggregation to reduce data volume without significant loss of analytical insight. This involves selecting representative subsets of the data for analysis, reducing the computational burden while retaining enough information to draw valid conclusions. Data filtering is also critical; we use regular expressions and Structured Query Language (SQL) to narrow the focus to relevant events. Finally, efficient data storage is essential. I’ve worked with cloud-based data lakes and data warehouses (such as Amazon S3 and Snowflake) that are designed to handle petabytes of data. These offer scalability and cost-effectiveness compared to traditional on-premises solutions.
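
The sampling and aggregation ideas above can be sketched in plain Python without any cluster. The example below uses reservoir sampling (Algorithm R), which is one common way to draw a uniform sample from a stream of unknown length; it is a swapped-in illustration, and the synthetic event generator and field names are assumptions.

```python
import random
from collections import Counter


def event_stream(n):
    """Yield synthetic events one at a time, standing in for a large log source."""
    for i in range(n):
        yield {
            "source_ip": f"10.0.0.{i % 50}",
            "event_type": "intrusion" if i % 10 == 0 else "info",
        }


def reservoir_sample(stream, k, seed=0):
    """Keep a uniform random sample of k items using O(k) memory."""
    rng = random.Random(seed)
    sample = []
    for index, item in enumerate(stream):
        if index < k:
            sample.append(item)  # fill the reservoir first
        else:
            j = rng.randint(0, index)  # inclusive on both ends
            if j < k:
                sample[j] = item  # replace with probability k / (index + 1)
    return sample


def count_intrusions_by_source(stream):
    """Filter and aggregate in a single pass, never holding the full dataset."""
    counts = Counter()
    for event in stream:
        if event["event_type"] == "intrusion":
            counts[event["source_ip"]] += 1
    return counts


sample = reservoir_sample(event_stream(100_000), k=1_000)
counts = count_intrusions_by_source(event_stream(100_000))
print(len(sample), counts.most_common(3))
```

Both functions consume the stream once and keep memory bounded, which is the same property the distributed frameworks provide at much larger scale.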

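```scala
// Example Spark snippet (Scala). Assumes an existing SparkSession named `spark`,
// as in spark-shell; the file name and column names are illustrative.
import org.apache.spark.sql.functions.col

val data = spark.read.option("header", "true").csv("large_dataset.csv")
val filteredData = data.filter(col("event_type") === "intrusion") // keep intrusion events only
val aggregatedData = filteredData.groupBy("sourceIP").count()     // number of events per source IP
aggregatedData.show()
```

Q 24. What are the limitations of using only security analytics for threat detection?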

While security analytics plays a crucial role in threat detection, relying solely on it has limitations. Security analytics primarily focuses on analyzing historical data to identify patterns and anomalies. This means that truly novel or zero-day attacks, which haven’t been seen before, might evade detection. The efficacy of security analytics depends heavily on the quality and completeness of the data ingested. Incomplete or poorly formatted logs can lead to false negatives (missing actual threats) or false positives (flagging benign events as threats). It’s also important to remember that security analytics operates retrospectively; it identifies threats after they’ve occurred, limiting its potential for real-time prevention. Therefore, it’s vital to integrate security analytics with other security measures like intrusion detection systems (IDS), intrusion prevention systems (IPS), and security information and event management (SIEM) for a layered and comprehensive approach. Think of it like a doctor diagnosing a disease: security analytics provides valuable insights from past medical history (logs), but a physical examination (IDS/IPS) and real-time monitoring are equally critical for effective diagnosis and treatment.

Q 25. How do you ensure the scalability and maintainability of your security analytics solutions?

Ensuring scalability and maintainability of security analytics solutions is crucial for long-term effectiveness. This requires a well-architected system that is modular, adaptable, and efficient. I advocate for a microservices architecture, breaking down the system into smaller, independent services that can be scaled and updated independently. This prevents single points of failure and improves maintainability. Using containerization technologies like Docker and Kubernetes allows for easy deployment, scaling, and management of these microservices across different environments. Automation is key: I leverage Infrastructure as Code (IaC) tools like Terraform to manage infrastructure automatically, ensuring consistent deployments and reducing manual errors. Automated testing is also essential, employing unit and integration tests to guarantee functionality and prevent regressions during updates. Finally, proper documentation is non-negotiable. Clear, well-maintained documentation for code, configurations, and processes is crucial for maintainability and facilitates onboarding new team members.

Q 26. Describe your experience with cloud-based security analytics platforms.

My experience with cloud-based security analytics platforms is extensive. I’ve worked with various platforms, including AWS Security Hub, Microsoft Sentinel (formerly Azure Sentinel), and Google Cloud Security Command Center. These platforms offer significant advantages in terms of scalability, cost-effectiveness, and ease of management. Cloud platforms provide readily available resources, eliminating the need for substantial upfront investment in hardware infrastructure. They also offer automated scaling, ensuring that the system can handle fluctuating data volumes effectively. Integration with other cloud services is straightforward, allowing for efficient data collection and analysis. However, challenges include data residency, regulatory compliance, and data governance. Careful planning and adherence to best practices are essential to mitigate these risks. For example, in a recent project, we migrated on-premises security analytics to AWS Security Hub. The migration involved carefully planning data migration strategies and ensuring data security and compliance throughout the process.

Q 27. Explain your approach to building a security dashboard.

Building a security dashboard is an iterative process focused on providing actionable intelligence at a glance. My approach starts with clearly defining the target audience and their specific needs. Understanding what information is most critical to different stakeholders (security analysts, management, etc.) guides the dashboard’s design. Key performance indicators (KPIs) are carefully selected to represent critical security metrics. These might include the number of security alerts, the severity of threats, response times, and system uptime. Data visualization is paramount. I leverage charts, graphs, and maps to present data in an easily digestible format. Different visualization techniques are chosen based on the type of data and the message to be conveyed. For instance, a timeline would be ideal for tracking events over time, while a heatmap could highlight geographical patterns of attacks. The dashboard should be interactive and allow for drilling down into specific details for deeper investigation. This could involve filtering data based on specific criteria or viewing related events. Finally, the dashboard is designed with an emphasis on usability and accessibility. The interface should be clean, intuitive, and easy to navigate, ensuring that users can quickly understand the information presented.
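
Before any chart is drawn, the KPIs themselves have to be computed from raw alert data. The sketch below shows one way to derive a few of the metrics mentioned above; the alert records, field names, and timestamps are made up for the example.

```python
from collections import Counter
from datetime import datetime
from statistics import mean

# Hypothetical alert records; `resolved` is None while an alert is still open.
ALERTS = [
    {"severity": "high", "opened": "2024-01-01T10:00:00", "resolved": "2024-01-01T11:00:00"},
    {"severity": "low", "opened": "2024-01-01T12:00:00", "resolved": "2024-01-01T12:30:00"},
    {"severity": "high", "opened": "2024-01-02T09:00:00", "resolved": "2024-01-02T12:00:00"},
    {"severity": "medium", "opened": "2024-01-02T15:00:00", "resolved": None},
]


def minutes_between(start, end):
    """Elapsed minutes between two ISO 8601 timestamps."""
    delta = datetime.fromisoformat(end) - datetime.fromisoformat(start)
    return delta.total_seconds() / 60


def compute_kpis(alerts):
    """Summarize alert volume, severity mix, backlog, and resolution time."""
    durations = [
        minutes_between(a["opened"], a["resolved"])
        for a in alerts
        if a["resolved"] is not None
    ]
    return {
        "total_alerts": len(alerts),
        "open_alerts": sum(1 for a in alerts if a["resolved"] is None),
        "alerts_by_severity": dict(Counter(a["severity"] for a in alerts)),
        "mean_minutes_to_resolve": mean(durations) if durations else None,
    }


kpis = compute_kpis(ALERTS)
print(kpis)
```

A dictionary like `kpis` can then feed whichever visualization layer the audience uses, with the per-severity counts mapping naturally onto a bar chart and the resolution time onto a trend line.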

Key Topics to Learn for Security Analytics and Visualization Interview

- Data Sources & Ingestion: Understanding various data sources (SIEM, firewalls, endpoint detection, etc.) and methods for efficient data ingestion and normalization.

- Log Analysis & Correlation: Practical application of log analysis techniques to identify patterns, anomalies, and security threats. This includes correlating data from multiple sources to build a comprehensive security picture.

- Threat Hunting & Incident Response: Developing proactive threat hunting strategies and applying visualization techniques to pinpoint and respond to security incidents effectively.

- Security Information and Event Management (SIEM): Deep understanding of SIEM functionalities, including data aggregation, alert management, and reporting.

- Data Visualization Techniques: Mastering techniques like dashboards, graphs, and charts to effectively communicate security insights to technical and non-technical audiences. Understanding different chart types and their appropriate uses.

- Statistical Analysis & Anomaly Detection: Applying statistical methods to identify unusual patterns and outliers in security data that might indicate malicious activity.

- Big Data Technologies (e.g., Hadoop, Spark): Familiarity with big data technologies used in processing and analyzing large volumes of security data.

- Security Metrics & KPIs: Defining and tracking key performance indicators (KPIs) to measure the effectiveness of security controls and initiatives.

- Data Security & Privacy: Understanding data protection principles and regulations (e.g., GDPR, CCPA) relevant to security data handling and visualization.

- Cloud Security Analytics: Understanding the unique challenges and solutions for security analytics in cloud environments (AWS, Azure, GCP).
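
To make the statistical analysis and anomaly detection topic above concrete, here is a small z-score sketch in Python. The hourly failed-login counts and the threshold of 3 standard deviations are assumptions chosen for the example; real deployments typically tune the threshold and often prefer more robust statistics.

```python
from statistics import mean, stdev


def zscore_outliers(values, threshold=3.0):
    """Return (index, value) pairs lying more than `threshold` standard deviations from the mean."""
    mu = mean(values)
    sigma = stdev(values)  # sample standard deviation
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [
        (i, v)
        for i, v in enumerate(values)
        if abs(v - mu) / sigma > threshold
    ]


# Hypothetical failed-login counts per hour; the last hour contains a spike.
hourly_failed_logins = [
    4, 6, 5, 7, 5, 6, 4, 5, 6, 5, 7, 6,
    5, 4, 6, 5, 5, 6, 4, 5, 7, 6, 5, 95,
]

outliers = zscore_outliers(hourly_failed_logins)
print(outliers)
```

One caveat worth raising in an interview: a large spike inflates the mean and standard deviation it is measured against, so several simultaneous outliers can mask each other. Median-based measures are a common alternative for that reason.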

Next Steps

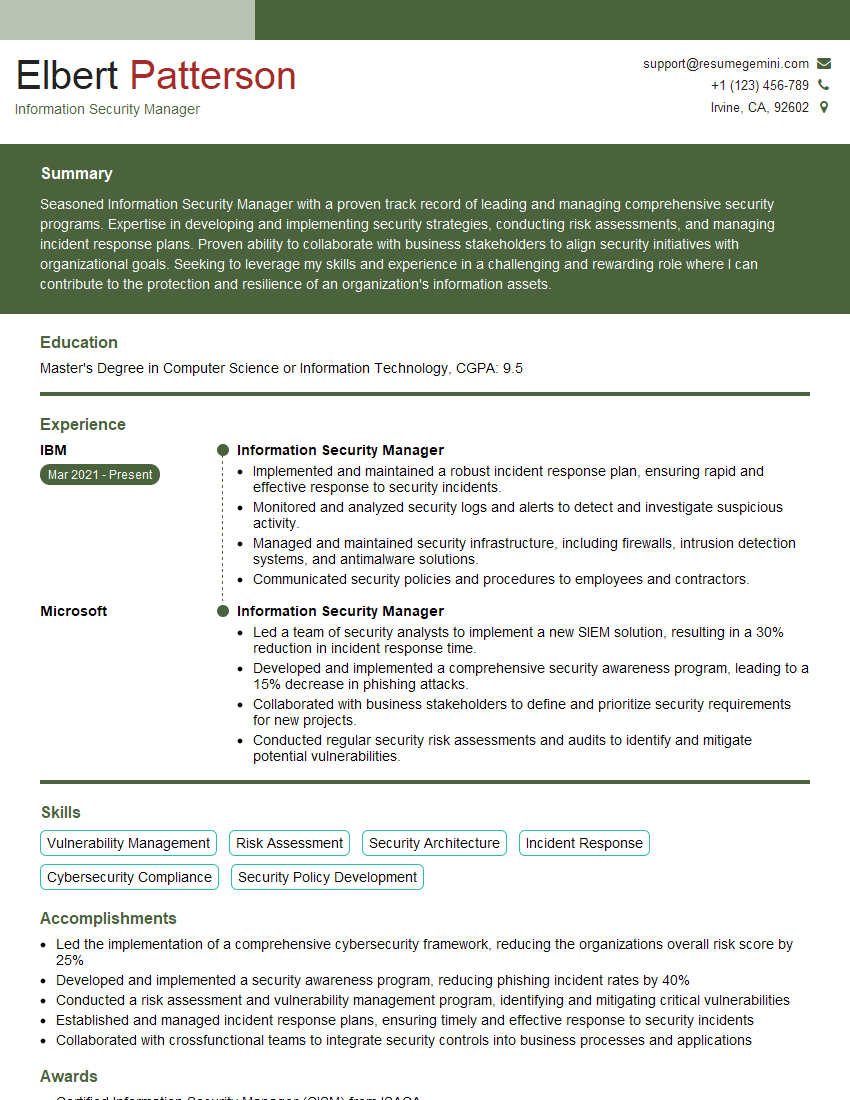

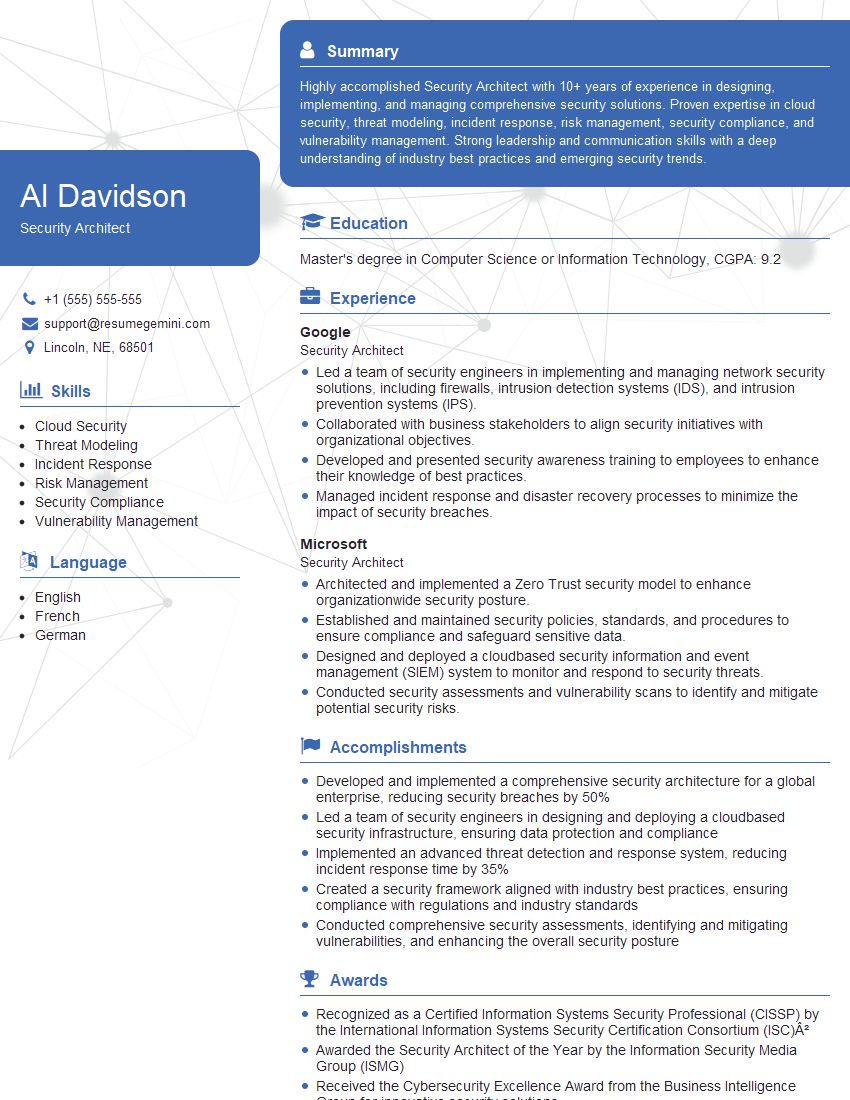

Mastering Security Analytics and Visualization is crucial for a successful and rewarding career in cybersecurity. This skillset is highly sought after, opening doors to exciting roles and significant career advancement. To maximize your job prospects, it’s vital to create an ATS-friendly resume that showcases your expertise effectively. We highly recommend using ResumeGemini to build a professional and impactful resume. ResumeGemini provides a user-friendly platform and offers examples of resumes tailored specifically to Security Analytics and Visualization roles, helping you present your qualifications in the best possible light.
