Cracking a skill-specific interview, like one for Defect Removal, requires understanding the nuances of the role. In this blog, we present the questions you’re most likely to encounter, along with insights into how to answer them effectively. Let’s ensure you’re ready to make a strong impression.

Questions Asked in Defect Removal Interview

Q 1. Explain the difference between defect prevention and defect removal.

Defect prevention and defect removal are two crucial, yet distinct, aspects of software quality assurance. Think of it like this: prevention is about stopping problems *before* they occur, while removal is about fixing problems *after* they’ve surfaced.

Defect Prevention focuses on proactively identifying and eliminating potential issues during the design and development phases. This involves activities like thorough requirements analysis, robust design reviews, code inspections, and the use of static analysis tools. The goal is to minimize the number of defects introduced in the first place.

Defect Removal, on the other hand, is the process of identifying and fixing defects that have already made their way into the software. This primarily involves testing activities like unit testing, integration testing, system testing, and user acceptance testing. The aim is to find and rectify defects before the software is released to end-users.

In essence, prevention is about building quality *into* the software, while removal is about finding and fixing quality issues *within* the software. An effective software development process needs both to achieve high-quality outcomes.

Q 2. Describe your experience with different defect tracking systems (e.g., Jira, Bugzilla).

I have extensive experience with various defect tracking systems, including Jira and Bugzilla. My experience spans different project types and team sizes. I’m proficient in using these tools to manage the entire defect lifecycle, from initial reporting to resolution and closure.

In Jira, for instance, I’ve created and assigned issues, tracked their progress through different statuses (e.g., Open, In Progress, Resolved, Closed), managed workflows, and generated reports on defect density, resolution time, and other key metrics. I’m comfortable customizing workflows, defining issue types, and using Jira’s reporting capabilities to analyze trends and identify areas for improvement.

My experience with Bugzilla is similar. I’ve utilized its features for bug tracking, reporting, and management. While Bugzilla might offer slightly less advanced features than Jira, its strengths lie in its flexibility and open-source nature. I’ve adapted my workflow seamlessly to both systems based on the specific project needs.

Beyond Jira and Bugzilla, I’ve also had exposure to other systems like Azure DevOps and Mantis, demonstrating my adaptability to various defect management tools.

Q 3. What are the key metrics you use to measure the effectiveness of defect removal?

Several key metrics help measure the effectiveness of defect removal. These metrics provide insights into the quality of the software and the efficiency of the testing process.

- Defect Density: This metric represents the number of defects found per unit of code (e.g., lines of code, function points). A lower defect density indicates better code quality.

- Defect Removal Efficiency (DRE): DRE calculates the percentage of defects found during testing compared to the total number of defects found during testing and post-release. A higher DRE indicates a more effective testing process.

- Mean Time To Resolution (MTTR): This tracks the average time it takes to resolve a defect, highlighting areas for process optimization. Lower MTTR signifies a quicker resolution process.

- Escape Rate: This measures the percentage of defects that escape the testing phase and reach production. A lower escape rate is the ultimate goal, showcasing the success of defect removal efforts.

- Cost of Defects: This metric tracks the overall cost associated with finding and fixing defects at different stages of the software development lifecycle. Early detection is typically far less expensive.

By monitoring these metrics, we gain valuable insights into testing effectiveness and areas requiring improvement. Trend analysis allows us to proactively address issues and continuously improve the software development process.
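To make the definitions concrete, here is a minimal Python sketch of how the first four metrics might be computed. It assumes the defect counts and resolution times have already been exported from the tracker. The function names, the per-KLOC unit, and the sample numbers are illustrative choices, not a standard API. Escape rate is treated here as the complement of DRE, so both metrics use the same denominator.

```python
from datetime import timedelta


def defect_density(defects: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC)."""
    return defects / (lines_of_code / 1000)


def defect_removal_efficiency(found_before_release: int, found_after_release: int) -> float:
    """Percentage of all known defects that were caught before release."""
    total = found_before_release + found_after_release
    if total == 0:
        raise ValueError("DRE is undefined when no defects have been recorded")
    return 100.0 * found_before_release / total


def escape_rate(found_before_release: int, found_after_release: int) -> float:
    """Percentage of known defects that reached production (the complement of DRE)."""
    return 100.0 - defect_removal_efficiency(found_before_release, found_after_release)


def mean_time_to_resolution(resolution_times: list[timedelta]) -> timedelta:
    """Average time from report to resolution."""
    return sum(resolution_times, timedelta()) / len(resolution_times)


# Illustrative numbers only.
print(defect_density(45, 30_000))            # 1.5 (defects per KLOC)
print(defect_removal_efficiency(90, 10))     # 90.0
print(escape_rate(90, 10))                   # 10.0
print(mean_time_to_resolution([timedelta(hours=h) for h in (2, 4, 6)]))  # 4:00:00
```

Tracking these values per release, rather than looking at them once, is what makes the trend analysis described above possible.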

Q 4. How do you prioritize defects based on severity and priority?

Prioritizing defects requires a balanced approach that considers both severity and priority. Severity refers to the impact of the defect on the software’s functionality or user experience (e.g., critical, major, minor, trivial). Priority, on the other hand, reflects the urgency of fixing the defect based on business needs and deadlines.

I usually employ a prioritization matrix that combines severity and priority. A simple approach is a 4×4 matrix, with severity and priority each having four levels (e.g., Critical, High, Medium, Low). Defects falling into the Critical/High cell are addressed first, followed by High/Medium, and so on.

For example, a critical security vulnerability (high severity, high priority) would take precedence over a minor cosmetic issue (low severity, low priority). However, even a low-severity issue might be prioritized higher if it impacts a crucial business function or an upcoming deadline.

Using a prioritization matrix allows for a transparent and consistent approach to defect handling, ensuring that the most impactful defects are addressed promptly.
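A prioritization matrix like this can be expressed as a sort key. The minimal sketch below assumes that severity and priority share the four-level scale from the example. Following the point above that business urgency can lift a low-severity issue, it ranks by priority first and uses severity as the tie-breaker. That ordering rule and the defect keys are hypothetical, and teams may weigh the two axes differently.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    """Shared four-level scale; a lower value means more important."""
    CRITICAL = 0
    HIGH = 1
    MEDIUM = 2
    LOW = 3


@dataclass
class Defect:
    key: str
    severity: Level
    priority: Level


def triage_order(defects: list[Defect]) -> list[Defect]:
    """Sort by priority first, then severity, so urgent low-severity issues can jump the queue."""
    return sorted(defects, key=lambda d: (d.priority, d.severity))


backlog = [
    Defect("UI-12", severity=Level.LOW, priority=Level.LOW),            # cosmetic glitch
    Defect("SEC-7", severity=Level.CRITICAL, priority=Level.CRITICAL),  # security vulnerability
    Defect("RPT-3", severity=Level.LOW, priority=Level.HIGH),           # typo in a report due tomorrow
]
print([d.key for d in triage_order(backlog)])  # ['SEC-7', 'RPT-3', 'UI-12']
```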

Q 5. Describe your approach to root cause analysis of defects.

My approach to root cause analysis (RCA) is systematic and thorough. I often utilize the 5 Whys technique, a simple yet powerful tool for identifying the underlying causes of a defect. I don’t just focus on the symptom (the visible defect); I delve deeper to uncover the root cause.

For instance, if a user reports a crash (symptom), I wouldn’t just fix the crash. I’d ask ‘why’ repeatedly:

- Why 1: The application crashed because of a null pointer exception.

- Why 2: A null pointer exception occurred because a variable wasn’t initialized properly.

- Why 3: The variable wasn’t initialized because the logic in the function was flawed.

- Why 4: The logic was flawed due to an incomplete understanding of the requirements.

- Why 5: The incomplete understanding stemmed from inadequate documentation and communication during the design phase.

By repeatedly asking ‘why’, we can identify the root cause—inadequate documentation and communication. Addressing this root cause prevents similar defects from occurring in the future. I often supplement the 5 Whys with other techniques like fishbone diagrams (Ishikawa diagrams) for a more structured and comprehensive RCA.

Q 6. What testing methodologies are you familiar with (e.g., Agile, Waterfall)?

I’m experienced in both Agile and Waterfall testing methodologies. My approach adapts to the project’s specific needs and context.

In Agile projects, testing is integrated throughout the development lifecycle. I’m familiar with practices like test-driven development (TDD), continuous integration/continuous delivery (CI/CD), and sprint-based testing. The focus is on iterative development and rapid feedback loops.

In Waterfall projects, testing occurs in distinct phases after the development phase is complete. This often involves more comprehensive testing efforts, including system testing and user acceptance testing (UAT), with a stronger emphasis on formal documentation.

My adaptability allows me to leverage the strengths of each methodology, selecting the most appropriate approach based on the project’s requirements and constraints. Irrespective of the methodology, I maintain a strong focus on collaboration and communication throughout the testing process.

Q 7. Explain your experience with test case design techniques (e.g., boundary value analysis, equivalence partitioning).

I’m proficient in several test case design techniques, including boundary value analysis and equivalence partitioning. These techniques ensure thorough test coverage and help uncover a wide range of defects.

Boundary Value Analysis (BVA) focuses on testing the boundaries of input values. We test values at the edges of valid input ranges and just beyond those edges to find defects related to boundary conditions. For example, if a system accepts input values between 1 and 100, BVA would include tests for 0, 1, 99, 100, and 101.
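The example translates directly into code. This sketch generates boundary values for an inclusive range and checks them against a hypothetical `accepts` function that stands in for the system under test. It also includes `low + 1` (here 2), which the three-value variant of BVA adds for symmetry with 99.

```python
def boundary_values(low: int, high: int) -> list[int]:
    """Values at, just inside, and just beyond each edge of the inclusive range [low, high]."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


def accepts(value: int) -> bool:
    """Hypothetical system under test: accepts integers from 1 to 100 inclusive."""
    return 1 <= value <= 100


print(boundary_values(1, 100))                        # [0, 1, 2, 99, 100, 101]
print([accepts(v) for v in boundary_values(1, 100)])  # [False, True, True, True, True, False]
```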

Equivalence Partitioning divides the input values into groups (partitions) that the system is expected to process in the same way. We select representative values from each partition to test. For instance, if the system accepts positive integers and handles small, medium, and large values differently, we might create partitions for 1–9, 10–100, and values above 100, plus an invalid partition for zero and negative numbers. Testing one value from each partition is often sufficient to represent the entire partition.
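The partitions from this example can be written down as data. The minimal sketch below encodes them, including the invalid partition for zero and negative input, and picks one representative per partition. The rule used to pick representatives (midpoint for bounded ranges, a fixed offset for open-ended ones) is an arbitrary assumption; any value inside a partition should do.

```python
# Each partition: (name, inclusive lower bound, inclusive upper bound); None means "no limit".
PARTITIONS = [
    ("invalid: zero or negative", None, 0),
    ("small: 1-9", 1, 9),
    ("medium: 10-100", 10, 100),
    ("large: above 100", 101, None),
]


def partition_of(value: int) -> str:
    """Name of the partition a value belongs to."""
    for name, low, high in PARTITIONS:
        if (low is None or value >= low) and (high is None or value <= high):
            return name
    raise ValueError(f"{value} falls outside every partition")


def representatives(partitions) -> dict[str, int]:
    """One test value per partition: midpoint if bounded, otherwise an offset from the known bound."""
    reps = {}
    for name, low, high in partitions:
        if low is None:
            reps[name] = high - 5
        elif high is None:
            reps[name] = low + 50
        else:
            reps[name] = (low + high) // 2
    return reps


for name, value in representatives(PARTITIONS).items():
    print(f"{name}: test with {value}")
```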

These techniques, along with others like decision table testing and state transition testing, are crucial for creating effective and efficient test suites, ensuring comprehensive test coverage and maximizing defect detection.

Q 8. How do you handle conflicting priorities between speed of delivery and quality?

Balancing speed of delivery and quality is a crucial aspect of software development. It’s often a delicate tightrope walk, and the key is proactive risk management and prioritization. Think of it like building a house: you can build quickly with substandard materials, but it will fall apart. Or, you can build slowly with high-quality materials, ensuring longevity. The best approach is finding the optimal balance.

Prioritization Matrix: I use a prioritization matrix, often a risk matrix that plots likelihood against impact, to categorize defects. High-impact, high-likelihood defects are addressed immediately, regardless of the delivery schedule. Low-impact, low-likelihood defects might be deferred to a later sprint or release.

Shift-Left Testing: I advocate for ‘shift-left’ testing, meaning we incorporate testing earlier in the development lifecycle. This includes unit testing, integration testing, and system testing during the development phase itself, rather than solely relying on testing at the very end. This reduces the number of defects found late, which would significantly impact release timelines.

Defect Triage and Root Cause Analysis: When faced with time constraints, a thorough defect triage is vital. We assess the severity and root cause of each defect to determine the optimal fix strategy. If a defect is minor and doesn’t severely impact functionality, it might be prioritized lower.

Collaboration and Communication: Open and honest communication with stakeholders is essential. Transparency about potential risks and trade-offs is critical to securing buy-in and making informed decisions.

Q 9. Describe your experience with automated testing frameworks (e.g., Selenium, Appium).

I have extensive experience with Selenium and Appium, utilizing them to automate testing across various web and mobile applications. My experience extends beyond simply writing test scripts; it encompasses designing robust and maintainable test frameworks, integrating them into CI/CD pipelines, and leveraging reporting features for effective analysis.

Selenium: I’ve used Selenium WebDriver extensively to automate functional and regression tests for web applications. For example, I developed a comprehensive Selenium framework for a large e-commerce website, covering user authentication, product search, shopping cart functionality, and checkout processes. This framework significantly reduced testing time and improved test coverage.

Appium: With Appium, I’ve automated UI testing for both Android and iOS mobile apps. In one project, we used Appium to create automated tests for a banking app, ensuring the seamless functioning of features like fund transfers, bill payments, and account balance inquiries across different devices and operating system versions. This involved handling various device emulators and simulators.

Test Framework Design: I prioritize creating maintainable and reusable frameworks using Page Object Model (POM) and data-driven approaches. This promotes code reusability and simplifies test maintenance as the application evolves.
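Here is a minimal sketch of the Page Object Model combined with a data-driven loop. So that it runs without a browser, it uses a hypothetical in-memory `FakeDriver` in place of Selenium WebDriver. In a real framework the page object would wrap a WebDriver instance and locate elements with calls such as `driver.find_element(By.ID, "username")`. The locators, page names, and credentials below are all made up for illustration.

```python
class FakeDriver:
    """Hypothetical in-memory stand-in for a WebDriver, so the sketch runs without a browser."""

    def __init__(self, valid_users: dict[str, str]):
        self.valid_users = valid_users
        self.fields: dict[str, str] = {}
        self.current_page = "login"

    def type_into(self, locator: str, text: str) -> None:
        self.fields[locator] = text

    def click(self, locator: str) -> None:
        if locator == "login-button":
            user = self.fields.get("username")
            ok = self.valid_users.get(user) == self.fields.get("password")
            self.current_page = "dashboard" if ok else "login-error"


class LoginPage:
    """Page object: tests call log_in() and never touch raw locators."""

    USERNAME = "username"
    PASSWORD = "password"
    SUBMIT = "login-button"

    def __init__(self, driver: FakeDriver):
        self.driver = driver

    def log_in(self, username: str, password: str) -> str:
        self.driver.type_into(self.USERNAME, username)
        self.driver.type_into(self.PASSWORD, password)
        self.driver.click(self.SUBMIT)
        return self.driver.current_page


# Data-driven: one test routine, many input rows.
LOGIN_CASES = [
    ("alice", "s3cret", "dashboard"),
    ("alice", "wrong", "login-error"),
    ("", "", "login-error"),
]

for username, password, expected in LOGIN_CASES:
    page = LoginPage(FakeDriver({"alice": "s3cret"}))
    assert page.log_in(username, password) == expected
```

If a locator changes, only `LoginPage` needs updating, and new scenarios are added as rows in `LOGIN_CASES` rather than as new test code.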

Q 10. How do you ensure test coverage is adequate?

Adequate test coverage is not simply about achieving a high percentage; it’s about ensuring that all critical functionalities and user flows are thoroughly tested. I utilize a combination of techniques to ensure this.

Requirement Traceability Matrix: I create a traceability matrix that links requirements to test cases. This makes it easy to confirm that every requirement has at least one associated test case and to spot any requirement that has none.
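At its simplest, a traceability matrix is a mapping from requirement IDs to test case IDs. The minimal sketch below checks the mapping in both directions: requirements with no linked tests, and tests that trace back to no requirement. All IDs are hypothetical, and real test management tools store these links in their own formats.

```python
def untested_requirements(requirements: list[str], links: dict[str, list[str]]) -> list[str]:
    """Requirement IDs with no linked test case, in input order."""
    return [req for req in requirements if not links.get(req)]


def orphan_tests(all_tests: list[str], links: dict[str, list[str]]) -> list[str]:
    """Test case IDs that do not trace back to any requirement."""
    linked = {tc for tcs in links.values() for tc in tcs}
    return [tc for tc in all_tests if tc not in linked]


requirements = ["REQ-1", "REQ-2", "REQ-3"]
traceability = {            # requirement ID -> linked test case IDs
    "REQ-1": ["TC-101", "TC-102"],
    "REQ-2": [],
}
tests = ["TC-101", "TC-102", "TC-200"]

print(untested_requirements(requirements, traceability))  # ['REQ-2', 'REQ-3']
print(orphan_tests(tests, traceability))                   # ['TC-200']
```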

Risk-Based Testing: We prioritize testing based on the risk associated with each feature. High-risk features, such as payment gateways or user authentication, receive more comprehensive testing.

Code Coverage Tools: For unit and integration tests, we utilize code coverage tools to assess how much of the codebase is exercised by our tests. While code coverage isn’t the sole metric, it provides valuable insight into untested areas.

Test Case Design Techniques: I employ various test design techniques like equivalence partitioning, boundary value analysis, and state transition testing to ensure comprehensive test coverage with efficient test case design.

Q 11. Explain your experience with performance testing and load testing.

Performance and load testing are critical for ensuring application stability and responsiveness under various conditions. My experience involves using various tools and techniques to assess system performance.

LoadRunner/JMeter: I have used tools like LoadRunner and JMeter to simulate realistic user loads on applications, identifying bottlenecks and performance limitations. For instance, I used JMeter to test the scalability of a web application expecting high traffic during promotional events, ensuring the servers could handle the load without significant performance degradation.

Performance Monitoring Tools: Beyond load generation, I utilize performance monitoring tools like New Relic or AppDynamics to monitor key performance indicators (KPIs) like response times, throughput, and resource utilization during testing. This enables granular analysis and helps pinpoint performance issues.
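Averages alone can hide slow outliers, so response-time KPIs are usually reported as percentiles as well. The sketch below summarizes one load-test run using the nearest-rank percentile definition. This is only one of several percentile definitions, and tools such as JMeter may compute theirs differently. The sample data is made up for illustration.

```python
import math


def percentile(samples_ms: list[float], pct: int) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    ordered = sorted(samples_ms)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(samples_ms: list[float], duration_s: float) -> dict[str, float]:
    """Basic KPIs for one run: throughput plus mean, median, and 95th-percentile latency."""
    return {
        "throughput_rps": len(samples_ms) / duration_s,
        "mean_ms": sum(samples_ms) / len(samples_ms),
        "p50_ms": percentile(samples_ms, 50),
        "p95_ms": percentile(samples_ms, 95),
    }


# Made-up response times (ms) collected over a 2-second window; one request was very slow.
samples = [120, 95, 110, 130, 105, 98, 102, 115, 108, 900]
print(summarize(samples, duration_s=2.0))
# {'throughput_rps': 5.0, 'mean_ms': 188.3, 'p50_ms': 108, 'p95_ms': 900}
```

The median (108 ms) looks healthy, but the p95 value exposes the 900 ms outlier that an investigation would start from.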

Performance Tuning: My experience includes collaborating with development teams to optimize application performance based on the findings from load and performance tests. This often involves database optimization, code refactoring, and infrastructure adjustments.

Q 12. How do you handle defects found in production?

Production defects are serious; they directly impact end-users. My approach prioritizes immediate mitigation, root cause analysis, and preventing recurrence.

Immediate Mitigation: The first step is to immediately assess the impact of the defect and implement a hotfix or workaround to minimize disruption to users. This might involve rolling back a release or deploying a temporary patch.

Root Cause Analysis: Once the immediate impact is addressed, a thorough root cause analysis is conducted to understand why the defect made it to production. This often involves reviewing code, test logs, and monitoring data.

Defect Prevention: The most important step is preventing similar defects from happening again. This includes improving testing processes, enhancing code reviews, and strengthening the CI/CD pipeline.

Post-Mortem Analysis: A post-mortem analysis is done to document the incident, identify contributing factors, and implement corrective actions to prevent similar issues.

Q 13. Describe your experience with security testing.

Security testing is crucial for protecting applications and user data. My experience encompasses various security testing techniques.

Static and Dynamic Analysis: I use static and dynamic analysis tools to identify vulnerabilities in the application’s code and runtime behavior. This includes using tools like SonarQube for static analysis and Burp Suite for dynamic analysis.

Penetration Testing: I have experience conducting penetration tests to simulate real-world attacks and identify potential security weaknesses. This involves identifying vulnerabilities and assessing the potential impact of exploits.

Security Best Practices: I am familiar with the OWASP Top 10, a widely used list of the most critical web application security risks, and incorporate its guidance throughout the development process to mitigate potential vulnerabilities.
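As an example of the kind of check this guidance leads to, the sketch below tests a login query for SQL injection, which falls under the Injection category of the OWASP Top 10. It uses Python’s built-in `sqlite3` with an in-memory database. The table, the user, and the plaintext password are hypothetical and kept deliberately simple; real systems should store password hashes. The vulnerable version builds SQL by string formatting, while the safe version uses `?` placeholders.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, password TEXT)")
    conn.execute("INSERT INTO users VALUES ('alice', 's3cret')")  # plaintext only to keep the sketch short
    return conn


def login_vulnerable(conn: sqlite3.Connection, name: str, password: str) -> bool:
    # User input is pasted into the SQL text, so it can change the query's logic.
    query = f"SELECT 1 FROM users WHERE name = '{name}' AND password = '{password}'"
    return conn.execute(query).fetchone() is not None


def login_safe(conn: sqlite3.Connection, name: str, password: str) -> bool:
    # Parameterized query: input is passed as data and never parsed as SQL.
    query = "SELECT 1 FROM users WHERE name = ? AND password = ?"
    return conn.execute(query, (name, password)).fetchone() is not None


PAYLOAD = "' OR '1'='1"
conn = make_db()
print(login_vulnerable(conn, "alice", PAYLOAD))  # True: the payload bypasses the password check
print(login_safe(conn, "alice", PAYLOAD))        # False: the payload is treated as a literal string
```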

Vulnerability Management: This involves tracking identified vulnerabilities, prioritizing their remediation, and verifying that fixes are effective.

Q 14. What is your process for reporting defects?

My defect reporting process is meticulous and aims for clear, concise communication. I use a structured approach to ensure all necessary information is captured.

Defect Tracking System: I utilize a defect tracking system (e.g., Jira, Bugzilla) to log, track, and manage defects. Each defect report includes a unique ID, a clear description of the problem, steps to reproduce, expected vs. actual results, severity, priority, screenshots or screen recordings, and relevant logs.
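The fields listed above can be modeled as a simple record with a completeness check, which is useful for flagging incomplete reports before triage. This is a minimal sketch: the field names and the sample defect are hypothetical, and trackers such as Jira or Bugzilla define their own field schemes.

```python
from dataclasses import dataclass, field


@dataclass
class DefectReport:
    defect_id: str
    summary: str
    steps_to_reproduce: list[str]
    expected_result: str
    actual_result: str
    severity: str
    priority: str
    attachments: list[str] = field(default_factory=list)  # screenshots, recordings, logs

    REQUIRED = ("defect_id", "summary", "steps_to_reproduce",
                "expected_result", "actual_result", "severity", "priority")

    def missing_fields(self) -> list[str]:
        """Names of required fields that are empty."""
        return [name for name in self.REQUIRED if not getattr(self, name)]


report = DefectReport(
    defect_id="SHOP-481",
    summary="Checkout button unresponsive on second click",
    steps_to_reproduce=["Add any item to the cart", "Click Checkout",
                        "Press Back, then click Checkout again"],
    expected_result="Checkout page opens",
    actual_result="Nothing happens; no error is shown",
    severity="Major",
    priority="",
    attachments=["checkout-second-click.mp4"],
)
print(report.missing_fields())  # ['priority']
```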

Clear and Concise Reporting: I ensure that the defect report is easy to understand by developers and stakeholders. Technical jargon is minimized, and clear, concise language is used.

Communication and Collaboration: I actively participate in defect triage meetings and communicate effectively with developers to ensure that defects are properly understood and addressed.

Defect Verification: After a defect is resolved, I verify the fix to ensure the problem is resolved correctly and doesn’t introduce new issues.

Q 15. How do you collaborate with developers to resolve defects?

Collaboration with developers is crucial for efficient defect resolution. It’s not about blame, but about a shared goal of delivering high-quality software. I believe in a proactive, communicative approach. This starts with clearly and concisely documenting defects, including steps to reproduce, expected vs. actual results, screenshots, and log files. I use a collaborative defect tracking system (like Jira or Azure DevOps) to ensure transparency and facilitate communication. I then work with the developers to understand the root cause of the defect. This often involves joint debugging sessions, where I can explain the issue from a testing perspective, and they can provide insights into the code’s logic. We discuss possible solutions and prioritize fixes based on severity and impact. Following the fix, I retest to verify the correction and run regression tests to catch any unintended side effects. For example, in a recent project, a UI defect caused inconsistent button behavior. By collaborating with the front-end developer and providing detailed reproduction steps and screenshots, we identified a CSS conflict, corrected it, and ensured the button functioned correctly across all supported browsers.


Q 16. Explain your experience with different types of testing (e.g., unit, integration, system, acceptance).

My experience encompasses the entire software testing lifecycle, involving various testing types. Unit testing, typically performed by developers, focuses on individual code modules. I review unit test coverage to ensure sufficient testing at this level. Integration testing verifies the interaction between different modules; I often contribute by creating test cases focused on the interfaces between components. System testing evaluates the complete, integrated system, verifying that requirements are met and the system works correctly. I design and execute system test cases, covering various functionalities and scenarios. Finally, acceptance testing involves validating the system against user requirements, often with the involvement of the client or end-users. I work closely with stakeholders in this phase to ensure satisfaction and acceptance. For example, in one project, I designed system tests focusing on data integrity, covering database transactions and ensuring data consistency under stress. In another, I participated in user acceptance testing to confirm the system met the specific requirements of the client, resulting in a smoother launch.

Q 17. How do you manage your workload when faced with a large number of defects?

Managing a large number of defects requires a structured approach. I prioritize defects by severity and impact, using a risk-based approach. Critical defects that severely impact functionality or security are addressed first. I then use defect tracking tools to manage the workflow, assigning priorities and tracking progress. I hold defect triage meetings to identify patterns and discuss the shared root cause of groups of similar defects. This allows for proactive measures to prevent similar defects from recurring in future development cycles. Furthermore, I work closely with the development team to establish realistic timelines for defect resolution. Communication is key: keeping stakeholders informed of progress and any potential delays is essential. Think of it like a firefighter: you don’t fight every small flame at once; you tackle the major fires first.
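One quick way to spot the patterns mentioned above before a triage meeting is to count open defects by a shared attribute, such as component. This minimal sketch uses `collections.Counter` on a hypothetical export in which each defect is a dict. The field names and values are assumptions, not any tracker’s actual schema.

```python
from collections import Counter


def hotspots(defects: list[dict], key: str, top: int = 3) -> list[tuple[str, int]]:
    """Most common values of one field (e.g. component), to surface clusters worth a joint root-cause review."""
    return Counter(d[key] for d in defects).most_common(top)


open_defects = [
    {"id": "D-1", "component": "checkout", "severity": "Critical"},
    {"id": "D-2", "component": "search", "severity": "Minor"},
    {"id": "D-3", "component": "checkout", "severity": "Major"},
    {"id": "D-4", "component": "checkout", "severity": "Minor"},
    {"id": "D-5", "component": "profile", "severity": "Trivial"},
]
print(hotspots(open_defects, "component", top=1))  # [('checkout', 3)]
```

Three open defects in checkout suggest a common cause worth investigating as a group rather than fixing one ticket at a time.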

Q 18. Describe your experience with using test management tools.

I have extensive experience with various test management tools, including Jira, Azure DevOps, and TestRail. These tools are essential for efficient defect tracking and management. I use them to create and assign test cases, track defects, generate reports, and manage testing activities. For example, in Jira, I create detailed defect reports with relevant information such as steps to reproduce, screenshots, and expected versus actual results. I utilize the workflow features to track the status of defects from discovery to resolution and closure. The reporting features allow me to monitor testing progress and identify areas needing attention. The ability to link test cases to defects ensures complete traceability throughout the process. My experience extends to customizing workflows in these tools to meet specific project needs.

Q 19. How do you ensure the quality of your test data?

High-quality test data is crucial for reliable testing. I employ several strategies to ensure this. First, I work with developers or database administrators to access realistic and representative data, possibly utilizing a subset of production data (sanitized to protect sensitive information). For scenarios where production data isn’t suitable, I use test data generation tools to create synthetic data that mimics real-world data patterns. Data masking techniques are crucial to protect sensitive information. I also create test data management plans to outline data creation, maintenance, and cleanup procedures. Furthermore, I verify the quality of the test data by validating its structure, content, and coverage. Using data validation scripts, I ensure data integrity and consistency. For example, before executing a performance test, I generated a large dataset that mimicked the expected production volume using a test data generation tool, ensuring the test accurately reflected realistic conditions.
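The three steps described here (masking, synthetic generation, and validation) can be sketched in a few lines of Python. Everything in this example is an illustrative assumption: the field names, the `example.test` domain, the age range, and the deliberately loose email regex, which is not a full RFC 5322 validator. One caveat: an unsalted hash of a guessable value such as an email address can be reversed by brute force. A real masking step would add a secret salt or use a dedicated masking tool.

```python
import hashlib
import random
import re

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[a-z]{2,}$")  # loose sanity check only


def mask_email(email: str) -> str:
    """Deterministic pseudonym: the same input always maps to the same masked value."""
    digest = hashlib.sha256(email.lower().encode()).hexdigest()[:10]
    return f"user_{digest}@example.test"


def synthetic_customers(n: int, seed: int = 42) -> list[dict]:
    """Reproducible fake customer rows; the fixed seed makes test runs repeatable."""
    rng = random.Random(seed)
    return [
        {"id": i, "email": f"customer{i}@example.test", "age": rng.randint(18, 90)}
        for i in range(1, n + 1)
    ]


def validate(rows: list[dict]) -> list[str]:
    """Human-readable problems: duplicate IDs, malformed emails, out-of-range ages."""
    problems, seen = [], set()
    for row in rows:
        if row["id"] in seen:
            problems.append(f"duplicate id {row['id']}")
        seen.add(row["id"])
        if not EMAIL_RE.match(row["email"]):
            problems.append(f"bad email in row {row['id']}")
        if not 18 <= row["age"] <= 120:
            problems.append(f"age out of range in row {row['id']}")
    return problems


rows = synthetic_customers(1000)
print(validate(rows))                  # [] when the generated data is clean
print(mask_email("jane@corp.com"))     # user_<10 hex chars>@example.test
```

Because the masking is deterministic, a customer masked in one table still matches the same masked value in another table, so joins keep working.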

Q 20. How do you stay updated on the latest testing technologies and best practices?

Staying updated in the rapidly evolving field of testing is a continuous process. I actively participate in online communities, forums, and conferences to learn about new testing technologies and best practices. I follow industry influencers and blogs, and I read industry publications and research papers. I also actively pursue professional development opportunities, such as attending workshops and taking online courses, to expand my skills and knowledge. For example, I recently completed a course on automated testing using Selenium, enhancing my ability to implement automated tests and improve efficiency. Continuous learning is critical to staying ahead of the curve and applying the latest techniques to enhance testing effectiveness.

Q 21. What are some common challenges you face in defect removal, and how do you overcome them?

Common challenges in defect removal include unclear defect reports, inconsistent test environments, and time constraints. To overcome unclear defect reports, I collaborate with the reporter to gather additional information and clarify ambiguities. I use tools and techniques to reproduce the defect consistently. Inconsistent test environments can lead to unreliable results. I address this by ensuring the test environment accurately mirrors the production environment. Finally, time constraints necessitate prioritization, which I achieve by focusing on high-severity defects first and using efficient testing techniques to maximize test coverage within limited timeframes. For example, when faced with a large backlog of low-severity defects, I employed automated testing to cover those efficiently while focusing my manual efforts on higher-priority items.

Q 22. Explain your understanding of different defect life cycles.

Defect life cycles track a defect’s journey from discovery to resolution. Different organizations may have slightly varying models, but common stages include:

- New/Open: The defect is reported and logged. Details like severity, priority, and steps to reproduce are recorded.

- Assigned: A developer or team is assigned responsibility for investigating and fixing the defect.

- In Progress/Fixed: The assigned team member works on the solution and marks the defect as Fixed once the change is complete.

- Pending Retest: The fix is ready, and the defect is handed back to the testing team for verification.

- Retesting: The testers verify the fix. If successful, the defect is closed.

- Reopened: If the fix doesn’t resolve the issue, or a new problem emerges, the defect is reopened and goes through the cycle again.

- Closed/Resolved: The defect is successfully fixed and verified.

- Rejected: The defect report might be rejected if it’s deemed not a defect, a duplicate, or out of scope.

Think of it like a doctor treating a patient: the ‘New’ stage is like the initial diagnosis, ‘In Progress’ is the treatment, ‘Retesting’ is the post-treatment checkup, and ‘Closed’ is the patient’s full recovery. Understanding the life cycle is crucial for effective defect tracking and management.
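A defect life cycle is essentially a state machine. The minimal sketch below encodes the stages listed above and rejects any move that the workflow doesn’t allow. The transition table is an assumption for illustration. For example, it treats Closed and Rejected as final, whereas some teams allow reopening a closed defect. Most trackers let administrators configure their own version of this table.

```python
from enum import Enum


class State(Enum):
    NEW = "New"
    ASSIGNED = "Assigned"
    IN_PROGRESS = "In Progress"
    PENDING_RETEST = "Pending Retest"
    RETESTING = "Retesting"
    REOPENED = "Reopened"
    CLOSED = "Closed"
    REJECTED = "Rejected"


# Allowed moves; anything not listed is refused. This particular table is illustrative.
TRANSITIONS = {
    State.NEW: {State.ASSIGNED, State.REJECTED},
    State.ASSIGNED: {State.IN_PROGRESS, State.REJECTED},
    State.IN_PROGRESS: {State.PENDING_RETEST},
    State.PENDING_RETEST: {State.RETESTING},
    State.RETESTING: {State.CLOSED, State.REOPENED},
    State.REOPENED: {State.ASSIGNED},
    State.CLOSED: set(),
    State.REJECTED: set(),
}


class Defect:
    def __init__(self, key: str):
        self.key = key
        self.state = State.NEW
        self.history = [State.NEW]

    def move_to(self, target: State) -> None:
        if target not in TRANSITIONS[self.state]:
            raise ValueError(f"{self.key}: cannot go from {self.state.value} to {target.value}")
        self.state = target
        self.history.append(target)


# A defect whose first fix fails retesting, then passes on the second attempt.
bug = Defect("PAY-17")
for step in [State.ASSIGNED, State.IN_PROGRESS, State.PENDING_RETEST, State.RETESTING,
             State.REOPENED, State.ASSIGNED, State.IN_PROGRESS, State.PENDING_RETEST,
             State.RETESTING, State.CLOSED]:
    bug.move_to(step)
print(bug.state.value)  # Closed
```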

Q 23. Describe a situation where you had to deal with a critical defect.

During the launch of a major e-commerce platform update, we encountered a critical defect that prevented users from adding items to their shopping carts. This impacted sales directly and led to significant customer frustration. The defect stemmed from a conflict between the newly implemented payment gateway and the existing inventory management system. We immediately escalated the issue, forming a cross-functional team of developers, testers, and customer support personnel. We worked around the clock, using a combination of root cause analysis and temporary workarounds to minimize disruption. We initially implemented a temporary solution to partially restore cart functionality while a permanent fix was developed and thoroughly tested. The permanent fix involved rewriting a specific section of the code to resolve the conflict between the two systems. Post-mortem analysis revealed a lack of thorough integration testing as the root cause, resulting in process improvements to our testing strategy.

Q 24. What is your approach to creating effective test plans?

Creating an effective test plan involves a structured approach. I start by clearly defining the scope: which software components and functionalities will be tested. Next, I identify the testing objectives, i.e., what we aim to achieve through testing. Then, I determine the test strategy: which test levels and types will be used (unit, integration, system, regression, etc.) and their timelines. I then outline the resources required: personnel, tools, and environment. Test cases are meticulously designed, covering both positive and negative test scenarios, based on requirements and risk analysis. The plan should also include entry and exit criteria, defect tracking procedures, and a clear reporting mechanism. Finally, I have the test plan reviewed and approved by stakeholders so that everyone is on the same page. This collaborative approach helps prevent misunderstandings and keeps testing efforts focused. A well-defined plan acts as a roadmap, guiding the testing process and ensuring comprehensive coverage.

Q 25. How do you contribute to improving the overall software development process?

I contribute to improving the software development process by actively participating in various stages. During requirements gathering, I collaborate with developers and stakeholders to identify potential defects early on. I champion the use of best practices, such as code reviews, static analysis tools, and robust testing methodologies. I advocate for clear documentation and communication to prevent misunderstandings. By analyzing defect trends and patterns, I highlight areas needing improvement in the development process. My post-release defect reports, including root cause analysis, inform the development team and lead to more effective testing and prevention strategies. This proactive approach reduces defects, improves software quality, and increases overall team efficiency.

Q 26. What are some common causes of defects in software development?

Defects in software development arise from various sources. Common causes include:

- Requirements ambiguity or incompleteness: Unclear or missing requirements often lead to misinterpretations and incorrect implementation.

- Poor design: A poorly designed system is more prone to defects due to complexity and lack of maintainability.

- Coding errors: These are among the most frequent sources of defects, ranging from simple typos to logic errors.

- Inadequate testing: Insufficient or ineffective testing leaves defects undiscovered until later stages.

- Integration problems: Issues arise when different components of a system don’t work together seamlessly.

- Changes in requirements: Late changes in requirements can disrupt the development process and introduce defects.

- Lack of communication: Poor communication between team members can result in misunderstandings and errors.

- Time pressure: Rushing development often leads to compromises in quality and increased defects.

Understanding these causes allows for the development of preventative measures throughout the software development lifecycle.

Q 27. Explain your experience with non-functional testing.

My experience with non-functional testing is extensive. Non-functional testing focuses on aspects of the software that are not directly related to specific features but are crucial for user experience and system stability. This includes:

- Performance testing: Assessing response times, load handling, and scalability.

- Security testing: Identifying vulnerabilities and ensuring data protection.

- Usability testing: Evaluating the ease of use and user-friendliness of the software.

- Reliability testing: Determining how often the system fails and how quickly it recovers.

- Compatibility testing: Ensuring the software works correctly across different browsers, operating systems, and devices.

For example, I’ve used tools like JMeter for performance testing and Selenium for cross-browser compatibility testing. Understanding non-functional requirements is critical to delivering a user-friendly, secure, and reliable software product.
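When assessing response times, load-test results are usually summarized as percentiles rather than averages, because a few slow requests can hide behind a healthy mean. The sketch below computes nearest-rank percentiles over a hypothetical list of response times in milliseconds, assumed to be already extracted from a load-test run. The 500 ms p95 target is likewise an assumption, not a real requirement.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Hypothetical response times (ms) from one load-test run.
response_ms = [120, 95, 310, 101, 99, 105, 980, 110, 97, 102]

SLA_P95_MS = 500  # assumed target, for illustration only
summary = {p: percentile(response_ms, p) for p in (50, 90, 95)}
print(summary)
print("p95 within SLA:", summary[95] <= SLA_P95_MS)
```

Here the median (102 ms) looks fine, but the 95th percentile lands on the 980 ms outlier and fails the assumed target. That slow tail is exactly what performance testing is meant to expose.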

Q 28. How would you approach testing a new software feature with limited documentation?

Testing a new feature with limited documentation requires a strategic approach. I would start by examining the code itself to understand its functionality and how it interacts with other parts of the system. I’d use exploratory testing techniques, trying out different scenarios and inputs to discover potential issues, and I would leverage any other available information, such as design mockups or developer comments within the code. Even without detailed documentation, I would prioritize critical paths and edge cases so that serious problems surface early. Collaborating with developers to clarify ambiguous aspects is essential. This iterative approach, combining code analysis, exploratory testing, and developer collaboration, lets me test effectively even when documentation is incomplete.
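Edge cases can be chosen systematically even without a spec: read the limits out of the code, then probe just below, at, and just above each one. The sketch below uses boundary-value analysis against a hypothetical `shipping_cost` function, standing in for an undocumented feature. It records every outcome instead of stopping at the first failure, so the results can be reviewed with developers. All names and limits are illustrative assumptions.

```python
def boundary_values(low, high, step=1):
    """Classic boundary-value picks around an inclusive [low, high] range."""
    return [low - step, low, low + step, high - step, high, high + step]


# Hypothetical feature under test, standing in for code with little documentation.
def shipping_cost(weight_kg):
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    if weight_kg > 30:
        raise ValueError("parcel too heavy")
    return 5.0 if weight_kg <= 5 else 5.0 + (weight_kg - 5) * 1.5


def probe(func, inputs):
    """Run func on each input and record the outcome rather than failing fast."""
    results = {}
    for value in inputs:
        try:
            results[value] = ("ok", func(value))
        except Exception as exc:  # record any failure for follow-up with developers
            results[value] = ("error", type(exc).__name__)
    return results


# Valid range inferred from reading the code: 1..30 kg (integer weights assumed),
# plus values around the 5 kg pricing tier spotted in the same code.
inputs = sorted(set(boundary_values(1, 30) + [4, 5, 6]))
for value, outcome in probe(shipping_cost, inputs).items():
    print(value, outcome)
```

The printed table is a starting point for questions rather than a verdict. Whether 0 kg should raise an error or 5 kg belongs to the lower tier is exactly the kind of ambiguity to confirm with the developers.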

Key Topics to Learn for Defect Removal Interview

- Defect Prevention Strategies: Understanding proactive measures to minimize defects during the software development lifecycle. Explore techniques like code reviews, static analysis, and design reviews.

- Defect Detection Techniques: Mastering various testing methodologies including unit testing, integration testing, system testing, and user acceptance testing. Consider the practical application of each in different development environments.

- Defect Tracking and Management: Learn about effective bug tracking systems and the importance of clear, concise bug reports. Practice documenting defects with sufficient detail for efficient resolution.

- Root Cause Analysis: Develop your skills in identifying the underlying causes of defects, rather than just addressing symptoms. Explore techniques like the 5 Whys and Fishbone diagrams.

- Defect Metrics and Reporting: Understand key metrics like defect density, defect removal efficiency, and mean time to resolution. Practice analyzing these metrics to identify areas for improvement.

- Software Quality Assurance (SQA) Processes: Familiarize yourself with the overall SQA lifecycle and your role within it. Understand the relationship between defect removal and overall software quality.

- Development Methodologies and Defect Removal: Explore how development methodologies such as Agile, Waterfall, and DevOps influence defect removal practices.
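The defect metrics mentioned above are simple ratios, and practicing them by hand makes them easier to discuss in an interview. The sketch below uses common definitions: defect density per thousand lines of code (KLOC), defect removal efficiency (DRE) as the percentage of all known defects found before release, and mean time to resolution as the average open-to-resolved duration. Teams sometimes use other size measures or ticket states, so treat these helpers and the hypothetical figures as illustrations only.

```python
from datetime import datetime, timedelta


def defect_density(defect_count, lines_of_code):
    """Defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defect_count * 1000 / lines_of_code


def defect_removal_efficiency(found_before_release, found_after_release):
    """Share (%) of all known defects that were removed before release."""
    total = found_before_release + found_after_release
    if total == 0:
        raise ValueError("no defects recorded")
    return 100 * found_before_release / total


def mean_time_to_resolution(tickets):
    """Average of (resolved - opened) over (opened, resolved) datetime pairs."""
    if not tickets:
        raise ValueError("no resolved tickets")
    total = sum((resolved - opened for opened, resolved in tickets), timedelta())
    return total / len(tickets)


# Hypothetical release figures, for illustration only.
print(defect_density(50, 25_000))        # 2.0 defects per KLOC
print(defect_removal_efficiency(45, 5))  # 90.0 percent
tickets = [
    (datetime(2024, 3, 1, 9, 0), datetime(2024, 3, 2, 9, 0)),    # 24 hours
    (datetime(2024, 3, 3, 10, 0), datetime(2024, 3, 3, 22, 0)),  # 12 hours
]
print(mean_time_to_resolution(tickets))  # 18:00:00
```

A DRE of 90% means one in ten known defects escaped to users. Tracking that figure release over release is a direct way to show whether prevention and testing improvements are working.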

Next Steps

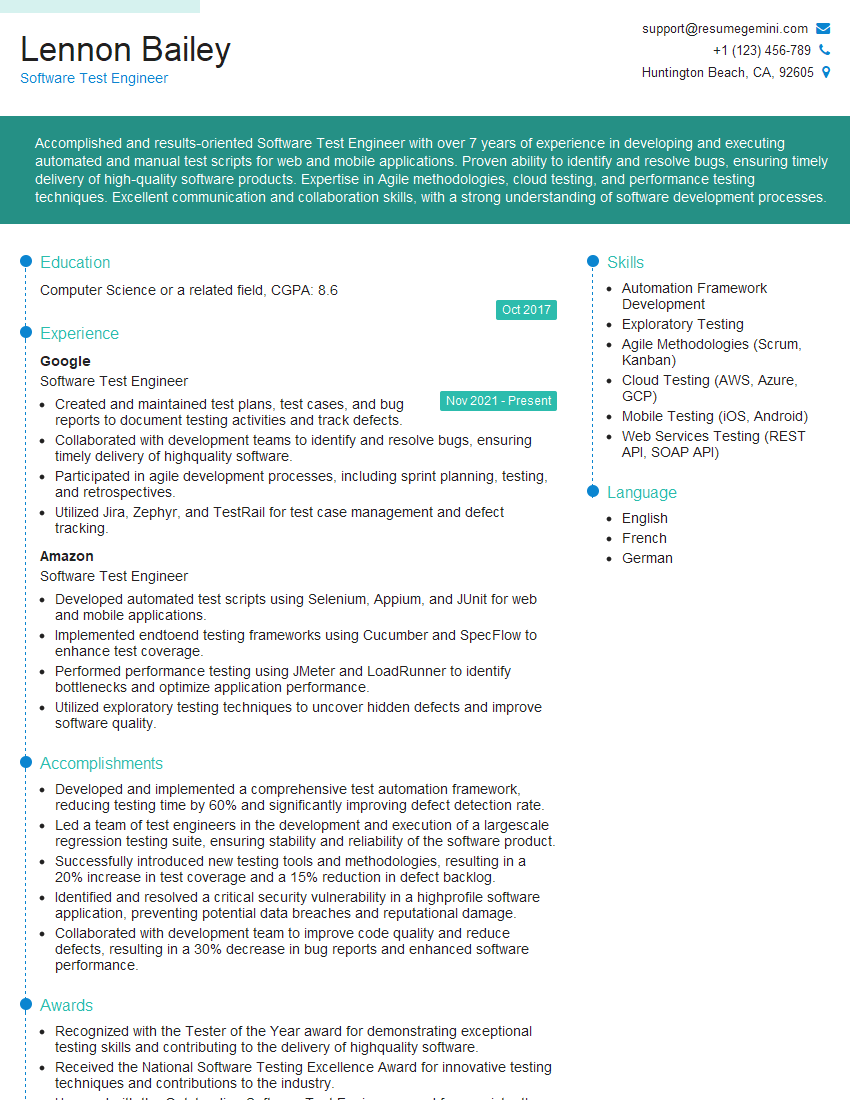

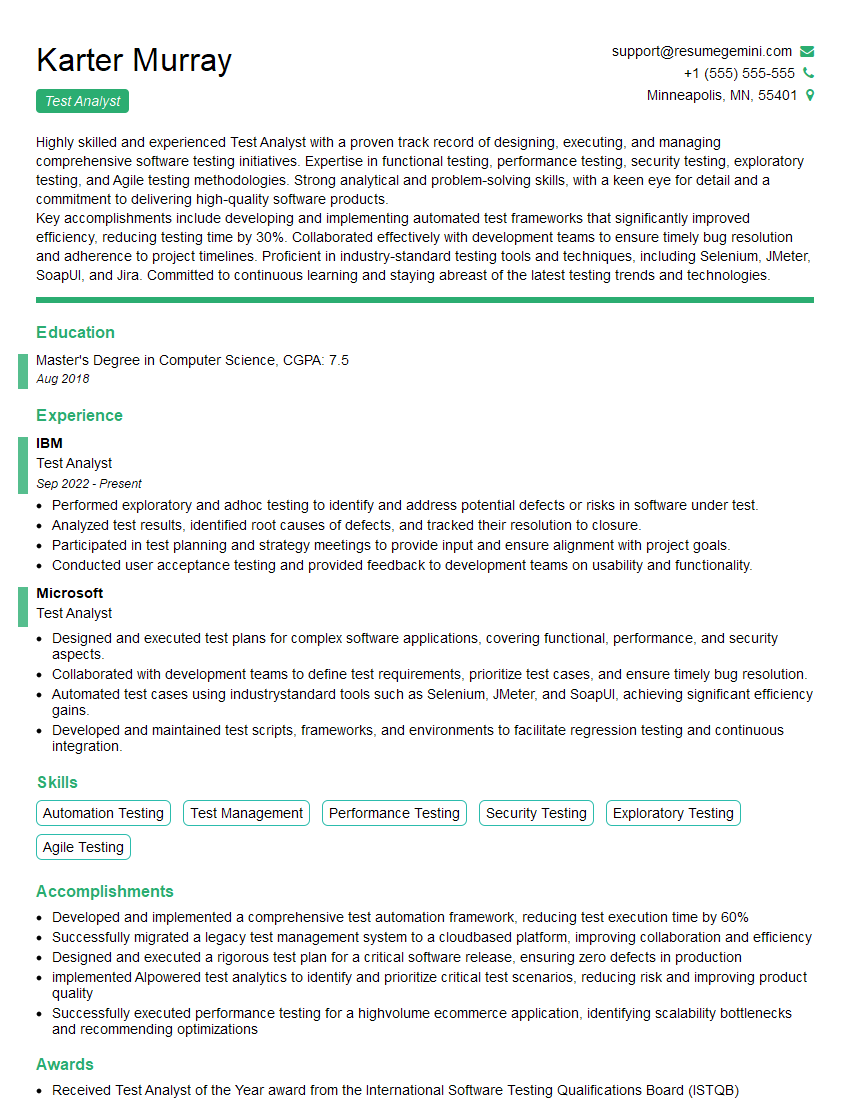

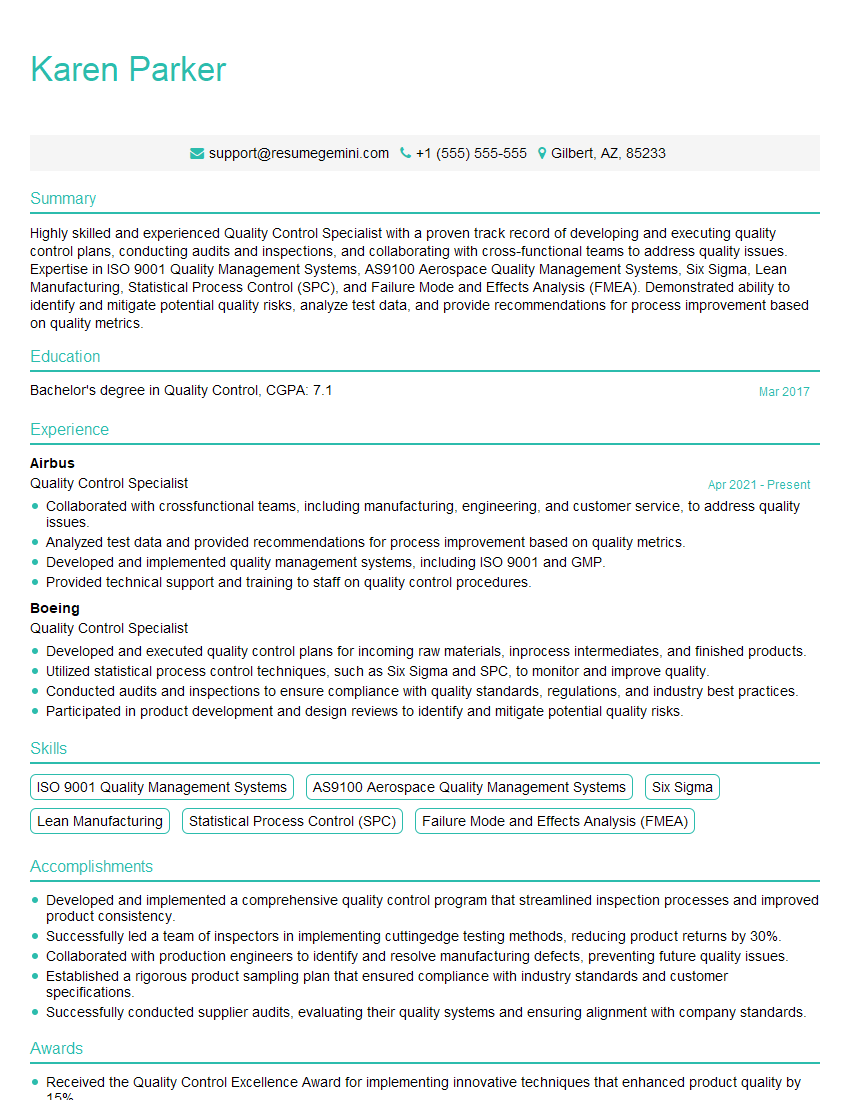

Mastering defect removal is crucial for advancing your career in software development. Proficiency in this area demonstrates a commitment to quality and problem-solving, qualities highly valued by employers. To significantly enhance your job prospects, create an ATS-friendly resume that effectively highlights your skills and experience. ResumeGemini is a trusted resource that can help you build a professional, impactful resume tailored to your specific career goals. We offer examples of resumes specifically tailored to Defect Removal roles, showcasing how to best present your expertise to potential employers.
