Unlock your full potential by mastering the most common Software Data Entry interview questions. This blog offers a deep dive into the critical topics, so you’re prepared not only to answer but to excel. With these insights, you’ll approach your interview with clarity and confidence.

Questions Asked in a Software Data Entry Interview

Q 1. What software programs are you proficient in for data entry?

My proficiency in data entry software spans a wide range of applications. I’m highly skilled in using spreadsheet software like Microsoft Excel and Google Sheets for data organization and manipulation. I’m also experienced with database management systems such as MySQL and PostgreSQL, allowing me to efficiently handle structured data. Furthermore, I’m comfortable working with specialized data entry tools like those found in CRM platforms (e.g., Salesforce) and ERP systems (e.g., SAP). My experience extends to using data import/export utilities to seamlessly transfer data between different systems. Finally, I’m adept at using various text editors and word processors for data cleaning and preparation before import into database systems.

Q 2. What is your typing speed and accuracy rate?

My typing speed consistently averages 80 words per minute with an accuracy rate exceeding 99%. This speed and accuracy are maintained even when working with complex data sets containing special characters or numerical codes. I achieve this through years of practice and a focus on maintaining an ergonomic posture to minimize errors and fatigue. I regularly utilize online typing tests to track my progress and identify areas for improvement. Maintaining high accuracy is crucial to me because it directly translates to minimizing errors that can lead to costly rework.

Q 3. Describe your experience with data validation and error correction.

Data validation and error correction are integral parts of my data entry workflow. I employ multiple techniques to ensure data accuracy. This starts with carefully reviewing source documents to identify any inconsistencies or ambiguities *before* data entry. During the entry process, I leverage built-in validation features within software (e.g., data type validation in Excel, constraint checking in databases). If an error is detected, I trace it back to its source, rectify it, and document the correction. I frequently utilize data comparison tools to spot discrepancies between different datasets. For example, if I’m entering customer data from a spreadsheet into a database, I’d use a comparison tool to ensure all data fields align and values match. My experience with complex data sets has taught me to be meticulous and persistent in ensuring data integrity.
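The comparison step described above can be sketched in a few lines of Python. This is a minimal illustration rather than any particular comparison tool: the function name `find_mismatches`, the `customer_id` key, and the sample records are all assumptions made for the example.

```python
def find_mismatches(source_rows, target_rows, key="customer_id"):
    """Compare two lists of dict records that share a key field.

    Returns (missing_in_target, field_mismatches).
    """
    target_by_key = {row[key]: row for row in target_rows}
    missing = []
    mismatches = []
    for row in source_rows:
        other = target_by_key.get(row[key])
        if other is None:
            missing.append(row[key])
            continue
        for field, value in row.items():
            if other.get(field) != value:
                # Record the key, the field, the source value, and the target value.
                mismatches.append((row[key], field, value, other.get(field)))
    return missing, mismatches


# Hypothetical data standing in for a spreadsheet export and a database query.
spreadsheet = [
    {"customer_id": 1, "name": "Ana Diaz", "email": "ana@example.com"},
    {"customer_id": 2, "name": "Ben Okoro", "email": "ben@example.com"},
    {"customer_id": 3, "name": "Chen Li", "email": "chen@example.com"},
]
database = [
    {"customer_id": 1, "name": "Ana Diaz", "email": "ana@example.com"},
    {"customer_id": 2, "name": "Ben Okoro", "email": "ben@example.org"},
]

missing, mismatches = find_mismatches(spreadsheet, database)
print(missing)
print(mismatches)
```

Here customer 3 is reported as missing from the database and customer 2 is flagged for a differing email, which are the two kinds of discrepancy worth tracing back to the source.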

Q 4. How do you handle large volumes of data entry tasks?

Handling large volumes of data requires a structured and organized approach. I break large tasks into smaller, manageable chunks and prioritize those chunks by urgency and importance. I also use tools and techniques to streamline the process. For example, if I’m importing a large CSV file, I’ll use scripts or automation tools to expedite the process. I also keep consistent work habits, taking short, regular breaks to avoid fatigue and maintain focus, which reduces errors and improves efficiency over long periods. Regular quality checks throughout the process catch errors early, rather than leaving them all to be corrected at the end.
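One way to combine chunking with early quality checks is to read a CSV file in fixed-size batches. The sketch below uses only the Python standard library; the helper name `read_in_batches`, the column names, and the in-memory sample file are assumptions for illustration.

```python
import csv
import io
from itertools import islice


def read_in_batches(csv_file, batch_size):
    """Yield lists of up to `batch_size` rows (as dicts) from an open CSV file."""
    reader = csv.DictReader(csv_file)
    while True:
        batch = list(islice(reader, batch_size))
        if not batch:
            break
        yield batch


# In-memory stand-in for a large file; real use would pass open(path, newline="").
sample = io.StringIO("id,amount\n1,10\n2,20\n3,x\n4,40\n5,50\n")

batch_sizes = []
bad_ids = []
for batch in read_in_batches(sample, batch_size=2):
    # Checking each batch as it is read surfaces problems early.
    bad_ids.extend(row["id"] for row in batch if not row["amount"].isdigit())
    batch_sizes.append(len(batch))

print(batch_sizes)
print(bad_ids)
```

Because each batch is checked as it is read, a bad value is reported as soon as its batch is processed instead of after the whole file has been loaded.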

Q 5. Explain your experience with different data entry methods (e.g., manual, automated).

My experience encompasses both manual and automated data entry methods. Manual entry, while slower, allows for careful review and validation of each data point. I’ve used this extensively for smaller datasets where accuracy is paramount and automation isn’t feasible. Automated entry, on the other hand, is ideal for large datasets. I’ve utilized tools like macros in Excel, scripting languages (like Python), and application programming interfaces (APIs) to automate repetitive entry tasks, significantly increasing efficiency. For example, I’ve written Python scripts to extract data from web forms and automatically populate a database. The choice between methods depends on the size and complexity of the dataset, as well as the need for real-time validation.

Q 6. How do you ensure data accuracy and integrity during entry?

Data accuracy and integrity are paramount. I employ several strategies to ensure this. First, I meticulously check source documents for accuracy and completeness before commencing data entry. Secondly, I utilize built-in data validation tools in software and database constraints to prevent incorrect entries. Thirdly, I perform regular data checks during and after entry. This might involve running data consistency checks or comparing data to other sources. If discrepancies arise, I thoroughly investigate their causes and make appropriate corrections. Finally, I always maintain detailed logs of my work, documenting any changes or corrections made to the data. This audit trail is vital for ensuring data integrity and facilitates quick troubleshooting if any issues arise.
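Database constraints of the kind mentioned above can be tried out with Python's built-in `sqlite3` module. This is a small sketch under assumed names: the `customers` table, its columns, and the age range are hypothetical, and other database systems express the same constraints with slightly different syntax.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,
        email       TEXT NOT NULL UNIQUE,
        age         INTEGER CHECK (age BETWEEN 0 AND 130)
    )
    """
)

rows = [
    (1, "ana@example.com", 34),
    (2, "ben@example.com", -5),   # violates the CHECK constraint
    (3, "ana@example.com", 41),   # violates the UNIQUE constraint
]

accepted, rejected = [], []
for row in rows:
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute("INSERT INTO customers VALUES (?, ?, ?)", row)
        accepted.append(row[0])
    except sqlite3.IntegrityError as exc:
        # Keeping the reason gives a simple log of rejected entries.
        rejected.append((row[0], str(exc)))

print(accepted)
print(rejected)
```

The database itself refuses the two invalid rows, and the `rejected` list doubles as a basic record of what was turned away and why.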

Q 7. Describe your experience using databases and data management systems.

I have extensive experience working with various databases and data management systems. My skills include designing and implementing relational databases using SQL, querying and manipulating data using SQL commands (SELECT, INSERT, UPDATE, DELETE), and managing database schemas. I’m proficient in working with different database management systems, such as MySQL, PostgreSQL, and MS SQL Server. Beyond SQL, I’m comfortable using NoSQL databases like MongoDB for specific applications. I’m also familiar with data warehousing concepts and the ETL (Extract, Transform, Load) process. This allows me to efficiently manage, process, and analyze large datasets. For example, I’ve been involved in projects where I helped extract data from multiple sources, transform it into a consistent format, and load it into a data warehouse for reporting and analysis.
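For candidates who want to demonstrate the four basic SQL commands, a compact, self-contained example helps. The sketch below runs them against an in-memory SQLite database from Python; the `products` table and its values are made up for the illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE products (sku TEXT PRIMARY KEY, name TEXT NOT NULL, stock INTEGER NOT NULL)"
)

# INSERT: parameter placeholders (?) keep values separate from the SQL text.
conn.executemany(
    "INSERT INTO products (sku, name, stock) VALUES (?, ?, ?)",
    [("A-100", "Stapler", 12), ("A-200", "Binder", 0), ("A-300", "Marker", 40)],
)

# UPDATE: restock one product.
conn.execute("UPDATE products SET stock = stock + ? WHERE sku = ?", (25, "A-200"))

# DELETE: remove a discontinued product.
conn.execute("DELETE FROM products WHERE sku = ?", ("A-300",))

# SELECT: read back what remains.
remaining = conn.execute("SELECT sku, stock FROM products ORDER BY sku").fetchall()
print(remaining)
```

Using placeholders instead of building SQL strings by hand also avoids quoting mistakes when entered values contain apostrophes or other special characters.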

Q 8. How do you prioritize tasks when faced with multiple data entry projects?

Prioritizing data entry tasks involves a strategic approach that considers urgency, importance, and dependencies. I typically use a combination of methods. First, I assess each project’s deadline and the potential consequences of delay. Projects with tighter deadlines or significant impact on other processes receive higher priority. Second, I consider the size and complexity of each project. Smaller, simpler projects can often be completed more quickly and can be tackled before larger, more intricate ones. Finally, I look for dependencies. If one project’s completion is necessary to begin another, the prerequisite project takes precedence over the one that depends on it.

For example, if I’m working on updating customer records (urgent), processing payroll data (critical), and migrating old inventory data (less time-sensitive), I’d prioritize payroll and customer records first, followed by inventory migration. I often use project management tools to visualize task dependencies and deadlines, creating a clear workflow.
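The deadline-plus-dependency reasoning can be expressed as a small scheduling sketch. It uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later); the task names, the deadline numbers, and the extra `inventory_report` task are hypothetical additions for illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks: days until each deadline.
deadlines = {
    "payroll": 1,
    "customer_records": 2,
    "inventory_report": 3,
    "inventory_migration": 10,
}
# Each task maps to the set of tasks that must finish before it can start.
depends_on = {
    "payroll": set(),
    "customer_records": set(),
    "inventory_migration": set(),
    "inventory_report": {"inventory_migration"},
}

sorter = TopologicalSorter(depends_on)
sorter.prepare()

order = []
ready = []
while sorter.is_active():
    ready.extend(sorter.get_ready())
    # Among tasks whose prerequisites are done, take the nearest deadline first.
    ready.sort(key=lambda task: deadlines[task])
    task = ready.pop(0)
    order.append(task)
    sorter.done(task)

print(order)
```

Note that `inventory_report` has an earlier deadline than `inventory_migration` but is still scheduled after it, because it cannot start until the migration is complete. A fuller scheduler might also pull the migration forward for that reason; this sketch does not.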

Q 9. How do you handle conflicting data or data inconsistencies?

Handling conflicting or inconsistent data requires meticulous attention to detail and a systematic approach. My first step is to identify the source of the conflict. This might involve examining multiple data sources, checking for data entry errors, or reviewing data transformation rules. Once the source is identified, I investigate the data’s accuracy and validity. I might cross-reference information with other reliable sources or consult with subject matter experts to resolve discrepancies.

For instance, if a customer’s address differs across two databases, I’d investigate both entries, perhaps contacting the customer directly for clarification or referring to delivery records to determine the correct address. If the conflict cannot be resolved through verification, I would document the inconsistency, noting the possible sources of error, and escalate the issue to the appropriate team for further investigation. Proper documentation is critical for transparency and future auditing.

Q 10. Explain your experience with data cleaning and transformation techniques.

Data cleaning and transformation are crucial aspects of my work. Cleaning involves identifying and correcting or removing inaccurate, incomplete, irrelevant, duplicated, or improperly formatted data. This often involves using data validation techniques, such as checking for data type errors (e.g., text in a numerical field), range checks (e.g., age cannot be negative), and consistency checks (e.g., verifying that the postal code matches the state). Transformation involves converting data from one format to another, consolidating multiple data sources, or modifying existing data to meet specific requirements.

For example, I might use a scripting language like Python with a library like Pandas to clean and transform data. This might involve tasks such as removing leading/trailing whitespace from strings (df['column'] = df['column'].str.strip()), handling missing values (df.fillna(0, inplace=True)), converting data types (df['age'] = df['age'].astype(int), which raises an error if missing values remain, so it comes after the fill step), or standardizing data formats (e.g., converting date formats). My experience spans diverse tools and techniques, depending on the dataset and the required outcome.
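Put together, those Pandas steps might look like the following. This is a minimal sketch that assumes Pandas is installed; the column names and sample values are invented, and filling a missing age with 0 is only a placeholder choice that a real project would need to justify.

```python
import pandas as pd

# Hypothetical raw input with stray whitespace, a missing age, and text dates.
df = pd.DataFrame(
    {
        "name": ["  Ana Diaz ", "Ben Okoro", " Chen Li"],
        "age": ["34", None, "41"],
        "signup": ["03/15/2023", "11/02/2022", "01/30/2024"],
    }
)

# Remove leading and trailing whitespace.
df["name"] = df["name"].str.strip()

# Parse text to numbers, fill the gap, then cast; casting first would fail on the missing value.
df["age"] = pd.to_numeric(df["age"]).fillna(0).astype(int)

# Standardize dates from MM/DD/YYYY text to ISO 8601 strings.
df["signup"] = pd.to_datetime(df["signup"], format="%m/%d/%Y").dt.strftime("%Y-%m-%d")

print(df)
```

Giving `pd.to_datetime` an explicit `format` avoids ambiguity between month-first and day-first dates, which is a common source of silent errors.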

Q 11. What is your experience with data migration?

Data migration involves moving data from one system to another. This process requires careful planning and execution to minimize disruption and ensure data integrity. My experience includes migrating data between various database systems (e.g., MySQL to PostgreSQL, Oracle to SQL Server), spreadsheet applications, and cloud-based storage solutions. The process typically includes data extraction, transformation, and loading (ETL). Data validation and testing are crucial at each step.

In a recent project, I migrated customer data from a legacy system to a new CRM platform. This involved extracting the data, transforming it to match the CRM’s schema, and loading it into the new system. I used ETL tools to automate the process and implemented rigorous testing procedures to ensure data accuracy and completeness after migration. This included comparisons against the source data and comprehensive validation checks within the new system.

Q 12. Describe your experience with data backup and recovery procedures.

Data backup and recovery are critical for business continuity and data protection. I’m proficient in establishing and maintaining backup procedures, including full backups, incremental backups, and differential backups. I understand the importance of storing backups securely, both on-site and off-site, using techniques such as cloud storage or external hard drives. Recovery procedures are equally important; I’ve practiced restoring data from backups to ensure a smooth recovery in case of data loss or system failure.

My experience includes working with different backup software and strategies, adapting to various organizational requirements and security protocols. Regular testing of backup and recovery processes is a key part of my routine, ensuring that the procedures are effective and reliable in real-world scenarios.
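The difference between full, differential, and incremental backups comes down to which cutoff time is used when selecting files. The sketch below shows only that selection logic, not a backup tool; the function name `files_to_back_up` and the simplified integer timestamps are assumptions.

```python
def files_to_back_up(file_mtimes, kind, last_full, last_backup):
    """Select files for a backup run.

    file_mtimes: dict of path -> last-modified timestamp (any comparable number).
    kind: "full", "differential", or "incremental".
    last_full: time of the most recent full backup.
    last_backup: time of the most recent backup of any kind.
    """
    if kind == "full":
        return sorted(file_mtimes)
    if kind == "differential":
        cutoff = last_full      # everything changed since the last full backup
    elif kind == "incremental":
        cutoff = last_backup    # only what changed since the previous backup
    else:
        raise ValueError(f"unknown backup kind: {kind}")
    return sorted(path for path, mtime in file_mtimes.items() if mtime > cutoff)


# Hypothetical timeline: full backup at t=100, an incremental at t=200, now t=300.
mtimes = {"a.csv": 50, "b.csv": 150, "c.csv": 250}

full = files_to_back_up(mtimes, "full", last_full=100, last_backup=200)
differential = files_to_back_up(mtimes, "differential", last_full=100, last_backup=200)
incremental = files_to_back_up(mtimes, "incremental", last_full=100, last_backup=200)
print(full, differential, incremental)
```

The trade-off follows from the cutoffs: incremental runs copy the least data, but a restore needs the full backup plus every incremental since, while a differential restore needs only the full backup plus the latest differential.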

Q 13. How do you maintain data confidentiality and security?

Maintaining data confidentiality and security is paramount. I adhere strictly to organizational policies and industry best practices, such as access control, data encryption, and regular security audits. I understand the importance of using strong passwords, limiting access to authorized personnel only, and following protocols for handling sensitive information. I also stay updated on the latest security threats and vulnerabilities and participate in security training to enhance my knowledge and skills.

For example, I would never leave sensitive data unattended on a computer screen or store unencrypted data on shared drives. I would always follow the organization’s guidelines on password complexity and access permissions. The principle of ‘need-to-know’ access is crucial to maintain data security and confidentiality.

Q 14. What quality control measures do you employ during data entry?

Quality control is an integral part of my data entry process. I employ several measures to ensure data accuracy and completeness. These include double-entry (having a second person independently enter the same data for comparison), data validation checks (using software or manual checks to identify inconsistencies), and regular data audits (periodically reviewing a sample of the entered data to identify errors). I also use checksums or hash functions to verify data integrity, ensuring that data hasn’t been altered during transfer or storage.

For example, when entering financial data, I would cross-reference amounts from source documents multiple times. I might also use automated validation rules in spreadsheets or databases to detect inconsistencies. After data entry, a sample of records would be audited to further verify accuracy. This multi-layered approach ensures high-quality data and minimizes errors.
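The checksum idea can be shown with Python's `hashlib`. In this sketch a temporary file stands in for an exported data file; the helper name `sha256_of_file` and the sample contents are assumptions for the example.

```python
import hashlib
import os
import tempfile


def sha256_of_file(path, chunk_size=65536):
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


# Demo with a temporary file standing in for an exported data file.
with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "export.csv")
    with open(path, "wb") as handle:
        handle.write(b"id,amount\n1,10\n2,20\n")
    before = sha256_of_file(path)

    # Simulate a single altered character during transfer or storage.
    with open(path, "wb") as handle:
        handle.write(b"id,amount\n1,10\n2,21\n")
    after = sha256_of_file(path)

unchanged = before == after
print(unchanged)
```

Recording the hash when a file is produced and recomputing it after transfer gives a quick yes-or-no answer about whether the contents changed, although it does not say where the change is.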

Q 15. How do you troubleshoot data entry problems?

Troubleshooting data entry problems involves a systematic approach. It starts with identifying the issue – is it inaccurate data, missing data, inconsistencies, or errors in the process itself?

My troubleshooting strategy typically follows these steps:

- Identify the source: Is the error stemming from the input source (e.g., a faulty scanner, incorrect data export), the data entry process (e.g., user error, incorrect data mapping), or the system itself (e.g., software bug, database issue)? I use error logs and data validation checks to pinpoint this.

- Isolate the problem: Once the source is identified, I try to narrow down the specifics. Is it affecting all entries or just a subset? Are there patterns to the errors?

- Apply solutions: Solutions depend on the nature of the problem. For instance, inconsistent data formats might require standardizing input fields. User errors can be addressed through better training or improved data entry interfaces. Software bugs require reporting to the IT department for resolution. Missing data could require manual follow-up or automated imputation techniques.

- Test and Verify: After implementing a solution, I rigorously test to ensure the issue is resolved and that the changes don’t introduce new problems. This often involves cross-checking data against original sources and running data quality checks.

For example, if I encounter frequent errors in postal codes, I would investigate if the input field has adequate validation rules (e.g., correct length, allowed characters), whether the data source itself is faulty, or if there are inconsistencies in how postal codes are formatted across different sources. Addressing the root cause is crucial, not just applying a band-aid solution.
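A validation rule for the postal code example might look like this. The sketch assumes US ZIP codes only (five digits, optionally followed by a hyphen and four more digits); other countries need different patterns, and the helper name `check_zip` is hypothetical.

```python
import re

# Assumption: US ZIP codes only (5 digits, optionally followed by -4 digits).
ZIP_PATTERN = re.compile(r"\d{5}(-\d{4})?")


def check_zip(value):
    """Return the cleaned ZIP code, or None if the value is not valid."""
    cleaned = value.strip()
    return cleaned if ZIP_PATTERN.fullmatch(cleaned) else None


# The fourth entry uses a capital letter O in place of a zero, a common keying slip.
entries = ["30301", " 30301-1234 ", "3030", "3O301", "30301-12"]
results = [check_zip(entry) for entry in entries]
print(results)
```

A format check like this catches wrong lengths and stray characters, but it cannot tell whether a well-formed code actually belongs to the address, so cross-checking against the source remains necessary.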

Q 16. How familiar are you with data entry standards and guidelines?

I’m very familiar with data entry standards and guidelines. These are critical for ensuring data accuracy, consistency, and usability. My knowledge encompasses:

- Data validation rules: I understand how to apply rules to ensure data conforms to specific formats (e.g., date formats, numerical ranges, character limits). For instance, I would ensure a date field accepts only valid dates and not random text.

- Data normalization: I know the importance of structuring data consistently to avoid redundancy and improve database integrity. This involves techniques like creating separate tables for related data and enforcing unique identifiers.

- Data governance policies: I’m familiar with organizational rules regarding data security, access control, and data quality. This includes understanding sensitive data handling procedures and complying with regulations like GDPR or HIPAA, where applicable.

- Industry-specific standards: I can adapt to various industry-specific standards and best practices, ensuring data complies with regulatory requirements and organizational needs. For example, healthcare data requires adherence to HIPAA regulations, while financial data needs to follow specific accounting standards.

These guidelines help to minimize errors and make data more reliable and easier to analyze. Thinking about these standards upfront saves considerable time and effort downstream.
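The validation rules listed above (date formats, numerical ranges, character limits) can be sketched as a single record check. The field names `visit_date`, `quantity`, and `notes`, and the specific limits, are assumptions chosen for the example.

```python
from datetime import datetime


def validate_record(record):
    """Return a list of rule violations for one record (empty list means valid)."""
    problems = []

    # Date rule: the value must parse as a real calendar date, year-month-day.
    try:
        datetime.strptime(record["visit_date"], "%Y-%m-%d")
    except ValueError:
        problems.append("visit_date is not a valid date")

    # Numeric range rule.
    if not 0 <= record["quantity"] <= 1000:
        problems.append("quantity is outside 0-1000")

    # Character limit rule.
    if len(record["notes"]) > 50:
        problems.append("notes exceeds 50 characters")

    return problems


good = {"visit_date": "2024-02-29", "quantity": 12, "notes": "Follow-up call"}
bad = {"visit_date": "2023-02-29", "quantity": -3, "notes": "x" * 51}

print(validate_record(good))
print(validate_record(bad))
```

The two sample dates differ only by year: 29 February exists in 2024 but not in 2023, which is the kind of error a simple pattern match on digits would miss.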

Q 17. Describe your experience working with different data formats (e.g., CSV, Excel, XML).

I have extensive experience working with various data formats, including CSV, Excel, and XML. My proficiency extends to both importing and exporting data in these formats, as well as transforming data between them.

- CSV (Comma Separated Values): I’m comfortable parsing and manipulating CSV files. I understand how to handle delimiters, deal with special characters, and manage potential inconsistencies in data formatting within a CSV file.

- Excel (XLS/XLSX): I’m adept at working with Excel spreadsheets, including handling formulas, data validation, pivot tables, and advanced features for data cleaning and analysis. I understand the nuances of different data types and how they are handled in Excel.

- XML (Extensible Markup Language): I have experience parsing XML using various programming languages and tools. This involves navigating XML structures, extracting specific data elements, and transforming XML into other formats as needed.

In a previous role, I had to consolidate data from multiple Excel spreadsheets into a single, unified XML file for use in a new company database. This involved cleaning the data in Excel, transforming it to a standardized format, and then using XSLT to create the XML file conforming to the database schema. Understanding the strengths and limitations of each format allows me to choose the best tool for the job and ensures efficient data handling.
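As a simpler illustration of moving data between formats, the sketch below converts CSV text to XML with the Python standard library. It is not the XSLT workflow described above; the element names `customers` and `customer` and the sample data are assumptions.

```python
import csv
import io
import xml.etree.ElementTree as ET


def csv_to_xml(csv_text, root_tag="customers", row_tag="customer"):
    """Convert CSV text with a header row into an XML string."""
    root = ET.Element(root_tag)
    for row in csv.DictReader(io.StringIO(csv_text)):
        item = ET.SubElement(root, row_tag)
        for column, value in row.items():
            # Column names become element names, so they must be valid XML names.
            ET.SubElement(item, column).text = value.strip()
    return ET.tostring(root, encoding="unicode")


# The quoted field contains a comma, and the second name contains an ampersand.
csv_text = 'id,name,city\n1,"Diaz, Ana",Austin\n2,Ben & Co, Boston\n'
xml_text = csv_to_xml(csv_text)
print(xml_text)

# Parsing the result back confirms it is well-formed and the values survived.
parsed = ET.fromstring(xml_text)
names = [node.text for node in parsed.iter("name")]
print(names)
```

Letting the `csv` module handle quoted delimiters and letting `ElementTree` handle escaping covers the two special-character pitfalls that hand-built string conversions tend to get wrong.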

Q 18. How do you stay organized and efficient while managing large datasets?

Managing large datasets efficiently requires a structured approach that combines organizational skills with appropriate tools. My strategies include:

- Data organization: I use clear naming conventions for files and folders, employing a hierarchical structure to keep things logical and easily searchable. I often use version control for datasets to track changes and allow for easy rollback if necessary.

- Data validation and cleansing: I regularly perform data validation checks to identify and correct inconsistencies and errors early in the process, preventing larger problems later on. I use both manual and automated data cleansing techniques to ensure data quality.

- Data partitioning: For extremely large datasets, I break down the data into smaller, manageable chunks to facilitate processing and analysis. This improves efficiency and reduces resource consumption.

- Database management systems (DBMS): I leverage DBMS like SQL Server or MySQL to manage and query large datasets efficiently. This provides advanced features for data manipulation, searching, and reporting.

- Automation: I use scripting and automation tools to streamline repetitive tasks, such as data import, cleaning, and transformation. This saves time and reduces the risk of manual errors.

For example, I once managed a dataset of over 10 million records. I used a combination of SQL queries for data extraction and manipulation, Python scripts for automation, and a cloud-based data warehouse to store and manage the data efficiently.

Q 19. What is your experience with data entry automation tools?

I have experience with several data entry automation tools, including:

- Optical Character Recognition (OCR) software: I’ve used OCR tools to automate the conversion of scanned documents (e.g., invoices, forms) into digital text, significantly speeding up data entry. This reduces manual effort and improves accuracy.

- Robotic Process Automation (RPA) tools: I have used RPA to automate repetitive data entry tasks, such as extracting data from websites or applications and entering it into databases. This improves efficiency and reduces human error.

- Import/Export utilities: I am proficient in using built-in import/export functions of various applications (like Excel, databases) and programming languages (like Python) to automate data transfer between systems. This avoids manual copy-pasting and reduces the chance of errors.

- Custom scripts: I can write custom scripts using languages such as Python or VBA to automate specific data entry processes tailored to specific needs. This provides flexibility and allows for automating complex workflows.

The choice of automation tool depends on the specific task and data source. For instance, OCR is ideal for digitizing paper documents, while RPA is better suited for automating interactions with applications. Choosing the right tool ensures optimal automation and efficiency.

Q 20. Describe a time you had to meet a tight deadline for a data entry project. How did you manage it?

I remember one project where we needed to enter thousands of customer records into a new CRM system within a very tight deadline – just one week. To manage this, I employed a multi-pronged approach:

- Prioritization: I prioritized the most critical data fields, ensuring those were entered first. Less critical fields could be added later, if time allowed.

- Teamwork: I assembled a team and divided the workload based on individual skills and experience. Clear roles and responsibilities were defined.

- Data validation: We incorporated data validation checks during the process to catch errors early and prevent major issues later. This saved time and effort in the long run.

- Regular progress checks: We held daily meetings to track progress, identify roadblocks, and adjust strategies as needed. This ensured we were on track and could address any challenges proactively.

- Automation where possible: We identified opportunities to automate parts of the process, such as using scripts to import data from spreadsheets.

Through careful planning, teamwork, and the effective use of available resources, we successfully met the deadline without compromising data quality. The key was a flexible and adaptable approach that adjusted based on daily progress.

Q 21. What is your understanding of data integrity and its importance?

Data integrity is the accuracy, completeness, consistency, and trustworthiness of data. Maintaining data integrity is paramount because inaccurate or incomplete data leads to flawed decisions and analysis, which can have significant consequences, depending on the application. For example, incorrect medical data can result in misdiagnosis or improper treatment.

My understanding encompasses:

- Accuracy: Ensuring data is correct and free from errors. This involves validating data against source documents and using appropriate data quality checks.

- Completeness: Verifying that all necessary data is present. Missing data can lead to incomplete analysis and inaccurate conclusions. I employ techniques to identify and handle missing data, such as imputation or data reconciliation.

- Consistency: Ensuring data is consistent across different sources and systems. This involves using consistent formats, naming conventions, and data types. Data normalization is a key aspect here.

- Timeliness: Data should be up-to-date and reflect the current state. This means implementing processes for data updates and ensuring data is refreshed regularly.

- Validity: Data should conform to defined rules and constraints. This involves using data validation techniques to check data against pre-defined rules and limits.

I prioritize data integrity in every stage of a data entry project, from initial data collection to final validation. I view it as an ongoing process, not just a one-time task.

Q 22. How do you handle repetitive tasks to avoid errors and maintain focus?

Handling repetitive data entry tasks effectively requires a multi-pronged approach focused on preventing errors and maintaining concentration. Think of it like an assembly line – consistency and attention to detail are key.

- Macro creation/Automation: For highly repetitive tasks, I use macro tools (such as AutoHotkey or an application’s built-in macro recorder) to automate sequences. This significantly reduces errors caused by manual repetition.

- Regular Breaks: Data entry can be monotonous. I schedule short, frequent breaks to avoid burnout and maintain accuracy. Even a 5-minute break every hour can improve focus and prevent errors caused by fatigue.

- Verification and Cross-checking: I implement a system of double-checking entered data, either by visually inspecting entries or using data validation rules within the software. This ensures that any accidental keystrokes are caught before submission.

- Data Validation Rules: When possible, I leverage built-in data validation to prevent invalid entries. For example, if a field requires a numerical input, I configure the software to reject non-numerical entries. This stops errors before they happen.

For instance, while entering customer addresses, I might create a macro to auto-fill fields like city and state after the ZIP code is entered, since a ZIP code usually determines them while a city name alone does not determine a ZIP code. This speeds up the process and reduces typing errors.

Q 23. Explain your experience with different data entry keyboard layouts.

My experience encompasses several keyboard layouts, focusing on efficiency and accuracy. The right layout significantly impacts speed and error rates. I’m proficient with:

- QWERTY: The standard layout. My proficiency allows for fast and accurate input even with complex data.

- Dvorak: While less common, I’ve experimented with Dvorak, finding it beneficial for reducing finger strain and increasing typing speed after an initial learning curve. It’s particularly advantageous for prolonged data entry sessions.

- Numeric Keypads: I’m highly skilled in utilizing numeric keypads for rapid entry of numerical data. This is crucial for tasks involving large quantities of numbers, such as financial transactions or inventory management.

Adaptability is crucial; I quickly adjust to different layouts to maximize performance in various work environments. I find that regular practice and focusing on correct posture are key factors in maintaining speed and accuracy.

Q 24. What are some common data entry errors and how do you prevent them?

Common data entry errors stem from human factors like typos, incorrect data interpretation, and fatigue. Preventing them requires a proactive approach.

- Typos: These are minimized through careful typing, utilizing features like auto-correct (when appropriate), and proofreading. Using a well-maintained keyboard and taking breaks also helps.

- Incorrect Data Interpretation: This is addressed by double-checking source documents for accuracy and clarity. Understanding the data structure and field requirements is paramount.

- Data Validation: Employing software validation rules is a significant error prevention strategy. These rules can check data types, ranges, and formats, rejecting invalid entries.

- Fatigue: Regular breaks, proper ergonomics, and a well-lit workspace significantly reduce fatigue-related errors.

For example, if I encounter an ambiguous abbreviation in the source document, I’ll verify its meaning before entry, preventing data inconsistencies. Similarly, if a field requires a specific date format (e.g., MM/DD/YYYY), I’ll ensure all entries adhere to it.

Q 25. Describe your experience using different data entry software interfaces.

My experience spans various data entry software interfaces. Adaptability is key as each interface presents a unique workflow. I’m comfortable with:

- Spreadsheet Software (Excel, Google Sheets): Proficient in using these for both basic and complex data entry tasks, including formula application and data manipulation.

- Database Management Systems (DBMS): Experienced with various DBMS interfaces, like MySQL and SQL Server, for inputting, managing, and querying data within database environments.

- Custom Data Entry Applications: I have experience working with proprietary software, quickly learning new interfaces and navigating diverse data structures.

- CRM Systems (Salesforce, etc.): Familiar with CRM software interfaces for managing customer-related data, such as contact information and sales records.

I approach each new interface systematically, understanding the data flow and validation rules before beginning entry. This allows me to efficiently navigate the system and minimize errors.

Q 26. How do you ensure compliance with data privacy regulations?

Data privacy is paramount. My approach to compliance involves adherence to regulations such as GDPR and CCPA (as applicable).

- Confidentiality: I treat all data as confidential, avoiding discussions of sensitive information outside secure work environments.

- Data Minimization: I only collect and process data necessary for the specific task.

- Access Control: I only access data to which I have explicit authorization, respecting established access control mechanisms.

- Secure Data Handling: I follow established protocols for handling and storing data securely, using appropriate security measures like strong passwords and encryption (when required).

- Data Breach Reporting: I know the procedures for immediately reporting any suspected data breach to the designated contact, such as the security or privacy team.

For example, if I notice any data privacy violations, I will immediately report them to the appropriate personnel. I’m committed to upholding data protection principles throughout the entire data entry process.

Q 27. What is your experience with data auditing and reporting?

Data auditing and reporting are crucial for maintaining data integrity and providing insights. My experience includes:

- Data Validation Checks: I regularly conduct data validation checks to identify discrepancies and inaccuracies within datasets.

- Report Generation: I’m proficient in generating reports summarizing data entry activities, including metrics like entries processed, error rates, and time spent on tasks. I use tools like spreadsheets or dedicated reporting systems.

- Data Reconciliation: I’m skilled in reconciling data from multiple sources to ensure consistency and accuracy. This involves identifying and resolving discrepancies between datasets.

For example, in a financial data entry setting, I might generate a daily report showing the number of transactions processed, the total value of transactions, and any identified errors. This allows for prompt identification of potential issues and ensures data quality.
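A daily report of this kind can be sketched as a small summary function. The field names, the rule that amounts must be positive, and the sample entries are assumptions for illustration; `Decimal` is used so that currency totals are not affected by floating-point rounding.

```python
from decimal import Decimal, InvalidOperation


def daily_summary(transactions):
    """Summarize one day's entries: count, total value, and flagged errors."""
    total = Decimal("0")
    errors = []
    for tx in transactions:
        try:
            amount = Decimal(tx["amount"])
        except InvalidOperation:
            errors.append((tx["id"], "amount is not a number"))
            continue
        if amount <= 0:
            errors.append((tx["id"], "amount must be positive"))
            continue
        total += amount
    processed = len(transactions)
    return {
        "processed": processed,
        "total_value": total,
        "errors": errors,
        "error_rate": len(errors) / processed if processed else 0.0,
    }


# Hypothetical entries; amounts are kept as text, as they might arrive from a form.
entries = [
    {"id": "T1", "amount": "100.10"},
    {"id": "T2", "amount": "20.20"},
    {"id": "T3", "amount": "abc"},
    {"id": "T4", "amount": "-5.00"},
]
report = daily_summary(entries)
print(report)
```

Only the valid amounts contribute to the total, while the flagged entries are listed with a reason so they can be followed up the same day.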

Q 28. How do you adapt your data entry skills to different industries and data types?

Adapting to different industries and data types requires a flexible approach and a willingness to learn. My adaptability stems from a solid foundation in data entry principles.

- Understanding Data Structures: I focus on understanding the specific data structure and format relevant to the industry and data type. This includes analyzing data fields, relationships, and validation rules.

- Industry-Specific Knowledge: I make a point of learning the basic terminology and processes within the specific industry. For example, understanding medical coding is crucial for healthcare data entry.

- Software Proficiency: I maintain proficiency in a range of software applications to handle different data formats and industry-specific software.

- Continuous Learning: I proactively seek training and resources to stay updated on the latest data entry techniques and industry-specific standards.

For instance, transitioning from entering sales data for a retail company to entering medical records for a hospital would involve learning about HIPAA compliance, medical terminology, and potentially working with specialized software for managing patient data. A solid grounding in data entry principles makes that transition efficient.

Key Topics to Learn for a Software Data Entry Interview

- Data Entry Techniques: Understanding various data entry methods (e.g., keyboarding, optical character recognition (OCR), automated data entry tools) and their efficiency.

- Data Validation and Verification: Mastering techniques to ensure data accuracy and identify inconsistencies, including using checksums and cross-referencing data sources.

- Data Cleaning and Transformation: Learning how to clean, standardize, and transform raw data into usable formats, handling missing values and outliers.

- Software Proficiency: Demonstrating familiarity with relevant software (e.g., spreadsheet software, database management systems, ERP systems) and their functionalities in a data entry context.

- Data Security and Confidentiality: Understanding and applying best practices for handling sensitive data, adhering to privacy regulations and security protocols.

- Data Entry Workflow and Process Optimization: Analyzing and improving data entry processes for efficiency and accuracy, suggesting improvements to workflows and identifying bottlenecks.

- Problem-solving and Troubleshooting: Developing the ability to identify and resolve data entry errors, handle unexpected situations, and troubleshoot technical issues.

- Attention to Detail and Accuracy: Highlighting your meticulous approach to work and emphasizing your commitment to maintaining high accuracy rates in data entry.

Next Steps

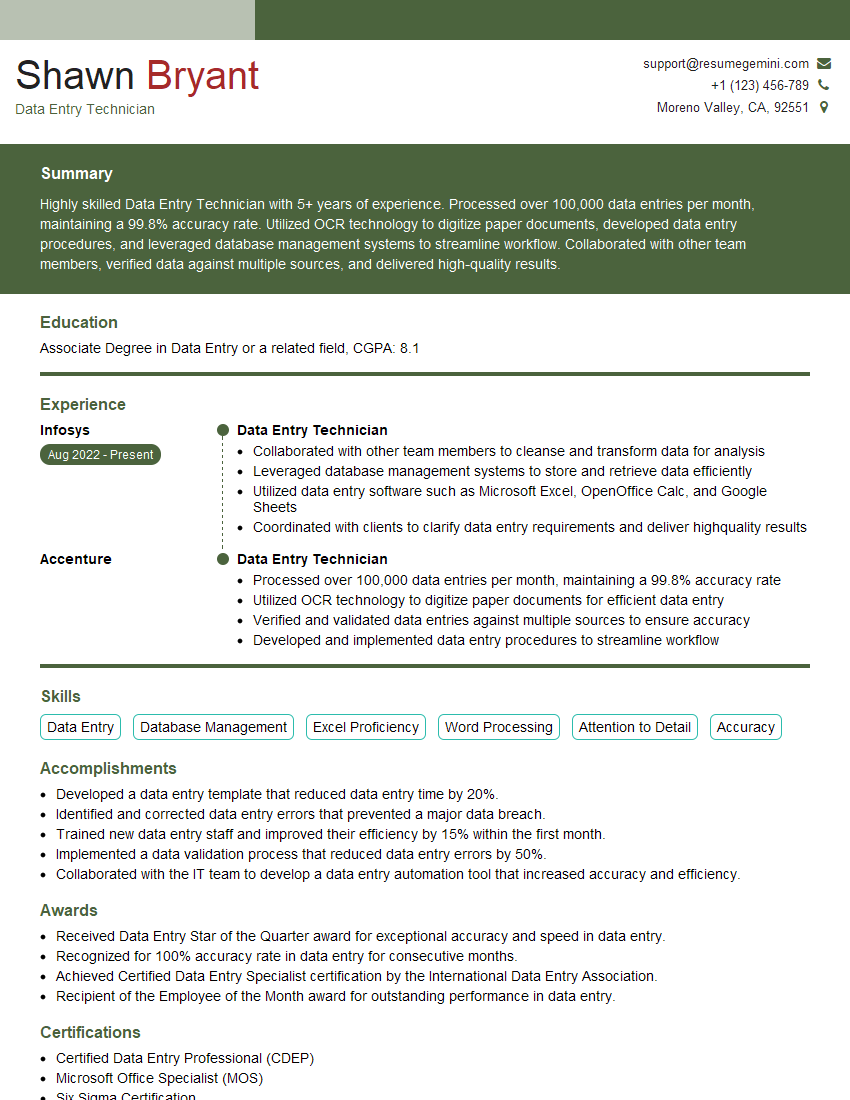

Mastering Software Data Entry opens doors to diverse roles within many organizations, providing a solid foundation for a successful career in data management and analysis. To significantly increase your chances of landing your dream job, create a resume that’s optimized for Applicant Tracking Systems (ATS). This ensures your qualifications are clearly identified by recruiters. ResumeGemini is a trusted resource that can help you build a professional and ATS-friendly resume. We provide examples of resumes tailored to Software Data Entry positions to guide you in showcasing your skills effectively.
