Interviews are more than just a Q&A session; they’re a chance to prove your worth. This blog dives into essential interview questions on troubleshooting and resolving production problems, along with expert tips to help you align your answers with what hiring managers are looking for. Start preparing to shine!

Questions Asked in a Troubleshooting and Resolving Production Problems Interview

Q 1. Describe your process for troubleshooting a production issue.

My approach to troubleshooting production issues is systematic and follows a structured process. I start by gathering information: checking logs and monitoring tools, and talking to users to understand the impact and scope of the problem. This initial assessment defines the problem’s context. Then I move to diagnosis, using my knowledge of the system architecture to isolate the potential causes. I combine techniques such as binary search (halving the problem space with each test) and close reading of error messages to pinpoint the issue. Once I’ve identified the likely root cause, I develop a fix and test it thoroughly in a staging environment before deploying it to production. Finally, I document the whole process, including the steps taken, the resolution, and any preventive measures put in place to avoid recurrence. This confirms the issue is fully resolved and gives whoever faces a similar problem next a head start.

Example: Imagine a website experiencing slow load times. My process would begin with checking server logs for errors, then analyzing network performance metrics. If the issue is identified as a database query taking too long, I’d analyze the query itself and optimize it, before testing the changes in a staging environment to prevent unintended consequences.

Q 2. How do you prioritize multiple production issues simultaneously?

Prioritizing multiple production issues requires a clear understanding of impact and urgency. I use a framework that considers severity, impact, and frequency. Severity refers to the criticality of the issue – is it preventing users from accessing core features or is it a minor inconvenience? Impact considers the number of users affected and the potential business losses. Frequency refers to how often the issue is occurring. I use a matrix to visually represent these factors, allowing me to quickly prioritize the most critical issues first. For example, a widespread outage affecting core functionality will always take precedence over a less impactful, infrequent bug. Communication is key; keeping stakeholders informed about prioritization decisions is crucial for transparency and maintaining trust.

Example: Let’s say we have three issues: (1) A critical database error impacting 50% of users, (2) A minor UI glitch affecting 10% of users, and (3) A recurring but less severe performance issue. I would immediately address issue (1) due to its high severity and impact, followed by (3) because of its recurring nature, and then handle issue (2) last as its impact is relatively small.
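The severity/impact/frequency matrix can be approximated with a weighted score. This is a minimal sketch under stated assumptions: the 1 to 5 scales, the weights, and the scores given to each issue are all hypothetical choices for illustration, not a standard formula. It reproduces the ordering from the example above.

```python
from dataclasses import dataclass

# Hypothetical 1-5 scales; real teams calibrate their own definitions.
@dataclass
class Issue:
    name: str
    severity: int   # 5 = core feature broken, 1 = cosmetic
    impact: int     # 5 = most users / high revenue risk, 1 = few users
    frequency: int  # 5 = constant or recurring, 1 = rare

# Assumed weights: severity and impact dominate, frequency breaks ties.
WEIGHTS = {"severity": 0.4, "impact": 0.4, "frequency": 0.2}

def priority_score(issue: Issue) -> float:
    """Weighted sum of the three factors; higher means handle sooner."""
    return (WEIGHTS["severity"] * issue.severity
            + WEIGHTS["impact"] * issue.impact
            + WEIGHTS["frequency"] * issue.frequency)

def triage(issues: list[Issue]) -> list[Issue]:
    """Return issues ordered from highest to lowest priority."""
    return sorted(issues, key=priority_score, reverse=True)

issues = [
    Issue("database error, 50% of users", severity=5, impact=4, frequency=4),
    Issue("minor UI glitch, 10% of users", severity=1, impact=2, frequency=2),
    Issue("recurring performance issue", severity=3, impact=3, frequency=5),
]
for issue in triage(issues):
    print(f"{priority_score(issue):.1f}  {issue.name}")
```

A score is only a starting point for discussion; a human still makes the final call when two issues land close together.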

Q 3. Explain your experience with monitoring tools and dashboards.

I have extensive experience with a variety of monitoring tools and dashboards, including Datadog, Prometheus, Grafana, and CloudWatch. These tools are invaluable for proactively identifying potential issues and reacting quickly to incidents. I’m proficient in setting up alerts based on key metrics, such as CPU utilization, memory usage, response times, and error rates. Dashboards provide a centralized view of the system’s health, allowing me to quickly assess the overall performance and pinpoint areas of concern. My experience goes beyond simply using these tools; I understand the underlying infrastructure and know how to configure them effectively to provide meaningful and actionable insights. I’m also skilled at interpreting the data presented, differentiating between normal fluctuations and genuine anomalies. For instance, I can easily spot a sudden spike in error rates or a consistent degradation in response time that requires investigation.
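One simple way to separate normal fluctuation from a genuine anomaly is a z-score against a recent baseline. This is a sketch that assumes roughly stable, normally distributed baseline data and a threshold of 3 standard deviations; production tools such as Datadog or Prometheus offer their own, more robust anomaly functions.

```python
import statistics

def is_anomalous(history: list[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag `latest` if it sits more than `threshold` standard deviations
    from the mean of the baseline window (needs at least two samples)."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return latest != mean  # flat baseline: any change is unusual
    return abs(latest - mean) / stdev > threshold

# Hypothetical error rates (errors per minute) over a quiet baseline window.
baseline = [4.0, 5.0, 6.0, 5.0, 4.0, 6.0, 5.0, 5.0]
print(is_anomalous(baseline, 7.0))   # False: ordinary fluctuation
print(is_anomalous(baseline, 25.0))  # True: sudden spike worth investigating
```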

Q 4. How do you identify the root cause of a production problem?

Identifying the root cause requires a methodical approach. I start by gathering all available data: logs, metrics, error messages, user reports. I then use a combination of techniques, including the ‘five whys’ (repeatedly asking ‘why’ to drill down to the root cause), fault tree analysis (mapping potential causes and their interdependencies), and debugging tools. The context of the problem is extremely important, so I always analyze the circumstances leading up to the issue. Sometimes, replicating the issue in a controlled environment is necessary for thorough investigation. A crucial aspect is ensuring that the solution addresses the underlying problem and not just the symptoms.

Example: If a user reports they can’t log in, I won’t just reset their password. I’ll check the logs for a pattern, look for database errors, and test the login flow myself. This might reveal a problem with the authentication service or a database connection issue: the root cause, rather than a symptomatic workaround.
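Looking for a pattern in the logs often means asking whether a failure reason affects one user or many. The sketch below assumes the logs have already been parsed into hypothetical `(user_id, failure_reason)` pairs; the reason names and the three-user cutoff are illustrative only.

```python
from collections import defaultdict

# Hypothetical parsed log records: (user_id, failure_reason).
failed_logins = [
    ("alice", "bad_password"),
    ("bob", "auth_service_timeout"),
    ("carol", "auth_service_timeout"),
    ("dave", "auth_service_timeout"),
    ("erin", "auth_service_timeout"),
]

def users_by_reason(records):
    """Map each failure reason to the set of distinct users who hit it."""
    grouped = defaultdict(set)
    for user, reason in records:
        grouped[reason].add(user)
    return grouped

def systemic_reasons(records, min_users=3):
    """Reasons hitting many distinct users point at a shared component
    (such as the authentication service) rather than one account."""
    return sorted(reason for reason, users in users_by_reason(records).items()
                  if len(users) >= min_users)

print(systemic_reasons(failed_logins))
```

Here a single bad password is a one-off, while the timeout affecting four users is the lead worth chasing.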

Q 5. What techniques do you use to escalate production issues effectively?

Escalating production issues effectively involves clear, concise communication and the right escalation path. I use a structured approach based on the severity and urgency of the issue. For minor issues, I might only involve the immediate team. For major incidents, I’ll escalate to senior engineers, management, and potentially even external support teams, depending on the situation. Communication is key – updates need to be timely, accurate, and contain the necessary context. Tools like Slack or dedicated incident management systems are crucial for effective collaboration and communication during escalations. The goal is to ensure everyone involved understands the situation and their role in resolving it. Clear communication prevents confusion and delays.

Example: During a major outage, I would initially communicate with my team, then escalate to the engineering manager, providing a concise summary of the situation, impact, and initial troubleshooting steps. If the problem remains unresolved, I would escalate further to the operations team and potentially our 24/7 support provider.
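A time-based escalation ladder like the one in this example can be written down explicitly so nobody has to improvise under pressure. The thresholds and the group names below are hypothetical; incident management tools typically encode the same idea as escalation policies.

```python
from datetime import timedelta

# Hypothetical ladder for a major outage: each entry says who is pulled in
# once the incident has been open that long without being resolved.
ESCALATION_LADDER = [
    (timedelta(minutes=0), "immediate team"),
    (timedelta(minutes=15), "engineering manager"),
    (timedelta(minutes=45), "operations team"),
    (timedelta(minutes=90), "24/7 support provider"),
]

def who_to_involve(open_for: timedelta) -> list[str]:
    """Return everyone whose escalation threshold has been reached."""
    return [who for after, who in ESCALATION_LADDER if open_for >= after]

print(who_to_involve(timedelta(minutes=50)))
```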

Q 6. Describe your experience with incident management processes.

I have extensive experience with incident management processes and am familiar with frameworks such as ITIL and other industry best practices. I understand the importance of establishing clear roles and responsibilities, defining communication protocols, and using standardized tools for incident tracking and reporting. I’ve participated in numerous incident response calls and post-incident reviews. The reviews are where most of the improvement happens: we analyze the incident’s cause, impact, and response to identify systemic weaknesses and develop preventive measures, which feeds directly into the continuous improvement of our processes.

Q 7. How do you document your troubleshooting steps and resolutions?

Documentation is crucial for knowledge sharing, troubleshooting future issues, and improving our processes. I meticulously document all troubleshooting steps, including the initial symptoms, the steps taken to diagnose the problem, the solution implemented, and any preventative measures taken. This documentation is typically stored in a centralized knowledge base, wiki, or ticketing system. The level of detail is tailored to the complexity of the issue; simpler issues might require a brief summary, while more complex ones need a detailed explanation including logs, screenshots, and code snippets. The format needs to be clear, consistent, and easily searchable so that others can benefit from past experiences. This reduces response times for similar issues in the future, promoting a learning culture and fostering continuous improvement.

Example: For a database connection issue, my documentation would include the error messages, the steps taken to check network connectivity, the database server logs, the configuration changes made, and any steps taken to prevent similar issues in the future.

Q 8. Explain your experience with different debugging tools and techniques.

My experience with debugging tools and techniques spans a wide range, depending on the system and the nature of the issue. For application code, I’m proficient with debuggers like gdb (GNU Debugger) for C/C++ and lldb (LLVM Debugger) for C, C++, Objective-C, and Swift. These allow me to step through code line by line, inspect variables, and set breakpoints to pinpoint the exact location of errors. For Java applications, I use tools like IntelliJ IDEA’s debugger, which provides similar functionality.

Beyond debuggers, I utilize logging extensively. Properly structured log files, with varying levels of detail (DEBUG, INFO, WARNING, ERROR), provide a crucial historical record of application behavior. Analyzing log files using tools like grep, awk, and sed is essential for finding patterns and identifying the root cause of problems. I’m also adept at using monitoring tools such as Prometheus and Grafana to visualize system metrics (CPU usage, memory consumption, network traffic) and identify performance bottlenecks or anomalies that may signal underlying problems. For distributed systems, I leverage tools like Jaeger or Zipkin for distributed tracing, allowing me to track requests across multiple services to quickly locate failure points.

Finally, I use various profiling tools to analyze application performance, identifying slow functions or memory leaks. The specific tools vary by language and platform but generally involve analyzing execution time and resource consumption.
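As one concrete case, Python ships a profiler in its standard library (`cProfile`, with `pstats` for reports). This sketch profiles a deliberately wasteful, hypothetical function and prints the five entries with the highest cumulative time; other platforms have their own equivalents.

```python
import cProfile
import io
import pstats

def slow_sum_of_squares(n: int) -> int:
    # Deliberately wasteful: builds a throwaway list on every iteration.
    total = 0
    for i in range(n):
        total += sum([i * i])
    return total

def profile(func, *args):
    """Run func under cProfile; return (result, top-5 report as text)."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func, *args)
    buffer = io.StringIO()
    pstats.Stats(profiler, stream=buffer).sort_stats("cumulative").print_stats(5)
    return result, buffer.getvalue()

result, report = profile(slow_sum_of_squares, 10_000)
print(report)
```

In the report, the large call count for the built-in `sum` is the hint that the loop body is doing unnecessary work.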

Q 9. How do you handle pressure and tight deadlines during a production crisis?

Production crises demand a calm and systematic approach, even under immense pressure. My strategy centers around prioritizing and delegating effectively. First, I assess the impact and severity of the issue. Is it a complete outage, a performance degradation, or something minor? This determines the urgency and the team needed to address it. I then clearly define roles and responsibilities, ensuring each team member understands their tasks. Effective communication is paramount – keeping everyone informed of the situation, progress, and any changes in strategy. I focus on quick wins, addressing the most critical issues first to stabilize the system. Once the immediate crisis is contained, we move to a more thorough investigation and remediation of the root cause. Throughout the process, I maintain a clear head, resist the urge to panic, and rely on my experience and training to guide the team to a successful resolution.

I’ve found that practicing mindfulness and stress-reduction techniques like deep breathing exercises helps me stay focused and maintain composure under pressure. Also, having well-defined incident management procedures in place greatly improves our response time and effectiveness during emergencies.

Q 10. How do you communicate technical issues to non-technical stakeholders?

Communicating technical issues to non-technical stakeholders requires translating complex technical jargon into clear, concise, and understandable language. I avoid technical terms whenever possible, opting for plain English and simple analogies. For instance, instead of saying “The database experienced a deadlock condition,” I might explain, “The system got stuck because two parts of it were each waiting for the other to let go of some data first, like two cars meeting on a one-lane bridge with neither able to back up.” I use visuals, such as charts and graphs, to illustrate key metrics and trends.

I focus on the impact of the issue on business operations, rather than getting bogged down in technical details. For example, “This issue is causing a 20% decrease in website traffic, resulting in a potential loss of X dollars in revenue.” I provide regular updates on the status of the problem and the steps being taken to resolve it. Transparency and proactive communication build trust and reduce anxiety.

Q 11. Describe a time you had to troubleshoot a complex production issue. What was the outcome?

In one instance, our e-commerce platform experienced a sudden surge in error rates, causing significant delays and impacting sales during a major promotional campaign. Initial investigations revealed high CPU and memory usage on several application servers. Through thorough log analysis, we identified a critical flaw in a recently deployed caching mechanism. The code was improperly handling cache invalidation, leading to excessive memory consumption and eventually causing the system to thrash.

Our team immediately rolled back the faulty caching mechanism, which stabilized the system. With service restored, we conducted a postmortem analysis and identified the gaps in our testing process that had allowed the bug to reach production. We introduced stricter code reviews and automated load tests to catch such issues earlier, reducing the risk of a repeat incident. The outcome was not only restored service but also a significant improvement in our development processes and a more robust system.

Q 12. What is your experience with using logging and metrics for troubleshooting?

Logging and metrics are indispensable for troubleshooting. Robust logging provides a detailed audit trail of system activity, making it much easier to pinpoint the source of errors. I advocate for structured logging, where logs are formatted in a consistent, machine-readable way, allowing for easier analysis and aggregation. For example, emitting logs as JSON instead of free-form text makes them straightforward to parse, filter, and query in log aggregation tools.
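With Python’s standard `logging` module, structured output can be as simple as a custom `Formatter` that emits one JSON object per line. This is a minimal sketch: the `context` field passed through `extra=` and the `checkout` logger name are hypothetical conventions, and libraries exist that do this more thoroughly.

```python
import json
import logging

class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""
    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "ts": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Keys passed via extra= become record attributes; this sketch
        # only merges in a hypothetical "context" dict.
        payload.update(getattr(record, "context", {}))
        return json.dumps(payload)

handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("checkout")
logger.addHandler(handler)
logger.setLevel(logging.INFO)

logger.error("payment failed", extra={"context": {"order_id": "A-1001", "latency_ms": 2350}})
```

Because every line is valid JSON, a query like "all errors where latency_ms exceeds 2000" becomes a filter rather than a regex hunt.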

Metrics, collected using monitoring tools, provide real-time insights into the health and performance of the system. These can reveal anomalies or trends that may foreshadow problems. I use these to identify performance bottlenecks, resource contention issues, or unusual activity patterns. By correlating log messages with metrics, we can create a more comprehensive understanding of events and gain valuable context for identifying the root cause. For instance, a spike in error logs coincident with high CPU usage points to a performance-related issue.

Q 13. How do you ensure the stability and reliability of production systems?

Ensuring stability and reliability requires a multi-faceted approach. First, robust testing procedures are crucial. This includes unit tests, integration tests, and end-to-end tests to catch bugs before they reach production. Automated testing is highly beneficial for speed and consistency. We also employ rigorous code reviews to ensure code quality and adherence to best practices.

Secondly, monitoring and alerting are critical for early detection of issues. Real-time monitoring of key metrics allows us to proactively identify and address problems before they escalate. Automated alerts, triggered by defined thresholds, ensure timely intervention. Finally, a well-defined incident management process is essential for handling emergencies effectively. This includes predefined roles, responsibilities, communication channels, and post-incident analysis to learn from mistakes and prevent future issues.
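Threshold alerts are usually more useful when they require the breach to persist, which filters out one-off blips; this is similar in spirit to the `for:` duration in Prometheus alerting rules. The sketch below assumes evenly spaced samples, and the 500 ms threshold and three-sample window are hypothetical values.

```python
def should_alert(samples: list[float], threshold: float, sustained: int) -> bool:
    """Fire only if the last `sustained` samples all exceed `threshold`."""
    if sustained < 1:
        raise ValueError("sustained must be at least 1")
    if len(samples) < sustained:
        return False
    return all(value > threshold for value in samples[-sustained:])

# Hypothetical p95 latency samples in milliseconds, oldest first.
p95_latency_ms = [220, 240, 900, 230, 610, 650, 700]
print(should_alert(p95_latency_ms, threshold=500, sustained=3))
```

The single 900 ms spike would not page anyone on its own; the three consecutive slow samples at the end do.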

Q 14. What are your strategies for preventing future production issues?

Preventing future production issues requires a proactive approach rather than simply reacting to problems after they occur. This involves implementing robust testing and quality control processes, including automated tests and code reviews. We perform thorough capacity planning and load testing to ensure our systems can handle both expected traffic and unexpected spikes.

Investing in infrastructure monitoring and alerting systems allows us to identify potential problems before they impact users. We regularly conduct code audits and security scans to identify and remediate vulnerabilities. Finally, performing postmortem analyses after any incident, regardless of severity, is crucial. This provides an opportunity to identify root causes, learn from mistakes, and implement improvements to prevent similar incidents in the future. This continuous improvement cycle is essential for building robust and reliable production systems.

Q 15. Explain your understanding of different types of production incidents.

Production incidents can be categorized in various ways, but a common approach groups them by severity and impact. Think of it like a spectrum of pain: a minor inconvenience at one end and a complete system outage at the other.

- Service Degradation: This is a partial loss of functionality. Imagine a website where images load slowly but the core content is still accessible. It’s annoying, but the system isn’t down.

- Partial Outage: A significant portion of the system is unavailable. For example, only a specific feature or a subset of users might be affected. This is more serious than service degradation but not a complete catastrophe.

- Complete Outage: The entire system is unusable. This is the worst-case scenario, often requiring immediate attention and potentially impacting a large number of users or processes. A bank’s online transfer system failing is an example.

- Security Incidents: These involve breaches in system security, data leaks, or unauthorized access. These require immediate response due to potential legal and reputational damage.

- Performance Issues: These aren’t necessarily outages, but they significantly impact user experience due to slow response times, high latency, or resource exhaustion. Think of a website becoming sluggish during peak hours.

Understanding these categories helps prioritize incidents and allocate resources effectively. Each type requires a different approach to resolution, focusing on minimizing user impact and restoring full functionality as quickly and safely as possible.

Q 16. How do you maintain a balance between speed and quality when resolving production issues?

Balancing speed and quality in incident resolution is crucial. It’s about finding the optimal solution, not just the quickest fix. Think of it as a tightrope walk: move too fast, and you risk making things worse; move too slowly, and you prolong user disruption. My approach involves a methodical process:

- Rapid Assessment: Quickly determine the impact and the likely cause. Tools like monitoring dashboards and logging systems are invaluable here.

- Prioritize Fixes: Address the most critical issues first. If part of a system is completely down, fixing that takes precedence over optimizing a less critical component.

- Implement Temporary Fixes (if necessary): Sometimes, a quick workaround is better than a delayed, perfect solution. These should always be temporary and well-documented to be replaced by a more robust fix.

- Thorough Root Cause Analysis: After immediate resolution, conduct a thorough analysis to understand the underlying problem and prevent recurrence. This ensures long-term quality.

- Post-Mortem Review: After resolution, review what went wrong, what worked well, and what improvements can be made for future incidents.

This process ensures that we address immediate problems swiftly while simultaneously committing to long-term stability and improvements. A quick fix that creates instability is worse than a slightly delayed but robust resolution.

Q 17. What metrics do you use to measure the effectiveness of your troubleshooting efforts?

Measuring the effectiveness of troubleshooting efforts involves a combination of quantitative and qualitative metrics. We need numbers to show improvements, but also need to understand the ‘why’ behind those numbers.

- Mean Time To Detection (MTTD): How long it took to discover the incident.

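- Mean Time To Resolution (MTTR): How long it took to fully resolve the incident, including any follow-up fix.

- Mean Time To Recovery (also abbreviated MTTR): How long it took to restore service to a usable level, which can be shorter than full resolution. Because the two metrics share an acronym, it’s worth stating which one is being reported.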

- Customer Impact: Number of affected users, revenue loss (if applicable), and negative feedback.

- Incident Severity Levels: Categorizing incidents by severity allows tracking of how many critical vs. minor incidents occurred.

- Post-Incident Surveys/Feedback: Gathering feedback helps determine if the resolution effectively addressed the root cause and the users’ needs.

These metrics, analyzed together, provide a holistic view of the effectiveness of our troubleshooting efforts. Improvements in MTTD and MTTR demonstrate efficient response and resolution. Tracking customer impact ensures that we are prioritizing user experience.
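Computing these metrics is simple once each incident records a few timestamps. This sketch uses a hypothetical `Incident` record and assumes MTTR here means mean time to recovery, measured from detection to restoration; some teams measure from the start of the fault instead, so the definition should be agreed on first.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass
class Incident:
    started: datetime   # when the fault actually began
    detected: datetime  # when an alert or user report flagged it
    restored: datetime  # when service was usable again

def mean_duration(durations: list[timedelta]) -> timedelta:
    """Average a list of timedeltas (timedelta supports division by int)."""
    return sum(durations, timedelta()) / len(durations)

def mttd(incidents: list[Incident]) -> timedelta:
    return mean_duration([i.detected - i.started for i in incidents])

def mttr(incidents: list[Incident]) -> timedelta:
    # Assumption: recovery measured from detection to restoration.
    return mean_duration([i.restored - i.detected for i in incidents])

t0 = datetime(2024, 5, 1, 9, 0)
incidents = [
    Incident(t0, t0 + timedelta(minutes=10), t0 + timedelta(minutes=70)),
    Incident(t0, t0 + timedelta(minutes=20), t0 + timedelta(minutes=50)),
]
print("MTTD:", mttd(incidents))  # MTTD: 0:15:00
print("MTTR:", mttr(incidents))  # MTTR: 0:45:00
```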

Q 18. How familiar are you with automated incident response systems?

I have extensive experience with automated incident response systems. These systems automate parts of the incident management lifecycle, improving efficiency and reducing human error. Think of them as a first responder for your systems.

My experience includes working with systems that:

- Monitor system health: These systems constantly watch for anomalies, like CPU spikes or memory leaks, and trigger alerts.

- Automate responses: They can automatically restart services, scale resources up or down, or execute pre-defined remediation scripts.

- Escalate incidents: When automation can’t handle an issue, they escalate it to the appropriate teams, providing crucial context.

- Integrate with monitoring and logging tools: They pull data from various sources to provide a comprehensive view of the incident.

For example, I’ve worked with systems that automatically restart a web server if CPU usage exceeds 90%, significantly reducing downtime. These systems are essential in today’s fast-paced environment, enabling faster and more consistent responses to incidents.
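A restart-on-high-CPU rule is safer with two guards: the breach must be sustained, and the number of automatic restarts is capped before a human is paged. This is a sketch only; `restart` and `escalate` are hypothetical callables standing in for whatever the service manager and paging system actually provide, and the thresholds are assumptions.

```python
from typing import Callable

def remediate(
    cpu_samples: list[float],
    restart: Callable[[], None],
    escalate: Callable[[str], None],
    restarts_so_far: int,
    threshold: float = 90.0,
    sustained: int = 5,
    max_restarts: int = 2,
) -> str:
    """Decide on one action for the latest window of CPU samples."""
    if sustained < 1:
        raise ValueError("sustained must be at least 1")
    window = cpu_samples[-sustained:]
    if len(window) < sustained or not all(v > threshold for v in window):
        return "ok"
    if restarts_so_far < max_restarts:
        restart()  # hypothetical hook, e.g. a wrapper around the service manager
        return "restarted"
    # Automation has used its budget: hand over to a human with context.
    escalate(f"CPU above {threshold}% after {restarts_so_far} restarts")
    return "escalated"

actions = []
hot = [95.0, 97.0, 96.0, 99.0, 98.0]
first = remediate(hot, lambda: actions.append("restart"), actions.append, restarts_so_far=0)
second = remediate(hot, lambda: actions.append("restart"), actions.append, restarts_so_far=2)
print(first, second, actions)
```

Capping restarts matters because a service that keeps spiking after a restart usually has a real defect that restarting only hides.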

Q 19. Describe your experience with using version control systems for troubleshooting.

Version control systems (VCS) are indispensable for troubleshooting, especially in complex software environments. They provide a detailed history of code changes, allowing us to pinpoint the source of issues. Think of it as a time machine for your code.

I’m proficient with Git and have used it extensively for troubleshooting. My workflow often involves:

- Reviewing commit history: Identifying recent changes that might have introduced bugs.

- Rolling back changes: Quickly reverting to a previous working version to restore functionality. This is often a crucial step in quickly resolving critical issues.

- Comparing code versions: Identifying differences between the working and broken versions helps isolate the root cause.

- Using branching and merging: Experimenting with fixes in separate branches before merging them into the main codebase, minimizing risks.

In one particular incident, we used Git’s history to pinpoint a specific code change that caused a database performance bottleneck. Rolling back that change immediately resolved the issue. Without Git, the problem would have been significantly harder to trace and fix.
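When the history is long, Git’s built-in `git bisect` finds the offending commit by binary search, and `git bisect run` can drive it with a test script. The sketch below shows the same search in plain Python; the commit names and the `is_good` check are hypothetical stand-ins for checking out a commit and running a test.

```python
from typing import Callable, Sequence

def first_bad_commit(commits: Sequence[str], is_good: Callable[[str], bool]) -> str:
    """Binary search over commits ordered oldest to newest, assuming
    commits[0] is known good and commits[-1] is known bad."""
    lo, hi = 0, len(commits) - 1  # invariant: commits[lo] good, commits[hi] bad
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if is_good(commits[mid]):
            lo = mid
        else:
            hi = mid
    return commits[hi]

# Hypothetical history where the regression landed in commit c6.
commits = [f"c{i}" for i in range(10)]
print(first_bad_commit(commits, lambda c: int(c[1:]) < 6))
```

Each test halves the remaining range, so even a thousand commits need only about ten checks.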

Q 20. What is your experience with different database systems and their troubleshooting?

My experience encompasses various database systems, including MySQL, PostgreSQL, MongoDB, and SQL Server. Troubleshooting database issues requires a deep understanding of the specific system’s architecture, query optimization, and error logging. Think of each database as having its own language and quirks.

My troubleshooting approach generally involves:

- Analyzing database logs: These logs provide crucial information about errors, performance issues, and deadlocks.

- Monitoring resource usage: Checking CPU, memory, and disk I/O to identify performance bottlenecks.

- Query optimization: Improving the efficiency of database queries to reduce response times.

- Schema analysis: Examining the database schema to identify design flaws or inconsistencies that might be contributing to problems.

- Data integrity checks: Ensuring data consistency and accuracy.

For instance, I once resolved a performance issue in a MySQL database by identifying and optimizing poorly written queries that were causing table scans instead of using indexes. This reduced query execution time by over 80%, significantly improving the overall application performance.
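The usual first step for a slow query is to read the execution plan. In MySQL that is `EXPLAIN`, where a full table scan shows up as access type `ALL`. To keep the sketch self-contained it uses SQLite (bundled with Python) and its `EXPLAIN QUERY PLAN` instead; the `orders` table and index name are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 500, i * 1.5) for i in range(10_000)],
)

QUERY = "SELECT * FROM orders WHERE customer_id = ?"

def plan(sql: str) -> str:
    """Return the human-readable detail column of SQLite's query plan."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, (42,)).fetchall()
    return " | ".join(row[3] for row in rows)

before = plan(QUERY)  # full scan of orders
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(QUERY)   # index lookup on customer_id
print(before)
print(after)
```

The exact wording of the plan varies between SQLite versions, but the change from a scan to a search using the index is the signal to look for.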

Q 21. How do you handle situations where multiple teams are involved in resolving a production issue?

Handling multi-team incidents requires clear communication, well-defined roles, and a collaborative approach. Think of it as coordinating an orchestra: each section plays a crucial part, but the conductor ensures harmony.

My approach includes:

- Establish a central communication channel: Using a collaborative tool (like Slack or Microsoft Teams) ensures everyone is informed and updates are easily shared.

- Define clear roles and responsibilities: Assigning specific tasks to different teams based on their expertise avoids confusion and ensures accountability.

- Regular updates and status reports: Keeping all involved teams updated on progress fosters transparency and allows for quick adjustments.

- Use a shared incident management system: This provides a central location for tracking the issue, progress, and related documentation.

- Facilitate collaboration and conflict resolution: Ensuring respectful and efficient communication between teams is critical in resolving the issue promptly.

In one case, a production issue involved the development, database, and network teams. By establishing a clear communication channel, assigning specific roles (database team focused on schema checks, developers on code review, network team on connectivity), and holding regular status updates, we resolved the issue in a timely and collaborative manner.

Q 22. What is your experience with capacity planning and performance tuning?

Capacity planning and performance tuning are crucial for maintaining a healthy and responsive production environment. Capacity planning involves predicting future resource needs – things like CPU, memory, storage, and network bandwidth – based on historical data, projected growth, and anticipated workloads. Performance tuning, on the other hand, focuses on optimizing existing resources to improve application speed, efficiency, and scalability.

In my experience, I’ve used various tools and techniques for both. For capacity planning, I’ve leveraged tools like monitoring dashboards (e.g., Datadog, Prometheus) to analyze historical trends and predict future needs. I also collaborate closely with development teams to understand upcoming features and their resource implications. For performance tuning, I use profiling tools to identify bottlenecks, such as slow database queries or inefficient code. For example, I once identified a database query that was slowing down a critical application by a factor of 10. After optimizing the query with appropriate indexing and refactoring, we saw a dramatic improvement in response time.

I take a proactive approach, regularly reviewing resource utilization and scaling resources before they become a bottleneck. This minimizes the risk of performance degradation and ensures a smooth user experience.
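A simple capacity forecast fits a trend line to historical utilization and estimates when it will cross a limit. This sketch uses ordinary least squares on hypothetical weekly disk-usage figures and assumes growth stays linear, which real workloads often do not, so it is a prompt for review rather than a precise prediction.

```python
from typing import Optional

def linear_fit(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares slope and intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def periods_until(limit: float, xs: list[float], ys: list[float]) -> Optional[float]:
    """Periods after the last observation until the trend reaches `limit`,
    or None if usage is flat or shrinking."""
    slope, intercept = linear_fit(xs, ys)
    if slope <= 0:
        return None
    return (limit - intercept) / slope - xs[-1]

weeks = [0, 1, 2, 3, 4, 5]
disk_used_pct = [50, 52, 54, 56, 58, 60]  # hypothetical history
print(periods_until(80, weeks, disk_used_pct))  # 10.0 weeks until 80% full
```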

Q 23. Describe your approach to root cause analysis.

My approach to root cause analysis is systematic and data-driven. I follow a structured methodology, often using a variation of the 5 Whys technique combined with data analysis. This involves asking ‘why’ repeatedly to drill down to the root cause of a problem, instead of just addressing the symptoms. I typically start by gathering data from various sources – logs, monitoring systems, application metrics, and user feedback. Then, I reconstruct the sequence of events that led to the problem. Let’s say we have a website outage. I would first gather metrics on server load, network traffic, and database response times. Then, I’d examine logs to identify any error messages or unusual activity leading up to the failure. I would continue asking ‘why’ until I unearth the fundamental cause, perhaps a poorly written database query, or a lack of sufficient capacity.

My focus is always on objective evidence, rather than speculation. I create a clear timeline of events and meticulously document each step of my investigation. This not only helps to resolve the immediate issue but also prevents similar problems from recurring in the future. It’s like detective work for software, but instead of a magnifying glass we use metrics and logs.
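Building the timeline usually means merging events from several sources. If each source is already sorted, `heapq.merge` interleaves them in order without re-sorting everything. The events, sources, and release number below are hypothetical.

```python
import heapq
from datetime import datetime

# Hypothetical events from three sources, each already sorted by time.
app_log = [(datetime(2024, 5, 1, 9, 2), "app", "p99 latency above 2s"),
           (datetime(2024, 5, 1, 9, 5), "app", "HTTP 503s begin")]
db_log = [(datetime(2024, 5, 1, 9, 1), "db", "slow query: full scan on orders")]
deploys = [(datetime(2024, 5, 1, 8, 58), "deploy", "release 1.4.2 rolled out")]

def build_timeline(*sources):
    """Merge per-source event lists into one chronological timeline."""
    return list(heapq.merge(*sources, key=lambda event: event[0]))

for ts, source, message in build_timeline(app_log, db_log, deploys):
    print(f"{ts:%H:%M}  {source:<6}  {message}")
```

Laid out this way, the deploy three minutes before the first slow query stands out immediately as the first "why" to ask about.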

Q 24. How do you ensure data integrity during troubleshooting and resolution?

Data integrity is paramount during troubleshooting and resolution. My approach involves several key strategies to ensure data remains accurate and consistent. First, I always take backups before making any significant changes to the system. This acts as a safety net in case something goes wrong. Second, I use version control systems (like Git) for code changes, allowing me to revert to previous states if needed. Third, I meticulously check data consistency using various validation checks. For example, if a database is impacted, I would run checksums to verify data integrity after the resolution. In one instance, we were troubleshooting a data corruption issue caused by a faulty hard drive. By meticulously comparing the data on the faulty drive with a backup, we were able to recover the lost data and restore system integrity.

I also make use of automated testing, running various tests (unit, integration, etc.) to confirm that data transformations or fixes haven’t introduced new errors. The whole process follows a strict change management procedure with rigorous approvals.
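A checksum comparison is most useful for data the fix was not supposed to change: take a digest before the change and compare it afterwards. This sketch hashes every row of a hypothetical `accounts` table in primary-key order with SHA-256; the table and column names are assumptions, and they are interpolated into SQL, so only trusted identifiers should be passed.

```python
import hashlib
import sqlite3

def table_checksum(conn: sqlite3.Connection, table: str, key: str) -> str:
    """SHA-256 over every row, read in key order so the digest is deterministic.
    `table` and `key` must be trusted identifiers (they are not parameterized)."""
    digest = hashlib.sha256()
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key}"):
        digest.update(repr(row).encode("utf-8"))
    return digest.hexdigest()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER)")
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 250), (3, 75)])

before = table_checksum(conn, "accounts", "id")
# ... apply the fix here (it should not touch the accounts table) ...
after = table_checksum(conn, "accounts", "id")
print("data unchanged" if before == after else "investigate: data changed")
```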

Q 25. How do you handle security-related incidents in production?

Handling security-related incidents requires a swift and coordinated response. My approach follows a well-defined incident response plan, which involves immediate containment, eradication, recovery, and post-incident analysis. Upon discovering a security breach, the first step is to contain the threat by isolating affected systems or accounts to prevent further damage. This could involve blocking malicious IP addresses or temporarily suspending affected services. Next, I work to eradicate the threat, removing malware or fixing vulnerabilities. This might involve patching systems, resetting passwords, or performing a forensic analysis. The recovery phase involves returning systems to normal operation from a verified-clean state, and finally, a post-incident analysis is performed to identify weaknesses and prevent future breaches.

Communication is key during security incidents. I collaborate closely with security teams, legal, and potentially law enforcement. We adhere strictly to organizational security policies and regulations during the entire process. Transparency and careful documentation are critical for successful resolution and learning from the experience.

Q 26. What is your experience with disaster recovery and business continuity planning?

Disaster recovery (DR) and business continuity planning (BCP) are crucial aspects of production support. My experience involves developing and implementing DR/BCP plans that ensure minimal disruption to business operations in the event of a disaster. This includes identifying critical systems and applications, defining recovery time objectives (RTOs) and recovery point objectives (RPOs), and establishing backup and recovery procedures. I’ve worked with various technologies for DR, such as replication, backups to cloud storage, and failover systems.

For example, I’ve designed and implemented a DR plan that uses cloud-based replication for a critical e-commerce application. In case of a primary data center failure, the application automatically fails over to a secondary cloud-based instance, minimizing downtime. Regular drills and testing are essential to validate the effectiveness of the DR/BCP plan. These drills help identify and address any gaps or weaknesses in the plan, ensuring it’s ready when needed. A well-executed DR plan is like having a fire escape – you hope you never need it, but you’re glad it’s there when you do.
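Automatic failover is usually driven by repeated health checks rather than a single failed probe, so that a momentary blip does not trigger a switch. The Python sketch below shows that idea with a consecutive-failure threshold. The class name `FailoverController`, the default threshold of three, and the injected `check_primary` callable are hypothetical. A production setup would typically rely on the cloud provider’s health checks and DNS or load-balancer failover rather than hand-rolled code.

```python
from typing import Callable


class FailoverController:
    """Serve from the primary until it fails `threshold` consecutive health
    checks, then switch to the secondary (hypothetical sketch)."""

    def __init__(self, check_primary: Callable[[], bool], threshold: int = 3):
        self.check_primary = check_primary
        self.threshold = threshold
        self.failures = 0
        self.active = "primary"

    def tick(self) -> str:
        """Run one health-check cycle and return the active site."""
        if self.active == "primary":
            if self.check_primary():
                self.failures = 0  # any success resets the streak
            else:
                self.failures += 1
                if self.failures >= self.threshold:
                    self.active = "secondary"  # fail over; failback stays manual
        return self.active


if __name__ == "__main__":
    # Simulated probe results: one blip, recovery, then a sustained outage.
    results = iter([True, False, True, False, False, False])
    controller = FailoverController(lambda: next(results), threshold=3)
    print([controller.tick() for _ in range(6)])
```

Because `check_primary` is injected, the same logic can be exercised during a DR drill by feeding it simulated failures. Failback is deliberately left manual so that a flapping primary does not bounce traffic between sites.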

Q 27. Explain your experience with different cloud platforms and their troubleshooting.

I have extensive experience with various cloud platforms, including AWS, Azure, and GCP. My troubleshooting approach is similar across platforms, but the specific tools and services differ. For instance, on AWS, I use services like CloudWatch for monitoring, X-Ray for application tracing, and CloudTrail for auditing. On Azure, I use Azure Monitor, Application Insights, and Azure Log Analytics. GCP offers similar services like Cloud Monitoring, Cloud Trace, and Cloud Logging.

Troubleshooting in the cloud often involves understanding the cloud provider’s infrastructure and service offerings. For example, if I encounter a network issue, I’ll investigate VPC configurations, route tables, security groups, and network ACLs on AWS, or the equivalent resources on Azure (virtual networks, route tables, network security groups) and GCP (VPC networks, routes, firewall rules). Cloud-native tools are invaluable for efficient troubleshooting because they provide centralized logging, monitoring, and tracing out of the box, whereas on-premises environments often have to assemble the same capabilities from separate tools.
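When a connection is refused in a cloud network, a quick first question is whether any inbound allow rule actually matches the traffic. The Python sketch below evaluates simplified, security-group-style allow rules with the standard `ipaddress` module. The `InboundRule` shape and the `"all"` protocol value are hypothetical simplifications, not any provider’s API; real troubleshooting would also cover route tables, network ACLs, and host firewalls.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class InboundRule:
    protocol: str   # "tcp", "udp", or "all" (hypothetical simplified values)
    from_port: int
    to_port: int
    cidr: str       # allowed source range, e.g. "10.0.0.0/16"


def is_allowed(rules, protocol, port, source_ip):
    """Return True if any allow rule matches protocol, port, and source IP.

    Models allow-only rules, as security groups use: there are no deny
    rules, so rule order does not matter.
    """
    ip = ipaddress.ip_address(source_ip)
    for rule in rules:
        if rule.protocol != "all":
            if rule.protocol != protocol:
                continue
            if not rule.from_port <= port <= rule.to_port:
                continue
        # An IPv6 address never matches an IPv4 range (and vice versa).
        if ip in ipaddress.ip_network(rule.cidr):
            return True
    return False


if __name__ == "__main__":
    rules = [
        InboundRule("tcp", 443, 443, "0.0.0.0/0"),   # HTTPS from anywhere
        InboundRule("tcp", 22, 22, "10.0.0.0/16"),   # SSH from the VPC only
    ]
    print(is_allowed(rules, "tcp", 22, "203.0.113.5"))
```

Here the SSH check from 203.0.113.5 returns `False` because port 22 is open only to `10.0.0.0/16`, which points the investigation at the rule set rather than at the application.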

Q 28. How do you stay up-to-date with the latest technologies and best practices related to production support?

Staying current with new technologies and best practices is essential for effective production support. I regularly attend industry conferences and webinars, read technical blogs and publications, and take part in online communities and forums. I also pursue certifications relevant to my field, and I experiment with new tools in controlled environments to gain practical experience before introducing them to production. This keeps me adaptable when new challenges arise and lets me use the latest tools to keep systems performant and reliable. It’s a constant learning journey, and I embrace the challenge of keeping my skills sharp.

Key Topics to Learn for Troubleshoot and Resolve Production Problems Interview

- Understanding Production Environments: Grasp the complexities of different production environments (cloud, on-premise, hybrid), including infrastructure components and dependencies.

- Log Analysis and Monitoring Tools: Become proficient in using various monitoring and logging tools (e.g., Splunk, ELK stack, CloudWatch) to identify and interpret error messages and performance bottlenecks. Practical application: Walk through a scenario of analyzing logs to pinpoint the root cause of a production issue.

- Debugging Techniques: Master debugging methodologies – systematic approaches to isolate and resolve issues. This includes understanding code tracing, breakpoint usage, and utilizing debugging tools within your chosen programming languages.

- Incident Management and Response: Learn the principles of incident management – prioritizing, escalating, and resolving issues effectively while minimizing downtime. Practical application: Discuss your experience with incident response processes and workflows.

- Root Cause Analysis (RCA): Develop your ability to conduct thorough RCA investigations to prevent similar issues from recurring. This involves understanding the 5 Whys methodology and other RCA frameworks.

- System Architecture and Design: Familiarize yourself with different system architectures (microservices, monolithic) and how the design affects troubleshooting. This includes understanding how components interact and depend on each other.

- Performance Tuning and Optimization: Learn techniques to identify and resolve performance bottlenecks, including database query optimization and code optimization strategies.

- Communication and Collaboration: Practice explaining complex technical issues clearly and concisely to both technical and non-technical audiences. Highlight your experience collaborating with teams to resolve production issues.
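For the log-analysis scenario in the list above, a first pass often means counting errors per service and finding the earliest error, since the service that fails first is frequently closer to the root cause than the one that fails loudest. The Python sketch below assumes a hypothetical plain-text format (`<ISO-8601 UTC timestamp> <LEVEL> <service> <message>`); tools such as Splunk or the ELK stack would normally do this aggregation for you.

```python
import re
from collections import Counter

# Hypothetical format: "<timestamp> <LEVEL> <service> <message>"
LINE = re.compile(r"^(?P<ts>\S+)\s+(?P<level>[A-Z]+)\s+(?P<service>\S+)\s+(?P<msg>.*)$")


def summarize_errors(lines):
    """Return (error counts per service, earliest error as (ts, service, msg)).

    Timestamps are compared as strings, which is only valid because the
    assumed format uses fixed-width ISO-8601 UTC timestamps.
    """
    by_service = Counter()
    first_error = None
    for line in lines:
        m = LINE.match(line)
        if not m or m["level"] != "ERROR":
            continue  # skip unparseable lines and non-error levels
        by_service[m["service"]] += 1
        if first_error is None or m["ts"] < first_error[0]:
            first_error = (m["ts"], m["service"], m["msg"])
    return by_service, first_error


if __name__ == "__main__":
    sample = [
        "2024-05-01T10:00:05Z ERROR checkout upstream call failed: payment-service timeout",
        "2024-05-01T10:00:01Z ERROR payment-service connection pool exhausted",
        "2024-05-01T10:00:02Z INFO checkout request served",
        "2024-05-01T10:00:06Z ERROR checkout upstream call failed: payment-service timeout",
    ]
    counts, first = summarize_errors(sample)
    print(counts.most_common())
    print(first)
```

In this sample, `checkout` logs the most errors, but the earliest error comes from `payment-service`, which suggests investigating its database connection pool first.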

Next Steps

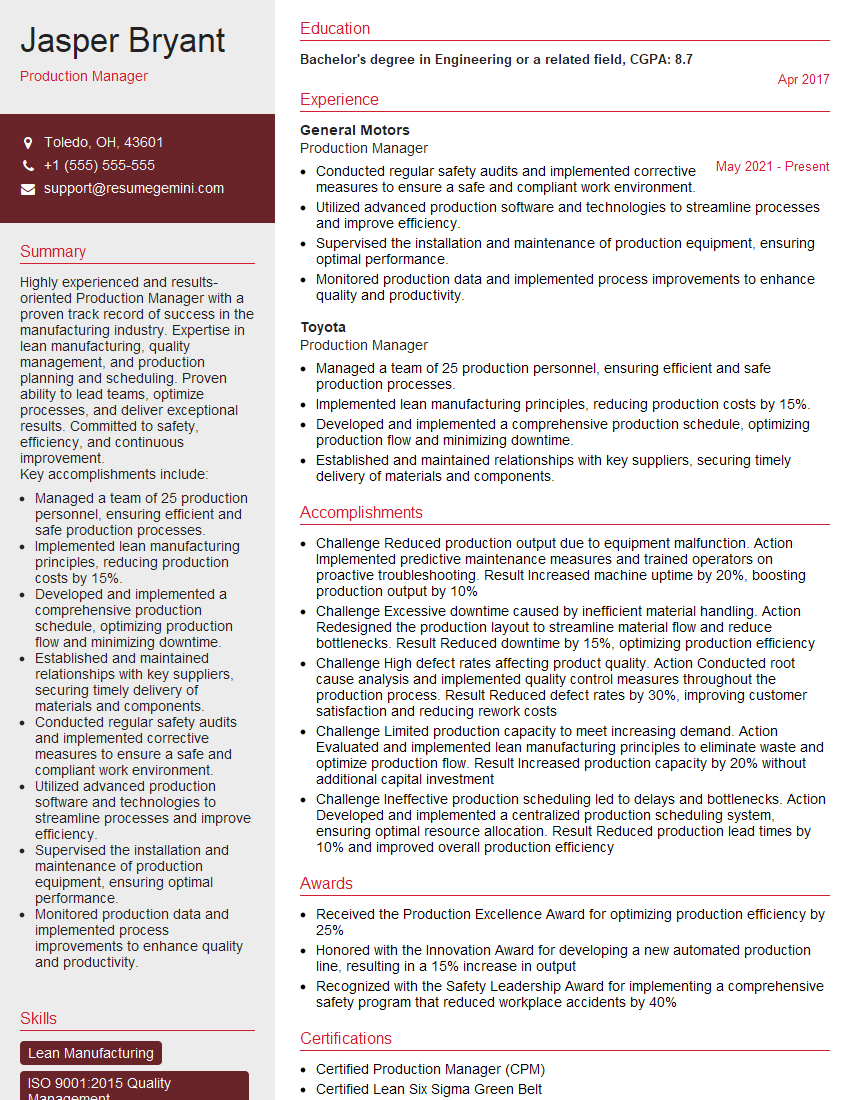

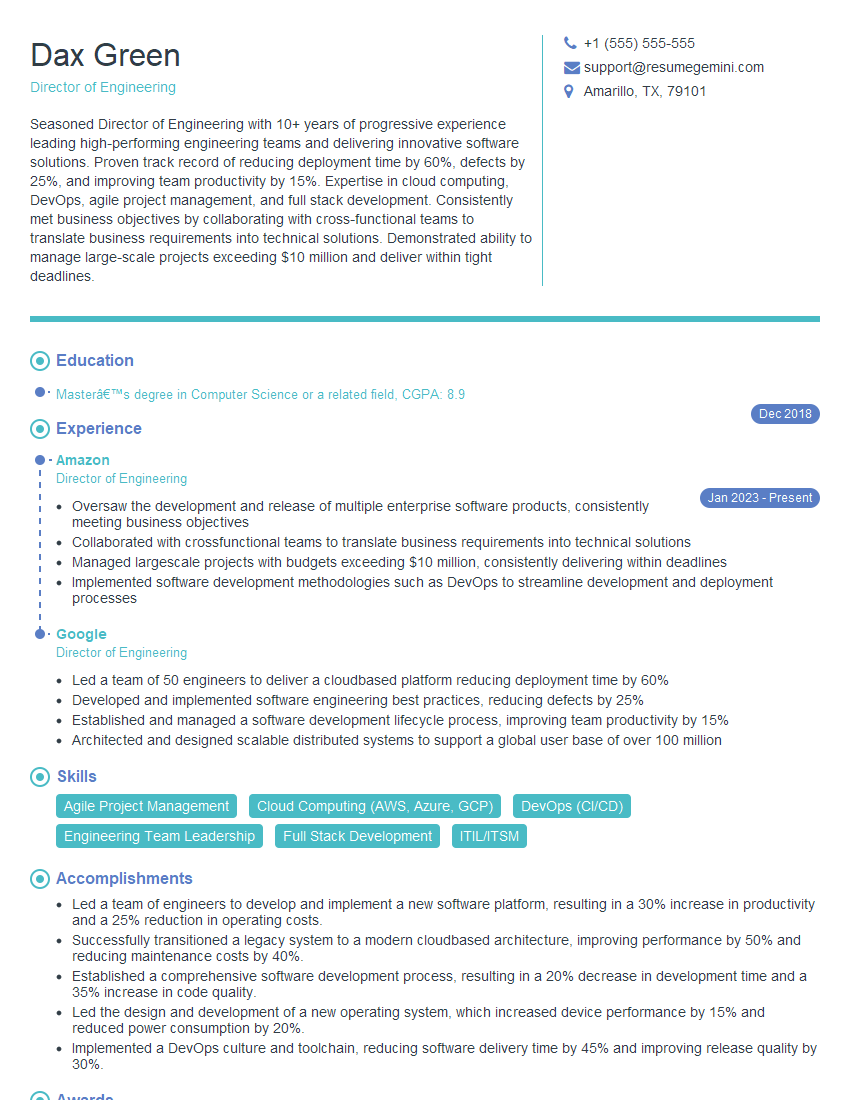

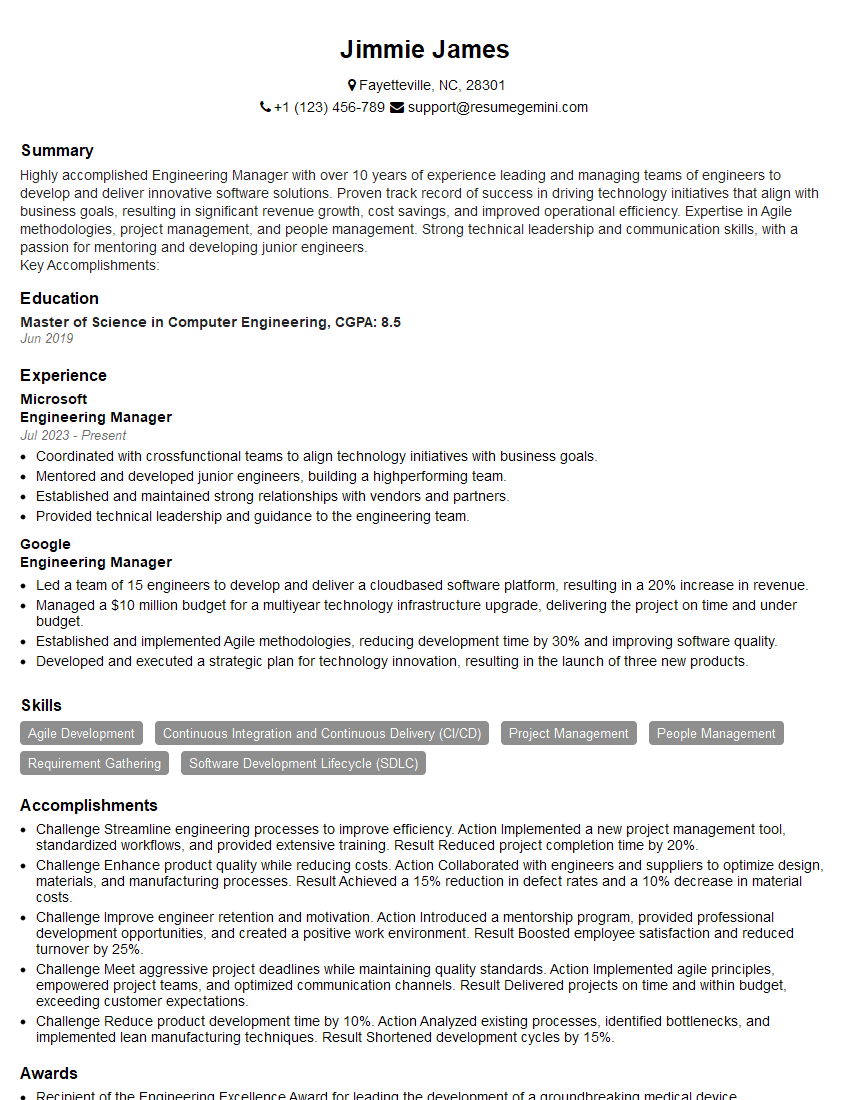

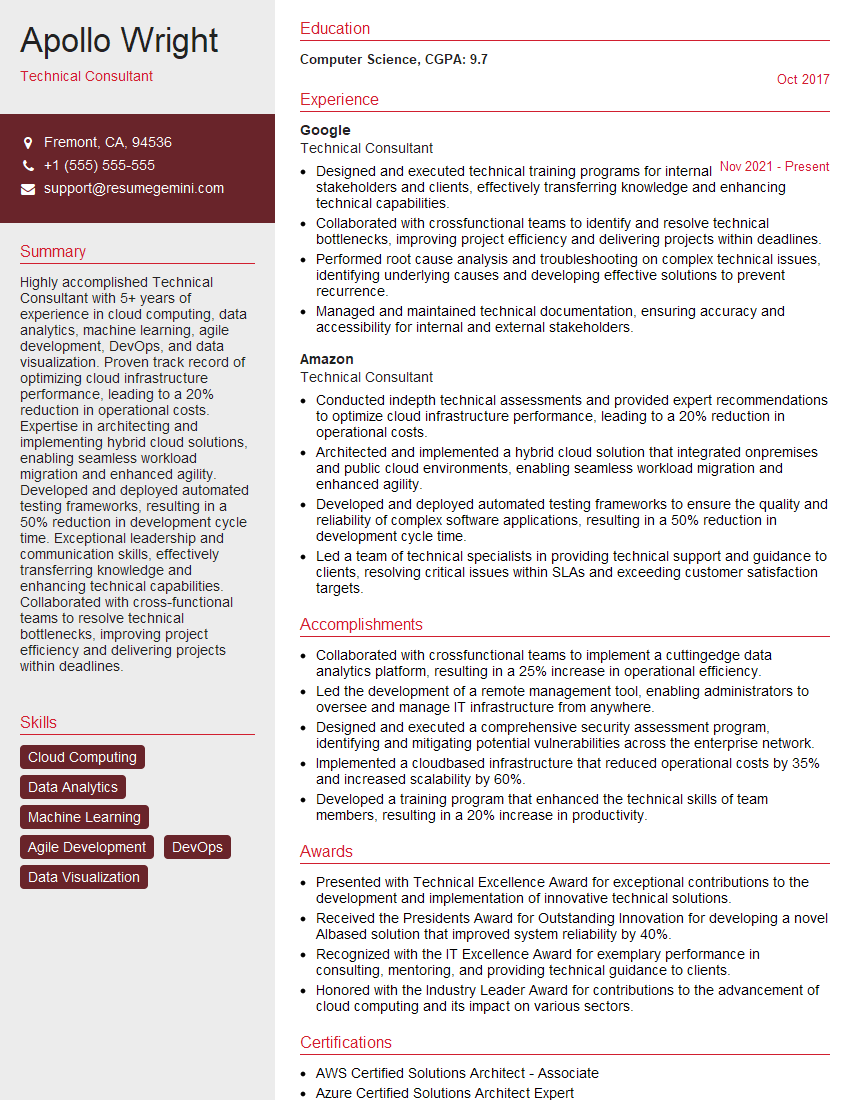

Mastering the art of troubleshooting and resolving production problems is crucial for career advancement in any technical field. It demonstrates critical thinking, problem-solving skills, and the ability to handle pressure – all highly valued attributes. To enhance your job prospects, it’s vital to present these skills effectively on your resume. Creating an ATS-friendly resume is key to getting your application noticed. ResumeGemini is a trusted resource that can help you build a professional, impactful resume tailored to highlight your capabilities in this area. Examples of resumes tailored to “Troubleshoot and resolve production problems” are available to help you craft your winning application.
