Interviews are more than just a Q&A session—they’re a chance to prove your worth. This blog dives into essential Microsoft Certified Solutions Associate (MCSA): Windows Server interview questions and expert tips to help you align your answers with what hiring managers are looking for. Start preparing to shine!

Questions Asked in Microsoft Certified Solutions Associate (MCSA): Windows Server Interview

Q 1. Explain the different types of Windows Server installations.

Windows Server offers several installation options, each tailored to specific needs. The primary choices are:

- Server Core installation: This minimal installation includes only the core operating system components. It’s ideal for servers managed remotely, offering enhanced security and reduced attack surface because it has fewer services running. Think of it like a lean, mean, efficient machine perfect for specific tasks.

- Server with a GUI (called Server with Desktop Experience from Windows Server 2016 onward): This provides the full Windows Server experience, including a desktop environment and all the graphical tools. It’s easier for administrators who prefer a visual interface and for situations needing more interactive management.

- Nano Server installation: An extremely lightweight installation option, optimized for virtualization and containers. It’s headless (no GUI) and has an even smaller resource footprint than Server Core. It was offered as a host installation option only in Windows Server 2016; later releases provide it solely as a container base image. This is for advanced users and scenarios requiring maximum efficiency and minimal attack surface.

- Workstation installation: While technically not a ‘server’ installation, Windows 10 or 11 Pro/Enterprise installations can be used as servers in small or testing environments. However, it’s not recommended for production environments due to limitations in features and scalability compared to dedicated server operating systems.

Choosing the right installation type depends heavily on the server’s role and the administrator’s comfort level. For a highly secure and remotely managed file server, Server Core might be perfect. For a server managing various applications requiring frequent interaction, the GUI option is more suitable.
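To check which installation option an existing server is running, one quick approach is to read the value Windows records in the registry. This is a minimal sketch to run locally on the server in question.

```powershell
# Read the installation type recorded by Windows setup.
# Typical values include "Server" (GUI / Desktop Experience) and "Server Core".
$key = 'HKLM:\SOFTWARE\Microsoft\Windows NT\CurrentVersion'
(Get-ItemProperty -Path $key).InstallationType
```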

Q 2. Describe the roles and responsibilities of Active Directory.

Active Directory (AD) is the cornerstone of Windows domain-based networks. It’s a directory service that manages user accounts, computer accounts, groups, and other security objects. Its roles and responsibilities include:

- Authentication: Verifying the identity of users and computers trying to access network resources. Think of it as the network’s bouncer, ensuring only authorized individuals get in.

- Authorization: Determining what resources authenticated users are allowed to access. This defines what each user can and cannot do on the network.

- Centralized Management: Providing a single point of management for users and computers across the entire network. Instead of configuring each computer individually, changes can be made centrally through AD.

- Group Policy Management: Enabling centralized configuration and management of computers and users through Group Policy Objects (GPOs). This allows for enforcing settings, software installations, and security policies across the network.

- Replication: Maintaining consistent data across multiple domain controllers. This ensures high availability and redundancy – if one domain controller goes down, others can still provide services.

In essence, Active Directory acts as a central database and management tool, ensuring efficient and secure access to network resources. It’s the backbone of many enterprise networks, maintaining order and control within complex IT landscapes.

Q 3. How do you troubleshoot Active Directory replication issues?

Troubleshooting Active Directory replication issues requires a systematic approach. Here’s a breakdown:

- Identify the Affected Domains and Domain Controllers: Use tools like repadmin (command-line) or Active Directory Sites and Services (GUI) to pinpoint which domain controllers aren’t replicating correctly. Look for replication delays or errors.

- Check Network Connectivity: Ensure proper network connectivity between the domain controllers. Ping tests, tracert, and checking firewall rules are essential steps. Network problems are the most common cause of replication issues.

- Review Event Logs: Examine the Directory Service event logs on the affected domain controllers for error messages. These logs provide valuable clues about the nature and source of the problem. Search for event IDs related to replication failures.

- Use Repadmin Commands: Use repadmin /showrepl to check replication status and identify any replication errors. repadmin /queue shows inbound replication requests that are still pending. repadmin /syncall can force a replication sync, but use it cautiously.

- Check DNS Configuration: Incorrect DNS settings can severely impact replication. Ensure that the domain controllers can resolve names correctly.

- Verify Domain Controller Health: Check the health of the affected domain controllers – are they running low on resources like disk space or memory? Are there any other system errors?

- Check for Site Link Issues: If your network utilizes multiple sites, examine site link configurations for any issues. These links define how replication occurs between sites. Ensure the correct connections and costs are set.

- Consider Schema Issues: Conflicts in the Active Directory Schema can block replication. Schema versions should be consistent across all domain controllers.

Remember to carefully document your findings and steps taken throughout the troubleshooting process. The key is to be methodical and patient.
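As a rough illustration of the first checks, the commands below could be run from a domain controller or a management machine with the AD tools installed. The domain name corp.example.com is a placeholder, and the ActiveDirectory module is assumed to be available.

```powershell
# Summarize replication health across all domain controllers.
repadmin /replsummary

# Show inbound replication partners and the result of the last attempt.
repadmin /showrepl

# List recorded replication failures for every DC in the domain.
# 'corp.example.com' is a placeholder; substitute your own domain.
Import-Module ActiveDirectory
Get-ADReplicationFailure -Target 'corp.example.com' -Scope Domain |
    Select-Object Server, Partner, FailureCount, FirstFailureTime, LastError
```

Starting with the summary and drilling into individual partners keeps the investigation methodical, which matches the step-by-step approach described above.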

Q 4. What are Group Policy Objects (GPOs) and how are they used?

Group Policy Objects (GPOs) are sets of rules and settings that determine how computers and users within an Active Directory domain are configured. Think of them as customizable blueprints defining network behavior.

They are used to:

- Enforce Security Settings: Control user access rights, passwords, and security policies, enhancing network security.

- Manage Software Deployment: Deploy software automatically to computers, ensuring consistency and reducing manual intervention.

- Configure System Settings: Set Windows settings like desktop backgrounds, network settings, and printer mappings.

- Automate Tasks: Automate various administrative tasks through scripts and scheduled actions.

- Centralize Management: Manage settings for numerous computers and users from a central location in Active Directory.

GPOs are linked to Organizational Units (OUs) or directly to the domain. This allows for targeted application of policies to specific groups of computers or users. For example, you might create a GPO to control settings for all marketing computers within a specific OU, without affecting other departments.

Using GPOs, administrators can effectively enforce consistency, streamline management, and enhance security across a large and complex network environment.
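The marketing example can be sketched with the GroupPolicy module. The GPO name and the OU path below are hypothetical placeholders, and the settings inside the GPO would still need to be configured separately.

```powershell
Import-Module GroupPolicy

# Create an empty GPO and link it to the (hypothetical) Marketing OU.
New-GPO -Name 'Marketing Workstations' -Comment 'Baseline for marketing PCs' |
    New-GPLink -Target 'OU=Marketing,DC=corp,DC=example,DC=com'

# On a client in that OU: refresh policy, then list the applied GPOs.
gpupdate /force
gpresult /r
```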

Q 5. Explain the concept of DNS in a Windows Server environment.

In a Windows Server environment, the Domain Name System (DNS) acts as the network’s phonebook. It translates human-readable domain names (like www.example.com) into machine-readable IP addresses (like 192.168.1.100) and vice versa. This allows computers to locate each other on the network.

A Windows Server acting as a DNS server provides:

- Name Resolution: Translates domain names to IP addresses and vice versa, enabling clients to connect to services and other computers by their names rather than remembering complex IP addresses.

- Domain Name Registration and Management: Manages the zone files containing mappings between names and IP addresses. This ensures correct and consistent mappings for all resources.

- Support for Active Directory: Plays a critical role in Active Directory functionality; for example, locating domain controllers.

- Resource Record Management: Manages several types of resource records (like A, AAAA, CNAME, MX, etc.) defining how different types of network resources are located.

Without a properly functioning DNS server, users wouldn’t be able to access network resources using user-friendly names. It is essential for any network’s usability and efficiency. Imagine trying to remember all the IP addresses of websites and servers – a nightmare!
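A few lookups illustrate these points. The names and zone below are placeholders, and the last command assumes it runs on a server with the DNS Server role and an existing corp.example.com zone.

```powershell
# Forward lookup: name to IPv4 address.
Resolve-DnsName -Name 'www.example.com' -Type A

# SRV lookup that clients use to locate domain controllers.
Resolve-DnsName -Name '_ldap._tcp.dc._msdcs.corp.example.com' -Type SRV

# On the DNS server: add an A record to an existing zone (placeholder values).
Add-DnsServerResourceRecordA -ZoneName 'corp.example.com' -Name 'app01' -IPv4Address '192.168.1.100'
```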

Q 6. How do you manage DHCP in a Windows Server network?

Dynamic Host Configuration Protocol (DHCP) automatically assigns IP addresses and other network configuration parameters to devices on a network. In a Windows Server environment, this is managed through the DHCP Server role.

Key management tasks include:

- Scope Creation and Management: Define IP address ranges (scopes) and assign them to specific parts of the network. This ensures efficient allocation of IP addresses and prevents conflicts.

- Reservation: Reserve specific IP addresses for important servers or devices, ensuring they always get the same address.

- Exclusion: Exclude IP addresses from the pool if they need to remain statically assigned or are not available for use.

- Lease Time Management: Set how long a device holds onto an assigned IP address before needing to renew. Shorter lease times improve address reclamation but increase overhead.

- Option Configuration: Configure DHCP options to provide additional network settings to clients, such as default gateways, DNS servers, and WINS servers.

- Monitoring and Troubleshooting: Monitor DHCP server health, address allocation, and troubleshoot any issues. Tools like the DHCP console and event logs are used for diagnostics.

Proper DHCP management is critical for a smoothly running network. Automatic IP address assignment eliminates manual configuration, reduces errors, and simplifies administration, especially in large networks.
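The management tasks above map onto cmdlets in the DhcpServer module. The following is a sketch only: every address, name, and MAC address is an invented example, and it assumes the DHCP Server role is already installed and authorized.

```powershell
# Scope: the pool of addresses handed out on the 192.168.1.0/24 subnet.
Add-DhcpServerv4Scope -Name 'Office LAN' -StartRange 192.168.1.50 -EndRange 192.168.1.200 `
    -SubnetMask 255.255.255.0 -LeaseDuration (New-TimeSpan -Days 8) -State Active

# Exclusion: keep part of the range out of the pool for static devices.
Add-DhcpServerv4ExclusionRange -ScopeId 192.168.1.0 -StartRange 192.168.1.50 -EndRange 192.168.1.59

# Reservation: always give this MAC address the same IP.
Add-DhcpServerv4Reservation -ScopeId 192.168.1.0 -IPAddress 192.168.1.60 `
    -ClientId '00-11-22-33-44-55' -Description 'Print server'

# Options: default gateway, DNS server, and DNS suffix for clients in the scope.
Set-DhcpServerv4OptionValue -ScopeId 192.168.1.0 -Router 192.168.1.1 `
    -DnsServer 192.168.1.10 -DnsDomain 'corp.example.com'
```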

Q 7. What are the different types of virtual switches in Hyper-V?

Hyper-V offers different types of virtual switches to connect virtual machines (VMs) to the network. These provide various levels of isolation and connectivity options:

- External Virtual Switch: This connects VMs directly to the physical network adapter. VMs on this switch can communicate with other devices on the network, including physical machines and devices.

- Internal Virtual Switch: VMs connected to this switch can communicate with other VMs on the same Hyper-V host and with the host operating system itself. They are isolated from the physical network, providing a level of security and containment.

- Private Virtual Switch: VMs connected to this switch can communicate only with other VMs on the same switch. Neither the Hyper-V host nor the physical network can reach them, which makes this the most isolated option and a good fit for sandboxed test environments.

The choice of switch type depends on the network design and the needs of the VMs. For example, a web server would likely use an external switch to be reachable from the internet. VMs used for internal testing might use an internal switch for security reasons.
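Each switch type can be created with New-VMSwitch from the Hyper-V module. The switch names and the adapter name 'Ethernet' are assumptions for illustration; check Get-NetAdapter for the real adapter name on your host.

```powershell
# External: bound to a physical network adapter.
New-VMSwitch -Name 'External-LAN' -NetAdapterName 'Ethernet' -AllowManagementOS $true

# Internal: not bound to any physical adapter.
New-VMSwitch -Name 'Internal-Lab' -SwitchType Internal

# Private: not bound to any physical adapter.
New-VMSwitch -Name 'Private-Isolated' -SwitchType Private

# Confirm what was created.
Get-VMSwitch | Select-Object Name, SwitchType
```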

Q 8. Describe the process of creating and managing virtual machines in Hyper-V.

Creating and managing virtual machines (VMs) in Hyper-V is a fundamental task for any Windows Server administrator. Think of Hyper-V as a virtual computer lab within your physical server, allowing you to run multiple operating systems simultaneously. The process involves several key steps:

- Creating a VM: You begin by opening the Hyper-V Manager, clicking ‘New’, and then selecting ‘Virtual Machine’. The wizard guides you through specifying the VM name, generation (Gen 1 or Gen 2 – Gen 2 offers enhanced security features), memory allocation, and network settings. You then select the location for the virtual hard disk (VHDX) file, which stores the VM’s data. It’s crucial to choose an appropriate storage location with sufficient space and performance.

- Installing the Guest OS: Once the VM is created, you need to install an operating system within it. This is done by connecting an ISO image of the OS (like Windows Server or Linux) as a virtual DVD drive. The installation process is similar to installing an OS on physical hardware.

- Managing the VM: After installation, you can manage the VM through the Hyper-V Manager. This includes actions like starting, stopping, pausing, and saving the VM’s state. You can also configure the VM’s network adapters, add virtual hard drives (for additional storage), and adjust processor and memory allocation dynamically based on the workload.

- Snapshots: Hyper-V allows you to create snapshots (called checkpoints since Windows Server 2012 R2), which are essentially point-in-time copies of the VM’s state. This is incredibly useful for testing updates or configurations without affecting the main VM. If something goes wrong, you can simply revert to the snapshot.

For example, let’s say you’re testing a new application. You create a VM, take a snapshot, install the application, and test it extensively. If an issue occurs, you can simply revert to the snapshot without reinstalling the entire OS.
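The same workflow can be scripted instead of clicking through Hyper-V Manager. This is a sketch under stated assumptions: the VM name, file paths, ISO location, and the 'External-LAN' switch are all hypothetical and must exist or be adjusted on your host.

```powershell
$vmName = 'TestVM01'   # hypothetical VM name

# Create a Generation 2 VM with a new 60 GB virtual hard disk.
New-VM -Name $vmName -Generation 2 -MemoryStartupBytes 4GB `
    -NewVHDPath "D:\VMs\$vmName\$vmName.vhdx" -NewVHDSizeBytes 60GB `
    -SwitchName 'External-LAN'

# Attach the installation ISO and boot from it first.
Add-VMDvdDrive -VMName $vmName -Path 'D:\ISO\WindowsServer.iso'
Set-VMFirmware -VMName $vmName -FirstBootDevice (Get-VMDvdDrive -VMName $vmName)
Start-VM -Name $vmName

# Later: take a snapshot before a risky change, and roll back if needed.
Checkpoint-VM -Name $vmName -SnapshotName 'Before app install'
Restore-VMSnapshot -VMName $vmName -Name 'Before app install' -Confirm:$false
```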

Q 9. Explain the different storage options available in Hyper-V.

Hyper-V offers various storage options to accommodate different performance and scalability needs. The choice depends on factors like VM size, workload demands, and budget. Here are the key options:

- Basic VHDX/VHD: This is the simplest option, storing the VM’s data in a single file (.vhdx or .vhd). It’s suitable for smaller VMs or those with less demanding I/O requirements.

- Differencing Disks: These are created on top of a parent VHDX, storing only the changes made. This is helpful for testing or creating multiple VMs from a base image. It saves storage space by not duplicating the base image.

- iSCSI: Using iSCSI allows you to store the VHDX files on an external SAN (Storage Area Network) or NAS (Network Attached Storage). This offers better performance and scalability, especially for larger deployments. This is also beneficial for Disaster Recovery.

- Storage Spaces Direct: This feature pools the local drives of multiple cluster nodes into a single software-defined storage pool. It’s particularly useful for creating highly available and scalable storage solutions for Hyper-V. It uses features like data redundancy to improve reliability.

- SMB 3.0 file shares: Hyper-V can also leverage SMB 3.0 file shares for storing VHDX files. This offers flexibility and enables the use of centralized storage solutions. This is useful when your VMs are hosted across multiple servers.

For instance, a production environment requiring high availability and performance might use Storage Spaces Direct or iSCSI, whereas a smaller development environment might use basic VHDX files.
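To make the first two options concrete, the sketch below creates a dynamically expanding base disk and a differencing disk on top of it. The paths are hypothetical examples.

```powershell
# Base disk: a dynamically expanding VHDX that grows as data is written.
New-VHD -Path 'D:\VHDs\base.vhdx' -SizeBytes 60GB -Dynamic

# Differencing disk: stores only the changes relative to the parent.
New-VHD -Path 'D:\VHDs\test01.vhdx' -ParentPath 'D:\VHDs\base.vhdx' -Differencing

# Inspect the result, including the link back to the parent disk.
Get-VHD -Path 'D:\VHDs\test01.vhdx' | Select-Object VhdType, ParentPath, FileSize
```

Once differencing disks depend on it, the parent disk should be treated as read-only, because changing it invalidates the children.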

Q 10. How do you perform backups and restores of virtual machines?

Backing up and restoring VMs in Hyper-V is critical for data protection and disaster recovery. You can use several methods:

- Hyper-V Replica: This built-in feature replicates VMs to a secondary Hyper-V host, providing disaster recovery. Replication is asynchronous and runs at a configurable interval (30 seconds, 5 minutes, or 15 minutes), so the chosen interval bounds the achievable RPO (Recovery Point Objective), while the RTO (Recovery Time Objective) depends on how quickly you can fail over to the replica.

- Third-party backup solutions: Many commercial backup solutions (like Veeam, Acronis) provide robust features for backing up and restoring Hyper-V VMs. These solutions often offer advanced features like granular recovery and data deduplication.

- Windows Server Backup: The built-in Windows Server Backup can back up VMs, but it may require more manual steps compared to dedicated backup solutions. It creates a backup file containing the entire VM state.

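- Exporting VMs: You can export a VM to a folder that contains its virtual hard disks, configuration files, and any snapshots, which is useful for migrating a VM between servers. It’s not a substitute for a backup strategy, because an export is a manual, point-in-time copy with no scheduling, retention, or incremental capability.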

For example, a company might use Hyper-V Replica for immediate disaster recovery and a third-party solution for regular backups with advanced features like granular recovery.
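As a small illustration, an export and a replication status check might look like the following. The VM name and target path are placeholders.

```powershell
# Export the VM into a subfolder of E:\Exports.
Export-VM -Name 'TestVM01' -Path 'E:\Exports'

# Show replication state and health for VMs that have Hyper-V Replica enabled.
Get-VMReplication
```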

Q 11. What are the different types of Windows Server failover clusters?

Windows Server Failover Clustering offers different types of clusters depending on the needs:

- Failover Cluster with Shared Storage: This is the most common type, requiring shared storage (like SAN or iSCSI) accessible by all cluster nodes. If one node fails, the other nodes can access the shared storage and take over the services hosted on the failed node. This ensures high availability of applications and services.

- Failover Cluster without Shared Storage: This type doesn’t need a SAN or other shared disk array. Instead, each node uses its own local storage, and data is kept in sync by replication, for example with Storage Spaces Direct for Hyper-V virtual machines or with application-level replication. It’s suitable for situations where shared storage isn’t available or cost-effective.

The choice between these types depends on factors like the type of workloads, budget constraints, and the level of high availability required.

Q 12. Describe the process of setting up a failover cluster.

Setting up a failover cluster involves several steps:

- Hardware and Network Configuration: Ensure that the server nodes meet the minimum hardware requirements and have a reliable network connection. A dedicated heartbeat network is generally recommended.

- Shared Storage (if applicable): If you are creating a shared storage cluster, the servers need to be able to access the same shared storage. This is usually a SAN or iSCSI storage solution.

- Installing the Failover Clustering Feature: Install the Failover Clustering feature on all the nodes that will be part of the cluster using Server Manager.

- Creating the Cluster: Use the Failover Cluster Manager to create the cluster. Run the validation wizard first; it tests the nodes, their network configuration, and the storage, and reports anything that would make the cluster unsupported.

- Configuring the Cluster Resources: Add the resources (like virtual machines, applications, and network shares) that you want to be highly available to the cluster. Each resource will require specific configurations based on the type of resource.

- Testing the Cluster: After configuring the cluster, it’s crucial to test failover and failback scenarios to ensure that everything works as expected. Testing provides confidence that the cluster setup and configurations are valid.

Imagine a web server application. A failover cluster ensures that even if one server fails, the web application remains available on the other node. It is essential to test the cluster thoroughly by manually simulating node failures.
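The steps above can be sketched with the FailoverClusters module. Node names, the cluster name, the IP address, and the 'WebApp' role are hypothetical, and the sketch assumes both nodes are domain-joined with storage already presented where applicable.

```powershell
$nodes = 'NODE1', 'NODE2'   # hypothetical node names

# Install the feature on every node.
Invoke-Command -ComputerName $nodes -ScriptBlock {
    Install-WindowsFeature -Name Failover-Clustering -IncludeManagementTools
}

# Validate nodes, networking, and storage before creating the cluster.
Test-Cluster -Node $nodes

# Create the cluster with a static management IP address.
New-Cluster -Name 'CLUSTER1' -Node $nodes -StaticAddress 192.168.1.50

# After roles are added: list them and test a manual failover of one role.
Get-ClusterGroup
Move-ClusterGroup -Name 'WebApp' -Node 'NODE2'
```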

Q 13. Explain the role of Windows Server Update Services (WSUS).

Windows Server Update Services (WSUS) is a centralized update management system that allows administrators to deploy software updates to computers within a network. Think of it as a central update distribution point for your organization, reducing bandwidth usage and allowing controlled deployment of updates. Instead of each computer contacting Microsoft Update directly, they receive updates from your local WSUS server.

WSUS’s key roles include:

- Downloading updates: WSUS downloads updates from Microsoft Update and stores them locally.

- Approving updates: Administrators review and approve updates before deploying them to client computers. This allows for testing and a controlled rollout.

- Deploying updates: WSUS allows administrators to deploy updates to groups of computers or individual computers.

- Reporting: WSUS provides reports on update deployment status, allowing administrators to monitor the progress and identify any issues.

Q 14. How do you manage software updates using WSUS?

Managing software updates using WSUS involves several steps:

- Synchronizing updates: Regularly synchronize WSUS with Microsoft Update to download the latest updates. The frequency of synchronization is configurable.

- Approving updates: Review the downloaded updates and approve them for deployment to specific computer groups. This allows for targeted deployment based on the needs of different departments or applications.

- Creating computer groups: Organize computers into WSUS computer groups to target updates effectively. Grouping by operating system or by department makes it easy to approve an update for one set of machines at a time.

- Deploying updates: Deploy approved updates to the relevant computer groups. You can schedule deployments or deploy them immediately. WSUS allows for various deployment options such as automated, manual, and scheduled.

- Monitoring deployment: Monitor the deployment progress using WSUS reports. Identify any failures and take corrective actions.

For instance, a company might create separate update groups for different departments (e.g., accounting, marketing). This allows for deploying updates relevant to the applications used by those departments without affecting computers in other groups. If a particular update is causing issues, you can simply not approve it for other groups.
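A rough sketch of that workflow with the UpdateServices module follows. It assumes it runs on the WSUS server itself, and the 'Accounting' group name is a placeholder (creating a group that already exists will raise an error).

```powershell
# Connect to the local WSUS server.
$wsus = Get-WsusServer

# Start a synchronization with the upstream update source.
$wsus.GetSubscription().StartSynchronization()

# Create a computer group for the accounting department (placeholder name).
$wsus.CreateComputerTargetGroup('Accounting')

# Approve needed, not yet approved security updates for that group only.
Get-WsusUpdate -UpdateServer $wsus -Classification Security -Approval Unapproved -Status Needed |
    Approve-WsusUpdate -Action Install -TargetGroupName 'Accounting'
```

Approving for a pilot group first and widening the approval later is one way to get the controlled rollout described above.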

Q 15. Describe the different levels of security in Windows Server.

Windows Server security operates on multiple layers, working together to protect your data and systems. Think of it like a castle with multiple defenses. We have:

- Physical Security: This is the most basic level – securing the server room itself from unauthorized physical access. This involves things like locked doors, security cameras, and environmental controls.

- Network Security: This layer protects the server from network-based attacks. It includes firewalls (discussed in the next question), intrusion detection systems, and network segmentation to isolate sensitive resources.

- Operating System Security: This involves features built into Windows Server itself, such as User Account Control (UAC), which restricts what processes can run without administrator privileges. This minimizes the impact of malware. Strong passwords and regular updates are crucial here.

- Application Security: Each application running on the server should have its own security measures. This might include things like access control lists (ACLs) determining which users can access specific application data, secure coding practices to prevent vulnerabilities, and regular security patching.

- Data Security: This protects data at rest and in transit. It involves techniques like encryption (both disk encryption like BitLocker and data-in-transit encryption with SSL/TLS), data backups, and access control to ensure only authorized personnel can access sensitive information. Think of this as the royal treasury within the castle – only the king has the key!

These layers work in concert. A breach in one area doesn’t necessarily compromise the entire system, but a well-rounded strategy addresses all layers for robust protection.

Q 16. How do you configure firewalls in Windows Server?

Windows Server firewalls, primarily Windows Defender Firewall with Advanced Security, are configured through the Windows Server Manager or PowerShell. You can manage rules to allow or deny specific network traffic based on ports, protocols, IP addresses, and applications.

For example, you might create a rule to allow inbound traffic on port 443 (HTTPS) for secure web access, while blocking all inbound traffic on port 3389 (RDP) except from specific IP addresses to prevent unauthorized remote connections. This keeps your server from being easily accessed by malicious actors.

Using the GUI (Graphical User Interface): Navigate to ‘Windows Defender Firewall with Advanced Security’ in the Server Manager. There you can create inbound and outbound rules, manage profiles (Domain, Private, Public), and monitor active rules. This is intuitive and visually clear.

Using PowerShell: PowerShell provides more granular control and automation capabilities. For instance, creating a rule to allow inbound traffic on port 80 (HTTP) from a specific IP address (192.168.1.100) would involve commands like:

New-NetFirewallRule -DisplayName "Allow HTTP from 192.168.1.100" -Direction Inbound -Protocol TCP -LocalPort 80 -RemoteAddress 192.168.1.100 -Action Allow

This approach is faster and more efficient for advanced configurations and scripting.
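The HTTPS and RDP example from earlier in this answer could be expressed along the same lines. The 10.0.0.0/24 admin subnet and the rule names are assumptions for illustration.

```powershell
# Allow HTTPS from anywhere.
New-NetFirewallRule -DisplayName 'Allow HTTPS inbound' -Direction Inbound `
    -Protocol TCP -LocalPort 443 -Action Allow

# Allow RDP only from a (hypothetical) admin subnet.
# Any built-in Remote Desktop rules that allow all addresses must be
# disabled or scoped as well, or they will still admit other sources.
New-NetFirewallRule -DisplayName 'Allow RDP from admin subnet' -Direction Inbound `
    -Protocol TCP -LocalPort 3389 -RemoteAddress 10.0.0.0/24 -Action Allow

# Review the rules just created.
Get-NetFirewallRule -DisplayName 'Allow*' | Select-Object DisplayName, Enabled, Direction, Action
```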

Q 17. What are the different types of network security protocols?

Several network security protocols are used to secure communication between devices and servers. They fall into various categories:

- Authentication Protocols: These verify the identity of users and devices. Examples include Kerberos (commonly used in Active Directory environments), NTLM (an older authentication protocol), and RADIUS (Remote Authentication Dial-In User Service) for network access control.

- Encryption Protocols: These secure data in transit by encrypting it, making it unreadable to eavesdroppers. Common protocols include TLS/SSL (Transport Layer Security/Secure Sockets Layer) used for HTTPS, and IPsec (Internet Protocol Security) used for VPNs and secure communication between networks.

- Tunneling Protocols: These create secure, encrypted connections over less secure networks. The most well-known example is IPsec, which often forms the basis of VPNs, creating a secure virtual tunnel through the internet.

- Access Control Protocols: These control network access based on user identity or device characteristics. Examples include 802.1X and RADIUS. These are often used to enforce security policies on wireless networks and other access points.

The choice of protocol depends on the specific security requirements. For instance, HTTPS is essential for secure web traffic, while IPsec is crucial for protecting data transmitted across public networks.

Q 18. Explain the concept of Network Access Protection (NAP).

Network Access Protection (NAP) is a suite of technologies that enforces network access based on a client’s security health. Imagine it as a bouncer at a nightclub, only letting in patrons who meet certain criteria. It verifies that the device meets minimum security requirements before granting network access.

NAP uses health policies to define these requirements. A health policy might specify that a client must have up-to-date antivirus software, a functioning firewall, and a patched operating system. If a client doesn’t meet these requirements, NAP can restrict its access, perhaps only granting access to a limited, quarantined network for remediation.

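NAP helps prevent compromised or unpatched devices from infecting the network. It’s particularly useful in larger organizations with many different devices connecting, preventing malware from spreading quickly across the network. Note that NAP was deprecated in Windows Server 2012 R2 and removed in Windows Server 2016, so in an interview it is worth presenting it as a legacy technology.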

Q 19. How do you monitor and manage server performance?

Monitoring and managing server performance is crucial for ensuring reliability, availability, and optimal resource utilization. This involves actively tracking key metrics and proactively addressing performance bottlenecks.

This can be done by:

- Resource Monitoring: Regularly track CPU utilization, memory usage, disk I/O, and network traffic. High CPU or memory usage often indicate problems with applications or services. Slow disk I/O can suggest storage issues. High network traffic could point to a network bottleneck or a denial-of-service (DoS) attempt.

- Application Monitoring: Monitor the performance of specific applications and services running on the server, looking for errors, slow response times, or resource leaks.

- Event Logging: Review Windows Event Logs for errors, warnings, and information. This provides valuable insight into system events and potential problems. Specific application logs also provide application-specific debugging info.

- Performance Counters: Utilize performance counters, accessible through Performance Monitor, to graphically and numerically visualize various system parameters over time.

- Proactive Maintenance: This includes regular software updates, patching, disk defragmentation, and cleaning up unnecessary files.

By carefully analyzing these metrics, you can identify performance bottlenecks, anticipate potential issues, and implement solutions before they significantly impact the server’s operation.
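A quick way to sample the metrics mentioned above is Get-Counter, combined with a look at recent errors in the System log. The counter selection and the 24-hour window are arbitrary choices for illustration.

```powershell
# Sample CPU, free memory, and disk queue length three times, five seconds apart.
$counters = '\Processor(_Total)\% Processor Time',
            '\Memory\Available MBytes',
            '\PhysicalDisk(_Total)\Avg. Disk Queue Length'

Get-Counter -Counter $counters -SampleInterval 5 -MaxSamples 3 |
    ForEach-Object { $_.CounterSamples | Select-Object Path, CookedValue }

# List up to 20 error-level events (Level 2) from the System log in the last day.
Get-WinEvent -FilterHashtable @{
    LogName   = 'System'
    Level     = 2
    StartTime = (Get-Date).AddDays(-1)
} -MaxEvents 20 -ErrorAction SilentlyContinue
```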

Q 20. What are the different tools used for server monitoring?

Several tools aid in server monitoring and management:

- Performance Monitor: A built-in Windows tool allowing you to monitor performance counters, create custom alerts based on thresholds, and log performance data over time.

- Resource Monitor: A real-time tool visualizing CPU, memory, disk, and network usage, making it easier to spot immediate bottlenecks.

- System Center Operations Manager (SCOM): A comprehensive system management suite that enables centralized monitoring of multiple servers and applications across an entire infrastructure. This is ideal for larger, more complex environments.

- Third-Party Monitoring Tools: Several commercial and open-source tools offer sophisticated monitoring and alerting features, often with more advanced reporting and visualization capabilities. Examples include Datadog (commercial) and Nagios and Zabbix (open source).

- PowerShell: While not strictly a monitoring tool, PowerShell scripts can be used to automate tasks and collect performance data. This allows customized and automated monitoring processes tailored to your specific needs.

The best choice depends on the scale and complexity of your environment and your specific monitoring needs. For smaller deployments, Performance Monitor might suffice, while larger organizations often benefit from enterprise-level solutions like SCOM or third-party tools.

Q 21. Describe the process of troubleshooting network connectivity issues.

Troubleshooting network connectivity issues involves a systematic approach, similar to diagnosing a car problem. You need to isolate the problem step-by-step.

Here’s a structured approach:

- Identify the problem: What exactly is broken? Can a user access network resources? Is it a specific application or a general network problem? Note the error messages and symptoms.

- Isolate the scope: Is the problem limited to a single machine, or are multiple users affected? Is it a specific network segment or the entire network? This helps determine if the problem lies with the client, the server, or the network infrastructure itself.

- Check the basics: This sounds trivial, but it’s often the solution. Are network cables plugged in correctly? Are Wi-Fi signals strong? Is the server running? Is the DNS server accessible?

- Use diagnostic tools:

  - ping: Verify network connectivity to a server or gateway (ping 8.8.8.8 to check internet connectivity).

  - ipconfig /all: Display network interface information, including IP address, subnet mask, and default gateway. Look for errors or unexpected settings.

  - tracert: Trace the route to a destination, helping identify points of failure.

  - Network Monitor (or similar tools): Capture network traffic to analyze data flow and identify potential bottlenecks or errors.

- Check server configuration: Ensure the server’s network settings, firewall rules, and services are correctly configured. Check for errors in event logs.

- Consult documentation and resources: Check relevant manuals and support documentation for troubleshooting tips.

A methodical approach, combined with appropriate diagnostic tools, helps pinpoint the source of the problem and apply the correct solution efficiently.
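The same checks have PowerShell equivalents that are convenient to script. The host name fileserver01 is a placeholder for whatever server the user cannot reach.

```powershell
# Basic reachability (ICMP), similar to ping.
Test-NetConnection -ComputerName 8.8.8.8

# Name resolution check.
Resolve-DnsName -Name 'www.example.com'

# Is a specific TCP port reachable? (445 = SMB file sharing)
Test-NetConnection -ComputerName 'fileserver01' -Port 445

# Hop-by-hop path, similar to tracert.
Test-NetConnection -ComputerName 'fileserver01' -TraceRoute

# Local IP configuration, similar to ipconfig.
Get-NetIPConfiguration
```

Working outward from the local configuration to DNS and then to the specific port mirrors the isolate-the-scope approach described above.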

Q 22. How do you troubleshoot application performance issues?

Troubleshooting application performance issues requires a systematic approach. Think of it like diagnosing a car problem – you wouldn’t just start replacing parts randomly! Instead, you need to isolate the issue through observation and testing.

My troubleshooting process usually starts with gathering information. This includes checking application logs for error messages, monitoring resource utilization (CPU, memory, disk I/O) using tools like Performance Monitor, and analyzing network traffic. For example, if an application is consistently slow, I’d first check if the CPU is pegged at 100%, suggesting a CPU bottleneck. Similarly, high disk I/O could indicate a storage issue.

Once a potential bottleneck is identified, I’d focus my investigation there. If it’s a CPU problem, I’d look into the application code for inefficient algorithms or resource-intensive operations. Memory issues might point to memory leaks or insufficient RAM allocation. Slow disk I/O could be due to fragmented drives, inadequate storage space, or problems with the SAN.

Next, I’d use tools like Event Viewer to find the specific errors and their timestamps. Lining those timestamps up against the resource spikes helps reveal patterns and narrow down the root cause. Often, performance issues are not isolated incidents; they are symptoms of a larger problem.

Finally, after resolving the issue, I’d implement monitoring to prevent similar problems from occurring again. Regularly reviewing performance metrics and setting up alerts are key to proactive maintenance.
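As a minimal sketch of the information-gathering step, the commands below sample a few performance counters and pull recent application errors. The counter paths assume an English-language Windows installation, and the one-hour window and sample counts are arbitrary choices for illustration.

```powershell
# Counter paths are localized; these are the English names
$counters = @(
    '\Processor(_Total)\% Processor Time',
    '\Memory\Available MBytes',
    '\PhysicalDisk(_Total)\Avg. Disk Queue Length'
)

# Take five samples, two seconds apart
$samples = Get-Counter -Counter $counters -SampleInterval 2 -MaxSamples 5

# Average each counter across the samples
$samples.CounterSamples |
    Group-Object -Property Path |
    ForEach-Object {
        [pscustomobject]@{
            Counter = $_.Name
            Average = [math]::Round(($_.Group | Measure-Object -Property CookedValue -Average).Average, 2)
        }
    }

# Application log errors (Level 2) from the last hour, to correlate with the counters
Get-WinEvent -FilterHashtable @{
    LogName   = 'Application'
    Level     = 2
    StartTime = (Get-Date).AddHours(-1)
} -MaxEvents 20 -ErrorAction SilentlyContinue |
    Select-Object TimeCreated, ProviderName, Id, Message
```

Comparing the event timestamps with the counter averages is one way to connect a resource bottleneck to a specific application error.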

Q 23. Explain the concept of PowerShell in Windows Server administration.

PowerShell is a command-line shell and scripting language built into Windows Server. Think of it as a supercharged command prompt that lets you automate tasks, manage servers, and interact with the operating system far more powerfully than traditional command-line tools. It uses cmdlets (command-lets), which are small, specialized commands, to perform specific functions.

In Windows Server administration, PowerShell is indispensable. It allows you to manage virtually every aspect of the server, from Active Directory to networking and storage. It’s much more efficient than using the GUI for repetitive or complex tasks. For example, imagine manually creating 100 user accounts – that’s tedious! PowerShell can do it with a single script.

PowerShell’s strength lies in its scripting capabilities. You can write scripts to automate complex tasks, run them on multiple servers simultaneously, and schedule them to run automatically. This dramatically improves administrative efficiency and reduces the risk of human error.
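To make the bulk user creation example concrete, here is a minimal sketch. It assumes the ActiveDirectory module is installed and that a CSV file with FirstName, LastName, and SamAccountName columns exists; the function names, OU path, and domain suffix are hypothetical. The -WhatIf switch only previews what would be created.

```powershell
# Builds the parameter set for one CSV row (hypothetical helper)
function ConvertTo-NewUserParams {
    param(
        [Parameter(Mandatory)] $Row,
        [string]$OU = 'OU=Staff,DC=contoso,DC=local',   # hypothetical OU
        [string]$UpnSuffix = 'contoso.local'            # hypothetical domain
    )
    @{
        Name              = "$($Row.FirstName) $($Row.LastName)"
        GivenName         = $Row.FirstName
        Surname           = $Row.LastName
        SamAccountName    = $Row.SamAccountName
        UserPrincipalName = "$($Row.SamAccountName)@$UpnSuffix"
        Path              = $OU
    }
}

# Reads the CSV and previews one New-ADUser call per row (hypothetical helper)
function New-UsersFromCsv {
    param([Parameter(Mandatory)][string]$CsvPath)

    Import-Module ActiveDirectory
    Import-Csv -Path $CsvPath | ForEach-Object {
        $params = ConvertTo-NewUserParams -Row $_
        # -WhatIf shows what would happen; remove it to actually create the accounts
        New-ADUser @params -WhatIf
    }
}
```

Accounts created this way have no password and start out disabled, so a real script would also need to set credentials and enable them.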

Q 24. Describe some common PowerShell cmdlets used in server management.

Numerous cmdlets are essential for server management. Here are a few common examples:

- Get-Process: Retrieves information about running processes. Useful for identifying resource-intensive applications.

- Get-Service: Gets information about Windows services. Companion cmdlets such as Start-Service, Stop-Service, and Restart-Service change their state.

- Get-EventLog: Retrieves entries from the Windows event logs. Helps identify errors and warnings. It is available in Windows PowerShell; Get-WinEvent is the newer alternative.

- New-ADUser: Creates new users in Active Directory. Great for automation.

- Set-Location: Changes the current directory. Used for navigation within the PowerShell environment.

- Get-ChildItem: Lists the contents of a directory (like the ‘dir’ command). Useful for file management.

- Stop-Computer: Shuts down the local computer or remote computers.

These are just a small subset of the many cmdlets available. PowerShell’s strength comes from its comprehensive command library and from the pipeline, which lets you chain cmdlets together to create sophisticated administrative workflows.
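A few short pipelines illustrate how such cmdlets combine. This is a sketch assuming Windows PowerShell 5.1 on a Windows Server host; Get-EventLog is not available in PowerShell 7, where Get-WinEvent fills the same role.

```powershell
# Five processes with the most accumulated CPU time
Get-Process |
    Sort-Object -Property CPU -Descending |
    Select-Object -First 5 -Property Name, Id, CPU

# Services set to start automatically that are not currently running
Get-Service |
    Where-Object { $_.StartType -eq 'Automatic' -and $_.Status -ne 'Running' }

# Ten most recent errors in the System event log
Get-EventLog -LogName System -EntryType Error -Newest 10 |
    Select-Object TimeGenerated, Source, EventID, Message
```

Each stage passes objects rather than text to the next one, which is why sorting and filtering on properties such as CPU or Status works without any string parsing.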

Q 25. How do you automate administrative tasks using PowerShell?

PowerShell automation is done through scripting. Scripts are sequences of cmdlets combined with control flow statements (like loops and conditional statements) to create powerful automated tasks. A simple example would be a script to back up files regularly.

Let’s say you need to back up all files from the C:\Data folder to a network share. Here’s a basic example (remember this is a simplified illustration and error handling should be added for a production environment):

Copy-Item -Path 'C:\Data\*' -Destination '\\server\share\backup' -Recurse

This single line copies all files and subfolders recursively from the source to the destination. You can add error checking, scheduling (using Task Scheduler), and logging to make it robust. For more complex tasks, you’d use loops, functions, and other PowerShell features to create sophisticated scripts that automate almost anything.
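As a sketch of what adding error checking and logging might look like, the function below wraps the same Copy-Item call. The function name Backup-Folder and the log format are assumptions made for illustration, not an established convention.

```powershell
function Backup-Folder {
    param(
        [Parameter(Mandatory)][string]$Source,
        [Parameter(Mandatory)][string]$Destination,
        [Parameter(Mandatory)][string]$LogPath
    )

    $stamp = Get-Date -Format 'yyyy-MM-dd HH:mm:ss'
    try {
        # Fail early if the source folder is missing
        if (-not (Test-Path -Path $Source -PathType Container)) {
            throw "Source folder not found: $Source"
        }

        # Create the destination folder if it does not exist yet
        if (-not (Test-Path -Path $Destination)) {
            New-Item -Path $Destination -ItemType Directory -Force | Out-Null
        }

        # -ErrorAction Stop turns copy failures into exceptions the catch block can handle
        Copy-Item -Path (Join-Path $Source '*') -Destination $Destination -Recurse -Force -ErrorAction Stop

        Add-Content -Path $LogPath -Value "$stamp OK    $Source -> $Destination"
        return $true
    }
    catch {
        Add-Content -Path $LogPath -Value "$stamp ERROR $($_.Exception.Message)"
        return $false
    }
}
```

With the paths from the example above, a call might look like Backup-Folder -Source 'C:\Data' -Destination '\\server\share\backup' -LogPath 'C:\Logs\backup.log' (the log path is a placeholder). Returning True or False lets a scheduled task or a calling script react to failures.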

Imagine automating the deployment of servers, configuring network settings, or performing routine maintenance. PowerShell scripts can significantly reduce administrative overhead and improve efficiency.

Q 26. What are the benefits of using Windows Server containers?

Windows Server containers offer several advantages in modern application deployment:

- Lightweight: Containers share the host OS kernel, resulting in smaller footprints than virtual machines (VMs). This translates to faster startup times and better resource utilization.

- Portability: Containers can easily be moved between different environments without modification, as they package all application dependencies within them.

- Isolation: Containers provide isolation between applications, preventing conflicts and enhancing security. However, this isolation is less strict than with VMs.

- Improved Resource Utilization: Due to their lightweight nature, multiple containers can run on a single host, maximizing server resources.

- Faster Deployment: Containerized applications deploy much faster than VMs, leading to quicker release cycles.

Think of containers like shipping containers – they each hold a specific cargo (application) but share the same ship (host OS). This allows for efficient transport and management of multiple packages.

Q 27. Explain the difference between Hyper-V and containers.

Hyper-V and containers are both virtualization technologies, but they differ significantly in their approach:

- Hyper-V: Creates full virtual machines, each with its own guest OS, kernel, and resources. Think of it as a full-fledged virtual computer within your physical server.

- Containers: Share the host OS kernel, meaning they are much more lightweight. Each container has its own isolated application space, while the kernel is shared with the host and other containers.

The key difference lies in resource utilization and isolation. Hyper-V provides stronger isolation but consumes more resources. Containers offer better resource efficiency but with less stringent isolation. The choice depends on the application’s requirements and the desired level of isolation.

Imagine Hyper-V as a separate apartment building, where each apartment (VM) has its own utilities and complete structure. Containers, on the other hand, are like rooms within a single building, sharing common infrastructure.

Q 28. How do you manage and monitor Windows Server containers?

Managing and monitoring Windows Server containers involves using several tools and techniques:

- Docker: The most common containerization platform, offering command-line tools to manage containers (docker ps to list running containers, docker run to start a container, etc.).

- PowerShell: Can call the Docker command-line tools from scripts and work with their output, which makes it practical to automate container management tasks.

- Container logs: Monitoring container logs helps identify errors and issues within the applications running inside the containers. Docker provides tools for accessing these logs.

- Resource monitoring: Tools like Performance Monitor can be used to monitor resource consumption (CPU, memory, network) of individual containers and the host OS.

- Kubernetes (Optional): For managing multiple containers in a cluster, orchestration platforms like Kubernetes provide advanced functionalities.

Effective management requires monitoring resource utilization to prevent resource starvation and ensuring proper container health. Regularly reviewing logs helps identify problems early on and prevent service disruptions.
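The commands below sketch how these checks might look from a PowerShell session. They assume the Docker CLI is installed and on the PATH; the container name web01 is a hypothetical placeholder.

```powershell
# Hypothetical container name: replace with one from your host
$containerName = 'web01'

# Running containers as PowerShell objects (docker emits one JSON document per line)
$containers = docker ps --format '{{json .}}' | ForEach-Object { $_ | ConvertFrom-Json }
$containers | Select-Object ID, Image, Names, Status

# Last 50 log lines from one container
docker logs --tail 50 $containerName

# One-time snapshot of CPU, memory, and network usage for all running containers
docker stats --no-stream
```

Converting the docker ps output to objects makes it easy to filter or sort containers with the usual cmdlets such as Where-Object and Sort-Object.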

Key Topics to Learn for Microsoft Certified Solutions Associate (MCSA): Windows Server Interview

- Core Windows Server Services: Understand the architecture and functionality of key services like Active Directory, DNS, DHCP, and File Services. Focus on practical application and troubleshooting scenarios.

- Virtualization with Hyper-V: Master the creation, management, and maintenance of virtual machines. Practice creating different virtual switch configurations and understanding resource allocation.

- Networking Fundamentals: Demonstrate a solid grasp of IP addressing, subnetting, routing protocols, and network security best practices within a Windows Server environment.

- Storage Solutions: Explore different storage options, including SAN, NAS, and iSCSI. Understand how to implement and manage storage solutions for optimal performance and data protection.

- Security Best Practices: Discuss various security measures, including Active Directory security, Group Policy management, and implementing firewalls and intrusion detection systems. Be prepared to explain how to mitigate common security threats.

- High Availability and Disaster Recovery: Understand concepts like clustering, failover clustering, and backup/restore strategies. Be able to discuss how to ensure high availability and business continuity.

- PowerShell Scripting: Demonstrate proficiency in using PowerShell to automate administrative tasks and manage Windows Server infrastructure. Practice writing simple scripts for common administrative functions.

- Troubleshooting and Problem-Solving: Develop a systematic approach to troubleshooting common Windows Server issues. Practice identifying the root cause of problems and implementing effective solutions.

Next Steps

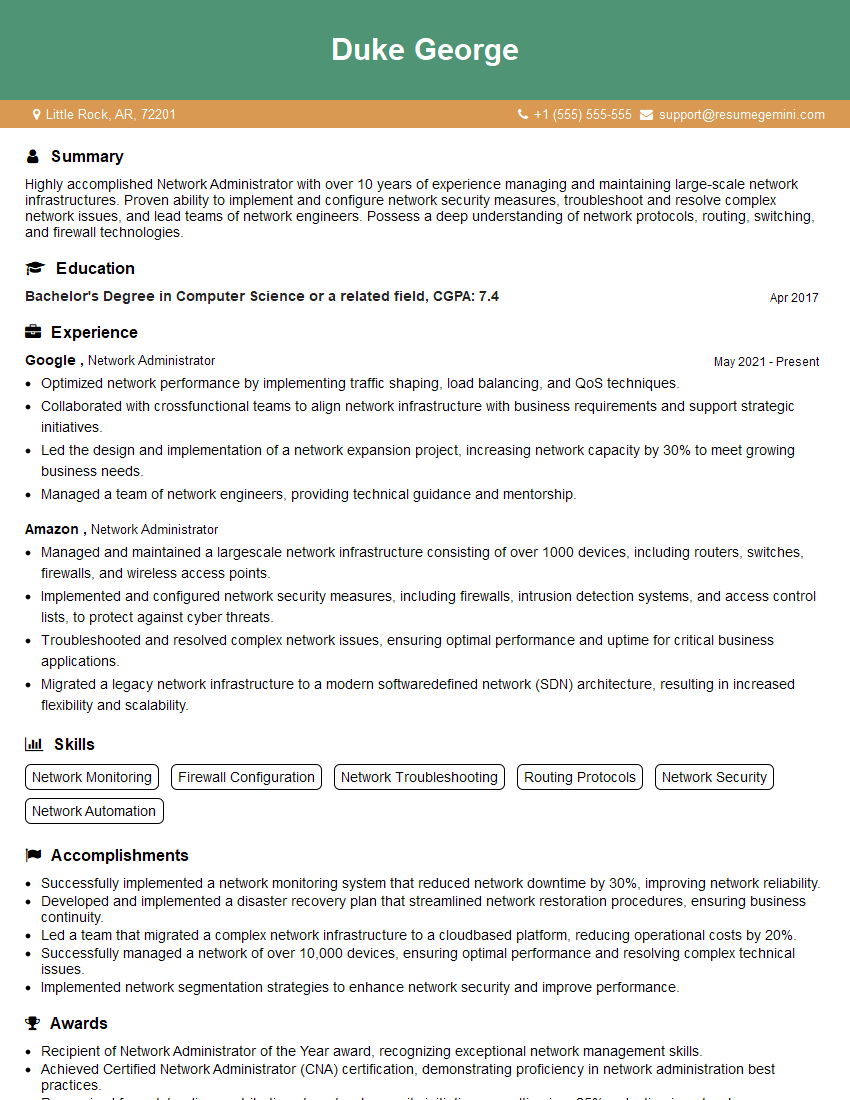

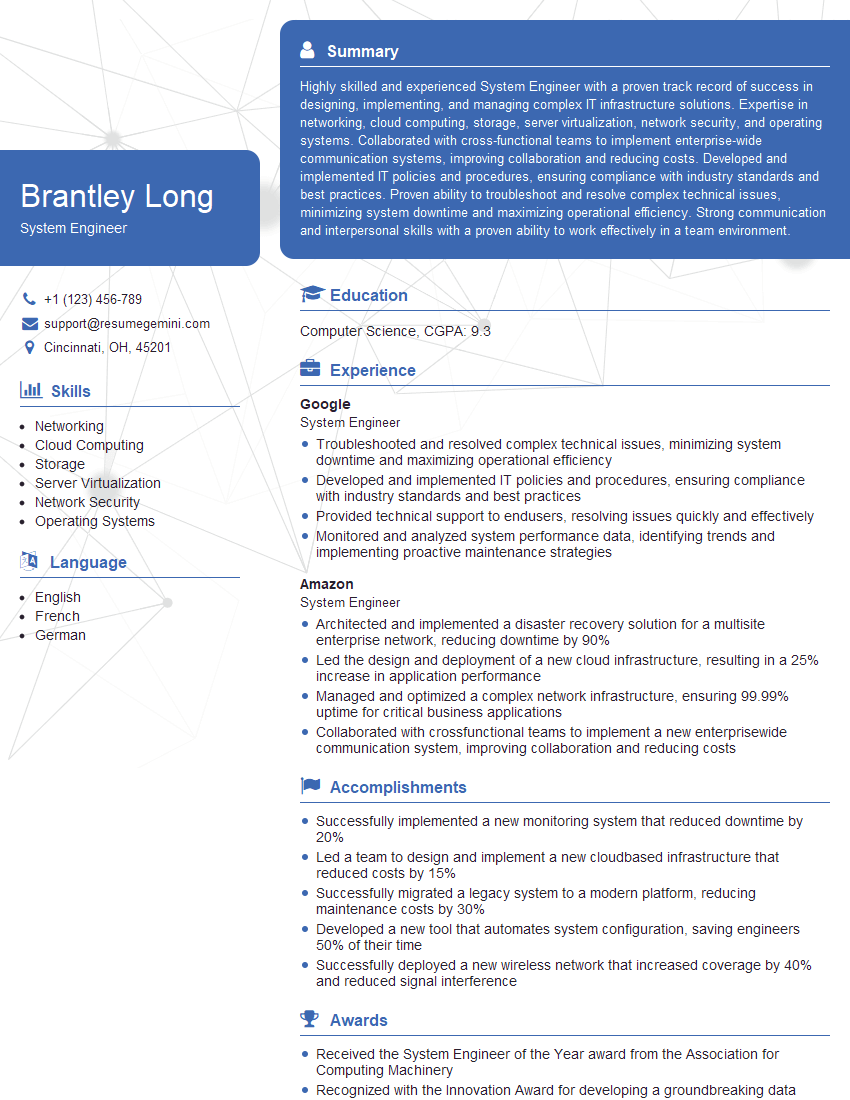

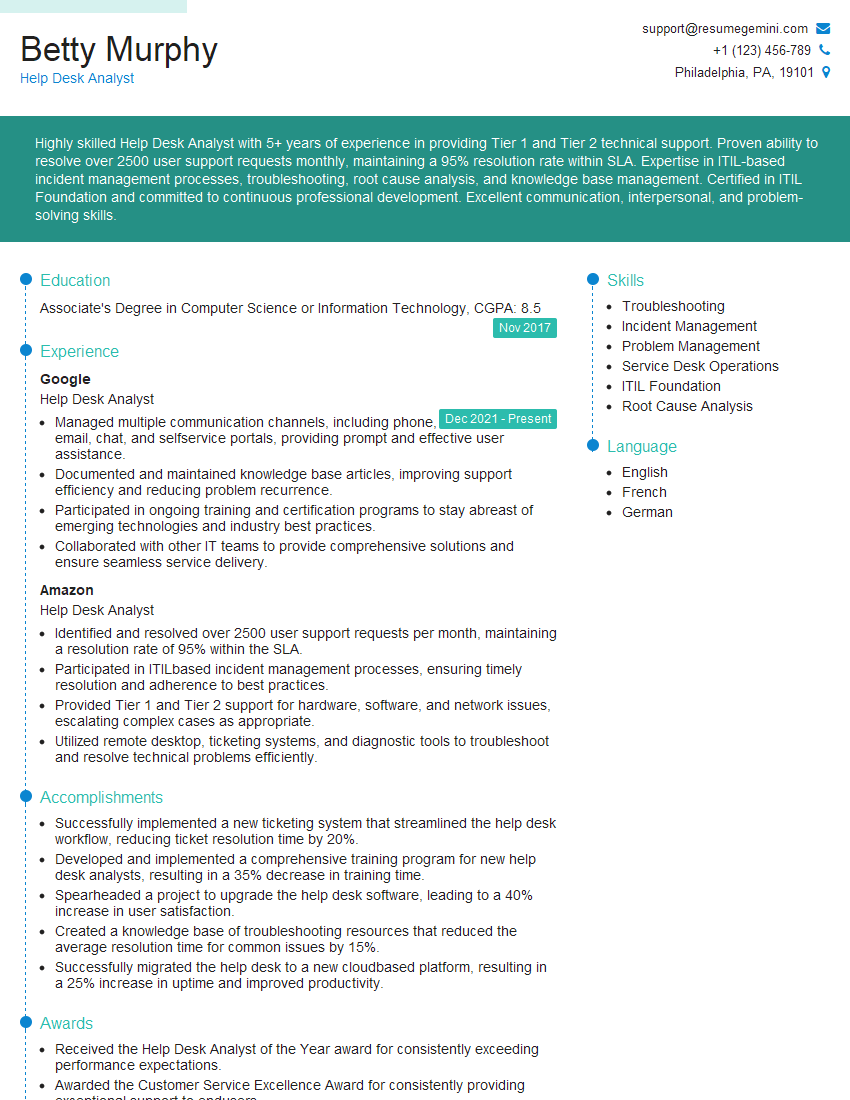

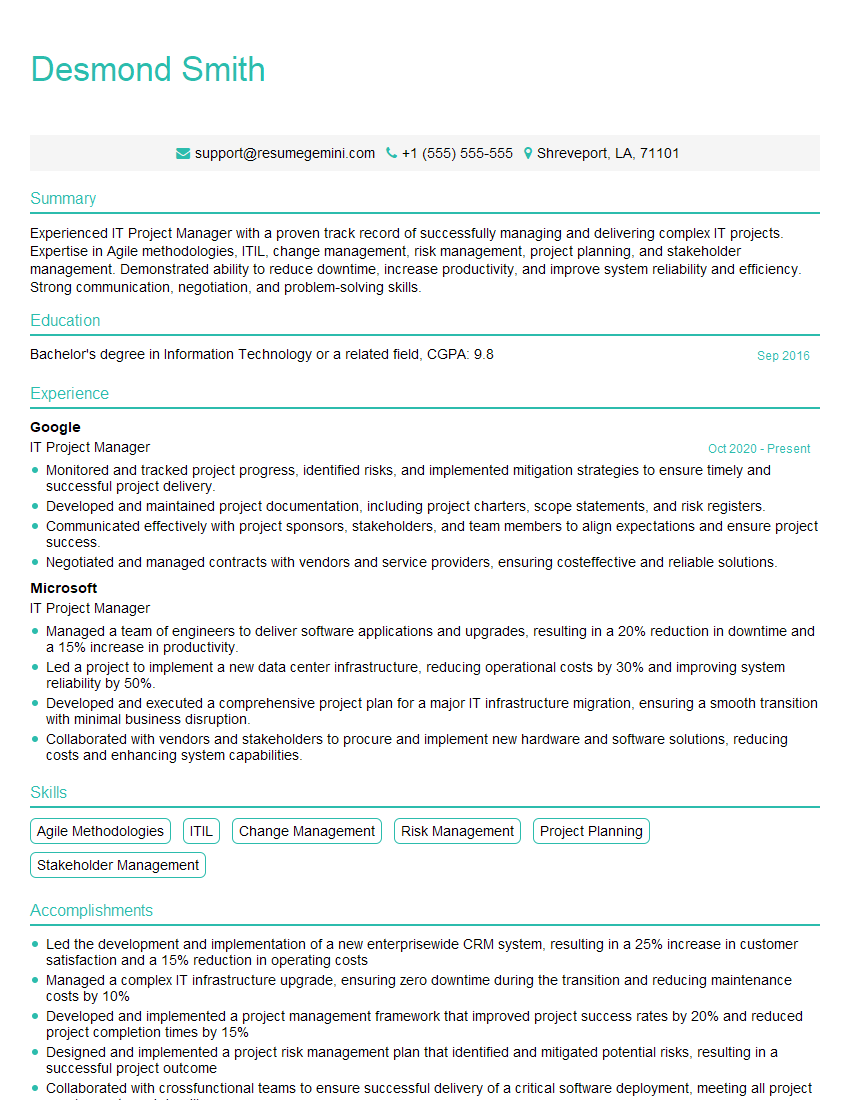

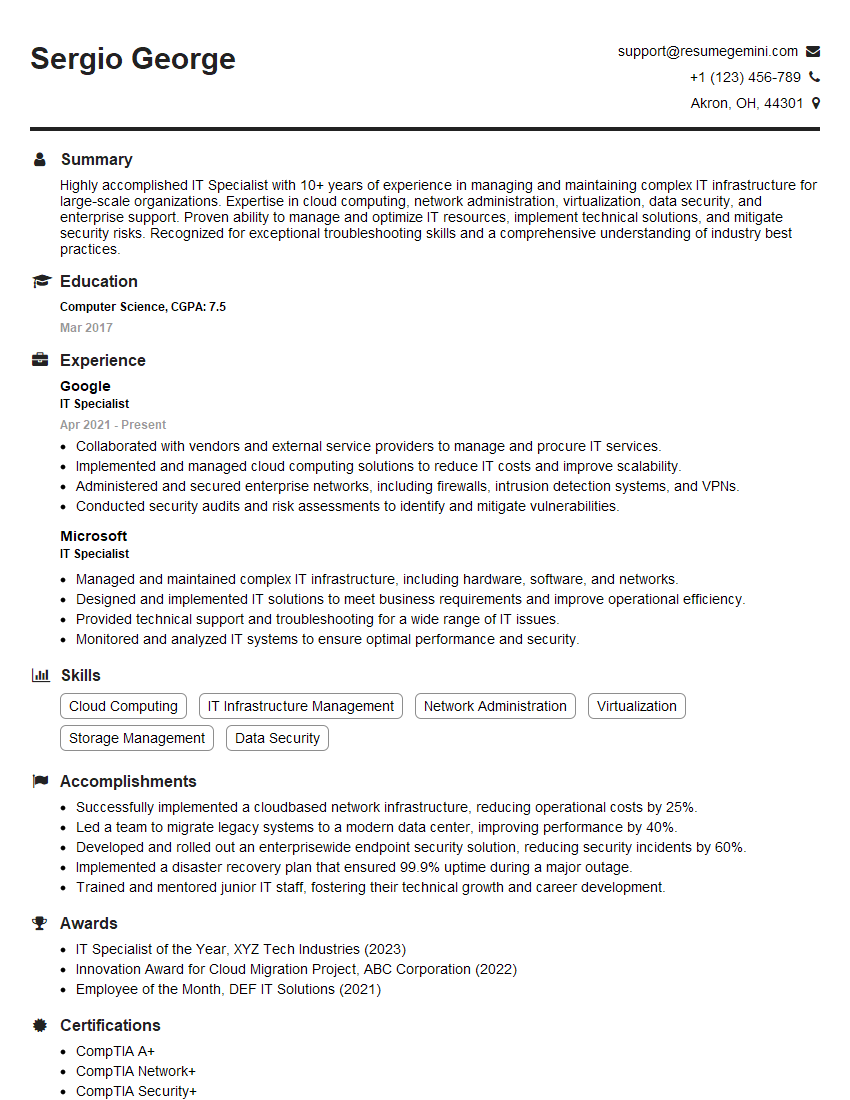

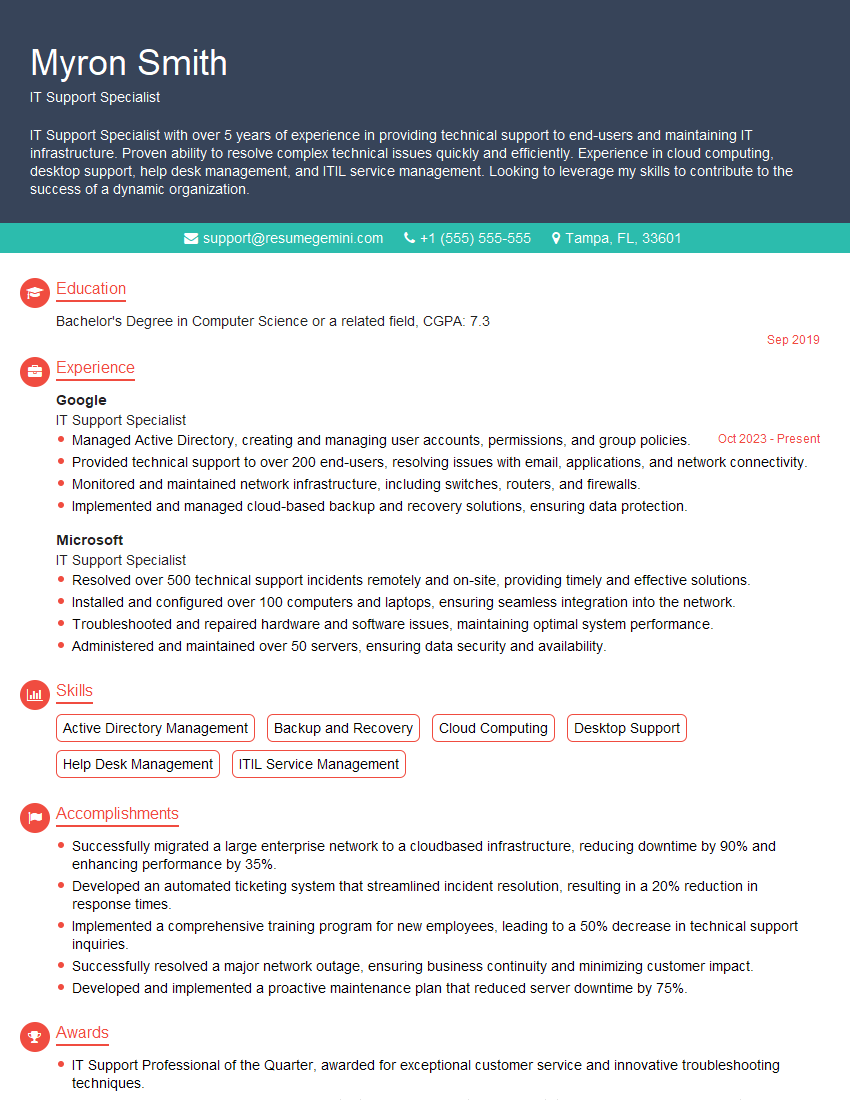

Mastering the MCSA: Windows Server certification significantly enhances your career prospects, opening doors to rewarding roles in system administration, cloud computing, and IT infrastructure management. To maximize your job search success, it’s crucial to present your skills effectively. Crafting an ATS-friendly resume is essential for getting your application noticed by recruiters. Use ResumeGemini to build a professional resume that showcases your MCSA skills and experience. ResumeGemini provides examples of resumes tailored to the MCSA: Windows Server certification, helping you present your qualifications compellingly. Invest the time to create a strong resume – it’s a key ingredient in your career advancement.
