Cracking a skill-specific interview, such as one on using gauging tools, requires understanding the nuances of the role. In this blog, we present the questions you’re most likely to encounter, along with guidance on answering them effectively, so you’re ready to make a strong impression.

Questions Asked in Use gauging tools Interview

Q 1. Explain the difference between go/no-go gauges and variable gauges.

Go/no-go gauges and variable gauges serve different purposes in dimensional measurement. Go/no-go gauges are designed for simple pass/fail inspection; they quickly determine if a part is within tolerance. They have two members: a ‘go’ member made to the maximum-material limit (the smallest acceptable hole for a plug gauge, or the largest acceptable shaft for a ring or snap gauge) and a ‘no-go’ member made to the least-material limit (the largest acceptable hole, or the smallest acceptable shaft). If the part accepts the ‘go’ member but not the ‘no-go’ member, it’s within tolerance. Variable gauges, such as micrometers and calipers, instead report the part’s actual dimension. This allows for more granular analysis and shows how far a part deviates from the nominal value.

Think of it like this: A go/no-go gauge is like a doorway – you either fit through or you don’t. A variable gauge is like a measuring tape – it tells you exactly how tall you are.

Q 2. Describe the process of calibrating a micrometer.

Calibrating a micrometer ensures its accuracy. The process typically involves:

- Cleaning: Thoroughly clean the micrometer’s anvils and spindle to remove any debris that could affect the measurement.

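- Zeroing: Close the spindle onto the anvil using the ratchet or friction thimble (for micrometers that do not close to zero, use the supplied setting standard) and verify the reading is zero. If not, adjust the zero setting carefully according to the manufacturer’s instructions. Note that the spindle lock only holds a reading in place; it is not part of the zero adjustment.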

- Verification: Use a calibrated gauge block or standard (traceable to a national standard) of known dimensions to verify the micrometer’s readings. Compare several measurements at different points on the micrometer’s range to check for linearity.

- Adjustment (If Necessary): If there’s a significant discrepancy between the micrometer reading and the known standard, the micrometer might require adjustment by a qualified technician. This is often not user-adjustable.

- Documentation: Record the calibration date, results, and any corrective actions taken. This documentation is crucial for traceability and quality control.

Regular calibration, usually at intervals specified by the manufacturer and/or company standards (often monthly or annually), is essential to maintain accuracy.
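
The verification step above can be sketched in a few lines of Python. This is a minimal illustration, not a calibration procedure: the `CheckPoint` class, the `verify_micrometer` function, the gauge block sizes, and the 0.002 mm acceptance limit are all hypothetical and should be replaced by the values in your own calibration procedure.

```python
from dataclasses import dataclass


@dataclass
class CheckPoint:
    nominal_mm: float  # certified size of the gauge block
    reading_mm: float  # value displayed by the micrometer


def verify_micrometer(points, max_error_mm):
    """Return (passed, errors), where each error is reading minus nominal."""
    errors = [round(p.reading_mm - p.nominal_mm, 4) for p in points]
    passed = all(abs(e) <= max_error_mm for e in errors)
    return passed, errors


# Hypothetical check at several points across a 0 to 25 mm range.
points = [
    CheckPoint(2.5, 2.501),
    CheckPoint(5.1, 5.100),
    CheckPoint(12.9, 12.902),
    CheckPoint(25.0, 25.003),
]
passed, errors = verify_micrometer(points, max_error_mm=0.002)
print(passed, errors)
```

Checking several sizes rather than only zero is what exposes a linearity problem: here the error grows toward the top of the range, and the last point exceeds the assumed limit.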

Q 3. How do you determine the appropriate gauge for a specific measurement task?

Selecting the appropriate gauge depends on several factors:

- Required Accuracy: Go/no-go gauges suffice for simple pass/fail checks, while micrometers or dial indicators are needed for precise measurements.

- Part Geometry: The shape and size of the part dictate the type of gauge needed. For example, a caliper is suitable for measuring the outside diameter of a cylindrical part, while a depth gauge measures internal depths.

- Material: Some gauges are better suited for specific materials. Hardness of the part can also influence gauge selection to avoid scratching or damaging either the part or the gauge.

- Tolerance: The allowable variation in the part’s dimension determines the necessary gauge precision and resolution.

For instance, in mass production of a simple bolt, a go/no-go gauge might suffice. But in aerospace manufacturing, where tolerances are incredibly tight, a high-precision micrometer or even a coordinate measuring machine (CMM) might be required.
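
One way to turn the tolerance factor into a quick check is the widely quoted 10:1 rule of thumb, under which the gauge resolution should be no coarser than one-tenth of the tolerance band. The sketch below assumes that rule; the function names and the `ratio` parameter are illustrative, and your quality system may require a different ratio.

```python
def max_gauge_resolution(tolerance_band_mm, ratio=10):
    """Coarsest acceptable gauge resolution under an N:1 rule of thumb."""
    return tolerance_band_mm / ratio


def gauge_is_adequate(gauge_resolution_mm, tolerance_band_mm, ratio=10):
    """True if the gauge resolves at least `ratio` steps across the band."""
    return gauge_resolution_mm <= max_gauge_resolution(tolerance_band_mm, ratio)


# A +/-0.1 mm tolerance has a total band of 0.2 mm.
band = 0.2
print(gauge_is_adequate(0.01, band))  # instrument reading to 0.01 mm
print(gauge_is_adequate(0.05, band))  # instrument reading to 0.05 mm
```

Resolution is only one part of the decision; the gauge's accuracy and repeatability must also be small relative to the tolerance.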

Q 4. What are the common sources of error when using gauging tools?

Common sources of error when using gauging tools include:

- Improper Calibration: Using an uncalibrated or incorrectly calibrated gauge leads to inaccurate readings.

- Operator Error: Incorrect handling, reading errors (parallax), or inconsistent measuring force can introduce measurement bias.

- Tool Wear: Wear on the gauge’s anvils or measuring surfaces reduces accuracy. Regular inspection for wear is essential.

- Environmental Factors: Temperature fluctuations can affect the dimensions of both the part and the gauge, causing inaccurate measurements. Maintaining a controlled environment is vital for critical measurements.

- Incorrect Technique: Failing to properly zero the instrument or using incorrect measuring techniques can lead to substantial errors.

For example, applying too much pressure when using a micrometer can compress the part, resulting in a lower-than-actual measurement. Parallax error can occur if the user does not view the scale squarely.
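
The temperature effect mentioned above can be estimated with the linear expansion relation ΔL = α · L · ΔT, taken relative to the standard 20 °C reference temperature for dimensional measurement. The following sketch assumes typical textbook expansion coefficients for steel and aluminium; the function names are illustrative, and real alloys vary, so use the values for your actual materials.

```python
REFERENCE_TEMP_C = 20.0  # standard reference temperature for dimensional measurement

# Approximate linear expansion coefficients per degree C (assumed typical values).
ALPHA_STEEL = 11.5e-6
ALPHA_ALUMINIUM = 23.0e-6


def thermal_growth_mm(length_mm, alpha_per_c, temp_c):
    """Change in length relative to the size at the reference temperature."""
    return alpha_per_c * length_mm * (temp_c - REFERENCE_TEMP_C)


def apparent_error_mm(length_mm, alpha_part, alpha_gauge, temp_c):
    """First-order reading error when part and gauge are both at temp_c."""
    return (thermal_growth_mm(length_mm, alpha_part, temp_c)
            - thermal_growth_mm(length_mm, alpha_gauge, temp_c))


# A 100 mm aluminium part measured with a steel instrument at 25 C.
err = apparent_error_mm(100.0, ALPHA_ALUMINIUM, ALPHA_STEEL, 25.0)
print(f"{err * 1000:.2f} micrometres")
```

Even a 5 °C offset produces an error of several micrometres on this part, which matters when the tolerance itself is only a few hundredths of a millimetre.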

Q 5. How do you handle gauge inaccuracy or malfunction during a measurement?

If gauge inaccuracy or malfunction is detected, the immediate action is to cease using the gauge for measurements. The gauge should be clearly marked as ‘out of service’ to prevent accidental use. The next steps are:

- Investigate the Cause: Determine why the gauge is malfunctioning (e.g., wear, damage, improper calibration).

- Report the Issue: Notify the appropriate personnel (supervisor, metrology lab) about the problem, providing details.

- Calibration/Repair: Send the gauge for recalibration or repair by a qualified technician if necessary.

- Use a Replacement Gauge: Until the faulty gauge is repaired or replaced, use a verified and calibrated replacement to continue the measurement process, if possible. Proper documentation should outline the procedure for gauge substitution.

- Review Affected Measurements: If the gauge was used for measurements before the malfunction was detected, the affected measurements might need to be repeated with a verified gauge.

Failing to address gauge inaccuracy can lead to significant quality issues, potentially resulting in defective parts or costly rework.

Q 6. Explain the concept of gauge repeatability and reproducibility (R&R).

Gauge Repeatability and Reproducibility (R&R) studies assess the variability of a measurement process. Repeatability refers to the variation obtained when the same operator measures the same part multiple times using the same gauge. Reproducibility refers to the variation obtained when different operators measure the same part using the same gauge. An R&R study helps to determine the proportion of total variation attributable to the gauge itself, the operator, and the part-to-part variation.

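A high R&R value indicates significant variation in measurements and suggests problems with the gauge, the measurement process, or both, needing investigation and improvement. A low R&R value shows that the measurement system is reliable and provides consistent results. R&R is usually reported as a percentage of the tolerance or of the total observed variation; a commonly cited guideline from the AIAG Measurement Systems Analysis manual treats under 10% as acceptable, 10% to 30% as conditionally acceptable, and over 30% as unacceptable.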

These studies are crucial for process capability analysis and are often performed as part of a quality control plan, enabling manufacturers to quantify the variation in their measurement process and identify potential sources of error.
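
To make the two components concrete, here is a simplified variance-based sketch for a balanced study in which every operator measures every part the same number of times. It is not the full AIAG average-and-range or ANOVA procedure: it ignores operator-by-part interaction, and the `gauge_rr` function and the sample data are illustrative assumptions.

```python
from math import sqrt
from statistics import mean, variance


def gauge_rr(data):
    """data[operator][part] -> list of repeated readings (balanced study).

    Returns (sigma_repeatability, sigma_reproducibility, sigma_grr).
    """
    operators = list(data)
    parts = list(data[operators[0]])
    trials = len(data[operators[0]][parts[0]])

    # Repeatability: pooled variance of repeat readings within each operator/part cell.
    cell_vars = [variance(data[o][p]) for o in operators for p in parts]
    var_repeat = mean(cell_vars)

    # Reproducibility: spread of operator averages, less the share that
    # repeatability alone would produce (floored at zero).
    op_means = [mean(r for p in parts for r in data[o][p]) for o in operators]
    var_reprod = max(0.0, variance(op_means) - var_repeat / (len(parts) * trials))

    return sqrt(var_repeat), sqrt(var_reprod), sqrt(var_repeat + var_reprod)


# Hypothetical study: 2 operators, 2 parts, 2 trials each (readings in mm).
study = {
    "A": {"p1": [10.01, 10.02], "p2": [9.98, 9.99]},
    "B": {"p1": [10.03, 10.04], "p2": [10.00, 10.01]},
}
repeatability, reproducibility, grr = gauge_rr(study)
print(f"repeatability={repeatability:.4f} reproducibility={reproducibility:.4f} grr={grr:.4f}")
```

In this made-up data operator B reads consistently higher than operator A, so reproducibility dominates, which would point toward technique or training rather than the gauge itself. A real study uses more parts, operators, and trials.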

Q 7. Describe your experience with different types of gauging tools (e.g., calipers, micrometers, dial indicators).

My experience encompasses a wide range of gauging tools. I’ve extensively used:

- Vernier Calipers: For measuring external and internal dimensions, depths, and step heights with good accuracy.

- Micrometers: For highly precise measurements of linear dimensions, utilizing both inch and metric scales. I’m proficient in using both digital and mechanical micrometers, understanding their respective advantages and limitations.

- Dial Indicators: These are essential for measuring surface irregularities, runout, and small displacements. I have experience with both plunger-type dial indicators and lever-type dial test indicators, and with applying them across a range of setups.

- Go/No-Go Gauges: I’ve used these extensively in quality control inspections for quick pass/fail assessments.

- Height Gauges: I have used these in situations needing precise height measurements or when using surface plates.

In one project involving the production of high-precision bearings, the use of micrometers was crucial in ensuring that all dimensions were within the extremely tight tolerances specified. Another situation involved the troubleshooting of a component with excessive runout, where a dial indicator proved invaluable in identifying the source of the problem.

Q 8. How do you ensure the cleanliness and proper handling of gauging tools?

Maintaining the cleanliness and proper handling of gauging tools is paramount for accurate measurements and the longevity of the tools themselves. Think of it like this: a dirty or damaged measuring tape won’t give you accurate length measurements, right? The same principle applies to gauging tools.

- Cleaning: After each use, gauging tools should be meticulously cleaned with a suitable solvent (depending on the material of the tool and the measured substance) and a soft, lint-free cloth. Compressed air can be used to remove debris from hard-to-reach areas. For particularly sensitive tools, ultrasonic cleaning might be necessary. Always refer to the manufacturer’s instructions for specific cleaning recommendations.

- Storage: Proper storage is crucial. Tools should be stored in a clean, dry, and temperature-controlled environment, ideally in a designated case or cabinet to prevent damage and contamination. This protects them from dust, moisture, and accidental impacts.

- Handling: Always handle gauging tools with care. Avoid dropping them or subjecting them to excessive force. Use gloves when necessary to prevent contamination from oils or sweat on your hands. Regularly inspect tools for damage – even minor scratches or dents can affect accuracy.

For example, if I’m using a micrometer to measure the thickness of a precision component, even a tiny particle of dust could skew the reading. Similarly, dropping a dial indicator could misalign its internal mechanism and render it inaccurate.

Q 9. What are the safety precautions you take when using gauging tools?

Safety is paramount when using gauging tools. Many tools have sharp edges or moving parts that pose potential hazards.

- Eye Protection: Always wear safety glasses to protect your eyes from chips, coolant, and other debris, especially when gauging parts at or near running machines.

- Appropriate Clothing: Loose clothing or jewelry should be avoided as they could get caught in moving parts or become contaminated during the gauging process.

- Proper Tool Usage: Follow the manufacturer’s instructions carefully and only use the tool for its intended purpose. Applying excessive force can damage both the tool and the workpiece.

- Ergonomics: Maintain a proper posture and avoid awkward positions to prevent strain and fatigue. This is particularly important during prolonged use.

- Sharp Edges and Points: Handle tools with sharp edges or points with extreme care to avoid injury. Always use appropriate handling techniques.

For instance, if using a depth micrometer, ensuring a firm grip is vital to prevent dropping the tool and potentially causing injury.

Q 10. How do you document your measurements and maintain traceability?

Accurate documentation and traceability are essential for quality control and regulatory compliance. This ensures that measurements are verifiable and auditable.

- Measurement Records: All measurements should be recorded clearly and completely in a dedicated logbook or electronic database. This includes the date, time, tool ID, part number, operator ID, and the measured values. Digital data acquisition systems connected to the gauging tools can automate this process.

- Calibration Records: Calibration certificates should be readily available and should be referenced with each gauging session. This demonstrates that the tools are functioning within acceptable tolerances.

- Traceability Chain: Establishing a complete traceability chain—from the original calibration certificate to the final measurement—is vital. This enables the verification of every measurement’s accuracy.

- Data Management System: Using a robust data management system helps maintain an organized and efficient way to manage measurement data and traceability records. This can range from a simple spreadsheet to a sophisticated quality management system (QMS).

Imagine a scenario where a faulty part is discovered. Having detailed measurement records allows us to quickly trace back the entire process, identifying the exact point where the error occurred, and helping prevent such issues in the future.
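
A measurement record with the fields listed above could be modelled as follows. This is a sketch only: the `MeasurementRecord` class, the `make_record` helper, the extra `calibration_cert` field, and all identifiers such as `MIC-0042` are hypothetical, and a real system would follow the schema of your logbook or QMS.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime


@dataclass(frozen=True)
class MeasurementRecord:
    timestamp: str         # ISO 8601 date and time of the measurement
    tool_id: str           # identifies the gauge used
    calibration_cert: str  # links the reading to the gauge's calibration record
    part_number: str
    operator_id: str
    values_mm: tuple       # the measured values


def make_record(tool_id, calibration_cert, part_number, operator_id,
                values_mm, when=None):
    """Build an immutable record, stamping it with the current time by default."""
    when = when or datetime.now()
    return MeasurementRecord(when.isoformat(timespec="seconds"), tool_id,
                             calibration_cert, part_number, operator_id,
                             tuple(values_mm))


record = make_record("MIC-0042", "CAL-2024-117", "PN-1001", "OP-07",
                     [10.02, 10.01, 10.03], when=datetime(2024, 5, 1, 9, 30))
print(json.dumps(asdict(record)))
```

Making the record immutable and storing the calibration certificate reference alongside each reading keeps the traceability chain intact when records are later exported or audited.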

Q 11. Explain your understanding of statistical process control (SPC) in relation to gauging.

Statistical Process Control (SPC) is a crucial tool for monitoring and improving the gauging process. It allows us to detect variation in measurements and identify potential problems before they escalate.

In gauging, SPC involves collecting data from repeated measurements, creating control charts (like X-bar and R charts, or X-MR charts for individual measurements), and analyzing the data to determine if the process is stable and within specified limits. Out-of-control points on a control chart signal potential issues like tool wear, operator error, or process instability.

By using SPC, we can identify and address the root causes of variation, leading to improved precision and consistency in measurements. It helps in preventing costly rework and ensures that the gauging process is robust and reliable. For example, consistently high or low readings could indicate a bias in a specific gauging tool, prompting recalibration or replacement.
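
As an illustration of how control limits for X-bar and R charts are derived, the sketch below uses the standard chart constants for subgroups of five readings (A2 = 0.577, D3 = 0, D4 = 2.114). The function name and sample data are illustrative, and a real chart would normally be based on twenty or more subgroups.

```python
from statistics import mean

# Standard control chart constants for subgroups of size 5.
SUBGROUP_SIZE = 5
A2, D3, D4 = 0.577, 0.0, 2.114


def xbar_r_limits(subgroups):
    """Return (LCL, centerline, UCL) for the X-bar chart and the R chart."""
    if any(len(s) != SUBGROUP_SIZE for s in subgroups):
        raise ValueError("constants are only valid for subgroups of 5")
    xbars = [mean(s) for s in subgroups]
    ranges = [max(s) - min(s) for s in subgroups]
    grand_mean, mean_range = mean(xbars), mean(ranges)
    return {
        "xbar": (grand_mean - A2 * mean_range, grand_mean, grand_mean + A2 * mean_range),
        "range": (D3 * mean_range, mean_range, D4 * mean_range),
    }


# Hypothetical readings in mm, two subgroups shown for brevity.
subgroups = [
    [10.0, 10.1, 9.9, 10.0, 10.0],
    [10.0, 10.2, 9.8, 10.0, 10.0],
]
limits = xbar_r_limits(subgroups)
print(limits)
```

The limits come from the process data itself, not from the part tolerance, which is why a process can be in control and still produce out-of-tolerance parts.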

Q 12. How do you interpret gauge control charts?

Gauge control charts visually represent the variation in measurements taken over time. The most common charts are X-bar and R charts (for subgroups of measurements) or X-MR charts (for individual measurements).

Interpreting these charts involves looking for patterns that indicate instability or bias in the gauging process.

- Points outside control limits: Points falling outside the upper or lower control limits signal a significant deviation from the expected range and indicate a potential problem. This could be due to a faulty tool, process variation, or an outlier measurement.

- Trends: A consistent upward or downward trend in the data suggests a gradual change in the process mean, perhaps due to tool wear or environmental factors.

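- Runs and stratification: A long run of points on one side of the centerline suggests a shift in the process mean, while points that hug the centerline unusually closely suggest that subgroups are mixing data from different sources, such as different operators or gauges.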

- Cycles: Repeating patterns in the data, like a wave, could indicate cyclical process variation, perhaps related to machine operation cycles.

By carefully analyzing these patterns, we can identify the source of the variation and take corrective actions. For instance, a consistent upward trend in measurements might indicate the need for recalibration of a specific gauge.
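
Two of the patterns above, points outside the limits and trends, are easy to check programmatically. The sketch below assumes the commonly used rule that six consecutive rising or falling points count as a trend; the function names and the `run_length` default are illustrative, and your SPC procedure may specify different rules.

```python
def points_outside_limits(values, lcl, ucl):
    """Indices of points below the lower or above the upper control limit."""
    return [i for i, v in enumerate(values) if v < lcl or v > ucl]


def has_trend(values, run_length=6):
    """True if run_length consecutive points are steadily rising or falling."""
    rising = falling = 1
    for prev, cur in zip(values, values[1:]):
        rising = rising + 1 if cur > prev else 1
        falling = falling + 1 if cur < prev else 1
        if rising >= run_length or falling >= run_length:
            return True
    return False


# Hypothetical subgroup means in mm.
means = [10.00, 10.01, 10.02, 10.04, 10.05, 10.07, 10.30]
print(points_outside_limits(means, lcl=9.83, ucl=10.17))
print(has_trend(means))
```

A trend can be flagged well before any single point crosses a limit, which is what makes it useful for catching gradual tool wear.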

Q 13. Describe your experience with using CMM (Coordinate Measuring Machine) for gauging.

Coordinate Measuring Machines (CMMs) are sophisticated instruments used for highly accurate three-dimensional measurements. My experience with CMMs in gauging includes programming and operating the machine to measure complex parts with high precision.

The process typically involves importing or creating a CAD model of the part, aligning the physical part to that model, and then using the CMM’s probe to measure specific points on the part. The CMM software then compares the measured points to the CAD model, providing detailed dimensional data, including deviations from the nominal values. This allows for precise analysis of form, location, and orientation of features.

I’m proficient in using various probing strategies, such as touch-trigger probing and scanning, depending on the complexity of the part and the required level of detail. I’m also experienced in analyzing the CMM data, identifying sources of variation, and generating reports to document the findings. For example, I’ve used CMMs to verify the accuracy of injection-molded plastic parts, ensuring conformance to strict dimensional tolerances.

Q 14. How do you select the appropriate sampling plan for gauging?

Selecting the appropriate sampling plan for gauging depends on various factors, including the required level of accuracy, the acceptable risk of making an incorrect decision (Type I and Type II errors), the cost of inspection, and the production volume.

Common sampling plans include:

- Acceptance sampling: This plan involves inspecting a sample of parts from a batch and accepting or rejecting the entire batch based on the results. The sample size and acceptance criteria are determined by statistical tables or software, often using a standard such as ANSI/ASQ Z1.4 or ISO 2859-1 (MIL-STD-105E, which many older documents still cite, has been cancelled).

- Variable sampling: This plan uses continuous measurements, such as dimensions, to assess the quality of a process. It’s often used with control charts, such as X-bar and R charts, to monitor process variation over time.

- Attribute sampling: This plan focuses on discrete characteristics, such as the number of defects or nonconforming parts, using simpler counts and percentages.

The choice depends heavily on the context. For high-volume production with low defect rates, a smaller sample size might suffice. Conversely, for critical parts with tight tolerances, a larger sample size might be necessary. Using statistical software, like Minitab, helps to determine the optimal sample size and acceptance criteria based on the risk tolerance and process capability.
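
The trade-off between sample size and risk can be explored with the binomial model for a single sampling plan: inspect n parts and accept the lot if at most c are nonconforming. The sketch below is an approximation that assumes the lot is large relative to the sample; the function name and the plan values are illustrative and are not taken from any standard's tables.

```python
from math import comb


def prob_accept(n, c, p):
    """Probability that a lot with true defect rate p passes plan (n, c)."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(c + 1))


# Hypothetical plan: sample 50 parts, accept with at most 1 nonconforming.
for defect_rate in (0.01, 0.02, 0.05, 0.10):
    print(f"p={defect_rate:.2f}  P(accept)={prob_accept(50, 1, defect_rate):.3f}")
```

Plotting this probability against the defect rate gives the plan's operating characteristic (OC) curve, which shows both the risk of rejecting a good lot and the risk of accepting a bad one.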

Q 15. What are the limitations of using various types of gauging tools?

Gauging tools, while precise, have inherent limitations. These limitations depend heavily on the type of tool and the application. For example, mechanical gauges can wear down over time, leading to inaccurate measurements. The wear on the anvils or measuring surfaces can cause the gauge to read consistently higher or lower than the actual dimension. This is especially true for frequently used gauges. Optical comparators, while offering high precision, rely on proper setup and lighting. Incorrect calibration, or even shadows, can significantly impact accuracy. Digital calipers and micrometers are susceptible to operator error; improper handling or applying too much pressure can result in skewed readings. Air gauges, while useful for detecting minute variations, are sensitive to environmental conditions such as temperature and air pressure fluctuations, requiring careful calibration and stable operating environments. Finally, all gauging methods have inherent limitations defined by the tool’s resolution. You can’t measure to a greater degree of precision than your tool allows.

- Wear and Tear: Mechanical gauges require regular calibration and maintenance to compensate for wear.

- Environmental Factors: Air gauges and optical comparators are particularly sensitive to environmental factors.

- Operator Skill: Proper handling and technique are crucial for accurate measurements with all types of gauges.

- Resolution Limits: No gauge can provide a measurement more precise than its resolution.

Q 16. How do you troubleshoot problems encountered while using gauging tools?

Troubleshooting gauging tool problems involves a systematic approach. First, I verify the calibration of the gauge. Is it due for recalibration? Has it been dropped or damaged? Is it within its certified tolerance range? Second, I check the environment. Are the temperature and humidity within acceptable limits for the specific type of gauge? If using an air gauge, is the air supply clean and at the correct pressure? Third, I examine the gauging procedure. Am I using the correct technique? Am I applying excessive force? Am I reading the scale correctly? Fourth, I inspect the part itself. Are there burrs, scratches, or other imperfections that could affect the measurement? Finally, if the problem persists after these checks, I send the gauge for professional calibration and repair.

For instance, if a micrometer consistently reads too high, it might be due to dirt or debris lodged between the anvil and spindle. Cleaning it thoroughly would solve this issue. If an air gauge provides inconsistent readings, I’d check the air pressure and look for leaks in the system. In short, troubleshooting requires a thorough approach, considering all possible sources of error.

Q 17. Explain your understanding of tolerance and its implications in gauging.

Tolerance in gauging refers to the permissible variation in a dimension. It defines the acceptable range of measurements around a nominal (target) value and is usually expressed as a plus or minus value. For example, a shaft with a nominal diameter of 10 mm and a tolerance of ±0.1 mm is acceptable at any diameter between 9.9 mm and 10.1 mm. The implication for gauging is that we are not trying to hit the nominal value exactly on every part, only to confirm that each measurement falls within the defined acceptable range. Gauging tools are used to verify this quickly and effectively; ‘Go’ and ‘No-Go’ gauges are particularly useful in this respect. Tolerances are critical for ensuring the functionality and interchangeability of parts. Tolerances that are too tight increase production costs and reduce yields, while tolerances that are too loose may compromise performance or reliability. A thorough understanding of tolerance is essential for design, manufacturing, and quality control.
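
The shaft example above translates directly into a small check. This is a minimal sketch; the function names are illustrative, and the optional `minus` argument is an assumption added to cover unequal plus and minus tolerances.

```python
def tolerance_limits(nominal, plus, minus=None):
    """Return (lower, upper) limits; minus defaults to plus for a +/- tolerance."""
    minus = plus if minus is None else minus
    return nominal - minus, nominal + plus


def classify(measured, nominal, plus, minus=None):
    """Classify a reading as 'undersize', 'oversize', or 'in tolerance'."""
    lower, upper = tolerance_limits(nominal, plus, minus)
    if measured < lower:
        return "undersize"
    if measured > upper:
        return "oversize"
    return "in tolerance"


# Shaft with a nominal diameter of 10 mm and a tolerance of +/-0.1 mm.
for diameter in (9.85, 10.05, 10.15):
    print(diameter, classify(diameter, nominal=10.0, plus=0.1))
```

A go/no-go gauge makes the same decision mechanically, without producing the measured value.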

Q 18. Describe your experience with different types of gauge standards.

My experience includes working with various gauge standards, including ring gauges, plug gauges, snap gauges, and master gauges. Ring gauges are used for checking the external diameter of cylindrical parts, plug gauges for checking internal diameters, and snap gauges for quick ‘Go/No-Go’ checks of external dimensions such as shaft diameters and thicknesses. Master gauges serve as reference standards for calibrating other gauges. I’ve worked to both ANSI (American National Standards Institute) and ISO (International Organization for Standardization) standards and am familiar with the processes and documentation required for calibration traceability. For example, I was once involved in a project where we needed to verify the accuracy of a batch of newly manufactured engine components against the size and tolerance standards defined in our customer’s quality manual, which required us to use gauges certified to those standards.

Q 19. How do you ensure the accuracy of your measurements when using gauging tools?

Ensuring accuracy begins with using calibrated gauges traceable to national or international standards, so regular calibration is paramount. It is equally important to follow proper measuring technique: make sure the gauge and part are clean, apply the correct amount of force, and interpret the reading correctly. Taking multiple measurements and averaging them helps minimize random error. Environmental conditions such as temperature and humidity should be considered and controlled where possible. Proper handling and storage prevent damage, and any damage to the gauge calls for recalibration before further use. Employing statistical process control (SPC) techniques helps track gauge performance over time and identify drift or other issues before they lead to inaccurate measurements.

Q 20. Explain the difference between precision and accuracy in the context of gauging.

In gauging, accuracy refers to how close a measurement is to the true value. Precision, on the other hand, refers to the repeatability of measurements: how closely repeated measurements agree with each other. A highly precise gauge might not be accurate if it is miscalibrated, while a gauge can be accurate on average but not precise if its individual readings scatter widely around the true value. Think of it like shooting arrows at a target: high accuracy means the arrows land on or around the bullseye, while high precision means the arrows are clustered tightly together, regardless of whether they’re near the bullseye. Ideal measurements are both accurate and precise.
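
The distinction can be quantified by measuring a reference standard of known size several times: the bias (mean reading minus reference value) reflects accuracy, and the standard deviation of the readings reflects precision. The sketch below uses made-up readings for two hypothetical gauges on a 10.000 mm gauge block; the function name is illustrative.

```python
from statistics import mean, stdev


def bias_and_spread(readings, reference):
    """Return (bias, standard deviation) of repeated readings of a reference."""
    return mean(readings) - reference, stdev(readings)


REFERENCE_MM = 10.000

# Gauge A: tightly grouped but offset (precise, not accurate).
gauge_a = [10.051, 10.049, 10.050, 10.050]
# Gauge B: centered on the reference but scattered (accurate on average, not precise).
gauge_b = [9.98, 10.03, 9.97, 10.02]

bias_a, spread_a = bias_and_spread(gauge_a, REFERENCE_MM)
bias_b, spread_b = bias_and_spread(gauge_b, REFERENCE_MM)
print(f"A: bias={bias_a:+.3f} mm, spread={spread_a:.4f} mm")
print(f"B: bias={bias_b:+.3f} mm, spread={spread_b:.4f} mm")
```

Gauge A's problem can usually be fixed by recalibration, since a stable offset is correctable; gauge B's scatter points to the gauge mechanism or the measuring technique.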

Q 21. How do you handle discrepancies between measurements taken with different gauging tools?

Discrepancies between measurements from different gauging tools require a careful investigation. First, I would verify the calibration status of each tool. If the tools are not calibrated, this will be the most likely source of the discrepancy. Then, I’d examine the measuring techniques used with each tool to ensure consistency. If possible, repeat the measurements to assess repeatability. Inspect the tools for damage, wear, or contamination. If all the above checks don’t explain the discrepancy, then a deeper examination is required. This might involve checking the tool’s specifications and determining if the differing gauges have compatible measurement ranges and resolutions. In some cases, it may be necessary to involve a metrology expert to help identify the source of the error. Ultimately, a root cause analysis may be needed to resolve persistent inconsistencies.

Q 22. How familiar are you with various gauge materials and their applications?

My familiarity with gauge materials is extensive, encompassing various metals, ceramics, and polymers, each suited for specific applications. The choice of material hinges on factors like the measured part’s material, the required accuracy, the operating environment (temperature, pressure, chemicals), and the gauge’s lifespan.

- Steel: A common choice due to its durability, good dimensional stability, and relatively low cost. Often used in applications where high precision and long-term stability are needed, such as precision bore gauges or snap gauges.

- Carbide: Offers exceptional wear resistance, making it ideal for measuring hard materials or in high-volume production where frequent use is expected. Excellent for applications involving abrasive materials.

- Ceramics: Resistant to high temperatures and chemical attack, making them suitable for demanding environments. Often found in high-temperature applications or when measuring chemically reactive materials.

- Polymers (Plastics): Lightweight, cost-effective, and sometimes offer better resistance to certain chemicals than metals. Often used in less demanding applications or when lightweight is prioritized.

For example, in a high-precision automotive engine part inspection, we might use a carbide gauge to ensure long-term accuracy when measuring hard steel components, while a plastic gauge might be sufficient for less critical measurements of a plastic housing.

Q 23. Describe your experience with automated gauging systems.

I have significant experience with automated gauging systems, from simple CNC-controlled measuring machines to fully integrated robotic gauging cells. My experience includes programming, calibration, troubleshooting, and data analysis. I am proficient in various types of automated systems, including:

- Vision Systems: Using cameras and image processing to measure dimensions and features, often for complex geometries.

- Coordinate Measuring Machines (CMMs): Precisely measuring dimensions and geometries using probe systems, often for large or complex parts. I’m familiar with both touch-trigger and scanning probe technologies.

- Laser Gauging Systems: Utilizing lasers for high-speed, non-contact measurements of dimensions and surface profiles.

For instance, in a previous role, I was responsible for implementing a vision system for automated inspection of printed circuit boards (PCBs). This involved programming the vision system to identify components, measure their dimensions and spacing, and flag any defects. The implementation resulted in a significant increase in inspection speed and accuracy, reducing production errors and improving overall quality.

Q 24. How do you ensure the integrity of your gauging data?

Ensuring data integrity is paramount. My approach involves a multi-layered strategy:

- Regular Calibration: Gauges are calibrated against traceable standards at defined intervals, using appropriate methods based on the gauge type and relevant standards. Calibration certificates are meticulously maintained.

- Gauge R&R Studies: Gauge Repeatability and Reproducibility (R&R) studies are conducted to determine the gauge’s variation and ensure it meets the required precision for the application. This involves multiple operators measuring the same parts multiple times.

- Environmental Control: Maintaining a stable environment (temperature, humidity) is crucial for preventing drift and ensuring consistent measurements. Data is flagged if environmental conditions exceed acceptable limits.

- Data Logging and Traceability: All gauging data is logged, including calibration records, operator identification, and environmental conditions. This enables traceability and facilitates analysis of potential issues.

- Statistical Process Control (SPC): SPC techniques are used to monitor the gauging process and detect any shifts in measurement values, indicating potential problems with the gauge or the process itself.

Imagine a scenario where a critical dimension is consistently measuring slightly off. By analyzing the logged data, including calibration and environmental records, we can isolate whether the issue stems from a gauge malfunction, a change in environmental conditions, or perhaps an error in the manufacturing process.

Q 25. What are some common industry standards relevant to gauging (e.g., ISO, ASME)?

Several industry standards are crucial in gauging. These standards ensure consistency, accuracy, and traceability across different organizations and industries.

- ISO 9001: This standard focuses on quality management systems, which is vital for ensuring the reliability and traceability of gauging processes and data.

- ISO 10012: Specifically addresses measurement management systems, providing guidelines for establishing and maintaining a measurement management system to ensure accurate and reliable measurements.

- ASME B89 series: These standards cover dimensional metrology and measuring equipment, for example B89.1.9 for gage blocks and B89.1.13 for micrometers, with the B89.7 documents addressing measurement uncertainty and decision rules.

- Other relevant standards: Specific industries have their own requirements related to gauging, such as IATF 16949 and the AIAG Measurement Systems Analysis (MSA) manual in automotive, or AS9100 in aerospace.

For example, complying with ISO 10012 ensures that our calibration procedures are well-documented, our equipment is properly maintained, and our measurement uncertainties are properly assessed and reported.

Q 26. Describe a situation where you had to troubleshoot a gauging issue and how you resolved it.

In one instance, we encountered inconsistent measurements from a CMM during an automotive component inspection. The parts were failing quality control checks even though visual inspection revealed no obvious defects. Our troubleshooting involved a systematic approach:

- Data Analysis: We reviewed the CMM data for patterns, inconsistencies, and outliers. We noticed slight variations in measurements depending on the probe approach angle.

- Gauge Calibration: The CMM underwent a thorough recalibration against traceable standards. No significant deviations were found.

- Environmental Assessment: We checked temperature and humidity logs to rule out environmental fluctuations affecting the measurements. Conditions were within acceptable limits.

- Probe Inspection: A detailed examination of the CMM’s probe revealed minor wear, indicating potential for inaccuracy. The probe was replaced.

- Re-inspection: After replacing the probe, we re-inspected the parts. Measurements became consistent and within acceptable tolerances.

This experience highlighted the importance of a methodical troubleshooting process, starting with data analysis and progressively narrowing down the possibilities. The problem was not immediately obvious, demonstrating the need for a systematic approach and thorough investigation.
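The data analysis step can be sketched in a few lines of code. The example below assumes the CMM results can be exported as pairs of probe approach angle and measured value; the function names and the readings are hypothetical, made up purely to show the idea of comparing groups.

```python
from collections import defaultdict
from statistics import mean


def summarize_by_angle(readings):
    """Group (angle_deg, value_mm) readings; return {angle: (mean, range)}."""
    groups = defaultdict(list)
    for angle_deg, value_mm in readings:
        groups[angle_deg].append(value_mm)
    return {
        angle: (mean(values), max(values) - min(values))
        for angle, values in sorted(groups.items())
    }


def angle_bias(summary):
    """Largest difference between the per-angle mean values."""
    means = [group_mean for group_mean, _ in summary.values()]
    return max(means) - min(means)


# Hypothetical readings of one feature: (approach angle in degrees, value in mm)
readings = [
    (0, 25.001), (0, 25.002), (0, 25.000),
    (45, 25.006), (45, 25.007), (45, 25.005),
    (90, 25.011), (90, 25.012), (90, 25.010),
]

summary = summarize_by_angle(readings)
bias = angle_bias(summary)

for angle, (group_mean, group_range) in summary.items():
    print(f"{angle:>3} deg: mean = {group_mean:.4f} mm, range = {group_range:.4f} mm")
print(f"Spread between angle means: {bias:.4f} mm")
```

When the spread between angle means is much larger than the range within each angle, the variation is tied to the approach direction rather than to the parts, which points toward the probe or its qualification.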

Q 27. How do you stay up-to-date with the latest advancements in gauging technology?

Staying current in this rapidly evolving field is crucial. My methods include:

- Professional Organizations: I actively participate in professional organizations such as ASME and societies focused on metrology and quality control. These often host conferences and workshops and provide access to publications.

- Trade Publications and Journals: I regularly read industry publications and journals focused on gauging and metrology technologies to learn about new advancements and best practices.

- Vendor Training: Participating in training courses provided by equipment vendors keeps me abreast of new features, software updates, and troubleshooting techniques for the specific gauging systems we use.

- Conferences and Workshops: Attending industry conferences and workshops is an excellent opportunity to network with peers, learn about the latest innovations, and hear case studies from other professionals.

- Online Resources: Many online resources, including webinars and online courses, provide access to information on the latest technologies and advancements.

Think of it like learning a new programming language – you need continuous practice and exposure to new updates and libraries to remain competent. The same applies to gauging technology.

Key Topics to Learn for Use gauging tools Interview

- Understanding User Needs: Learn how to establish what the people who rely on a measurement need from a gauging tool. Consider both qualitative approaches, such as talking to the people who use the results, and quantitative approaches, such as reviewing tolerance and inspection data.

- Types of Gauging Tools and Their Applications: Familiarize yourself with various types of gauging tools (e.g., dial indicators, micrometers, calipers) and their appropriate applications in different industries and contexts. Practice explaining the strengths and limitations of each.

- Calibration and Accuracy: Understand the importance of proper calibration and the impact of inaccuracies on measurement results. Learn how to perform basic calibration checks and troubleshoot common calibration issues.

- Data Interpretation and Analysis: Practice interpreting measurements obtained from gauging tools and analyzing the results to identify trends and potential problems. Consider statistical methods relevant to data analysis in this field.

- Safety Procedures and Best Practices: Review safety protocols associated with handling and using various gauging tools to ensure safe and accurate measurements. Understand proper handling techniques and maintenance procedures.

- Troubleshooting and Problem Solving: Develop your ability to identify and troubleshoot problems related to gauging tools, including inaccurate measurements, equipment malfunctions, and inconsistencies in results. Practice diagnosing and resolving these issues.

- Selecting Appropriate Tools: Learn to choose the right gauging tool for a specific task, considering factors such as accuracy requirements, material properties, and measurement range.
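For the data interpretation topic above, one statistical method worth practicing is process capability. The sketch below assumes roughly normally distributed measurements and uses the common Cp and Cpk definitions; the sample values and specification limits are hypothetical, and a real study would use far more than five readings.

```python
from statistics import mean, stdev


def process_capability(values, lsl, usl):
    """Return (mean, sample std dev, Cp, Cpk) for the given spec limits."""
    mu = mean(values)
    sigma = stdev(values)  # sample standard deviation
    cp = (usl - lsl) / (6 * sigma)
    cpk = min(usl - mu, mu - lsl) / (3 * sigma)
    return mu, sigma, cp, cpk


# Hypothetical diameter readings in mm and hypothetical spec limits
diameters_mm = [10.00, 10.01, 9.99, 10.02, 9.98]
lsl_mm, usl_mm = 9.95, 10.05

mu, sigma, cp, cpk = process_capability(diameters_mm, lsl_mm, usl_mm)
print(f"mean = {mu:.3f} mm, sigma = {sigma:.4f} mm, Cp = {cp:.2f}, Cpk = {cpk:.2f}")
```

Cp compares the tolerance width to the process spread, while Cpk also penalizes a process that is off-center, so Cpk is never larger than Cp.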

Next Steps

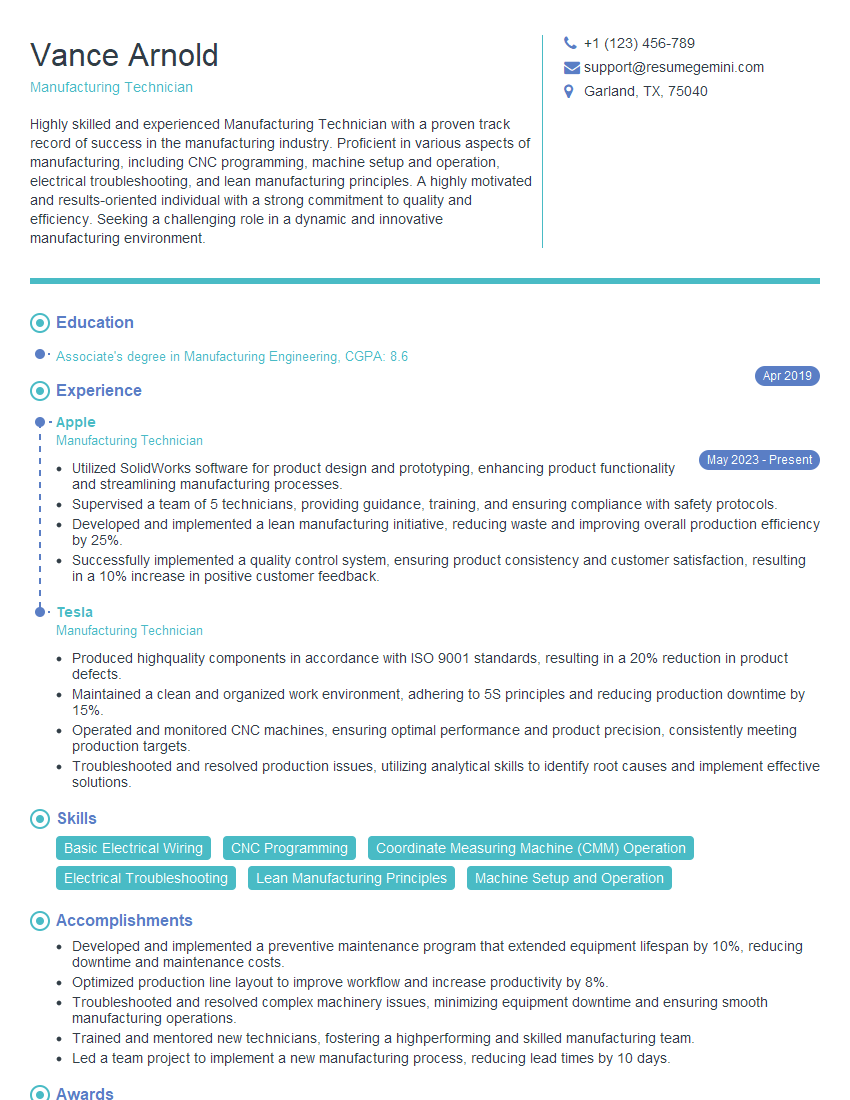

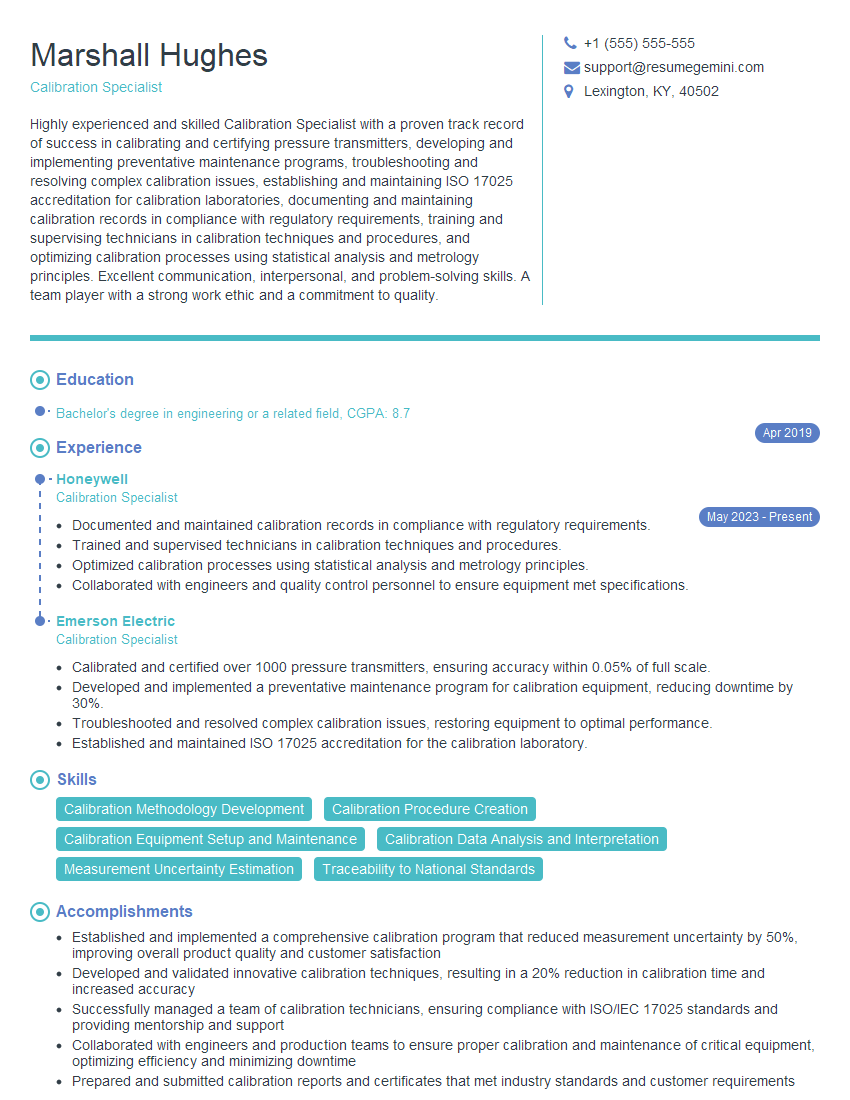

Mastering the use of gauging tools is crucial for career advancement in many technical fields, opening doors to higher-paying roles and greater responsibility. To maximize your job prospects, it’s essential to create an ATS-friendly resume that highlights your skills and experience effectively. We strongly recommend using ResumeGemini to build a professional and impactful resume. ResumeGemini provides tools and resources to help you craft a compelling narrative that showcases your expertise, and example resumes tailored to roles that use gauging tools are available to guide you.
