Cracking a skill-specific interview, like one for CI/CD Pipelines (Jenkins, GitLab), requires understanding the nuances of the role. In this post, we present the questions you’re most likely to encounter, along with insights into how to answer them effectively. Let’s make sure you’re ready to make a strong impression.

Questions Asked in CI/CD Pipelines (Jenkins, GitLab) Interview

Q 1. Explain the concept of CI/CD.

CI/CD, or Continuous Integration/Continuous Delivery (and sometimes Continuous Deployment), is a set of practices that automates how software is built, tested, and released. Imagine it as an assembly line for software, streamlining the journey from code commit to production release. Continuous Integration focuses on frequently merging code changes into a central repository, where each merge triggers automated builds and tests. Continuous Delivery extends this by automating the release process so that every change that passes the pipeline is ready to be deployed to production at any time, usually with a manual approval as the final step. Continuous Deployment goes a step further by automatically deploying every build that passes all pipeline stages to production, with no manual gate.

Q 2. What are the key benefits of implementing CI/CD?

The benefits of CI/CD are numerous and impactful. Faster time to market is a key advantage: automated processes significantly reduce the time it takes to get new features or bug fixes into users’ hands. Improved software quality is another: automated testing catches errors early, reducing the risk of deploying faulty code. Team collaboration improves because developers merge their work frequently, which leads to better communication and fewer integration conflicts. Risk is reduced because frequent, small releases are easier to test, and it is easier to revert to a previous version if something goes wrong. Finally, efficiency improves as a direct result of automation, freeing developers to focus on building new features instead of managing manual processes. Think of it as moving from hand-building each car to an assembly line where robots handle the repetitive tasks.

Q 3. Describe the stages involved in a typical CI/CD pipeline.

A typical CI/CD pipeline involves several key stages:

- Source Code Management: Developers commit code changes to a version control system like Git.

- Build: The code is compiled and packaged into a deployable artifact (e.g., a JAR file, a Docker image).

- Test: Automated tests (unit, integration, system, etc.) are run to ensure the quality of the code.

- Deploy: The artifact is deployed to a staging environment for further testing and validation.

- Release: The artifact is deployed to the production environment, making it available to end-users.

- Monitor: The application’s performance and health are monitored in production to identify and address any issues.

These stages can be further customized and expanded upon based on the project’s specific needs. For example, adding security scans or performance tests is common.
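To make the stage ordering concrete, here is a minimal Python sketch of a fail-fast pipeline runner. It assumes each stage is a plain function that returns True on success; run_pipeline and the stage functions are hypothetical, since Jenkins and GitLab CI express the same idea declaratively rather than in Python. The important behavior is that a failing stage stops everything after it, so a broken build never reaches deployment.

```python
from typing import Callable, List, Tuple

# A stage is a (name, action) pair; the action returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[Tuple[str, str]]:
    """Run stages in order and stop at the first failure (fail-fast).

    Returns (stage_name, status) pairs; stages after a failure are 'skipped'.
    """
    results: List[Tuple[str, str]] = []
    failed = False
    for name, action in stages:
        if failed:
            results.append((name, "skipped"))
            continue
        ok = action()
        results.append((name, "passed" if ok else "failed"))
        failed = not ok
    return results


if __name__ == "__main__":
    # Hypothetical stages: the test stage fails, so deploy and release never run.
    pipeline = [
        ("build", lambda: True),
        ("test", lambda: False),
        ("deploy", lambda: True),
        ("release", lambda: True),
    ]
    for name, status in run_pipeline(pipeline):
        print(f"{name}: {status}")
```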

Q 4. What are some common challenges encountered when implementing CI/CD?

Implementing CI/CD presents several common challenges:

- Legacy Systems: Integrating CI/CD into older systems can be difficult and time-consuming.

- Test Coverage: Achieving sufficient test coverage to ensure high quality can be challenging.

- Infrastructure: Setting up and maintaining the necessary infrastructure can be complex and costly.

- Team Buy-in: Getting the development team to adopt new processes and tools requires effective communication and training.

- Tooling Complexity: The sheer number of tools available can make choosing the right ones and integrating them effectively difficult.

Overcoming these challenges often requires careful planning, incremental implementation, and a strong commitment from the development team.

Q 5. How do you handle failures in a CI/CD pipeline?

Handling failures well is crucial in a CI/CD pipeline and depends on robust error-handling mechanisms. This typically involves:

- Automated Rollbacks: If a deployment fails, the system should automatically revert to the previous stable version.

- Notifications: Automated alerts should be sent to the relevant team members when failures occur.

- Detailed Logging: Comprehensive logs should be maintained to facilitate debugging and troubleshooting.

- Monitoring and Alerting: Continuous monitoring of the application’s performance and health allows for quick identification and resolution of issues.

- Root Cause Analysis: A systematic approach to identifying the root cause of failures is necessary to prevent recurrence.

A well-defined process for handling failures, followed by post-mortems, helps the team learn from mistakes and improve the pipeline’s reliability.
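The automated-rollback idea can be sketched in Python as follows. This is a simplified model, assuming your tooling provides a deploy action, a health check, and a notification hook; the Environment class and deploy_with_rollback function are hypothetical stand-ins, not part of Jenkins or GitLab.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Environment:
    """Hypothetical deployment target that tracks which version is live."""
    live_version: str

    def deploy(self, version: str) -> None:
        # A real implementation would push artifacts, restart services, etc.
        self.live_version = version


def deploy_with_rollback(env: Environment, version: str,
                         health_check: Callable[[str], bool],
                         notify: Callable[[str], None]) -> bool:
    """Deploy `version`; if the health check fails, revert to the previous version."""
    previous = env.live_version
    env.deploy(version)
    if health_check(version):
        notify(f"Deployed {version} successfully")
        return True
    # Automated rollback: restore the last known good version and alert the team.
    env.deploy(previous)
    notify(f"{version} failed its health check; rolled back to {previous}")
    return False


if __name__ == "__main__":
    prod = Environment(live_version="v1")
    deploy_with_rollback(prod, "v2", health_check=lambda v: False, notify=print)
    print("live:", prod.live_version)  # still v1 after the rollback
```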

Q 6. Explain different branching strategies used in CI/CD.

Several branching strategies are employed in CI/CD. The choice depends on the project’s complexity and team size:

- Gitflow: A robust model with separate branches for development, features, releases, and hotfixes. Excellent for larger projects with multiple developers.

- GitHub Flow: A simpler model with a single long-lived branch (often called ‘main’ or ‘master’). Features are developed on short-lived branches and merged into main through pull requests once they pass review and CI. Suitable for smaller teams and faster iterations.

- Trunk-Based Development: Developers commit directly to the main branch (the trunk), or to very short-lived branches that are merged at least daily. Requires rigorous automated testing and small, frequent commits. Focuses on speed and continuous integration.

Each strategy has its strengths and weaknesses; selecting the appropriate one involves considering team size, project complexity, and risk tolerance.

Q 7. What are the differences between Jenkins and GitLab CI?

Jenkins and GitLab CI are both popular CI/CD tools, but they differ in their approach:

- Integration: Jenkins is a standalone server that needs to be installed and configured separately. GitLab CI is integrated directly into GitLab, simplifying setup and configuration.

- Ease of Use: GitLab CI is generally considered easier to use, especially for developers already familiar with GitLab. Jenkins requires more technical expertise for setup and configuration.

- Flexibility: Jenkins is highly customizable and extensible, offering a wide range of plugins for various tools and integrations. GitLab CI is less flexible but often sufficient for most projects.

- Scalability: Both can scale to handle large projects, but Jenkins often requires more manual configuration for scaling.

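- Cost: Jenkins is open-source and free to use, although you pay for the servers that host it. GitLab CI is included in GitLab’s free tier, but CI/CD compute minutes on GitLab.com and some advanced features depend on the GitLab plan.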

The best choice depends on project needs and team expertise. Smaller projects might prefer GitLab CI for its simplicity, while larger, more complex projects might benefit from Jenkins’ flexibility and extensibility.

Q 8. How do you configure Jenkins to build and deploy an application?

Configuring Jenkins for building and deploying an application involves several key steps. Think of it like an assembly line for your software: each stage has a specific job.

First, you’ll need to install and set up Jenkins, ideally on a dedicated server for stability. Then, you create a new Jenkins job, specifying the type (e.g., Freestyle project). Next comes the crucial part: defining the build process. This usually involves:

- Source Code Management (SCM): Connect Jenkins to your Git repository (GitHub, GitLab, Bitbucket). This is where Jenkins fetches the code to build.

- Build Triggers: Configure how the build process is initiated. Common options include polling the SCM for changes (at specified intervals) or using webhooks to trigger builds immediately after a commit.

- Build Steps: This is where you define the actual build commands. For example, if you’re building a Java application, you might use Maven or Gradle. Each step is executed sequentially.

- Post-build Actions: These actions run after the build steps, and deployment is usually configured to run only when the build succeeds. This is where you’d typically deploy your application to a staging or production environment. You might use tools like SSH, SCP, or dedicated deployment plugins.

Example: Let’s say you have a simple Java application. Your build steps might include:

```bash
# Compile and package the application (this also runs the tests)
mvn clean install
# Create a deployment directory on the server
ssh user@server 'mkdir -p /var/www/myapp'
# Copy the WAR file to the server
scp target/myapp.war user@server:/var/www/myapp/
# Restart the application server
ssh user@server 'systemctl restart myapp'
```

Remember to manage credentials securely within Jenkins, avoiding hardcoding sensitive information directly in the configuration. Using Jenkins’ built-in credential management is essential.

Q 9. How do you manage dependencies in your CI/CD pipeline?

Managing dependencies effectively is vital for reproducible and reliable builds. Think of it like baking a cake – you need the right ingredients in the right amounts. In CI/CD, these ‘ingredients’ are your project dependencies.

The most common approach is using a dependency management tool tailored to your programming language (e.g., Maven for Java, npm for Node.js, pip for Python). These tools manage the versions of libraries your application relies on, ensuring consistency across different environments.

In your CI/CD pipeline, you should:

- Declare dependencies: Your project should explicitly define its dependencies in a configuration file (like pom.xml for Maven or package.json for npm).

- Use a dependency cache: Tools like Maven and npm have built-in caching mechanisms that speed up subsequent builds by reusing previously downloaded dependencies. Because CI agents are often recreated between runs, configure your CI tool to persist this cache; this significantly improves pipeline efficiency.

- Dependency locking: To prevent unexpected updates from breaking your build, use mechanisms like package-lock.json (npm, installed with npm ci) or Maven’s dependency management features (exact versions rather than version ranges) to lock down specific dependency versions.

- Regular dependency updates: Periodically review and update your dependencies to benefit from bug fixes and security patches. Automated tools such as Dependabot or Renovate can greatly assist by opening update requests for you.
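As a minimal sketch of enforcing locked-down versions in CI, the Python function below parses a POM and flags dependency versions that can change between builds: version ranges such as [1.0,2.0), -SNAPSHOT versions, and the LATEST/RELEASE meta-versions. It is a hypothetical helper, not a Maven feature. Dependencies without a version element are skipped because a parent POM or a dependencyManagement section may pin them. A pipeline could run it early and fail the build if the returned list is non-empty.

```python
import xml.etree.ElementTree as ET
from typing import List


def _local(tag: str) -> str:
    """Strip an XML namespace: '{http://maven.apache.org/POM/4.0.0}version' -> 'version'."""
    return tag.rsplit("}", 1)[-1]


def unstable_dependencies(pom_xml: str) -> List[str]:
    """List dependencies whose declared version may resolve differently over time."""
    root = ET.fromstring(pom_xml)
    flagged: List[str] = []
    for elem in root.iter():
        if _local(elem.tag) != "dependency":
            continue
        fields = {_local(child.tag): (child.text or "").strip() for child in elem}
        version = fields.get("version")
        if not version:
            continue  # version probably managed elsewhere (parent POM, BOM)
        is_range = version[0] in "[("
        is_moving = version.endswith("-SNAPSHOT") or version in ("LATEST", "RELEASE")
        if is_range or is_moving:
            flagged.append(f"{fields.get('groupId')}:{fields.get('artifactId')}:{version}")
    return flagged
```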

Example (Maven): Your pom.xml file explicitly defines the versions of your dependencies, preventing conflicts and ensuring consistent builds across different environments. The Maven build process automatically downloads and manages these dependencies.

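```xml
<dependency>
  <groupId>org.example</groupId>
  <artifactId>my-library</artifactId>
  <version>1.0.0</version>
</dependency>
```

Q 10. Explain the use of Docker in a CI/CD pipeline.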

Docker improves CI/CD pipelines by providing consistent and isolated build and runtime environments. Imagine shipping your application in a perfectly packaged container: that’s what Docker does. Because the image bundles the application with its dependencies and runtime, the application runs the same way in development, testing, and production.

Key benefits of using Docker in CI/CD:

- Consistency: Docker images ensure that your application runs consistently across different environments because the environment itself is included as part of the image.

- Isolation: Docker containers provide a level of isolation, preventing conflicts between applications and their dependencies.

- Reproducibility: Building Docker images as part of your pipeline makes builds and deployments far more reproducible, especially when base images are pinned to specific versions rather than floating tags like latest.

- Improved efficiency: Docker caches image layers, so unchanged build steps are reused and build times drop.

- Simplified deployments: Deploying a Docker image is generally easier than deploying applications directly to servers.

In a typical pipeline:

- The pipeline builds a Docker image from the application code.

- The image is pushed to a Docker registry (e.g., Docker Hub, private registry).

- A separate deployment step pulls the image from the registry and runs it in the target environment (e.g., Kubernetes).

Example: Your Jenkinsfile might include steps like:

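```bash
# Tag the image with the registry prefix so that the push below can find it.
# An immutable tag (such as the commit SHA) is safer than latest for deployments.
docker build -t my-docker-registry/myapp:latest .
docker push my-docker-registry/myapp:latest
```

Q 11. How do you implement security best practices in your CI/CD pipeline?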

Security is paramount in CI/CD. A compromised pipeline can lead to severe consequences. Think of it like protecting your home – you’d secure the doors and windows. Similarly, you must secure your CI/CD pipeline.

Key security best practices:

- Least privilege access: Grant only the necessary permissions to users and services involved in the pipeline.

- Secure credentials management: Use Jenkins’ built-in credential management or a dedicated secrets management solution (e.g., HashiCorp Vault). Never hardcode passwords or API keys in your pipeline configuration.

- Regular security audits: Conduct regular security assessments and penetration testing to identify and address vulnerabilities.

- Image scanning: Scan Docker images for known vulnerabilities before deploying them. Tools like Clair and Trivy can help.

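- Input validation: Validate and sanitize all inputs to your pipeline (build parameters, branch names, merge request titles) before using them in shell commands, to prevent injection attacks.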

- Secure communication: Use HTTPS for communication between components of the pipeline.

- Regular updates: Keep all tools and components involved in your pipeline up-to-date with the latest security patches.

By following these best practices, you minimize your attack surface and significantly reduce the risk of compromise.
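Input validation is easiest to see with a concrete case: a branch name supplied as a build parameter. The Python sketch below, assuming a deliberately strict allowlist (Git itself permits more characters), rejects suspicious names and builds an argument list instead of a shell string, so nothing is interpreted by a shell. validate_branch and checkout_command are hypothetical helpers.

```python
import re
from typing import List

# Deliberately strict allowlist for branch names received as pipeline parameters.
# Git permits more characters; this pattern is an assumption chosen for safety.
SAFE_BRANCH = re.compile(r"[A-Za-z0-9._/-]{1,100}")


def validate_branch(name: str) -> str:
    """Reject branch names that could enable shell or option injection."""
    if not SAFE_BRANCH.fullmatch(name) or name.startswith("-") or ".." in name:
        raise ValueError(f"refusing suspicious branch name: {name!r}")
    return name


def checkout_command(branch: str) -> List[str]:
    """Build an argv list; passing a list to subprocess avoids shell parsing."""
    return ["git", "checkout", validate_branch(branch)]


# Usage (not executed here):
#   subprocess.run(checkout_command(user_supplied_branch), check=True)
```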

Q 12. How do you monitor and track the performance of your CI/CD pipeline?

Monitoring and tracking your CI/CD pipeline’s performance is crucial for identifying bottlenecks and ensuring smooth operation. Think of it as monitoring your car’s engine – you need to check vital signs. The same applies to your pipeline.

Key monitoring strategies:

- Pipeline duration: Track the time it takes to complete each stage of the pipeline. This helps identify slow steps that can be optimized.

- Error rates: Monitor the number of failed builds and deployments to understand the stability of your pipeline.

- Resource utilization: Track CPU, memory, and disk usage of the build agents to prevent resource exhaustion.

- Logging: Use comprehensive logging to gain insights into pipeline execution. Centralized logging systems are highly beneficial.

- Metrics dashboards: Create dashboards to visualize key metrics, providing a clear overview of pipeline health.

- Alerting: Set up alerts for critical events, such as failed builds, deployments, or resource limitations.

Tools like Prometheus, Grafana, and Datadog can be integrated with your CI/CD pipeline to provide comprehensive monitoring and alerting capabilities.
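As a sketch of the first two strategies (pipeline duration and error rates), the Python function below summarizes a batch of pipeline runs. The record format is an assumption for illustration; in practice the data would come from the Jenkins or GitLab API, or from an exporter feeding Prometheus.

```python
from statistics import mean
from typing import Dict, List


def stage_report(builds: List[Dict]) -> Dict[str, object]:
    """Summarize pipeline runs.

    Each record is assumed to look like
        {"status": "success" or "failed", "stages": {"build": 42.0, "test": 130.5}}
    with stage durations in seconds (a hypothetical format).
    """
    durations: Dict[str, List[float]] = {}
    for build in builds:
        for stage, seconds in build["stages"].items():
            durations.setdefault(stage, []).append(seconds)
    averages = {stage: mean(values) for stage, values in durations.items()}
    failed = sum(1 for b in builds if b["status"] == "failed")
    return {
        "avg_stage_seconds": averages,
        # The slowest stage on average is the first candidate for optimization.
        "slowest_stage": max(averages, key=averages.get) if averages else None,
        "failure_rate": failed / len(builds) if builds else 0.0,
    }
```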

Q 13. Describe your experience with different CI/CD tools (e.g., Bamboo, CircleCI).

I have extensive experience with various CI/CD tools, including Jenkins, GitLab CI, Bamboo, and CircleCI. Each tool has its strengths and weaknesses, and the best choice depends on the specific project requirements and team preferences.

Jenkins: Highly flexible and customizable, but can have a steeper learning curve. Ideal for complex pipelines and large organizations.

GitLab CI: Tightly integrated with GitLab, simplifying the setup and management of pipelines. Excellent for projects already hosted on GitLab.

Bamboo: A robust, commercially supported option from Atlassian, providing good integration with other Atlassian tools (Jira, Bitbucket). Suitable for organizations already invested in the Atlassian ecosystem.

CircleCI: Cloud-based CI/CD platform, offering ease of use and scalability. A good choice for projects that don’t require extensive customization or on-premise infrastructure.

I’ve used these tools in different contexts, adapting my approach to each project’s specific needs. My experience covers everything from setting up basic pipelines to designing and implementing complex, multi-stage deployments involving various technologies.

Q 14. How do you handle version control in your CI/CD pipeline?

Version control is the backbone of any successful CI/CD pipeline. Think of it as the blueprint for your application’s evolution – every change is tracked. The most common version control system is Git.

Integrating Git into your CI/CD pipeline:

- Centralized repository: Use a central Git repository (e.g., GitHub, GitLab, Bitbucket) to store your code.

- Branching strategy: Implement a robust branching strategy (e.g., Gitflow) to manage different versions of your application and streamline development.

- Automated code checks and reviews: Run linters and static analysis in the pipeline, and require merge request (pull request) reviews before code is merged, to ensure code quality and catch potential issues early.

- Commit messages: Encourage clear and informative commit messages to document changes and improve traceability.

- Tags: Use tags to mark significant releases (e.g., v1.0.0) for easy identification and deployment.

Within the CI/CD pipeline, each push or merge automatically triggers the pipeline to pull the changes from the Git repository, build the application, run the tests, and deploy according to the defined build triggers and deployment strategy. Git branches also allow separate build and deployment processes for development, testing, and production.
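Release tags are often consumed by the pipeline itself, for example to find the most recent release. The Python sketch below assumes tags follow the vMAJOR.MINOR.PATCH convention (as in v1.0.0); latest_release is a hypothetical helper. It compares version numbers numerically, because plain string sorting would rank v1.9.0 above v1.10.0.

```python
import re
from typing import List, Optional, Tuple

# Release tags are assumed to look like v1.0.0 (vMAJOR.MINOR.PATCH).
RELEASE_TAG = re.compile(r"v(\d+)\.(\d+)\.(\d+)")


def latest_release(tags: List[str]) -> Optional[str]:
    """Return the highest release tag, comparing version numbers numerically.

    Tags that do not match the pattern (e.g. 'nightly') are ignored.
    """
    best: Optional[Tuple[Tuple[int, ...], str]] = None
    for raw in tags:
        tag = raw.strip()
        match = RELEASE_TAG.fullmatch(tag)
        if not match:
            continue
        key = tuple(int(part) for part in match.groups())
        if best is None or key > best[0]:
            best = (key, tag)
    return best[1] if best else None
```

Git can produce the same ordering natively with git tag --sort=v:refname; the sketch just makes the comparison rule explicit.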

Q 15. What are some best practices for writing Jenkinsfiles?

Writing effective Jenkinsfiles is crucial for creating robust and maintainable CI/CD pipelines. Think of a Jenkinsfile as a blueprint for your entire build, test, and deployment process. Best practices revolve around readability, reusability, and maintainability.

- Declarative Pipeline: Prefer the declarative pipeline syntax over the scripted pipeline. It’s more readable, easier to understand, and less prone to errors. This approach uses a structured syntax to define stages and steps.

- Modularity: Break your pipeline into smaller, reusable stages and functions, and move logic shared across projects into a Jenkins Shared Library. This promotes reuse across projects and makes pipelines easier to maintain and update. Think of it like building with LEGO bricks: small, interchangeable parts.

- Version Control: Always store your Jenkinsfile in version control (like Git) alongside your application code. This allows for tracking changes, collaboration, and easy rollback in case of issues.

- Parameterization: Use parameters to make your Jenkinsfile flexible. This allows you to easily change configurations without modifying the code itself. For instance, you could parameterize the environment (development, testing, production).

- Error Handling: Handle failures explicitly so that they are reported clearly and cleanup still runs. In declarative pipelines, use the post section (for example, always or failure blocks) for notifications and cleanup; try-catch blocks are available inside a script block when you need finer control, and they should produce meaningful error messages.

- Logging: Thorough logging is critical for debugging and monitoring. Use the echo step liberally to provide context and track the pipeline’s progress.

Example (Declarative Pipeline):

```groovy
pipeline {
    agent any
    stages {
        stage('Build') {
            steps {
                // Compile and package; the tests run in the next stage
                sh 'mvn -B clean package -DskipTests'
            }
        }
        stage('Test') {
            steps {
                sh 'mvn -B test'
            }
        }
        stage('Deploy') {
            steps {
                sh 'kubectl apply -f deployment.yaml'
            }
        }
    }
    post {
        failure {
            echo 'Pipeline failed; check the stage logs above.'
        }
    }
}
```

Q 16. Explain the concept of Infrastructure as Code (IaC) and its role in CI/CD.

Infrastructure as Code (IaC) is the practice of managing and provisioning infrastructure through code instead of manual processes. Think of it as writing code to create and manage your servers, networks, and other infrastructure components. This matters for CI/CD because it lets you automate the entire infrastructure setup as part of your pipeline.

Role in CI/CD: IaC integrates naturally with CI/CD pipelines by automating the creation of the environments needed for testing and deployment. For example, you can use tools like Terraform or Ansible to define your infrastructure in code, and then trigger the infrastructure provisioning as a stage within your Jenkins or GitLab pipeline. This ensures consistency and repeatability and greatly reduces the risk of manual configuration errors.

Benefits:

- Automation: Automates the creation and management of infrastructure.

- Consistency: Ensures consistent infrastructure across different environments.

- Version Control: Tracks changes to infrastructure configurations, allowing for easy rollback.

- Repeatability: Enables easy recreation of environments.

- Collaboration: Facilitates collaboration among development and operations teams.
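The core idea behind declarative IaC tools such as Terraform is reconciliation: compare the desired state written in code with the actual state and compute the changes needed. The Python sketch below models that "plan" step with plain dictionaries; it is a toy illustration, not how Terraform is implemented, and the resource names are made up.

```python
from typing import Dict, List, Tuple

Resources = Dict[str, Dict[str, str]]  # resource name -> attributes


def plan(desired: Resources, actual: Resources) -> List[Tuple[str, str]]:
    """Return (action, resource) pairs needed to make `actual` match `desired`."""
    changes: List[Tuple[str, str]] = []
    for name in sorted(desired):
        if name not in actual:
            changes.append(("create", name))
        elif desired[name] != actual[name]:
            changes.append(("update", name))
    for name in sorted(actual):
        if name not in desired:
            changes.append(("delete", name))  # drift: exists but is not in code
    return changes
```

Because the plan depends only on the two states, running it again after the changes are applied yields an empty list. This idempotence is what makes provisioning safe to run as a routine pipeline stage.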

Q 17. How do you integrate testing into your CI/CD pipeline?

Integrating testing into your CI/CD pipeline is absolutely critical for ensuring high-quality software. The goal is to automate as much testing as possible and run it frequently, ideally after every code commit.

Integration Steps:

- Unit Tests: These tests should be part of the build process and run automatically. Tools like JUnit or pytest are frequently used.

- Integration Tests: These tests verify the interaction between different components of your system and should run after the build phase. They often require setting up a test environment.

- System Tests/End-to-End Tests: These tests verify the complete system functionality and should be run in a staging environment mimicking production as closely as possible.

- Test Reporting: Aggregate test results and generate reports to track progress and identify issues. Most test frameworks can write JUnit-style XML reports, which Jenkins and GitLab can display, and tools such as Allure produce richer reports.

Example (Jenkins): In your Jenkinsfile, you’d include stages for each test type. The pipeline would halt if tests fail, preventing faulty code from being deployed. If using a test framework like JUnit, you could integrate a test result reporter plugin within your Jenkins pipeline.

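```groovy
stage('Test') {
    steps {
        sh './gradlew test'
    }
    post {
        always {
            // Publish results even if tests failed; this is Gradle's default JUnit XML location
            junit 'build/test-results/test/*.xml'
        }
    }
}
```

Q 18. What are different types of testing (unit, integration, system, etc.) and where do they fit in the CI/CD pipeline?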

Various types of testing are used at different stages of the software development lifecycle to ensure quality and reliability. They fall into a logical sequence within the CI/CD pipeline.

- Unit Tests: Test individual components or units of code in isolation. They are the first line of defense and typically run very fast. They are part of the build process itself.

- Integration Tests: Verify the interactions between different units or modules. These are usually run after successful unit tests and require more setup.

- System Tests (End-to-End Tests): Verify the functionality of the entire system as a whole. They simulate real-world scenarios and often involve multiple components. These typically run in a staging environment.

- Regression Tests: Ensure that new code changes haven’t broken existing functionality. They are often a subset of unit, integration, and system tests, run after every code change.

- Performance Tests (Load Tests): These tests assess how the system performs under stress, checking scalability and responsiveness.

- UI Tests: Focus on the user interface, verifying that the application behaves as expected from a user perspective. These tests are often more time-consuming.

Placement in CI/CD Pipeline: Unit tests are typically run early in the pipeline, followed by integration tests, and then system tests in a staging environment. Performance and UI tests might be performed less frequently, perhaps before release to production.

Q 19. Explain the concept of blue/green deployment and canary releases.

Both blue/green deployments and canary releases are advanced deployment strategies aimed at minimizing downtime and risk during deployments.

Blue/Green Deployment: This involves maintaining two identical environments: a ‘blue’ (live) environment and a ‘green’ (staging) environment. You deploy your new code to the ‘green’ environment, thoroughly test it, and then switch traffic from ‘blue’ to ‘green’. If something goes wrong, you can quickly switch back. Think of it like a theater with two identical stages: one hosts the live performance while the other is used for rehearsal.

Canary Release: This involves gradually rolling out new code to a small subset of users (your ‘canaries’) before deploying it to the entire user base. You monitor the performance and behavior of the new code in the small group and roll back if issues arise. It’s like testing a new medicine on a small group before wider distribution.

Key Differences: Blue/green switches all traffic at once and allows an almost instant rollback, but requires running two full environments. Canary exposes the new version to a small share of real traffic first, so it is better suited to situations where you need to assess the impact on a live system carefully before a full release.
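The canary logic can be sketched in Python: route a small percentage of requests to the new version, then compare its error rate with the stable version before promoting it. The thresholds, function names, and minimum-traffic check are assumptions for illustration; production systems typically delegate routing to a load balancer or service mesh and the analysis to a monitoring system.

```python
import random
from typing import Callable


def route(canary_percent: float, rng: Callable[[], float] = random.random) -> str:
    """Send roughly `canary_percent` percent of requests to the canary."""
    return "canary" if rng() * 100 < canary_percent else "stable"


def canary_decision(stable_errors: int, stable_requests: int,
                    canary_errors: int, canary_requests: int,
                    tolerance: float = 0.01, min_requests: int = 100) -> str:
    """Return 'wait', 'promote', or 'rollback' based on observed error rates."""
    if canary_requests < min_requests or stable_requests == 0:
        return "wait"  # not enough traffic yet to judge the canary
    stable_rate = stable_errors / stable_requests
    canary_rate = canary_errors / canary_requests
    # Roll back if the canary is noticeably worse than the stable version.
    return "rollback" if canary_rate > stable_rate + tolerance else "promote"
```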

Q 20. How do you manage secrets in your CI/CD pipeline?

Managing secrets (like API keys, database passwords, and certificates) securely in your CI/CD pipeline is paramount. Hardcoding secrets directly into your Jenkinsfile or scripts is a major security risk. Instead, employ dedicated secret management solutions.

- Dedicated Secret Management Tools: Use tools like HashiCorp Vault, AWS Secrets Manager, or Azure Key Vault to store and manage your secrets centrally. Your CI/CD pipeline can then securely access these secrets through these tools’ APIs.

- Environment Variables: Store secrets as environment variables in your CI/CD environment. Jenkins and GitLab provide mechanisms for securely injecting environment variables into your pipelines.

- Jenkins Credentials Plugin: In Jenkins, use the Credentials Binding plugin to securely store and retrieve credentials without exposing them in the Jenkinsfile itself.

- GitLab CI/CD Variables: In GitLab CI/CD, you can define variables (including masked variables for secrets) at the project or group level. These variables are automatically available to your pipelines.

- Least Privilege: Grant only the necessary permissions to your CI/CD pipeline to access the secrets it needs, adhering to the principle of least privilege.
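Both Jenkins (through the Credentials Binding plugin) and GitLab (through masked variables) hide secret values in job logs. The Python sketch below illustrates that idea for custom scripts, assuming secrets are injected as environment variables; mask_secrets and require_secret are hypothetical helpers, not part of either tool.

```python
import os
from typing import Iterable


def mask_secrets(text: str, secrets: Iterable[str]) -> str:
    """Replace every occurrence of each secret value with '[MASKED]'.

    Longer secrets are masked first, so a secret that contains another
    secret is hidden completely.
    """
    for value in sorted((s for s in secrets if s), key=len, reverse=True):
        text = text.replace(value, "[MASKED]")
    return text


def require_secret(name: str) -> str:
    """Read a secret injected as an environment variable; fail loudly if absent."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"secret {name} is not set; configure it in the CI/CD tool, not in code")
    return value
```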

Q 21. What are some common metrics used to measure the success of a CI/CD pipeline?

Measuring the success of a CI/CD pipeline goes beyond simply checking if deployments succeed. Key metrics provide insights into efficiency, reliability, and overall performance.

- Deployment Frequency: How often are deployments happening? A higher frequency generally indicates a more efficient pipeline.

- Lead Time for Changes: How long does it take to go from code commit to deployment? Shorter lead times are better.

- Mean Time to Recovery (MTTR): How long does it take to recover from a failure? Lower MTTR indicates better resilience.

- Change Failure Rate: What percentage of deployments result in failures? Lower is better.

- Deployment Success Rate: What percentage of deployments are successful? High success rates show pipeline stability.

- Test Automation Rate: What percentage of testing is automated? Higher automation means faster feedback loops and better quality.

By monitoring these metrics, you can identify areas for improvement in your CI/CD pipeline and continuously optimize it for efficiency and reliability. Tools like Prometheus and Grafana are commonly used to visualize and analyze these metrics.
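Deployment frequency, lead time for changes, MTTR, and change failure rate are widely known as the DORA metrics. The Python sketch below computes them from a list of deployment records; the Deployment record shape is an assumption, and MTTR is simplified here to the time from a failed deployment to restoration of service.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Dict, List, Optional


@dataclass
class Deployment:
    committed_at: datetime                   # when the change was committed
    deployed_at: datetime                    # when it reached production
    failed: bool = False                     # did it cause a production failure?
    restored_at: Optional[datetime] = None   # when service was restored, if it failed


def dora_summary(deploys: List[Deployment], period_days: int) -> Dict[str, object]:
    """Compute DORA-style metrics over a reporting period."""
    if not deploys:
        return {}
    lead_times = [d.deployed_at - d.committed_at for d in deploys]
    failures = [d for d in deploys if d.failed]
    recoveries = [d.restored_at - d.deployed_at for d in failures if d.restored_at]
    return {
        "deploys_per_day": len(deploys) / period_days,
        "median_lead_time": median(lead_times),
        "change_failure_rate": len(failures) / len(deploys),
        "mttr": sum(recoveries, timedelta()) / len(recoveries) if recoveries else None,
    }
```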

Q 22. How do you troubleshoot common pipeline failures?

Troubleshooting pipeline failures starts with understanding the error messages. Think of it like detective work – you need to gather clues to find the culprit. I begin by examining the logs generated at each stage of the pipeline. Jenkins and GitLab both provide detailed logging that can pinpoint the exact point of failure. These logs often include stack traces, which are incredibly valuable in identifying the root cause. For instance, a failed build might indicate a problem with code compilation, a dependency issue, or a test failure. A deployment failure could stem from network issues, server configuration problems, or incorrect deployment scripts.

Once the error is identified, I investigate the underlying cause. This could involve checking code changes, examining server configurations, and verifying network connectivity. Using version control effectively is key; I often rebuild earlier commits, for example with git bisect, to isolate the change that introduced the failure. Furthermore, I leverage debugging tools and techniques specific to the technology stack. For example, if a test fails, I’ll use a debugger to step through the code and identify where it breaks. Finally, comprehensive monitoring tools help proactively identify slowdowns or anomalies that could lead to failure.

Let’s say a deployment to production fails due to a database connection issue. My first step would be to check the production database logs for errors. Then I’d verify the database credentials used in the deployment script are correct and the database server is accessible. If the problem persists, I’d consider using a rollback strategy to revert to the last known good deployment, minimizing disruption.
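Reverting to earlier builds to isolate a faulty change is most efficient as a binary search, which is what git bisect automates. The Python sketch below shows the idea, assuming commits are ordered oldest first and a hypothetical is_good callback rebuilds and tests a given commit.

```python
from typing import Callable, List


def first_bad_commit(commits: List[str], is_good: Callable[[str], bool]) -> str:
    """Binary-search an ordered commit list (oldest first) for the first failing commit.

    Assumes commits[0] is known good, commits[-1] is known bad, and that every
    commit after the first bad one is also bad (the same assumption git bisect makes).
    """
    lo, hi = 0, len(commits) - 1  # invariant: commits[lo] good, commits[hi] bad
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if is_good(commits[mid]):
            lo = mid
        else:
            hi = mid
    return commits[hi]
```

With n commits between a good and a bad build, this needs only about log2(n) rebuilds; git bisect run can drive the same search with a test script.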

Q 23. Explain your experience with different deployment strategies (e.g., rolling deployment, rolling back).

Deployment strategies are crucial for minimizing downtime and risk. Rolling deployments, for example, are like gradually replacing old parts in a machine. Instead of deploying the new version all at once, you update a small subset of servers, monitor their performance, and then proceed to update the rest. This reduces the impact of any unforeseen issues.

Rolling back is just as vital; it’s the ‘undo’ button in case something goes wrong. If problems surface after a deployment, a well-defined rollback procedure ensures you can quickly revert to the previous stable version. Both approaches require robust monitoring and automated rollback mechanisms. In my experience, I’ve used blue-green deployments extensively: the new version is deployed to the idle ‘green’ environment while ‘blue’ keeps serving users, and traffic is switched only after validation. This minimizes downtime and offers a safety net in case of issues.

Consider a scenario where we’re deploying a new feature. A rolling deployment strategy would mean updating 20% of servers first, observing performance metrics (like latency and error rates). If things look good, we incrementally update the remaining servers. If issues arise, we have the option to halt the deployment and even roll back to the previous version quickly and easily using scripts and automation.
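The 20% rolling deployment described above can be sketched in Python. The server map, health check, and rolling_deploy function are hypothetical; orchestrators such as Kubernetes implement rolling updates natively, so this only illustrates the control flow: update a batch, check its health, and on failure halt and roll back every server touched so far.

```python
import math
from typing import Callable, Dict, List


def rolling_deploy(servers: Dict[str, str], new_version: str,
                   healthy: Callable[[str], bool], batch_fraction: float = 0.2) -> bool:
    """Update servers in batches; on an unhealthy batch, roll back all updated servers.

    `servers` maps server name -> running version and is modified in place.
    """
    names = sorted(servers)
    batch_size = max(1, math.ceil(len(names) * batch_fraction))
    previous = dict(servers)  # remember versions for rollback
    updated: List[str] = []
    for start in range(0, len(names), batch_size):
        batch = names[start:start + batch_size]
        for name in batch:
            servers[name] = new_version
            updated.append(name)
        if not all(healthy(name) for name in batch):
            for name in updated:  # halt the rollout and restore old versions
                servers[name] = previous[name]
            return False
    return True
```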

Q 24. How do you ensure the scalability and reliability of your CI/CD pipeline?

Scalability and reliability are paramount. To ensure a scalable CI/CD pipeline, I utilize infrastructure-as-code (IaC) tools like Terraform or CloudFormation. This allows me to define and manage the pipeline’s infrastructure programmatically, making it easily replicable and scalable. Furthermore, I employ parallel processing where possible. For example, running tests concurrently across multiple machines speeds up the process dramatically. Auto-scaling is also crucial; this ensures the pipeline can handle varying workloads without performance degradation.

Reliability is achieved through redundancy and monitoring. Using multiple build agents or runners keeps the pipeline working if one of them fails. Comprehensive monitoring using tools like Prometheus and Grafana allows for proactive identification and resolution of potential bottlenecks or errors. Automated alerting systems also play a key role, notifying the team of critical issues in real time. Regular testing and updates to the pipeline itself are essential: treating the CI/CD pipeline as code allows for the same rigorous testing and version control as the application.

Imagine a scenario with a surge in pull requests during a release cycle. A well-designed scalable pipeline with auto-scaling features will automatically spin up additional runners to handle the increased workload, preventing slowdowns and ensuring that builds are processed quickly.
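A simple autoscaling rule for runners sizes the pool to the job queue. The Python sketch below assumes a fixed number of concurrent jobs per runner and hard minimum and maximum limits; the function and its defaults are hypothetical, and real autoscalers add cooldown periods to avoid scaling up and down too often.

```python
import math


def desired_runners(queued_jobs: int, jobs_per_runner: int,
                    min_runners: int = 1, max_runners: int = 20) -> int:
    """Size a runner pool to the job queue, clamped to [min_runners, max_runners]."""
    needed = math.ceil(queued_jobs / jobs_per_runner) if queued_jobs > 0 else 0
    return max(min_runners, min(max_runners, needed))
```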

Q 25. Describe your experience with GitLab CI runners and their configuration.

GitLab CI runners are the workhorses of the GitLab CI/CD system. They execute the jobs defined in the .gitlab-ci.yml file. I have extensive experience configuring runners in various environments, including Docker, Kubernetes, and virtual machines. Configuration involves specifying the executor (Docker, shell, etc.), setting up tags for job assignment, and configuring communication with the GitLab server. A crucial aspect is securing the runners; I ensure they have appropriate access levels and employ strong authentication mechanisms. Understanding tags is important; they allow for granular control, assigning specific jobs to runners with certain capabilities, such as specific software or hardware resources.

For example, I might have one runner tagged ‘docker’ and ‘database’ dedicated to jobs requiring Docker and database access, and another tagged ‘android’ for Android application build tasks. This allows optimization of resource allocation and prevents conflicts.
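To route jobs to runners like these, each job in `.gitlab-ci.yml` lists the tags it needs under `tags:`, and GitLab assigns the job only to a runner that has all of those tags. A minimal sketch; the job names and the integration-test script are hypothetical, and the Android job assumes the project ships the standard Gradle wrapper (`gradlew`):

```yaml
# Runs only on a runner that has BOTH the "docker" and "database" tags.
integration_tests:
  stage: test
  tags:
    - docker
    - database
  script:
    - ./scripts/integration-tests.sh   # hypothetical script

# Runs only on a runner tagged "android".
build_android:
  stage: build
  tags:
    - android
  script:
    - ./gradlew assembleRelease
```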

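```toml
# /etc/gitlab-runner/config.toml (excerpt)
[[runners]]
  name = "My custom runner"
  url = "https://gitlab.example.com"
  token = "your_token"        # runner authentication token; keep it secret
  executor = "docker"
  [runners.docker]
    image = "alpine:latest"   # default image for jobs that do not specify one
# Tags such as "docker" and "database" are not set in this file; they are
# assigned when the runner is created or registered in GitLab.
```

Q 26. Explain the use of GitLab CI/CD variables and pipelines.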

GitLab CI/CD variables and pipelines are powerful tools for managing configuration and data within the CI/CD process. Variables let you keep sensitive information, such as API keys or database credentials, out of your scripts: marking a variable as masked hides its value in job logs, and marking it as protected limits it to pipelines running on protected branches or tags. Variables can be defined in instance, group, or project settings, as well as in `.gitlab-ci.yml` for non-sensitive values, which gives flexibility in managing configuration. Pipelines are the workflows that orchestrate the stages of your CI/CD process as a sequence of jobs. Variables are available to every job in the pipeline, and a value computed in one job can be handed to later jobs through a dotenv report artifact. Storing credentials as variables is much safer than hardcoding them in your `.gitlab-ci.yml` file.
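Both ways of sharing values described above can be sketched in a few lines: a top-level variable visible to every job, and a value computed in one job that reaches a later job through a dotenv report artifact. `APP_NAME` and the version format are hypothetical; `CI_PIPELINE_IID` is a predefined GitLab variable.

```yaml
variables:
  APP_NAME: "my-app"   # hypothetical, non-secret value visible to every job

build:
  stage: build
  script:
    # Write a computed value to a dotenv file.
    - echo "BUILD_VERSION=1.0.${CI_PIPELINE_IID}" >> build.env
  artifacts:
    reports:
      dotenv: build.env   # exposes BUILD_VERSION to jobs in later stages

deploy:
  stage: deploy
  script:
    - echo "Deploying $APP_NAME version $BUILD_VERSION"
```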

Consider deploying an application to AWS. You would not want to embed your AWS access keys directly in the deployment script. Instead, you would define CI/CD variables such as AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY at the project level (masked, and protected if only protected branches deploy). GitLab exposes them to each job as environment variables, where the AWS CLI picks them up automatically.

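```yaml
# AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY are masked project-level CI/CD
# variables (Settings > CI/CD > Variables), so GitLab injects them into every
# job; redeclaring them under `variables:` is unnecessary.
deploy:
  stage: deploy
  image:
    name: amazon/aws-cli:latest
    entrypoint: [""]   # override the image's `aws` entrypoint so GitLab can run the script
  script:
    # "my-bucket" is a placeholder bucket name; the AWS CLI reads the
    # credentials from the environment variables described above.
    - aws s3 cp ./build/ s3://my-bucket/dist/ --recursive --acl public-read
```

Q 27. How do you use GitLab CI/CD to deploy to different environments (e.g., development, staging, production)?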

Deploying to different environments (development, staging, production) is handled in GitLab CI/CD by creating separate jobs and combining environment-specific variables with conditional logic. I usually define a distinct stage in my `.gitlab-ci.yml` file for each environment, and each stage contains a job responsible for deploying to that environment. Marking each job with the `environment` keyword lets GitLab track what is deployed where. Environment variables are crucial here, as they let me differentiate the configuration for each environment, for example different database URLs or server addresses.
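A minimal sketch of the per-environment setup, assuming a `DATABASE_URL` variable defined in the project's CI/CD settings once per environment scope (`development`, `staging`), a hypothetical `scripts/deploy.sh`, and a hypothetical `develop` branch. Each job receives the `DATABASE_URL` value whose scope matches its `environment`, and `rules` decide which branch deploys where.

```yaml
stages:
  - deploy_dev
  - deploy_staging

# Hidden job (leading dot) holding the shared deploy steps.
.deploy_template:
  script:
    - ./scripts/deploy.sh "$DATABASE_URL"   # hypothetical deploy script

deploy_dev:
  extends: .deploy_template
  stage: deploy_dev
  environment: development
  rules:
    - if: $CI_COMMIT_BRANCH == "develop"    # hypothetical branch name

deploy_staging:
  extends: .deploy_template
  stage: deploy_staging
  environment: staging
  rules:
    - if: $CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH
```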

This is often combined with approvals that require manual intervention before deploying to higher environments like production. This adds a layer of security and allows for human review before changes go live. For example, a production deployment job marked `when: manual` waits in the pipeline until a designated team member starts it, and GitLab's protected environments can further restrict who is allowed to trigger that deployment.

```yaml
stages:
  - build
  - test
  - deploy_dev
  - deploy_staging
  - deploy_prod

deploy_prod:
  stage: deploy_prod
  environment: production
  when: manual   # waits until a team member starts it from the pipeline view
  script:
    - kubectl apply -f k8s/production.yaml
```

Key Topics to Learn for CI/CD Pipelines (Jenkins, GitLab) Interview

- Understanding CI/CD Fundamentals: Define Continuous Integration, Continuous Delivery, and Continuous Deployment. Differentiate between these concepts and explain their benefits.

- Jenkins Configuration and Setup: Describe the process of installing and configuring Jenkins. Explain how to create and manage Jenkins jobs, including setting up pipelines.

- GitLab CI/CD Integration: Explain how GitLab CI/CD works and how to define pipelines using `.gitlab-ci.yml`. Discuss the advantages of integrating GitLab with other tools.

- Pipeline Stages: Detail the creation and management of pipeline stages (e.g., build, test, deploy). Discuss strategies for handling failures and rollbacks.

- Version Control Systems (Git): Demonstrate a strong understanding of Git branching strategies (e.g., Gitflow) and their relevance to CI/CD pipelines.

- Artifact Management: Explain how to manage build artifacts within Jenkins and GitLab CI/CD. Discuss strategies for storing and deploying artifacts efficiently.

- Testing Strategies in CI/CD: Discuss different types of testing (unit, integration, system) and how to integrate them into a CI/CD pipeline. Explain the importance of test automation.

- Security Considerations: Discuss security best practices in CI/CD, including secure credential management and pipeline security.

- Troubleshooting and Debugging: Describe common issues encountered in CI/CD pipelines and strategies for troubleshooting and debugging them.

- Monitoring and Logging: Explain the importance of monitoring CI/CD pipelines and utilizing logging for debugging and performance analysis.
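For the artifact-management and testing topics above, here is a minimal GitLab CI sketch. The `make` targets, the `dist/` directory, and the `report.xml` file name are assumptions: the build job keeps its output as an artifact for later stages, and the test job publishes a JUnit report that GitLab can display in merge requests and the pipeline's test view.

```yaml
build:
  stage: build
  script:
    - make build          # hypothetical build command that produces ./dist
  artifacts:
    paths:
      - dist/
    expire_in: 1 week     # old artifacts are cleaned up automatically

unit_tests:
  stage: test
  script:
    - make test           # hypothetical; assumed to write report.xml in JUnit format
  artifacts:
    when: always          # keep the report even when tests fail
    reports:
      junit: report.xml
```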

Next Steps

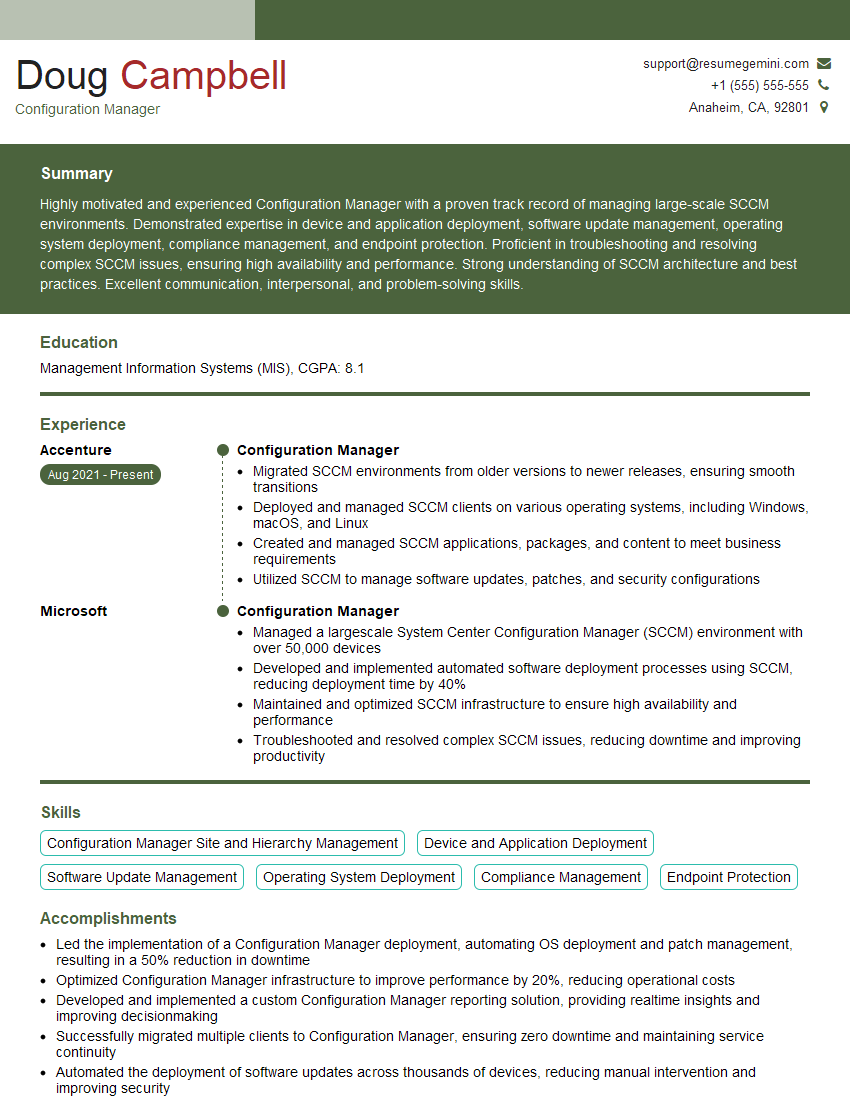

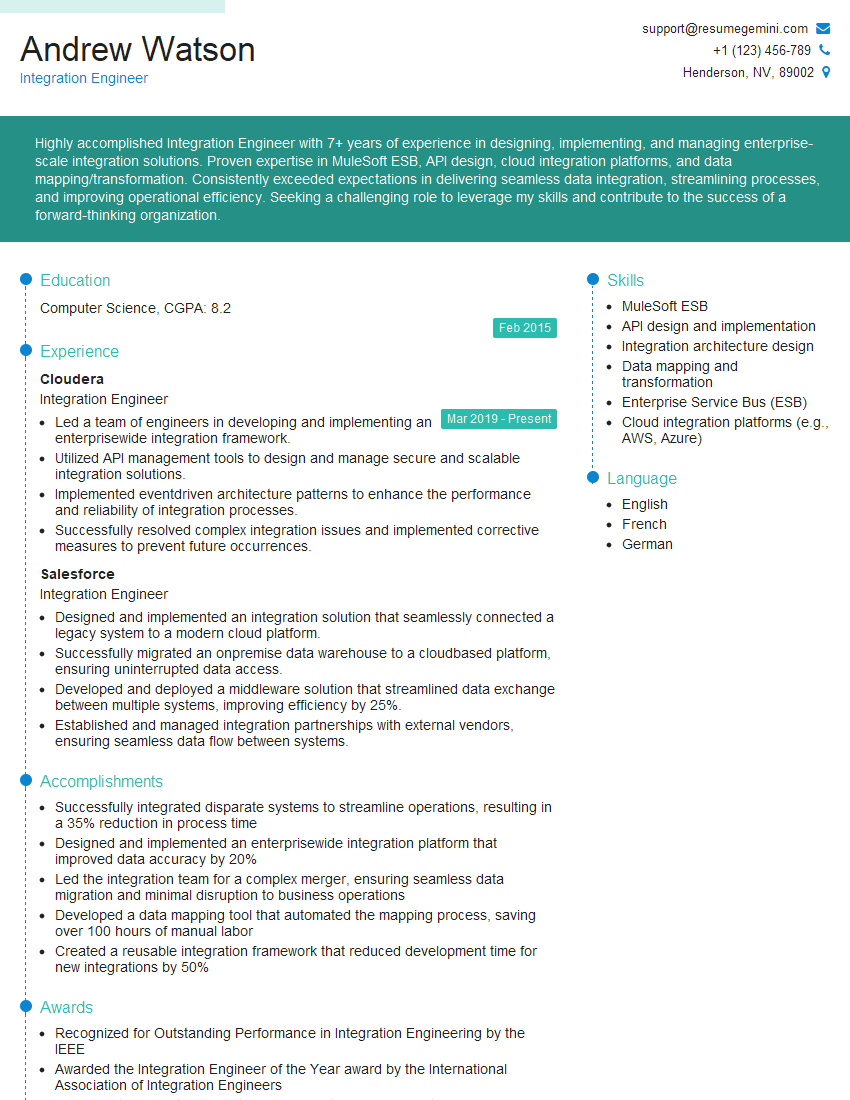

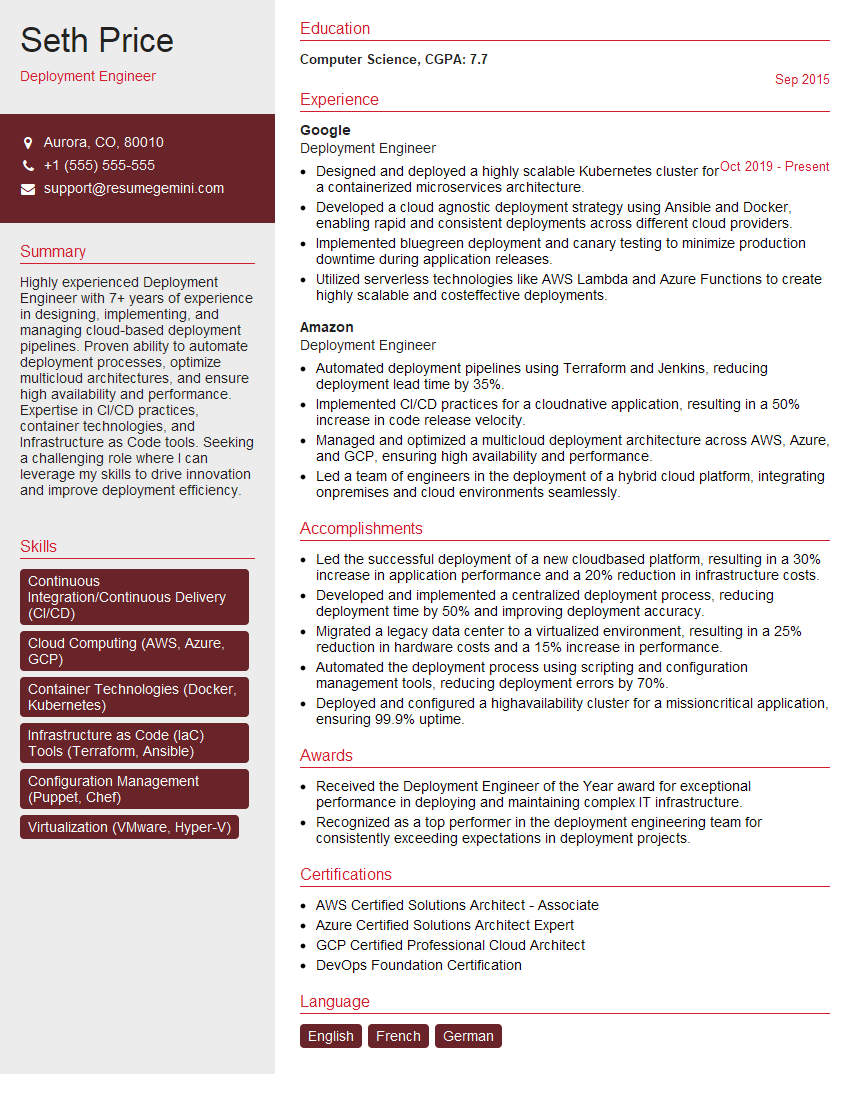

Mastering CI/CD pipelines with Jenkins and GitLab is crucial for advancing your career in software development and DevOps. These skills are highly sought after, demonstrating your ability to automate processes, improve efficiency, and contribute to a robust and reliable software delivery system. To significantly boost your job prospects, invest time in crafting an ATS-friendly resume that highlights your CI/CD expertise. ResumeGemini is a trusted resource that can help you create a compelling and effective resume. They provide examples of resumes tailored to CI/CD Pipelines (Jenkins, GitLab) roles, ensuring you present your skills in the best possible light. Take advantage of these resources and confidently showcase your abilities to land your dream job!
