Cracking a skill-specific interview, like one for Artificial Intelligence (AI)/Machine Learning (ML), requires understanding the nuances of the role. In this blog, we present the questions you’re most likely to encounter, along with insights into how to answer them effectively. Let’s ensure you’re ready to make a strong impression.

Questions Asked in Artificial Intelligence (AI)/Machine Learning (ML) Interview

Q 1. Explain the difference between supervised, unsupervised, and reinforcement learning.

The core difference between supervised, unsupervised, and reinforcement learning lies in how the algorithms learn from data. Think of it like teaching a dog:

- Supervised Learning: This is like explicitly showing your dog what’s right and wrong. You provide labeled data – input data with corresponding correct outputs. The algorithm learns to map inputs to outputs based on these examples. For instance, showing your dog pictures of cats and saying “cat” and pictures of dogs and saying “dog.” The algorithm learns to classify images as either “cat” or “dog.” Examples include image classification, spam detection, and predicting house prices.

- Unsupervised Learning: This is more like letting your dog explore and discover patterns on its own. You give the algorithm unlabeled data, and it tries to find structure, patterns, or relationships within the data. Imagine giving your dog a bunch of toys and letting it group them by color, shape, or size. Clustering, dimensionality reduction (like PCA), and anomaly detection are examples of unsupervised learning techniques.

- Reinforcement Learning: This is like training your dog with rewards and punishments. The algorithm learns through trial and error, receiving rewards for correct actions and penalties for incorrect ones. Think of teaching your dog a trick – you reward it when it performs the trick correctly and don’t reward it (or even give a slight punishment) when it makes a mistake. Examples include game playing (e.g., AlphaGo), robotics, and personalized recommendations.

Q 2. What is the bias-variance tradeoff?

The bias-variance tradeoff is a fundamental concept in machine learning that describes the relationship between the complexity of a model and its ability to generalize to unseen data. It’s like finding the sweet spot in a recipe: too much of one ingredient (bias or variance) spoils the dish (prediction accuracy).

- Bias: This refers to the error introduced by approximating a real-world problem, which is often complex, by a simplified model. High bias means the model is too simple and makes strong assumptions, leading to underfitting. Think of a very basic model that assumes a linear relationship between variables when the true relationship is actually highly complex.

- Variance: This refers to the model’s sensitivity to fluctuations in the training data. High variance means the model is too complex and fits the training data too closely, capturing noise and outliers, leading to overfitting. Imagine a model that perfectly fits every single data point in your training set, but performs poorly on new data because it’s learned the noise, not the underlying patterns.

The goal is to find a model with low bias and low variance. This usually involves finding the right balance between model complexity and the amount of training data.

Q 3. Describe different types of regularization techniques and their purpose.

Regularization techniques are used to prevent overfitting by adding a penalty to the model’s complexity. Think of it as adding constraints to your model to stop it from becoming too specialized to the training data.

- L1 Regularization (LASSO): Adds a penalty proportional to the absolute value of the model’s coefficients. This can lead to feature selection, as some coefficients may be driven to zero.

loss = original_loss + lambda * sum(|coefficients|)

- L2 Regularization (Ridge): Adds a penalty proportional to the square of the model’s coefficients. This shrinks the coefficients towards zero, but rarely sets them exactly to zero.

loss = original_loss + lambda * sum(coefficients^2)

- Elastic Net: A combination of L1 and L2 regularization, offering the benefits of both. It can be particularly useful when dealing with highly correlated features.

The parameter lambda (or alpha in some implementations) controls the strength of the regularization. A larger lambda leads to stronger regularization and simpler models, reducing overfitting but potentially increasing bias.
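As a rough illustration of the penalty formulas above, here is a minimal Python sketch. The function names, the example coefficients, and the `l1_ratio` mixing weight for Elastic Net are assumptions made for this example, not part of any particular library.

```python
def l1_penalty(coefficients, lam):
    """LASSO penalty: lambda * sum(|coefficients|)."""
    return lam * sum(abs(c) for c in coefficients)


def l2_penalty(coefficients, lam):
    """Ridge penalty: lambda * sum(coefficients^2)."""
    return lam * sum(c ** 2 for c in coefficients)


def elastic_net_penalty(coefficients, lam, l1_ratio=0.5):
    """Weighted mix of the two penalties (l1_ratio is a hypothetical mixing weight)."""
    return (l1_ratio * l1_penalty(coefficients, lam)
            + (1 - l1_ratio) * l2_penalty(coefficients, lam))


# Illustrative values only.
coefficients = [0.5, -2.0, 0.0, 1.5]
original_loss = 1.0
lam = 0.1

lasso_loss = original_loss + l1_penalty(coefficients, lam)
ridge_loss = original_loss + l2_penalty(coefficients, lam)
elastic_loss = original_loss + elastic_net_penalty(coefficients, lam)
```

Note how the L2 penalty is dominated by the largest coefficient (-2.0), while the L1 penalty grows linearly with each coefficient's magnitude.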

Q 4. Explain the concept of overfitting and underfitting.

Overfitting and underfitting are two common problems in machine learning that affect a model’s ability to generalize to new, unseen data. They represent two ends of the bias-variance spectrum.

- Overfitting: Occurs when a model learns the training data too well, including the noise and outliers. It performs exceptionally well on the training data but poorly on new data. Imagine a student who memorizes the answers to a practice test without understanding the underlying concepts – they’ll do well on the practice test but fail the actual exam.

- Underfitting: Occurs when a model is too simple to capture the underlying patterns in the data. It performs poorly on both the training data and new data. Think of a student who only learns the basic concepts without applying them to practice questions – they’ll do poorly on both the practice test and the actual exam.

The key difference is that overfitting is a high-variance problem, while underfitting is a high-bias problem. Addressing these problems often involves adjusting model complexity (e.g., number of features, model type), regularization, and increasing the amount of training data.

Q 5. How do you handle missing data in a dataset?

Handling missing data is a crucial preprocessing step in machine learning. There are several strategies, each with its own strengths and weaknesses:

- Deletion: The simplest approach, but can lead to significant information loss if a large portion of the data is missing. You can delete rows with missing values (listwise deletion) or drop columns that are mostly missing. Pairwise deletion is a third option: each analysis uses every row in which the variables it needs are present, so fewer rows are discarded.

- Imputation: Replacing missing values with estimated ones. Common methods include:

- Mean/Median/Mode Imputation: Replacing missing values with the mean, median, or mode of the respective feature. Simple but can distort the distribution, especially for non-normally distributed data.

- K-Nearest Neighbors (KNN) Imputation: Imputes missing values based on the values of similar data points. More sophisticated but computationally expensive.

- Multiple Imputation: Creates multiple plausible imputed datasets, then combines the results. Accounts for uncertainty in imputation, providing more robust estimates.

The best approach depends on the amount of missing data, the pattern of missingness, and the nature of the data. It’s often a good idea to experiment with different methods and compare their results.
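The simpler strategies above can be sketched with the standard library alone. This is a minimal illustration, assuming missing values are represented as `None`; the helper names and the sample `ages` column are made up for the example.

```python
import statistics


def impute(values, strategy="mean"):
    """Replace None entries with the mean, median, or mode of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    if strategy == "mean":
        fill = statistics.mean(observed)
    elif strategy == "median":
        fill = statistics.median(observed)
    elif strategy == "mode":
        fill = statistics.mode(observed)
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return [fill if v is None else v for v in values]


def drop_missing_rows(rows):
    """Listwise deletion: keep only rows with no missing values."""
    return [row for row in rows if all(v is not None for v in row)]


# Hypothetical column with one outlier (100) and two missing entries.
ages = [25, None, 40, 31, None, 100]
mean_filled = impute(ages, "mean")
median_filled = impute(ages, "median")

rows = [(25, "a"), (None, "b"), (40, None), (31, "c")]
complete_rows = drop_missing_rows(rows)
```

With the outlier present, the mean fill value (49) is pulled well above the median fill value (35.5), which is one reason median imputation is often preferred for skewed features.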

Q 6. What are different methods for feature scaling and selection?

Feature scaling and selection are critical for improving model performance and interpretability. They help to ensure that features contribute equally to the model and prevent features with larger values from dominating the learning process.

- Feature Scaling: This transforms features to a similar range of values. Common methods include:

- Standardization (Z-score normalization): Centers data around zero with a standard deviation of one.

z = (x - μ) / σ

- Min-Max scaling: Scales features to a range between 0 and 1.

x_scaled = (x - min) / (max - min)

- Feature Selection: This involves selecting the most relevant features for the model. Methods include:

- Filter methods: Rank features based on statistical measures (e.g., correlation, chi-squared test) and select the top-ranked ones. Simple and fast, but may not capture complex interactions between features.

- Wrapper methods: Use a model to evaluate subsets of features and select the best subset. More computationally expensive but can capture feature interactions. Examples include recursive feature elimination.

- Embedded methods: Perform feature selection as part of the model training process. Examples include L1 regularization (LASSO), which automatically performs feature selection by shrinking some coefficients to zero.
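The two scaling formulas from the Feature Scaling list can be sketched as follows. This is a minimal example using only the standard library; the function names and the sample `incomes` values are assumptions for illustration, and the population standard deviation is used for σ.

```python
import statistics


def standardize(values):
    """Z-score: z = (x - mu) / sigma, using the population standard deviation."""
    mu = statistics.mean(values)
    sigma = statistics.pstdev(values)
    if sigma == 0:
        raise ValueError("standard deviation is zero; feature is constant")
    return [(x - mu) / sigma for x in values]


def min_max_scale(values):
    """Min-max: x_scaled = (x - min) / (max - min), mapping values into [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("max equals min; feature is constant")
    return [(x - lo) / (hi - lo) for x in values]


# Hypothetical feature on a large scale.
incomes = [20_000, 40_000, 60_000, 80_000, 100_000]
z_scores = standardize(incomes)
scaled = min_max_scale(incomes)
```

In practice the mean, standard deviation, minimum, and maximum should be computed on the training set only and then reused to transform validation and test data.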

Q 7. What is cross-validation and why is it important?

Cross-validation is a resampling technique used to evaluate the performance of a machine learning model and to detect overfitting. It involves splitting the data into multiple subsets (folds), training the model on some subsets, and testing it on the remaining subset. This process is repeated several times, with a different subset held out for testing in each iteration, and the scores are averaged.

Why is it important?

- Model Evaluation: Provides a more robust estimate of the model’s performance on unseen data than using a single train-test split. This helps to avoid overly optimistic performance estimates that may be due to the specific random train-test split used.

- Hyperparameter Tuning: Allows for the selection of optimal hyperparameters by evaluating model performance across different hyperparameter settings using cross-validation. This reduces the risk of picking a setting that only looks good on one particular train-test split.

- Overfitting Detection: The difference in performance between training and cross-validation can indicate overfitting. If the model performs significantly better on the training set than on the cross-validation sets, it suggests overfitting.

Common cross-validation techniques include k-fold cross-validation (where the data is split into k folds), leave-one-out cross-validation (where each data point is used as a test set), and stratified k-fold cross-validation (which ensures that the class distribution is similar in each fold).
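To make the k-fold procedure concrete, here is a minimal pure-Python sketch. The helper names (`k_fold_indices`, `cross_validate`) and the toy mean-baseline model are assumptions for this example; a real project would more likely rely on a library implementation.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # k roughly equal folds
    for i in range(k):
        test_idx = folds[i]
        train_idx = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train_idx, test_idx


def cross_validate(xs, ys, fit, score, k=5, seed=0):
    """Train on k-1 folds, score on the held-out fold, and collect the k scores."""
    scores = []
    for train_idx, test_idx in k_fold_indices(len(xs), k, seed):
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        scores.append(score(model, [xs[i] for i in test_idx], [ys[i] for i in test_idx]))
    return scores


# Toy "model": always predict the mean of the training targets.
def fit_mean(xs, ys):
    return sum(ys) / len(ys)


def mse(model, xs, ys):
    return sum((y - model) ** 2 for y in ys) / len(ys)


xs = list(range(10))
ys = [2 * x + 1 for x in xs]
scores = cross_validate(xs, ys, fit_mean, mse, k=5)
mean_score = sum(scores) / len(scores)
```

Every sample lands in the test fold exactly once, which is what makes the averaged score less dependent on a single lucky or unlucky split.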

Q 8. Explain the concept of a confusion matrix and its metrics (precision, recall, F1-score).

A confusion matrix is a table that visualizes the performance of a classification model. It shows the counts of true positive (TP), true negative (TN), false positive (FP), and false negative (FN) predictions. Imagine you’re building a spam filter; TP would be correctly identifying spam, TN would be correctly identifying non-spam, FP would be incorrectly flagging non-spam as spam, and FN would be missing actual spam emails.

From the confusion matrix, we derive crucial metrics:

Precision: Out of all the instances predicted as positive, what proportion was actually positive? Formula: Precision = TP / (TP + FP). High precision means fewer false positives – in our spam example, it means fewer legitimate emails are mistakenly flagged as spam.

Recall (Sensitivity): Out of all the actual positive instances, what proportion did the model correctly identify? Formula: Recall = TP / (TP + FN). High recall means fewer false negatives – meaning fewer spam emails slip through the filter.

F1-Score: The harmonic mean of precision and recall, providing a balanced measure. Formula: F1-Score = 2 * (Precision * Recall) / (Precision + Recall). It’s useful when you need to balance both precision and recall.

For example, a spam filter with high precision but low recall might be overly cautious, missing some spam but ensuring few legitimate emails are marked as spam. Conversely, a filter with high recall but low precision might flag many legitimate emails as spam while catching most spam.
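The formulas above can be checked with a few lines of Python. This is a minimal sketch; the function names and the ten example labels (1 = spam, 0 = not spam) are invented for illustration.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (TP, TN, FP, FN) for a binary classification problem."""
    tp = tn = fp = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive and actual == positive:
            tp += 1
        elif predicted == positive:
            fp += 1
        elif actual == positive:
            fn += 1
        else:
            tn += 1
    return tp, tn, fp, fn


def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall, and F1, returning 0.0 where a ratio is undefined."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical spam-filter results: 4 spam emails, 6 legitimate ones.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

tp, tn, fp, fn = confusion_counts(y_true, y_pred)
precision, recall, f1 = precision_recall_f1(tp, fp, fn)
```

Here one spam email is missed (FN = 1) and one legitimate email is flagged (FP = 1), so precision, recall, and F1 all come out to 0.75.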

Q 9. What are some common evaluation metrics for classification and regression problems?

Evaluation metrics vary depending on whether you’re dealing with classification or regression problems:

Classification:

- Accuracy: The overall correctness of predictions, (TP + TN) / (TP + TN + FP + FN). Simple but can be misleading with imbalanced datasets.

- Precision, Recall, F1-score: As explained above, these provide a more nuanced view than accuracy, especially with class imbalance.

- AUC (Area Under the ROC Curve): Measures the model’s ability to distinguish between classes across different thresholds. A higher AUC indicates better performance.

- Log Loss: Measures the uncertainty of the model’s predictions. Lower log loss is better.

Regression:

- Mean Squared Error (MSE): The average squared difference between predicted and actual values. Sensitive to outliers.

- Root Mean Squared Error (RMSE): The square root of MSE, easier to interpret as it’s in the same units as the target variable.

- Mean Absolute Error (MAE): The average absolute difference between predicted and actual values, less sensitive to outliers than MSE.

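- R-squared (R²): Represents the proportion of variance in the target variable explained by the model. A value of 1 is a perfect fit and 0 matches a model that always predicts the mean; on held-out data it can even be negative when the model does worse than that baseline.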

The choice of metric depends on the specific problem and its priorities. For instance, in medical diagnosis, high recall (minimizing false negatives) is often prioritized over precision.
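For the regression metrics, a minimal standard-library sketch might look like the following. The function names and the small example vectors are assumptions chosen so the arithmetic is easy to follow by hand.

```python
import math


def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error, in the same units as the target."""
    return math.sqrt(mse(y_true, y_pred))


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r_squared(y_true, y_pred):
    """R^2 = 1 - SS_res / SS_tot, relative to always predicting the mean."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


y_true = [3.0, 5.0, 7.0, 9.0]
y_pred = [2.5, 5.0, 8.0, 9.5]      # reasonable predictions
y_bad = [9.0, 7.0, 5.0, 3.0]       # worse than predicting the mean

good_metrics = (mse(y_true, y_pred), rmse(y_true, y_pred),
                mae(y_true, y_pred), r_squared(y_true, y_pred))
bad_r2 = r_squared(y_true, y_bad)  # negative: worse than the mean baseline
```

The single error of 1.0 contributes two thirds of the MSE here but only half of the MAE, which illustrates why MSE is the more outlier-sensitive of the two.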

Q 10. Describe different types of neural networks (e.g., CNN, RNN, LSTM).

Neural networks come in various architectures, each designed for specific tasks:

Convolutional Neural Networks (CNNs): Excel at processing grid-like data like images and videos. They use convolutional layers to detect features at different scales, making them ideal for image classification, object detection, and image segmentation. Think of them as learning to recognize patterns like edges, corners, and textures in images.

Recurrent Neural Networks (RNNs): Designed for sequential data like text and time series. They have loops that allow information to persist across time steps, enabling them to capture temporal dependencies. Imagine understanding the meaning of a sentence; RNNs remember previous words to understand the context.

Long Short-Term Memory networks (LSTMs): A type of RNN that addresses the vanishing gradient problem, which hinders RNNs from learning long-range dependencies. LSTMs have internal mechanisms to regulate the flow of information, allowing them to learn patterns over much longer sequences. This makes them excellent for tasks like machine translation and speech recognition where understanding long sequences is crucial.

Other architectures include autoencoders (for dimensionality reduction and anomaly detection), generative adversarial networks (GANs) for generating new data samples, and transformers (for natural language processing).

Q 11. Explain the backpropagation algorithm.

Backpropagation is the algorithm that trains neural networks. It works by calculating the gradient of the loss function with respect to the network’s weights, then using this gradient to update the weights and minimize the loss. Think of it as finding the steepest downhill path on a loss landscape to reach the minimum loss.

Here’s a simplified breakdown:

- Forward Pass: Input data is fed forward through the network, generating predictions.

- Loss Calculation: The difference between the predictions and the actual values is calculated using a loss function (e.g., MSE, cross-entropy).

- Backward Pass: The gradient of the loss function is computed with respect to each weight in the network using the chain rule of calculus. This determines how much each weight contributed to the error.

- Weight Update: The weights are adjusted using an optimization algorithm like gradient descent (discussed in the next question), moving them in the direction that reduces the loss.

- Repeat: The four steps above are repeated for multiple iterations (epochs) until the loss converges to an acceptable level or a stopping criterion is met.

The process is iterative, refining the network’s weights with each iteration to improve its accuracy.
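The forward pass, loss calculation, backward pass, and weight update can be traced by hand on a deliberately tiny network. The sketch below assumes a hypothetical two-weight model, `y_hat = w2 * tanh(w1 * x)`, with a squared-error loss on a single training example; real networks apply the same chain rule layer by layer over many weights.

```python
import math


def forward(x, w1, w2):
    """Forward pass: hidden activation h and prediction y_hat."""
    h = math.tanh(w1 * x)
    y_hat = w2 * h
    return h, y_hat


def loss(x, y, w1, w2):
    """Squared error for a single example."""
    _, y_hat = forward(x, w1, w2)
    return (y_hat - y) ** 2


def backward(x, y, w1, w2):
    """Backward pass: apply the chain rule from the loss back to each weight."""
    h, y_hat = forward(x, w1, w2)
    dloss_dyhat = 2 * (y_hat - y)             # d(loss)/d(y_hat)
    grad_w2 = dloss_dyhat * h                 # y_hat = w2 * h
    dloss_dh = dloss_dyhat * w2               # propagate through the output weight
    grad_w1 = dloss_dh * (1 - h ** 2) * x     # tanh'(z) = 1 - tanh(z)^2
    return grad_w1, grad_w2


def train(x, y, w1, w2, lr=0.1, epochs=200):
    """Repeat forward/backward/update for a fixed number of epochs."""
    for _ in range(epochs):
        grad_w1, grad_w2 = backward(x, y, w1, w2)
        w1 -= lr * grad_w1
        w2 -= lr * grad_w2
    return w1, w2


# Illustrative values only.
x, y = 1.0, 0.5
w1, w2 = 0.3, 0.2
initial_loss = loss(x, y, w1, w2)
trained_w1, trained_w2 = train(x, y, w1, w2)
final_loss = loss(x, y, trained_w1, trained_w2)
```

A common sanity check is to compare the analytic gradients from `backward` against finite-difference estimates of the loss; the two should agree closely.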

Q 12. What is gradient descent and its variants (e.g., stochastic gradient descent)?

Gradient descent is an optimization algorithm used to find the minimum of a function (in this case, the loss function). It works by iteratively updating the model’s parameters (weights) in the direction of the negative gradient (steepest descent).

Imagine you’re standing on a mountain and want to reach the valley. Gradient descent is like taking small steps downhill, following the direction of the steepest slope.

Batch Gradient Descent: Computes the gradient using the entire dataset in each iteration. Slow but provides a stable update.

Stochastic Gradient Descent (SGD): Computes the gradient using only one data point (or a small batch) in each iteration. Faster than batch gradient descent but introduces more noise in the updates.

Mini-batch Gradient Descent: A compromise between batch and stochastic gradient descent, using a small subset (mini-batch) of the data to compute the gradient. It’s a common choice due to its efficiency and relatively stable updates.

Variants like Adam, RMSprop, and Adagrad are sophisticated versions of SGD that adapt the learning rate for each parameter, often leading to faster convergence and better performance.
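The batch, stochastic, and mini-batch variants differ only in how many examples feed each gradient estimate, which the following sketch tries to make explicit. It assumes a one-feature linear model fitted to noise-free toy data (`y = 3x + 1`); the learning rate, epoch count, and function names are illustrative choices, not recommendations.

```python
import random


def gradient(w, b, batch):
    """Gradient of the mean squared error of y_hat = w * x + b over a batch."""
    n = len(batch)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in batch) / n
    return grad_w, grad_b


def gradient_descent(data, batch_size, lr=0.3, epochs=500, seed=0):
    """batch_size=len(data) is batch GD, 1 is SGD, anything between is mini-batch."""
    rng = random.Random(seed)
    data = list(data)
    w = b = 0.0
    for _ in range(epochs):
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            grad_w, grad_b = gradient(w, b, batch)
            w -= lr * grad_w   # step against the gradient
            b -= lr * grad_b
    return w, b


# Toy data generated from y = 3x + 1 with no noise.
data = [(i / 10, 3 * (i / 10) + 1) for i in range(10)]

batch_w, batch_b = gradient_descent(data, batch_size=len(data))
sgd_w, sgd_b = gradient_descent(data, batch_size=1)
mini_w, mini_b = gradient_descent(data, batch_size=4)
```

On this noise-free problem all three variants recover roughly the same weights; with noisy data, the single-example updates would fluctuate around the minimum instead of settling exactly.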

Q 13. How do you choose the appropriate algorithm for a given machine learning problem?

Choosing the right algorithm is crucial for successful machine learning. There’s no one-size-fits-all answer, but a structured approach helps:

- Understand the Problem: Is it classification, regression, clustering, or something else? What’s the size of the dataset? What are the features like (numerical, categorical, text)?

- Explore Data: Analyze the data to identify patterns, outliers, and potential issues like missing values or class imbalance. This informs the choice of preprocessing techniques and algorithm selection.

- Consider Algorithm Properties: Different algorithms have strengths and weaknesses. For instance, linear regression is simple but assumes linearity, while decision trees can handle non-linearity but can be prone to overfitting. Consider factors like interpretability, computational complexity, and scalability.

- Experiment and Evaluate: Try different algorithms and evaluate their performance using appropriate metrics. Cross-validation is essential to ensure reliable evaluation.

- Iterate: Machine learning is an iterative process. Based on the evaluation results, refine the data preprocessing, feature engineering, and algorithm selection until satisfactory performance is achieved.

Consider these examples: For image classification, CNNs are usually a good starting point; for sentiment analysis, RNNs or transformers are often preferred; for simple linear relationships, linear regression might suffice.

Q 14. Explain the difference between L1 and L2 regularization.

L1 and L2 regularization are techniques used to prevent overfitting in machine learning models. They add a penalty term to the loss function, discouraging overly complex models.

L1 Regularization (LASSO): Adds a penalty term proportional to the absolute value of the model’s weights (||w||₁). It encourages sparsity, meaning it pushes many weights to exactly zero. This can be useful for feature selection, as it effectively removes less important features from the model.

L2 Regularization (Ridge): Adds a penalty term proportional to the square of the model’s weights (||w||₂²). It shrinks the weights towards zero but doesn’t force them to be exactly zero. This tends to improve the generalization ability of the model by reducing the influence of individual features.

The choice between L1 and L2 depends on the specific problem and the desired outcome. If feature selection is a goal, L1 is preferred; if a smoother, more stable model is desired, L2 is often the better choice. The hyperparameter (lambda) controlling the strength of the regularization needs to be tuned through techniques like cross-validation to find the optimal balance between fitting the training data and generalizing well to unseen data.

Q 15. What is the difference between accuracy and AUC (Area Under the Curve)?

Accuracy and AUC (Area Under the Curve) are both metrics used to evaluate the performance of a classification model, but they capture different aspects.

Accuracy simply measures the percentage of correctly classified instances. It’s easy to understand and calculate: (True Positives + True Negatives) / Total Instances. However, accuracy can be misleading when dealing with imbalanced datasets (where one class has significantly more instances than others). A model might achieve high accuracy by simply predicting the majority class most of the time, even if it performs poorly on the minority class.

AUC, on the other hand, considers the model’s ability to distinguish between classes across different thresholds. It’s calculated from the ROC curve (Receiver Operating Characteristic curve), which plots the True Positive Rate (TPR) against the False Positive Rate (FPR) at various classification thresholds. A higher AUC indicates better classification performance, as it reflects the model’s ability to rank instances correctly, regardless of the specific threshold chosen. An AUC of 1 represents perfect classification, while an AUC of 0.5 indicates random classification.

Example: Imagine a medical diagnosis model predicting whether a patient has a rare disease. Accuracy might be high if the model mostly predicts ‘no disease’ (the majority class), but AUC would reveal its ability to correctly rank patients with the disease higher than those without, even if the overall accuracy is deceptively high.
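The rare-disease scenario can be reproduced numerically. The sketch below computes AUC as the fraction of (positive, negative) pairs that the model ranks correctly, which is equivalent to the area under the ROC curve; the 20-patient dataset and the score values are invented for illustration.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def roc_auc(y_true, scores):
    """Probability that a random positive is scored above a random negative (ties count half)."""
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical cohort: 2 patients with the disease, 18 without.
y_true = [1, 1] + [0] * 18

# A "model" that always predicts 'no disease'.
always_negative = [0] * 20
lazy_accuracy = accuracy(y_true, always_negative)   # high despite being useless
lazy_auc = roc_auc(y_true, [0.0] * 20)              # no ranking ability at all

# A model whose scores actually separate most patients.
informative_scores = [0.9, 0.4] + [0.1] * 17 + [0.6]
informative_auc = roc_auc(y_true, informative_scores)
```

The always-negative model scores 90% accuracy but an AUC of 0.5, while the second model's AUC of about 0.97 reflects that it ranks nearly every diseased patient above the healthy ones.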

Q 16. What are hyperparameters and how do you tune them?

Hyperparameters are settings that control the learning process of a machine learning model. Unlike model parameters (weights and biases learned during training), hyperparameters are set before training begins. They influence the model’s architecture, training behavior, and ultimately its performance.

Examples include the learning rate (how much the model adjusts its parameters in each iteration), the number of hidden layers in a neural network, regularization strength (to prevent overfitting), and the number of trees in a random forest.

Hyperparameter tuning is the process of finding the optimal set of hyperparameters that yield the best model performance. This is often an iterative process, involving experimentation and evaluation. Common techniques include:

- Grid Search: Systematically trying all combinations of hyperparameters within a defined range.

- Random Search: Randomly sampling hyperparameter combinations from a specified distribution.

- Bayesian Optimization: A more sophisticated approach that uses a probabilistic model to guide the search, focusing on promising regions of the hyperparameter space.

- Evolutionary Algorithms: Inspired by natural selection, these algorithms evolve a population of hyperparameter configurations over generations.

The choice of tuning method depends on factors such as the computational resources available and the complexity of the hyperparameter space. Cross-validation is often used to evaluate the performance of different hyperparameter configurations, preventing overfitting to the training data.
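Grid search and random search can be contrasted in a short sketch. Here `validation_score` is a hypothetical stand-in for a cross-validated score (a made-up function that peaks at `learning_rate=0.1`, `n_trees=200`); in a real project it would train and evaluate a model. The search ranges are also assumptions for the example.

```python
import itertools
import random


def validation_score(learning_rate, n_trees):
    """Hypothetical objective standing in for a cross-validated model score."""
    return -((learning_rate - 0.1) ** 2) * 100 - ((n_trees - 200) / 100) ** 2


def grid_search(grid):
    """Evaluate every combination in the grid and return (best_score, best_params)."""
    names = list(grid)
    best = None
    for combo in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, combo))
        score = validation_score(**params)
        if best is None or score > best[0]:
            best = (score, params)
    return best


def random_search(n_trials, seed=0):
    """Sample hyperparameters at random; the learning rate is drawn on a log scale."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        params = {
            "learning_rate": 10 ** rng.uniform(-3, 0),   # between 0.001 and 1
            "n_trees": rng.randint(50, 500),
        }
        score = validation_score(**params)
        if best is None or score > best[0]:
            best = (score, params)
    return best


grid = {"learning_rate": [0.001, 0.01, 0.1, 1.0], "n_trees": [100, 200, 400]}
grid_score, grid_params = grid_search(grid)
random_score, random_params = random_search(n_trials=12)
```

Both searches evaluate 12 configurations here. Grid search only finds the optimum because it happens to lie on the grid, whereas random search explores values the grid would never try.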

Q 17. Explain different dimensionality reduction techniques (e.g., PCA, t-SNE).

Dimensionality reduction techniques are used to reduce the number of variables (features) in a dataset while preserving important information. This is crucial for improving model performance, reducing computational cost, and visualizing high-dimensional data.

Principal Component Analysis (PCA): A linear transformation that projects the data onto a lower-dimensional subspace spanned by principal components – the directions of maximum variance in the data. PCA is useful for noise reduction and feature extraction, but it assumes linear relationships between variables.

t-distributed Stochastic Neighbor Embedding (t-SNE): A non-linear technique that focuses on preserving the local neighborhood structure of the data points. It’s particularly effective for visualizing high-dimensional data in 2D or 3D, but it doesn’t preserve global distances well and can be computationally expensive for large datasets.

Example: Imagine analyzing customer data with hundreds of features (age, income, purchase history, etc.). PCA can reduce the dimensionality to a smaller set of uncorrelated principal components that capture most of the variance, simplifying the data for subsequent modeling. t-SNE can then visualize these reduced data points, revealing clusters of customers with similar characteristics.

Q 18. Describe your experience with different deep learning frameworks (e.g., TensorFlow, PyTorch).

I have extensive experience with both TensorFlow and PyTorch, two leading deep learning frameworks. My experience spans various tasks, including building and training complex neural networks, deploying models to production environments, and leveraging their respective ecosystems of tools and libraries.

TensorFlow: I’ve used TensorFlow for large-scale projects, particularly appreciating its robust production deployment capabilities through TensorFlow Serving and its extensive community support. The static computation graph of TensorFlow 1.x was less flexible than PyTorch’s dynamic approach, although TensorFlow 2.x runs eagerly by default. I’ve worked with Keras, TensorFlow’s high-level API, to streamline model building and experimentation.

PyTorch: I’ve found PyTorch to be very intuitive and well-suited for research and prototyping due to its dynamic computation graph and Pythonic style. Debugging is generally easier than in TensorFlow, since models can be stepped through with standard Python tools. I’ve used PyTorch extensively for tasks involving natural language processing and computer vision.

My experience includes leveraging both frameworks’ built-in functionalities for tasks such as automatic differentiation, GPU acceleration, and distributed training. I’m comfortable choosing the framework best suited for a specific project based on its requirements and my team’s familiarity.

Q 19. How do you handle imbalanced datasets?

Imbalanced datasets, where one class significantly outnumbers others, pose a challenge to machine learning models because they tend to bias towards the majority class. To address this, several techniques can be used:

- Resampling: This involves adjusting the class distribution either by oversampling the minority class (duplicating existing samples or creating synthetic ones, e.g., with SMOTE) or undersampling the majority class (removing instances).

- Cost-sensitive learning: Assigning different misclassification costs to different classes. Higher costs for misclassifying the minority class penalize the model for making those errors.

- Ensemble methods: Combining multiple models trained on different subsets of the data or with different sampling strategies can improve performance on the minority class.

- Anomaly detection techniques: If the minority class represents anomalies or outliers, anomaly detection algorithms might be more appropriate than standard classification.

The best approach often depends on the specific dataset and problem. Careful evaluation using appropriate metrics (like precision, recall, F1-score, and AUC) is essential to assess the effectiveness of the chosen technique.
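Two of the techniques above, random oversampling and class weights for cost-sensitive learning, can be sketched in a few lines. The helper names and the toy dataset are assumptions; the weighting rule shown (total samples divided by number of classes times class count) is one common inverse-frequency heuristic, not the only option.

```python
import random
from collections import Counter


def random_oversample(rows, labels, seed=0):
    """Duplicate randomly chosen minority-class rows until all classes are equal in size."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_rows, out_labels = list(rows), list(labels)
    for label, count in counts.items():
        pool = [row for row, lab in zip(rows, labels) if lab == label]
        extra = rng.choices(pool, k=target - count)   # sampling with replacement
        out_rows.extend(extra)
        out_labels.extend([label] * (target - count))
    return out_rows, out_labels


def class_weights(labels):
    """Inverse-frequency weights: rarer classes get proportionally larger weights."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {label: n_samples / (n_classes * count) for label, count in counts.items()}


# Hypothetical dataset: 8 majority-class rows, 2 minority-class rows.
rows = [[float(i)] for i in range(10)]
labels = [0] * 8 + [1] * 2

balanced_rows, balanced_labels = random_oversample(rows, labels)
weights = class_weights(labels)
```

Resampling should be applied to the training split only; oversampling before the train-test split leaks duplicated rows into the evaluation data.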

Q 20. Explain the concept of ensemble methods (e.g., bagging, boosting).

Ensemble methods combine multiple individual models to improve predictive performance and robustness. They leverage the ‘wisdom of the crowd’ principle, where the collective decision of many models is often better than any single model’s prediction.

Bagging (Bootstrap Aggregating): Creates multiple subsets of the training data through bootstrapping (random sampling with replacement). A separate model is trained on each subset, and the final prediction is an aggregate (e.g., average or majority vote) of the individual model predictions. Bagging reduces variance and improves model stability.

Boosting: Sequentially trains models, where each subsequent model focuses on correcting the errors made by the previous models. In AdaBoost, misclassified instances receive higher weights, forcing subsequent models to pay more attention to them; gradient boosting instead fits each new model to the residual errors of the current ensemble. Common boosting algorithms include AdaBoost, Gradient Boosting, and XGBoost. Boosting primarily reduces bias and often improves accuracy.

Example: A random forest is a bagging ensemble of decision trees. Gradient boosting machines (GBMs) are popular boosting algorithms used in various applications, including credit scoring and fraud detection.
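Bagging in particular is simple enough to sketch from scratch. The example below assumes a deliberately weak base learner, a one-dimensional decision stump that predicts class 1 when `x` exceeds a learned threshold; the function names and the toy data are invented for illustration.

```python
import random
from collections import Counter


def fit_stump(xs, ys):
    """Pick the threshold (from the observed xs) with the fewest training errors."""
    best_threshold, best_errors = None, None
    for threshold in sorted(set(xs)):
        errors = sum(int(x > threshold) != y for x, y in zip(xs, ys))
        if best_errors is None or errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


def bagging_fit(xs, ys, n_models=25, seed=0):
    """Train one stump per bootstrap sample (sampling with replacement)."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models


def bagging_predict(models, x):
    """Aggregate the individual stump predictions by majority vote."""
    votes = Counter(int(x > threshold) for threshold in models)
    return votes.most_common(1)[0][0]


# Toy data: class 1 when x is above roughly 0.5.
xs = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0]
ys = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]

models = bagging_fit(xs, ys)
low_prediction = bagging_predict(models, 0.05)
high_prediction = bagging_predict(models, 1.5)
```

Each stump sees a slightly different bootstrap sample and so learns a slightly different threshold; the vote averages out that variation. An odd number of models avoids ties in the binary vote.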

Q 21. What is A/B testing and how is it used in the context of machine learning?

A/B testing is a controlled experiment used to compare two versions of something (A and B) to determine which performs better. In machine learning, it’s often used to compare different models, algorithms, or hyperparameter configurations.

How it’s used: Users (or a subset of incoming traffic) are randomly split into two groups (A and B). Each group is served by a different version of the machine learning system (e.g., Model A vs. Model B), and the same outcome metric is recorded for both. Statistical tests (like t-tests or chi-squared tests) are then used to determine if there’s a statistically significant difference in performance between the two versions.

Example: Imagine you have two different versions of a recommendation system. You could use A/B testing to compare their click-through rates or conversion rates. The version that performs significantly better would be deployed to the entire user base.

A/B testing is crucial for validating the effectiveness of machine learning models in a real-world setting and ensuring that improvements actually translate to measurable gains in key performance indicators.
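For a metric like click-through rate, one standard way to test significance is a two-proportion z-test, sketched below with the standard library. The click counts are hypothetical, and the 0.05 significance threshold is a conventional assumption rather than a rule.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for a difference between two conversion rates."""
    rate_a = successes_a / n_a
    rate_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_error
    # Two-sided p-value under the standard normal: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical experiment: Model A gets 100 clicks from 1000 users, Model B gets 130.
z, p_value = two_proportion_z_test(100, 1000, 130, 1000)
significant = p_value < 0.05

# Identical groups should show no evidence of a difference.
z_same, p_same = two_proportion_z_test(100, 1000, 100, 1000)
```

With these made-up counts the test gives z of about 2.1 and a p-value below 0.05, so Model B's higher click-through rate would be judged statistically significant at that threshold.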

Q 22. Describe your experience with cloud computing platforms for machine learning (e.g., AWS SageMaker, Google Cloud AI Platform, Azure ML Studio).

I have worked extensively with AWS SageMaker, Google Cloud AI Platform, and Azure ML Studio, leveraging their strengths for different project needs. SageMaker’s ease of integration with other AWS services, particularly for deploying models at scale, is a key advantage. I’ve used it to build and deploy real-time prediction services for a fraud detection system, taking advantage of its built-in model monitoring and automatic scaling features. Google Cloud AI Platform shines in its powerful pre-trained models and AutoML capabilities. I utilized AutoML for a customer segmentation project, significantly reducing the time and expertise required for model development. Finally, Azure ML Studio’s visual workflow designer proved invaluable for prototyping and iterating quickly on models during a sentiment analysis project. The choice of platform always depends on the project’s specific requirements – whether it’s scalability, cost-effectiveness, pre-trained models, or ease of use – and I am proficient in adapting to each.

For instance, in one project involving a large-scale image classification task, AWS SageMaker’s ability to distribute training across multiple instances proved crucial for handling the massive dataset efficiently. In contrast, for a smaller project requiring rapid prototyping, Google Cloud AI Platform’s AutoML features significantly streamlined the development process. This adaptability to different platforms is a key skill in my ML workflow.

Q 23. Explain the concept of transfer learning.

Transfer learning is a powerful technique in machine learning where a pre-trained model, usually trained on a large dataset for a general task (like image classification on ImageNet), is fine-tuned for a new, related task using a smaller dataset. Instead of training a model from scratch, which can require vast amounts of data and computational resources, transfer learning leverages the knowledge already encoded in the pre-trained model. Think of it like learning a new language; you already know the grammar and vocabulary of your native language, so learning a new one becomes easier.

This is particularly useful when you have limited data for your specific task. The pre-trained model provides a strong starting point, allowing for faster training and potentially better performance, even with a relatively small dataset. For example, if you want to build a model to classify cat breeds, you can start with a model pre-trained on a massive dataset of general images. You would then fine-tune this model using a smaller dataset of cat images, focusing on the features that distinguish different breeds. Compared to training from scratch, this typically shortens training time and often improves accuracy, especially when labeled data is scarce.

The process typically involves freezing the weights of the initial layers of the pre-trained model, which capture general features, and only training the later layers, adapting them to the specific task. This prevents the model from ‘forgetting’ the knowledge gained from the initial training.
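The freeze-then-train idea above can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not a real fine-tuning pipeline: `FROZEN_WEIGHTS` is a made-up stand-in for the early layers of a pre-trained model, and the "head" is a small logistic regression trained on a hypothetical four-example dataset. The point is only that the frozen weights are read during training but never updated.

```python
import math

# Hypothetical "pre-trained" feature extractor: one fixed linear layer + ReLU.
# In a real project these weights would come from a model trained on a large dataset.
FROZEN_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]

def extract_features(x):
    """Frozen layers: the weights are used but never modified."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_WEIGHTS]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_head(data, epochs=200, lr=0.5):
    """Train only the new task-specific head (logistic regression) with SGD."""
    head_w = [0.0, 0.0]
    head_b = 0.0
    for _ in range(epochs):
        for x, y in data:
            feats = extract_features(x)
            pred = sigmoid(sum(w * f for w, f in zip(head_w, feats)) + head_b)
            err = pred - y  # gradient of the log loss with respect to the logit
            head_w = [w - lr * err * f for w, f in zip(head_w, feats)]
            head_b -= lr * err
    return head_w, head_b

def predict(x, head_w, head_b):
    feats = extract_features(x)
    return sigmoid(sum(w * f for w, f in zip(head_w, feats)) + head_b)

# Tiny made-up dataset: label is 1 when the first input exceeds the second.
data = [([2.0, 0.0], 1), ([3.0, 1.0], 1), ([0.0, 2.0], 0), ([1.0, 3.0], 0)]
weights_before = [row[:] for row in FROZEN_WEIGHTS]
head_w, head_b = train_head(data)

print(predict([2.0, 0.0], head_w, head_b))  # probability for a positive example
print(predict([0.0, 2.0], head_w, head_b))  # probability for a negative example
```

In a deep learning framework the same effect is usually achieved by marking the early layers as non-trainable. With more task data, it is common to unfreeze some of those layers later and continue training with a lower learning rate.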

Q 24. What are some ethical considerations in developing and deploying AI systems?

Ethical considerations are paramount in AI development and deployment. We must actively mitigate bias and ensure fairness, transparency, and accountability. Bias in datasets can lead to discriminatory outcomes. For example, a facial recognition system trained primarily on images of one race might perform poorly on others. Addressing this requires careful data curation that ensures representation across diverse groups, along with techniques that mitigate bias during model training.

- Bias Mitigation: Careful data selection and preprocessing techniques are crucial, but algorithms themselves can also perpetuate bias. We need to constantly evaluate and monitor models for unfair outcomes.

- Fairness and Transparency: Models should be explainable, meaning their decision-making processes should be understandable. This allows for identification of biases and ensures accountability. Techniques like LIME (Local Interpretable Model-agnostic Explanations) can help in this regard.

- Privacy and Security: AI systems often deal with sensitive data, so robust security measures are critical to prevent breaches and misuse. Data anonymization and differential privacy are crucial for protecting individual privacy.

- Accountability: Clear lines of responsibility need to be established for AI systems’ actions and their consequences. Who is responsible when an AI system makes a mistake? This is a complex question requiring clear guidelines and regulatory frameworks.

Ignoring these ethical aspects can lead to severe societal consequences, from unfair loan decisions to biased criminal justice outcomes. Therefore, a responsible approach demands careful consideration of these factors throughout the AI lifecycle.
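To make "evaluate and monitor models for unfair outcomes" concrete, one simple check is to compare how often a model predicts the positive class for each group, alongside per-group accuracy. The sketch below is a minimal example on made-up data; the function names and the group labels "A" and "B" are illustrative assumptions, and the gap in selection rates (often called the demographic parity difference) is only one of several fairness measures.

```python
from collections import defaultdict

def group_rates(y_true, y_pred, groups):
    """Per-group selection rate (share predicted positive) and accuracy."""
    stats = defaultdict(lambda: {"n": 0, "positive": 0, "correct": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        s = stats[g]
        s["n"] += 1
        s["positive"] += p
        s["correct"] += int(t == p)
    return {
        g: {
            "selection_rate": s["positive"] / s["n"],
            "accuracy": s["correct"] / s["n"],
        }
        for g, s in stats.items()
    }

def demographic_parity_difference(rates):
    """Largest gap in selection rate between any two groups."""
    selection = [r["selection_rate"] for r in rates.values()]
    return max(selection) - min(selection)

# Made-up labels, predictions, and group membership for eight individuals.
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = group_rates(y_true, y_pred, groups)
gap = demographic_parity_difference(rates)
print(rates)
print(gap)  # 0.5: group A is selected 75% of the time, group B 25%
```

Note that both groups have the same accuracy here (0.75) even though their selection rates differ sharply, which is why a single aggregate metric can hide unequal treatment.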

Q 25. Describe your experience with data visualization and communication of results.

Data visualization is an integral part of my workflow. I use various tools and techniques to communicate results effectively, catering to both technical and non-technical audiences. My go-to tools include Matplotlib, Seaborn, and Plotly for Python, which allow for creating diverse visualizations, from simple line charts and scatter plots to more complex interactive dashboards. I also have experience with Tableau and Power BI for creating more sophisticated visualizations.

For example, when presenting model performance to stakeholders, I often use precision-recall curves and ROC curves to show how the trade-offs (precision versus recall, true-positive rate versus false-positive rate) shift as the decision threshold changes. For exploring relationships within the data, I might use heatmaps or correlation matrices. When presenting to non-technical audiences, I focus on high-level summaries and clear, concise visualizations that effectively communicate key insights without overwhelming them with technical details. The choice of visualization always depends on the audience and the message I want to convey.
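Before plotting a ROC curve, it helps to know how its points are computed. The following sketch, written in plain Python on made-up scores, sweeps the decision threshold from high to low and records the false-positive and true-positive rates at each step, then estimates the area under the curve with the trapezoidal rule. The function names are illustrative; plotting libraries such as Matplotlib would then draw the resulting points.

```python
def roc_points(y_true, scores):
    """Return (fpr, tpr) points obtained by sweeping the decision threshold.

    Assumes y_true contains at least one positive (1) and one negative (0).
    """
    positives = sum(y_true)
    negatives = len(y_true) - positives
    # Sort by descending score; each step lowers the threshold past one example.
    ranked = sorted(zip(scores, y_true), key=lambda pair: -pair[0])
    points = [(0.0, 0.0)]
    tp = fp = 0
    for i, (score, label) in enumerate(ranked):
        if label == 1:
            tp += 1
        else:
            fp += 1
        # Emit a point only after all examples tied at this score are counted.
        if i + 1 == len(ranked) or ranked[i + 1][0] != score:
            points.append((fp / negatives, tp / positives))
    return points

def auc(points):
    """Area under the curve via the trapezoidal rule."""
    return sum(
        (x1 - x0) * (y0 + y1) / 2
        for (x0, y0), (x1, y1) in zip(points, points[1:])
    )

# Made-up labels and model scores.
y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]

points = roc_points(y_true, scores)
area = auc(points)
print(points)
print(round(area, 3))  # 0.889, i.e. 8 of the 9 positive/negative pairs are ranked correctly
```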

Furthermore, I believe in iterative visualization. I often start with exploratory visualizations to understand the data and then refine them as the analysis progresses, creating a narrative that guides the audience through the key findings. The final visualizations are always accompanied by clear, concise explanations that enhance understanding and ensure that insights are easily grasped.

Q 26. How do you stay updated with the latest advancements in AI/ML?

Staying updated in the rapidly evolving field of AI/ML requires a multifaceted approach. I regularly follow leading research publications, including journals such as JMLR (Journal of Machine Learning Research) and conference proceedings such as NeurIPS (Neural Information Processing Systems). I also actively participate in online communities, such as those on Reddit and ResearchGate, where researchers and practitioners discuss cutting-edge work.

Attending conferences and workshops is essential for networking and learning from experts. Following key researchers and influencers on platforms like Twitter and LinkedIn helps to stay informed about new developments. I regularly read technical blogs and articles from reputable sources and take online courses on platforms like Coursera and edX to deepen my expertise in specific areas. Finally, experimenting with new techniques and frameworks on personal projects keeps me hands-on and allows me to explore the practical implications of new advancements.

This multi-pronged approach ensures I’m aware of not just the theoretical advancements but also their practical applications and potential limitations.

Q 27. Describe a challenging machine learning project you worked on and the solutions you implemented.

One particularly challenging project involved developing a recommendation system for a large e-commerce platform. The challenge stemmed from the sheer scale of the data – millions of users and products – and the need for real-time recommendations with low latency. Traditional collaborative filtering methods were too slow and couldn’t handle the data volume efficiently.

To address this, I employed a hybrid approach combining collaborative filtering, content-based filtering, and deep learning. For collaborative filtering, I used matrix factorization and scaled it with a distributed computing framework (Spark). For content-based filtering, I leveraged product descriptions and user reviews to create embeddings using word2vec and doc2vec. Finally, I incorporated a neural network to combine the outputs of both approaches, learning complex relationships between users, products, and their characteristics. This hybrid model provided significantly better accuracy and scalability than any single method alone.
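The matrix factorization step can be illustrated with a small single-machine sketch. This is not the Spark-based implementation described above; it is a plain Python version trained with stochastic gradient descent on a made-up ratings list, and the hyperparameters (`n_factors`, `lr`, `reg`) are illustrative assumptions. Each user and each item gets a short vector of latent factors, and a rating is predicted as their dot product.

```python
import random

def factorize(ratings, n_users, n_items, n_factors=2,
              epochs=500, lr=0.02, reg=0.02, seed=0):
    """Learn user factors P and item factors Q from (user, item, rating) triples."""
    rng = random.Random(seed)
    P = [[rng.uniform(0.1, 0.5) for _ in range(n_factors)] for _ in range(n_users)]
    Q = [[rng.uniform(0.1, 0.5) for _ in range(n_factors)] for _ in range(n_items)]
    for _ in range(epochs):
        for u, i, r in ratings:
            err = r - sum(pu * qi for pu, qi in zip(P[u], Q[i]))
            for f in range(n_factors):
                pu, qi = P[u][f], Q[i][f]
                # Gradient step on squared error with L2 regularization.
                P[u][f] += lr * (err * qi - reg * pu)
                Q[i][f] += lr * (err * pu - reg * qi)
    return P, Q

def predict(P, Q, u, i):
    return sum(pu * qi for pu, qi in zip(P[u], Q[i]))

def mse(P, Q, ratings):
    return sum((r - predict(P, Q, u, i)) ** 2 for u, i, r in ratings) / len(ratings)

# Made-up ratings: users 0-1 like items 0-1, users 2-3 like items 2-3.
ratings = [
    (0, 0, 5), (0, 1, 4), (0, 2, 1),
    (1, 0, 4), (1, 1, 5), (1, 3, 1),
    (2, 2, 5), (2, 3, 4), (2, 0, 1),
    (3, 2, 4), (3, 3, 5), (3, 1, 2),
]

P0, Q0 = factorize(ratings, n_users=4, n_items=4, epochs=0)  # untrained baseline
P, Q = factorize(ratings, n_users=4, n_items=4)
mse_before = mse(P0, Q0, ratings)
mse_after = mse(P, Q, ratings)
print(mse_before, mse_after)
print(predict(P, Q, 0, 3))  # predicted rating for a user/item pair not in the data
```

At production scale the same objective is usually optimized with a distributed solver, since the per-rating loop shown here does not parallelize well across millions of users and products.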

Furthermore, I implemented a caching mechanism to improve response times, storing frequently accessed recommendations in memory. Regular model retraining and A/B testing were crucial for monitoring performance and identifying areas for improvement. The final system delivered real-time personalized recommendations with acceptable latency, significantly increasing user engagement and sales conversion rates.
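A caching layer like the one mentioned above might look roughly like this. The original text does not specify an eviction policy, so this sketch assumes two common ones: least-recently-used eviction when the cache is full, and a time-to-live so stale recommendations are eventually recomputed. The class name and parameters are hypothetical.

```python
import time
from collections import OrderedDict

class RecommendationCache:
    """In-memory cache with LRU eviction and per-entry expiry (TTL)."""

    def __init__(self, max_size=1000, ttl_seconds=300.0, clock=time.monotonic):
        self._store = OrderedDict()  # user_id -> (expires_at, items)
        self._max_size = max_size
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping

    def get(self, user_id):
        entry = self._store.get(user_id)
        if entry is None:
            return None
        expires_at, items = entry
        if self._clock() >= expires_at:
            del self._store[user_id]  # entry is stale; force a recompute
            return None
        self._store.move_to_end(user_id)  # mark as most recently used
        return items

    def put(self, user_id, items):
        self._store[user_id] = (self._clock() + self._ttl, items)
        self._store.move_to_end(user_id)
        if len(self._store) > self._max_size:
            self._store.popitem(last=False)  # evict the least recently used entry

# Usage with a fake clock so the behavior is deterministic.
now = [0.0]
cache = RecommendationCache(max_size=2, ttl_seconds=10.0, clock=lambda: now[0])
cache.put("u1", [101, 102])
cache.put("u2", [201])
hit_u1 = cache.get("u1")       # hit; u1 becomes most recently used
cache.put("u3", [301])         # cache is full, so u2 is evicted
miss_u2 = cache.get("u2")      # miss
hit_u3 = cache.get("u3")       # hit
now[0] = 11.0                  # advance past the TTL
expired_u1 = cache.get("u1")   # miss, entry has expired
```

On a cache miss the serving layer would fall back to the model, then call `put` with the fresh result. A shared store is typically used instead of process memory when several servers handle requests.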

Key Topics to Learn for Artificial Intelligence (AI)/Machine Learning (ML) Interview

- Supervised Learning: Understand algorithms like linear regression, logistic regression, support vector machines (SVMs), decision trees, and random forests. Consider practical applications such as image classification and spam detection.

- Unsupervised Learning: Master clustering techniques (k-means, hierarchical clustering), dimensionality reduction (PCA, t-SNE), and anomaly detection. Explore applications in customer segmentation and fraud detection.

- Deep Learning: Familiarize yourself with neural networks, convolutional neural networks (CNNs) for image processing, recurrent neural networks (RNNs) for sequential data, and their applications in natural language processing (NLP) and computer vision.

- Model Evaluation & Selection: Grasp key metrics like precision, recall, F1-score, and AUC-ROC, and understand techniques for model selection, cross-validation, and hyperparameter tuning.

- Bias-Variance Tradeoff: Comprehend the concepts of overfitting and underfitting and strategies to mitigate them, such as regularization techniques.

- Data Preprocessing & Feature Engineering: Master techniques for handling missing data, outliers, and feature scaling. Learn about feature selection and extraction methods to improve model performance.

- Probability & Statistics: Strengthen your foundational knowledge of probability distributions, hypothesis testing, and statistical significance. This is crucial for understanding model outputs and interpreting results.

- Big Data Technologies (Optional but beneficial): Basic familiarity with tools like Spark or Hadoop can be advantageous for roles involving large datasets.
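Interviewers often ask candidates to compute evaluation metrics by hand, so it is worth being able to write them from their definitions. Here is a minimal sketch for binary labels on made-up data; the function name is illustrative, and returning 0.0 when a denominator is zero is one convention among several.

```python
def precision_recall_f1(y_true, y_pred):
    """Compute precision, recall, and F1 for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    # Guard against division by zero when there are no predicted or actual positives.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Made-up example: 3 true positives, 1 false positive, 1 false negative.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 1, 0, 0, 1, 0]

precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(precision, recall, f1)  # 0.75 0.75 0.75
```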

Next Steps

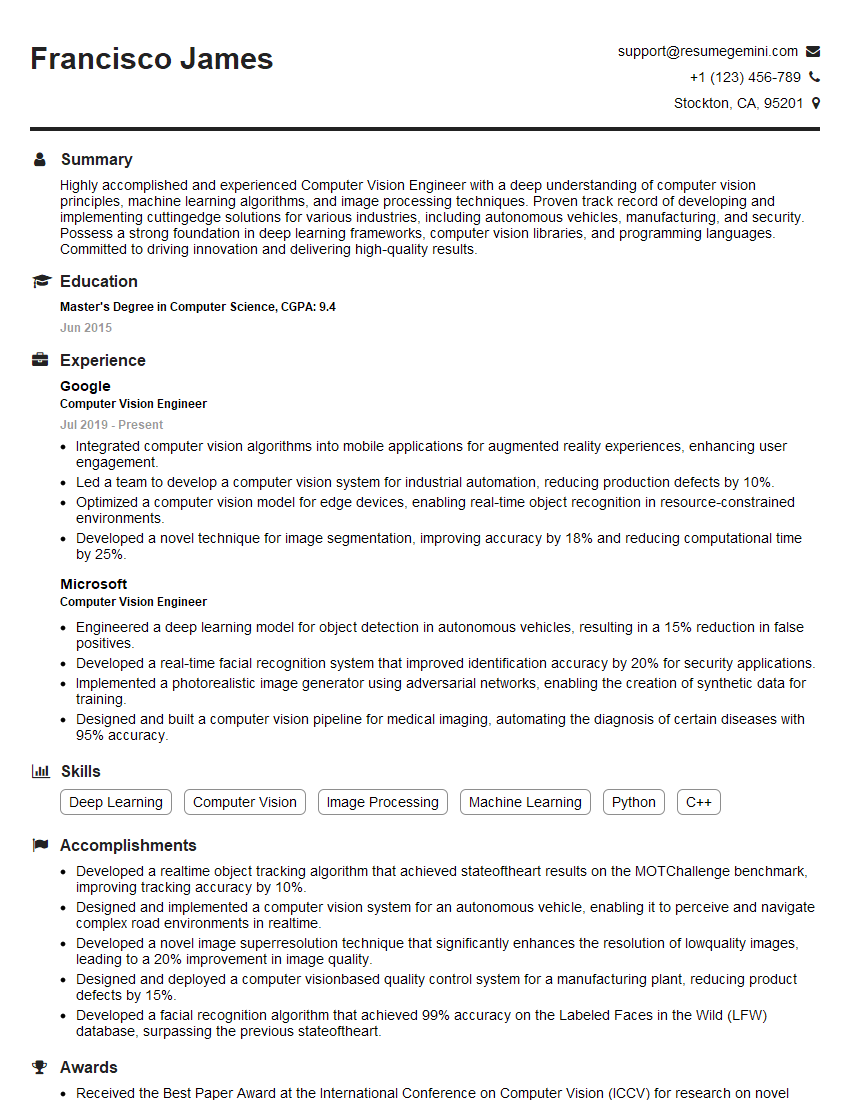

Mastering AI/ML opens doors to incredibly rewarding and impactful careers. To maximize your job prospects, creating a strong, ATS-friendly resume is crucial. ResumeGemini is a trusted resource to help you build a professional and impactful resume that showcases your skills effectively. We provide examples of resumes tailored to the Artificial Intelligence and Machine Learning fields to guide you. Invest time in crafting a compelling narrative that highlights your achievements and technical expertise. Your future in AI/ML awaits!
