The thought of an interview can be nerve-wracking, but the right preparation can make all the difference. Explore this comprehensive guide to Information Technology (IT) Proficiency interview questions and gain the confidence you need to showcase your abilities and secure the role.

Questions Asked in Information Technology (IT) Proficiency Interview

Q 1. Explain the difference between TCP and UDP.

TCP (Transmission Control Protocol) and UDP (User Datagram Protocol) are both communication protocols used for transmitting data over the internet, but they differ significantly in how they handle data delivery. Think of it like sending a package: TCP is like using a courier service that guarantees delivery and provides tracking, while UDP is like sending a postcard – it’s faster but there’s no guarantee of arrival.

- TCP (Transmission Control Protocol): TCP is a connection-oriented protocol. This means it establishes a connection between the sender and receiver before transmitting data and ensures reliable delivery through acknowledgments and retransmissions. It’s slower but more reliable, making it suitable for applications requiring guaranteed data delivery, such as web browsing (HTTP), email (SMTP), and file transfer (FTP).

- UDP (User Datagram Protocol): UDP is a connectionless protocol. It doesn’t establish a connection before sending data, making it faster but less reliable. There’s no guarantee that data will arrive or arrive in the correct order. It’s ideal for applications where speed is prioritized over reliability, such as online gaming, video streaming, and DNS lookups. If some data loss is acceptable for the sake of speed, UDP is a good choice.

In essence, the choice between TCP and UDP depends on the application’s needs. If reliability is paramount, TCP is preferred. If speed is more critical, and some data loss is tolerable, UDP is a better option.
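
To make the contrast concrete, here is a minimal sketch using Python's standard `socket` module on the loopback interface. The function names `udp_roundtrip` and `tcp_roundtrip` are illustrative, not part of any library. The UDP sender simply fires a datagram at an address, while the TCP client must first establish a connection before any bytes flow.

```python
import socket
import threading


def udp_roundtrip(message: bytes) -> bytes:
    """Send one datagram to a local UDP socket; no connection is set up."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver, \
         socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
        receiver.bind(("127.0.0.1", 0))   # port 0: let the OS pick a free port
        receiver.settimeout(2.0)          # UDP itself gives no delivery guarantee
        sender.sendto(message, receiver.getsockname())
        data, _addr = receiver.recvfrom(4096)
        return data


def tcp_roundtrip(message: bytes) -> bytes:
    """Send bytes over a local TCP connection and return what the server read."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
        listener.bind(("127.0.0.1", 0))
        listener.listen(1)
        received = []

        def serve() -> None:
            conn, _addr = listener.accept()
            with conn:
                chunks = []
                while True:
                    chunk = conn.recv(4096)
                    if not chunk:         # empty read: the peer closed the connection
                        break
                    chunks.append(chunk)
                received.append(b"".join(chunks))

        worker = threading.Thread(target=serve)
        worker.start()
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as client:
            client.connect(listener.getsockname())  # connection setup happens here
            client.sendall(message)                 # delivered reliably and in order
        worker.join(timeout=2.0)
        return received[0]
```

On loopback both calls return the bytes that were sent. Across a real network, only the TCP path would retransmit lost data; the UDP receiver would simply time out.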

Q 2. Describe your experience with cloud computing platforms (AWS, Azure, GCP).

I have extensive experience working with all three major cloud computing platforms: AWS (Amazon Web Services), Azure (Microsoft Azure), and GCP (Google Cloud Platform). My experience spans various aspects, from infrastructure management to application deployment and data analytics.

- AWS: I’ve used AWS extensively for building and managing scalable web applications. I’m proficient in using services like EC2 (virtual servers), S3 (object storage), RDS (relational databases), and Lambda (serverless computing). For example, I once used AWS to build a highly available, fault-tolerant e-commerce platform that scaled seamlessly during peak shopping seasons.

- Azure: My Azure experience focuses on developing and deploying cloud-native applications. I’m familiar with Azure’s services like Virtual Machines, Azure Storage, Azure SQL Database, and Azure Functions. I’ve used Azure DevOps for CI/CD pipeline implementation in several projects.

- GCP: In GCP, I’ve worked with Compute Engine, Cloud Storage, Cloud SQL, and Google Kubernetes Engine (GKE). My projects on GCP often involve big data processing and machine learning workloads. I used GCP’s BigQuery for analyzing large datasets and building predictive models.

My experience with these platforms allows me to choose the best solution depending on the specific requirements of a project, considering factors like cost, scalability, and security.

Q 3. What are the different types of database systems?

Database systems are broadly categorized into several types based on their data model and how they organize and manage data. The most common types are:

- Relational Databases (RDBMS): These databases store data in tables with rows and columns, establishing relationships between tables. Examples include MySQL, PostgreSQL, Oracle, and Microsoft SQL Server. They are well-suited for structured data and offer robust features like ACID properties (Atomicity, Consistency, Isolation, Durability) for ensuring data integrity.

- NoSQL Databases: These databases don’t adhere to the traditional relational model. They offer flexible schemas and are designed for handling large volumes of unstructured or semi-structured data. Examples include MongoDB (document database), Cassandra (wide-column store), and Redis (in-memory data structure store). NoSQL databases are frequently used for applications like social media, content management systems, and real-time analytics.

- Object-Oriented Databases (OODBMS): These databases store data as objects, similar to object-oriented programming. They’re suitable for applications dealing with complex data structures and relationships.

- Graph Databases: These databases are optimized for representing and querying relationships between data points. They’re commonly used for social networks, knowledge graphs, and recommendation systems. Neo4j is a popular example.

The choice of database system depends heavily on the nature of the application and the type of data being managed. For example, an e-commerce application might use a relational database for structured product information and a NoSQL database for handling user reviews and session data.
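
The e-commerce example can be sketched as follows. This is only an illustration under stated assumptions: SQLite (via Python's built-in `sqlite3`) stands in for a relational database, a plain dictionary stands in for a document such as one stored in MongoDB, and the table and field names are made up.

```python
import sqlite3

# Relational model: fixed columns, with a foreign key linking reviews to products.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT NOT NULL, price REAL NOT NULL)"
)
conn.execute(
    "CREATE TABLE reviews ("
    "id INTEGER PRIMARY KEY, "
    "product_id INTEGER NOT NULL REFERENCES products(id), "
    "rating INTEGER NOT NULL)"
)
conn.execute("INSERT INTO products VALUES (1, 'Keyboard', 49.0)")
conn.executemany(
    "INSERT INTO reviews (product_id, rating) VALUES (?, ?)", [(1, 5), (1, 3)]
)

# A join reassembles related rows at query time.
row = conn.execute(
    "SELECT p.name, AVG(r.rating) "
    "FROM products p JOIN reviews r ON r.product_id = p.id "
    "GROUP BY p.id"
).fetchone()

# Document model: one self-contained record; fields may differ between entries.
product_doc = {
    "_id": 1,
    "name": "Keyboard",
    "price": 49.0,
    "reviews": [{"rating": 5}, {"rating": 3, "comment": "Keys feel stiff"}],
}
doc_average = sum(r["rating"] for r in product_doc["reviews"]) / len(product_doc["reviews"])
```

Both representations yield the same average rating. The relational version enforces structure up front, while the document version lets individual reviews carry optional fields without a schema change.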

Q 4. How do you troubleshoot network connectivity issues?

Troubleshooting network connectivity issues requires a systematic approach. I typically follow these steps:

- Identify the problem: Determine which devices or users are affected, what the symptoms are (e.g., slow speeds, intermittent connectivity, complete outage), and when the problem started.

- Check the basics: Make sure cables are properly connected, devices are powered on, and Wi-Fi is enabled (if applicable). Try restarting devices to see if that resolves the issue.

- Test connectivity: Use simple tools like ping (to check basic network reachability) and traceroute (to identify network path issues). For example, ping google.com will check connectivity to Google’s servers. traceroute google.com will show the path packets take to reach Google’s servers, identifying potential bottlenecks or outages along the way.

- Check network configuration: Verify IP addresses, subnet masks, default gateways, and DNS settings are correctly configured on all affected devices. Look for any misconfigurations that might be preventing connectivity.

- Examine network devices: If the problem persists, check the status of routers, switches, and firewalls. Look for error logs or indicators of problems.

- Use network monitoring tools: Tools like Wireshark or tcpdump can be invaluable for capturing and analyzing network traffic, helping to pinpoint the source of the problem.

- Consult documentation and support: If the problem remains unsolved, refer to relevant documentation or contact the IT support team for assistance.

Troubleshooting network connectivity is a process of elimination, systematically testing different components and configurations until the root cause is identified and resolved.
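
Part of this process of elimination can be scripted. The sketch below uses only Python's standard `socket` module; the helper names `resolve`, `can_connect`, and `diagnose` are hypothetical. It checks name resolution first, then TCP reachability, so that a failure points at a specific layer.

```python
import socket


def resolve(hostname: str) -> list[str]:
    """Return the IP addresses a hostname resolves to (empty list if lookup fails)."""
    try:
        infos = socket.getaddrinfo(hostname, None)
    except socket.gaierror:
        return []
    return sorted({info[4][0] for info in infos})


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def diagnose(host: str, port: int) -> str:
    """Narrow a failure down to name resolution or to the network path/service."""
    if not resolve(host):
        return "name resolution failed: check DNS settings"
    if not can_connect(host, port):
        return "host resolves but port is unreachable: check routing, firewalls, or the service"
    return "reachable"
```

For instance, `diagnose("example.com", 443)` would distinguish a DNS problem from a blocked or closed port. It complements ping and traceroute rather than replacing them, since it tests TCP rather than ICMP.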

Q 5. Explain the concept of virtualization.

Virtualization is the process of creating a virtual version of something, such as a server, operating system, storage device, or network. It allows multiple virtual instances to run on a single physical resource. Imagine having many apartments in a single building – each apartment is independent, but they all share the same building’s infrastructure.

- Hypervisors: The software that enables virtualization is called a hypervisor (also known as a virtual machine monitor). Hypervisors create and manage virtual machines (VMs).

- Types of Hypervisors: There are two main types: Type 1 (bare-metal) hypervisors run directly on the host hardware, while Type 2 (hosted) hypervisors run on top of an existing operating system.

- Benefits of Virtualization: Virtualization offers several benefits, including increased efficiency (consolidating multiple servers onto fewer physical machines), improved resource utilization, enhanced flexibility (easily creating and destroying VMs), and better disaster recovery (creating backups and replicating VMs).

Virtualization is crucial in modern IT infrastructure. It enables cloud computing, improves server efficiency, and simplifies managing IT resources. Examples include running multiple web servers on a single physical machine or running different operating systems concurrently on a single computer.

Q 6. Describe your experience with scripting languages (Python, PowerShell, Bash).

I have significant experience with several scripting languages, including Python, PowerShell, and Bash. Each language serves different purposes, and my choice depends on the task at hand.

- Python: I’ve used Python extensively for automating tasks, developing web applications, and data analysis. Python’s versatility and extensive libraries (like NumPy and Pandas) make it ideal for complex tasks. For instance, I wrote a Python script to automate the daily backup of a critical database, significantly reducing manual workload and improving data security.

- PowerShell: PowerShell is my go-to language for automating tasks within the Windows environment. Its strong integration with the Windows operating system and Active Directory makes it highly effective for system administration and management. I’ve used PowerShell to automate user account management, software deployments, and system configurations.

- Bash: Bash is my preferred scripting language for Linux and macOS systems. I’ve used it extensively for system administration, automation, and data manipulation within the Linux environment. For example, I developed a Bash script to monitor system logs and generate alerts if specific error messages occur.

My proficiency in these scripting languages significantly increases my efficiency and allows me to automate repetitive tasks, reducing errors and improving overall productivity.
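
As an illustration of the log-monitoring idea, here is a minimal sketch written in Python rather than Bash. The pattern, the threshold, and the function name `scan_log` are assumptions chosen for the example, not details of the script described above.

```python
import re
from collections import Counter

# Hypothetical pattern: treat these two severity keywords as alert-worthy.
ERROR_PATTERN = re.compile(r"\b(ERROR|CRITICAL)\b")


def scan_log(lines, threshold=3):
    """Count matching severities and report those that reach the threshold."""
    counts = Counter()
    for line in lines:
        match = ERROR_PATTERN.search(line)
        if match:
            counts[match.group(1)] += 1
    alerts = [
        f"{level}: {count} occurrences"
        for level, count in sorted(counts.items())
        if count >= threshold
    ]
    return counts, alerts
```

In practice the `lines` argument could be an open file object, and the returned alerts could be forwarded to email or a chat webhook.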

Q 7. What are some common cybersecurity threats and how do you mitigate them?

Cybersecurity threats are constantly evolving, but some common threats include:

- Malware: This includes viruses, worms, ransomware, and trojans that can infect systems and steal data, disrupt operations, or encrypt files for ransom.

- Phishing: Social engineering attacks that attempt to trick users into revealing sensitive information (like passwords or credit card details) through deceptive emails or websites.

- Denial-of-Service (DoS) attacks: These attacks flood a system with traffic, making it unavailable to legitimate users.

- SQL Injection: A technique used to attack databases by injecting malicious SQL code into input fields.

- Man-in-the-middle (MitM) attacks: These attacks intercept communication between two parties, allowing the attacker to eavesdrop or manipulate the data.

Mitigating these threats requires a multi-layered approach:

- Strong passwords and multi-factor authentication (MFA): These significantly improve account security.

- Regular software updates and patching: This closes security vulnerabilities.

- Firewall and intrusion detection systems: These help prevent unauthorized access and detect malicious activity.

- Security awareness training for users: Educating users about phishing and social engineering techniques is critical.

- Data encryption: Protecting sensitive data with encryption helps prevent unauthorized access even if a system is compromised.

- Regular backups: In case of a ransomware attack, having regular backups allows for quick data recovery.

- Security information and event management (SIEM) systems: These systems collect and analyze security logs from various sources, providing a comprehensive view of security events.

A robust cybersecurity strategy requires a combination of technical safeguards and security awareness training, constantly adapting to the ever-changing threat landscape.
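
SQL injection in particular has a well-known code-level mitigation: parameterized queries. The sketch below uses Python's built-in `sqlite3` with a made-up `users` table to show how the same malicious input behaves against a string-built query and a parameterized one.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)", [("alice", "a1"), ("bob", "b2")]
)


def find_user_unsafe(name):
    # Vulnerable: user input is pasted directly into the SQL text.
    query = f"SELECT name, secret FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(name):
    # Parameterized: the driver passes the input as data, never as SQL.
    return conn.execute(
        "SELECT name, secret FROM users WHERE name = ?", (name,)
    ).fetchall()


payload = "' OR '1'='1"
leaked = find_user_unsafe(payload)   # the WHERE clause becomes always-true
blocked = find_user_safe(payload)    # no user has this literal name
```

The unsafe version returns every row in the table, while the safe version returns nothing because the payload is treated as an ordinary string.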

Q 8. Explain the difference between a firewall and an IDS/IPS.

Firewalls and Intrusion Detection/Prevention Systems (IDS/IPS) are both crucial for network security, but they operate differently. Think of a firewall as a bouncer at a club, meticulously checking IDs and only allowing authorized individuals inside. It examines network traffic based on pre-defined rules and filters out unauthorized access attempts. An IDS/IPS, on the other hand, is more like a security camera and alert system. It monitors network traffic for suspicious activity, even if it’s not explicitly blocked by the firewall. An IDS simply detects and reports these activities, while an IPS goes a step further by actively blocking or mitigating threats.

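- Firewall: Acts as a barrier, blocking unwanted network traffic based on configured rules (e.g., blocking traffic from specific IP addresses or ports). It’s primarily preventive: it enforces an access policy on traffic attributes such as addresses, ports, and protocols rather than looking for attack patterns inside otherwise permitted traffic.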

- IDS: Monitors network traffic for malicious activity and generates alerts. It doesn’t actively block traffic; it informs administrators about potential security breaches.

- IPS: Similar to an IDS, but it actively intervenes to block or mitigate threats detected in network traffic. This could involve dropping malicious packets, resetting connections, or other proactive measures.

For example, a firewall might block all incoming connections on port 23 (Telnet), a known insecure protocol. An IDS might detect a known malware signature in network packets and alert the administrator, while an IPS would automatically block those packets from reaching their destination.
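
The division of labor can be modeled in a few lines. This is a toy sketch, not how real products are implemented: the `Packet` class, the blocked-port set, and the signature bytes are all hypothetical.

```python
from dataclasses import dataclass

BLOCKED_PORTS = {23}              # e.g. Telnet, as in the example above
SIGNATURES = [b"EVIL_PAYLOAD"]    # hypothetical malware signature


@dataclass
class Packet:
    src_ip: str
    dst_port: int
    payload: bytes


def firewall_allows(packet: Packet) -> bool:
    """Rule-based filtering: decide from header fields only."""
    return packet.dst_port not in BLOCKED_PORTS


def matches_signature(packet: Packet) -> bool:
    """Content inspection: look for known-bad byte patterns."""
    return any(sig in packet.payload for sig in SIGNATURES)


def ids_inspect(packet: Packet, alerts: list) -> bool:
    """IDS: record an alert but always let the packet through."""
    if matches_signature(packet):
        alerts.append(f"suspicious payload from {packet.src_ip}")
    return True


def ips_inspect(packet: Packet, alerts: list) -> bool:
    """IPS: record an alert and drop the packet."""
    if matches_signature(packet):
        alerts.append(f"blocked payload from {packet.src_ip}")
        return False
    return True
```

A packet to port 443 carrying the signature passes the firewall check, triggers an alert in the IDS, and is dropped only by the IPS.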

Q 9. How do you ensure data integrity and security?

Ensuring data integrity and security is paramount. We use a multi-layered approach encompassing technical safeguards and robust policies. Data integrity refers to the accuracy and consistency of data over its entire lifecycle, while data security focuses on protecting it from unauthorized access, use, disclosure, disruption, modification, or destruction.

- Data Encryption: Encrypting data both in transit (using HTTPS, TLS/SSL) and at rest (using encryption at the database level or file system level) protects it from unauthorized access even if a breach occurs. Imagine encrypting a confidential document with a strong password – only someone with the correct password can decipher it.

- Access Control: Implementing strong access control mechanisms, such as role-based access control (RBAC), ensures only authorized personnel can access sensitive information. This limits the potential damage from insider threats or compromised accounts.

- Data Validation and Sanitization: Validating data input and sanitizing it before storage prevents malicious code injection or data corruption. This is like checking a document for errors and removing any harmful elements before archiving it.

- Regular Backups and Disaster Recovery: Regular backups are vital for recovering from data loss due to hardware failures, natural disasters, or cyberattacks. A well-defined disaster recovery plan ensures business continuity and minimal downtime in such events.

- Security Audits and Penetration Testing: Regularly auditing security controls and conducting penetration testing identify vulnerabilities and weaknesses that need addressing. It’s like having a thorough inspection of your house’s security system to identify and fix any flaws.
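
One common way to verify integrity, not listed above but closely related, is to store a cryptographic digest alongside the data and recompute it later. The sketch below uses Python's standard `hashlib`; the function names are illustrative.

```python
import hashlib
import hmac


def sha256_digest(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def is_unmodified(data: bytes, expected_digest: str) -> bool:
    """Compare digests in constant time to check that the data has not changed."""
    return hmac.compare_digest(sha256_digest(data), expected_digest)


original = b"invoice total: 100.00"
stored_digest = sha256_digest(original)
```

A plain digest detects accidental corruption. To detect deliberate tampering, the digest must be stored where an attacker cannot rewrite it, or be replaced by a keyed construction such as an HMAC.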

Q 10. Describe your experience with Agile methodologies.

I have extensive experience working within Agile methodologies, primarily Scrum and Kanban. In my previous role at [Previous Company Name], we used Scrum to manage the development of a critical e-commerce platform. This involved working in short, iterative sprints (typically two weeks) with daily stand-up meetings to track progress, identify roadblocks, and ensure we stayed on track. We used tools like Jira for project management and task tracking. The Agile approach allowed for flexibility and adaptability, enabling us to respond quickly to changing requirements and client feedback. I played a key role in sprint planning, task estimation, daily stand-ups, sprint reviews, and retrospectives. Kanban has also been beneficial in managing maintenance tasks and bug fixes where a more flexible workflow was needed, allowing us to prioritize urgent tasks while still maintaining a steady stream of work.

Q 11. What is your experience with version control systems (Git)?

I’m proficient in using Git for version control, both locally and on platforms like GitHub and GitLab. I regularly use branching strategies like Gitflow to manage features and bug fixes independently. I understand the importance of writing clear and concise commit messages to aid collaboration and track changes effectively. I’m familiar with resolving merge conflicts, using rebasing when appropriate, and creating pull requests for code review. A recent project involved collaborating with a remote team using Git to manage the development of a microservices architecture. The ability to track changes, revert to previous versions, and collaborate seamlessly was crucial for the success of that project. I often employ GitHub Actions for automated testing and deployments, streamlining the development pipeline.

Q 12. Explain the concept of a distributed system.

A distributed system is a collection of independent components, often geographically dispersed, that work together to achieve a common goal. Imagine a large online retailer like Amazon – its website isn’t hosted on a single server but rather spread across numerous servers in different data centers around the world. This allows for high availability, scalability, and fault tolerance. Each component can fail independently without bringing down the entire system. Key characteristics of distributed systems include:

- Decentralization: No single point of control or failure.

- Scalability: The system can easily handle increased workload by adding more components.

- Fault tolerance: The system can continue operating even if some components fail.

- Concurrency: Multiple components can operate simultaneously.

Challenges in managing distributed systems often include ensuring data consistency across different components, managing network latency, and dealing with partial failures.
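
Fault tolerance through replication can be sketched as a read that falls back to the next replica when one is down. The `Replica` class and `read_with_failover` function are hypothetical and ignore the consistency problem mentioned above: a lagging replica could return stale data.

```python
class ReplicaDown(Exception):
    """Raised when a replica cannot serve requests."""


class Replica:
    def __init__(self, name, data, healthy=True):
        self.name = name
        self.data = data
        self.healthy = healthy

    def get(self, key):
        if not self.healthy:
            raise ReplicaDown(self.name)
        return self.data[key]


def read_with_failover(replicas, key):
    """Try each replica in order; return the value and the replica that served it."""
    failed = []
    for replica in replicas:
        try:
            return replica.get(key), replica.name
        except ReplicaDown as exc:
            failed.append(str(exc))
    raise RuntimeError(f"all replicas failed: {failed}")
```

As long as one replica is healthy the read succeeds, which is the sense in which a single component failure does not bring down the system.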

Q 13. How do you handle conflicting priorities in a project?

Handling conflicting priorities requires a structured approach. I typically start by clearly documenting all priorities, then assess their urgency and importance using a prioritization framework (like MoSCoW – Must have, Should have, Could have, Won’t have). This allows for a transparent understanding of the trade-offs involved. Next, I engage in open communication with stakeholders to discuss the constraints and collaboratively redefine priorities if necessary. This might involve negotiating deadlines, adjusting project scope, or reallocating resources. Finally, regular progress monitoring and adjustments based on feedback are crucial to ensure that the most important tasks are addressed effectively and efficiently.

For example, if a project has conflicting deadlines for two critical features, I would analyze their dependencies and business value. Perhaps one feature is more vital for initial release, allowing us to deliver core functionality on time and address the other feature in a subsequent iteration. Transparency and proactive communication with stakeholders are key to resolving these situations successfully.

Q 14. Describe your experience with database administration tasks (SQL, NoSQL).

I have extensive experience administering both SQL and NoSQL databases. With SQL databases (like PostgreSQL and MySQL), I’m proficient in writing complex queries, optimizing database performance (indexing, query tuning), managing database security (user roles, permissions), and performing backups and recovery. I have experience with database design principles, normalization, and data modeling. My experience with NoSQL databases (like MongoDB and Cassandra) involves schema design, data modeling, and managing distributed data across clusters. I understand the trade-offs between SQL and NoSQL databases and can choose the appropriate technology based on specific project requirements. For example, in a project requiring high scalability and flexibility, I’d opt for a NoSQL solution like MongoDB, while for a project with stringent data consistency requirements, I’d choose a relational SQL database like PostgreSQL.

I’ve utilized SQL for transactional data management in several applications, while NoSQL has been crucial for handling large volumes of unstructured or semi-structured data in projects involving big data analytics and real-time data processing.

Q 15. How do you perform capacity planning for IT infrastructure?

Capacity planning for IT infrastructure is a crucial process to ensure that your systems can handle current and future demands. It involves forecasting resource needs, like computing power, storage, and network bandwidth, and then procuring or scaling resources accordingly. Think of it like planning seating for a concert – you need to estimate attendance to ensure everyone has a seat and the venue can handle the crowd.

The process typically involves:

- Performance Monitoring: Regularly monitoring current system performance (CPU utilization, memory usage, disk I/O, network traffic) to identify bottlenecks and trends.

- Demand Forecasting: Projecting future growth based on historical data, business plans, and anticipated changes (e.g., new applications, increased user base). This often involves using statistical modeling or trend analysis.

- Resource Sizing: Determining the required capacity for each resource based on projected demand, considering factors like performance thresholds and acceptable service levels.

- Capacity Acquisition/Scaling: Procuring additional hardware or software, or scaling cloud resources to meet projected needs. This could include adding servers, increasing storage capacity, or upgrading network infrastructure.

- Testing and Validation: Conducting load tests and simulations to validate the capacity plan and ensure the infrastructure can handle anticipated loads.

- Documentation and Reporting: Maintaining detailed documentation of the capacity plan, including assumptions, methodologies, and results.

For example, if our web application experiences a surge in traffic during holiday seasons, capacity planning would involve predicting the peak load, procuring additional server capacity in advance, and implementing auto-scaling mechanisms to dynamically adjust resources as needed. This prevents service disruptions during peak demand.
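
The demand-forecasting step can be illustrated with a simple trend analysis. The sketch below fits a least-squares line to monthly usage figures and estimates how many months remain before usage crosses a chosen share of capacity. The sample numbers, the 80% threshold, and the function names are assumptions, and real workloads are rarely this linear.

```python
import math


def linear_fit(values):
    """Least-squares slope and intercept for values observed at x = 0, 1, 2, ..."""
    n = len(values)
    xs = range(n)
    mean_x = sum(xs) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def months_until_threshold(values, capacity, threshold_pct=80):
    """Months until the fitted trend reaches threshold_pct of capacity (None if flat or falling)."""
    slope, intercept = linear_fit(values)
    if slope <= 0:
        return None
    limit = capacity * threshold_pct / 100
    current = slope * (len(values) - 1) + intercept
    if current >= limit:
        return 0
    return math.ceil((limit - current) / slope)


# Hypothetical storage usage in GB over four months, against 200 GB of capacity.
usage_gb = [100, 110, 120, 130]
months_left = months_until_threshold(usage_gb, capacity=200)
```

With this sample data the trend grows by 10 GB per month, so the 160 GB threshold is three months away. A result like that would feed into the resource-sizing and acquisition steps.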

Q 16. What are your experiences with IT project management methodologies?

I have extensive experience with various IT project management methodologies, including Agile (Scrum, Kanban), Waterfall, and hybrid approaches. My choice of methodology depends heavily on the project’s nature, complexity, and client requirements.

Waterfall is suitable for projects with clearly defined requirements and minimal expected changes. It’s a linear approach with distinct, sequential phases (requirements, design, implementation, testing, deployment, maintenance).

Agile methodologies, like Scrum, are ideal for projects with evolving requirements and a need for iterative development. They emphasize collaboration, flexibility, and rapid feedback loops. Kanban is another Agile method focused on visualizing workflow and limiting work in progress.

In practice, I often find a hybrid approach is most effective. For example, a large project might utilize a Waterfall framework for the initial phases of planning and requirements gathering, then transition to Agile for development and implementation to allow for greater flexibility and adaptation to changing needs. I’m proficient in using project management tools like Jira and Asana to track progress, manage tasks, and facilitate communication among team members.

Q 17. Describe a time you had to troubleshoot a complex technical issue.

During a recent database migration, we experienced unexpected performance degradation after the cutover. The application became extremely slow and unresponsive, impacting our users. Initial investigations pointed towards network issues, but after careful monitoring and analysis, we discovered a configuration problem within the database itself.

My troubleshooting steps included:

- Isolate the Problem: We used performance monitoring tools to pinpoint the specific database queries causing the slowdown.

- Gather Data: We collected logs, performance metrics, and error messages to understand the root cause.

- Analyze the Data: We identified a missing index on a heavily used table, which was leading to slow query execution.

- Implement a Solution: We created the missing index, tested thoroughly in a staging environment, and then deployed the fix to the production database.

- Monitor and Verify: Post-implementation monitoring confirmed that the application performance had returned to normal.

This experience highlighted the importance of meticulous database optimization and the critical role of monitoring tools in quickly identifying and resolving performance bottlenecks. It also reinforced the value of having a well-defined rollback plan in case of unforeseen issues.
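
The effect of a missing index can be reproduced on a small scale. The sketch below uses SQLite through Python's `sqlite3` as a stand-in, since the database from the story is not named. The `orders` table and index name are made up. SQLite's `EXPLAIN QUERY PLAN` shows whether a query scans the whole table or uses an index.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, float(i)) for i in range(1000)],
)

QUERY = "SELECT total FROM orders WHERE customer_id = ?"


def query_plan(sql, params):
    """Return SQLite's plan description for a query as one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[3] for row in rows)   # column 3 holds the detail text


plan_before = query_plan(QUERY, (42,))   # full table scan
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(QUERY, (42,))    # lookup through the new index
```

Before the index exists the plan reports a scan of `orders`; afterwards it reports a search using `idx_orders_customer`. Other database engines expose the same information through their own `EXPLAIN` variants.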

Q 18. Explain your understanding of network topologies.

Network topologies refer to the physical or logical layout of nodes (computers, servers, printers) and connections in a network. Understanding different topologies is critical for designing, implementing, and troubleshooting networks. Common topologies include:

- Bus Topology: All devices are connected to a single cable (the bus). It’s simple to implement, but the shared cable is a single point of failure.

- Star Topology: All devices connect to a central hub or switch. It’s easy to manage and scalable, and a failed link affects only one device, although the central device itself is a single point of failure.

- Ring Topology: Devices are connected in a closed loop. Data travels in one direction. Relatively efficient but a single failure can disrupt the entire network.

- Mesh Topology: Devices connect to multiple other devices, providing redundancy and fault tolerance. Complex to implement but highly reliable.

- Tree Topology: A hierarchical structure combining star and bus topologies. Commonly used in larger networks.

For example, most home networks use a star topology, with all devices connected to a router. Large corporate networks often use a combination of topologies to balance performance, scalability, and redundancy.
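
The trade-off between star and mesh topologies can be checked with a small graph model. This is a sketch under simplifying assumptions: links are bidirectional, the mesh is a full mesh, and the node name `"switch"` is made up.

```python
from itertools import combinations


def star(devices, hub="switch"):
    """Every device has exactly one link, to the central hub."""
    return {(hub, device) for device in devices}


def full_mesh(devices):
    """Every pair of devices is directly linked: n * (n - 1) / 2 links."""
    return set(combinations(devices, 2))


def still_connected(links, failed_node):
    """Check whether the surviving nodes can all reach each other after one node fails."""
    nodes = {node for link in links for node in link} - {failed_node}
    if not nodes:
        return True
    adjacency = {node: set() for node in nodes}
    for a, b in links:
        if failed_node not in (a, b):
            adjacency[a].add(b)
            adjacency[b].add(a)
    start = next(iter(nodes))
    seen, stack = {start}, [start]
    while stack:                      # depth-first traversal from one survivor
        node = stack.pop()
        for neighbour in adjacency[node] - seen:
            seen.add(neighbour)
            stack.append(neighbour)
    return seen == nodes


devices = ["pc1", "pc2", "pc3", "pc4"]
```

For four devices the star needs 4 links and the full mesh needs 6. The star survives the loss of any single device but not of the switch, while the full mesh survives the loss of any one node at the cost of more cabling.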

Q 19. What are your preferred methods for documenting IT processes?

My preferred methods for documenting IT processes emphasize clarity, accessibility, and maintainability. I utilize a combination of techniques:

- Flowcharts: Visually represent the steps in a process, making them easy to understand and follow.

- Standard Operating Procedures (SOPs): Detailed, step-by-step instructions for performing specific tasks. These ensure consistency and reduce errors.

- Wiki Documentation: Collaborative platforms like Confluence allow for easy updating and sharing of information among team members.

- Diagram Tools: Tools like Visio or Lucidchart are useful for creating network diagrams, system architecture diagrams, and other visual representations.

- Version Control: Using a version control system like Git for documentation allows tracking changes, collaboration, and rollback capabilities.

The key is to choose the method that best suits the audience and the complexity of the process. Simple processes may only need a flowchart, while complex ones might require detailed SOPs and supporting diagrams.

Q 20. How do you stay up-to-date with the latest IT technologies?

Staying current in the rapidly evolving IT landscape requires a multi-faceted approach:

- Online Courses and Certifications: Platforms like Coursera, edX, and Udemy offer courses on various technologies. Pursuing relevant certifications validates skills and demonstrates commitment to professional development.

- Industry Publications and Blogs: Regularly reading industry publications, blogs, and news sites keeps me abreast of emerging trends and technologies.

- Conferences and Workshops: Attending conferences and workshops provides opportunities to network with peers, learn from experts, and gain insights into cutting-edge technologies.

- Professional Organizations: Joining professional organizations (e.g., ACM, IEEE) provides access to resources, publications, and networking opportunities.

- Hands-on Practice: Experimenting with new technologies through personal projects or contributing to open-source projects solidifies understanding and builds practical experience.

I actively participate in online communities and forums to engage with other professionals, discuss challenges, and share knowledge.

Q 21. Describe your experience with disaster recovery planning.

Disaster recovery planning is essential to ensure business continuity in case of unexpected events like natural disasters, cyberattacks, or hardware failures. A robust plan outlines procedures for minimizing downtime and data loss.

My experience involves developing and implementing plans that cover:

- Risk Assessment: Identifying potential threats and vulnerabilities.

- Business Impact Analysis: Determining the impact of various disruptions on business operations.

- Recovery Time Objective (RTO): Defining the maximum acceptable downtime for critical systems.

- Recovery Point Objective (RPO): Specifying the maximum acceptable data loss, measured as a window of time, in case of failure.

- Data Backup and Recovery: Implementing strategies for regular data backups and testing the restoration process.

- High Availability (HA): Implementing technologies to minimize downtime (e.g., redundant servers, load balancing).

- Failover Mechanisms: Establishing procedures for switching to backup systems or locations.

- Testing and Drills: Regularly testing the disaster recovery plan through simulations and drills to validate its effectiveness.

For instance, in a previous role, we implemented a geographically redundant data center with automated failover capabilities. This ensured that if one data center was affected by a disaster, our applications and data were seamlessly transitioned to the other location with minimal disruption. Regular disaster recovery drills helped refine our procedures and ensure preparedness.
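
RTO and RPO become useful once they are compared against what the plan actually delivers. The sketch below is a simplified check with hypothetical names and numbers: it assumes the worst-case data loss equals the backup interval, and that restore time was measured in a drill.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass
class RecoveryObjectives:
    rto: timedelta   # maximum acceptable downtime
    rpo: timedelta   # maximum acceptable window of lost data


def evaluate_plan(objectives, backup_interval, measured_restore_time):
    """Return a list of findings; an empty list means both objectives are met."""
    findings = []
    # Worst case: the failure occurs just before the next backup would have run.
    if backup_interval > objectives.rpo:
        findings.append(
            f"RPO at risk: backups every {backup_interval} exceed the {objectives.rpo} target"
        )
    if measured_restore_time > objectives.rto:
        findings.append(
            f"RTO at risk: restore took {measured_restore_time}, target is {objectives.rto}"
        )
    return findings


objectives = RecoveryObjectives(rto=timedelta(hours=4), rpo=timedelta(hours=1))
```

For example, nightly backups would violate a one-hour RPO even if restores are fast, which points toward more frequent backups or continuous replication.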

Q 22. Explain your understanding of different software development lifecycle models.

Software Development Lifecycle (SDLC) models are frameworks that define the stages involved in creating software. Different models suit various projects based on their size, complexity, and requirements. Here are a few popular ones:

- Waterfall: A linear, sequential approach. Each phase (requirements, design, implementation, testing, deployment, maintenance) must be completed before the next begins. Think of it like a waterfall, each stage cascading into the next. It’s simple to understand but inflexible, making it unsuitable for projects with evolving requirements.

- Agile: An iterative and incremental approach emphasizing flexibility and collaboration. Popular Agile methodologies include Scrum and Kanban. Instead of a large upfront design, Agile breaks the project into smaller, manageable sprints (typically 2-4 weeks), allowing for continuous feedback and adaptation. This is excellent for projects where requirements might change or where rapid prototyping is desired. I’ve successfully used Scrum on several projects, resulting in faster delivery and increased client satisfaction.

- Spiral: Combines elements of Waterfall and iterative models. It emphasizes risk management and involves repeated cycles of planning, risk analysis, engineering, and evaluation. Each cycle produces a more refined version of the software. This is beneficial for high-risk projects where thorough risk assessment is crucial.

- DevOps: Strictly speaking a set of practices and a culture rather than a lifecycle model, DevOps focuses on collaboration and communication between development and operations teams. It aims to automate and integrate the entire software lifecycle, leading to faster deployments and improved reliability. I’ve leveraged DevOps principles to streamline our deployment processes, reducing deployment times from days to hours.

Choosing the right SDLC model is crucial for project success. The choice depends on various factors such as project complexity, team size, client involvement, and risk tolerance.

Q 23. What are your experiences with implementing and managing security protocols?

Implementing and managing security protocols is paramount in any IT environment. My experience includes implementing various security measures, from basic network security to more complex cloud security strategies.

- Network Security: I’ve configured firewalls (both hardware and software), implemented intrusion detection/prevention systems (IDS/IPS), and managed virtual private networks (VPNs) to secure network access and protect against unauthorized intrusions. For example, I implemented a multi-layered firewall system that significantly reduced our vulnerability to external attacks.

- Data Security: I’ve worked extensively with data encryption (both at rest and in transit), access control lists (ACLs), and data loss prevention (DLP) tools to safeguard sensitive data. A specific project involved implementing end-to-end encryption for our customer database, ensuring compliance with data privacy regulations.

- Application Security: I’ve integrated security best practices into the software development lifecycle (SDLC), including secure coding practices, penetration testing, and vulnerability scanning. This proactive approach significantly reduces the risk of application-level vulnerabilities.

- Cloud Security: I have experience managing security in cloud environments (AWS, Azure, GCP), including identity and access management (IAM), security groups, and cloud-based security information and event management (SIEM) systems. Recently, I migrated our infrastructure to AWS and implemented robust IAM controls to enhance security.

Regular security audits and vulnerability assessments are crucial for maintaining a strong security posture. I always stay updated with the latest security threats and best practices to ensure the ongoing protection of our systems and data.
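
To make the access control idea above concrete, here is a minimal sketch of a deny-by-default ACL check. The resource, roles, and the `is_allowed` helper are hypothetical names chosen for illustration; real systems would rely on the platform's own mechanism (for example IAM policies or database grants) rather than hand-rolled code.

```python
# Hypothetical ACL: resource -> role -> set of permitted actions.
EXAMPLE_ACL = {
    "customer_db": {
        "dba": {"read", "write"},
        "analyst": {"read"},
    },
}


def is_allowed(acl, resource, role, action):
    """Return True only if the ACL explicitly grants `action`; everything else is denied."""
    return action in acl.get(resource, {}).get(role, set())
```

The important design choice is the default: an unknown resource, role, or action falls through to "deny", which is the behavior least privilege calls for.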

Q 24. How do you ensure the scalability and performance of IT systems?

Ensuring scalability and performance of IT systems requires a holistic approach. It’s about designing systems that can handle increasing workloads and user demands without compromising performance. Here’s my approach:

- Capacity Planning: This involves accurately predicting future resource needs (CPU, memory, storage, bandwidth). I use historical data and forecasting models to estimate future demands and proactively scale resources.

- Load Balancing: Distributing traffic across multiple servers prevents overloading any single server. I’ve implemented load balancers to ensure high availability and consistent performance, even during peak usage.

- Database Optimization: Database performance is crucial. I optimize database queries, indexing, and schema design to ensure efficient data retrieval. I’ve used techniques like query optimization and database sharding to improve database performance significantly.

- Caching Strategies: Caching frequently accessed data reduces database load and improves application response times. I’ve implemented caching mechanisms at various levels (browser, server, database) to enhance overall system performance. For example, I implemented Redis caching to dramatically reduce database load for frequently accessed user profiles.

- Horizontal Scaling: Adding more servers to handle increased load. This is a key aspect of cloud-based architectures. I’ve successfully scaled systems horizontally in AWS using auto-scaling groups.
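
As a rough illustration of the load balancing point above, the sketch below shows round-robin distribution, one of the simplest balancing strategies. The class name `RoundRobinBalancer` and the server names are assumptions made for the example; production load balancers also track server health and often weight servers by capacity.

```python
import itertools


class RoundRobinBalancer:
    """Hands out servers in a fixed rotation so each receives an equal share of requests."""

    def __init__(self, servers):
        servers = list(servers)
        if not servers:
            raise ValueError("at least one server is required")
        self._cycle = itertools.cycle(servers)

    def next_server(self):
        """Return the server that should handle the next request."""
        return next(self._cycle)
```

With three servers, four consecutive calls to `next_server()` return the first, second, and third server, then wrap around to the first again.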

Continuous monitoring and performance testing are crucial to identify and address performance bottlenecks proactively. Regularly analyzing system logs and metrics helps in identifying areas for improvement.
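
The caching strategy mentioned in the list above is commonly implemented with the cache-aside pattern: check the cache first, fall back to the database on a miss, then store the result with an expiry. The sketch below uses an in-memory dictionary as a stand-in for Redis so that it runs on its own; `TTLCache`, `get_user_profile`, and `load_from_db` are hypothetical names, not part of any library.

```python
import time


class TTLCache:
    """In-memory stand-in for a cache such as Redis, with per-entry expiry."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock   # injectable so expiry can be tested without sleeping
        self._store = {}     # key -> (expires_at, value)

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._store[key]  # entry is stale, drop it
            return None
        return value

    def set(self, key, value):
        self._store[key] = (self.clock() + self.ttl, value)


def get_user_profile(user_id, cache, load_from_db):
    """Cache-aside read: serve from the cache, hit the database only on a miss."""
    key = f"user:{user_id}"
    profile = cache.get(key)
    if profile is None:
        profile = load_from_db(user_id)  # hypothetical database loader
        cache.set(key, profile)
    return profile
```

The expiry bounds how stale a cached profile can get; writes that must be visible immediately would also need to delete or update the cached entry.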

Q 25. Describe your experience with IT budgeting and cost management.

IT budgeting and cost management are critical for effective resource allocation. My experience includes developing and managing IT budgets, tracking expenses, and identifying cost-saving opportunities.

- Budget Planning: I collaborate with stakeholders to define IT goals and objectives, translating them into a detailed budget that aligns with the overall business strategy. This includes forecasting hardware, software, personnel, and operational costs.

- Cost Tracking and Analysis: I use various tools and techniques to monitor IT spending, analyze cost drivers, and identify areas for potential savings. I regularly review expenses against the budget to ensure we stay within allocated funds.

- Vendor Negotiation: I negotiate contracts with vendors to obtain the best possible pricing and service level agreements (SLAs). I actively seek out cost-effective solutions without compromising quality or security.

- Cloud Cost Optimization: In cloud environments, cost optimization is crucial. I use cloud cost management tools to monitor cloud spending, identify underutilized resources, and implement strategies to reduce cloud costs. For example, I right-sized our AWS instances to match their actual utilization, which reduced our cloud costs significantly.

- Return on Investment (ROI) Analysis: I assess the ROI of IT investments to justify spending and ensure that resources are allocated to projects that deliver maximum value.

Effective IT budgeting and cost management require a combination of planning, monitoring, and analysis. It’s about getting the most value from every dollar spent while ensuring the IT department effectively supports the business goals.
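
The ROI analysis mentioned above usually comes down to two simple calculations: return relative to cost, and how long an investment takes to pay for itself. The sketch below uses the standard formulas; the function names and any figures passed in are hypothetical.

```python
def roi(total_benefit, total_cost):
    """Return on investment as a fraction: (benefit - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


def payback_months(upfront_cost, monthly_saving):
    """Months until cumulative savings cover the upfront cost, or None if they never do."""
    if monthly_saving <= 0:
        return None
    return upfront_cost / monthly_saving
```

For example, a project costing 100,000 that returns 150,000 in benefits has an ROI of 0.5 (50%), and a 12,000 purchase that saves 1,000 per month pays back in 12 months.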

Q 26. How do you communicate technical information to non-technical audiences?

Communicating technical information to non-technical audiences requires simplifying complex concepts and using clear, concise language. I employ several strategies:

- Analogies and Metaphors: Relating technical concepts to everyday experiences helps non-technical audiences understand the information more easily. For instance, I explain network latency by comparing it to traffic congestion on a highway.

- Visual Aids: Charts, diagrams, and infographics make complex information easier to grasp. A well-designed diagram can often convey information more effectively than lengthy explanations.

- Plain Language: Avoiding technical jargon and using simple, straightforward language is crucial. If jargon is necessary, I make sure to define it clearly.

- Storytelling: Presenting technical information in the form of a story can make it more engaging and memorable. This approach helps the audience connect with the information on a personal level.

- Active Listening and Feedback: Engaging with the audience, actively listening to their questions, and addressing any concerns or misunderstandings is essential for effective communication.

Tailoring the message to the specific audience is vital. I adjust my communication style depending on the audience’s level of technical expertise and their interest in the topic. I always aim for clear, concise, and engaging communication, regardless of the audience.

Q 27. What is your experience with system monitoring and alerting tools?

System monitoring and alerting tools are essential for maintaining the health and performance of IT systems. My experience includes using various tools to monitor system performance, detect anomalies, and respond to incidents.

- Nagios/Zabbix: These are popular open-source monitoring tools that I’ve used extensively to monitor server health, network performance, and application availability. They provide real-time monitoring and alerting capabilities, allowing for proactive identification of issues.

- Prometheus/Grafana: This combination pairs Prometheus, which collects metrics and stores them as time series, with Grafana for visualization. I’ve used this stack to monitor application performance metrics and identify trends and anomalies. Grafana’s dashboards make complex data easy to understand.

- Datadog/Dynatrace: These are commercial monitoring tools providing advanced features such as automated anomaly detection, root cause analysis, and AI-powered insights. I’ve used these tools in larger-scale environments where comprehensive monitoring and detailed analysis are required.

- Cloud Monitoring Services: AWS CloudWatch, Azure Monitor, and Google Cloud Monitoring provide built-in monitoring capabilities for cloud-based infrastructure and applications. I’ve leveraged these services for monitoring our cloud deployments, ensuring high availability and performance.

Choosing the right monitoring tools depends on the specific needs and scale of the IT environment. Effective monitoring involves configuring appropriate alerts, setting thresholds, and establishing clear escalation procedures to ensure timely responses to incidents.

Q 28. Describe your approach to problem-solving in an IT context.

My approach to problem-solving in an IT context is systematic and methodical. I typically follow these steps:

- Problem Definition: Clearly defining the problem is the first crucial step. This involves gathering all relevant information, understanding the symptoms, and identifying the scope of the issue. I avoid making assumptions and focus on gathering concrete data.

- Troubleshooting: Using a combination of technical expertise, diagnostic tools, and documentation, I systematically troubleshoot the problem. I often use a process of elimination to narrow down the potential causes.

- Root Cause Analysis: Identifying the root cause is critical to prevent recurrence. I use techniques like the 5 Whys to drill down to the underlying problem. This ensures that we address the fundamental issue rather than just treating the symptoms.

- Solution Implementation: Once the root cause is identified, I develop and implement a solution. This often involves coordinating with other teams and stakeholders. I ensure the solution addresses the problem effectively and doesn’t create new issues.

- Testing and Validation: Thoroughly testing the solution is essential to ensure it works as expected and doesn’t have any unintended side effects. This involves various types of testing depending on the complexity of the solution.

- Documentation and Knowledge Sharing: Documenting the problem, the troubleshooting process, and the solution is crucial for future reference and knowledge sharing. This helps other team members learn from past experiences and resolve similar issues more efficiently.

Throughout the problem-solving process, clear communication is key. I keep stakeholders informed of progress and any potential roadblocks. I strive to find efficient and effective solutions that minimize disruption and downtime.

Key Topics to Learn for Information Technology (IT) Proficiency Interview

- Networking Fundamentals: Understanding network topologies, protocols (TCP/IP, HTTP, DNS), and troubleshooting common network issues. Practical application: Explain how you would diagnose a network connectivity problem.

- Operating Systems (OS): Knowledge of Windows, macOS, or Linux, including file systems, process management, and security best practices. Practical application: Describe your experience managing user accounts and permissions within an OS.

- Databases: Familiarity with relational databases (SQL) and NoSQL databases. Practical application: Explain how you would design a database schema for a specific application.

- Cybersecurity: Understanding common threats, vulnerabilities, and security protocols. Practical application: Describe your approach to securing a computer system or network.

- Cloud Computing: Knowledge of cloud platforms (AWS, Azure, GCP) and cloud services (IaaS, PaaS, SaaS). Practical application: Discuss your experience with cloud-based infrastructure or applications.

- Problem-Solving & Analytical Skills: Demonstrating your ability to approach technical challenges logically and methodically. Practical application: Describe a complex technical problem you solved and your approach to the solution.

- Software Development Fundamentals (if applicable): Basic understanding of programming concepts, data structures, and algorithms. Practical application: Describe your experience with a specific programming language or framework.

Next Steps

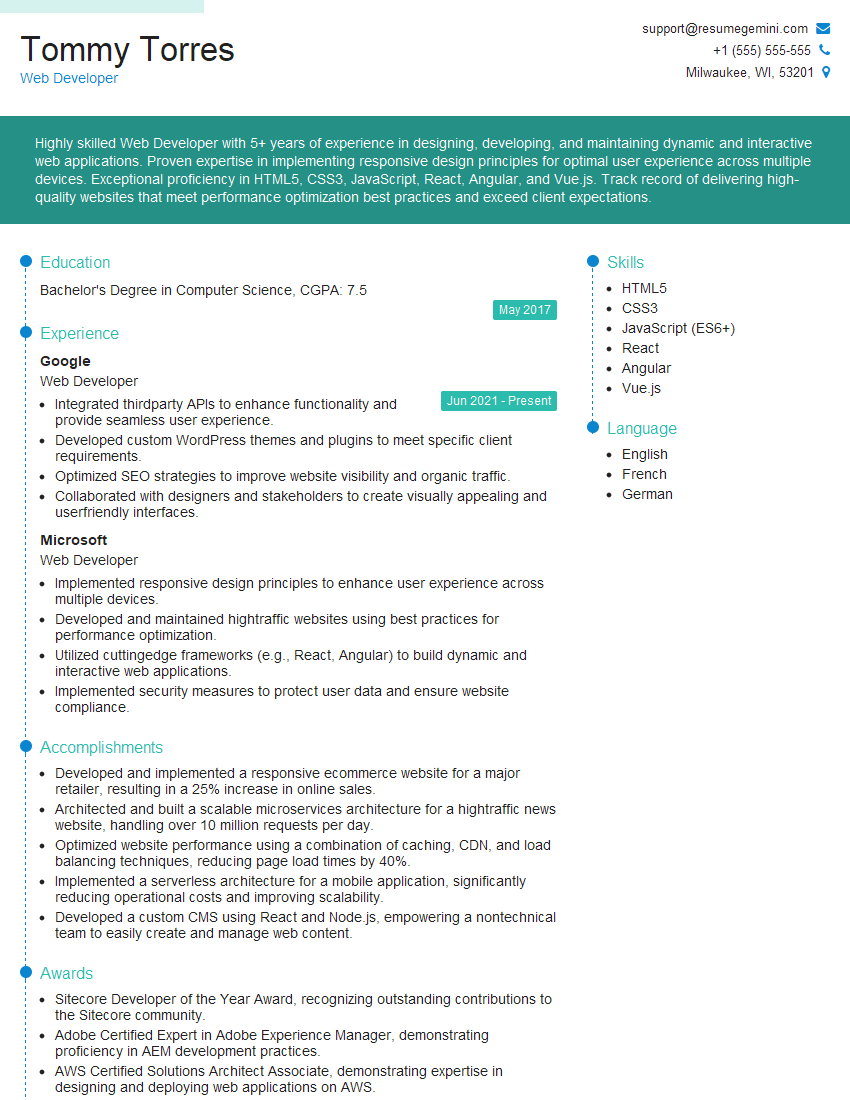

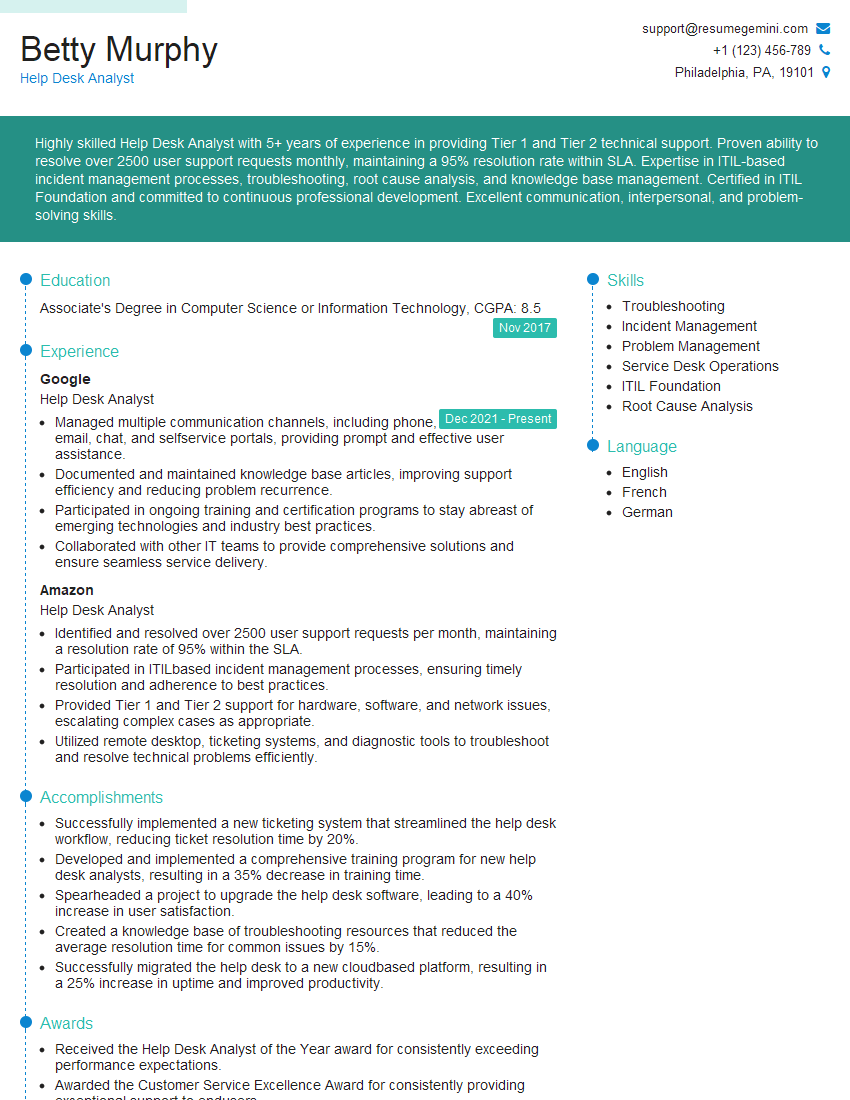

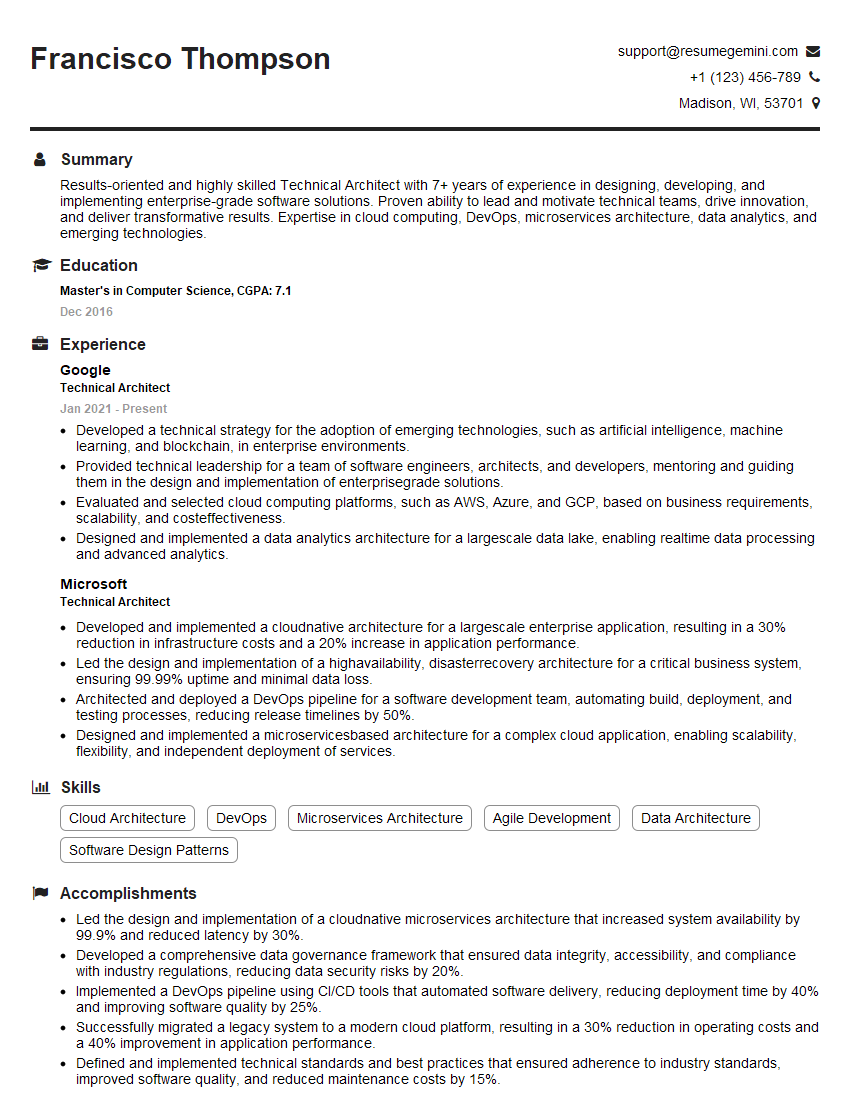

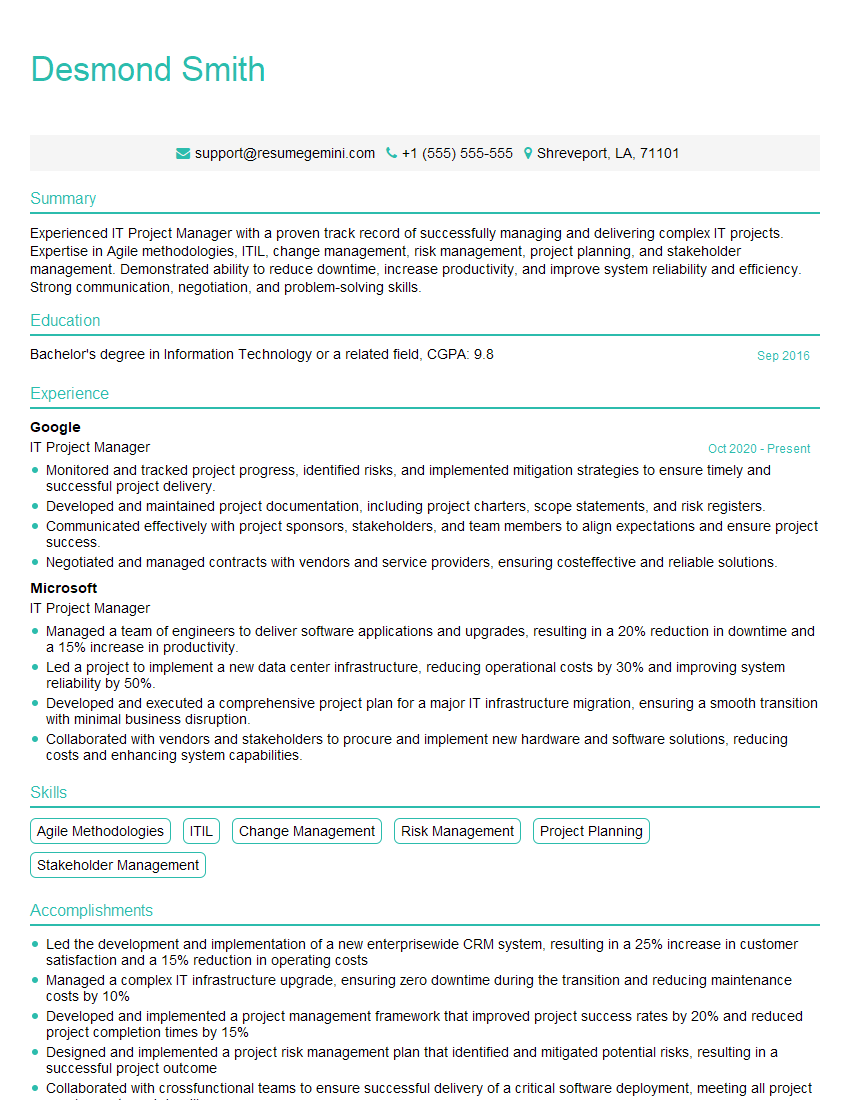

Mastering Information Technology proficiency is crucial for unlocking exciting career opportunities and accelerating your professional growth. A strong foundation in these key areas will significantly improve your chances of landing your dream IT role. To maximize your job prospects, it’s vital to create a resume that highlights your skills effectively and is optimized for Applicant Tracking Systems (ATS). We highly recommend using ResumeGemini to build a professional and ATS-friendly resume. ResumeGemini provides examples of resumes tailored to Information Technology (IT) Proficiency to help you showcase your skills and experience in the best possible light.
