Every successful interview starts with knowing what to expect. In this blog, we’ll take you through the top Random Forests interview questions, breaking them down with expert tips to help you deliver impactful answers. Step into your next interview fully prepared and ready to succeed.

Questions Asked in Random Forests Interview

Q 1. Explain the Random Forest algorithm.

A Random Forest is a powerful ensemble learning method for both classification and regression tasks. Imagine it as a diverse team of decision trees, each making its own prediction. Instead of relying on a single tree, which might be prone to overfitting or making inaccurate predictions due to noise in the data, a Random Forest aggregates the predictions from many trees, resulting in a more robust and accurate outcome. This process reduces variance and improves generalization to unseen data.

Each tree in the forest is trained on a slightly different bootstrap sample of the training data, and at each split it considers only a random subset of the features. This randomness ensures diversity among the trees, making the final prediction less sensitive to individual tree errors.

Q 2. What is bagging and how does it relate to Random Forests?

Bagging, short for Bootstrap Aggregating, is a core technique behind Random Forests. It involves creating multiple training sets, each the same size as the original, by randomly sampling the original data with replacement. Think of it like drawing marbles from a bag and putting each one back before the next draw: you can pick the same marble multiple times. Each tree in the forest therefore gets a slightly different training set.

In Random Forests, bagging reduces the variance of individual decision trees. By averaging the predictions of multiple trees trained on different subsets of the data, the final prediction is more stable and less influenced by noise or outliers in any single subset.
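A quick way to see what a bootstrap sample looks like is to draw one with NumPy. This sketch (synthetic row indices, arbitrary seed) draws n indices with replacement and measures what fraction of distinct rows appear. For large n this approaches $1 - 1/e \approx 0.632$, so each tree sees only about 63% of the unique rows.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 10_000
data_idx = np.arange(n)

# One bootstrap sample: n draws with replacement from the n row indices.
boot = rng.choice(data_idx, size=n, replace=True)

unique_frac = np.unique(boot).size / n
print(f"distinct rows in bootstrap sample: {unique_frac:.3f}")
print(f"theoretical 1 - (1 - 1/n)^n:       {1 - (1 - 1/n) ** n:.3f}")
```

The rows that were never drawn are exactly the "out-of-bag" rows used for OOB error estimation.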

Q 3. Describe the process of building a Random Forest model.

Building a Random Forest model involves several steps:

- Data Preparation: Clean and preprocess the data, handling missing values and transforming categorical features as needed.

- Bootstrap Sampling: Create multiple bootstrap samples (subsets) of the training data using random sampling with replacement.

- Tree Construction: For each bootstrap sample, build a decision tree. At each node of the tree, a random subset of features is selected, and the best split is chosen based on a criterion like Gini impurity or information gain.

- Prediction Aggregation: For a new data point, each tree in the forest makes a prediction. For regression, the average prediction is taken; for classification, the majority vote (most frequent prediction) is used.

The number of trees (n_estimators in scikit-learn) is a hyperparameter that determines the size of the forest. More trees generally improve accuracy but increase computational cost.
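To make the steps above concrete, here is a minimal from-scratch sketch that reuses scikit-learn’s DecisionTreeClassifier for the individual trees. The helper names fit_forest and predict_forest are hypothetical, and the dataset is synthetic. It uses a hard majority vote to match the description above; scikit-learn’s own RandomForestClassifier instead averages the trees’ predicted class probabilities.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier


def fit_forest(X, y, n_trees=50, seed=0):
    """Fit n_trees trees, each on a bootstrap sample, with a random
    subset of sqrt(n_features) candidate features at every split."""
    rng = np.random.default_rng(seed)
    n = len(X)
    trees = []
    for _ in range(n_trees):
        idx = rng.integers(0, n, size=n)  # bootstrap: sample rows with replacement
        tree = DecisionTreeClassifier(
            max_features="sqrt",  # random feature subset considered at each split
            random_state=int(rng.integers(1_000_000)),
        )
        tree.fit(X[idx], y[idx])
        trees.append(tree)
    return trees


def predict_forest(trees, X):
    """Hard majority vote over the trees' predictions (labels assumed 0..k-1)."""
    votes = np.stack([t.predict(X) for t in trees]).astype(int)  # (n_trees, n_samples)
    return np.array([np.bincount(col).argmax() for col in votes.T])


X, y = make_classification(n_samples=500, n_features=10, random_state=0)
trees = fit_forest(X, y)
pred = predict_forest(trees, X)
print("training accuracy:", (pred == y).mean())
```

For regression, the vote would be replaced by the mean of the trees’ numeric predictions.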

Q 4. How does Random Forest handle missing values?

Random Forests handle missing values in several ways, often depending on the specific implementation. Some common approaches include:

- Imputation: Missing values can be filled with the mean, median, or mode of the respective feature. This is a simple but potentially inaccurate method if the missingness is not random.

- Proxy Variables: If other features strongly correlate with the one containing missing values, these could be used to predict the missing entries.

- Surrogate Splits: Some tree implementations (for example, CART as implemented in R’s rpart) store, for each split, backup splits on other features that best mimic the primary split. When a data point is missing the primary feature, the tree routes it using the best available surrogate instead.

Some implementations handle missing data natively. For example, scikit-learn’s RandomForestClassifier accepts NaN values directly starting with version 1.4; earlier versions require missing values to be imputed before training.
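Because native NaN support depends on the library version, a version-independent option is to impute inside a pipeline, so the fill values are learned on the training data and reused at prediction time. This is a minimal sketch using scikit-learn’s SimpleImputer; the synthetic data and the roughly 10% missing rate are assumptions for illustration.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.impute import SimpleImputer
from sklearn.pipeline import make_pipeline

X, y = make_classification(n_samples=300, n_features=6, random_state=0)
rng = np.random.default_rng(0)
X_missing = X.copy()
X_missing[rng.random(X.shape) < 0.1] = np.nan  # knock out ~10% of entries

model = make_pipeline(
    # Median fill, plus binary "was missing" indicator columns.
    SimpleImputer(strategy="median", add_indicator=True),
    RandomForestClassifier(n_estimators=100, random_state=0),
)
model.fit(X_missing, y)
print(f"training accuracy: {model.score(X_missing, y):.3f}")
```

The indicator columns let the forest split on missingness itself, which helps when values are not missing at random.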

Q 5. Explain the concept of feature importance in Random Forests.

Feature importance in Random Forests quantifies the contribution of each feature to the model’s predictive accuracy. It helps understand which features are most relevant for the task and can guide feature selection or engineering. Common methods for calculating feature importance include:

- Gini Importance (Mean Decrease in Impurity): Measures the average reduction in Gini impurity across all trees caused by each feature. Higher values indicate more importance.

- Permutation Importance (Mean Decrease in Accuracy): This method assesses the change in model accuracy when the values of a specific feature are randomly permuted. A larger drop in accuracy indicates a more important feature.

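Impurity-based scores (for example, scikit-learn’s feature_importances_ attribute) are normalized to sum to 1, providing a relative measure of each feature’s contribution. Permutation importances are not normalized and can even be slightly negative for irrelevant features.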
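The sketch below computes both kinds of importance with scikit-learn on synthetic data (the dataset and parameter values are illustrative assumptions). Impurity-based importance comes from the fitted model’s feature_importances_ attribute; permutation importance is computed on a held-out test set with sklearn.inspection.permutation_importance.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

X, y = make_classification(n_samples=1000, n_features=8, n_informative=3,
                           n_redundant=0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

rf = RandomForestClassifier(n_estimators=200, random_state=0).fit(X_train, y_train)

# Impurity-based (MDI) importance: derived from the training splits, sums to 1.
mdi = rf.feature_importances_

# Permutation importance: mean drop in test accuracy when one column is shuffled.
perm = permutation_importance(rf, X_test, y_test, n_repeats=10, random_state=0)

for i in np.argsort(mdi)[::-1]:
    print(f"feature {i}: MDI={mdi[i]:.3f}  permutation={perm.importances_mean[i]:.3f}")
```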

Q 6. How do you choose the optimal number of trees in a Random Forest?

Choosing the number of trees is a balance between model accuracy and computational cost. Too few trees give noisy, high-variance predictions, while beyond a certain point additional trees add training time without meaningfully improving accuracy. Common strategies for choosing the number of trees include:

- Cross-Validation: Train multiple Random Forests with different numbers of trees and evaluate their performance using cross-validation techniques. Select the number of trees that yields the best cross-validated performance.

- Out-of-Bag (OOB) Error: Each tree’s bootstrap sample leaves out some data points (that tree’s out-of-bag data). The OOB error is computed by predicting each training point using only the trees that did not see it during training and measuring the error of those predictions. Plotting the OOB error against the number of trees shows where the returns start to diminish.

- Early Stopping: Monitor the performance on a validation set during training. Stop adding trees when the improvement in performance plateaus.
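The OOB and early-stopping ideas above can be combined in one loop: with warm_start=True, scikit-learn adds trees to an existing forest instead of refitting from scratch, and oob_score=True recomputes the OOB accuracy after each fit. This is a sketch on synthetic data; the step size of 25 trees is an arbitrary choice.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

X, y = make_classification(n_samples=1000, n_features=20, random_state=0)

rf = RandomForestClassifier(warm_start=True, oob_score=True, random_state=0)
oob_errors = {}
for n_trees in range(25, 301, 25):
    rf.set_params(n_estimators=n_trees)
    rf.fit(X, y)  # with warm_start=True, only the newly added trees are trained
    oob_errors[n_trees] = 1 - rf.oob_score_

for n_trees, err in oob_errors.items():
    print(f"{n_trees:>3} trees: OOB error = {err:.4f}")
```

In practice, you would stop increasing n_estimators once this curve flattens.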

Q 7. How does Random Forest deal with overfitting?

Random Forests are relatively resistant to overfitting, largely due to bagging and feature randomness. Each fully grown tree is a low-bias, high-variance learner that tends to overfit its own bootstrap sample. Because each tree is trained on a different sample and chooses among different features at each split, the trees’ errors are only partly correlated, and averaging their predictions cancels much of that variance. The randomness in feature selection further decorrelates the trees by preventing all of them from relying on the same dominant features.

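Adding more trees does not by itself cause overfitting; the ensemble’s predictions simply converge as the forest grows. A forest can still overfit when its individual trees are grown extremely deep on small or noisy datasets. Hyperparameter tuning with cross-validation and limiting tree growth (for example, via max_depth or min_samples_leaf) are the main ways to control overfitting in Random Forests.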

Q 8. What are the advantages and disadvantages of Random Forests compared to other algorithms?

Random Forests are an ensemble learning method that combines multiple decision trees to improve predictive accuracy and robustness. Compared to other algorithms, they offer several advantages and disadvantages:

- Advantages:

- High Accuracy: Random Forests often achieve higher accuracy than single decision trees due to the averaging effect of multiple trees.

- Handles High Dimensionality: They can effectively handle datasets with a large number of features.

- Robust to Outliers: The ensemble nature makes them less sensitive to outliers in the data.

- Provides Feature Importance: They offer insights into which features are most influential in the predictions.

- Handles Missing Data: Many implementations can handle missing data effectively.

- Disadvantages:

- Computational Cost: Training Random Forests can be computationally expensive, especially with large datasets and many trees.

- Black Box Nature: While feature importance is provided, interpreting the internal workings of a Random Forest can be challenging compared to simpler models like linear regression.

- Overfitting (though less prone than single trees): With inappropriate hyperparameters, such as very deep trees on small or noisy datasets, they can still overfit. Adding more trees, by contrast, does not cause overfitting.

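- Bias in Impurity-Based Feature Importance: Features with many unique values (continuous features or categorical features with many levels) can receive inflated impurity-based importance scores if not handled carefully; permutation importance is less affected.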

For example, consider predicting customer churn. A Random Forest might outperform a simple logistic regression by better capturing complex non-linear relationships between customer demographics and churn behavior. However, training a Random Forest on a massive customer database could take significantly longer than training a logistic regression model.

Q 9. Explain the difference between Gini impurity and entropy in Random Forest.

Both Gini impurity and entropy are measures of node impurity used in decision tree construction, and therefore within Random Forests. They quantify the level of disorder or randomness in a node’s data points. A pure node (all data points belong to the same class) has zero impurity. The goal of the tree building process is to minimize impurity at each split.

- Gini Impurity: Measures the probability of incorrectly classifying a randomly chosen element from the node if it were labeled at random according to the node’s class distribution. It’s calculated as:

$$G = 1 - \sum_{i} p_i^2$$

where $p_i$ is the proportion of data points belonging to class $i$ in the node.

- Entropy: Measures the uncertainty or randomness in a node. It’s calculated as:

$$H = -\sum_{i} p_i \log_2 p_i$$

A higher entropy value indicates greater uncertainty.

While both criteria aim to minimize impurity, Gini impurity is slightly cheaper to compute because it avoids logarithms, which is one reason it is the default in many implementations, including scikit-learn. In practice, the difference in performance between using Gini impurity and entropy is often negligible.
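The two impurity measures above are easy to compute directly from a node’s class proportions. A minimal NumPy sketch (the helper names gini and entropy are illustrative, not a library API):

```python
import numpy as np


def gini(p):
    """Gini impurity 1 - sum(p_i^2) for a vector of class proportions."""
    p = np.asarray(p, dtype=float)
    return float(1.0 - np.sum(p ** 2))


def entropy(p):
    """Entropy -sum(p_i * log2 p_i), written as sum(p_i * log2(1/p_i)).
    Classes with p_i = 0 are dropped, i.e. 0 * log2(0) is treated as 0."""
    p = np.asarray(p, dtype=float)
    p = p[p > 0]
    return float(np.sum(p * np.log2(1.0 / p)))


for dist in ([1.0, 0.0], [0.9, 0.1], [0.5, 0.5]):
    print(dist, f"gini={gini(dist):.3f}", f"entropy={entropy(dist):.3f}")
```

Both are 0 for a pure node and maximal for a 50/50 binary node (Gini 0.5, entropy 1 bit); they rank most candidate splits the same way, which is why the choice rarely matters.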

Q 10. How do you tune hyperparameters for a Random Forest model?

Hyperparameter tuning for Random Forests involves finding the optimal settings for parameters that control the model’s training process. This often requires experimentation and the use of techniques like grid search or randomized search.

- n_estimators: The number of trees in the forest. Increasing this generally improves accuracy up to a point, but also increases computation time. A good starting point is often between 100 and 500.

- max_depth: The maximum depth of each tree. Controlling this prevents overfitting. Start with a relatively large value and reduce it if overfitting is observed.

- min_samples_split: The minimum number of samples required to split an internal node. Increasing this can prevent overfitting.

- min_samples_leaf: The minimum number of samples required to be at a leaf node. Similar to min_samples_split, it helps control overfitting.

- max_features: The number of features to consider when looking for the best split. A smaller value can improve efficiency and prevent overfitting.

- criterion: The function to measure the quality of a split (Gini impurity or entropy).

A common approach is to use k-fold cross-validation to evaluate each hyperparameter combination and select the combination with the best average score across the validation folds.

Tools like scikit-learn’s GridSearchCV or RandomizedSearchCV automate this process.
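A sketch of randomized search over the hyperparameters listed above, using scikit-learn’s RandomizedSearchCV with SciPy distributions. The search space, the small number of iterations, and cv=3 are assumptions chosen to keep the example fast; a real search would usually try more combinations.

```python
from scipy.stats import randint
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import RandomizedSearchCV

X, y = make_classification(n_samples=500, n_features=15, random_state=0)

param_distributions = {
    "n_estimators": randint(100, 301),      # integers in [100, 300]
    "max_depth": [None, 5, 10, 20],
    "min_samples_split": randint(2, 11),
    "min_samples_leaf": randint(1, 5),
    "max_features": ["sqrt", "log2", 0.5],
    "criterion": ["gini", "entropy"],
}

search = RandomizedSearchCV(
    RandomForestClassifier(random_state=0, n_jobs=-1),
    param_distributions=param_distributions,
    n_iter=5,            # number of sampled combinations
    cv=3,                # 3-fold cross-validation per combination
    scoring="accuracy",
    random_state=0,
)
search.fit(X, y)
print(search.best_params_)
print(f"best mean CV accuracy: {search.best_score_:.3f}")
```

GridSearchCV takes the same estimator and a param_grid of explicit lists instead, and tries every combination.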

Q 11. What is the impact of the ‘max_depth’ parameter on Random Forest performance?

The max_depth parameter in Random Forest significantly impacts model performance. It limits the maximum depth of each decision tree in the forest.

- Low max_depth: Results in simpler, less complex trees. This reduces the risk of overfitting, especially with noisy data, but might lead to underfitting if the depth is too small. The model may not capture complex relationships.

- High max_depth: Allows for more complex trees that can capture intricate relationships in the data. However, this increases the risk of overfitting, where the model performs well on training data but poorly on unseen data.

Finding the optimal max_depth often involves a trade-off between bias and variance. A common strategy is to start with a relatively large max_depth and gradually reduce it while monitoring performance using cross-validation. Visualizing the performance curve (accuracy vs. max_depth) can help identify the point of diminishing returns.
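The accuracy-versus-max_depth curve can be produced with scikit-learn’s validation_curve, which reports training and cross-validated scores for each parameter value. In this sketch the synthetic data include 10% label noise (flip_y=0.1) so that very deep trees have something to overfit; the depth grid is an arbitrary choice.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import validation_curve

X, y = make_classification(n_samples=600, n_features=20, flip_y=0.1, random_state=0)
depths = [1, 2, 4, 8, 16, 32]

train_scores, val_scores = validation_curve(
    RandomForestClassifier(n_estimators=50, random_state=0),
    X, y,
    param_name="max_depth",
    param_range=depths,
    cv=5,
)
# Rows correspond to depths, columns to CV folds.
for d, tr, va in zip(depths, train_scores.mean(axis=1), val_scores.mean(axis=1)):
    print(f"max_depth={d:>2}: train={tr:.3f}  validation={va:.3f}")
```

A widening gap between training and validation scores at large depths is the overfitting signal; the best depth is usually where the validation score peaks or levels off.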

Q 12. How does Random Forest handle categorical variables?

Random Forests handle categorical variables in several ways, depending on the specific implementation. Most implementations use techniques that convert categorical features into numerical representations:

- One-Hot Encoding: Each category becomes a binary feature (0 or 1). This is a common and often effective approach.

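- Label Encoding: Assigns an integer to each category. This is simpler but introduces an artificial ordering that may not exist in the data. Tree-based models often tolerate this reasonably well, because repeated splits can still isolate individual categories, but the arbitrary order can make splits less efficient, and it is a poor choice for models that treat the integers as magnitudes, such as linear models.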

- Target Encoding: Each category is replaced by the average target value for that category. This can be effective but is prone to overfitting if not handled carefully (e.g., using regularization or smoothing techniques).

The choice of encoding method can influence the performance of the Random Forest. One-hot encoding is generally preferred for nominal features (where order doesn’t matter), although it can create very many columns when a feature has many categories; target encoding or grouping rare categories can help there. For ordinal features (where order matters), an integer encoding that follows the natural order of the categories preserves that information.
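A sketch of mixing encodings in one scikit-learn pipeline: one-hot for a nominal column and an order-preserving integer encoding for an ordinal column. The toy churn-style DataFrame and its column names are hypothetical.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.ensemble import RandomForestClassifier
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, OrdinalEncoder

# Hypothetical toy data: "plan" is nominal, "size" is ordinal (S < M < L).
df = pd.DataFrame({
    "plan": ["basic", "pro", "basic", "enterprise", "pro", "basic", "enterprise", "pro"],
    "size": ["S", "M", "L", "L", "S", "M", "M", "L"],
    "tenure_months": [3, 24, 5, 36, 12, 2, 48, 18],
})
y = [1, 0, 1, 0, 0, 1, 0, 0]

preprocess = ColumnTransformer(
    [
        ("nominal", OneHotEncoder(handle_unknown="ignore"), ["plan"]),
        ("ordinal", OrdinalEncoder(categories=[["S", "M", "L"]]), ["size"]),
    ],
    remainder="passthrough",  # numeric column passes through unchanged
)

model = Pipeline([
    ("prep", preprocess),
    ("rf", RandomForestClassifier(n_estimators=100, random_state=0)),
])
model.fit(df, y)
print(model.predict(df.head(2)))
```

handle_unknown="ignore" encodes a category never seen during training as all zeros instead of raising an error.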

Q 13. Explain the concept of out-of-bag error.

Out-of-bag (OOB) error is a crucial evaluation metric for Random Forests that leverages the bootstrapping process during training. In Random Forests, each tree is trained on a bootstrap sample of the data (a random sample with replacement). This means some data points are left out of the training set for each tree.

These left-out data points, called OOB samples, are used to estimate the model’s performance without the need for a separate validation set. Each training point is predicted using only the trees whose bootstrap samples did not include it. A given point is left out of a bootstrap sample of size $n$ with probability $(1 - 1/n)^n \approx e^{-1} \approx 0.368$, so roughly a third of the trees are available for each point. The OOB error is the error of these predictions averaged over all training points, and it usually gives a good estimate of the model’s generalization performance.

OOB error is a valuable tool because it eliminates the need for a separate hold-out validation set, making it particularly useful when the dataset is small.
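In scikit-learn, OOB estimation is enabled with oob_score=True; the fitted forest then exposes oob_score_ (accuracy, for classifiers) and oob_decision_function_. This sketch compares it with a 5-fold cross-validated estimate on synthetic data (an assumption); the two numbers are usually close, but the OOB estimate needs only a single fit.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score

X, y = make_classification(n_samples=1000, n_features=20, random_state=0)

rf = RandomForestClassifier(n_estimators=300, oob_score=True, random_state=0, n_jobs=-1)
rf.fit(X, y)
print(f"OOB accuracy:       {rf.oob_score_:.3f}")

# For comparison only: a 5-fold cross-validated estimate costs five extra fits.
cv_acc = cross_val_score(
    RandomForestClassifier(n_estimators=300, random_state=0, n_jobs=-1), X, y, cv=5
).mean()
print(f"5-fold CV accuracy: {cv_acc:.3f}")
```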

Q 14. How do you interpret the feature importance scores from a Random Forest model?

Feature importance scores from a Random Forest quantify the contribution of each feature to the model’s predictive power. These scores are typically derived by measuring how much each feature reduces the impurity (e.g., Gini impurity or entropy) across all the trees in the forest.

A higher score indicates a more influential feature. However, it’s crucial to interpret these scores with caution:

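- Correlation vs. Causation: High feature importance doesn’t imply a causal relationship; a feature can look important simply because it is correlated with the true driver. Conversely, when several features are highly correlated, they tend to share the importance among themselves, so each may look less important than the underlying signal really is.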

- Interaction Effects: The scores might not reflect interaction effects between features. A feature might be important only in conjunction with other features.

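- Cardinality Bias: Impurity-based scores tend to favor features with many unique values, such as continuous or high-cardinality categorical features, even when they carry little signal. Feature scaling does not affect tree splits, so standardizing features does not change these scores; permutation importance computed on held-out data is a less biased check.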

Despite these limitations, feature importance scores are valuable tools for understanding which variables are most relevant to the prediction task. This can inform feature engineering, feature selection, and model interpretation.

For example, in a credit risk model, high feature importance for ‘credit history’ suggests that this variable strongly influences the model’s predictions, providing valuable insight into the factors driving credit risk.
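The cardinality caveat is easy to reproduce. In this sketch (synthetic data, illustrative settings), a column of pure random noise is appended to the features: impurity-based importance typically assigns it a noticeably non-zero score, while permutation importance on held-out data stays close to zero.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

X, y = make_classification(n_samples=1000, n_features=4, n_informative=2,
                           n_redundant=0, random_state=0)
rng = np.random.default_rng(0)
# Append a column of pure noise with ~1000 distinct values (column index 4).
X = np.column_stack([X, rng.random(len(X))])
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

rf = RandomForestClassifier(n_estimators=200, random_state=0).fit(X_train, y_train)
perm = permutation_importance(rf, X_test, y_test, n_repeats=10, random_state=0)

print(f"noise column, impurity-based (MDI): {rf.feature_importances_[4]:.3f}")
print(f"noise column, permutation on test:  {perm.importances_mean[4]:.3f}")
```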

Q 15. How can you improve the performance of a Random Forest model?

Improving a Random Forest’s performance involves optimizing various aspects of the model. It’s not a one-size-fits-all solution, but rather a process of iterative refinement. Here are key strategies:

Hyperparameter Tuning: This is crucial. Key hyperparameters include the number of trees (n_estimators), maximum depth of each tree (max_depth), minimum samples required to split an internal node (min_samples_split), and minimum samples required to be at a leaf node (min_samples_leaf). Experiment with different combinations using techniques like grid search or randomized search to find the optimal settings for your specific dataset. For instance, increasing n_estimators generally improves accuracy (up to a point of diminishing returns), while controlling max_depth helps prevent overfitting.

Feature Engineering: Carefully selecting and transforming features can significantly impact performance. This involves understanding your data and creating new features that better capture the underlying patterns. For example, combining existing features or creating interaction terms might improve predictive accuracy.

Feature Selection: Not all features are equally important. Removing irrelevant or redundant features can reduce noise and improve model efficiency. Techniques like recursive feature elimination or feature importance scores from the Random Forest itself can help identify important features.

Addressing Class Imbalance: If your dataset has an uneven distribution of classes, techniques like oversampling the minority class, undersampling the majority class, or using cost-sensitive learning can be applied to improve the model’s ability to predict the less frequent class.

Data Cleaning and Preprocessing: Handling missing values, outliers, and inconsistencies in the data is essential for any machine learning model. Techniques like imputation (filling in missing values) or outlier removal can greatly enhance performance.

Ensemble Methods (Beyond Random Forest): Consider combining your Random Forest with other models using techniques like stacking or voting. This can leverage the strengths of different algorithms and potentially improve overall predictive power.
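A minimal sketch of stacking with scikit-learn’s StackingClassifier, combining a Random Forest with a scaled logistic regression and using a logistic regression as the meta-model. The choice of base models and the synthetic data are assumptions for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = make_classification(n_samples=600, n_features=20, random_state=0)

stack = StackingClassifier(
    estimators=[
        ("rf", RandomForestClassifier(n_estimators=100, random_state=0)),
        ("logreg", make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))),
    ],
    # The meta-model learns how to weight the base models' out-of-fold predictions.
    final_estimator=LogisticRegression(),
    cv=5,
)
scores = cross_val_score(stack, X, y, cv=3)
print(f"stacked model CV accuracy: {scores.mean():.3f}")
```

Stacking helps most when the base models make different kinds of errors; a forest and a linear model are a common pairing for that reason.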

Imagine you’re building a model to predict customer churn. By carefully tuning hyperparameters, you might find the optimal number of trees and maximum depth that balances model complexity and prediction accuracy. Feature engineering could involve creating new features such as ‘average transaction value’ or ‘days since last purchase’ which might significantly improve the model’s ability to identify at-risk customers.


Q 16. Describe a situation where you would choose Random Forest over other algorithms.

I’d choose Random Forest over other algorithms when:

High dimensionality and complex relationships: Random Forests excel at handling datasets with numerous features and complex interactions between them. Unlike linear models that assume linear relationships, Random Forests can capture non-linear patterns effectively.

Robustness to outliers and noise: The ensemble nature of Random Forests makes them relatively robust to noisy data and outliers. The averaging effect of multiple trees helps mitigate the influence of individual noisy data points.

Interpretability (to a degree): While not as interpretable as a single decision tree, Random Forests provide feature importance scores which can offer insights into which variables are most influential in the predictions. This is valuable for understanding the underlying drivers of the outcome.

Need for high accuracy: Random Forests frequently achieve high accuracy compared to other algorithms, especially when dealing with complex datasets.

Minimal data preprocessing: Random Forests generally require less data preprocessing compared to some other algorithms, particularly regarding feature scaling or normalization.

For example, in fraud detection, where you might have a vast number of transactional features and a relatively small number of fraudulent cases, a Random Forest’s ability to handle high dimensionality, combined with class weighting or resampling to address the imbalance, makes it a strong candidate. Its robustness to noisy data is also beneficial, as real-world transactional data often contains inconsistencies and errors.

Q 17. How do you evaluate the performance of a Random Forest model?

Evaluating a Random Forest’s performance involves a combination of metrics tailored to the problem’s nature. For classification problems, common metrics include:

Accuracy: The overall percentage of correctly classified instances. Simple but can be misleading with imbalanced datasets.

Precision: The proportion of true positive predictions among all positive predictions. Important when the cost of false positives is high (e.g., spam filtering, where flagging a legitimate email is costly).

Recall (Sensitivity): The proportion of true positive predictions among all actual positives. Important when the cost of false negatives is high (e.g., fraud detection).

F1-score: The harmonic mean of precision and recall, providing a balanced measure.

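AUC-ROC (Area Under the Receiver Operating Characteristic Curve): A threshold-independent measure of the model’s ability to distinguish between classes. It is far less misleading than accuracy on imbalanced datasets, although with severe imbalance the area under the precision-recall curve is often more informative.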

For regression problems, common metrics include:

Mean Squared Error (MSE): The average squared difference between predicted and actual values.

Root Mean Squared Error (RMSE): The square root of MSE, easier to interpret as it’s in the same units as the target variable.

R-squared: The proportion of variance in the target variable explained by the model.

Beyond these, techniques like cross-validation are crucial for obtaining robust performance estimates and detecting overfitting. K-fold cross-validation is a common approach: the data is divided into k subsets, the model is trained on k-1 subsets and tested on the remaining one, and this is repeated k times so that each subset serves as the test set once.
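The classification metrics above can all be computed in one cross-validation run with scikit-learn’s cross_validate. This sketch uses synthetic data with a 90/10 class split (an assumption) so that the gap between accuracy and the other metrics is visible; "average_precision" summarizes the precision-recall curve. For regression, scoring strings such as "neg_root_mean_squared_error" and "r2" play the same role.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_validate

X, y = make_classification(n_samples=1000, n_features=20, weights=[0.9, 0.1], random_state=0)

metrics = ["accuracy", "precision", "recall", "f1", "roc_auc", "average_precision"]
scores = cross_validate(
    RandomForestClassifier(n_estimators=200, random_state=0, n_jobs=-1),
    X, y,
    cv=5,             # stratified 5-fold for classifiers
    scoring=metrics,
)
for name in metrics:
    print(f"{name:>17}: {scores['test_' + name].mean():.3f}")
```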

Q 18. Explain the bias-variance tradeoff in the context of Random Forests.

The bias-variance tradeoff is a fundamental concept in machine learning. Bias refers to the error introduced by approximating a real-world problem with a simplified model. High bias leads to underfitting, where the model is too simple to capture the underlying patterns in the data. Variance refers to the model’s sensitivity to fluctuations in the training data. High variance leads to overfitting, where the model learns the training data too well and performs poorly on unseen data.

Random Forests strike a good balance between bias and variance. Bagging (bootstrap aggregating) reduces variance by averaging the predictions of many decision trees, each trained on a different bootstrap sample of the data. Selecting a random subset of candidate features at each split (the random subspace idea) further decorrelates the trees, which makes the averaging more effective and keeps individual overfit trees from dominating. The combination of many diverse trees gives a much lower-variance model than a single decision tree. The forest’s bias is roughly that of its individual trees (sometimes slightly higher, because of the feature restriction), but the reduction in variance usually leads to better generalization on unseen data.
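The variance-reduction argument above can be checked empirically: train a single tree and a forest on many independently drawn training sets and measure how much their predictions at fixed points move around. This sketch uses a synthetic noisy sine curve (an assumption). Because there is only one input feature, the forest here reduces to bagged trees, so it isolates the bagging part of the argument.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.tree import DecisionTreeRegressor

rng = np.random.default_rng(0)
X_grid = np.linspace(0, 6, 200).reshape(-1, 1)  # fixed points where predictions are compared


def sample_training_set(n=200):
    """Draw a fresh noisy training set from y = sin(x) + noise."""
    X = rng.uniform(0, 6, size=(n, 1))
    y = np.sin(X[:, 0]) + rng.normal(scale=0.3, size=n)
    return X, y


tree_preds, forest_preds = [], []
for seed in range(20):
    X, y = sample_training_set()
    tree_preds.append(DecisionTreeRegressor(random_state=seed).fit(X, y).predict(X_grid))
    forest_preds.append(
        RandomForestRegressor(n_estimators=100, random_state=seed).fit(X, y).predict(X_grid)
    )

# Variance of the prediction at each grid point across the 20 training sets,
# averaged over the grid.
tree_var = np.var(tree_preds, axis=0).mean()
forest_var = np.var(forest_preds, axis=0).mean()
print(f"single tree prediction variance:   {tree_var:.4f}")
print(f"random forest prediction variance: {forest_var:.4f}")
```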

Imagine you’re aiming an arrow at a target. High bias is like consistently missing the target in the same direction (your model is consistently wrong in a predictable way). High variance is like your shots being scattered all over the target (your model is highly sensitive to small changes in the training data). A well-tuned Random Forest aims for a tight grouping of shots near the bullseye, balancing both bias and variance.

Q 19. What is the difference between a decision tree and a Random Forest?

A decision tree is a single tree-like structure that recursively partitions the data based on feature values to make predictions. It’s relatively simple to understand and interpret but prone to overfitting, especially with complex datasets.

A Random Forest is an ensemble method that combines multiple decision trees. Each tree is trained on a different bootstrap sample of the data, and a random subset of features is considered at each split. The final prediction is typically an average of the predictions from all trees (for regression) or a majority vote (for classification). This ensemble approach significantly reduces overfitting and generally leads to improved accuracy and robustness.

Think of it like this: a single decision tree is like a single expert making a decision. A Random Forest is like having a panel of many experts, each with slightly different viewpoints and training, who collectively make a more informed and reliable decision.

Q 20. How do you handle imbalanced datasets when using Random Forests?

Imbalanced datasets, where one class significantly outweighs others, pose a challenge for many machine learning algorithms, including Random Forests. Here are some strategies to handle this:

Resampling Techniques:

Oversampling: Increase the number of instances in the minority class by duplicating existing instances or generating synthetic samples (SMOTE – Synthetic Minority Over-sampling Technique).

Undersampling: Reduce the number of instances in the majority class by randomly removing samples.

Cost-Sensitive Learning: Assign different misclassification costs to different classes. For example, give a higher penalty to misclassifying the minority class. This can be done by adjusting class weights in the Random Forest algorithm.

Anomaly Detection Techniques: If the minority class represents anomalies (e.g., fraud), consider framing the problem as an anomaly detection task and using appropriate algorithms.

Ensemble Methods: Combine different models trained on different resampled datasets or with different cost-sensitive parameters.

In a credit card fraud detection scenario, where fraudulent transactions are significantly less frequent than legitimate ones, oversampling the fraudulent transactions or using cost-sensitive learning (giving a higher penalty for misclassifying a fraudulent transaction as legitimate) can greatly improve the model’s ability to detect fraud.
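A sketch of two of the strategies above in scikit-learn, on synthetic data with a 95/5 class split (an assumption): cost-sensitive learning through the class_weight parameter, and random undersampling of the majority class written in plain NumPy. SMOTE is not shown because it lives in a separate library (imbalanced-learn). Class weights act through the impurity calculation and the class proportions in each leaf, so their effect tends to be stronger when leaves are not forced to be pure; min_samples_leaf=5 here is an illustrative choice.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import recall_score
from sklearn.model_selection import train_test_split

X, y = make_classification(n_samples=2000, n_features=20, weights=[0.95, 0.05], random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, stratify=y, random_state=0)

# Option 1: cost-sensitive learning via class weights recomputed per bootstrap sample.
weighted = RandomForestClassifier(n_estimators=200, class_weight="balanced_subsample",
                                  min_samples_leaf=5, random_state=0)
weighted.fit(X_train, y_train)

# Option 2: random undersampling of the majority class (plain NumPy).
rng = np.random.default_rng(0)
minority = np.flatnonzero(y_train == 1)
majority = rng.choice(np.flatnonzero(y_train == 0), size=len(minority), replace=False)
keep = np.concatenate([minority, majority])
undersampled = RandomForestClassifier(n_estimators=200, random_state=0)
undersampled.fit(X_train[keep], y_train[keep])

for name, model in [("class_weight", weighted), ("undersampled", undersampled)]:
    recall = recall_score(y_test, model.predict(X_test))
    print(f"{name}: minority-class recall = {recall:.3f}")
```

Resampling is applied only to the training split; the test set keeps its original imbalance so the evaluation reflects real conditions.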

Q 21. How would you explain a Random Forest model to a non-technical audience?

Imagine you need to decide if a picture shows a cat or a dog. Instead of relying on one person’s opinion, you ask many different people. Each person looks at the picture and gives their best guess. Some people might be better at identifying cats, others might be better at identifying dogs.

A Random Forest is similar. It’s like asking many different ‘experts’ (decision trees) to make a prediction. Each expert is trained on a slightly different set of images, and they look at only some of the picture’s features. The final answer is the majority vote from all the experts. This approach makes the prediction more accurate and reliable because it considers many different perspectives and reduces the impact of any single expert’s mistakes.

Q 22. What are some common pitfalls to avoid when using Random Forests?

Random Forests, while powerful, are susceptible to several pitfalls. One major issue is overfitting, especially when fully grown trees are trained on small or noisy datasets. Imagine trying to build a house with too many oddly shaped bricks (features): it might perfectly fit the existing foundation (training data) but fall apart when faced with a new one (unseen data). To avoid this, proper hyperparameter tuning (such as tree depth and minimum leaf size) through techniques such as cross-validation is crucial; adding more trees, on the other hand, does not cause overfitting. Another pitfall is the ‘black box’ nature of Random Forests: interpreting individual tree contributions is difficult, and feature importance scores are only a partial, sometimes biased, summary of what the model has learned. Finally, highly correlated features split importance among themselves, which makes importance scores unstable and hard to interpret and can, in some cases, reduce predictive accuracy. Feature engineering or dimensionality reduction techniques can help mitigate this.

In practice, always start with a thorough exploratory data analysis (EDA) to understand your data and potential issues. Implement robust cross-validation strategies to avoid overfitting and select optimal hyperparameters. Feature selection techniques can help combat high dimensionality and correlated features.

Q 23. How do you deal with high dimensionality in Random Forests?

High dimensionality is a common challenge in machine learning, and Random Forests are not immune. Dealing with it effectively requires a multi-pronged approach. One solution is feature selection, where we identify and keep only the most relevant features. Techniques like recursive feature elimination (RFE) or filter methods based on correlation or mutual information can help. Another approach is dimensionality reduction using methods like Principal Component Analysis (PCA), which projects the data onto a smaller number of directions that preserve as much variance as possible. (t-distributed Stochastic Neighbor Embedding, or t-SNE, is sometimes mentioned here, but it is designed for visualization and cannot transform new data points, so it is rarely suitable as a preprocessing step for a predictive model.) Finally, Random Forests themselves offer some built-in robustness to high dimensionality through the random subspace method (explained further in a later question): by considering only a random subset of features at each split, the algorithm reduces the cost of each split and limits the influence of any single feature.

For example, if you have a dataset with hundreds of features, applying PCA to reduce it to the top 20 principal components might significantly improve training speed and potentially model accuracy by eliminating redundant or noisy features.
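Both approaches above can be wrapped in a scikit-learn pipeline so that the reduction step is refit inside each cross-validation fold. This sketch compares PCA to 20 components with importance-based selection via SelectFromModel on synthetic data with 300 features; all sizes and thresholds are illustrative assumptions. PCA mixes the original features, which can make the forest’s feature importances harder to interpret.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import SelectFromModel
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline

X, y = make_classification(n_samples=800, n_features=300, n_informative=15, random_state=0)

pca_rf = make_pipeline(
    PCA(n_components=20, random_state=0),
    RandomForestClassifier(n_estimators=200, random_state=0, n_jobs=-1),
)

select_rf = make_pipeline(
    # Keep features whose forest importance is at least the median importance.
    SelectFromModel(RandomForestClassifier(n_estimators=200, random_state=0, n_jobs=-1),
                    threshold="median"),
    RandomForestClassifier(n_estimators=200, random_state=0, n_jobs=-1),
)

for name, model in [("PCA(20) + RF", pca_rf), ("importance selection + RF", select_rf)]:
    print(name, cross_val_score(model, X, y, cv=3).mean().round(3))
```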

Q 24. How can you parallelize the training of a Random Forest model?

The beauty of Random Forests is their inherent parallelizability. Since each tree is grown independently of the others, building the trees can be easily distributed across multiple cores or machines. Most Random Forest implementations take advantage of this, significantly reducing training time, especially for large datasets. In scikit-learn, the n_jobs parameter controls how many cores are used (n_jobs=-1 uses all available cores). For even larger datasets, distributed computing frameworks like Spark (through its MLlib library) or Hadoop can be utilized.
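A small timing sketch of the n_jobs parameter on synthetic data. Actual speedups depend on the machine’s core count, so no particular timings are implied.

```python
import time

from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

X, y = make_classification(n_samples=5_000, n_features=40, random_state=0)

models = {}
for n_jobs in (1, -1):  # 1 = single core, -1 = all available cores
    rf = RandomForestClassifier(n_estimators=100, n_jobs=n_jobs, random_state=0)
    start = time.perf_counter()
    rf.fit(X, y)
    print(f"n_jobs={n_jobs}: {time.perf_counter() - start:.2f} s")
    models[n_jobs] = rf
```

Because scikit-learn derives each tree’s seed from random_state before training starts, the two forests should contain the same trees; n_jobs changes only how fast they are built.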

In a professional setting, leveraging parallelization can be crucial for delivering timely results. Consider a scenario where you need to train a model on a massive dataset within a short timeframe. Parallelizing the Random Forest training process is essential for completing this task efficiently.

Q 25. Explain the concept of random subspace in Random Forests.

The ‘random subspace’ method is a key component of Random Forests that helps prevent overfitting and improve the model’s generalization ability. Unlike bagging (bootstrap aggregating), which samples data points, random subspace samples features. In Ho’s original random subspace method, each tree is built on one randomly chosen subset of features; Breiman’s Random Forest applies the same idea more finely, drawing a fresh random subset of candidate features at every split (the max_features parameter in scikit-learn). This introduces further randomness into the model, preventing any single feature from dominating the decision-making process and improving robustness to correlated features. It’s like having a team of experts, each specializing in a different subset of skills to analyze a problem; this diversity leads to a more robust and accurate overall solution.

For instance, imagine you’re predicting customer churn based on demographics, purchase history, and browsing behavior. Random subspace ensures that each tree considers only a random subset of these features, preventing over-reliance on a single aspect like purchase frequency.

Q 26. Describe your experience with implementing Random Forests in a specific project.

In a recent project involving customer fraud detection, I utilized Random Forests to predict fraudulent transactions. The dataset contained a large number of features, including transaction amounts, locations, times, and user behavior patterns. Initial attempts resulted in overfitting. To address this, I employed a rigorous hyperparameter tuning process using k-fold cross-validation, optimizing for both precision and recall. Furthermore, I implemented feature scaling and dimensionality reduction using PCA to improve training speed and model performance. The resulting Random Forest model significantly outperformed baseline models, achieving a 15% reduction in false positives while maintaining high recall.

This project highlighted the importance of a systematic approach to hyperparameter tuning and feature engineering, along with understanding the dataset’s specific characteristics for optimal model performance.
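The workflow described above can be sketched with scikit-learn as follows. This is not the project’s actual code: the data is a synthetic, imbalanced stand-in for a fraud dataset, and the parameter grid, `n_components`, and the choice of F1 as the refit metric are illustrative assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for a fraud dataset: roughly 10% positive class
X, y = make_classification(n_samples=600, n_features=30, n_informative=8,
                           weights=[0.9, 0.1], random_state=42)

pipeline = Pipeline([
    ("scale", StandardScaler()),        # needed for PCA; the forest itself is scale-invariant
    ("pca", PCA(n_components=10)),      # illustrative number of components
    ("rf", RandomForestClassifier(n_estimators=100, random_state=42)),
])

# Hypothetical grid: parameters that limit tree complexity to curb overfitting
param_grid = {
    "rf__max_depth": [6, None],
    "rf__min_samples_leaf": [1, 5],
}

search = GridSearchCV(
    pipeline,
    param_grid,
    scoring={"precision": "precision", "recall": "recall", "f1": "f1"},
    refit="f1",  # choose the setting that best balances precision and recall
    cv=StratifiedKFold(n_splits=5, shuffle=True, random_state=42),
)
search.fit(X, y)

best = search.best_index_
print("best params:", search.best_params_)
print("CV precision: %.3f" % search.cv_results_["mean_test_precision"][best])
print("CV recall:    %.3f" % search.cv_results_["mean_test_recall"][best])
```

Stratified folds keep the rare class represented in every split, which matters when precision and recall on that class drive the model choice.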

Q 27. Compare and contrast Random Forests with Gradient Boosting Machines (GBMs).

Both Random Forests and Gradient Boosting Machines (GBMs) are ensemble methods that combine multiple decision trees to make predictions. However, they differ significantly in their approach. Random Forests build (typically deep) trees independently and average their predictions, relying on randomness to reduce variance. GBMs, on the other hand, build (typically shallow) trees sequentially, fitting each new tree to the errors of the ensemble built so far (more precisely, to the negative gradient of the loss function). This sequential approach primarily reduces bias. Think of it this way: Random Forests use a diverse team of independent experts, while GBMs train a team where each member learns from the mistakes of previous members.

In terms of performance, GBMs often achieve higher accuracy, but they are more prone to overfitting if not carefully tuned. Random Forests are generally more robust and less sensitive to hyperparameter settings but might not reach the same level of peak accuracy as GBMs. The choice depends on the specific problem and priorities (accuracy versus robustness and interpretability).
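The two strategies can be contrasted in a hand-rolled sketch built on scikit-learn’s `DecisionTreeRegressor`. It is a deliberate simplification, not how `RandomForestRegressor` or `GradientBoostingRegressor` are implemented: the toy data has a single feature, so the "forest" is plain bagging without per-split feature sampling, and boosting uses squared-error loss, where the negative gradient is simply the residual. The helper names `bagged_trees` and `boosted_trees` are hypothetical.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

# Toy regression data: a noisy sine curve with one feature
rng = np.random.default_rng(0)
X = rng.uniform(0, 6, size=(300, 1))
y = np.sin(X[:, 0]) + rng.normal(0, 0.1, size=300)


def bagged_trees(X, y, n_trees=50, seed=0):
    """Random-Forest-style: independent deep trees on bootstrap samples, averaged."""
    rng = np.random.default_rng(seed)
    trees = []
    for _ in range(n_trees):
        idx = rng.integers(0, len(X), size=len(X))  # bootstrap: rows sampled with replacement
        trees.append(DecisionTreeRegressor(random_state=0).fit(X[idx], y[idx]))
    return lambda X_new: np.mean([t.predict(X_new) for t in trees], axis=0)


def boosted_trees(X, y, n_trees=50, learning_rate=0.1, max_depth=2):
    """GBM-style: shallow trees fitted one after another to the current residuals."""
    base = y.mean()
    pred = np.full(len(y), base)
    trees = []
    for _ in range(n_trees):
        residual = y - pred  # negative gradient of the squared-error loss
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=0).fit(X, residual)
        pred += learning_rate * tree.predict(X)  # each tree nudges the ensemble toward y
        trees.append(tree)
    return lambda X_new: base + learning_rate * np.sum([t.predict(X_new) for t in trees], axis=0)


def mse(pred):
    return float(np.mean((pred - y) ** 2))


print("bagged  training MSE:", mse(bagged_trees(X, y)(X)))
print("boosted training MSE:", mse(boosted_trees(X, y)(X)))
```

Training error is used here only to show the mechanism: adding boosting rounds keeps driving it down, which is exactly why GBMs need careful tuning (learning rate, number of trees, depth) to avoid overfitting.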

Q 28. What are some alternative algorithms to Random Forests and when would you use them?

Several alternative algorithms exist, depending on the specific needs of a project. Support Vector Machines (SVMs) work well on high-dimensional data and can generalize well. Neural Networks, particularly deep learning models, are powerful but require significant computational resources and expertise. Naive Bayes is a simple and efficient algorithm, particularly useful for text classification and other problems with many (often sparse) features. k-Nearest Neighbors (k-NN) is a non-parametric method suitable for situations where the relationship between the features and the target variable is complex and difficult to model explicitly.

The choice depends on various factors such as data size, dimensionality, computational resources, and desired interpretability. For example, Naive Bayes might be preferable for its simplicity and speed when dealing with very large datasets, while SVMs might be better suited for high-dimensional data with clear separating hyperplanes. Neural Networks would be considered if high accuracy is paramount, despite the increased complexity and computational cost.
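In practice, a quick cross-validated comparison on your own data often settles the choice. A minimal sketch, assuming scikit-learn and its bundled breast-cancer dataset as a stand-in for real project data; neural networks are left out for brevity, and the hyperparameters are defaults or illustrative values.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_breast_cancer(return_X_y=True)  # small tabular dataset bundled with scikit-learn

candidates = {
    "Random Forest": RandomForestClassifier(n_estimators=200, random_state=0),
    # SVMs and k-NN depend on distances, so features are standardized first
    "SVM (RBF kernel)": make_pipeline(StandardScaler(), SVC()),
    "k-NN (k=5)": make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=5)),
    "Gaussian Naive Bayes": GaussianNB(),
}

scores = {}
for name, model in candidates.items():
    scores[name] = cross_val_score(model, X, y, cv=5).mean()  # mean accuracy over 5 folds
    print(f"{name:22s} {scores[name]:.3f}")
```

Accuracy alone rarely decides the matter; training time, prediction latency, and interpretability should be weighed alongside these scores.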

Key Topics to Learn for Random Forests Interview

- Decision Trees: Understand the fundamentals of decision trees, including splitting criteria (Gini impurity, information gain), pruning techniques, and overfitting prevention. Consider exploring different tree algorithms like CART and ID3.

- Bagging and Random Subspaces: Grasp the core concepts behind bootstrap aggregating (bagging) and how random feature subspaces increase tree diversity and reduce overfitting in Random Forests.

- Bias-Variance Tradeoff: Explain how Random Forests address the bias-variance dilemma compared to single decision trees. Be prepared to discuss the impact of hyperparameter tuning on this tradeoff.

- Hyperparameter Tuning: Discuss the importance of optimizing parameters like the number of trees, tree depth, and number of features considered at each split. Understand the impact of these choices on model performance and computational cost.

- Feature Importance: Know how to interpret feature importance scores generated by Random Forests and use this information for feature selection and model understanding. Be ready to explain different methods for determining feature importance, such as impurity-based importance (mean decrease in impurity) and permutation importance.

- Out-of-Bag (OOB) Error Estimation: Explain the concept of OOB error and its advantages in evaluating Random Forest models without the need for a separate validation set.

- Practical Applications: Be ready to discuss real-world applications of Random Forests, such as image classification, fraud detection, customer segmentation, and medical diagnosis. Prepare examples showcasing your understanding of how the algorithm is applied in diverse contexts.

- Ensemble Methods Comparison: Compare and contrast Random Forests with other ensemble methods like Gradient Boosting Machines (GBM) and AdaBoost. Understand their strengths and weaknesses and when to prefer one over another.

- Handling Imbalanced Datasets: Discuss strategies for dealing with class imbalance in datasets when using Random Forests, such as cost-sensitive learning or resampling techniques.

- Model Interpretability and Explainability: Discuss the relative interpretability of Random Forests compared to other machine learning models and techniques used to enhance explainability, such as partial dependence plots.
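Several of these topics (OOB error, feature importance, and imbalanced data) can be explored together in one model. The sketch below assumes scikit-learn and uses synthetic data with an 85/15 class split; `class_weight="balanced"` is shown as one cost-sensitive option, and resampling would be an alternative.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

# 8 features, only 3 of them informative; roughly 85/15 class imbalance
X, y = make_classification(n_samples=1000, n_features=8, n_informative=3,
                           n_redundant=0, weights=[0.85, 0.15], random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, stratify=y, random_state=0)

forest = RandomForestClassifier(
    n_estimators=200,
    oob_score=True,           # score each sample using only trees that did not see it
    class_weight="balanced",  # reweight classes to counter the imbalance
    random_state=0,
).fit(X_train, y_train)

print(f"OOB accuracy: {forest.oob_score_:.3f}")
print(f"OOB error:    {1 - forest.oob_score_:.3f}")

# Impurity-based importance: computed during training, can favour high-cardinality features
mdi = forest.feature_importances_
# Permutation importance on held-out data: score drop when one feature is shuffled
perm = permutation_importance(forest, X_test, y_test, n_repeats=10, random_state=0)

for i in mdi.argsort()[::-1]:
    print(f"feature {i}: MDI={mdi[i]:.3f}  permutation={perm.importances_mean[i]:.3f}")
```

Comparing the two importance columns is a useful interview talking point: when they disagree, permutation importance on held-out data is usually the more trustworthy signal.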

Next Steps

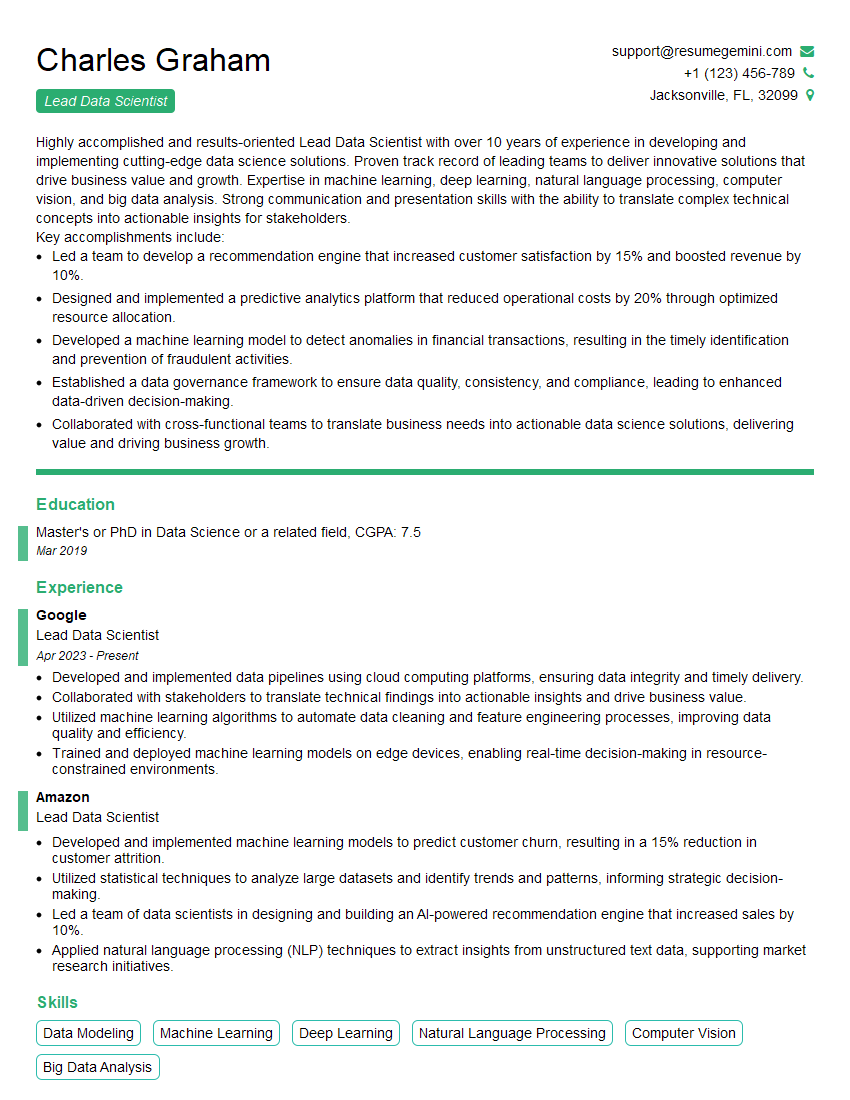

Mastering Random Forests significantly enhances your value in the data science job market, opening doors to diverse and challenging roles. To maximize your job prospects, crafting an ATS-friendly resume is crucial. ResumeGemini is a trusted resource to help you build a compelling and effective resume that highlights your Random Forests expertise. We provide examples of resumes tailored specifically to showcase Random Forests skills, helping you present your qualifications in the most impactful way.
