The thought of an interview can be nerve-wracking, but the right preparation can make all the difference. Explore this comprehensive guide to Data Cleaning and Transformation interview questions and gain the confidence you need to showcase your abilities and secure the role.

Questions Asked in Data Cleaning and Transformation Interview

Q 1. Explain the difference between data cleaning and data transformation.

Data cleaning and data transformation are both crucial steps in data preprocessing, but they address different aspects. Think of it like preparing a meal: cleaning is like washing and chopping vegetables, removing unwanted parts, while transformation is like cooking them – changing their form and creating something new.

Data cleaning focuses on identifying and correcting (or removing) inaccurate, incomplete, irrelevant, duplicated, or inconsistent data. This ensures the data is reliable and consistent. Examples include handling missing values, removing duplicates, and correcting spelling errors.

Data transformation involves converting data from one format or structure into another to make it more suitable for analysis or modeling. This could involve scaling numerical features, converting categorical variables into numerical representations (like one-hot encoding), or creating new features from existing ones.

For instance, cleaning might involve removing rows with missing ages in a customer dataset, while transformation could involve converting those ages into age groups (e.g., 18-25, 26-35, etc.). They often work together – you might clean the data first, then transform the cleaned data for analysis.
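The customer-age example above can be sketched in pandas as follows. The DataFrame contents, column names, and bin edges are hypothetical and chosen only for illustration.

```python
import pandas as pd

# Hypothetical customer data; None marks a missing age.
customers = pd.DataFrame({
    "customer_id": [1, 2, 3, 4, 5],
    "age": [22, None, 31, 47, 19],
})

# Cleaning: drop rows where the age is missing.
cleaned = customers.dropna(subset=["age"]).copy()

# Transformation: convert ages into age groups.
# Bins are right-inclusive, so (17, 25] covers ages 18-25.
bins = [17, 25, 35, 120]
labels = ["18-25", "26-35", "36+"]
cleaned["age_group"] = pd.cut(cleaned["age"], bins=bins, labels=labels)

print(cleaned)
```

Whether dropping the rows is acceptable depends on how much data is missing; the imputation techniques in the next question are the usual alternative.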

Q 2. What are the common techniques for handling missing data?

Missing data is a common problem. Several techniques exist to handle it, and the best approach often depends on the dataset and the amount of missing data. Here are some of the most common strategies:

- Deletion: This involves removing rows or columns with missing values. Listwise deletion removes every row that has any missing value, while pairwise deletion keeps the row and excludes it only from the calculations that need the missing variable. Deletion is simple but can lead to significant information loss if a large portion of data is missing.

- Imputation: This replaces missing values with estimated values. Common methods include:

  - Mean/Median/Mode Imputation: Replace missing values with the mean (average), median (middle value), or mode (most frequent value) of the respective column. Simple but can distort the distribution if many values are missing.

  - Regression Imputation: Use regression models to predict missing values based on other variables. More sophisticated but requires careful model selection.

  - K-Nearest Neighbors (KNN) Imputation: Finds the k nearest data points with complete data and uses their values to estimate the missing value. A good balance between simplicity and accuracy.

  - Multiple Imputation: Creates multiple plausible imputed datasets and combines the results. This addresses the uncertainty associated with single imputation.

The choice depends on the nature of the data and the percentage of missing values. For instance, mean imputation is quick but might not be suitable for skewed data, while KNN imputation is more robust but computationally more expensive.
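As a minimal sketch of the simpler strategies, the snippet below shows listwise deletion next to median and mode imputation in pandas. The data and column names are made up; the income column includes one very large value to show why the median is often preferred over the mean for skewed data.

```python
import pandas as pd

# Hypothetical data with one missing income and one missing region.
df = pd.DataFrame({
    "income": [40_000, 52_000, None, 61_000, 250_000],
    "region": ["north", None, "south", "north", "north"],
})

# Listwise deletion: drop every row that has any missing value.
dropped = df.dropna()

# Median imputation for the skewed numeric column,
# mode imputation for the categorical column.
income_median = df["income"].median()
region_mode = df["region"].mode().iloc[0]
imputed = df.assign(
    income=df["income"].fillna(income_median),
    region=df["region"].fillna(region_mode),
)

print(len(dropped), "rows survive listwise deletion")
print(imputed)
```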

Q 3. Describe different methods for outlier detection and treatment.

Outliers are data points that significantly deviate from the rest of the data. They can be caused by errors in data entry, natural variation, or truly exceptional events. Identifying and handling them is crucial because they can heavily skew statistical analyses and model results.

Detection Methods:

- Visual Inspection: Box plots, scatter plots, and histograms can visually reveal outliers.

- Z-score: Measures how many standard deviations a data point is from the mean. Data points whose absolute Z-score exceeds a chosen threshold (commonly 3, i.e., above 3 or below -3) are often considered outliers.

- IQR (Interquartile Range): Calculates the difference between the 75th percentile (Q3) and the 25th percentile (Q1). Outliers are typically defined as data points below Q1 - 1.5*IQR or above Q3 + 1.5*IQR.

- DBSCAN (Density-Based Spatial Clustering of Applications with Noise): A clustering algorithm that identifies outliers as points that are not part of any dense cluster.

Treatment Methods:

- Removal: Removing outliers is straightforward but can lead to information loss if they represent genuine events.

- Transformation: Applying transformations like logarithmic or Box-Cox transformations can reduce the influence of outliers.

- Winsorizing/Trimming: Replacing extreme values with less extreme values (Winsorizing) or removing a certain percentage of extreme values (Trimming).

- Imputation: Replacing outliers with imputed values, similar to handling missing data.

The choice of method depends on the context. For example, if outliers represent genuine errors, removal is suitable. If they represent extreme but valid values, transformation or winsorizing might be preferable.
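The Z-score and IQR rules, plus a simple capping treatment, can be sketched with NumPy as below. The sample values are invented, and clipping to the IQR fences is used here as one Winsorizing-style variant (classic Winsorizing caps at chosen percentiles instead).

```python
import numpy as np

# Hypothetical measurements with one extreme value.
values = np.array([10, 11, 12, 13] * 5 + [95], dtype=float)

# Z-score rule: flag points more than 3 standard deviations from the mean.
z_scores = (values - values.mean()) / values.std()
z_outliers = values[np.abs(z_scores) > 3]

# IQR rule: flag points outside [Q1 - 1.5*IQR, Q3 + 1.5*IQR].
q1, q3 = np.percentile(values, [25, 75])
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
iqr_outliers = values[(values < lower) | (values > upper)]

# Treatment: cap extreme values at the IQR fences instead of removing them.
winsorized = np.clip(values, lower, upper)

print(z_outliers, iqr_outliers, winsorized.max())
```

Note that on very small samples the Z-score rule can fail to flag anything, because a single extreme value also inflates the standard deviation it is measured against.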

Q 4. How do you identify and handle inconsistent data formats?

Inconsistent data formats create major headaches. Imagine a dataset with dates formatted as MM/DD/YYYY in some rows and DD/MM/YYYY in others – your analysis will be completely unreliable. Here’s how to handle them:

Identification:

- Data Profiling: Tools and techniques that automatically examine the data to detect inconsistencies in data types, formats, and ranges.

- Visual Inspection: Carefully reviewing samples of the data, especially columns with textual or date information.

- Regular Expressions: Using regular expressions to check for patterns and deviations from expected formats in textual data.

Handling:

- Standardization: Convert all data into a consistent format. For dates, this involves using a standard format (e.g., YYYY-MM-DD) and converting everything to this format. For text, this involves case conversion (lowercase) or using consistent separators.

- Parsing: Break down complex data fields into smaller, more manageable and consistent components. For example, parsing a full address string into separate fields for street, city, state, and zip code.

- Data Transformation Functions: Use programming language functions to convert data formats explicitly. For example, use datetime.strptime() in Python to convert date strings into datetime objects.

For example, you might use Python’s pandas library to detect inconsistent date formats and then use its date parsing functionalities to standardize them:

import pandas as pd

# df is assumed to be an existing DataFrame whose 'date_column' holds date strings.
df['date_column'] = pd.to_datetime(df['date_column'], format='%m/%d/%Y', errors='coerce')

The errors='coerce' argument converts values that fail to parse into NaT (Not a Time) values, which you can then handle using missing data techniques.
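For the identification step, a regular-expression check can count how many values follow each expected pattern before anything is converted. This is only a sketch: the pattern names and the two patterns themselves are assumptions, and a pattern like NN/NN/YYYY cannot tell MM/DD from DD/MM on its own.

```python
import re
from collections import Counter

# Hypothetical set of expected date patterns.
PATTERNS = {
    "YYYY-MM-DD": re.compile(r"\d{4}-\d{2}-\d{2}"),
    "NN/NN/YYYY": re.compile(r"\d{2}/\d{2}/\d{4}"),
}


def classify_format(value: str) -> str:
    """Return the name of the first pattern the value fully matches."""
    for name, pattern in PATTERNS.items():
        if pattern.fullmatch(value.strip()):
            return name
    return "unrecognized"


def profile_formats(values):
    """Count how many values fall under each known format."""
    return Counter(classify_format(v) for v in values)


sample = ["2021-03-04", "03/04/2021", "04/03/2021", "March 4"]
print(profile_formats(sample))
```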

Q 5. Explain the concept of data standardization and normalization.

Standardization and normalization are data transformation techniques used to scale numerical features. They aim to improve the performance of machine learning models and avoid features with larger values dominating the analysis. Think of it like leveling a playing field.

Standardization (Z-score normalization): Transforms data to have a mean of 0 and a standard deviation of 1. This is achieved by subtracting the mean from each data point and dividing by the standard deviation:

z = (x - μ) / σ

where x is the data point, μ is the mean, and σ is the standard deviation. Standardization is useful when the data is normally distributed or approximately so.

Normalization (Min-Max scaling): Scales data to a range between 0 and 1. This is achieved by subtracting the minimum value from each data point and dividing by the range (maximum minus minimum):

x' = (x - min) / (max - min)

where x is the data point, min is the minimum value, and max is the maximum value. Normalization is useful when the data has different ranges and you want to bring them to a comparable scale.

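Choosing between standardization and normalization depends on the data and the algorithm. Standardization is less sensitive to outliers than min-max scaling, because a single extreme value sets the minimum or maximum and compresses all other values into a narrow part of the [0, 1] range. Both are linear rescalings, so neither changes the shape of the distribution. Normalization is the natural choice when an algorithm expects inputs in a bounded range.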
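Both formulas are short enough to write directly in NumPy. The sketch below uses made-up numbers and also shows how one extreme value squeezes the remaining min-max scaled values toward zero.

```python
import numpy as np

x = np.array([2.0, 4.0, 6.0, 8.0])

# Standardization: z = (x - mean) / std, giving mean 0 and std 1.
z = (x - x.mean()) / x.std()

# Min-max normalization: x' = (x - min) / (max - min), giving the range [0, 1].
x_scaled = (x - x.min()) / (x.max() - x.min())

# The same data with one extreme value appended.
x_out = np.append(x, 100.0)
scaled_out = (x_out - x_out.min()) / (x_out.max() - x_out.min())

print(z)
print(x_scaled)
print(scaled_out)  # the first four values are now crowded near 0
```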

Q 6. What are some common data quality issues you’ve encountered?

Throughout my career, I’ve encountered numerous data quality issues. Some of the most common include:

- Missing values: Data points missing entirely or partially. This is often due to data entry errors, equipment malfunctions, or incomplete surveys.

- Inconsistent data formats: Dates, numbers, and text not following a unified standard across the dataset, like the date format example above.

- Outliers: Extreme values that deviate significantly from the rest of the data, potentially skewing results.

- Duplicate data: Identical rows or entries. This can inflate sample sizes and lead to biased analysis.

- Data type errors: Incorrect data types assigned to variables (e.g., a numerical value stored as text).

- Inaccurate data: Incorrect values due to typos, human error, or faulty measurement equipment.

- Incomplete data: Missing information that is necessary for analysis or modelling.

For example, I once worked on a project with customer data where addresses were inconsistently formatted, dates were recorded in various formats and some important fields were missing in some entries. This required extensive data cleaning and transformation before we could even start the analysis.

Q 7. How do you ensure data integrity during the transformation process?

Ensuring data integrity during transformation is paramount. Errors introduced during this phase can invalidate the entire analysis. Here’s how to ensure integrity:

- Validation Checks: Implement checks at each step of the transformation process to verify that the data remains consistent and accurate. This might include checking data types, ranges, and the validity of transformed values.

- Version Control: Track changes made to the data during the transformation process using version control systems (like Git). This allows you to easily revert to previous versions if needed.

- Logging: Record all transformations applied to the data, including the specific steps, parameters used and any errors encountered. This facilitates debugging and reproducibility.

- Data Auditing: Regularly audit the transformed data to compare it against the original data and check for inconsistencies or anomalies.

- Unit Testing: For automated transformation pipelines, unit testing is vital. Write tests to ensure that individual transformation functions produce the expected output under different conditions.

- Data Dictionaries: Maintain a comprehensive data dictionary that documents the meaning, data type, and format of each variable. This ensures everyone involved understands the data and transformations applied.

For example, after applying a transformation like one-hot encoding, you should validate that the resulting columns have the correct number of categories and that there are no unexpected values.
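A validation check of that kind might look like the sketch below. The function name validate_one_hot and the sample column are hypothetical; the checks themselves follow the description above.

```python
import pandas as pd


def validate_one_hot(original: pd.Series, encoded: pd.DataFrame) -> list:
    """Return a list of problems found in a one-hot encoding (empty if none)."""
    problems = []
    if encoded.shape[1] != original.nunique():
        problems.append("column count does not match number of categories")
    if len(encoded) != len(original):
        problems.append("row count changed during encoding")
    if not encoded.isin([0, 1]).all().all():
        problems.append("unexpected values (only 0 and 1 are allowed)")
    if not (encoded.sum(axis=1) == 1).all():
        problems.append("some rows do not have exactly one active category")
    return problems


df = pd.DataFrame({"plan": ["basic", "pro", "basic", "enterprise"]})
# dtype=int keeps the encoded columns as 0/1 integers.
encoded = pd.get_dummies(df["plan"], prefix="plan", dtype=int)

print(validate_one_hot(df["plan"], encoded))
```

If the source column contains missing values, the last check fails for those rows, which is usually the signal you want before the data moves downstream.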

Q 8. What are your preferred tools or technologies for data cleaning and transformation?

My preferred tools for data cleaning and transformation span a range of technologies, depending on the scale and nature of the project. For smaller datasets, I find tools like Excel with Power Query or OpenRefine incredibly versatile. These offer intuitive interfaces for handling tasks like data standardization, deduplication, and basic transformations.

For larger datasets and more complex ETL processes, I heavily rely on programming languages like Python with libraries such as Pandas and NumPy. Pandas provides powerful data manipulation capabilities, allowing for efficient cleaning, transformation, and analysis. NumPy’s array operations are invaluable for numerical data processing.

For larger-scale, production-level data pipelines, I utilize tools like Apache Spark or cloud-based platforms like AWS Glue or Azure Data Factory. These distributed computing frameworks handle massive datasets with speed and efficiency. Finally, I leverage SQL extensively for data cleaning and transformation within relational databases, taking advantage of its powerful querying capabilities.

Q 9. Describe your experience with ETL processes.

ETL (Extract, Transform, Load) processes are the backbone of any robust data warehousing or analytics initiative. My experience encompasses the entire ETL lifecycle, from requirements gathering and data source analysis to deployment and ongoing maintenance.

I’ve worked on projects where we extracted data from diverse sources, including relational databases (SQL Server, MySQL, PostgreSQL), flat files (CSV, TXT), APIs, and NoSQL databases (MongoDB). The transformation phase often involved handling missing values (imputation or removal), data type conversions, data standardization (address formatting, date standardization), and data enrichment using external sources. Finally, the loading phase included writing data into target databases (Snowflake, BigQuery), data lakes (AWS S3), or data warehouses.

For example, in one project, we extracted customer data from multiple legacy systems, transformed it to conform to a standardized data model, and loaded it into a cloud-based data warehouse. This involved dealing with inconsistent data formats, duplicate records, and data quality issues across various systems. Successfully navigating these challenges required meticulous planning, careful execution, and a robust testing strategy.
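To make the three phases concrete, here is a deliberately small sketch that uses only the Python standard library: it extracts rows from CSV text, transforms them (trimming, name casing, deduplication, empty-to-NULL), and loads them into an in-memory SQLite table. The data, table name, and function names are illustrative assumptions, not a description of the project above.

```python
import csv
import io
import sqlite3

RAW_CSV = """customer_id,name,signup_date
1, Alice ,2023-01-15
2,BOB,2023-02-01
2,BOB,2023-02-01
3,carol,
"""


def extract(text):
    """Extract: read CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: deduplicate on customer_id, tidy names, map '' to None."""
    seen, records = set(), []
    for row in rows:
        key = row["customer_id"]
        if key in seen:
            continue
        seen.add(key)
        name = row["name"].strip().title()
        signup = row["signup_date"] or None
        records.append((int(key), name, signup))
    return records


def load(records, conn):
    """Load: write the cleaned records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers ("
        "customer_id INTEGER PRIMARY KEY, name TEXT, signup_date TEXT)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
rows = conn.execute("SELECT * FROM customers ORDER BY customer_id").fetchall()
print(rows)
```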

Q 10. Explain the role of metadata in data cleaning and transformation.

Metadata is crucial in data cleaning and transformation; think of it as the ‘data about data.’ It provides context and understanding, guiding the entire process. For example, metadata might specify the data type of a field (integer, string, date), its business meaning (customer ID, order date), allowed values, or data quality rules.

Without proper metadata, data cleaning becomes a guesswork game. Consider a column labeled ‘customer_id.’ Metadata tells us what constitutes a valid customer ID (format, length, range). This allows us to identify and correct invalid values or duplicates. Metadata about data sources, including data definitions, formats, and data quality rules, is equally crucial for efficient and accurate data integration.

In practice, I leverage metadata in several ways: to understand data structures, to automate data validation checks, to improve data quality monitoring, and to streamline the data transformation process.
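One way to automate validation from metadata is to keep the rules in a small data dictionary and let a generic function apply them. The dictionary layout, the customer ID pattern, and the function name below are all hypothetical.

```python
import re

# Hypothetical data dictionary: one entry of rules per field.
DATA_DICTIONARY = {
    "customer_id": {"type": str, "pattern": r"C\d{6}", "required": True},
    "age": {"type": int, "min": 0, "max": 120, "required": False},
}


def validate_record(record, dictionary=DATA_DICTIONARY):
    """Check one record against the metadata rules and return any errors."""
    errors = []
    for field, rules in dictionary.items():
        value = record.get(field)
        if value is None:
            if rules.get("required"):
                errors.append(f"{field}: missing required value")
            continue
        if not isinstance(value, rules["type"]):
            errors.append(f"{field}: expected {rules['type'].__name__}")
            continue
        if "pattern" in rules and not re.fullmatch(rules["pattern"], value):
            errors.append(f"{field}: does not match expected format")
        if "min" in rules and value < rules["min"]:
            errors.append(f"{field}: below minimum {rules['min']}")
        if "max" in rules and value > rules["max"]:
            errors.append(f"{field}: above maximum {rules['max']}")
    return errors


print(validate_record({"customer_id": "C000123", "age": 34}))
print(validate_record({"customer_id": "123", "age": -5}))
```

Keeping the rules as data means a change to what counts as a valid customer ID is a metadata edit, not a code change.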

Q 11. How do you handle duplicate data?

Handling duplicate data is a critical step in data cleaning. The approach depends on the context: are the duplicates truly identical, or are they near-duplicates with minor variations?

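For exact duplicates, I typically use SQL’s ROW_NUMBER() window function to identify and remove redundant records. The row number is computed by the query and is not a column of the table, so it has to be produced in a CTE or subquery before it can be filtered on. For example, assuming the table has a unique order_id key (an assumption added for this illustration; the exact syntax for combining a CTE with DELETE varies by database): WITH ranked AS (SELECT order_id, ROW_NUMBER() OVER (PARTITION BY customer_id ORDER BY order_date) AS rn FROM orders) DELETE FROM orders WHERE order_id IN (SELECT order_id FROM ranked WHERE rn > 1); This retains only the first record for each customer ID based on the order date. To treat only fully matching rows as duplicates, list every column that defines a match in the PARTITION BY clause.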

For near-duplicates, I employ fuzzy matching techniques. This might involve using string similarity metrics (e.g., Levenshtein distance) to identify records with similar names or addresses, allowing for manual review or automated merging based on defined thresholds. The choice of method depends on the desired level of accuracy and the computational resources available.
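A minimal sketch of Levenshtein-based fuzzy matching is shown below in plain Python. The 0.85 threshold and the helper names are assumptions; the pairwise comparison is quadratic in the number of records, so larger datasets usually need blocking (comparing only within groups such as the same postcode) or a dedicated library.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance: minimum insertions, deletions, and substitutions."""
    if len(a) < len(b):
        a, b = b, a
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # deletion
                current[j - 1] + 1,      # insertion
                previous[j - 1] + cost,  # substitution
            ))
        previous = current
    return previous[-1]


def similarity(a: str, b: str) -> float:
    """Normalize the distance to a 0..1 score after basic cleanup."""
    a, b = a.strip().lower(), b.strip().lower()
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1 - levenshtein(a, b) / longest


def find_near_duplicates(names, threshold=0.85):
    """Return pairs of names whose similarity meets the threshold."""
    pairs = []
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            if similarity(names[i], names[j]) >= threshold:
                pairs.append((names[i], names[j]))
    return pairs


print(find_near_duplicates(["Jon Smith", "John Smith", "Jane Doe"]))
```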

Q 12. What techniques do you use for data validation?

Data validation is essential to ensure data quality and reliability. I employ a multi-faceted approach, incorporating several techniques:

- Data Type Validation: Checking if data conforms to the expected type (e.g., an integer field doesn’t contain text).

- Range Checks: Verifying that values fall within acceptable limits (e.g., age must be positive).

- Format Checks: Ensuring that data adheres to specified formats (e.g., dates are in YYYY-MM-DD format).

- Uniqueness Constraints: Verifying that primary keys and unique identifiers are indeed unique.

- Cross-Field Validation: Checking for consistency between different fields (e.g., that the order date is not after the delivery date).

- Referential Integrity Checks: Ensuring that foreign key values exist in related tables.

- Data Profiling: Examining the data to detect anomalies, outliers, and potential errors using tools and libraries such as Pandas Profiling in Python (since renamed ydata-profiling).

I often automate these checks using scripts or by setting up constraints within databases. These automated validation checks are part of the ETL process, ensuring that data quality is maintained throughout the pipeline.
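Several of these checks can be expressed as boolean masks in pandas and collected into one report, as in the sketch below. The tables, column names, and check names are invented for the example.

```python
import pandas as pd

# Hypothetical orders table with a few deliberate problems.
orders = pd.DataFrame({
    "order_id": [1, 2, 2, 4],
    "customer_id": [10, 11, 11, 99],
    "order_date": pd.to_datetime(
        ["2024-01-05", "2024-01-07", "2024-01-07", "2024-02-01"]),
    "delivery_date": pd.to_datetime(
        ["2024-01-08", "2024-01-06", "2024-01-06", "2024-02-03"]),
    "quantity": [1, 3, 3, -2],
})
customers = pd.DataFrame({"customer_id": [10, 11, 12]})

# Each check is a boolean Series: True marks a row that fails the rule.
checks = {
    # Uniqueness constraint
    "duplicate_order_id": orders["order_id"].duplicated(keep=False),
    # Range check
    "quantity_not_positive": orders["quantity"] <= 0,
    # Cross-field validation
    "delivery_before_order": orders["delivery_date"] < orders["order_date"],
    # Referential integrity check
    "unknown_customer": ~orders["customer_id"].isin(customers["customer_id"]),
}

report = pd.DataFrame(checks)
failure_counts = report.sum().to_dict()
print(failure_counts)
```

In a pipeline, a non-zero count would typically fail the run or route the offending rows to a quarantine table.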

Q 13. How do you prioritize data cleaning tasks?

Prioritizing data cleaning tasks requires a strategic approach, balancing urgency and impact. I typically follow a risk-based prioritization framework:

- Impact Analysis: Identify the most critical data elements for downstream analysis or decision-making. Errors in these elements will have the most significant impact.

- Data Quality Assessment: Quantify the severity of data quality issues (e.g., percentage of missing values, frequency of inconsistencies). Focus on areas with the highest error rates.

- Urgency Assessment: Consider upcoming deadlines or critical reporting requirements. Address issues impacting imminent projects first.

- Feasibility Assessment: Assess the resources (time, expertise, tools) needed to address each issue. Prioritize tasks that are realistically achievable.

This framework helps to allocate resources effectively and ensure that the most impactful cleaning tasks are addressed first, maximizing the value of the data cleaning effort.

Q 14. How do you measure the success of a data cleaning and transformation project?

Measuring the success of a data cleaning and transformation project involves both quantitative and qualitative assessments. Quantitative metrics might include:

- Reduction in data errors: Measuring the percentage decrease in missing values, inconsistencies, or duplicates after cleaning.

- Increase in data completeness: Tracking the improvement in the completeness of key data fields.

- Improvement in data consistency: Measuring the reduction in inconsistencies across different data sources.

- Enhancement in data accuracy: Assessing the improvement in the accuracy of data after cleaning and validation processes.

Qualitative measures focus on the impact on downstream processes:

- Improved data analysis results: Evaluating the reliability and insights derived from the cleaned data.

- Enhanced decision-making: Assessing the impact of improved data quality on business decisions.

- Increased user satisfaction: Gathering feedback from data users on the usability and reliability of the data.

By combining both quantitative and qualitative assessments, we can gain a comprehensive understanding of the success of the data cleaning and transformation project.
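The quantitative side can be captured by computing the same metrics before and after cleaning. The sketch below assumes a pandas DataFrame and a hypothetical quality_metrics helper; the metric definitions are one reasonable choice, not a standard.

```python
import pandas as pd


def quality_metrics(df: pd.DataFrame, key: str) -> dict:
    """Compute simple completeness and duplication metrics for a table."""
    return {
        "rows": len(df),
        # Share of all cells that are populated.
        "completeness_pct": round(100 * df.notna().sum().sum() / df.size, 1),
        # Share of rows whose key repeats an earlier row.
        "duplicate_key_pct": round(100 * df[key].duplicated().mean(), 1),
    }


before = pd.DataFrame({
    "customer_id": [1, 2, 2, 3],
    "email": ["a@example.com", None, None, "c@example.com"],
})
after = before.drop_duplicates(subset="customer_id").dropna(subset=["email"])

print("before:", quality_metrics(before, "customer_id"))
print("after: ", quality_metrics(after, "customer_id"))
```

The drop in row count is part of the picture: a cleaning step that reaches 100% completeness by discarding half the data may not be a success.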

Q 15. Describe a time you had to deal with a large dataset with significant inconsistencies.

In a previous role, I worked with a customer relationship management (CRM) dataset exceeding 5 million records. The data was collected over several years from various sources, leading to significant inconsistencies. For instance, customer names were inconsistently formatted (e.g., ‘John Doe’, ‘John D.’, ‘J. Doe’), addresses contained typos and variations (e.g., ‘St.’ vs. ‘Street’), and dates were represented in multiple formats (MM/DD/YYYY, DD/MM/YYYY, YYYY-MM-DD). This posed a challenge to accurate analysis and reporting.

My approach involved a multi-stage cleaning process. First, I used data profiling techniques (discussed in a later answer) to identify the inconsistencies and their frequency. This helped prioritize the cleaning tasks. Then, I tackled the inconsistencies systematically. For names, I leveraged fuzzy matching algorithms to identify and merge duplicate entries. For addresses, I used a standardization library to convert variations into a consistent format. Finally, for dates, I employed a robust parsing function that could handle different formats and flagged invalid dates for manual review.

This experience highlighted the importance of meticulous planning and phased execution when dealing with large, inconsistent datasets. Iterative testing and validation at each stage were crucial to ensure data quality and accuracy.
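A parsing function of the kind described for dates could be sketched as follows. The list of candidate formats and the function names are assumptions. The order of the formats matters: an ambiguous value such as 03/04/2021 is resolved by whichever format is tried first, so in practice the order should come from knowledge of each source system.

```python
from datetime import datetime

# Tried in order; earlier formats win for ambiguous values.
CANDIDATE_FORMATS = ["%Y-%m-%d", "%m/%d/%Y", "%d/%m/%Y"]


def parse_date(text, formats=CANDIDATE_FORMATS):
    """Return a date for the first format that parses, or None."""
    for fmt in formats:
        try:
            return datetime.strptime(text.strip(), fmt).date()
        except ValueError:
            continue
    return None


def standardize_dates(values):
    """Convert values to YYYY-MM-DD and collect the ones needing review."""
    parsed, flagged = [], []
    for value in values:
        result = parse_date(value)
        if result is None:
            flagged.append(value)
        parsed.append(result.isoformat() if result else None)
    return parsed, flagged


dates, needs_review = standardize_dates(
    ["2021-03-04", "12/25/2020", "25/12/2020", "not a date"])
print(dates)
print(needs_review)
```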

Q 16. What are the challenges of working with unstructured data?

Unstructured data, like text documents, images, audio, and video, presents numerous challenges due to its lack of pre-defined format or organization. The primary hurdles include:

- Data Extraction: Getting useful information from unstructured data requires sophisticated techniques like Natural Language Processing (NLP) for text or image recognition for images, which can be computationally expensive and complex.

- Data Cleaning and Preprocessing: Unstructured data is often noisy, containing irrelevant information, inconsistencies, and errors that necessitate significant preprocessing before analysis. This can be incredibly time-consuming.

- Data Storage and Management: Storing and managing large volumes of unstructured data requires specialized databases and storage solutions, increasing infrastructure costs and complexity.

- Data Analysis: Analyzing unstructured data often involves advanced statistical methods, machine learning algorithms, and domain expertise to uncover meaningful insights, which requires specialized skillsets.

Imagine trying to analyze customer feedback from open-ended survey responses. You’d need NLP techniques to understand sentiment, extract key topics, and identify common themes. This is far more challenging than analyzing structured data with pre-defined fields for feedback categories.

Q 17. Explain your experience with different data formats (CSV, JSON, XML, etc.).

I have extensive experience with various data formats, including CSV, JSON, XML, and Parquet. Each has its strengths and weaknesses:

- CSV (Comma Separated Values): Simple, widely supported, and easy to read/write. Ideal for tabular data but lacks schema definition and can be prone to errors if delimiters are used inconsistently in the data.

- JSON (JavaScript Object Notation): Lightweight, human-readable, and widely used for web applications. Supports nested structures and is well-suited for representing hierarchical data.

- XML (Extensible Markup Language): Hierarchical structure, allows for defining custom tags, and provides a formal schema definition, making it suitable for complex data structures. It can be more verbose than JSON.

- Parquet: A columnar storage format designed for efficient data processing, especially for large datasets. Offers better compression and query performance compared to row-oriented formats like CSV.

I’m proficient in using various programming languages and tools to read, write, and transform data in these formats. For example, I’ve used Python libraries like pandas for CSV and JSON processing, and xml.etree.ElementTree for XML. For Parquet, I use libraries like pyarrow or fastparquet.
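As a small illustration using only the Python standard library, the sketch below reads the same two hypothetical records from CSV, JSON, and XML text and normalizes them to one structure. Note that CSV carries no type information, so the id has to be converted explicitly.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

csv_text = "id,name\n1,Ada\n2,Grace\n"
json_text = '[{"id": 1, "name": "Ada"}, {"id": 2, "name": "Grace"}]'
xml_text = (
    "<people>"
    "<person id='1'><name>Ada</name></person>"
    "<person id='2'><name>Grace</name></person>"
    "</people>"
)

# CSV: every value arrives as a string, so cast the id ourselves.
from_csv = [
    {"id": int(row["id"]), "name": row["name"]}
    for row in csv.DictReader(io.StringIO(csv_text))
]

# JSON: types and nesting are preserved by the parser.
from_json = json.loads(json_text)

# XML: attributes and element text are strings and need explicit handling.
from_xml = [
    {"id": int(person.get("id")), "name": person.findtext("name")}
    for person in ET.fromstring(xml_text).iter("person")
]

print(from_csv == from_json == from_xml)
```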

Q 18. What is data profiling and how is it useful?

Data profiling is the process of analyzing data to understand its characteristics, including data types, distributions, ranges, missing values, and data quality issues. Think of it as taking a detailed inventory of your data before you start working with it.

It’s crucial because it provides invaluable insights for data cleaning and transformation. By understanding the data’s structure and quality, you can:

- Identify inconsistencies: Detect anomalies like unexpected values, data type mismatches, and outliers.

- Assess data quality: Determine the completeness, accuracy, and consistency of the data.

- Optimize data cleaning strategies: Tailor your cleaning techniques to the specific issues discovered during profiling.

- Inform data modeling: Make informed decisions about data storage and representation.

For instance, if data profiling reveals that a ‘date of birth’ column contains dates in the future, you know you need to implement a data validation step to correct or flag these errors.
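A lightweight profile can be built with a few pandas calls, as in this sketch. The profile function, the sample data, and the fixed cutoff date are assumptions made for the example (a real check would compare against the current date).

```python
import pandas as pd


def profile(df: pd.DataFrame) -> pd.DataFrame:
    """Summarize type, missing values, and cardinality per column."""
    return pd.DataFrame({
        "dtype": df.dtypes.astype(str),
        "missing": df.isna().sum(),
        "missing_pct": (df.isna().mean() * 100).round(1),
        "unique": df.nunique(),
    })


people = pd.DataFrame({
    "name": ["Ana", "Ben", None, "Dee"],
    "date_of_birth": pd.to_datetime(
        ["1990-05-01", "2090-01-01", "1985-07-23", None]),
})

summary = profile(people)

# Fixed cutoff so the example is reproducible.
cutoff = pd.Timestamp("2024-01-01")
future_dob = people[people["date_of_birth"] > cutoff]

print(summary)
print(future_dob)
```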

Q 19. Explain your approach to dealing with data security concerns during cleaning and transformation.

Data security is paramount during data cleaning and transformation. My approach involves implementing measures at every stage to protect sensitive information. This includes:

- Data Minimization: Only accessing and processing the data absolutely necessary for the task at hand.

- Data Masking/Anonymization: Employing techniques to obscure or remove personally identifiable information (PII) if appropriate for the analysis.

- Access Control: Limiting access to the data to authorized personnel only, using role-based access control (RBAC).

- Encryption: Encrypting data both in transit (e.g., using HTTPS) and at rest (e.g., using database encryption).

- Secure Development Practices: Adhering to secure coding principles to prevent vulnerabilities in custom scripts or applications.

- Regular Auditing: Tracking and monitoring all access and changes made to the data.

For example, when cleaning a customer dataset containing credit card information, I would use tokenization to replace the actual credit card numbers with unique identifiers, ensuring that the sensitive data is protected.
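The idea behind tokenization and masking can be sketched as below. The TokenVault class and mask_card function are hypothetical, and the in-memory dictionary only illustrates the mapping; a real token vault is a separately secured, access-controlled service. The card number is a widely used test value, not real data.

```python
import secrets


class TokenVault:
    """Illustrative in-memory vault mapping sensitive values to random tokens."""

    def __init__(self):
        self._token_by_value = {}
        self._value_by_token = {}

    def tokenize(self, value: str) -> str:
        """Return a stable random token for the value, creating it if needed."""
        if value not in self._token_by_value:
            token = "tok_" + secrets.token_hex(8)
            self._token_by_value[value] = token
            self._value_by_token[token] = value
        return self._token_by_value[value]

    def detokenize(self, token: str) -> str:
        """Look up the original value; only the vault can do this."""
        return self._value_by_token[token]


def mask_card(number: str) -> str:
    """Masking: keep only the last four digits for display."""
    digits = number.replace(" ", "").replace("-", "")
    return "*" * (len(digits) - 4) + digits[-4:]


vault = TokenVault()
card = "4111 1111 1111 1111"
token = vault.tokenize(card)

print(token)            # random, so it differs on every run
print(mask_card(card))
```

Because the token is random rather than derived from the card number, it reveals nothing about the original value, yet the same card always maps to the same token, so joins and deduplication still work on the cleaned data.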

Q 20. How do you handle data conflicts when merging data from multiple sources?

When merging data from multiple sources, conflicts inevitably arise. My approach involves a structured process:

- Identify potential conflicts: Analyze the data sources to determine areas where inconsistencies or duplicates might occur (e.g., differing column names, conflicting values for the same identifier).

- Establish a conflict resolution strategy: Develop a plan for how to handle conflicts based on data quality and business rules. Options include:

  - Prioritize data sources: Give preference to one source’s data over others based on its reliability or recency.

  - Use a combination of values: If multiple values are valid, combine them into a single field (e.g., concatenate strings).

  - Create a new field: Flag conflicts by creating a new field that indicates the presence of conflicting data.

  - Manual review: For critical conflicts, manually review and resolve the discrepancies.

- Implement the strategy: Use programming techniques to automate the conflict resolution process. This might involve conditional logic, data deduplication, or using libraries to handle merging and joins effectively.

- Validate the results: Verify the accuracy and completeness of the merged data to ensure that conflicts have been resolved appropriately.

For instance, when merging customer data from two different systems with slightly different address formats, I would use a standardization library to resolve formatting inconsistencies before merging, prioritizing one system’s address if both contain different values.
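Source prioritization combined with a conflict flag can be sketched in pandas as follows. The two source tables, the email field, and the choice of the CRM as the preferred source are assumptions made for the example.

```python
import pandas as pd

# Two hypothetical sources describing overlapping customers.
crm = pd.DataFrame({
    "customer_id": [1, 2, 3],
    "email": ["a@example.com", "b@example.com", None],
})
billing = pd.DataFrame({
    "customer_id": [2, 3, 4],
    "email": ["b.new@example.com", "c@example.com", "d@example.com"],
})

# Outer join keeps customers that appear in either source.
merged = crm.merge(
    billing, on="customer_id", how="outer", suffixes=("_crm", "_billing")
).sort_values("customer_id").reset_index(drop=True)

# Flag a conflict only when both sources have a value and they differ.
merged["email_conflict"] = (
    merged["email_crm"].notna()
    & merged["email_billing"].notna()
    & (merged["email_crm"] != merged["email_billing"])
)

# Resolution rule: prefer the CRM value, fall back to billing when it is missing.
merged["email"] = merged["email_crm"].combine_first(merged["email_billing"])

print(merged[["customer_id", "email", "email_conflict"]])
```

Keeping the flag column alongside the resolved value lets the flagged rows be routed to manual review without blocking the rest of the merge.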

Q 21. How do you communicate data cleaning and transformation progress and results?

Effective communication is vital throughout the data cleaning and transformation process. My approach involves:

- Regular progress reports: Provide updates on the project timeline, key milestones achieved, and any challenges encountered using dashboards and visual reports.

- Data quality metrics: Track and report on key data quality indicators (DQIs) such as completeness, accuracy, and consistency. This helps monitor improvement over time.

- Data profiling reports: Share data profiling results to show stakeholders the initial state of the data and the improvements made through cleaning.

- Visualizations: Use charts and graphs to illustrate data distributions, trends, and patterns before and after cleaning.

- Documentation: Maintain comprehensive documentation of the data cleaning and transformation steps, including rationale, methods, and results, for future reference and reproducibility.

- Stakeholder meetings: Regularly engage with stakeholders to discuss progress, address concerns, and gather feedback.

For example, I’d use a dashboard to track the number of records cleaned, the percentage of missing values handled, and the number of data errors resolved. These visual metrics provide a clear picture of the progress and allow for prompt identification of issues.

Q 22. What are some common performance bottlenecks in data transformation pipelines?

Performance bottlenecks in data transformation pipelines often stem from inefficient processing, inadequate infrastructure, or poorly designed workflows. Think of a pipeline as an assembly line; if one part is slow, the entire process suffers.

- Inadequate Resource Allocation: Insufficient CPU, memory, or disk I/O can significantly slow down processing, especially when dealing with large datasets. For example, trying to process a terabyte-sized CSV file on a machine with limited RAM will lead to swapping and extremely slow performance.

- Inefficient Data Handling: Using inefficient data structures or algorithms can dramatically impact speed. For instance, repeatedly iterating through a large dataset in Python using nested loops instead of leveraging optimized libraries like Pandas or Dask will drastically increase processing time.

- Network Bottlenecks: Transferring data between different systems or cloud services can create bottlenecks if network bandwidth is limited or latency is high. Imagine transferring data across continents with a low-bandwidth connection – it will take far longer than if the data resided locally.

- Lack of Parallelism or Optimization: Many data transformation tasks can be parallelized to significantly reduce processing time. If your pipeline isn’t designed to take advantage of multiple cores or distributed computing, you’re missing out on significant performance gains. For instance, distributing a computationally intensive transformation across a cluster of machines using Apache Spark can lead to a substantial speed-up.

- Poorly Optimized Queries: If your transformation involves database queries, poorly written SQL can slow down the entire pipeline. This can be resolved by adding suitable indexes, choosing appropriate joins, and inspecting the query execution plan (e.g., with EXPLAIN) to understand how the database runs the query.

Addressing these bottlenecks involves careful planning, choosing the right tools and technologies, and optimizing the pipeline’s architecture for efficient data processing.
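Two of the remedies above, avoiding row-by-row Python loops and keeping memory use bounded, can be combined by reading a file in chunks and applying vectorized operations to each chunk. The sketch below uses an in-memory CSV as a stand-in for a large file; the column name and chunk size are arbitrary.

```python
import io

import pandas as pd

# Stand-in for a large CSV file: one 'amount' column with values 1..1000.
csv_data = io.StringIO(
    "amount\n" + "\n".join(str(i) for i in range(1, 1001)))

total = 0
rows = 0
# chunksize makes read_csv yield DataFrames of at most 250 rows each,
# so only one chunk is held in memory at a time.
for chunk in pd.read_csv(csv_data, chunksize=250):
    # Vectorized column sum instead of a Python loop over rows.
    total += chunk["amount"].sum()
    rows += len(chunk)

print(rows, total)
```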

Q 23. Explain your experience with version control for data transformation processes.

Version control is crucial for managing and tracking changes in data transformation processes, ensuring reproducibility and collaboration. It’s like keeping a detailed history of every edit to a document, allowing you to revert to previous versions if needed or understand the evolution of the process.

In my experience, I’ve extensively used Git for managing data transformation code (Python scripts, SQL scripts, etc.). This allows me to track changes, branch out to experiment with different approaches, merge changes from collaborators, and easily revert to previous versions if errors occur. I often use Git alongside platforms like GitHub or Bitbucket for collaboration and remote storage.

Beyond the code, I also employ version control for data schemas and transformation specifications (e.g., using JSON or YAML files to define data mappings). This ensures that changes to the data structures are tracked and manageable, which is particularly important when working with multiple data sources and evolving business requirements.

For example, I once used Git to manage a complex ETL process involving multiple stages and several developers. When a bug was discovered in a production environment, we were able to quickly identify the commit that introduced the error, revert to a previous stable version, and thoroughly debug the issue before redeploying a corrected version.

Q 24. Describe your experience with different data warehousing techniques.

Data warehousing techniques are essential for organizing and storing data for analytical purposes. Think of them as meticulously organized libraries for your data, optimized for efficient querying and reporting.

- Data Lakehouse: This approach combines the scalability and flexibility of a data lake (raw data in its native format) with the structured query capabilities of a data warehouse. I’ve used Delta Lake and Apache Iceberg, open table formats that add schema enforcement and ACID transactions on top of data lake storage, to provide a robust foundation for analytics.

- Relational Data Warehouses: These use SQL-based warehouse platforms such as Snowflake, Amazon Redshift, or Google BigQuery to store data in a structured format, typically modeled as a star or snowflake schema. They excel at complex queries over related tables, but usually require more upfront schema design and are less flexible than data lakehouse approaches.

- Data Lake: This involves storing raw data in its native format without a predefined structure. I’ve worked on projects using cloud storage services like AWS S3 or Azure Blob Storage, where structure is applied when the data is read (schema-on-read) rather than when it is written. This approach provides high flexibility but requires careful data governance and can be challenging to query efficiently.

My choice of technique depends on the specific project requirements, balancing the need for flexibility, scalability, and query performance. For projects with high-velocity data and changing schema requirements, a data lakehouse is usually the better fit. For projects with a well-defined schema and a focus on complex analytical queries, a relational data warehouse is usually more suitable.
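To show what a star schema looks like in practice, here is a minimal sketch using Python’s built-in sqlite3 module as a stand-in for a warehouse. The table names, columns, and sample rows are invented for illustration; a real warehouse would have several dimension tables and far larger fact tables.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Dimension table: descriptive attributes, one row per product.
    CREATE TABLE dim_product (
        product_key INTEGER PRIMARY KEY,
        name        TEXT NOT NULL,
        category    TEXT NOT NULL
    );
    -- Fact table: one row per sale, with a foreign key to the dimension.
    CREATE TABLE fact_sales (
        sale_id     INTEGER PRIMARY KEY,
        product_key INTEGER NOT NULL REFERENCES dim_product (product_key),
        quantity    INTEGER NOT NULL,
        revenue     REAL NOT NULL
    );
    INSERT INTO dim_product VALUES (1, 'Laptop', 'Electronics'),
                                   (2, 'Phone',  'Electronics'),
                                   (3, 'Desk',   'Furniture');
    INSERT INTO fact_sales VALUES (1, 1, 2, 2000.0),
                                  (2, 2, 1,  500.0),
                                  (3, 3, 4,  800.0),
                                  (4, 1, 1, 1000.0);
""")

# A typical analytical query: join the fact table to a dimension,
# then aggregate a measure by a descriptive attribute.
revenue_by_category = conn.execute("""
    SELECT d.category, SUM(f.revenue) AS total_revenue
    FROM fact_sales AS f
    JOIN dim_product AS d ON d.product_key = f.product_key
    GROUP BY d.category
    ORDER BY d.category
""").fetchall()
print(revenue_by_category)
```

Keeping measures in the fact table and descriptive attributes in dimensions is what lets this kind of query stay a simple join plus a GROUP BY.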

Q 25. How do you handle data anomalies or unexpected values?

Data anomalies and unexpected values are inevitable in any real-world dataset. Handling them correctly is crucial for maintaining data quality and ensuring accurate analysis. Think of it like quality control in a manufacturing plant: you need to identify and address defects to produce a good product.

My approach involves a multi-step process:

- Detection: I use a combination of automated methods and manual review. Automated methods include outlier detection algorithms (e.g., IQR, Z-score), data profiling to identify inconsistent data types or values outside predefined ranges, and data quality rules based on business knowledge.

- Investigation: Once anomalies are detected, I investigate their root cause. Are they due to data entry errors, sensor malfunctions, or genuine outliers? Understanding the source helps determine the appropriate handling strategy.

- Handling: The handling strategy depends on the nature and extent of the anomaly and the impact on analysis. Options include:

  - Removal: Removing rows containing severe anomalies if they are few and insignificant to the analysis.

  - Imputation: Replacing missing or invalid values with estimated values (e.g., using mean, median, or more sophisticated techniques like K-Nearest Neighbors). This is useful when anomalies are too frequent to discard but the affected records are otherwise valid.

  - Transformation: Transforming the data to mitigate the effects of anomalies (e.g., logarithmic transformation to handle skewed data).

  - Flagging: Adding a flag to indicate the presence of an anomaly, allowing for further analysis or filtering during reporting.

The key is to document the handling strategy and its rationale, ensuring transparency and reproducibility.
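The detection, flagging, and imputation steps above can be sketched with the standard library alone. This is a minimal example under stated assumptions: the `flag_and_impute` helper and the sample ages are hypothetical, the 1.5 multiplier is the conventional IQR fence, and median imputation is just one of the handling options listed. It also skips the investigation step, which cannot be automated.

```python
import statistics

def iqr_bounds(values, k=1.5):
    """Return (low, high) fences: Q1 - k*IQR and Q3 + k*IQR."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr

def flag_and_impute(values, k=1.5):
    """Flag values outside the IQR fences and replace them with the
    median of the non-flagged values. Returns (cleaned_values, flags)."""
    low, high = iqr_bounds(values, k)
    flags = [not (low <= v <= high) for v in values]
    normal = [v for v, bad in zip(values, flags) if not bad]
    fill = statistics.median(normal)
    cleaned = [fill if bad else v for v, bad in zip(values, flags)]
    return cleaned, flags

# Illustrative data: 240 stands in for a data entry error.
ages = [23, 25, 31, 35, 29, 41, 38, 27, 33, 240]
cleaned, flags = flag_and_impute(ages)
print(flags)
print(cleaned)
```

Returning the flags alongside the cleaned values keeps a record of which entries were changed, which supports the documentation and reproducibility goals mentioned above.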

Q 26. Explain your experience with data governance and compliance regulations.

Data governance and compliance are paramount in ensuring data quality, security, and adherence to regulations such as GDPR, CCPA, and HIPAA. Governance is the organization’s rulebook for data: it establishes the rules, processes, and accountability for how data is managed.

My experience includes developing and implementing data governance policies, defining data quality metrics, and establishing procedures for data access and security. I’ve worked with organizations to achieve compliance by implementing data masking and anonymization techniques to protect sensitive information. I am familiar with data lineage tracking to map data flow through the entire transformation pipeline. This helps in ensuring auditability and identifying the origin of potential data issues. I’ve also collaborated with legal and compliance teams to ensure adherence to relevant regulations.

For example, in a project involving healthcare data, I implemented strict access controls, data encryption, and de-identification techniques to ensure HIPAA compliance. This involved careful consideration of data privacy, security, and regulatory requirements throughout the entire data transformation and storage process.
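As a rough sketch of the masking and de-identification techniques mentioned above, the example below pseudonymizes an identifier with a keyed hash and masks an email address. The record fields, helper names, and key are hypothetical, and code like this is only one small piece of a compliance program; it does not by itself make a dataset compliant with HIPAA or any other regulation.

```python
import hashlib
import hmac

# Assumption: in a real system the key comes from a secrets manager,
# never from source code. This value is for illustration only.
SECRET_KEY = b"example-key-do-not-use"

def pseudonymize(value, key=SECRET_KEY):
    """Replace an identifier with a keyed hash (HMAC-SHA256).
    The same input always maps to the same token, so joins still work."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()

def mask_email(email):
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return local[:1] + "*" * max(len(local) - 1, 0) + "@" + domain

# Hypothetical record; field names and values are invented.
record = {"patient_id": "P-10045", "email": "jane.doe@example.com", "diagnosis": "J45"}
safe = {
    "patient_token": pseudonymize(record["patient_id"]),
    "email": mask_email(record["email"]),
    "diagnosis": record["diagnosis"],
}
print(safe)
```

A keyed hash is used rather than a plain hash so that someone without the key cannot rebuild the mapping simply by hashing a list of known identifiers.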

Q 27. What is your experience with automated data cleaning tools?

Automated data cleaning tools significantly improve efficiency and reduce manual effort in the data cleaning process. They are like sophisticated spell checkers and grammar tools but for data.

I’ve used various tools including:

- OpenRefine: A powerful tool for cleaning and transforming messy data, especially useful for dealing with inconsistencies, duplicates, and errors in tabular data.

- Talend Open Studio: A comprehensive ETL tool with built-in data quality rules and automated cleaning capabilities.

- Informatica PowerCenter: An enterprise-grade ETL tool with powerful data quality and cleansing capabilities.

- Python libraries (Pandas, scikit-learn): I use these extensively for scripting custom data cleaning procedures, leveraging their powerful data manipulation and machine learning capabilities for tasks such as outlier detection and imputation.

The choice of tool depends on the complexity of the data and the specific cleaning tasks. For simple data cleaning tasks, Python libraries might suffice. For complex scenarios with large datasets and stringent data quality requirements, enterprise-grade ETL tools are often the better fit.
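For the simple end of that spectrum, a scripted cleaning step can be very small. The sketch below uses only the standard library so that it runs anywhere; the record layout and the choice of email as the deduplication key are assumptions made for the example.

```python
import re

def normalize(record):
    """Trim and collapse whitespace, and normalize casing per field."""
    name = re.sub(r"\s+", " ", record["name"]).strip().title()
    email = record["email"].strip().lower()
    return {"name": name, "email": email}

def clean(records):
    """Normalize records, then drop duplicates by email (first one wins)."""
    seen = set()
    result = []
    for rec in map(normalize, records):
        if rec["email"] in seen:
            continue
        seen.add(rec["email"])
        result.append(rec)
    return result

# Illustrative input with inconsistent spacing, casing, and a duplicate.
rows = [
    {"name": "  alice   smith ", "email": "Alice@Example.com "},
    {"name": "Alice Smith",      "email": "alice@example.com"},
    {"name": "BOB JONES",        "email": "bob@example.com"},
]
cleaned_rows = clean(rows)
print(cleaned_rows)
```

Normalizing before deduplicating matters here: the first two rows only become recognizable as duplicates once casing and whitespace are made consistent.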

Q 28. Describe your experience with different data integration patterns.

Data integration patterns describe how different data sources are combined and processed. They are like different architectural blueprints for connecting and merging data from various sources.

- Extract, Transform, Load (ETL): This classic pattern involves extracting data from various sources, transforming it to a consistent format, and loading it into a target system. I’ve used this extensively for consolidating data from various databases, flat files, and APIs into a central data warehouse.

- Extract, Load, Transform (ELT): This is a variation where data is extracted and loaded into a data warehouse or lake before being transformed. This is particularly beneficial for large datasets where transformation before loading would be computationally expensive.

- Change Data Capture (CDC): This focuses on capturing and processing only the changes in data sources, rather than the entire dataset. This is efficient for incremental updates and real-time data integration.

- Data Virtualization: This creates a unified view of data without physically moving or integrating data from different sources. This offers agility but can be less efficient for complex transformations.

- Message Queues (e.g., Kafka): These provide a mechanism for asynchronous data integration, handling high-volume data streams efficiently. I’ve used this in projects involving real-time data ingestion and processing.

The choice of integration pattern depends on factors like data volume, velocity, variability, veracity, and the need for real-time processing. A project with high-volume streaming data might benefit from message queues, while a project with smaller, well-structured datasets might use a standard ETL approach.
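To illustrate the idea behind Change Data Capture, here is a minimal sketch that derives change events by comparing two snapshots and then applies them to a target. This is the simplest variant and is shown only for illustration; production CDC commonly reads the source database’s transaction log instead of diffing snapshots. The function names and sample rows are hypothetical.

```python
def capture_changes(previous, current):
    """Compare two snapshots keyed by primary key and return change events."""
    events = []
    for key, row in current.items():
        if key not in previous:
            events.append(("insert", key, row))
        elif previous[key] != row:
            events.append(("update", key, row))
    for key in previous:
        if key not in current:
            events.append(("delete", key, None))
    return events

def apply_changes(target, events):
    """Apply change events to a target table (a dict keyed by primary key)."""
    for op, key, row in events:
        if op == "delete":
            target.pop(key, None)
        else:
            # Inserts and updates are both upserts on the target.
            target[key] = row
    return target

# Illustrative snapshots of a source table at two points in time.
previous = {1: {"name": "Ann", "city": "Oslo"}, 2: {"name": "Bo", "city": "Rome"}}
current = {1: {"name": "Ann", "city": "Paris"}, 3: {"name": "Cy", "city": "Lima"}}

events = capture_changes(previous, current)
target = apply_changes(dict(previous), events)
print(events)
```

Only three events are shipped to the target, rather than the full table, which is the efficiency gain that makes CDC attractive for incremental updates.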

Key Topics to Learn for Data Cleaning and Transformation Interview

- Data Quality Assessment: Understanding different types of data quality issues (completeness, accuracy, consistency, timeliness, validity) and methods for identifying them. Practical application: Analyzing data profiles and summary statistics to detect anomalies and inconsistencies.

- Data Cleaning Techniques: Mastering techniques like handling missing values (imputation, deletion), outlier detection and treatment, and data transformation (normalization, standardization). Practical application: Implementing these techniques using Python libraries like Pandas and scikit-learn.

- Data Transformation Methods: Exploring various data transformation techniques including aggregation, pivoting, merging, and reshaping data for analysis and modeling. Practical application: Preparing data for machine learning algorithms by transforming categorical variables and scaling numerical features.

- Data Validation and Consistency Checks: Implementing data validation rules and constraints to ensure data integrity. Practical application: Using SQL constraints and checks to enforce data quality during database operations.

- Data Wrangling with SQL: Utilizing SQL queries for data cleaning and transformation tasks within relational databases. Practical application: Writing efficient SQL queries to filter, join, and aggregate data, handling null values and duplicates.

- Understanding ETL Processes: Grasping the core concepts of Extract, Transform, Load (ETL) pipelines and their role in data warehousing and business intelligence. Practical application: Designing and implementing simple ETL pipelines using Python scripts, ETL tools like Talend Open Studio, or cloud-based services.

- Data Profiling and Visualization: Using data visualization techniques to understand data characteristics and identify potential cleaning challenges. Practical application: Creating histograms, box plots, and scatter plots to analyze data distributions and detect outliers.
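For the data validation topic in the list above, the following sketch shows how SQL constraints reject bad rows at write time. It uses Python’s built-in sqlite3 module; the table definition, the 0 to 120 age range, and the sample rows are assumptions chosen for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,
        email       TEXT NOT NULL UNIQUE,
        age         INTEGER CHECK (age BETWEEN 0 AND 120)
    )
""")

# Illustrative rows, some of which break a constraint.
candidates = [
    (1, "ann@example.com", 34),
    (2, "bo@example.com", -5),     # violates the CHECK on age
    (3, "ann@example.com", 51),    # violates UNIQUE on email
    (4, None, 40),                 # violates NOT NULL on email
    (5, "cy@example.com", None),   # a NULL age passes the CHECK
]

rejected = []
for row in candidates:
    try:
        conn.execute("INSERT INTO customers VALUES (?, ?, ?)", row)
    except sqlite3.IntegrityError as exc:
        # Keep the id and the database's reason for later review.
        rejected.append((row[0], str(exc)))

accepted_ids = [
    r[0] for r in conn.execute("SELECT customer_id FROM customers ORDER BY customer_id")
]
print(accepted_ids, rejected)
```

Note that a CHECK constraint does not reject NULL, so a column that must always be present needs NOT NULL as well.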

Next Steps

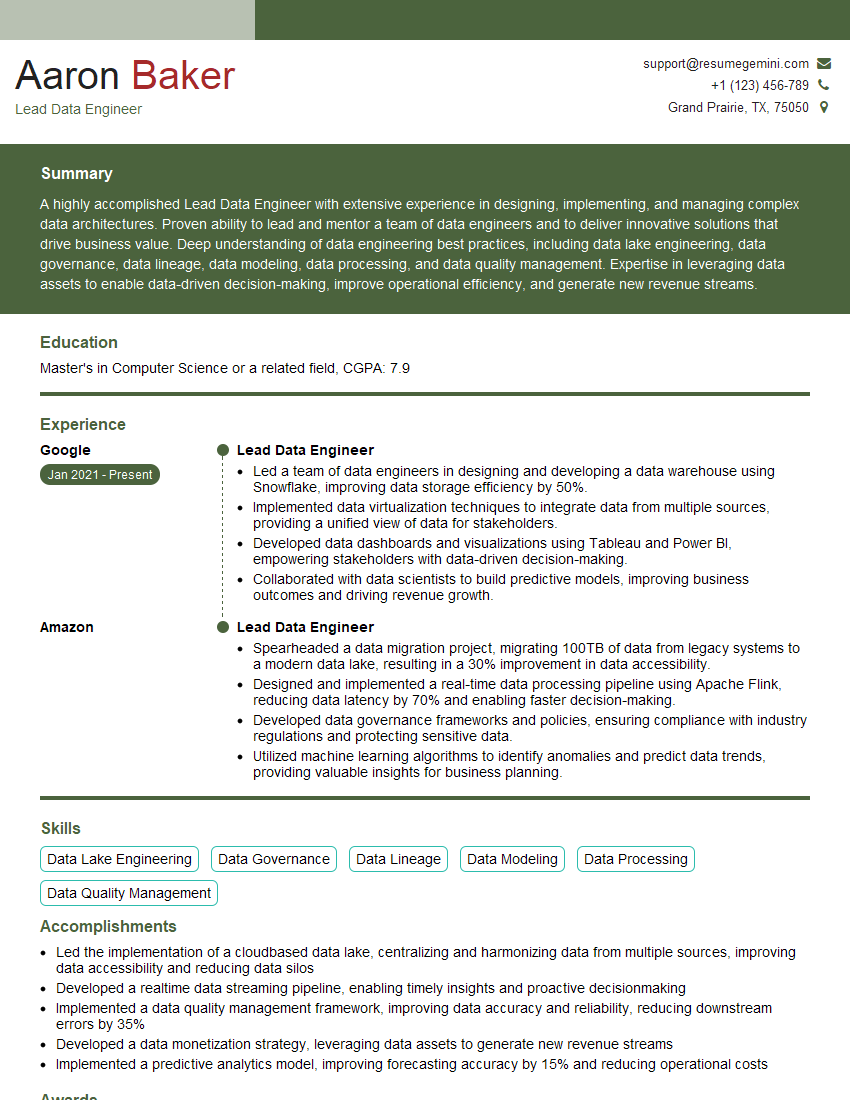

Mastering data cleaning and transformation is crucial for a successful career in data science, analytics, and engineering. These skills are highly sought after, allowing you to contribute significantly to data-driven decision-making within any organization. To maximize your job prospects, crafting an ATS-friendly resume is paramount. This ensures your application gets noticed by recruiters and hiring managers. We strongly encourage you to leverage ResumeGemini to build a professional and impactful resume that showcases your expertise in data cleaning and transformation. Examples of resumes tailored to this specific field are available to help guide you. Invest the time to build a compelling resume—it’s a crucial step in your career journey.
