Are you ready to stand out in your next interview? Understanding and preparing for Instructional Technology Assessment interview questions is a game-changer. In this blog, we’ve compiled key questions and expert advice to help you showcase your skills with confidence and precision. Let’s get started on your journey to acing the interview.

Questions Asked in Instructional Technology Assessment Interview

Q 1. Define instructional technology assessment and its purpose.

Instructional technology assessment is the systematic process of gathering and analyzing data to determine the effectiveness of technology-enhanced learning experiences. Its purpose is multifaceted: to evaluate the effectiveness of the instructional design, the chosen technologies, the learning outcomes achieved by learners, and the overall impact on learner performance and satisfaction. It’s about ensuring that technology is not just being used, but being used effectively to facilitate learning.

Think of it like this: you wouldn’t build a house without checking the foundation, the wiring, and the plumbing. Similarly, we assess instructional technology to ensure the ‘learning building’ is sound and effective.

Q 2. Explain the difference between formative and summative assessment in an eLearning context.

Formative and summative assessments differ significantly in their timing and purpose within an eLearning context.

- Formative Assessment: This type of assessment occurs during the learning process. It’s designed to provide ongoing feedback to both the instructor and the learner, allowing for adjustments and improvements along the way. Think of it as a ‘check-in’ to monitor understanding and address challenges promptly. Examples include quizzes within modules, interactive exercises, peer feedback, and informal discussions.

- Summative Assessment: This assessment takes place after the learning process is complete. It aims to measure the overall learning outcomes achieved by the learner. Summative assessments provide a final evaluation of the learner’s knowledge and skills. Examples include final exams, comprehensive projects, and performance-based tasks.

In essence, formative assessment is about improving learning, while summative assessment is about measuring learning.

Q 3. Describe various methods for assessing learner engagement in online learning environments.

Assessing learner engagement in online learning requires a multi-faceted approach. We can’t rely solely on completion rates.

- Tracking activity within the learning management system (LMS): This includes monitoring time spent on modules, completion rates, resource access, and forum participation.

- Analyzing interaction data within the learning materials: Interactive elements, like simulations or branching scenarios, can provide data on learner choices and responses, revealing their level of involvement.

- Using surveys and questionnaires: Regular feedback surveys can provide valuable insights into learner perceptions of engagement, enjoyment, and motivation.

- Employing focus groups and interviews: These qualitative methods allow for in-depth exploration of learner experiences and can reveal the factors influencing engagement.

- Analyzing discussion forum participation: The quality and quantity of contributions can indicate the level of learner engagement and interaction.

A combination of these methods provides a more comprehensive understanding of learner engagement than any single approach.
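To make the LMS-tracking idea concrete, here is a minimal Python sketch that rolls a raw activity log up into per-learner indicators. The log format and event names (`module_view`, `forum_post`) are hypothetical. Real LMS exports use their own schemas, so treat this as an illustration rather than a recipe.

```python
from collections import defaultdict

# Hypothetical LMS event log: (learner_id, event_type, minutes).
# Real LMS exports differ; these field and event names are illustrative only.
events = [
    ("ana", "module_view", 12),
    ("ana", "forum_post", 5),
    ("ana", "module_view", 20),
    ("ben", "module_view", 3),
    ("ben", "resource_open", 2),
]

def engagement_summary(log):
    """Aggregate raw events into simple per-learner engagement indicators."""
    summary = defaultdict(lambda: {"minutes": 0, "module_views": 0, "forum_posts": 0})
    for learner, event_type, minutes in log:
        row = summary[learner]
        row["minutes"] += minutes  # total logged time across all event types
        if event_type == "module_view":
            row["module_views"] += 1
        elif event_type == "forum_post":
            row["forum_posts"] += 1
    return dict(summary)

for learner, stats in engagement_summary(events).items():
    print(learner, stats)
```

Counts like these are only proxies for engagement. Pairing them with surveys and a look at the quality of forum contributions gives a fuller picture.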

Q 4. How do you evaluate the effectiveness of an eLearning course using quantitative data?

Evaluating eLearning course effectiveness using quantitative data involves analyzing numerical data to identify trends and patterns. Key metrics include:

- Completion rates: What percentage of learners completed the course?

- Time on task: How much time did learners spend on different modules or activities?

- Test scores: Did learners demonstrate mastery of the learning objectives?

- Performance on assessments: How well did learners perform on formative and summative assessments?

- Learner satisfaction scores (from surveys): This helps in understanding the overall learning experience.

Statistical analysis can reveal correlations between these metrics, helping to identify areas for improvement. For example, a low completion rate might suggest issues with course design or learner motivation.

Q 5. How do you evaluate the effectiveness of an eLearning course using qualitative data?

Qualitative data provides rich, in-depth insights into the ‘why’ behind the quantitative results. Methods for gathering this data include:

- Learner interviews: Individual interviews allow for in-depth exploration of learners’ experiences and perceptions.

- Focus groups: Group discussions provide insights into shared experiences and perspectives.

- Open-ended survey questions: These allow learners to express their thoughts and opinions in their own words.

- Analysis of learner comments and feedback: Examining written feedback provides valuable information on areas of strength and weakness.

- Observations of learner behavior (if applicable): This might be used in a synchronous online environment.

Qualitative data helps to understand the context surrounding quantitative data. For instance, a low test score might be explained by a learner interview revealing a lack of prior knowledge or technical difficulties.

Q 6. What are some common challenges in assessing learning outcomes in online settings?

Assessing learning outcomes in online settings presents unique challenges:

- Ensuring authenticity of work: Preventing plagiarism and cheating requires careful assessment design and proctoring strategies.

- Maintaining learner motivation and engagement: Without the fixed schedule and in-person contact of a classroom, online learners need more self-discipline and motivation to stay on track.

- Addressing the digital divide: Unequal access to technology and reliable internet can create inequities in learning opportunities.

- Providing effective feedback in a timely manner: Balancing workload with the need to give personalized feedback can be a challenge.

- Adapting assessment methods to the online environment: Traditional assessment methods may not be suitable for all online contexts.

Addressing these challenges requires careful planning, the use of diverse assessment methods, and a strong focus on learner support.

Q 7. How do you design assessments that are both valid and reliable?

Designing valid and reliable assessments is crucial for accurate evaluation.

- Validity: An assessment is valid if it measures what it intends to measure. To ensure validity, carefully align assessment items with the learning objectives. Use a mix of assessment methods and question types so that different aspects of learning are covered.

- Reliability: A reliable assessment produces consistent results. To ensure reliability, use clear instructions, well-defined rubrics, and minimize ambiguity in assessment items. Consider using multiple raters for subjective assessments to ensure consistency. Pilot test the assessment with a small group before deploying it widely.

Imagine a scale measuring weight: a valid scale accurately measures weight, and a reliable scale gives consistent readings each time you weigh the same object. Both are essential for accurate measurements; similarly, validity and reliability are both essential for trustworthy assessments.
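Reliability can be checked with standard statistics. The sketch below uses hypothetical 0/1 item scores and pass/fail rubric ratings to compute Cronbach’s alpha, which measures internal consistency across items, and Cohen’s kappa, which measures agreement between two raters corrected for chance. It is written in plain Python. Neither statistic says anything about validity: an assessment can be highly consistent and still measure the wrong thing.

```python
import statistics
from collections import Counter

def cronbach_alpha(item_scores):
    """Internal consistency: item_scores[i][j] is learner j's score on item i."""
    k = len(item_scores)
    # Total score per learner across all items.
    totals = [sum(learner) for learner in zip(*item_scores)]
    item_variance = sum(statistics.pvariance(item) for item in item_scores)
    return k / (k - 1) * (1 - item_variance / statistics.pvariance(totals))

def cohen_kappa(rater_a, rater_b):
    """Chance-corrected agreement between two raters' categorical ratings."""
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected by chance, given each rater's category frequencies.
    # (Assumes the raters do not both use a single category for everything.)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    return (observed - expected) / (1 - expected)

# Hypothetical data: 3 quiz items scored 0/1 for 4 learners.
items = [
    [1, 1, 0, 1],
    [1, 1, 0, 0],
    [1, 1, 1, 0],
]
# Hypothetical rubric ratings of the same six essays by two instructors.
rater_1 = ["pass", "pass", "fail", "pass", "fail", "pass"]
rater_2 = ["pass", "fail", "fail", "pass", "fail", "pass"]

print(f"Cronbach's alpha: {cronbach_alpha(items):.2f}")
print(f"Cohen's kappa: {cohen_kappa(rater_1, rater_2):.2f}")
```

What counts as an acceptable value depends on the stakes of the assessment. Low agreement between raters is usually a sign that the rubric needs clearer descriptors.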

Q 8. Explain the concept of accessibility in instructional technology assessment.

Accessibility in instructional technology assessment refers to ensuring that all learners, regardless of their abilities or disabilities, have equal opportunities to demonstrate their learning. This involves designing and implementing assessments that are usable and understandable by individuals with diverse needs, including visual, auditory, motor, cognitive, and learning disabilities.

For example, providing alternative text for images in online quizzes, offering audio descriptions for videos, and allowing learners to use assistive technologies like screen readers are crucial aspects of accessible assessment. Another example is offering different formats for assessments, such as providing a large-print version of a test or allowing students to submit audio recordings instead of written responses. We need to move beyond simple compliance with ADA regulations and create genuinely inclusive assessment experiences.

A practical application of this might involve using a universal design approach during the creation of an online test. This means that from the outset, the assessment is designed to be accessible to all, reducing the need for later adaptations.
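Some accessibility checks can be automated. As a minimal sketch, the Python snippet below uses the standard-library `html.parser` to flag images in a quiz page that have no `alt` attribute. The quiz markup is hypothetical. A check like this only catches missing attributes and cannot judge whether the alt text is meaningful, so manual review and testing with a screen reader are still needed.

```python
from html.parser import HTMLParser

class MissingAltChecker(HTMLParser):
    """Collects the src of every <img> tag that lacks an alt attribute."""

    def __init__(self):
        super().__init__()
        self.problems = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            # alt="" is legitimate for purely decorative images, so only a
            # completely missing attribute is flagged here.
            if "alt" not in attributes:
                self.problems.append(attributes.get("src", "(no src)"))

# Hypothetical quiz page markup.
quiz_html = """
<p>Question 3: Which graph shows exponential growth?</p>
<img src="graph_a.png" alt="Line rising at a constant slope">
<img src="graph_b.png">
<img src="divider.png" alt="">
"""

checker = MissingAltChecker()
checker.feed(quiz_html)
print(checker.problems)  # ['graph_b.png']
```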

Q 9. Discuss the role of learning analytics in instructional technology assessment.

Learning analytics plays a vital role in instructional technology assessment by providing data-driven insights into student learning. By tracking student performance, engagement, and progress, learning analytics allows educators to identify areas where students are struggling, adapt their teaching strategies, and personalize the learning experience. This data is not merely about grades; it’s about understanding the how and why behind student performance.

For example, learning analytics dashboards can reveal patterns in student engagement with online materials. If a large number of students are struggling with a particular module, the instructor can use this information to adjust instruction, such as by providing additional support materials or revisiting the concept through a different approach. The same data can inform decisions about interventions and curriculum changes. Imagine a scenario where analytics highlight a correlation between low quiz scores on a certain topic and infrequent logins to a specific online learning resource. This could suggest a need to improve the resource or make it more accessible.
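The "many students struggling with a module" signal can be sketched in a few lines of Python. The scores, the 65-point mastery threshold, and the 40% flagging share are all assumptions chosen for illustration. In practice, these values would come from the course’s own standards and an LMS export.

```python
# Hypothetical quiz scores (0-100) per module, one entry per learner.
module_scores = {
    "Module 1: Basics": [82, 90, 75, 88, 69],
    "Module 2: Ratios": [55, 48, 72, 50, 61],
    "Module 3: Graphs": [78, 64, 85, 70, 91],
}

PASS_MARK = 65        # assumed mastery threshold
STRUGGLE_SHARE = 0.4  # assumed: flag a module if 40% or more of learners fall below it

def flag_modules(scores_by_module, pass_mark=PASS_MARK, share=STRUGGLE_SHARE):
    """Return {module: share of learners below pass_mark} for modules at or above share."""
    flagged = {}
    for module, scores in scores_by_module.items():
        below = sum(1 for s in scores if s < pass_mark) / len(scores)
        if below >= share:
            flagged[module] = below
    return flagged

for module, below in flag_modules(module_scores).items():
    print(f"{module}: {below:.0%} of learners below {PASS_MARK}")
```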

Q 10. Describe different assessment strategies suitable for diverse learning styles.

Catering to diverse learners is essential for effective assessment. Learners often differ in how they prefer to take in information: some describe themselves as visual learners, some as auditory, and some as kinesthetic. Therefore, a variety of assessment strategies should be employed.

- Visual Learners: Assessments involving diagrams, charts, graphs, or visual presentations are effective. Examples include creating concept maps, analyzing images, or designing infographics.

- Auditory Learners: Assessments relying on listening and speaking skills work well. This includes oral presentations, debates, discussions, or listening comprehension tests.

- Kinesthetic Learners: Hands-on activities are ideal. Examples include role-playing, simulations, building models, or conducting experiments.

- Reading/Writing Learners: Traditional assessments like essays, reports, and written examinations remain suitable, although variations in format (e.g., allowing typed responses) can still help.

Employing multiple assessment methods, offering choices, and providing clear instructions ensure fairness and inclusivity for all learners. For example, giving students a choice between a written essay and an oral presentation on the same topic provides opportunities for them to showcase their understanding in ways that best suit their individual learning styles.

Q 11. How do you ensure alignment between learning objectives, instructional activities, and assessment methods?

Alignment between learning objectives, instructional activities, and assessment methods is crucial for effective instruction. This ensures that students are learning what they are expected to learn and that assessments accurately measure their understanding.

This alignment can be achieved using a backward design approach. First, clearly define the learning objectives: what specific knowledge, skills, or attitudes should students demonstrate by the end of the learning unit? Next, decide what assessment evidence will show that students have achieved those objectives. Only then design instructional activities that prepare students to produce that evidence. For example, if a learning objective is to analyze primary source documents, the assessment could be an essay requiring analysis of a new primary source document, and the instructional activities might involve guided practice in analyzing documents.

A lack of alignment can lead to frustration for both students and instructors. For instance, if the instruction focuses on memorization, but the assessment requires analysis and critical thinking, students will likely underperform even if they have mastered the memorized material. Maintaining alignment helps to create a cohesive and effective learning experience.
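One lightweight way to check alignment is to record which activities and assessment items target each objective and then look for gaps. The Python sketch below assumes a hand-built mapping with hypothetical objective and activity names. It reports objectives that are never taught or never assessed, plus assessments tied to no objective.

```python
# Hypothetical alignment map built by the course designer.
objectives = ["analyze primary sources", "explain historical context", "evaluate arguments"]

activities = {
    "guided document analysis": ["analyze primary sources"],
    "context lecture and discussion": ["explain historical context"],
}

assessments = {
    "essay on a new primary source": ["analyze primary sources", "explain historical context"],
    "date-recall quiz": [],  # targets no stated objective
}

def alignment_gaps(objectives, activities, assessments):
    """Report objectives lacking instruction or assessment, and assessments tied to no objective."""
    taught = {obj for objs in activities.values() for obj in objs}
    assessed = {obj for objs in assessments.values() for obj in objs}
    return {
        "not_taught": [o for o in objectives if o not in taught],
        "not_assessed": [o for o in objectives if o not in assessed],
        "orphan_assessments": [a for a, objs in assessments.items() if not objs],
    }

print(alignment_gaps(objectives, activities, assessments))
```

Here the check would surface "evaluate arguments" as neither taught nor assessed, and the date-recall quiz as measuring something no objective asks for.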

Q 12. What are some best practices for providing constructive feedback to learners?

Constructive feedback is essential for student learning. It should be specific, focused on the work rather than the person, and actionable. It should not only point out errors but also suggest ways to improve.

- Specificity: Instead of saying “Your essay is weak,” say “Your argument in the second paragraph lacks supporting evidence. Consider adding specific examples from the text.”

- Focus on the work: Avoid personal criticisms. Instead of saying “You’re a sloppy writer,” say “The grammar and punctuation errors in this section detract from the clarity of your argument. Review the grammar guide for assistance.”

- Actionable suggestions: Provide concrete steps for improvement. Instead of “Try harder,” say “Review Chapter 3, specifically pages 45-52 which discuss the methodology you applied incorrectly here.”

- Balance positive and negative feedback: Highlight strengths along with areas needing improvement.

Technology such as automated feedback for multiple-choice questions or online comment features on essays can streamline the process. However, automated feedback should be supplemented with personalized instructor commentary, which builds a personal connection and provides the depth that canned responses lack.
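Automated feedback for multiple-choice items works best when each distractor has its own explanation. The Python sketch below is built on that assumption. The item structure and wording are hypothetical and do not follow the format of any particular LMS.

```python
# Hypothetical multiple-choice item with feedback written for each option.
item = {
    "prompt": "Which measure is least affected by outliers?",
    "answer": "B",
    "feedback": {
        "A": "The mean shifts toward extreme values. Review how outliers affect averages.",
        "B": "Correct: the median depends only on the middle value(s).",
        "C": "The range is defined by the extremes, so outliers change it directly.",
    },
}

def grade_response(item, choice):
    """Return (is_correct, feedback) for a learner's selected option."""
    correct = choice == item["answer"]
    message = item["feedback"].get(choice, "Unrecognized option; please choose A, B, or C.")
    return correct, message

print(grade_response(item, "A"))
```

Because the feedback explains why each wrong option is wrong, a learner who picks A is pointed at the specific misconception rather than just told "incorrect."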

Q 13. How do you use technology to streamline the assessment process?

Technology significantly streamlines the assessment process. Learning management systems (LMS) such as Canvas or Moodle provide platforms for delivering assessments, collecting responses, grading, and providing feedback, all in one place.

Specifically, automated grading of objective items (multiple-choice, true/false) saves time and effort. An LMS also supports efficient scheduling and distribution of assessments. Furthermore, online assessment tools provide quick access to analytics, giving immediate, data-driven insight into student performance.

Technology also supports integrity and consistency. Plagiarism detection software flags suspicious overlap, and centralized review tools let instructors compare responses from students across different sections or courses side by side, which helps keep grading consistent and fair.
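Commercial tools such as Turnitin compare submissions against large external databases. As a much smaller illustration of the underlying idea, the sketch below uses Python’s standard-library `difflib.SequenceMatcher` to flag pairs of near-identical responses within one batch. The responses and the 0.9 similarity threshold are assumptions. A flagged pair is a prompt for human review, not proof of misconduct.

```python
from difflib import SequenceMatcher
from itertools import combinations

# Hypothetical short-answer responses from learners in different sections.
responses = {
    "sec1-ana": "Formative assessment gives feedback during learning so instruction can be adjusted.",
    "sec2-ben": "Formative assessment gives feedback during learning so that instruction can be adjusted.",
    "sec2-cai": "Summative tests measure what was learned at the end of a unit.",
}

THRESHOLD = 0.9  # assumed cutoff; always review flagged pairs by hand

def similar_pairs(texts, threshold=THRESHOLD):
    """Return (id_a, id_b, similarity) for every pair at or above the threshold."""
    flagged = []
    for (id_a, a), (id_b, b) in combinations(texts.items(), 2):
        ratio = SequenceMatcher(None, a.lower(), b.lower()).ratio()
        if ratio >= threshold:
            flagged.append((id_a, id_b, round(ratio, 2)))
    return flagged

print(similar_pairs(responses))
```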

Q 14. Describe your experience with different assessment tools and technologies.

Throughout my career, I’ve extensively used various assessment tools and technologies. My experience includes using Learning Management Systems (LMS) such as Canvas, Blackboard, and Moodle for delivering and grading online quizzes, tests, and assignments. I’m proficient in utilizing various question types, including multiple-choice, fill-in-the-blank, essay, and short answer questions. I am familiar with different types of formative and summative assessment tools and methodologies.

I’ve also used response systems like Poll Everywhere and Kahoot! for interactive in-class assessments and feedback. Furthermore, I have experience using plagiarism detection software like Turnitin to maintain academic integrity. My experience extends to using various analytics dashboards within the LMS to track student progress and identify areas for improvement. I’ve found that the best approach involves a blended strategy – using a mix of technological tools and traditional methods for a holistic assessment strategy that caters to individual learner needs.

Q 15. How do you address issues of assessment bias in instructional design?

Addressing assessment bias is crucial for ensuring fairness and accuracy in evaluating learner achievement. Bias can stem from various sources, including the wording of questions, the examples used, and even the format of the assessment itself. For example, a multiple-choice question relying heavily on cultural references might disadvantage learners from different backgrounds. To mitigate bias, I employ several strategies:

- Item review: I carefully review each assessment item for potentially biased language or content. This includes seeking feedback from diverse colleagues and checking items for gender, racial, and cultural neutrality.

- Diverse item formats: I utilize a variety of assessment types – not just multiple-choice – to cater to different learning styles and avoid inadvertently favoring certain cognitive skills.

- Universal Design for Learning (UDL) principles: I incorporate UDL principles into assessment design, providing multiple means of engagement, representation, and action and expression. This supports accessibility for learners with diverse needs.

- Pre-testing and pilot testing: Before widespread implementation, I conduct pre-tests and pilot tests with diverse groups of learners to identify and address potential biases.

- Statistical analysis: I use statistical methods to examine the performance of different subgroups on the assessment, identifying any significant disparities that might indicate bias.

For instance, in a recent project designing an online math assessment, I replaced culturally specific examples with culturally neutral ones. I also included both multiple-choice and problem-solving questions to capture a broader range of mathematical skills.
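The subgroup comparison can start as a simple screen: compute each item’s proportion correct for each group and flag large gaps. The Python sketch below uses hypothetical data and an assumed 0.3 gap threshold. It is only a crude first pass. Formal differential item functioning (DIF) methods such as Mantel-Haenszel compare learners of similar overall ability, and this sketch does not do that.

```python
# Hypothetical item responses (1 = correct, 0 = incorrect) for two learner subgroups.
item_responses = {
    "group_a": {"Q1": [1, 1, 0, 1], "Q2": [1, 0, 1, 1], "Q3": [1, 1, 1, 0]},
    "group_b": {"Q1": [1, 0, 1, 1], "Q2": [0, 0, 1, 0], "Q3": [1, 1, 0, 1]},
}

GAP_THRESHOLD = 0.3  # assumed; a large gap prompts review, it does not prove bias

def p_value(scores):
    """Item difficulty: proportion of learners answering correctly."""
    return sum(scores) / len(scores)

def flag_gaps(data, threshold=GAP_THRESHOLD):
    """Return {item: group_a p minus group_b p} for items with a gap at or above threshold."""
    a, b = data["group_a"], data["group_b"]
    flagged = {}
    for item in a:
        gap = p_value(a[item]) - p_value(b[item])
        if abs(gap) >= threshold:
            flagged[item] = round(gap, 2)
    return flagged

print(flag_gaps(item_responses))
```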

Q 16. Explain the importance of using multiple assessment methods.

Using multiple assessment methods is paramount for obtaining a holistic and accurate picture of learner understanding. Relying solely on one method, such as a traditional exam, provides a limited perspective and may not capture the full spectrum of learning outcomes. Different assessments measure different skills and knowledge.

- Formative assessments: These ongoing assessments, like quizzes, short assignments, or class discussions, provide valuable feedback throughout the learning process. They help identify learning gaps early on, allowing for timely interventions.

- Summative assessments: These end-of-unit or end-of-course evaluations, such as projects, presentations, or comprehensive exams, measure overall mastery of the material.

- Authentic assessments: These assessments, such as simulations, case studies, or portfolios, require learners to apply their knowledge and skills to real-world situations, providing a more genuine measure of understanding.

Imagine assessing students’ understanding of a historical event. A multiple-choice quiz might assess factual recall, while a project requiring them to create a presentation or a role-playing simulation would assess their deeper understanding, critical thinking, and communication skills.

Q 17. Describe your experience with different types of assessments (e.g., quizzes, projects, simulations).

My experience encompasses a wide range of assessment types, each chosen strategically depending on the learning objectives. I’ve extensively used:

- Quizzes: I leverage online quiz platforms like Moodle or Canvas to create formative assessments, often incorporating varied question types (multiple-choice, true/false, fill-in-the-blank, short answer) for comprehensive coverage.

- Projects: I design projects that require learners to apply knowledge in a creative or problem-solving context. For instance, designing a website, creating a presentation, or conducting a research project allows for demonstration of higher-order thinking skills.

- Simulations: I’ve incorporated simulations, such as interactive games or virtual environments, to assess learners’ abilities in a risk-free setting. This is especially effective for complex scenarios requiring decision-making or problem-solving under pressure, such as a flight simulator for aspiring pilots or a business simulation for MBA students.

- Portfolios: For showcasing learner growth and development over time, I use digital portfolios, where learners collect and reflect upon their work. This method is particularly beneficial for evaluating long-term learning and skill development.

The choice of assessment method is always carefully considered, ensuring alignment with learning objectives and learner needs.

Q 18. How do you analyze assessment data to inform instructional improvement?

Analyzing assessment data is crucial for iterative instructional improvement. My approach involves a systematic process:

- Descriptive statistics: I start by calculating descriptive statistics (mean, median, standard deviation) to understand the overall performance of the learners.

- Item analysis: I examine individual item performance to identify questions that are too easy or too difficult, that fail to distinguish stronger from weaker learners, or that might be ambiguous or biased.

- Subgroup analysis: I compare the performance of different subgroups (e.g., based on gender, ethnicity, prior knowledge) to identify potential disparities and address any inequities in instruction.

- Correlation analysis: I analyze the relationship between assessment scores and other variables (e.g., time spent on learning activities, participation in discussions) to gain insights into factors influencing learning outcomes.

- Qualitative data analysis: If appropriate, I incorporate qualitative data (e.g., learner feedback, observations) to gain a richer understanding of the learning process.

Based on this analysis, I revise instructional materials, teaching strategies, or assessment methods to improve learning outcomes. For example, if item analysis reveals that a particular question is consistently missed, I might revise the instructional material related to that concept or replace the question with a clearer one.
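The item-analysis step can be sketched with two classical statistics. The difficulty index p is the proportion of learners answering correctly. The upper-lower discrimination index D measures how much better high scorers do on the item than low scorers. The response matrix is hypothetical. The split uses halves because the sample is tiny; upper and lower 27% groups are a common convention for larger classes. The review thresholds in the loop are assumed rules of thumb.

```python
# Hypothetical scored responses: one row per learner, one column per item (1 = correct).
matrix = [
    [1, 1, 0, 1],
    [1, 1, 0, 1],
    [1, 1, 1, 0],
    [1, 0, 1, 0],
    [1, 0, 1, 0],
    [1, 0, 1, 0],
]

def item_stats(matrix, group_fraction=0.5):
    """Difficulty (p) and upper-lower discrimination (D) for each item."""
    # Rank learners by total score; ties keep their original order.
    ranked = sorted(matrix, key=sum, reverse=True)
    n_group = max(1, int(len(ranked) * group_fraction))
    upper, lower = ranked[:n_group], ranked[-n_group:]
    stats = []
    for i in range(len(matrix[0])):
        p = sum(row[i] for row in matrix) / len(matrix)
        d = (sum(row[i] for row in upper) - sum(row[i] for row in lower)) / n_group
        stats.append((round(p, 2), round(d, 2)))
    return stats

for number, (p, d) in enumerate(item_stats(matrix), start=1):
    # Assumed thresholds: very easy, very hard, or weakly discriminating items get reviewed.
    note = "review" if p > 0.9 or p < 0.2 or d < 0.2 else "ok"
    print(f"Item {number}: p={p}, D={d} ({note})")
```

In this made-up data, every learner gets item 1 right, so it tells you nothing. Item 3 is answered correctly more often by low scorers than high scorers, which often points to an ambiguous stem or a miskeyed answer.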

Q 19. What are some ethical considerations in instructional technology assessment?

Ethical considerations in instructional technology assessment are paramount. Key ethical principles include:

- Fairness and equity: Assessments must be designed and implemented in a way that is fair to all learners, regardless of their background or abilities. This involves addressing bias and ensuring accessibility.

- Privacy and confidentiality: Learner data must be handled responsibly, ensuring confidentiality and protecting privacy rights. This includes securing data, obtaining informed consent, and adhering to relevant data protection regulations.

- Transparency and accountability: Learners should be informed about the purpose of assessments, how their data will be used, and the criteria for evaluation. Clear grading rubrics and feedback mechanisms are crucial for transparency.

- Integrity and honesty: Preventing cheating and promoting academic integrity is crucial. This includes using appropriate assessment methods and technologies to deter plagiarism and other forms of academic dishonesty.

A clear example would be ensuring that all assessments are accessible to students with disabilities, adhering to ADA guidelines. Another example would be anonymizing student data before analysis to protect their privacy.
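As a minimal sketch of that second example, the Python snippet below replaces student IDs with keyed hashes (HMAC-SHA256) before analysis. Strictly speaking, this is pseudonymization rather than full anonymization: whoever holds the key can re-link records, and rare combinations of other fields can still identify a learner. The record layout is hypothetical, and key management should follow the institution’s data protection policies.

```python
import hashlib
import hmac
import secrets

# In practice the key is stored separately from the analysis data set (for
# example, by a data steward). It is generated here purely for illustration.
SECRET_KEY = secrets.token_bytes(32)

def pseudonymize(student_id: str, key: bytes = SECRET_KEY) -> str:
    """Replace a student ID with a keyed hash (HMAC-SHA256), truncated for readability."""
    digest = hmac.new(key, student_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]

# Hypothetical gradebook rows.
rows = [
    {"student_id": "S1024", "quiz_score": 78},
    {"student_id": "S2048", "quiz_score": 91},
]

# Drop the direct identifier; keep only the pseudonym plus analysis fields.
analysis_rows = [
    {"learner": pseudonymize(r["student_id"]), "quiz_score": r["quiz_score"]} for r in rows
]
print(analysis_rows)
```

Using a keyed hash rather than a plain hash matters here. Student IDs often follow a predictable pattern, so an unkeyed hash could be reversed simply by hashing every plausible ID.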

Q 20. How do you adapt assessments for learners with diverse needs and abilities?

Adapting assessments for learners with diverse needs and abilities is essential for inclusive education. This involves:

- Differentiated instruction: Providing various learning materials and assessment formats catering to different learning styles and preferences. This might include offering audio versions of reading materials, allowing learners to complete assessments orally or in writing, or providing extended time for completion.

- Assistive technology: Utilizing assistive technologies, such as screen readers, text-to-speech software, or speech-to-text software, to support learners with disabilities.

- Universal Design for Learning (UDL): Implementing UDL principles in the design of assessments, ensuring that they are accessible and usable by all learners.

- Individualized Education Programs (IEPs) and 504 plans: Working collaboratively with special education teachers and other professionals to design assessments that meet the unique needs of learners with IEPs or 504 plans.

For instance, a learner with dyslexia might benefit from an audio version of a reading comprehension assessment, while a learner with a visual impairment might need braille or large-print materials. Adapting assessments often requires collaboration with relevant professionals and careful consideration of individual learner needs.

Q 21. Describe your experience with using assessment data to track learner progress.

Tracking learner progress using assessment data is vital for providing timely feedback and adjusting instruction. I use several methods:

- Learning management systems (LMS): Most LMS platforms (Moodle, Canvas, Blackboard) provide tools for tracking learner progress on assignments and quizzes, generating reports and visualizations of performance over time.

- Data dashboards: I create custom data dashboards to visualize learner progress, identifying trends and patterns in individual and group performance.

- Progress monitoring: I regularly monitor learner progress through formative assessments, providing feedback and adjusting instruction as needed. This might involve providing additional support to struggling learners or challenging advanced learners with more complex tasks.

- Personalized learning plans: I use assessment data to create personalized learning plans for individual learners, tailoring instruction to their specific needs and learning styles.

For example, if a learner consistently struggles with a particular concept, I might provide additional resources, tutoring, or modified assignments. Regularly tracking progress and providing personalized feedback helps ensure that every learner keeps moving toward their learning goals.
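Progress monitoring can be sketched as a simple rule over each learner’s sequence of formative scores. The rule computes a least-squares trend and flags learners whose latest score is below a target or whose scores are not improving. The scores, the 65-point target, and the one-point-per-attempt minimum slope are hypothetical values for illustration.

```python
# Hypothetical formative quiz scores, in chronological order, for each learner.
progress = {
    "ana": [62, 70, 75, 81],
    "ben": [80, 74, 69, 65],
    "cai": [55, 58, 57, 60],
}

def trend(scores):
    """Least-squares slope of score against attempt number (points per attempt)."""
    n = len(scores)
    xs = range(n)
    mean_x, mean_y = (n - 1) / 2, sum(scores) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, scores))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den

def needs_attention(scores, target=65, min_slope=1.0):
    """Flag learners whose latest score is below target or whose scores are not improving."""
    return scores[-1] < target or trend(scores) < min_slope

for learner, scores in progress.items():
    status = "check in" if needs_attention(scores) else "on track"
    print(f"{learner}: slope {trend(scores):+.1f}/attempt, latest {scores[-1]} -> {status}")
```

Combining the two conditions catches different problems. Ben’s latest score still meets the target, but his scores are falling steadily. Cai is improving slowly but remains below the target.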

Q 22. How familiar are you with different learning management systems (LMS) and their assessment capabilities?

I’m very familiar with a wide range of Learning Management Systems (LMS), including Moodle, Canvas, Blackboard, and Brightspace. My experience extends beyond simply using these platforms; I understand how they are structured and the nuances of their assessment capabilities. This includes building multiple-choice questions, essays, quizzes, rubrics, and peer assessments, and even incorporating interactive simulations.

For instance, in Moodle, I’m adept at using the ‘Workshop’ module for peer and self-assessment, leveraging its rich feedback features. In Canvas, I use ‘SpeedGrader’ for efficient grading and timely feedback. Each LMS offers unique strengths in assessment design. My expertise lies in selecting the right platform and tools for the specific learning objectives and learner needs, which lets me maximize the effectiveness of assessments and enhance the overall learning experience.

Q 23. How do you ensure the security and integrity of online assessments?

Security and integrity are paramount in online assessments, so my approach is multi-faceted. First, I leverage the security features of the chosen LMS, ensuring that strong password policies, user authentication, and access controls are enforced. Second, I use strategies that deter cheating, such as randomized question banks, time limits, and proctoring tools where appropriate. Third, I design assessments to minimize opportunities for collusion, using unique questions and monitoring student activity patterns. Finally, data encryption and regular system updates help safeguard data from unauthorized access and breaches. For example, I might use question banks in Canvas so that each student receives a different set of questions, which makes copying answers from a classmate much harder. I also use the plagiarism detection integrations available in many LMS platforms and supplement them with external tools if necessary. This holistic approach protects the validity and reliability of the assessment results.
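An LMS’s built-in question banks normally handle randomization, but the idea is easy to sketch. The hypothetical Python example below draws the same number of items per objective for each student. It seeds the random generator from the exam and student IDs so that a student’s form can be regenerated later, for example during a grade appeal. The bank contents and ID format are assumptions.

```python
import hashlib
import random

# Hypothetical question bank grouped by objective (item IDs only).
bank = {
    "objective_1": ["1a", "1b", "1c", "1d"],
    "objective_2": ["2a", "2b", "2c"],
    "objective_3": ["3a", "3b", "3c", "3d", "3e"],
}

def build_form(student_id, exam_id, per_objective=2):
    """Draw per_objective items from each objective, seeded per student for reproducibility."""
    seed = int(hashlib.sha256(f"{exam_id}:{student_id}".encode()).hexdigest(), 16)
    rng = random.Random(seed)  # private generator; does not touch global random state
    form = []
    for items in bank.values():
        form.extend(rng.sample(items, per_objective))
    rng.shuffle(form)  # also randomize question order
    return form

print(build_form("S1024", "midterm"))
print(build_form("S2048", "midterm"))
```

Drawing a fixed number of items per objective keeps every form equally aligned with the objectives. Item difficulty can still vary between forms, however, so item statistics are worth checking after the exam.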

Q 24. What are some strategies for motivating learners to participate in assessments?

Motivating learners is crucial for successful assessment participation. I start with clear communication of each assessment’s purpose and its relevance to the learning objectives. Gamification techniques, like awarding points or badges for completing assessments, can boost engagement, and providing regular feedback and celebrating successes fosters a positive learning environment. Offering choices in assessment format also helps: students can demonstrate their understanding through presentations, projects, or essays in addition to traditional tests, which caters to diverse learning preferences and promotes intrinsic motivation. I also aim for assessments that are challenging yet achievable, so learners feel a sense of accomplishment on completion. For example, I might add elements of friendly competition (leaderboards that reward improvement rather than raw ranking) or build in opportunities for self-reflection and goal setting to promote engagement and a growth mindset.

Q 25. Explain your understanding of Bloom’s Taxonomy and its relevance to assessment design.

Bloom’s Taxonomy, in its revised form, is a hierarchical model that categorizes cognitive skills into six levels: Remembering, Understanding, Applying, Analyzing, Evaluating, and Creating. It’s fundamentally important in assessment design because it guides the creation of assessment items that measure the intended learning outcomes. For instance, if the learning objective is for students to ‘analyze’ historical data, the assessment should not simply test their ability to ‘remember’ dates; instead, it should require them to interpret and compare information, identify patterns, and draw conclusions. I align each assessment with the cognitive level required by its learning objective, preventing a mismatch between what is taught and what is assessed and supporting a fair and accurate evaluation of student learning. Used this way, Bloom’s Taxonomy helps keep assessments appropriately challenging and ensures that higher-order thinking skills are assessed wherever the objectives call for them.
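A rough alignment check can tag each item by its action verb and compare that with the objective’s level. The Python sketch below uses the six levels of the revised taxonomy. The verb list is a small hypothetical sample, and many verbs appear at more than one level in published lists, so verb matching is only a first pass before human judgment.

```python
# Revised Bloom's Taxonomy levels, lowest to highest.
LEVELS = ["remember", "understand", "apply", "analyze", "evaluate", "create"]

# Small hypothetical verb sample; real verb lists are longer and overlap between levels.
VERBS = {
    "list": "remember", "define": "remember", "recall": "remember",
    "explain": "understand", "summarize": "understand", "classify": "understand",
    "calculate": "apply", "implement": "apply",
    "analyze": "analyze", "differentiate": "analyze", "organize": "analyze",
    "critique": "evaluate", "judge": "evaluate",
    "design": "create", "generate": "create", "produce": "create",
}

def level_of(text):
    """Return the Bloom level of the first recognized verb in the text, or None."""
    for word in text.lower().replace(",", " ").split():
        if word in VERBS:
            return VERBS[word]
    return None

def items_below_objective(objective, items):
    """List items pitched below the cognitive level of the objective."""
    target = LEVELS.index(level_of(objective))  # assumes the objective contains a known verb
    flagged = []
    for item in items:
        level = level_of(item) or "remember"  # unrecognized items default to the lowest level
        if LEVELS.index(level) < target:
            flagged.append(item)
    return flagged

# Hypothetical objective and items.
objective = "Analyze census data to identify historical population trends"
items = [
    "List the years in which a census was taken",
    "Differentiate the growth patterns of two regions using the census tables",
]
print(items_below_objective(objective, items))
```

The first item is flagged because listing years is a recall task, below the Analyze level of the objective. The second item matches the objective’s level.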

Q 26. How do you incorporate learner feedback into the assessment process?

Learner feedback is invaluable for improving the assessment process. I actively solicit feedback through various means: surveys, focus groups, and informal discussions. I analyze this feedback to identify areas where the assessment could be improved in clarity, difficulty, or relevance. For instance, if students consistently struggle with a particular question type, I might revise the question or provide additional instructional support. This iterative process allows me to refine assessments over time, making them more effective and better aligned with student needs. I also use learner feedback to enhance the learning experience, adjusting instructional materials and techniques based on identified learning gaps and challenges. When learners see that their feedback leads to real changes, they tend to become more engaged and motivated.

Q 27. Describe a time you had to troubleshoot a problem with an online assessment.

In one instance, we were using a custom-built online assessment platform for a large-scale certification exam. A few hours before the exam, we experienced a significant server-side issue preventing students from accessing the assessment. My first step was to contact the hosting provider to diagnose the problem. We discovered a database connectivity issue. While the hosting provider worked on restoring the database connection, we quickly implemented a contingency plan: we mirrored the assessment to a secondary server and communicated with the affected students. We provided updates regularly, minimizing the disruption and ensuring a fair and equitable assessment experience for all participants. This experience highlighted the importance of redundancy, proactive communication, and a well-defined crisis management plan in online assessment delivery.

Q 28. How do you stay current with best practices in instructional technology assessment?

Staying current is crucial in this rapidly evolving field. I actively participate in professional development opportunities, attending conferences and workshops focused on instructional technology and assessment. I subscribe to relevant journals and online publications, such as those published by educational technology associations. I also engage with online communities and professional networks to share best practices and stay informed on emerging trends. Furthermore, I regularly review and update my knowledge of new technologies and assessment strategies to enhance the effectiveness and efficiency of my work. Continuous learning is essential to ensure I provide the highest quality assessment services.

Key Topics to Learn for Instructional Technology Assessment Interview

- Needs Analysis & Learning Objectives: Defining the target audience and learning needs, and aligning technology with measurable learning objectives. Practical application: Developing a needs analysis survey for a specific training program and using the results to write objectives aligned with Bloom’s Taxonomy.

- Technology Selection & Evaluation: Criteria for selecting appropriate instructional technologies (e.g., LMS, authoring tools, simulations) based on learning goals, budget, and accessibility. Practical application: Comparing the features and suitability of different Learning Management Systems (LMS) for a specific educational context.

- Instructional Design Models: Understanding and applying various instructional design models (e.g., ADDIE, SAM, Agile) to the assessment process. Practical application: Applying the ADDIE model to design an assessment plan for a new online course.

- Assessment Methods & Strategies: Designing formative and summative assessments aligned with learning objectives, utilizing various assessment methods (e.g., quizzes, projects, peer review). Practical application: Developing a rubric for evaluating student performance in a blended learning environment.

- Data Analysis & Interpretation: Utilizing assessment data to inform instructional decisions, identify areas for improvement, and demonstrate the effectiveness of technology integration. Practical application: Interpreting learning analytics data from an LMS to improve student engagement and course design.

- Accessibility & Universal Design for Learning (UDL): Ensuring all learners have equal access to technology and learning materials. Practical application: Adapting online assessments to meet the needs of learners with disabilities.

- Ethical Considerations: Understanding the ethical implications of using technology for assessment, including data privacy and security. Practical application: Developing a plan to address data privacy concerns in an online assessment.
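For the rubric practice task in the assessment-methods topic above, an analytic rubric can be represented as weighted criteria, each rated on a level scale. This is a sketch under assumptions: the criterion names, weights, and 0 to 4 scale are hypothetical, and it assumes weights sum to 1.0.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    name: str
    weight: float   # assumed share of the final grade; weights should sum to 1.0
    max_level: int  # highest performance level on this criterion's scale


def rubric_score(criteria: list[Criterion], ratings: dict[str, int]) -> float:
    """Return a weighted percentage from per-criterion level ratings."""
    total_weight = sum(c.weight for c in criteria)
    if abs(total_weight - 1.0) > 1e-9:
        raise ValueError(f"criterion weights sum to {total_weight}, expected 1.0")
    score = 0.0
    for c in criteria:
        level = ratings[c.name]  # raises KeyError if a criterion was not rated
        if not 0 <= level <= c.max_level:
            raise ValueError(f"{c.name}: level {level} outside 0..{c.max_level}")
        score += c.weight * level / c.max_level
    return round(100 * score, 1)


if __name__ == "__main__":
    essay_rubric = [
        Criterion("Content", 0.5, 4),
        Criterion("Organization", 0.3, 4),
        Criterion("Mechanics", 0.2, 4),
    ]
    print(rubric_score(essay_rubric, {"Content": 3, "Organization": 4, "Mechanics": 2}))
```

With these hypothetical ratings the weighted score is 0.5 × 3/4 + 0.3 × 4/4 + 0.2 × 2/4 = 0.775, or 77.5%. Keeping criteria explicit like this also makes it straightforward to report per-criterion results back to learners.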

Next Steps

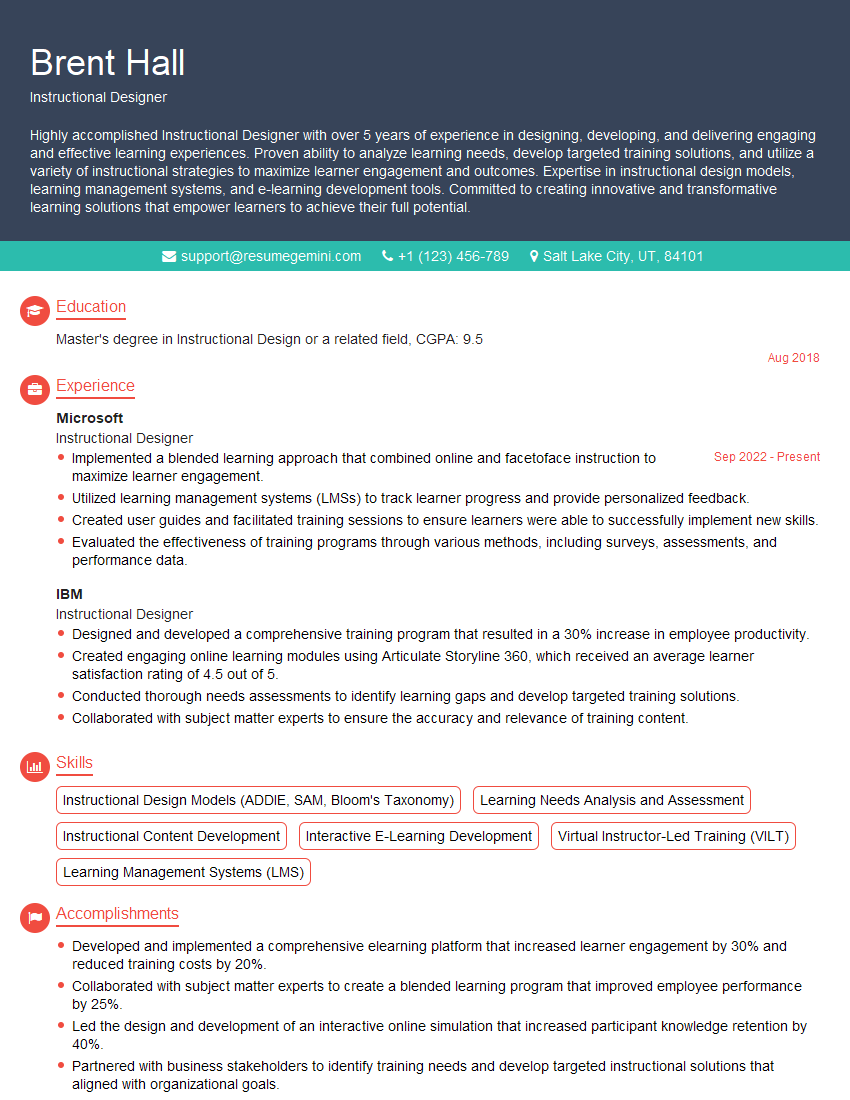

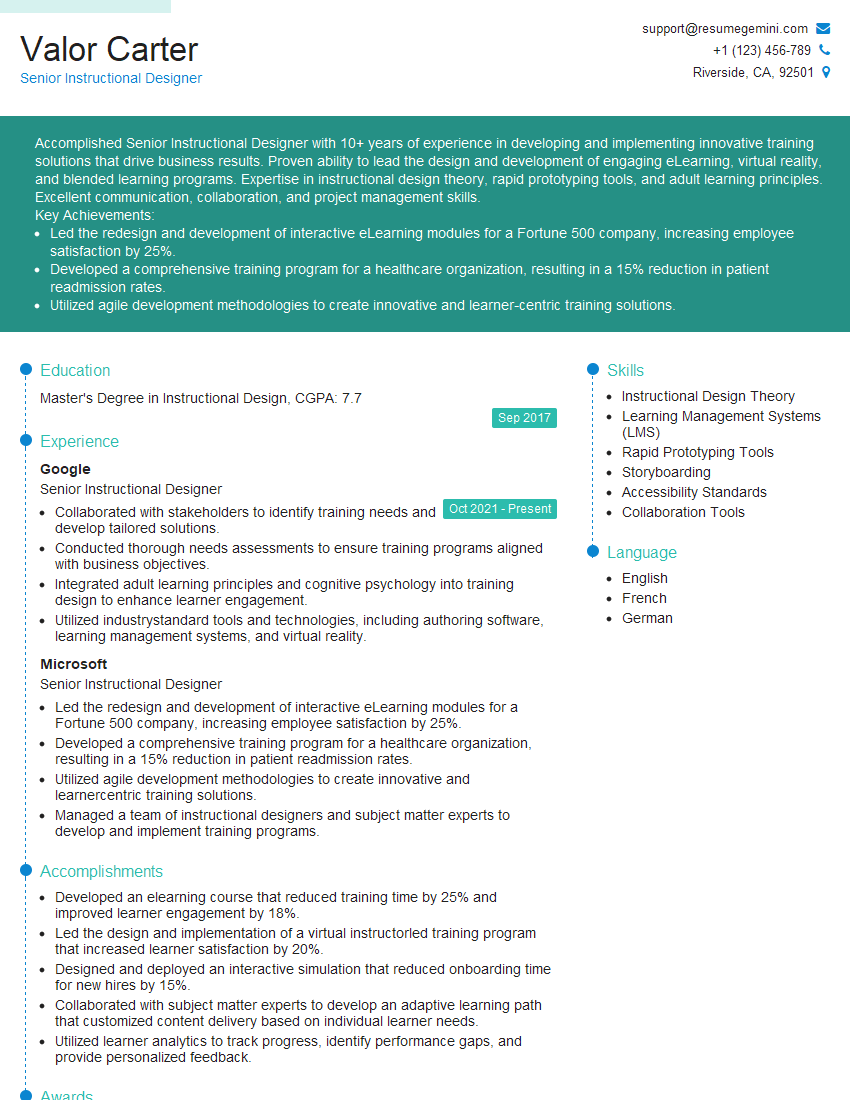

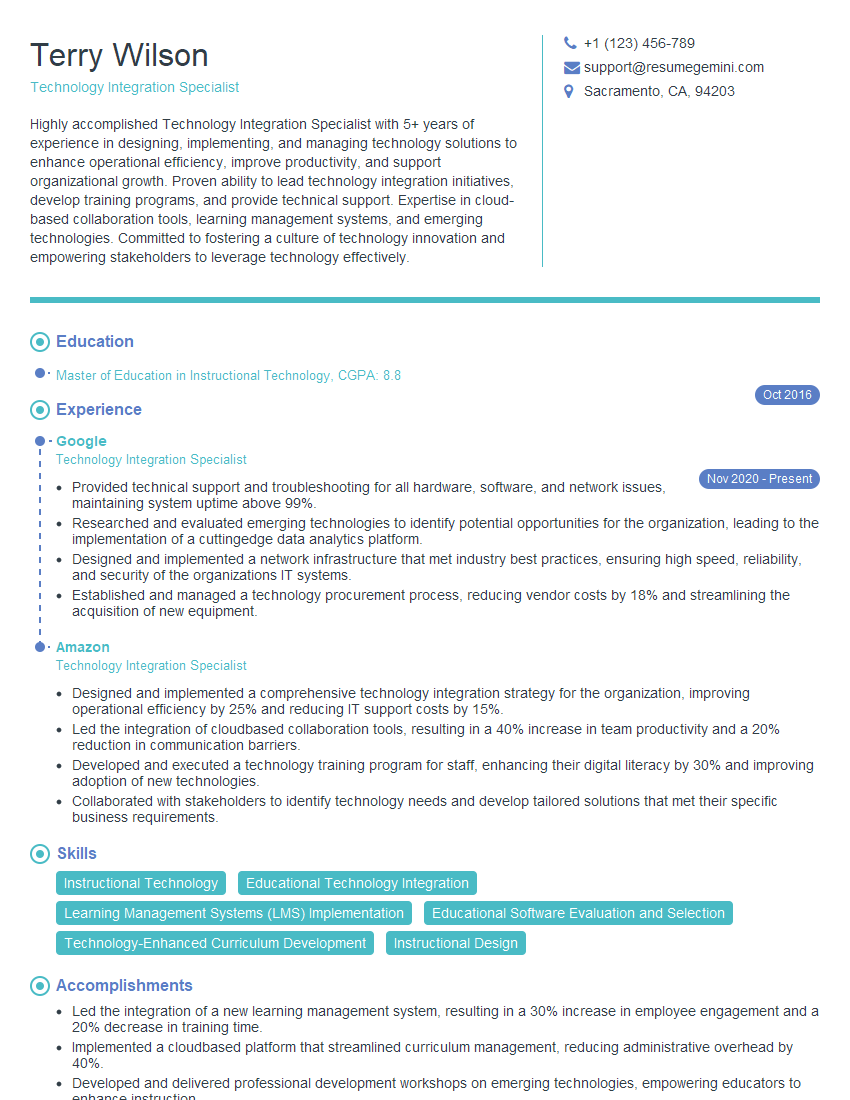

Mastering Instructional Technology Assessment is crucial for advancing your career in education and training. It demonstrates your ability to leverage technology effectively to improve learning outcomes and showcases your expertise in designing engaging and effective learning experiences. To significantly boost your job prospects, create a compelling and ATS-friendly resume that highlights your skills and accomplishments. ResumeGemini is a trusted resource to help you build a professional resume that stands out. Examples of resumes tailored to Instructional Technology Assessment are available to help guide you.
