The thought of an interview can be nerve-wracking, but the right preparation can make all the difference. Explore this comprehensive guide to Pattern Following interview questions and gain the confidence you need to showcase your abilities and secure the role.

Questions Asked in Pattern Following Interview

Q 1. Describe a time you identified a recurring pattern in a dataset.

Identifying recurring patterns is fundamental to data analysis. In a project analyzing customer purchase history, I noticed a strong seasonal pattern in sales of winter coats. The data, initially a seemingly chaotic jumble of transactions, revealed a clear peak in sales during the autumn months followed by a decline over the rest of the year. This wasn’t just a hunch; I validated it statistically by comparing sales figures across multiple years, which showed the same autumn rise and subsequent decline every year. This pattern allowed us to optimize inventory management and marketing campaigns, leading to increased profits and reduced waste.

This experience highlighted the importance of visualizing data effectively. Initially, I used line graphs to observe trends. Then, I applied time series analysis techniques to quantify the seasonal fluctuations and ensure the pattern wasn’t simply random variation.
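The year-over-year consistency check can be sketched in a few lines of Python. This is a minimal sketch that assumes sales arrive as (year, month, amount) tuples. The function names and the choice of months 9 to 11 as “autumn” are illustrative assumptions, and the check complements formal time series analysis rather than replacing it.

```python
from collections import defaultdict


def peak_month_by_year(records):
    """Return {year: month with the highest total sales} from (year, month, amount) tuples."""
    totals = defaultdict(float)
    for year, month, amount in records:
        totals[(year, month)] += amount
    peaks = {}
    for (year, month), total in totals.items():
        # Keep the month with the largest total seen so far for this year.
        if year not in peaks or total > totals[(year, peaks[year])]:
            peaks[year] = month
    return peaks


def has_consistent_peak(records, season_months):
    """True if every year's peak month falls inside season_months."""
    return all(month in season_months for month in peak_month_by_year(records).values())


# Illustrative usage with made-up numbers; autumn taken as months 9-11.
records = [(2021, 10, 500.0), (2021, 6, 80.0), (2022, 11, 620.0), (2022, 7, 90.0)]
print(peak_month_by_year(records))                # {2021: 10, 2022: 11}
print(has_consistent_peak(records, {9, 10, 11}))  # True
```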

Q 2. Explain how you would approach identifying patterns in unstructured data.

Unstructured data, like text or images, presents a unique challenge because it lacks the inherent organization of structured datasets. My approach involves a multi-step process. First, I employ techniques like Natural Language Processing (NLP) for textual data to clean, tokenize, and potentially stem or lemmatize the text. This transforms raw text into a structured format suitable for further analysis. For images, I might use computer vision techniques to extract features like edges, corners, and textures.

Next, I would apply pattern recognition algorithms suited to the data type. For example, frequent pattern mining algorithms, like Apriori or FP-Growth, could identify commonly occurring word combinations in text. For images, techniques like clustering (k-means, hierarchical) could group similar images together based on extracted features, revealing patterns in visual data. Machine learning models, such as recurrent neural networks (RNNs) for sequential data or convolutional neural networks (CNNs) for images, can also be powerful tools for uncovering intricate patterns. Finally, I always visualize the results to identify meaningful patterns and avoid misinterpretations.
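Here is a minimal sketch of the text path using plain Python. It lowercases and tokenizes each document, then counts word pairs that co-occur in at least `min_support` documents, which is the pair-level step of Apriori. The tiny stopword list and the function names are hypothetical. A real pipeline would use an NLP library for stemming or lemmatization and a full Apriori or FP-Growth implementation.

```python
import re
from collections import Counter
from itertools import combinations

STOPWORDS = {"the", "a", "an", "is", "and", "of", "to", "was"}  # tiny illustrative list


def tokenize(text):
    """Lowercase, keep alphabetic tokens, and drop stopwords (no stemming here)."""
    return [t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOPWORDS]


def frequent_word_pairs(documents, min_support):
    """Return word pairs that appear together in at least min_support documents.

    Only words that are frequent on their own are combined, because a pair can
    never be more frequent than either of its words (the Apriori property).
    """
    token_sets = [set(tokenize(doc)) for doc in documents]
    word_counts = Counter(w for tokens in token_sets for w in tokens)
    frequent_words = {w for w, c in word_counts.items() if c >= min_support}
    pair_counts = Counter()
    for tokens in token_sets:
        kept = sorted(tokens & frequent_words)
        pair_counts.update(combinations(kept, 2))
    return {pair: c for pair, c in pair_counts.items() if c >= min_support}


docs = [
    "The battery life is short",
    "Short battery life and slow charging",
    "Great screen, short battery life",
]
print(frequent_word_pairs(docs, min_support=2))
# {('battery', 'life'): 3, ('battery', 'short'): 3, ('life', 'short'): 3}
```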

Q 3. What techniques do you use to validate identified patterns?

Validating identified patterns is crucial to ensure they aren’t spurious. I employ several techniques, including statistical significance tests (e.g., chi-squared test, t-test), which estimate how likely a pattern at least as strong as the observed one would be to arise by chance alone. For time series data, I use autocorrelation and partial autocorrelation functions to assess the strength and duration of the identified patterns.
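The autocorrelation check can be sketched as follows, assuming an evenly spaced numeric series. It uses the standard sample ACF estimator and the common ±1.96/√n approximation for a white-noise band. The function names are illustrative.

```python
import math


def autocorrelation(series, lag):
    """Sample autocorrelation at `lag` using the standard ACF estimator
    (overall mean, normalized by the total sum of squares)."""
    n = len(series)
    if not 0 < lag < n:
        raise ValueError("lag must satisfy 0 < lag < len(series)")
    mean = sum(series) / n
    denom = sum((x - mean) ** 2 for x in series)
    num = sum((series[t] - mean) * (series[t + lag] - mean) for t in range(n - lag))
    return num / denom


def outside_white_noise_band(series, lag):
    """Rough check: |ACF| beyond 1.96/sqrt(n) is unlikely under pure white noise (~5% level)."""
    return abs(autocorrelation(series, lag)) > 1.96 / math.sqrt(len(series))


# A series repeating every 4 steps should show strong autocorrelation at lag 4.
series = [0, 1, 0, -1] * 6
print(round(autocorrelation(series, 4), 3))  # 0.833
print(outside_white_noise_band(series, 4))   # True
```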

Another approach is to split the dataset into training and testing sets. The training set is used to identify patterns, and the testing set is used to evaluate how well those patterns generalize to unseen data. A genuine pattern should hold up on the testing set, not just on the data it was discovered in. I also use cross-validation to strengthen the validation further by rotating which portions of the data serve as the training and testing sets.
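Here is a minimal k-fold splitter. It assumes the samples can be shuffled. For time series, folds should instead respect time order so the model never trains on the future. The function name and the seed default are illustrative.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation.

    Indices are shuffled once with a fixed seed, every sample appears in exactly
    one test fold, and fold sizes differ by at most one.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must satisfy 2 <= k <= n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size


for train, test in k_fold_indices(10, k=3):
    print(len(train), len(test))  # prints "6 4", then "7 3" twice
```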

Q 4. How do you handle situations where patterns are ambiguous or inconsistent?

Ambiguous or inconsistent patterns are common and require careful consideration. My approach involves first investigating the source of the ambiguity. This may involve deeper data cleaning, exploring additional variables, or examining the data collection process for potential biases. For example, if I find a pattern that only holds true for a small subset of the data, I might need to segment the data and analyze each subset separately to understand the underlying reasons for the inconsistencies.

Sometimes, patterns might appear inconsistent due to noise. In such cases, I apply filtering or smoothing techniques to reduce noise and highlight underlying trends. If the patterns truly are inconsistent, I might need to re-evaluate the initial hypothesis or consider using more robust pattern recognition methods capable of handling noisy or complex data.
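Simple exponential smoothing is one such technique. Below is a sketch that assumes a list of numeric observations and a smoothing factor `alpha` chosen by the analyst. The function name is illustrative.

```python
def exponential_smoothing(series, alpha):
    """Simple exponential smoothing: s_t = alpha * x_t + (1 - alpha) * s_{t-1}.

    Smaller alpha means heavier smoothing (more weight on the past).
    The first smoothed value is initialized to the first observation.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    if not series:
        return []
    smoothed = [series[0]]
    for x in series[1:]:
        smoothed.append(alpha * x + (1 - alpha) * smoothed[-1])
    return smoothed


print(exponential_smoothing([10, 20, 10], alpha=0.5))  # [10, 15.0, 12.5]
```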

Q 5. Describe your experience with different pattern recognition algorithms.

My experience spans a wide range of pattern recognition algorithms. I’m proficient in using association rule mining algorithms (Apriori, FP-Growth) for market basket analysis, identifying frequent itemsets in transactional data. I have also extensively used clustering algorithms (k-means, DBSCAN, hierarchical clustering) for grouping similar data points. For sequential data, I’ve used Hidden Markov Models (HMMs) and recurrent neural networks (RNNs). For image recognition, I’ve worked with convolutional neural networks (CNNs).

The choice of algorithm depends heavily on the data type, the nature of the patterns being sought, and the desired outcome. For instance, CNNs excel at image processing, whereas time series forecasting more often calls for RNNs or ARIMA models (although one-dimensional CNNs are sometimes applied to sequences as well).
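To make association rule mining concrete, here is a compact textbook version of Apriori’s frequent-itemset step. It is an illustrative sketch, not a production implementation. Support is counted by brute force over all transactions, which works for small data but becomes slow at scale. The basket contents are made up.

```python
from itertools import combinations


def apriori(transactions, min_support):
    """Return {frozenset(itemset): support_count} for all frequent itemsets.

    Level-wise search: candidates of size k are built from frequent itemsets of
    size k-1, and any candidate with an infrequent (k-1)-subset is pruned.
    """
    transactions = [frozenset(t) for t in transactions]
    current = {frozenset([item]) for t in transactions for item in t}
    frequent = {}
    k = 1
    while current:
        counts = {c: sum(1 for t in transactions if c <= t) for c in current}
        level = {c: n for c, n in counts.items() if n >= min_support}
        frequent.update(level)
        k += 1
        # Join step: unions of two frequent (k-1)-itemsets that have exactly k items.
        candidates = {a | b for a in level for b in level if len(a | b) == k}
        # Prune step: every (k-1)-subset of a candidate must itself be frequent.
        current = {c for c in candidates
                   if all(frozenset(s) in level for s in combinations(c, k - 1))}
    return frequent


baskets = [
    {"bread", "milk"},
    {"bread", "diapers", "beer", "eggs"},
    {"milk", "diapers", "beer", "cola"},
    {"bread", "milk", "diapers", "beer"},
    {"bread", "milk", "diapers", "cola"},
]
frequent = apriori(baskets, min_support=3)
print(frequent[frozenset({"diapers", "beer"})])  # 3
print(frozenset({"bread", "beer"}) in frequent)  # False
```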

Q 6. How would you differentiate between noise and a genuine pattern in a dataset?

Distinguishing noise from genuine patterns is a fundamental challenge in pattern recognition. Several approaches help differentiate between the two. Statistical methods, such as calculating signal-to-noise ratios, help quantify the relative strength of a pattern against background noise. Visual inspection of data through plots and charts also plays a critical role. Genuine patterns usually show consistency across different parts of the data, while noise tends to be random and scattered.

Furthermore, I leverage domain expertise to assess the plausibility of a pattern. A pattern that contradicts established knowledge within a particular field might be considered noise, unless there is strong evidence to suggest otherwise. Techniques like smoothing and filtering can also be applied to reduce the influence of noise, making underlying patterns more apparent.
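A permutation test is one practical way to ask whether noise alone could produce a pattern. The sketch below assumes paired numeric observations and tests a Pearson correlation. The function names, the permutation count, and the seed are illustrative choices.

```python
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)


def permutation_p_value(xs, ys, n_permutations=2000, seed=0):
    """Two-sided permutation test for a correlation.

    Shuffling ys destroys any real relationship, so the shuffled correlations show
    what noise alone produces. The p-value is the share of shuffles at least as
    extreme as the observed correlation (with +1 smoothing so it is never zero).
    """
    rng = random.Random(seed)
    observed = abs(pearson_r(xs, ys))
    shuffled = list(ys)
    hits = 0
    for _ in range(n_permutations):
        rng.shuffle(shuffled)
        if abs(pearson_r(xs, shuffled)) >= observed:
            hits += 1
    return (hits + 1) / (n_permutations + 1)


xs = list(range(30))
ys = [2 * x + (x % 3) for x in xs]  # strong linear relationship plus a small wobble
print(permutation_p_value(xs, ys) < 0.01)  # True
```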

Q 7. Explain the concept of overfitting in pattern recognition. How do you avoid it?

Overfitting occurs when a model learns the training data too well, including the noise, leading to poor generalization performance on unseen data. It’s like memorizing the answers to a test instead of understanding the underlying concepts. A model that overfits will have high accuracy on the training set but low accuracy on the testing set.

To avoid overfitting, I employ several strategies. Regularization techniques (e.g., L1 and L2 regularization) penalize model complexity. Cross-validation estimates the model’s performance on unseen data, exposing overfitting before deployment. Feature selection reduces the number of features used in the model, which keeps it from fitting irrelevant details. I also prefer simpler models where they perform adequately, because they are generally less prone to overfitting than complex ones. Finally, the size of the training dataset matters: a larger dataset generally reduces the risk of overfitting.
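The effect of L2 regularization is easiest to see with a single feature and no intercept. This sketch is an illustration under those simplifying assumptions, not a general implementation. In scikit-learn, `Ridge(alpha=...)` plays this role for multiple features.

```python
def ridge_slope(xs, ys, lam):
    """Closed-form L2-regularized slope for y ≈ w * x (single feature, no intercept).

    Minimizing sum((y - w*x)^2) + lam * w^2 and setting the derivative to zero gives
    w = sum(x*y) / (sum(x^2) + lam). lam = 0 is ordinary least squares; a larger
    lam shrinks w toward zero.
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


xs, ys = [1, 2, 3], [2, 4, 6]
for lam in (0, 14, 140):
    print(lam, ridge_slope(xs, ys, lam))  # 2.0, then 1.0, then about 0.18
```

In practice, `lam` would be chosen by cross-validation rather than fixed by hand.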

Q 8. How do you determine the significance of an identified pattern?

Determining the significance of an identified pattern involves a multi-faceted approach. It’s not simply about spotting a trend; it’s about understanding its impact and reliability. We need to consider several factors:

- Statistical Significance: Does the pattern hold up statistically? We use methods like hypothesis testing (e.g., chi-squared test, t-test) to determine if the observed pattern is likely due to chance or reflects a genuine underlying relationship. A low p-value (typically below 0.05) indicates statistical significance.

- Magnitude of Effect: How strong is the pattern? Is it a small, subtle change or a dramatic shift? This is often measured using effect sizes (e.g., Cohen’s d). A large effect size suggests a more significant pattern.

- Practical Significance: Even if a pattern is statistically significant, it might not be practically important. Consider the context: a tiny improvement in a metric might be statistically significant but irrelevant for business decisions. Practical significance depends on how much the pattern affects the goals at hand.

- Contextual Relevance: Does the pattern align with our existing knowledge and expectations? This helps validate the findings and prevents misinterpretations. Are there confounding factors that could explain the pattern?

- Consistency and Repeatability: Can the pattern be consistently observed across different datasets or time periods? A pattern observed only once is less significant than one repeatedly observed.

For example, imagine we find that ice cream sales and crime rates rise together. Even if the correlation is statistically significant, it has little practical value for reducing crime, because the likely explanation is a confounding variable: both are more common in hot weather.
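The statistical-significance and effect-size checks from the list above can be computed with the standard library. The sketch below assumes two independent groups of numeric measurements and returns Welch’s t statistic and Cohen’s d. Turning t into a p-value requires the t distribution, for example via `scipy.stats.ttest_ind(a, b, equal_var=False)`.

```python
import math
from statistics import mean, stdev


def cohens_d(group_a, group_b):
    """Cohen's d: difference in means divided by the pooled sample standard deviation."""
    na, nb = len(group_a), len(group_b)
    sa, sb = stdev(group_a), stdev(group_b)
    pooled = math.sqrt(((na - 1) * sa ** 2 + (nb - 1) * sb ** 2) / (na + nb - 2))
    return (mean(group_a) - mean(group_b)) / pooled


def welch_t(group_a, group_b):
    """Welch's t statistic, which does not assume equal variances."""
    va = stdev(group_a) ** 2 / len(group_a)
    vb = stdev(group_b) ** 2 / len(group_b)
    return (mean(group_a) - mean(group_b)) / math.sqrt(va + vb)


a, b = [5, 6, 7], [1, 2, 3]
print(cohens_d(a, b))           # 4.0
print(round(welch_t(a, b), 3))  # 4.899
```

Cohen’s conventional rough guides are about 0.2 for a small effect, 0.5 for a medium one, and 0.8 for a large one.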

Q 9. Describe a situation where you used pattern recognition to solve a problem.

During a project for a major e-commerce company, we noticed a significant drop in conversion rates during specific time periods. Initially, it seemed random. However, by carefully analyzing website logs, user behavior data, and server performance metrics, I identified a pattern. The drops coincided with periods of high server load, indicating a performance bottleneck affecting the checkout process. This wasn’t immediately obvious; it required meticulous data exploration and pattern recognition across diverse data sources. The solution involved upgrading server infrastructure and optimizing the checkout process. This led to a measurable increase in conversion rates and revenue.

Q 10. What are some common pitfalls to avoid when analyzing patterns?

Several pitfalls can derail pattern analysis. Some common ones include:

- Overfitting: Creating a model that fits the training data too well, leading to poor performance on unseen data. This is like memorizing the answers to a test without understanding the concepts. Techniques like cross-validation help detect overfitting, and regularization helps prevent it.

- Confirmation Bias: Seeking out or interpreting evidence in ways that confirm pre-existing beliefs while ignoring or discarding contradictory evidence. Objective evaluation and multiple perspectives are crucial.

- Ignoring Randomness: Attributing significance to random fluctuations in data. Statistical significance tests help differentiate between real patterns and noise.

- Correlation vs. Causation: Mistaking correlation for causation. Two variables may correlate without one directly causing the other. A deeper investigation into causal relationships is necessary.

- Data Bias: Analyzing data that is not representative of the population. This leads to erroneous conclusions about the overall pattern. Ensure data is carefully collected and sampled.

For instance, focusing solely on positive customer reviews might paint a misleading picture of overall customer satisfaction. Analyzing negative feedback is equally crucial.

Q 11. How do you prioritize patterns based on their potential impact?

Prioritizing patterns requires a structured approach. I usually consider:

- Potential Impact: How significantly will addressing this pattern affect key metrics (e.g., revenue, customer satisfaction, operational efficiency)? Patterns with the highest potential impact get priority.

- Feasibility of Intervention: Can we realistically address the pattern? Some patterns might require significant resources or changes, which lowers their priority.

- Urgency: How quickly does the pattern need to be addressed? Patterns causing immediate problems require immediate attention.

- Cost-Benefit Analysis: What are the costs and benefits of addressing the pattern? Prioritize patterns with a favorable cost-benefit ratio.

Imagine having several patterns: one related to a minor design flaw, another indicating a major security vulnerability, and a third showing a slow decline in website traffic. The security vulnerability would have the highest priority due to its high impact and urgency. The traffic decline may matter a great deal over time but is less urgent, so it can be investigated next. The design flaw might be lower priority unless it significantly affects the user experience.

Q 12. How do you translate identified patterns into actionable insights?

Translating patterns into actionable insights involves several steps:

- Clearly Define the Pattern: Describe the pattern concisely and unambiguously.

- Identify Root Causes: Explore the underlying reasons behind the pattern. Why is this pattern occurring?

- Develop Hypotheses: Formulate testable hypotheses to explain the pattern.

- Design Interventions: Based on the hypotheses and root causes, propose concrete actions to address the pattern.

- Test and Evaluate: Implement the interventions, monitor their effectiveness, and iterate based on the results.

For example, if we identify a pattern of increased customer churn after a specific feature update, the actionable insight might be to roll back the update, redesign the feature, or implement better user training.
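For the “Test and Evaluate” step, one common check is whether an intervention changed a rate, such as churn, relative to a control group. The sketch below implements a two-sided two-proportion z-test. It assumes independent groups and samples large enough for the normal approximation. The function name and the counts in the usage line are hypothetical.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for a difference between two proportions.

    Uses the pooled proportion under the null hypothesis of no difference and
    returns (z, p_value); the two-sided normal tail is erfc(|z| / sqrt(2)).
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical numbers: churned users after a redesign vs. a control group.
z, p = two_proportion_z_test(successes_a=120, n_a=1000, successes_b=150, n_b=1000)
print(f"z = {z:.2f}, p = {p:.3f}")
```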

Q 13. Explain the difference between inductive and deductive reasoning in pattern identification.

Inductive and deductive reasoning are contrasting approaches to pattern identification:

- Inductive Reasoning: This is a bottom-up approach. We start with specific observations and generalize them into broader patterns or principles, so the conclusions are probable rather than guaranteed. For example, observing that every swan we have ever seen is white leads to the inductive conclusion that all swans are white (this is famously false, highlighting the limitations of inductive reasoning).

- Deductive Reasoning: This is a top-down approach. We start with general principles or theories and use them to deduce specific conclusions or predictions. For instance, knowing that all men are mortal (general principle) and Socrates is a man (specific fact), we deductively conclude that Socrates is mortal.

In pattern identification, inductive reasoning is often used to discover patterns from data, while deductive reasoning helps to validate or explain those patterns using existing theories or models.

Q 14. What software or tools are you proficient in for pattern analysis?

My proficiency in pattern analysis extends to several software and tools:

- Programming Languages: Python (with libraries like Pandas, NumPy, Scikit-learn), R.

- Data Visualization Tools: Tableau, Power BI, Matplotlib, Seaborn.

- Statistical Software: SPSS, SAS.

- Machine Learning Platforms: TensorFlow, PyTorch.

- Databases: SQL for relational databases, and NoSQL databases.

The choice of tools depends on the nature of the data and the complexity of the analysis. For instance, I might use Python and Scikit-learn for complex machine learning tasks, while Tableau would be ideal for visualizing patterns to a non-technical audience.

Q 15. How do you handle large datasets when looking for patterns?

Handling large datasets for pattern recognition requires a strategic approach combining efficient algorithms and data reduction techniques. Simply throwing a massive dataset at a standard algorithm is computationally expensive and often ineffective. My approach focuses on several key strategies:

- Sampling: For exploratory analysis, I often start with a representative sample of the data. This allows for quicker identification of potential patterns and avoids unnecessary computational burden. Once promising patterns are identified in the sample, I can validate them on the full dataset.

- Dimensionality Reduction: Principal Component Analysis (PCA) can significantly reduce the dimensionality of the data while preserving most of its variance. This makes pattern identification easier and faster by focusing on the most informative directions in the data. t-distributed Stochastic Neighbor Embedding (t-SNE) is better suited to visualizing high-dimensional data in two or three dimensions, because it preserves local neighborhood structure rather than global distances.

- Data Partitioning: Dividing the dataset into smaller, manageable chunks allows for parallel processing, drastically reducing the overall processing time. This is particularly effective with distributed computing frameworks like Spark or Hadoop.

- Algorithm Selection: Choosing the right algorithm is crucial. Algorithms designed for large datasets, such as streaming algorithms or approximate nearest neighbor search, are often necessary.

- Incremental Learning: For evolving datasets, where new data arrives continuously, I employ incremental learning techniques. These methods update the pattern recognition model incrementally without reprocessing the entire dataset each time.

For example, in a project analyzing customer purchasing behavior, I initially used a random sample of 10% of the customer records to identify purchasing patterns. This allowed me to quickly test different algorithms and identify promising leads. Once a suitable pattern was identified, the algorithm was then applied to the full dataset for validation and detailed analysis.
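When the full table is too large to load, or when records arrive as a stream, reservoir sampling (Algorithm R) draws a uniform random sample of fixed size `k` in a single pass. This sketch accepts any Python iterable of records. Note that it produces a fixed-size sample rather than a fixed percentage like the 10% above.

```python
import random


def reservoir_sample(stream, k, seed=None):
    """Algorithm R: uniform random sample of k items from a stream of unknown length.

    Memory use is O(k), so the full dataset never has to fit in RAM.
    """
    rng = random.Random(seed)
    reservoir = []
    for i, item in enumerate(stream):
        if i < k:
            reservoir.append(item)
        else:
            # Item i replaces a reservoir slot with probability k / (i + 1).
            j = rng.randint(0, i)  # inclusive on both ends
            if j < k:
                reservoir[j] = item
    return reservoir


sample = reservoir_sample(range(100_000), k=5, seed=42)
print(len(sample))  # 5
```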

Q 16. Explain your understanding of time series analysis and pattern detection.

Time series analysis is a specialized field focusing on data points indexed in time order. Pattern detection within time series involves identifying recurring trends, seasonality, or anomalies in the data. This could involve anything from stock prices to weather patterns to sensor readings. My understanding encompasses several key methods:

- Decomposition: Breaking down a time series into its constituent components (trend, seasonality, residuals) allows for separate analysis of each component. This helps to isolate underlying patterns that might be obscured by noise or other factors.

- Moving Averages: Smoothing the data using moving averages helps to reduce noise and reveal underlying trends. Different window sizes can be used to capture various trends.

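- Autoregressive Integrated Moving Average (ARIMA) models: These statistical models are powerful for forecasting and identifying patterns in time series that are stationary or can be made stationary by differencing (the “integrated” part of ARIMA). They model the relationship between current values and both past values and past forecast errors in the series.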

- Machine Learning Techniques: Techniques like Recurrent Neural Networks (RNNs), specifically LSTMs (Long Short-Term Memory networks), are excellent for identifying complex, long-range dependencies in time series data.

For instance, I worked on a project analyzing website traffic data to identify seasonal patterns. By using ARIMA models and visualizing the data, we were able to predict peak traffic periods, allowing the company to proactively manage server capacity.
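The decomposition and moving-average methods above can be combined into classical additive decomposition. First, estimate the trend with a centered moving average. Then subtract it and average what remains for each season. The sketch below assumes an evenly spaced series that covers at least two full periods. statsmodels’ `seasonal_decompose` offers a library version.

```python
def centered_moving_average(series, period):
    """Trend estimate via a centered moving average; None where the window does not fit.

    For an even period this is the usual 2 x period moving average: the window spans
    period + 1 points, with half weight on the two end points.
    """
    n = len(series)
    half = period // 2
    trend = [None] * n
    for t in range(half, n - half):
        window = series[t - half:t + half + 1]
        if period % 2:  # odd period: plain average of `period` points
            trend[t] = sum(window) / period
        else:
            trend[t] = (sum(window) - 0.5 * window[0] - 0.5 * window[-1]) / period
    return trend


def seasonal_indices(series, period):
    """Additive seasonal component: mean detrended value for each position in the
    cycle, re-centered so the indices sum to zero. Needs at least two full periods."""
    trend = centered_moving_average(series, period)
    buckets = [[] for _ in range(period)]
    for t, (value, level) in enumerate(zip(series, trend)):
        if level is not None:
            buckets[t % period].append(value - level)
    raw = [sum(b) / len(b) for b in buckets]
    offset = sum(raw) / period
    return [r - offset for r in raw]


# Linear trend plus a repeating 4-step pattern [2, 0, -1, -1].
pattern = [2, 0, -1, -1]
series = [t + pattern[t % 4] for t in range(16)]
print(seasonal_indices(series, 4))  # [2.0, 0.0, -1.0, -1.0]
```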

Q 17. Describe your experience working with different types of patterns (e.g., sequential, spatial, temporal).

My experience spans various pattern types. Each requires different techniques and approaches:

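- Sequential Patterns: These involve identifying ordered sequences of events or items, such as the order of user interactions on a website or the sequence of purchases a customer makes over time. Algorithms like GSP or PrefixSpan are commonly used. Market basket analysis with Apriori or FP-Growth is the unordered counterpart: it finds items frequently purchased together, regardless of order.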

- Spatial Patterns: These patterns are defined by geographic location. Examples include analyzing crime hotspots in a city, identifying clusters of similar businesses, or detecting patterns in satellite imagery. Spatial statistics and techniques like clustering (e.g., k-means) play a crucial role.

- Temporal Patterns: As discussed earlier, these are patterns that evolve over time. Time series analysis is the core methodology, but other approaches like event sequence analysis are also relevant.

In a past project involving sensor data from a manufacturing plant, I combined temporal and spatial pattern recognition. By analyzing sensor readings over time together with their locations on the factory floor, we pinpointed the source of recurring equipment malfunctions.
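Sequential patterns differ from itemsets in that order matters. Below is a brute-force sketch of the simplest case. For each ordered pair, it counts how many sequences contain event `a` followed (not necessarily immediately) by event `b`. The session format and page names are assumptions. Dedicated sequential pattern mining algorithms extend this idea to longer patterns efficiently.

```python
from collections import Counter


def ordered_pair_support(sequences):
    """Support of every ordered pair (a, b) meaning "a occurs before b".

    Support counts sequences containing the pattern, not total occurrences,
    which is the usual convention in sequential pattern mining.
    """
    support = Counter()
    for seq in sequences:
        seen_before = set()
        pairs = set()
        for event in seq:
            for earlier in seen_before:
                pairs.add((earlier, event))
            seen_before.add(event)
        support.update(pairs)  # each pair counted at most once per sequence
    return support


sessions = [
    ["home", "search", "product", "cart"],
    ["home", "product", "cart"],
    ["search", "home", "cart"],
]
support = ordered_pair_support(sessions)
print(support[("home", "cart")])  # 3
print(support[("cart", "home")])  # 0
```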

Q 18. How would you communicate your findings about identified patterns to a non-technical audience?

Communicating complex pattern recognition findings to non-technical audiences requires clear, concise language and effective visualization. I avoid jargon and rely on storytelling and relatable analogies:

- Visualizations: Charts, graphs, and maps are invaluable. A simple bar chart showing the frequency of a particular pattern is far more effective than a complex statistical report.

- Analogies and Metaphors: Relating patterns to everyday experiences makes them easier to grasp. For example, explaining a cyclical pattern in sales data as similar to the seasons makes it more intuitive.

- Storytelling: Framing the findings within a narrative context helps to engage the audience and makes the information memorable. For example, instead of just presenting results, I’d explain how the discovered patterns led to a specific outcome or solved a particular business problem.

- Focus on the ‘So What?’: Always emphasize the implications of the findings. What actions can be taken based on the identified patterns? How will this knowledge benefit the organization or its stakeholders?

For example, when presenting findings on customer segmentation based on purchasing patterns, I might show a simple visual of different customer groups, illustrating their key characteristics and purchasing habits, and then directly link those insights to improved marketing strategies and increased sales projections.

Q 19. Can you explain the concept of a ‘false positive’ in pattern recognition?

A false positive in pattern recognition refers to the incorrect identification of a pattern where none actually exists. It’s essentially a type I error. This is a common problem, particularly when dealing with noisy data or complex patterns.

Imagine searching for a specific sequence of DNA in a large genome. A false positive would be identifying that sequence where it’s actually just a random occurrence of similar base pairs. The consequences of false positives can be significant, leading to incorrect conclusions and wasted resources.

Minimizing false positives requires careful consideration of several factors:

- Significance Thresholds: Setting appropriate thresholds for statistical significance is crucial. A stricter threshold (a smaller significance level, such as 0.01 instead of 0.05) reduces the likelihood of false positives, but it also increases the risk of missing genuine patterns (false negatives).

- Data Cleaning: Removing noise and outliers from the data reduces the chance of mistaking random fluctuations for genuine patterns.

- Cross-Validation: Evaluating the model on held-out folds of the data, and on independent datasets where available, helps assess its generalizability and detect overfitting, a major source of false positives.

- Multiple Hypothesis Testing Corrections: When testing many patterns simultaneously, adjustments like the Bonferroni correction are necessary to control the overall false positive rate.
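Here is a sketch of the Bonferroni correction: with m tests, each p-value is compared against α/m. For context, running 20 independent tests at α = 0.05 on pure noise yields one false positive on average. The function name is illustrative.

```python
def bonferroni_significant(p_values, alpha=0.05):
    """Flag which tests stay significant after a Bonferroni correction.

    Each p-value is compared with alpha / m (m = number of tests), which keeps the
    probability of any false positive across the whole family at or below alpha.
    """
    m = len(p_values)
    return [p <= alpha / m for p in p_values]


print(bonferroni_significant([0.001, 0.02, 0.04, 0.2]))  # [True, False, False, False]
```

Bonferroni is conservative. When many tests are run, procedures that control the false discovery rate, such as Benjamini-Hochberg, are a common and less strict alternative.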

Q 20. How do you evaluate the accuracy of your pattern recognition methods?

Evaluating the accuracy of pattern recognition methods requires a rigorous approach that goes beyond simply looking at the immediate results. The evaluation strategy depends heavily on the specific application and the type of pattern being detected. However, common methods include:

- Precision and Recall: These metrics assess the performance of a classification model. Precision measures the proportion of correctly identified patterns among all identified patterns, while recall measures the proportion of correctly identified patterns among all actual patterns. A balance between high precision and high recall is usually desired.

- F1-Score: The F1-score is the harmonic mean of precision and recall, combining both into a single metric for a more holistic evaluation.

- Confusion Matrix: A confusion matrix visualizes the performance of a classification model by showing the counts of true positives, true negatives, false positives, and false negatives. This allows for a detailed analysis of the model’s strengths and weaknesses.

- ROC Curves and AUC: Receiver Operating Characteristic (ROC) curves plot the true positive rate against the false positive rate at various threshold settings, and the Area Under the Curve (AUC) summarizes that trade-off in a single number. For heavily imbalanced datasets, precision-recall curves are often more informative, because ROC curves can look optimistic when negatives vastly outnumber positives.

- Holdout Method and Cross-Validation: These techniques ensure the model’s performance is generalizable and not simply overfitted to the training data. They involve splitting the data into training and testing sets or using cross-validation folds to evaluate the model’s accuracy on unseen data.

In a real-world scenario, such as fraud detection, high recall is generally prioritized to minimize the risk of missing fraudulent transactions, even if it might lead to some false positives (which can be further investigated).
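The confusion-matrix metrics above reduce to a few counts. The sketch below handles binary labels and assumes 1 marks the positive class (e.g., fraud). scikit-learn’s `precision_score`, `recall_score`, `f1_score`, and `confusion_matrix` provide library versions.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary labels where 1 is the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return tp, fp, fn, tn


def precision_recall_f1(y_true, y_pred):
    """Precision, recall, and F1 (harmonic mean), returning 0.0 where undefined."""
    tp, fp, fn, _ = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0, 0, 1]
print(confusion_counts(y_true, y_pred))     # (3, 1, 1, 3)
print(precision_recall_f1(y_true, y_pred))  # (0.75, 0.75, 0.75)
```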

Q 21. Describe your approach to dealing with missing data when identifying patterns.

Missing data is a pervasive issue in real-world datasets. Ignoring missing values can lead to biased and inaccurate results. My approach involves several strategies depending on the nature and extent of the missing data:

- Deletion: For datasets with a small amount of missing data, listwise or pairwise deletion might be an option. Listwise deletion removes entire rows with missing values, while pairwise deletion omits cases only for specific analyses involving those variables. However, deletion discards information and can bias the results if the missing data is not Missing Completely at Random (MCAR).

- Imputation: This involves replacing missing values with estimated values. Common methods include mean/median imputation, k-Nearest Neighbors imputation, or more sophisticated techniques like multiple imputation. The choice of method depends on the nature of the data and the pattern recognition algorithm being used.

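- Model-Based Approaches: Some pattern recognition algorithms can handle missing data inherently. For example, some gradient-boosted tree implementations, such as XGBoost and scikit-learn’s HistGradientBoostingClassifier, learn during training which branch to send missing values down.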

- Prediction: In certain situations, the missing data might be predictable using other variables in the dataset. This can be achieved using regression or other predictive models.

The best strategy depends heavily on the context. For example, in a medical dataset, sophisticated imputation methods might be preferred to avoid biasing results. If the amount of missing data is substantial, exploring model-based approaches that handle missing data directly might be more appropriate than imputation.
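Median imputation, the simplest of the methods listed, can be sketched as follows. The sketch assumes rows of numbers with `None` marking missing values. The function name is illustrative.

```python
from statistics import median


def median_impute(rows):
    """Replace None in each column with that column's median of observed values.

    rows: list of equal-length lists of numbers, with None marking missing values.
    Returns a new list of rows and leaves the input unchanged.
    """
    n_cols = len(rows[0])
    medians = []
    for c in range(n_cols):
        observed = [r[c] for r in rows if r[c] is not None]
        if not observed:
            raise ValueError(f"column {c} has no observed values")
        medians.append(median(observed))
    return [[medians[c] if r[c] is None else r[c] for c in range(n_cols)] for r in rows]


rows = [[1.0, 10.0], [None, 20.0], [3.0, None], [5.0, 40.0]]
print(median_impute(rows))  # [[1.0, 10.0], [3.0, 20.0], [3.0, 20.0], [5.0, 40.0]]
```

Single imputation like this understates uncertainty and ignores relationships between variables. For this reason, multiple imputation is often preferred in sensitive analyses such as the medical example.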

Q 22. How do you ensure the reproducibility of your pattern analysis?

Reproducibility in pattern analysis is paramount for ensuring the validity and reliability of our findings. It means that another researcher, using the same data and methods, should arrive at the same conclusions. We achieve this through meticulous documentation and standardized procedures.

- Detailed Data Documentation: We meticulously document the source, pre-processing steps (cleaning, transformation, etc.), and any relevant metadata associated with our datasets. This ensures that the exact data used in the analysis is easily accessible and replicable.

- Version Control for Code: All analysis code is stored in a version control system like Git, allowing us to track changes, revert to previous versions, and share our workflow transparently. This is critical for reproducibility, especially in complex analyses involving multiple scripts and libraries.

- Reproducible Research Environments: We use tools like Docker or virtual machines to create consistent computing environments, so the same software versions, libraries, and dependencies used during the analysis are available for replication. This minimizes discrepancies caused by different software configurations.

- Clear and Detailed Methodological Documentation: We provide detailed descriptions of our analytical methods, including the algorithms employed, parameter settings, and any specific choices made during the process. This ensures transparency and allows others to understand the exact steps taken.

For example, if we’re analyzing stock market trends, we’d specify the exact data source (e.g., Yahoo Finance), the timeframe, the specific indicators used (e.g., RSI, MACD), and the parameters for those indicators. This level of detail makes our analysis verifiable and repeatable.
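Part of this process can be automated by writing a small run manifest alongside each result. The sketch below assumes the analysis reads a single input file. The manifest fields are illustrative. The seed is recorded so that any randomized step (sampling, shuffling, model initialization) can be rerun with it.

```python
import hashlib
import platform
import sys


def file_sha256(path, chunk_size=1 << 20):
    """SHA-256 of a file, read in chunks so large inputs need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def run_manifest(data_path, params, seed):
    """Collect what a rerun needs: input fingerprint, parameters, seed, and versions."""
    return {
        "data_path": str(data_path),
        "data_sha256": file_sha256(data_path),
        "params": params,
        "seed": seed,  # reuse this seed for every randomized step in the analysis
        "python": sys.version.split()[0],
        "platform": platform.platform(),
    }


# Example with a hypothetical input file and parameters:
# manifest = run_manifest("prices.csv", {"rsi_window": 14}, seed=42)
# Saving the manifest as JSON next to the results makes the run auditable.
```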

Q 23. How do you adapt your pattern recognition techniques to different data types?

Adapting pattern recognition techniques to different data types requires a deep understanding of both the data’s characteristics and the strengths and weaknesses of various algorithms. The key is to pre-process the data appropriately before applying the chosen algorithm.

- Numerical Data: For numerical data (e.g., stock prices, sensor readings), techniques like regression analysis, time series analysis, and clustering (k-means, DBSCAN) are often appropriate. We might standardize or normalize the data to improve algorithm performance.

- Categorical Data: With categorical data (e.g., customer demographics, text classifications), we use techniques like decision trees, support vector machines (SVMs), or naive Bayes. We might need to encode categorical variables numerically (e.g., one-hot encoding) before analysis.

- Text Data: Analyzing text data (e.g., social media posts, news articles) often involves natural language processing (NLP) techniques such as topic modeling (LDA), sentiment analysis, or word embeddings (Word2Vec, GloVe). We need to preprocess the text (cleaning, tokenizing, and stemming or lemmatizing) before feeding it into the chosen algorithm.

- Image Data: Image data (e.g., medical scans, satellite imagery) often requires convolutional neural networks (CNNs) for feature extraction and pattern recognition. Pre-processing might involve resizing, normalization, and augmentation.

For example, if we’re analyzing customer purchase patterns, numerical data (purchase amounts, frequency) might be analyzed using regression, while categorical data (customer demographics, product categories) might be analyzed using decision trees. We adapt our approach based on the data type to achieve optimal results.
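The pre-processing steps mentioned for numerical and categorical data can be sketched in plain Python as z-score standardization and one-hot encoding. In practice, scikit-learn’s `StandardScaler` and `OneHotEncoder` or pandas’ `get_dummies` are the usual tools. The functions below are illustrative.

```python
from statistics import mean, pstdev


def standardize(values):
    """z-scores: subtract the mean and divide by the population standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        raise ValueError("cannot standardize a constant column")
    return [(v - mu) / sigma for v in values]


def one_hot(values):
    """Map each category to a 0/1 vector; columns follow sorted category order."""
    categories = sorted(set(values))
    return categories, [[1 if v == c else 0 for c in categories] for v in values]


print(standardize([2, 4, 4, 4, 5, 5, 7, 9]))  # [-1.5, -0.5, -0.5, -0.5, 0.0, 0.0, 1.0, 2.0]
print(one_hot(["red", "blue", "red"]))        # (['blue', 'red'], [[0, 1], [1, 0], [0, 1]])
```

When the data is split for validation, compute the mean, standard deviation, and category list on the training data only. This avoids leaking information from the test set.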

Q 24. Explain how you would use pattern recognition to predict future trends.

Predicting future trends using pattern recognition involves identifying recurring patterns in historical data and extrapolating them into the future. This is inherently uncertain, but sophisticated techniques can significantly improve prediction accuracy.

- Time Series Analysis: For time-dependent data, we utilize time series models like ARIMA, Prophet, or LSTM networks to identify temporal dependencies and forecast future values. These models capture trends, seasonality, and cyclical patterns.

- Machine Learning Models: We can train supervised learning models (e.g., regression, SVM) on historical data to predict future values based on relevant features. The accuracy depends heavily on the quality and quantity of the training data.

- Anomaly Detection: Identifying unusual patterns in the data can be crucial for trend prediction. For example, a sudden spike in sales might indicate a new trend emerging, while a significant drop might signal a problem that needs attention.

- Ensemble Methods: Combining predictions from multiple models (ensemble methods) often leads to more robust and accurate forecasts. This mitigates the risk associated with relying on a single model.

Imagine predicting the sales of a new product. We would analyze historical sales data of similar products, considering factors such as marketing spend, seasonality, and competitor activity. We might use time series analysis to forecast sales based on past trends or train a regression model to predict sales based on these features.
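Here is a minimal sketch of that forecasting idea in plain Python. It deliberately uses two very simple forecasters instead of ARIMA, Prophet, or LSTM models: a seasonal naive rule and a least-squares linear trend. It then averages them as an equal-weight ensemble. The quarterly figures and the season length of 4 are hypothetical, and marketing spend and competitor activity are not modeled.

```python
from statistics import mean

def seasonal_naive(history, season_length, horizon):
    """Forecast each future step with the value from the same position one season earlier."""
    start = len(history) - season_length
    return [history[start + (h % season_length)] for h in range(horizon)]

def linear_trend(history, horizon):
    """Fit y = a + b*t by least squares on t = 0..n-1 and extrapolate the line."""
    n = len(history)
    t_bar, y_bar = (n - 1) / 2, mean(history)
    sty = sum((t - t_bar) * (y - y_bar) for t, y in enumerate(history))
    stt = sum((t - t_bar) ** 2 for t in range(n))
    b = sty / stt
    a = y_bar - b * t_bar
    return [a + b * (n + h) for h in range(horizon)]

def ensemble(*forecasts):
    """Average several forecasts step by step (an equal-weight ensemble)."""
    return [mean(step) for step in zip(*forecasts)]

# Hypothetical quarterly unit sales of comparable products over two years.
quarterly = [120, 150, 130, 200, 132, 165, 141, 220]
sn = seasonal_naive(quarterly, season_length=4, horizon=4)
lt = linear_trend(quarterly, horizon=4)
print([round(v, 1) for v in ensemble(sn, lt)])
```

The seasonal naive forecast repeats last year's quarters, while the trend line keeps climbing. Averaging the two hedges against either one being badly wrong, which is the motivation behind the Ensemble Methods bullet.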

Q 25. Describe a time you failed to identify a crucial pattern. What did you learn from it?

During a project analyzing social media sentiment towards a new product launch, I failed to initially identify a crucial pattern of negative sentiment stemming from a specific user group. My initial analysis focused on overall sentiment, which showed a positive trend. However, a deeper dive, prompted by declining sales figures, revealed a significant segment of users expressing strong negative feedback related to a specific product feature.

The lesson learned was the importance of segmenting the data and exploring it from multiple perspectives. Focusing solely on aggregate metrics can mask crucial patterns within subgroups. Now, I always incorporate techniques for data segmentation and subgroup analysis into my workflow to uncover nuanced patterns that might otherwise remain hidden.
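The lesson can be made concrete with a small sketch. The overall mean sentiment looks positive, but grouping the same scores by segment exposes a strongly negative subgroup. The segment names and scores are hypothetical and are not data from the project described.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical (segment, sentiment score in [-1, 1]) pairs from social media posts.
scored_posts = [
    ("casual", 0.8), ("casual", 0.6), ("casual", 0.7), ("casual", 0.9),
    ("casual", 0.5), ("casual", 0.7), ("power_user", -0.8), ("power_user", -0.6),
]

def mean_by_segment(records):
    """Group scores by segment and return each segment's mean sentiment."""
    groups = defaultdict(list)
    for segment, score in records:
        groups[segment].append(score)
    return {segment: mean(scores) for segment, scores in groups.items()}

overall = mean(score for _, score in scored_posts)
by_segment = mean_by_segment(scored_posts)
print(f"overall: {overall:+.2f}")
for segment, avg in by_segment.items():
    print(f"{segment}: {avg:+.2f}")
```

The overall mean comes out at about +0.35, which hides the fact that power users average about -0.70.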

Q 26. How do you stay up-to-date with the latest advancements in pattern recognition?

Staying current in pattern recognition requires a multi-pronged approach.

- Academic Publications: I regularly read research papers published in top journals and conference proceedings related to machine learning, data mining, and pattern recognition. This keeps me informed about the latest theoretical advancements and algorithmic innovations.

- Industry Conferences and Workshops: Attending conferences and workshops allows me to network with other professionals and learn about practical applications of pattern recognition techniques in various domains. The discussions and presentations offer valuable insights into real-world challenges and solutions.

- Online Courses and Tutorials: Platforms like Coursera, edX, and Udacity offer excellent courses on various aspects of pattern recognition, keeping my skills sharp and expanding my knowledge base. These courses often incorporate cutting-edge techniques and practical examples.

- Open-Source Contributions: Engaging with open-source projects related to machine learning and pattern recognition allows me to stay updated on the latest tools and libraries, and it provides opportunities to contribute to the community.

- Following Key Researchers and Influencers: I actively follow influential researchers and practitioners in the field on social media and through their publications. This provides a steady stream of updates on new developments and trends.

Q 27. Explain the trade-off between accuracy and efficiency in pattern recognition.

There’s a constant trade-off between accuracy and efficiency in pattern recognition. More complex models, while potentially achieving higher accuracy, often come at the cost of increased computational complexity and longer processing times. Conversely, simpler models might be faster but sacrifice some accuracy.

The optimal balance depends on the specific application and its constraints. For example, in real-time applications like fraud detection, speed is crucial, so we might prioritize efficiency even if it means slightly lower accuracy. However, in applications where high accuracy is paramount, such as medical diagnosis, we might tolerate longer processing times to achieve better results.

Techniques like model selection, dimensionality reduction, and algorithm optimization help us navigate this trade-off. We select models and parameters that provide the best compromise between accuracy and efficiency for the given application’s requirements. For example, we might prune decision trees, apply regularization to curb overfitting (L1 regularization also yields sparser, cheaper models), or use approximation algorithms to reduce computation time.
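One way to see the trade-off is to compare two classifiers. An exact 1-nearest-neighbor classifier computes a distance to every training point, while a nearest-centroid classifier computes one distance per class. This is a minimal sketch under stated assumptions. The data set is synthetic: one class fills a disk and the other surrounds it as a ring. It is deliberately chosen so that the cheap model's simplification hurts. On data where each class forms one compact cluster, the centroid model can be just as accurate.

```python
import math
import random

def ring_data(rng, n_per_class):
    """Hypothetical 2-D data: class 'inner' fills a unit disk, class 'outer' a ring of radius 2 to 3."""
    data = []
    for label, r_min, r_max in (("inner", 0.0, 1.0), ("outer", 2.0, 3.0)):
        for _ in range(n_per_class):
            r, theta = rng.uniform(r_min, r_max), rng.uniform(0.0, 2 * math.pi)
            data.append(((r * math.cos(theta), r * math.sin(theta)), label))
    return data

def nearest_neighbor(train, query):
    """Exact 1-NN: one distance computation per training point."""
    return min(train, key=lambda item: math.dist(item[0], query))[1]

def fit_centroids(train):
    """Summarize each class by the mean of its points."""
    grouped = {}
    for point, label in train:
        grouped.setdefault(label, []).append(point)
    return {label: tuple(sum(c) / len(pts) for c in zip(*pts)) for label, pts in grouped.items()}

def nearest_centroid(centroids, query):
    """Cheap classifier: one distance computation per class."""
    return min(centroids, key=lambda label: math.dist(centroids[label], query))

def accuracy(predict, test):
    return sum(predict(point) == label for point, label in test) / len(test)

rng = random.Random(0)
train, test = ring_data(rng, 200), ring_data(rng, 100)
centroids = fit_centroids(train)
nn_acc = accuracy(lambda q: nearest_neighbor(train, q), test)
nc_acc = accuracy(lambda q: nearest_centroid(centroids, q), test)
print(f"1-NN:     accuracy {nn_acc:.2f}, {len(train)} distances per query")
print(f"centroid: accuracy {nc_acc:.2f}, {len(centroids)} distances per query")
```

On this data the exact model should be far more accurate, but it performs 200 times as many distance computations per query (400 versus 2). Which model to choose depends on which constraint the application can least afford to relax.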

Key Topics to Learn for Pattern Following Interviews

- Identifying Patterns: Learn to recognize different types of patterns (numerical, logical, spatial, etc.) and understand their underlying rules.

- Pattern Decomposition: Break down complex patterns into smaller, manageable components to simplify analysis and solution finding.

- Algorithmic Thinking: Develop the ability to translate identified patterns into algorithms or logical steps for solving similar problems.

- Abstract Reasoning: Practice abstract thinking to identify patterns beyond the obvious, focusing on underlying relationships and structures.

- Pattern Generalization: Learn to generalize observed patterns to predict future elements or outcomes within the sequence.

- Practical Applications: Explore real-world examples of pattern following in data analysis, software development, problem-solving, and other fields relevant to your target roles.

- Problem-Solving Strategies: Develop a systematic approach to tackling pattern-following problems, including techniques like working backwards, elimination, and hypothesis testing.

- Coding Practice (If Applicable): If the role involves coding, practice implementing pattern-recognition algorithms in your preferred language.
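Pattern Generalization and Algorithmic Thinking are often tested with "what comes next?" number sequences. This is a minimal sketch that assumes integer sequences. It first tries the method of finite differences, which detects sequences generated by a polynomial such as squares or cubes. If that fails, it falls back to a constant-ratio (geometric) check. The function names are illustrative and do not come from any library.

```python
from fractions import Fraction

def next_by_differences(seq):
    """Predict the next term of a polynomial sequence via the method of finite differences.

    Keeps differencing until a row is constant, then rebuilds the next term upward.
    Returns None if terms run out before a constant row (with at least two entries) appears.
    """
    rows = [list(seq)]
    while len(rows[-1]) > 1 and len(set(rows[-1])) > 1:
        prev = rows[-1]
        rows.append([b - a for a, b in zip(prev, prev[1:])])
    if len(rows[-1]) < 2 or len(set(rows[-1])) != 1:
        return None
    nxt = rows[-1][-1]  # the constant row simply repeats
    for row in reversed(rows[:-1]):
        nxt = row[-1] + nxt  # each row above grows by the next value of the row below
    return nxt

def next_by_ratio(seq):
    """Predict the next term if consecutive terms share one exact ratio (geometric sequence)."""
    if len(seq) < 3 or 0 in seq[:-1]:
        return None
    ratios = {Fraction(b, a) for a, b in zip(seq, seq[1:])}
    return seq[-1] * ratios.pop() if len(ratios) == 1 else None

def predict_next(seq):
    """Try the polynomial rule first, then the geometric rule; None means no pattern found."""
    guess = next_by_differences(seq)
    return guess if guess is not None else next_by_ratio(seq)

print(predict_next([1, 4, 9, 16, 25]))  # 36 (squares)
print(predict_next([1, 2, 4, 8]))       # 16 (powers of two)
print(predict_next([2, 3, 5, 7, 11]))   # None (primes fit neither rule)
```

Returning None instead of guessing reflects the hypothesis-testing strategy above. A rule is only accepted once the data confirm it.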

Next Steps

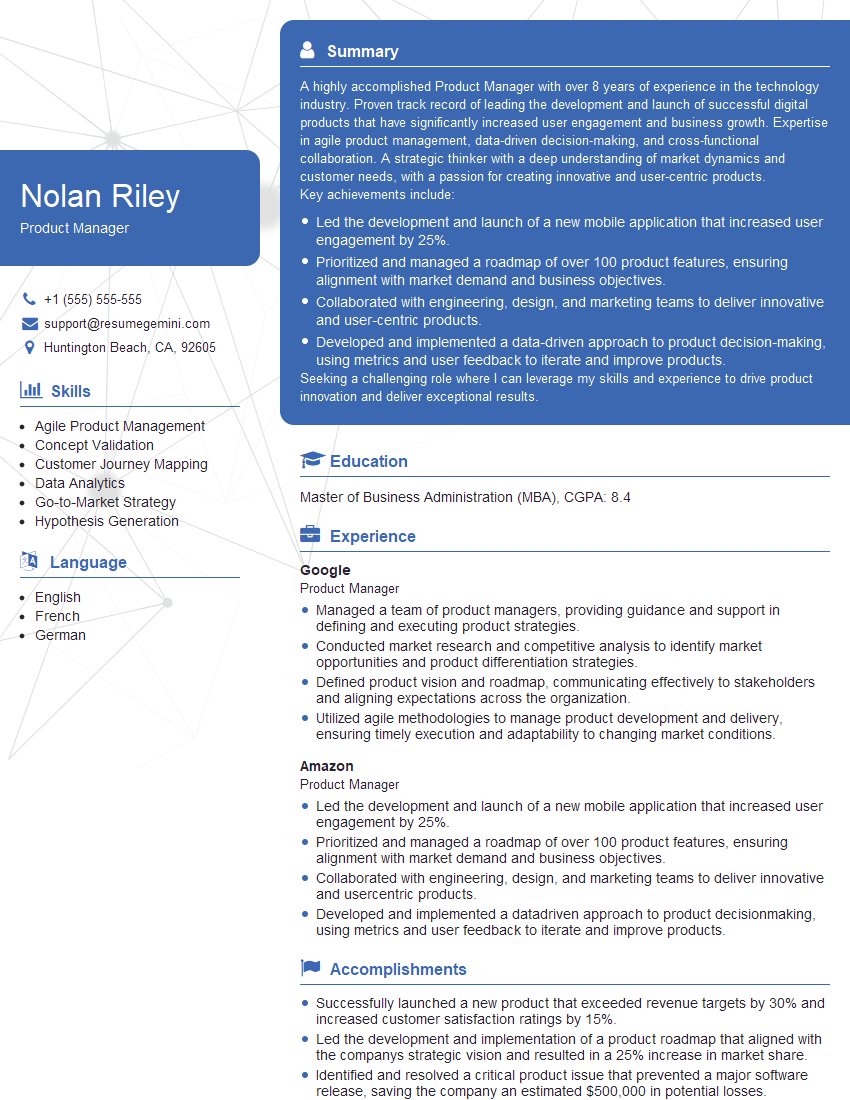

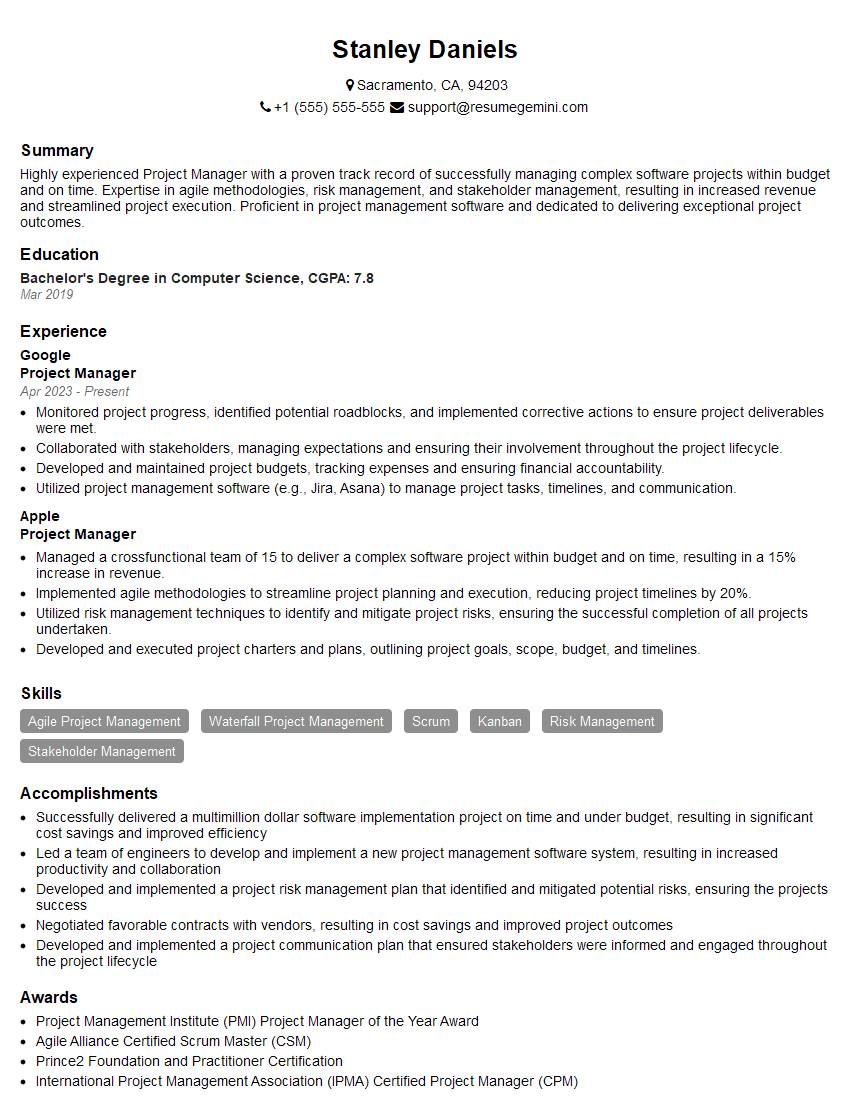

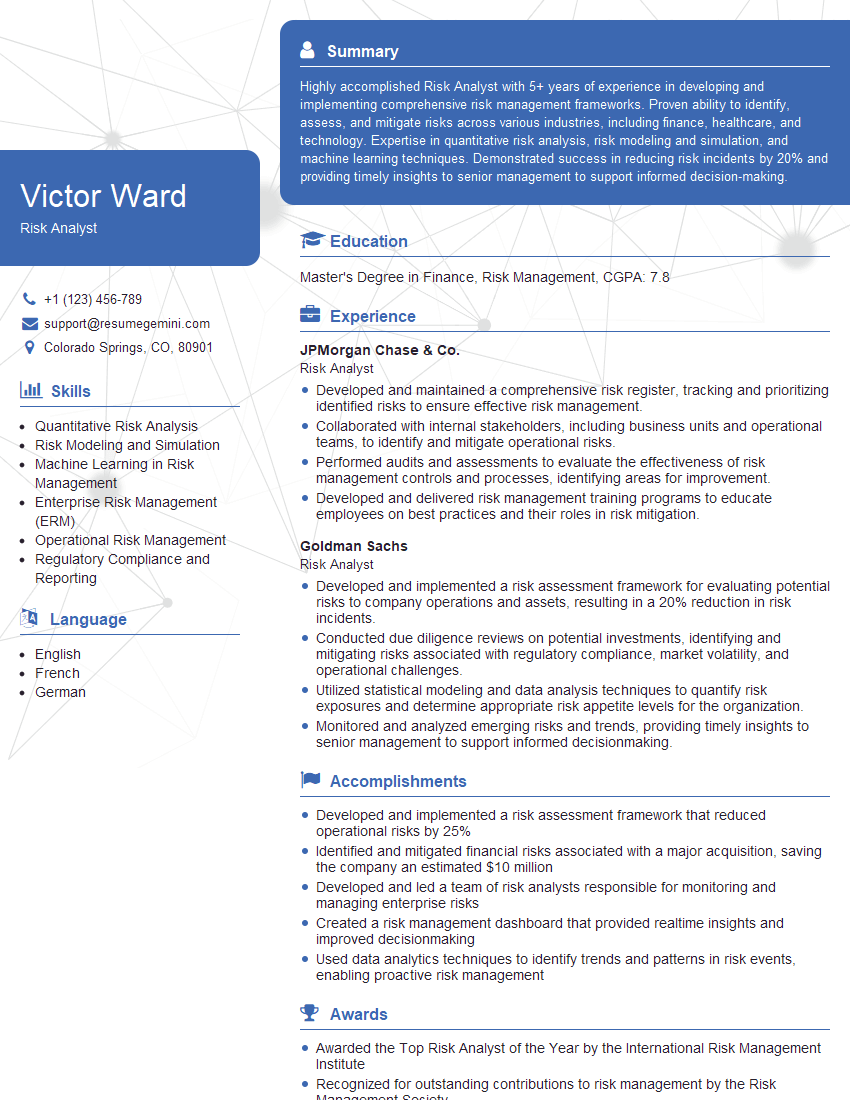

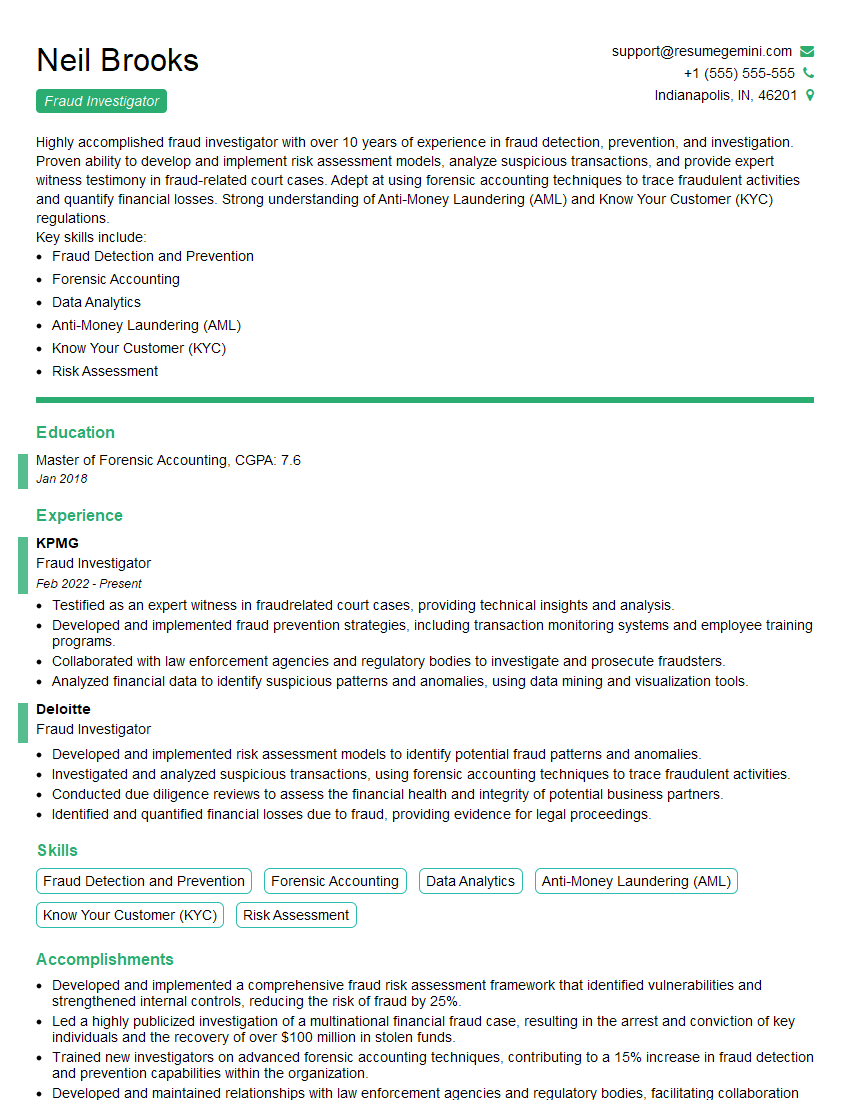

Mastering pattern following is crucial for success in many analytical and technical roles because it demonstrates your problem-solving skills and ability to think critically. A strong understanding of patterns enhances your ability to identify trends, make predictions, and develop efficient solutions. To boost your job prospects, focus on building an ATS-friendly resume that highlights your pattern-following abilities and relevant skills. ResumeGemini is a trusted resource to help you craft a professional and impactful resume that gets noticed. We provide examples of resumes tailored to highlight Pattern Following skills; take a look at our sample resumes to get started!
