Preparation is the key to success in any interview. In this post, we’ll explore crucial DEM Extraction interview questions and equip you with strategies to craft impactful answers. Whether you’re a beginner or a pro, these tips will elevate your preparation.

Questions Asked in DEM Extraction Interview

Q 1. Explain the difference between a DEM and a DTM.

While both DEMs (Digital Elevation Models) and DTMs (Digital Terrain Models) represent the Earth’s surface, they differ in what they include. Think of it like this: in the sense used here, a DEM is a complete picture that includes everything: buildings, trees, and the ground itself. A DTM, however, is a more refined representation, showing only the bare earth. It’s like removing all the ‘stuff’ to reveal the underlying terrain.

Specifically, a DEM in this sense includes all surface features, while a DTM represents only the bare-earth surface, excluding man-made features and vegetation. Be aware that terminology varies. Many agencies and software packages call the all-features model a DSM (Digital Surface Model) and use DEM either as a generic term or as a synonym for the bare-earth model, so it is worth confirming which definition a dataset follows. Extracting a DTM from a surface model typically involves classification and filtering to remove these non-terrain elements.

Q 2. Describe various methods for DEM extraction from LiDAR data.

LiDAR (Light Detection and Ranging) data provides an excellent source for DEM extraction. Several methods are employed, each with its strengths and weaknesses:

- Ground Point Classification: This involves identifying and classifying points in the LiDAR point cloud that represent the bare earth. Algorithms analyze point density, elevation, and neighborhood characteristics to separate ground points from those representing vegetation or buildings. This is a very common and effective method.

- Triangulated Irregular Networks (TINs): Once ground points are classified, a TIN is created by connecting these points to form a surface. This method is useful for representing complex terrain but can be computationally intensive.

- Grid-based Interpolation: This involves interpolating values onto a regular grid from the classified ground points. Several interpolation methods exist, including inverse distance weighting (IDW), kriging, and spline interpolation. Each method has different properties that make it suitable for various applications and datasets.

- Multi-resolution segmentation: This method uses image segmentation to identify coherent surface features, which helps in ground point classification, especially in complex environments.

The choice of method depends on factors such as the density and quality of the LiDAR data, the complexity of the terrain, and the desired accuracy of the DEM.
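To make ground point classification concrete, here is a deliberately simplified sketch: it bins points into a grid and labels as ground those within a height tolerance of the lowest point in their cell. The function name and the `cell_size` and `height_tol` parameters are illustrative assumptions, not the method of any particular package. Production classifiers are far more robust; for example, a cell containing only canopy returns would wrongly be labelled ground here.

```python
import math
from collections import defaultdict

def classify_ground(points, cell_size=1.0, height_tol=0.5):
    """Simplified lowest-point-per-cell ground filter (illustrative only).

    points: list of (x, y, z) tuples.
    Returns a list of booleans, True where the point is labelled ground.
    """
    lowest = defaultdict(lambda: math.inf)  # lowest z seen in each grid cell
    keys = []
    for x, y, z in points:
        key = (math.floor(x / cell_size), math.floor(y / cell_size))
        keys.append(key)
        lowest[key] = min(lowest[key], z)
    # A point counts as ground if it lies within height_tol of its cell minimum.
    return [z - lowest[key] <= height_tol
            for (_, _, z), key in zip(points, keys)]

pts = [(0.2, 0.3, 100.0), (0.7, 0.1, 100.3), (0.5, 0.5, 112.0),
       (1.5, 0.5, 101.0), (1.2, 0.8, 108.0)]
print(classify_ground(pts))  # [True, True, False, True, False]
```

The classified ground points would then feed the TIN or grid-based interpolation step.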

Q 3. What are the common data formats used for DEMs?

DEMs are typically stored in various formats, each with its advantages and disadvantages. Common formats include:

- GeoTIFF (.tif): A widely used, georeferenced format supporting various compression schemes and data types.

- ASCII Grid (.asc): A simple text-based format specifying grid dimensions, cell size, and elevation values.

- ERDAS Imagine (.img): A proprietary format used by ERDAS Imagine software, supporting various raster data types.

- HDF5 (.h5): A flexible, self-describing file format that can store large datasets efficiently.

The choice of format often depends on the software used for processing and analysis, and the size and complexity of the DEM. GeoTIFF is frequently preferred due to its broad compatibility and support for georeferencing.
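To show how simple the ASCII Grid layout is, here is a minimal reader sketch. It assumes the common ESRI ASCII header keys (`ncols`, `nrows`, `xllcorner`/`xllcenter`, `yllcorner`/`yllcenter`, `cellsize`, `NODATA_value`) followed by rows of values from north to south. The function name is hypothetical; in practice a GIS library would read these files for you.

```python
HEADER_KEYS = {"ncols", "nrows", "xllcorner", "yllcorner",
               "xllcenter", "yllcenter", "cellsize", "nodata_value"}

def read_ascii_grid(text):
    """Parse an ESRI ASCII grid into (header dict, list of rows).

    Rows are returned north to south, as stored in the file; cells equal
    to NODATA_value become None.
    """
    header, values = {}, []
    for line in text.strip().splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0].lower() in HEADER_KEYS:
            header[parts[0].lower()] = float(parts[1])
        else:
            values.extend(float(v) for v in parts)
    ncols, nrows = int(header["ncols"]), int(header["nrows"])
    if len(values) != ncols * nrows:
        raise ValueError("cell count does not match ncols * nrows")
    nodata = header.get("nodata_value")  # None if the header omits it
    return header, [[None if v == nodata else v
                     for v in values[r * ncols:(r + 1) * ncols]]
                    for r in range(nrows)]

sample = """ncols 3
nrows 2
xllcorner 500000
yllcorner 4100000
cellsize 30
NODATA_value -9999
10 11 12
13 -9999 15"""
header, grid = read_ascii_grid(sample)
print(header["cellsize"], grid)  # 30.0 [[10.0, 11.0, 12.0], [13.0, None, 15.0]]
```

The plain-text layout makes the format easy to inspect and exchange, but it is much larger and slower to read than a compressed GeoTIFF.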

Q 4. How do you handle data gaps or inconsistencies in DEM data?

Data gaps and inconsistencies are common issues in DEM data, often arising from limitations in data acquisition or processing. Several techniques address these issues:

- Interpolation: Filling gaps using neighboring data points. Methods like IDW, kriging, or spline interpolation can be used, each with varying degrees of smoothness and accuracy. The choice depends on the nature and extent of the gap.

- Gap Filling using other data sources: If available, data from other sources like aerial photography or previously existing DEMs can be used to fill gaps or improve inconsistencies.

- Filtering: Applying spatial filters to smooth out inconsistencies or noise in the data. This can help reduce artifacts and improve the overall quality of the DEM. However, over-filtering can lead to loss of detail.

Careful consideration of the chosen method is crucial, as improper gap filling can introduce errors or artifacts that affect downstream analyses. Often, a combination of techniques yields the best results.
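As a minimal illustration of interpolation-based gap filling, the sketch below replaces each no-data cell (`None`) with the mean of its valid 8-neighbours and repeats until the gap closes from its edges inward. This iterative neighbour averaging is a simple stand-in chosen for brevity, not one of the named methods above; for large gaps, IDW, kriging or splines would normally be preferred. The function name and `max_iter` are hypothetical.

```python
def fill_gaps(grid, max_iter=100):
    """Fill None cells with the mean of their valid 8-neighbours, repeating
    so that larger gaps are filled from the edges inward."""
    g = [row[:] for row in grid]  # work on a copy
    nrows, ncols = len(g), len(g[0])
    for _ in range(max_iter):
        updates = {}
        for r in range(nrows):
            for c in range(ncols):
                if g[r][c] is not None:
                    continue
                vals = [g[rr][cc]
                        for rr in range(max(r - 1, 0), min(r + 2, nrows))
                        for cc in range(max(c - 1, 0), min(c + 2, ncols))
                        if g[rr][cc] is not None]
                if vals:
                    updates[(r, c)] = sum(vals) / len(vals)
        if not updates:
            break  # everything filled, or no valid data left to spread
        for (r, c), value in updates.items():  # apply after the full pass
            g[r][c] = value
    return g

dem = [[10.0, 11.0, 12.0],
       [11.0, None, 13.0],
       [12.0, 13.0, None]]
filled = fill_gaps(dem)
print(filled[1][1], filled[2][2])
```

Updates are applied only after each full pass, so the order in which cells are visited does not affect the result.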

Q 5. Explain the concept of spatial resolution in DEMs and its implications.

Spatial resolution refers to the size of the individual cells or pixels in a DEM. It dictates the level of detail captured in the model. A high-resolution DEM (e.g., 1-meter resolution) shows fine details of the terrain, while a low-resolution DEM (e.g., 30-meter resolution) provides a more generalized representation. Think of zooming in on a map – higher resolution means more detail is visible.

Implications of spatial resolution include:

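- Accuracy: Higher resolution captures finer detail, but it does not by itself guarantee greater vertical accuracy, which depends on the quality of the source data. It also requires more storage space and processing power.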

- Applications: High-resolution DEMs are necessary for applications requiring precise elevation measurements, such as hydrological modeling or landslide analysis. Low-resolution DEMs are sufficient for broader scale applications such as regional planning.

- Computational Cost: Processing and analyzing high-resolution DEMs is computationally more expensive compared to low-resolution ones.
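A quick back-of-the-envelope calculation shows why cost grows so fast: halving the cell size quadruples the number of cells. This sketch assumes a hypothetical 100 km × 100 km study area stored as uncompressed 32-bit floats (4 bytes per cell).

```python
import math

def dem_size(width_m, height_m, resolution_m, bytes_per_cell=4):
    """Return (number of cells, uncompressed storage in bytes) for a DEM grid."""
    ncols = math.ceil(width_m / resolution_m)
    nrows = math.ceil(height_m / resolution_m)
    cells = ncols * nrows
    return cells, cells * bytes_per_cell

for res in (30, 1):
    cells, nbytes = dem_size(100_000, 100_000, res)
    print(f"{res:>2} m: {cells:,} cells, {nbytes / 1e9:.3f} GB")
# 30 m: 11,115,556 cells, 0.044 GB
#  1 m: 10,000,000,000 cells, 40.000 GB
```

Going from 30 m to 1 m multiplies the cell count by roughly 900, which is why high-resolution work usually needs tiling and compression.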

Q 6. What are the common errors associated with DEM extraction and how can they be mitigated?

Several errors can occur during DEM extraction. Understanding these errors and their mitigation is crucial for producing high-quality DEMs:

- Systematic Errors: These are consistent biases across the entire DEM, potentially stemming from sensor calibration issues or systematic errors in the georeferencing process. Calibration checks and rigorous georeferencing are vital to minimizing these errors.

- Random Errors: These are unpredictable variations in elevation data, often due to noise in the source data or limitations in interpolation techniques. Filtering techniques and careful selection of interpolation methods can mitigate these.

- Geometric Errors: These result from inaccuracies in positioning data and can lead to distortions in the DEM. Careful georeferencing and consideration of positional uncertainties are critical.

- Classification Errors: Incorrect classification of ground points from LiDAR data can significantly affect the accuracy of the DEM. Employing advanced classification algorithms and manual quality control checks can reduce these.

A rigorous quality assurance process involving visual inspection, statistical analysis, and comparison with other data sources is essential to detect and mitigate these errors.

Q 7. Describe the process of orthorectification and its importance in DEM creation.

Orthorectification is the process of geometrically correcting a raster image to remove distortions caused by sensor tilt, lens geometry, terrain relief and, over large areas, Earth’s curvature, so that the image has a uniform, map-like scale. Imagine taking a photo from an airplane: it will be distorted, with tall features appearing to lean away from the center of the image. Orthorectification removes this distortion, making the image geometrically accurate. It’s like straightening a warped photograph so that all the features appear in their correct spatial locations.

The process involves:

- Determining sensor parameters: Gathering information about the sensor’s position, orientation, and internal geometry during data acquisition.

- Applying a Digital Elevation Model (DEM): The DEM is used to correct for relief displacement, ensuring that features are correctly positioned relative to their true elevations.

- Geometric Transformations: Mathematical transformations are applied to correct for distortions, resulting in an orthorectified image (orthophoto).

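Orthorectification matters in DEM workflows because the two products are tightly coupled. The sensor orientation established for orthorectification is the same model used to extract elevations photogrammetrically, and an orthoimage can only be positioned correctly if the DEM beneath it is accurate. Getting this right ensures that both the elevation data and the imagery are spatially accurate, making them suitable for measurements, analysis, and integration with other geospatial data.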
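The size of the relief displacement that orthorectification removes can be estimated for a truly vertical aerial photograph with the classic relation d = r·h/H. Here r is the radial distance on the photo from the nadir point to the top of the object, h is the object's height, and H is the flying height above the base of the object. This is a sketch under that vertical-photo assumption with hypothetical values; rigorous orthorectification uses the full sensor model and a DEM instead.

```python
def relief_displacement(r_photo_mm, object_height_m, flying_height_m):
    """Radial relief displacement d = r * h / H on a vertical aerial photo.

    r_photo_mm: radial distance from the nadir point to the top of the
    object's image, in mm. The result is in the same units as r (mm).
    """
    if flying_height_m <= 0:
        raise ValueError("flying height must be positive")
    return r_photo_mm * object_height_m / flying_height_m

# A 50 m building imaged 80 mm from the nadir point, flown 1,000 m above its base:
print(relief_displacement(80.0, 50.0, 1000.0))  # 4.0 (mm on the photo)
```

Because displacement grows with both height and distance from nadir, tall features near the edges of an image shift the most. This is why an accurate DEM is essential for good orthophotos.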

Q 8. What are the different interpolation techniques used in DEM creation, and what are their strengths and weaknesses?

DEM creation relies heavily on interpolation techniques to estimate elevation values at unsampled locations from a set of known points. Several methods exist, each with its strengths and weaknesses:

- Nearest Neighbor: This is the simplest method, assigning the elevation of the nearest known point to the unsampled location. It’s computationally fast but produces blocky, discontinuous surfaces with noticeable artifacts. Think of it like assigning a color to a pixel based on the closest colored pixel in a picture – simple but can be rough.

- Bilinear Interpolation: This method, typically applied to gridded data, computes a distance-weighted average of the four surrounding grid cells to estimate the elevation. It creates smoother surfaces than nearest neighbor but can still introduce some artifacts, particularly in areas with high elevation variation. Imagine taking the average of four neighboring pixels in a photo to determine the color of the middle pixel.

- Inverse Distance Weighting (IDW): This technique gives more weight to closer points when calculating the average. The power parameter controls how quickly influence falls off with distance. Higher powers emphasize the nearest points, preserving local detail but producing ‘bull’s-eye’ patterns around samples. Lower powers spread influence more evenly and create smoother surfaces. It’s like gravity: closer objects have a stronger influence.

- Spline Interpolation: This method fits a smooth curve (spline) through the known points. Various types of splines exist (cubic, thin-plate), offering flexibility in controlling smoothness. It produces visually pleasing results but can be computationally more expensive and prone to extrapolation artifacts beyond the known points, causing unrealistic elevation estimates in those areas. Spline interpolation is like drawing a smooth curve through a scatter plot – you create a continuous representation of the data.

- Kriging: A geostatistical method that models spatial autocorrelation with a variogram, making it particularly suitable for areas with varying data density and spatial dependencies in elevation. It often produces accurate surfaces and also provides an estimate of prediction uncertainty at each location, but it requires significant computational resources and expert knowledge of geostatistical parameters.

The choice of method depends on factors like data density, desired accuracy, computational resources, and the nature of the terrain. For instance, a simple method like nearest neighbor might suffice for a preliminary visualization, while Kriging may be needed for high-precision DEMs for hydrological modeling.
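Two of these methods are easy to sketch directly. Below, `idw` estimates a value from scattered samples, and `bilinear` interpolates inside one grid cell from its four corners. The function names, the sample points, and the corner values are hypothetical. Running IDW with increasing power shows the effect described above: the estimate moves toward the nearest sample.

```python
import math

def idw(x, y, samples, power=2.0):
    """Inverse distance weighted estimate at (x, y) from (sx, sy, sz) samples."""
    num = den = 0.0
    for sx, sy, sz in samples:
        d = math.hypot(x - sx, y - sy)
        if d == 0.0:
            return sz  # IDW is exact: a sample location returns its own value
        w = 1.0 / d ** power
        num += w * sz
        den += w
    return num / den

def bilinear(u, v, z00, z10, z01, z11):
    """Bilinear interpolation inside one grid cell.

    u, v in [0, 1] are fractional offsets along x and y; z00..z11 are the
    corner elevations at (0,0), (1,0), (0,1) and (1,1).
    """
    return (z00 * (1 - u) * (1 - v) + z10 * u * (1 - v)
            + z01 * (1 - u) * v + z11 * u * v)

samples = [(0.0, 0.0, 100.0), (10.0, 0.0, 120.0)]
for p in (1, 2, 4):
    print(p, round(idw(2.0, 0.0, samples, power=p), 2))
# 1 104.0
# 2 101.18
# 4 100.08
print(bilinear(0.5, 0.5, 10.0, 20.0, 30.0, 40.0))  # 25.0
```

The point at x = 2 is four times closer to the 100 m sample than to the 120 m one, so raising the power quickly pulls the estimate toward 100 m.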

Q 9. How do you assess the accuracy of a DEM?

DEM accuracy assessment involves comparing the generated DEM to a reference dataset of known high accuracy. This can be done through various methods:

- Root Mean Square Error (RMSE): The square root of the mean of the squared differences between the DEM and the reference data. Because errors are squared, it is sensitive to large deviations. A lower RMSE indicates higher accuracy.

- Mean Absolute Error (MAE): Similar to RMSE, but less sensitive to outliers. It represents the average absolute difference between the DEM and reference data.

- Visual Inspection: While subjective, visually comparing the DEM with aerial imagery, topographic maps, or other known terrain features helps identify potential errors like artifacts or unrealistic elevation values.

- Statistical Metrics: Analysis of the elevation distribution, histograms, and autocorrelation can reveal systematic errors or biases in the DEM.

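- Comparison with GCPs or checkpoints: Comparing DEM elevations at surveyed point locations to their measured elevations provides direct verification of accuracy. Ideally, these checkpoints are independent of any GCPs used to georeference the data; otherwise, the assessment will be optimistic. High deviations highlight areas that need further investigation.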

The selection of an appropriate accuracy assessment technique depends on the application and the reference data available. For instance, comparing the DEM to a high-resolution LiDAR DEM provides a more rigorous assessment than a simple visual comparison.
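The statistical measures above take only a few lines to compute once DEM values have been sampled at the reference locations. This sketch also reports the mean error, which reveals a systematic bias that RMSE and MAE alone do not show. The function name and the sample elevations are hypothetical.

```python
import math

def accuracy_stats(dem_values, ref_values):
    """Return mean error (bias), MAE and RMSE of DEM vs reference elevations."""
    if len(dem_values) != len(ref_values) or not dem_values:
        raise ValueError("need equal-length, non-empty inputs")
    errors = [d - r for d, r in zip(dem_values, ref_values)]
    n = len(errors)
    mean_error = sum(errors) / n                      # systematic bias
    mae = sum(abs(e) for e in errors) / n             # average error magnitude
    rmse = math.sqrt(sum(e * e for e in errors) / n)  # penalises large errors
    return mean_error, mae, rmse

dem = [101.2, 99.6, 100.4, 99.2]   # DEM sampled at checkpoint locations
ref = [101.0, 100.0, 100.0, 99.0]  # surveyed checkpoint elevations
me, mae, rmse = accuracy_stats(dem, ref)
print(f"ME={me:.3f}  MAE={mae:.3f}  RMSE={rmse:.3f}")  # ME=0.100  MAE=0.300  RMSE=0.316
```

RMSE is always at least as large as MAE. A large gap between the two suggests that a few big errors dominate.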

Q 10. Explain the role of ground control points (GCPs) in DEM generation.

Ground Control Points (GCPs) are points with known real-world coordinates (e.g., latitude, longitude, and elevation). They are crucial for georeferencing and accuracy assessment in DEM generation. GCPs act as anchor points, providing a link between the image or point cloud data used for DEM creation and the real-world coordinate system. Without GCPs, or accurate direct georeferencing from onboard GNSS/IMU as is common for LiDAR, the DEM would be unreferenced or poorly referenced, rendering it useless for many applications.

During DEM generation, the software uses the GCPs to transform and register the image or point cloud data, correcting for distortions and aligning it to the earth’s surface. The accuracy of the DEM is directly influenced by the number, distribution, and accuracy of the GCPs. More GCPs, especially those strategically spread across the area, improve the accuracy of the geometric transformation and the final DEM. Poorly chosen or inaccurately measured GCPs can introduce significant errors. Imagine building a house without proper foundation markers – you risk constructing a wonky structure.
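To illustrate how GCPs drive a geometric transformation, here is a simplified sketch that fits a first-order (affine) transform from pixel (column, row) to map (X, Y) by least squares and reports the residual RMSE at the GCPs. The affine model, the function names, and the GCP values are assumptions for illustration only. Real photogrammetric and LiDAR workflows use rigorous sensor models or bundle adjustment. An affine fit needs at least three non-collinear GCPs, and extra, well-spread points let the residuals expose a badly measured GCP.

```python
import math

def _solve3(a, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    m = [row[:] + [bv] for row, bv in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        if abs(m[col][col]) < 1e-12:
            raise ValueError("GCPs are collinear or too few")
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            for k in range(col, 4):
                m[r][k] -= f * m[col][k]
    x = [0.0] * 3
    for r in range(2, -1, -1):
        x[r] = (m[r][3] - sum(m[r][k] * x[k] for k in range(r + 1, 3))) / m[r][r]
    return x

def fit_affine(gcps):
    """Least-squares affine fit from GCPs given as ((col, row), (X, Y)).

    Returns (cx, cy) with X = cx[0] + cx[1]*col + cx[2]*row, likewise for Y.
    """
    ata = [[0.0] * 3 for _ in range(3)]  # normal equations: A^T A p = A^T t
    atx, aty = [0.0] * 3, [0.0] * 3
    for (col, row), (X, Y) in gcps:
        basis = (1.0, col, row)
        for i in range(3):
            atx[i] += basis[i] * X
            aty[i] += basis[i] * Y
            for j in range(3):
                ata[i][j] += basis[i] * basis[j]
    return _solve3(ata, atx), _solve3(ata, aty)

def residual_rmse(gcps, cx, cy):
    """Planimetric RMSE of the fitted transform at the GCPs."""
    sq = 0.0
    for (col, row), (X, Y) in gcps:
        px = cx[0] + cx[1] * col + cx[2] * row
        py = cy[0] + cy[1] * col + cy[2] * row
        sq += (px - X) ** 2 + (py - Y) ** 2
    return math.sqrt(sq / len(gcps))

# Hypothetical GCPs consistent with 30 m pixels and an origin at (500000, 4100000).
gcps = [((0, 0), (500000.0, 4100000.0)), ((100, 0), (503000.0, 4100000.0)),
        ((0, 100), (500000.0, 4097000.0)), ((100, 100), (503000.0, 4097000.0)),
        ((50, 20), (501500.0, 4099400.0))]
cx, cy = fit_affine(gcps)
print(cx, cy, residual_rmse(gcps, cx, cy))
```

With error-free GCPs like these, the fit recovers 30 m pixel spacing and a near-zero residual. A mismeasured GCP would show up as a larger residual RMSE.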

Q 11. Discuss the applications of DEMs in various fields (e.g., hydrology, urban planning).

DEMs are versatile tools with applications across various fields:

- Hydrology: DEMs are fundamental for hydrological modeling, simulating water flow, predicting flood risks, and managing watersheds. They are used to delineate drainage basins, determine flow paths, and estimate runoff volumes.

- Urban Planning: DEMs help in urban planning by providing essential information on terrain slopes, aspects, and elevation changes. This data is crucial for infrastructure development, land-use planning, and assessing urban development impact on the environment.

- Environmental Management: DEMs are used for habitat analysis, environmental impact assessments, and species distribution modeling. They are particularly useful for studying the effects of topography on ecological processes.

- Agriculture: Precision agriculture benefits from DEMs, allowing for variable-rate application of fertilizers, pesticides, and irrigation, optimizing resource use and improving yields.

- Transportation Planning: DEMs assist in route planning, road design, and transportation network analysis. They help determine optimal road alignments, minimizing construction costs and environmental impact.

- Geology: In geology, DEMs are utilized for creating geological maps, analyzing landslides, understanding terrain evolution, and modeling geological processes.

In essence, any application requiring information about the earth’s surface and its variations benefits from the use of DEMs. They are a fundamental layer of geospatial data that enables a range of spatial analyses and applications.
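As one concrete example of how a DEM is used to determine flow paths in hydrology, here is a minimal sketch of the D8 method, which sends each cell's flow to the neighbour with the steepest descent among its eight neighbours. The choice of D8, the function name, and the (row, column) offset output are assumptions for illustration. Operational hydrological tools usually fill sinks and resolve flat areas first, which this sketch does not do.

```python
import math

def d8_flow_direction(grid, cell_size=1.0):
    """Return, for each cell, the (dr, dc) offset of its steepest downslope
    neighbour, or None where no neighbour is lower (pits, flats, outlets)."""
    nrows, ncols = len(grid), len(grid[0])
    out = [[None] * ncols for _ in range(nrows)]
    for r in range(nrows):
        for c in range(ncols):
            best, best_slope = None, 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if (dr, dc) == (0, 0) or not (0 <= rr < nrows and 0 <= cc < ncols):
                        continue
                    # Diagonal neighbours are sqrt(2) cell widths away.
                    drop = (grid[r][c] - grid[rr][cc]) / (cell_size * math.hypot(dr, dc))
                    if drop > best_slope:
                        best, best_slope = (dr, dc), drop
            out[r][c] = best
    return out

dem = [[9.0, 8.0, 7.0],
       [8.0, 5.0, 4.0],
       [7.0, 4.0, 1.0]]
directions = d8_flow_direction(dem)
print(directions[1][1], directions[2][2])  # (1, 1) None
```

Chaining these directions from cell to cell traces flow paths. Counting how many cells drain through each cell gives flow accumulation, the basis for delineating drainage basins.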

Q 12. How do you handle large DEM datasets efficiently?

Handling large DEM datasets efficiently requires strategies to manage both data storage and processing:

- Data Compression: Using lossless compression techniques (e.g., GeoTIFF with appropriate compression) reduces the storage space required without losing information.

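- Data Tiling: Dividing the DEM into smaller tiles keeps each chunk within memory limits and allows tiles to be processed in parallel, which can significantly reduce processing time. For neighborhood operations such as slope calculation or filtering, tiles should overlap by a few cells so that results along tile edges are not distorted.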

- Cloud Computing: Leveraging cloud-based platforms like AWS or Google Cloud provides scalability and access to powerful computing resources for processing and analyzing massive DEM datasets.

- Data Filtering and Downsampling: Reducing the spatial resolution of the DEM by downsampling or applying appropriate filters can drastically reduce the data size while preserving relevant information for specific tasks. This sacrifices some detail but increases processing speed.

- Efficient Data Structures: Using optimized data structures and algorithms (e.g., quadtrees, octrees) can improve data access speeds during processing.

The optimal strategy depends on the specific DEM size, processing requirements, and available resources. A combination of these techniques is often employed to maximize efficiency.
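Tiling starts with working out the read windows. This sketch yields (row offset, column offset, height, width) windows that cover a raster, with an optional overlap buffer for neighbourhood operations. The function name and parameters are hypothetical. Each window could then be read and handed to a worker process, with the buffer cropped off before the tile's core cells are written back.

```python
def tile_windows(nrows, ncols, tile=1024, overlap=0):
    """Yield (row_off, col_off, height, width) windows that cover a raster.

    Each tile's core is tile x tile cells; overlap adds a buffer of that many
    cells on every side (clipped at the raster edge).
    """
    for r0 in range(0, nrows, tile):
        for c0 in range(0, ncols, tile):
            r_start, c_start = max(r0 - overlap, 0), max(c0 - overlap, 0)
            r_end = min(r0 + tile + overlap, nrows)
            c_end = min(c0 + tile + overlap, ncols)
            yield r_start, c_start, r_end - r_start, c_end - c_start

print(list(tile_windows(5, 5, tile=3)))
# [(0, 0, 3, 3), (0, 3, 3, 2), (3, 0, 2, 3), (3, 3, 2, 2)]
print(list(tile_windows(5, 5, tile=3, overlap=1)))
```

A one-cell overlap is enough for 3×3 operations such as slope or a median filter. Larger kernels need a correspondingly wider buffer.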

Q 13. What software packages are you familiar with for DEM processing and analysis?

My experience includes working with several software packages for DEM processing and analysis:

- ArcGIS: A comprehensive GIS software suite with robust tools for DEM creation, processing, and analysis, including spatial interpolation, surface analysis, and hydrological modeling.

- QGIS: A free and open-source GIS software that provides a wide range of DEM processing capabilities, comparable to commercial software but with a steeper learning curve.

- Global Mapper: Specialized software offering extensive tools for DEM creation, editing, and analysis, suitable for both novice and expert users.

- ERDAS Imagine: A powerful image processing and analysis package with strong capabilities in DEM generation and management.

- ENVI: Similar to ERDAS Imagine, ENVI is a comprehensive remote sensing software with advanced tools for DEM creation from various data sources like LiDAR and satellite imagery.

- MATLAB: A high-level programming language that can be used with specialized toolboxes for advanced DEM processing and analysis, offering excellent control over the process but requiring more programming skills.

The choice of software depends on the specific task, the user’s skill level, and the availability of licenses.

Q 14. Explain the concept of TIN (Triangulated Irregular Network) and its relationship to DEMs.

A Triangulated Irregular Network (TIN) is a vector-based representation of a surface created by connecting a series of irregularly spaced points (nodes) with triangles. Each triangle represents a facet of the surface. TINs are closely related to DEMs, often serving as an intermediate step in DEM generation or as an alternative representation of elevation data.

Unlike raster-based DEMs that have a regular grid of elevation values, TINs adapt to the terrain’s complexity, using more points in areas with high elevation variation and fewer points in flatter areas. This makes them efficient for representing complex surfaces with varying levels of detail. Think of it like fitting puzzle pieces – the pieces are triangles, and they adapt to the shape of the surface being represented.

The relationship between TINs and DEMs is that a DEM can be created from a TIN by interpolating the elevation values within each triangle to produce a regular grid of elevations. Conversely, a TIN can be generated from a DEM by selecting nodes and creating a network of triangles that best approximate the elevation surface. TINs are often preferred when high accuracy is needed in areas with complex terrain features.
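Converting a TIN to a grid comes down to evaluating each triangle's planar facet at the grid-cell centres that fall inside it. This sketch uses barycentric weights to do that for a single triangle. The function name and the vertex values are hypothetical, and a full rasteriser would also need to find which triangle contains each cell centre.

```python
def tin_facet_elevation(p, a, b, c):
    """Planar interpolation of elevation at p = (x, y) within triangle a, b, c.

    Vertices are (x, y, z) tuples. Returns None if p lies outside the triangle.
    """
    x, y = p
    (x1, y1, z1), (x2, y2, z2), (x3, y3, z3) = a, b, c
    det = (y2 - y3) * (x1 - x3) + (x3 - x2) * (y1 - y3)
    if det == 0:
        raise ValueError("degenerate (zero-area) triangle")
    # Barycentric weights: each vertex's share of the elevation at p.
    w1 = ((y2 - y3) * (x - x3) + (x3 - x2) * (y - y3)) / det
    w2 = ((y3 - y1) * (x - x3) + (x1 - x3) * (y - y3)) / det
    w3 = 1.0 - w1 - w2
    if min(w1, w2, w3) < -1e-12:
        return None
    return w1 * z1 + w2 * z2 + w3 * z3

a, b, c = (0.0, 0.0, 10.0), (10.0, 0.0, 20.0), (0.0, 10.0, 30.0)
# Sample the facet at a few grid-cell centres, as when rasterising a TIN.
for p in [(2.0, 2.0), (5.0, 0.0), (8.0, 8.0)]:
    print(p, tin_facet_elevation(p, a, b, c))
```

Because each facet is planar, a grid derived this way is continuous across triangle edges but not smooth. Slope changes abruptly from one triangle to the next.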

Q 15. What are the advantages and disadvantages of using LiDAR vs. photogrammetry for DEM extraction?

LiDAR (Light Detection and Ranging) and photogrammetry are both powerful techniques for extracting Digital Elevation Models (DEMs), but they differ significantly in their data acquisition methods and resulting data characteristics. LiDAR uses laser pulses to measure distances, providing highly accurate point cloud data, even in dense vegetation. Photogrammetry, on the other hand, uses overlapping photographs to create a 3D model. Let’s compare their advantages and disadvantages:

LiDAR Advantages:

- High accuracy: LiDAR offers superior vertical accuracy, especially in complex terrain.

- Penetration capability: Laser pulses can penetrate vegetation to some extent, providing information about the ground surface beneath.

- Direct measurement: LiDAR directly measures distances, reducing errors associated with image interpretation.

LiDAR Disadvantages:

- High cost: LiDAR data acquisition is considerably more expensive than photogrammetry.

- Limited area coverage per mission: Depending on the platform, LiDAR might cover smaller areas in a single flight.

- Sensitivity to atmospheric conditions: Strong winds or rain can affect data quality.

Photogrammetry Advantages:

- Lower cost: Photogrammetry is significantly cheaper, especially with readily available drone imagery.

- Larger area coverage: A single flight can cover substantial areas.

- Rich color information: Photogrammetry provides color information, enabling the creation of orthomosaics and textured 3D models.

Photogrammetry Disadvantages:

- Lower accuracy: Accuracy is lower than LiDAR, especially in areas with dense vegetation or low texture.

- Sensitive to lighting conditions: Shadows and poor lighting can affect accuracy.

- Requires careful image planning: Overlapping images are crucial for successful processing.

In summary, the choice between LiDAR and photogrammetry depends on the project’s budget, required accuracy, and the nature of the terrain. High-accuracy applications, such as infrastructure monitoring or precision agriculture, often benefit from LiDAR. Projects with larger areas and tighter budgets might find photogrammetry more suitable.

Q 16. How do you identify and remove outliers in DEM data?

Outliers in DEM data represent elevation values that significantly deviate from the surrounding values. These can be caused by errors in data acquisition, processing, or the presence of unusual features. Identifying and removing outliers is crucial for producing reliable DEMs. I typically employ a combination of techniques:

1. Visual Inspection: A visual examination of the DEM in a GIS software is the first step. This helps identify obvious spikes or depressions that stand out from the overall terrain.

2. Statistical Methods: Statistical filters can be applied to identify and remove outliers based on their deviation from the mean or median elevation in a specified neighborhood. Common methods include:

- Median filter: Replaces each elevation value with the median value of its surrounding pixels. This effectively smooths the data and removes outliers.

- Standard deviation filter: Values exceeding a certain number of standard deviations from the mean are flagged as outliers.

3. Spatial Filtering: These techniques consider the spatial context of the data. For example, a low-pass filter smooths the surface, reducing the impact of sharp elevation variations, which often indicate outliers.

4. Interpolation Techniques: If outliers are removed, the gaps need to be filled. Interpolation methods like kriging or inverse distance weighting can be used to estimate the missing elevation values based on neighboring data points.

Example using a median filter (conceptual):

Imagine a 3×3 pixel window around a central pixel with elevations [10, 12, 11; 13, 100, 14; 11, 12, 10]. The central value (100) is a clear outlier. A median filter would replace it with the median of all nine values in the window (12), which is unaffected by the single extreme value.

The choice of outlier removal technique depends on the data characteristics and the desired level of smoothing. It’s important to strike a balance between removing noise and preserving genuine terrain features. Often, a combination of techniques is applied iteratively to refine the DEM.
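The 3×3 example above can be reproduced with a short pure-Python sketch. Edge cells use the part of the window that falls inside the grid. The optional `threshold` argument is an illustrative addition, not a standard named method: it replaces a cell only when it departs strongly from its neighbourhood median, which reflects the balance between removing noise and preserving genuine terrain described above. The function name is hypothetical.

```python
from statistics import median

def median_spike_filter(grid, threshold=None):
    """3x3 median filter; edge cells use the part of the window inside the grid.

    If threshold is given, a cell is replaced only when it differs from its
    window median by more than threshold, leaving ordinary terrain untouched.
    """
    nrows, ncols = len(grid), len(grid[0])
    out = [row[:] for row in grid]
    for r in range(nrows):
        for c in range(ncols):
            window = [grid[rr][cc]
                      for rr in range(max(r - 1, 0), min(r + 2, nrows))
                      for cc in range(max(c - 1, 0), min(c + 2, ncols))]
            m = median(window)
            if threshold is None or abs(grid[r][c] - m) > threshold:
                out[r][c] = m
    return out

dem = [[10, 12, 11],
       [13, 100, 14],
       [11, 12, 10]]
print(median_spike_filter(dem, threshold=5))
# [[10, 12, 11], [13, 12, 14], [11, 12, 10]]
```

Without a threshold, every cell is replaced by its window median, which also smooths real terrain. With one, only the spike changes.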

Q 17. Describe your experience with different DEM data sources (e.g., SRTM, ASTER).

I have extensive experience working with various DEM data sources, including SRTM (Shuttle Radar Topography Mission) and ASTER (Advanced Spaceborne Thermal Emission and Reflection Radiometer) GDEM. Each has its strengths and limitations.

SRTM: SRTM offers near-global coverage (roughly 60°N to 56°S) at a spatial resolution of 3 arc-seconds, approximately 90 meters, and 1 arc-second (approximately 30 meters) data has since been released for the same area. I’ve used SRTM data for large-scale analyses, such as hydrological modeling and regional landscape characterization. Its advantage is its near-global coverage; however, the resolution of the 90-meter product limits its use for high-resolution applications. The accuracy also varies across regions, generally being better in less vegetated areas.

ASTER GDEM: ASTER provides a global DEM (roughly 83°N to 83°S) with a 30-meter grid spacing. This finer spacing can provide more detail than the 90-meter SRTM product, although its effective resolution is coarser than the grid spacing suggests and it contains artifacts in places. I’ve used ASTER GDEM for applications requiring more detailed elevation information, such as slope analysis, landslide susceptibility mapping, and smaller-scale hydrological modeling. However, similar to SRTM, its accuracy can vary geographically, impacted by cloud cover and terrain complexity during acquisition.

In my work, I often pre-process and quality-check these datasets. This includes identifying and potentially removing areas with poor data quality (e.g., areas with significant cloud cover), and potentially comparing the datasets with other higher-resolution data where available for validation and gap-filling.
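Global products such as SRTM and ASTER GDEM are posted on geographic grids measured in arc-seconds, so "90 meters" and "30 meters" are approximations. Cells also become narrower east-west as latitude increases. This sketch converts an arc-second spacing to approximate ground distances. It assumes a spherical Earth with a mean radius of 6,371 km, which is adequate for rough planning but not for precise work.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean radius; spherical approximation

def arcsec_spacing_m(arcsec, lat_deg):
    """Approximate (north-south, east-west) ground spacing in metres of a
    geographic grid whose cell size is given in arc-seconds."""
    ns = EARTH_RADIUS_M * math.radians(arcsec / 3600.0)
    ew = ns * math.cos(math.radians(lat_deg))  # meridians converge poleward
    return ns, ew

for lat in (0, 45, 60):
    ns, ew = arcsec_spacing_m(3, lat)
    print(f"3 arc-sec at {lat:>2} deg: {ns:.1f} m N-S, {ew:.1f} m E-W")
```

At 60° latitude, a 3 arc-second cell is only about 46 m wide east-west. Geographic-grid DEMs are therefore usually reprojected before slope or distance calculations.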

Q 18. Explain the concept of vertical datum and its importance in DEMs.

The vertical datum defines the reference surface used to measure elevations. Imagine trying to measure the height of a mountain without a consistent reference point – you’d get inconsistent results. The vertical datum provides this consistent reference.

Common vertical datums include NAVD88 (North American Vertical Datum of 1988) and EGM2008 (Earth Gravitational Model 2008). These datums are based on complex geodetic models that consider the Earth’s shape and gravitational field. The choice of vertical datum is crucial in DEMs because it impacts the accuracy and consistency of elevation measurements.

Importance in DEMs:

- Accuracy and consistency: A common datum ensures that all elevations in the DEM are referenced to the same surface, ensuring consistency and comparability.

- Application compatibility: Many GIS software packages and hydrological/geotechnical modeling tools rely on specific vertical datums for correct functioning.

- Data integration: Integrating DEMs from different sources requires them to share a common vertical datum to avoid errors.

For example, if you’re analyzing flood risk, using inconsistent vertical datums could lead to inaccurate estimations of flood depths and potentially dangerous results. Therefore, careful consideration and appropriate datum transformation are essential when working with DEMs.
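A common datum problem is mixing ellipsoidal heights (h, as delivered by GNSS relative to an ellipsoid such as WGS84) with orthometric heights (H, relative to a geoid-based datum). The two are related by H = h − N, where N is the geoid undulation taken from a geoid model such as EGM2008. Because N can reach tens of meters, skipping this step can shift a whole DEM vertically. This sketch applies the relation cell by cell. The function name and values are hypothetical, and in practice N would be sampled from a geoid grid matching the target datum.

```python
def to_orthometric(ellipsoid_heights, geoid_undulations):
    """Convert ellipsoidal heights h to orthometric heights H = h - N, cell by cell.

    Both inputs are grids (lists of rows) on the same cells; None marks no-data.
    """
    return [[None if h is None or n is None else h - n
             for h, n in zip(h_row, n_row)]
            for h_row, n_row in zip(ellipsoid_heights, geoid_undulations)]

# Hypothetical values: here the geoid lies about 30 m below the ellipsoid.
h = [[12.0, 13.5], [None, 15.0]]
n = [[-30.0, -30.1], [-30.1, -30.2]]
print(to_orthometric(h, n))
```

A negative undulation means the geoid lies below the ellipsoid, so orthometric heights come out larger than ellipsoidal ones. Getting this sign wrong doubles the error instead of removing it.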

Q 19. How do you ensure the consistency and accuracy of DEM data across different acquisition sources?

Ensuring consistency and accuracy across different DEM acquisition sources is a critical aspect of DEM processing. This often involves a multi-step process:

1. Datum Transformation: All DEMs must be referenced to the same vertical and horizontal datums. Sophisticated geospatial transformations, such as applying geoid models or using control points, are often needed for this step. Software packages like ArcGIS Pro or QGIS provide tools to handle such transformations.

2. Resampling: If DEMs have different resolutions, resampling is required. Common resampling methods include nearest neighbor, bilinear interpolation, and cubic convolution. The best method depends on the specific application; for example, nearest-neighbor preserves sharp edges, while cubic convolution produces smoother surfaces.

3. Data Fusion: Techniques like weighted averaging or more advanced methods such as fuzzy logic can integrate multiple DEMs to create a more complete and accurate DEM, leveraging the strengths of different data sources. This helps compensate for limitations in individual datasets.

4. Quality Control: Rigorous quality checks are essential throughout the process. Visual inspection, statistical analysis of elevation differences, and comparison with reference data (e.g., ground surveys) are crucial steps to ensure data integrity.

5. Error Modeling: Understanding and modeling the inherent errors in each DEM source is valuable. This involves analyzing systematic and random errors to better assess the uncertainty in the final fused DEM.

In practical terms, this might involve using LiDAR data for high-accuracy areas and filling gaps using lower-resolution data like SRTM, ensuring the final product is both accurate and complete.
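One common way to do the weighted averaging mentioned under data fusion is inverse-variance weighting: each source's elevation is weighted by 1/σ², where σ is its estimated vertical error. The source does not specify a weighting scheme, so treat this as one illustrative choice. It assumes co-registered cells on a common datum and independent errors, and the σ values and function name are hypothetical.

```python
import math

def fuse_inverse_variance(estimates):
    """Fuse co-registered elevation estimates for one cell.

    estimates: list of (elevation, sigma) pairs; elevation None means no data.
    Returns (fused elevation, fused sigma), or (None, None) if nothing is valid.
    """
    valid = [(z, s) for z, s in estimates if z is not None]
    if not valid:
        return None, None
    weights = [1.0 / (s * s) for _, s in valid]  # weight = 1 / variance
    total = sum(weights)
    z = sum(w * zi for w, (zi, _) in zip(weights, valid)) / total
    return z, math.sqrt(1.0 / total)

# Hypothetical cell: LiDAR (sigma 0.1 m) and SRTM (sigma 5 m) disagree by 3 m.
print(fuse_inverse_variance([(100.0, 0.1), (103.0, 5.0)]))
# A gap in the LiDAR falls back to SRTM alone.
print(fuse_inverse_variance([(None, 0.1), (103.0, 5.0)]))
```

This matches the practical case above. Where LiDAR exists it dominates almost completely, and where it is missing the coarser source fills the gap, with a correspondingly larger uncertainty.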

Q 20. What are the key considerations when choosing an appropriate DEM resolution for a specific application?

Choosing the right DEM resolution is crucial because it directly impacts the accuracy, detail, and computational demands of downstream applications.

Factors to Consider:

- Application requirements: High-resolution DEMs (e.g., 1-meter resolution) are needed for applications requiring detailed terrain information, like precise volume calculations for construction projects or detailed hydrological modeling.

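- Study area characteristics: Mountainous regions need high resolution to capture slope variations accurately, while gently varying terrain can often be represented at lower resolution. In very flat areas such as floodplains, however, small elevation differences matter, so vertical accuracy becomes critical.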

- Computational resources: Processing high-resolution DEMs requires significant computing power and storage. Lower resolution reduces processing time and storage needs.

- Data availability and cost: Higher resolution DEMs are typically more expensive and may not be available for every area.

Examples:

- National-scale hydrological modeling: A 90-meter DEM like SRTM is often sufficient.

- Local landslide analysis: A 1-5 meter DEM from LiDAR or high-resolution imagery is necessary.

- Route planning: A relatively high-resolution DEM is needed to accurately assess slope and terrain complexity.

Ultimately, the optimal resolution involves a trade-off between detail, computational demands, cost, and the specific needs of the application. A thorough understanding of the intended application is essential for making an informed decision.
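Slope is a good example of how resolution affects derived products, since each slope estimate spans neighbouring cells. This sketch computes slope from central differences. That is a simple choice for illustration; GIS packages often use a weighted 3×3 scheme such as Horn's method. The function name and the test surface are hypothetical.

```python
import math

def slope_degrees(grid, cell_size):
    """Slope in degrees at interior cells using central differences.

    Each estimate spans two cells, so cell_size limits the shortest slope
    feature that can be resolved. Edge cells are left as None.
    """
    nrows, ncols = len(grid), len(grid[0])
    out = [[None] * ncols for _ in range(nrows)]
    for r in range(1, nrows - 1):
        for c in range(1, ncols - 1):
            dzdx = (grid[r][c + 1] - grid[r][c - 1]) / (2 * cell_size)
            dzdy = (grid[r - 1][c] - grid[r + 1][c]) / (2 * cell_size)
            out[r][c] = math.degrees(math.atan(math.hypot(dzdx, dzdy)))
    return out

# Hypothetical plane rising 10 m per 10 m cell eastward: a 45 degree slope.
plane = [[0.0, 10.0, 20.0] for _ in range(3)]
print(slope_degrees(plane, cell_size=10.0)[1][1])  # ~45.0
```

A steep bank narrower than two cells is averaged into a gentler slope. This is why landslide or road-design work calls for the finer resolutions listed above.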

Q 21. Describe your experience with automated DEM extraction techniques.

I have extensive experience with automated DEM extraction techniques, primarily focusing on semi-automated and fully automated workflows leveraging advanced software packages. This involves a range of methods including:

1. Structure-from-Motion (SfM) photogrammetry: This is a largely automated process. I’ve used software like Pix4D and Agisoft Metashape to process large datasets of overlapping images from drones or satellites. The software automatically identifies feature points, performs image matching, and generates a dense point cloud, which is then used to create a DEM.

2. LiDAR point cloud processing: Here, I utilize software like LAStools and TerraScan to process raw LiDAR point cloud data. These tools allow for automated classification of points (ground, vegetation, buildings), filtering, and generation of DEMs through interpolation techniques like TIN (Triangulated Irregular Network) or grid-based interpolation.

3. Machine learning applications: Emerging techniques utilize machine learning for automated DEM generation, specifically for automated point cloud classification. These approaches show promise in improving accuracy and efficiency but still require careful evaluation and validation.

Challenges in Automation: While automation offers efficiency, challenges exist such as:

- Data quality: Poor quality input data (e.g., blurry images, noisy LiDAR) can significantly impact the accuracy of automated DEM extraction.

- Computational resources: Processing large datasets requires substantial computing resources, potentially limiting the feasibility of fully automated workflows.

- Error detection: Automated systems might miss errors, necessitating careful manual quality checks.

My workflow often involves a combination of automated tools and manual quality control to ensure accuracy and reliability. Automation speeds up the process, but human expertise remains crucial for validation and refinement.

Q 22. How do you manage and process DEM data from different coordinate systems?

Bringing DEMs from different coordinate systems into a common one is essential for accurate analysis and integration. Different datasets might use UTM, geographic coordinates (latitude/longitude), or state plane coordinates. The key is to perform a coordinate transformation using Geographic Information System (GIS) software or specialized tools: define the source and target coordinate reference systems (CRSs) and apply a suitable transformation. For example, you might need to reproject a DEM in UTM Zone 10 to match a project using NAD83 state plane coordinates. This is done by reprojection, which changes the map projection and, where the source and target datums differ, also applies a datum transformation (e.g., from NAD27 to NAD83), either with well-known transformation parameters or with grid-based transformations.

The process typically involves these steps:

- Identify CRS: Determine the horizontal CRS of each DEM, and the vertical datum its elevations refer to, from the metadata or the file header.

- Select appropriate transformation: Choose the transformation based on the source and target CRSs and the accuracy requirements. It may be a projection change only (e.g., geographic coordinates to UTM on the same datum), a datum transformation (e.g., NAD27 to NAD83), or both.

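- Perform transformation: Apply the chosen transformation using GIS software (ArcGIS and QGIS both offer built-in reprojection tools) or command-line tools such as GDAL’s gdalwarp. Because the DEM grid is resampled during reprojection, use bilinear or cubic resampling, which suit continuous elevation data, rather than nearest neighbor.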

- Validate transformation: Check the accuracy of the transformation by comparing the transformed data to known control points.
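In practice the transformation is delegated to a library such as PROJ (used by GDAL, QGIS, and pyproj). To show what the projection step itself computes, the sketch below implements the standard transverse Mercator series expansion (the form given in Snyder’s USGS manual Map Projections: A Working Manual) to convert WGS84 latitude/longitude to UTM. It handles the projection only, not datum shifts, and the function names latlon_to_utm and meridian_arc are hypothetical.

```python
import math

# WGS84 ellipsoid constants and the UTM scale factor on the central meridian.
A = 6378137.0
F = 1 / 298.257223563
E2 = F * (2 - F)          # first eccentricity squared
EP2 = E2 / (1 - E2)       # second eccentricity squared
K0 = 0.9996


def meridian_arc(phi: float) -> float:
    """Distance along the meridian from the equator to latitude phi (radians)."""
    e4, e6 = E2 * E2, E2 ** 3
    return A * (
        (1 - E2 / 4 - 3 * e4 / 64 - 5 * e6 / 256) * phi
        - (3 * E2 / 8 + 3 * e4 / 32 + 45 * e6 / 1024) * math.sin(2 * phi)
        + (15 * e4 / 256 + 45 * e6 / 1024) * math.sin(4 * phi)
        - (35 * e6 / 3072) * math.sin(6 * phi)
    )


def latlon_to_utm(lat: float, lon: float) -> tuple[int, float, float]:
    """Project WGS84 lat/lon in degrees to (zone, easting, northing) in meters."""
    zone = int((lon + 180) // 6) + 1
    lon0 = math.radians(-183 + 6 * zone)   # central meridian of the zone
    phi, lam = math.radians(lat), math.radians(lon)

    n = A / math.sqrt(1 - E2 * math.sin(phi) ** 2)   # prime vertical radius
    t = math.tan(phi) ** 2
    c = EP2 * math.cos(phi) ** 2
    a = (lam - lon0) * math.cos(phi)

    easting = K0 * n * (
        a + (1 - t + c) * a**3 / 6
        + (5 - 18 * t + t**2 + 72 * c - 58 * EP2) * a**5 / 120
    ) + 500_000.0                          # false easting
    northing = K0 * (
        meridian_arc(phi)
        + n * math.tan(phi) * (
            a**2 / 2
            + (5 - t + 9 * c + 4 * c**2) * a**4 / 24
            + (61 - 58 * t + t**2 + 600 * c - 330 * EP2) * a**6 / 720
        )
    )
    if lat < 0:
        northing += 10_000_000.0           # false northing, southern hemisphere
    return zone, easting, northing


# A point on the central meridian of UTM zone 10.
zone, easting, northing = latlon_to_utm(45.0, -123.0)
print(zone, round(easting, 1), round(northing, 1))
```

For a DEM raster, every cell would be projected this way and the grid then resampled, which is exactly what tools like gdalwarp automate.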

For instance, I once worked on a project with DEMs from multiple sources: some in geographic WGS84 coordinates, others in a local UTM zone. I used ArcGIS Pro to reproject all of them to the UTM zone covering the project area before merging them for further analysis. This avoided misalignment errors and ensured consistency.

Q 23. What are the challenges associated with DEM extraction in complex terrain?

DEM extraction in complex terrain presents numerous challenges. The biggest hurdle is often data acquisition. Steep slopes, dense vegetation, and shadows can prevent LiDAR and photogrammetric surveys from accurately capturing surface elevations. This can lead to gaps in data, inaccurate elevation measurements, and noise.

Processing is also harder. Algorithms designed for smooth terrain may struggle with abrupt changes in elevation, resulting in artifacts and errors. For example, interpolation-based methods may produce unrealistic surfaces in deep canyons, and overhanging cliffs cannot be represented at all in a raster DEM, which stores only one elevation per cell. Occlusions (parts of the terrain hidden from view) also cause problems in photogrammetric DEM creation.

Finally, resolution limitations play a role. The resolution of the sensor dictates the level of detail captured. In extremely complex areas, high-resolution data is needed but may not always be available or affordable. This leads to a trade-off between resolution, accuracy and cost.

To address these challenges, we often employ advanced processing techniques like:

- Multi-source data integration: Combining LiDAR and aerial imagery to compensate for individual limitations.

- Adaptive filtering: Applying different filtering techniques based on the local terrain characteristics.

- In-situ measurements: Supplementing sensor data with ground-based measurements in challenging areas.
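One way to picture adaptive filtering is to let a local terrain measure decide where a filter is applied. The sketch below is a hypothetical illustration, not a standard algorithm: it uses slope as that measure, applies a 3×3 median filter where the terrain is gentle, and leaves steeper cells untouched so breaklines are not smoothed away. The function name adaptive_smooth and the 20° threshold are assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def adaptive_smooth(dem: np.ndarray, cell: float = 1.0,
                    slope_limit_deg: float = 20.0) -> np.ndarray:
    """Median-smooth gentle terrain and leave steep terrain untouched.

    Hypothetical terrain-adaptive filter: the 3x3 median is used only
    where the local slope is below `slope_limit_deg`.
    """
    # 3x3 median of every cell (edges padded by repeating border values).
    windows = sliding_window_view(np.pad(dem, 1, mode="edge"), (3, 3))
    median = np.median(windows, axis=(-2, -1))
    # Slope from central differences; np.gradient returns d/drow, d/dcol.
    dz_drow, dz_dcol = np.gradient(dem, cell)
    slope_deg = np.degrees(np.arctan(np.hypot(dz_drow, dz_dcol)))
    return np.where(slope_deg < slope_limit_deg, median, dem)


flat = np.full((5, 5), 100.0)
flat[2, 2] = 103.0                  # a spike on gentle terrain
print(adaptive_smooth(flat)[2, 2])  # 100.0
```

Central differences report zero slope exactly at an isolated spike or at the bottom of a V-shaped valley, so a practical version would likely use a more robust terrain descriptor than this.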

Q 24. Explain the concept of DEM filtering and its purpose.

DEM filtering is a crucial step in DEM processing aimed at removing noise and artifacts while preserving important terrain features. Think of it like cleaning up a grainy photo: you want to remove the speckle without losing the essential detail. Noise in DEMs can stem from sensor errors, atmospheric effects, or the inherent limitations of data acquisition techniques.

Various filtering techniques exist, each with strengths and weaknesses:

- Smoothing filters (e.g., median filter, Gaussian filter): These reduce high-frequency noise, making the surface appear smoother. They’re effective for removing random errors but can also blur sharp features.

- Edge-preserving filters (e.g., bilateral filter): These reduce noise while preserving sharp edges and discontinuities, better suited for complex terrain.

- Morphological filters (e.g., erosion, dilation): These are used for removing small-scale features (e.g., isolated spikes) or filling small holes in the data.

The choice of filter depends heavily on the nature and extent of noise and the level of detail needed. For instance, a Gaussian filter might be appropriate for a DEM with random point errors, while an edge-preserving filter might be better for a DEM with significant variations in slope. Over-filtering can lead to loss of detail, so a careful selection and application are crucial.
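The trade-off between noise removal and blurring is easy to see on a single-cell spike. The sketch below, written with plain NumPy using edge padding and 3×3 windows (both assumptions, as are the function names), compares a median filter with a Gaussian filter of sigma 1 on a flat surface containing one 50 m spike.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _windows(dem: np.ndarray) -> np.ndarray:
    """All 3x3 neighborhoods, with edges padded by repeating border values."""
    return sliding_window_view(np.pad(dem, 1, mode="edge"), (3, 3))


def median_filter(dem: np.ndarray) -> np.ndarray:
    """3x3 median: removes isolated spikes without averaging them in."""
    return np.median(_windows(dem), axis=(-2, -1))


def gaussian_filter(dem: np.ndarray, sigma: float = 1.0) -> np.ndarray:
    """3x3 Gaussian-weighted mean: smooths noise but spreads outliers."""
    offsets = np.array([-1.0, 0.0, 1.0])
    kernel = np.exp(-(offsets[:, None] ** 2 + offsets[None, :] ** 2) / (2 * sigma**2))
    kernel /= kernel.sum()   # normalize so the weights sum to 1
    return np.sum(_windows(dem) * kernel, axis=(-2, -1))


dem = np.full((5, 5), 100.0)
dem[2, 2] = 150.0   # a single-cell spike, e.g. a bird or power-line return

print(median_filter(dem)[2, 1:4])
print(gaussian_filter(dem)[2, 1:4].round(2))
```

The median filter removes the spike completely, while the Gaussian filter leaves a bump of roughly 10 m at the spike and spreads about 6 m into each adjacent cell, which is the blurring described above. SciPy’s scipy.ndimage.median_filter and scipy.ndimage.gaussian_filter provide optimized versions.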

Q 25. How do you visualize and interpret DEM data using GIS software?

Visualization and interpretation of DEM data in GIS software is key to understanding the terrain’s characteristics. Software like ArcGIS, QGIS, and Global Mapper offer a range of tools for this purpose. The most common techniques involve:

- Hillshade: Creating a shaded relief map simulating the effect of light on the terrain, emphasizing topographic features like hills and valleys.

- Contour lines: Generating lines of equal elevation to show the shape of the terrain. Contour spacing can be adjusted to suit the scale and complexity of the terrain.

- 3D surface rendering: Creating a three-dimensional visualization of the terrain, allowing for detailed exploration of the landscape.

- Slope and aspect maps: Deriving slope angle and aspect (direction of the slope) maps to analyze the terrain’s steepness and orientation.

- Elevation profiles: Generating cross-sections of the terrain to study elevation changes along specific lines.
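Slope and hillshade from the list above are both derived from the DEM’s local gradient. A minimal sketch, assuming a north-up grid (rows run north to south, columns west to east) and the common default sun position of azimuth 315° and altitude 45°; the function name is hypothetical. It uses a vector formulation (surface normal dotted with the sun direction); ArcGIS and QGIS use the equivalent slope/aspect form with a 3×3 gradient kernel such as Horn’s method, so their values will differ slightly.

```python
import numpy as np


def slope_and_hillshade(dem: np.ndarray, cell: float = 1.0,
                        azimuth_deg: float = 315.0, altitude_deg: float = 45.0):
    """Return (slope in degrees, hillshade in 0-255) for a north-up DEM grid."""
    dz_drow, dz_dx = np.gradient(dem, cell)
    dz_dy = -dz_drow                      # rows increase southward, so flip sign
    slope_deg = np.degrees(np.arctan(np.hypot(dz_dx, dz_dy)))

    # Surface normal is (-dz/dx, -dz/dy, 1); dot it with a unit vector toward the sun.
    norm = np.sqrt(dz_dx**2 + dz_dy**2 + 1.0)
    az, alt = np.radians(azimuth_deg), np.radians(altitude_deg)
    sun = np.array([np.sin(az) * np.cos(alt), np.cos(az) * np.cos(alt), np.sin(alt)])
    illum = (-dz_dx * sun[0] - dz_dy * sun[1] + sun[2]) / norm
    hillshade = 255.0 * np.clip(illum, 0.0, 1.0)   # slopes facing away are black
    return slope_deg, hillshade


flat = np.zeros((4, 4))
rows, cols = np.mgrid[0:4, 0:4]
nw_facing = (rows + cols).astype(float)   # rises to the south-east, so it faces north-west

_, hs_flat = slope_and_hillshade(flat)
slope_nw, hs_nw = slope_and_hillshade(nw_facing)
print(hs_flat[0, 0].round(1), hs_nw[0, 0].round(1), slope_nw[0, 0].round(2))
```

Flat ground receives 255 × sin(45°), about 180, while the north-west-facing slope, turned toward the light, is brighter.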

By combining these visualization techniques and adding thematic layers (e.g., land cover, geology), we can extract valuable information about the terrain and its relationships with other geographical features. For example, I once used hillshade, contour lines, and derived slope and aspect maps in QGIS to identify potential landslide-prone areas based on slope steepness and aspect.

Q 26. What are the limitations of DEMs and how can they be overcome?

DEMs, while powerful tools, have inherent limitations. One major constraint is resolution. The spacing between elevation points affects the level of detail captured, and this is particularly apparent in areas of high relief. High-resolution DEMs are better suited for detailed analysis but can be computationally expensive and require substantial storage space.
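The storage cost grows with the square of the resolution: halving the cell size quadruples the number of cells. A quick calculation, assuming an uncompressed single-band raster of 32-bit floats (4 bytes per cell); dem_size_bytes is a hypothetical helper.

```python
import math


def dem_size_bytes(width_m: float, height_m: float, cell_m: float,
                   bytes_per_cell: int = 4) -> int:
    """Uncompressed size of a single-band DEM raster covering width x height."""
    cols = math.ceil(width_m / cell_m)
    rows = math.ceil(height_m / cell_m)
    return rows * cols * bytes_per_cell


# A 10 km x 10 km tile at several resolutions.
for cell in (30.0, 10.0, 1.0, 0.5):
    mb = dem_size_bytes(10_000, 10_000, cell) / 1e6
    print(f"{cell:>4} m cells: {mb:,.1f} MB")
```

For a 10 km × 10 km tile, moving from 1 m to 0.5 m cells raises the uncompressed size from 400 MB to 1.6 GB, before any derived products are computed.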

Another limitation is vertical accuracy. Errors in elevation measurements can stem from various sources, including sensor limitations, atmospheric conditions, and ground cover. These errors can affect the accuracy of derived products like slope and aspect maps. Additionally, temporal limitations exist, as DEMs represent the terrain at a single point in time. Changes due to erosion, landslides, or human activity are not reflected.

These limitations can be addressed by:

- Using higher-resolution data: When finer detail is needed, opting for higher-resolution DEMs (e.g., from LiDAR).

- Data fusion: Combining DEMs from multiple sources to improve accuracy and fill gaps.

- Data validation and error correction: Employing rigorous quality control procedures and error correction techniques.

- Regular updates: Creating new DEMs at intervals to reflect temporal changes in the terrain.
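Data fusion for gap filling can be illustrated in a few lines. A minimal sketch, assuming both DEMs are already co-registered (same CRS, extent, and cell size) and that the only systematic difference between them is a constant vertical offset; the function name fuse_dems is hypothetical, and real fusion methods often weight sources by their estimated accuracy instead.

```python
import numpy as np


def fuse_dems(primary: np.ndarray, secondary: np.ndarray) -> np.ndarray:
    """Fill NaN gaps in `primary` with bias-corrected values from `secondary`."""
    overlap = ~np.isnan(primary) & ~np.isnan(secondary)
    if not overlap.any():
        raise ValueError("DEMs do not overlap; cannot estimate a vertical offset")
    # Constant vertical offset estimated where both DEMs have data.
    offset = np.mean(primary[overlap] - secondary[overlap])
    # Keep primary values; fill its gaps from the shifted secondary DEM.
    return np.where(np.isnan(primary), secondary + offset, primary)


primary = np.array([[10.0, 11.0], [np.nan, 13.0]])    # one data gap
secondary = np.array([[12.0, 13.0], [14.0, 15.0]])    # complete, but 2 m high
print(fuse_dems(primary, secondary))
```

Cells missing from both sources stay NaN, so any remaining gaps still need interpolation or additional data.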

Q 27. Describe your experience working with different DEM processing workflows.

My experience encompasses a wide array of DEM processing workflows, tailored to specific project needs and data availability. I’ve worked extensively with:

- Photogrammetric workflows: Processing aerial imagery to generate DEMs using Structure from Motion (SfM) software. This involves image alignment, point cloud generation, and surface modeling.

- LiDAR-based workflows: Processing LiDAR point clouds to generate DEMs and other products like canopy height models (CHMs). This usually includes noise removal, ground classification, and interpolation.

- Multi-sensor data fusion workflows: Combining LiDAR and imagery data to leverage the strengths of each sensor type, resulting in a more comprehensive and accurate DEM.

- DEM post-processing workflows: This involves data cleaning, filtering, interpolation, and the generation of derivative products like slope, aspect, and hillshade.
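The canopy height model mentioned in the LiDAR workflow is commonly computed as the difference between a surface model that includes vegetation (often called a DSM) and the bare-earth DTM. A minimal sketch, assuming both grids are already co-registered; canopy_height_model is a hypothetical name, and clamping negative differences to zero is one common choice rather than a fixed rule.

```python
import numpy as np


def canopy_height_model(dsm: np.ndarray, dtm: np.ndarray) -> np.ndarray:
    """CHM = DSM - DTM on co-registered grids, with negative noise clamped to 0."""
    if dsm.shape != dtm.shape:
        raise ValueError("DSM and DTM must share the same grid")
    chm = dsm - dtm
    # Small negative heights usually come from interpolation noise.
    return np.maximum(chm, 0.0)


dsm = np.array([[105.0, 120.5], [100.2, 99.9]])
dtm = np.array([[100.0, 100.5], [100.0, 100.0]])
print(canopy_height_model(dsm, dtm))
```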

I’m proficient in using various software packages, including ArcGIS, QGIS, Global Mapper, and specialized point cloud processing software. I adapt my workflow based on project specifications, data quality, and the desired accuracy. For example, one project combined LiDAR data for the densely vegetated areas, where its pulses could reach the ground through gaps in the canopy, with photogrammetry for open areas, where image matching performs well.

Q 28. How do you ensure the quality control of DEM data throughout the entire extraction process?

Quality control (QC) is paramount throughout the DEM extraction process. It’s an iterative procedure, not just a final check. My QC process involves several stages:

- Data acquisition QC: Assessing the quality of the raw data (e.g., imagery, LiDAR point clouds), checking for sensor errors, atmospheric effects, and geometric distortions.

- Pre-processing QC: Evaluating the results of pre-processing steps (e.g., orthorectification, point cloud classification) to identify and correct errors early on.

- DEM generation QC: Checking the accuracy and consistency of the generated DEM using various metrics, including root mean square error (RMSE) and visual inspection for artifacts and unrealistic features. Comparisons with reference data, where available, are essential.

- Post-processing QC: Assessing the quality of derived products (e.g., slope, aspect maps) and checking for inconsistencies or errors. Statistical summaries and visual inspection are employed.

- Metadata QC: Ensuring the completeness and accuracy of metadata associated with the DEM, including CRS, resolution, and date of acquisition.
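The RMSE check described above compares DEM elevations with independent surveyed checkpoints. A minimal sketch, assuming the DEM has already been sampled at each checkpoint location (the function name vertical_accuracy is hypothetical). It reports the mean error (bias), the standard deviation, the RMSE, and the NSSDA-style vertical accuracy at 95% confidence, 1.96 × RMSE, which assumes normally distributed errors with no systematic bias.

```python
import numpy as np


def vertical_accuracy(dem_z, check_z) -> dict:
    """Summary statistics of DEM elevations against independent checkpoints."""
    err = np.asarray(dem_z, dtype=float) - np.asarray(check_z, dtype=float)
    rmse = float(np.sqrt(np.mean(err**2)))
    return {
        "n": int(err.size),
        "mean_error": float(err.mean()),        # systematic bias
        "std_error": float(err.std(ddof=1)),    # random scatter
        "rmse": rmse,
        "accuracy_95": 1.96 * rmse,             # NSSDA-style, assumes normal errors
    }


stats = vertical_accuracy([101.0, 99.0, 101.0, 99.0], [100.0] * 4)
print(stats)
```

Reporting the mean error alongside the RMSE matters: a large mean error points to a systematic problem, such as a datum mismatch, rather than random noise.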

I frequently use visual inspection alongside quantitative metrics to identify potential issues. For example, I might compare a generated DEM to a high-resolution reference DEM to assess accuracy and identify areas with inconsistencies. Documentation is crucial, and I meticulously record all steps and results, enabling reproducibility and traceability.

Key Topics to Learn for DEM Extraction Interview

- Data Sources and Formats: Understanding various sources of elevation data (LiDAR, aerial imagery, SRTM, etc.) and their associated file formats (e.g., LAS, TIFF, GeoTIFF).

- Pre-processing Techniques: Familiarize yourself with data cleaning, noise reduction, and outlier removal methods crucial for accurate DEM extraction.

- Interpolation Methods: Master different interpolation techniques (e.g., IDW, Kriging, Spline) and their strengths and weaknesses in DEM generation. Understand how to select the appropriate method based on data characteristics and project requirements.

- Software and Tools: Gain practical experience with GIS software (e.g., ArcGIS, QGIS) and specialized DEM processing tools. Be prepared to discuss your proficiency with relevant software packages.

- Accuracy Assessment: Learn methods for evaluating the accuracy and quality of extracted DEMs, including RMSE and statistical analysis.

- Applications of DEMs: Be ready to discuss practical applications of DEMs in various fields like hydrology, civil engineering, environmental modeling, and urban planning. Provide specific examples of how DEMs are used to solve real-world problems.

- Workflow Optimization: Discuss strategies for efficient DEM extraction workflows, including automation techniques and batch processing.

- Challenges and Limitations: Understand the inherent challenges and limitations associated with DEM extraction, such as data gaps, shadow effects, and the impact of different interpolation methods on accuracy.
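Of the interpolation methods listed above, IDW is the simplest to write out. A minimal sketch of global IDW with power 2, assuming every sample contributes to every query point (GIS tools usually limit this to a search radius or nearest-neighbor count); the function name idw is hypothetical.

```python
import numpy as np


def idw(sample_xy: np.ndarray, sample_z: np.ndarray,
        query_xy: np.ndarray, power: float = 2.0) -> np.ndarray:
    """Inverse distance weighted interpolation using all samples."""
    # Pairwise distances, shape (n_query, n_samples).
    d = np.linalg.norm(query_xy[:, None, :] - sample_xy[None, :, :], axis=-1)
    out = np.empty(len(query_xy))
    exact = d == 0
    hit = exact.any(axis=1)
    # Query points that coincide with a sample take that sample's value.
    if hit.any():
        out[hit] = sample_z[np.argmax(exact[hit], axis=1)]
    # Elsewhere, weights fall off as 1 / distance**power.
    if (~hit).any():
        w = 1.0 / d[~hit] ** power
        out[~hit] = (w @ sample_z) / w.sum(axis=1)
    return out


samples = np.array([[0.0, 0.0], [2.0, 0.0]])
elev = np.array([10.0, 20.0])
queries = np.array([[1.0, 0.0], [0.0, 0.0], [0.5, 0.0]])
print(idw(samples, elev, queries))
```

The midpoint gets the plain average of the two samples, while the point at 0.5 m is pulled strongly toward the nearer sample; a higher power makes that pull stronger.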

Next Steps

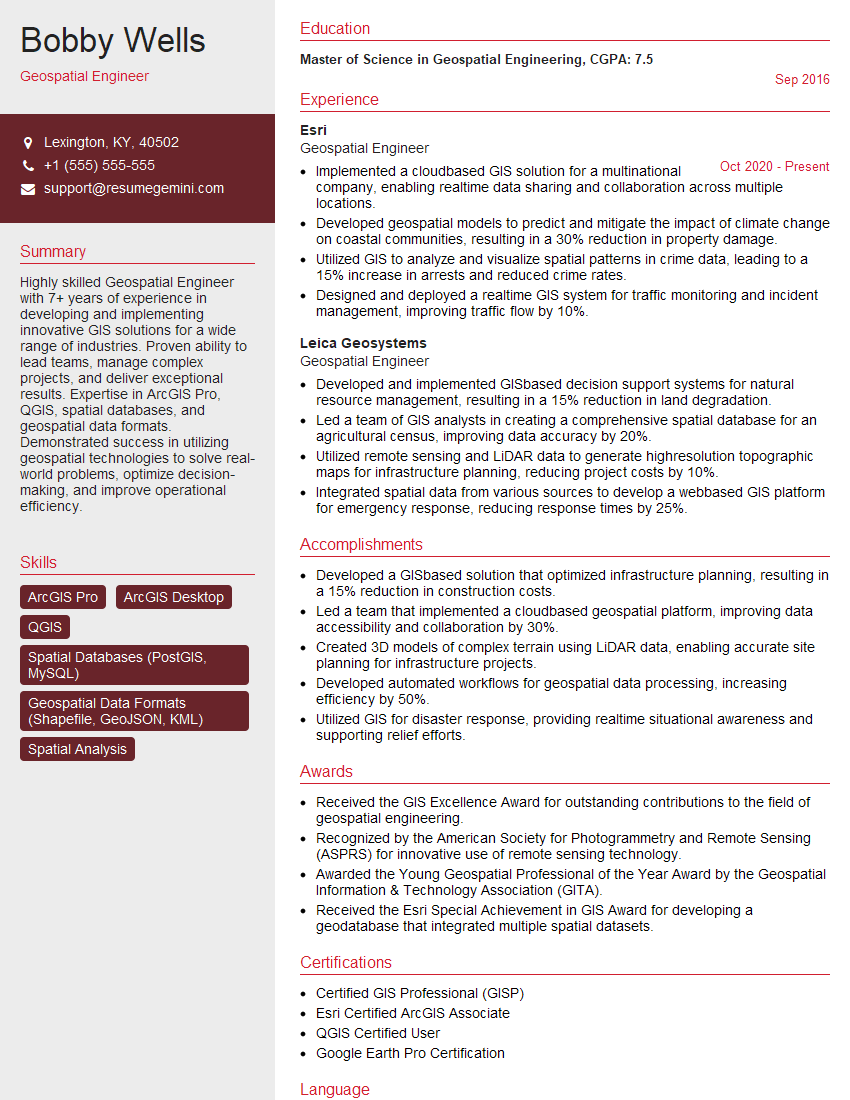

Mastering DEM extraction significantly enhances your career prospects in GIS, remote sensing, and related fields, opening doors to exciting and impactful roles. A well-crafted resume is crucial for showcasing your skills and experience effectively to potential employers. To maximize your chances of landing your dream job, creating an Applicant Tracking System (ATS)-friendly resume is essential. ResumeGemini can help you build a professional and impactful resume that stands out. ResumeGemini provides examples of resumes tailored to DEM Extraction to guide you in creating a compelling document that highlights your expertise.
