Unlock your full potential by mastering the most common Photogrammetric Data Acquisition interview questions. This blog offers a deep dive into the critical topics, ensuring you’re not only prepared to answer but to excel. With these insights, you’ll approach your interview with clarity and confidence.

Questions Asked in Photogrammetric Data Acquisition Interview

Q 1. Explain the principles of photogrammetry.

Photogrammetry is the science of making measurements from photographs. Essentially, it’s like using a sophisticated 3D puzzle where the pieces are photographs. By taking multiple overlapping images of an object or scene from different viewpoints, we can extract 3D information and create accurate 3D models. This is done by mathematically analyzing the differences between the images to determine the relative positions of points in space. Think of it like your eyes – each eye sees a slightly different view of the world, and your brain combines these views to perceive depth. Photogrammetry does the same thing, but on a much larger and more precise scale using computers.
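
The eye analogy can be made concrete for the simplest case: two identical cameras side by side with parallel viewing directions. The sketch below assumes that idealized setup, where depth follows from similar triangles as Z = f * B / d. The function name and all numbers are illustrative, not taken from any particular camera or software.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth Z = f * B / d for an idealized pair of parallel cameras.

    focal_px     -- focal length expressed in pixels (assumed value)
    baseline_m   -- distance between the two camera centres in metres
    disparity_px -- horizontal shift of the same point between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of both cameras")
    return focal_px * baseline_m / disparity_px


# Illustrative values only: a large shift means a close point, a small shift a distant one.
near = depth_from_disparity(focal_px=4000.0, baseline_m=0.5, disparity_px=100.0)
far = depth_from_disparity(focal_px=4000.0, baseline_m=0.5, disparity_px=20.0)
print(near, far)
```

Real projects rarely have perfectly parallel cameras, which is why general photogrammetric software solves for full camera positions and rotations instead of relying on this shortcut.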

Q 2. Describe the different types of cameras used in photogrammetry.

The cameras used in photogrammetry vary depending on the application and scale of the project. We can broadly categorize them as follows:

- Metric Cameras: These are highly calibrated cameras with precise internal parameters, designed specifically for photogrammetric purposes. They offer exceptional accuracy and are often used in large-scale mapping projects.

- Digital Single-Lens Reflex (DSLR) Cameras: Widely available and relatively inexpensive, DSLRs can produce high-resolution images suitable for many photogrammetric tasks. Their versatility makes them popular for many applications.

- Unmanned Aerial Vehicle (UAV) or Drone Cameras: These are compact and lightweight cameras mounted on drones, providing efficient data acquisition for large areas, such as construction sites, archeological digs, or environmental monitoring.

- Close-Range Photogrammetry Cameras: These are cameras optimized for close-up photography, useful for capturing highly detailed 3D models of smaller objects, for example, in forensic science or industrial applications.

The choice of camera depends heavily on the project’s requirements, budgetary constraints, and the desired level of accuracy.

Q 3. What are the key steps involved in a photogrammetry workflow?

A typical photogrammetry workflow involves several key steps:

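- Planning and Data Acquisition: This involves determining the camera settings (e.g., exposure, ISO), the flight path (for UAVs), and the number of images needed for adequate overlap. Traditional aerial blocks use roughly 60% forward and 30% side overlap; UAV surveys processed with automated matching often use considerably more. Careful planning ensures the project is efficient and produces high-quality results.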

- Image Processing and Alignment: This is where the software comes in. Specialized photogrammetry software automatically or semi-automatically aligns the images, identifying common features and determining the relative positions of the cameras during image capture. This is a computationally intensive step.

- Point Cloud Generation: The software then generates a point cloud – a massive collection of 3D points that represent the surface of the object or scene. The density and accuracy of the point cloud depend on the quality of the input images and processing parameters.

- Mesh Generation: A mesh is created by connecting adjacent points in the point cloud to form a 3D surface model. This process often involves techniques like Delaunay triangulation.

- Texture Mapping: The color information from the original images is applied to the mesh, creating a realistic and visually appealing 3D model.

- Model Refinement and Export: The final model is often refined through manual editing or automated procedures. It can then be exported in various formats for use in CAD software, GIS systems, or 3D printing.
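
The planning step usually starts with two numbers: the ground sample distance (GSD) and the spacing between exposures for a chosen overlap. The following is a minimal sketch assuming a nadir-pointing camera over flat ground; the function names and the camera values are hypothetical and only serve to show the arithmetic.

```python
def ground_sample_distance(sensor_width_mm, focal_length_mm, altitude_m, image_width_px):
    """Ground size of one pixel in metres (nadir view, flat terrain assumed)."""
    return (sensor_width_mm * altitude_m) / (focal_length_mm * image_width_px)


def exposure_spacing(footprint_m, overlap):
    """Distance between exposures (or flight lines) for an overlap fraction in [0, 1)."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be a fraction between 0 and 1")
    return footprint_m * (1.0 - overlap)


# Hypothetical camera: 13.2 mm wide sensor, 8.8 mm lens, 5472 x 3648 px, flown at 100 m.
gsd = ground_sample_distance(13.2, 8.8, 100.0, 5472)
footprint_w = gsd * 5472   # ground width covered by one image
footprint_h = gsd * 3648   # ground height covered by one image

forward_spacing = exposure_spacing(footprint_h, 0.80)  # along the flight line
side_spacing = exposure_spacing(footprint_w, 0.70)     # between flight lines
print(round(gsd * 100, 2), "cm/px", round(forward_spacing, 1), "m", round(side_spacing, 1), "m")
```

Here the long image side is assumed to lie across the flight direction; swapping the two footprints covers the other mounting.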

Q 4. Explain the concept of image orientation and its importance.

Image orientation refers to determining the precise position and orientation (rotation) of each camera in 3D space at the moment the image was captured, known as its exterior orientation. This is crucial because it provides the spatial context needed to reconstruct the 3D model. Each image has six degrees of freedom: three for position (X, Y, Z coordinates) and three for rotation (roll, pitch, yaw, or equivalently omega, phi, kappa in photogrammetric notation). If the orientation is inaccurate, the resulting 3D model will be distorted. Software typically matches common features between images and then runs a bundle adjustment, which iteratively refines the camera positions and orientations (and the 3D tie points) to minimize the reprojection error, i.e., the distance between where a point is observed in an image and where the current solution predicts it.
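
To show how the six orientation parameters are used, here is a small sketch that builds a rotation matrix from roll, pitch, and yaw and projects a 3D point into an image. It assumes one particular convention (R = Rz(yaw) * Ry(pitch) * Rx(roll), camera looking along its +Z axis, no lens distortion); aerial photogrammetry packages often use different axis and angle conventions, so treat the names and signs here as illustrative.

```python
import math


def rotation_matrix(roll, pitch, yaw):
    """R = Rz(yaw) * Ry(pitch) * Rx(roll); angles in radians (assumed convention)."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def project(point, camera_position, R, focal_px):
    """Project a world point to image coordinates relative to the principal point.

    The columns of R are taken to be the camera axes expressed in world coordinates.
    """
    d = [p - c for p, c in zip(point, camera_position)]
    # Camera-frame coordinates are R^T * d.
    xc, yc, zc = (sum(R[i][j] * d[i] for i in range(3)) for j in range(3))
    if zc <= 0:
        raise ValueError("point is behind the camera")
    return focal_px * xc / zc, focal_px * yc / zc


identity = rotation_matrix(0.0, 0.0, 0.0)
u, v = project((1.0, 2.0, 10.0), (0.0, 0.0, 0.0), identity, 1000.0)

# Rotate the camera 90 degrees about Z (its viewing axis in this sketch).
yawed = rotation_matrix(0.0, 0.0, math.pi / 2)
u2, v2 = project((1.0, 2.0, 10.0), (0.0, 0.0, 0.0), yawed, 1000.0)
print((u, v), (round(u2, 6), round(v2, 6)))
```

The same point lands at a different image location once the camera is rotated, which is why an error in any of the six parameters propagates directly into the reconstructed geometry.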

Q 5. How do you handle challenges like occlusion and shadows in photogrammetry?

Occlusion (parts of the object being hidden) and shadows are common challenges in photogrammetry. Here’s how we handle them:

- Occlusion: The best way to mitigate occlusion is to take multiple images from various angles to capture all sides of the object. The more images and the more varied the viewpoints, the less occlusion will affect the final model.

- Shadows: Shadows reduce the amount of information available to the software for feature extraction. Ideally, imaging should be conducted under even lighting conditions. If shadows are unavoidable, multiple image acquisitions at different times of day can help improve the results. Some software has advanced algorithms to handle challenging lighting conditions.

In cases with significant occlusion or shadows, acquiring additional images or employing advanced processing techniques might be necessary to obtain an acceptable result.

Q 6. What are the different types of point clouds and their applications?

Point clouds come in different types, each with its own applications:

- Unorganized Point Clouds: These are simply a collection of 3D points without any specific order or structure. They are often the initial output of photogrammetry software.

- Organized Point Clouds: These are structured point clouds, typically arranged in a regular grid, allowing for efficient processing and visualization. They are often used in applications requiring consistent point density and easy access to spatial information.

- Colored Point Clouds: These point clouds contain color information associated with each point, providing a visual representation of the surface’s appearance. They’re widely used in visualization and modeling.

- Classified Point Clouds: These are point clouds where each point has been assigned a classification, such as ground, vegetation, or building. This classification helps to segregate and analyze different aspects of a scene (common in GIS applications).

The type of point cloud generated depends on the application and the software used.

Q 7. Explain the difference between dense and sparse point clouds.

The key difference lies in the density of points.

- Sparse Point Clouds: Contain a relatively small number of points, representing key features of the object or scene. They are generated early in the photogrammetry pipeline and are mainly used for orientation and initial model creation. They are less computationally intensive to process.

- Dense Point Clouds: These contain a very large number of points, providing a detailed representation of the object’s surface. They are generated after image alignment and are used to create high-resolution 3D models. They require significant computing power and storage.

Imagine building a model with Lego bricks. A sparse point cloud would be like using just a few large bricks to outline the shape, while a dense point cloud would be like using thousands of tiny bricks to create a detailed and accurate replica.

Q 8. Describe various methods for point cloud filtering and cleaning.

Point cloud filtering and cleaning is crucial for removing noise and unwanted data from a photogrammetric point cloud, improving the quality and usability of the final 3D model. Think of it like editing a photo – you wouldn’t leave all the dust spots and blemishes in, right? Similarly, we need to clean up our point cloud.

Several methods exist, each targeting different types of noise or errors:

- Statistical Filtering: This removes points that deviate significantly from their neighbors based on statistical measures like standard deviation. Imagine a perfectly smooth surface with a few unexpectedly high points; statistical filtering would identify and remove these outliers.

- Radius Filtering: This removes points based on the density of points within a specified radius. Sparsely populated areas or isolated points are often removed. This is useful for eliminating noise caused by sensor limitations or occlusions.

- Voxel Grid Filtering: This technique divides the point cloud into a grid of voxels (3D pixels). Then, it either keeps the average point or the point closest to the center within each voxel, effectively reducing the point cloud’s density and smoothing the surface. Think of it like creating a low-resolution version of your point cloud.

- Region Growing: This method groups points based on their spatial proximity and similarity in attributes. Points not belonging to a significant region or cluster are then classified as noise and removed. It’s useful in separating distinct objects or features.

- Classification-based Filtering: Sophisticated algorithms can classify points into different categories (e.g., ground, vegetation, buildings). This allows for selective removal of unwanted classes from the point cloud.

The choice of filtering method depends heavily on the specific application and the nature of the noise present in the data. Often, a combination of techniques is used to achieve optimal results. For example, I might start with voxel grid filtering to reduce density, followed by statistical filtering to remove remaining outliers.
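
The two-step combination mentioned above (voxel grid first, then statistical filtering) can be sketched with the standard library alone. This is a brute-force illustration on a tiny synthetic cloud, not how production libraries implement it; the function names, the neighbor count `k`, and the `std_ratio` threshold are assumptions chosen for the example.

```python
import math
from collections import defaultdict
from statistics import mean, pstdev


def voxel_grid_filter(points, voxel_size):
    """Replace all points that fall into the same voxel with their centroid."""
    cells = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / voxel_size) for c in p)
        cells[key].append(p)
    return [tuple(mean(axis) for axis in zip(*pts)) for pts in cells.values()]


def statistical_outlier_filter(points, k=3, std_ratio=1.0):
    """Drop points whose mean distance to their k nearest neighbours is unusually large."""
    mean_dists = []
    for i, p in enumerate(points):
        # Brute-force neighbour search: fine for a demo, too slow for real clouds.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_dists.append(mean(dists[:k]))
    threshold = mean(mean_dists) + std_ratio * pstdev(mean_dists)
    return [p for p, d in zip(points, mean_dists) if d <= threshold]


# Synthetic data: a flat 5 x 5 patch plus one point floating 5 m above it.
grid = [(i * 0.1, j * 0.1, 0.0) for i in range(5) for j in range(5)]
noisy = grid + [(0.2, 0.2, 5.0)]

downsampled = voxel_grid_filter(noisy, voxel_size=0.25)
cleaned = statistical_outlier_filter(downsampled, k=3, std_ratio=1.0)
print(len(noisy), len(downsampled), len(cleaned))
```

With real data a spatial index such as a k-d tree replaces the brute-force neighbor search, and the threshold usually needs tuning per dataset.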

Q 9. How do you perform georeferencing in photogrammetry?

Georeferencing in photogrammetry involves assigning real-world coordinates (latitude, longitude, elevation) to the 3D model. This means transforming the model from a relative coordinate system to a known geographic coordinate system. Think of it like adding a GPS tag to your 3D model, allowing it to be accurately placed on a map.

The most common method utilizes Ground Control Points (GCPs). These are points with known coordinates measured in the field using a survey-grade GNSS receiver or a total station. The software then uses the GCPs to tie the model to the real-world coordinate system, either by fitting a 3D similarity transformation (scale, rotation, translation) to an already-aligned model or by including the GCPs as constraints in the bundle adjustment, which minimizes the errors between the measured and calculated coordinates.

Another method is using georeferenced imagery. If the input images themselves possess geographic information, the software can use this data directly for georeferencing. The accuracy of this method heavily depends on the accuracy of the image metadata.

Finally, for projects where GCP acquisition is difficult or impossible, approximate georeferencing can be performed using available maps or digital elevation models (DEMs). This involves using features in the images to manually match them to features in a known reference dataset. This method is significantly less accurate than using GCPs.
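
The idea of fitting a model to control points can be illustrated with a planimetric simplification: a 2D similarity (Helmert) transformation estimated by least squares. Full georeferencing works in 3D and is normally handled inside the photogrammetry software, so the sketch below is only an analogy under that stated simplification. It uses complex numbers so that scale and rotation become a single coefficient; all names and coordinates are made up.

```python
import cmath
import math


def fit_similarity_2d(model_xy, ground_xy):
    """Least-squares fit of ground = a * model + b with complex a (scale, rotation) and b (shift)."""
    z = [complex(x, y) for x, y in model_xy]
    w = [complex(e, n) for e, n in ground_xy]
    zc = sum(z) / len(z)
    wc = sum(w) / len(w)
    num = sum((zi - zc).conjugate() * (wi - wc) for zi, wi in zip(z, w))
    den = sum(abs(zi - zc) ** 2 for zi in z)
    a = num / den
    b = wc - a * zc
    return a, b


def apply_similarity_2d(a, b, xy):
    """Transform one model point into ground coordinates."""
    p = a * complex(*xy) + b
    return p.real, p.imag


# Made-up control points: the model is scaled by 2, rotated 90 degrees, shifted by (100, 200).
model_points = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
ground_points = [(100.0, 200.0), (100.0, 202.0), (98.0, 200.0)]

a, b = fit_similarity_2d(model_points, ground_points)
scale = abs(a)
rotation_deg = math.degrees(cmath.phase(a))
check_point = apply_similarity_2d(a, b, (1.0, 1.0))
print(round(scale, 6), round(rotation_deg, 6), check_point)
```

With more control points than unknowns, the leftover residuals at each point give a first indication of how well the model fits the survey.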

Q 10. What software packages are you familiar with for photogrammetry?

My experience spans several widely-used photogrammetry software packages. I am proficient in:

- Agisoft Metashape: A powerful and versatile software known for its user-friendly interface and ability to handle large datasets.

- Pix4Dmapper: Excellent for processing high-resolution imagery and creating accurate 3D models, particularly known for its strengths in automation.

- RealityCapture: A professional-grade software package offering a comprehensive suite of tools for processing various data sources, including laser scans and point clouds, in addition to photogrammetry.

- Meshroom: An open-source alternative that’s gaining popularity due to its free availability and community support. While not as feature-rich as commercial software, it provides a good foundation for learning and specific tasks.

My familiarity with these packages allows me to select the optimal tool for any given project based on factors such as dataset size, accuracy requirements, and budget constraints.

Q 11. Explain the concept of orthorectification and its benefits.

Orthorectification is the process of removing geometric distortions from an image so that the result is a georeferenced, orthographic projection with uniform scale. Think of it like straightening a slightly skewed photograph to make it perfectly square and aligned with a map.

The main benefit is that it produces a true representation of the surface, free from distortions caused by terrain relief, camera tilt, and lens distortion. Distances and areas measured on an orthorectified image are accurate and directly relatable to ground reality. It’s incredibly useful for accurate measurements, mapping, and creating seamless mosaics. For example, an orthorectified image is essential for accurately measuring the area of a field or planning infrastructure projects.

The process typically involves using a Digital Elevation Model (DEM) to account for terrain variations and applying corrections to eliminate geometric distortions. The output is an orthomosaic – a georeferenced, geometrically-corrected composite of multiple images which can be used as an accurate map.
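
Why a DEM is needed becomes clear from the relief displacement formula for a vertical photograph, d = r * h / H: the higher a point is above the reference surface and the farther it is from the image centre, the more it is shifted. The sketch below simply evaluates that formula; the function name and the numbers are illustrative.

```python
def relief_displacement(radial_distance_mm, object_height_m, flying_height_m):
    """Radial image shift d = r * h / H of a point at height h on a vertical photo.

    radial_distance_mm -- distance of the imaged point from the nadir point in the image
    object_height_m    -- height of the point above the reference surface
    flying_height_m    -- camera height above the same reference surface
    """
    if flying_height_m <= 0:
        raise ValueError("flying height must be positive")
    return radial_distance_mm * object_height_m / flying_height_m


# Illustrative values: a 50 m tall structure imaged 80 mm from the nadir point at 1000 m.
shift_edge = relief_displacement(80.0, 50.0, 1000.0)
shift_centre = relief_displacement(0.0, 50.0, 1000.0)
print(shift_edge, shift_centre)
```

Orthorectification removes exactly this height-dependent shift, pixel by pixel, using the elevation from the DEM.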

Q 12. How do you assess the accuracy of a photogrammetric model?

Assessing the accuracy of a photogrammetric model is crucial for ensuring the reliability of the results. This is typically achieved using several techniques:

- Comparison with GCPs: The most common method is to compare the coordinates of GCPs in the model with their known ground truth coordinates. The difference represents the error, which is often expressed as Root Mean Square Error (RMSE). A lower RMSE indicates higher accuracy.

- Independent Check Points (ICPs): Similar to GCPs, but these points are not used during the model creation process. They serve as an independent check of the model’s accuracy. This provides a more objective assessment as the model wasn’t fitted to these points.

- Visual Inspection: A thorough visual examination can identify gross errors or inconsistencies in the model. This is particularly helpful for detecting large-scale errors that might be missed by quantitative methods.

It’s important to always specify the error metrics and methods used when reporting the accuracy of a photogrammetric model for clarity and reproducibility.
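
As a concrete illustration of the RMSE figures mentioned above, the following sketch computes per-axis, horizontal, and 3D RMSE from a list of check points. The function name, the dictionary keys, and the coordinates are invented for the example.

```python
import math


def rmse_report(measured, reference):
    """Per-axis, horizontal, and 3D RMSE between model and surveyed coordinates."""
    if len(measured) != len(reference) or not measured:
        raise ValueError("need two equally sized, non-empty point lists")
    n = len(measured)
    sums = [0.0, 0.0, 0.0]
    for m, r in zip(measured, reference):
        for axis in range(3):
            sums[axis] += (m[axis] - r[axis]) ** 2
    rx, ry, rz = (math.sqrt(s / n) for s in sums)
    return {
        "x": rx,
        "y": ry,
        "z": rz,
        "horizontal": math.hypot(rx, ry),
        "3d": math.sqrt(rx ** 2 + ry ** 2 + rz ** 2),
    }


# Invented check points: surveyed coordinates versus coordinates read from the model.
reference = [(100.0, 200.0, 50.0), (150.0, 250.0, 55.0)]
measured = [(100.03, 200.04, 50.12), (149.97, 249.96, 54.88)]

report = rmse_report(measured, reference)
print({name: round(value, 3) for name, value in report.items()})
```

Reporting the horizontal and vertical components separately is useful because vertical error is often noticeably larger than horizontal error.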

Q 13. Describe different error sources in photogrammetry.

Photogrammetry is susceptible to several error sources that can affect the accuracy and reliability of the final model. These can be broadly categorized as:

- Geometric Errors: These are related to the camera and its geometry. Examples include lens distortion, camera calibration errors, and inaccurate camera orientation.

- Image Errors: These arise from limitations in the imagery itself. Examples are sensor noise, atmospheric conditions (haze, fog), and image blurring.

- Measurement Errors: Inaccurate measurement of GCPs or ICPs using GPS or total station instruments can introduce errors into the georeferencing process.

- Software Errors: Bugs or limitations in the photogrammetry software itself can contribute to errors in the model. Software algorithms may not handle all scenarios perfectly, especially in challenging datasets.

- Data Processing Errors: Errors can occur during the image processing workflow, for instance, during feature extraction, matching, or point cloud processing.

Understanding these error sources is crucial for designing an effective data acquisition strategy and applying appropriate processing techniques to minimize their impact.

Q 14. Explain the role of ground control points (GCPs) in photogrammetry.

Ground Control Points (GCPs) are points with known real-world coordinates that are identifiable in the images used for photogrammetry. They are the backbone of accurate georeferencing. Think of them as anchors that fix the 3D model in its correct location and orientation in the real world.

The process involves identifying these points in the field (using a GPS receiver or Total Station) and then precisely locating their corresponding positions within the images. The software then uses these correspondences to calculate the camera positions and orientations, resulting in a georeferenced 3D model. Without GCPs, the model would only be accurate relative to itself; it would ‘float’ in space without a defined real-world location. The number and distribution of GCPs significantly impact the final accuracy, with a greater number of GCPs, well distributed across the scene, generally leading to more accurate results.

Q 15. What are the advantages and disadvantages of using UAVs for photogrammetry?

Unmanned Aerial Vehicles (UAVs), or drones, have revolutionized photogrammetry, offering significant advantages but also presenting certain challenges.

Advantages:

- Accessibility and Cost-Effectiveness: UAVs are relatively affordable and easy to deploy, making them ideal for projects with limited budgets or challenging terrain.

- Flexibility and Maneuverability: They can access areas inaccessible to traditional methods, such as steep cliffs or dense forests, allowing for detailed data acquisition.

- High-Resolution Imagery: Modern UAVs are equipped with high-resolution cameras capable of capturing detailed imagery, leading to high-quality 3D models.

- Rapid Data Acquisition: Data collection is significantly faster than traditional methods like ground surveys.

Disadvantages:

- Weather Dependency: UAV operations are highly dependent on favorable weather conditions; wind, rain, and low visibility can significantly impact data quality and safety.

- Regulatory Restrictions: Operating UAVs requires adherence to strict regulations, including obtaining necessary permits and licenses. These regulations vary by location.

- Battery Life Limitations: The flight time of UAVs is limited by battery life, necessitating careful planning and potentially multiple flights for larger projects.

- Image Processing Challenges: While the data acquisition is efficient, processing large datasets from UAVs requires significant computing power and expertise.

For instance, I once used a UAV to create a 3D model of a historical building inaccessible by ground vehicles. The UAV’s maneuverability allowed us to capture detailed imagery of the building’s intricate details, resulting in a highly accurate and visually appealing 3D model that would have been impossible to create using traditional methods.

Q 16. How do you handle large datasets in photogrammetry?

Handling large photogrammetry datasets efficiently involves a multi-pronged approach combining smart data acquisition strategies with powerful processing techniques.

- Optimized Data Acquisition: Careful planning of flight paths and camera settings minimizes redundant data and ensures sufficient image overlap for robust model generation. This includes employing techniques like Ground Control Points (GCPs) for accurate georeferencing.

- Cloud Computing and Processing: Cloud-based platforms such as Pix4Dcloud or Agisoft Cloud (the hosted counterpart to the Metashape desktop software) make substantial computing resources available without burdening local hardware. Work can be spread across several machines, which can significantly shorten processing times.

- Data Subsetting and Region of Interest (ROI) Processing: Instead of processing the entire dataset, focus on specific areas of interest, creating smaller, manageable datasets that are faster to process. This is particularly useful for very large projects.

- Data Compression and File Management: Employing lossless compression techniques for image storage reduces disk space requirements and speeds up data transfer. Efficient file management is crucial for tracking and organizing large datasets.

- Software Optimization: Selecting efficient software and adjusting processing parameters, such as point cloud density and mesh resolution, allows finding a balance between model quality and processing speed.

For example, when processing a multi-gigabyte dataset from a large infrastructure project, I opted for cloud processing to divide the workload across multiple servers. By using ROI processing and carefully adjusting parameters, I was able to generate a high-quality 3D model within a reasonable timeframe without compromising detail in the key areas.

Q 17. Explain the concept of texture mapping in 3D models.

Texture mapping is the process of applying a 2D image (the texture) onto a 3D model’s surface, creating a visually realistic representation. Imagine painting a 3D sculpture – the paint is the texture, and the sculpture’s surface is the 3D model.

The texture provides visual detail to the otherwise bland, geometric surface of the 3D model. Without texture, a 3D model might consist of polygons without any surface information. The texture adds color, patterns, and overall realism.

The process involves several steps: creating a 2D image (typically from photos), aligning this image with the 3D model’s geometry, and assigning the image data to the model’s polygons. The result is a textured 3D model that appears much more lifelike.

Tools such as MeshLab and Blender offer comprehensive texture mapping features. The quality of texture mapping directly impacts the realism and usability of the 3D model, making it crucial for creating high-quality visualizations and simulations.

Q 18. Describe different methods for 3D model simplification and optimization.

3D model simplification and optimization are crucial for improving performance, especially when dealing with large or complex models. These techniques reduce the number of polygons or vertices without significantly compromising visual fidelity. Think of it like reducing the number of pixels in an image while retaining the key features.

- Decimation: This method reduces the number of polygons by selectively removing vertices and faces while preserving the overall shape. Several algorithms exist, each with trade-offs in speed and quality.

- Level of Detail (LOD): This technique creates multiple versions of the model with varying levels of detail. A lower LOD uses fewer polygons for distant views, improving performance while a higher LOD provides detailed views for close-up inspections.

- Remeshing: This involves reconstructing the model with a new mesh, potentially with a lower polygon count or improved topology. This is useful for fixing imperfections or optimizing the model’s structure.

- Simplification Algorithms: Edge-collapse decimation driven by quadric error metrics (exposed in MeshLab as Quadric Edge Collapse Decimation) and progressive meshes are designed to simplify meshes while minimizing visual artifacts. The choice of algorithm depends on the desired level of simplification and the model’s characteristics.

In a recent project, I used a combination of decimation and LOD techniques to optimize a large point cloud of a forest. The lower LOD was used for visualization and analysis of the entire forest area, and higher LODs were generated for detailed investigation of specific trees. This enabled smooth and efficient interactions in the software, even with the massive dataset.

Q 19. What are the ethical considerations in using photogrammetry?

Ethical considerations in photogrammetry are multifaceted and demand careful attention.

- Privacy: Photogrammetry can capture highly detailed imagery, potentially including individuals or sensitive locations. Obtaining necessary permissions and ensuring anonymization when necessary are crucial for protecting privacy.

- Data Security: Photogrammetric data can be highly valuable and sensitive. Secure storage, access controls, and appropriate data handling procedures must be implemented to prevent unauthorized access or misuse.

- Intellectual Property: If photogrammetry is used to model a structure or object owned by someone else, proper permissions and credit attribution are necessary.

- Transparency: It’s important to be transparent about data acquisition methods and any modifications made to the 3D model. Avoiding misrepresentation of the data is critical for maintaining credibility.

- Environmental Impact: While UAVs are generally less impactful than traditional survey methods, care should be taken to minimize disturbances to the environment, particularly in sensitive ecosystems.

For example, when creating a 3D model of a residential area, I ensured that all photos were taken from public spaces, avoiding the capture of individuals’ private property without explicit consent.

Q 20. How do you ensure the quality and accuracy of your photogrammetric products?

Ensuring quality and accuracy in photogrammetric products is paramount. This involves attention to detail at every stage of the process.

- Careful Planning: Meticulous planning is key, including selecting appropriate camera settings, sufficient image overlap, and strategically placed Ground Control Points (GCPs) for accurate georeferencing. This also involves considering weather conditions and potential obstacles.

- High-Quality Imagery: Using high-resolution cameras and stable platforms ensures sharp, well-exposed images with minimal distortion. Avoiding motion blur and other image artifacts is crucial.

- Ground Control Points (GCPs): GCPs provide known coordinates, enabling accurate georeferencing and geometric correction of the 3D model. Precise and reliable GCP measurements are vital for accuracy.

- Rigorous Software Processing: Employing suitable photogrammetry software and carefully reviewing the processing parameters is crucial for generating accurate and detailed 3D models. This includes checking for errors and inconsistencies in the model.

- Quality Control and Validation: Once the model is created, rigorous quality control checks are necessary. This may involve comparing the 3D model to existing data, performing visual inspections for anomalies and carrying out dimensional checks.

For example, in a recent survey, the inclusion of precisely surveyed GCPs reduced the positional error of the 3D model by more than 50% compared to a project without adequate GCPs.

Q 21. Describe your experience with different sensor types (e.g., RGB, multispectral, LiDAR).

My experience encompasses various sensor types, each offering unique capabilities for photogrammetric applications.

- RGB Cameras: These are standard cameras capturing visible light in red, green, and blue channels. They provide excellent color information and are widely used for creating visually appealing 3D models. I’ve extensively used RGB cameras for architectural modeling, creating detailed 3D models of buildings and structures.

- Multispectral Cameras: These cameras capture images in multiple wavelengths beyond the visible spectrum, including near-infrared (NIR) and red-edge bands. This data is invaluable for applications such as precision agriculture, vegetation analysis, and environmental monitoring. I’ve employed multispectral data to assess crop health and monitor deforestation.

- LiDAR (Light Detection and Ranging): LiDAR sensors use laser pulses to measure distances, producing point clouds of the surface directly rather than deriving them from images. Because the pulses can pass through gaps in vegetation, LiDAR is particularly well suited to Digital Terrain Models (DTMs) as well as Digital Surface Models (DSMs). I’ve utilized LiDAR data to create 3D terrain models for infrastructure planning and environmental management.

Each sensor type has strengths and weaknesses; the optimal choice depends on the specific application and desired outputs. Combining data from different sensors (e.g., RGB and multispectral) often leads to more comprehensive and informative results.

Q 22. Explain your understanding of different camera calibration techniques.

Camera calibration is crucial in photogrammetry because it determines the intrinsic and extrinsic parameters of a camera. Intrinsic parameters describe the internal geometry of the camera, such as focal length, principal point, and lens distortion coefficients. Extrinsic parameters define the camera’s position and orientation in 3D space at the time of image capture. There are several techniques to achieve this:

- Direct Linear Transformation (DLT): A classic method using corresponding points in the image and their known 3D coordinates. It’s computationally efficient but sensitive to noise.

- Bundle Adjustment: This iterative optimization technique refines both camera parameters and 3D point positions simultaneously, minimizing the reprojection error. It’s more accurate but computationally intensive. It’s often the gold standard for high-accuracy photogrammetry.

- Self-Calibration: This technique estimates camera parameters from multiple overlapping images without using a calibration target. It relies on the geometric constraints that a rigid scene imposes across many views, and in practice the intrinsic parameters are usually solved as additional unknowns in the bundle adjustment. It is useful when a calibration target is impractical or unavailable.

- Camera Calibration Targets: Using a precisely manufactured target with known geometric features (e.g., checkerboard patterns) provides highly accurate ground truth data for calibration. Software can easily identify these features and estimate camera parameters.

The choice of technique depends on the project requirements, available resources (time and computational power), and the accuracy needed. For instance, while DLT is fast, bundle adjustment is preferred when high accuracy is crucial, such as in precision engineering applications or architectural modeling.
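
The lens distortion coefficients mentioned among the intrinsic parameters are commonly modelled with a polynomial in the radial distance. The sketch below applies a two-coefficient radial model to normalized image coordinates and inverts it by fixed-point iteration. It deliberately omits tangential terms, and the coefficient values are made up, so it illustrates the idea and does not reproduce any specific software’s model.

```python
def distort(x, y, k1, k2):
    """Apply radial distortion to normalized image coordinates (radial terms only)."""
    r2 = x * x + y * y
    factor = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * factor, y * factor


def undistort(xd, yd, k1, k2, iterations=10):
    """Invert the radial model by fixed-point iteration, starting from the distorted point."""
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        factor = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / factor, yd / factor
    return x, y


# Made-up coefficients describing mild barrel distortion.
k1, k2 = -0.1, 0.01
xd, yd = distort(0.3, 0.2, k1, k2)
xu, yu = undistort(xd, yd, k1, k2)
print((round(xd, 6), round(yd, 6)), (round(xu, 6), round(yu, 6)))
```

The iteration converges quickly for moderate distortion; strongly distorted lenses such as fisheyes generally need a different model.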

Q 23. How do you deal with atmospheric effects (e.g., haze, fog) on image quality?

Atmospheric effects like haze and fog significantly impact image quality by reducing contrast and introducing color distortions. Dealing with them requires a multi-pronged approach:

- Image Acquisition Strategies: Careful planning of image acquisition can mitigate the effects. Ideally, capturing images under optimal weather conditions is the best solution. If this isn’t possible, shooting at times with less haze or fog is preferred.

- Atmospheric Correction Algorithms: Several algorithms can estimate and correct for atmospheric scattering. These algorithms often utilize dark object subtraction or physical models to account for haze and fog’s influence on image intensity and color.

- Pre-processing Techniques: Techniques like dehazing filters in image processing software can improve image clarity. These often use dark channel prior or guided image filtering techniques to enhance contrast and remove haze artifacts.

- Post-processing Adjustments: In some cases, manual adjustments for brightness, contrast, and color balance might be necessary to refine the images after applying atmospheric correction algorithms.

The selection of the appropriate method hinges on the severity of the atmospheric effects and the available computational resources. For instance, complex atmospheric correction models might require significant computational resources, whereas simple dehazing filters may be sufficient for mild atmospheric conditions.
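
Dark object subtraction, the simplest of the corrections named above, rests on the assumption that the darkest pixel in a band should be close to zero, so whatever signal it carries is treated as haze and subtracted everywhere. The sketch below shows that idea on a tiny single-band image stored as nested lists; the function name and pixel values are invented.

```python
def dark_object_subtraction(band):
    """Subtract the darkest pixel value of a band from every pixel in that band."""
    dark_value = min(min(row) for row in band)
    return [[value - dark_value for value in row] for row in band]


# Invented 2 x 2 band in which haze has lifted every value by roughly 50 counts.
hazy_band = [
    [52, 60],
    [75, 200],
]
corrected_band = dark_object_subtraction(hazy_band)
print(corrected_band)
```

Practical implementations usually take a low percentile instead of the absolute minimum to avoid being driven by a single noisy pixel, and they treat each spectral band separately.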

Q 24. What is your experience with Structure from Motion (SfM) techniques?

Structure from Motion (SfM) is a powerful technique that reconstructs 3D models from a set of 2D images. My experience involves using various SfM software packages such as Agisoft Metashape, Pix4D, and Meshroom. I’m proficient in the entire SfM pipeline, including:

Image Alignment: Identifying and matching feature points across multiple images to establish correspondences. This forms the basis for camera pose estimation.

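Camera Pose Estimation: Determining the position and orientation of each camera during image capture. An initial image pair is typically oriented from the feature correspondences using epipolar geometry, and further cameras are added by solving the Perspective-n-Point (PnP) problem against points that have already been triangulated.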

3D Point Cloud Generation: Triangulating the matched feature points to create a sparse 3D point cloud representing the scene geometry.

Mesh Reconstruction: Creating a surface model by connecting the 3D points, using algorithms such as Delaunay triangulation or Poisson surface reconstruction.

Texture Mapping: Projecting the image information onto the 3D mesh to create a realistic textured model.

I’ve successfully used SfM in numerous projects, from architectural modeling and archaeological site documentation to creating terrain models from drone imagery.
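The triangulation step in that pipeline can be sketched in a few lines. The example below is a simplified linear (DLT) triangulation of a single point from two views with known projection matrices. The camera intrinsics, baseline, and test point are made-up values for illustration, and production SfM software refines such estimates with bundle adjustment.

```python
import numpy as np

def triangulate_point(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one 3D point from two views.

    P1, P2: 3x4 camera projection matrices.
    x1, x2: (u, v) pixel coordinates of the same feature in each image.
    """
    # Each observation contributes two linear equations in the homogeneous point.
    A = np.array([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]

def project(P, X):
    """Project a 3D point into an image with projection matrix P."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

# Hypothetical setup: identical cameras, the second shifted 1 unit along +X.
K = np.array([[1000.0, 0.0, 640.0], [0.0, 1000.0, 480.0], [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])

X_true = np.array([0.5, -0.2, 8.0])
X_est = triangulate_point(P1, P2, project(P1, X_true), project(P2, X_true))
```

With noise-free observations the estimate matches the true point to numerical precision. With real feature matches, the residual reprojection error is what bundle adjustment then minimizes.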

Q 25. Explain your experience with Multi-View Stereo (MVS) techniques.

Multi-View Stereo (MVS) techniques build upon SfM: where SfM yields camera poses and a sparse point cloud, MVS uses those poses to generate dense 3D point clouds and models. My experience encompasses various MVS algorithms, including:

Patch-based MVS: This approach compares image patches to estimate the depth of each pixel by finding the best matching patch in multiple images.

Voxel-based MVS: This method discretizes the 3D space into voxels and determines the occupancy of each voxel based on image information.

Learning-based MVS: Recent advancements leverage deep learning techniques to improve the accuracy and efficiency of MVS. Convolutional Neural Networks (CNNs) are used to learn complex relationships between image data and 3D geometry.

I understand the trade-offs between different MVS algorithms regarding computational cost, accuracy, and memory requirements. For instance, patch-based MVS can be computationally expensive, while voxel-based methods can be memory-intensive. My experience allows me to choose the optimal algorithm depending on the project’s complexity and the available resources.
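To illustrate the patch-matching idea behind patch-based MVS, the sketch below scores candidate matches with normalized cross-correlation (NCC). It is deliberately simplified: it assumes a rectified two-image pair, so the search runs along a single image row, whereas real MVS pipelines test depth hypotheses across many views. The function names and window sizes are illustrative.

```python
import numpy as np

def ncc(a, b):
    """Normalized cross-correlation between two equally sized patches."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0

def best_disparity(left, right, row, col, half=3, max_disp=20):
    """Return the disparity whose patch in `right` best matches `left`."""
    ref = left[row - half:row + half + 1, col - half:col + half + 1]
    scores = []
    for d in range(max_disp + 1):
        c = col - d
        if c - half < 0:
            break  # candidate window would leave the image
        cand = right[row - half:row + half + 1, c - half:c + half + 1]
        scores.append(ncc(ref, cand))
    return int(np.argmax(scores))

# Synthetic pair: the right image is the left image shifted by a known amount.
rng = np.random.default_rng(1)
left = rng.random((40, 80))
true_disp = 7
right = np.roll(left, -true_disp, axis=1)
disp = best_disparity(left, right, row=20, col=40)
```

The nested search over every pixel and every hypothesis is what makes patch-based methods computationally expensive. Larger windows are more robust on weak texture but blur depth discontinuities.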

Q 26. Describe your proficiency in scripting languages for automating photogrammetry workflows.

I am proficient in Python and its relevant libraries (OpenCV, NumPy, SciPy) for automating photogrammetry workflows. I’ve used scripting to:

Batch process images: Automate tasks such as image renaming, conversion, and preprocessing.

Control SfM and MVS software: Automate the import of images, parameter settings, and processing steps, reducing manual intervention.

Post-process results: Automate tasks like mesh simplification, texture optimization, and model export.

Integrate with other GIS/CAD software: Develop scripts to seamlessly transfer data between photogrammetry software and other systems.

For example, I’ve developed a Python script that automatically processes a large dataset of drone images, performing image alignment, point cloud generation, mesh reconstruction, and texture mapping using Agisoft Metashape. This reduced the processing time from several days to a few hours.

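    # Example Python code snippet (illustrative): launch Metashape to run a processing script.
    # Assumes a `metashape` executable on PATH; `process_project.py` is a placeholder
    # script that would open the project (e.g. myproject.psx) and run the processing steps.
    import subprocess
    subprocess.run(['metashape', '-r', 'process_project.py'], check=True)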
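The batch-renaming task mentioned above is easy to show with the standard library alone. This is a minimal sketch: the naming scheme (`<prefix>_0001.jpg`) and the list of extensions are assumptions for illustration, and the demonstration runs on throwaway files in a temporary folder.

```python
from pathlib import Path
import tempfile

def rename_images(folder, prefix, extensions=(".jpg", ".jpeg", ".tif", ".tiff", ".png")):
    """Rename images to <prefix>_0001.ext, <prefix>_0002.ext, ... in sorted order.

    Returns a list of (old_name, new_name) pairs for logging.
    """
    folder = Path(folder)
    images = sorted(
        p for p in folder.iterdir()
        if p.is_file() and p.suffix.lower() in extensions
    )
    renamed = []
    for index, path in enumerate(images, start=1):
        target = path.with_name(f"{prefix}_{index:04d}{path.suffix.lower()}")
        if target.exists() and target != path:
            raise FileExistsError(f"Refusing to overwrite {target.name}")
        path.rename(target)
        renamed.append((path.name, target.name))
    return renamed

# Demonstration on empty placeholder files; non-image files are left alone.
with tempfile.TemporaryDirectory() as tmp:
    for name in ("DJI_0103.JPG", "DJI_0101.JPG", "notes.txt", "DJI_0102.JPG"):
        (Path(tmp) / name).touch()
    result = rename_images(tmp, "site_a")
    remaining = sorted(p.name for p in Path(tmp).iterdir())
```

Returning the old and new names makes it straightforward to write a log, which helps when image names must later be traced back to flight records.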

Q 27. How would you approach a project requiring high-accuracy photogrammetry?

High-accuracy photogrammetry demands meticulous planning and execution. My approach involves:

Careful Camera Selection: Using high-resolution cameras with low-distortion lenses is crucial. Metric cameras with calibrated lenses are ideal.

Optimal Image Acquisition: Employing a rigorous image acquisition strategy, including sufficient overlap (typically 60-80%), appropriate camera orientation and spacing, and consistent lighting conditions. Ground control points (GCPs) with precise coordinates are essential for georeferencing and achieving high accuracy.

Robust Processing Techniques: Utilizing advanced SfM and MVS algorithms with bundle adjustment for refinement. Careful consideration of parameters within the photogrammetry software is necessary.

Rigorous Quality Control: Thoroughly checking the results for errors, outliers, and inconsistencies. This includes validating the accuracy of the point cloud, mesh, and textured model.

Post-processing Refinement: Employing techniques such as mesh smoothing, texture optimization, and noise reduction to improve the final model’s quality.

In essence, high-accuracy photogrammetry requires attention to detail in every stage, from planning and image acquisition to processing and post-processing.
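The acquisition planning above can be backed by a quick calculation. The sketch below derives the ground sample distance (GSD) and the spacing between exposures from the overlap percentage, assuming a nadir-looking camera over flat terrain. The camera values are hypothetical and only serve as an example.

```python
def ground_sample_distance(pixel_size_m, focal_length_m, height_m):
    """Ground size of one pixel for a nadir image over flat terrain."""
    return pixel_size_m * height_m / focal_length_m

def exposure_spacing(gsd_m, image_pixels, overlap):
    """Distance between exposures (or flight lines) for a given overlap fraction."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    footprint_m = gsd_m * image_pixels  # ground coverage along this image axis
    return footprint_m * (1.0 - overlap)

# Hypothetical camera: 4.0 micrometre pixels, 24 mm lens, 6000 x 4000 px, flown at 120 m.
gsd = ground_sample_distance(4.0e-6, 0.024, 120.0)
along_track = exposure_spacing(gsd, 4000, 0.80)   # 80% forward overlap
across_track = exposure_spacing(gsd, 6000, 0.60)  # 60% side overlap
```

For these example values the GSD is about 2 cm, with roughly 16 m between exposures and 48 m between flight lines. Over uneven terrain the effective overlap drops on high ground, so plans usually include a margin.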

Q 28. Describe a challenging photogrammetry project you worked on and how you overcame the obstacles.

I once worked on a project to create a high-resolution 3D model of a complex industrial facility with highly reflective surfaces. Those surfaces disrupted feature detection and matching in the SfM pipeline, and many images were unusable due to glare and overexposure. Additionally, the facility’s geometry included many fine details and intricate structures that demanded very high resolution.

To overcome these obstacles, we:

Adjusted Image Acquisition: We used polarizing filters and controlled lighting to reduce glare and improve image quality. We also carefully selected the time of day to minimize harsh sunlight.

Employed Advanced SfM Software: We used a professional-grade software package with robust feature detection and matching algorithms that could handle challenging conditions better than standard solutions.

Increased Image Density: We captured a significantly larger number of images than initially planned to ensure sufficient overlap and data redundancy. This helped the software to overcome the problems caused by the reflective surfaces.

Implemented Careful Post-processing: We spent considerable time cleaning up the resulting point cloud and mesh, manually removing outliers and artifacts that resulted from the challenging conditions.

By implementing these strategies, we successfully generated a highly accurate 3D model of the facility, meeting the client’s requirements despite the initial challenges.

Key Topics to Learn for Photogrammetric Data Acquisition Interview

- Image Acquisition Planning: Understanding flight planning parameters (altitude, overlap, sidelap), camera calibration, and sensor selection for optimal data capture. Practical application: Designing a flight plan for a specific project, considering factors like terrain, weather, and desired accuracy.

- Image Processing and Pre-processing: Familiarizing yourself with techniques like image orientation, georeferencing, and the handling of atmospheric effects. Practical application: Identifying and correcting distortions in images, preparing data for point cloud generation.

- Structure from Motion (SfM) and Multi-View Stereo (MVS): Grasping the theoretical underpinnings of these core techniques. Practical application: Evaluating the accuracy and efficiency of different SfM/MVS software packages, troubleshooting processing errors.

- Point Cloud Processing and Mesh Generation: Understanding point cloud filtering, classification, and the generation of 3D meshes from point clouds. Practical application: Cleaning and preparing a point cloud for further analysis, creating a textured 3D model.

- Accuracy Assessment and Quality Control: Methods for evaluating the accuracy of photogrammetric products, including Ground Control Points (GCPs) and error analysis. Practical application: Identifying and mitigating sources of error in the photogrammetric workflow.

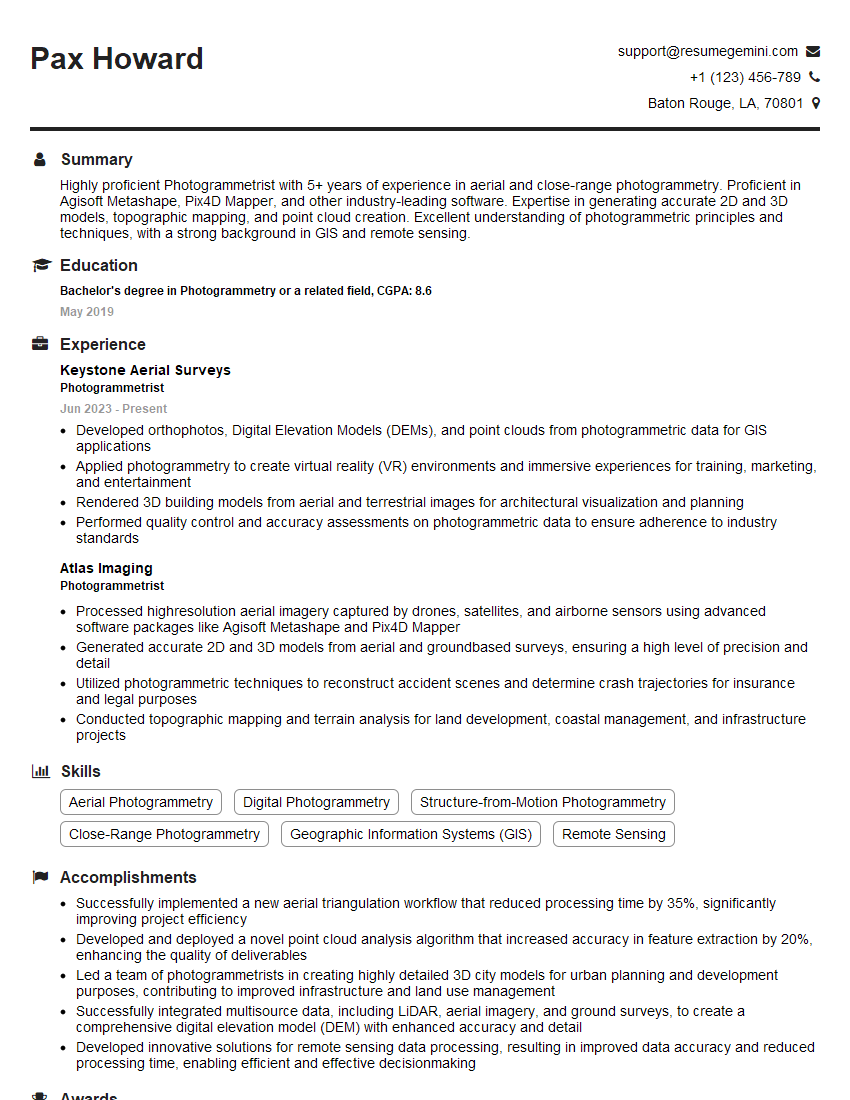

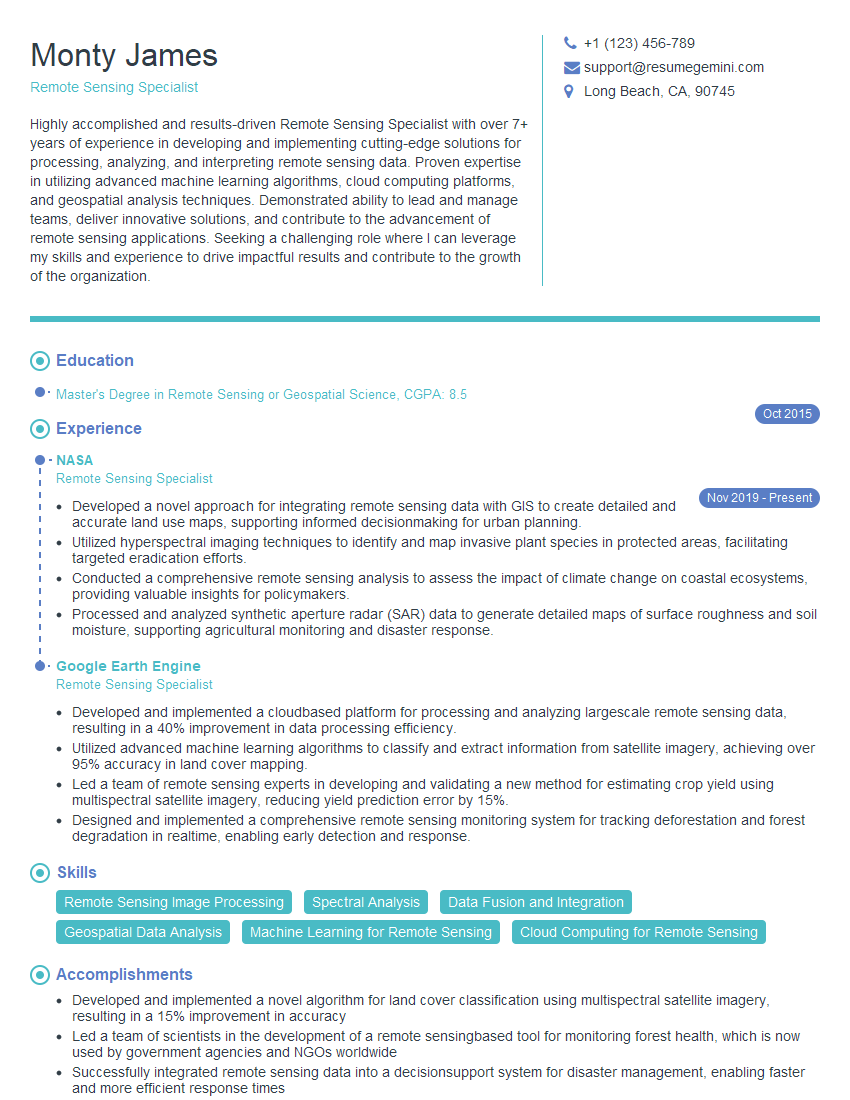

- Software and Hardware Proficiency: Demonstrating familiarity with industry-standard software (e.g., Agisoft Metashape, Pix4D) and relevant hardware (e.g., drones, cameras). Practical application: Describing your experience with specific software and hardware, highlighting successful project deployments.

- Applications in Various Fields: Understanding the diverse applications of photogrammetry in fields such as surveying, mapping, archaeology, and engineering. Practical application: Discussing relevant case studies and your experience in specific applications.
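For the accuracy assessment topic in the list above, a common summary statistic is the root mean square error (RMSE) at check points. The sketch below assumes the check points were surveyed independently and were not used as GCPs in the adjustment. The coordinates are invented for illustration.

```python
import math

def checkpoint_rmse(measured, reference):
    """Return (rmse_x, rmse_y, rmse_z, rmse_3d) for paired 3D points."""
    if len(measured) != len(reference) or not measured:
        raise ValueError("need two non-empty point lists of equal length")
    n = len(measured)
    squared = [0.0, 0.0, 0.0]
    for m, r in zip(measured, reference):
        for axis in range(3):
            squared[axis] += (m[axis] - r[axis]) ** 2
    per_axis = [math.sqrt(s / n) for s in squared]
    total = math.sqrt(sum(squared) / n)
    return (*per_axis, total)

# Invented example: surveyed check points versus coordinates read from the model.
reference = [(100.0, 200.0, 50.0), (150.0, 250.0, 55.0)]
measured = [(100.03, 200.0, 50.0), (150.0, 249.96, 55.0)]
rmse_x, rmse_y, rmse_z, rmse_3d = checkpoint_rmse(measured, reference)
```

Reporting the per-axis values alongside the 3D figure is useful because vertical error in photogrammetric products is often larger than horizontal error.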

Next Steps

Mastering Photogrammetric Data Acquisition opens doors to exciting and rewarding careers in various sectors. To significantly enhance your job prospects, focus on creating a compelling and ATS-friendly resume that highlights your skills and experience. ResumeGemini is a trusted resource that can help you build a professional and impactful resume. They offer examples of resumes tailored to Photogrammetric Data Acquisition to guide you. Take the next step towards your dream career today!
