Are you ready to stand out in your next interview? Understanding and preparing for natural language processing (NLP) interview questions is a game-changer. In this blog, we’ve compiled key questions and expert advice to help you showcase your skills with confidence and precision. Let’s get started on your journey to acing the interview.

Questions Asked in a Natural Language Processing Interview

Q 1. Explain the difference between rule-based and statistical NLP approaches.

Rule-based and statistical NLP approaches represent fundamentally different philosophies in processing human language. Rule-based systems rely on explicitly defined linguistic rules, handcrafted by experts, to analyze and generate text. These rules dictate how the system should interpret grammatical structures, identify parts of speech, and perform other linguistic tasks. Think of it like a highly detailed instruction manual for language understanding.

In contrast, statistical NLP approaches use machine learning algorithms to learn patterns from large amounts of text data. Instead of relying on explicitly programmed rules, statistical methods identify statistical regularities in the data and build models to predict language phenomena. It’s like teaching a child language by immersion; the child learns through observation and repetition rather than explicit instruction.

Example: Consider part-of-speech tagging. A rule-based system might use rules like “if a word ends in ‘-ing’, it’s a verb,” while a statistical system would learn from a massive corpus of tagged text which words frequently appear as verbs, nouns, adjectives, etc., and then use that knowledge to predict the tag of new words. Statistical methods often outperform rule-based methods on real-world data due to the inherent ambiguity and irregularity of human language, which are difficult to capture fully through manually crafted rules.
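As a rough illustration of the contrast, the sketch below compares the “-ing” rule with a most-frequent-tag baseline learned from counts. The tiny tagged corpus, the tag names, and the function names are all made up for this example; a real statistical tagger would use far more data and context.

```python
from collections import Counter, defaultdict

# Toy tagged corpus (hypothetical data, for illustration only).
TAGGED = [
    ("the", "DET"), ("dog", "NOUN"), ("is", "VERB"), ("running", "VERB"),
    ("the", "DET"), ("building", "NOUN"), ("is", "VERB"), ("tall", "ADJ"),
    ("a", "DET"), ("building", "NOUN"), ("collapsed", "VERB"),
]

def rule_based_tag(word):
    """Handcrafted rule: '-ing' words are verbs, everything else is a noun."""
    return "VERB" if word.endswith("ing") else "NOUN"

def train_most_frequent_tag(tagged_words):
    """Statistical baseline: remember the most frequent tag seen for each word."""
    counts = defaultdict(Counter)
    for word, tag in tagged_words:
        counts[word][tag] += 1
    return {word: tags.most_common(1)[0][0] for word, tags in counts.items()}

model = train_most_frequent_tag(TAGGED)

def statistical_tag(word):
    # Fall back to the handcrafted rule for words never seen in training.
    return model.get(word, rule_based_tag(word))

print(rule_based_tag("building"))   # the rule over-generalizes here
print(statistical_tag("building"))  # the counts reflect how the word was actually used
```

The rule mislabels “building” because it cannot see usage, while the learned table follows the data; the fallback shows that the two approaches can also be combined.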

Q 2. Describe different types of word embeddings (Word2Vec, GloVe, FastText).

Word embeddings are dense vector representations of words, where each word is mapped to a multi-dimensional vector capturing semantic relationships between words. Different techniques produce different embeddings, each with its own strengths and weaknesses.

- Word2Vec: This technique uses shallow neural networks (either Continuous Bag-of-Words (CBOW) or Skip-gram) to learn word embeddings. CBOW predicts a target word based on its context, while Skip-gram predicts the context words given a target word. Both methods learn vector representations that place semantically similar words closer together in the vector space.

- GloVe (Global Vectors): GloVe constructs word vectors using global word-word co-occurrence statistics from a corpus. It leverages the ratios of co-occurrence probabilities to capture relationships between words, resulting in embeddings that often exhibit better performance on certain tasks than Word2Vec.

- FastText: An extension of Word2Vec, FastText considers not just individual words but also their character n-grams. This allows it to handle out-of-vocabulary words and morphologically related words more effectively. For example, it can relate words like “running” and “runner” because they share subword units such as “run”; an irregular form like “ran” shares far fewer n-grams and benefits less.

Example: In the vector space produced by any of these methods, the vectors for “king” and “queen” would be closer together than the vectors for “king” and “table.” This reflects the semantic similarity between “king” and “queen” which are related by gender and royalty. These embeddings are crucial for downstream tasks like text classification, machine translation, and question answering.
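“Closer together” is usually measured with cosine similarity. Here is a minimal sketch using hand-picked three-dimensional vectors; the numbers are invented for illustration and do not come from any trained model (real embeddings typically have hundreds of dimensions).

```python
import math

# Hypothetical toy vectors, chosen by hand for this example.
EMB = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "table": [0.1, 0.0, 0.9],
}

def cosine(u, v):
    """Cosine similarity: dot product divided by the product of vector lengths."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

print(round(cosine(EMB["king"], EMB["queen"]), 3))
print(round(cosine(EMB["king"], EMB["table"]), 3))
```

With these toy values the king/queen similarity is much higher than the king/table similarity, which is the pattern a trained model is expected to show.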

Q 3. What are the limitations of using n-grams for language modeling?

N-grams are sequences of ‘n’ consecutive words in a text. While they are a fundamental tool in NLP for tasks like language modeling and text prediction, they suffer from several limitations:

- Data Sparsity: The number of possible n-grams grows exponentially with ‘n’. This means that for larger values of ‘n’, many n-grams will be unseen in the training data, leading to zero probabilities and poor performance. The longer the sequence, the more likely you are to encounter never-before-seen combinations.

- Computational Cost: Storing and processing all possible n-grams can be computationally expensive, particularly for larger values of ‘n’ and large corpora.

- Inability to Capture Long-Range Dependencies: N-grams struggle to capture relationships between words that are far apart in a sentence. The information captured is limited to the ‘n’ words in the sequence.

- Contextual information is limited: N-grams don’t inherently consider the overall meaning or context of a sentence. A particular n-gram might have different meanings depending on the context.

Example: The trigram “the cat sat” is straightforward, but longer n-grams quickly become problematic. A less frequent phrase, such as “the surprisingly fluffy cat sat”, might be unseen in a training set despite being perfectly valid. More sophisticated models like recurrent neural networks (RNNs) and transformers address these limitations by capturing long-range dependencies and handling context better.
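The sparsity problem is easy to reproduce. The sketch below builds an unsmoothed bigram model from a two-sentence toy corpus (invented for this example) and shows that a single unseen bigram drives the whole sentence probability to zero.

```python
from collections import Counter

# Toy corpus, made up for illustration.
corpus = "the cat sat on the mat . the dog sat on the rug .".split()

unigrams = Counter(corpus)
bigrams = Counter(zip(corpus, corpus[1:]))

def bigram_prob(prev, word):
    """Maximum-likelihood estimate of P(word | prev), with no smoothing."""
    if unigrams[prev] == 0:
        return 0.0
    return bigrams[(prev, word)] / unigrams[prev]

def sentence_prob(words):
    """Product of bigram probabilities over consecutive word pairs."""
    prob = 1.0
    for prev, word in zip(words, words[1:]):
        prob *= bigram_prob(prev, word)
    return prob

print(sentence_prob("the cat sat".split()))
print(sentence_prob("the fluffy cat sat".split()))  # contains an unseen bigram
```

Smoothing techniques exist precisely to avoid that hard zero, but they do not remove the underlying limit on context length.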

Q 4. Explain the concept of Hidden Markov Models (HMMs) in NLP.

Hidden Markov Models (HMMs) are probabilistic models used to model sequences of observations. In NLP, they are particularly useful for tasks involving sequential data, such as part-of-speech tagging and named entity recognition. An HMM assumes that there’s an underlying hidden state sequence generating the observed sequence. For example, in part-of-speech tagging, the hidden state is the sequence of parts of speech (e.g., noun, verb, adjective), and the observed sequence is the sequence of words.

An HMM is defined by:

- Hidden states: A set of possible hidden states (e.g., parts of speech).

- Observation symbols: A set of possible observations (e.g., words).

- Transition probabilities: The probability of transitioning from one hidden state to another.

- Emission probabilities: The probability of observing a particular symbol given a particular hidden state.

Example: In part-of-speech tagging, the HMM would learn the probabilities of transitioning from one part of speech to another (e.g., the probability of a noun being followed by a verb) and the probabilities of observing a particular word given a particular part of speech (e.g., the probability of the word “cat” being a noun). Given a sequence of words, the HMM uses these probabilities to infer the most likely sequence of hidden states (parts of speech).

The algorithms used with HMMs include the Forward algorithm (for computing likelihood), the Viterbi algorithm (for finding the most likely state sequence), and the Baum-Welch algorithm (for parameter estimation).
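To make the Viterbi step concrete, here is a compact sketch for a two-state tagger. The states, words, and every probability below are invented for illustration; in practice they would be estimated from tagged data or with Baum-Welch.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most likely hidden-state sequence for the observations."""
    # best[t][s] = (probability of the best path ending in state s at time t, previous state)
    best = [{s: (start_p[s] * emit_p[s].get(observations[0], 0.0), None) for s in states}]
    for obs in observations[1:]:
        column = {}
        for s in states:
            column[s] = max(
                (best[-1][p][0] * trans_p[p][s] * emit_p[s].get(obs, 0.0), p)
                for p in states
            )
        best.append(column)
    # Backtrack from the most probable final state.
    state = max(states, key=lambda s: best[-1][s][0])
    path = [state]
    for column in reversed(best[1:]):
        state = column[state][1]
        path.append(state)
    return list(reversed(path))

# Hypothetical model parameters.
states = ["NOUN", "VERB"]
start_p = {"NOUN": 0.7, "VERB": 0.3}
trans_p = {
    "NOUN": {"NOUN": 0.3, "VERB": 0.7},
    "VERB": {"NOUN": 0.6, "VERB": 0.4},
}
emit_p = {
    "NOUN": {"dogs": 0.5, "cats": 0.4, "bark": 0.1},
    "VERB": {"bark": 0.6, "chase": 0.4},
}

print(viterbi(["dogs", "bark"], states, start_p, trans_p, emit_p))
```

Even though “bark” can be emitted by either state in this toy model, the transition probabilities make the noun-then-verb reading the most likely one.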

Q 5. How does Latent Dirichlet Allocation (LDA) work for topic modeling?

Latent Dirichlet Allocation (LDA) is a generative probabilistic model for topic modeling. It assumes that each document is a mixture of several topics, and each topic is a distribution over words. LDA aims to discover these underlying topics from a collection of documents.

The core idea is that each document is represented as a distribution over topics, and each topic is represented as a distribution over words. The model uses Bayesian inference and the Dirichlet distribution to estimate these distributions. The Dirichlet distribution acts as a prior over the distributions of topics in documents and words in topics. With small concentration parameters, this prior favors sparse distributions: each document leans on a few topics and each topic on a limited set of words, which tends to make the resulting topics easier to interpret.

In simpler terms: Imagine a collection of magazine articles. LDA would automatically identify the different topics (e.g., sports, politics, technology) present in the articles. It would then determine which articles cover which topics and how much each topic contributes to each article. It also determines which words are strongly associated with each topic.

The process typically involves:

- Initialization: Randomly assigning topic assignments to words in the corpus.

- Iterative Refinement: Repeatedly updating the topic assignments based on the observed data and the current estimates of the topic and word distributions.

- Convergence: The process stops when the changes in topic assignments become negligible.

The output of LDA includes a set of topics, each represented as a distribution over words, and a set of document-topic distributions indicating the contribution of each topic to each document.
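The initialize-then-refine loop described above corresponds to one common inference method, collapsed Gibbs sampling. The sketch below is a minimal version under several assumptions: the function name, the hyperparameter values, and the tiny corpus are invented here, and it runs a fixed number of iterations rather than testing for convergence.

```python
import random

def lda_gibbs(docs, num_topics, iterations=200, alpha=0.1, beta=0.01, seed=0):
    """Tiny collapsed Gibbs sampler for LDA (illustrative, not optimized)."""
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    vocab_size = len(vocab)
    doc_topic = [[0] * num_topics for _ in docs]                       # count of topic k in doc d
    topic_word = [dict.fromkeys(vocab, 0) for _ in range(num_topics)]  # count of word w in topic k
    topic_total = [0] * num_topics                                     # total words in topic k
    assignments = []

    # Initialization: assign a random topic to every word occurrence.
    for d, doc in enumerate(docs):
        z_doc = []
        for w in doc:
            k = rng.randrange(num_topics)
            z_doc.append(k)
            doc_topic[d][k] += 1
            topic_word[k][w] += 1
            topic_total[k] += 1
        assignments.append(z_doc)

    # Iterative refinement: resample each assignment given all the others.
    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                k = assignments[d][i]
                doc_topic[d][k] -= 1
                topic_word[k][w] -= 1
                topic_total[k] -= 1
                weights = [
                    (doc_topic[d][t] + alpha)
                    * (topic_word[t][w] + beta)
                    / (topic_total[t] + vocab_size * beta)
                    for t in range(num_topics)
                ]
                k = rng.choices(range(num_topics), weights=weights)[0]
                assignments[d][i] = k
                doc_topic[d][k] += 1
                topic_word[k][w] += 1
                topic_total[k] += 1

    # Document-topic distributions, smoothed by alpha.
    theta = [
        [(n + alpha) / (len(doc) + num_topics * alpha) for n in counts]
        for doc, counts in zip(docs, doc_topic)
    ]
    return theta, topic_word

# Hypothetical mini-corpus: two themes, sports and politics.
docs = [
    ["ball", "goal", "team", "goal"],
    ["vote", "law", "vote", "party"],
    ["team", "ball", "law"],
]
theta, topic_word = lda_gibbs(docs, num_topics=2)
for row in theta:
    print([round(p, 2) for p in row])
```

Because the sampler is stochastic, the exact topics depend on the seed; production libraries typically add convergence diagnostics or use variational inference instead.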

Q 6. What are some common challenges in Named Entity Recognition (NER)?

Named Entity Recognition (NER) aims to identify and classify named entities in text, such as people, organizations, locations, dates, and other types of entities. Several challenges make NER a complex task:

- Ambiguity: Words can have multiple meanings depending on context. “Apple” could refer to the fruit or the technology company.

- Nested Entities: Entities can be nested within each other. For example, “Barack Obama, President of the United States” contains multiple nested entities.

- Novelty and Out-of-Vocabulary Entities: NER systems often struggle with entities they haven’t seen before in their training data. New companies, products, or people appear all the time.

- Varying Linguistic Expressions: Entities can be expressed in many different ways, including abbreviations, acronyms, and different writing styles.

- Lack of sufficient training data: For some entity types, particularly in niche domains, large training datasets might be unavailable or very costly to obtain.

- Cross-lingual differences: NER systems trained on one language may not perform well on others due to differences in language structures and entity naming conventions.

Example: A system might correctly identify “Google” as an organization but incorrectly classify “Googleplex” as a location because it has not seen the term frequently associated with its meaning in the training data.

Q 7. Describe different techniques for stemming and lemmatization.

Stemming and lemmatization are text normalization techniques used to reduce words to their root form. While both aim to reduce words to their base form, they differ in their approaches and results:

Stemming: A crude heuristic process that chops off the ends of words in the hope of achieving a common stem (root form) without considering the word’s context or actual meaning. It often produces stems that are not actual words.

Lemmatization: A more sophisticated process that uses a vocabulary and morphological analysis to reduce words to their dictionary form (lemma), considering the context and part of speech. The result is usually an actual word.

Techniques for stemming:

- Porter Stemmer: A rule-based algorithm that removes suffixes from words using a set of predefined rules. It is simple and fast but can produce non-words and inaccurate stems.

- Snowball Stemmer: An improvement over the Porter stemmer, supporting multiple languages and offering better accuracy.

Techniques for lemmatization:

- WordNet Lemmatizer (NLTK): Uses the WordNet lexical database to find the lemma of a word, considering its part of speech.

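- spaCy Lemmatizer: For English, spaCy’s default lemmatizer is rule- and lookup-based and relies on the part-of-speech tags predicted by its statistical tagger; a trainable lemmatizer component is also available.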

Example:

- Stemming: With the Porter stemmer, “running” is stemmed to “run” and “amusing” to “amus” (not a real word), while “better” is left as “better” because no suffix rule applies.

- Lemmatization: “running” would be lemmatized to “run”, “better” to “good”, and “amusing” to “amuse”.

Lemmatization generally produces better results for NLP tasks that require accurate semantic representation, but stemming is faster and simpler to implement.
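The difference in mechanism can be sketched with two toy functions. Neither is the Porter algorithm nor a real lemmatizer: the suffix list and the mini-dictionary below are invented for this example, so the outputs differ from what NLTK or spaCy would return.

```python
# Toy suffix list, far smaller than the rule set of a real stemmer.
SUFFIXES = ("ing", "ed", "es", "s")

def crude_stem(word):
    """Strip the first matching suffix; no dictionary and no context."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

# Hypothetical mini-dictionary keyed by (word, part of speech).
LEMMAS = {
    ("running", "VERB"): "run",
    ("amusing", "VERB"): "amuse",
    ("better", "ADJ"): "good",
}

def lemmatize(word, pos):
    """Look up the dictionary form; fall back to the word itself."""
    return LEMMAS.get((word, pos), word)

for word, pos in [("running", "VERB"), ("amusing", "VERB"), ("better", "ADJ")]:
    print(word, crude_stem(word), lemmatize(word, pos))
```

The stemmer happily produces non-words, while the lemmatizer needs both a dictionary and the part of speech to map “better” to “good”.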

Q 8. Explain the difference between precision and recall in NLP tasks.

Precision and recall are two crucial metrics used to evaluate the performance of a classification model, including those in NLP. Think of it like searching for a specific type of flower in a vast field.

Precision answers: “Out of all the flowers I picked, how many were actually the type I was looking for?” It focuses on the accuracy of positive predictions. A high precision means that when the model predicts something as positive, it’s usually correct. A low precision means the model makes many false positive errors – identifying things as the target when they are not.

Recall answers: “Out of all the flowers of the type I was looking for, how many did I actually pick?” It focuses on the completeness of positive predictions. High recall means the model correctly identifies most of the actual positive instances. Low recall means the model misses many true positives – failing to identify actual instances of the target.

Example: Imagine a spam filter. High precision means very few legitimate emails are flagged as spam (few false positives). High recall means very few spam emails get through (few false negatives). The ideal system balances both, but often there’s a trade-off. You might prioritize precision if misclassifying a legitimate email as spam is more costly than letting some spam through.

Formulae:

Precision = True Positives / (True Positives + False Positives)

Recall = True Positives / (True Positives + False Negatives)
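Applied to the spam-filter example, the two formulas can be computed directly from gold labels and predictions. The labels below are invented for illustration, and the function name is just a placeholder.

```python
def precision_recall(y_true, y_pred, positive="spam"):
    """Compute precision and recall for the given positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

# Hypothetical gold labels and filter decisions for six emails.
y_true = ["spam", "spam", "spam", "spam", "ham", "ham"]
y_pred = ["spam", "spam", "ham", "ham", "ham", "spam"]

precision, recall = precision_recall(y_true, y_pred)
print(precision, recall)
```

Here the filter flags three emails, two of them correctly (precision 2/3), but catches only two of the four actual spam messages (recall 1/2).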

Q 9. How do you evaluate the performance of a machine translation system?

Evaluating a machine translation (MT) system is complex and involves both automatic and human evaluation. Automatic metrics offer quick, quantitative assessments, while human evaluation provides a more nuanced, qualitative perspective.

Automatic Metrics: These use numerical scores to compare the translated text to a reference translation (done by a human expert). Common metrics include:

- BLEU (Bilingual Evaluation Understudy): Measures the overlap of n-grams (sequences of words) between the translated text and the reference. Higher scores generally indicate better translation quality, but it has limitations in capturing semantic meaning.

- METEOR (Metric for Evaluation of Translation with Explicit ORdering): Similar to BLEU but considers synonyms and paraphrases, resulting in a more comprehensive evaluation.

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation): Primarily used for summarization but can also be applied to MT, measuring the overlap of words and n-grams between the generated and reference texts.

Human Evaluation: Human evaluators assess different aspects like fluency, adequacy, and overall quality. This can involve rating scales, comparative judgments (ranking different translations), or free-form feedback.

Combining Approaches: The most robust evaluation combines both automatic and human evaluation. Automatic metrics offer a quick overview, while human evaluation provides crucial insights into aspects not captured by the metrics, like nuances of meaning or cultural appropriateness.
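The n-gram overlap at the heart of BLEU is a clipped (“modified”) precision. The sketch below computes only that component for a single reference; full BLEU also combines several n-gram orders and applies a brevity penalty, which are omitted here. The sentences are invented examples.

```python
from collections import Counter

def ngrams(tokens, n):
    """All contiguous n-grams of a token list, as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def modified_precision(candidate, reference, n):
    """Clipped n-gram precision: each candidate n-gram is credited at most
    as many times as it appears in the reference."""
    cand_counts = Counter(ngrams(candidate, n))
    ref_counts = Counter(ngrams(reference, n))
    if not cand_counts:
        return 0.0
    clipped = sum(min(count, ref_counts[gram]) for gram, count in cand_counts.items())
    return clipped / sum(cand_counts.values())

reference = "the cat is on the mat".split()
candidate = "the cat sat on the mat".split()

print(modified_precision(candidate, reference, 1))
print(modified_precision(candidate, reference, 2))
```

Clipping is what stops a degenerate output such as “the the the the” from scoring well just by repeating a frequent reference word.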

Q 10. What are some common methods for handling ambiguity in natural language?

Natural language is inherently ambiguous; words and phrases can have multiple meanings depending on context. Handling this ambiguity is a core challenge in NLP. Here are common methods:

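- Part-of-Speech (POS) Tagging: Identifying the grammatical role of each word (noun, verb, adjective, etc.) helps disambiguate words whose meanings differ by word class. For example, “book” can be a noun (“read a book”) or a verb (“book a flight”), and POS tagging can clarify which is intended. It cannot separate senses that share a part of speech, such as “bank” as a financial institution versus the side of a river; that is the job of word sense disambiguation.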

- Word Sense Disambiguation (WSD): Using context to determine the correct meaning of a word with multiple senses. Techniques include using dictionaries (WordNet), machine learning models trained on labeled data, or considering surrounding words.

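- Word Embeddings (static: Word2Vec, GloVe; contextual: BERT): These represent words as vectors in a high-dimensional space, capturing semantic relationships. Words with similar meanings are closer in this space. Static embeddings assign one vector per word regardless of its sense, whereas contextual models such as BERT produce a different vector for each occurrence, which is what helps determine meaning based on context.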

- Probabilistic Models: Using probabilities to assign weights to different possible meanings based on their likelihood given the context.

Example: The sentence “I saw a bat flying.” could refer to a flying mammal or a baseball bat. WSD, along with POS tagging, can help resolve this ambiguity by examining the surrounding words and grammar.
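One classic dictionary-based WSD idea is to pick the sense whose definition overlaps most with the surrounding words (a simplified Lesk approach). The sketch below uses two handwritten glosses instead of WordNet; the glosses, sense labels, and function name are assumptions made for this example.

```python
import re

# Hypothetical glosses; a real system would take them from a resource such as WordNet.
SENSES = {
    "bat": {
        "animal": "nocturnal flying mammal with wings",
        "sports": "wooden club used to hit the ball in baseball or cricket",
    }
}

def simplified_lesk(word, sentence):
    """Choose the sense whose gloss shares the most words with the sentence."""
    context = set(re.findall(r"[a-z]+", sentence.lower()))
    glosses = SENSES[word]
    return max(glosses, key=lambda sense: len(context & set(glosses[sense].split())))

print(simplified_lesk("bat", "I saw a bat flying."))
print(simplified_lesk("bat", "He swung the bat and hit the ball."))
```

Raw word overlap is brittle (it counts function words like “the” and misses synonyms), which is one reason learned models tend to replace it in practice.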

Q 11. Explain the concept of context in NLP and how it affects understanding.

Context is crucial in NLP; it’s the surrounding information that determines the meaning of a word, phrase, or sentence. Think of it like understanding a joke: the punchline only makes sense in the context of the setup.

Types of Context:

- Lexical Context: The surrounding words influence meaning. “Bright” can mean intelligent or shining depending on the words near it.

- Syntactic Context: The grammatical structure impacts meaning. The sentence structure helps understand the relationships between words.

- Discourse Context: The context of the entire conversation or document shapes meaning. A sentence’s meaning changes depending on the preceding sentences.

Impact on Understanding: Without context, a system misinterprets information. Consider pronoun resolution: “John met Mary. He gave her a book.” Understanding “he” and “her” requires understanding the context of who John and Mary are.

Handling Context: Techniques like Recurrent Neural Networks (RNNs), especially LSTMs and GRUs, and Transformers (like BERT) are specifically designed to incorporate context by processing sequential data and maintaining a representation of past information.

Q 12. Describe different approaches to sentiment analysis.

Sentiment analysis aims to determine the emotional tone behind text – is it positive, negative, or neutral? There are several approaches:

- Lexicon-based Approach: Uses a dictionary of words and their associated sentiment scores. The sentiment of a text is determined by summing the scores of its words. Simple but lacks context awareness.

- Machine Learning Approach: Trains a classifier (e.g., Naive Bayes, SVM, or deep learning models like RNNs) on labeled data. The model learns to classify sentiment based on features extracted from the text. More complex but can capture nuances and context.

- Hybrid Approach: Combines lexicon-based and machine learning methods. The lexicon provides initial scores which are then refined by the machine learning model.

- Deep Learning Approach: Uses deep neural networks like Recurrent Neural Networks (RNNs) or Transformers (e.g., BERT, RoBERTa) that are capable of handling long-range dependencies and context better than traditional machine learning methods. These models tend to achieve state-of-the-art results, particularly in complex sentiment analysis tasks.

Example: A lexicon-based approach might assign a positive score to “great” and a negative score to “terrible.” A machine learning approach would learn the nuances of phrases like “bitterly disappointed” (negative) or “mildly surprised” (neutral).
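A lexicon-based scorer fits in a few lines, which also makes its blind spots easy to see. The word scores below are invented for illustration and are not taken from any published sentiment lexicon.

```python
# Hypothetical sentiment lexicon.
LEXICON = {"great": 2, "good": 1, "bad": -1, "terrible": -2, "disappointed": -2}

def lexicon_sentiment(text):
    """Sum per-word scores and map the total to a label."""
    tokens = [tok.strip(".,!?").lower() for tok in text.split()]
    score = sum(LEXICON.get(tok, 0) for tok in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(lexicon_sentiment("The food was great!"))
print(lexicon_sentiment("The service was terrible."))
print(lexicon_sentiment("The movie was not good."))  # negation is ignored
```

The last call is labeled positive because the scorer has no notion of negation, which is the kind of context a trained classifier can pick up.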

Q 13. How do you handle noisy or unstructured data in NLP?

Noisy and unstructured data are common challenges in NLP. Strategies include:

- Data Cleaning: Removing irrelevant characters, handling missing values, correcting spelling errors, and standardizing formatting.

- Noise Reduction: Identifying and removing noise from the data. This can include techniques like outlier detection or filtering based on specific criteria.

- Data Transformation: Converting unstructured data into a structured format suitable for NLP models. This could involve creating tables or other structured representations from free-text.

- Robust Models: Using machine learning models that are more tolerant to noise and missing data. For example, using models that are not sensitive to outliers or that utilize data augmentation to boost robustness.

- Pre-training: Using pre-trained language models (like BERT or RoBERTa) that have been trained on massive datasets of text. These models often exhibit improved robustness to noise and can handle less-clean input effectively.

Example: A noisy dataset might contain irrelevant symbols or misspelled words. Data cleaning would remove these, and robust models would handle remaining imperfections during training.

Q 14. What are some common pre-processing steps for text data?

Pre-processing text data is crucial for improving the performance of NLP models. Common steps include:

- Tokenization: Breaking text into individual words or sub-word units. Simple tokenization splits on spaces, but more advanced techniques handle punctuation and contractions.

- Lowercasing: Converting all text to lowercase to reduce the vocabulary size and avoid treating the same word differently based on capitalization.

- Stop Word Removal: Removing common words (e.g., “the,” “a,” “is”) that often don’t contribute much to the meaning but increase processing time.

- Stemming/Lemmatization: Reducing words to their root form (stemming) or dictionary form (lemmatization). This reduces vocabulary size and can improve accuracy, particularly for models trained on limited data.

- Punctuation Removal: Removing punctuation marks, although sometimes punctuation can be important.

Example: The sentence “The quick brown fox jumps over the lazy dog.” might be pre-processed to: ['quick', 'brown', 'fox', 'jump', 'lazy', 'dog'] after tokenization, lowercasing, stop word removal, and lemmatization.
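The pipeline from this example can be sketched with the standard library alone. The stop-word set and the lemma lookup below are deliberately tiny stand-ins chosen for this one sentence; real projects usually rely on a library such as NLTK or spaCy for both.

```python
import re

STOP_WORDS = {"the", "a", "an", "is", "over"}  # toy stop-word list
LEMMAS = {"jumps": "jump"}                     # toy lemma lookup

def preprocess(text):
    """Lowercase, tokenize (dropping punctuation), remove stop words, lemmatize."""
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    return [LEMMAS.get(tok, tok) for tok in tokens if tok not in STOP_WORDS]

print(preprocess("The quick brown fox jumps over the lazy dog."))
```

Note that the order of steps matters: lowercasing before the stop-word check is what lets the capitalized “The” be removed.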

Q 15. Explain the concept of attention mechanisms in sequence-to-sequence models.

Attention mechanisms are a crucial component of modern sequence-to-sequence (seq2seq) models, dramatically improving their performance on tasks like machine translation and text summarization. Imagine you’re translating a sentence: Instead of processing the entire source sentence equally, an attention mechanism allows the model to focus on the most relevant parts of the input when generating each word of the output. It’s like selectively highlighting the most important words in the source sentence as you translate.

Technically, attention works by assigning a weight to each position in the input sequence. These weights, normalized with a softmax so they sum to one, represent the importance of each input word in predicting the current output word. The model computes a weighted sum of the encoder’s representations of the input words (its hidden states), effectively focusing on the most pertinent information. This weighted sum, often called the context vector, is then used to generate the next word in the output sequence. Different attention mechanisms exist, such as additive attention and multiplicative (dot-product) attention, which differ in how the scores behind these weights are calculated.

For example, in machine translation, if the source sentence is “The cat sat on the mat,” and the model is currently generating the translation for “mat,” the attention mechanism would likely assign higher weights to “mat” and “on” in the source sentence, because those words are most relevant to the current output word. This allows the model to capture complex relationships between words across the input and output sequences, leading to more accurate and contextually aware translations.
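The weighting step can be written out as dot-product attention in plain Python. The vectors below are invented two-dimensional stand-ins for encoder states and a decoder query; real models use learned, much larger vectors and usually add scaling and projections.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to one."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot_product_attention(query, keys, values):
    """Score each key against the query, then average the values by those weights."""
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, context

# Hypothetical encoder states for three source words, used as both keys and values.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_query = [0.0, 2.0]

weights, context = dot_product_attention(decoder_query, encoder_states, encoder_states)
print([round(w, 3) for w in weights])
print([round(c, 3) for c in context])
```

The first source position gets the smallest weight because its vector is orthogonal to the query, so it contributes least to the context vector.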

Q 16. What are some common deep learning architectures used in NLP?

The NLP field utilizes a diverse range of deep learning architectures, each with its strengths and weaknesses. Here are some common ones:

- Recurrent Neural Networks (RNNs): Process sequential data by maintaining an internal state that’s updated with each new input. Excellent for capturing temporal dependencies but can struggle with long sequences due to vanishing/exploding gradients.

- Long Short-Term Memory networks (LSTMs): A type of RNN designed to address the vanishing/exploding gradient problem. LSTMs use sophisticated gating mechanisms to regulate the flow of information, allowing them to remember information over longer sequences. Very effective for tasks involving long-range dependencies.

- Gated Recurrent Units (GRUs): Similar to LSTMs, GRUs also mitigate the vanishing gradient problem. They simplify the LSTM architecture with fewer parameters, making them faster to train and often achieving comparable performance.

- Transformers: Based on the attention mechanism, transformers process the entire input sequence in parallel, eliminating the sequential processing limitations of RNNs. They have revolutionized NLP, achieving state-of-the-art results on many tasks. Examples include BERT, GPT-3, and others.

- Convolutional Neural Networks (CNNs): While traditionally used for image processing, CNNs can also be effective in NLP for tasks like sentence classification and named entity recognition, capturing local patterns in text.

The choice of architecture depends heavily on the specific NLP task and the nature of the data.

Q 17. Describe the differences between RNNs, LSTMs, and GRUs.

RNNs, LSTMs, and GRUs are all recurrent neural networks designed to process sequential data, but they differ in their internal mechanisms for handling long-range dependencies.

- RNNs: The simplest, relying on a single hidden state updated at each time step. They suffer from the vanishing/exploding gradient problem, meaning they struggle to learn long-range dependencies because gradients either shrink to insignificance or explode during backpropagation.

- LSTMs: Address the vanishing gradient problem using a system of gates (input, forget, output). These gates control the flow of information into and out of the cell state, allowing the network to selectively remember or forget information over long sequences. They have more parameters than GRUs, which can help on some tasks, though neither architecture is consistently better.

- GRUs: Simplify the LSTM architecture by combining the cell state and hidden state, and using fewer gates (update and reset). They’re faster to train than LSTMs and often achieve comparable performance, striking a balance between complexity and performance.

Think of it like this: an RNN is a simple bucket holding information; an LSTM is a sophisticated filing cabinet with mechanisms for adding, retrieving, and deleting files, allowing it to retain important info longer. GRUs are a more streamlined filing cabinet that manages the same functions but with less complexity.

Q 18. How do you address overfitting in NLP models?

Overfitting in NLP models occurs when the model learns the training data too well, including its noise and peculiarities, and performs poorly on unseen data. Several techniques help mitigate this:

- Data Augmentation: Increase the size and diversity of the training data by techniques like synonym replacement, back translation, or random insertion/deletion of words. This makes the model more robust to variations in language.

- Regularization: Constrain the model so that it cannot fit noise too closely. L1 and L2 regularization (the latter often called weight decay) add penalty terms to the loss function that discourage large weights, while dropout randomly ignores neurons during training so the network cannot rely on any single unit.

- Cross-Validation: Split the data into multiple folds, training the model on some folds and validating on others. This provides a more reliable estimate of the model’s generalization performance.

- Early Stopping: Monitor the model’s performance on a validation set during training and stop training when the performance starts to degrade. This prevents the model from overfitting to the training data.

- Hyperparameter Tuning: Carefully select hyperparameters (learning rate, batch size, etc.) using techniques like grid search or Bayesian optimization. Improper hyperparameters can lead to overfitting.

The best approach often involves a combination of these techniques.
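Early stopping in particular is simple to express in code. The sketch below works on a list of per-epoch validation losses rather than a real training loop; the loss values and the `patience` setting are made up for illustration.

```python
def early_stopping(val_losses, patience=2):
    """Return (best_epoch, stopped_at) for a sequence of validation losses.

    Training stops once the loss has failed to improve for `patience`
    consecutive epochs; the weights from best_epoch would be restored.
    """
    best_loss = float("inf")
    best_epoch = None
    stopped_at = None
    waited = 0
    for epoch, loss in enumerate(val_losses):
        stopped_at = epoch
        if loss < best_loss:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch, stopped_at

# Hypothetical validation losses: improvement stalls after epoch 2.
losses = [0.90, 0.70, 0.60, 0.65, 0.70, 0.50]
print(early_stopping(losses, patience=2))
```

With `patience=2` the loop stops at epoch 4 and never sees the later improvement at epoch 5, which illustrates the trade-off in choosing the patience value.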

Q 19. Explain the concept of transfer learning in NLP.

Transfer learning leverages knowledge gained from solving one problem to improve performance on a related problem. In NLP, this means starting a new task from models that were pre-trained on a massive dataset (like BERT or ELMo) or from pre-trained word embeddings (like GloVe). Instead of training a model from scratch, you fine-tune the pre-trained model on your specific task’s data. This typically reduces training time, improves performance, and requires significantly less labeled data.

For instance, if you’re building a sentiment analysis model for movie reviews, you could start with a BERT model pre-trained on a massive corpus of text. You would then fine-tune BERT’s weights on your movie review dataset, allowing it to adapt its knowledge to the nuances of movie review language. This is much more efficient than training a model from scratch, especially if you have a limited amount of labeled movie review data. The pre-trained model provides a strong foundation, enabling your model to learn more effectively and generalize better.

Q 20. Describe different methods for handling rare words in NLP.

Rare words pose a significant challenge in NLP because they are under-represented in training data, leading to poor model performance. Here are some common strategies:

- Out-of-Vocabulary (OOV) Tokens: Represent all rare words with a special unknown-word token, commonly written `<UNK>`. Simple but loses information about the rare word’s meaning.

- Smoothing Techniques (e.g., Laplace Smoothing, Good-Turing Smoothing): Adjust word probabilities to account for unseen words, adding a small probability mass to each word. This reduces the impact of zero counts.

- Character-Level Embeddings: Represent words as sequences of characters, allowing the model to learn representations even for unseen words.

- Byte Pair Encoding (BPE): A subword tokenization method that iteratively merges frequent character pairs into new tokens. This helps the model learn representations for subparts of words, making it more robust to rare words.

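- WordPiece and SentencePiece: Related subword approaches. WordPiece, like BPE, builds its vocabulary through iterative merges but selects them with a likelihood-based criterion rather than raw pair frequency. SentencePiece is a tokenization toolkit that implements BPE and unigram language model segmentation directly on raw text, without requiring pre-tokenization.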

The best method depends on the specific application and the available resources.
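The BPE merge loop is short enough to sketch in full. This is a minimal version that only learns merges from a word-frequency table; the table is a made-up example, and details such as end-of-word markers and applying the merges to new text are left out.

```python
from collections import Counter

def learn_bpe(word_freqs, num_merges):
    """Learn BPE merges from a {word: frequency} table."""
    # Start with every word split into single characters.
    vocab = {tuple(word): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs, weighted by word frequency.
        pairs = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break
        best = max(pairs, key=pairs.get)
        merges.append(best)
        # Rewrite every word, fusing each occurrence of the best pair.
        new_vocab = {}
        for symbols, freq in vocab.items():
            merged, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            key = tuple(merged)
            new_vocab[key] = new_vocab.get(key, 0) + freq
        vocab = new_vocab
    return merges, vocab

# Hypothetical word frequencies.
word_freqs = {"low": 5, "lower": 2, "newest": 6, "widest": 3}
merges, vocab = learn_bpe(word_freqs, num_merges=2)
print(merges)
print(sorted(vocab))
```

After two merges the shared ending “est” has become a single token, so a rare word with that ending could still be represented from known pieces.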

Q 21. How do you evaluate the quality of word embeddings?

Evaluating the quality of word embeddings is crucial because their quality directly impacts the performance of downstream NLP tasks. Several methods exist:

- Intrinsic Evaluation: Assess the quality of embeddings independent of a specific NLP task. Common intrinsic evaluation methods include measuring the similarity between words with known semantic relationships (e.g., using cosine similarity) or evaluating the ability of the embeddings to perform analogy tasks (e.g., “king – man + woman = queen”).

- Extrinsic Evaluation: Evaluate embeddings based on their performance in a downstream NLP task. This involves training an NLP model using the embeddings and evaluating its performance on a standard benchmark dataset. The higher the performance of the downstream task, the better the quality of the embeddings.

- Human Evaluation: Assess the quality of embeddings subjectively by asking human judges to rate the quality of word similarity scores or the appropriateness of embedding analogies. This is often time-consuming but provides valuable insights.

A comprehensive evaluation typically combines intrinsic and extrinsic methods to get a holistic understanding of the embedding quality. Ideally, embeddings should perform well on both intrinsic tests (demonstrating good semantic representation) and extrinsic tasks (demonstrating usefulness for real-world applications).
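The analogy test from the intrinsic-evaluation bullet can be sketched as follows. The three-dimensional vectors are constructed by hand so that the analogy works exactly; trained embeddings only approximate this, and real benchmarks use thousands of such questions.

```python
import math

# Hypothetical vectors with hand-picked axes: [royalty, male, female].
EMB = {
    "king":  [1.0, 1.0, 0.0],
    "queen": [1.0, 0.0, 1.0],
    "man":   [0.0, 1.0, 0.0],
    "woman": [0.0, 0.0, 1.0],
    "apple": [0.0, 0.2, 0.1],
}

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def analogy(a, b, c, emb):
    """Answer 'a - b + c = ?' with the nearest word by cosine similarity,
    excluding the three query words themselves."""
    target = [x - y + z for x, y, z in zip(emb[a], emb[b], emb[c])]
    candidates = [w for w in emb if w not in {a, b, c}]
    return max(candidates, key=lambda w: cosine(target, emb[w]))

print(analogy("king", "man", "woman", EMB))
```

Excluding the query words is standard practice in this test; without it, the nearest neighbor of the target vector is often one of the inputs.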

Q 22. What are some ethical considerations in NLP applications?

Ethical considerations in NLP are crucial because these models can perpetuate and amplify existing societal biases, leading to unfair or discriminatory outcomes. Imagine a hiring tool trained on biased data – it might unfairly favor certain demographics. Key ethical concerns include:

- Bias and Fairness: NLP models trained on biased data will reflect and even exacerbate those biases in their outputs. This can lead to unfair or discriminatory outcomes in areas like loan applications, hiring processes, and even criminal justice.

- Privacy: NLP models often require vast amounts of data, raising concerns about the privacy of individuals whose data is used for training or inference. Data anonymization and secure storage are vital.

- Transparency and Explainability: Understanding *why* an NLP model made a specific prediction is critical. Lack of transparency can lead to distrust and difficulty in identifying and correcting biases or errors.

- Misinformation and Manipulation: Sophisticated NLP models can be used to generate convincing but false information, impacting public opinion and potentially causing harm.

- Job Displacement: Automation driven by NLP can lead to job losses in certain sectors, necessitating thoughtful strategies for workforce retraining and adaptation.

Addressing these ethical concerns requires careful data curation, model evaluation, and ongoing monitoring of the deployed systems. Transparency in model development and deployment is key to building trust and mitigating potential harm.

Q 23. Explain the concept of bias in NLP models and how to mitigate it.

Bias in NLP models arises when the training data reflects existing societal biases, leading the model to learn and perpetuate these prejudices. For example, a sentiment analysis model trained primarily on positive reviews of products made by men might rate similar products made by women less favorably, reflecting gender bias present in the data. Mitigating bias requires a multi-pronged approach:

- Data Augmentation: Adding more data to balance underrepresented groups. If your data has few examples of positive reviews for female-made products, you need to actively seek out and include more.

- Data Preprocessing: Cleaning the data to remove or mitigate biases. This could involve removing potentially offensive language or re-weighting biased samples.

- Algorithm Selection: Choosing algorithms less prone to bias. Some algorithms are inherently more sensitive to biases in the data than others.

- Fairness-Aware Algorithms: Utilizing algorithms specifically designed to address fairness concerns, for example, by incorporating fairness constraints into the model training process.

- Regular Auditing and Monitoring: Continuously evaluating the model’s performance across different demographic groups to detect and address emerging biases. This is an ongoing process, not a one-time fix.

Remember, bias mitigation is an iterative process; you must carefully consider the context and implications of your model and its output.
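
The auditing step above can be sketched as a per-group accuracy comparison. This is a minimal example under stated assumptions: the group names, labels, and the helper names `accuracy_by_group` and `max_accuracy_gap` are hypothetical, and accuracy gap is only one of several possible fairness measures.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """records: iterable of (group, true_label, predicted_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {g: correct[g] / total[g] for g in total}

def max_accuracy_gap(per_group):
    """Largest difference in accuracy between any two groups."""
    return max(per_group.values()) - min(per_group.values())

# Hypothetical audit data: (group, true label, model prediction).
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 1),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 0), ("group_b", 0, 1),
]

per_group = accuracy_by_group(records)
print(per_group)                    # accuracy for each group
print(max_accuracy_gap(per_group))  # a large gap is a signal to investigate
```

Running a check like this on every model release, rather than once, is what turns it into monitoring.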

Q 24. How can you improve the robustness of your NLP models?

Robust NLP models are resilient to noisy or unexpected input and maintain high performance across diverse conditions. To improve robustness:

- Data Augmentation: Introduce variations in your training data to simulate real-world scenarios, such as adding noise, typos, or different writing styles.

- Regularization Techniques: Employ techniques like dropout or weight decay to prevent overfitting and improve generalization to unseen data.

- Ensemble Methods: Combine multiple models to improve overall robustness and reduce the impact of individual model errors. This is like having several experts review a document instead of relying on just one.

- Adversarial Training: Train your model against adversarial examples – intentionally crafted inputs designed to fool it. This ‘stress test’ helps uncover vulnerabilities and make the model more resilient.

- Transfer Learning: Leverage pre-trained models on large datasets, adapting them to your specific task. This often leads to improved performance and robustness, especially with limited data.

- Error Analysis: Carefully analyze model errors to understand their sources and identify areas for improvement. This helps pinpoint weaknesses that need addressing.

Robustness is not a one-time achievement but an ongoing effort that requires careful consideration of the model’s environment and potential challenges.
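
To make the data augmentation idea concrete, here is a small sketch that injects typo-like noise by swapping adjacent letters. The functions `add_typos` and `augment`, and their `rate`, `copies`, and `seed` parameters, are assumptions made for this example rather than part of any particular library.

```python
import random

def add_typos(text, rate=0.1, seed=None):
    """Swap adjacent letters at random to simulate typing errors."""
    rng = random.Random(seed)
    chars = list(text)
    i = 0
    while i < len(chars) - 1:
        if chars[i].isalpha() and chars[i + 1].isalpha() and rng.random() < rate:
            chars[i], chars[i + 1] = chars[i + 1], chars[i]
            i += 2  # skip the swapped pair so a letter moves at most one position
        else:
            i += 1
    return "".join(chars)

def augment(examples, copies=2, rate=0.1, seed=0):
    """Return the original (text, label) pairs plus noisy copies with unchanged labels."""
    augmented = list(examples)
    for n in range(copies):
        for idx, (text, label) in enumerate(examples):
            noisy = add_typos(text, rate, seed=seed + n * len(examples) + idx)
            augmented.append((noisy, label))
    return augmented

# Hypothetical training examples for a sentiment classifier.
train = [("the service was excellent", "positive"),
         ("the food arrived cold", "negative")]
for text, label in augment(train, copies=1, rate=0.2):
    print(label, "|", text)
```

The labels are copied unchanged because a swapped letter pair should not alter the sentiment; noise that could change the meaning would need a more careful design.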

Q 25. Describe different methods for building a chatbot.

Building a chatbot involves several approaches, each with its strengths and weaknesses:

- Rule-based Chatbots: These rely on predefined rules and decision trees to respond to user input. They are simple to build but limited in flexibility and unable to handle unexpected inputs. Think of a simple interactive menu system.

- Retrieval-based Chatbots: These use a database of pre-written responses and select the most appropriate one based on user input. They are relatively easy to implement and scale but may lack natural conversation flow.

- Generative Chatbots: These use machine learning models, typically neural language models built on the transformer architecture, to generate responses dynamically. They offer more natural conversation but require significant training data and computational resources. Examples include models based on GPT technologies.

- Hybrid Approaches: Combining rule-based and machine learning techniques. This can leverage the strengths of both approaches, offering a good balance of flexibility and ease of implementation.

The choice of approach depends on the complexity of the task, the available resources, and the desired level of conversational ability. Larger, more sophisticated chatbots frequently use a hybrid approach, employing rule-based systems for simple queries and generative models for more complex interactions.
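
A hybrid design of the kind described above might look roughly like the following sketch, which tries handwritten rules first, then retrieval over canned answers, then a fallback. The rules, the `FAQ` entries, the Jaccard word-overlap scoring, and the `threshold` value are all illustrative assumptions; a production system would likely use stronger matching and could hand unmatched messages to a generative model instead of the fallback.

```python
import re

# Hypothetical rules: (pattern, fixed response).
RULES = [
    (re.compile(r"\b(hi|hello|hey)\b", re.IGNORECASE), "Hello! How can I help you?"),
    (re.compile(r"\b(bye|goodbye)\b", re.IGNORECASE), "Goodbye!"),
]

# Hypothetical database of pre-written answers keyed by a reference question.
FAQ = {
    "what are your opening hours": "We are open 9am to 5pm, Monday to Friday.",
    "how do i reset my password": "Use the 'Forgot password' link on the login page.",
    "where is my order": "You can track your order from the Orders page.",
}

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def jaccard(a, b):
    """Word-overlap score between two token sets, from 0.0 to 1.0."""
    return len(a & b) / len(a | b) if a | b else 0.0

def reply(message, threshold=0.3):
    # 1. Rule-based layer: fixed patterns for simple, predictable inputs.
    for pattern, response in RULES:
        if pattern.search(message):
            return response
    # 2. Retrieval layer: pick the stored question with the highest overlap.
    tokens = tokenize(message)
    best_question = max(FAQ, key=lambda q: jaccard(tokens, tokenize(q)))
    if jaccard(tokens, tokenize(best_question)) >= threshold:
        return FAQ[best_question]
    # 3. Fallback when neither layer is confident.
    return "Sorry, I don't understand. Could you rephrase?"

print(reply("Hello there"))
print(reply("How can I reset my password?"))
print(reply("Tell me a joke"))
```

Ordering the layers from cheapest and most predictable to most flexible keeps simple queries fast and makes the bot's behavior easier to debug.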

Q 26. What are some emerging trends in NLP?

Several exciting trends are shaping the future of NLP:

- Multimodal Learning: Integrating NLP with other modalities like vision and audio to create more comprehensive and nuanced understanding. Imagine a chatbot that understands both text and images.

- Explainable AI (XAI) for NLP: Improving the transparency and interpretability of NLP models, making them more trustworthy and easier to debug. Knowing *why* a model makes a decision is crucial.

- Few-Shot and Zero-Shot Learning: Training models that can perform well with limited or no labeled data, significantly reducing the need for extensive data annotation.

- Continual Learning: Enabling NLP models to continuously learn and adapt to new data without catastrophic forgetting of previously learned information. This is vital for systems that need to stay updated.

- Neuro-symbolic AI: Combining the strengths of neural networks and symbolic reasoning to create more robust and intelligent systems. This combines statistical learning with explicit knowledge representation.

These advancements are pushing the boundaries of what’s possible with NLP, leading to more powerful, adaptable, and trustworthy applications.

Q 27. Discuss the role of NLP in various industries (e.g., healthcare, finance).

NLP is transforming numerous industries:

- Healthcare: NLP aids in automating medical transcription, analyzing patient records to identify risks and improve diagnoses, and developing chatbots for patient support.

- Finance: NLP assists in sentiment analysis of financial news, fraud detection, and automating customer service through chatbots and virtual assistants.

- Legal: NLP can automate legal document review, contract analysis, and legal research, significantly improving efficiency.

- Customer Service: NLP powers chatbots and virtual assistants, providing 24/7 customer support and automating responses to common queries.

- Education: NLP aids in automated essay grading, personalized learning recommendations, and language learning tools.

The applications are vast, and the impact of NLP continues to grow as models become more sophisticated and datasets larger. The key is to responsibly and ethically integrate NLP into various domains to maximize its benefits.

Q 28. How do you stay updated with the latest advancements in NLP?

Staying current in the rapidly evolving field of NLP requires a multi-faceted approach:

- Reading research papers: Following top-tier conferences like ACL, EMNLP, and NeurIPS is crucial for access to cutting-edge research.

- Attending conferences and workshops: Networking with other researchers and practitioners provides valuable insights and exposure to new ideas.

- Following online resources: Staying updated on blogs, articles, and online communities dedicated to NLP is essential.

- Exploring open-source projects and code: Actively engaging with open-source projects on platforms like GitHub helps understand practical implementation details.

- Taking online courses and workshops: Many excellent online courses are available to deepen your understanding of specific NLP techniques.

- Engaging with the NLP community: Participating in online forums and discussions helps keep your knowledge fresh and broadens your perspectives.

Continuous learning is paramount in this field. A commitment to staying informed ensures you remain at the forefront of NLP innovation.

Key Topics to Learn for Natural Language Processing (NLP) Interviews

- Core NLP Techniques: Understand the fundamentals of tokenization, stemming, lemmatization, part-of-speech tagging, and named entity recognition. Be prepared to discuss their applications and limitations.

- Language Models: Familiarize yourself with different types of language models, including n-grams, recurrent neural networks (RNNs), and transformers (like BERT, GPT). Be ready to explain their architectures and how they generate text or understand meaning.

- Word Embeddings: Grasp the concept of word embeddings (Word2Vec, GloVe, FastText) and their role in representing semantic relationships between words. Be able to compare and contrast different embedding techniques.

- Sentiment Analysis & Opinion Mining: Understand how to extract sentiment (positive, negative, neutral) from text data. Be prepared to discuss different approaches and challenges, such as sarcasm detection.

- Practical Applications: Be ready to discuss real-world applications of NLP, such as chatbots, machine translation, text summarization, question answering systems, and information retrieval. Consider examples from your own experience.

- NLP Evaluation Metrics: Understand common evaluation metrics used in NLP tasks, such as precision, recall, F1-score, BLEU score, and ROUGE score. Know how to interpret these metrics and choose appropriate ones for different tasks.

- Deep Learning for NLP: If relevant to your experience, be prepared to discuss the application of deep learning architectures (CNNs, RNNs, Transformers) to NLP problems. Focus on your understanding of how these models work and their strengths and weaknesses.

- Problem-Solving Approach: Practice approaching NLP problems systematically. Be able to articulate your thought process, including data preprocessing, model selection, training, evaluation, and deployment considerations.
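
For the evaluation metrics topic in the list above, it can help to compute precision, recall, and F1 by hand at least once. The sketch below does so for a binary task; the function name `precision_recall_f1` and the sample labels are made up for illustration.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1

# Hypothetical gold labels and model predictions.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]

precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

Precision answers "of everything flagged positive, how much was right", recall answers "of everything truly positive, how much was found", and F1 is their harmonic mean.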

Next Steps

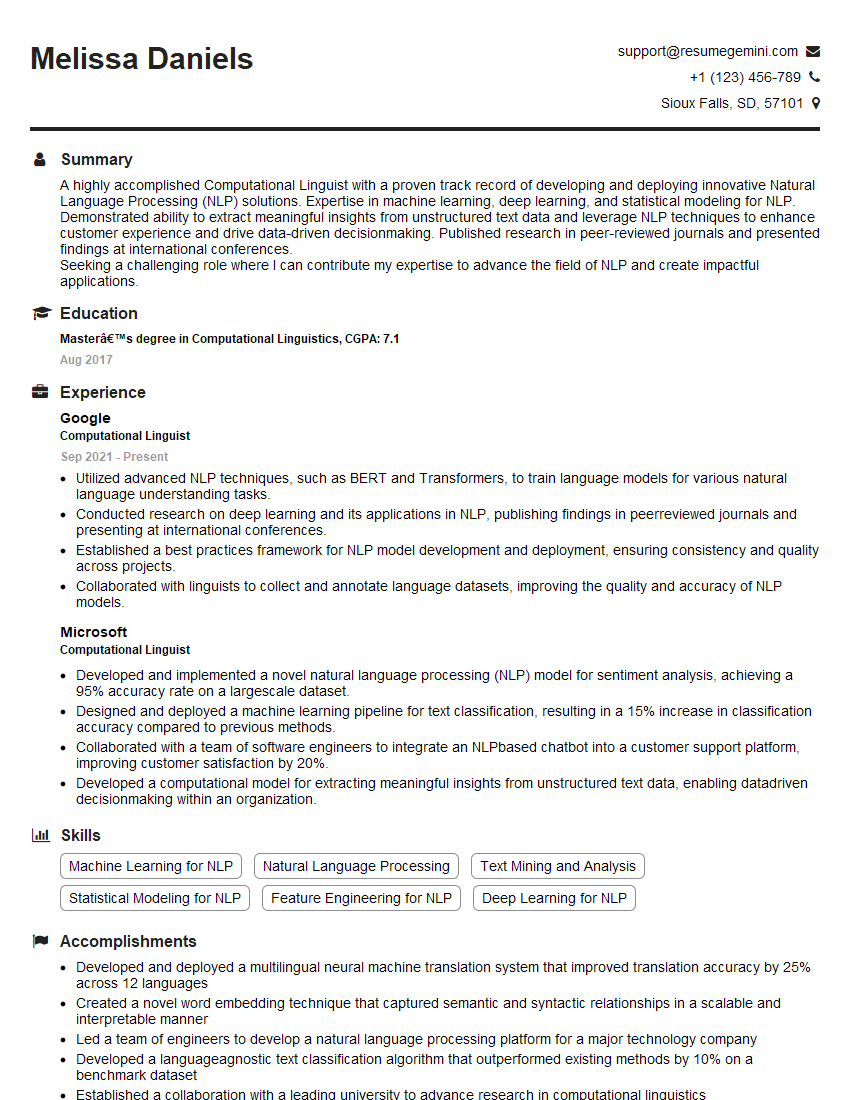

Mastering NLP opens doors to exciting and high-demand roles in various industries. To maximize your job prospects, creating a strong, ATS-friendly resume is crucial. ResumeGemini is a trusted resource that can help you craft a compelling resume highlighting your NLP expertise. They offer examples of resumes tailored specifically to NLP roles, ensuring your skills and experience are presented effectively to potential employers. Invest time in building a professional resume that accurately reflects your capabilities and increases your chances of landing your dream job.
