Interviews are opportunities to demonstrate your expertise, and this guide is here to help you shine. Explore the machine learning algorithm interview questions that employers frequently ask, paired with strategies for crafting responses that set you apart from the competition.

Questions Asked in Knowledge of machine learning algorithms Interview

Q 1. Explain the difference between supervised, unsupervised, and reinforcement learning.

Machine learning algorithms are broadly categorized into three types: supervised, unsupervised, and reinforcement learning. They differ fundamentally in how they learn from data.

- Supervised Learning: This is like having a teacher. You provide the algorithm with labeled data – input data paired with the correct output. The algorithm learns to map inputs to outputs. Think of training a dog: you show it pictures of cats and say “cat,” pictures of dogs and say “dog.” Eventually, it learns to identify them. Examples include image classification, spam detection, and predicting house prices.

Example: Training a model to predict customer churn based on historical data where each data point includes customer features and whether they churned (labeled).

- Unsupervised Learning: Here, you give the algorithm unlabeled data, and it tries to find structure or patterns on its own. It’s like giving a child a box of LEGOs and letting them build whatever they want. The algorithm might discover clusters of similar data points or reduce the dimensionality of the data. Examples include customer segmentation, anomaly detection, and dimensionality reduction using Principal Component Analysis (PCA).

Example: Clustering customers into different groups based on their purchasing behavior without knowing the group labels beforehand.

- Reinforcement Learning: This is like training a robot to navigate a maze. The algorithm learns through trial and error, receiving rewards for good actions and penalties for bad ones. It learns a policy that maximizes its cumulative reward over time. Examples include game playing (e.g., AlphaGo), robotics control, and resource management.

Example: Training an AI agent to play a game like chess by rewarding it for winning and penalizing it for losing.

Q 2. What are the common types of biases in machine learning models?

Biases in machine learning models can significantly impact their fairness, accuracy, and reliability. They arise from various sources:

- Sampling Bias: The training data doesn’t accurately represent the real-world distribution. For example, a model trained only on data from one demographic might perform poorly on others.

- Measurement Bias: Inaccuracies or inconsistencies in how data is collected or measured. Imagine a survey with leading questions that skew responses.

- Confirmation Bias: Data is collected, labeled, or interpreted in a way that confirms the practitioner’s prior beliefs, so the model inherits and reinforces those assumptions.

- Algorithmic Bias: The algorithm itself might be inherently biased, favoring certain outcomes over others. This can be subtle and hard to detect.

- Prejudice Bias: This bias reflects prejudices present in the dataset. For example, if a dataset used to train a facial recognition system is primarily composed of images of white people, the system will likely perform worse on people with different ethnicities.

Identifying and mitigating these biases requires careful data collection, preprocessing, algorithm selection, and ongoing monitoring.

Q 3. Describe the bias-variance tradeoff.

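The bias-variance tradeoff is a fundamental concept in machine learning. It describes the tension between two sources of prediction error: error from overly simple assumptions that cause the model to miss real patterns (bias), and error from excessive sensitivity to the particular training data (variance). Reducing one typically increases the other.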

Bias refers to the error introduced by approximating a real-world problem, which might be complex, by a simplified model. High bias can lead to underfitting, where the model is too simple to capture the underlying patterns in the data. Think of trying to fit a straight line to a clearly curved dataset.

Variance refers to the model’s sensitivity to fluctuations in the training data. High variance can lead to overfitting, where the model learns the training data too well, including its noise, and performs poorly on unseen data. Imagine memorizing the answers to a test instead of understanding the concepts.

The goal is to find a sweet spot – a model with low bias and low variance. This often involves careful model selection, tuning hyperparameters, and using techniques like regularization.
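
The tradeoff is often summarized by the standard decomposition of expected squared error. The sketch below assumes data generated as y = f(x) + ε with zero-mean noise of variance σ², and writes f̂ for the fitted model; these symbols are introduced here for illustration and do not appear in the text above.

```latex
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
  = \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{irreducible noise}}
```

The expectation is taken over training sets and noise. Only the first two terms depend on the model, which is why tuning model complexity trades one against the other.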

Q 4. Explain overfitting and underfitting. How can you address them?

Overfitting occurs when a model learns the training data too well, including its noise and random fluctuations. It performs exceptionally well on the training data but poorly on unseen data. Imagine a student memorizing the answers to a practice test without understanding the material; they’ll do well on the practice test but poorly on the real one.

Underfitting occurs when a model is too simple to capture the underlying patterns in the data. It performs poorly on both the training and unseen data. This is like trying to describe a complex phenomenon with a very simple explanation.

Addressing these issues:

- Overfitting:

  - More data: Gathering more representative data can help.

  - Regularization: Techniques like L1 and L2 regularization add penalties to the model’s complexity, discouraging overfitting.

  - Cross-validation: Evaluating the model on multiple subsets of the data helps detect overfitting and guides the choice of model and hyperparameters.

  - Pruning (for decision trees): Removing unnecessary branches from a decision tree can reduce complexity.

  - Dropout (for neural networks): Randomly ignoring neurons during training improves generalization.

- Underfitting:

  - More complex model: Choosing a model with more capacity (e.g., a higher-degree polynomial in regression).

  - Feature engineering: Creating new features from existing ones that are more informative.

  - Different algorithm: Using a more powerful algorithm suitable for the complexity of the data.

Q 5. Compare and contrast different regression algorithms (linear, logistic, polynomial).

Let’s compare linear, logistic, and polynomial regression:

- Linear Regression: Models the relationship between a dependent variable and one or more independent variables using a linear equation. It predicts a continuous output.

y = mx + c. Simple, interpretable, but assumes a linear relationship.

- Logistic Regression: Despite its name, this is a classification method. It predicts a categorical output (typically binary, 0 or 1) by passing a linear combination of the inputs through a sigmoid function, which maps the result to a probability between 0 and 1.

- Polynomial Regression: Models the relationship between variables using a polynomial equation, allowing for more complex curves. It can capture non-linear relationships but can easily overfit if the degree of the polynomial is too high.

y = a + bx + cx^2 + ...

Comparison:

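- Linear and polynomial regression are both linear in their coefficients; polynomial regression captures non-linear relationships by adding powers of the input as extra features. Logistic regression has a linear decision boundary but a non-linear (sigmoid) output.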

- Linear regression predicts continuous values, while logistic regression predicts probabilities for categories.

- Polynomial regression can capture more complex relationships but is prone to overfitting.

The choice depends on the nature of the data and the problem being solved. Linear regression is suitable for simple linear relationships, logistic regression for binary classification, and polynomial regression for non-linear relationships, but with careful consideration of overfitting.
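
As a rough illustration of how the three prediction rules differ, here is a minimal sketch in plain Python. The function names and the example coefficients are assumptions made for this example; in practice the coefficients would be fitted from data.

```python
import math


def linear_predict(x, m, c):
    """Linear regression: a continuous output y = m*x + c."""
    return m * x + c


def sigmoid(z):
    """Map any real number to the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def logistic_predict_proba(x, m, c):
    """Logistic regression: the same linear score, squashed to a probability."""
    return sigmoid(linear_predict(x, m, c))


def polynomial_predict(x, coeffs):
    """Polynomial regression: y = a + b*x + c*x^2 + ..., with coeffs = [a, b, c, ...]."""
    return sum(coef * x ** power for power, coef in enumerate(coeffs))


# Hypothetical coefficients, chosen only to show the shape of each output.
continuous = linear_predict(2.0, m=3.0, c=1.0)
probability = logistic_predict_proba(0.0, m=1.0, c=0.0)
curved = polynomial_predict(2.0, [1.0, 2.0, 3.0])
```

Note that the naive `sigmoid` above can overflow for very large negative inputs; numerical libraries use more careful formulations.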

Q 6. Explain the concept of regularization and its importance.

Regularization is a technique used to prevent overfitting in machine learning models. It does this by adding a penalty term to the model’s loss function. This penalty discourages the model from learning overly complex relationships by shrinking the magnitude of the model’s coefficients.

Types of Regularization:

- L1 Regularization (Lasso): Adds a penalty proportional to the absolute value of the coefficients. This encourages sparsity – some coefficients become exactly zero, effectively performing feature selection.

- L2 Regularization (Ridge): Adds a penalty proportional to the square of the coefficients. This shrinks the coefficients toward zero but doesn’t necessarily set them to zero.

Importance: Regularization improves the model’s ability to generalize to unseen data by reducing its sensitivity to noise in the training data. This leads to better performance on new, unseen data. The choice between L1 and L2 often depends on the specific problem and whether feature selection is desirable.
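
The different behavior of L1 and L2 can be seen in the simplest possible case. The sketch below assumes a one-feature model y ≈ w*x with no intercept, where both penalized problems have closed-form solutions; the function names and the penalty strength `lam` are illustrative assumptions.

```python
def ols_weight(xs, ys):
    """Unregularized least-squares slope for y ≈ w*x (no intercept)."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)


def ridge_weight(xs, ys, lam):
    """Minimizer of 0.5*sum((y - w*x)^2) + 0.5*lam*w^2 (L2 penalty)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


def lasso_weight(xs, ys, lam):
    """Minimizer of 0.5*sum((y - w*x)^2) + lam*|w| (L1 penalty, soft-thresholding)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    shrunk = max(abs(sxy) - lam, 0.0)
    return (shrunk if sxy >= 0 else -shrunk) / sxx


xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]  # generated from y = 2x
w_ols = ols_weight(xs, ys)
w_ridge_strong = ridge_weight(xs, ys, lam=1000.0)
w_lasso_strong = lasso_weight(xs, ys, lam=30.0)
```

With a strong penalty the ridge weight becomes small but stays non-zero, while the lasso weight is driven exactly to zero, which is the sparsity effect described above.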

Q 7. How does gradient descent work? Explain different variants (batch, stochastic, mini-batch).

Gradient descent is an iterative optimization algorithm used to find the minimum of a function. In machine learning, this function is typically the model’s loss function. The goal is to find the model’s parameters that minimize this loss.

It works by repeatedly taking steps in the direction of the negative gradient (the direction of steepest descent). The size of each step is controlled by a learning rate.

Variants:

- Batch Gradient Descent: Calculates the gradient using the entire training dataset in each iteration. This leads to accurate gradient updates but can be slow for large datasets.

- Stochastic Gradient Descent (SGD): Calculates the gradient using only one data point in each iteration. Each update is much cheaper than in batch GD, but the updates are noisy and can oscillate around the minimum.

- Mini-batch Gradient Descent: A compromise between batch and stochastic GD. It calculates the gradient using a small random subset (mini-batch) of the data in each iteration. This balances speed and accuracy.

The choice of variant depends on the size of the dataset and the desired tradeoff between speed and accuracy. Mini-batch GD is often preferred for its efficiency and relatively stable convergence.
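
The three variants differ only in how many examples feed each update, which the following sketch makes explicit through a single `batch_size` parameter. It assumes a one-feature linear model trained with mean squared error; the function name, learning rate, and toy data are illustrative choices, not a reference implementation.

```python
import random


def gradient_descent(xs, ys, batch_size, learning_rate=0.05, epochs=500, seed=0):
    """Fit y ≈ w*x + b by minimizing mean squared error.

    batch_size == len(xs) gives batch GD, batch_size == 1 gives SGD,
    and anything in between gives mini-batch GD.
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of the mean squared error over the current batch.
            grad_w = sum(2 * (w * xs[i] + b - ys[i]) * xs[i] for i in batch) / len(batch)
            grad_b = sum(2 * (w * xs[i] + b - ys[i]) for i in batch) / len(batch)
            # Step against the gradient, scaled by the learning rate.
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # generated from y = 2x + 1, no noise
w_batch, b_batch = gradient_descent(xs, ys, batch_size=len(xs))
w_sgd, b_sgd = gradient_descent(xs, ys, batch_size=1)
w_mini, b_mini = gradient_descent(xs, ys, batch_size=2)
```

On this noise-free toy problem all three variants should approach w = 2 and b = 1; on real, noisy data SGD and mini-batch GD would keep fluctuating around the minimum unless the learning rate is decayed.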

Q 8. What are the different types of cost functions used in machine learning?

Cost functions, also known as loss functions or objective functions, quantify the error between predicted and actual values in a machine learning model. The goal of training is to minimize this cost. Different types cater to various problem types and model architectures.

- Mean Squared Error (MSE): Common for regression problems, MSE calculates the average squared difference between predicted and actual values. It’s sensitive to outliers.

MSE = (1/n) * Σ(yi - ŷi)^2, where yi is the actual value, ŷi is the predicted value, and n is the number of data points.

- Mean Absolute Error (MAE): Also for regression, MAE calculates the average absolute difference. It’s less sensitive to outliers than MSE.

MAE = (1/n) * Σ|yi - ŷi|

- Binary Cross-Entropy: Used for binary classification problems (two classes). It measures the dissimilarity between predicted probabilities and true labels.

Loss = -Σ[yi * log(ŷi) + (1 - yi) * log(1 - ŷi)], where yi is 0 or 1 (true label) and ŷi is the predicted probability.

- Categorical Cross-Entropy: Extension of binary cross-entropy for multi-class classification problems (more than two classes). It measures the dissimilarity between predicted probability distributions and true class labels.

- Hinge Loss: Often used in Support Vector Machines (SVMs). It penalizes predictions that are not correctly classified by a margin.

Choosing the right cost function depends on the problem type, model, and desired properties. For instance, MSE is suitable for predicting continuous variables like house prices, while binary cross-entropy is ideal for classifying emails as spam or not spam.
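
The formulas above translate almost directly into code. The following is a small sketch in plain Python; the function names, the clipping constant `eps`, and the choice to average the hinge loss are assumptions made for this example.

```python
import math


def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(y - p) for y, p in zip(y_true, y_pred)) / len(y_true)


def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Summed binary cross-entropy, matching the formula above (no 1/n factor)."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total


def hinge(y_true, scores):
    """Mean hinge loss; labels are -1 or +1, scores are raw model outputs."""
    return sum(max(0.0, 1 - y * s) for y, s in zip(y_true, scores)) / len(y_true)


# One large error among four predictions: MSE reacts far more strongly than MAE.
outlier_mse = mse([0, 0, 0, 0], [0, 0, 0, 10])
outlier_mae = mae([0, 0, 0, 0], [0, 0, 0, 10])
```

Here `outlier_mse` is 25.0 while `outlier_mae` is 2.5, which illustrates why MSE is described as sensitive to outliers.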

Q 9. Explain the concept of cross-validation and its purpose.

Cross-validation is a powerful technique for evaluating the performance of a machine learning model and detecting overfitting. It involves splitting the data into multiple subsets (folds), training the model on some folds, and testing it on the remaining fold(s). This process is repeated multiple times, with different folds used for testing each time.

Purpose: Cross-validation provides a more robust estimate of the model’s generalization performance compared to a single train-test split. It helps to reveal overfitting, where the model performs well on the training data but poorly on unseen data. It also gives you a sense of how variable your model’s performance might be across different samples of your data.

Types: There are several types of cross-validation, such as k-fold cross-validation (the most common), leave-one-out cross-validation, and stratified k-fold cross-validation (useful for imbalanced datasets).

Example: In 5-fold cross-validation, the data is divided into 5 folds. The model is trained on 4 folds and tested on the remaining fold. This is repeated 5 times, with each fold serving as the test set once. The average performance across these 5 iterations provides a more reliable estimate.
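
The fold bookkeeping in the example can be sketched as a small generator. This is a minimal illustration with an assumed function name; it uses contiguous folds and does not shuffle, so in practice the data would usually be shuffled (or stratified) first.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for each of k contiguous folds."""
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size


folds = list(k_fold_indices(10, 5))
first_train, first_test = folds[0]
```

For 10 samples and 5 folds, each sample lands in exactly one test fold, and the model would be trained and scored five times, once per `(train, test)` pair.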

Q 10. What is the difference between precision and recall? Explain the F1-score.

Precision and recall are metrics used to evaluate the performance of a classification model, particularly in imbalanced datasets. They offer different perspectives on the model’s accuracy.

- Precision: Out of all the instances predicted as positive, what proportion were actually positive? It answers: “Of all the positive predictions, how many were actually correct?” High precision means a low rate of false positives.

Precision = True Positives / (True Positives + False Positives)

- Recall (Sensitivity): Out of all the actual positive instances, what proportion did the model correctly identify? It answers: “Of all the actual positives, how many did the model find?” High recall means a low rate of false negatives.

Recall = True Positives / (True Positives + False Negatives)

F1-Score: The F1-score is the harmonic mean of precision and recall. It provides a single metric that balances both precision and recall. It’s particularly useful when you need to consider both false positives and false negatives. A high F1-score indicates good performance in both precision and recall.

F1-score = 2 * (Precision * Recall) / (Precision + Recall)

Example: Imagine a spam detection system. High precision means few legitimate emails are classified as spam (low false positives), while high recall means most spam emails are correctly identified (low false negatives). The F1-score balances the importance of both aspects.
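
A short worked computation may help make the three formulas concrete. The sketch below assumes binary labels encoded as 0 and 1; the function name and the toy labels are illustrative.

```python
def precision_recall_f1(y_true, y_pred):
    """Compute precision, recall, and F1 for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# A cautious classifier: it flags only two items, both correctly,
# but misses the other two positives.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 0, 0]
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
```

In this toy case precision is 1.0, recall is 0.5, and F1 is about 0.67: the harmonic mean sits closer to the weaker of the two scores than a simple average would.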

Q 11. Describe the ROC curve and AUC.

The Receiver Operating Characteristic (ROC) curve and Area Under the Curve (AUC) are used to evaluate the performance of a binary classification model. They provide a comprehensive view of the model’s ability to distinguish between classes at various thresholds.

ROC Curve: The ROC curve plots the true positive rate (TPR, also recall) against the false positive rate (FPR) at various classification thresholds. The TPR represents the proportion of actual positives correctly identified, while the FPR represents the proportion of actual negatives incorrectly identified as positives. A good model will have a ROC curve that bows significantly towards the top-left corner.

AUC: The AUC is the area under the ROC curve. It represents the probability that the model will rank a randomly chosen positive instance higher than a randomly chosen negative instance. An AUC of 1 indicates perfect classification, while an AUC of 0.5 indicates random classification. AUC can range from 0 to 1; a value below 0.5 means the model ranks instances worse than chance, which usually signals inverted predictions or labels.

Example: Imagine a medical test diagnosing a disease. A high AUC means the test is highly effective at differentiating between patients with and without the disease, regardless of the chosen threshold for a positive diagnosis.
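
The ranking interpretation of AUC can be computed directly by comparing every positive with every negative. This is a minimal sketch with an assumed function name; it is quadratic in the number of samples, so it is meant for illustration rather than large datasets.

```python
def auc_by_ranking(y_true, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
auc = auc_by_ranking(labels, scores)
```

Here three of the four positive-negative pairs are ordered correctly, so the AUC is 0.75. No classification threshold is involved, which is why AUC is described as threshold-independent.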

Q 12. How do you handle imbalanced datasets?

Imbalanced datasets, where one class significantly outnumbers others, pose a challenge for machine learning models as they tend to be biased towards the majority class. Several techniques can be used to address this:

- Resampling Techniques:

  - Oversampling: Increase the number of instances in the minority class. Techniques include SMOTE (Synthetic Minority Over-sampling Technique), which generates synthetic samples, and random oversampling, which duplicates existing samples. Be cautious about oversampling as it can lead to overfitting.

  - Undersampling: Reduce the number of instances in the majority class. Techniques include random undersampling and more sophisticated methods like Tomek links and NearMiss.

- Cost-Sensitive Learning: Assign higher misclassification costs to the minority class during model training. This encourages the model to pay more attention to the minority class, reducing the impact of the class imbalance.

- Ensemble Methods: Combine multiple models trained on different subsets of the data or with different resampling techniques to improve overall performance. Bagging and boosting algorithms can be particularly effective.

- Algorithm Selection: Some algorithms cope with class imbalance better than others. Tree-based ensembles are often reported to be relatively robust, but no algorithm is immune, so compare candidates using imbalance-aware metrics such as precision, recall, and F1 rather than accuracy alone.

The best approach depends on the specific dataset and the problem. Experimentation and careful evaluation are crucial to find the most effective strategy.
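
Two of the simpler ideas above, cost-sensitive class weights and random oversampling, can be sketched in a few lines. The function names are assumptions for this example, and the weighting rule n / (n_classes * count) is one common heuristic among several.

```python
import random
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class inversely to its frequency: n / (n_classes * count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * count) for cls, count in counts.items()}


def random_oversample(samples, labels, seed=0):
    """Duplicate randomly chosen minority samples until every class matches the largest."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_samples, out_labels = list(samples), list(labels)
    for cls, count in counts.items():
        pool = [s for s, l in zip(samples, labels) if l == cls]
        for _ in range(target - count):
            out_samples.append(rng.choice(pool))
            out_labels.append(cls)
    return out_samples, out_labels


labels = [0] * 8 + [1] * 2      # 80% majority, 20% minority
samples = list(range(10))       # stand-ins for feature vectors
weights = balanced_class_weights(labels)
new_samples, new_labels = random_oversample(samples, labels)
```

Resampling should be applied to the training split only; oversampling before splitting would leak duplicated minority samples into the evaluation data.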

Q 13. Explain different feature scaling techniques (standardization, normalization).

Feature scaling transforms the features of a dataset to a similar range of values. This is important because many machine learning algorithms are sensitive to the scale of features. Unscaled features can lead to inaccurate or inefficient model training.

- Standardization (Z-score normalization): Transforms features to have a mean of 0 and a standard deviation of 1.

z = (x - μ) / σ, where x is the original value, μ is the mean, and σ is the standard deviation. Standardization is effective when the features have a Gaussian distribution.

- Normalization (Min-Max scaling): Transforms features to a range between 0 and 1.

x' = (x - min) / (max - min), where x is the original value, min is the minimum value, and max is the maximum value. Normalization is useful when the features don’t follow a Gaussian distribution.

Example: Imagine a dataset with features ‘age’ (range 18-65) and ‘income’ (range 20000-200000). Without scaling, the ‘income’ feature would dominate the model because of its larger scale. Standardization or normalization would bring both features to a comparable scale, allowing the model to learn their relative importance more accurately.
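
Both formulas are short enough to sketch with the standard library. The function names and sample values are assumptions for this illustration, and the code uses the population standard deviation; a feature with a single constant value would cause a division by zero and needs separate handling.

```python
import statistics


def standardize(values):
    """Z-score scaling: subtract the mean, divide by the standard deviation."""
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values)
    return [(v - mu) / sigma for v in values]


def min_max_scale(values):
    """Min-max scaling to the range [0, 1]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


ages = [18, 30, 45, 65]
incomes = [20000, 50000, 90000, 200000]

ages_scaled = min_max_scale(ages)
incomes_scaled = min_max_scale(incomes)
incomes_standardized = standardize(incomes)
```

After scaling, both features occupy comparable ranges, so neither dominates purely because of its units. In a real pipeline the statistics (mean, standard deviation, min, max) would be computed on the training data and reused for new data.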

Q 14. What is dimensionality reduction and why is it important?

Dimensionality reduction is the process of reducing the number of features (variables) in a dataset while preserving important information. It’s crucial for several reasons:

- Improved Model Performance: Reducing irrelevant or redundant features can lead to better model accuracy, faster training times, and reduced overfitting.

- Reduced Computational Cost: Processing fewer features makes the model computationally less expensive and faster to train.

- Data Visualization: Dimensionality reduction techniques can help to visualize high-dimensional data in lower dimensions (e.g., 2D or 3D), making it easier to understand the data patterns.

- Reduced Storage Requirements: Storing and managing fewer features saves storage space.

Techniques: Popular dimensionality reduction techniques include:

- Principal Component Analysis (PCA): A linear transformation that projects the data onto a lower-dimensional subspace while maximizing variance. It identifies the principal components, which are orthogonal directions representing the most significant variation in the data.

- Linear Discriminant Analysis (LDA): A supervised technique that maximizes the separation between classes. It’s effective when dealing with classification problems.

- t-distributed Stochastic Neighbor Embedding (t-SNE): A non-linear technique that is particularly useful for visualizing high-dimensional data. It’s computationally expensive, but it often produces insightful visualizations.

Choosing the right dimensionality reduction technique depends on the nature of the data and the problem being addressed. PCA is widely used for unsupervised dimensionality reduction, while LDA is often preferred for supervised tasks. t-SNE is mainly used for data visualization.

Q 15. Compare PCA and LDA.

Both Principal Component Analysis (PCA) and Linear Discriminant Analysis (LDA) are dimensionality reduction techniques, but they serve different purposes. PCA focuses on maximizing variance, finding the directions of greatest spread in the data, regardless of class labels. LDA, on the other hand, aims to maximize the separation between different classes. Think of it like this: PCA finds the axes that best describe the overall data spread, while LDA finds the axes that best separate different groups within the data.

PCA: Imagine a scatter plot of data points. PCA finds the principal components, new axes that capture the most variance in the data. The first principal component accounts for the largest amount of variance, the second accounts for the second largest, and so on. We can then project the data onto these new axes, reducing the dimensionality while retaining as much information as possible. This is useful for noise reduction and data visualization.

LDA: Now imagine the same scatter plot, but the points are colored according to their class labels (e.g., red for class A, blue for class B). LDA finds linear combinations of the features that best separate these classes. It tries to maximize the distance between the means of different classes while minimizing the variance within each class. This is particularly useful for classification tasks because it enhances class separability.

In short: PCA is unsupervised (ignores class labels), focuses on variance, and is good for dimensionality reduction and visualization. LDA is supervised (uses class labels), focuses on class separability, and is better suited for classification tasks.
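
The contrast can be made concrete on a tiny two-dimensional dataset where the direction of greatest spread is not the direction that separates the classes. The sketch below uses closed-form 2-D formulas (the principal-axis angle for PCA and Fisher's two-class discriminant for LDA); the function names and the toy points are assumptions chosen for illustration.

```python
import math


def mean_2d(points):
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def scatter_2d(points):
    """Return (sxx, sxy, syy): sums of squared deviations from the mean."""
    mx, my = mean_2d(points)
    sxx = sum((x - mx) ** 2 for x, _ in points)
    sxy = sum((x - mx) * (y - my) for x, y in points)
    syy = sum((y - my) ** 2 for _, y in points)
    return sxx, sxy, syy


def pca_first_component(points):
    """Unit vector along the direction of greatest variance (labels ignored)."""
    sxx, sxy, syy = scatter_2d(points)
    angle = 0.5 * math.atan2(2 * sxy, sxx - syy)
    return (math.cos(angle), math.sin(angle))


def lda_direction(class_a, class_b):
    """Fisher's direction: inverse within-class scatter times the mean difference."""
    axx, axy, ayy = scatter_2d(class_a)
    bxx, bxy, byy = scatter_2d(class_b)
    sxx, sxy, syy = axx + bxx, axy + bxy, ayy + byy
    (a_mx, a_my), (b_mx, b_my) = mean_2d(class_a), mean_2d(class_b)
    dx, dy = b_mx - a_mx, b_my - a_my
    det = sxx * syy - sxy * sxy
    # Closed-form inverse of the 2x2 scatter matrix applied to (dx, dy).
    wx = (syy * dx - sxy * dy) / det
    wy = (-sxy * dx + sxx * dy) / det
    norm = math.hypot(wx, wy)
    return (wx / norm, wy / norm)


# Both classes spread widely along x but are separated along y.
class_a = [(-2.0, 0.1), (-1.0, -0.1), (1.0, -0.1), (2.0, 0.1)]
class_b = [(-2.0, 1.1), (-1.0, 0.9), (1.0, 0.9), (2.0, 1.1)]

pc1 = pca_first_component(class_a + class_b)
lda = lda_direction(class_a, class_b)
```

On these points the first principal component lies along the x-axis (where the data spreads most), while the LDA direction lies along the y-axis (where the classes actually differ). Projecting onto the first principal component alone would therefore mix the two classes.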

Q 16. Explain different clustering algorithms (K-means, hierarchical, DBSCAN).

Clustering algorithms group similar data points together. Several popular algorithms exist, each with its own strengths and weaknesses:

- K-means: This is a partitioning algorithm that aims to partition n observations into k clusters, where each observation belongs to the cluster with the nearest mean (centroid). It iteratively refines cluster assignments until convergence. The biggest challenge is choosing the optimal k (number of clusters), often using techniques like the elbow method or silhouette analysis. Imagine grouping customers based on their purchase history – k-means could be used to identify distinct customer segments.

- Hierarchical Clustering: This builds a hierarchy of clusters. Agglomerative hierarchical clustering starts with each point as a separate cluster and iteratively merges the closest clusters. Divisive hierarchical clustering starts with all points in one cluster and recursively splits it. This method produces a dendrogram (tree-like diagram) visualizing the cluster hierarchy. Hierarchical clustering can be useful in understanding the relationships between different clusters and their relative similarity.

- DBSCAN (Density-Based Spatial Clustering of Applications with Noise): This algorithm groups together points that are closely packed together (points with many nearby neighbors), marking as outliers points that lie alone in low-density regions. It’s particularly robust to noise and can discover clusters of arbitrary shapes, unlike k-means which assumes spherical clusters. Imagine identifying densely populated areas on a map – DBSCAN would be well-suited for this task.
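
The iterative refinement in k-means alternates between an assignment step and an update step. Here is a minimal one-dimensional sketch; the function name, the fixed starting centroids, and the toy values are assumptions, and real implementations typically use smarter initialization and multiple restarts.

```python
def k_means_1d(values, centroids, max_iters=100):
    """Lloyd's algorithm on 1-D data, starting from the given centroids."""
    centroids = list(centroids)
    clusters = [[] for _ in centroids]
    for _ in range(max_iters):
        # Assignment step: attach each value to its nearest centroid.
        clusters = [[] for _ in centroids]
        for v in values:
            nearest = min(range(len(centroids)), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Update step: move each centroid to the mean of its cluster.
        new_centroids = [
            sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # assignments can no longer change
        centroids = new_centroids
    return centroids, clusters


values = [1.0, 1.5, 2.0, 10.0, 10.5, 11.0]
centroids, clusters = k_means_1d(values, centroids=[1.0, 2.0])
```

Even from this poor start, with both initial centroids inside the left group, the centroids settle at 1.5 and 10.5 after a few iterations. The result of k-means in general depends on initialization, which is one reason the choice of k and starting points matters.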

Q 17. What are support vector machines (SVMs)? How do they work?

Support Vector Machines (SVMs) are powerful supervised learning models used for both classification and regression. The core idea is to find the optimal hyperplane that maximally separates data points of different classes. In the simplest case (linearly separable data), this hyperplane is a line (in 2D) or a plane (in 3D) that divides the data into two groups with the largest possible margin.

For non-linearly separable data, SVMs use the kernel trick. This involves mapping the data into a higher-dimensional space where it becomes linearly separable. Common kernels include linear, polynomial, radial basis function (RBF), and sigmoid. The choice of kernel is crucial and depends on the data characteristics.

How they work: SVMs aim to find the support vectors – the data points closest to the hyperplane. These support vectors define the margin, and the hyperplane is positioned to maximize this margin. New data points are classified based on which side of the hyperplane they fall on.

Example: Imagine classifying emails as spam or not spam. An SVM can learn a decision boundary (hyperplane) in a high-dimensional feature space (based on word frequencies, sender information, etc.) to separate spam from non-spam emails effectively.
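
To show how kernels and support vectors enter the prediction, here is a sketch of the decision rule only, not the training procedure. The support vectors, coefficients (`alphas`), and bias below are hypothetical values chosen by hand; a real SVM would obtain them by solving the margin-maximization problem.

```python
import math


def linear_kernel(x, z):
    """Plain dot product."""
    return sum(a * b for a, b in zip(x, z))


def rbf_kernel(x, z, gamma=1.0):
    """exp(-gamma * ||x - z||^2): 1 for identical points, near 0 for distant ones."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)


def svm_decision(x, support_vectors, labels, alphas, bias, kernel):
    """Return +1 or -1 from the sign of sum_i alpha_i * y_i * K(sv_i, x) + bias."""
    score = sum(
        a * y * kernel(sv, x) for sv, y, a in zip(support_vectors, labels, alphas)
    )
    return 1 if score + bias >= 0 else -1


# Hypothetical, hand-picked model: one support vector per class.
support_vectors = [(0.0, 0.0), (2.0, 2.0)]
labels = [-1, 1]
alphas = [1.0, 1.0]
bias = 0.0

near_positive = svm_decision((1.9, 2.1), support_vectors, labels, alphas, bias, rbf_kernel)
near_negative = svm_decision((0.1, 0.0), support_vectors, labels, alphas, bias, rbf_kernel)
```

Only the support vectors appear in the decision rule, and the kernel replaces the explicit mapping to a higher-dimensional space: the data is never transformed, only compared pairwise.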

Q 18. Explain the concept of decision trees and ensemble methods (Random Forest, Gradient Boosting).

Decision Trees: These are tree-like models where each internal node represents a test on an attribute, each branch represents the outcome of the test, and each leaf node represents a class label or a value. They recursively partition the data based on feature values to create a hierarchical structure for making predictions. Decision trees are easy to understand and interpret, but they can be prone to overfitting (performing well on training data but poorly on unseen data).

Ensemble Methods: These methods combine multiple decision trees to improve accuracy and robustness. Two popular examples are:

- Random Forest: This builds multiple decision trees on different subsets of the data and features. The final prediction is made by aggregating the predictions from all trees (e.g., majority voting for classification). This reduces overfitting and improves generalization.

- Gradient Boosting: This sequentially builds trees, where each subsequent tree corrects the errors made by the previous trees. It uses a gradient descent algorithm to minimize a loss function, improving the overall model accuracy. Gradient boosting methods like XGBoost, LightGBM, and CatBoost are known for their high performance in many machine learning competitions.

Example: Imagine predicting customer churn. A random forest could combine multiple decision trees trained on different subsets of customer data to predict which customers are likely to churn with greater accuracy than a single decision tree.
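
Two building blocks of these methods are easy to sketch: choosing a split by impurity, and aggregating votes. The code below assumes Gini impurity as the split criterion and a single numeric feature; the function names and toy data are illustrative, and a full tree would apply `best_split` recursively.

```python
def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def best_split(values, labels):
    """Return (threshold, weighted_gini) for the best split 'value <= threshold'."""
    best = (None, float("inf"))
    for threshold in sorted(set(values))[:-1]:
        left = [l for v, l in zip(values, labels) if v <= threshold]
        right = [l for v, l in zip(values, labels) if v > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best[1]:
            best = (threshold, score)
    return best


def majority_vote(predictions):
    """Aggregate class predictions from several trees, as a random forest does."""
    return max(set(predictions), key=predictions.count)


# Hypothetical feature (e.g., months as a customer) and churn labels.
tenure = [1, 2, 3, 10, 11, 12]
churned = [1, 1, 1, 0, 0, 0]
threshold, impurity = best_split(tenure, churned)
forest_prediction = majority_vote([1, 0, 1])
```

Here the split at 3 separates the two classes perfectly, giving a weighted impurity of 0. With tied votes `majority_vote` returns an arbitrary winner, so an odd number of trees or probability averaging is usually preferable.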

Q 19. What are neural networks? Describe the architecture of a simple perceptron.

Neural networks are computational models inspired by the biological neural networks in our brains. They consist of interconnected nodes (neurons) organized in layers. These layers process information through weighted connections, performing complex computations.

Simple Perceptron: This is the simplest type of neural network, a single-layer network with one input layer, one output layer, and no hidden layers. Each input is weighted, summed, and passed through an activation function (e.g., a step function or sigmoid function) to produce an output. The perceptron learns by adjusting the weights based on the errors in its predictions.

Architecture:

- Input Layer: Receives the input features.

- Weights: Each connection between neurons has an associated weight that determines the strength of the connection.

- Summation: The weighted inputs are summed.

- Activation Function: Maps the weighted sum to the neuron’s output. In a single perceptron with a step function, the decision boundary is still linear; it is in multi-layer networks that non-linear activations allow complex patterns to be learned.

- Output Layer: Produces the final prediction.

Imagine a perceptron trying to classify images of cats and dogs. The input layer would receive pixel values, each connection would have a weight representing the importance of that pixel in the classification, and the output layer would produce a prediction (cat or dog).
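
The weight-adjustment rule can be sketched on a much smaller problem than image classification. The example below trains a perceptron with a step activation on the logical AND function; the function names, the learning rate of 1.0, and the number of epochs are assumptions chosen to keep the arithmetic simple.

```python
def step(z):
    """Step activation: fire (1) when the weighted sum is non-negative."""
    return 1 if z >= 0 else 0


def predict(x, weights, bias):
    return step(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_perceptron(samples, targets, learning_rate=1.0, epochs=20):
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, targets):
            error = target - predict(x, weights, bias)
            # Perceptron rule: shift weights toward the correct answer on mistakes.
            weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
            bias += learning_rate * error
    return weights, bias


and_inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
and_targets = [0, 0, 0, 1]
weights, bias = train_perceptron(and_inputs, and_targets)
predictions = [predict(x, weights, bias) for x in and_inputs]
```

Because AND is linearly separable, the learned weights classify all four inputs correctly. A single perceptron cannot learn XOR, which is not linearly separable; that limitation is what hidden layers address.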

Q 20. Explain backpropagation.

Backpropagation is an algorithm used to train neural networks. It’s a method for computing gradients of the loss function with respect to the network’s weights. The goal is to adjust the weights to minimize the loss, improving the network’s performance.

How it works:

- Forward Pass: The input data is fed forward through the network, producing predictions.

- Loss Calculation: The difference between the predicted output and the actual target is calculated using a loss function (e.g., mean squared error).

- Backward Pass: The error is propagated back through the network, computing the gradient of the loss function with respect to each weight. This involves applying the chain rule of calculus.

- Weight Update: The weights are updated using an optimization algorithm (e.g., gradient descent) to minimize the loss. This is typically done using an update rule like:

w_new = w_old - learning_rate * gradient

This process is repeated iteratively until the loss is sufficiently minimized or a certain number of epochs (iterations through the training data) is reached. Backpropagation efficiently computes the gradients needed to adjust the weights and learn the optimal parameters for the network.
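
The four steps can be traced by hand on a network small enough to differentiate on paper. The sketch below assumes one input, one sigmoid hidden unit, a linear output, and a squared-error loss; the parameter values are arbitrary, and the numerical gradient is included only as a sanity check on the chain-rule result.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)   # hidden activation
    y_hat = w2 * h + b2        # linear output
    return h, y_hat


def loss(params, x, y):
    _, y_hat = forward(params, x)
    return 0.5 * (y_hat - y) ** 2


def backward(params, x, y):
    """Gradients of the loss w.r.t. (w1, b1, w2, b2) via the chain rule."""
    w1, b1, w2, b2 = params
    h, y_hat = forward(params, x)
    d_y_hat = y_hat - y            # dL/dy_hat
    d_w2 = d_y_hat * h
    d_b2 = d_y_hat
    d_h = d_y_hat * w2             # error propagated back to the hidden unit
    d_z = d_h * h * (1.0 - h)      # through the sigmoid derivative
    return [d_z * x, d_z, d_w2, d_b2]


def numerical_gradient(params, x, y, eps=1e-6):
    """Central finite differences, used here only to check backward()."""
    grads = []
    for i in range(len(params)):
        up, down = list(params), list(params)
        up[i] += eps
        down[i] -= eps
        grads.append((loss(up, x, y) - loss(down, x, y)) / (2 * eps))
    return grads


params = [0.5, -0.3, 0.8, 0.1]   # arbitrary starting values for w1, b1, w2, b2
x, y = 1.5, 1.0
analytic = backward(params, x, y)
numeric = numerical_gradient(params, x, y)

# Weight update: w_new = w_old - learning_rate * gradient
learning_rate = 0.1
updated = [p - learning_rate * g for p, g in zip(params, analytic)]
```

The analytic and numerical gradients should agree to several decimal places, and the loss at `updated` should be lower than at `params`. Deep learning frameworks automate the backward pass, but they apply the same chain-rule bookkeeping.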

Q 21. What are convolutional neural networks (CNNs) and their applications?

Convolutional Neural Networks (CNNs) are a specialized type of neural network designed for processing grid-like data, such as images and videos. They use convolutional layers, which apply filters (kernels) to the input data to extract features. These filters detect patterns like edges, corners, and textures.

Key components:

- Convolutional Layers: Apply filters to the input data, extracting features.

- Pooling Layers: Reduce the dimensionality of the feature maps, making the network more robust to variations in input and reducing computational complexity.

- Fully Connected Layers: Connect all neurons from the previous layer to all neurons in the current layer, performing classification or regression.

Applications: CNNs have revolutionized many fields, including:

- Image Classification: Identifying objects in images (e.g., cats, dogs, cars).

- Object Detection: Locating and classifying objects within images (e.g., self-driving cars).

- Image Segmentation: Partitioning an image into meaningful regions (e.g., medical image analysis).

- Video Analysis: Analyzing video content for action recognition or event detection.

Imagine a self-driving car using a CNN to detect pedestrians and traffic signs. The CNN would process images from the car’s camera, identifying and classifying different objects in real-time to ensure safe navigation.
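
What a convolutional filter and a pooling layer actually compute can be shown on a tiny grid. The sketch below uses plain Python lists; the function names, the 5x5 image, and the hand-written vertical-edge filter are assumptions for illustration, whereas a trained CNN would learn its filter values.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding, stride 1).

    Like most deep learning libraries, this computes cross-correlation:
    the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    return [
        [
            max(feature_map[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]


# A 5x5 image with a vertical edge: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 0, 1, 1] for _ in range(5)]
# Hand-written vertical-edge filter (hypothetical; real filters are learned).
edge_kernel = [[-1, 0, 1],
               [-1, 0, 1],
               [-1, 0, 1]]

feature_map = conv2d_valid(image, edge_kernel)
pooled = max_pool2d(feature_map)
```

The feature map is 0 where the image is flat and 3 where the filter straddles the edge, and pooling condenses it to a single strong response. The same filter weights are reused at every position, which is why convolutional layers need far fewer parameters than fully connected ones.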

Q 22. What are recurrent neural networks (RNNs) and their applications?

Recurrent Neural Networks (RNNs) are a type of artificial neural network designed to work with sequential data, such as time series, text, and speech. Unlike feedforward neural networks that process data in a single pass, RNNs have loops in their architecture, allowing information to persist across time steps. This allows them to ‘remember’ previous inputs, making them suitable for tasks where context is crucial.

Imagine reading a sentence. You need to remember the earlier words to understand the meaning of the later words. RNNs work similarly, using their internal state to maintain information across the sequence. A simple RNN updates its state at each time step with a function that combines the current input and the previous state.

Applications: RNNs find wide applications in:

- Natural Language Processing (NLP): Machine translation, text summarization, sentiment analysis, chatbot development

- Time Series Analysis: Stock price prediction, weather forecasting, anomaly detection

- Speech Recognition: Converting spoken language into text

- Image Captioning: Generating descriptive captions for images

However, standard RNNs suffer from the vanishing gradient problem, which limits their ability to learn long-range dependencies. More advanced architectures like Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU) address this limitation, significantly improving their performance on longer sequences.

Q 23. Explain the concept of attention mechanisms in neural networks.

Attention mechanisms are a crucial component of modern neural networks, particularly in sequence-to-sequence tasks. They allow the network to focus on different parts of the input sequence when generating the output, rather than relying solely on the final hidden state. Think of it as a ‘spotlight’ that highlights the most relevant parts of the input.

For example, in machine translation, the attention mechanism allows the network to pay more attention to the words in the source sentence that are most relevant to the current word being generated in the target sentence. This leads to more accurate and fluent translations.

Technically, an attention mechanism calculates a weight for each input element, indicating its importance for the current output step. These weights are used to form a weighted sum of the input elements, which then feeds into generating the output. The weights are not fixed: they are computed for each input by a scoring function whose parameters are learned during training, allowing the network to determine automatically which parts of the input are most relevant.
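As a rough illustration of that computation, the sketch below scores each input vector against a query with a dot product, turns the scores into weights with a softmax, and builds the weighted sum. Dot-product scoring is only one common choice and is an assumption here; the vectors are made up for the example.

```python
import math


def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, inputs):
    """Return (attention weights, weighted sum of inputs) for one query."""
    scores = [sum(q * x for q, x in zip(query, vec)) for vec in inputs]
    weights = softmax(scores)
    dim = len(inputs[0])
    context = [sum(w * vec[d] for w, vec in zip(weights, inputs))
               for d in range(dim)]
    return weights, context


# Three input positions, each a 2-dimensional vector (illustrative values).
inputs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Query representing the output step currently being generated.
query = [2.0, 0.0]

weights, context = attend(query, inputs)
```

Inputs that align with the query (the first and third) receive equal, larger weights than the second, and the weights always sum to 1.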

Example: In a sequence-to-sequence model, the attention weights might be represented by a matrix where each row represents a time step in the output sequence, and each column represents a time step in the input sequence. The value in each cell indicates the attention weight between the corresponding input and output time steps.

Q 24. How do you evaluate the performance of a machine learning model?

Evaluating a machine learning model involves assessing its performance on unseen data. The choice of metrics depends heavily on the type of problem (classification, regression, clustering, etc.).

- Classification: Accuracy, precision, recall, F1-score, AUC-ROC (Area Under the Receiver Operating Characteristic Curve)

- Regression: Mean Squared Error (MSE), Root Mean Squared Error (RMSE), Mean Absolute Error (MAE), R-squared

- Clustering: Silhouette score, Davies-Bouldin index
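For the regression metrics listed above, the definitions are short enough to write out directly. The following is a minimal sketch with made-up numbers; in practice a library such as scikit-learn provides these functions.

```python
import math


def regression_metrics(y_true, y_pred):
    """Compute MSE, RMSE, MAE and R-squared from paired true/predicted values."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(e * e for e in errors)           # residual sum of squares
    mse = ss_res / n
    mae = sum(abs(e) for e in errors) / n
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    # R-squared is undefined when all true values are identical (ss_tot == 0);
    # this sketch does not handle that case.
    r2 = 1 - ss_res / ss_tot
    return {"mse": mse, "rmse": math.sqrt(mse), "mae": mae, "r2": r2}


# Illustrative values only.
metrics = regression_metrics([3.0, 5.0, 7.0, 9.0], [2.0, 5.0, 8.0, 9.0])
```

Here two of the four predictions are off by 1, giving an MSE of 0.5, an MAE of 0.5, and an R-squared of 0.9.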

It’s crucial to use appropriate evaluation techniques, such as:

- Train-Test Split: Dividing the data into training and testing sets. The model is trained on the training set and evaluated on the test set to assess its generalization ability.

- Cross-Validation: A more robust technique where the data is divided into multiple folds, and the model is trained and evaluated multiple times, using a different fold as the test set each time. Averaging the results makes the performance estimate less dependent on any single split.

- Confusion Matrix: A table summarizing the model’s performance on a classification problem, showing the counts of true positives, true negatives, false positives, and false negatives.
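The techniques above can be sketched without any library. The example below counts the four confusion-matrix cells for a binary problem, derives precision, recall, and F1 from them, and generates k-fold train/test index splits. The labels are invented for illustration, and the fold generator does no shuffling or stratification, which a real evaluation would usually want.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fp, fn, tn) for a binary classification problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    return tp, fp, fn, tn


def precision_recall_f1(tp, fp, fn):
    """Derive precision, recall and F1; return 0.0 where a ratio is undefined."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx); every sample lands in exactly one test fold."""
    indices = list(range(n_samples))
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size


# Illustrative labels: 3 positives, 5 negatives.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]

tp, fp, fn, tn = confusion_counts(y_true, y_pred)
precision, recall, f1 = precision_recall_f1(tp, fp, fn)
accuracy = (tp + tn) / len(y_true)
folds = list(k_fold_indices(len(y_true), k=4))
```

Accuracy here is 0.75 while precision and recall are both 2/3, a small reminder that accuracy alone can look better than the model's performance on the positive class.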

The choice of metric and evaluation technique is problem-specific, and understanding the trade-offs between different metrics is vital for selecting the best model.

Q 25. What are some common challenges faced in implementing machine learning models?

Implementing machine learning models presents several common challenges:

- Data quality: Noisy, incomplete, or inconsistent data can significantly impact model performance. Data cleaning and preprocessing are crucial steps.

- Data bias: Biased data can lead to unfair or inaccurate models. Identifying and mitigating bias is critical for ethical and reliable machine learning.

- Overfitting: The model learns the training data too well and performs poorly on unseen data. Techniques like regularization, cross-validation, and early stopping can help prevent overfitting.

- Underfitting: The model is too simple to capture the underlying patterns in the data. Increasing model complexity, adding more features, or using a more powerful algorithm can help.

- Computational resources: Training complex models can require significant computational resources and time.

- Model interpretability: Understanding why a model makes specific predictions can be challenging, especially for complex models like deep neural networks. Techniques like SHAP values or LIME can improve interpretability.

- Deployment and monitoring: Deploying and monitoring models in production environments requires careful planning and infrastructure.
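Of the overfitting remedies mentioned in the list above, early stopping is the easiest to show in code. The sketch below applies a patience rule to a list of per-epoch validation losses; the `patience` and `min_delta` parameters and the loss values are illustrative assumptions, not a prescribed setup.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return (stop_epoch, best_epoch) under a simple patience rule.

    Training stops once the validation loss has failed to improve by more
    than min_delta for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    # Patience never ran out: training used every epoch.
    return len(val_losses) - 1, best_epoch


# Made-up validation losses: improving at first, then getting worse.
val_losses = [0.90, 0.70, 0.60, 0.62, 0.65, 0.70]
stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=2)
```

With these values training stops at epoch 4, and the weights saved at epoch 2 (the lowest validation loss) would be the ones to keep.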

Q 26. Describe your experience with a specific machine learning project. What challenges did you encounter and how did you overcome them?

In a previous project, I worked on a customer churn prediction model for a telecommunications company. We used a variety of features, including customer demographics, usage patterns, and billing information, to build a model that could predict which customers were likely to churn. We initially used a logistic regression model, which gave a reasonable baseline performance. However, we found that the model struggled to capture the complex relationships between the features.

Challenges: The main challenges were handling imbalanced classes (many more non-churning customers than churning customers) and feature engineering. The imbalanced data led to a biased model that performed poorly on the minority class (churning customers). We also needed to engineer new features from the raw data to improve model accuracy.

Solutions: We addressed the class imbalance using techniques such as oversampling the minority class and using cost-sensitive learning. For feature engineering, we experimented with different feature transformations and created interaction terms. We also explored different models, including Random Forests and Gradient Boosting Machines, which proved more effective in capturing the non-linear relationships in the data. Ultimately, a Gradient Boosting model provided the best performance, achieving a significant improvement in the recall score for the churning customers, leading to a better targeting strategy for retention efforts.
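For readers unfamiliar with oversampling, here is a minimal sketch of random oversampling for a binary problem. It is a generic illustration with made-up rows, not the exact procedure used in the project; the function name and parameters are hypothetical.

```python
import random


def random_oversample(rows, labels, minority_label, seed=0):
    """Duplicate random minority rows until both classes have the same count.

    Assumes a binary problem. Apply this to the training split only, so that
    duplicated rows never leak into the validation or test data.
    """
    rng = random.Random(seed)
    minority = [r for r, y in zip(rows, labels) if y == minority_label]
    if not minority:
        raise ValueError("no rows with the minority label")
    majority_count = sum(1 for y in labels if y != minority_label)
    extra_needed = max(0, majority_count - len(minority))

    new_rows = list(rows)
    new_labels = list(labels)
    for _ in range(extra_needed):
        new_rows.append(rng.choice(minority))
        new_labels.append(minority_label)
    return new_rows, new_labels


# Illustrative data: 8 non-churners (label 0) and 2 churners (label 1).
rows = [[i] for i in range(10)]
labels = [0] * 8 + [1] * 2

balanced_rows, balanced_labels = random_oversample(rows, labels, minority_label=1)
```

Cost-sensitive learning attacks the same problem from the other side: instead of duplicating rows, it gives errors on the minority class a larger weight in the loss.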

Q 27. What are your preferred tools and technologies for machine learning?

My preferred tools and technologies for machine learning include:

- Programming Languages: Python (with libraries like NumPy, Pandas, Scikit-learn, TensorFlow, PyTorch)

- Cloud Platforms: Google Cloud Platform (GCP), Amazon Web Services (AWS), Microsoft Azure (for scalable model training and deployment)

- Model Deployment Tools: TensorFlow Serving, Kubeflow, Docker

- Version Control: Git

- Data Visualization Tools: Matplotlib, Seaborn

- Big Data Technologies: Spark (for handling large datasets)

The choice of tools depends on the specific project requirements and the scale of the data.

Q 28. Explain your understanding of model deployment and monitoring.

Model deployment refers to the process of putting a trained machine learning model into a production environment where it can be used to make predictions on new data. This involves integrating the model into a system, often using APIs or web services, so other applications can access it.

Model monitoring is equally important. Once a model is deployed, it’s crucial to continuously monitor its performance. This involves tracking key metrics, such as accuracy, latency, and resource usage. Over time, the model’s performance may degrade due to changes in the input data distribution (data drift), changes in the relationship between inputs and the target (concept drift), or other factors. Monitoring allows us to detect such degradation and take appropriate action, such as retraining the model or adjusting its parameters.
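One way to watch for a shifting input distribution is to compare a feature's values in production against a reference sample from training. The sketch below uses the Population Stability Index (PSI) for this; PSI is one common choice rather than the only option, and the bin count, smoothing constant, and data here are assumptions for illustration.

```python
import math


def psi(reference, current, n_bins=5, eps=1e-6):
    """Population Stability Index between two samples of one numeric feature.

    Bins are equal-width over the reference range; values outside that range
    are clamped into the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins or 1.0  # avoid zero width for constant features

    def bin_fractions(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), n_bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    ref_frac = bin_fractions(reference)
    cur_frac = bin_fractions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_frac, cur_frac))


# Made-up feature values: training-time reference and a shifted production sample.
reference = [i / 10 for i in range(100)]
shifted = [v + 3 for v in reference]

psi_same = psi(reference, reference)
psi_shifted = psi(reference, shifted)
```

A PSI near zero means the two samples fill the bins in similar proportions; a larger value signals a shift worth investigating. The alert threshold is a project-specific decision.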

A robust deployment and monitoring strategy ensures that the model remains accurate and reliable over time and that any issues are identified and addressed quickly.

Key Topics to Learn for Machine Learning Algorithms Interviews

Mastering machine learning algorithms is crucial for interview success. Focus your preparation on a deep understanding of both theory and practical application. This will allow you to confidently discuss your skills and experience with potential employers.

- Supervised Learning: Understand the core concepts of regression (linear, polynomial) and classification (logistic regression, SVM, decision trees, Naive Bayes). Explore the bias-variance tradeoff and techniques for model evaluation (e.g., cross-validation, precision-recall).

- Unsupervised Learning: Grasp the fundamentals of clustering (k-means, hierarchical clustering) and dimensionality reduction (PCA, t-SNE). Be prepared to discuss applications in anomaly detection and recommendation systems.

- Deep Learning: Familiarize yourself with neural networks, including feedforward networks, convolutional neural networks (CNNs) for image processing, and recurrent neural networks (RNNs) for sequential data. Understand the concept of backpropagation and different activation functions.

- Model Selection and Evaluation: Develop a strong understanding of techniques for model selection, including hyperparameter tuning (grid search, random search), and performance evaluation metrics (accuracy, precision, recall, F1-score, AUC-ROC).

- Practical Applications: Prepare examples from your projects or experience that demonstrate your ability to apply these algorithms to solve real-world problems. Focus on the challenges faced, the solutions implemented, and the results achieved.

- Algorithmic Complexity and Efficiency: Be ready to discuss the time and space complexity of different algorithms and how to optimize them for performance.

Next Steps

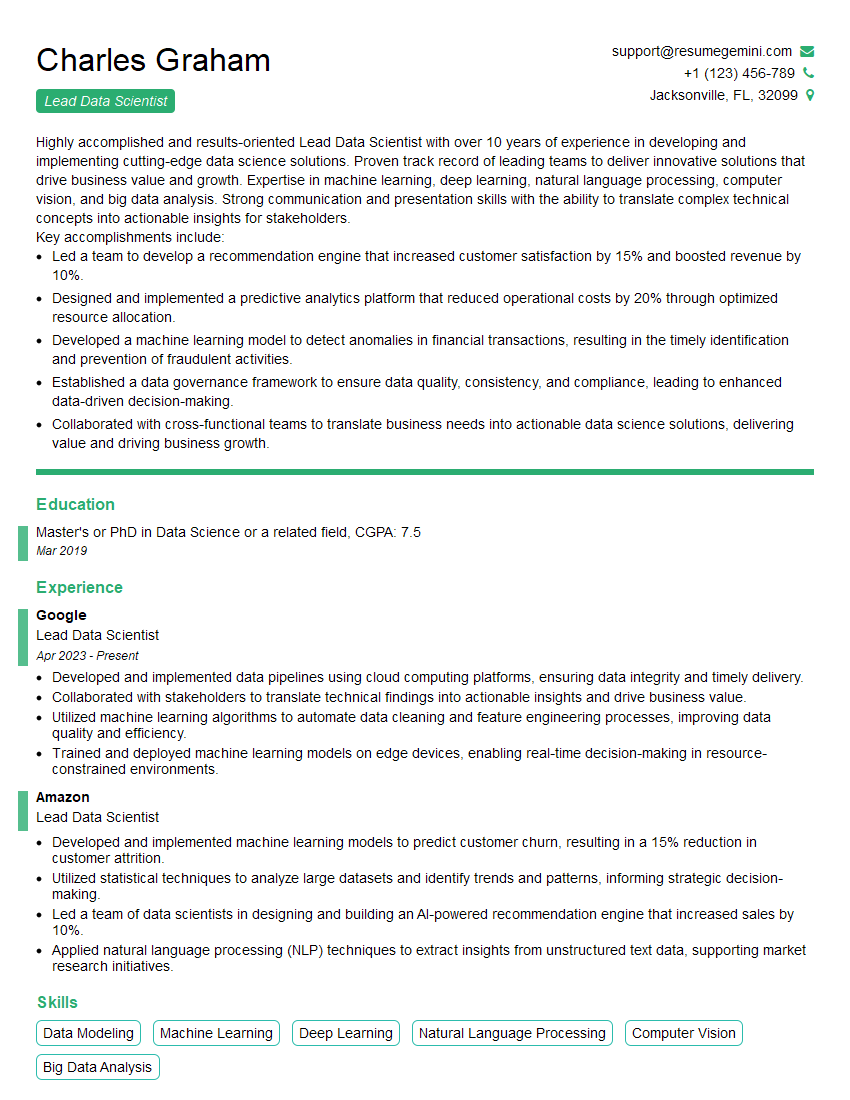

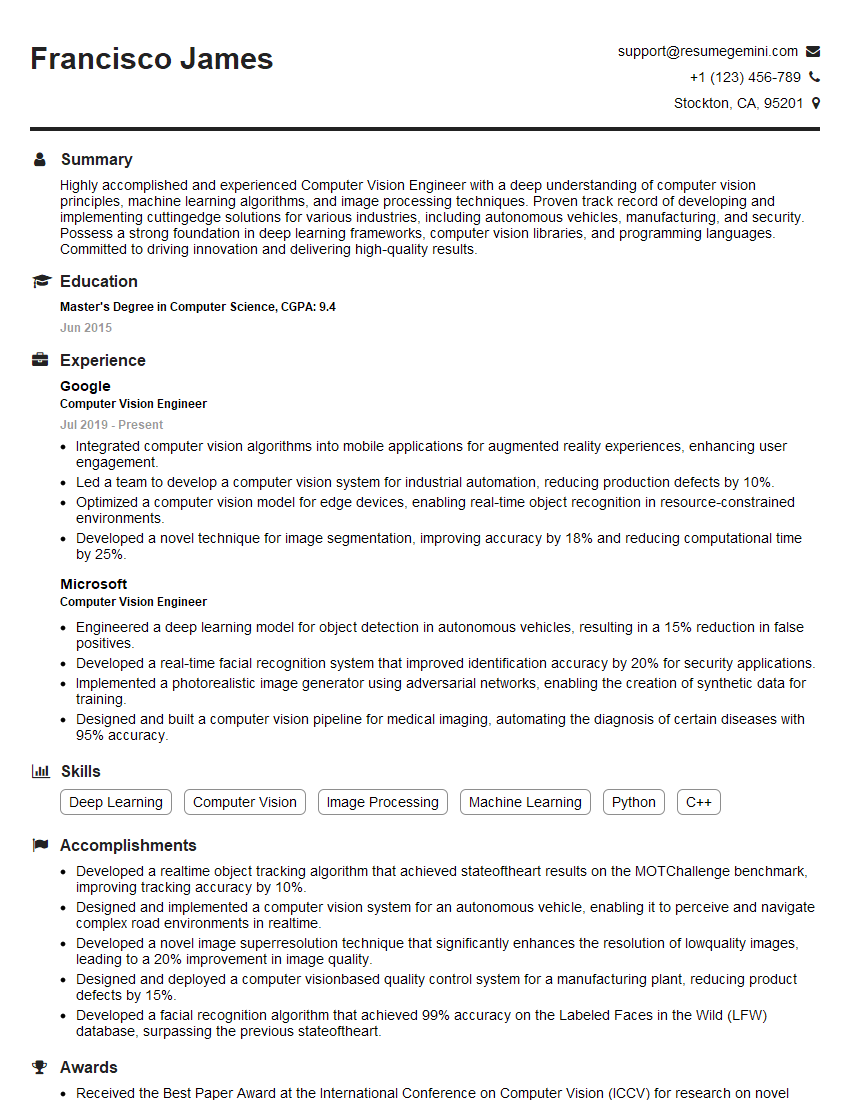

A strong understanding of machine learning algorithms is highly valued in today’s job market, significantly increasing your career prospects. To showcase your skills effectively, crafting a compelling and ATS-friendly resume is vital. This ensures your qualifications are highlighted to potential employers, leading to more interview opportunities.

ResumeGemini is a trusted resource to help you build a professional and impactful resume. We offer examples of resumes tailored specifically to highlight expertise in machine learning algorithms, guiding you to create a document that truly represents your abilities. Use ResumeGemini to elevate your job search and land your dream role.
