The right preparation can turn an interview into an opportunity to showcase your expertise. This guide to AWS Certified Solutions Architect – Associate interview questions is your ultimate resource, providing key insights and tips to help you ace your responses and stand out as a top candidate.

Questions Asked in AWS Certified Solutions Architect – Associate Interview

Q 1. Explain the difference between EC2 and Lambda.

Amazon EC2 (Elastic Compute Cloud) and AWS Lambda are both compute services, but they differ significantly in how they are used and managed.

EC2 provides virtual servers (instances) that you control completely. You manage the operating system, software, and updates. Think of it like renting a physical server—you have full control but are responsible for all maintenance. This is great for applications requiring significant customization or specific software stacks.

Lambda, on the other hand, is a serverless compute service. You only write and upload your code; AWS manages the underlying infrastructure. Lambda executes your code in response to events, such as changes in an S3 bucket or an API Gateway request. It’s like hiring a contractor—you specify the task, and the contractor (AWS) handles all the details. This is ideal for event-driven architectures and microservices, greatly simplifying development and management.

In short: EC2 is for managing your own servers, while Lambda is for executing code without managing servers.

Example: You might use EC2 to host a web application requiring a specific database configuration, while you’d use Lambda to process images uploaded to S3.
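
To make the Lambda side of that example concrete, here is a minimal sketch of a Python handler that reacts to S3 upload notifications. The `Records` layout follows the S3 event notification format; the image-processing step itself is left as a hypothetical placeholder comment.

```python
import urllib.parse


def lambda_handler(event, context):
    """Entry point that Lambda invokes for each S3 event notification."""
    uploaded = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        # Hypothetical: download the object and resize the image here.
        uploaded.append({"bucket": bucket, "key": key})
    return {"processed": uploaded}
```

Notice that the code contains no server setup at all: AWS decides where and when it runs.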

Q 2. Describe different AWS deployment models (e.g., blue/green, canary).

AWS offers several deployment models to minimize downtime and risk during updates. Here are two common approaches:

- Blue/Green Deployments: This method maintains two identical environments: ‘blue’ (the current production version) and ‘green’ (the new version). You deploy the updated application to the green environment. Once testing is complete, you switch traffic from blue to green, making the updated version live. If problems arise, you can quickly switch back to the blue environment. Because the cutover is only a traffic switch, downtime is kept close to zero.

- Canary Deployments: A more gradual approach. You deploy the updated application to a small subset of users (the ‘canary’). You monitor the performance and stability in this subset. If everything is fine, you gradually roll out the update to the remaining users. This allows you to identify and address issues early before affecting all users.

Example: Imagine updating a high-traffic e-commerce website. A blue/green deployment minimizes disruption during the update, while a canary deployment would allow you to test a new checkout process on a small group of users before releasing it to everyone.
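
The canary idea can be sketched in a few lines. The function below is an illustration, not an AWS API: it hashes a user ID into one of 100 buckets so that the same user always sees the same version, and raising `canary_percent` only ever adds users to the canary group. The names `route_version` and `canary_percent` are hypothetical.

```python
import hashlib


def route_version(user_id: str, canary_percent: int) -> str:
    """Return 'canary' for a stable slice of users and 'stable' for the rest."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # value in 0..99
    return "canary" if bucket < canary_percent else "stable"
```

In practice the traffic split is usually done by a managed service (for example weighted DNS records or load balancer target weights) rather than in application code.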

Q 3. How do you handle high availability and disaster recovery in AWS?

High availability and disaster recovery (DR) in AWS rely on several key strategies:

- Multiple Availability Zones (AZs): Distributing resources across different AZs within a region ensures resilience against AZ failures. If one AZ goes down, your application continues running in other AZs.

- Load Balancing: Using Elastic Load Balancing (ELB) distributes traffic across multiple EC2 instances, preventing overload and ensuring high availability.

- Amazon S3 Replication: Replicating data to different regions (cross-region replication) provides DR capabilities. If a region suffers an outage, your data is safe and accessible in another region.

- Amazon RDS Multi-AZ Deployments: For databases, using Multi-AZ deployments ensures high availability and data replication across AZs.

- AWS Global Accelerator: Improves the availability and performance of applications by routing traffic efficiently across multiple regions.

Example: A critical application might use EC2 instances across multiple AZs, with ELB distributing traffic, and S3 cross-region replication for data backup and DR.

Q 4. What are the different types of Amazon S3 storage classes?

Amazon S3 offers various storage classes, each optimized for different use cases and cost considerations:

- Amazon S3 Standard: The most commonly used storage class, suitable for frequently accessed data. It offers high availability and durability.

- Amazon S3 Intelligent-Tiering: Automatically moves data between access tiers based on usage patterns, optimizing storage costs.

- Amazon S3 Standard-IA (Infrequent Access): Designed for data accessed less frequently. It offers lower storage costs than S3 Standard.

- Amazon S3 One Zone-IA (Infrequent Access): Similar to S3 Standard-IA but stores data in a single AZ, providing lower cost but reduced redundancy.

- Amazon S3 Glacier Instant Retrieval: For archive data that is rarely accessed but still needs millisecond retrieval. Retrieval fees apply.

- Amazon S3 Glacier Flexible Retrieval: For archives that do not need immediate access, with retrieval options ranging from minutes to hours at different costs.

- Amazon S3 Glacier Deep Archive: The lowest-cost storage class for long-term archival, with the longest retrieval times (typically within 12 hours).

Choosing the right storage class is crucial for optimizing cost and performance. For example, frequently accessed logs might use S3 Standard, while backups or archival data might be suitable for S3 Glacier.
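
Storage classes are often combined through lifecycle rules that move objects to cheaper classes as they age. The sketch below shows a dictionary in the shape of an S3 lifecycle configuration, plus a small helper that reports which class an object would be in at a given age. The `logs/` prefix and the day counts are hypothetical and should be tuned to real access patterns.

```python
# Shape mirrors an S3 lifecycle configuration; prefix and day counts are
# hypothetical values chosen for illustration.
LIFECYCLE = {
    "Rules": [
        {
            "ID": "archive-old-logs",
            "Filter": {"Prefix": "logs/"},
            "Status": "Enabled",
            "Transitions": [
                {"Days": 30, "StorageClass": "STANDARD_IA"},
                {"Days": 90, "StorageClass": "GLACIER"},
                {"Days": 365, "StorageClass": "DEEP_ARCHIVE"},
            ],
        }
    ]
}


def storage_class_at(age_days: int, rule: dict) -> str:
    """Return the storage class an object would be in at a given age."""
    current = "STANDARD"
    for step in sorted(rule["Transitions"], key=lambda t: t["Days"]):
        if age_days >= step["Days"]:
            current = step["StorageClass"]
    return current
```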

Q 5. Explain the concept of auto-scaling in AWS.

Auto Scaling automatically adjusts the number of EC2 instances in response to demand. This ensures your application can handle fluctuating workloads without manual intervention. It monitors CloudWatch metrics such as CPU utilization or custom metrics (memory usage, for example, must be published by the CloudWatch agent because EC2 does not report it by default) and scales the number of instances out or in accordingly.

How it works: You define scaling policies based on predefined metrics and thresholds. When a metric exceeds a defined threshold, Auto Scaling launches new instances. When the demand decreases, it terminates idle instances.

Benefits: Improved application performance, cost optimization, and simplified management.

Example: A web application experiences a surge in traffic during peak hours. Auto Scaling automatically launches additional EC2 instances to handle the increased load, ensuring responsiveness and preventing service disruptions. Once the traffic subsides, it terminates the extra instances, reducing costs.
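
The threshold logic described above can be modelled as a toy function. This is a simplified sketch of a step-style policy, not how the Auto Scaling service is implemented; the thresholds and the minimum and maximum sizes are hypothetical defaults.

```python
def desired_capacity(current: int, avg_cpu: float, *, scale_out_at: float = 70.0,
                     scale_in_at: float = 30.0, minimum: int = 2, maximum: int = 10) -> int:
    """Add or remove one instance based on average CPU, within group limits."""
    if avg_cpu > scale_out_at:
        target = current + 1   # demand is high: scale out
    elif avg_cpu < scale_in_at:
        target = current - 1   # demand is low: scale in
    else:
        target = current       # within the comfortable band: no change
    return max(minimum, min(maximum, target))
```

The minimum and maximum act as guard rails: the group never shrinks below the size needed for availability and never grows past the budgeted ceiling.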

Q 6. How do you secure an EC2 instance?

Securing an EC2 instance involves a multi-layered approach:

- Security Groups: Act as virtual firewalls, controlling inbound and outbound traffic at the instance level. Define rules specifying which ports and protocols are allowed.

- IAM Roles: Granting specific permissions to EC2 instances instead of using static credentials enhances security.

- Operating System Hardening: Regularly updating the operating system and software, disabling unnecessary services, and using strong passwords are crucial.

- Encryption: Encrypting data at rest (using tools like EBS encryption) and in transit (using HTTPS) protects sensitive information.

- Regular Security Audits and Monitoring: Using tools like AWS CloudTrail, CloudWatch, and security scanners helps detect and respond to security threats.

- AWS Shield: A managed DDoS protection service that safeguards your instances from distributed denial-of-service attacks.

Example: A web server instance might have a security group allowing only HTTP and HTTPS traffic on ports 80 and 443, respectively. Attaching an IAM role with a narrowly scoped policy ensures the instance can access only the AWS services it needs.
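
Security group behaviour (allow rules only, everything else denied) can be illustrated with a small model. The rule format and the `is_allowed` helper are hypothetical, and the SSH rule limited to an admin range is an extra assumption added to show source filtering.

```python
import ipaddress

# Hypothetical inbound rules for a web server.
INBOUND_RULES = [
    {"protocol": "tcp", "port": 80, "cidr": "0.0.0.0/0"},
    {"protocol": "tcp", "port": 443, "cidr": "0.0.0.0/0"},
    {"protocol": "tcp", "port": 22, "cidr": "203.0.113.0/24"},  # assumed admin range
]


def is_allowed(rules, protocol, port, source_ip):
    """Traffic is allowed only if some rule matches; there are no deny rules."""
    source = ipaddress.ip_address(source_ip)
    return any(
        rule["protocol"] == protocol
        and rule["port"] == port
        and source in ipaddress.ip_network(rule["cidr"])
        for rule in rules
    )
```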

Q 7. What are the different types of Amazon VPCs?

While there isn’t a formal categorization of *types* of Amazon VPCs, there are key configurations and features that significantly alter how a VPC functions. These can be seen as different configurations or setups rather than distinct types:

- VPCs using a single or multiple Availability Zones: A VPC spans all the AZs in its region, but each subnet lives in exactly one AZ. Placing subnets (and the resources in them) in several AZs improves availability and fault tolerance, and is critical for high availability.

- VPCs with different IP address ranges: VPCs can be configured with custom IPv4 and IPv6 address ranges to meet specific networking requirements.

- VPCs with different peering connections: VPC peering allows connecting two VPCs within the same AWS account or across different accounts to share resources.

- VPCs with transit gateways: AWS Transit Gateway allows connecting multiple VPCs and on-premises networks, creating a central point for routing.

Essentially, the ‘type’ of VPC is determined by its configuration, and the characteristics listed above provide great flexibility in addressing various networking needs.
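
One practical consequence of the IP range choice: VPCs that you want to peer must not have overlapping CIDR blocks. A quick check with Python's standard `ipaddress` module might look like this; the VPC names and ranges used in the usage note are hypothetical.

```python
import ipaddress
from itertools import combinations


def overlapping_pairs(vpc_cidrs: dict) -> list:
    """Return pairs of VPC names whose CIDR blocks overlap."""
    networks = {name: ipaddress.ip_network(cidr) for name, cidr in vpc_cidrs.items()}
    return [
        (a, b)
        for a, b in combinations(sorted(networks), 2)
        if networks[a].overlaps(networks[b])
    ]
```

For instance, `overlapping_pairs({"app": "10.0.0.0/16", "data": "10.1.0.0/16", "legacy": "10.0.128.0/20"})` flags the `app` and `legacy` pair, which could not be peered as configured.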

Q 8. Explain the role of IAM in AWS security.

IAM, or Identity and Access Management, is the cornerstone of AWS security. Think of it as the bouncer of your AWS account, meticulously controlling who gets access to what resources and what they can do with them. It doesn’t protect your data directly, but it determines who can even *try* to access it. IAM works by attaching permissions to users, groups, and roles. Users are individual identities; groups bundle users with similar permissions; and roles are identities with attached permissions that users or services assume to obtain temporary security credentials.

For example, a database administrator might be assigned a role with permissions to manage RDS instances, but *not* to access S3 buckets. Another user, a web developer, might only have permission to upload code to an S3 bucket and nothing else. This granular control prevents unauthorized access and limits the damage from a compromised account. Best practices include using the principle of least privilege (granting only the necessary access), regularly reviewing and updating permissions, and leveraging multi-factor authentication (MFA) for all users.

Imagine a company with multiple departments. IAM allows the IT team to create specific roles for each department, granting only the permissions needed for their tasks, significantly enhancing security and preventing accidental data breaches or misconfigurations.
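
A least-privilege policy for the web developer above could look like the document below. The evaluator that follows is a heavily simplified sketch of IAM's decision order (an explicit deny wins, otherwise an allow is needed, otherwise access is denied by default); real IAM evaluation also considers conditions, boundaries, and other policy types. The bucket name is hypothetical.

```python
from fnmatch import fnmatchcase

DEVELOPER_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": ["s3:PutObject"],
         "Resource": ["arn:aws:s3:::example-code-bucket/*"]},
        {"Effect": "Deny", "Action": ["s3:DeleteObject"], "Resource": ["*"]},
    ],
}


def is_permitted(policy, action, resource):
    """Simplified model: explicit deny beats allow; no match means denied."""
    allowed = False
    for statement in policy["Statement"]:
        matches = (
            any(fnmatchcase(action, a) for a in statement["Action"])
            and any(fnmatchcase(resource, r) for r in statement["Resource"])
        )
        if not matches:
            continue
        if statement["Effect"] == "Deny":
            return False
        allowed = True
    return allowed
```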

Q 9. How do you manage costs in an AWS environment?

Managing costs in AWS requires a multi-faceted approach. It’s not a one-size-fits-all solution but rather a continuous process of monitoring, optimization, and planning. The key is to understand where your money is going and then implement strategies to reduce unnecessary expenses.

- Utilize the Cost Explorer: This built-in AWS tool provides detailed cost visualizations and analysis, helping identify spending patterns and potential areas for optimization.

- Use Reserved Instances (RIs) or Savings Plans: These options offer significant discounts on your compute instances if you commit to using them for a certain period. They are ideal if you have predictable workloads.

- Right-size your instances: Don’t over-provision resources. Choose instance types appropriate to your application’s needs. Monitor your resource utilization and downsize if necessary.

- Leverage Spot Instances: These are spare EC2 instances offered at a significantly reduced price. They are suitable for fault-tolerant applications that can handle interruptions.

- Automate resource cleanup: Use tools like CloudFormation or Terraform to easily create and destroy resources, preventing unnecessary charges from idle or forgotten instances.

- Tagging: Implement a robust tagging strategy to organize and track your resources, allowing for easier cost allocation and identification of underutilized assets.

- CloudWatch billing alarms and AWS Budgets: Set up alerts that notify you when your actual or forecasted costs exceed a predefined threshold, enabling proactive cost management.

Imagine a development team deploying a testing environment. By using Spot Instances and setting up automatic cleanup scripts, they can drastically reduce costs compared to using on-demand instances that are left running unnecessarily.
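
Tagging pays off when you aggregate spend by tag. The sketch below groups cost line items by a tag key and surfaces untagged spend; the line-item format is a hypothetical simplification, not the layout of an actual AWS billing export.

```python
from collections import defaultdict


def cost_by_tag(line_items, tag_key):
    """Sum costs per tag value; items without the tag land in 'untagged'."""
    totals = defaultdict(float)
    for item in line_items:
        owner = item.get("tags", {}).get(tag_key, "untagged")
        totals[owner] += item["cost"]
    return dict(totals)
```

A large "untagged" total is usually the first thing worth investigating, since nobody is accountable for those resources.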

Q 10. What is the difference between Route 53 and CloudFront?

Route 53 and CloudFront both sit between your users and your application, but they serve different purposes. Route 53 is a fully managed DNS service, while CloudFront is a content delivery network (CDN).

Route 53 acts as your domain name system. It maps domain names (like www.example.com) to IP addresses, allowing users to access your services. It offers features like health checks, routing policies (like weighted routing and geolocation routing), and traffic management.

CloudFront is a CDN that caches your content (like static assets, videos, and applications) across multiple edge locations worldwide. This reduces latency by serving content from a location closer to users, improving performance and reducing the load on your origin servers. CloudFront often works in conjunction with Route 53 to route users to the appropriate CloudFront distribution.

Think of it like this: Route 53 is the address book, providing the address of your website. CloudFront is the postal service that delivers the content to users efficiently and quickly, regardless of their location.
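
To see why a CDN reduces load on the origin, consider this toy edge cache with a time-to-live (TTL). It is a conceptual sketch only; the `EdgeCache` class is hypothetical, and the current time is passed in explicitly to keep the example deterministic.

```python
class EdgeCache:
    """Toy model of one edge location caching responses for a fixed TTL."""

    def __init__(self, fetch_from_origin, ttl_seconds):
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._store = {}  # path -> (expires_at, body)
        self.origin_hits = 0

    def get(self, path, now):
        entry = self._store.get(path)
        if entry and entry[0] > now:
            return entry[1]  # served from the edge, origin untouched
        self.origin_hits += 1
        body = self._fetch(path)
        self._store[path] = (now + self._ttl, body)
        return body
```

Repeated requests inside the TTL window never reach the origin, which is where the latency and load savings come from.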

Q 11. Describe different AWS databases (e.g., RDS, DynamoDB, Redshift).

AWS offers a variety of database services to cater to different needs. Here are three prominent examples:

- Amazon RDS (Relational Database Service): This is a managed service that makes it easy to set up, operate, and scale relational databases like MySQL, PostgreSQL, Oracle, and SQL Server. RDS handles tasks like backups, patching, and maintenance, allowing you to focus on your application logic. It’s best suited for applications requiring ACID properties (Atomicity, Consistency, Isolation, Durability).

- Amazon DynamoDB: A fully managed NoSQL database service. It’s a key-value and document database designed for high performance and scalability. It’s ideal for applications with high throughput and low latency requirements, such as mobile gaming or e-commerce platforms. It’s schema-less and offers flexible scaling options.

- Amazon Redshift: A fully managed, petabyte-scale data warehouse service in the cloud. It’s built for analyzing large datasets and provides powerful querying capabilities using SQL. Redshift excels at business intelligence and data analytics use cases.

Choosing the right database depends on your application’s requirements. If you need ACID properties and familiar SQL syntax, RDS is a good choice. If you need high performance and scalability with flexible schema, DynamoDB might be better. And for large-scale data warehousing and analytics, Redshift is ideal.

Q 12. Explain the concept of serverless computing.

Serverless computing is a cloud execution model where the cloud provider dynamically manages the allocation of computing resources. You don’t manage servers directly; instead, you focus on writing and deploying your code, and the provider handles the underlying infrastructure, scaling, and maintenance. Think of it like renting a room in a hotel—you use the room when you need it and pay only for the time you use it, without worrying about the building’s upkeep.

Key features of serverless computing include:

- Event-driven architecture: Functions are triggered by events, such as HTTP requests, database changes, or messages in a queue.

- Automatic scaling: The provider scales resources up or down based on demand, ensuring optimal performance and cost efficiency.

- Pay-per-use pricing: You only pay for the compute time your functions consume, eliminating the cost of idle servers.

Popular serverless services in AWS include AWS Lambda (for compute), API Gateway (for APIs), and S3 (for storage). Serverless is ideal for applications with unpredictable workloads, microservices architectures, and applications that require rapid scaling.
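
Pay-per-use pricing for functions is typically computed from request count and GB-seconds (duration multiplied by configured memory). The helper below sketches that arithmetic; both price parameters are hypothetical inputs, so check current AWS pricing rather than relying on any number used here.

```python
def monthly_compute_cost(invocations, avg_duration_ms, memory_mb, *,
                         price_per_gb_second, price_per_million_requests):
    """Estimate function cost from request count and GB-seconds consumed."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_cost = invocations / 1_000_000 * price_per_million_requests
    return gb_seconds * price_per_gb_second + request_cost
```

With zero invocations the result is zero, which is the key contrast with an always-on server.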

Q 13. How do you monitor and log AWS resources?

Monitoring and logging AWS resources is crucial for ensuring application health, identifying potential issues, and maintaining security. AWS provides a suite of services for this purpose.

- Amazon CloudWatch: This is the central monitoring and logging service in AWS. It collects metrics from various AWS resources (like EC2 instances, databases, and Lambda functions), allowing you to track resource utilization, application performance, and system health. You can create custom dashboards, set up alarms to notify you of unusual activity, and analyze logs for debugging and troubleshooting.

- AWS CloudTrail: This service logs API calls made within your AWS account, providing a record of actions performed. This is crucial for security auditing and compliance. Reviewing these logs can reveal unauthorized access attempts or suspicious activity.

- Amazon S3: You can store logs from CloudWatch and CloudTrail in S3 for long-term storage and analysis.

Imagine a web application experiencing unexpected downtime. Using CloudWatch metrics, you can identify the root cause by monitoring CPU usage, network traffic, and error rates. CloudTrail logs can help determine if any unauthorized activity led to the problem.
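
The way a metric alarm decides its state can be approximated as follows. This is a simplified model (real CloudWatch alarms also support options such as "M out of N" datapoints and missing-data handling); the function name and arguments are hypothetical.

```python
def alarm_state(datapoints, threshold, evaluation_periods):
    """Return ALARM only if the most recent periods all breach the threshold."""
    if len(datapoints) < evaluation_periods:
        return "INSUFFICIENT_DATA"
    recent = datapoints[-evaluation_periods:]
    return "ALARM" if all(value > threshold for value in recent) else "OK"
```

Requiring several consecutive breaches avoids paging someone for a single short CPU spike.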

Q 14. What is AWS CloudFormation and how do you use it?

AWS CloudFormation is an infrastructure-as-code (IaC) service that allows you to define and manage your AWS resources using JSON or YAML templates. Think of it as a blueprint for your AWS infrastructure. Instead of manually creating and configuring resources through the console, you define them in a template and CloudFormation handles the deployment and management.

Benefits include:

- Automation: Automate the creation and deployment of complex infrastructure.

- Consistency: Ensure consistent environments across different regions and accounts.

- Version control: Manage infrastructure changes using version control systems like Git.

- Repeatability: Easily recreate your infrastructure from a template.

A simple CloudFormation template might look like this (simplified example):

```json
{
  "Resources": {
    "MyEC2Instance": {
      "Type": "AWS::EC2::Instance",
      "Properties": {
        "ImageId": "ami-0c55b31ad2299a701",
        "InstanceType": "t2.micro"
      }
    }
  }
}
```

This template defines an EC2 instance. CloudFormation will automatically create the instance based on the specified properties. CloudFormation significantly improves efficiency and reduces errors in infrastructure management.
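
Because templates are plain JSON or YAML, they can also be generated and sanity-checked from code before deployment. The sketch below builds the same single-instance template in Python and checks that every resource declares a `Type`. The helper names are hypothetical, and the check is far weaker than CloudFormation's own validation.

```python
import json


def build_template(ami_id, instance_type="t2.micro"):
    """Return a minimal template describing one EC2 instance."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Resources": {
            "MyEC2Instance": {
                "Type": "AWS::EC2::Instance",
                "Properties": {"ImageId": ami_id, "InstanceType": instance_type},
            }
        },
    }


def missing_types(template):
    """List resource names that lack the required 'Type' field."""
    return [name for name, res in template["Resources"].items() if "Type" not in res]


# Serialize for storage in version control or upload to CloudFormation.
template_json = json.dumps(build_template("ami-0c55b31ad2299a701"), indent=2)
```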

Q 15. Explain the different types of AWS load balancers.

AWS offers several types of load balancers, each designed for different needs. They all distribute incoming traffic across multiple targets (like EC2 instances), ensuring high availability and scalability. The key distinctions lie in their functionality and where they operate within your architecture.

- Application Load Balancers (ALB): These operate at the application layer (Layer 7) and understand HTTP and HTTPS. They can route traffic based on factors like path, host header, and cookies, allowing for sophisticated routing rules. Think of them as intelligent traffic cops that can direct requests based on the content of the request itself. For example, you could route traffic to different versions of your application based on the URL path.

- Network Load Balancers (NLB): These work at the transport layer (Layer 4) and handle TCP and UDP traffic. They are incredibly fast and ideal for applications that don’t need sophisticated routing rules – things like gaming servers or applications requiring minimal processing at the load balancer. They primarily focus on distributing traffic based on IP address and port.

- Classic Load Balancers (CLB): These are the previous generation of load balancer, offering basic Layer 4 and Layer 7 balancing across EC2 instances. They lack many of the features found in ALB and NLB, such as path-based routing, and are generally not recommended for new deployments.

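- Gateway Load Balancers (GWLB): These operate at the network layer (Layer 3) and are used to deploy, scale, and manage fleets of virtual appliances such as third-party firewalls and intrusion detection and prevention systems. Traffic is passed transparently through the appliances before it reaches your application.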

Choosing the right load balancer depends entirely on your application’s needs. If you need advanced routing rules based on application-level data, use an ALB. If speed and simplicity are paramount, use an NLB. Classic Load Balancers should only be used for legacy applications.
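
Path-based routing on an ALB works through listener rules evaluated in priority order, with a default action as the fallback. The following is a toy model of that behaviour; the rule format and target group names are hypothetical.

```python
from fnmatch import fnmatchcase

# Hypothetical listener rules; lower priority numbers are evaluated first.
LISTENER_RULES = [
    {"priority": 10, "path": "/api/*", "target_group": "api-servers"},
    {"priority": 20, "path": "/images/*", "target_group": "static-assets"},
]
DEFAULT_TARGET_GROUP = "web-frontend"


def choose_target_group(path):
    """Return the target group of the first matching rule, else the default."""
    for rule in sorted(LISTENER_RULES, key=lambda r: r["priority"]):
        if fnmatchcase(path, rule["path"]):
            return rule["target_group"]
    return DEFAULT_TARGET_GROUP
```

An NLB has no equivalent of this logic, since it never inspects the HTTP request.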

Q 16. How do you implement a CI/CD pipeline in AWS?

Implementing a CI/CD pipeline on AWS typically involves several services working together. A common approach uses CodeCommit for source code management, CodeBuild for building and testing your application, CodePipeline for orchestrating the pipeline, and CodeDeploy for deploying to your target environment. Let’s break this down:

- CodeCommit (optional): A managed Git repository service. You can store your application code here.

- CodePipeline: This service acts as the orchestrator, defining the stages of your pipeline (Source, Build, Test, Deploy). It triggers actions based on code changes pushed to your repository.

- CodeBuild: This is your build server, compiling your code, running tests, and creating deployable artifacts. You define build specifications to customize the build environment and processes.

- CodeDeploy: This service handles the deployment of your application to your target environment (e.g., EC2 instances, on-premises servers, Lambda functions, or ECS services). It manages updates with different deployment strategies (in-place, blue/green).

For example, a change to your code in CodeCommit would trigger CodePipeline. CodePipeline would then invoke CodeBuild to build the code. After successful testing, CodePipeline would utilize CodeDeploy to deploy the updated application to your EC2 instances. You can also integrate other services, like AWS Lambda for custom actions or automated testing.
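
The orchestration idea behind a pipeline (run stages in order, stop at the first failure) fits in a few lines. This is a conceptual sketch and not the CodePipeline API; `run_pipeline` and the stage callables are hypothetical.

```python
def run_pipeline(stages):
    """Run (name, action) stages in order; stop as soon as one fails."""
    completed = []
    for name, action in stages:
        if not action():
            return {"status": "failed", "failed_stage": name, "completed": completed}
        completed.append(name)
    return {"status": "succeeded", "failed_stage": None, "completed": completed}
```

The important property is that a failed Build or Test stage prevents the Deploy stage from ever running.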

Q 17. What are the key components of an AWS global infrastructure?

AWS’s global infrastructure is vast and complex, but its key components can be summarized as follows:

- Global Regions: AWS operates numerous geographical regions across the globe. Each region is composed of multiple Availability Zones.

- Availability Zones (AZs): Within each region, AZs are isolated locations with independent power, networking, and connectivity. This design helps prevent single points of failure.

- Edge Locations: These are points of presence located around the world. They offer services like CloudFront (content delivery network) for faster content delivery to users.

- Networking Infrastructure: A massive network of interconnected data centers and global networks linking regions and AZs. This ensures high bandwidth and low latency for communications between resources.

- Global Traffic Management: ALBs and NLBs are regional and distribute traffic across multiple AZs within one region. To spread traffic across regions, you combine them with Route 53 routing policies or AWS Global Accelerator.

This distributed architecture enables redundancy, fault tolerance, and low latency for applications serving a global user base. The geographical dispersion ensures resilience against regional outages.

Q 18. How do you handle network security in AWS?

Network security in AWS is multifaceted and crucial. It relies on a layered approach encompassing:

- Virtual Private Cloud (VPC): This creates a logically isolated section of the AWS cloud, providing a secure environment for your resources. It’s your foundation for network security.

- Security Groups: These act as firewalls for your EC2 instances and other resources within the VPC. They control inbound and outbound traffic based on rules you define (port numbers, protocols, IP addresses).

- Network Access Control Lists (NACLs): These provide another layer of security, acting as filters at the subnet level. Unlike security groups, they are stateless (return traffic must be allowed explicitly), support both allow and deny rules, and evaluate rules in numbered order.

- IAM Roles and Policies: These manage access control at the user and resource level. Restricting user permissions is vital for protecting your data and resources from unauthorized access.

- AWS WAF (Web Application Firewall): This protects your web applications from common web exploits like SQL injection and cross-site scripting (XSS).

- VPN and Direct Connect: These establish secure connections between your on-premises network and your AWS VPC, enabling hybrid cloud environments.

A well-designed AWS environment incorporates all these security mechanisms to establish defense in depth – multiple layers of protection to prevent unauthorized access.
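
Network ACL evaluation differs from security groups in that rules are numbered, the first match wins, and a rule can explicitly deny. A simplified model is sketched below; it ignores protocol and direction, and the rule format is hypothetical.

```python
import ipaddress


def nacl_decision(rules, port, source_ip):
    """Evaluate rules in ascending number order; the first match decides."""
    source = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r["number"]):
        if rule["port"] == port and source in ipaddress.ip_network(rule["cidr"]):
            return rule["action"]
    return "deny"  # nothing matched: traffic is denied
```

Placing a low-numbered deny rule ahead of a broad allow is a common way to block a known-bad address range for an entire subnet.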

Q 19. Describe different AWS networking components (e.g., subnets, security groups).

Let’s explore some key AWS networking components:

- VPC (Virtual Private Cloud): Your isolated section of the AWS cloud. Think of it as your own private network in the cloud.

- Subnets: Subnets are divisions within your VPC. They’re ranges of IP addresses. You can create public subnets (with internet access) and private subnets (without direct internet access).

- Security Groups: Act like firewalls for your instances, controlling inbound and outbound traffic based on rules.

- Network ACLs (Network Access Control Lists): Provide an additional layer of security at the subnet level, controlling traffic flow.

- Internet Gateway: Allows communication between your VPC and the internet.

- NAT Gateway/NAT Instance: Enables instances in private subnets to access the internet without exposing them directly.

- Route Tables: Define how traffic is routed within your VPC.

- Elastic IP Addresses: Static public IP addresses that you can associate with your instances.

For instance, you might have a VPC with a public subnet for web servers and a private subnet for databases. Security groups would control access to those servers, and the NAT Gateway would allow private instances to connect to the internet.
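
Planning the subnets for such a layout is mostly CIDR arithmetic. The sketch below carves a VPC range into one public and one private subnet per AZ using the standard `ipaddress` module; the AZ names and the /24 subnet size are hypothetical choices.

```python
import ipaddress


def plan_subnets(vpc_cidr, azs, new_prefix=24):
    """Assign consecutive blocks: public subnets first, then private ones."""
    blocks = ipaddress.ip_network(vpc_cidr).subnets(new_prefix=new_prefix)
    plan = []
    for tier in ("public", "private"):
        for az in azs:
            plan.append({"tier": tier, "az": az, "cidr": str(next(blocks))})
    return plan
```

What actually makes a subnet public is its route table: it needs a route to an internet gateway, while private subnets route outbound traffic through a NAT gateway instead.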

Q 20. Explain how to design a highly available application on AWS.

Designing a highly available application on AWS requires careful consideration of redundancy and fault tolerance. A common approach uses multiple Availability Zones (AZs) and leverages services designed for high availability.

- Multiple AZs: Distribute your application components across multiple AZs. This ensures that if one AZ experiences an outage, your application can continue operating from other AZs.

- Load Balancing: Use an Application Load Balancer (ALB) or Network Load Balancer (NLB) to distribute traffic across your instances in different AZs. This prevents a single point of failure.

- Redundant Databases: Employ a database solution like Amazon RDS with multi-AZ deployments, providing automatic failover to a standby instance in a different AZ.

- Auto Scaling: Configure Auto Scaling groups to automatically add or remove instances based on demand, ensuring scalability and responsiveness.

- Storage Redundancy: Use services like Amazon S3, whose Standard class stores objects redundantly across multiple AZs within a region, to ensure data durability and availability.

For example, a web application could have its web servers, application servers, and database distributed across two AZs, with an ALB distributing traffic. If one AZ fails, the ALB automatically routes traffic to the instances in the other AZ, maintaining application availability.
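
The failover behaviour in that example comes down to health checks: the load balancer only sends requests to targets that pass them. Here is a toy round-robin model; the target records and function names are hypothetical.

```python
from itertools import cycle


def healthy_targets(targets):
    """Keep only targets that currently pass their health check."""
    return [t for t in targets if t["healthy"]]


def distribute(requests, targets):
    """Round-robin a number of requests across the healthy targets."""
    pool = healthy_targets(targets)
    if not pool:
        raise RuntimeError("no healthy targets in any AZ")
    rotation = cycle(pool)
    return [next(rotation)["id"] for _ in range(requests)]
```

If every target in one AZ is marked unhealthy, all requests flow to the remaining AZ without any change on the client side.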

Q 21. How do you choose the right AWS instance type for your application?

Choosing the right AWS instance type depends heavily on your application’s specific needs. There’s no one-size-fits-all answer.

Consider these factors:

- CPU: How much processing power does your application require? CPU-intensive tasks need instances with many vCPUs.

- Memory: How much RAM does your application need? Applications with large datasets or many concurrent users require more memory.

- Storage: What type and amount of storage is needed? Options include instance store (fast, ephemeral) and EBS (persistent, various performance tiers).

- Network Performance: How important is network bandwidth and latency? Applications with high network traffic need instances with high network performance capabilities.

- Operating System: Do you need a specific operating system? AWS offers a wide variety of operating systems and environments.

- Cost: Balance performance needs with cost considerations. Choose the least expensive instance type that meets your application requirements.

For example, a web server might need a general-purpose instance with a good balance of CPU, memory, and network performance, while a database server would likely require a high-memory instance with high I/O performance.

AWS provides tools to help with this choice, such as the instance type comparison in the EC2 console and AWS Compute Optimizer, which recommends instance types based on observed utilization.
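
The "least expensive type that meets the requirements" rule is easy to express in code. The catalog below is entirely made up (names, sizes, and prices are not real AWS data); it only illustrates the selection logic.

```python
# Hypothetical catalog: names, sizes, and prices are illustrative only.
CATALOG = [
    {"name": "general-small", "vcpus": 2, "memory_gib": 8, "hourly_usd": 0.10},
    {"name": "compute-large", "vcpus": 8, "memory_gib": 16, "hourly_usd": 0.34},
    {"name": "memory-large", "vcpus": 4, "memory_gib": 64, "hourly_usd": 0.50},
]


def cheapest_fit(catalog, min_vcpus, min_memory_gib):
    """Return the cheapest entry meeting both minimums, or None if none fits."""
    fits = [
        item for item in catalog
        if item["vcpus"] >= min_vcpus and item["memory_gib"] >= min_memory_gib
    ]
    return min(fits, key=lambda item: item["hourly_usd"], default=None)
```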

Q 22. What are the different AWS regions and availability zones?

AWS operates globally using a network of regions and availability zones. A region is a large geographical area, like US East (N. Virginia) or Europe (Ireland), offering multiple Availability Zones within it. Availability Zones (AZs) are distinct locations within a region that are isolated from each other. This isolation ensures that if one AZ experiences an outage, your applications running in other AZs within the same region remain operational. Think of regions as states and AZs as cities within those states. Each region provides redundancy and resilience, but it’s crucial to architect your applications across multiple AZs within a region for high availability. The exact number of regions and AZs varies and is constantly expanding as AWS continues its global growth.

For example, if you deploy a web application across multiple AZs in the US East (N. Virginia) region, even if one AZ has a power failure, your application will likely remain accessible from other AZs in the same region, minimizing downtime.

Q 23. Explain the concept of Amazon EBS volumes.

Amazon Elastic Block Store (EBS) volumes are block-level storage volumes that you can attach to your Amazon Elastic Compute Cloud (EC2) instances. Imagine them as hard drives for your virtual servers. They provide persistent storage: data survives an instance stop, and it also survives termination for volumes that are not set to delete on termination (root volumes are deleted on termination by default). There are various EBS volume types, each optimized for different workloads. For example, gp3 offers a general-purpose balance of price and performance, while io2 is ideal for I/O-intensive applications.

Choosing the right EBS volume type is crucial for performance and cost optimization. A database server, for instance, will benefit greatly from using a high-performance, high-IOPS volume type like io2 or gp3 with sufficient provisioned IOPS. Meanwhile, a less demanding web server might be perfectly served by a less expensive gp3 volume with standard IOPS.

Q 24. How do you manage AWS access and permissions?

Managing AWS access and permissions is critical for security. AWS Identity and Access Management (IAM) is the cornerstone of this process. IAM allows you to create users, groups, and roles, assigning granular permissions to each. Instead of giving everyone full access, you adhere to the principle of least privilege, granting only the necessary permissions for each user or role to perform their tasks.

For example, a database administrator might only need permissions to manage databases, while a developer might only need access to specific S3 buckets for storing code. Using IAM policies, you precisely define these permissions, ensuring that access is restricted and only authorized personnel can access specific AWS resources. This robust approach greatly reduces the risk of unauthorized access and data breaches.

IAM roles are particularly useful for EC2 instances, allowing them to access other AWS services without needing explicit credentials. This enhances security by eliminating the need to embed credentials directly into your applications.

Q 25. What is AWS CloudTrail and how is it used?

AWS CloudTrail is a service that records AWS API calls and account activity in your account. Think of it as a detailed audit trail of your AWS environment. Each event records information such as the identity that made the call, the time of the action, the source IP address, and the API call itself. Management events are captured by default, while data events (such as S3 object-level operations) must be enabled explicitly on a trail. This helps with security auditing, troubleshooting, and compliance.

CloudTrail logs are invaluable for security investigations. If a security incident occurs, you can review the CloudTrail logs to identify the actions that led to the incident. This helps you understand the scope of the incident, identify the responsible party, and take appropriate remedial actions. It’s also helpful for identifying unusual patterns of activity that might indicate malicious behavior.

CloudTrail logs can also be used for compliance purposes. Many regulatory frameworks require organizations to maintain detailed logs of their activities. CloudTrail provides a simple way to comply with these requirements.
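For a sense of what an investigation looks like in practice, here is a small Python sketch that scans a CloudTrail log file for denied API calls. The sample records and the function name are invented for illustration; the field names (`Records`, `eventTime`, `eventName`, `sourceIPAddress`, `userIdentity`, `errorCode`) match the CloudTrail record format.

```python
import json

# Invented sample in the shape of a CloudTrail log file.
SAMPLE_LOG = json.dumps({
    "Records": [
        {
            "eventTime": "2024-01-15T10:00:00Z",
            "eventSource": "s3.amazonaws.com",
            "eventName": "GetObject",
            "sourceIPAddress": "203.0.113.10",
            "userIdentity": {"arn": "arn:aws:iam::111122223333:user/alice"},
            "errorCode": "AccessDenied",
        },
        {
            "eventTime": "2024-01-15T10:05:00Z",
            "eventSource": "ec2.amazonaws.com",
            "eventName": "DescribeInstances",
            "sourceIPAddress": "198.51.100.7",
            "userIdentity": {"arn": "arn:aws:iam::111122223333:user/bob"},
        },
        {
            "eventTime": "2024-01-15T10:07:00Z",
            "eventSource": "ec2.amazonaws.com",
            "eventName": "TerminateInstances",
            "sourceIPAddress": "198.51.100.7",
            "userIdentity": {"arn": "arn:aws:iam::111122223333:user/bob"},
            "errorCode": "Client.UnauthorizedOperation",
        },
    ]
})

# Error codes treated as "denied" in this sketch; extend as needed.
DENIED_CODES = {"AccessDenied", "Client.UnauthorizedOperation"}


def denied_calls(log_text: str) -> list:
    """Return (time, caller ARN, API call, source IP) for each denied call."""
    records = json.loads(log_text)["Records"]
    return [
        (
            r["eventTime"],
            r.get("userIdentity", {}).get("arn"),
            r["eventName"],
            r.get("sourceIPAddress"),
        )
        for r in records
        if r.get("errorCode") in DENIED_CODES
    ]


denied = denied_calls(SAMPLE_LOG)
for row in denied:
    print(row)
```

A burst of denied calls from one identity or IP address is the kind of unusual pattern worth a closer look.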

Q 26. Explain the benefits of using AWS KMS.

AWS Key Management Service (KMS) is a managed service that allows you to create and manage cryptographic keys. These keys are used to encrypt and decrypt data, primarily protecting your sensitive information at rest (encryption in transit is typically handled by TLS). The major benefits of using KMS include:

- Enhanced Security: KMS manages your keys securely, reducing the risk of compromise. The keys are protected using hardware security modules (HSMs) in AWS data centers.

- Simplified Key Management: KMS handles the complexity of key management, such as key rotation and lifecycle management, making it easy to manage keys across your applications.

- Integration with other AWS Services: KMS integrates seamlessly with other AWS services, such as S3, EBS, and RDS, making it easy to encrypt data using KMS-managed keys.

- Compliance: Using KMS helps you meet compliance requirements by providing a secure and auditable way to manage your encryption keys.

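For example, you can encrypt data stored in S3 using KMS-managed keys (SSE-KMS). To read an encrypted object, a caller then needs both S3 permission on the object and permission to use the KMS key for decryption. This adds a second layer of protection: someone who gains access to the bucket but lacks access to the key still cannot read the data.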
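A minimal sketch of the S3 case: the function below builds a default-encryption configuration that tells a bucket to encrypt new objects with a specific KMS key. The key ARN and function name are placeholders. The resulting dictionary is in the shape that boto3's `put_bucket_encryption` accepts as its `ServerSideEncryptionConfiguration` argument; the call itself is left out so the example runs without AWS credentials.

```python
def sse_kms_default_encryption(kms_key_arn: str) -> dict:
    """Build a bucket default-encryption configuration that uses SSE-KMS."""
    return {
        "Rules": [
            {
                "ApplyServerSideEncryptionByDefault": {
                    "SSEAlgorithm": "aws:kms",
                    "KMSMasterKeyID": kms_key_arn,
                },
                # S3 Bucket Keys reduce the number of requests made to KMS.
                "BucketKeyEnabled": True,
            }
        ]
    }


# Placeholder key ARN, for illustration only.
config = sse_kms_default_encryption(
    "arn:aws:kms:us-east-1:111122223333:key/EXAMPLE-KEY-ID"
)
print(config)
```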

Q 27. How would you troubleshoot a connectivity issue in an AWS environment?

Troubleshooting connectivity issues in AWS calls for a systematic approach. I would start by identifying the specific problem. Is it an EC2 instance unable to connect to the internet, or is it a problem with inter-instance communication within a VPC? Once the issue is clearly defined, the troubleshooting steps depend on the nature of the problem:

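- Check Security Groups: Ensure that the necessary inbound and outbound rules are configured in the security groups associated with the affected instances. Are ports open for the required protocols (e.g., SSH on 22, HTTP on 80, HTTPS on 443)? Security groups are stateful, so return traffic for an allowed connection is permitted automatically.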

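- Network ACLs: Check whether Network Access Control Lists (NACLs) are blocking traffic. NACLs operate at the subnet level and are stateless, so return traffic (including ephemeral ports) must be explicitly allowed in both directions.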

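- Route Tables: Verify that the correct route table is associated with the subnet and that the routes are correctly configured, for example a 0.0.0.0/0 route to an internet gateway for a public subnet or to a NAT gateway for a private one.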

- DNS Resolution: Check if DNS resolution is working correctly. Can the instance resolve domain names?

- Instance Status: Confirm that the EC2 instance is in a running state and is healthy.

- AWS CLI or SDK: Use the AWS CLI or an SDK to inspect the instance's network interfaces and their associated subnets, security groups, and IP addresses.

- CloudWatch Logs and Metrics: Examine CloudWatch logs and metrics for the instance and network. VPC Flow Logs are especially useful here, because they show whether specific traffic was accepted or rejected.

A methodical approach, starting with basic checks and progressively moving to more complex analysis using AWS tools, will efficiently resolve most connectivity issues.
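The security group check above can be sketched in Python. The function below takes inbound rules in the `IpPermissions` shape returned by the EC2 `DescribeSecurityGroups` API and reports whether a given protocol, port, and source IP would be allowed. The function name and sample rules are hypothetical, and the sketch handles only IPv4 CIDR ranges, not referenced security groups or prefix lists.

```python
import ipaddress


def sg_allows(ip_permissions: list, protocol: str, port: int, source_ip: str) -> bool:
    """Return True if any inbound rule permits this protocol/port/source."""
    src = ipaddress.ip_address(source_ip)
    for perm in ip_permissions:
        # "-1" means all protocols and all ports; otherwise both must match.
        if perm["IpProtocol"] != "-1":
            if perm["IpProtocol"] != protocol:
                continue
            if not (perm["FromPort"] <= port <= perm["ToPort"]):
                continue
        for ip_range in perm.get("IpRanges", []):
            if src in ipaddress.ip_network(ip_range["CidrIp"]):
                return True
    return False


# Hypothetical rules: HTTPS from anywhere, SSH only from an office range.
rules = [
    {"IpProtocol": "tcp", "FromPort": 443, "ToPort": 443,
     "IpRanges": [{"CidrIp": "0.0.0.0/0"}]},
    {"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22,
     "IpRanges": [{"CidrIp": "203.0.113.0/24"}]},
]

print(sg_allows(rules, "tcp", 443, "198.51.100.7"))
print(sg_allows(rules, "tcp", 22, "198.51.100.7"))
```

Because security groups only contain allow rules, a `False` result here means no rule matched; a `True` result still leaves NACLs and route tables to verify.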

Q 28. Describe your experience with AWS cost optimization strategies.

AWS cost optimization is an ongoing process requiring a multi-pronged strategy. My experience involves several key areas:

- Right-sizing Instances: Choosing the appropriate EC2 instance size for the workload is crucial. Over-provisioned instances are a common source of wasted cost. Regularly review instance utilization metrics and resize as necessary. Tools like AWS Cost Explorer's rightsizing recommendations and AWS Compute Optimizer can help you identify potential savings.

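- Spot Instances: For fault-tolerant, interruptible workloads such as batch jobs or stateless workers, Spot Instances can significantly reduce costs. They offer unused EC2 compute capacity at discounts of up to 90% compared with On-Demand prices, but AWS can reclaim them with a two-minute interruption notice.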

- Reserved Instances/Savings Plans: Committing to one- or three-year usage with Reserved Instances or Savings Plans can offer substantial discounts for steady, predictable workloads.

- EBS Optimization: Selecting the appropriate EBS volume type and size based on workload requirements avoids unnecessary costs. Regularly review volume usage and delete unneeded volumes.

- Resource Tagging: Implementing a comprehensive tagging strategy enables effective cost allocation and tracking. This helps identify cost drivers and optimize spending across different departments or projects.

- Automated Scaling: Implementing auto-scaling ensures that your infrastructure scales appropriately to meet demand, avoiding over-provisioning during low-traffic periods.

- Monitoring and Analysis: Regularly reviewing AWS Cost Explorer and other cost management tools allows us to track spending, identify cost trends, and make informed decisions about resource utilization.

In one project, by analyzing CloudWatch metrics and applying right-sizing, we reduced EC2 costs by 30% without impacting application performance. This illustrates the significance of proactive cost management within AWS.
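A first pass at right-sizing can be as simple as the sketch below, which flags instances whose CPU utilization stays low on both average and peak. The instance IDs, sample values, and thresholds are made up for illustration; in practice the samples would come from CloudWatch `CPUUtilization` data, and memory and network metrics deserve the same scrutiny before resizing.

```python
from statistics import mean


def rightsizing_candidates(cpu_by_instance: dict,
                           avg_threshold: float = 20.0,
                           peak_threshold: float = 50.0) -> list:
    """Return instance IDs whose average and peak CPU are both below thresholds."""
    candidates = []
    for instance_id, samples in cpu_by_instance.items():
        if not samples:
            continue  # no data, so no recommendation
        if mean(samples) < avg_threshold and max(samples) < peak_threshold:
            candidates.append(instance_id)
    return sorted(candidates)


# Hypothetical CPU utilization samples (percent) per instance.
cpu_samples = {
    "i-0example1": [5, 8, 12, 9],     # consistently idle
    "i-0example2": [5, 6, 60, 5],     # low average but a real peak
    "i-0example3": [60, 70, 65, 72],  # busy
}

candidates = rightsizing_candidates(cpu_samples)
print(candidates)
```

Checking the peak as well as the average avoids downsizing an instance that is mostly idle but needs its capacity during bursts.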

Key Topics to Learn for AWS Certified Solutions Architect – Associate Interview

Ace your AWS Certified Solutions Architect – Associate interview by focusing on these key areas. Remember, understanding the “why” behind each concept is as important as knowing the “how.”

- Compute Services (EC2, Lambda): Understand instance types, auto-scaling, load balancing, serverless architectures, and cost optimization strategies. Think about how you would design a highly available and scalable application using these services.

- Storage Services (S3, EBS, S3 Glacier): Master different storage classes, data lifecycle management, data security, and cost-effective storage solutions. Consider how you’d architect a data backup and recovery solution.

- Networking (VPC, Route 53, CloudFront): Grasp VPC peering, subnets, security groups, NAT gateways, and DNS management. Practice designing secure and efficient network architectures for different applications.

- Database Services (RDS, DynamoDB): Learn about relational and NoSQL databases, database scaling, backups, and high availability. Be prepared to discuss choosing the right database for a specific use case.

- Security (IAM, KMS): Understand Identity and Access Management (IAM) roles and policies, Key Management Service (KMS), and best practices for securing your AWS environment. Consider how you’d implement a least privilege security model.

- Deployment & Management (CloudFormation, CloudWatch): Learn about infrastructure as code, automating deployments, monitoring, and logging. Practice designing a robust monitoring system for your applications.

- High Availability and Disaster Recovery: Understand designing solutions that are resilient to failures and able to recover quickly from disasters. Consider different strategies and their trade-offs.

- Cost Optimization Strategies: Learn about various cost optimization techniques for different AWS services. Be prepared to discuss how you’d identify and reduce unnecessary costs.

Next Steps

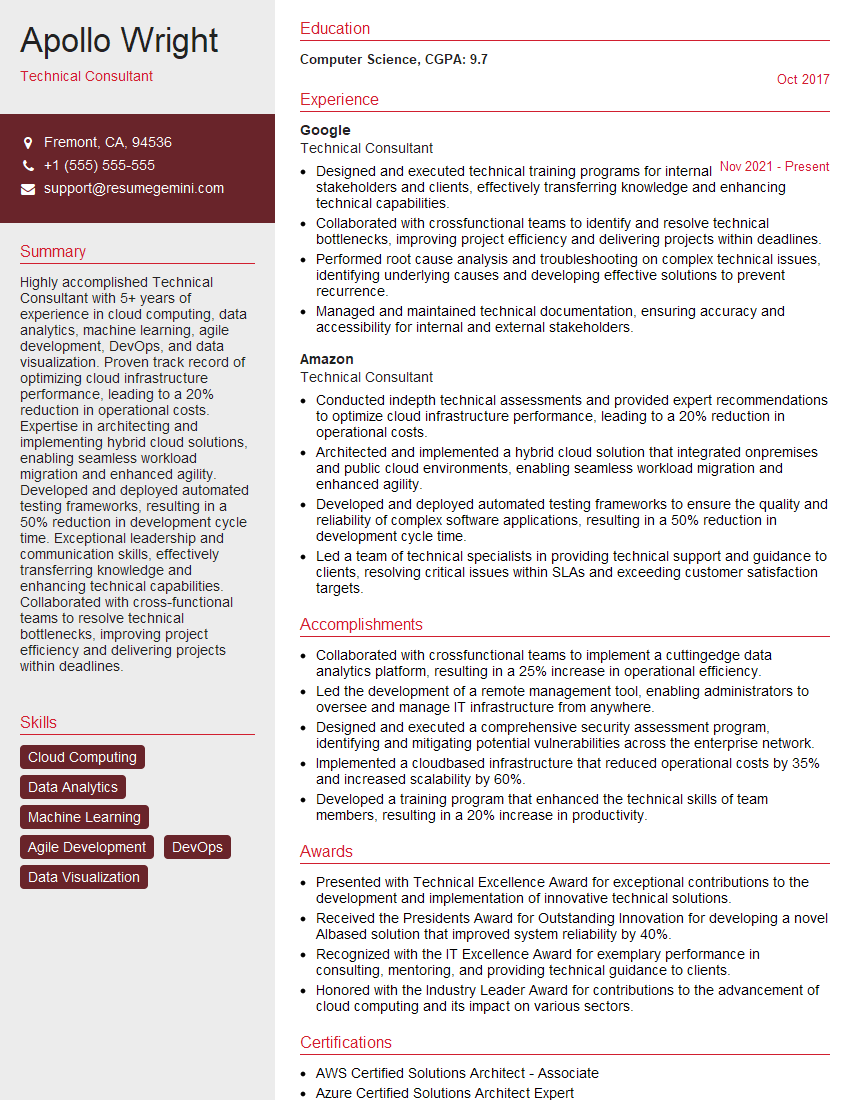

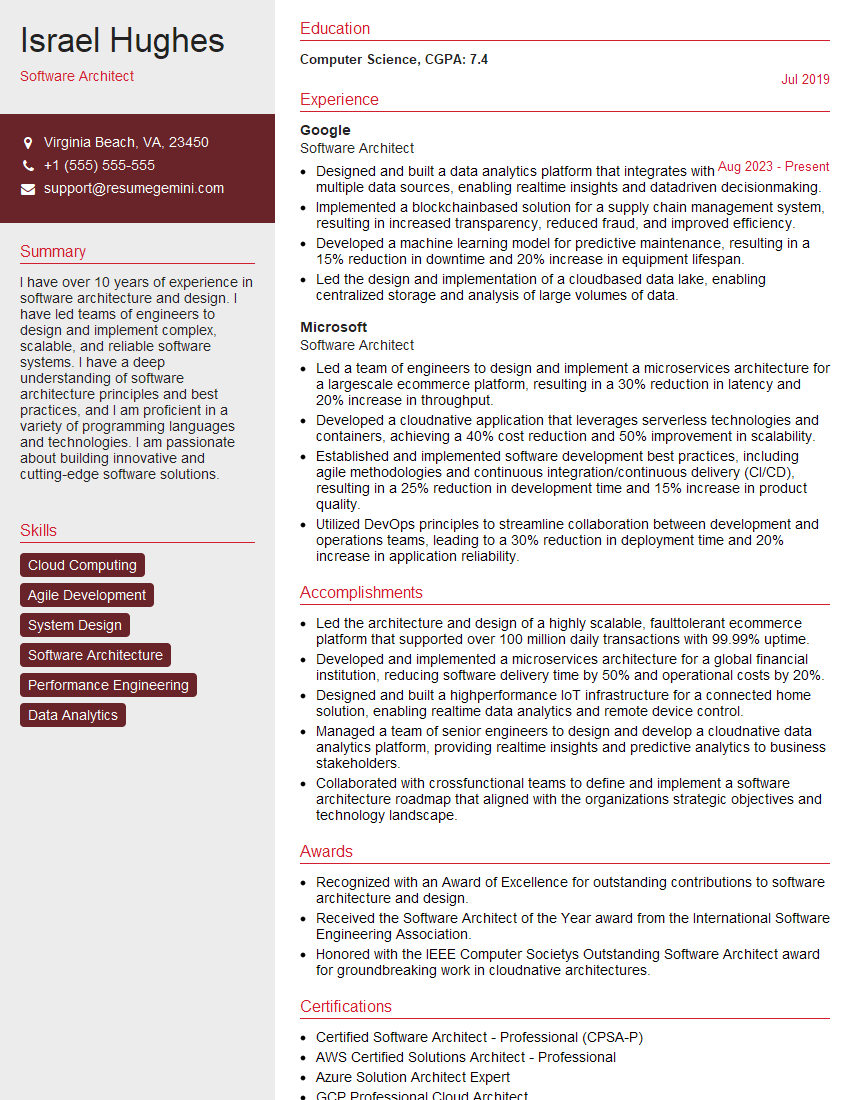

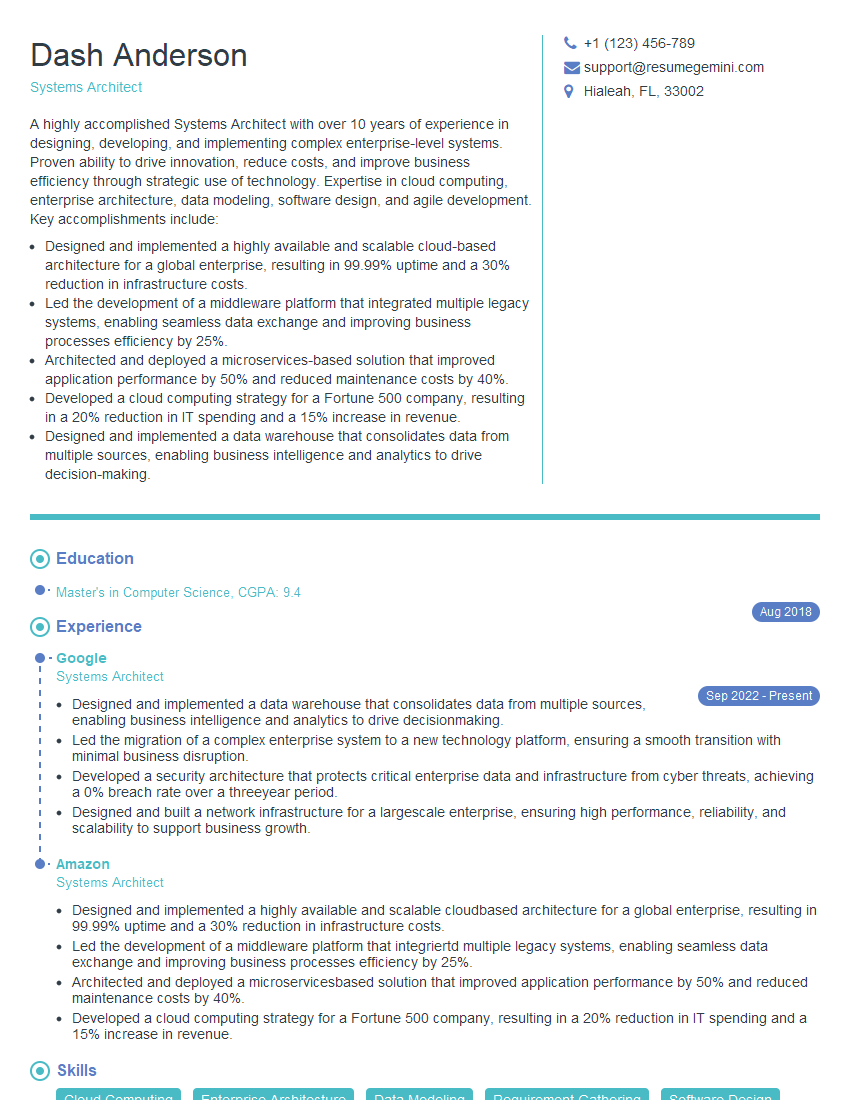

Earning your AWS Certified Solutions Architect – Associate certification significantly boosts your career prospects, opening doors to higher-paying roles and greater responsibility. To maximize your job search success, a well-crafted, ATS-friendly resume is crucial. ResumeGemini can help you build a professional, impactful resume that highlights your skills and experience. They provide examples specifically tailored for AWS Certified Solutions Architect – Associate candidates, ensuring your resume stands out. Invest the time to build a strong resume – it’s your first impression with potential employers.
