Interviews are more than just a Q&A session—they’re a chance to prove your worth. This blog dives into essential Cloud-Based Data Warehousing (e.g., AWS Redshift, Azure Synapse) interview questions and expert tips to help you align your answers with what hiring managers are looking for. Start preparing to shine!

Questions Asked in Cloud-Based Data Warehousing (e.g., AWS Redshift, Azure Synapse) Interview

Q 1. Explain the difference between a data warehouse and a data lake.

Think of a data warehouse as a highly organized, neatly stacked library, optimized for fast retrieval of specific books (data). It’s structured, schema-on-write, meaning the data structure is defined before data is loaded. A data lake, on the other hand, is like a massive, unorganized warehouse where you dump all kinds of materials (data) – raw, structured, semi-structured, unstructured. It’s schema-on-read, meaning the data structure is defined only when you query it. The data lake provides flexibility for exploration but requires more processing power for analysis.

- Data Warehouse: Structured, schema-on-write, optimized for analytics, suitable for business intelligence reporting.

- Data Lake: Schema-on-read, less structured, supports various data types, ideal for exploratory data analysis and machine learning.

For instance, a retail company might use a data warehouse to store summarized sales data for creating reports on monthly sales trends, while using a data lake to store raw transaction data for more advanced analytics, like identifying customer segments for targeted marketing campaigns.
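The schema-on-write versus schema-on-read distinction can be sketched in a few lines of Python. This is only an illustration: the `SALES_SCHEMA` mapping, the function names, and the sample records are all hypothetical, and plain lists stand in for the warehouse and the lake.

```python
import json

# Hypothetical schema for a warehouse table: column name -> required Python type.
SALES_SCHEMA = {"order_id": int, "amount": float, "region": str}

def write_to_warehouse(table, record):
    """Schema-on-write: validate the record before it is stored."""
    for column, expected_type in SALES_SCHEMA.items():
        if not isinstance(record.get(column), expected_type):
            raise ValueError(f"column {column!r} must be {expected_type.__name__}")
    table.append({column: record[column] for column in SALES_SCHEMA})

def write_to_lake(lake, raw_line):
    """Schema-on-read: store the raw text as-is, with no validation."""
    lake.append(raw_line)

def read_from_lake(lake):
    """Apply structure only at query time, skipping lines that do not fit."""
    for raw_line in lake:
        try:
            doc = json.loads(raw_line)
            yield {"order_id": int(doc["order_id"]), "amount": float(doc["amount"])}
        except (ValueError, KeyError, TypeError):
            continue  # malformed data is accepted at load time and handled at read time

warehouse_table, lake = [], []
write_to_warehouse(warehouse_table, {"order_id": 1, "amount": 19.99, "region": "EU"})
write_to_lake(lake, '{"order_id": "2", "amount": "5.50", "clicks": [1, 2]}')
write_to_lake(lake, "not even json")
lake_rows = list(read_from_lake(lake))
```

The warehouse rejects a badly typed record at load time, while the lake accepts anything and defers the cost of interpretation to each reader.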

Q 2. Describe your experience with ETL processes in a cloud environment.

My experience with ETL (Extract, Transform, Load) processes in cloud environments spans several years and various tools. I’ve extensively used AWS Glue, Azure Data Factory, and Informatica Cloud to build robust and scalable ETL pipelines. A typical project might involve extracting data from various sources (databases, flat files, APIs), transforming it using SQL or Python scripts (cleaning, formatting, enriching), and loading it into a cloud data warehouse like Redshift or Synapse. I’m adept at optimizing these pipelines for performance and cost-effectiveness, utilizing parallel processing, partitioning, and data compression techniques. For example, I once optimized an ETL process for a large e-commerce company that reduced processing time by 60% by implementing data partitioning and using optimized data types in Redshift.

I am also experienced in error handling and monitoring within ETL pipelines, which is what keeps data quality and reliability high. This usually means implementing logging and alerting so that issues are caught and resolved quickly.
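A minimal sketch of the extract, transform, and load stages is shown here, with an in-memory SQLite database standing in for Redshift or Synapse. The CSV content, table name, and cleaning rules are assumptions made for the example, not part of any real pipeline.

```python
import csv
import io
import sqlite3

# Hypothetical source extract; in practice this would come from a file, API, or database.
RAW_CSV = """order_id,customer,amount,order_date
1, alice ,10.50,2024-01-05
2,BOB,,2024-01-06
3,carol,7.25,2024-01-06
"""

def extract(text):
    """Read the raw CSV into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Clean names and types; set aside rows that have no amount."""
    clean, rejected = [], []
    for row in rows:
        if not row["amount"]:
            rejected.append(row)  # kept for logging and alerting, not silently dropped
            continue
        clean.append((
            int(row["order_id"]),
            row["customer"].strip().lower(),
            float(row["amount"]),
            row["order_date"],
        ))
    return clean, rejected

def load(conn, rows):
    """Create the target table if needed and insert the cleaned rows."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, order_date TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
clean_rows, rejected_rows = transform(extract(RAW_CSV))
load(conn, clean_rows)
loaded_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
```

Returning the rejected rows separately gives the monitoring layer something concrete to count and alert on.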

Q 3. What are the key performance indicators (KPIs) you would monitor in a cloud data warehouse?

I monitor a combination of operational and query-related key performance indicators (KPIs) to track the health and performance of a cloud data warehouse. These include:

- Query latency: Average time to execute queries. High latency indicates performance issues.

- Query throughput: Number of queries processed per unit of time. Low throughput suggests bottlenecks.

- Storage utilization: Amount of storage space used and its growth rate.

- Data loading speed: How quickly data is ingested into the warehouse.

- Cluster resource utilization (CPU, memory, network): Helps identify underutilized or overloaded resources.

- Data refresh frequency: How often the data is updated to reflect current business conditions.

- Error rate: Percentage of failed queries or data loading attempts.

By constantly monitoring these KPIs, we can proactively identify and address performance issues, ensuring the data warehouse remains efficient and reliable for business intelligence.
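As a rough illustration, three of these KPIs (query latency, throughput, and error rate) can be derived from a query log. The log format and the 60-second window below are assumptions for the sketch; a real warehouse exposes this information through its own system tables or monitoring service.

```python
from statistics import mean

# Hypothetical query log: (duration in seconds, succeeded?) collected over a 60-second window.
QUERY_LOG = [(0.8, True), (1.2, True), (4.0, False), (2.0, True)]
WINDOW_SECONDS = 60

def summarize(log, window_seconds):
    """Compute average latency, queries per minute, and failure percentage."""
    durations = [duration for duration, _ in log]
    failures = sum(1 for _, ok in log if not ok)
    return {
        "avg_latency_s": mean(durations),
        "throughput_qpm": len(log) / window_seconds * 60,
        "error_rate_pct": 100 * failures / len(log),
    }

kpis = summarize(QUERY_LOG, WINDOW_SECONDS)
```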

Q 4. How would you optimize query performance in AWS Redshift?

Optimizing query performance in AWS Redshift involves a multifaceted approach. It’s not just about writing efficient SQL; it’s about the entire system’s configuration and data organization. Here’s how I approach it:

- Optimize SQL queries: Use appropriate data types, choose sort keys and distribution keys (Redshift has no conventional indexes), and avoid full table scans. Use EXPLAIN to analyze query execution plans.

- Data modeling: Design star or snowflake schemas for efficient data access.

- Data partitioning and sorting: Distribute data across nodes strategically using appropriate distribution and sort keys based on frequently queried columns.

- Vacuum and Analyze: Regularly run VACUUM to reclaim space from deleted rows and re-sort data, and ANALYZE to update table statistics for accurate query planning.

- Cluster sizing: Ensure sufficient compute resources (nodes, instance type) for your workload. Consider elastic resize or concurrency scaling to absorb peaks.

- Workload management: Prioritize critical queries and manage concurrency to prevent resource contention.

- Caching: Utilize Redshift’s caching mechanisms to reduce disk I/O.

For example, I once improved a slow-running query in Redshift by 80% simply by adding a compound sort key to the target table, which let Redshift skip blocks outside the filtered range.
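The statements involved can be written out as follows. This is a sketch only: the `sales` table and its columns are hypothetical, and the SQL is held in Python strings rather than executed, since running it would need a live Redshift connection.

```python
# Hypothetical table and column names. The SQL targets Redshift and is only
# assembled here, not executed.
CREATE_SALES = """
CREATE TABLE sales (
    sale_id     BIGINT,
    customer_id INTEGER,
    store_id    INTEGER,
    sale_date   DATE,
    amount      DECIMAL(12, 2)
)
DISTSTYLE KEY
DISTKEY (customer_id)
COMPOUND SORTKEY (sale_date, store_id);
"""

# Routine maintenance: re-sort and reclaim space, then refresh planner statistics.
MAINTENANCE = ["VACUUM sales;", "ANALYZE sales;"]

def explain(query):
    """Prefix a query with EXPLAIN so the planner returns its plan instead of rows."""
    return "EXPLAIN " + query.strip()

plan_request = explain("""
    SELECT store_id, SUM(amount)
    FROM sales
    WHERE sale_date >= '2024-01-01'
    GROUP BY store_id;
""")
```

Distributing on `customer_id` assumes joins are usually on that column, and leading the sort key with `sale_date` assumes most queries filter by date. Both choices should follow the actual workload.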

Q 5. How would you handle data security and access control in Azure Synapse?

Data security and access control in Azure Synapse are paramount. I leverage several features to ensure only authorized users can access sensitive data:

- Azure Active Directory (Azure AD, now Microsoft Entra ID) Integration: Using Azure AD for authentication and authorization provides a centralized identity management solution. This allows for fine-grained access control based on roles and permissions.

- Row-Level Security (RLS): Implementing RLS policies restricts access to specific rows based on user attributes or security contexts. This enables selective data visibility without modifying the underlying data.

- Column-Level Security (CLS): Similar to RLS, CLS restricts access to specific columns based on user roles.

- Azure Synapse workspace security settings: Restricting network access to the workspace, alongside encryption, is a critical part of securing it as a whole.

- Data encryption: Employing encryption at rest and in transit safeguards data from unauthorized access.

- Data masking: Obfuscating sensitive data to protect privacy while maintaining data utility.

For instance, I might grant a sales team access to only sales-related data and mask customer credit card information using a combination of RLS and data masking in Azure Synapse.
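The combined effect of a row filter and a column mask can be simulated in plain Python. In Synapse itself these rules would be declared in the database rather than in application code, so the sketch below only mimics the outcome. The rows, the user name, and the masking rule are all hypothetical.

```python
# Hypothetical rows and user-to-region mapping, for illustration only.
ORDERS = [
    {"order_id": 1, "region": "EMEA", "card_number": "4111111111111111"},
    {"order_id": 2, "region": "APAC", "card_number": "5500005555555559"},
]
USER_REGION = {"sales_emea": "EMEA"}

def mask_card(card_number):
    """Keep only the last four digits visible."""
    return "X" * (len(card_number) - 4) + card_number[-4:]

def visible_orders(user):
    """Row filter (RLS-style) plus column masking for one user."""
    region = USER_REGION.get(user)
    return [
        {**row, "card_number": mask_card(row["card_number"])}
        for row in ORDERS
        if row["region"] == region
    ]

emea_view = visible_orders("sales_emea")
```

A user with no region mapping sees no rows at all, which is the safer default for this kind of filter.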

Q 6. Explain your understanding of data warehousing architectures.

Data warehousing architectures encompass various designs to efficiently manage and process large datasets. Common architectures include:

- Star Schema: A central fact table surrounded by dimension tables. Simple and efficient for reporting.

- Snowflake Schema: An extension of the star schema where dimension tables are further normalized. Reduces data redundancy.

- Data Vault: A model designed for high data volume and agility; suitable for complex transformations.

- 3-tier architecture: Separates data acquisition, processing, and presentation layers for improved scalability and maintainability.

The choice of architecture depends on factors such as the volume, variety, and velocity of data, and the specific business requirements. For example, a business intelligence dashboard might employ a star schema for its simplicity and reporting performance, while a machine learning application might benefit from a more flexible, less normalized architecture such as a data lake.

Q 7. Compare and contrast AWS Redshift and Azure Synapse.

AWS Redshift and Azure Synapse are both massively parallel processing (MPP) cloud data warehouses, but they have some key differences:

- Pricing Model: Redshift bills provisioned clusters per node-hour and Redshift Serverless by compute consumed, while Synapse prices dedicated SQL pools by provisioned capacity and serverless SQL pools by data processed.

- Integration with other cloud services: Redshift integrates tightly with other AWS services (S3, Glue, EMR), while Synapse integrates well with other Azure services (Azure Data Factory, Databricks).

- Scalability: Both offer excellent scalability. The serverless options on each platform adjust capacity to demand, while dedicated SQL pools and provisioned clusters are resized explicitly.

- SQL dialect: Both use SQL, but there are slight differences in supported functions and syntax.

- Features: Synapse offers a broader range of features, including serverless compute, Apache Spark integration, and dedicated data exploration capabilities.

Choosing between Redshift and Synapse depends on your existing cloud infrastructure, budget constraints, and specific analytical needs. For example, an organization fully invested in AWS might prefer Redshift for seamless integration and reduced operational overhead, while an organization already using Azure services might find Synapse to be a more natural fit.

Q 8. Describe your experience with data modeling for a data warehouse.

Data modeling for a data warehouse is the process of designing the structure and organization of data to efficiently store and retrieve information for analytical purposes. It involves defining dimensions, facts, and relationships between them to create a star schema or snowflake schema, commonly used for analytical queries. My experience includes designing dimensional models using both top-down and bottom-up approaches, depending on the project requirements and the existing data landscape. For example, in a project for a retail company, I designed a star schema with a central fact table containing sales transactions and dimensions like time, product, customer, and store. This allowed for efficient querying and analysis of sales data across various dimensions. Another project involved a more complex snowflake schema to accommodate a large number of attributes and handle hierarchical relationships. I utilized tools like Erwin and PowerDesigner to visually model the database schema and document data relationships. The key is to strike a balance between simplicity and completeness, ensuring the model is easy to understand and maintain while supporting the analytical needs of the business.
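A cut-down version of such a star schema is sketched below, with SQLite standing in for the warehouse. The table names, columns, and rows are invented for the example, and only two of the dimensions are shown.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, category TEXT);
CREATE TABLE dim_store   (store_id   INTEGER PRIMARY KEY, city TEXT);

-- The fact table holds measures plus a foreign key to each dimension.
CREATE TABLE fact_sales (
    sale_id    INTEGER PRIMARY KEY,
    product_id INTEGER REFERENCES dim_product(product_id),
    store_id   INTEGER REFERENCES dim_store(store_id),
    amount     REAL
);

INSERT INTO dim_product VALUES (1, 'toys'), (2, 'books');
INSERT INTO dim_store   VALUES (10, 'Lyon'), (20, 'Oslo');
INSERT INTO fact_sales  VALUES (100, 1, 10, 20.0), (101, 2, 10, 15.0), (102, 1, 20, 5.0);
""")

# A typical analytical query: join the fact table to one dimension and aggregate.
sales_by_category = conn.execute("""
    SELECT p.category, SUM(f.amount)
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_id = f.product_id
    GROUP BY p.category
    ORDER BY p.category
""").fetchall()
```

Slicing by store instead of product only changes which dimension is joined. The fact table stays the same, which is the main appeal of the design.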

Q 9. How would you troubleshoot a slow-performing query in a cloud data warehouse?

Troubleshooting slow-performing queries in a cloud data warehouse is a systematic process. My approach begins with identifying the bottleneck. I start with the query monitoring tools provided by the cloud provider (e.g., AWS Redshift’s query monitoring, Azure Synapse’s query performance insights). These tools report query execution time, resource utilization (CPU, memory, network), and the execution plan. For example, a long execution time might indicate a poorly optimized query, high CPU usage might suggest insufficient resources, and heavy network traffic might indicate that data is being moved between nodes to satisfy a join or aggregation.

Once the bottleneck is identified, I use a combination of strategies to improve query performance. This could involve rewriting the SQL query, choosing better sort and distribution keys (Redshift has no conventional indexes; Synapse dedicated SQL pools do support indexes), optimizing data types, partitioning the tables, or adding more resources to the cluster. For instance, if a query scans a large table that is not sorted or indexed on the filter column, adding a suitable sort key or index can drastically reduce execution time. If the query is computationally intensive, I might consider more powerful nodes or a larger cluster. It’s often an iterative process of analysis, optimization, and retesting until performance is satisfactory.

Q 10. What are some best practices for designing a scalable data warehouse in the cloud?

Designing a scalable data warehouse in the cloud requires careful planning and consideration of several key factors. Scalability is achieved by adopting a modular and flexible architecture. Some crucial best practices include:

- Choosing the right cloud provider and service: Selecting a cloud data warehouse service that suits your specific needs and anticipated growth is essential. Factors to consider include cost, performance, scalability, and ease of management.

- Data partitioning and clustering: Partitioning data into smaller, manageable units and strategically distributing them across multiple nodes improves query performance and enables parallel processing. This significantly enhances scalability for large datasets.

- Using columnar storage: Columnar storage is optimized for analytical queries, allowing the database to only read the required columns, reducing the I/O operations and improving query speeds, particularly when dealing with large fact tables.

- Implementing proper indexing strategies: Creating appropriate indexes on frequently queried columns, or their platform equivalents such as sort keys in Redshift, dramatically improves query performance and lets the warehouse keep up as data grows.

- Leveraging cloud-native services: Using cloud services for data loading, transformation, and orchestration streamlines the data pipeline and improves overall system efficiency. Tools like AWS Glue and Azure Data Factory are examples of such services.

- Monitoring and automation: Implement monitoring tools to track performance metrics, and use automation for tasks like scaling the cluster based on usage patterns. This allows for dynamic resource allocation, ensuring optimal performance at all times.

By following these best practices, we ensure the data warehouse can handle increasing data volumes and query loads without compromising performance. This ensures the business can continue to extract value from their data as their needs evolve.

Q 11. Explain your experience with different data integration tools.

My experience encompasses various data integration tools, including ETL (Extract, Transform, Load) tools like Informatica PowerCenter, SSIS (SQL Server Integration Services), and cloud-native services such as AWS Glue and Azure Data Factory. Each tool has its strengths and weaknesses. Informatica and SSIS are robust, mature tools suitable for complex ETL processes, but they can be more expensive and require specialized skills. Cloud-native services offer more scalability and cost-effectiveness, particularly for cloud-based data warehouses. For instance, I have used AWS Glue to build serverless ETL jobs to extract data from various sources, transform them using PySpark, and load them into AWS Redshift. This approach eliminates the need to manage infrastructure and offers automatic scaling based on the workload. Similarly, I leveraged Azure Data Factory to orchestrate data pipelines that integrate data from various on-premises and cloud-based sources into Azure Synapse Analytics. The choice of tool depends on factors like budget, complexity, and the existing IT infrastructure. A crucial aspect is to understand the limitations and capabilities of each tool to make informed decisions.

Q 12. How would you implement data governance in a cloud data warehouse?

Implementing data governance in a cloud data warehouse is crucial for ensuring data quality, security, and compliance. My approach involves a multi-faceted strategy. First, I establish clear data ownership and accountability. This involves identifying data owners responsible for the accuracy, completeness, and quality of their respective datasets. Second, I define data quality rules and implement automated data validation checks to detect and resolve data issues proactively. For example, we can define rules for data type validation, range checks, and consistency checks. Third, I enforce access control policies using the cloud provider’s security features (e.g., IAM roles in AWS, Azure Active Directory). This ensures that only authorized users can access sensitive data. Fourth, I create and maintain comprehensive data dictionaries and metadata catalogs to document data definitions, sources, and usage. This improves data discoverability and understanding. Finally, I regularly review and update data governance policies and procedures to adapt to changing business needs and regulatory requirements. This ensures long-term data reliability and compliance.

Q 13. Describe your experience with data warehousing automation tools.

My experience includes working with various data warehousing automation tools to streamline data pipeline operations and improve efficiency. These tools automate repetitive tasks, reduce manual intervention, and improve overall data quality. I’ve worked extensively with orchestration tools like Apache Airflow and cloud-native services such as AWS Step Functions and Azure Logic Apps to create automated workflows for data loading, transformation, and monitoring. For example, I designed an Airflow pipeline that automatically extracts data from various sources, cleans and transforms it, and loads it into a data warehouse on a scheduled basis. This automation eliminates manual intervention and ensures timely and consistent data updates. I also utilized AWS Step Functions to create state machines for complex data processing workflows, providing better error handling and monitoring capabilities. The choice of tool depends on the complexity of the workflow and the existing infrastructure. The overall objective is to maximize automation to reduce operational overhead and minimize human error.
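The core idea behind these orchestrators, running tasks in dependency order, can be sketched with Python's standard library alone. This is not Airflow or Step Functions code: the task names and the `run_pipeline` helper are hypothetical, and scheduling, retries, and alerting are left out.

```python
from graphlib import TopologicalSorter

run_log = []

# Hypothetical tasks; each one just records that it ran.
TASKS = {
    "extract": lambda: run_log.append("extract"),
    "transform": lambda: run_log.append("transform"),
    "load": lambda: run_log.append("load"),
    "notify": lambda: run_log.append("notify"),
}

# Each task maps to the set of tasks that must finish before it starts.
DEPENDENCIES = {
    "transform": {"extract"},
    "load": {"transform"},
    "notify": {"load"},
}

def run_pipeline(tasks, dependencies):
    """Run every task once, never before its prerequisites."""
    for name in TopologicalSorter(dependencies).static_order():
        tasks[name]()

run_pipeline(TASKS, DEPENDENCIES)
```

Declaring dependencies as data rather than as call order is what lets a real orchestrator retry a single failed step or run independent branches in parallel.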

Q 14. What are the different types of data warehousing solutions?

Data warehousing solutions can be broadly categorized into several types:

- Operational Data Store (ODS): An ODS is a type of data warehouse that focuses on current operational data, providing real-time or near real-time insights for operational decision-making. It typically handles a smaller volume of data compared to a traditional data warehouse.

- Data Lake: A data lake is a centralized repository that stores structured, semi-structured, and unstructured data in its raw form. It’s often used as a precursor to a data warehouse, allowing for greater flexibility and the ability to explore diverse data types. Think of it as a raw material stockpile before refining into a structured data warehouse.

- Data Mart: A data mart is a smaller, focused subset of a data warehouse designed to serve the specific analytical needs of a single department or business unit. They are usually created from a larger data warehouse for improved performance and targeted analytics.

- Cloud Data Warehouse: This refers to data warehouses hosted on a cloud platform, such as AWS Redshift, Azure Synapse Analytics, or Google BigQuery. They offer scalability, cost-effectiveness, and elasticity, making them increasingly popular.

- Enterprise Data Warehouse (EDW): An EDW is a comprehensive data warehouse that consolidates data from across an entire organization, serving as a single source of truth for analytical reporting and decision-making. EDWs are large and complex systems and require significant planning and resources.

The choice of data warehousing solution depends on the organization’s specific needs, data volume, analytical requirements, and budget.

Q 15. How do you handle data quality issues in a cloud data warehouse?

Data quality is paramount in any data warehouse, especially in the cloud. Think of it like building a house – you can’t build a strong structure on a weak foundation. Poor data quality leads to inaccurate insights and flawed decision-making. To address this, we employ a multi-pronged approach:

- Data Profiling and Validation: Before loading data, we thoroughly profile it to understand its structure, identify inconsistencies, and check for completeness. This often involves automated tools that check data types, ranges, and null values. For example, we might use SQL queries to identify rows with missing values in critical columns like ‘customer_id’ or ‘order_date’.

- Data Cleansing and Transformation: Once identified, data quality issues are addressed through cleansing and transformation. This could involve handling missing values (imputation or removal), correcting inconsistent formats (e.g., standardizing date formats), and removing duplicates. We might use ETL (Extract, Transform, Load) tools like AWS Glue or Azure Data Factory to automate these processes.

- Data Monitoring and Alerting: Ongoing monitoring is crucial. We establish automated checks and alerts that trigger when data quality metrics fall below a defined threshold. This allows for quick identification and remediation of emerging issues. For example, if the percentage of null values in a key column exceeds 5%, an alert will be generated.

- Data Governance and Policies: Clear data governance policies and procedures are established and enforced. This includes defining data quality standards, roles and responsibilities, and data validation processes. Regular audits ensure compliance with these standards.

By combining these strategies, we ensure the data in our cloud data warehouse is reliable, consistent, and fit for purpose, ultimately leading to trustworthy business intelligence.
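The null-rate alert described above could look roughly like this. The 5% threshold comes from the example in the text. The function names and the sample batch are assumptions for the sketch.

```python
NULL_RATE_THRESHOLD_PCT = 5.0  # the example threshold from the text

def null_rate_pct(rows, column):
    """Percentage of rows where the column is missing or None."""
    if not rows:
        return 0.0
    missing = sum(1 for row in rows if row.get(column) is None)
    return 100 * missing / len(rows)

def check_column(rows, column, threshold_pct=NULL_RATE_THRESHOLD_PCT):
    """Return the measured null rate and whether it should raise an alert."""
    rate = null_rate_pct(rows, column)
    return {"column": column, "null_rate_pct": rate, "alert": rate > threshold_pct}

# Hypothetical batch: 1 of 10 rows lacks a customer_id.
batch = [{"customer_id": i, "order_date": "2024-01-05"} for i in range(9)]
batch.append({"customer_id": None, "order_date": "2024-01-05"})

customer_check = check_column(batch, "customer_id")
date_check = check_column(batch, "order_date")
```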

Q 16. Explain your understanding of different data warehouse deployment models.

Cloud data warehouse deployment models offer various architectural choices, each with its own trade-offs. Imagine choosing between renting an apartment, buying a house, or building one from scratch. The best choice depends on your needs and budget.

- Fully Managed Services: This is like renting an apartment. Services like AWS Redshift or Azure Synapse Analytics handle everything – infrastructure, maintenance, and scaling. It’s simple, cost-effective for smaller deployments, and requires minimal management overhead.

- Serverless Architectures: Think of this as a flexible co-working space. You pay only for the compute resources you consume. This is ideal for unpredictable workloads, providing excellent scalability and cost optimization. BigQuery’s on-demand model and Synapse’s serverless SQL pool are examples; Snowflake comes close, with virtual warehouses that suspend automatically when idle.

- Managed Services with Customization: This is like buying a house – you have more control but also more responsibility. You might use a managed service but customize the configuration, networking, or security settings. This offers a balance between ease of use and control.

- Self-Managed: This is like building a house from scratch. You manage everything from the infrastructure to the software. This gives maximum control but requires significant expertise and resources. This is less common for cloud data warehouses due to the availability of fully managed services.

The choice of deployment model depends heavily on the organization’s technical expertise, budget, and the specific requirements of the data warehouse.

Q 17. How would you migrate an on-premises data warehouse to the cloud?

Migrating an on-premises data warehouse to the cloud is a significant undertaking, akin to moving a large, complex office. A phased approach is essential to minimize disruption and ensure a smooth transition:

- Assessment and Planning: Thoroughly assess the current on-premises environment, including data volume, schema, ETL processes, and performance requirements. Develop a detailed migration plan outlining timelines, resources, and potential risks.

- Data Profiling and Cleansing: Before migrating, thoroughly profile and clean the data to improve data quality and ensure a clean transition to the cloud. Identify and address inconsistencies, duplicates, and missing values.

- Proof of Concept (POC): Implement a small-scale proof of concept to test the migration process and validate the chosen cloud platform’s performance. This minimizes risks and identifies potential issues early on.

- Data Migration: Choose the appropriate migration strategy: lift and shift, replatforming, or refactoring. Utilize tools provided by cloud providers to facilitate schema conversion and data movement, for example the AWS Schema Conversion Tool or Azure Database Migration Service.

- ETL and Application Integration: Migrate and integrate the existing ETL processes and applications to the cloud. Consider using cloud-native ETL services to leverage cloud capabilities.

- Testing and Validation: Rigorous testing is vital to ensure data integrity and application functionality. This includes data validation, performance testing, and security testing.

- Go-Live and Monitoring: Gradually migrate data and deploy applications to the cloud. Implement monitoring and alerting systems to track performance and identify potential issues.

A successful migration requires careful planning, experienced personnel, and a robust testing strategy. Close collaboration with the cloud provider is highly recommended.
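One common validation step, comparing row counts and content checksums between source and target, can be sketched as follows. Two in-memory SQLite databases stand in for the on-premises and cloud warehouses. The `table_fingerprint` helper and the sample data are hypothetical.

```python
import hashlib
import sqlite3

def table_fingerprint(conn, table, key):
    """Return (row_count, sha256 over all rows ordered by key)."""
    # Table and key names are interpolated, so they must come from trusted config.
    rows = conn.execute(f"SELECT * FROM {table} ORDER BY {key}").fetchall()
    digest = hashlib.sha256()
    for row in rows:
        digest.update(repr(row).encode("utf-8"))
    return len(rows), digest.hexdigest()

def make_db(rows):
    """Build a throwaway database holding one orders table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    return conn

source = make_db([(1, 10.5), (2, 7.25)])
target_ok = make_db([(2, 7.25), (1, 10.5)])   # same rows, different load order
target_bad = make_db([(1, 10.5), (2, 7.2)])   # one value drifted during migration

source_print = table_fingerprint(source, "orders", "order_id")
matches = source_print == table_fingerprint(target_ok, "orders", "order_id")
drifted = source_print != table_fingerprint(target_bad, "orders", "order_id")
```

Ordering by the key before hashing makes the check insensitive to load order. For very large tables the same idea is usually applied per partition rather than to the whole table at once.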

Q 18. What are the benefits of using a cloud-based data warehouse?

Cloud-based data warehouses offer numerous advantages over on-premises solutions. Think of it like moving from a small, cramped office to a spacious, modern one with better amenities.

- Scalability and Elasticity: Cloud data warehouses can easily scale up or down based on demand, accommodating fluctuations in data volume and query load. This eliminates the need for significant upfront investment in infrastructure.

- Cost-Effectiveness: Pay-as-you-go pricing models reduce upfront costs and align expenses with actual usage. You only pay for the resources consumed.

- Enhanced Performance: Cloud providers invest heavily in high-performance infrastructure, resulting in faster query processing and improved analytics capabilities.

- Improved Security: Cloud providers offer robust security features, including data encryption, access control, and compliance certifications. This simplifies security management and reduces operational risks.

- Increased Availability and Reliability: Cloud data warehouses offer high availability and fault tolerance, minimizing downtime and ensuring data accessibility.

- Simplified Management: Cloud providers handle infrastructure management, reducing operational overhead and freeing up IT staff to focus on higher-value tasks.

These benefits contribute to faster time to insights, lower total cost of ownership, and improved agility for businesses.

Q 19. How would you choose the right cloud data warehouse solution for a given business need?

Choosing the right cloud data warehouse solution requires careful consideration of several factors. Think of it like selecting the right car – a small, fuel-efficient car for city driving is different from a large SUV for off-road adventures.

- Data Volume and Velocity: How much data do you need to store and process, and how quickly is it growing? This will influence the choice of storage capacity and processing power.

- Query Patterns and Performance Requirements: What types of queries will be run against the data, and what is the required response time? This influences the choice of compute engine and architecture.

- Budget and Cost Optimization: What’s your budget, and how can you optimize costs by choosing the appropriate pricing model and service tiers?

- Integration with Existing Systems: How well does the chosen solution integrate with your existing data pipelines, ETL tools, and business intelligence applications?

- Security and Compliance Requirements: What security and compliance requirements must be met? This influences the choice of security features and certifications.

- Team Expertise and Skills: What level of expertise does your team have with different cloud platforms and data warehousing technologies?

By carefully evaluating these factors, you can choose a cloud data warehouse solution that effectively meets your business needs and aligns with your budget and resources.

Q 20. Describe your experience with different data warehousing technologies (e.g., Snowflake, BigQuery).

I have extensive experience with several leading cloud data warehousing technologies, each with its strengths and weaknesses. Think of them as different types of tools in a toolbox, each suited for different jobs.

- Snowflake: Known for its scalability, performance, and ease of use. Its separation of storage and compute, with warehouses that suspend when idle, makes it cost-effective for variable workloads. I’ve used Snowflake for projects involving massive datasets and complex analytical queries, leveraging its powerful features like data sharing and time-travel.

- BigQuery: Google’s offering excels in handling large datasets and complex analytical workloads. Its integration with other Google Cloud services makes it a strong choice for organizations already invested in the Google ecosystem. I’ve utilized BigQuery for large-scale machine learning projects, leveraging its SQL capabilities and integration with TensorFlow.

- AWS Redshift: A mature and robust solution that offers a balance between performance, scalability, and cost. Its columnar storage and query optimization features make it suitable for analytical workloads. I’ve utilized Redshift in projects requiring high performance and strong integration with other AWS services. I’ve also leveraged its ability to scale seamlessly to meet demand peaks.

- Azure Synapse Analytics: Microsoft’s offering provides a unified platform for data integration, warehousing, and analytics. Its support for both serverless and dedicated SQL pools makes it versatile. I’ve used Synapse for projects requiring integration with on-premises data sources and leveraging the power of Azure’s ecosystem.

My experience with these technologies allows me to select the optimal solution based on project-specific needs and client requirements.

Q 21. How do you handle large datasets in a cloud data warehouse?

Handling large datasets in a cloud data warehouse requires a strategic approach. Imagine organizing a massive library – you need efficient systems for storage, retrieval, and organization.

- Data Partitioning and Clustering: Divide the data into smaller, manageable partitions based on relevant attributes (e.g., date, region). This improves query performance by reducing the amount of data scanned. Clustering allows for co-locating related data, further enhancing performance.

- Columnar Storage: Cloud data warehouses typically use columnar storage, which is optimized for analytical queries. It only reads the columns necessary for a given query, improving query speed significantly.

- Compression: Data compression reduces storage costs and improves query performance by minimizing the amount of data transferred. Cloud providers offer various compression options.

- Query Optimization: Writing efficient SQL queries is critical. Techniques like using appropriate indexes, filtering data early in the query, and avoiding unnecessary joins can greatly improve query performance.

- Data Sampling and Aggregation: For exploratory data analysis or prototyping, using data samples can significantly reduce processing time. Pre-aggregating data at lower levels can speed up complex aggregate queries.

- Materialized Views: Creating materialized views of frequently queried data can significantly reduce query processing time. This pre-calculates the results of common queries and stores them for rapid access.

By combining these techniques, we can efficiently handle and analyze even the largest datasets in a cloud data warehouse, ensuring quick response times and cost-effective operations.
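Partition pruning, the first technique in the list, can be illustrated with a toy example in which data is grouped by month and a query touches only the partition it needs. The event data and function names are hypothetical; a real warehouse does this internally from table metadata.

```python
from collections import defaultdict

# Hypothetical events, partitioned by month (YYYY-MM) of the event date.
EVENTS = [
    ("2024-01-03", 10.0),
    ("2024-01-20", 5.0),
    ("2024-02-11", 7.5),
    ("2024-03-02", 2.5),
]

def build_partitions(events):
    """Group events into one list per month."""
    partitions = defaultdict(list)
    for event_date, amount in events:
        partitions[event_date[:7]].append((event_date, amount))
    return partitions

def total_for_month(partitions, month):
    """Scan only the partition for the requested month."""
    scanned = partitions.get(month, [])
    return sum(amount for _, amount in scanned), len(scanned)

partitions = build_partitions(EVENTS)
january_total, rows_scanned = total_for_month(partitions, "2024-01")
```

Only two of the four rows are read to answer the January query. At warehouse scale, that same ratio is what drives the savings in scan time and cost.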

Q 22. Explain your understanding of different data warehousing pricing models.

Cloud data warehouse pricing models are diverse and often depend on the specific provider (AWS Redshift, Azure Synapse, Google BigQuery, etc.) and the services used. Generally, they fall into these categories:

- Compute Pricing: This is based on the amount of processing power (compute nodes) you consume and the duration of usage. Think of it like renting virtual servers – you pay for the time you use them. This is usually hourly or per-second billing. Higher-performance nodes naturally cost more.

- Storage Pricing: This charges you based on the amount of data stored in your data warehouse. This is usually priced per gigabyte (GB) or terabyte (TB) of data stored monthly. Consider this your ‘rent’ for the storage space.

- Data Ingestion Pricing: Moving data into your warehouse can incur costs, especially for high-volume or complex ingestion processes. Providers might charge per terabyte of data loaded or based on the number of operations performed.

- Data Transfer Pricing: Transferring data between your data warehouse and other services within the cloud or to on-premises systems is often charged separately. This pricing can vary based on data volume and location.

- Data Processing Pricing: Some operations like querying, data transformation, and other processes might have separate costs depending on the complexity and resources utilized.

Example: In AWS Redshift, RA3 nodes are billed per node-hour for compute, while Redshift Managed Storage is billed separately based on the amount of data stored; older DC2 nodes, by contrast, bundle local storage into the node price. Azure Synapse Analytics similarly charges for compute and storage separately: dedicated SQL pools are billed for the capacity you provision, and serverless SQL pools are billed by the amount of data each query processes. Understanding these component costs is key to effective budget management and choosing the right service tier.
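A rough cost model can help when comparing options in an interview discussion. The sketch below adds up compute, storage, and transfer components in Python. Every rate in it is a made-up placeholder, not a real provider price, and the names `PricingAssumptions` and `estimate_monthly_cost` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PricingAssumptions:
    # All rates are illustrative placeholders, NOT real provider prices.
    compute_per_node_hour: float
    storage_per_tb_month: float
    transfer_per_tb: float


def estimate_monthly_cost(p, nodes, hours_running, stored_tb, transferred_tb):
    """Break a monthly bill into compute, storage, and transfer components."""
    compute = nodes * hours_running * p.compute_per_node_hour
    storage = stored_tb * p.storage_per_tb_month
    transfer = transferred_tb * p.transfer_per_tb
    return {
        "compute": compute,
        "storage": storage,
        "transfer": transfer,
        "total": compute + storage + transfer,
    }


rates = PricingAssumptions(
    compute_per_node_hour=3.0,
    storage_per_tb_month=25.0,
    transfer_per_tb=20.0,
)

# Same cluster, two usage patterns: running all month vs. paused outside work hours.
always_on = estimate_monthly_cost(rates, nodes=4, hours_running=720, stored_tb=10, transferred_tb=2)
business_hours = estimate_monthly_cost(rates, nodes=4, hours_running=220, stored_tb=10, transferred_tb=2)

print(always_on)
print(business_hours)
```

With these placeholder numbers, compute dominates the bill, which is why pausing or resizing compute tends to be the first lever to look at.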

Q 23. How would you design a data warehouse for real-time analytics?

Designing a data warehouse for real-time analytics requires a different approach than traditional batch processing. The key is minimizing latency. Here’s a design strategy:

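- Choose the Right Cloud Platform: Pick a service that supports low-latency ingestion. Amazon Redshift offers streaming ingestion from Kinesis Data Streams and Amazon MSK (Redshift Spectrum, by contrast, is for querying data at rest in S3), and Azure Synapse dedicated SQL pools can be fed by streaming services for near real-time loads. Serverless options are particularly good for handling unpredictable workloads.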

- Streaming Data Ingestion: Instead of batch loading, implement continuous data ingestion using streaming services like Amazon Kinesis, Apache Kafka, or Azure Event Hubs. This ensures data is available immediately.

- Change Data Capture (CDC): Use CDC techniques to capture only the changes in your source systems, minimizing the amount of data processed and improving efficiency. This can significantly reduce the volume of data to be ingested and processed, leading to lower latency.

- Data Modeling for Speed: Optimize your data model for fast query performance. This involves techniques like denormalization (duplicating data to reduce joins), partitioning, and clustering. Consider columnar storage formats, which are optimized for analytical queries.

- Materialized Views: Create materialized views (pre-computed aggregations) of frequently queried data subsets. This significantly speeds up query response times, making your data warehouse truly responsive.

- Caching: Leverage caching mechanisms to store frequently accessed data in memory for ultrafast retrieval. Many cloud data warehouses offer built-in caching capabilities.

- Real-time Data Visualization Tools: Integrate real-time dashboards and visualization tools (e.g., Tableau, Power BI) that connect directly to your data warehouse to display results as they become available.

Example: Imagine a financial trading platform. Every trade needs to be immediately reflected in dashboards for analysts. By using Kinesis to stream trade data into a Redshift cluster with materialized views for key metrics, we can provide near real-time analytics for traders.
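The Change Data Capture step can be sketched in a few lines of Python. This is a simplified, in-memory illustration, assuming each change event carries a sequence number, an operation, a primary key, and the changed columns. The event shape and the name `apply_cdc_events` are hypothetical and not tied to any particular CDC tool.

```python
def apply_cdc_events(table, events):
    """Apply insert/update/delete change events to a dict keyed by primary key.

    Events are applied in sequence order so late-arriving events
    do not overwrite newer state.
    """
    for event in sorted(events, key=lambda e: e["seq"]):
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            # Merge changed columns into the existing row (upsert).
            table[key] = {**table.get(key, {}), **event["data"]}
        elif op == "delete":
            table.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op}")
    return table


# Hypothetical trade events, deliberately out of order.
events = [
    {"seq": 1, "op": "insert", "key": "T1", "data": {"symbol": "ACME", "qty": 100}},
    {"seq": 3, "op": "delete", "key": "T2"},
    {"seq": 2, "op": "insert", "key": "T2", "data": {"symbol": "XYZ", "qty": 50}},
    {"seq": 4, "op": "update", "key": "T1", "data": {"qty": 150}},
]

trades = apply_cdc_events({}, events)
print(trades)
```

Only four small events are processed instead of reloading the whole source table, which is where the latency savings come from. In a warehouse, the same upsert logic is typically expressed as a merge from a staging table.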

Q 24. What are some common challenges faced when implementing a cloud data warehouse?

Implementing a cloud data warehouse presents several challenges:

- Data Migration Complexity: Moving large datasets from on-premises systems or other cloud services can be time-consuming, complex, and costly, requiring careful planning and execution.

- Cost Management: Cloud data warehouse costs can escalate quickly if not managed proactively. Monitoring resource consumption and optimizing queries are crucial to avoid unexpected expenses.

- Schema Design and Optimization: Designing an efficient schema that balances performance, scalability, and maintainability is crucial. Poor design can lead to performance bottlenecks and high costs.

- Data Governance and Security: Ensuring data security, compliance with regulations (GDPR, HIPAA, etc.), and establishing robust data governance processes are essential. This includes access control, data encryption, and auditing.

- Skill Gap: Managing and optimizing a cloud data warehouse requires specialized skills in data engineering, cloud technologies, and data analytics. Finding and retaining qualified personnel can be challenging.

- Vendor Lock-in: Choosing a cloud provider (AWS, Azure, Google) can lead to vendor lock-in. Carefully consider your long-term strategy to avoid potential future migration challenges.

Example: I once worked on a migration project where underestimating data transformation needs resulted in significant delays and cost overruns. Proper planning, including thorough data profiling and testing, is vital to prevent such issues.

Q 25. How do you ensure data consistency and accuracy in a cloud data warehouse?

Data consistency and accuracy are paramount in a data warehouse. Here’s how to ensure them:

- Data Validation at Ingestion: Implement data quality checks at the ingestion stage to identify and correct or reject invalid data before it enters the warehouse. This includes checks for data type validation, range checks, and consistency checks.

- Data Transformation and Cleansing: Apply data transformation and cleansing procedures to handle inconsistencies, missing values, and outliers. This might involve standardization, deduplication, and data enrichment.

- Version Control and Auditing: Track changes to the data warehouse schema and data using version control. Maintain comprehensive audit logs to track data modifications and access events. This facilitates troubleshooting and data lineage tracing.

- Data Lineage Tracking: Understand the origin and flow of data throughout its lifecycle. This helps to identify the source of data inconsistencies and ensure accountability.

- Regular Data Quality Monitoring: Establish a data quality monitoring process to regularly assess data accuracy, completeness, and consistency. Automated monitoring tools can flag potential issues proactively.

- ETL/ELT Processes: Use robust ETL (Extract, Transform, Load) or ELT (Extract, Load, Transform) processes to manage data pipelines reliably. Ensure these processes are designed for accuracy and handle potential errors gracefully.

Example: Using data profiling tools to identify unusual spikes or drops in data values during ingestion can help detect data quality problems early on, before they negatively impact analysis.
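The ingestion-time checks described above (type, range, and consistency) might look like the following Python sketch. The field names, the amount limit, and the functions `validate_record` and `split_valid_invalid` are assumptions chosen for illustration; a real pipeline would take its rules from the source system's contract.

```python
from datetime import date


def validate_record(record):
    """Return a list of data quality errors for one order record."""
    errors = []

    # Type check.
    if not isinstance(record.get("order_id"), int):
        errors.append("order_id: expected integer")

    # Type and range check (the upper bound is an arbitrary example limit).
    amount = record.get("amount")
    if not isinstance(amount, (int, float)):
        errors.append("amount: expected number")
    elif not 0 <= amount <= 1_000_000:
        errors.append("amount: out of range")

    # Consistency check across two fields.
    try:
        ordered = date.fromisoformat(record["order_date"])
        shipped = date.fromisoformat(record["ship_date"])
        if shipped < ordered:
            errors.append("ship_date: earlier than order_date")
    except (KeyError, TypeError, ValueError):
        errors.append("dates: missing or not ISO formatted")

    return errors


def split_valid_invalid(records):
    """Route clean records to the load step and the rest to a reject list."""
    valid, rejected = [], []
    for record in records:
        errors = validate_record(record)
        if errors:
            rejected.append((record, errors))
        else:
            valid.append(record)
    return valid, rejected


batch = [
    {"order_id": 1, "amount": 99.5, "order_date": "2024-03-01", "ship_date": "2024-03-03"},
    {"order_id": "2", "amount": -5, "order_date": "2024-03-02", "ship_date": "2024-03-04"},
    {"order_id": 3, "amount": 10, "order_date": "2024-03-05", "ship_date": "2024-03-01"},
]

valid, rejected = split_valid_invalid(batch)
print(len(valid), "valid,", len(rejected), "rejected")
for record, errors in rejected:
    print(record["order_id"], errors)
```

Keeping the rejected records together with their error messages makes it easier to report quality issues back to the source system rather than silently dropping rows.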

Q 26. Describe your experience with data visualization tools used with cloud data warehouses.

I’ve extensively used various data visualization tools with cloud data warehouses. Popular choices include:

- Tableau: Excellent for interactive dashboards and complex visualizations. Its connectivity to various cloud data warehouses is seamless.

- Power BI: A strong competitor to Tableau, offering similar functionalities with a focus on ease of use and Microsoft ecosystem integration.

- Qlik Sense: Known for its associative data exploration capabilities, enabling users to uncover hidden insights through intuitive navigation.

- Looker (Google Cloud): Integrates tightly with Google BigQuery and offers a powerful combination of data modeling, exploration, and visualization.

- Amazon QuickSight: AWS’s native BI service, offering a good balance between cost-effectiveness and functionality, especially when working with Redshift.

My experience involves designing interactive dashboards, creating custom visualizations, and automating report generation using these tools. Choosing the right tool depends on factors like budget, technical expertise, integration requirements, and the specific analytical needs of the project.

Example: In a project analyzing customer behavior, I used Tableau to create dashboards showing sales trends, customer segmentation, and product performance, all connected to our Azure Synapse warehouse.

Q 27. How would you perform capacity planning for a cloud data warehouse?

Capacity planning for a cloud data warehouse is crucial to ensure performance and cost-efficiency. It involves these steps:

- Data Volume Estimation: Project future data volume growth based on historical trends and business projections. Consider factors like data ingestion rates and data retention policies.

- Workload Characterization: Analyze query patterns, including query complexity, frequency, and concurrency. Understand the peak workload demands to avoid performance bottlenecks.

- Performance Testing: Conduct thorough performance testing using realistic workloads to determine the necessary compute and storage capacity. This might involve simulating expected data growth and query patterns.

- Resource Scaling: Cloud data warehouses allow for easy scaling of compute and storage resources. The goal is to find the optimal balance between performance and cost. Consider using auto-scaling features to automatically adjust resources based on demand.

- Monitoring and Adjustment: Continuously monitor resource utilization and performance metrics. Adjust capacity as needed based on observed patterns and performance requirements.

- Cost Optimization Strategies: Implement cost optimization strategies, such as data compression, query optimization, and using cost-effective storage tiers. Regularly review and optimize resource allocation to minimize unnecessary expenses.

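Example: Using AWS Redshift’s workload management (WLM) features, we can define separate query queues for different workloads, such as ETL jobs and interactive dashboards, each with its own share of memory and concurrency. Combined with resizing the cluster or using concurrency scaling during peaks, this keeps resource utilization high while controlling costs.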
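The data volume estimation step can be approximated with a simple compound-growth projection. The Python sketch below assumes a constant monthly growth rate, which is a simplification, and the function names `project_storage_tb` and `months_until_full` are hypothetical.

```python
import math


def project_storage_tb(current_tb, monthly_growth_rate, months):
    """Project storage size assuming constant compound monthly growth."""
    return current_tb * (1 + monthly_growth_rate) ** months


def months_until_full(current_tb, monthly_growth_rate, capacity_tb):
    """Return the first whole month in which projected size reaches capacity.

    Returns 0 if already at capacity and None if the data is not growing.
    """
    if current_tb >= capacity_tb:
        return 0
    if monthly_growth_rate <= 0:
        return None
    months = math.log(capacity_tb / current_tb) / math.log(1 + monthly_growth_rate)
    return math.ceil(months)


# Hypothetical inputs: 10 TB today, growing 5% per month, 20 TB provisioned.
in_one_year = project_storage_tb(10, 0.05, 12)
runway = months_until_full(10, 0.05, 20)

print(f"Projected size in 12 months: {in_one_year:.2f} TB")
print(f"Months until 20 TB is reached: {runway}")
```

A projection like this is only a starting point; it should be revisited against actual ingestion metrics, and retention policies can flatten the curve considerably.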

Q 28. Explain your experience with monitoring and logging in a cloud data warehouse environment.

Monitoring and logging are essential for ensuring the health, performance, and security of a cloud data warehouse. My experience includes:

- Cloud Provider Monitoring Services: Leveraging built-in monitoring tools like Amazon CloudWatch for AWS Redshift or Azure Monitor for Azure Synapse. These services provide metrics on CPU usage, memory utilization, storage I/O, and query performance.

- Query Performance Monitoring: Tracking slow-running queries, analyzing query plans, and optimizing queries to improve performance. Tools like Redshift’s query monitoring features are indispensable.

- Error Logging and Alerting: Setting up error logging and alerts to proactively identify and address issues. This includes configuring alerts for critical errors, high resource consumption, and performance degradation.

- Custom Monitoring and Alerting: Implementing custom monitoring and alerting solutions using tools like Prometheus (metrics collection) and Grafana (dashboards) for more granular control and tailored monitoring. This is crucial for situations requiring custom metrics or integrations.

Example: By setting up CloudWatch alarms for Redshift cluster CPU utilization exceeding a certain threshold, we proactively receive alerts, allowing us to scale resources or investigate potential performance bottlenecks before they impact users.
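The logic behind a threshold alarm is easy to sketch in Python. The function below is a simplified imitation of how a threshold alarm evaluates recent datapoints, not the CloudWatch API itself. The state names mirror the ones CloudWatch uses, but the function name `alarm_state` and the sample CPU values are made up for illustration.

```python
def alarm_state(datapoints, threshold, evaluation_periods):
    """Evaluate a simple threshold alarm over the most recent datapoints.

    Returns "ALARM" only when every one of the last `evaluation_periods`
    datapoints exceeds the threshold, which avoids alerting on a single spike.
    """
    if len(datapoints) < evaluation_periods:
        return "INSUFFICIENT_DATA"
    recent = datapoints[-evaluation_periods:]
    return "ALARM" if all(value > threshold for value in recent) else "OK"


# Hypothetical CPU utilization samples (percent), one per five-minute period.
single_spike = [40, 45, 95, 50, 48]
sustained_load = [40, 45, 85, 90, 92]

print(alarm_state(single_spike, threshold=80, evaluation_periods=3))
print(alarm_state(sustained_load, threshold=80, evaluation_periods=3))
```

Requiring several consecutive breaches is a common way to trade a little detection delay for far fewer false alarms.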

Key Topics to Learn for Cloud-Based Data Warehousing (e.g., AWS Redshift, Azure Synapse) Interview

- Data Modeling for Cloud Data Warehouses: Understanding dimensional modeling, snowflake schema, and star schema, and choosing the appropriate model for specific business needs. Practical application includes designing a data warehouse schema for a given business scenario.

- Data Loading and Transformation: Mastering ETL (Extract, Transform, Load) processes, including data ingestion methods, data cleaning techniques, and data transformation using SQL and other tools. Practical application involves optimizing data loading performance and ensuring data quality.

- Query Optimization and Performance Tuning: Understanding query execution plans, identifying performance bottlenecks, and applying optimization strategies specific to Redshift/Synapse (e.g., choosing sort and distribution keys in Redshift, choosing table distribution and index types in Synapse, optimizing JOINs). Practical application includes resolving performance issues in existing queries.

- Security and Access Control: Implementing robust security measures, including IAM roles, access control lists (ACLs), and data encryption. Practical application involves designing a secure data warehouse architecture.

- Cost Optimization: Understanding the pricing models of Redshift and Synapse, and implementing strategies to minimize costs. Practical application includes right-sizing compute resources and optimizing data storage.

- Monitoring and Maintenance: Implementing monitoring tools and strategies to track performance, identify issues, and ensure the health of the data warehouse. Practical application includes proactively addressing potential issues before they impact business operations.

- High Availability and Disaster Recovery: Understanding high availability configurations and disaster recovery strategies to ensure business continuity. Practical application includes designing a highly available and resilient data warehouse architecture.

- Data Warehousing Concepts: A solid grasp of core data warehousing principles, including data warehousing architectures, data integration techniques, and the differences between operational and analytical databases.

Next Steps

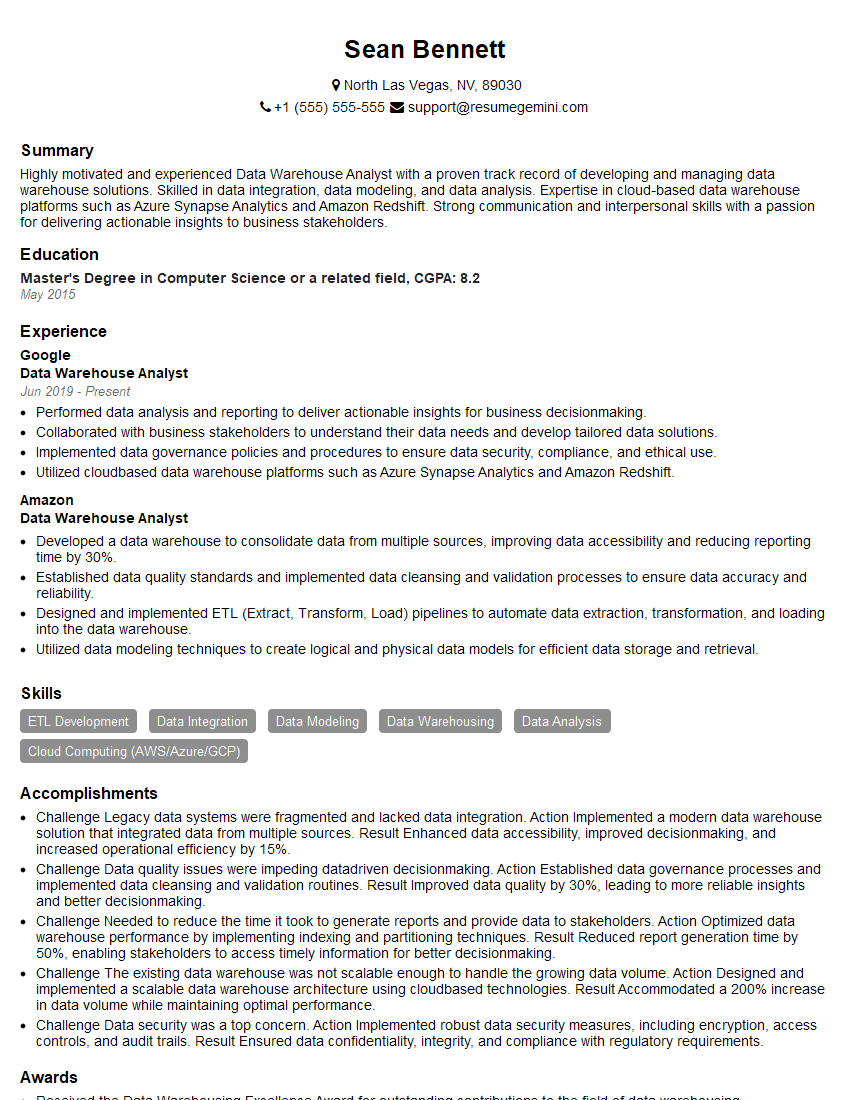

Mastering cloud-based data warehousing with platforms like AWS Redshift and Azure Synapse is crucial for career advancement in today’s data-driven world. These skills are highly sought after, opening doors to exciting roles with significant growth potential. To maximize your job prospects, crafting a compelling and ATS-friendly resume is essential. ResumeGemini is a trusted resource that can help you build a professional and impactful resume, showcasing your expertise effectively. Examples of resumes tailored to Cloud-Based Data Warehousing roles using AWS Redshift and Azure Synapse are available to help you get started.
