Unlock your full potential by mastering the most common Codes interview questions. This blog offers a deep dive into the critical topics, ensuring you’re not only prepared to answer but to excel. With these insights, you’ll approach your interview with clarity and confidence.

Questions Asked in Codes Interview

Q 1. Explain the difference between stack and queue data structures.

Stack and queue are both fundamental data structures used to store and manage collections of elements, but they differ significantly in how elements are added and removed.

A stack follows the Last-In, First-Out (LIFO) principle. Imagine a stack of plates; you can only add a new plate to the top and remove the top plate. The last element added is the first one removed. Common stack operations include push (add an element) and pop (remove an element). Stacks are used extensively in function call management (call stack), undo/redo functionality, and expression evaluation.

A queue, on the other hand, follows the First-In, First-Out (FIFO) principle. Think of a queue at a grocery store; the first person in line is the first person served. The first element added is the first one removed. Common queue operations include enqueue (add an element) and dequeue (remove an element). Queues are used in managing tasks, buffering data in systems, and implementing breadth-first search algorithms.

Here’s a simple analogy: A stack is like a stack of pancakes – you eat the top one first. A queue is like a line at the movies – the first person in line gets in first.
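To make the LIFO and FIFO orders concrete, here is a minimal sketch that uses plain JavaScript arrays for both structures. The variable names and sample values are only illustrative.

```javascript
// Stack: push and pop both work on the end of the array (LIFO).
const stack = [];
stack.push("plate 1");
stack.push("plate 2");
stack.push("plate 3");
const topPlate = stack.pop(); // the last plate added comes off first

// Queue: enqueue at the back, dequeue from the front (FIFO).
const queue = [];
queue.push("customer 1");
queue.push("customer 2");
queue.push("customer 3");
const firstServed = queue.shift(); // the first customer added is served first

console.log(topPlate);    // plate 3
console.log(firstServed); // customer 1
```

Note that shift() on an array has to move every remaining element, so for very large queues a linked list or an index-based queue is usually a better fit.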

Q 2. What are the common sorting algorithms? Discuss their time and space complexities.

Several sorting algorithms exist, each with its own strengths and weaknesses concerning time and space complexity. Time complexity describes how the runtime scales with the input size (n), while space complexity refers to the amount of extra memory used.

- Bubble Sort: Simple but inefficient. Time complexity: O(n^2), Space complexity: O(1) (in-place).

- Insertion Sort: Efficient for small datasets or nearly sorted data. Time complexity: O(n^2) in the average and worst case, approaching O(n) on nearly sorted input. Space complexity: O(1) (in-place).

- Selection Sort: Another simple but inefficient algorithm. Time complexity: O(n^2), Space complexity: O(1) (in-place).

- Merge Sort: Efficient and stable; uses divide and conquer. Time complexity: O(n log n), Space complexity: O(n) (not in-place).

- Quick Sort: Generally very efficient, but worst-case time complexity can be O(n^2). Average case: O(n log n), Space complexity: O(log n) on average (due to recursive calls).

- Heap Sort: Guaranteed O(n log n) time complexity, making it a reliable choice. Space complexity: O(1) (in-place).

The choice of sorting algorithm depends on factors like dataset size, whether stability is required (maintaining the relative order of equal elements), and memory constraints. For large datasets, Merge Sort or Heap Sort are preferred for their consistent performance, while Quick Sort is often a good choice for its average-case efficiency.
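As one illustration of an in-place O(1)-space algorithm from the list above, here is a rough sketch of insertion sort. The function name insertionSort is just a label chosen for this example, and it assumes the values can be compared with the > operator.

```javascript
// Sorts the array in place and returns the same array.
function insertionSort(arr) {
  for (let i = 1; i < arr.length; i++) {
    const current = arr[i];
    let j = i - 1;
    // Shift larger elements one slot to the right to open a gap.
    while (j >= 0 && arr[j] > current) {
      arr[j + 1] = arr[j];
      j--;
    }
    arr[j + 1] = current; // drop the current value into the gap
  }
  return arr;
}

console.log(insertionSort([5, 2, 4, 6, 1, 3])); // [ 1, 2, 3, 4, 5, 6 ]
```

On input that is already nearly sorted the inner loop exits almost immediately, which is why this algorithm does well in that case.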

Q 3. Describe the process of merge sort.

Merge sort is a highly efficient sorting algorithm based on the divide and conquer paradigm. It works by recursively breaking down the unsorted list into smaller sublists until each sublist contains only one element (which is inherently sorted). Then, it repeatedly merges the sublists to produce new sorted sublists until there is only one sorted list remaining.

- Divide: Recursively divide the unsorted list into halves until you have sublists of size one.

- Conquer: Merge the sublists pairwise to create new sorted sublists. This is the core of the algorithm.

- Combine: Repeat the merge process until you have a single sorted list.

Example: Let’s sort the list [8, 3, 1, 7, 0, 10, 2]:

- Divide: [8, 3, 1, 7] and [0, 10, 2]

- Divide further: [8, 3], [1, 7], [0, 10], [2]

- Divide further: [8], [3], [1], [7], [0], [10], [2]

- Conquer (Merge): Merge [8] and [3] to get [3, 8]. Merge [1] and [7] to get [1, 7]. Merge [0] and [10] to get [0, 10].

- Conquer (Merge): Merge [3, 8] and [1, 7] to get [1, 3, 7, 8]. Merge [0, 10] and [2] to get [0, 2, 10].

- Conquer (Merge): Merge [1, 3, 7, 8] and [0, 2, 10] to get [0, 1, 2, 3, 7, 8, 10].

The merging step is crucial; it involves comparing elements from the two sublists and placing them in the correct order into a new list.
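The steps above can be sketched in JavaScript as follows. The function names merge and mergeSort are illustrative, and the split point rounds up so that the halves match the worked example.

```javascript
// Merge two already sorted arrays into one sorted array.
function merge(left, right) {
  const merged = [];
  let i = 0;
  let j = 0;
  while (i < left.length && j < right.length) {
    // Taking from the left on ties keeps the sort stable.
    if (left[i] <= right[j]) {
      merged.push(left[i++]);
    } else {
      merged.push(right[j++]);
    }
  }
  // One side is exhausted; append whatever remains of the other.
  return merged.concat(left.slice(i), right.slice(j));
}

function mergeSort(list) {
  if (list.length <= 1) return list; // a single element is already sorted
  const mid = Math.ceil(list.length / 2);
  const left = mergeSort(list.slice(0, mid));
  const right = mergeSort(list.slice(mid));
  return merge(left, right);
}

console.log(mergeSort([8, 3, 1, 7, 0, 10, 2])); // [ 0, 1, 2, 3, 7, 8, 10 ]
```

This version returns a new array instead of sorting in place, which reflects the O(n) extra space that merge sort typically needs.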

Q 4. Explain the concept of recursion and give an example.

Recursion is a powerful programming technique where a function calls itself within its own definition. It’s like a set of Russian nesting dolls; each doll contains a smaller version of itself. This approach is ideal for solving problems that can be broken down into smaller, self-similar subproblems.

A recursive function must have two key components:

- Base Case: A condition that stops the recursion. Without a base case, the function would call itself indefinitely, leading to a stack overflow error.

- Recursive Step: The part where the function calls itself with a modified input, moving closer to the base case.

Example: Calculating the factorial of a number:

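function factorial(n) {
  if (n < 0 || !Number.isInteger(n)) {
    // Guard: without it, such inputs never reach the base case
    throw new RangeError("n must be a non-negative integer");
  }
  if (n === 0) { // Base case
    return 1;
  } else {
    return n * factorial(n - 1); // Recursive step
  }
}

console.log(factorial(5)); // Output: 120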

In this example, factorial(5) calls factorial(4), which calls factorial(3), and so on until it reaches the base case (n === 0). The results are then multiplied back up the chain of calls to produce the final result.

Q 5. What is dynamic programming? Provide an example of its application.

Dynamic programming is an optimization technique that solves complex problems by breaking them down into smaller, overlapping subproblems, solving each subproblem only once, and storing their solutions to avoid redundant computations. This stored information is often organized in a table or memoization structure.

It’s particularly useful for problems exhibiting optimal substructure (the optimal solution can be constructed from optimal solutions of its subproblems) and overlapping subproblems (the same subproblems are encountered multiple times).

Example: Fibonacci sequence calculation:

The Fibonacci sequence (0, 1, 1, 2, 3, 5, 8…) can be computed recursively, but this approach is highly inefficient due to repeated calculations. Dynamic programming provides an efficient solution:

function fibonacciDP(n) {
  const memo = [0, 1];
  for (let i = 2; i <= n; i++) {
    memo[i] = memo[i - 1] + memo[i - 2];
  }
  return memo[n];
}

console.log(fibonacciDP(6)); // Output: 8

Here, we store the results of previous calculations in the memo array, avoiding recalculations. This significantly improves efficiency, especially for larger values of n.

Dynamic programming is applied in various areas such as sequence alignment in bioinformatics, finding the shortest path in graphs, and resource allocation problems.
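The same problem can also be solved top-down, keeping the recursive shape and caching each result the first time it is computed. The sketch below is one way to do that; the name fibonacciMemo is illustrative, and it assumes n is a non-negative integer.

```javascript
// Top-down dynamic programming: recursion plus a cache of solved subproblems.
function fibonacciMemo(n, memo = new Map()) {
  if (n <= 1) return n; // base cases: F(0) = 0, F(1) = 1
  if (memo.has(n)) return memo.get(n); // reuse a stored answer
  const value = fibonacciMemo(n - 1, memo) + fibonacciMemo(n - 2, memo);
  memo.set(n, value);
  return value;
}

console.log(fibonacciMemo(6));  // 8
console.log(fibonacciMemo(50)); // 12586269025
```

Without the cache, the call for n = 50 would repeat the same subproblems billions of times; with it, each value from 2 to 50 is computed once.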

Q 6. How do you handle exceptions in your code?

Exception handling is crucial for writing robust and reliable code. It involves anticipating and gracefully managing errors that may occur during program execution. This prevents unexpected crashes and allows the program to continue running even in the face of adversity.

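In many programming languages (like JavaScript, Java, and C++), exception handling uses try-catch blocks; Python uses the equivalent try-except. The JavaScript example below parses malformed JSON, which throws a SyntaxError. (Dividing by zero would not work as an example here, because in JavaScript 10 / 0 evaluates to Infinity instead of throwing.)

try {
  // Code that might throw an exception
  const result = JSON.parse("{ not valid JSON"); // Throws a SyntaxError
  console.log(result);
} catch (error) {
  // Handle the exception
  console.error("An error occurred:", error.message);
}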

The try block contains the code that could potentially generate an exception. If an exception occurs, the program's control flow jumps to the catch block, where you can handle the error appropriately (e.g., logging the error, displaying a user-friendly message, or attempting a recovery strategy). Proper exception handling ensures the program doesn't terminate unexpectedly and potentially provides users with informative feedback.

Beyond basic try-catch, strategies like using custom exception types, implementing multiple catch blocks for different exception types, and using finally blocks for cleanup operations (releasing resources) are important aspects of effective exception management.
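A rough sketch of those strategies in JavaScript might look like the following. JavaScript has a single catch block, so the example branches on the error type with instanceof instead of using multiple catch blocks. ValidationError, parseAge, and readAge are hypothetical names made up for this illustration.

```javascript
// A custom exception type for one specific kind of failure.
class ValidationError extends Error {
  constructor(message) {
    super(message);
    this.name = "ValidationError";
  }
}

function parseAge(input) {
  const age = Number(input);
  if (!Number.isInteger(age) || age < 0) {
    throw new ValidationError(`Invalid age: ${input}`);
  }
  return age;
}

function readAge(input) {
  const log = [];
  try {
    return { age: parseAge(input), log };
  } catch (error) {
    if (error instanceof ValidationError) {
      log.push(`handled: ${error.message}`);
      return { age: null, log };
    }
    throw error; // unknown errors are rethrown, not swallowed
  } finally {
    log.push("cleanup"); // runs on success and on failure
  }
}

console.log(readAge("42"));
console.log(readAge("abc"));
```

The finally block is the natural place for releasing resources such as file handles or connections, since it runs whichever path the function takes.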

Q 7. Explain the difference between breadth-first search (BFS) and depth-first search (DFS).

Breadth-First Search (BFS) and Depth-First Search (DFS) are two fundamental graph traversal algorithms used to explore nodes and edges in a graph. They differ significantly in the order they visit nodes.

BFS explores the graph level by level. It starts at a root node and visits all its neighbors before moving to the neighbors of those neighbors. Think of it as exploring a city block by block. It uses a queue to manage the nodes to visit. BFS is ideal for finding the shortest path in an unweighted graph.

DFS explores the graph by going as deep as possible along each branch before backtracking. It's like exploring a maze by going down one path as far as you can before turning back and trying another path. It uses a stack (implicitly through recursion or explicitly using a stack data structure) to keep track of the nodes to visit. DFS is commonly used in topological sorting and detecting cycles in graphs.

Analogy: Imagine you're searching for a specific house in a city (the graph). BFS is like checking every house one block from your starting point, then every house two blocks away, and so on outward. DFS is like following a single route as far as it goes and, only when you hit a dead end, backtracking to the last intersection to try a different turn.
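To see the two visiting orders side by side, here is a small sketch over a made-up graph stored as an adjacency list. The graph, its node names, and the function names bfs and dfs are all illustrative.

```javascript
// A small directed graph as an adjacency list (sample data).
const graph = {
  A: ["B", "C"],
  B: ["D"],
  C: ["E"],
  D: ["F"],
  E: [],
  F: [],
};

// BFS: a queue makes nodes come out level by level.
function bfs(graph, start) {
  const visited = new Set([start]);
  const queue = [start];
  const order = [];
  while (queue.length > 0) {
    const node = queue.shift();
    order.push(node);
    for (const neighbor of graph[node]) {
      if (!visited.has(neighbor)) {
        visited.add(neighbor);
        queue.push(neighbor);
      }
    }
  }
  return order;
}

// DFS: recursion uses the call stack to go deep before backtracking.
function dfs(graph, node, visited = new Set(), order = []) {
  visited.add(node);
  order.push(node);
  for (const neighbor of graph[node]) {
    if (!visited.has(neighbor)) dfs(graph, neighbor, visited, order);
  }
  return order;
}

console.log(bfs(graph, "A").join(" ")); // A B C D E F
console.log(dfs(graph, "A").join(" ")); // A B D F C E
```

BFS reaches C before D because both B and C are one step from A, while DFS follows the A, B, D, F branch to its end before it ever looks at C.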

Q 8. What are design patterns? Give examples of commonly used design patterns.

Design patterns are reusable solutions to commonly occurring problems in software design. They provide a blueprint for structuring code, making it more efficient, maintainable, and understandable. Think of them as pre-fabricated modules for your code building.

- Singleton Pattern: Ensures that only one instance of a class is created. Imagine a database connection – you only want one open connection at a time to avoid resource conflicts.

class DatabaseConnection {
    private static DatabaseConnection instance;

    private DatabaseConnection() {}

    public static DatabaseConnection getInstance() {
        if (instance == null) {
            instance = new DatabaseConnection();
        }
        return instance;
    }
}

- Factory Pattern: Creates objects without specifying their concrete classes. Suppose you have different types of vehicles (cars, trucks, bikes). A factory pattern can create the right vehicle type based on input, hiding the complex creation logic.

- Observer Pattern: Defines a one-to-many dependency between objects. A classic example is a weather station that notifies multiple clients (weather apps, news websites) when there's a change in weather conditions.

- MVC (Model-View-Controller): Separates application concerns into three interconnected parts: Model (data), View (presentation), and Controller (user input). This is extremely common in web applications, improving code organization and maintainability.

Using design patterns leads to better code readability, reduces development time, and promotes collaboration among developers.
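As a rough illustration of the Observer pattern described above, the sketch below models a weather station that notifies subscribed callbacks. The class name WeatherStation and its methods are hypothetical, chosen only for this example.

```javascript
class WeatherStation {
  constructor() {
    this.observers = new Set();
  }

  // Registers a callback and returns a function that removes it again.
  subscribe(observer) {
    this.observers.add(observer);
    return () => this.observers.delete(observer);
  }

  // Notifies every current observer of the new reading.
  setTemperature(celsius) {
    for (const observer of this.observers) {
      observer(celsius);
    }
  }
}

const station = new WeatherStation();
const appReadings = [];
const newsReadings = [];

const unsubscribeApp = station.subscribe((t) => appReadings.push(t));
station.subscribe((t) => newsReadings.push(t));

station.setTemperature(21); // both observers are notified
unsubscribeApp();
station.setTemperature(25); // only the news observer is notified

console.log(appReadings, newsReadings);
```

After the run, appReadings holds only 21 while newsReadings holds 21 and 25, showing that the station never needs to know who is listening.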

Q 9. How do you ensure code quality and maintainability?

Ensuring code quality and maintainability is crucial for long-term success of any software project. It involves a multi-pronged approach:

- Code Reviews: Having peers review your code helps catch bugs early, improves code style consistency, and shares knowledge within the team. It's like having a second pair of eyes to spot potential issues.

- Testing (Unit, Integration, System): Thorough testing is essential to verify functionality and identify defects. Unit tests test individual components, integration tests verify interactions between components, and system tests validate the overall system behavior.

- Linting and Static Analysis: Tools like ESLint (for JavaScript) or Pylint (for Python) automatically analyze your code for style violations, potential bugs, and maintainability issues. It's like having a grammar checker for your code.

- Code Style Guidelines: Adhering to a consistent coding style (e.g., PEP 8 for Python) makes code easier to read and understand. Think of it as using consistent formatting in a document – it greatly improves readability.

- Documentation: Clear, concise, and up-to-date documentation is paramount. This includes comments within the code explaining complex logic, as well as external documentation explaining the system's architecture and usage.

- Refactoring: Regularly improving the internal structure of the code without changing its external behavior. It's like decluttering your house to make it more efficient and organized. This enhances maintainability and reduces technical debt.

By consistently applying these practices, you significantly improve the quality, maintainability, and long-term viability of your codebase.

Q 10. What is version control and why is it important?

Version control is a system that records changes to a file or set of files over time so that you can recall specific versions later. Think of it like Google Docs' revision history, but for code. Git is the most popular version control system.

Its importance stems from:

- Tracking Changes: Keeps a detailed history of every modification, allowing you to easily revert to previous versions if needed.

- Collaboration: Enables multiple developers to work on the same project concurrently without overwriting each other's changes.

- Branching and Merging: Allows developers to create separate branches to work on new features or bug fixes without affecting the main codebase. Merging integrates these changes seamlessly once they are ready.

- Backup and Recovery: Provides a safe backup of your code, protecting against accidental data loss.

In a professional setting, version control is indispensable for teamwork, project management, and ensuring software stability.

Q 11. Explain the difference between Git pull and Git fetch.

Both git pull and git fetch download changes from a remote repository, but they differ in what they do after the download:

- git fetch: Downloads the changes from the remote repository to your local machine, but it does not automatically merge them into your current branch. It simply updates your local repository's knowledge of the remote repository's state. Think of it as checking your email – you see what new messages are available, but you haven't read them yet.

- git pull: Downloads the changes from the remote repository and immediately merges them into your current branch. It is essentially a shortcut for git fetch followed by git merge. Think of it as checking your email and automatically opening and reading the new messages.

Using git fetch before git merge gives you more control, allowing you to review the changes before integrating them into your local branch. This helps to avoid unexpected merge conflicts.

Q 12. What are the advantages and disadvantages of different database systems?

Different database systems offer various advantages and disadvantages depending on the specific needs of an application. Here's a comparison of some popular types:

- Relational Databases (e.g., MySQL, PostgreSQL, SQL Server):

- Advantages: Data integrity through ACID properties (Atomicity, Consistency, Isolation, Durability), mature ecosystem, well-established tools and expertise.

- Disadvantages: Can be less scalable than NoSQL databases for certain workloads, complex schema design can be time-consuming.

- NoSQL Databases (e.g., MongoDB, Cassandra, Redis):

- Advantages: High scalability and flexibility, better handling of unstructured data, often simpler to set up and manage.

- Disadvantages: Can lack data integrity features of relational databases, less mature ecosystem in some cases.

- Graph Databases (e.g., Neo4j):

- Advantages: Excellent for managing relationships between data points, suitable for social networks, recommendation systems, etc.

- Disadvantages: Not as widely adopted as relational or NoSQL databases, requires specialized knowledge.

The best choice depends on factors such as data volume, data structure, required scalability, consistency needs, and existing expertise within the team.

Q 13. Explain the concept of normalization in database design.

Normalization in database design is a systematic process of organizing data to reduce redundancy and improve data integrity. It's like tidying up your filing cabinet to avoid having multiple copies of the same information.

It involves breaking down larger tables into smaller ones and defining relationships between them. This reduces data redundancy, improving efficiency and preventing data inconsistencies. For example, instead of having a single table with repeating customer addresses, you'd create separate tables for customers and addresses, with each customer row referencing an address ID. This ensures that each address is stored only once, even if multiple customers share the same address.

Different levels of normalization (1NF, 2NF, 3NF, etc.) exist, with each level imposing stricter rules on data organization. The choice of normalization level depends on the trade-off between data redundancy and query performance.
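The customer and address example can be mimicked with plain JavaScript data to show why the split helps. This is only an in-memory analogy, not a real database; it assumes a layout in which each customer stores an addressId, and all names and values are made up.

```javascript
// Denormalized: the same address text is copied onto every customer row.
const customersFlat = [
  { id: 1, name: "Ada", address: "1 Main St" },
  { id: 2, name: "Bob", address: "1 Main St" },
];

// Normalized: each address is stored once and referenced by ID.
const addresses = new Map([[100, { street: "1 Main St" }]]);
const customers = [
  { id: 1, name: "Ada", addressId: 100 },
  { id: 2, name: "Bob", addressId: 100 },
];

// Looks up a customer's address through the reference (a "join").
function addressOf(customerId) {
  const customer = customers.find((c) => c.id === customerId);
  return customer ? addresses.get(customer.addressId).street : undefined;
}

// One update fixes the address for every customer who shares it.
addresses.get(100).street = "1 Main Street";

console.log(addressOf(1)); // 1 Main Street
console.log(addressOf(2)); // 1 Main Street
```

In the flat version the same correction would have to be applied to every copy, and missing one would leave the data inconsistent.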

Q 14. What is SQL injection and how can it be prevented?

SQL injection is a code injection technique that exploits vulnerabilities in database applications to inject malicious SQL code into database queries. Imagine a sneaky thief injecting instructions into your shopping list to steal items instead of buying groceries.

Attackers can use this to steal data, modify data, or even gain control of the database server. A common attack involves manipulating input fields (like a login form) to inject malicious SQL code that bypasses security checks.

Prevention techniques include:

- Parameterized Queries or Prepared Statements: These treat user inputs as data, not executable code, preventing malicious SQL from being interpreted. Think of this as putting user input inside a secured box that the database understands but cannot modify.

- Input Validation and Sanitization: Carefully validating and sanitizing all user inputs to remove or escape potentially harmful characters before they are used in database queries.

- Least Privilege Principle: Granting database users only the necessary permissions, limiting potential damage from a successful attack. Only give access to what's needed, similar to using a limited account rather than an administrator account.

- Escaping Special Characters: Using functions to properly escape special characters that are used as SQL commands (e.g., single quotes, semicolons).

- Stored Procedures: Using stored procedures can help encapsulate SQL code and prevent direct execution of user-supplied SQL.

Implementing these security measures is vital to protecting database applications from SQL injection attacks.
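To illustrate the first technique, the sketch below contrasts string concatenation with a parameterized query. It does not talk to a real database; it only builds the query that would be sent. The function names are hypothetical, and the $1 placeholder with a separate values array follows a style used by some PostgreSQL drivers (other drivers use ? instead), so treat the exact shape as an assumption.

```javascript
// Unsafe: user input becomes part of the SQL text itself.
function buildUnsafeQuery(username) {
  return `SELECT * FROM users WHERE name = '${username}'`;
}

// Safer: the SQL text is fixed and the input travels separately as a value.
function buildParameterizedQuery(username) {
  return {
    text: "SELECT * FROM users WHERE name = $1",
    values: [username],
  };
}

const malicious = "' OR '1'='1";

console.log(buildUnsafeQuery(malicious));
// SELECT * FROM users WHERE name = '' OR '1'='1'

console.log(buildParameterizedQuery(malicious).text);
// SELECT * FROM users WHERE name = $1
```

In the unsafe version the attacker's quotes change the structure of the WHERE clause so that it matches every row. In the parameterized version the query text never changes, and the driver passes the input to the database purely as data.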

Q 15. What are the different types of database relationships?

Database relationships define how data in different tables are connected. Understanding these relationships is crucial for designing efficient and well-structured databases. There are three primary types:

- One-to-one (1:1): One record in a table is related to only one record in another table. Think of a person and their passport; each person ideally has only one passport, and each passport belongs to only one person. This is often implemented using a foreign key in one of the tables.

- One-to-many (1:M) or Many-to-one (M:1): One record in a table can be related to multiple records in another table. A classic example is a customer and their orders; one customer can place multiple orders, but each order belongs to only one customer. The foreign key typically resides in the 'many' side of the relationship (the orders table in this case).

- Many-to-many (M:N): Records in one table can be related to multiple records in another table, and vice-versa. For instance, a student can take many courses, and each course can have many students. This relationship often requires a junction table (also known as an associative entity) to manage the connections.

Choosing the correct relationship type ensures data integrity and avoids redundancy. For example, incorrectly modeling a one-to-many as a one-to-one would lead to data loss or inconsistencies.
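The many-to-many case is the least obvious of the three, so here is a small in-memory sketch of a junction table. The arrays stand in for tables, and every name and value is made up for illustration.

```javascript
const students = [
  { id: 1, name: "Ana" },
  { id: 2, name: "Ben" },
];
const courses = [
  { id: 10, title: "Algorithms" },
  { id: 20, title: "Databases" },
];

// Junction table: one row per (student, course) pair.
const enrollments = [
  { studentId: 1, courseId: 10 },
  { studentId: 1, courseId: 20 },
  { studentId: 2, courseId: 10 },
];

function coursesFor(studentId) {
  return enrollments
    .filter((e) => e.studentId === studentId)
    .map((e) => courses.find((c) => c.id === e.courseId).title);
}

function studentsIn(courseId) {
  return enrollments
    .filter((e) => e.courseId === courseId)
    .map((e) => students.find((s) => s.id === e.studentId).name);
}

console.log(coursesFor(1));  // [ 'Algorithms', 'Databases' ]
console.log(studentsIn(10)); // [ 'Ana', 'Ben' ]
```

Neither the students nor the courses need a list-valued column; the relationship lives entirely in the enrollments rows, which is what a junction table does in a relational schema.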

Q 16. Explain the concept of RESTful APIs.

RESTful APIs follow REST (Representational State Transfer), an architectural style for building web services. They use HTTP methods (GET, POST, PUT, DELETE) to interact with resources. A key characteristic is their statelessness; each request contains all the information needed to process it, without relying on previous interactions. This makes them scalable and easy to maintain.

Key principles of a RESTful API include:

- Client-server architecture: Separates client and server concerns for better maintainability.

- Statelessness: Each request is independent.

- Cacheability: Responses can be cached to improve performance.

- Uniform interface: Uses standard HTTP methods and data formats like JSON or XML.

- Layered system: Clients may not know whether they're interacting directly with the server or an intermediary layer.

- Code on demand (optional): The server can extend client functionality by transferring executable code.

Imagine ordering food online. The RESTful API would handle requests like 'GET /menu' (retrieve menu), 'POST /order' (place an order), and 'PUT /order/{id}' (update an order). Each request is self-contained and doesn't rely on the server remembering past interactions.
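The food-ordering example can be sketched as a tiny request handler. This is not a real HTTP server; handle is a hypothetical function that maps a method and path to a response object, with in-memory data standing in for a database.

```javascript
const menu = ["pizza", "salad"];
const orders = new Map(); // resource storage, not per-client session state
let nextId = 1;

function handle(method, path, body) {
  if (method === "GET" && path === "/menu") {
    return { status: 200, body: menu };
  }
  if (method === "POST" && path === "/order") {
    const id = nextId++;
    orders.set(id, { ...body, id });
    return { status: 201, body: orders.get(id) };
  }
  const match = path.match(/^\/order\/(\d+)$/);
  if (method === "PUT" && match) {
    const id = Number(match[1]);
    if (!orders.has(id)) return { status: 404, body: null };
    orders.set(id, { ...body, id }); // replace the whole resource
    return { status: 200, body: orders.get(id) };
  }
  return { status: 404, body: null };
}

console.log(handle("GET", "/menu"));
console.log(handle("POST", "/order", { item: "pizza" }));
console.log(handle("PUT", "/order/1", { item: "salad" }));
```

Each call carries everything the handler needs (method, path, and body), so nothing depends on which request came before it from the same client.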

Q 17. Describe the difference between HTTP GET and POST requests.

Both HTTP GET and POST are used to send requests to a server, but they serve different purposes:

- GET: Retrieves data from the server. It's idempotent, meaning making the same request multiple times produces the same result. The data is typically included in the URL itself, making it visible in the browser's address bar. It's generally used for querying information.

- POST: Submits data to be processed to the server. It's not idempotent; submitting the same data multiple times will likely have different results (e.g., creating multiple entries). The data is sent in the request body, not the URL, making it less visible. It's frequently used for creating or updating resources.

Example: Consider a blog application. GET /posts would retrieve a list of blog posts. POST /posts would submit a new blog post.

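The choice between GET and POST depends on the operation: GET for retrieving data, POST for creating resources or submitting data for processing. Updates and deletions are usually expressed with PUT, PATCH, or DELETE rather than POST. Using GET for operations that modify data is generally discouraged, because GET requests are expected to be safe and may be cached, prefetched, or repeated by browsers and intermediaries.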
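The difference in where the data travels can be shown without sending anything over the network. The sketch below only builds plain objects describing the two requests; the query parameters and post fields are invented for illustration.

```javascript
// GET: parameters travel in the URL's query string.
const getUrl = new URL("https://example.com/posts");
getUrl.searchParams.set("author", "ada");
getUrl.searchParams.set("page", "2");
const getRequest = { method: "GET", url: getUrl.toString() };

// POST: data travels in the request body.
const postRequest = {
  method: "POST",
  url: "https://example.com/posts",
  headers: { "Content-Type": "application/json" },
  body: JSON.stringify({ title: "Hello", content: "First post" }),
};

console.log(getRequest.url); // https://example.com/posts?author=ada&page=2
console.log(postRequest.body);
```

Because the GET parameters are part of the URL, they can end up in browser history and server logs, which is one reason sensitive data belongs in a request body sent over HTTPS.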

Q 18. How do you handle concurrency in your code?

Handling concurrency is crucial for building robust and efficient applications, especially those dealing with multiple users or processes simultaneously. My approach depends on the specific context, but common strategies include:

- Locking mechanisms: Using mutexes (mutual exclusion locks) or semaphores to control access to shared resources. This prevents race conditions, where multiple threads modify the same data simultaneously, leading to unpredictable results.

- Transactions: In database operations, transactions ensure atomicity—either all changes are applied or none. This protects data integrity in concurrent environments.

- Thread-safe data structures: Using data structures designed for concurrent access, such as concurrent dictionaries or queues, minimizes the need for explicit locking.

- Asynchronous programming: Using techniques like callbacks, promises, or async/await to handle long-running operations without blocking the main thread, improving responsiveness.

- Message queues: Decoupling components through message queues allows for parallel processing and improved scalability. A task is sent to a queue and processed asynchronously by a worker.

For instance, in a banking application, locking mechanisms would prevent two threads from simultaneously withdrawing money from the same account. This ensures the correct balance is maintained even with concurrent transactions.
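JavaScript runs application code on a single thread, so it has no thread mutex in the usual sense, but the same race appears whenever async operations interleave. The sketch below shows the banking idea with a simple promise-based lock. Mutex, Account, and sleep are hypothetical helpers written for this example, and the 5 ms delay stands in for a database call.

```javascript
// A minimal lock: each task starts only after the previous one finishes.
class Mutex {
  constructor() {
    this.tail = Promise.resolve();
  }
  runExclusive(task) {
    const result = this.tail.then(() => task());
    this.tail = result.catch(() => {}); // keep the chain alive after failures
    return result;
  }
}

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

class Account {
  constructor(balance) {
    this.balance = balance;
    this.mutex = new Mutex();
  }

  // Race-prone: both callers can read the same balance before either writes.
  async withdrawUnsafe(amount) {
    const current = this.balance;
    await sleep(5); // simulated I/O; other calls interleave here
    if (current < amount) throw new Error("Insufficient funds");
    this.balance = current - amount;
  }

  // Safe: the read-check-write sequence runs under the lock.
  withdraw(amount) {
    return this.mutex.runExclusive(async () => {
      const current = this.balance;
      await sleep(5);
      if (current < amount) throw new Error("Insufficient funds");
      this.balance = current - amount;
    });
  }
}

async function demo() {
  const account = new Account(100);
  const outcomes = await Promise.allSettled([
    account.withdraw(80),
    account.withdraw(80),
  ]);
  console.log(outcomes.map((o) => o.status), account.balance);
}

demo();
```

With the lock, the first withdrawal succeeds, the second is rejected for insufficient funds, and the balance ends at 20. With withdrawUnsafe, both calls would read 100 and both would succeed, paying out 160 from an account that only held 100.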

Q 19. Explain the concept of threading and multiprocessing.

Both threading and multiprocessing are used to achieve concurrency, but they differ in how they manage it:

- Threading: Multiple threads of execution run within the same process. Threads share the same memory space, making communication between them efficient but requiring careful management of shared resources to avoid race conditions. This is suitable for I/O-bound tasks (e.g., network operations) where threads can wait for external resources without blocking each other.

- Multiprocessing: Multiple processes run independently, each with its own memory space. This is inherently safer than threading because processes cannot directly access each other's memory. It's better suited for CPU-bound tasks (e.g., complex calculations) where processes can fully utilize multiple CPU cores. Inter-process communication is more complex but safer.

Analogy: Imagine preparing a meal. Threading is like having multiple cooks in the same kitchen, sharing ingredients and tools – potentially leading to chaos if not coordinated properly. Multiprocessing is like having separate kitchens for each part of the meal—more independent but requiring more coordination between kitchens.

Q 20. What are the different types of network protocols?

Network protocols define the rules and standards for communication over a network. There are numerous protocols, each serving a specific purpose. Some key categories include:

- Transport Layer Protocols: These protocols handle reliable and unreliable data transmission between applications. Examples include TCP (Transmission Control Protocol) and UDP (User Datagram Protocol).

- Network Layer Protocols: These protocols handle addressing and routing of data packets across the network. The most prominent example is IP (Internet Protocol).

- Application Layer Protocols: These protocols provide specific services to applications. Examples include HTTP (Hypertext Transfer Protocol) for web browsing, SMTP (Simple Mail Transfer Protocol) for email, and DNS (Domain Name System) for name resolution.

- Data Link Layer Protocols: These protocols define how data is transmitted over physical links. Examples include Ethernet and Wi-Fi.

These protocols work in a layered architecture, building upon each other to enable complex network communication. Each layer has its specific responsibilities, ensuring efficient and reliable data transfer.

Q 21. Explain the difference between TCP and UDP.

TCP and UDP are both transport layer protocols, but they differ significantly in their approach to data transmission:

- TCP (Transmission Control Protocol): Provides a reliable, ordered, and connection-oriented service. It guarantees delivery of data, detects errors, and retransmits lost packets. This makes it suitable for applications requiring high reliability, such as web browsing or file transfer. It's connection-oriented, meaning a connection is established before data is transmitted, and the connection remains open until explicitly closed.

- UDP (User Datagram Protocol): Provides an unreliable, connectionless service. It doesn't guarantee delivery or order of data packets. This makes it faster but less reliable than TCP. It's suitable for applications where some packet loss is acceptable, such as streaming video or online gaming. No connection is established before transmitting data; each packet is treated independently.

Analogy: TCP is like sending a registered letter – you're guaranteed delivery and tracking. UDP is like sending a postcard – it's faster, but there's no guarantee it will arrive.

Q 22. What are the common security vulnerabilities in web applications?

Web application security vulnerabilities are a serious concern. They can range from simple coding errors to sophisticated attacks exploiting system weaknesses. Common vulnerabilities include:

- SQL Injection: This occurs when malicious code is inserted into database queries, allowing attackers to manipulate or access sensitive data. For example, an attacker might craft a URL like http://example.com/users?id=1;DROP TABLE users;-- to delete the entire users table.

- Cross-Site Scripting (XSS): This allows attackers to inject malicious scripts into websites viewed by other users. Imagine a comment section where an attacker posts a script that steals user cookies. Proper input sanitization and output encoding are crucial defenses.

- Cross-Site Request Forgery (CSRF): This tricks a user into performing unwanted actions on a website they're already authenticated to. A classic example is a malicious link disguised as a legitimate button that performs an action like transferring funds.

- Broken Authentication and Session Management: Weak passwords, predictable session IDs, or lack of proper authentication mechanisms can allow attackers to gain unauthorized access.

- Insecure Direct Object References (IDOR): This vulnerability arises when a web application exposes direct references to internal objects, allowing users to bypass access controls. For instance, if a URL directly references a file like http://example.com/files/admin.conf, unauthorized users might gain access.

- Security Misconfiguration: This is often a broad category encompassing many issues, such as default credentials remaining unchanged, exposing sensitive information in configuration files, or incorrect server settings.

Mitigating these vulnerabilities requires a multi-layered approach including secure coding practices, regular security audits, and the use of web application firewalls (WAFs).
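As an example of the output encoding mentioned for XSS, the sketch below escapes the characters that are significant in HTML before user text is placed into a page. The escapeHtml helper is a hand-written illustration; in practice a templating engine or framework usually does this for you.

```javascript
// Replaces characters that have special meaning in HTML with entities.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;") // must run first so later entities are not re-escaped
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

const comment = "<script>stealCookies()</script>";
const safeHtml = `<p>${escapeHtml(comment)}</p>`;

console.log(safeHtml);
// <p>&lt;script&gt;stealCookies()&lt;/script&gt;</p>
```

The browser now displays the comment as text instead of running it. This particular escaping is meant for HTML element content and quoted attribute values; other contexts such as URLs or inline scripts need their own encoding rules.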

Q 23. How do you test your code?

Testing is an integral part of my development process. I employ a variety of testing methods, depending on the project's complexity and requirements. This typically includes:

- Unit Testing: I use unit tests to verify the functionality of individual components or modules in isolation. Frameworks like JUnit (Java) or pytest (Python) are invaluable here. I strive for high test coverage, aiming for 80% or more.

- Integration Testing: After unit testing, I move to integration testing, where I verify the interaction between different modules. This helps catch issues related to data flow and communication between components.

- System Testing: This broader level of testing verifies the entire system against specified requirements. It often involves testing different user flows and scenarios.

- End-to-End Testing: This type of testing simulates the user's experience from start to finish, ensuring all system components work together seamlessly.

- Automated Testing: Whenever possible, I automate tests using frameworks like Selenium (UI testing) or REST Assured (API testing) to improve efficiency and consistency.

My testing approach is driven by a belief in continuous integration and continuous delivery (CI/CD), allowing for frequent code testing and rapid identification of bugs.

Q 24. Explain the difference between unit testing and integration testing.

Unit testing and integration testing are both crucial aspects of software testing, but they focus on different levels of the software system.

- Unit Testing: This tests individual units (modules, components, functions) of the code in isolation. The goal is to verify that each unit functions correctly on its own, without considering the interaction with other parts of the system. For example, a unit test for a function that calculates the area of a circle would only test the function's ability to perform this calculation correctly, given different inputs. It wouldn't consider how that function interacts with other parts of a larger application.

- Integration Testing: This focuses on verifying the interactions between different units or modules. It tests how these units work together as a larger system. Continuing with the circle area example, integration testing might verify that the function properly interfaces with a database to store or retrieve circle data.

Think of unit testing as testing individual LEGO bricks, while integration testing is testing how those bricks fit together to create a larger structure. Both are essential to ensure the overall functionality and stability of the software.
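To make the distinction concrete, here is a minimal sketch that continues the circle area example. The names `circle_area` and `save_circle`, the `circles` table, and the use of an in-memory SQLite database are all assumptions chosen for illustration; a real project would likely use pytest and its own schema.

```python
import math
import sqlite3


def circle_area(radius: float) -> float:
    """Return the area of a circle; reject negative radii."""
    if radius < 0:
        raise ValueError("radius must be non-negative")
    return math.pi * radius ** 2


def save_circle(conn: sqlite3.Connection, radius: float) -> int:
    """Store a circle's radius and computed area; return the new row id."""
    cur = conn.execute(
        "INSERT INTO circles (radius, area) VALUES (?, ?)",
        (radius, circle_area(radius)),
    )
    conn.commit()
    return cur.lastrowid


def test_circle_area_unit():
    # Unit test: exercises the function alone, no database involved.
    assert math.isclose(circle_area(2.0), 4 * math.pi)
    assert circle_area(0) == 0


def test_save_circle_integration():
    # Integration test: the function and a real (in-memory) database together.
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE circles (id INTEGER PRIMARY KEY, radius REAL, area REAL)"
    )
    row_id = save_circle(conn, 1.0)
    radius, area = conn.execute(
        "SELECT radius, area FROM circles WHERE id = ?", (row_id,)
    ).fetchone()
    assert radius == 1.0
    assert math.isclose(area, math.pi)
```

The unit test fails only if the calculation itself is wrong, while the integration test can also fail because of the schema, the SQL, or the way the two pieces are wired together.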

Q 25. What are the various stages involved in the software development life cycle (SDLC)?

The Software Development Life Cycle (SDLC) outlines the various stages involved in creating and maintaining software. While there are many models, a common one includes:

- Planning: Defining project goals, requirements, and feasibility.

- Requirements Gathering and Analysis: Understanding stakeholder needs and documenting software specifications.

- Design: Creating blueprints for the software architecture, database design, and user interface.

- Implementation (Coding): Writing and testing the software code.

- Testing: Verifying the software's functionality and identifying bugs (as described in previous answers).

- Deployment: Releasing the software to end users.

- Maintenance: Addressing bugs, adding new features, and providing ongoing support.

The specific stages and their emphasis may vary based on the chosen SDLC model (e.g., Waterfall, Agile, Spiral). Agile methodologies, for example, emphasize iterative development and frequent feedback loops.

Q 26. How do you handle conflicts during code merging?

Code merging conflicts are inevitable when multiple developers work on the same codebase simultaneously. Effective strategies for handling these conflicts include:

- Understanding the Conflict: Carefully examine the conflicting changes highlighted by the version control system (like Git). Understand why the changes are conflicting.

- Communication: If possible, communicate with the other developer(s) involved to discuss the changes and find a resolution.

- Manual Resolution: In many cases, you'll need to manually edit the conflicting code, choosing the best approach based on the changes. This often involves integrating both changes in a way that makes sense, sometimes requiring a rewrite of the section.

- Version Control System Features: Utilize the features of your version control system (e.g., Git's merge tools) to assist in resolving conflicts. These tools often provide a visual interface to compare and combine changes.

- Testing After Merge: After resolving the conflict, always thoroughly test the affected code to ensure the merge did not introduce new bugs or break existing functionality.

Adopting clear coding standards, committing and merging frequently, keeping branches short-lived, and following a robust branching strategy can help minimize the frequency and complexity of merge conflicts.
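One easy mistake after manual resolution is to leave a conflict marker behind. As a small illustration, the sketch below scans text for the markers Git writes into a conflicted file (`<<<<<<<`, `=======`, `>>>>>>>`). The helper name `find_conflict_markers` and the sample content are hypothetical, and the check is deliberately simple; it is not a replacement for Git's own tooling.

```python
def find_conflict_markers(text: str) -> list[int]:
    """Return 1-based line numbers that look like Git conflict markers."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        if (
            line.startswith("<<<<<<< ")
            or line == "======="
            or line.startswith(">>>>>>> ")
        ):
            hits.append(lineno)
    return hits


# Hypothetical file content left behind by an unresolved merge.
sample = """\
def greet():
<<<<<<< HEAD
    return "Hello"
=======
    return "Hi"
>>>>>>> feature/greeting
"""

print(find_conflict_markers(sample))  # [2, 4, 6]
```

A check like this could run before committing, alongside the tests mentioned above, to catch a file that was saved with markers still in place.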

Q 27. What are some common debugging techniques?

Debugging is a crucial skill for any developer. Techniques I frequently use include:

- Print Statements/Logging: A simple but effective way to track variable values and program flow. Strategic placement of print statements or log messages can pinpoint where problems occur.

- Debuggers: Debuggers (like GDB or integrated debuggers in IDEs) allow stepping through code line by line, inspecting variables, setting breakpoints, and analyzing program execution.

- Code Inspection: Carefully reviewing the code to identify potential errors, inconsistencies, or logic flaws. Often, the simplest errors are found through a thorough review.

- Rubber Duck Debugging: Explaining the code and its behavior to an inanimate object (like a rubber duck) can help identify errors through the process of verbalizing your thoughts and logic.

- Unit Tests: Well-written unit tests can help pinpoint exactly which parts of the code are failing.

- Static Analysis Tools: These tools scan code without running it and flag potential problems such as unused variables, possible null dereferences, resource leaks, or style inconsistencies.

The best debugging approach often involves a combination of these techniques, depending on the complexity and nature of the bug.
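As a brief illustration of the logging technique, the sketch below instruments a small function with Python's standard `logging` module. The function `average` and the log format are assumptions made for the example.

```python
import logging

# DEBUG level shows every message; raise it to WARNING to silence the details.
logging.basicConfig(
    level=logging.DEBUG,
    format="%(levelname)s %(funcName)s: %(message)s",
)
log = logging.getLogger(__name__)


def average(values):
    """Return the mean of values, or 0.0 for an empty list."""
    log.debug("received %d values: %r", len(values), values)
    if not values:
        log.warning("empty input, returning 0.0")
        return 0.0
    total = sum(values)
    log.debug("total=%s", total)
    return total / len(values)


result = average([2, 4, 6])
```

Unlike print statements, log calls can stay in the code and be switched on or off by level. When logging is not enough, placing the built-in `breakpoint()` call at the suspicious line drops into the debugger at that point.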

Q 28. Describe your experience with a specific coding project and challenges you faced.

In a recent project, I developed a RESTful API for a large-scale e-commerce platform. One significant challenge was handling concurrent requests efficiently and preventing data inconsistencies. The platform anticipated a high volume of concurrent users, requiring optimized database queries and a robust caching mechanism.

Initially, we experienced performance bottlenecks due to inefficient database interactions. To overcome this, I implemented connection pooling, optimized SQL queries, and introduced Redis caching to store frequently accessed data. This significantly improved response times and reduced database load. We also implemented optimistic locking to handle concurrent updates and ensure data consistency. Careful monitoring and performance testing were crucial throughout the process to fine-tune the API's performance and stability.
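The optimistic locking idea can be sketched with a version column: an update succeeds only if the row still carries the version the client originally read. The `products` table, its columns, and the `update_stock` helper below are hypothetical, and SQLite stands in for the production database; this shows the general technique, not the project's actual code.

```python
import sqlite3


def update_stock(conn, product_id, new_stock, expected_version):
    """Apply the update only if the row still has the version we read.

    Returns True on success, False if another writer changed the row first.
    """
    cur = conn.execute(
        "UPDATE products SET stock = ?, version = version + 1 "
        "WHERE id = ? AND version = ?",
        (new_stock, product_id, expected_version),
    )
    conn.commit()
    return cur.rowcount == 1


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE products (id INTEGER PRIMARY KEY, stock INTEGER, version INTEGER)"
)
conn.execute("INSERT INTO products VALUES (1, 10, 0)")

# Two clients both read the row at version 0, then try to write.
first_ok = update_stock(conn, 1, 9, expected_version=0)   # wins, version becomes 1
second_ok = update_stock(conn, 1, 8, expected_version=0)  # stale version, rejected
```

The rejected writer would typically re-read the row and retry or report the conflict to the user. No lock is held between the read and the write, which is why this approach suits workloads where conflicting updates are rare.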

The project highlighted the importance of performance optimization and data consistency in high-traffic applications. It reinforced the value of thorough testing and careful planning to manage the complexity of large-scale systems.

Key Topics to Learn for Codes Interview

- Data Structures: Understanding arrays, linked lists, trees, graphs, and hash tables is fundamental. Focus on their properties, operations, and when to choose one over another.

- Algorithms: Master common algorithms like searching (binary search), sorting (merge sort, quicksort), and graph traversal (depth-first search, breadth-first search). Practice implementing them and analyzing their time and space complexity.

- Object-Oriented Programming (OOP): Understand core OOP principles such as encapsulation, inheritance, and polymorphism. Be prepared to discuss design patterns and their applications.

- Software Design Principles: Familiarize yourself with the SOLID principles and common design patterns to showcase your ability to build robust and maintainable software.

- Problem-Solving Techniques: Practice breaking down complex problems into smaller, manageable parts. Develop your skills in debugging and testing your code.

- System Design (Depending on the Role): For more senior roles, understanding system design principles, scalability, and database design is crucial. Consider studying common architectural patterns.

- Specific Coding Languages (as applicable): While the emphasis is often on problem-solving, demonstrate proficiency in the languages specified in the job description.

Next Steps

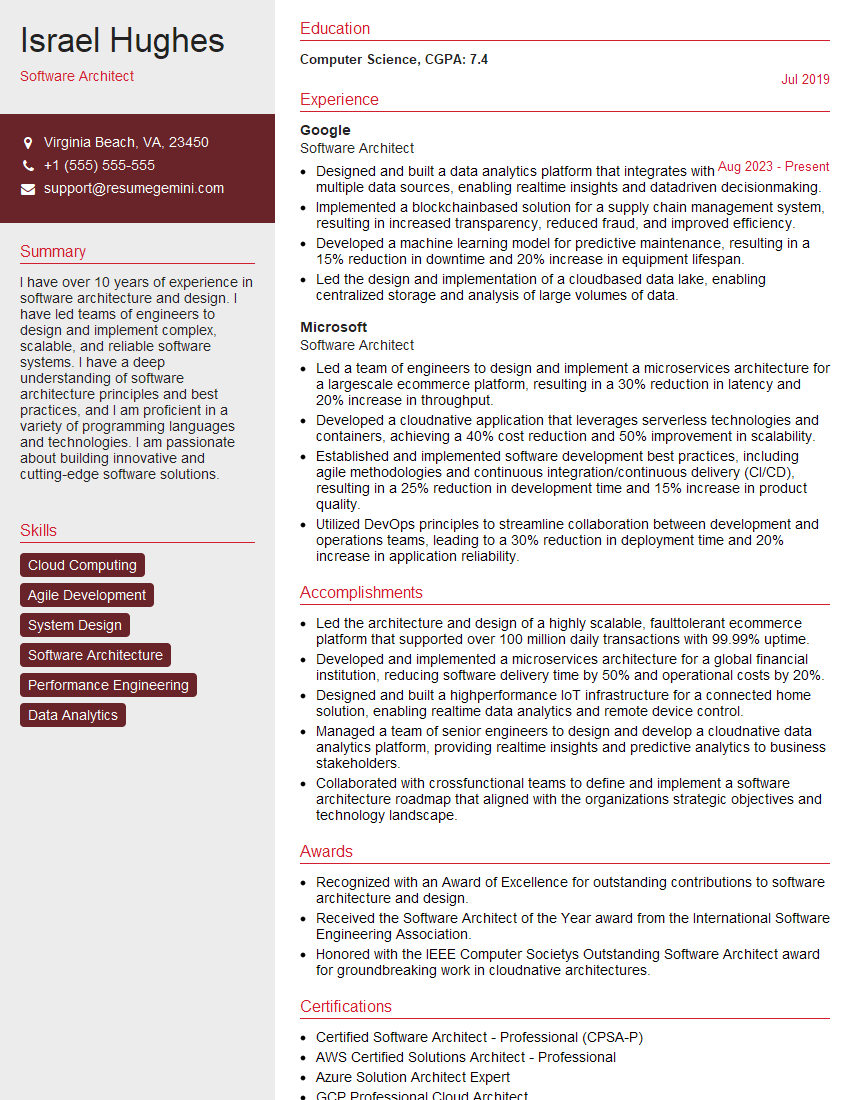

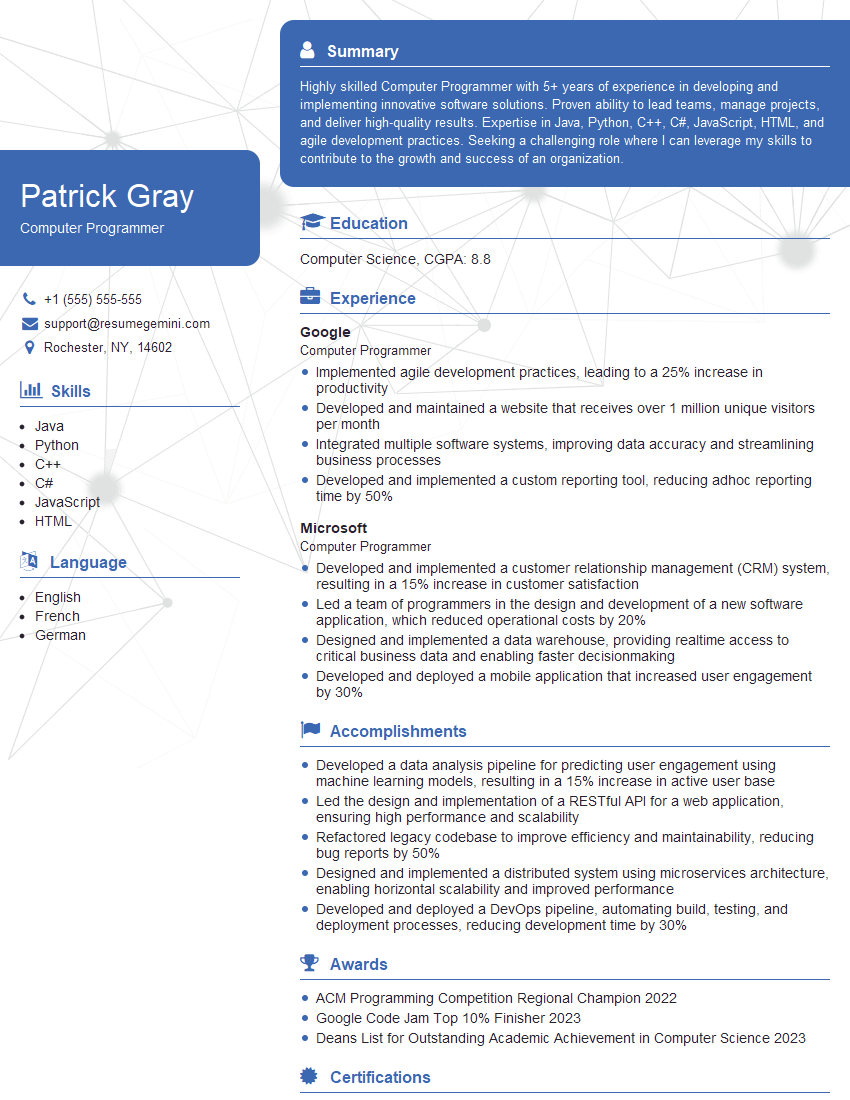

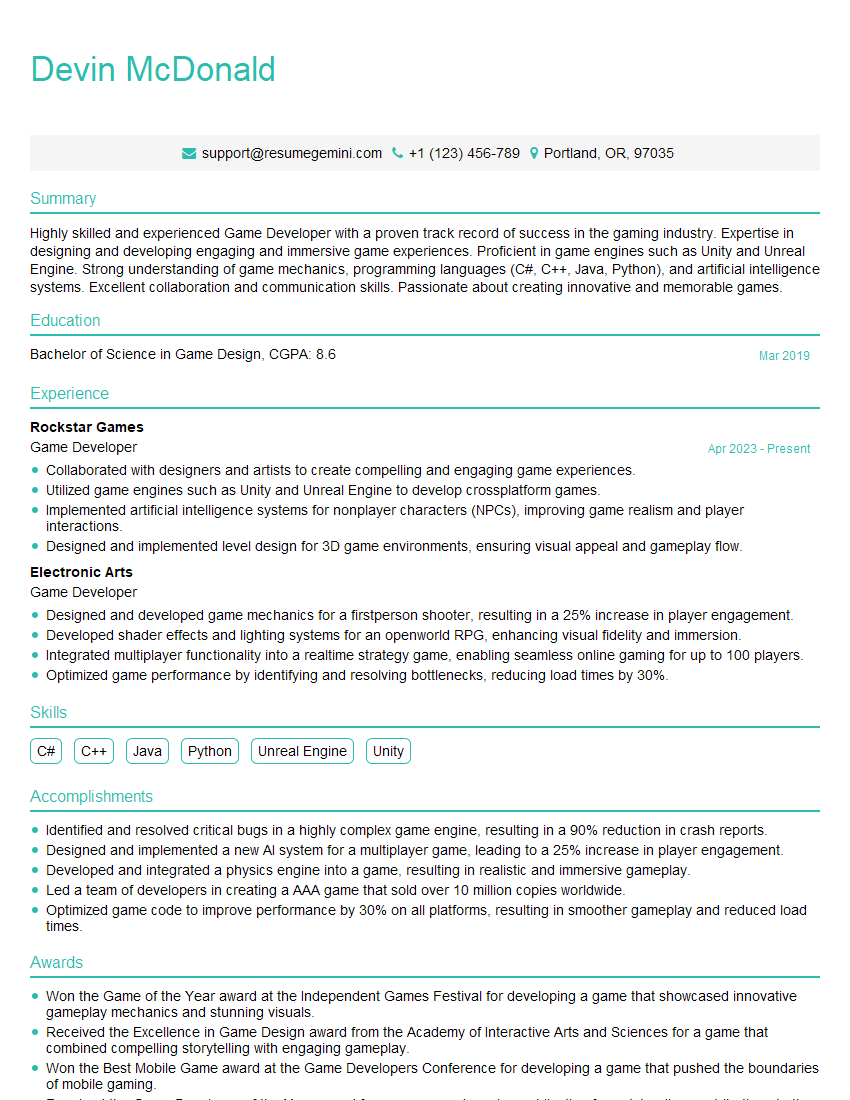

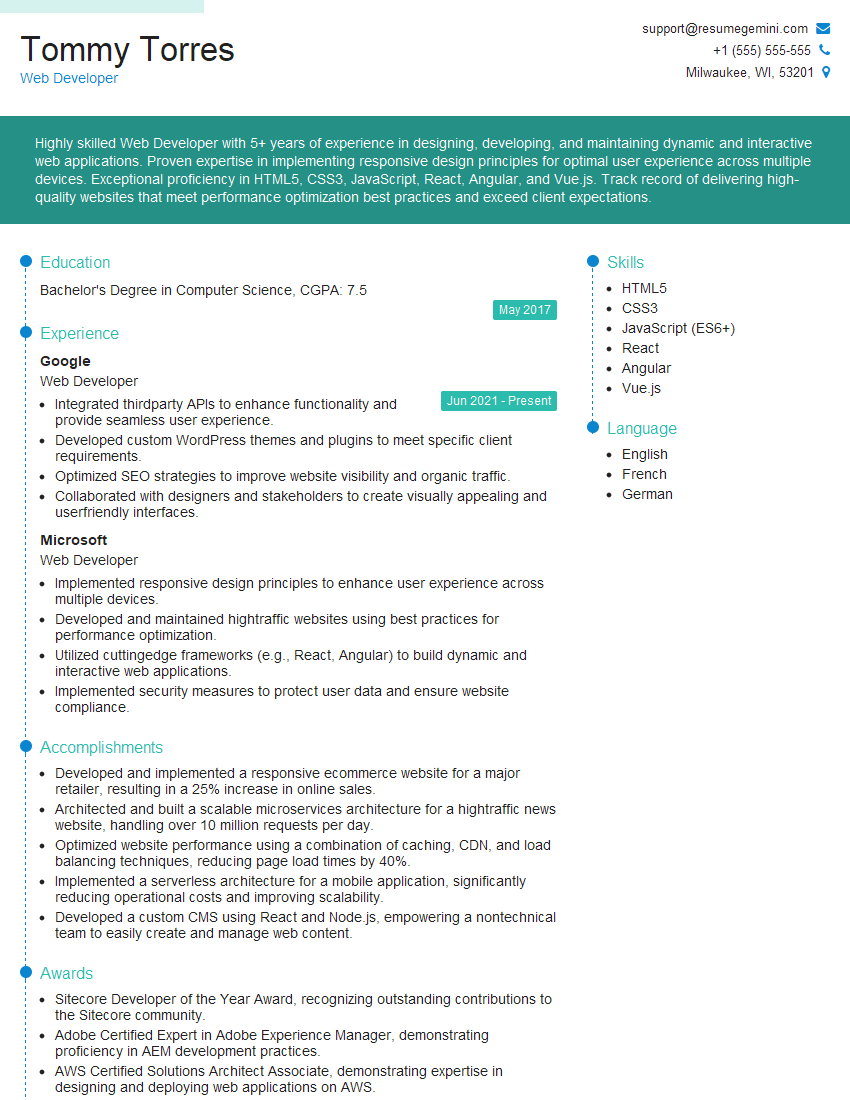

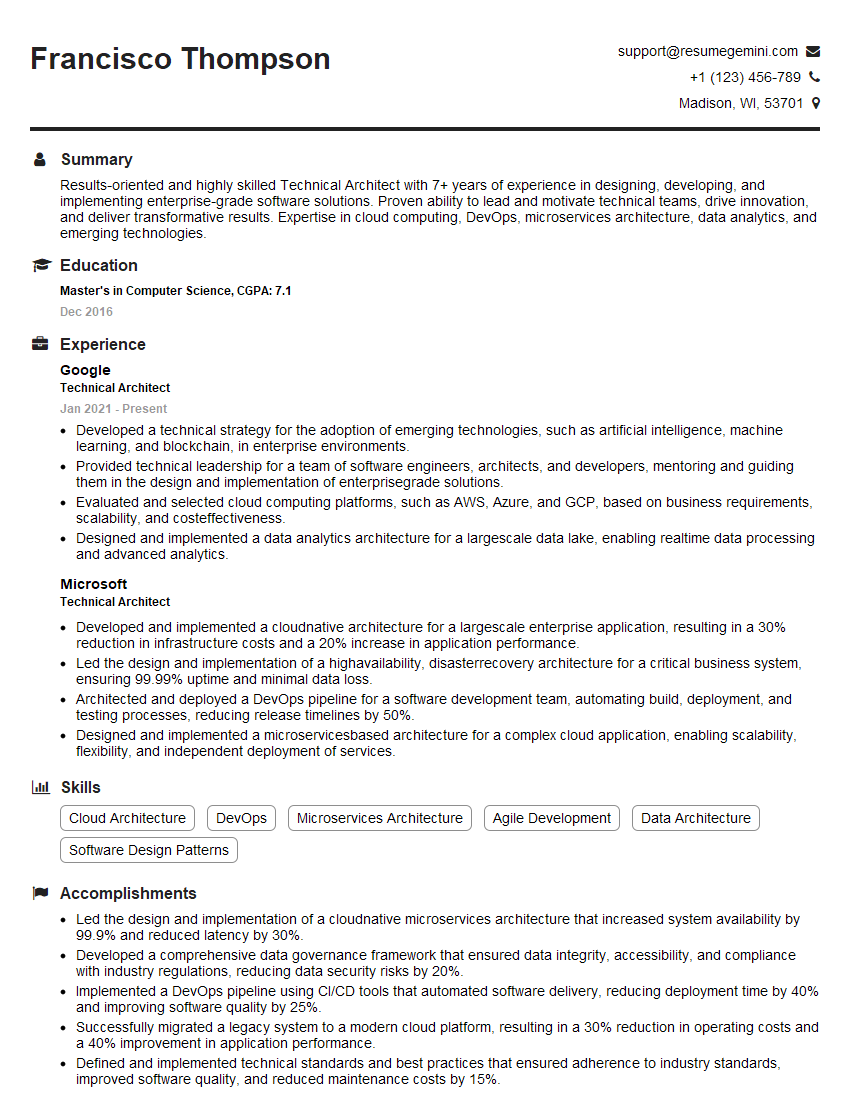

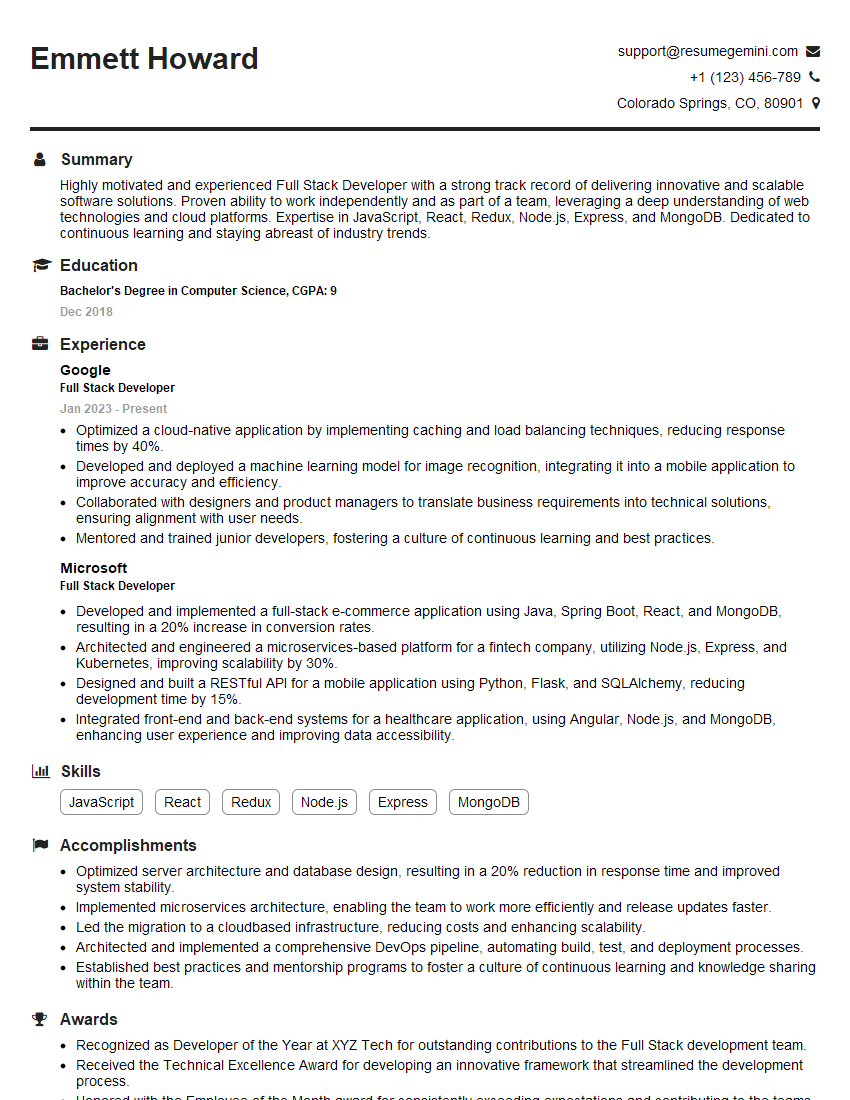

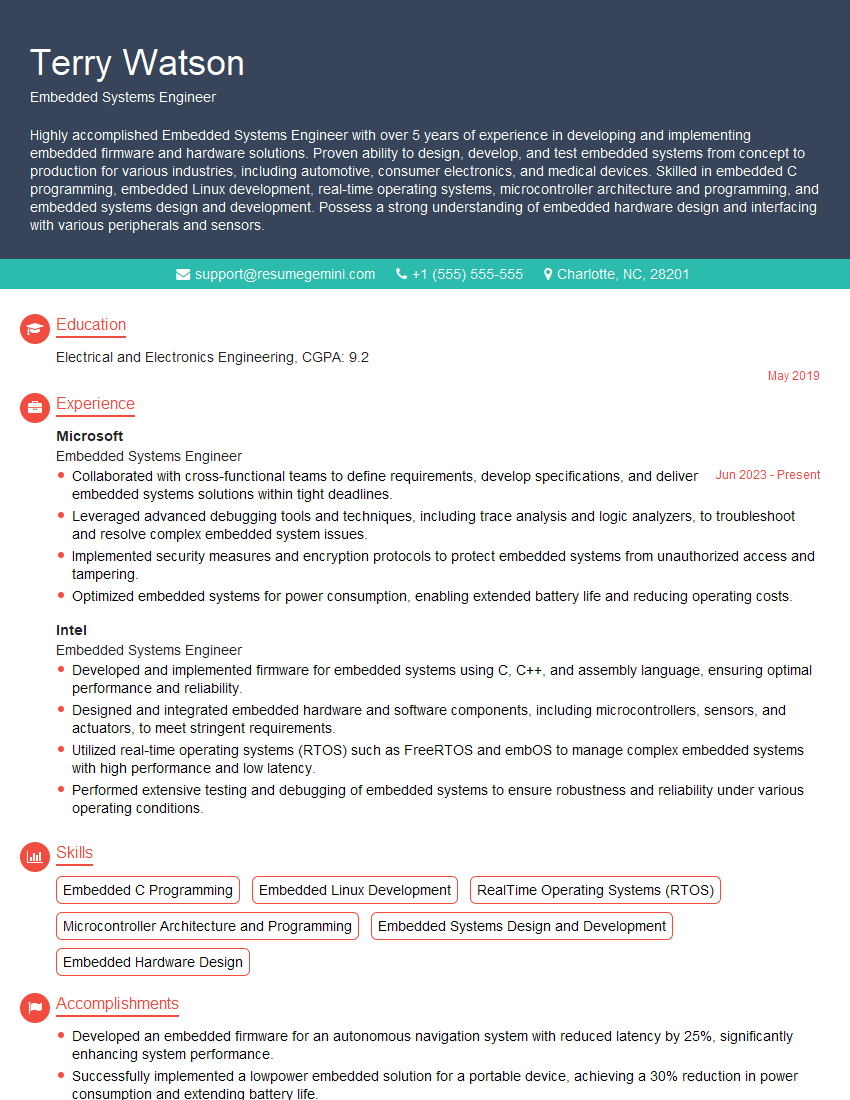

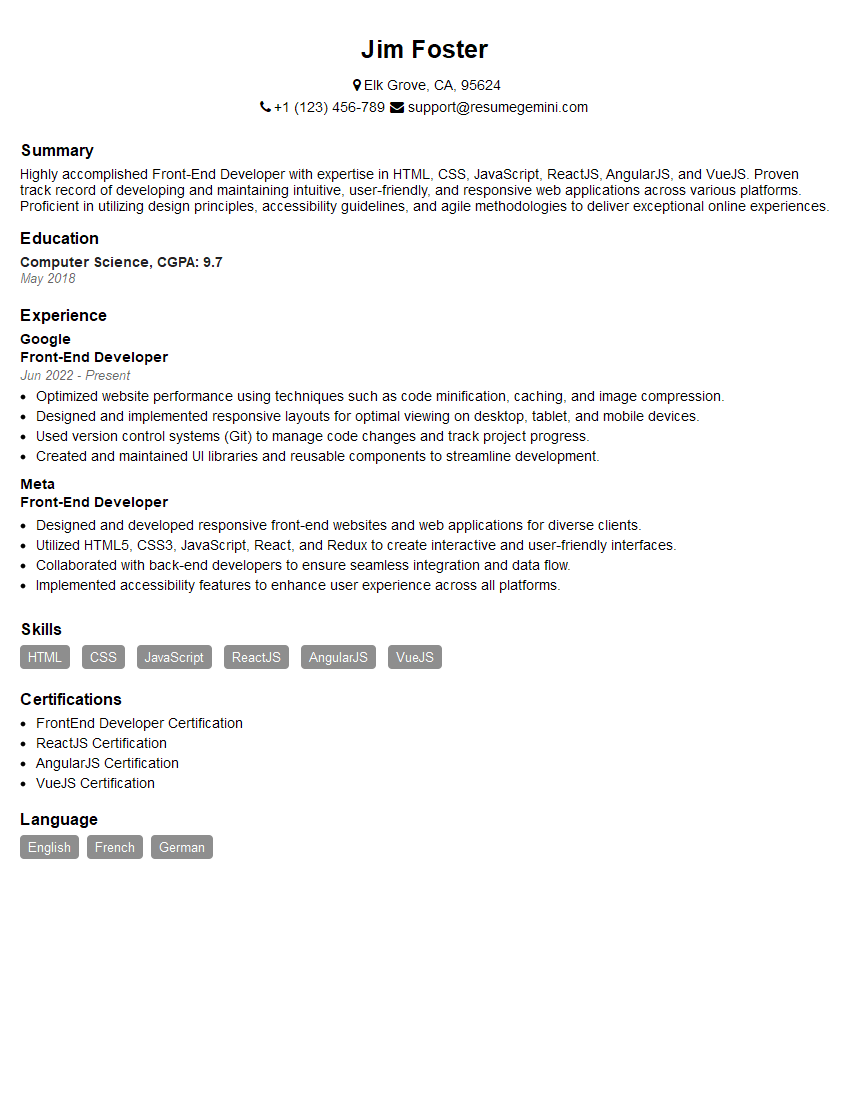

Mastering Codes is essential for a successful and fulfilling career in technology. A strong understanding of these concepts opens doors to exciting opportunities and allows you to contribute meaningfully to innovative projects. To maximize your job prospects, crafting a compelling and ATS-friendly resume is critical. ResumeGemini is a trusted resource to help you build a professional resume that highlights your skills and experience effectively. Examples of resumes tailored to Codes positions are available to guide you.
