The thought of an interview can be nerve-wracking, but the right preparation can make all the difference. Explore this comprehensive guide to Continuous Integration (CI)/Continuous Delivery (CD) interview questions and gain the confidence you need to showcase your abilities and secure the role.

Questions Asked in Continuous Integration (CI)/Continuous Delivery (CD) Interview

Q 1. Explain the difference between Continuous Integration and Continuous Delivery.

Continuous Integration (CI) and Continuous Delivery (CD) are closely related but distinct practices aimed at automating the software release process. Think of CI as the engine and CD as the highway.

Continuous Integration (CI) focuses on frequently integrating code changes into a shared repository. Each integration is then verified by an automated build and automated tests. This early and frequent integration helps identify and resolve integration issues quickly, preventing them from snowballing into larger problems later in the development cycle. Imagine a team of builders working on different parts of a house. CI ensures they frequently check their work against each other, preventing incompatibilities when they try to put everything together.

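Continuous Delivery (CD) takes CI a step further by automating the release process. Once code passes the CI checks, it is automatically packaged and deployed to a staging environment, so every build is kept in a releasable state. The final push to production is typically triggered by a manual approval; when that last step is automated as well, the practice is usually called Continuous Deployment. This makes releasing new features and bug fixes faster and more reliable. This is like the highway that smoothly transports the finished house to its final destination.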

Key Difference: CI focuses on integrating code and verifying its functionality; CD automates the deployment of that verified code to various environments.

Q 2. Describe your experience with different CI/CD tools (e.g., Jenkins, GitLab CI, Azure DevOps, CircleCI).

I have extensive experience with various CI/CD tools, including Jenkins, GitLab CI, Azure DevOps, and CircleCI. My choice of tool depends heavily on the project’s needs and the existing infrastructure. Each has its strengths and weaknesses.

- Jenkins: A highly customizable and versatile open-source tool. I’ve used it for complex pipelines involving multiple stages, various build tools, and deployments across different platforms. Its plugin ecosystem allows for great flexibility, but can also lead to complexity in managing the pipeline.

- GitLab CI: Tightly integrated with GitLab, making it streamlined and easy to use for projects hosted on GitLab. The configuration is often simpler than Jenkins, making it ideal for smaller teams or projects that don’t require extensive customization. I’ve utilized it successfully for projects needing fast setup and seamless integration with the Git workflow.

- Azure DevOps: A comprehensive platform providing CI/CD alongside other development tools. Its strength lies in its robust integration with the Azure cloud ecosystem. I’ve used it for projects specifically deployed on Azure, benefiting from its native integration with services like Azure Kubernetes Service (AKS).

- CircleCI: A cloud-based CI/CD platform known for its ease of use and scalability. I’ve found it particularly useful for projects requiring fast build times and easy scaling based on demand. It’s often a good choice for projects needing reliable, managed infrastructure.

In my experience, the best tool isn’t universally applicable. The optimal choice hinges on factors such as project size, team expertise, infrastructure, and budget.

Q 3. What are some common challenges faced when implementing CI/CD?

Implementing CI/CD presents several challenges, often stemming from organizational, technical, and cultural factors. Here are some common ones:

- Legacy Systems: Integrating CI/CD into systems built without automation in mind can be complex and time-consuming. Refactoring and modernization are often required.

- Test Coverage: Insufficient automated tests hinder the reliability of the CI/CD pipeline. Thorough testing is critical for catching bugs early.

- Dependency Management: Managing and resolving dependencies between different components and services is crucial for smooth builds and deployments. Poorly managed dependencies often lead to build failures.

- Infrastructure as Code (IaC): Deploying and managing infrastructure manually is error-prone. IaC solutions such as Terraform or Ansible are essential for reliable and reproducible deployments.

- Team Buy-in and Collaboration: Successfully implementing CI/CD requires cross-functional collaboration and buy-in from developers, testers, operations, and other stakeholders. Without a shared understanding and commitment, the process can fail.

- Monitoring and Logging: Comprehensive monitoring and logging are crucial for identifying and resolving issues in the CI/CD pipeline. Without proper monitoring, you’re essentially flying blind.

Addressing these challenges requires a structured approach, careful planning, and a phased rollout, often starting with smaller, less critical parts of the system.

Q 4. How do you handle failed builds in a CI/CD pipeline?

Handling failed builds is critical for maintaining a healthy CI/CD pipeline. My approach involves a multi-pronged strategy:

- Automated Alerts: Immediate notifications are sent to the relevant team members via email, Slack, or other communication channels. This ensures quick identification and response to failures.

- Detailed Logging: The pipeline should provide comprehensive logs detailing the cause of the failure. This allows for efficient debugging and problem resolution.

- Root Cause Analysis: Investigating the root cause is crucial. This often involves examining the logs, code changes, and the build environment.

- Rollback Strategy: Having a well-defined rollback mechanism is essential in case of deployments to production. This minimizes downtime and reduces the impact of errors.

- Automated Retries: For transient errors (e.g., network issues), automated retries with appropriate backoff strategies can be implemented.

- Continuous Improvement: Analyzing trends in failed builds helps identify recurring issues and implement preventative measures. This could involve improving test coverage, refactoring code, or enhancing the pipeline’s infrastructure.

The goal is to minimize the impact of failed builds, accelerate resolution, and continually improve the stability and robustness of the pipeline.
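
To make the automated-retry point concrete, here is a minimal sketch of retrying a flaky step with exponential backoff. The names `TransientError` and `retry_with_backoff` are hypothetical and not part of any CI tool; most CI systems offer their own retry settings, so treat this as an illustration of the idea rather than a recommended implementation.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (e.g. a network timeout)."""


def retry_with_backoff(
    step: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `step`, retrying on TransientError with exponentially growing delays."""
    for attempt in range(1, max_attempts + 1):
        try:
            return step()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: let the build fail and alert the team
            # Delays grow as base_delay * 1, 2, 4, ...
            sleep(base_delay * 2 ** (attempt - 1))
    raise ValueError("max_attempts must be at least 1")
```

Only errors explicitly marked as transient are retried; anything else fails the build immediately, so real defects are not hidden behind retries.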

Q 5. Explain your experience with version control systems (e.g., Git).

I have extensive experience using Git as my primary version control system. I’m proficient in branching strategies (like Gitflow), merging, rebasing, resolving conflicts, and using Git for collaborative development. My experience goes beyond basic commands; I understand the importance of well-structured commits, clear commit messages, and the use of pull requests for code review.

I frequently utilize Git’s features for managing code changes, tracking history, and facilitating collaboration among developers. I’ve also worked with Git hooks to automate tasks such as pre-commit checks for code style and running tests before code is committed. In my experience, strong Git skills are vital for effective collaboration and maintaining a clean and well-organized codebase.

Q 6. How do you ensure code quality within a CI/CD pipeline?

Ensuring code quality is paramount in a CI/CD pipeline. My approach involves several key steps:

- Static Code Analysis: Integrating static code analysis tools like SonarQube or ESLint into the pipeline helps detect potential bugs, vulnerabilities, and style inconsistencies early in the development process. This prevents these issues from progressing to later stages.

- Automated Testing: A comprehensive suite of automated tests – unit, integration, and system tests – is crucial for verifying the functionality and reliability of the code. High test coverage is essential.

- Code Reviews: Pull requests and code reviews provide another layer of quality control by allowing multiple developers to inspect the code for potential issues. This promotes knowledge sharing and helps maintain code quality standards.

- Code Style Enforcement: Consistent code style enhances readability and maintainability. Tools like linters and formatters enforce coding standards and promote uniformity across the codebase.

- Metrics and Reporting: Tracking metrics like code coverage, test success rates, and code quality scores helps identify areas for improvement and monitor progress over time. Regular reporting provides valuable insights into the overall health of the codebase.

This multifaceted approach ensures consistent code quality throughout the development lifecycle, leading to more robust and reliable software.

Q 7. Describe your experience with automated testing (unit, integration, system).

Automated testing is a cornerstone of my CI/CD approach. I have extensive experience designing, implementing, and integrating various levels of automated tests:

- Unit Tests: These focus on testing individual units of code in isolation. I use frameworks like JUnit (Java), pytest (Python), or similar, ensuring high unit test coverage to catch errors early.

- Integration Tests: These verify the interactions between different components or modules. I employ techniques like mocking and stubbing to isolate components while ensuring correct integration behavior.

- System Tests (End-to-End Tests): These test the entire system as a whole, validating its functionality from start to finish. This requires a well-defined test environment and often involves simulated user interactions.

I advocate for a test pyramid approach, prioritizing unit tests, then integration tests, and finally a smaller number of system tests. The objective is to achieve a balance between comprehensive testing and efficient test execution. Automated testing significantly reduces testing time, improves software quality, and makes the CI/CD pipeline more reliable.
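
As a small illustration of the lower layers of the pyramid, the sketch below tests a pure function directly and then tests a function that depends on an external collaborator by replacing that collaborator with a stub. The functions `apply_discount` and `checkout` and the `payment_gateway` object are invented for this example; it uses only Python's standard `unittest` and `unittest.mock` modules.

```python
import unittest
from unittest.mock import Mock


def apply_discount(price: float, percent: float) -> float:
    """Pure function: a natural target for a unit test."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


def checkout(price: float, percent: float, payment_gateway) -> str:
    """Combines the discount logic with an external collaborator."""
    total = apply_discount(price, percent)
    return payment_gateway.charge(total)


class DiscountUnitTest(unittest.TestCase):
    def test_discount_is_applied(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(200.0, 150)


class CheckoutWithStubTest(unittest.TestCase):
    def test_gateway_is_charged_the_discounted_total(self):
        gateway = Mock()  # stands in for the real payment service
        gateway.charge.return_value = "receipt-1"
        self.assertEqual(checkout(200.0, 25, gateway), "receipt-1")
        gateway.charge.assert_called_once_with(150.0)
```

In a pipeline these would typically run on every commit (for example with `python -m unittest`), while slower end-to-end tests run later and less often.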

Q 8. Explain the concept of Infrastructure as Code (IaC).

Infrastructure as Code (IaC) is the management and provisioning of infrastructure through machine-readable definition files, rather than through physical hardware configuration or interactive configuration tools. Think of it like a recipe for your infrastructure. Instead of manually setting up servers, networks, and databases, you write code that defines these components and their relationships. This code can then be version-controlled, tested, and deployed automatically, just like application code.

Benefits of IaC:

- Reproducibility: Easily recreate environments consistently across different clouds or on-premises.

- Automation: Automate infrastructure provisioning and management, saving time and reducing errors.

- Version Control: Track changes to your infrastructure, enabling rollback and auditing.

- Scalability: Easily scale infrastructure up or down based on demand.

Popular IaC tools include: Terraform, Ansible, CloudFormation, Pulumi.

Example: Imagine you need to set up a new web server. With IaC, you’d write a Terraform script defining the server’s specifications (instance type, operating system, security groups, etc.). Running this script automatically creates the server in your chosen cloud provider, eliminating manual configuration steps.
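
The declarative idea behind IaC can be shown with a toy example: you describe the desired state, and the tool works out which changes are needed to get there. The sketch below is a simplified illustration in Python, not how Terraform or any other tool is actually implemented; the `plan` function and the resource names are hypothetical.

```python
def plan(desired: dict[str, dict], actual: dict[str, dict]) -> dict[str, list[str]]:
    """Compare desired and actual resources and list the changes needed."""
    return {
        # In the definition files but not yet provisioned.
        "create": sorted(name for name in desired if name not in actual),
        # Provisioned, but with settings that differ from the definition.
        "update": sorted(
            name for name in desired
            if name in actual and desired[name] != actual[name]
        ),
        # Provisioned, but no longer present in the definition files.
        "delete": sorted(name for name in actual if name not in desired),
    }


desired_state = {
    "web-server": {"instance_type": "small", "os": "linux"},
    "database": {"engine": "postgres", "storage_gb": 50},
}
actual_state = {
    "web-server": {"instance_type": "micro", "os": "linux"},
    "old-cache": {"engine": "redis"},
}
changes = plan(desired_state, actual_state)
```

Because the desired state lives in files, the same comparison can be reviewed in a pull request before anything is changed.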

Q 9. What are some best practices for designing a CI/CD pipeline?

Designing a robust CI/CD pipeline requires careful consideration of several best practices. Think of it as building a well-oiled machine where each part works together seamlessly.

- Modular Design: Break the pipeline into smaller, independent stages (build, test, deploy). This makes debugging and maintenance easier.

- Version Control: Store all pipeline code (scripts, configuration files) in a version control system (e.g., Git) for traceability and reproducibility.

- Automated Testing: Implement comprehensive automated testing at each stage (unit, integration, system, end-to-end) to ensure code quality.

- Continuous Monitoring: Monitor pipeline performance and health using dashboards and alerts. This allows for proactive identification and resolution of issues.

- Environment Parity: Ensure that development, testing, and production environments are as similar as possible to avoid unexpected issues during deployment.

- Infrastructure as Code (IaC): Manage infrastructure using IaC tools, enabling automated provisioning and consistent environments.

- Rollback Strategy: Implement a rollback plan to quickly revert to a previous stable version in case of deployment failures.

- Security: Integrate security scans and checks at various points in the pipeline.

Example: A well-structured pipeline might involve building the application, running unit tests, deploying to a staging environment for integration testing, and then finally deploying to production using a blue/green deployment strategy.
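
The modular, staged structure can be sketched as a small runner that executes stages in order and stops at the first failure. Real pipelines are defined in the CI tool's own configuration format; the `Stage` type and `run_pipeline` function here are hypothetical and only illustrate the fail-fast behaviour.

```python
from typing import Callable, NamedTuple


class Stage(NamedTuple):
    name: str
    run: Callable[[], bool]  # returns True on success


def run_pipeline(stages: list[Stage]) -> list[tuple[str, str]]:
    """Run stages in order and stop at the first failure (fail fast)."""
    results = []
    failed = False
    for stage in stages:
        if failed:
            # Later stages never run against a broken build.
            results.append((stage.name, "skipped"))
            continue
        ok = stage.run()
        results.append((stage.name, "passed" if ok else "failed"))
        failed = not ok
    return results
```

Keeping each stage as an independent unit is what makes it possible to rerun, debug, or replace one stage without touching the others.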

Q 10. How do you manage dependencies in your CI/CD pipeline?

Managing dependencies effectively is crucial for a reliable CI/CD pipeline. Ignoring dependencies can lead to build failures and inconsistencies across environments. I usually employ a multi-pronged approach:

- Dependency Management Tools: Leverage tools like npm, Maven, Gradle, or pip to manage project dependencies and their versions. These tools help ensure that the correct versions are consistently used across different environments.

- Dependency Locking: Create a lock file (e.g., package-lock.json for npm) that explicitly specifies the exact versions of all dependencies. This prevents unexpected updates that could break your build.

- Dependency Isolation: Utilize containers (Docker) or virtual environments to isolate project dependencies. This prevents conflicts between different projects or versions of libraries.

- Dependency Scanning: Regularly scan dependencies for known vulnerabilities using tools like Snyk or OWASP Dependency-Check. This helps identify and remediate potential security risks.

- Reproducible Builds: Implement strategies to ensure that builds are reproducible. This means that the same build process should always produce the same output, regardless of the environment.

Example: A requirements.txt file in a Python project lists the project’s dependencies. When each entry is pinned to an exact version (for example, requests==2.31.0), the same environment is recreated every time the project is built, preventing most dependency-related errors.
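
One way to enforce version pinning in a pipeline is a small check that fails the build when a requirement is not locked to an exact version. The sketch below is a simplified, hypothetical checker: it handles plain `name==version` lines, extras, comments, and environment markers, but not every form that pip accepts (such as URLs or hashes).

```python
import re

# Matches "name==version" with optional extras, e.g. "requests[socks]==2.31.0".
PINNED = re.compile(r"^[A-Za-z0-9._-]+(\[[A-Za-z0-9._,-]+\])?==[A-Za-z0-9.+!_-]+$")


def unpinned_requirements(requirements_text: str) -> list[str]:
    """Return requirement lines that do not pin an exact version with '=='."""
    offenders = []
    for raw_line in requirements_text.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line or line.startswith("-"):      # skip blanks and pip options
            continue
        line = line.split(";", 1)[0].strip()      # ignore environment markers
        if not PINNED.match(line):
            offenders.append(line)
    return offenders
```

A pipeline step could call this on the file's contents and exit with a non-zero status if the returned list is not empty.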

Q 11. Explain your experience with containerization technologies (e.g., Docker, Kubernetes).

I have extensive experience with Docker and Kubernetes. Docker provides a way to package applications and their dependencies into containers, ensuring consistent execution across different environments. Kubernetes is an orchestration platform that automates the deployment, scaling, and management of containerized applications.

Docker: I’ve used Docker to create images for various applications, streamlining the build process and making deployments more reliable. Docker’s lightweight nature and ability to encapsulate dependencies make it ideal for CI/CD pipelines.

Kubernetes: I have experience deploying applications to Kubernetes clusters, leveraging its features such as rolling updates, auto-scaling, and health checks to ensure high availability and efficient resource utilization. This includes using Kubernetes YAML manifests to define deployments and services.

Example: In a recent project, we used Docker to build container images of our application and its dependencies. These images were then pushed to a container registry. Kubernetes was used to manage the deployment of these images to our production environment, using rolling updates to minimize downtime.

Q 12. How do you monitor and troubleshoot a CI/CD pipeline?

Monitoring and troubleshooting a CI/CD pipeline is essential for maintaining its health and efficiency. My approach involves a combination of tools and techniques:

- Logging and Monitoring Tools: Integrate a logging stack (e.g., the ELK stack of Elasticsearch, Logstash, and Kibana, or the EFK variant that uses Fluentd in place of Logstash) to collect and analyze logs from various stages of the pipeline. Monitoring tools like Prometheus and Grafana provide real-time insights into pipeline performance.

- Alerting Systems: Set up alerting systems that notify the team of pipeline failures or performance issues. This allows for quick response times and minimizes downtime.

- Centralized Dashboards: Utilize centralized dashboards that visualize pipeline health and provide an overview of recent builds and deployments.

- Automated Testing and Retries: Implement automated tests and retry mechanisms to detect and handle errors automatically.

- Root Cause Analysis: When issues arise, perform thorough root cause analysis to identify the underlying problems and prevent future occurrences.

Example: If a build fails, I’d consult the logs to identify the error. If the error indicates a dependency issue, I’d investigate the dependency management system. If there’s a problem with a specific stage in the pipeline, I’d focus my analysis on that stage’s logs and configuration.

Q 13. Describe your experience with different deployment strategies (e.g., blue/green, canary).

I’ve worked with various deployment strategies, including blue/green and canary deployments. The choice of strategy depends on factors like application complexity, risk tolerance, and downtime requirements.

Blue/Green Deployment: In this approach, you maintain two identical environments: a blue (production) and a green (staging) environment. You deploy the new version to the green environment, thoroughly test it, and then switch traffic from blue to green. If issues arise, you can easily switch back to blue.

Canary Deployment: This involves deploying the new version to a small subset of users (canaries) before rolling it out to the entire user base. This allows for gradual rollout, minimizing the impact of any unforeseen problems.

Other Strategies: I also have experience with rolling deployments (gradually updating instances) and A/B testing (deploying multiple versions and comparing their performance).

Example: In a recent project, we used a blue/green deployment for a critical application. Deploying to the green environment allowed us to thoroughly test the new features without impacting users. Once satisfied, we switched traffic using a load balancer, ensuring a smooth transition.
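
For the canary strategy, the core mechanism is deciding which users see the new version. A minimal sketch, assuming users are identified by a string ID, is to hash the ID into a bucket so that each user is routed consistently. The function names and version labels below are hypothetical; in practice this decision is usually made by a load balancer, service mesh, or feature-flag system.

```python
import hashlib


def routes_to_canary(user_id: str, canary_percent: int) -> bool:
    """Deterministically place a user in the canary group.

    Hashing keeps each user on the same version across requests, so a
    user does not flip between old and new behaviour mid-session.
    """
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # bucket in 0..99
    return bucket < canary_percent


def pick_version(user_id: str, canary_percent: int) -> str:
    """Return the (hypothetical) version label a user should be served."""
    return "v2-canary" if routes_to_canary(user_id, canary_percent) else "v1-stable"
```

Raising `canary_percent` step by step widens the rollout, and because the bucket for a given user never changes, anyone already on the canary stays on it as the percentage grows.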

Q 14. How do you ensure security within a CI/CD pipeline?

Security is paramount in a CI/CD pipeline. A compromised pipeline can have severe consequences. My security approach is layered and encompasses several key aspects:

- Secure Configuration Management: Use a secure configuration management system to store sensitive information (API keys, passwords) and prevent hardcoding secrets in scripts.

- Access Control: Implement robust access control mechanisms to limit who can access different parts of the pipeline.

- Security Scanning: Integrate security scanning tools (e.g., static and dynamic analysis tools) into the pipeline to detect vulnerabilities early on.

- Image Scanning: Scan container images for vulnerabilities before deploying them to production.

- Code Signing and Verification: Sign and verify code to ensure its integrity and prevent tampering.

- Regular Security Audits: Conduct regular security audits and penetration testing to identify and address potential vulnerabilities.

- Least Privilege: Grant pipeline users only the necessary permissions.

- Secrets Management: Use dedicated secrets management tools like HashiCorp Vault or AWS Secrets Manager.

Example: We use a dedicated secrets management tool to store sensitive credentials. These credentials are never hardcoded directly into pipeline scripts, minimizing the risk of exposure.

Q 15. Explain your experience with rollback strategies.

Rollback strategies are crucial for mitigating the risks associated with deploying new code. They ensure that if a deployment fails or introduces unexpected issues, we can quickly revert to a known stable version. Think of it like having an ‘undo’ button for your software deployments. My experience encompasses several techniques, tailored to the specific technology stack and deployment frequency.

- Automated Rollbacks: This involves scripting the rollback process. For example, if we’re using Kubernetes, we might use a rollback command to switch back to a previous deployment; kubectl rollout undo deployment is a common example. This ensures speed and consistency.

- Blue/Green Deployments: With this approach, we maintain two identical environments (Blue and Green). New code is deployed to the Green environment, and after thorough testing, traffic is switched over. If problems arise, we simply switch traffic back to the Blue environment – a near-instantaneous rollback.

- Canary Deployments: This involves gradually releasing the new code to a small subset of users (the ‘canary’). We monitor their experience; if issues are detected, the rollout stops. This limits the impact of a faulty deployment. It’s like testing a new feature on a small group before releasing it to everyone.

- Version Control and Tagging: This underpins all rollback strategies. We religiously tag our code in version control (Git) with meaningful names to identify specific versions for rollback. This allows for easy identification and retrieval of the stable version.

In my previous role, we experienced an unexpected performance degradation after a deployment. Our automated rollback system, which used a combination of Blue/Green and scripting, reverted the system to the previous version within minutes, minimizing downtime and preventing widespread disruption. Choosing the right strategy depends on the application’s criticality, deployment frequency, and infrastructure.
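
The blue/green rollback described above can be modelled in a few lines. This is a toy sketch, not the system from that project: `BlueGreenRouter` is a hypothetical stand-in for a load balancer, and the `deploy` and `healthy` callables are placeholders for real deployment and health-check steps.

```python
from typing import Callable


class BlueGreenRouter:
    """Toy model of a load balancer that points at one of two environments."""

    def __init__(self, live: str = "blue") -> None:
        self.live = live

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def release(
        self,
        deploy: Callable[[str], None],
        healthy: Callable[[str], bool],
    ) -> bool:
        """Deploy to the idle environment and cut over only if it is healthy.

        Returns True if traffic was switched, False if the release was abandoned.
        """
        target = self.idle
        deploy(target)
        if not healthy(target):
            return False  # the live environment was never touched
        self.live = target
        return True

    def rollback(self) -> None:
        """Point traffic back at the previous environment."""
        self.live = self.idle
```

The rollback is fast because the previous version is still running in the other environment; only the routing changes.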

Q 16. How do you handle merging conflicts in a CI/CD environment?

Merge conflicts are a common occurrence in collaborative software development. In a CI/CD environment, effective conflict resolution is paramount to maintain a smooth pipeline. My approach is a multi-pronged strategy focused on prevention and efficient resolution.

- Frequent Integration: Encouraging developers to integrate their code frequently reduces the risk and complexity of large merge conflicts. Short, focused development cycles mean smaller changes and easier merging.

- Clear Branching Strategy: Employing a well-defined branching strategy (like Gitflow) provides a structured approach to code management, minimizing the likelihood of conflicting changes. Features are developed in branches, thoroughly tested, and then merged into the main branch.

- Automated Merging (where possible): Git merges non-overlapping changes automatically, and some platforms can merge or rebase a branch for you when there are no conflicts. Genuine conflicts, where both sides changed the same lines, still require manual intervention, so automation here should be used judiciously.

- Collaborative Conflict Resolution: For complex merge conflicts, I emphasize collaboration between developers. Using clear and effective communication, the developers can discuss the code changes, understand the points of conflict, and find a solution that integrates both sets of changes seamlessly. Tools like Git’s merge functionality make this easier.

- Code Reviews: Regular code reviews before merging help identify potential conflicts early, leading to efficient resolutions before they reach the CI/CD pipeline.

In a recent project, a significant merge conflict emerged because of simultaneous modifications to the same component. By carefully reviewing the changes in a collaborative session, we identified the conflicting changes and resolved them efficiently, ensuring the integrity of the codebase and the uninterrupted flow of our CI/CD pipeline.

Q 17. What metrics do you use to measure the effectiveness of your CI/CD pipeline?

Measuring the effectiveness of a CI/CD pipeline involves tracking several key metrics that provide insights into its performance, reliability, and efficiency. It’s not just about speed; it’s about consistent, reliable delivery.

- Deployment Frequency: How often are we deploying new code to production? Higher frequency generally indicates a more agile and responsive process.

- Lead Time for Changes: How long does it take for a code change to go from commit to production? A shorter lead time suggests a more efficient pipeline.

- Mean Time To Recovery (MTTR): How long does it take to recover from a deployment failure? A low MTTR indicates a robust system with efficient rollback strategies.

- Change Failure Rate: What percentage of deployments result in failures? A low failure rate is a clear indicator of a well-tested and stable pipeline.

- Deployment Success Rate: The percentage of deployments that are successful without any issues. High success rates are crucial for consistent software delivery.

- Code Coverage: While not directly a CI/CD metric, high test coverage helps ensure the quality of deployed code and tends to reduce the change failure rate.

By monitoring these metrics, we can identify bottlenecks and areas for improvement in the pipeline. For example, a high MTTR might indicate a need for better rollback procedures. Regular monitoring and analysis are crucial for continuous improvement.
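
To show how these numbers might be derived, here is a minimal sketch that computes deployment frequency, lead time, change failure rate, and MTTR from a list of deployment records. The `Deployment` record and its fields are assumptions made for this example; in practice the data would come from the CI/CD tool and the incident tracker.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Deployment:
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False
    restored_at: Optional[datetime] = None  # when service recovered, if it failed


def pipeline_metrics(deployments: list[Deployment], period_days: int) -> dict:
    """Summarise delivery metrics for a non-empty list of deployments."""
    lead_times = [d.deployed_at - d.committed_at for d in deployments]
    failures = [d for d in deployments if d.failed]
    recoveries = [d.restored_at - d.deployed_at for d in failures if d.restored_at]
    return {
        "deployments_per_day": len(deployments) / period_days,
        "mean_lead_time": sum(lead_times, timedelta()) / len(lead_times),
        "change_failure_rate": len(failures) / len(deployments),
        # MTTR is undefined when no failed deployment has been recovered yet.
        "mttr": sum(recoveries, timedelta()) / len(recoveries) if recoveries else None,
    }
```

Tracking these values per week or per sprint makes trends visible, which is usually more useful than any single measurement.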

Q 18. Describe your experience with infrastructure provisioning tools (e.g., Terraform, Ansible).

Infrastructure provisioning tools like Terraform and Ansible are essential for managing and automating the infrastructure needed for CI/CD pipelines. They allow for consistent and repeatable environment creation across different stages (development, testing, production).

- Terraform: I’ve extensively used Terraform for managing infrastructure as code (IaC). This allows us to define our infrastructure in declarative configuration files, which are version-controlled. Terraform then automates the creation, modification, and destruction of the infrastructure based on these definitions. It’s excellent for managing cloud-based infrastructure (AWS, Azure, GCP).

- Ansible: Ansible is a powerful tool for configuration management and automation. It excels at deploying and configuring applications on existing infrastructure. We use it to install software, configure servers, and manage dependencies. It’s agentless, making it easy to use and manage.

In a past project, we used Terraform to provision our entire CI/CD infrastructure on AWS, including EC2 instances, VPCs, and S3 buckets. Ansible was then used to configure these instances with the necessary software and dependencies for our pipeline. This approach ensures consistency and repeatability across all environments. It also allows for easy recreation of environments if needed.

Q 19. How do you manage secrets and sensitive information in a CI/CD pipeline?

Managing secrets and sensitive information (API keys, database credentials, passwords) within a CI/CD pipeline requires a robust and secure approach. Compromising these credentials can have severe security consequences.

- Secrets Management Tools: Dedicated secrets management tools like HashiCorp Vault, AWS Secrets Manager, or Azure Key Vault are highly recommended. These tools provide secure storage, access control, and auditing capabilities. They handle the encryption and retrieval of secrets securely within the pipeline.

- Environment Variables: For simpler scenarios, environment variables can be used to store sensitive data. However, this requires extra care to ensure that these variables are not hardcoded into scripts and are properly managed.

- Least Privilege Access: Ensure that each stage of the CI/CD pipeline only has access to the secrets it absolutely requires. Avoid granting unnecessary access to sensitive information.

- Regular Audits and Rotation: Conduct regular audits of secret access and rotation of credentials to mitigate the risk of compromise. Implement mechanisms to automatically rotate credentials at specified intervals.

In my experience, utilizing a dedicated secrets management tool like HashiCorp Vault is the most secure and scalable approach. This ensures that secrets are properly encrypted, their access is tightly controlled, and all accesses are logged for auditing purposes.
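
Whichever store is used, the pipeline script typically receives secrets at runtime rather than containing them. The sketch below assumes the CI system injects secrets as environment variables; `get_secret`, `mask`, and `MissingSecretError` are hypothetical helpers, and the variable names are placeholders.

```python
import os
from typing import Mapping


class MissingSecretError(RuntimeError):
    """Raised when a required secret was not injected into the environment."""


def get_secret(name: str, environ: Mapping[str, str] = os.environ) -> str:
    """Fetch a secret injected by the CI system; fail fast if it is absent."""
    value = environ.get(name)
    if not value:
        # Report the variable name only; never echo a secret value into logs.
        raise MissingSecretError(f"required secret {name!r} is not set")
    return value


def mask(value: str, visible: int = 4) -> str:
    """Return a log-safe form of a secret, showing only the last few characters."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]
```

Failing early with a clear message about the missing variable name is easier to debug than a later authentication error, and it avoids printing the value itself.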

Q 20. Explain your experience with different artifact repositories (e.g., Nexus, Artifactory).

Artifact repositories are central to any CI/CD pipeline. They store and manage build artifacts (like JARs, WARs, Docker images) that are produced during the build process. Selecting the right repository depends on your project’s needs and scale.

- Nexus: Nexus is a popular open-source repository manager that supports various artifact types. It offers features such as proxy caching, artifact management, and security scanning. It’s versatile and easily integrated into many CI/CD pipelines.

- Artifactory: Artifactory is a more comprehensive and enterprise-grade solution, offering advanced features like advanced security controls, fine-grained access management, and high availability. It supports a wider range of artifact formats and integrates well with various tools and technologies.

The choice between Nexus and Artifactory often depends on the scale and complexity of the project. For smaller projects, Nexus might suffice. For larger enterprises with complex requirements, Artifactory’s advanced features might be necessary. In a previous project, we used Artifactory for its robust security and scalability features as we dealt with numerous microservices and multiple teams.

Q 21. How do you handle different environments (e.g., development, testing, production) in your CI/CD pipeline?

Managing different environments (development, testing, staging, production) within a CI/CD pipeline is crucial for ensuring that code is thoroughly tested and validated before reaching production. A well-structured approach ensures consistency and reduces the risk of issues in production.

- Environment-Specific Configurations: Utilize environment variables or configuration files to manage environment-specific settings (database connections, API endpoints). This avoids hardcoding values into the code and makes it easier to deploy to different environments.

- Separate Build Configurations: Consider creating separate build configurations for each environment to account for specific dependencies or configurations required for that environment.

- Infrastructure as Code (IaC): Use IaC tools (Terraform, Ansible) to provision and manage the infrastructure for each environment. This ensures consistency and repeatability.

- Automated Testing: Integrate automated testing throughout the pipeline, starting from unit tests to integration tests and end-to-end tests. This helps to detect issues early in the development process.

- Deployment Strategies: Employ appropriate deployment strategies (blue/green, canary) for each environment based on its characteristics and risk profile. Production deployments should typically be more cautious.

In a recent project, we used a combination of environment variables, Terraform, and a multi-stage pipeline to manage our different environments. Each stage had specific automated tests to ensure quality before moving to the next environment. This approach reduced errors and made the entire process much more efficient and reliable.
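
Environment-specific configuration can be sketched as a loader that combines per-environment defaults with values injected by the pipeline. The variable names (`APP_ENV`, `DATABASE_URL`, `DEBUG`) and the default values are assumptions chosen for this example, not a convention required by any tool.

```python
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    environment: str
    database_url: str
    debug: bool


# Hypothetical defaults; production deliberately has no default database.
DEFAULTS = {
    "development": {"DATABASE_URL": "sqlite:///dev.db", "DEBUG": "true"},
    "testing": {"DATABASE_URL": "sqlite:///:memory:", "DEBUG": "true"},
    "production": {"DEBUG": "false"},
}


def load_settings(environ: Mapping[str, str]) -> Settings:
    """Build settings from injected variables, falling back to per-environment defaults."""
    env_name = environ.get("APP_ENV", "development")
    if env_name not in DEFAULTS:
        raise ValueError(f"unknown environment: {env_name}")
    merged = {**DEFAULTS[env_name], **environ}  # injected values win over defaults
    if "DATABASE_URL" not in merged:
        raise ValueError(f"DATABASE_URL must be set for {env_name}")
    return Settings(
        environment=env_name,
        database_url=merged["DATABASE_URL"],
        debug=merged.get("DEBUG", "false").lower() == "true",
    )
```

In an application this would usually be called once at startup as `load_settings(os.environ)`, so the same build artifact can be promoted through every environment unchanged.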

Q 22. Describe your experience with logging and monitoring tools.

Effective logging and monitoring are crucial for a healthy CI/CD pipeline. Think of them as the eyes and ears of your automation process, providing vital insights into its health and performance. I’ve extensively used tools like ELK stack (Elasticsearch, Logstash, Kibana) for centralized log management and visualization. This allows me to easily search, filter, and analyze logs from various stages of the pipeline, quickly identifying bottlenecks or errors.

For monitoring, I leverage Prometheus and Grafana. Prometheus is a powerful monitoring system that scrapes metrics from various sources, while Grafana provides intuitive dashboards for visualizing these metrics in real-time. This combination helps me track key metrics like build times, deployment durations, and error rates, allowing for proactive issue identification and performance optimization. In one project, using Prometheus and Grafana allowed us to pinpoint a specific database query that was slowing down deployments significantly. We optimized the query, resulting in a 70% reduction in deployment time.

Beyond ELK, Prometheus, and Grafana, I also have experience with Datadog and Splunk, which combine log management, metrics, alerting, and anomaly detection in a single platform. The choice of tools often depends on the specific needs of a project and the existing infrastructure.
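To make the metrics mentioned above concrete, here is a small sketch of how a pipeline script might record stage durations and an error rate. It uses only the Python standard library rather than any of the tools named above, and the `timed_stage` and `error_rate` helpers are hypothetical names chosen for illustration. In practice the JSON log lines would be shipped to a log store and the numbers exported to a metrics system.

```python
import json
import logging
import time
from contextlib import contextmanager

logger = logging.getLogger("pipeline")


@contextmanager
def timed_stage(name, records):
    """Time one pipeline stage and record its duration and outcome."""
    start = time.perf_counter()
    status = "success"
    try:
        yield
    except Exception:
        status = "failure"
        raise
    finally:
        record = {
            "stage": name,
            "status": status,
            "duration_s": round(time.perf_counter() - start, 3),
        }
        records.append(record)
        # One JSON object per line is easy for a log shipper to parse.
        logger.info(json.dumps(record))


def error_rate(records):
    """Fraction of recorded stages that failed (0.0 when nothing ran)."""
    if not records:
        return 0.0
    failures = sum(1 for r in records if r["status"] == "failure")
    return failures / len(records)
```

Wrapping each stage in `with timed_stage("build", records):` keeps the timing logic out of the stage itself, and the exception is re-raised so a failing stage still fails the pipeline.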

Q 23. How do you ensure scalability and reliability in a CI/CD pipeline?

Ensuring scalability and reliability in a CI/CD pipeline involves several key strategies. Think of it like building a highway system: you need multiple lanes (parallel processing), strong bridges (robust infrastructure), and clear signage (monitoring and alerting).

For scalability, first leverage parallel processing to speed up builds and deployments. In Jenkins, for example, the Pipeline parallel step lets independent stages run concurrently, significantly reducing overall pipeline time. This scales horizontally as you add more build agents or cloud-based build resources. Second, use infrastructure as code (IaC) tools like Terraform or CloudFormation to define and manage your pipeline infrastructure. This ensures consistency and repeatability, making it easy to scale resources up or down based on demand.

For reliability, employ robust error handling and automated rollback mechanisms. If a deployment fails, the system should automatically revert to the previous stable version, minimizing downtime. Blue/green or canary deployments further enhance reliability by reducing risk and enabling gradual rollouts.

Moreover, choosing the right cloud platform and employing autoscaling features is essential. For instance, on AWS, I’ve run build agents on Elastic Compute Cloud (EC2) instances in Auto Scaling groups, which adjust the number of agents based on the workload. This ensures that the pipeline can handle peak demand without performance degradation.
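Two of the ideas above, running independent jobs in parallel and rolling back automatically when a deployment fails, can be sketched in a few lines. This is an illustrative outline under simple assumptions, not a production implementation: `run_parallel` and `deploy_with_rollback` are hypothetical helpers, and the `deploy` and `health_check` callables stand in for whatever your platform provides.

```python
from concurrent.futures import ThreadPoolExecutor


def run_parallel(jobs, max_workers=4):
    """Run independent jobs concurrently and return {job_name: passed}."""

    def safe(job):
        # Treat an exception as a failed job so one crash does not hide the other results.
        try:
            return bool(job())
        except Exception:
            return False

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {name: pool.submit(safe, job) for name, job in jobs.items()}
        return {name: future.result() for name, future in futures.items()}


def deploy_with_rollback(new_version, current_version, deploy, health_check):
    """Deploy new_version; if the deploy or its health check fails, redeploy current_version."""
    try:
        deploy(new_version)
        if health_check():
            return new_version
    except Exception:
        pass  # fall through to the rollback below
    deploy(current_version)
    return current_version
```

The function returns the version that ends up live, which makes it straightforward for the calling pipeline to report whether a rollback happened.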

Q 24. What are some common anti-patterns in CI/CD?

Several common anti-patterns plague CI/CD pipelines, hindering efficiency and reliability. One frequent mistake is monolithic pipelines, where every step is tightly coupled. Imagine a single, long assembly line – a failure at any point halts the entire process. Instead, pipelines should be modular and decoupled, allowing for independent testing and deployment of individual components. Another pitfall is neglecting automated testing. Thorough automated tests – unit, integration, and end-to-end – are essential for catching defects early and preventing them from reaching production. Lack of proper version control for infrastructure as code is also a significant issue, leading to inconsistent environments and difficulties in recreating them.

Furthermore, infrequent deployments or long feedback loops create delays and hinder rapid iteration. Aim for frequent, smaller deployments, enabling faster issue identification and resolution. Finally, ignoring monitoring and logging results in a lack of visibility into the pipeline’s health, making it harder to identify and address problems. This is like driving blindfolded – you have no idea what’s happening!

Q 25. How do you integrate CI/CD with other DevOps tools?

Integrating CI/CD with other DevOps tools is key to creating a cohesive and efficient workflow. It’s like connecting different departments in a company to streamline the product development process. I often integrate CI/CD pipelines with configuration management tools like Ansible or Chef to automate infrastructure provisioning and management. This ensures consistency across different environments and reduces manual intervention. We also link the pipeline with monitoring tools like Prometheus and Grafana to track key metrics and receive alerts about potential issues. This provides real-time visibility into the health and performance of the system. Another critical integration is with artifact repositories like Artifactory or Nexus to manage and distribute software packages securely.

Furthermore, integrating with testing frameworks like Selenium or JUnit allows automated testing to be incorporated seamlessly into the pipeline. Finally, incorporating security scanning tools into the pipeline helps keep vulnerable code from being deployed. SonarQube can be integrated for static code analysis, and Snyk for scanning dependencies for known vulnerabilities.
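One common way to wire a scanner into a pipeline is a gate step that fails the build when findings exceed a severity threshold. The sketch below assumes the scanner's results have already been converted into a simple list of dictionaries with a `severity` field. That format, the `SEVERITY_ORDER` list, and the `gate` function are hypothetical and do not reflect the actual output of SonarQube or Snyk.

```python
# Hypothetical severity scale, ordered from least to most serious.
SEVERITY_ORDER = ["low", "medium", "high", "critical"]


def blocking_findings(findings, fail_on="high"):
    """Return the findings whose severity is at or above the fail_on threshold."""
    threshold = SEVERITY_ORDER.index(fail_on)
    return [f for f in findings if SEVERITY_ORDER.index(f["severity"]) >= threshold]


def gate(findings, fail_on="high"):
    """Return 0 (pass) or 1 (fail), suitable for use as a process exit code."""
    return 1 if blocking_findings(findings, fail_on) else 0
```

A pipeline step could call `sys.exit(gate(findings))` so that a blocking finding stops the deployment, while lower-severity findings are reported without failing the build.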

Q 26. Explain your experience with cloud platforms (e.g., AWS, Azure, GCP).

I have significant experience with various cloud platforms, including AWS, Azure, and GCP. Each platform offers unique strengths and caters to different needs. On AWS, I have extensively used services like EC2 for compute, S3 for storage, and CodePipeline/CodeDeploy for CI/CD orchestration. I’ve implemented infrastructure as code using Terraform and managed deployments using strategies such as blue/green deployments. On Azure, I’ve used Azure DevOps for CI/CD, Azure Kubernetes Service (AKS) for container orchestration, and Azure Blob Storage for artifact storage. Similarly, on GCP, I’ve worked with Compute Engine, Cloud Storage, and Cloud Build for CI/CD, alongside Terraform and Google Kubernetes Engine (GKE).

My experience includes designing, implementing, and managing CI/CD pipelines on all these platforms, utilizing their respective strengths to create scalable, reliable, and cost-effective solutions. The choice of platform depends on factors like existing infrastructure, organizational preferences, and project requirements. However, the core principles of CI/CD remain consistent across platforms.

Q 27. How do you approach troubleshooting complex CI/CD pipeline issues?

Troubleshooting complex CI/CD pipeline issues requires a systematic approach. It’s like being a detective, gathering clues to solve a mystery. First, I gather all available information: logs, error messages, and monitoring data. I thoroughly analyze these clues to understand the root cause. I then start with the most recent failure point, working backward through the pipeline to identify where the problem originated. This often involves using debugging tools and techniques specific to the pipeline components involved. Tools like debuggers integrated into build tools or remote debugging capabilities in cloud environments can provide deep insights. I break down the pipeline into smaller, manageable segments to isolate the issue. Once the root cause is identified, I develop and implement a solution, often involving code changes, configuration updates, or infrastructure modifications.

Finally, after implementing the fix, I thoroughly test it to ensure the problem is resolved and that the pipeline is stable. A crucial aspect is adding monitoring and logging enhancements to prevent similar issues in the future. This ensures that the pipeline becomes more robust and easily maintainable over time.
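The "work backward from the most recent failure" step can be expressed as a small heuristic. The sketch below assumes each stage has been summarized as `ok`, `warn`, or `fail`. That summary format and the `find_origin` helper are hypothetical, and the result is only a suggestion of where to start reading logs, not a definitive root cause.

```python
def find_origin(stages):
    """Suggest where a failure likely originated.

    stages is an ordered list of dicts with 'name' and 'status' ('ok', 'warn', 'fail').
    Start at the last failing stage and walk backward while the upstream stage
    was not clean; the earliest stage in that run is the suspected origin.
    """
    failing = [i for i, stage in enumerate(stages) if stage["status"] == "fail"]
    if not failing:
        return None
    i = failing[-1]
    while i > 0 and stages[i - 1]["status"] != "ok":
        i -= 1
    return stages[i]["name"]
```

For example, if the build stage was clean, the test and package stages emitted warnings, and the deploy stage failed, the function points at the test stage as the first place to investigate.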

Q 28. Describe a time you had to significantly improve a CI/CD pipeline.

In a previous role, we had a CI/CD pipeline that was slow, unreliable, and lacked visibility. Builds were taking hours, deployments were frequently failing, and there was little insight into the pipeline’s health. This significantly impacted development velocity and team morale. To improve this, I implemented several changes. First, we migrated from a monolithic pipeline to a modular pipeline, breaking down the process into smaller, independent stages. This allowed for parallel processing and improved fault isolation. Second, we implemented comprehensive automated testing, catching many issues earlier in the development lifecycle.

Third, we enhanced logging and monitoring to improve visibility into the pipeline’s performance. By tracking key metrics and utilizing alerting mechanisms, we could proactively identify and resolve issues before they escalated. We also introduced infrastructure as code using Terraform to manage the pipeline’s infrastructure, ensuring consistency and repeatability. The result was a dramatic improvement in pipeline performance. Build times were reduced by 80%, deployment failures decreased significantly, and the team gained a far better understanding of the pipeline’s health. This led to increased developer productivity and greater confidence in our release process. It was a significant improvement that demonstrated the power of well-architected CI/CD.

Key Topics to Learn for Continuous Integration (CI)/Continuous Delivery (CD) Interview

- Version Control Systems (VCS): Understanding Git, branching strategies (Gitflow, GitHub Flow), merging, and resolving conflicts is fundamental. Consider practical application in a team environment and how to handle merge conflicts efficiently.

- CI/CD Pipelines: Learn the stages of a typical pipeline (build, test, deploy), common tools (Jenkins, GitLab CI, CircleCI, Azure DevOps), and how to configure and troubleshoot them. Focus on practical examples of automating builds and deployments.

- Testing Strategies: Master different testing levels (unit, integration, system, end-to-end) and their importance in a CI/CD pipeline. Understand how to incorporate testing frameworks and how to analyze test results to improve software quality.

- Infrastructure as Code (IaC): Explore tools like Terraform or Ansible for managing and automating infrastructure provisioning. Understand how IaC integrates with CI/CD for consistent and repeatable deployments.

- Containerization (Docker, Kubernetes): Learn the basics of containerizing applications and orchestrating them using Kubernetes. Understand the benefits of containerization in CI/CD workflows and how to build and deploy containerized applications.

- Continuous Monitoring and Logging: Understand the importance of monitoring application performance and logs in a production environment. Explore tools for collecting and analyzing logs and metrics to identify and resolve issues quickly.

- Security in CI/CD: Discuss secure coding practices, vulnerability scanning, and security best practices within the CI/CD pipeline. Understand how to integrate security checks into your pipeline to prevent vulnerabilities from reaching production.

Next Steps

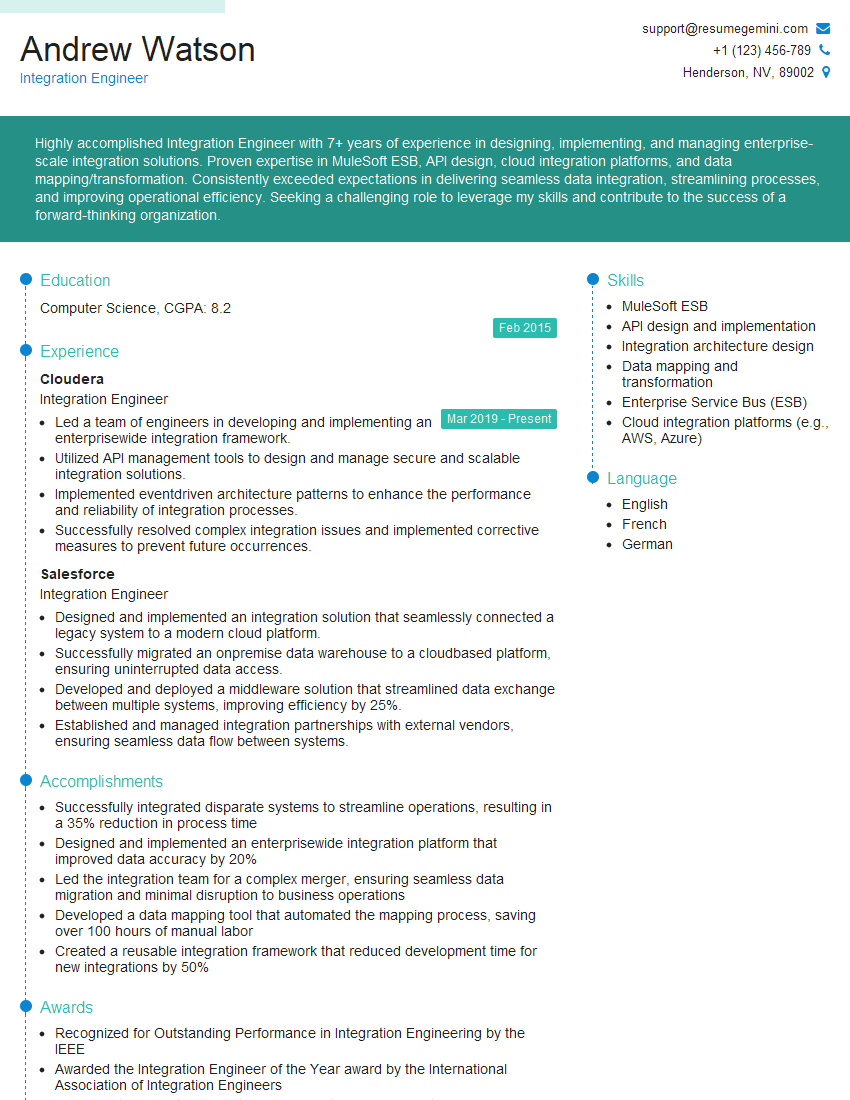

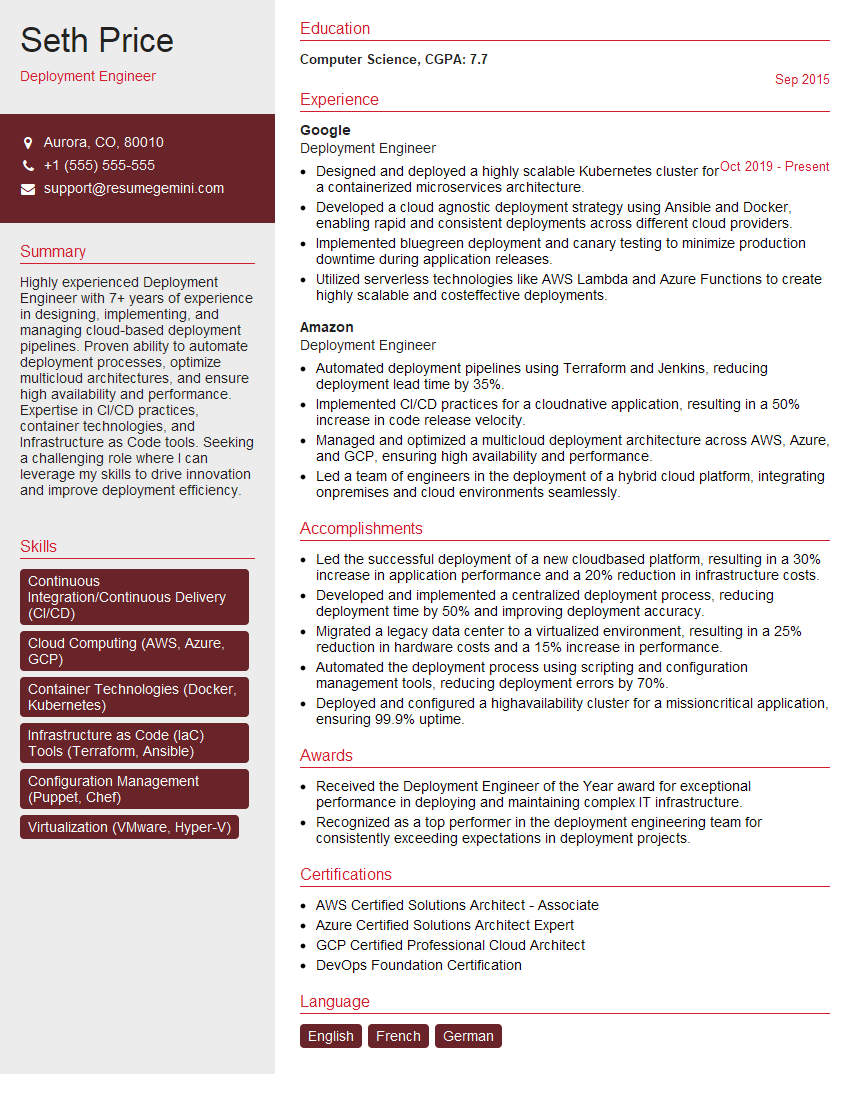

Mastering CI/CD is crucial for career advancement in software development. It demonstrates your ability to work efficiently in a team, deliver high-quality software quickly, and adapt to the ever-evolving demands of modern software engineering. To significantly boost your job prospects, crafting an ATS-friendly resume is vital. ResumeGemini is a trusted resource that can help you build a professional and impactful resume that showcases your CI/CD expertise. Examples of resumes tailored to Continuous Integration (CI)/Continuous Delivery (CD) roles are available to help you get started. Take the next step and invest in your career – build a compelling resume today!
