The right preparation can turn an interview into an opportunity to showcase your expertise. This guide to Database Administration Tools interview questions collects common questions with sample answers and tips to help you prepare your own responses and stand out as a candidate.

Questions Asked in a Database Administration Tools Interview

Q 1. Explain the difference between clustered and non-clustered indexes.

The core difference between clustered and non-clustered indexes lies in how they physically organize data within the database. Think of a library: a clustered index is like organizing books alphabetically on the shelves – the physical order of the books reflects the index order. A non-clustered index is like a library catalog – it provides a pointer to where each book is located, but the books themselves aren’t ordered according to the catalog.

Clustered Index: A clustered index physically sorts the table rows based on the indexed column(s). There can only be one clustered index per table because the data can only be physically sorted in one way. This improves the speed of data retrieval for queries based on the clustered index column(s), as the database doesn’t need to scan the entire table. Imagine searching for a book by its title (clustered index) – you go straight to the right shelf.

Non-Clustered Index: A non-clustered index creates a separate structure that points to the rows in the table. Multiple non-clustered indexes can exist on the same table. It’s like the library catalog: it doesn’t physically rearrange the books, but quickly directs you to the correct location. This is useful for frequently queried columns that aren’t suitable as a clustered index, or when you need quick access based on different criteria.

Example: Let’s say you have a customer table with columns ‘CustomerID’ (primary key), ‘Name’, and ‘City’. If you create a clustered index on ‘CustomerID’, the table rows will be physically sorted by CustomerID. A non-clustered index on ‘City’ will create a separate index structure listing cities and pointers to the corresponding customer rows, regardless of how the rows are physically sorted.
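To make the example concrete, here is a minimal sketch using Python's standard-library sqlite3 module. SQLite does not use the term "clustered index", but a table with an INTEGER PRIMARY KEY is stored in a B-tree keyed by that column, which is the closest analogue. The table, column, and index names are assumptions chosen for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# INTEGER PRIMARY KEY: rows are stored in a B-tree ordered by customer_id.
conn.execute(
    "CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, name TEXT, city TEXT)"
)
conn.executemany(
    "INSERT INTO customer (customer_id, name, city) VALUES (?, ?, ?)",
    [(3, "Chen", "Oslo"), (1, "Avery", "Lima"), (2, "Basu", "Oslo")],
)
# Secondary index: a separate structure mapping city values to row locations.
conn.execute("CREATE INDEX idx_customer_city ON customer (city)")


def plan(sql, params=()):
    """Return the detail column of EXPLAIN QUERY PLAN as one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[3] for row in rows)


by_id_plan = plan("SELECT name FROM customer WHERE customer_id = ?", (2,))
by_city_plan = plan("SELECT name FROM customer WHERE city = ?", ("Oslo",))
print(by_id_plan)    # lookup through the table's own key order
print(by_city_plan)  # lookup through the separate city index
```

The exact plan wording varies between SQLite versions, but the first query should report a search on the integer primary key and the second a search using idx_customer_city.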

Q 2. Describe your experience with database backup and recovery strategies.

My experience with database backup and recovery strategies spans various platforms and methodologies. I understand the importance of a robust and comprehensive backup and recovery plan to ensure business continuity and data protection. This involves a multi-layered approach that balances speed, storage, and recovery time objectives (RTOs) with recovery point objectives (RPOs).

- Full Backups: These are complete copies of the database and form the base of every restore. They take the longest to run and use the most storage, so they are usually scheduled less often than the other types.

- Differential Backups: These back up only the changes made since the last full backup, so they are smaller and faster to take than a full backup. A restore needs the last full backup plus the most recent differential, which is usually quicker than replaying a long chain of log backups.

- Transaction Log Backups: These capture the log records written since the previous log backup, which keeps each backup small and allows point-in-time recovery. Recovery involves restoring the full backup, then the latest differential (if any), then the subsequent log backups in sequence. Incremental backups, as offered by tools such as Oracle RMAN, are a related but distinct idea: they copy the data blocks changed since an earlier backup rather than the log itself.

I am proficient in utilizing native backup utilities such as SQL Server’s BACKUP and RESTORE commands, Oracle’s RMAN (Recovery Manager), and MySQL’s mysqldump and other tools. I also have experience with third-party backup solutions for enhanced features like compression, encryption, and offsite storage.

In planning a strategy, I consider factors like database size, RTO and RPO requirements, storage costs, and the acceptable downtime during backups. Regular testing of the recovery process is vital to ensure its effectiveness.
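The restore order described above can be sketched as a small planning function. This is an illustrative model only, not any vendor's tool: the Backup class, the integer timestamps, and the simplification that recovery stops at the last backup taken at or before the target time are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backup:
    kind: str      # "full", "diff", or "log"
    taken_at: int  # hypothetical timestamp, e.g. hours since Monday 00:00


def restore_chain(backups, target):
    """Return the backups to restore, in order, to get as close to `target` as possible."""
    usable = sorted(
        (b for b in backups if b.taken_at <= target), key=lambda b: b.taken_at
    )
    fulls = [b for b in usable if b.kind == "full"]
    if not fulls:
        raise ValueError("no full backup at or before the target time")
    full = fulls[-1]
    chain = [full]
    base = full
    # Only the newest differential taken after that full backup is needed.
    diffs = [b for b in usable if b.kind == "diff" and b.taken_at > full.taken_at]
    if diffs:
        base = diffs[-1]
        chain.append(base)
    # Every log backup taken after the base must be applied in sequence.
    chain += [b for b in usable if b.kind == "log" and b.taken_at > base.taken_at]
    return chain


schedule = [
    Backup("full", 0), Backup("log", 4), Backup("log", 8), Backup("diff", 12),
    Backup("log", 16), Backup("log", 20), Backup("full", 24), Backup("log", 28),
]
for step in restore_chain(schedule, target=21):
    print(step.kind, step.taken_at)
```

For a target of hour 21, the plan is the full backup from hour 0, the differential from hour 12, and the logs from hours 16 and 20. The earlier logs are skipped because the differential already contains their changes.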

Q 3. How do you monitor database performance and identify bottlenecks?

Database performance monitoring is crucial for identifying bottlenecks and ensuring optimal system performance. I employ a multi-faceted approach using a combination of built-in database monitoring tools and third-party performance analysis solutions. My strategy starts with understanding the application’s workload and identifying critical queries.

- Performance Monitoring Tools: I utilize tools like SQL Server Profiler (or Extended Events, which Microsoft now recommends in its place), Oracle’s AWR (Automatic Workload Repository), and MySQL’s slow query log to capture performance metrics. This helps identify slow-running queries, resource contention (CPU, memory, I/O), and blocking issues.

- Query Analysis: Once slow queries are identified, I analyze their execution plans to determine the root cause of the performance problem. This might involve inefficient indexing, missing indexes, poorly written queries, or table design issues.

- Resource Monitoring: I monitor CPU utilization, memory usage, disk I/O, and network activity to pinpoint resource bottlenecks. This often involves looking at system-level metrics in addition to database-specific metrics.

- Third-Party Tools: For more advanced analysis, I leverage third-party tools offering comprehensive dashboards and reporting capabilities. These tools provide deeper insights into performance trends and potential issues.

By combining these methods, I can accurately identify the source of performance bottlenecks and implement targeted solutions.
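The idea behind a slow query log can be illustrated at the application level with a small wrapper. This is a rough sketch, not a replacement for the database's own tooling; the TimedConnection class and its threshold parameter are hypothetical names, and it assumes a sqlite3 connection.

```python
import sqlite3
import time


class TimedConnection:
    """Wraps a sqlite3 connection and records statements slower than a threshold."""

    def __init__(self, conn, threshold_seconds):
        self.conn = conn
        self.threshold_seconds = threshold_seconds
        self.slow_queries = []  # (elapsed_seconds, sql) tuples

    def query(self, sql, params=()):
        start = time.perf_counter()
        rows = self.conn.execute(sql, params).fetchall()
        elapsed = time.perf_counter() - start
        if elapsed >= self.threshold_seconds:
            self.slow_queries.append((elapsed, sql))
        return rows


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE t (n INTEGER)")
conn.executemany("INSERT INTO t VALUES (?)", [(i,) for i in range(1000)])

# A threshold of 0.0 records every statement, purely for demonstration.
db = TimedConnection(conn, threshold_seconds=0.0)
total = db.query("SELECT SUM(n) FROM t")[0][0]
for elapsed, sql in sorted(db.slow_queries, reverse=True):
    print(f"{elapsed * 1000:.3f} ms  {sql}")
```

In practice the threshold would be set to whatever the application considers slow, and the recorded statements would then be examined with the execution plan analysis described above.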

Q 4. What are your preferred methods for database tuning and optimization?

Database tuning and optimization is an iterative process that requires a deep understanding of the database system and the application workload. My approach centers on identifying performance bottlenecks, analyzing the root cause, and implementing targeted solutions.

- Indexing Strategies: Proper indexing is critical for query performance. I analyze query patterns to identify columns that benefit from indexes, ensuring the right index type (clustered, non-clustered, covering) is used for optimal performance. I also regularly review indexes to remove any that are no longer beneficial.

- Query Optimization: Analyzing execution plans is paramount. This allows me to identify inefficient query patterns and rewrite them for better performance. Techniques like using appropriate join types, avoiding full table scans, and optimizing subqueries are crucial.

- Schema Optimization: Optimizing database schema involves designing tables and relationships effectively to minimize data redundancy and improve query efficiency. Normalization principles are key to ensuring good schema design.

- Hardware Upgrades: In some cases, upgrading hardware (e.g., faster CPUs, more memory, or faster storage) might be necessary to address performance limitations. This is often a last resort, but sometimes crucial for heavily utilized systems.

- Database Statistics: Ensuring database statistics are up-to-date is critical for the query optimizer to make informed decisions. Regularly updating statistics improves the efficiency of query planning.

I regularly use tools like execution plan analyzers, query profiling tools, and performance monitoring tools to guide my optimization efforts. The process is often iterative, requiring repeated testing and measurement to ensure improvements.
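As a small illustration of that measure-change-measure loop, the sketch below compares a query plan before and after adding a covering index and refreshing statistics. It uses SQLite through Python's sqlite3 module; the orders table and the index name are assumptions, and other engines expose plans through their own commands.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, float(i)) for i in range(5000)],
)

QUERY = "SELECT total FROM orders WHERE customer_id = ?"


def plan(sql, params):
    """Return the detail column of EXPLAIN QUERY PLAN as one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[3] for row in rows)


plan_before = plan(QUERY, (42,))  # no suitable index yet: a full table scan

# The index holds both the filter column and the selected column,
# so the query can be answered from the index alone (a covering index).
conn.execute("CREATE INDEX idx_orders_customer_total ON orders (customer_id, total)")
conn.execute("ANALYZE")  # refresh optimizer statistics

plan_after = plan(QUERY, (42,))
print("before:", plan_before)
print("after: ", plan_after)
```

The first plan should report a scan of the table, and the second a search using the covering index.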

Q 5. Explain your experience with database replication and high availability solutions.

My experience with database replication and high availability solutions includes various techniques designed to ensure data redundancy, fault tolerance, and improved performance. I’ve worked with several replication methods and high-availability architectures, depending on the specific requirements of the application.

- Replication Technologies: I’m experienced with technologies such as SQL Server Always On Availability Groups, Oracle Data Guard, and MySQL replication (source-replica, formerly called master-slave, and group replication). These technologies offer different features and capabilities, like synchronous and asynchronous replication, failover mechanisms, and read scaling.

- High Availability Architectures: I understand how to design and implement high-availability architectures using clustering technologies and load balancing. This includes setting up failover clusters and ensuring seamless transition in case of hardware or software failures.

- Disaster Recovery: Replication is a vital component of disaster recovery plans. I’ve designed and implemented strategies to replicate data to geographically distant locations to ensure business continuity in the event of a major disaster.

Choosing the right replication and high-availability solution depends on several factors, including the RTO and RPO requirements, the complexity of the application, and the budget. I carefully evaluate these factors before making recommendations.

Q 6. How do you handle database security and access control?

Database security and access control are paramount. My approach to database security involves a multi-layered defense-in-depth strategy focused on minimizing vulnerabilities and controlling access to sensitive data. This includes implementing robust authentication, authorization, and encryption.

- Authentication: I enforce strong password policies and often integrate with centralized authentication systems like Active Directory or LDAP for secure user logins.

- Authorization: Using role-based access control (RBAC), I grant users only the necessary permissions to perform their tasks. The principle of least privilege is strictly enforced, limiting potential damage from compromised accounts.

- Data Encryption: I implement encryption both at rest and in transit to protect sensitive data. This involves using encryption technologies provided by the database platform or third-party tools.

- Network Security: Databases are secured using firewalls, restricting access from untrusted networks. Network segmentation further isolates the database servers from other systems.

- Regular Security Audits: Regular security audits and vulnerability scans are performed to identify and address potential security weaknesses. This includes reviewing database configurations, user permissions, and application code.

- Data Masking and Anonymization: For development and testing, I utilize data masking techniques to protect sensitive data while still providing useful data for testing purposes.

I stay updated with the latest security best practices and vulnerabilities to ensure the database systems are protected from emerging threats.
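The data masking step mentioned above could look something like the following sketch. The function names, the salt value, and the output formats are all hypothetical. Note that salted hashing of this kind is pseudonymization rather than true anonymization, so it suits test data but is not a substitute for a reviewed masking policy.

```python
import hashlib


def mask_email(email, salt="dev-only-salt"):
    """Replace the local part with a stable pseudonym and keep the domain.

    The same input always yields the same output, so joins between
    masked tables still line up.
    """
    local, _, domain = email.partition("@")
    digest = hashlib.sha256((salt + local).encode("utf-8")).hexdigest()[:10]
    return f"user_{digest}@{domain}"


def mask_card_number(card_number):
    """Keep only the last four digits; replace the rest with asterisks."""
    digits = [c for c in card_number if c.isdigit()]
    return "*" * (len(digits) - 4) + "".join(digits[-4:])


print(mask_email("alice@example.com"))
print(mask_card_number("4111 1111 1111 1111"))
```

Deterministic masking keeps referential relationships intact across tables, which is often what test environments need. The salt should be kept out of the masked environment so the mapping cannot easily be rebuilt.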

Q 7. Describe your experience with different database platforms (e.g., SQL Server, Oracle, MySQL).

I’ve worked extensively with SQL Server, Oracle, and MySQL, each of which has its own strengths and weaknesses.

- SQL Server: I’m proficient in administering SQL Server, including managing instances, configuring high availability using Always On Availability Groups, implementing performance monitoring, and handling backups and restores. I’m familiar with its features like T-SQL, stored procedures, and integration with .NET applications.

- Oracle: My Oracle experience includes managing Oracle databases, implementing RAC (Real Application Clusters) for high availability, performing performance tuning using AWR reports, and managing backups and restores using RMAN. I have a good understanding of PL/SQL and Oracle’s extensive feature set.

- MySQL: I have substantial experience administering MySQL databases, configuring replication, optimizing performance, and securing the database. I’m familiar with its command-line tools and open-source nature. I have experience with both InnoDB and MyISAM storage engines.

My experience extends beyond these platforms to include working with other database systems as needed. The core principles of database administration remain consistent across different platforms, while the specific tools and techniques may vary.

Q 8. What are your experiences with data migration and ETL processes?

Data migration and ETL (Extract, Transform, Load) processes are crucial for moving data between different systems. My experience encompasses planning, executing, and monitoring these processes across various database systems, including relational databases like PostgreSQL and MySQL, and NoSQL databases like MongoDB.

I’ve worked on projects involving migrating terabytes of data, ensuring minimal downtime and data integrity. This includes understanding data structures, cleansing and transforming data using scripting languages like Python and SQL, and employing tools such as Apache Kafka and Informatica PowerCenter to manage the ETL pipeline effectively. For instance, in one project, we migrated a legacy system’s data to a cloud-based solution using a phased approach to minimize disruption. This involved careful data validation at each stage, ensuring accuracy and completeness.

A key element of successful data migration is meticulous planning. I always begin by thoroughly assessing the source and target systems, identifying potential data inconsistencies, and developing a detailed migration plan with contingencies for handling errors. This plan includes defining clear success metrics and establishing a robust monitoring system to track the progress and identify potential problems early on.
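One simple form of the validation mentioned above is to compare row counts and a content checksum between source and target after each stage. The sketch below assumes both sides are SQLite databases with identical schemas; table_fingerprint is a hypothetical helper. Across different engines, values would need to be normalized first because types and formatting differ.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table, key_column):
    """Return (row_count, sha256 hex digest) over rows read in key order.

    `table` and `key_column` are interpolated into the SQL text, so they
    must come from trusted configuration, never from user input.
    """
    digest = hashlib.sha256()
    count = 0
    for row in conn.execute(f'SELECT * FROM "{table}" ORDER BY "{key_column}"'):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
rows = [(1, "Avery"), (2, "Basu"), (3, "Chen")]
for db in (source, target):
    db.execute("CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, name TEXT)")
    db.executemany("INSERT INTO customer VALUES (?, ?)", rows)

src = table_fingerprint(source, "customer", "customer_id")
matches_after_copy = src == table_fingerprint(target, "customer", "customer_id")

# Simulate a transformation bug that altered one value in the target.
target.execute("UPDATE customer SET name = 'Chan' WHERE customer_id = 3")
matches_after_drift = src == table_fingerprint(target, "customer", "customer_id")

print(matches_after_copy, matches_after_drift)
```

A row count alone would miss the altered value in the second comparison; the checksum catches it.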

Q 9. How familiar are you with different NoSQL databases (e.g., MongoDB, Cassandra)?

I’m proficient with several NoSQL databases, notably MongoDB and Cassandra. I understand their strengths and weaknesses and know when to apply each in different scenarios. MongoDB, with its document-oriented structure, excels in handling semi-structured data and flexible schema requirements – ideal for applications needing rapid prototyping and scalability. I’ve utilized MongoDB’s aggregation framework extensively for complex data analysis.

Cassandra, on the other hand, shines in distributed environments requiring high availability and fault tolerance. Its wide-column (column-family) model is well suited to massive datasets with high write throughput. I’ve leveraged Cassandra’s features in projects needing extreme scalability and resilience, such as real-time analytics platforms.

My experience includes designing database schemas, optimizing queries, and managing clusters for both MongoDB and Cassandra. I’m familiar with their respective administration tools and understand how to monitor performance, troubleshoot issues, and ensure data integrity within these environments. For example, I optimized a MongoDB cluster by implementing appropriate indexing strategies and sharding, resulting in a significant performance improvement.

Q 10. Explain your troubleshooting methodology for resolving database errors.

My troubleshooting methodology is systematic and data-driven. When a database error occurs, I follow a structured approach:

- Identify the Error: First, I pinpoint the exact error message and its context. This often involves checking logs, monitoring tools, and reviewing application error messages.

- Gather Information: I collect relevant information such as timestamps, affected tables or collections, and any preceding events. Examining the database’s health metrics like CPU usage, disk I/O, and memory consumption is critical.

- Isolate the Problem: I systematically narrow down the potential causes by ruling out possibilities. This could involve testing queries, reviewing schema definitions, or examining server configuration files.

- Implement a Solution: Once the root cause is identified, I implement a solution, which might involve fixing a code bug, adjusting database configuration parameters, re-indexing data, or even restoring from a backup.

- Test and Monitor: After implementing a solution, I thoroughly test the fix to ensure it addresses the problem without creating new issues. I then monitor the system to ensure stability and prevent recurrence.

For example, if I encounter a deadlock in a relational database, I’d analyze the lock contention, potentially optimize the database schema or application code to reduce contention, or adjust transaction isolation levels.

Q 11. How do you handle database performance issues under high load?

Handling database performance issues under high load demands a multi-pronged approach. My strategy typically involves these steps:

- Monitoring and Profiling: I use monitoring tools to identify performance bottlenecks. This might involve analyzing query execution plans, checking for slow queries, and investigating I/O wait times.

- Query Optimization: Inefficient queries are often the culprit. I optimize queries by adding indexes, rewriting queries, or using appropriate query hints.

- Schema Optimization: Reviewing the database schema for potential improvements is crucial. This might involve denormalization (carefully considered) or adding indexes to improve query performance.

- Hardware Upgrades: In some cases, upgrading hardware resources (CPU, memory, storage) may be necessary to accommodate increased load.

- Caching Strategies: Implementing appropriate caching mechanisms can significantly reduce database load. This could involve using database-level caching or application-level caching.

- Load Balancing: Distributing the load across multiple database servers is essential for high availability and scalability.

In a recent project, I identified a slow query that was causing significant performance degradation. By adding a composite index and optimizing the query execution plan, I improved query response time by over 80%.
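The application-level caching mentioned in the list above can be sketched as a small read-through cache with a time-to-live. This is a toy model under stated assumptions: ReadThroughCache and load_product are hypothetical names, the loader stands in for a real database query, and production systems more often use a dedicated cache service.

```python
import time


class ReadThroughCache:
    """Serve repeated reads from memory; call the loader only on a miss or after expiry."""

    def __init__(self, loader, ttl_seconds, clock=time.monotonic):
        self.loader = loader
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._entries = {}  # key -> (expires_at, value)

    def get(self, key):
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: no database round trip
        value = self.loader(key)
        self._entries[key] = (now + self.ttl_seconds, value)
        return value

    def invalidate(self, key):
        """Drop a key, for example right after the underlying row is updated."""
        self._entries.pop(key, None)


db_calls = []


def load_product(product_id):
    db_calls.append(product_id)  # stands in for a real database query
    return {"id": product_id, "name": f"product-{product_id}"}


fake_now = [0.0]  # injectable clock so the example does not need to sleep
cache = ReadThroughCache(load_product, ttl_seconds=30, clock=lambda: fake_now[0])

cache.get(7)        # miss: loads from the "database"
cache.get(7)        # hit: served from memory
fake_now[0] = 31.0
cache.get(7)        # expired: loads again
print(len(db_calls))
```

Three reads result in two loader calls. The trade-off is staleness: a longer TTL offloads more reads but serves older data, which is why writes usually invalidate the affected keys.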

Q 12. Describe your experience with database scripting and automation.

I have extensive experience with database scripting and automation using various tools and languages. I utilize SQL, PL/SQL (for Oracle), T-SQL (for SQL Server), and scripting languages like Python and PowerShell to automate routine database tasks and improve efficiency.

My experience includes creating scripts for:

- Database backups and restores: Automating these tasks ensures data protection and efficient recovery in case of failures.

- Data loading and transformation: Scripts can streamline data integration processes from various sources.

- Database monitoring and alerting: Automated monitoring scripts detect potential problems and trigger alerts.

- Schema management: Scripts can help manage schema changes, including creation, modification, and deletion of tables and other database objects.

- Report generation: Scripts can automate the creation of reports using data extracted from the database.

For example, I developed a Python script that automates the nightly backup and verification process for a critical production database, significantly reducing manual intervention and improving data safety.
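A heavily simplified version of such a backup-and-verify script might look like the sketch below. It is not the script from the project described above; it assumes SQLite, uses the backup method available on sqlite3 connections since Python 3.7, and backup_and_verify is a hypothetical helper. Server databases would call their native backup utilities instead.

```python
import sqlite3


def backup_and_verify(source, destination):
    """Copy `source` into `destination`, then run basic verification checks."""
    source.backup(destination)  # SQLite online backup API

    integrity = destination.execute("PRAGMA integrity_check").fetchone()[0]
    if integrity != "ok":
        raise RuntimeError(f"backup failed integrity check: {integrity}")

    tables = [
        row[0]
        for row in source.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
        )
    ]
    # Table names come from the database catalog, not from user input.
    for table in tables:
        src_count = source.execute(f'SELECT COUNT(*) FROM "{table}"').fetchone()[0]
        dst_count = destination.execute(f'SELECT COUNT(*) FROM "{table}"').fetchone()[0]
        if src_count != dst_count:
            raise RuntimeError(f"row count mismatch in {table}: {src_count} != {dst_count}")
    return tables


source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, total REAL)")
source.executemany("INSERT INTO orders (total) VALUES (?)", [(9.5,), (20.0,), (3.25,)])
source.commit()

destination = sqlite3.connect(":memory:")  # a file path would be used in practice
verified_tables = backup_and_verify(source, destination)
print("verified:", verified_tables)
```

A scheduler such as cron would run a script like this nightly. The important design point is that the job fails loudly when verification fails, rather than leaving an unusable backup unnoticed.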

Q 13. How do you ensure database integrity and data consistency?

Ensuring database integrity and data consistency is paramount. My approach involves a combination of techniques:

- Data Validation: Implementing constraints (e.g., primary keys, foreign keys, check constraints) at the database level prevents invalid data from entering the database.

- Transactions: Using database transactions guarantees that data modifications are atomic – either all changes are committed, or none are. This maintains consistency in case of errors.

- Data Backup and Recovery: Regular backups are essential for recovering from data loss or corruption. Having a well-defined recovery plan is crucial.

- Auditing: Tracking data changes and user actions allows for identifying and investigating inconsistencies.

- Regular Monitoring: Continuous monitoring of the database system’s health, performance, and data integrity is essential for proactive identification and resolution of potential problems.

For example, foreign key constraints maintain referential integrity across related tables, and triggers can enforce rules that declarative constraints cannot express.
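The first two techniques, constraints and transactions, can be shown together in a short sketch. It uses SQLite through Python's sqlite3 module; the customer and account tables and the transfer function are assumptions made up for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.execute("CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute(
    """CREATE TABLE account (
           account_id  INTEGER PRIMARY KEY,
           customer_id INTEGER NOT NULL REFERENCES customer (customer_id),
           balance     INTEGER NOT NULL CHECK (balance >= 0)
       )"""
)
conn.execute("INSERT INTO customer VALUES (1, 'Avery')")
conn.executemany("INSERT INTO account VALUES (?, ?, ?)", [(10, 1, 100), (11, 1, 50)])
conn.commit()


def transfer(amount, from_id, to_id):
    """Move funds atomically; return True on commit, False on rollback."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute(
                "UPDATE account SET balance = balance + ? WHERE account_id = ?",
                (amount, to_id),
            )
            conn.execute(
                "UPDATE account SET balance = balance - ? WHERE account_id = ?",
                (amount, from_id),
            )
        return True
    except sqlite3.IntegrityError:
        return False


def balances():
    return dict(conn.execute("SELECT account_id, balance FROM account"))


overdraft_ok = transfer(500, from_id=10, to_id=11)  # debit violates the CHECK constraint
after_failed = balances()                           # the earlier credit was rolled back too
normal_ok = transfer(30, from_id=10, to_id=11)
after_success = balances()

try:
    conn.execute("INSERT INTO account VALUES (12, 999, 0)")  # no such customer
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True
    conn.rollback()

print(overdraft_ok, after_failed, normal_ok, after_success, orphan_rejected)
```

In the failed transfer the credit had already been applied when the debit violated the constraint. Because both statements ran in one transaction, neither change survives.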

Q 14. What are your experiences with database design and normalization?

Database design and normalization are fundamental to building robust and efficient databases. I’m experienced in designing databases using various normalization forms (1NF, 2NF, 3NF, BCNF) to reduce data redundancy and improve data integrity. I understand the trade-offs between normalization and performance and choose the appropriate level of normalization based on the specific application requirements.

My approach to database design starts with a thorough understanding of the business requirements. I use Entity-Relationship Diagrams (ERDs) to visually represent the data entities and relationships. This helps in identifying primary and foreign keys and establishing relationships between tables.

I’ve worked on various projects where well-structured databases were essential for efficient data management. For example, designing a database for an e-commerce platform required a thorough understanding of product categories, customer profiles, order management, and inventory tracking. By carefully planning the database schema, I was able to ensure that the database was scalable and performed well under high load.

Q 15. Explain your knowledge of different database transaction isolation levels.

Database transaction isolation levels define how transactions interact with each other and protect against concurrency issues. Imagine a bank system: multiple transactions might access the same account simultaneously. Isolation levels ensure that these concurrent operations don’t corrupt data or produce unexpected results. Different levels offer varying degrees of protection and performance trade-offs.

- Read Uncommitted: The lowest level. A transaction can read data that hasn’t been committed by another transaction (dirty reads). This is fast but highly susceptible to inconsistencies.

- Read Committed: A transaction only reads data that has been committed by other transactions. Dirty reads are prevented, but non-repeatable reads (reading the same data multiple times and getting different results) and phantom reads (new rows appearing during a transaction) are still possible.

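- Repeatable Read: Prevents dirty reads and non-repeatable reads. Rows a transaction has already read will not change underneath it for the rest of the transaction. Under the SQL standard, phantom reads are still possible at this level, although some engines (PostgreSQL, for example) prevent them here as well.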

- Serializable: The highest level. It ensures that transactions are executed as if they were run sequentially, one after the other. This prevents all concurrency issues but can significantly impact performance.

Practical Application: In a high-volume e-commerce application, choosing the right isolation level is crucial. While Serializable guarantees data integrity, it could severely hinder performance. Read Committed might be a more suitable compromise, balancing data consistency and throughput.
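The four levels and the anomalies each one permits can be summarized as a small lookup, which also makes the "weakest level that is still safe" decision explicit. The table follows the SQL standard's definitions as described above. Real engines can be stricter (PostgreSQL, for instance, treats Read Uncommitted as Read Committed), and weakest_sufficient_level is a hypothetical helper.

```python
# Anomalies each level still permits under the SQL standard's definitions.
PERMITTED_ANOMALIES = {
    "READ UNCOMMITTED": {"dirty read", "non-repeatable read", "phantom read"},
    "READ COMMITTED": {"non-repeatable read", "phantom read"},
    "REPEATABLE READ": {"phantom read"},
    "SERIALIZABLE": set(),
}
LEVELS_WEAKEST_FIRST = list(PERMITTED_ANOMALIES)  # dicts preserve insertion order


def weakest_sufficient_level(must_prevent):
    """Return the weakest isolation level that prevents every listed anomaly."""
    must_prevent = set(must_prevent)
    unknown = must_prevent - PERMITTED_ANOMALIES["READ UNCOMMITTED"]
    if unknown:
        raise ValueError(f"unknown anomalies: {sorted(unknown)}")
    for level in LEVELS_WEAKEST_FIRST:
        if not PERMITTED_ANOMALIES[level] & must_prevent:
            return level  # SERIALIZABLE permits nothing, so the loop always returns


print(weakest_sufficient_level({"dirty read"}))
print(weakest_sufficient_level({"dirty read", "non-repeatable read"}))
print(weakest_sufficient_level({"phantom read"}))
```

This mirrors the trade-off in the e-commerce example: if only dirty reads must be ruled out, Read Committed is enough, and the cost of Serializable is paid only where phantoms actually matter.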

Q 16. How familiar are you with database performance monitoring tools?

I’m very familiar with a wide range of database performance monitoring tools. My experience spans both open-source and commercial solutions, and I tailor my choice to the specific database system and the needs of the application.

- Commercial Tools: Tools like Dynatrace, Datadog, and SolarWinds provide comprehensive monitoring capabilities, including real-time performance metrics, query analysis, and alerting. They are particularly useful for complex environments requiring extensive reporting and integration with other monitoring systems.

- Open-Source Tools: Tools like Prometheus and Grafana offer a flexible and cost-effective solution. They require more configuration but provide great customization. I’ve successfully used these to build custom dashboards and monitor specific aspects of database performance, including query execution times, wait times, and resource utilization.

- Database-Specific Tools: Most database systems have dedicated administration tools (e.g., Oracle Enterprise Manager, SQL Server Management Studio, pgAdmin) with built-in performance monitoring that gives deep insight into the internal workings of the database. These tools often offer features like query profiling and wait event analysis, which are crucial for targeted performance tuning.

In my past roles, I’ve used these tools to identify bottlenecks, optimize queries, and troubleshoot performance issues, ultimately improving application responsiveness and user experience.

Q 17. Describe your experience with database capacity planning.

Database capacity planning is a critical aspect of database administration, ensuring the database can handle current and future workloads. It involves forecasting future growth, analyzing resource utilization, and designing a scalable architecture.

My approach typically involves:

- Analyzing historical trends: I examine past database usage patterns – growth rates, peak loads, and resource consumption – to predict future needs. This includes disk space, CPU utilization, memory usage, and connection counts.

- Modeling future growth: I use various forecasting techniques to model anticipated data growth and user activity, taking into account factors like seasonal variations and business growth projections.

- Resource optimization: I identify opportunities for optimization, such as query tuning, schema design improvements, and efficient indexing to maximize the current infrastructure’s capacity before scaling up.

- Capacity forecasting and sizing: Based on my analysis, I determine the required hardware resources (CPU, memory, storage) to meet future demands and design a scaling strategy, whether vertical (larger servers) or horizontal (more servers).

- Regular monitoring and review: Capacity planning isn’t a one-time event. I continuously monitor database performance and resource usage, adjusting my projections and recommendations as needed.

For example, in a previous project, by accurately forecasting database growth and implementing a proactive scaling strategy, we avoided a major performance bottleneck during a critical marketing campaign.
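The forecasting step can be reduced to simple compound-growth arithmetic. The sketch below is a deliberately simplified model with made-up figures (500 GB today, a 1,000 GB volume, 5% growth per month). Real forecasts would fit the rate from historical measurements and allow for seasonality.

```python
def projected_size_gb(current_gb, monthly_growth_rate, months):
    """Size after `months` of compound growth at a constant monthly rate."""
    return current_gb * (1 + monthly_growth_rate) ** months


def months_until_full(current_gb, capacity_gb, monthly_growth_rate):
    """Number of whole months until the projected size reaches capacity."""
    if current_gb <= 0 or monthly_growth_rate <= 0:
        raise ValueError("current size and growth rate must be positive")
    months = 0
    size = current_gb
    while size < capacity_gb:
        size *= 1 + monthly_growth_rate
        months += 1
    return months


# Hypothetical figures for illustration only.
print(months_until_full(current_gb=500, capacity_gb=1000, monthly_growth_rate=0.05))
print(round(projected_size_gb(500, 0.05, 12), 1))
```

With these figures the volume fills in about 15 months, because doubling at 5% monthly growth takes slightly more than 14 months. Subtracting the lead time for procurement and migration from that number gives the date by which scaling work has to start.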

Q 18. What are your experiences with database security auditing and compliance?

Database security auditing and compliance are paramount. I have extensive experience ensuring databases adhere to industry best practices and regulatory requirements (e.g., HIPAA, PCI DSS). This involves a multi-faceted approach:

- Access control management: Implementing robust user authentication and authorization mechanisms (e.g., least privilege principle) to restrict access to sensitive data. Regularly reviewing and updating user permissions.

- Data encryption: Encrypting data both in transit (using SSL/TLS) and at rest (using database-level encryption) to protect it from unauthorized access.

- Regular security audits: Conducting regular security assessments to identify vulnerabilities and ensure compliance with security policies and standards. This includes vulnerability scanning, penetration testing and using tools to identify suspicious database activity.

- Logging and monitoring: Implementing comprehensive database logging to track all database activities and setting up monitoring tools to detect any suspicious behavior. This allows for quick identification and response to security incidents.

- Vulnerability management: Staying updated on the latest database vulnerabilities and promptly applying security patches to mitigate risks. Regularly reviewing and updating security baselines and hardening configurations.

My experience includes working with various auditing tools and frameworks to generate compliance reports, demonstrating adherence to regulatory requirements. A detailed audit trail provides accountability and enables efficient incident response in case of a security breach.

Q 19. Explain your understanding of database logging and archiving.

Database logging and archiving are essential for data recovery, auditing, and compliance. Logging records all changes made to the database, while archiving involves moving less frequently accessed data to secondary storage.

- Transaction Logs: These logs track all database transactions, allowing for point-in-time recovery in case of failures. The frequency of log backups largely determines the achievable recovery point objective (RPO), while the number of log backups that must be replayed affects the recovery time objective (RTO).

- Redo Logs/Archive Logs: Different database systems use different terminology, but the concept remains the same: a record of all transactions needed to rebuild the database to a consistent state.

- Data Archiving: This involves moving older, less frequently accessed data to cheaper storage like cloud storage or tape. Archiving improves database performance and reduces storage costs. This requires a well-defined retention policy to ensure compliance with regulatory requirements.

- Log Shipping/Replication: For high availability and disaster recovery, log shipping or replication can create a secondary copy of the database, enhancing resilience against data loss.

Effective logging and archiving strategies are critical for maintaining data integrity, facilitating efficient recovery, and meeting compliance requirements. Properly configured logging reduces the risk of data loss and ensures business continuity.

Q 20. How do you handle database upgrades and patching?

Database upgrades and patching are critical for security and performance. My approach is methodical and risk-averse:

- Planning and Testing: Before any upgrade or patch, I thoroughly plan the process, including scheduling downtime, creating backups, and testing the upgrade in a non-production environment. Thorough testing is paramount to minimize disruptions.

- Version Control: I maintain detailed documentation of all database versions, patches, and upgrades. This is essential for tracking changes and troubleshooting potential issues.

- Rollback Plan: A robust rollback plan is crucial, outlining the steps needed to revert to the previous version in case of problems during the upgrade. This prevents extended downtime.

- Phased Rollout: For large-scale upgrades, I often recommend a phased rollout approach, starting with a test environment, followed by a pilot deployment to a small subset of users, and then a full deployment.

- Monitoring Post-Upgrade: After the upgrade, I closely monitor database performance and look for any anomalies. This proactive monitoring helps quickly identify and resolve any post-upgrade issues.

By following these steps, we minimize the risk of downtime, ensure data integrity, and maintain the optimal performance of the database system.

Q 21. Describe your experience with cloud-based database services (e.g., AWS RDS, Azure SQL Database).

I have significant experience with cloud-based database services like AWS RDS, Azure SQL Database, and Google Cloud SQL. These services offer several advantages, including scalability, high availability, and managed services.

- AWS RDS: I’ve used RDS extensively to deploy and manage relational databases such as MySQL, PostgreSQL, and Oracle. The managed nature of RDS simplifies administration tasks like backups, patching, and scaling. I’ve leveraged features like read replicas for improved performance and Multi-AZ deployments for high availability.

- Azure SQL Database: Similar to RDS, Azure SQL Database offers a fully managed platform for SQL Server. I’ve utilized features such as elastic pools for cost optimization and built-in security features to ensure data protection.

- Google Cloud SQL: I’ve also worked with Google Cloud SQL, particularly for MySQL and PostgreSQL instances. Its integration with other Google Cloud Platform services is a key advantage.

The choice of a cloud provider and specific service depends on various factors such as existing infrastructure, application requirements, and cost considerations. My experience allows me to choose and configure the appropriate cloud database solution that best aligns with the overall business strategy.

Q 22. What are your experiences with database sharding and partitioning?

Database sharding and partitioning are crucial techniques for scaling databases to handle massive datasets. Think of it like dividing a giant pizza into smaller, more manageable slices. Sharding is horizontal partitioning across multiple servers: rows are distributed according to a specific criterion (e.g., user location, customer ID), and each shard is an independent database that holds its own subset of the data. Partitioning, on the other hand, divides a single table within one database into smaller partitions based on attributes such as a date or a value range. This improves query performance because the database can skip partitions that cannot contain matching rows.

I worked on a project where we migrated a monolithic e-commerce database to a sharded architecture. We used customer ID as the sharding key, distributing the data across three database servers. This significantly improved query response times and increased our capacity to handle more concurrent users and transactions. I have also partitioned a large log table by month, which made archiving easier and sped up queries targeting specific time periods. Choosing the right sharding key and partition key is vital: the key’s values should spread rows and query load evenly, so that no single shard or partition becomes a hotspot.
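As a rough illustration of key-based routing, the sketch below hashes a customer ID to pick one of three shards and derives a monthly partition name for a log row. The shard names, the `logs_YYYY_MM` naming scheme, and both helper functions are assumptions made for the example, not details from the project described above.

```python
import hashlib
from collections import Counter
from datetime import date

# Hypothetical shard names; only the count of three comes from the text.
SHARDS = ["shard_0", "shard_1", "shard_2"]


def shard_for(customer_id: int) -> str:
    """Map a customer ID to a shard using a stable hash of the key."""
    digest = hashlib.sha256(str(customer_id).encode("utf-8")).digest()
    return SHARDS[int.from_bytes(digest[:8], "big") % len(SHARDS)]


def partition_for(day: date) -> str:
    """Name the monthly partition that a log row dated `day` belongs to."""
    return f"logs_{day.year:04d}_{day.month:02d}"


# Check how evenly a range of customer IDs spreads across the shards.
distribution = Counter(shard_for(cid) for cid in range(30_000))
```

Hashing the key rather than using it directly helps avoid skew when IDs are sequential. One trade-off of plain modulo routing is that changing the number of shards remaps most keys; consistent hashing is a common way to reduce that movement.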

Q 23. Explain your knowledge of different database storage engines.

Database storage engines are the underlying software components that manage how data is stored and accessed. Different engines offer varying trade-offs in terms of performance, scalability, and features. For example, MyISAM (in MySQL) is known for its speed and simplicity but lacks transactions and uses table-level rather than row-level locking, making it unsuitable for applications that need transactional integrity or high write concurrency. InnoDB, MySQL’s default engine, is transactional and provides ACID properties (Atomicity, Consistency, Isolation, Durability), which are crucial for many applications. PostgreSQL takes a different approach: tables use the heap access method by default, and specialization comes mainly from index types, such as B-tree for general lookups and GIN for full-text search, arrays, and JSONB. GIN is an index type, not a storage engine.

In my previous role, we chose InnoDB for our main application database because of its transaction support and robustness. We also used MyISAM for logging tables where data integrity wasn’t critical, taking advantage of its low overhead for simple inserts. The choice of storage engine is highly context-dependent; you need to understand the specific requirements of your application to make the right choice.

Q 24. How do you handle deadlocks in a database environment?

Deadlocks occur when two or more transactions are blocked indefinitely, waiting for each other to release resources. Imagine two cars stuck in a narrow alleyway, each unable to move until the other reverses. To handle deadlocks, we primarily rely on prevention and detection strategies. Prevention involves ensuring transactions acquire resources in a consistent order (avoiding circular dependencies). Detection involves monitoring transactions for deadlock situations. Most database systems have built-in deadlock detection and resolution mechanisms, usually involving rolling back one of the conflicting transactions.

In practice, I’ve used various tools and techniques to minimize deadlocks. We carefully design database schemas and application logic to minimize the chance of conflicting resource requests. When deadlocks do occur (as they inevitably will in complex systems), I leverage database monitoring tools to identify the culprit transactions and analyze the root cause. Optimizing transaction management, using shorter transactions, and careful resource allocation are crucial steps. Database logs are your best friend here.
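The prevention strategy of acquiring resources in a consistent order can be demonstrated outside a database with ordinary locks. The sketch below is an analogy under stated assumptions: the `Account` class and `transfer` function are hypothetical, and in-process locks stand in for row locks. Two threads transfer in opposite directions, which would risk a deadlock if each locked its source first, but always locking the lower `account_id` first removes the circular wait.

```python
import threading


class Account:
    def __init__(self, account_id: int, balance: int):
        self.account_id = account_id
        self.balance = balance
        self.lock = threading.Lock()


def transfer(src: Account, dst: Account, amount: int) -> None:
    """Move funds, always locking the account with the lower ID first."""
    if src is dst:
        return  # nothing to do, and locking the same Lock twice would block
    first, second = sorted((src, dst), key=lambda acct: acct.account_id)
    with first.lock, second.lock:
        src.balance -= amount
        dst.balance += amount


a, b = Account(1, 1000), Account(2, 1000)


def a_to_b():
    for _ in range(500):
        transfer(a, b, 1)


def b_to_a():
    for _ in range(500):
        transfer(b, a, 1)


workers = [threading.Thread(target=a_to_b), threading.Thread(target=b_to_a)]
for worker in workers:
    worker.start()
for worker in workers:
    worker.join(timeout=10)
```

The database equivalent is to have every transaction touch tables and rows in the same agreed order, and to retry the transaction that the engine picks as the deadlock victim.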

Q 25. Describe your experience with database query optimization.

Database query optimization is the process of improving the efficiency of SQL queries to reduce execution time and resource consumption. It’s a critical skill for ensuring the performance of database applications. Techniques include using appropriate indexes, optimizing joins, minimizing data retrieval, and rewriting queries to utilize database features more efficiently.

My approach to optimization combines several techniques. I start by analyzing query execution plans using the tools provided by the database system (e.g., EXPLAIN PLAN in Oracle, or EXPLAIN in MySQL and PostgreSQL). These plans reveal how the database intends to execute a query, highlighting potential bottlenecks. I then use this information to identify areas for improvement, which might include adding or modifying indexes, using different join strategies, or rewriting the query to avoid full table scans. Profiling tools help pinpoint the slowest queries so I can prioritize them. For instance, I once optimized a slow reporting query by adding a composite index and rewriting the query to use subqueries instead of complex joins, improving its performance by over 80%.
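To make the plan-driven workflow concrete, here is a small sketch that uses SQLite’s `EXPLAIN QUERY PLAN` as a stand-in for the plan tools of larger systems. The `orders` table, its data, and the index name are invented for the example. It captures the plan for the same query before and after adding a composite index.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders ("
    "id INTEGER PRIMARY KEY, customer_id INTEGER, status TEXT, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, status, total) VALUES (?, ?, ?)",
    [(i % 100, "open" if i % 3 else "closed", float(i)) for i in range(1000)],
)

QUERY = "SELECT id, total FROM orders WHERE customer_id = ? AND status = ?"


def plan(conn, sql, params):
    """Return the human-readable detail column of SQLite's query plan."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[-1] for row in rows]


# Without an index on the filter columns, SQLite has to scan the table.
plan_before = plan(conn, QUERY, (7, "open"))

# A composite index covering both filter columns lets it search instead.
conn.execute(
    "CREATE INDEX idx_orders_customer_status ON orders (customer_id, status)"
)
plan_after = plan(conn, QUERY, (7, "open"))
```

Printing `plan_before` and `plan_after` shows the shift from a table scan to an index search. The exact wording of the plan text varies between SQLite versions, so it is safer to look for the index name than to match the full string.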

Q 26. What are your experiences with database failover and disaster recovery?

Database failover and disaster recovery are essential for maintaining data availability and business continuity. Failover is the process of switching over to a backup system in case of a primary system failure. Disaster recovery encompasses broader strategies for restoring the database system after a catastrophic event. This might include regular backups, replication, high-availability configurations, and detailed recovery procedures.

I have extensive experience implementing high-availability solutions using technologies like database replication (both synchronous and asynchronous) and clustered database setups. For example, in a previous project, we implemented a geographically redundant setup using asynchronous replication between two data centers. This provided a failover mechanism in case of an outage in the primary data center, with minimal data loss. We also conducted regular disaster recovery drills to ensure our procedures were effective and up-to-date. Thorough planning, including testing and documentation, is crucial to ensure a smooth recovery process.
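With asynchronous replication, the amount of data at risk during failover depends on how far a replica trails the primary. The sketch below shows one way a failover procedure might choose a promotion target. The `Replica` fields, the lag threshold, and `pick_failover_target` are hypothetical and not tied to any specific replication product.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Replica:
    name: str
    healthy: bool
    lag_seconds: float  # how far this replica trails the primary


def pick_failover_target(
    replicas: List[Replica], max_lag_seconds: float
) -> Optional[Replica]:
    """Return the healthy replica with the least lag, or None if none qualify."""
    candidates = [
        r for r in replicas if r.healthy and r.lag_seconds <= max_lag_seconds
    ]
    return min(candidates, key=lambda r: r.lag_seconds, default=None)


replicas = [
    Replica("dc2-replica-a", healthy=True, lag_seconds=4.0),
    Replica("dc2-replica-b", healthy=True, lag_seconds=0.5),
    Replica("dc2-replica-c", healthy=False, lag_seconds=0.1),
]
target = pick_failover_target(replicas, max_lag_seconds=5.0)
```

Returning `None` when no replica is within the lag budget forces an explicit decision, such as restoring from backup, instead of silently promoting a stale copy.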

Q 27. How do you manage database user accounts and permissions?

Managing database user accounts and permissions is crucial for security and data integrity. This involves creating user accounts, assigning roles, granting appropriate permissions, and regularly reviewing access rights. The goal is to implement the principle of least privilege – granting users only the access necessary to perform their tasks.

In my work, I utilize role-based access control (RBAC) to streamline user management and ensure consistency. I define specific roles (e.g., ‘data analyst’, ‘application developer’) with associated permissions. Users are then assigned to these roles, simplifying the management of permissions. Regular audits and reviews of user access are also conducted to identify any unnecessary or potentially risky privileges. Password policies and strong authentication measures are a critical component of user account management.
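The role-based model can be sketched as two small mappings, one from roles to permissions and one from users to roles. The role names, users, and `table:action` permission strings below are hypothetical. Real databases express the same idea with `CREATE ROLE` and `GRANT` statements.

```python
# Hypothetical roles, users, and permission strings, for illustration only.
ROLE_PERMISSIONS = {
    "data_analyst": {"sales:select", "customers:select"},
    "application_developer": {"sales:select", "sales:insert", "sales:update"},
}
USER_ROLES = {
    "alice": {"data_analyst"},
    "bob": {"application_developer"},
}


def permissions_for(user: str) -> set:
    """Union of the permissions granted by every role the user holds."""
    perms = set()
    for role in USER_ROLES.get(user, set()):
        perms |= ROLE_PERMISSIONS.get(role, set())
    return perms


def is_allowed(user: str, table: str, action: str) -> bool:
    """Least privilege: deny unless some role explicitly grants the action."""
    return f"{table}:{action}" in permissions_for(user)


def audit_excess(user: str, needed: set) -> set:
    """Permissions the user holds beyond what their tasks actually need."""
    return permissions_for(user) - needed
```

For example, `audit_excess("bob", {"sales:select", "sales:insert"})` flags `sales:update` as a privilege to review, which is the kind of finding a periodic access audit is meant to surface.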

Q 28. Explain your experience with data warehousing and business intelligence tools.

Data warehousing and business intelligence (BI) tools are used for storing and analyzing large amounts of data to support business decision-making. Data warehousing involves extracting, transforming, and loading (ETL) data from various sources into a centralized data warehouse. BI tools then provide interactive dashboards, reports, and analytical capabilities for accessing and visualizing this data. Popular tools include Microsoft Power BI, Tableau, and Qlik Sense.

I’ve worked extensively with data warehousing projects, designing and implementing ETL processes using tools like Informatica PowerCenter. I have experience building dimensional models, optimizing data warehouse performance, and creating interactive dashboards using BI tools to present key performance indicators (KPIs) and actionable insights to business users. For instance, I created a data warehouse and BI solution for a retail company that enabled them to track sales trends, customer behavior, and inventory levels, allowing for more data-driven decision-making.
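A dimensional model and its ETL load can be illustrated on a very small scale. The sketch below uses SQLite and made-up retail rows; the table names, columns, and `dimension_key` helper are assumptions for the example and are unrelated to Informatica PowerCenter. It loads raw sales into two dimension tables and one fact table, then runs the sort of aggregate a dashboard would display.

```python
import sqlite3

# Hypothetical source rows: (sale_date, product, store, quantity, unit_price).
RAW_SALES = [
    ("2024-01-05", "widget", "north", 3, 2.50),
    ("2024-01-05", "gadget", "north", 1, 10.00),
    ("2024-02-11", "widget", "south", 4, 2.50),
]

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, name TEXT UNIQUE);
    CREATE TABLE dim_store (store_id INTEGER PRIMARY KEY, name TEXT UNIQUE);
    CREATE TABLE fact_sales (
        sale_date TEXT,
        product_id INTEGER REFERENCES dim_product (product_id),
        store_id INTEGER REFERENCES dim_store (store_id),
        quantity INTEGER,
        revenue REAL
    );
    """
)


def dimension_key(conn, table, key_column, name):
    """Insert the dimension member if it is new, then return its surrogate key."""
    # Table and column names come from trusted constants, never from user input.
    conn.execute(f"INSERT OR IGNORE INTO {table} (name) VALUES (?)", (name,))
    row = conn.execute(
        f"SELECT {key_column} FROM {table} WHERE name = ?", (name,)
    ).fetchone()
    return row[0]


# Transform each raw row (compute revenue) and load it into the fact table.
for sale_date, product, store, quantity, unit_price in RAW_SALES:
    conn.execute(
        "INSERT INTO fact_sales VALUES (?, ?, ?, ?, ?)",
        (
            sale_date,
            dimension_key(conn, "dim_product", "product_id", product),
            dimension_key(conn, "dim_store", "store_id", store),
            quantity,
            quantity * unit_price,
        ),
    )

revenue_by_product = dict(
    conn.execute(
        "SELECT p.name, SUM(f.revenue) FROM fact_sales f "
        "JOIN dim_product p USING (product_id) GROUP BY p.name"
    )
)
```

Keeping descriptive attributes in the dimension tables and numeric measures in the fact table is what lets BI tools slice the same facts by product, store, or date without changing the load process.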

Key Topics to Learn for Database Administration Tools Interview

- Relational Database Management Systems (RDBMS): Understanding concepts like normalization and ACID properties, and how the relational model compares with NoSQL alternatives.

- SQL Proficiency: Mastering DDL, DML, DCL commands, writing efficient queries, and optimizing database performance using indexing and query tuning.

- Database Design and Modeling: Creating efficient and scalable database schemas, understanding Entity-Relationship Diagrams (ERDs), and choosing appropriate data types.

- Backup and Recovery Strategies: Implementing robust backup and recovery plans, understanding point-in-time recovery, and dealing with data corruption scenarios.

- Performance Monitoring and Tuning: Utilizing monitoring tools to identify performance bottlenecks, optimizing query execution plans, and implementing strategies to enhance database efficiency.

- Security and Access Control: Implementing user roles and permissions, securing database connections, and applying security best practices to prevent unauthorized access.

- High Availability and Disaster Recovery: Understanding concepts like failover clusters, replication, and implementing disaster recovery strategies to ensure business continuity.

- Cloud-Based Database Services (AWS RDS, Azure SQL, Google Cloud SQL): Familiarity with cloud-based database solutions and their administration.

- Troubleshooting and Problem Solving: Developing analytical skills to diagnose and resolve database-related issues efficiently.

- Specific Tool Expertise: Deep dive into specific tools you’ve worked with (e.g., pgAdmin for PostgreSQL, SQL Server Management Studio, MySQL Workbench). Highlight your practical experience and accomplishments.

Next Steps

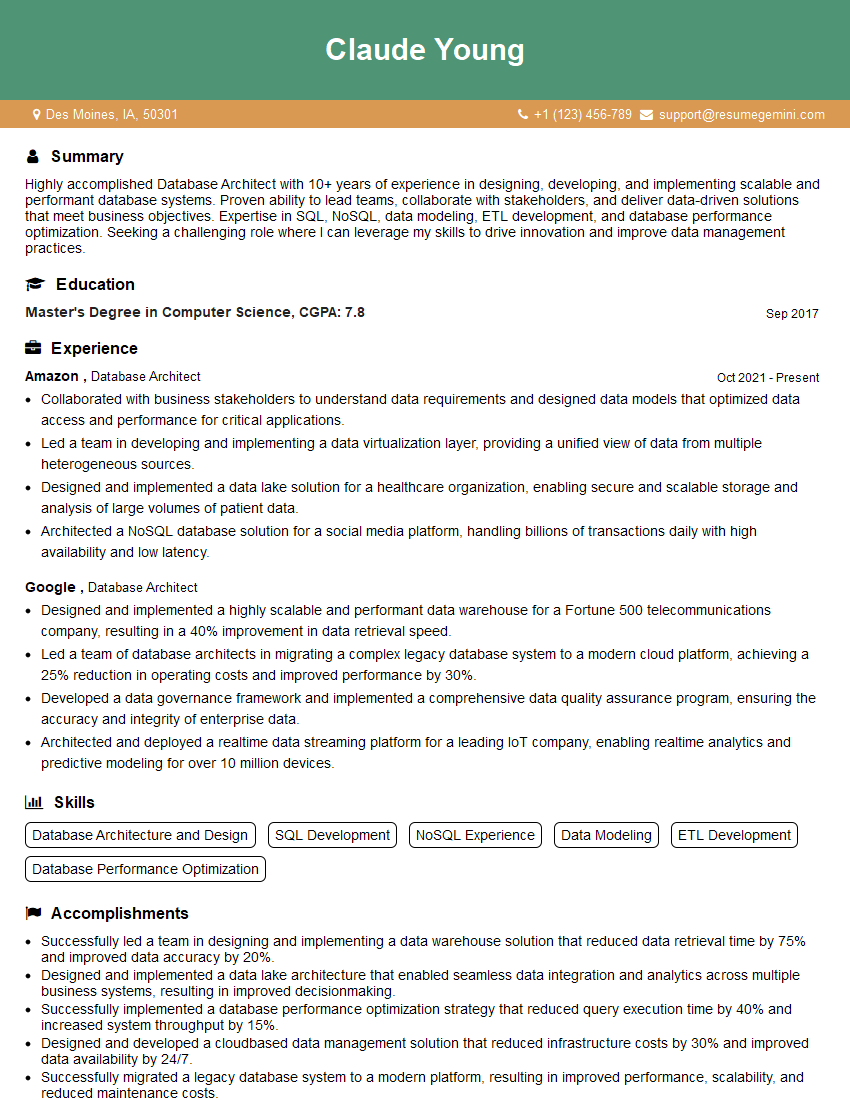

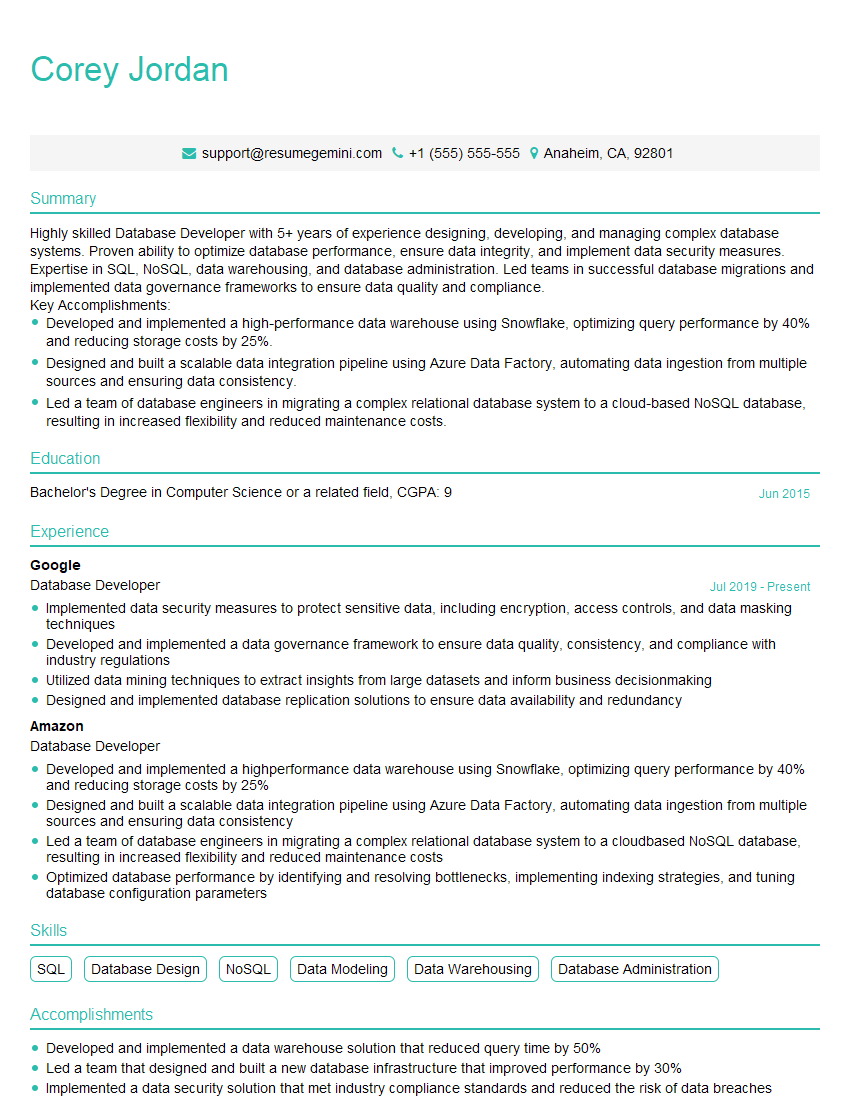

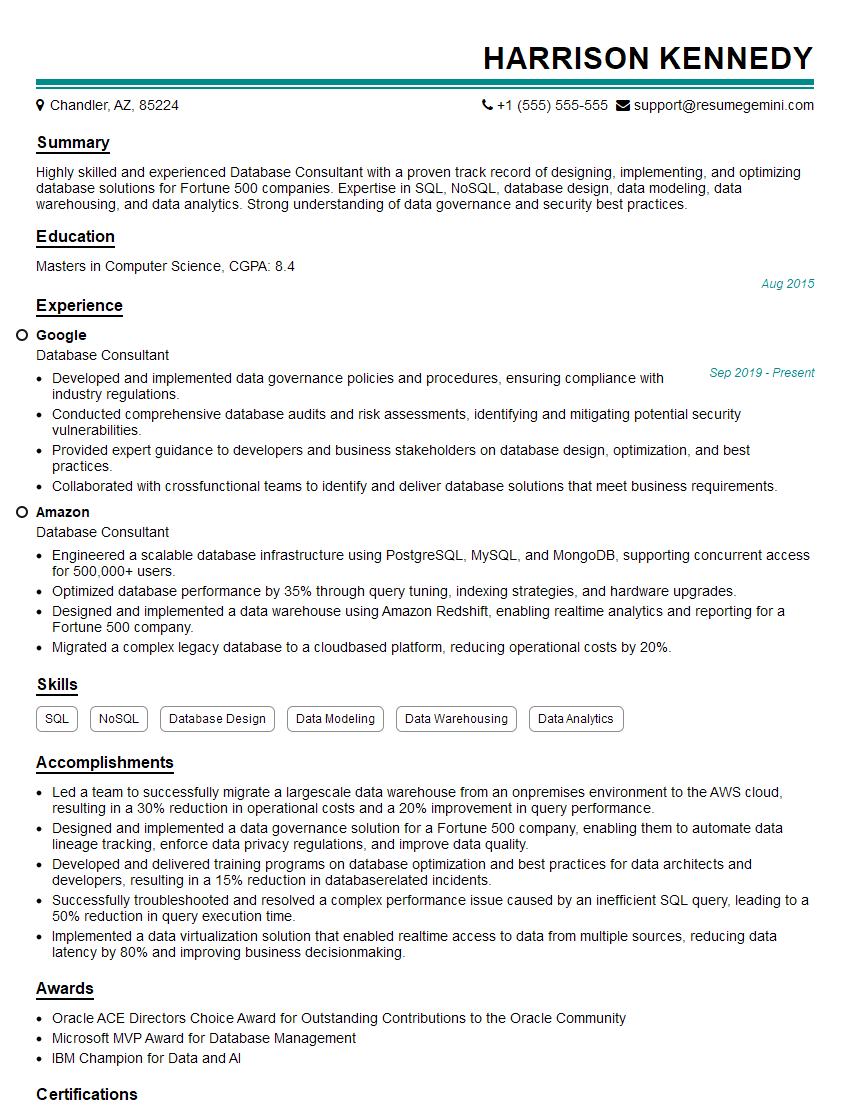

Mastering Database Administration Tools is crucial for career advancement in today’s data-driven world. Proficiency in these areas significantly increases your marketability and opens doors to exciting opportunities with higher earning potential. To maximize your job prospects, invest time in creating a compelling and ATS-friendly resume that effectively showcases your skills and experience. ResumeGemini is a trusted resource that can help you build a professional resume tailored to the Database Administration field. We provide examples of resumes specifically designed for Database Administration Tools roles to help you get started. Take control of your career future – start building that winning resume today!
