Interviews are more than just a Q&A session—they’re a chance to prove your worth. This blog dives into essential Database Management (e.g., MySQL, SQL Server) interview questions and expert tips to help you align your answers with what hiring managers are looking for. Start preparing to shine!

Questions Asked in Database Management (e.g., MySQL, SQL Server) Interview

Q 1. Explain the difference between clustered and non-clustered indexes.

Clustered and non-clustered indexes are both ways to speed up data retrieval in a database, but they differ fundamentally in how they organize data.

A clustered index determines the physical order in which the table's rows are stored, based on the indexed column(s). Think of it like organizing books alphabetically on a shelf; the physical order of the books reflects the alphabetical order of their titles. Only one clustered index can exist per table because the rows can be stored in only one order. Lookups and range scans on the clustered index column(s) are fast because the lowest level of the index holds the rows themselves, so no second lookup is needed.

A non-clustered index, on the other hand, is a separate structure that stores the indexed values together with a pointer to the actual data rows in the table. It's like having a separate index card catalog for your library: each card points to the shelf where a book is located. You can have multiple non-clustered indexes on a single table, each indexing different columns, allowing for quick lookups on various fields. Accessing data through a non-clustered index usually requires an extra step: first find the pointer in the index, then retrieve the row using that pointer. That extra step makes it somewhat slower than a clustered index lookup, unless the index already contains every column the query needs (a covering index).

Example: Imagine a table of customer data with columns like CustomerID (primary key), Name, Address, and City. If CustomerID has a clustered index, the rows will be physically sorted by CustomerID. A non-clustered index on City would create a separate structure allowing quick retrieval of all customers from a specific city.
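
The two access paths can be sketched with Python's built-in sqlite3 module. This is a stand-in, not MySQL or SQL Server: in SQLite, an INTEGER PRIMARY KEY is the key of the table's own B-tree, which plays a role similar to a clustered index, while CREATE INDEX builds a separate structure. The index name and sample rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE Customers ("
    " CustomerID INTEGER PRIMARY KEY,"  # rows are stored in CustomerID order
    " Name TEXT, Address TEXT, City TEXT)"
)
# Separate structure that maps City values back to the rows (hypothetical name).
conn.execute("CREATE INDEX idx_customers_city ON Customers (City)")
conn.executemany(
    "INSERT INTO Customers VALUES (?, ?, ?, ?)",
    [(1, "Ana", "1 Rue A", "Lyon"), (2, "Ben", "2 Gate B", "Oslo"), (3, "Cy", "3 Rue C", "Lyon")],
)


def plan(sql):
    """Return the plan descriptions SQLite reports for a query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[3] for row in rows)


pk_plan = plan("SELECT * FROM Customers WHERE CustomerID = 2")
city_plan = plan("SELECT * FROM Customers WHERE City = 'Lyon'")
print(pk_plan)    # lookup through the table's own key
print(city_plan)  # lookup through the separate index on City
```

The exact plan wording differs between SQLite versions, but the first plan should mention the primary key and the second should name the secondary index.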

Q 2. What are ACID properties in a database transaction?

ACID properties are crucial for ensuring data integrity and reliability in database transactions. They are:

- Atomicity: A transaction is treated as a single, indivisible unit of work. Either all changes within the transaction are successfully committed, or none are. It’s like an all-or-nothing approach. If a power outage happens mid-transaction, the database will automatically roll back any changes made so far.

- Consistency: A transaction must maintain the database’s integrity constraints. It starts in a valid state, performs operations, and ends in a valid state. For example, if a transaction transfers money between two accounts, the total amount of money in both accounts should remain the same after the transaction.

- Isolation: Multiple concurrent transactions appear to be executed sequentially. Each transaction operates as if it were the only transaction being executed, preventing interference between transactions (e.g., dirty reads, lost updates, non-repeatable reads).

- Durability: Once a transaction is committed, the changes are permanently saved, even in case of a system failure. The data is written to persistent storage (like disk) to ensure it survives crashes or power outages.

These properties ensure data consistency and reliability even when many users or applications are simultaneously accessing and modifying data. Without ACID properties, data corruption and inconsistencies could easily occur.
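
Atomicity and consistency can be seen in a small transfer example. The sketch below uses Python's built-in sqlite3 module as a stand-in database; the accounts table, the CHECK constraint, and the transfer function are assumptions made for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts ("
    " id INTEGER PRIMARY KEY,"
    " balance INTEGER NOT NULL CHECK (balance >= 0))"  # consistency rule
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 50)])
conn.commit()


def transfer(conn, src, dst, amount):
    """Move money between accounts; either both updates apply or neither does."""
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst)
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src)
            )
        return True
    except sqlite3.IntegrityError:
        return False


def balances(conn):
    return conn.execute("SELECT id, balance FROM accounts ORDER BY id").fetchall()


ok = transfer(conn, 1, 2, 30)        # succeeds
rejected = transfer(conn, 1, 2, 500)  # debit would go negative, so the credit is undone too
print(ok, rejected, balances(conn))
```

In the second call the credit to account 2 has already run when the debit violates the CHECK constraint; the rollback removes it, so the total across both accounts is unchanged.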

Q 3. Describe different types of database joins (INNER, LEFT, RIGHT, FULL OUTER).

Database joins combine rows from two or more tables based on a related column between them. Different types of joins provide different ways to handle rows that don’t have matches in the other table:

- INNER JOIN: Returns only the rows where the join condition is met in both tables. Think of it like finding the intersection of two sets. Rows that don’t have a match in the other table are excluded.

- LEFT (OUTER) JOIN: Returns all rows from the left table (the one specified before LEFT JOIN), even if there’s no match in the right table. For rows without a match in the right table, the columns from the right table will contain NULL values.

- RIGHT (OUTER) JOIN: Returns all rows from the right table (the one specified after RIGHT JOIN), even if there’s no match in the left table. For rows without a match in the left table, the columns from the left table will contain NULL values.

- FULL (OUTER) JOIN: Returns all rows from both tables. If a row has a match in the other table, the corresponding columns are populated; otherwise, NULL values are used for the unmatched columns. It’s like combining the results of both LEFT JOIN and RIGHT JOIN.

Example (SQL): Let’s say we have tables Customers and Orders. An INNER JOIN would only return customers who have placed orders, a LEFT JOIN would return all customers and their orders (or NULL if no orders), and a FULL OUTER JOIN would return all customers and all orders, including customers without orders and orders without a matching customer. Note that MySQL does not support FULL OUTER JOIN; there it is usually emulated by combining a LEFT JOIN and a RIGHT JOIN with UNION.
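
A runnable sketch of these joins, using Python's built-in sqlite3 module as a stand-in database. The sample rows are hypothetical, and the full outer join is emulated with UNION so the example does not depend on engine support for FULL OUTER JOIN.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Customers (CustomerID INTEGER PRIMARY KEY, Name TEXT);
    CREATE TABLE Orders (OrderID INTEGER PRIMARY KEY, CustomerID INTEGER);
    INSERT INTO Customers VALUES (1, 'Ana'), (2, 'Ben');
    -- Order 11 points at a customer that does not exist
    INSERT INTO Orders VALUES (10, 1), (11, 3);
""")

inner = conn.execute("""
    SELECT c.Name, o.OrderID
    FROM Customers c
    INNER JOIN Orders o ON o.CustomerID = c.CustomerID
    ORDER BY o.OrderID
""").fetchall()

left = conn.execute("""
    SELECT c.Name, o.OrderID
    FROM Customers c
    LEFT JOIN Orders o ON o.CustomerID = c.CustomerID
    ORDER BY c.CustomerID
""").fetchall()

# Emulated FULL OUTER JOIN: customers with their orders, plus orders with their customers.
full = set(conn.execute("""
    SELECT c.Name, o.OrderID
    FROM Customers c
    LEFT JOIN Orders o ON o.CustomerID = c.CustomerID
    UNION
    SELECT c.Name, o.OrderID
    FROM Orders o
    LEFT JOIN Customers c ON o.CustomerID = c.CustomerID
""").fetchall())

print(inner)  # only the matched pair
print(left)   # every customer; Ben has no order, so OrderID is None
print(full)   # matched pair, unmatched customer, unmatched order
```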

Q 4. How do you optimize database queries for performance?

Optimizing database queries is crucial for application performance. Here are key strategies:

- Indexing: Create indexes on frequently queried columns to speed up data retrieval. Indexes are like the index in a book; they allow the database to quickly locate specific rows without scanning the entire table.

- Query Optimization Techniques: Use EXPLAIN (in MySQL) or similar tools to analyze the query execution plan. Identify bottlenecks (e.g., full table scans) and rewrite the query to use indexes effectively. For example, avoid wrapping indexed columns in functions within WHERE clauses if possible, as this can prevent index usage.

- Proper Data Types: Choose appropriate data types for columns; using smaller data types can reduce storage space and improve performance.

- Database Caching: Utilize database caching mechanisms to store frequently accessed data in memory for faster retrieval. This reduces the need to access the disk frequently.

- Database Connection Pooling: Reuse database connections instead of creating new ones for each request; this reduces overhead and improves application speed.

- Proper Schema Design: Carefully design database tables and relationships to reduce data redundancy and improve query efficiency. Normalization helps achieve this.

- Avoid Using SELECT *: Only select the columns you actually need, rather than selecting all columns using SELECT *; this reduces the amount of data transferred.

- Load Testing and Profiling: Perform load testing to identify performance bottlenecks under realistic conditions, then use profiling tools to analyze query performance in detail.

Example: If you have a query that frequently filters by a specific column, creating an index on that column can drastically improve performance. Analyzing slow queries using EXPLAIN can help identify areas for improvement.
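
The effect of a function in the WHERE clause can be checked with a query plan. The sketch below uses Python's built-in sqlite3 module and SQLite's EXPLAIN QUERY PLAN as a stand-in for MySQL's EXPLAIN; the table and index names are hypothetical. Both queries find names starting with 'Ana', but only the second leaves the indexed column bare.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE Customers (CustomerID INTEGER PRIMARY KEY, Name TEXT, City TEXT)"
)
conn.execute("CREATE INDEX idx_customers_name ON Customers (Name)")


def plan(sql):
    """Return the plan descriptions SQLite reports for a query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[3] for row in rows)


# The column is wrapped in a function, so the index on Name cannot be searched.
wrapped = plan("SELECT * FROM Customers WHERE substr(Name, 1, 3) = 'Ana'")

# Same prefix match written as a range on the bare column.
direct = plan("SELECT * FROM Customers WHERE Name >= 'Ana' AND Name < 'Anb'")

print(wrapped)  # expected to be a scan of the whole table
print(direct)   # expected to be a search using idx_customers_name
```

The range rewrite assumes plain byte-wise string comparison, which is SQLite's default; other collations may need a different upper bound.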

Q 5. What are the different types of database normalization?

Database normalization is a process of organizing data to reduce redundancy and improve data integrity. Several normal forms exist, each addressing specific types of redundancy:

- First Normal Form (1NF): Eliminate repeating groups of data within a table. Each column should contain only atomic values (single values).

- Second Normal Form (2NF): Be in 1NF and eliminate redundant data that depends on only part of the primary key (in tables with composite keys).

- Third Normal Form (3NF): Be in 2NF and eliminate columns that depend on non-key columns (transitive dependency).

- Boyce-Codd Normal Form (BCNF): A stricter version of 3NF, addressing certain anomalies that 3NF might not handle.

Example: Consider a table with customer orders. A non-normalized table might have repeating order details in the same row. Normalizing this would involve creating separate tables for customers and their individual orders, linking them using a foreign key. This eliminates redundancy and simplifies data updates and modifications.

The higher the normal form, the less redundancy, but sometimes higher normal forms can result in more complex queries, requiring a trade-off between data integrity and query performance. Choosing the appropriate normal form depends on the specific needs of the application.
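
To make the redundancy concrete, the sketch below stores the same three orders twice: once in a flat table and once split into customers and orders. It uses Python's built-in sqlite3 module; all table names, columns, and rows are hypothetical. Changing a customer's city touches one row per order in the flat layout but a single row in the normalized one.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Unnormalized: customer details repeat on every order row
    CREATE TABLE orders_flat (
        order_id INTEGER PRIMARY KEY,
        customer_name TEXT,
        customer_city TEXT,
        item TEXT
    );
    INSERT INTO orders_flat VALUES
        (1, 'Ana', 'Lyon', 'Keyboard'),
        (2, 'Ana', 'Lyon', 'Mouse'),
        (3, 'Ben', 'Oslo', 'Monitor');

    -- Normalized: customer details live in one place, orders reference them
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT, city TEXT);
    CREATE TABLE orders (
        order_id INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customers (customer_id),
        item TEXT
    );
    INSERT INTO customers VALUES (1, 'Ana', 'Lyon'), (2, 'Ben', 'Oslo');
    INSERT INTO orders VALUES (1, 1, 'Keyboard'), (2, 1, 'Mouse'), (3, 2, 'Monitor');
""")

# Ana moves to Paris: count how many rows each design has to change.
flat_rows_touched = conn.execute(
    "UPDATE orders_flat SET customer_city = 'Paris' WHERE customer_name = 'Ana'"
).rowcount
normalized_rows_touched = conn.execute(
    "UPDATE customers SET city = 'Paris' WHERE name = 'Ana'"
).rowcount

print(flat_rows_touched, normalized_rows_touched)
```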

Q 6. Explain the concept of database sharding.

Database sharding is a technique of horizontally partitioning a large database across multiple database servers. Imagine splitting a large dictionary into multiple smaller dictionaries, each covering a part of the alphabet. Each shard contains a subset of the total data, improving scalability and performance.

This is particularly useful for very large databases that cannot fit on a single server or when the data access needs are spread across different geographical regions. Each shard is typically independent and can be managed separately. Sharding improves read and write performance by distributing the load across multiple servers.

Challenges: Properly distributing data across shards (sharding key selection) is crucial. Managing transactions spanning multiple shards and ensuring data consistency across them is complex. Queries that involve data across multiple shards require special handling (query routing and aggregation).
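
A minimal sketch of hash-based routing on a sharding key, written in plain Python with dictionaries standing in for database servers. The shard count, the choice of customer ID as the sharding key, and the function names are assumptions for illustration only.

```python
import hashlib

SHARD_COUNT = 4  # hypothetical number of database servers


def shard_for(key, shard_count=SHARD_COUNT):
    """Map a sharding key to a shard number using a stable hash."""
    digest = hashlib.sha256(str(key).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


# Each dictionary stands in for one independent database server.
shards = {i: {} for i in range(SHARD_COUNT)}


def put(customer_id, record):
    shards[shard_for(customer_id)][customer_id] = record


def get(customer_id):
    # A single-key lookup only needs to contact one shard.
    return shards[shard_for(customer_id)].get(customer_id)


for cid in range(1, 101):
    put(cid, {"name": f"customer-{cid}"})

print(get(42))
print({shard: len(rows) for shard, rows in shards.items()})
```

Simple modulo routing like this forces most keys to move when the shard count changes, which is one reason sharding key and routing design need care up front; consistent hashing is a common alternative.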

Q 7. How do you handle database concurrency issues?

Database concurrency issues arise when multiple users or applications access and modify the same data simultaneously. This can lead to data corruption or inconsistencies. Several techniques help manage concurrency:

- Locking: Database systems use locks to prevent multiple transactions from modifying the same data concurrently. Different types of locks exist, including shared locks (several transactions may read the data at once) and exclusive locks (one transaction may read and write the data while others wait). Appropriate lock selection is crucial for balancing concurrency and data integrity.

- Transactions: Using transactions with ACID properties guarantees that changes made by a transaction are atomic and consistent, even if other transactions are running concurrently.

- Optimistic Locking: This approach checks for conflicts before committing a transaction. It assumes that conflicts are rare and avoids using locks until a conflict is detected (e.g., checking a version number before updating a row).

- Pessimistic Locking: This approach uses locks to prevent conflicts proactively. It is suitable in situations where concurrency conflicts are frequent.

Choosing the right concurrency control mechanism depends on the application’s requirements and the frequency of concurrent access. A well-designed database schema and appropriate locking strategies are essential for preventing data corruption and ensuring data integrity in concurrent environments.
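
Optimistic locking with a version number can be sketched as follows, using Python's built-in sqlite3 module as a stand-in database. The products table, its version column, and the helper functions are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE products (id INTEGER PRIMARY KEY, stock INTEGER, version INTEGER)"
)
conn.execute("INSERT INTO products VALUES (1, 10, 1)")
conn.commit()


def read_product(conn, product_id):
    return conn.execute(
        "SELECT stock, version FROM products WHERE id = ?", (product_id,)
    ).fetchone()


def update_stock(conn, product_id, new_stock, expected_version):
    """Apply the update only if nobody changed the row since it was read."""
    with conn:
        cur = conn.execute(
            "UPDATE products SET stock = ?, version = version + 1 "
            "WHERE id = ? AND version = ?",
            (new_stock, product_id, expected_version),
        )
    return cur.rowcount == 1  # 0 rows means the version was stale


# Two clients read the same row before either writes.
stock_a, version_a = read_product(conn, 1)
stock_b, version_b = read_product(conn, 1)

first = update_stock(conn, 1, stock_a - 1, version_a)   # wins
second = update_stock(conn, 1, stock_b - 2, version_b)  # stale version, rejected

print(first, second, read_product(conn, 1))
```

The rejected client would normally re-read the row and retry, which is cheap when conflicts are rare.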

Q 8. What are stored procedures and how are they beneficial?

Stored procedures are named blocks of SQL code that are stored in the database and invoked by name. Think of them as functions or subroutines for your database. They encapsulate a set of SQL statements, improving code reusability and maintainability.

Benefits:

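- Improved Performance: Many database systems cache the execution plan of a stored procedure and reuse it on later calls, so repeated executions avoid re-parsing and re-optimizing every statement. How much this helps depends on the engine, and the gain is often smaller than the savings from fewer network round trips.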

- Enhanced Security: Stored procedures provide a layer of security by allowing you to grant access to the procedure itself, rather than granting direct access to underlying database tables. This helps prevent unauthorized data modification.

- Reduced Network Traffic: A single call to a stored procedure can replace multiple round trips between the application and the database, reducing network overhead.

- Code Reusability: Stored procedures can be called from multiple applications or parts of an application, reducing code duplication and making maintenance easier.

- Data Integrity: Transactions within a stored procedure guarantee atomicity – all operations either succeed or fail as a unit, maintaining data consistency.

Example (MySQL):

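DELIMITER //

CREATE PROCEDURE GetCustomersByName(IN p_name VARCHAR(255))

BEGIN

-- p_name is prefixed so it cannot be confused with the Name column

SELECT * FROM Customers WHERE Name LIKE CONCAT('%', p_name, '%');

END //

DELIMITER ;

This procedure retrieves customers whose name contains the given text. The parameter is called p_name rather than name because, inside a MySQL routine, a parameter takes precedence over a column with the same name, which would make the condition match every non-NULL row. Because the pattern starts with a wildcard, a regular index on Name cannot be used for the search.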

Q 9. Explain the difference between DELETE and TRUNCATE commands.

Both DELETE and TRUNCATE commands remove data from a table, but they differ significantly in their behavior and implications:

DELETE: This command removes rows that match a specified condition (WHERE clause), or every row if no condition is given. It's a DML (Data Manipulation Language) command: each row deletion is logged individually, and the operation can be rolled back inside a transaction.

TRUNCATE: This command removes all rows from a table by deallocating its data pages instead of logging individual row deletions, which makes it much faster than DELETE on large tables. It's classified as a DDL (Data Definition Language) command. Rollback behavior depends on the system: in MySQL, TRUNCATE causes an implicit commit and cannot be rolled back, while in SQL Server it can be rolled back when it runs inside an explicit transaction.

Key Differences Summarized:

| Feature | DELETE | TRUNCATE |
|---|---|---|
| Speed | Slower on large tables | Faster |
| Logging | Each row deletion is logged | Only page deallocations are logged |
| Rollback | Possible | Depends on the system (not in MySQL; possible inside a transaction in SQL Server) |
| WHERE clause | Supported | Not supported |
| Data page deallocation | No | Yes |

Example:

DELETE FROM Orders WHERE order_date < '2023-01-01'; -- Deletes specific rows

TRUNCATE TABLE Orders; -- Deletes all rows

Q 10. How do you troubleshoot database performance issues?

Troubleshooting database performance issues requires a systematic approach. Here's a breakdown:

- Identify the Problem: Start by pinpointing the slowness. Is it specific queries, overall system performance, or something else? Use tools like query analyzers (e.g., SQL Server Profiler, MySQL slow query log) to identify bottlenecks.

- Analyze Query Performance: Examine slow queries. Are there missing indexes? Are there table scans instead of index seeks? Use execution plans to understand how the database is processing queries.

- Check Resource Usage: Monitor CPU, memory, and disk I/O usage. High resource consumption can indicate bottlenecks. Check for memory leaks, excessive disk activity, or CPU saturation.

- Review Database Design: Inefficient database design (poor normalization, lack of appropriate indexes, etc.) can severely impact performance. Analyze table structures, relationships, and data types. Consider database optimization strategies, such as denormalization or partitioning.

- Examine Locking and Concurrency Issues: Long-running transactions or deadlocks (explained later) can cause significant performance degradation. Analyze locking patterns and transaction management.

- Optimize Indexes: Ensure appropriate indexes are present on frequently queried columns. However, too many indexes can also slow down write operations; there is a balance.

- Hardware Considerations: Inadequate hardware (CPU, RAM, disk speed) can be a major bottleneck. Assess your hardware resources and consider upgrades if necessary.

- Database Statistics: Keep your database statistics up-to-date. Outdated statistics can lead to suboptimal query plans.

- Connection Pooling: Using connection pooling reduces the overhead of establishing new database connections for each request.

- Caching: Implementing caching mechanisms can reduce database load by storing frequently accessed data in memory.

By systematically investigating these areas, you can effectively pinpoint and resolve database performance issues.

Q 11. What are triggers and how are they used?

Database triggers are stored procedures that automatically execute in response to certain events on a particular table or view. Imagine them as event listeners for your database. They run automatically without explicit calls.

Types of Triggers:

- BEFORE/AFTER Triggers: These triggers fire before or after a specific event (INSERT, UPDATE, DELETE).

- INSTEAD OF Triggers: Used with views to allow INSERT, UPDATE, or DELETE operations that would otherwise be restricted.

Uses:

- Auditing: Log changes to the database, recording who made the changes, when, and what was changed.

- Data Validation: Enforce constraints or rules on data before it's inserted or updated.

- Cascading Updates: Automatically update related tables when a change occurs in one.

- Maintaining Data Integrity: Ensure referential integrity and prevent inconsistent data.

Example (MySQL):

DELIMITER //

CREATE TRIGGER audit_customer_changes

BEFORE UPDATE ON Customers

FOR EACH ROW

BEGIN

INSERT INTO CustomerAudit (CustomerID, OldName, NewName, UpdatedBy, UpdatedAt)

VALUES (OLD.CustomerID, OLD.Name, NEW.Name, USER(), NOW());

END //

DELIMITER ;

This trigger logs changes to the 'Customers' table before an update occurs. It assumes a CustomerAudit table with the listed columns already exists.

Q 12. Explain different types of database backups and recovery strategies.

Database backup and recovery strategies are crucial for data protection. Here are common types:

- Full Backup: A complete copy of the entire database at a specific point in time. It's the foundation for other backup types.

- Differential Backup: A copy of only the data that has changed since the last full backup. Faster to take than a full backup; a restore needs the last full backup plus the most recent differential.

- Incremental Backup: A copy of the data that has changed since the last backup of any kind (full or incremental). Fastest to take, but a restore needs the full backup plus every incremental taken since then, applied in order.

- Transaction Log Backup (for systems like SQL Server): Backups of the transaction log, which records all database changes. Crucial for point-in-time recovery.

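Recovery Strategies (SQL Server calls these recovery models):

- Full Recovery: Every change is fully logged. To restore, apply the last full backup (plus the latest differential, if any) and then the subsequent transaction log backups. Offers granular point-in-time recovery.

- Bulk-Logged Recovery: Works like full recovery, except that bulk operations (such as bulk imports and index rebuilds) are only minimally logged. This keeps the log smaller and speeds up those operations, but point-in-time recovery is not available within a log backup that contains bulk-logged changes. It is typically used temporarily during large bulk loads.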

Choosing the right backup strategy depends on factors like the size of the database, recovery time objective (RTO), and recovery point objective (RPO).

Example Scenario: A company might use a full backup weekly, differential backups daily, and transaction log backups hourly to balance restoration speed with data loss minimization.
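
The restore order implied by such a schedule can be worked out mechanically. The sketch below is plain Python under simplifying assumptions: backup times are integer hours, the Backup class and restore_chain function are hypothetical, and the chain follows the full, then latest differential, then transaction log sequence described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backup:
    kind: str      # "full", "diff", or "log"
    taken_at: int  # hours since the start of the schedule (hypothetical unit)


def restore_chain(backups, target):
    """Return the backups to restore, in order, to reach the target time."""
    ordered = sorted(backups, key=lambda b: b.taken_at)

    fulls = [b for b in ordered if b.kind == "full" and b.taken_at <= target]
    if not fulls:
        raise ValueError("no full backup at or before the target time")
    base = fulls[-1]
    chain = [base]

    # The latest differential after that full backup replaces all earlier ones.
    diffs = [
        b for b in ordered
        if b.kind == "diff" and base.taken_at < b.taken_at <= target
    ]
    if diffs:
        base = diffs[-1]
        chain.append(base)

    # Log backups are applied in order until one covers the target time.
    for b in ordered:
        if b.kind == "log" and b.taken_at > base.taken_at:
            chain.append(b)
            if b.taken_at >= target:
                break
    return chain


schedule = [
    Backup("full", 0),
    Backup("diff", 24), Backup("log", 25),
    Backup("diff", 48), Backup("log", 49), Backup("log", 50), Backup("log", 51),
]
chain = restore_chain(schedule, target=50)
print([(b.kind, b.taken_at) for b in chain])
```

For a target at hour 50 the chain skips the older differential and the log backup taken before the newer differential, which is why differentials shorten restores.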

Q 13. How do you ensure database security?

Database security is paramount. Here's how to ensure it:

- User Access Control: Implement granular access control using roles and permissions. Grant only the necessary privileges to each user or group.

- Strong Passwords and Authentication: Enforce strong password policies and use multi-factor authentication (MFA) for enhanced security.

- Regular Security Audits: Conduct regular security assessments to identify vulnerabilities and potential threats.

- Data Encryption: Encrypt sensitive data both in transit (SSL/TLS) and at rest (database encryption).

- Network Security: Protect the database server from unauthorized network access using firewalls and intrusion detection/prevention systems.

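- Input Validation: Validate user input and pass it to the database through parameterized queries (prepared statements) rather than concatenating it into SQL strings; this is the primary defense against SQL injection. Never trust user-supplied data.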

- Stored Procedures: Use stored procedures to encapsulate database logic and control access to data.

- Regular Patching and Updates: Keep the database software, operating system, and related components up-to-date to mitigate known vulnerabilities.

- Principle of Least Privilege: Grant users only the minimum permissions needed to perform their tasks.

- Monitoring and Logging: Monitor database activity and log all access attempts, successful and unsuccessful, to detect suspicious behavior.

A layered security approach, combining multiple techniques, provides the most comprehensive protection.
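
The SQL injection risk mentioned in the list can be demonstrated in a few lines. The sketch below uses Python's built-in sqlite3 module; the users table and both lookup functions are hypothetical, and the unsafe version is shown only to illustrate the problem.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])


def find_user_unsafe(conn, name):
    # Vulnerable: the input becomes part of the SQL text itself.
    sql = f"SELECT id, name FROM users WHERE name = '{name}'"
    return conn.execute(sql).fetchall()


def find_user_safe(conn, name):
    # Parameterized: the input is sent as a value and never parsed as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (name,)
    ).fetchall()


payload = "x' OR '1'='1"

leaked = find_user_unsafe(conn, payload)  # condition becomes always true
blocked = find_user_safe(conn, payload)   # no user has this literal name

print(len(leaked), len(blocked))
print(find_user_safe(conn, "alice"))
```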

Q 14. What is a deadlock and how can you prevent it?

A deadlock occurs when two or more transactions are blocked indefinitely, waiting for each other to release the resources that they need. Imagine two people trying to walk through a narrow doorway at the same time – neither can proceed until the other moves.

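Causes: Deadlocks typically arise when transactions acquire locks on the same resources in different orders. For example, transaction A locks row 1 and waits for row 2, while transaction B locks row 2 and waits for row 1.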

Prevention Techniques:

- Careful Transaction Design: Access resources in a consistent order. If transactions always acquire locks in the same sequence, the probability of a deadlock is significantly reduced.

- Short Transactions: Keep transactions short to minimize the time resources are held.

- Lock Timeouts: Set timeouts on locks. If a transaction waits too long for a lock, it will be rolled back, breaking the deadlock.

- Deadlock Detection and Resolution: Database systems have mechanisms to detect deadlocks. When a deadlock is detected, one of the transactions is usually rolled back to break the deadlock.

- Avoid unnecessary locks: Only lock the resources absolutely required.

The choice of deadlock prevention method depends on the specific application and database system.
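
Consistent lock ordering can be illustrated outside the database with ordinary threads. The sketch below is plain Python using threading.Lock as a stand-in for row locks; the Account class and transfer function are hypothetical. Both transfers lock the lower account ID first, so two opposite transfers can never each hold the lock the other one needs.

```python
import threading


class Account:
    def __init__(self, account_id, balance):
        self.account_id = account_id
        self.balance = balance
        self.lock = threading.Lock()  # stands in for a row lock


def transfer(src, dst, amount):
    # Always lock the account with the lower ID first, whatever the direction.
    first, second = sorted((src, dst), key=lambda acct: acct.account_id)
    with first.lock, second.lock:
        src.balance -= amount
        dst.balance += amount


def worker(src, dst, times):
    for _ in range(times):
        transfer(src, dst, 1)


a = Account(1, 1000)
b = Account(2, 1000)

# Two threads move money in opposite directions at the same time.
t1 = threading.Thread(target=worker, args=(a, b, 500))
t2 = threading.Thread(target=worker, args=(b, a, 500))
t1.start()
t2.start()
t1.join()
t2.join()

print(a.balance, b.balance)
```

If each transfer locked the source account first instead, the two threads could each hold one lock and wait forever for the other.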

Q 15. What is indexing and why is it important?

Indexing in a database is like creating a detailed table of contents for a book. It significantly speeds up data retrieval by allowing the database to quickly locate specific rows without having to scan the entire dataset. Think of it as a shortcut. Instead of reading the whole book to find a specific word, you use the index to quickly locate the page.

Indexes are created on one or more columns of a table, and they work by storing the values of those columns along with the row locations. When a query is executed, the database engine first checks the index to see if it can find the requested data. If it finds a match, it directly retrieves the corresponding rows, resulting in much faster query execution.

Importance: Indexes are crucial for improving database performance, especially in large databases where retrieving data without indexes can be incredibly slow. They're essential for applications that require quick response times, like online transaction processing systems or search engines.

Example: Imagine a table with millions of customer records. Searching for a specific customer by their name without an index would take a very long time. However, if you create an index on the 'name' column, the database can quickly locate the customer record and return it almost instantaneously.

Q 16. Explain the concept of database replication.

Database replication is the process of copying data from one database (the primary or master) to one or more other databases (the replicas or slaves). This is like having a backup of your important files stored in a different location – in case something happens to the original, you have a copy.

Purpose: Replication is used for various reasons, including:

- High Availability: If the primary database fails, a replica can take over, ensuring continuous operation.

- Scalability: Distributing read operations across multiple replicas reduces the load on the primary database, improving performance and handling increased traffic.

- Geographic Distribution: Replicas can be placed in different geographic locations to reduce latency for users in those regions.

- Disaster Recovery: Replicas serve as a backup in case of a disaster at the primary site.

Types: There are different types of replication, including synchronous (the replica acknowledges data before the primary proceeds) and asynchronous (the replica updates later, potentially leading to a slight lag).

Example: A large e-commerce company might use database replication to distribute read traffic across multiple servers worldwide. This ensures fast response times for customers regardless of their location and also protects against database failures.

Q 17. What are views and how are they used?

A database view is a virtual table based on the result-set of an SQL statement. It doesn't physically store data, but instead presents a customized view of data from one or more underlying base tables. Think of it as a saved query or a custom report.

Uses:

- Data Security: Views can restrict access to sensitive data. You can create a view that only shows specific columns or rows, preventing users from seeing sensitive information.

- Data Simplification: Views can simplify complex queries by providing a simpler interface to the underlying data.

- Data Customization: Views allow you to present data in a specific format or with calculated columns, without altering the base tables.

- Maintainability: If the underlying table structure changes, you only need to update the view definition instead of modifying all queries that access that data.

Example: You could create a view that combines data from two tables – 'customers' and 'orders' – to show each customer's total order value. This simplifies reporting without needing to write complex joins every time you need this information.

CREATE VIEW CustomerTotalOrders AS

SELECT c.CustomerID, c.Name, SUM(o.OrderTotal) AS TotalSpent

FROM Customers c

JOIN Orders o ON c.CustomerID = o.CustomerID

GROUP BY c.CustomerID, c.Name;

Q 18. Describe your experience with database design and modeling.

My experience with database design and modeling spans several years and various projects. I'm proficient in using Entity-Relationship Diagrams (ERDs) to model databases and translate business requirements into a relational database structure. I use tools like Lucidchart and draw.io to create ERDs, which provide a visual representation of the entities (tables), their attributes (columns), and the relationships between them.

I always start by thoroughly understanding the business requirements, identifying the key entities involved, their attributes, and how they relate to each other. I consider data normalization principles (1NF, 2NF, 3NF, etc.) to minimize data redundancy and ensure data integrity. I also take into account performance considerations, including indexing strategies, and the potential for future growth.

For example, in a recent project for an online store, I designed a database with tables for products, customers, orders, and inventory. I carefully considered the relationships between these tables, such as one-to-many relationships between customers and orders, and many-to-many relationships between products and orders.

Beyond relational databases, I have experience with NoSQL databases, particularly in situations where the relational model doesn't adequately meet specific needs. For instance, I've used MongoDB for applications that required highly flexible schema and fast document retrieval.

Q 19. How familiar are you with different database monitoring tools?

I have experience with several database monitoring tools, each with its strengths and weaknesses. My familiarity extends to both open-source and commercial solutions.

Commercial Tools: I have used tools like Datadog, New Relic, and SolarWinds. These offer comprehensive dashboards for monitoring key database metrics like CPU usage, memory consumption, query performance, connection pool sizes and transaction counts, as well as providing alerting capabilities. These are great for larger organizations that require sophisticated monitoring and alerting.

Open-Source Tools: I'm also experienced with tools like Prometheus and Grafana. Prometheus is a powerful metrics monitoring system, and Grafana provides the interface for visualization and dashboard creation. These are excellent for cost-effective monitoring, particularly useful in smaller settings.

The choice of tool often depends on the size and complexity of the database system, the budget available, and the level of integration needed with other monitoring tools within the organization. I’m comfortable configuring and interpreting the data provided by any of these tools to identify performance bottlenecks and troubleshoot issues.

Q 20. What is a transaction log and its importance?

A transaction log is a file that records all database transactions. It's essentially a detailed history of all changes made to the database. Think of it as a detailed undo/redo log for the database.

Importance: The transaction log plays a critical role in:

- Data Recovery: In case of a database crash or failure, the transaction log is used to restore the database to a consistent state. This ensures that no data is lost and that the database can recover from unexpected outages.

- Data Integrity: The transaction log ensures data integrity by recording each transaction as an atomic unit. This means that either all changes in a transaction are applied successfully, or none are applied, maintaining consistency.

- Point-in-Time Recovery: The transaction log enables point-in-time recovery, allowing restoration of the database to a specific point in time before a failure.

Example: If a power outage interrupts a transaction halfway through, the transaction log will allow for the database to rollback the incomplete changes. The next time the database starts up, the recovery process will utilize the log to bring the database into a valid and consistent state.

Q 21. Explain the difference between primary key and foreign key.

Both primary keys and foreign keys are essential constraints used in relational databases to maintain data integrity, but they serve different purposes.

Primary Key: A primary key uniquely identifies each row in a table. It's like a unique ID or serial number for each record. A table can have only one primary key, and it cannot contain null values.

Foreign Key: A foreign key is a column (or set of columns) in one table that refers to the primary key of another table. It establishes a relationship between the two tables. Foreign keys help ensure referential integrity – ensuring that related data exists in both tables.

Example: Consider two tables: 'Customers' (with columns CustomerID, Name, Address) and 'Orders' (with columns OrderID, CustomerID, OrderDate, Total). 'CustomerID' is the primary key in the 'Customers' table and a foreign key in the 'Orders' table. This foreign key enforces referential integrity: you cannot have an order in the 'Orders' table without a corresponding customer in the 'Customers' table.

In essence, a primary key uniquely identifies a record within its own table, while a foreign key creates links between records in different tables.
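
The referential integrity rule from the example can be tried out directly. The sketch below uses Python's built-in sqlite3 module as a stand-in database; note that SQLite only enforces foreign keys after PRAGMA foreign_keys = ON, which is specific to SQLite. The sample rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite-specific: enable enforcement
conn.executescript("""
    CREATE TABLE Customers (
        CustomerID INTEGER PRIMARY KEY,
        Name TEXT NOT NULL
    );
    CREATE TABLE Orders (
        OrderID INTEGER PRIMARY KEY,
        CustomerID INTEGER NOT NULL REFERENCES Customers (CustomerID),
        Total REAL
    );
    INSERT INTO Customers VALUES (1, 'Ana');
""")

# An order for an existing customer is accepted.
conn.execute("INSERT INTO Orders VALUES (100, 1, 25.0)")

# An order for a customer that does not exist is rejected by the foreign key.
try:
    conn.execute("INSERT INTO Orders VALUES (101, 99, 10.0)")
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True

order_count = conn.execute("SELECT COUNT(*) FROM Orders").fetchone()[0]
print(orphan_rejected, order_count)
```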

Q 22. How do you handle data integrity issues?

Data integrity is paramount in database management. It ensures the accuracy, consistency, and reliability of your data. I handle integrity issues through a multi-pronged approach, focusing on prevention and remediation.

- Constraints: I leverage database constraints like UNIQUE, PRIMARY KEY, FOREIGN KEY, CHECK, and NOT NULL to enforce rules at the database level. For instance, a FOREIGN KEY constraint prevents orphaned records by ensuring that a reference in one table always points to a valid entry in another. This prevents accidental deletions or updates that violate referential integrity.

- Data Validation: Before data is inserted or updated, I rigorously validate it using stored procedures or triggers. This involves checks for data type, range, format, and business rules. For example, a trigger could prevent the insertion of a negative value into a column representing age.

- Regular Audits: I perform regular data audits to detect and correct inconsistencies or errors. This might involve comparing data against known good sources or using data profiling tools to identify anomalies. Early detection allows for swift correction before issues escalate.

- Transaction Management: Transactions are crucial for maintaining consistency. Using ACID properties (Atomicity, Consistency, Isolation, Durability), I ensure that database operations are atomic and consistent, even in the event of failures. This prevents partial updates and ensures data remains reliable.

- Data Cleansing: Periodically, I cleanse the data to correct existing inaccuracies, handle missing values, and eliminate duplicates. This might involve writing custom scripts or using data cleansing tools.

Imagine an e-commerce application: Constraints prevent customers from having duplicate email addresses, data validation ensures that product prices are positive, and regular audits catch any discrepancies in inventory numbers. A robust integrity approach is the foundation of a reliable and trustworthy database system.
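As a rough sketch of the e-commerce scenario, the snippet below shows constraints rejecting bad rows and a transaction rolling back a partial update. It assumes Python's built-in sqlite3 module in place of MySQL or SQL Server, and the table names, columns, and helpers (`rejected`, `place_order`) are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE customers (
        id    INTEGER PRIMARY KEY,
        email TEXT NOT NULL UNIQUE
    )""")
conn.execute("""
    CREATE TABLE products (
        id    INTEGER PRIMARY KEY,
        name  TEXT NOT NULL,
        price REAL NOT NULL CHECK (price > 0),
        stock INTEGER NOT NULL CHECK (stock >= 0)
    )""")
conn.execute("""
    CREATE TABLE order_lines (
        product_id INTEGER NOT NULL,
        quantity   INTEGER NOT NULL CHECK (quantity > 0)
    )""")


def rejected(conn, sql, params):
    """Return True if a constraint rejected the statement."""
    try:
        with conn:  # commits on success, rolls back on an exception
            conn.execute(sql, params)
        return False
    except sqlite3.IntegrityError:
        return True


def place_order(conn, product_id, quantity):
    """Record an order line and decrement stock as one atomic unit."""
    try:
        with conn:
            conn.execute("INSERT INTO order_lines VALUES (?, ?)", (product_id, quantity))
            # If stock would go negative, the CHECK fails and the insert above is undone too.
            conn.execute("UPDATE products SET stock = stock - ? WHERE id = ?", (quantity, product_id))
        return True
    except sqlite3.IntegrityError:
        return False


with conn:
    conn.execute("INSERT INTO customers (email) VALUES (?)", ("ada@example.com",))
    conn.execute("INSERT INTO products (name, price, stock) VALUES (?, ?, ?)", ("Widget", 9.99, 5))

duplicate_email_rejected = rejected(
    conn, "INSERT INTO customers (email) VALUES (?)", ("ada@example.com",))
negative_price_rejected = rejected(
    conn, "INSERT INTO products (name, price, stock) VALUES (?, ?, ?)", ("Gadget", -1.0, 3))

first_order_ok = place_order(conn, 1, 2)    # stock goes from 5 to 3
oversell_ok = place_order(conn, 1, 10)      # would leave stock at -7, so it is rolled back

stock_left = conn.execute("SELECT stock FROM products WHERE id = 1").fetchone()[0]
order_line_count = conn.execute("SELECT COUNT(*) FROM order_lines").fetchone()[0]
```

The failed order leaves no trace: the order line inserted just before the failing UPDATE disappears with the rollback, which is the atomicity guarantee in practice.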

Q 23. What are your experiences with database migration?

Database migration is a critical process I've undertaken many times. It involves moving data from one database system to another, or from one version of a database system to another. The process is complex and needs meticulous planning.

- Assessment: First, I thoroughly assess the source and target databases, comparing schemas, data types, and constraints. This helps identify any potential compatibility issues.

- Data Extraction: Next, I extract data from the source database using efficient methods like bulk export or change data capture (CDC). I optimize extraction for performance, especially with large datasets.

- Data Transformation: Often, data needs transformation to align with the target database schema. This might involve data type conversions, cleaning, or applying business rules. I usually use scripting languages like Python or SQL for this task.

- Data Loading: The transformed data is loaded into the target database. I choose the most efficient loading method based on the size and structure of the data. Strategies include bulk loading, batch processing, or using database utilities.

- Validation and Verification: After loading, I thoroughly validate and verify the data to ensure accuracy and completeness. This often involves comparison with the source database or running integrity checks.

- Testing: Thorough testing is paramount to ensure that applications and reports function correctly after migration. This involves testing application logic and querying migrated data.

In a recent project, I migrated a large e-commerce database from MySQL to a cloud-based PostgreSQL instance. The process involved careful planning, efficient data extraction using optimized SQL queries, and a phased approach to minimize downtime.
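The validation and verification step can be partly automated. The sketch below compares a row count and a content hash per table between source and target. It is only an illustration: it uses two in-memory SQLite databases through Python's sqlite3 module rather than real MySQL and PostgreSQL connections, and the `table_fingerprint` and `make_db` helpers and the `orders` table are hypothetical. In a real cross-engine migration, values would need to be normalized (for example decimals and timestamps) before hashing, because drivers may return different Python types.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table, key_column):
    """Return (row_count, sha256 hex digest) for a table, reading rows in key order."""
    digest = hashlib.sha256()
    count = 0
    # Identifiers cannot be bound as parameters; they are trusted constants in this sketch.
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key_column}"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def make_db(rows):
    """Build a throwaway database holding the given order rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()
    return conn


source_rows = [(1, "Ada", 10.0), (2, "Grace", 25.5), (3, "Linus", 7.25)]
source = make_db(source_rows)
target_good = make_db(source_rows)
# Same row count, but one value was altered during the (simulated) load.
target_bad = make_db([(1, "Ada", 10.0), (2, "Grace", 25.0), (3, "Linus", 7.25)])

source_print = table_fingerprint(source, "orders", "id")
good_matches = source_print == table_fingerprint(target_good, "orders", "id")
bad_matches = source_print == table_fingerprint(target_bad, "orders", "id")
```

A row count alone would have passed the corrupted target, which is why the content hash is included.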

Q 24. What experience do you have with NoSQL databases?

My experience with NoSQL databases, specifically MongoDB and Cassandra, complements my relational database skills. NoSQL databases are ideal for handling large volumes of unstructured or semi-structured data. I understand their strengths and weaknesses and when to apply them.

- Schema Flexibility: NoSQL databases offer schema flexibility, enabling quick adaptation to evolving data structures. This is advantageous in rapidly changing environments.

- Scalability: They excel at horizontal scaling, easily accommodating massive datasets and high traffic. This makes them suitable for applications like social media or IoT.

- Data Modeling: I'm proficient in various NoSQL data models, including document (MongoDB), key-value (Redis), graph (Neo4j), and column-family (Cassandra) models. Choosing the right model depends on the specific application needs.

- Querying: NoSQL querying differs from SQL. I understand the limitations and optimization strategies for NoSQL query languages like MongoDB's query language.

- Data Consistency: It's crucial to understand that NoSQL databases often sacrifice strong consistency for availability and scalability. I choose the appropriate consistency level based on application requirements.

For instance, I used MongoDB in a project involving user profiles and activity data, benefiting from its flexible schema and scalability to handle a large user base.

Q 25. How do you optimize large datasets for query performance?

Optimizing large datasets for query performance is crucial. It's not just about writing efficient queries, but also about database design and infrastructure. My approach includes several key strategies.

- Indexing: Proper indexing is paramount. I strategically create indexes on frequently queried columns to speed up data retrieval. The choice of index type (B-tree, hash, full-text) depends on the query patterns.

- Query Optimization: Writing efficient SQL queries is critical. This involves using appropriate join types, avoiding full table scans, and using functions effectively. Analyzing query execution plans helps identify performance bottlenecks.

- Database Tuning: This involves adjusting database parameters (buffer pool size, memory allocation) to optimize resource utilization and minimize I/O operations.

- Data Partitioning: For extremely large datasets, partitioning can improve query performance by distributing data across multiple physical units. This enables parallel processing and reduces query response times.

- Database Caching: Caching frequently accessed data in memory significantly reduces database load and improves query response times. Database systems often provide built-in caching mechanisms.

- Read Replicas: For read-heavy workloads, deploying read replicas can offload read operations from the primary database server, improving overall performance.

For example, in a project involving a large sales database, I implemented composite indexes on frequently used combinations of columns, resulting in a significant reduction in query response time. I also used database tuning to optimize buffer pool size, and implemented caching to improve read operations.
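The effect of a composite index can be checked by inspecting the query plan before and after creating it. The following is a small sketch, assuming Python's built-in sqlite3 module and SQLite's `EXPLAIN QUERY PLAN` (MySQL uses `EXPLAIN`, and SQL Server exposes execution plans through its own tooling). The `sales` table, its columns, and the index name are made up for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales (id INTEGER PRIMARY KEY, region TEXT, sale_date TEXT, amount REAL)")
regions = ("north", "south", "east", "west")
conn.executemany(
    "INSERT INTO sales (region, sale_date, amount) VALUES (?, ?, ?)",
    [(regions[i % 4], f"2024-01-{i % 28 + 1:02d}", float(i)) for i in range(1000)],
)
conn.commit()

QUERY = "SELECT SUM(amount) FROM sales WHERE region = ? AND sale_date >= ?"
PARAMS = ("north", "2024-01-15")


def plan_details(conn):
    """Return the human-readable plan steps SQLite reports for QUERY."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + QUERY, PARAMS).fetchall()
    return [row[3] for row in rows]  # the fourth column holds the description


plan_before = plan_details(conn)
result_before = conn.execute(QUERY, PARAMS).fetchone()[0]

# Equality column first, range column second, matching the WHERE clause.
conn.execute("CREATE INDEX idx_sales_region_date ON sales (region, sale_date)")

plan_after = plan_details(conn)
result_after = conn.execute(QUERY, PARAMS).fetchone()[0]
```

Printing `plan_before` and `plan_after` should show a full scan of `sales` turning into a search that uses `idx_sales_region_date`, while the query result stays the same. Column order matters here: placing the equality-filtered column before the range-filtered one lets the index narrow both conditions.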

Q 26. Describe your experience with database tuning and performance optimization.

Database tuning and performance optimization are iterative processes. I use a combination of tools and techniques to identify and resolve performance bottlenecks.

- Performance Monitoring: I use database monitoring tools to track key metrics such as CPU utilization, memory usage, I/O wait times, and query execution times. This helps identify areas for improvement.

- Query Analysis: I analyze query execution plans using tools like EXPLAIN PLAN (in Oracle) or SQL Server Profiler to identify slow-running queries and optimize them.

- Index Optimization: I regularly review existing indexes and add or remove them as needed, based on query patterns and data access patterns.

- Schema Optimization: This includes refining table structures, normalizing data, and using appropriate data types to minimize storage space and improve query performance.

- Hardware Optimization: For significant performance gains, it might be necessary to upgrade hardware such as adding more RAM or faster storage devices.

- Caching Strategies: I optimize the usage of caching mechanisms to store frequently accessed data in memory, thereby reducing database load.

In a previous role, I optimized a slow-performing reporting system by identifying and tuning poorly performing queries. This involved adding indexes, optimizing join conditions and rewriting several queries, resulting in a substantial improvement in report generation times.
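Part of an index review can be scripted. One common heuristic is to flag a non-unique index whose columns are a leading prefix of another index on the same table, since the longer index can usually serve the same lookups. The sketch below assumes Python's sqlite3 module and SQLite's `index_list` and `index_info` pragmas (MySQL and SQL Server expose similar metadata through their own catalog views). The `reports` table, its indexes, and both helper functions are hypothetical, and the output is a list of candidates to investigate rather than a list of indexes that are safe to drop.

```python
import sqlite3


def index_columns(conn, table):
    """Map each non-unique index on `table` to its ordered list of column names."""
    indexes = {}
    for row in conn.execute(f"PRAGMA index_list({table})").fetchall():
        name, is_unique = row[1], row[2]
        if is_unique:
            continue  # unique indexes enforce a constraint, so leave them alone
        info = conn.execute(f"PRAGMA index_info({name})").fetchall()
        indexes[name] = [col[2] for col in info]
    return indexes


def redundant_indexes(conn, table):
    """Return indexes whose columns are a strict leading prefix of another index."""
    indexes = index_columns(conn, table)
    redundant = []
    for name, cols in indexes.items():
        for other, other_cols in indexes.items():
            if name != other and len(cols) < len(other_cols) and other_cols[:len(cols)] == cols:
                redundant.append(name)
                break
    return sorted(redundant)


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE reports (
        id INTEGER PRIMARY KEY, customer_id INTEGER, created_at TEXT, status TEXT
    );
    CREATE INDEX idx_customer ON reports (customer_id);
    CREATE INDEX idx_customer_created ON reports (customer_id, created_at);
    CREATE INDEX idx_status ON reports (status);
""")

candidates = redundant_indexes(conn, "reports")
```

Here `idx_customer` is flagged because `idx_customer_created` starts with the same column. Before dropping a flagged index, it is worth confirming with query plans and workload statistics that nothing depends on the narrower index.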

Q 27. Explain your understanding of different query optimization techniques.

Query optimization techniques aim to improve the efficiency of database queries. My approach involves a combination of strategies.

- Choosing the Right Join: I select appropriate join types (INNER JOIN, LEFT JOIN, etc.) based on the query requirements, avoiding inefficient joins like CROSS JOIN when possible.

- Index Usage: Creating and using appropriate indexes to avoid full table scans is crucial. I analyze the query execution plan to identify missing indexes.

- Predicate Pushdown: Filtering data as early as possible in the query execution process reduces the amount of data that later steps, such as joins and aggregations, have to process.

- Query Rewriting: Sometimes rewriting queries in a more efficient way can significantly improve performance. This involves understanding query execution plans and rewriting suboptimal queries.

- Subqueries Optimization: Complex subqueries can be slow. I often convert them to joins for improved efficiency.

- Using Hints: In some cases, using database hints can guide the query optimizer to use a particular execution plan.

- Stored Procedures: Using stored procedures can improve performance by letting the database cache and reuse execution plans and by reducing round trips between the application and the server.

For example, I once optimized a query involving a series of nested subqueries by rewriting it using joins. This dramatically reduced query execution time.
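A rewrite of this kind might look like the sketch below, which replaces two correlated subqueries with a single LEFT JOIN plus GROUP BY and checks that both forms return the same rows. It assumes Python's built-in sqlite3 module, and the `customers` and `orders` tables and their data are invented for the example. Whether the join form is actually faster depends on the database engine, the data volume, and the available indexes, so the execution plans should be compared on the real system.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY, customer_id INTEGER NOT NULL, total REAL NOT NULL
    );
    INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace'), (3, 'Linus');
    INSERT INTO orders VALUES (1, 1, 20.0), (2, 1, 35.0), (3, 2, 15.0);
""")

# Original form: each correlated subquery is evaluated once per customer row.
SUBQUERY_FORM = """
    SELECT c.name,
           (SELECT COUNT(*) FROM orders o WHERE o.customer_id = c.id) AS order_count,
           (SELECT COALESCE(SUM(o.total), 0) FROM orders o WHERE o.customer_id = c.id) AS revenue
    FROM customers c
    ORDER BY c.id
"""

# Rewritten form: one pass over the join, aggregated per customer.
# LEFT JOIN keeps customers without orders; COUNT(o.id) ignores their NULL rows.
JOIN_FORM = """
    SELECT c.name,
           COUNT(o.id) AS order_count,
           COALESCE(SUM(o.total), 0) AS revenue
    FROM customers c
    LEFT JOIN orders o ON o.customer_id = c.id
    GROUP BY c.id, c.name
    ORDER BY c.id
"""

subquery_rows = conn.execute(SUBQUERY_FORM).fetchall()
join_rows = conn.execute(JOIN_FORM).fetchall()
same_result = subquery_rows == join_rows
```

Checking that both queries return identical rows on representative data is a useful safeguard, because a rewrite that uses INNER JOIN instead of LEFT JOIN would silently drop customers with no orders.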

Q 28. What is your experience with cloud-based database services (AWS RDS, Azure SQL, Google Cloud SQL)?

I have extensive experience with cloud-based database services, including AWS RDS, Azure SQL, and Google Cloud SQL. I understand their strengths, limitations, and best practices.

- AWS RDS: I've used AWS RDS for managing MySQL, PostgreSQL, and Oracle databases. I'm familiar with its features like automatic backups, high availability, and scaling options.

- Azure SQL: I have experience with Azure SQL Database, leveraging its features such as elastic pools for managing multiple databases, and its integration with other Azure services.

- Google Cloud SQL: I've used Google Cloud SQL for MySQL and PostgreSQL, taking advantage of its scalability and integration with other Google Cloud Platform (GCP) services.

- Cost Optimization: I'm adept at optimizing cloud database costs by choosing appropriate instance sizes, utilizing reserved instances, and implementing efficient data storage strategies.

- Security: I'm aware of security best practices for cloud databases, including network configurations, access controls, and encryption.

In a previous project, I migrated an on-premises SQL Server database to Azure SQL Database, leveraging Azure's scalability and managed services to improve performance and reduce maintenance overhead.

Key Topics to Learn for Database Management (e.g., MySQL, SQL Server) Interview

- Relational Database Concepts: Understand normalization, ACID properties, and different database models (Relational, NoSQL - briefly). Practice designing efficient database schemas.

- SQL Proficiency: Master SELECT, INSERT, UPDATE, DELETE statements. Learn about joins (INNER, LEFT, RIGHT, FULL), subqueries, aggregate functions (AVG, SUM, COUNT), and GROUP BY clauses. Practice writing complex queries efficiently.

- Data Modeling and Design: Practice designing efficient database schemas using Entity-Relationship Diagrams (ERDs). Understand the importance of data integrity and constraints (primary keys, foreign keys, unique constraints).

- Indexing and Query Optimization: Learn how indexes work and how to optimize queries for performance. Understand query execution plans and strategies for improving query speed.

- Transactions and Concurrency Control: Understand how transactions ensure data consistency. Learn about locking mechanisms and different isolation levels.

- Database Administration (Basic): Familiarize yourself with basic database administration tasks such as user management, backup and recovery, and performance monitoring (at a high level).

- Specific Database Features (MySQL/SQL Server): While general SQL skills are crucial, researching specific features of the database system mentioned in the job description will significantly boost your chances. This might include stored procedures, functions, or triggers.

- Problem-solving and Analytical Skills: Database interviews often involve solving problems related to data manipulation and query optimization. Practice working through challenging scenarios.

Next Steps

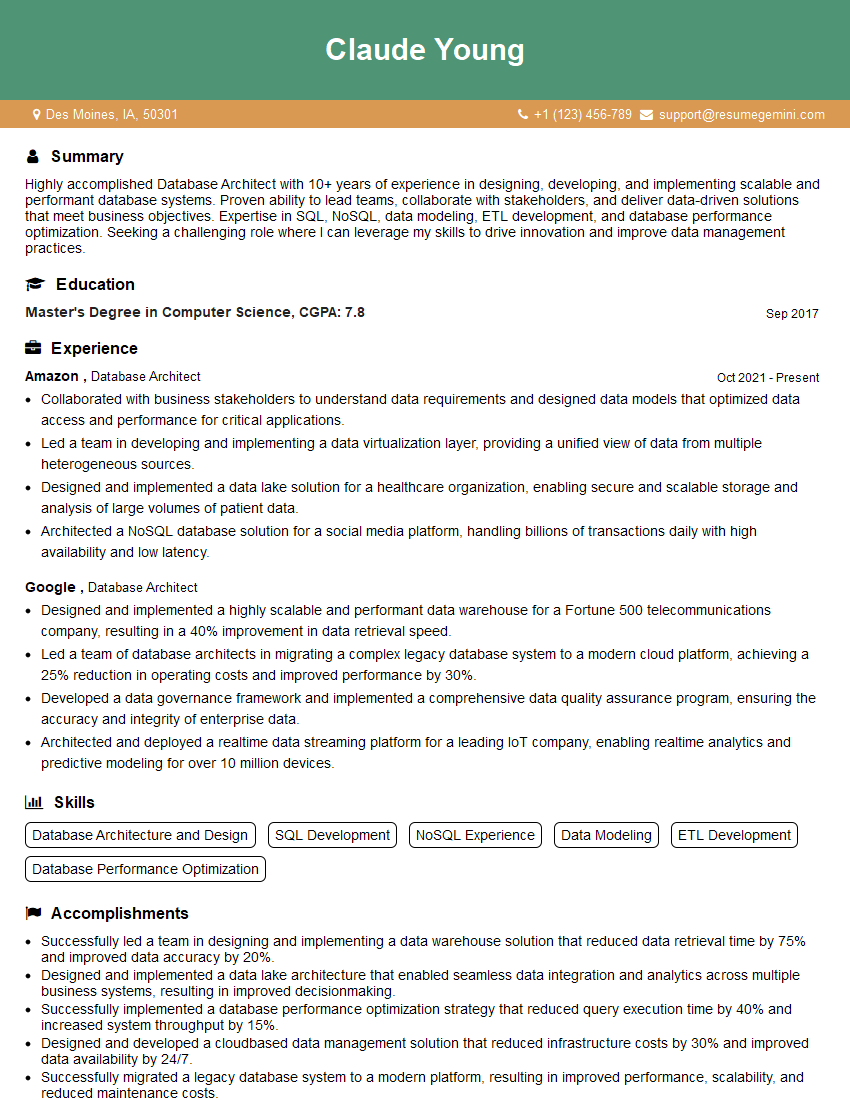

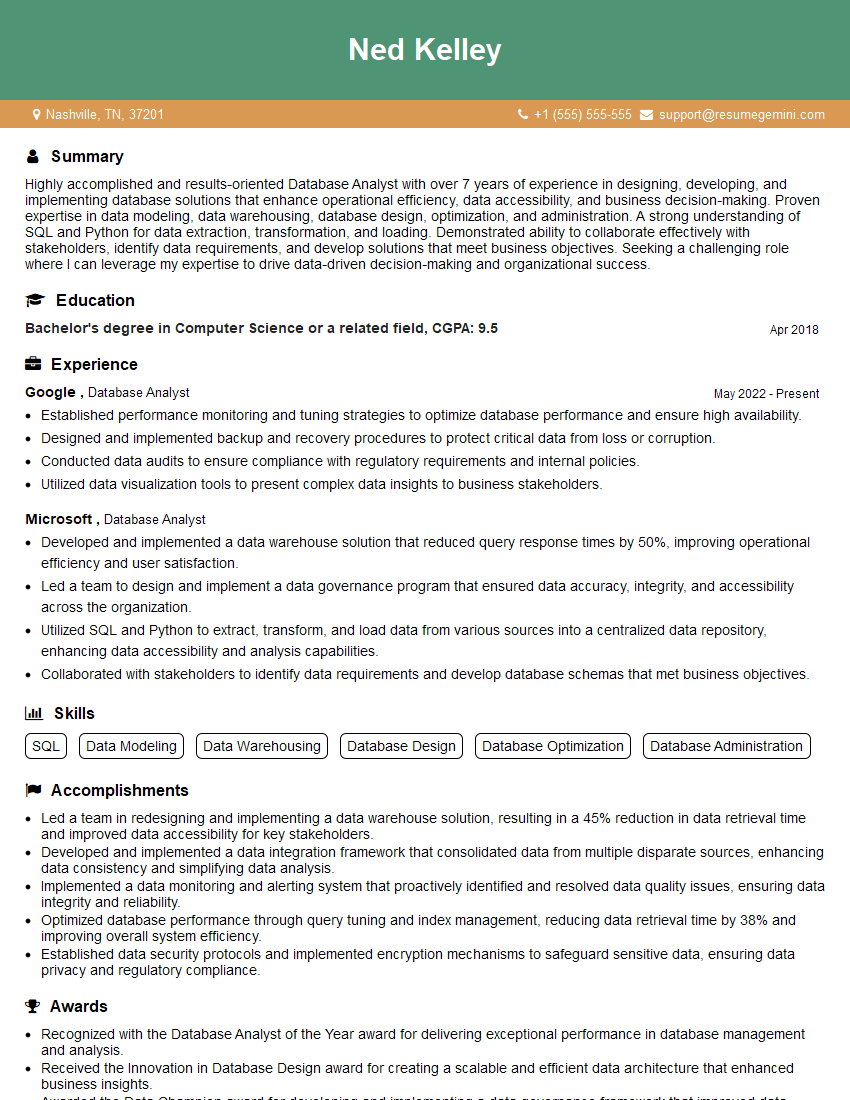

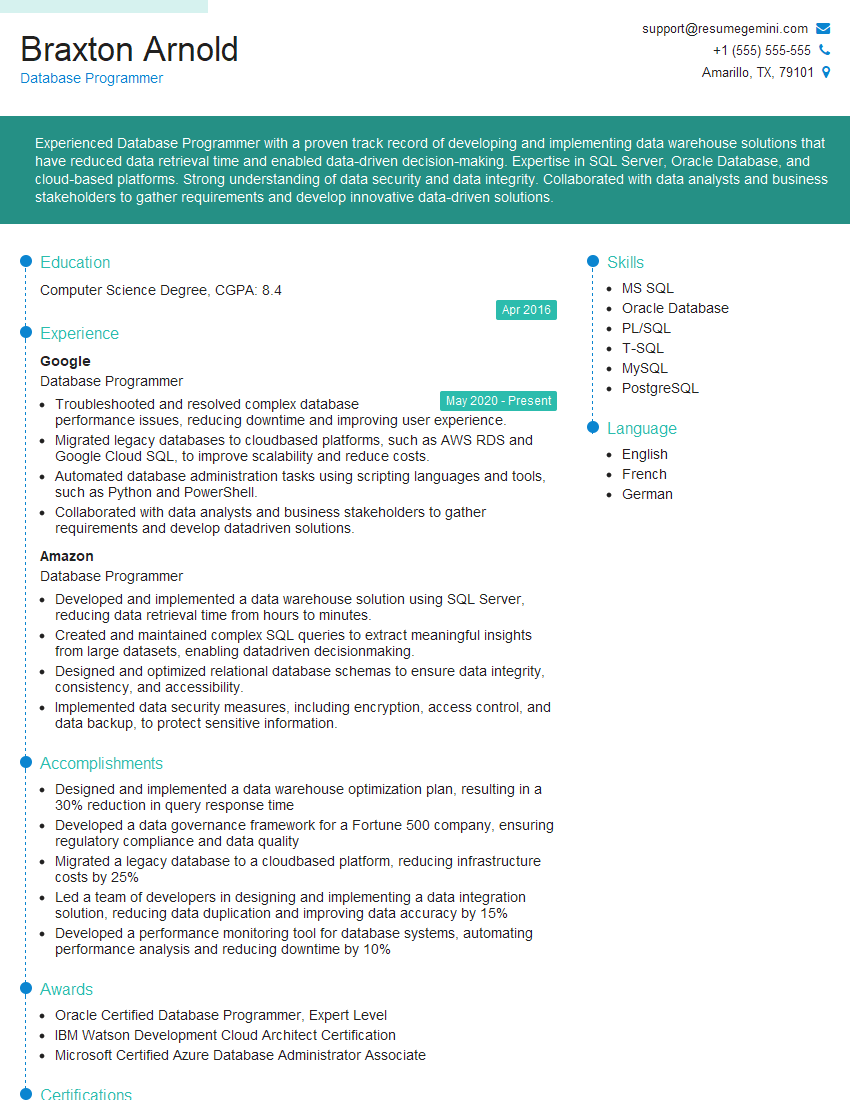

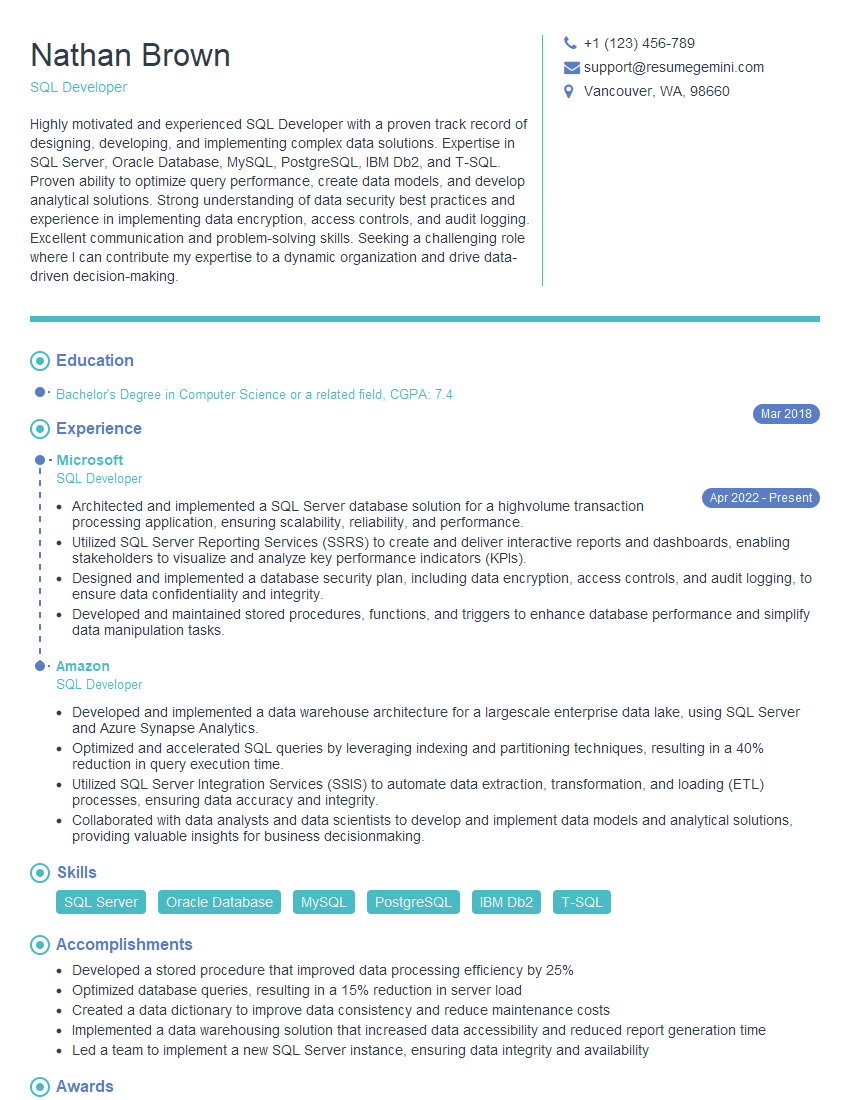

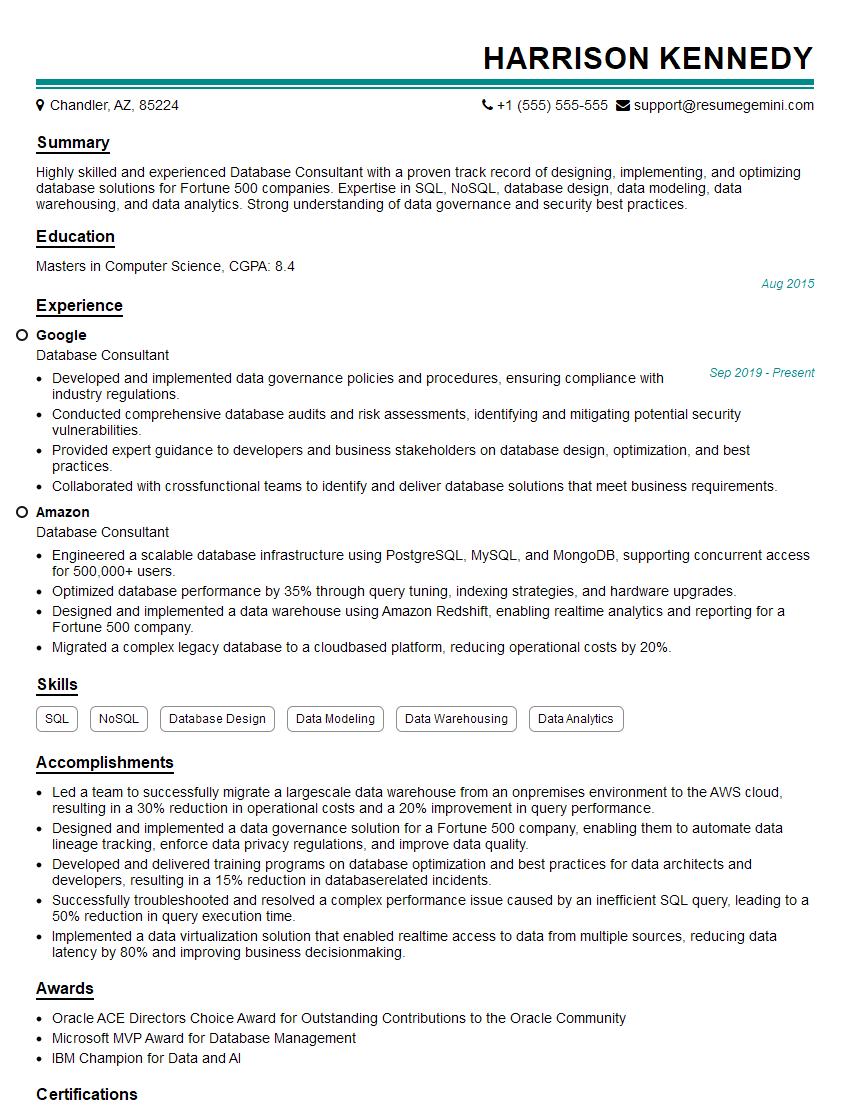

Mastering Database Management skills, especially with popular systems like MySQL and SQL Server, is crucial for a thriving career in software development, data science, and many other tech fields. These skills are highly sought after, leading to increased job opportunities and higher earning potential. To maximize your chances of landing your dream role, create a compelling and ATS-friendly resume that effectively showcases your expertise. ResumeGemini is a trusted resource to help you build a professional and impactful resume. We offer examples of resumes tailored to Database Management roles using MySQL and SQL Server, to help you present your skills in the best light.
