Cracking a skill-specific interview, such as one for a Document Archival role, requires understanding the nuances of the role. In this post, we present the questions you’re most likely to encounter, along with insights into how to answer them effectively. Let’s make sure you’re ready to make a strong impression.

Questions Asked in Document Archival Interview

Q 1. Explain the difference between physical and digital archiving.

Physical archiving involves storing documents in a tangible format, like paper or microfilm, in a physical location such as a warehouse or archive facility. Digital archiving, on the other hand, involves storing documents in an electronic format, on servers, cloud storage, or other digital media.

The key differences lie in accessibility, storage space, preservation challenges, and cost. Physical archives require significant physical space, are prone to damage from environmental factors and disasters, and accessing specific documents can be time-consuming. Digital archives offer easy access and searchability but present their own challenges, such as silent data degradation (bit rot), obsolescence of file formats, and the need for ongoing technological maintenance and migration. Think of it like this: a library’s physical stacks versus its digitized collection. Both preserve the same material, but with different methods and challenges.

Q 2. Describe your experience with metadata schemas and their importance in archival systems.

Metadata schemas are crucial for organizing and retrieving information within an archival system. They provide a structured way to describe the content and context of archived documents, using standardized tags and fields. My experience includes working extensively with Dublin Core and PREMIS, two widely adopted metadata schemas. Dublin Core provides a basic set of descriptive metadata elements, such as title, creator, and subject, whereas PREMIS offers a more comprehensive framework focusing on preservation metadata, including information about file formats, events affecting the digital object’s integrity, and its location.

The importance of robust metadata schemas cannot be overstated. They enable efficient searching, retrieval, and management of archived documents. For instance, using a well-defined schema allows me to easily find all documents related to a specific project, author, or date range. Without metadata, searching through a vast archive would be like finding a needle in a haystack.
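To make the Dublin Core description concrete, here is a minimal sketch that serializes a simple record as XML in the standard Dublin Core element namespace (`http://purl.org/dc/elements/1.1/`). The `build_dc_record` helper, the `record` wrapper element, and the field values are illustrative assumptions; a production archive might wrap such records in a container format such as METS or OAI-PMH.

```python
import xml.etree.ElementTree as ET

# Namespace URI of the Dublin Core Metadata Element Set, version 1.1
DC_NS = "http://purl.org/dc/elements/1.1/"
ET.register_namespace("dc", DC_NS)

def build_dc_record(fields: dict[str, list[str]]) -> str:
    """Serialize Dublin Core element names -> values as XML (hypothetical helper)."""
    root = ET.Element("record")  # wrapper element name is an assumption
    for element, values in fields.items():
        for value in values:
            # Dublin Core elements are repeatable (e.g., several subjects)
            child = ET.SubElement(root, f"{{{DC_NS}}}{element}")
            child.text = value
    return ET.tostring(root, encoding="unicode")

record = build_dc_record({
    "title": ["Quarterly Board Minutes, Q3"],
    "creator": ["Office of the Secretary"],
    "subject": ["governance", "minutes"],
    "date": ["2023-10-05"],
    "format": ["application/pdf"],
})
print(record)
```

Because every field is tagged with a shared, documented vocabulary, another system (or a future migration script) can interpret the record without knowing anything about the archive that produced it.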

Q 3. What are the best practices for ensuring the long-term preservation of digital documents?

Ensuring the long-term preservation of digital documents necessitates a multi-faceted approach. It’s not a ‘set it and forget it’ scenario; it demands continuous attention and adaptation. Best practices include:

- Employing a preservation strategy: This should detail file format migration, storage redundancy, and regular audits.

- Using appropriate file formats: Choosing open, well-documented, and widely supported formats like TIFF for images and XML for structured documents is critical to minimize format obsolescence.

- Implementing data integrity checks: Regular checksum verifications ensure that data hasn’t been corrupted over time.

- Utilizing multiple storage locations: Redundancy through geographically dispersed backups (cloud and on-site) mitigates risks from disasters.

- Regularly updating software and hardware: This keeps the archive compatible with current technologies and avoids dependence on unsupported systems.

- Establishing a clear archival policy: This policy should outline procedures, responsibilities, and technologies to be used.

In a real-world example, I once managed the migration of a large archive from a proprietary format to a more open standard, avoiding a potential loss of data when the original software became unsupported.
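The regular checksum verification mentioned above is often called a fixity check. Here is a minimal sketch, assuming the files live in a local directory and the manifest is a simple mapping from relative path to SHA-256 digest; the function names are hypothetical. The BagIt packaging format (RFC 8493) standardizes a similar manifest layout.

```python
import hashlib
from pathlib import Path

def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files never need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def build_manifest(root: Path) -> dict[str, str]:
    """Record a checksum for every file under root, keyed by relative path."""
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }

def verify_manifest(root: Path, manifest: dict[str, str]) -> list[str]:
    """Return relative paths that are missing or whose checksum no longer matches."""
    problems = []
    for rel_path, expected in manifest.items():
        path = root / rel_path
        if not path.is_file() or sha256_of(path) != expected:
            problems.append(rel_path)
    return problems
```

The manifest is best stored separately from the files it describes, and `verify_manifest` rerun on a schedule; an empty list means every file still matches the checksum recorded at ingest.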

Q 4. How do you manage version control in a document archive?

Version control in a document archive is vital for maintaining the integrity of information and tracking changes over time. We typically implement it with a version control system such as Git (best suited to text-based files) or with the versioning features of specialized archive management software. Each version of a document is stored separately, along with metadata documenting the changes made. This makes previous versions easy to retrieve, supports auditing, and allows reverting to an earlier state if necessary.

A practical example would be managing legal contracts: each revision, with its associated changes and timestamps, is clearly identifiable and easily retrievable for audit trails and to resolve conflicts.
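Storing each version separately with change metadata can be sketched as a small append-only store. This is an in-memory illustration under simplifying assumptions: the `VersionStore` class and its methods are hypothetical, and a real archive would persist versions to durable storage or rely on its document management system.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import datetime, timezone

@dataclass(frozen=True)
class Version:
    number: int
    content: bytes
    sha256: str        # fingerprint of this version's content
    author: str
    comment: str       # what changed and why
    timestamp: datetime

@dataclass
class VersionStore:
    """Hypothetical append-only store: versions are added, never overwritten."""
    history: dict[str, list[Version]] = field(default_factory=dict)

    def commit(self, doc_id: str, content: bytes, author: str, comment: str) -> Version:
        versions = self.history.setdefault(doc_id, [])
        version = Version(
            number=len(versions) + 1,
            content=content,
            sha256=hashlib.sha256(content).hexdigest(),
            author=author,
            comment=comment,
            timestamp=datetime.now(timezone.utc),
        )
        versions.append(version)
        return version

    def get(self, doc_id: str, number: int) -> Version:
        versions = self.history[doc_id]
        if not 1 <= number <= len(versions):
            raise ValueError(f"{doc_id} has no version {number}")
        return versions[number - 1]

    def revert(self, doc_id: str, number: int, author: str) -> Version:
        """Revert by committing the old content as a new version, keeping the full trail."""
        old = self.get(doc_id, number)
        return self.commit(doc_id, old.content, author, f"Revert to v{number}")
```

Reverting never deletes later versions; it records the rollback as a new version, so the audit trail for something like a contract stays complete.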

Q 5. Explain your understanding of different file formats and their suitability for long-term archiving.

Understanding file formats is essential for long-term archiving. Not all formats are created equal; some are more durable and less prone to obsolescence than others.

- Open or openly documented formats like TIFF, PDF/A, and XML are preferred, as they’re less likely to become unsupported than proprietary formats.

- Proprietary formats, specific to individual software applications, are a concern as the software may become unavailable, making the files inaccessible.

- Legacy formats (older file types) can be difficult to open or convert because software that reads them is no longer readily available.

Consider this: a WordPerfect document from the 1990s might be unreadable without specialized software. Choosing the right format at the outset greatly increases the chances of long-term accessibility.
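A practical first step in judging format suitability is identifying what a file actually is instead of trusting its extension. The sketch below matches a file’s first bytes against a few well-known signatures ("magic numbers"). The table is a small illustrative subset, and the suggested actions are assumptions. A signature check also cannot confirm PDF/A conformance; that requires a dedicated validator such as veraPDF. At scale, tools like DROID or Siegfried do this using the PRONOM format registry.

```python
# Illustrative subset of file signatures; real identification tools use the
# much larger PRONOM registry. Actions are example policy, not a standard.
SIGNATURES = [
    (b"%PDF-", "PDF", "check PDF/A conformance with a validator"),
    (b"II*\x00", "TIFF (little-endian)", "preferred"),
    (b"MM\x00*", "TIFF (big-endian)", "preferred"),
    (b"\x89PNG\r\n\x1a\n", "PNG", "preferred"),
    # XML files without a declaration, or with a byte-order mark, are missed here
    (b"<?xml", "XML", "preferred"),
    (b"\xffWPC", "WordPerfect", "legacy: plan migration"),
    (b"\xd0\xcf\x11\xe0\xa1\xb1\x1a\xe1", "OLE2 container (e.g., legacy .doc/.xls)",
     "proprietary: plan migration"),
]

def identify(header: bytes) -> tuple[str, str]:
    """Match the first bytes of a file against known signatures."""
    for magic, name, action in SIGNATURES:
        if header.startswith(magic):
            return name, action
    return "unknown", "manual review"

def identify_file(path: str) -> tuple[str, str]:
    """Read just enough of the file to cover the longest signature."""
    with open(path, "rb") as fh:
        return identify(fh.read(16))
```

Running a check like this across a collection gives a quick inventory of which files are already in preferred formats and which should be scheduled for migration.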

Q 6. Describe your experience with disaster recovery planning for archival systems.

Disaster recovery planning is paramount for archival systems. My experience involves developing and implementing comprehensive plans encompassing various aspects of risk management. These plans typically include:

- Off-site backups: Replicating data to geographically separate locations to protect against local disasters.

- Regular testing: Conducting simulated disaster recovery exercises to validate the effectiveness of the plan.

- Data restoration procedures: Clearly defined steps to restore data from backups in the event of a failure.

- Business continuity planning: Strategies to ensure essential archival functions can continue despite disruption.

A robust disaster recovery plan significantly reduces data loss and ensures business continuity in the face of unforeseen events. I’ve personally overseen several disaster recovery situations where our carefully documented and tested plans proved invaluable in minimizing the impact on our clients.

Q 7. How do you ensure the confidentiality and security of archived documents?

Protecting the confidentiality and security of archived documents requires multiple layers of security, including:

- Access control: Restricting access to authorized personnel through user authentication and authorization systems.

- Encryption: Protecting data both in transit and at rest using strong encryption algorithms.

- Regular security audits: Identifying and mitigating potential vulnerabilities.

- Data loss prevention (DLP) measures: Preventing unauthorized copying or removal of sensitive data.

- Compliance with relevant regulations: Adhering to industry standards and legal requirements (e.g., GDPR, HIPAA).

Imagine the consequences of a data breach in an archive containing sensitive patient records or financial information—catastrophic. Strong security protocols are not optional but mandatory to protect the confidentiality and integrity of archived documents.

Q 8. What are the key legal and compliance considerations for document archival?

Legal and compliance considerations in document archival are paramount, ensuring adherence to regulations and minimizing legal risks. These vary significantly based on industry, geography, and the nature of the documents themselves. Key considerations include:

- Data Privacy Regulations (e.g., GDPR, CCPA): These laws dictate how personal data is handled, stored, and protected. Archival systems must implement robust measures to ensure compliance, including encryption, access control, and data retention policies that align with these regulations.

- Industry-Specific Regulations (e.g., HIPAA, SOX): HIPAA imposes stringent security and privacy requirements on healthcare data, and SOX imposes record-keeping and internal-control requirements on publicly traded companies’ financial records. Archival strategies must reflect these specific requirements.

- E-discovery and Litigation: Organizations must be able to quickly and reliably produce relevant documents during legal proceedings. A well-organized and easily searchable archive is crucial for e-discovery.

- Retention Policies: Defining clear retention policies is vital. This involves determining how long different types of documents need to be kept, based on legal and business needs. Failure to comply can lead to significant penalties.

- Data Security and Integrity: Archiving systems must protect documents from unauthorized access, alteration, or destruction. This includes employing robust security measures like encryption, access controls, and regular backups.

For example, a healthcare provider subject to HIPAA would typically encrypt patient data both in transit and at rest and enforce strict access controls. Ignoring these requirements could result in hefty fines and reputational damage.

Q 9. Describe your experience with different archival storage solutions (e.g., cloud, on-premise).

My experience spans various archival storage solutions. I’ve worked extensively with both cloud-based and on-premise systems, recognizing their distinct advantages and disadvantages.

- Cloud-based solutions (e.g., AWS S3, Azure Blob Storage): Offer scalability, cost-effectiveness (often pay-as-you-go), and accessibility from anywhere with an internet connection. However, concerns around vendor lock-in, data sovereignty, and potential security breaches need careful consideration. I’ve successfully implemented cloud archives for organizations requiring rapid scalability and global accessibility.

- On-premise solutions: Provide greater control over data security and infrastructure. This is beneficial for organizations with highly sensitive data or strict regulatory requirements. However, on-premise solutions require significant upfront investment in hardware and ongoing maintenance. I’ve managed large on-premise archives using technologies like tape libraries and SAN storage, focusing on robust backup and disaster recovery strategies.

The choice between cloud and on-premise depends on factors like budget, regulatory compliance, data sensitivity, and the organization’s IT infrastructure. A hybrid approach, combining both, is often the most practical and effective solution.

Q 10. How do you handle the ingestion and processing of large volumes of documents?

Ingesting and processing large document volumes efficiently requires a well-defined strategy. It’s not simply about moving data; it’s about transforming it into a usable and searchable format. My approach involves:

- Automated Ingestion: Utilizing automated tools and scripts to ingest documents from various sources (e.g., email servers, file shares, applications). This minimizes manual intervention and ensures consistency.

- Metadata Extraction: Extracting relevant metadata (e.g., date, author, keywords) from documents is critical for searchability and organization. This often involves Optical Character Recognition (OCR) for scanned documents and automated metadata extraction from digital files.

- Data Validation and Cleaning: Identifying and correcting errors or inconsistencies in the data. This step is crucial for data integrity and ensures accurate search results.

- Data Transformation: Converting documents into a standardized format suitable for archiving (e.g., PDF/A). This ensures long-term accessibility and compatibility.

- Chunking and Parallel Processing: Breaking down large tasks into smaller, manageable chunks to improve processing speed. Parallel processing, utilizing multiple processors or cores, significantly speeds up the ingestion pipeline.

For instance, when ingesting millions of emails, I’d use a distributed processing framework to handle the volume efficiently, extracting metadata like sender, recipient, subject, and date. This allows for rapid searching and retrieval based on these attributes.
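The email example can be sketched on a single machine with Python’s standard `email` package and `concurrent.futures`, as a stand-in for a distributed framework. This assumes the messages are already available as raw RFC 5322 bytes; `extract_metadata` and `ingest` are hypothetical names.

```python
from concurrent.futures import ProcessPoolExecutor
from email import policy
from email.parser import BytesParser

def extract_metadata(raw: bytes) -> dict:
    """Parse one raw message's headers and pull out the fields used for search."""
    msg = BytesParser(policy=policy.default).parsebytes(raw, headersonly=True)
    date_header = msg["Date"]
    # With policy.default, the Date header exposes a parsed .datetime (None if malformed)
    sent = date_header.datetime if date_header is not None else None
    return {
        "sender": str(msg["From"] or ""),
        "recipients": str(msg["To"] or ""),
        "subject": str(msg["Subject"] or ""),
        "date": sent.isoformat() if sent else None,
    }

def ingest(raw_messages: list[bytes], workers: int = 4, chunksize: int = 256) -> list[dict]:
    """Fan messages out to worker processes in chunks; results keep input order."""
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(extract_metadata, raw_messages, chunksize=chunksize))
```

Processes rather than threads are used because header parsing is CPU-bound. On platforms that start workers by spawning a fresh interpreter, `ingest` should be called from under an `if __name__ == "__main__":` guard.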

Q 11. Explain your experience with archival software and database management systems.

My experience with archival software and database management systems is extensive. I’m proficient in using various software solutions and databases to manage archival systems effectively.

- Archival Software: I have experience with enterprise-grade archival systems like OpenText, IBM FileNet, and others. These platforms provide features for metadata management, access control, retention policies, and e-discovery.

- Database Management Systems (DBMS): I’m skilled in using relational databases (e.g., MySQL, PostgreSQL) and NoSQL databases (e.g., MongoDB) for managing metadata and indexing documents. The choice of DBMS depends on the specific requirements of the archive.

- API Integration: I’ve worked extensively on integrating archival systems with other enterprise applications via APIs. This allows for seamless data flow and automation.

For example, I’ve integrated an archival system with an HR system to automate the archiving of employee documents, ensuring compliance with regulations and streamlining the document management process.

Q 12. How do you ensure the integrity and authenticity of archived documents?

Ensuring the integrity and authenticity of archived documents is crucial. This is achieved through a multi-faceted approach:

- Hashing and Digital Signatures: Creating cryptographic hashes of documents before archiving. Any change to the document will alter its hash, allowing for detection of tampering. Digital signatures provide authentication and non-repudiation, proving the origin and integrity of the document.

- Version Control: Tracking changes to documents over time. This is especially important for documents that undergo revisions. Version control systems allow for the recovery of previous versions if needed.

- Immutable Storage: Using write-once, read-many (WORM) storage or similar features that prevent modification of archived documents after they are stored. This ensures data integrity and helps meet legal and compliance requirements.

- Regular Audits: Performing periodic audits to verify the integrity and authenticity of archived documents. This involves comparing hashes, validating digital signatures, and checking for any unauthorized access attempts.

- Chain of Custody: Maintaining a clear record of who has accessed and handled archived documents. This provides a clear audit trail and enhances accountability.

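Imagine a legal case where a document’s authenticity is challenged. A matching hash shows the document has not changed since it was archived, and a valid digital signature ties it to its signer. Together with the chain-of-custody record, these provide strong evidence of integrity that supports the document’s admissibility.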
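For the chain-of-custody record, one common tamper-evidence technique is a hash-chained log: each entry stores the hash of the previous entry, so editing or removing an earlier entry breaks every later link. Here is a minimal sketch with hypothetical names. A hash chain only detects tampering; it is not a substitute for digital signatures, which need a public-key library and key management not shown here.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _entry_hash(entry: dict) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes identically
    payload = json.dumps(entry, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def append_event(log: list[dict], doc_id: str, actor: str, action: str) -> dict:
    """Append a custody event linked to the hash of the previous event."""
    entry = {
        "doc_id": doc_id,
        "actor": actor,
        "action": action,
        "at": datetime.now(timezone.utc).isoformat(),
        "prev": log[-1]["hash"] if log else GENESIS,
    }
    entry["hash"] = _entry_hash(entry)
    log.append(entry)
    return entry

def verify_chain(log: list[dict]) -> bool:
    """Recompute every link. Editing, reordering, or removing an earlier entry
    breaks the chain; truncating the tail is only caught if the latest hash
    is also stored somewhere else."""
    prev = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev"] != prev or _entry_hash(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True
```

Periodically copying the latest hash to a separate system (or signing it) closes the truncation gap noted in the comment.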

Q 13. Describe your approach to managing access control and permissions within an archive.

Managing access control and permissions is critical for data security and compliance. My approach involves:

- Role-Based Access Control (RBAC): Assigning permissions based on user roles. This simplifies access management and ensures that only authorized personnel can access sensitive information. For example, HR staff might only have access to employee records.

- Granular Permissions: Defining fine-grained permissions, allowing for precise control over access to specific documents or folders. Some users might have read-only access, while others have full access to modify documents.

- Audit Trails: Tracking all access attempts and modifications to archived documents. This enables monitoring for suspicious activity and provides evidence for audits or investigations.

- Multi-Factor Authentication (MFA): Implementing MFA for added security, requiring multiple verification methods (e.g., password and one-time code) before granting access.

- Data Loss Prevention (DLP): Employing DLP technologies to prevent sensitive data from leaving the controlled environment, limiting the damage even if an account is compromised.

In a financial institution, for example, RBAC would ensure that only authorized personnel can access sensitive client data, preventing unauthorized disclosures and maintaining compliance with regulations.
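RBAC with granular permissions can be sketched as a mapping from roles to permitted (action, collection) pairs, with access denied unless some role explicitly grants it. The role names, collections, and the `is_allowed` helper are illustrative assumptions; real deployments usually delegate this to the archive platform or an identity provider.

```python
from dataclasses import dataclass

# Hypothetical role definitions: role -> set of (action, collection) grants.
# "*" as the collection means the grant applies to every collection.
ROLE_GRANTS: dict[str, set[tuple[str, str]]] = {
    "hr_staff": {("read", "employee_records"), ("write", "employee_records")},
    "auditor": {("read", "*")},
    "records_manager": {("read", "*"), ("write", "*"), ("dispose", "*")},
}

@dataclass(frozen=True)
class User:
    name: str
    roles: frozenset[str]

def is_allowed(user: User, action: str, collection: str) -> bool:
    """Deny by default; allow only if one of the user's roles grants the action."""
    for role in user.roles:
        grants = ROLE_GRANTS.get(role, set())
        if (action, collection) in grants or (action, "*") in grants:
            return True
    return False
```

Here an auditor can read every collection but change nothing, while HR staff are confined to employee records. Each `is_allowed` decision is also a natural point to write an audit-trail entry.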

Q 14. What methods do you use to search and retrieve documents from an archive?

Efficient document retrieval relies on a robust search and indexing system. My approach utilizes a combination of techniques:

- Full-text Search: Allows users to search for keywords within the content of documents. This requires indexing the text content of all documents in the archive.

- Metadata Search: Searching based on metadata attributes, such as date, author, and document type. This is typically faster and more precise than full-text search and allows for targeted retrieval.

- Faceting: Providing users with interactive filters to refine search results based on metadata attributes. This allows users to drill down and narrow their search efficiently.

- Advanced Search Operators: Supporting advanced search operators (e.g., Boolean operators, wildcard characters) to improve search precision and recall.

- Natural Language Processing (NLP): Employing NLP techniques to understand the meaning and context of search queries, allowing for more accurate results. This is particularly useful for complex or ambiguous queries.

For example, a user could search for all documents related to ‘customer complaints’ filed in ‘2023,’ using facets to further refine the results by specific product or region. The combination of metadata and full-text search ensures efficient and accurate document retrieval.
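The combination of full-text search, metadata filtering, and faceting can be sketched with a tiny inverted index. This is a toy under simplifying assumptions (lowercase alphanumeric tokens, all query terms must match, exact-match metadata filters). Production archives typically use a search engine such as Elasticsearch, OpenSearch, or Apache Solr.

```python
import re
from collections import Counter, defaultdict

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())

class ArchiveIndex:
    """Hypothetical in-memory index: term postings plus per-document metadata."""

    def __init__(self) -> None:
        self.docs: dict[str, dict] = {}
        self.postings: dict[str, set[str]] = defaultdict(set)  # term -> doc IDs

    def add(self, doc_id: str, text: str, **metadata) -> None:
        self.docs[doc_id] = metadata
        for term in tokenize(text):
            self.postings[term].add(doc_id)

    def search(self, query: str = "", **filters) -> set[str]:
        """Full-text AND match on the query, then exact-match metadata filters."""
        terms = tokenize(query)
        if terms:
            hits = set.intersection(*(self.postings.get(t, set()) for t in terms))
        else:
            hits = set(self.docs)
        return {d for d in hits
                if all(self.docs[d].get(k) == v for k, v in filters.items())}

    def facet(self, hits: set[str], field: str) -> Counter:
        """Count hits per value of a metadata field, for drill-down filters."""
        return Counter(self.docs[d].get(field) for d in hits)
```

For the example above, `search("customer complaints", year=2023)` would narrow by content and date, and `facet(hits, "product")` would supply the counts shown next to each product filter (note that this toy tokenizer does no stemming, so "complaints" and "complaint" are different terms).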

Q 15. How do you assess the value and retention requirements of documents?

Assessing the value and retention requirements of documents is crucial for effective records management. It involves a multi-faceted approach that considers legal, business, and historical significance. We start by identifying the document type and its purpose. For example, a contract has significantly different retention requirements than an employee’s daily timesheet.

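- Legal Requirements: Many documents, such as tax records, employment contracts, or customer agreements, are subject to legally mandated minimum retention periods. Separately, a legal hold suspends the destruction of relevant records, regardless of the schedule, while litigation is pending or reasonably anticipated.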

- Business Value: Documents may contain critical business information such as financial records, strategic plans, or client data. The value of these documents is assessed based on their continued relevance to ongoing operations and decision-making. We consider things like their use in audits, litigation, or future planning.

- Historical Value: Some documents have long-term historical significance, contributing to the organization’s legacy or offering valuable insights for researchers. Such documents are usually retained longer, sometimes permanently.

The process often involves developing a comprehensive retention schedule, a formal document specifying the retention period for each document type. This is often done in collaboration with legal counsel and relevant stakeholders within the organization. Regular reviews of this schedule are essential to adapt to changing regulations and business needs.
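Expressing a retention schedule as data makes it possible to compute when each record becomes eligible for disposition review. A minimal sketch; the record types, periods, and trigger names are hypothetical placeholders, since real periods come from the approved schedule.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date

@dataclass(frozen=True)
class RetentionRule:
    years: int | None  # None = retain permanently (e.g., historical value)
    trigger: str       # event that starts the clock, e.g. "creation" or "contract_end"

# Hypothetical schedule entries, for illustration only
SCHEDULE = {
    "timesheet": RetentionRule(years=3, trigger="creation"),
    "contract": RetentionRule(years=7, trigger="contract_end"),
    "board_minutes": RetentionRule(years=None, trigger="creation"),
}

def add_years(d: date, years: int) -> date:
    """Add whole years, mapping Feb 29 to Feb 28 when the target year is not a leap year."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:
        return d.replace(year=d.year + years, day=28)

def disposition_date(record_type: str, trigger_date: date) -> date | None:
    """Earliest date the record may be reviewed for disposition; None if permanent."""
    rule = SCHEDULE[record_type]
    if rule.years is None:
        return None
    return add_years(trigger_date, rule.years)
```

Keeping the schedule in one data structure means a regulatory change becomes a one-line update that is reviewed with legal counsel, rather than logic scattered across systems.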

Q 16. Explain your experience with records disposition and destruction processes.

Records disposition and destruction are the final stages of the records lifecycle, ensuring compliance and responsible data management. I have extensive experience in developing and implementing secure destruction protocols. This involves more than simply shredding paper; it encompasses digital data as well. My approach considers:

- Legal and Regulatory Compliance: We always ensure adherence to all relevant laws and regulations regarding data privacy (e.g., GDPR, CCPA) and data destruction. This often includes certification of destruction methods.

- Secure Destruction Methods: Depending on the sensitivity of the information, we utilize appropriate methods such as secure shredding for paper, certified data wiping for hard drives, and secure cloud deletion for digital records.

- Chain of Custody: Maintaining a detailed chain of custody is essential to ensure accountability. This documentation tracks the movement and destruction of records, proving compliance with regulations.

- Documentation and Auditing: We create detailed records of the disposition and destruction process, including dates, methods used, and any witnesses. This allows for easy auditing and verification of compliance.

For example, in a previous role, we implemented a secure e-discovery process that involved identifying relevant documents, preparing them for legal review, and, once the legal hold was released, disposing of them according to the retention schedule, with every step documented to meet legal and regulatory requirements.

Q 17. How do you create and maintain an archival inventory or catalog?

Creating and maintaining an archival inventory or catalog is fundamental to ensuring accessibility and preservation of archived materials. Think of it as a detailed library catalog, but for archival materials. It facilitates finding specific documents, understanding their context, and managing the collection as a whole. My approach involves:

- Metadata Development: We create a consistent metadata schema—a standardized set of descriptive elements for each item. This typically includes details such as title, date, creator, subject, and any unique identifiers. The more descriptive the metadata, the easier it is to search and retrieve information.

- Database Selection: We choose an appropriate database system – this could range from simple spreadsheets for small collections to sophisticated archival management systems (AMS) for larger repositories. The system should be scalable and capable of handling future growth.

- Data Entry and Quality Control: Accuracy is paramount, so data entry is paired with multiple quality-control checks to keep entries accurate and consistent.

- Regular Updates: The inventory must be kept up-to-date to reflect additions, changes, and deletions within the archive. Regular audits help identify any gaps or inconsistencies.

For example, we may utilize a database with fields for document ID, title, date created, author, keywords, file format, and location (physical or digital). This allows users to search using different criteria – for instance, by date, keyword, or author.
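The catalog fields just listed map naturally onto a single relational table. A minimal sketch using Python’s built-in `sqlite3` module; the table and column names are assumptions, and a larger repository would more likely use a dedicated archival management system.

```python
from __future__ import annotations

import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS catalog (
    doc_id       TEXT PRIMARY KEY,
    title        TEXT NOT NULL,
    date_created TEXT,            -- ISO 8601 date, so text order = date order
    author       TEXT,
    keywords     TEXT,            -- comma-separated for simplicity
    file_format  TEXT,
    location     TEXT NOT NULL    -- shelf/box reference or storage URI
);
CREATE INDEX IF NOT EXISTS idx_catalog_author ON catalog(author);
CREATE INDEX IF NOT EXISTS idx_catalog_date ON catalog(date_created);
"""

def open_catalog(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn

def find(conn: sqlite3.Connection, author: str | None = None,
         date_from: str | None = None, date_to: str | None = None,
         keyword: str | None = None) -> list[str]:
    """Build a parameterized query from whichever criteria are supplied."""
    clauses: list[str] = []
    params: list[str] = []
    if author:
        clauses.append("author = ?")
        params.append(author)
    if date_from:
        clauses.append("date_created >= ?")
        params.append(date_from)
    if date_to:
        clauses.append("date_created <= ?")
        params.append(date_to)
    if keyword:
        # Naive substring match; a separate keyword table would be more precise
        clauses.append("keywords LIKE ?")
        params.append(f"%{keyword}%")
    where = " WHERE " + " AND ".join(clauses) if clauses else ""
    rows = conn.execute(f"SELECT doc_id FROM catalog{where} ORDER BY doc_id", params)
    return [row[0] for row in rows]
```

The same `location` column holds either a physical reference or a digital storage path, so one catalog can describe a mixed collection.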

Q 18. Describe your experience with implementing and managing records management policies.

Implementing and managing records management policies requires a strategic approach that integrates with an organization’s overall goals and operations. It’s not just about creating a document; it’s about embedding responsible records management into the culture and daily workflow. My experience includes:

- Needs Assessment: We begin by assessing the organization’s needs, including its size, industry, regulatory environment, and existing records management practices. This informs the design of the policy.

- Policy Development: The policy should be clear, concise, and accessible, covering all aspects of records creation, management, retention, and disposition. It needs to address both physical and digital records.

- Training and Communication: Effective communication and training are critical to ensure staff understand and comply with the policy. We utilize various methods – workshops, online training, and readily available documentation.

- Monitoring and Enforcement: Regular audits and monitoring are needed to ensure compliance and identify areas for improvement. We’ll put measures in place to address any non-compliance issues.

For instance, in a previous role, we developed a records management policy that included guidelines on document naming conventions, metadata tagging, retention schedules, and approved disposal methods. This resulted in a significant improvement in the organization’s ability to manage its records effectively and comply with regulatory requirements.

Q 19. What are the challenges of managing born-digital records?

Managing born-digital records presents unique challenges compared to managing paper-based records. The sheer volume, variety of formats, and rapid technological obsolescence pose significant hurdles. Key challenges include:

- Format Obsolescence: Digital formats become outdated quickly. Ensuring long-term accessibility requires strategies like migration to newer formats or emulation of older systems.

- Data Integrity: Maintaining data integrity over time can be difficult. Data corruption, system failures, and accidental deletion can lead to data loss.

- Storage Capacity and Costs: The volume of digital data can grow exponentially, requiring significant storage capacity and associated costs. Efficient storage solutions and data compression techniques are crucial.

- Authentication and Metadata: Establishing authenticity and provenance of digital records is essential. Accurate metadata is critical for finding and understanding the records.

- Security and Privacy: Protecting sensitive digital information from unauthorized access and cyber threats is paramount.

For example, ensuring the long-term accessibility of a database created in a now-obsolete software requires careful planning, potentially involving data migration to a newer database system and preservation of the original software and its documentation.

Q 20. How do you handle migrating archival data to new systems or platforms?

Migrating archival data to new systems or platforms is a critical task requiring careful planning and execution. It aims to ensure the long-term accessibility and preservation of data while adapting to changing technology. The process usually involves:

- Needs Assessment: We assess the current system, the desired new system, and the volume and types of data being migrated. We identify any potential risks or challenges.

- Data Assessment and Cleaning: Before migration, we evaluate data quality, identify duplicates, and remove unnecessary or corrupt files. Data cleaning is essential for efficiency and to avoid carrying over problems to the new system.

- Migration Strategy: We develop a comprehensive migration plan detailing the process, timelines, and resources required. Different migration strategies can be employed, including parallel migration (running old and new systems concurrently) or cutover migration (switching directly to the new system).

- Testing and Validation: Before full migration, thorough testing is conducted to ensure data integrity and the functionality of the new system.

- Post-Migration Monitoring: After migration, ongoing monitoring is essential to detect and address any issues.

For example, migrating a large collection of digitized photographs from a legacy digital asset management system to a cloud-based repository would involve careful planning, data cleaning, and a phased approach to minimize disruption.
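For the testing and validation step, a common check compares checksums captured before migration with those computed on the new platform. A minimal sketch, assuming both systems can produce a mapping from record identifier to SHA-256 digest (how each platform exposes this is system-specific and not shown); `compare_manifests` is a hypothetical name.

```python
from dataclasses import dataclass, field

@dataclass
class MigrationReport:
    missing: list[str] = field(default_factory=list)     # in source, not in target
    unexpected: list[str] = field(default_factory=list)  # in target, not in source
    mismatched: list[str] = field(default_factory=list)  # in both, checksum differs

    @property
    def ok(self) -> bool:
        return not (self.missing or self.unexpected or self.mismatched)

def compare_manifests(source: dict[str, str], target: dict[str, str]) -> MigrationReport:
    """Compare record ID -> checksum maps taken before and after migration."""
    report = MigrationReport()
    report.missing = sorted(source.keys() - target.keys())
    report.unexpected = sorted(target.keys() - source.keys())
    report.mismatched = sorted(
        key for key in source.keys() & target.keys() if source[key] != target[key]
    )
    return report
```

This applies to bit-for-bit copies. When the migration also converts formats, checksums change by design, so validation shifts to comparing significant properties (such as page count or image dimensions) instead.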

Q 21. Explain your familiarity with different archival standards (e.g., ISO 15489).

I am familiar with various archival standards, most notably ISO 15489-1 (Records management, Part 1: Concepts and principles). This international standard provides a framework for managing records throughout their lifecycle, encompassing aspects such as appraisal, retention, access, and disposal. Understanding these standards is crucial for ensuring compliance and following best practice in archival management.

- ISO 15489-1: This standard focuses on the principles and functions of records management, covering policies, procedures, and technologies. Key concepts include records lifecycle management, metadata, and security.

- Other Standards: Other relevant standards may include those relating to metadata schemas (like Dublin Core), digital preservation (e.g., PREMIS and the OAIS reference model, ISO 14721), and information security (e.g., ISO/IEC 27001). The specific standards needed will depend on the nature of the archive and the organization’s needs.

My experience with these standards enables me to design and implement robust archival systems that meet international best practices, ensuring compliance and facilitating long-term data preservation. Applying these standards helps build trust and credibility in the integrity and authenticity of the archives.

Q 22. How do you ensure compliance with data privacy regulations in archival practices?

Ensuring compliance with data privacy regulations like GDPR, CCPA, and HIPAA is paramount in archival practices. It’s not just about storing data; it’s about managing it responsibly throughout its lifecycle. This involves a multi-faceted approach:

- Data Minimization and Purpose Limitation: We archive only the data needed for legitimate business purposes and keep it no longer than necessary. This reduces the risk of exposure.

- Access Control and Authentication: Strict access controls are implemented using role-based access, encryption, and multi-factor authentication to limit who can view or modify archived data. For example, only authorized personnel with specific security clearances can access sensitive patient data in a HIPAA-compliant archive.

- Data Masking, Pseudonymization, and Anonymization: Where appropriate, we remove or obscure personally identifiable information (PII) to protect privacy while still retaining valuable data for analysis or auditing. Replacing names with unique identifiers is pseudonymization, which GDPR still treats as personal data because the identifiers can be linked back to individuals with additional information; full anonymization, such as permanently removing identifying details from financial documents, takes data outside GDPR’s scope.

- Data Retention Policies: Clear, documented policies define how long data is kept, based on regulatory requirements and business needs. This ensures we don’t retain data longer than necessary, minimizing risk.

- Data Breach Response Plan: A comprehensive plan outlines procedures for identifying, containing, and remediating data breaches. Regular audits and security assessments help identify vulnerabilities before they can be exploited.

- Regular Training: All personnel involved in archival processes receive regular training on data privacy regulations and best practices to ensure they understand their responsibilities.

By implementing these measures, we create a robust and compliant archival system that safeguards sensitive information.
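Replacing names with unique identifiers, as described above, is commonly done with a keyed hash (HMAC): the same person always maps to the same token, so records remain linkable for analysis, but tokens cannot be tied back to names without the secret key. A minimal sketch with simplified key handling; `pseudonymize` and `redact_names` are hypothetical helpers, and matching only known names in free text is a deliberate simplification.

```python
import hashlib
import hmac
import re

def pseudonymize(name: str, key: bytes) -> str:
    """Map a name to a stable token; the same name and key always give the same token."""
    normalized = " ".join(name.split()).casefold()  # collapse spaces, ignore case
    digest = hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"PERSON-{digest[:12]}"

def redact_names(text: str, names: list[str], key: bytes) -> str:
    """Replace each known name in free text with its token (case-insensitive)."""
    # Longest names first, so "Jane Doe" is replaced before "Jane" could match
    for name in sorted(names, key=len, reverse=True):
        token = pseudonymize(name, key)
        text = re.sub(re.escape(name), token, text, flags=re.IGNORECASE)
    return text
```

Because whoever holds the key can recompute tokens for candidate names, the key itself must be protected and access-controlled like the original data.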

Q 23. Describe your experience with e-discovery and legal hold processes.

E-discovery and legal hold processes are critical in responding to litigation or regulatory investigations. My experience involves working collaboratively with legal teams to identify, preserve, collect, and produce electronically stored information (ESI). This includes:

- Legal Hold Implementation: I’ve managed the process of placing legal holds on relevant data sources – this includes emails, databases, and shared drives – to prevent accidental deletion or modification while the legal process is ongoing. This often involves using specialized software to track and manage hold requests.

- Data Collection and Preservation: Using approved forensic techniques and tools, I’ve ensured the integrity and authenticity of collected ESI, creating forensically sound images or copies to preserve the original data.

- Data Processing and Review: I’ve used e-discovery platforms to process and filter massive datasets, removing irrelevant information and identifying key documents for review by legal counsel. This frequently involves employing keyword searches, date filtering, and advanced analytics.

- Production of ESI: I’ve prepared and produced relevant ESI in the required format (e.g., native format, PDF, TIFF) according to court orders or legal requests.

My experience has shown the importance of clear communication and collaboration with legal teams throughout the entire e-discovery process to ensure a timely and efficient response to legal requests while maintaining data integrity and confidentiality.
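The keyword and date filtering used during processing and review can be illustrated with a short Python sketch. It is a simplified stand-in, assuming documents have already been extracted to plain text with a sent date; the `Doc` class, the `cull` function, and the sample messages are hypothetical, and real e-discovery platforms provide much richer query syntax.

```python
import re
from dataclasses import dataclass
from datetime import date

@dataclass
class Doc:
    doc_id: str
    sent: date
    text: str

def cull(docs: list[Doc], keywords: list[str], start: date, end: date) -> list[str]:
    """Return IDs of documents dated within [start, end] that mention any keyword.

    Keywords match case-insensitively on word boundaries: a deliberately simple
    stand-in for the query language of a real e-discovery platform.
    """
    if not keywords:
        return []
    pattern = re.compile(
        r"\b(?:" + "|".join(re.escape(k) for k in keywords) + r")\b", re.IGNORECASE
    )
    return [d.doc_id for d in docs if start <= d.sent <= end and pattern.search(d.text)]

if __name__ == "__main__":
    docs = [
        Doc("E1", date(2022, 5, 3), "Re: Acme supply agreement draft"),
        Doc("E2", date(2022, 5, 4), "Lunch on Friday?"),
        Doc("E3", date(2019, 1, 1), "Old Acme invoice"),
    ]
    print(cull(docs, ["acme", "supply agreement"], date(2022, 1, 1), date(2022, 12, 31)))
    # → ['E1']
```

E3 mentions a keyword but falls outside the date range, and E2 is in range but matches no keyword, so only E1 survives the cull for legal review.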

Q 24. How do you assess and mitigate risks associated with data loss or corruption in an archive?

Assessing and mitigating risks associated with data loss or corruption requires a proactive approach. This starts with a thorough understanding of potential threats and vulnerabilities:

Risk Assessment: We regularly conduct risk assessments to identify potential threats, including hardware failures, software vulnerabilities, natural disasters, and human error. We prioritize risks based on their likelihood and potential impact.

Data Redundancy and Backup: We employ robust backup and recovery strategies, including multiple backups stored in different geographical locations (geo-redundancy). This ensures data availability even in the event of a major disaster. We also use different backup methods like disk-to-disk, cloud-based, and tape backups.

Data Validation and Integrity Checks: We regularly compute checksums (cryptographic hashes such as SHA-256) and compare them with the values recorded at ingest to verify data integrity and detect any corruption. This is particularly crucial for long-term archiving.

Disaster Recovery Planning: We have a detailed disaster recovery plan that outlines procedures for restoring data and systems in the event of a disaster. This plan is regularly tested through drills and simulations.

Security Measures: We employ strong security measures to protect the archive from unauthorized access, cyberattacks, and malware. This includes firewalls, intrusion detection systems, and encryption.

Version Control and Audit Trails: Tracking changes made to archived data ensures accountability and facilitates restoration in case of accidental modification or deletion. This often involves detailed audit logs.

By combining these strategies, we minimize the risk of data loss or corruption, ensuring the long-term preservation of valuable information.
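The integrity checks described above are often called fixity checks: record a hash for every file at ingest, then periodically recompute and compare. Here is a minimal Python sketch using SHA-256 from the standard library. The JSON manifest format and the function names are assumptions for illustration, not a particular product’s behavior.

```python
import hashlib
import json
from pathlib import Path

CHUNK = 1024 * 1024  # read files in 1 MiB chunks so large files don't fill memory

def sha256_of(path: Path) -> str:
    """Compute the SHA-256 digest of a file, streaming it in chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for block in iter(lambda: f.read(CHUNK), b""):
            h.update(block)
    return h.hexdigest()

def build_manifest(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to its SHA-256 digest."""
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }

def verify(root: Path, manifest: dict[str, str]) -> dict[str, list[str]]:
    """Compare current digests against a stored manifest."""
    current = build_manifest(root)
    return {
        "missing": sorted(set(manifest) - set(current)),
        "changed": sorted(k for k in manifest.keys() & current.keys()
                          if manifest[k] != current[k]),
        "new": sorted(set(current) - set(manifest)),
    }

if __name__ == "__main__":
    import tempfile
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp) / "archive"
        root.mkdir()
        (root / "a.txt").write_text("original contents")
        # Persist the manifest outside the archive tree so it isn't hashed itself.
        manifest_path = Path(tmp) / "manifest.json"
        manifest_path.write_text(json.dumps(build_manifest(root), indent=2))
        (root / "a.txt").write_text("silently corrupted")  # simulate corruption
        print(verify(root, json.loads(manifest_path.read_text())))
        # → {'missing': [], 'changed': ['a.txt'], 'new': []}
```

In practice the manifest would itself be backed up with the geo-redundant copies, so a corrupted file at one site can be detected and restored from another.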

Q 25. Explain your experience with automating archival tasks and workflows.

Automating archival tasks and workflows significantly improves efficiency and reduces the risk of human error. My experience includes implementing various automation solutions:

Automated Ingestion: We utilize automated ingestion pipelines to streamline the process of importing documents into the archive. This often involves integrating with existing systems and using APIs to automate data transfer.

Metadata Extraction and Management: Automated metadata extraction helps categorize and organize documents efficiently, improving searchability and retrieval. We use tools that can extract metadata from various file types and apply consistent tagging schemes.

Automated Classification and Routing: Documents can be automatically classified and routed based on predefined rules or AI-powered systems. This improves accuracy and speeds up processing.

Workflow Automation: We have automated several workflows, such as notifications, approval processes, and reporting, using Robotic Process Automation (RPA) tools. This reduces manual intervention and frees up staff for higher-level tasks.

Scripting and Programming: I’ve written scripts (e.g., using Python or PowerShell) to automate repetitive tasks such as data migration, data cleaning, and report generation.
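Rule-based classification and routing, as listed above, can be as simple as an ordered list of patterns where the first match wins. The sketch below is hypothetical: the rules, category names, and queue names are invented for illustration, and it classifies on the document title only. An AI-powered classifier would replace `classify` but could keep the same routing interface.

```python
import re
from typing import NamedTuple

class Rule(NamedTuple):
    pattern: re.Pattern  # matched against the document title or filename
    category: str        # hypothetical classification label
    queue: str           # hypothetical downstream queue or folder

# Hypothetical, ordered rules: the first matching rule wins.
RULES = [
    Rule(re.compile(r"\binvoice\b|\bINV-\d+", re.I), "finance/invoice", "finance-review"),
    Rule(re.compile(r"\bcontract\b|\bagreement\b", re.I), "legal/contract", "legal-intake"),
    Rule(re.compile(r"\bpayslip\b|\bpersonnel\b", re.I), "hr/personnel", "hr-restricted"),
]
DEFAULT = ("unclassified", "manual-triage")  # anything unmatched goes to a human

def classify(title: str) -> tuple[str, str]:
    """Return (category, queue) for a document title."""
    for rule in RULES:
        if rule.pattern.search(title):
            return rule.category, rule.queue
    return DEFAULT

if __name__ == "__main__":
    for t in ["INV-2023-0042 Acme.pdf", "Master Services Agreement v3.docx", "Team photo.jpg"]:
        print(t, "->", classify(t))
```

Sending unmatched documents to a manual triage queue, rather than guessing, is what keeps accuracy high: the rules only automate the cases they are sure about.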

In one project, I implemented a Python script to automate the process of migrating data from a legacy system to a new cloud-based archive, reducing the migration time from weeks to days.
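The original migration script isn’t shown, so the following is only a hypothetical sketch of how such a script might be structured. It assumes both the legacy export and the target are reachable as file paths (a real cloud target would normally go through the provider’s SDK). It copies files concurrently, verifies each copy by SHA-256, and skips files already migrated intact so that an interrupted run can resume.

```python
import hashlib
import shutil
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path

def sha256_of(path: Path) -> str:
    """SHA-256 of a file, read in 1 MiB chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for block in iter(lambda: f.read(1024 * 1024), b""):
            h.update(block)
    return h.hexdigest()

def migrate_one(src: Path, src_root: Path, dst_root: Path) -> tuple[str, bool]:
    """Copy one file (keeping its relative path and timestamps), then verify it."""
    rel = src.relative_to(src_root)
    dst = dst_root / rel
    dst.parent.mkdir(parents=True, exist_ok=True)
    expected = sha256_of(src)
    # Skip files already migrated intact, so a rerun resumes instead of restarting.
    if dst.exists() and sha256_of(dst) == expected:
        return rel.as_posix(), True
    shutil.copy2(src, dst)
    return rel.as_posix(), sha256_of(dst) == expected

def migrate(src_root: Path, dst_root: Path, workers: int = 8) -> list[str]:
    """Migrate every file under src_root; return paths that failed verification."""
    files = [p for p in src_root.rglob("*") if p.is_file()]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(lambda p: migrate_one(p, src_root, dst_root), files)
        return sorted(rel for rel, ok in results if not ok)
```

Threads suit this job because copying is I/O-bound; the worker count is an arbitrary default to tune against the storage and network involved.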

Q 26. How do you prioritize and manage competing demands in a busy archival environment?

Prioritizing and managing competing demands in a busy archival environment requires effective planning and organization. I utilize several strategies:

Prioritization Matrix: I use a prioritization matrix (e.g., Eisenhower Matrix) to categorize tasks based on urgency and importance. This helps me focus on the most critical tasks first.

Project Management Methodologies: I apply project management methodologies like Agile or Kanban to manage multiple projects simultaneously, breaking down large tasks into smaller, manageable units.

Time Management Techniques: I use time management techniques like time blocking and the Pomodoro Technique to improve focus and efficiency.

Resource Allocation: I carefully allocate resources (personnel, budget, technology) to ensure that the most important projects receive the necessary support.

Communication and Collaboration: Open communication with stakeholders is essential. Regular status updates and clearly agreed deadlines keep expectations realistic and everyone aligned.

By applying these methods, I ensure that critical archival tasks are completed on time and within budget, while also accommodating unexpected requests or priorities.

Q 27. How do you stay current with best practices and emerging technologies in document archival?

Staying current with best practices and emerging technologies is essential in the dynamic field of document archival. My approach includes:

Professional Development: I actively participate in conferences, workshops, and webinars to learn about the latest technologies and best practices. I also pursue relevant certifications to enhance my expertise.

Industry Publications and Research: I regularly read industry publications, journals, and research papers to stay informed about new developments and emerging trends.

Networking and Collaboration: I engage with other professionals in the field through professional organizations and online communities to share knowledge and best practices.

Vendor Engagement: I actively engage with vendors of archival software and hardware to stay abreast of product updates and new functionalities.

Technology Trials and Evaluations: I evaluate and test new technologies to assess their suitability for our archival needs before implementing them.

This continuous learning process allows me to adapt quickly to changes in the field and to implement the most effective solutions for our archival needs.

Q 28. Describe a time you had to solve a complex problem related to document archival.

During a major system migration, we encountered a significant challenge with data integrity. After migrating a large portion of the archive to a new cloud-based system, we discovered inconsistencies in some document metadata. This risked compromising the ability to search and retrieve documents accurately. The problem was compounded by the lack of readily available tools for validating metadata at scale.

To solve this, I implemented a multi-step approach:

Root Cause Analysis: We meticulously investigated the root cause of the metadata inconsistencies, identifying a flaw in the data migration script. This involved analyzing log files, comparing data sets, and consulting with the software vendor.

Data Validation Tool Development: Since there wasn’t a suitable off-the-shelf tool, I developed a custom Python script that compared the original and migrated archives, using hashes to verify file integrity and comparing metadata field by field to identify discrepancies and flag affected documents.

Data Remediation: Using the output of the validation script, we systematically corrected the metadata inconsistencies in the migrated archive. This involved manual corrections for some documents and automated updates for others.

Testing and Verification: After remediation, we conducted thorough testing to verify the accuracy and completeness of the metadata and to confirm the integrity of the migrated data.

This experience highlighted the importance of thorough planning, robust testing, and the ability to adapt and develop custom solutions to address unexpected challenges during large-scale data migrations. The successful resolution ensured the integrity of the archive and maintained the organization’s ability to access and manage its documents effectively.
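The comparison step of that validation script can be sketched as follows. This is an illustrative reconstruction, not the script itself: it assumes both archives have been exported to dictionaries mapping a document ID to its metadata fields, including a precomputed `sha256` content hash. The sample data is invented; the swapped day and month simply shows the kind of discrepancy such a check surfaces.

```python
def compare_archives(original: dict[str, dict[str, str]],
                     migrated: dict[str, dict[str, str]]) -> dict:
    """Flag documents whose content hash or metadata differs after migration.

    Both inputs map a document ID to a dict of metadata fields, including a
    precomputed "sha256" content hash (an assumed export format).
    """
    report = {"missing": [], "extra": [], "hash_mismatch": [], "field_diffs": {}}
    for doc_id in sorted(original.keys() | migrated.keys()):
        if doc_id not in migrated:
            report["missing"].append(doc_id)
            continue
        if doc_id not in original:
            report["extra"].append(doc_id)
            continue
        old, new = original[doc_id], migrated[doc_id]
        if old.get("sha256") != new.get("sha256"):
            report["hash_mismatch"].append(doc_id)
        # Compare every metadata field present on either side, except the hash.
        diffs = {
            field: (old.get(field), new.get(field))
            for field in sorted((old.keys() | new.keys()) - {"sha256"})
            if old.get(field) != new.get(field)
        }
        if diffs:
            report["field_diffs"][doc_id] = diffs
    return report

if __name__ == "__main__":
    original = {"D1": {"sha256": "aa", "title": "Board minutes", "date": "2019-04-02"}}
    migrated = {"D1": {"sha256": "aa", "title": "Board minutes", "date": "2019-02-04"}}
    print(compare_archives(original, migrated))
```

The report separates content problems (hash mismatches) from metadata problems (field diffs), which maps directly onto the remediation split between automated updates and manual corrections.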

Key Topics to Learn for Document Archival Interview

- Metadata and Classification: Understanding different metadata schemas (Dublin Core, PREMIS, etc.) and their application in organizing and retrieving documents. Practical application: Explain how you would design a metadata schema for a specific type of document (e.g., legal contracts, medical records).

- Storage and Retrieval Systems: Knowledge of various archival storage systems (cloud-based, on-premise), database management systems (DBMS) relevant to archival work, and efficient retrieval methods. Practical application: Discuss the advantages and disadvantages of different storage solutions for long-term document preservation.

- Digital Preservation Strategies: Understanding the challenges of long-term digital preservation, including format obsolescence, bit rot, and ensuring data integrity. Practical application: Describe your approach to migrating documents from an outdated format to a more stable one.

- Archival Standards and Best Practices: Familiarity with relevant archival standards (e.g., ISO 15489) and best practices for document handling, preservation, and access. Practical application: Explain how you would ensure compliance with relevant archival standards in a specific project.

- Disaster Recovery and Business Continuity: Planning for and implementing strategies to protect archival materials from loss or damage due to natural disasters or other unforeseen events. Practical application: Describe a disaster recovery plan for a specific archival environment.

- Data Security and Access Control: Implementing appropriate security measures to protect confidential information and manage access to archival materials. Practical application: Discuss different access control methods and their suitability for various types of archival documents.

- Workflow and Automation: Understanding and optimizing workflows for document ingestion, processing, storage, and retrieval, including the use of automation tools. Practical application: Describe how you would automate a repetitive task in a document archival process.

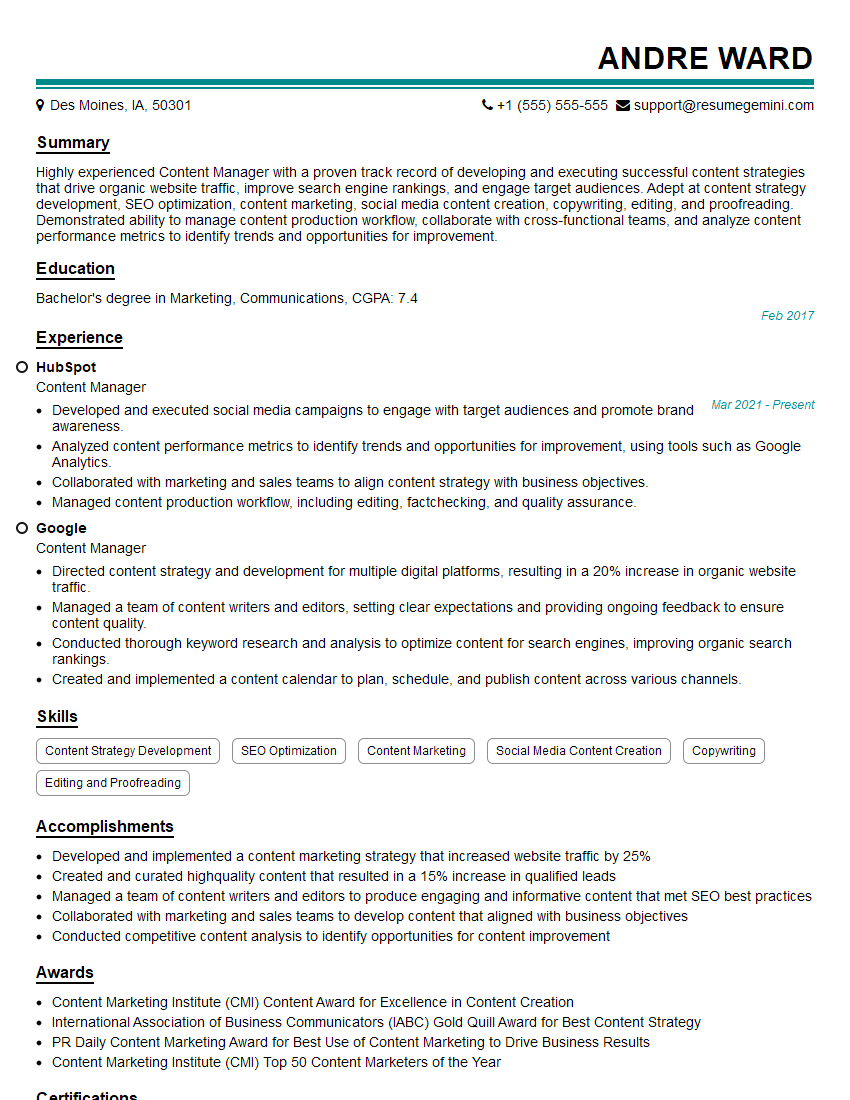

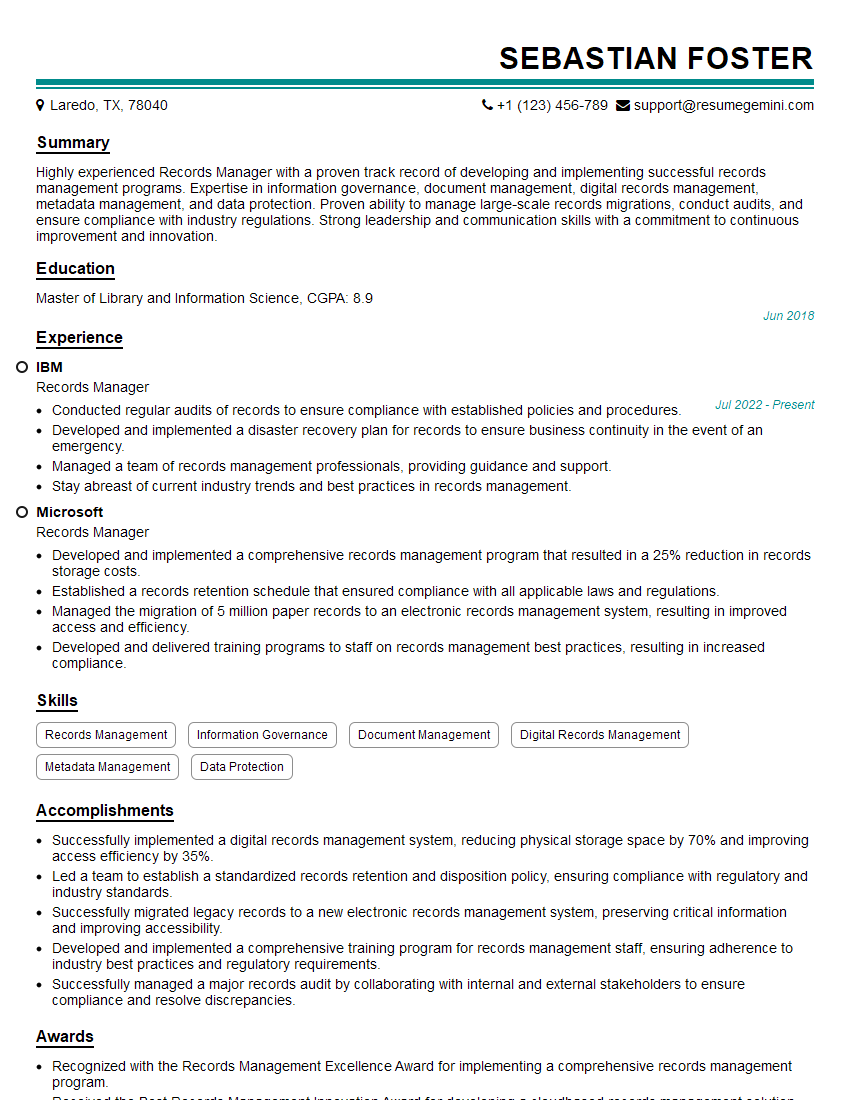

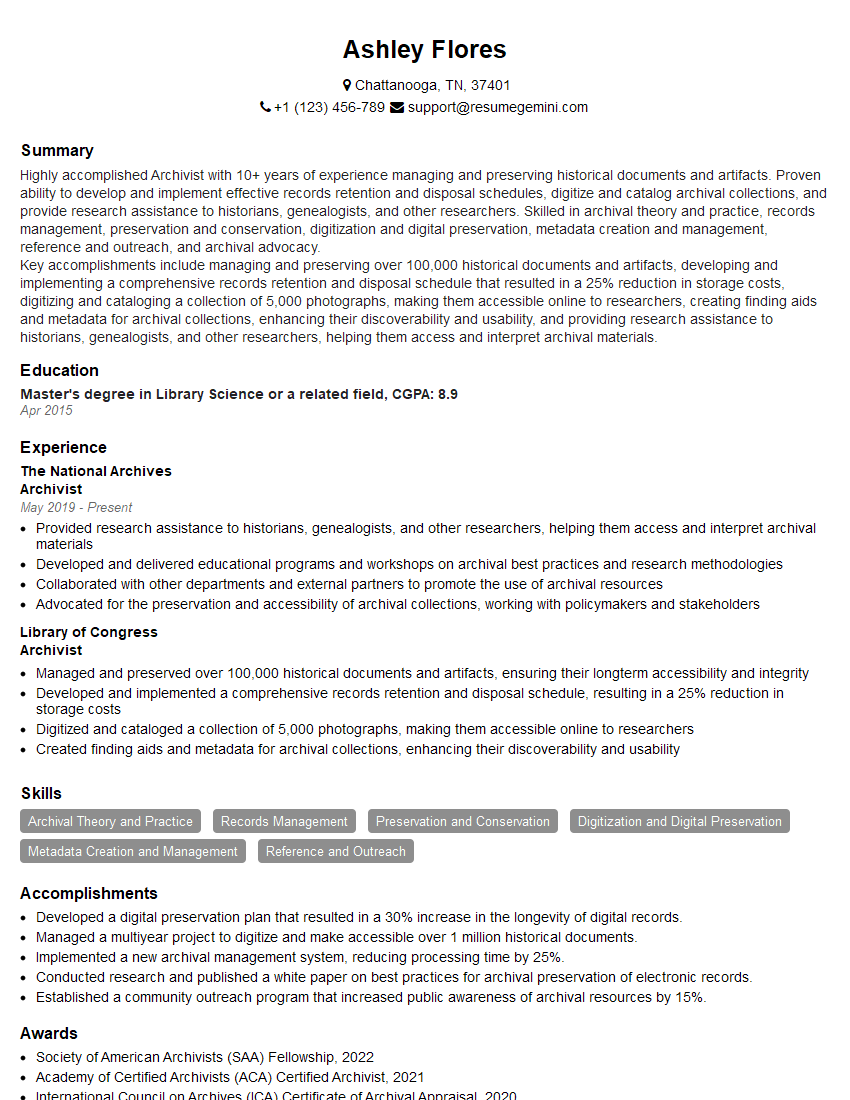

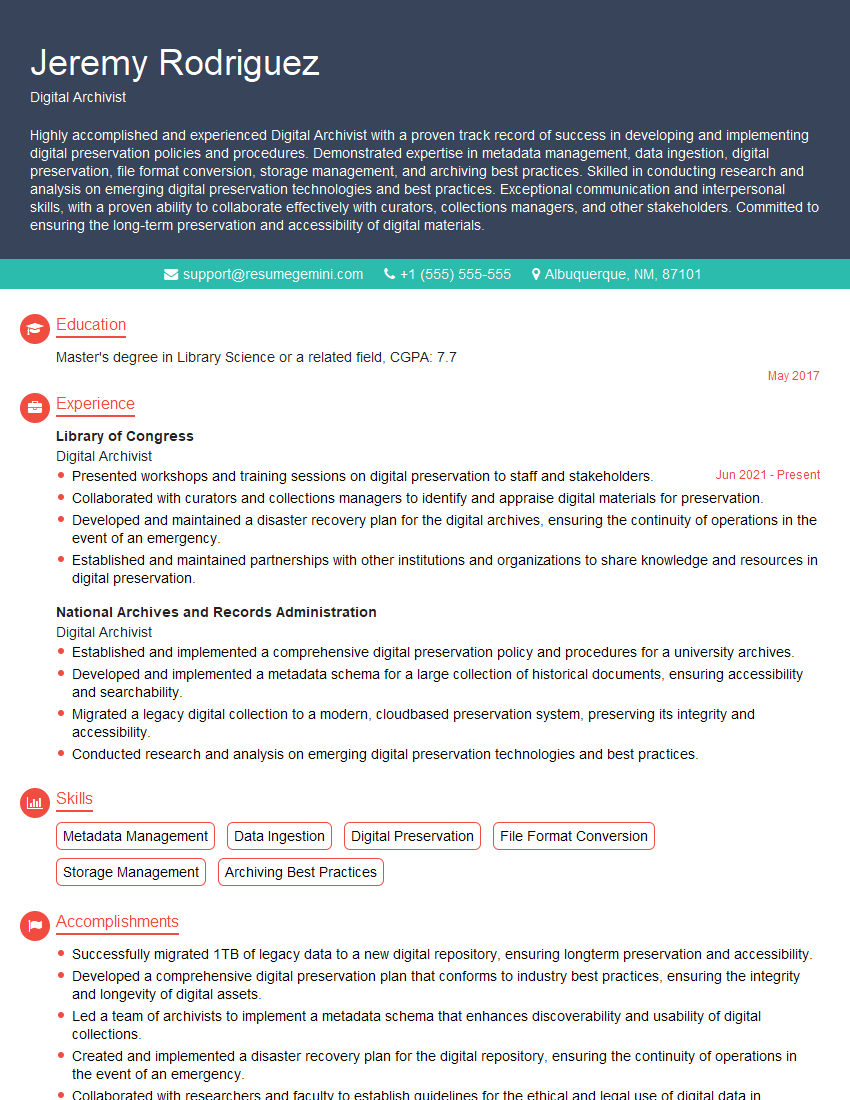

Next Steps

Mastering document archival skills significantly enhances your career prospects in information management, records management, and related fields. It demonstrates a valuable combination of technical expertise and organizational skills highly sought after by employers. To boost your job search success, create a compelling and ATS-friendly resume that highlights your relevant skills and experience. ResumeGemini is a trusted resource that can help you build a professional resume tailored to your specific career goals. Examples of resumes specifically designed for Document Archival professionals are available to provide inspiration and guidance.
