Unlock your full potential by mastering the most common Evaluation and Feedback interview questions. This blog offers a deep dive into the critical topics, ensuring you’re not only prepared to answer but to excel. With these insights, you’ll approach your interview with clarity and confidence.

Questions Asked in Evaluation and Feedback Interviews

Q 1. Explain the difference between formative and summative evaluation.

Formative and summative evaluations are two crucial approaches in assessing progress and outcomes. Think of formative evaluation as the ongoing checkup during a project, while summative evaluation is the final exam at the end.

Formative evaluation focuses on improvement during the process. It’s about gathering feedback while something is being developed or implemented to make necessary adjustments. Examples include pilot testing a new training program, gathering feedback on a draft report, or conducting mid-project reviews. The goal is to identify strengths and weaknesses before the final product or outcome is complete.

Summative evaluation, on the other hand, measures the overall effectiveness or impact after something is completed. This is the final assessment of the project’s success in achieving its goals. Examples include evaluating the overall impact of a training program through post-training performance assessments, analyzing the final sales figures for a marketing campaign, or conducting an end-of-year performance review. The emphasis is on judging overall achievement.

Q 2. Describe three different methods for collecting feedback.

Collecting feedback effectively requires diverse methods to ensure a comprehensive understanding. Here are three powerful approaches:

- Surveys: These are efficient for gathering quantitative and qualitative data from a large group. Structured questionnaires with multiple-choice, rating scales, and open-ended questions can provide insights into satisfaction, perceptions, and areas for improvement. For example, a post-training survey can assess participant satisfaction and identify aspects needing revision.

- Interviews: These provide deeper qualitative insights through one-on-one conversations. They allow for follow-up questions and probing to gain a nuanced understanding of individual experiences. For instance, conducting interviews with employees after implementing a new workflow can reveal challenges and opportunities for optimization.

- Focus Groups: These involve moderated discussions with a small group of individuals to explore specific topics in detail. This method allows for rich interaction and exploration of shared perspectives. For example, a focus group with customers can provide feedback on a new product’s design and features.

Q 3. What are the key components of a well-structured performance review?

A well-structured performance review is a crucial tool for employee development and organizational success. It’s more than just a numerical rating; it’s a conversation.

- Clear Goals and Expectations: The review should begin by reviewing previously established goals and expectations for the review period. This sets the context for evaluating performance against a pre-defined benchmark.

- Objective Assessment of Performance: This section uses concrete examples and data to demonstrate performance against those goals. Avoid vague statements; use specific instances of achievements and areas needing improvement.

- Constructive Feedback: This is a crucial component, focusing on both strengths and weaknesses. It should be specific, actionable, and balanced, offering guidance for future improvement rather than simple criticism.

- Goal Setting for Future Performance: The review should conclude with collaborative goal setting for the next review period. This creates a clear path for future development and aligns individual contributions with organizational objectives.

- Two-Way Communication: The process should involve open dialogue and collaboration between the employee and manager, allowing for mutual feedback and understanding.

Q 4. How do you handle delivering negative feedback effectively?

Delivering negative feedback effectively is a critical skill. The goal isn’t to demoralize but to guide improvement.

- Private Setting: Choose a private and comfortable environment to ensure the individual feels safe and respected.

- Focus on Behavior, Not Personality: Frame the feedback around specific behaviors or actions, avoiding personal attacks. For example, instead of saying “You’re lazy,” say, “The project deadline was missed, which impacted team productivity.”

- Use the SBI Model: Structure your feedback using the Situation-Behavior-Impact model. Describe the situation, the observed behavior, and the impact of that behavior. This provides clarity and context.

- Offer Solutions: Don’t just identify problems; suggest concrete steps for improvement. This demonstrates support and a commitment to helping the individual grow.

- Focus on the Future: End the conversation on a positive note, emphasizing growth and future opportunities. Reiterate your belief in their potential for improvement.

Q 5. Explain your experience with different evaluation models (e.g., Kirkpatrick’s four levels).

Kirkpatrick’s four levels of evaluation provide a comprehensive framework for assessing training effectiveness. I have extensive experience applying this model in various contexts.

- Level 1: Reaction: This assesses participants’ satisfaction and their immediate reactions to the training. Methods include surveys and feedback forms.

- Level 2: Learning: This measures the acquisition of knowledge and skills. Methods include pre- and post-tests, quizzes, and practical demonstrations.

- Level 3: Behavior: This focuses on whether the training has influenced on-the-job performance. Methods include observations, performance reviews, and 360-degree feedback.

- Level 4: Results: This evaluates the overall impact of the training on organizational goals, such as improved productivity or reduced errors. Methods include analyzing key performance indicators (KPIs) and business metrics.

Beyond Kirkpatrick, I’ve also worked with models like the Phillips ROI model, which focuses on quantifying the return on investment of training programs, and the CIPP model (Context, Input, Process, Product), which offers a more holistic view of evaluation encompassing various stages of a program.
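The Phillips model adds a fifth level to Kirkpatrick's four by converting results into money. It reports two headline figures:

- Benefit-cost ratio: program benefits divided by program costs.
- ROI as a percentage: net program benefits divided by program costs, times 100.

The Python sketch below assumes the benefits have already been converted to money and the costs are fully loaded, both in the same currency. The function name and the figures are hypothetical.

```python
def phillips_roi(benefits: float, costs: float) -> tuple[float, float]:
    """Return (benefit-cost ratio, ROI %) for a training program.

    `benefits` are monetized Level 4 results and `costs` are fully loaded
    program costs, in the same currency (illustrative inputs).
    """
    if costs <= 0:
        raise ValueError("costs must be positive")
    bcr = benefits / costs                    # benefit-cost ratio
    roi_pct = (benefits - costs) / costs * 100  # net benefits over costs, as a percentage
    return bcr, roi_pct


# Hypothetical program: 150,000 in monetized benefits for a 100,000 cost.
bcr, roi = phillips_roi(150_000, 100_000)
print(f"BCR = {bcr:.2f}, ROI = {roi:.0f}%")  # BCR = 1.50, ROI = 50%
```

An ROI of 50% means the program returned its costs plus half again. A negative ROI means the monetized benefits fell short of the costs.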

Q 6. How do you ensure feedback is actionable and leads to improvement?

Ensuring feedback is actionable requires careful design and delivery. The feedback needs to be specific, relevant, and tied to clear improvement strategies.

- Specificity: Avoid vague statements; use concrete examples and data to illustrate areas for improvement. For example, instead of saying “Improve your communication,” say “In the last team meeting, your contribution lacked clarity, making it difficult for others to understand your point.”

- Relevance: Focus on areas directly impacting performance and organizational goals. Don’t overwhelm the individual with irrelevant feedback.

- Actionable Steps: Provide concrete steps for improvement. This might include suggesting resources, providing training, or outlining specific actions to take.

- Follow-up: Schedule follow-up meetings to check on progress and provide additional support. This shows a commitment to helping the individual succeed.

- Open Dialogue: Encourage two-way communication. Allow the individual to share their perspective and contribute to the improvement plan. This fosters ownership and commitment.

Q 7. Describe a time you had to provide constructive criticism. What was your approach?

In a previous role, I had to provide constructive criticism to a team member whose project deliverables consistently missed deadlines. My approach involved careful planning and a focus on collaborative problem-solving.

First, I scheduled a private meeting. I began by acknowledging their contributions and positive qualities before focusing on the specific issue of missed deadlines. I used the SBI model: I described the situations where deadlines were missed, the specific behaviors observed (e.g., lack of task prioritization, insufficient time allocation), and the impact on the team and the project (e.g., delays, increased workload for others). I avoided blaming and instead focused on the impact of their actions.

Then, I worked collaboratively to identify the root causes of the missed deadlines. We explored potential solutions, such as improved time management techniques, prioritization strategies, or seeking assistance when needed. We set up clear, achievable goals and a plan for monitoring progress. We agreed on regular check-ins to track their progress and provide further support. The result was a significant improvement in their time management and project delivery, demonstrating that constructive criticism, delivered effectively, can lead to substantial positive change.

Q 8. What metrics do you use to measure the effectiveness of an evaluation or feedback program?

Measuring the effectiveness of an evaluation or feedback program requires a multi-faceted approach, going beyond simple satisfaction surveys. We need to assess both the process and the impact. Key metrics fall into several categories:

- Participation Rates: High participation indicates buy-in and engagement. Low participation might signal issues with the process, such as unclear instructions or lack of perceived value.

- Timeliness: Feedback should be delivered promptly to maximize its impact. Delays can reduce relevance and diminish the effect of the feedback.

- Actionable Insights: The feedback should lead to concrete changes and improvements. We track the number of actions taken based on the feedback received and measure their effectiveness. For example, we might track employee performance improvements after receiving feedback on specific skill gaps.

- Employee Satisfaction with the Process: Surveys measuring employee satisfaction with the feedback process are crucial. This helps identify areas for improvement in the delivery and overall experience. A low satisfaction score indicates problems that need to be addressed, like unclear expectations or perceived unfairness.

- Impact on Key Performance Indicators (KPIs): Ultimately, the feedback program should contribute to improved organizational KPIs. This could include increased productivity, reduced errors, higher employee retention, or improved customer satisfaction. We track these metrics to demonstrate the program’s ROI (Return on Investment).

For example, in a previous role, we implemented a 360-degree feedback program. We tracked participation rates, the time it took managers to act on feedback, employee satisfaction scores, and subsequent changes in team performance metrics such as project completion rates and client satisfaction. The data showed a clear correlation between acting on the feedback received and improvements in team performance.
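The two process metrics in this example are participation rate and the time managers took to act. Both reduce to simple arithmetic once the relevant dates are logged. The minimal sketch below uses hypothetical numbers. It assumes the delivery date of each report and the date of the first follow-up action are recorded somewhere.

```python
from datetime import date
from statistics import median

# Hypothetical 360-degree cycle: rater invitations vs. completed responses.
invited = 40
responded = 30

# Hypothetical (report delivered, first follow-up action) dates for three managers.
delivered_and_acted = [
    (date(2024, 3, 1), date(2024, 3, 8)),
    (date(2024, 3, 1), date(2024, 3, 15)),
    (date(2024, 3, 4), date(2024, 3, 6)),
]

participation_rate = responded / invited
days_to_act = [(acted - delivered).days for delivered, acted in delivered_and_acted]

print(f"Participation rate: {participation_rate:.0%}")  # Participation rate: 75%
print(f"Median days to act: {median(days_to_act)}")     # Median days to act: 7
```

The median is used instead of the mean so that one manager who takes weeks to respond does not distort the picture.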

Q 9. How do you adapt your feedback approach based on the recipient’s personality and learning style?

Adapting my feedback approach is crucial for effectiveness. Understanding individual personality and learning styles ensures the message resonates and leads to positive change. I use a personalized approach, considering factors such as:

- Personality (e.g., Myers-Briggs, DISC): Individuals with different personality types might respond better to different feedback styles. For example, someone who is highly detail-oriented might appreciate a structured, written report, while someone more extroverted might prefer a face-to-face conversation.

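- Learning Style (e.g., Visual, Auditory, Kinesthetic): I vary the delivery method to suit the individual’s preferences. Visual learners might benefit from charts or diagrams, auditory learners from verbal explanations, and kinesthetic learners from hands-on activities or role-playing. Research has found little evidence that matching instruction to a preferred learning style improves outcomes, so I treat these preferences as a way to keep feedback engaging rather than as a rule.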

- Communication Preferences: Some individuals prefer direct and explicit feedback, while others respond better to a more indirect and suggestive approach. I adjust how directly I phrase things while remaining respectful, empathetic, and focused on growth.

For instance, I once worked with an employee who was highly introverted and preferred written communication. Instead of opening with a meeting, I first provided a detailed written evaluation with specific examples of both strengths and weaknesses. This gave her time to process the information before we discussed it. The approach resulted in a much more productive conversation and, ultimately, improved performance.

Q 10. Describe your experience with using data to inform evaluation decisions.

Data is essential for informed evaluation decisions. My experience involves using data to:

- Identify trends and patterns: Analyzing feedback data from multiple sources reveals recurring themes or areas needing attention. For example, consistent negative feedback on a particular skill can indicate a need for training or coaching.

- Measure the impact of interventions: Tracking changes in performance after implementing interventions based on feedback data shows the effectiveness of those actions. For example, we can measure if a training program successfully improved the skills highlighted in previous feedback.

- Improve the evaluation process itself: Analyzing feedback on the evaluation process itself helps improve future iterations, making it more effective and efficient. For example, if employees consistently complain about the length of the survey, we can shorten it or break it into smaller segments.

- Benchmark against industry standards: Comparing our evaluation data with similar organizations helps us identify areas where we excel or fall short. This offers valuable insight into best practices and areas for improvement.

In a recent project, we used data from employee performance reviews and 360-degree feedback to identify skill gaps. This data informed the development of a targeted training program. Within six months, overall team performance improved by 15%, a measurable improvement we attributed to our data-driven approach.
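Spotting recurring themes usually starts with coding open-ended comments into themes. The next step is counting how often each theme appears and from how many distinct sources. The sketch below assumes a reviewer has already done the coding step. The theme tags, the source names, and the "at least two sources" rule are illustrative assumptions, not a standard.

```python
from collections import Counter

# Hypothetical coded feedback: each comment has already been tagged with themes.
coded_feedback = [
    {"source": "performance_review", "themes": ["prioritization", "documentation"]},
    {"source": "360_peer", "themes": ["prioritization"]},
    {"source": "360_direct_report", "themes": ["delegation", "prioritization"]},
    {"source": "360_peer", "themes": ["documentation"]},
]

# How often each theme is mentioned overall.
theme_counts = Counter(t for item in coded_feedback for t in item["themes"])

# Which distinct sources mention each theme.
sources_per_theme = {
    theme: {item["source"] for item in coded_feedback if theme in item["themes"]}
    for theme in theme_counts
}

# Treat a theme as recurring if at least two different sources raise it.
recurring = sorted(t for t, srcs in sources_per_theme.items() if len(srcs) >= 2)

print(theme_counts.most_common())
print(recurring)  # ['documentation', 'prioritization']
```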

Q 11. How do you ensure confidentiality and privacy when collecting and sharing feedback?

Confidentiality and privacy are paramount. I ensure these are protected through:

- Anonymity and Confidentiality Agreements: Participants should be assured their responses will remain confidential. Clear agreements outline how data will be handled and protected.

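- Data Anonymization Techniques: Wherever possible, data is anonymized by removing personally identifiable information. This reduces, but does not always eliminate, the risk of identifying individual contributors. Indirect details, such as a rater's team or role, can still give them away.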

- Secure Data Storage and Access Controls: Feedback data is stored securely, with restricted access only to authorized personnel. We use encryption and password protection to secure the data.

- Transparent Data Handling Procedures: Participants are informed about how their data will be used and protected. Transparency builds trust and encourages participation.

- Compliance with Relevant Regulations: We adhere to all relevant data protection regulations, such as GDPR or CCPA, ensuring compliance with legal requirements.

For example, when administering 360-degree feedback, we clearly explain the anonymity of responses to the participants and employ techniques to ensure that responses cannot be linked back to specific individuals. The aggregated data is then used for reporting purposes.
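One common safeguard in 360-degree reporting is a minimum group size. Scores from a rater group are shown only as an average, and only when enough people responded that no individual answer can be inferred. The minimal Python sketch below assumes a threshold of three raters, though organizations set their own. The group names and ratings are hypothetical.

```python
from statistics import mean
from typing import Optional

MIN_RATERS = 3  # assumed anonymity threshold; set according to your own policy


def aggregate_by_group(
    ratings: dict[str, list[float]], min_raters: int = MIN_RATERS
) -> dict[str, Optional[float]]:
    """Average each rater group's scores, suppressing groups too small to stay anonymous."""
    report: dict[str, Optional[float]] = {}
    for group, scores in ratings.items():
        # Below the threshold the group is reported as None (suppressed), because an
        # average of one or two responses can reveal who said what.
        report[group] = round(mean(scores), 2) if len(scores) >= min_raters else None
    return report


# Hypothetical anonymous responses for one competency (1-5 scale).
ratings = {
    "peers": [3.0, 4.0, 4.0, 5.0],
    "direct_reports": [2.0, 3.0],
}
print(aggregate_by_group(ratings))  # {'peers': 4.0, 'direct_reports': None}
```

Here the two direct-report scores are withheld. In practice, they might be folded into a broader "all others" average rather than dropped entirely.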

Q 12. How do you handle conflicting feedback from multiple sources?

Conflicting feedback is common. Handling it effectively involves:

- Investigate the Source of the Discrepancy: Understanding the context behind different opinions is crucial. This may involve speaking with individuals who provided differing feedback to understand their perspectives.

- Look for Patterns and Common Themes: Even with conflicting feedback, underlying patterns often emerge. Focusing on recurring themes helps identify key areas for improvement.

- Consider the Source’s Credibility and Perspective: Not all feedback carries equal weight. The source’s relationship to the individual being evaluated and their own biases should be taken into account.

- Prioritize Constructive Feedback: Focus on feedback that is actionable and provides specific examples rather than vague or emotional comments.

- Communicate the Synthesis of Feedback: When presenting feedback to the individual, explain how conflicting viewpoints were considered and the overall conclusions drawn.

For instance, if one colleague praises an employee’s communication skills while another criticizes them, I would investigate further. Perhaps the positive feedback refers to written communication, while the negative feedback concerns verbal communication. Addressing both aspects ensures a balanced and comprehensive approach to improvement.
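A quick way to find where sources conflict is to look at the spread of ratings for each competency, not only the average. The sketch below flags competencies whose population standard deviation meets or exceeds an assumed threshold of 1.0 on a 1-5 scale. The data and the threshold are hypothetical. A flag is a prompt to investigate, as in the communication example above, not a verdict.

```python
from statistics import mean, pstdev

SPREAD_THRESHOLD = 1.0  # assumed cut-off on a 1-5 scale; tune for your instrument


def flag_disagreements(ratings: dict[str, list[int]], threshold: float = SPREAD_THRESHOLD) -> list[str]:
    """Return competencies whose ratings vary enough across sources to warrant follow-up."""
    return [c for c, scores in ratings.items() if pstdev(scores) >= threshold]


# Hypothetical 1-5 ratings of one employee from four different raters.
ratings = {
    "communication": [5, 2, 5, 1],
    "reliability": [4, 4, 3, 4],
    "teamwork": [4, 5, 4, 5],
}
for competency, scores in ratings.items():
    print(f"{competency}: mean={mean(scores):.2f}, spread={pstdev(scores):.2f}")
print(flag_disagreements(ratings))  # ['communication']
```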

Q 13. What are some common biases to be aware of when evaluating performance?

Several biases can cloud our judgment during performance evaluations. Awareness and mitigation strategies are essential. Common biases include:

- Recency Bias: Overemphasizing recent events while neglecting earlier performance. Mitigation: Maintain detailed records of performance throughout the evaluation period.

- Halo Effect: Letting one positive trait influence overall assessment. Mitigation: Evaluate each skill or trait independently, using specific examples to support each rating.

- Horns Effect: Letting one negative trait overshadow other positive attributes. Mitigation: Similar to the Halo effect, evaluate each aspect individually and avoid letting one negative aspect taint the whole assessment.

- Confirmation Bias: Seeking out information confirming pre-existing beliefs. Mitigation: Actively look for evidence that contradicts initial assumptions. Seek diverse perspectives.

- Leniency Bias: Rating individuals too generously. Mitigation: Use a standardized rating scale and objective performance data to support ratings.

- Severity Bias: Rating individuals too harshly. Mitigation: Same as for leniency bias. Use objective data and avoid letting emotions cloud judgment.

To minimize bias, using structured evaluation forms with clear criteria, incorporating 360-degree feedback, and regularly calibrating ratings among evaluators can help ensure fairness and accuracy.
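A simple check can support calibration: compare each rater's average with the pooled average of all raters. The sketch assumes every rater scored the same set of employees, for example during a calibration session. Without that condition, a high average might reflect a genuinely stronger team rather than leniency. The tolerance and the data are hypothetical.

```python
from statistics import mean


def calibration_flags(scores_by_rater: dict[str, list[float]], tolerance: float = 0.75) -> dict[str, str]:
    """Compare each rater's average with the pooled average across all raters.

    Assumes every rater scored the same employees; `tolerance` is an
    illustrative threshold, not an established standard.
    """
    overall = mean(s for scores in scores_by_rater.values() for s in scores)
    flags = {}
    for rater, scores in scores_by_rater.items():
        gap = mean(scores) - overall
        if gap > tolerance:
            flags[rater] = "possible leniency"
        elif gap < -tolerance:
            flags[rater] = "possible severity"
        else:
            flags[rater] = "within tolerance"
    return flags


# Hypothetical calibration session: three raters score the same five employees (1-5).
scores_by_rater = {
    "rater_a": [3, 4, 3, 4, 3],
    "rater_b": [5, 5, 4, 5, 5],
    "rater_c": [2, 3, 2, 3, 2],
}
print(calibration_flags(scores_by_rater))
```

A flagged rater is a topic for the calibration discussion, not proof of bias.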

Q 14. What is your experience with 360-degree feedback?

My experience with 360-degree feedback has been extensive and positive. It’s a powerful tool for gaining a comprehensive understanding of an individual’s performance from multiple perspectives. These perspectives typically include:

- Self-assessment: The individual reflects on their own performance.

- Manager assessment: The direct manager provides feedback.

- Peer assessment: Colleagues provide feedback.

- Subordinate assessment (if applicable): Direct reports provide feedback.

- Client assessment (if applicable): External stakeholders provide feedback.

The benefits include a holistic view of performance, increased self-awareness for the individual, and better identification of areas for growth. However, the 360-degree process must be carefully designed and implemented to protect anonymity, manage potential biases, and keep the feedback constructive. The data should be analyzed cautiously, focusing on trends and patterns rather than individual comments. Clear guidelines and training for participants are essential to the program's success. In one instance, we used 360-degree feedback to support leadership development, which led to noticeable improvements in team collaboration and managerial skills.
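One analysis often drawn from 360-degree data is the self-other gap. This is the self-rating minus the average of everyone else's ratings for the same competency. A large positive gap is commonly read as a possible blind spot, and a negative gap as an underrated strength. A minimal sketch with hypothetical ratings:

```python
from statistics import mean


def self_other_gap(self_score: float, other_scores: list[float]) -> float:
    """Self rating minus the average of all other raters (positive = rates self higher)."""
    return self_score - mean(other_scores)


# Hypothetical 1-5 ratings for one leader on two competencies.
competencies = {
    "delegation": {"self": 4.5, "others": [3.0, 3.5, 3.0, 2.5]},
    "coaching": {"self": 3.0, "others": [3.5, 4.0, 3.5, 4.0]},
}
for name, r in competencies.items():
    gap = self_other_gap(r["self"], r["others"])
    print(f"{name}: self-other gap {gap:+.2f}")
# delegation: self-other gap +1.50
# coaching: self-other gap -0.75
```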

Q 15. How do you ensure that evaluations are fair and unbiased?

Ensuring that evaluations are fair and unbiased is paramount. This means minimizing personal biases and making the evaluation process itself objective and transparent. It involves several key steps:

- Clearly Defined Criteria: Develop evaluation criteria that are specific, measurable, achievable, relevant, and time-bound (SMART). This leaves less room for subjective interpretation. For example, instead of ‘good communication skills,’ specify ‘clearly articulates ideas, actively listens to others, and provides constructive feedback during meetings.’

- Multiple Raters/Data Sources: Employing multiple raters helps mitigate individual biases. Triangulation, using different data sources like self-assessment, peer review, and supervisor observation, further strengthens the validity of the evaluation.

- Training and Calibration: Train evaluators on the evaluation criteria and procedures to ensure consistent application. Calibration sessions where raters evaluate the same sample data and discuss discrepancies can help align interpretations.

- Anonymity and Confidentiality: Where appropriate, maintaining anonymity during the evaluation process helps reduce bias based on personal relationships or perceived status. Confidentiality is crucial to encourage honest self-reflection and feedback.

- Regular Review and Improvement: The evaluation process itself should be regularly reviewed and updated to identify and address potential biases or weaknesses. This ensures ongoing fairness and improvement.

For instance, in a performance review, instead of relying solely on a manager’s subjective judgment, incorporating 360-degree feedback from peers and subordinates creates a more balanced and comprehensive view of an employee’s performance.
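During calibration sessions, Cohen's kappa can quantify agreement between two raters who score the same sample. It corrects raw agreement for the agreement expected by chance: κ = (p_o - p_e) / (1 - p_e). Here p_o is the observed agreement and p_e is the chance agreement. The sketch below implements unweighted kappa for categorical ratings. For ordinal scales, a weighted kappa is often preferred. The rating labels and data are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Chance-corrected agreement between two raters who scored the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same, non-empty set of items")
    n = len(rater_a)
    # p_o: share of items on which the two raters chose the same category.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # p_e: agreement expected if each rater picked categories at their own base rates.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # both raters used one identical category for every item
    return (observed - expected) / (1 - expected)


# Hypothetical calibration: two managers rate the same ten sample reviews.
rater_a = ["meets", "meets", "exceeds", "below", "meets",
           "exceeds", "meets", "below", "meets", "exceeds"]
rater_b = ["meets", "exceeds", "exceeds", "below", "meets",
           "meets", "meets", "below", "meets", "exceeds"]
print(f"kappa = {cohens_kappa(rater_a, rater_b):.2f}")  # kappa = 0.68
```

In this example, the raters agree on 8 of 10 reviews (p_o = 0.80), chance agreement is p_e = 0.38, and κ ≈ 0.68. A low kappa signals that the criteria definitions need revisiting before the live review cycle.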

Q 16. Describe your experience with developing evaluation instruments (surveys, rubrics, etc.).

I have extensive experience designing and implementing various evaluation instruments. This includes:

- Surveys: I’ve designed numerous employee satisfaction surveys, customer feedback questionnaires, and program evaluation surveys. I’m proficient in using survey platforms like Qualtrics and SurveyMonkey, ensuring user-friendly interfaces and statistically sound questionnaire design. This includes carefully choosing question types (Likert scales, multiple choice, open-ended questions) to collect the most relevant and reliable data.

- Rubrics: I’ve developed detailed rubrics for assessing student projects, presentations, and essays, as well as employee performance against specific job requirements. These rubrics clearly define performance levels and associated criteria, allowing for consistent and objective scoring.

- Behavioral Observation Checklists: I have experience designing and implementing checklists for observing specific behaviors in training programs or real-world settings. This provides a structured approach to data collection and promotes objectivity.

For example, in creating a rubric for evaluating student presentations, I’d specify criteria like organization, clarity of communication, visual aids, use of evidence, and audience engagement, each with clearly defined levels of achievement (e.g., excellent, good, fair, poor). This promotes consistent and less biased grading across different students.
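A rubric like this can be turned into a consistent score by mapping each level to points and, optionally, weighting the criteria. The weights below are an assumption for illustration only. The rubric described above does not specify any, and equal weights would be an equally valid choice.

```python
# Hypothetical rubric: the level-to-point mapping and criterion weights are assumptions.
LEVEL_POINTS = {"poor": 1, "fair": 2, "good": 3, "excellent": 4}
CRITERIA_WEIGHTS = {
    "organization": 0.25,
    "clarity": 0.25,
    "visual_aids": 0.15,
    "use_of_evidence": 0.20,
    "audience_engagement": 0.15,
}  # weights sum to 1.0, so the result stays on the 1-4 point scale


def rubric_score(levels: dict[str, str]) -> float:
    """Weighted score for one presentation; every criterion must be rated."""
    missing = CRITERIA_WEIGHTS.keys() - levels.keys()
    if missing:
        raise ValueError(f"unrated criteria: {sorted(missing)}")
    return sum(w * LEVEL_POINTS[levels[c]] for c, w in CRITERIA_WEIGHTS.items())


score = rubric_score({
    "organization": "excellent",
    "clarity": "good",
    "visual_aids": "fair",
    "use_of_evidence": "good",
    "audience_engagement": "excellent",
})
print(f"{score:.2f} / 4")  # 3.25 / 4
```

Rejecting a rubric with unrated criteria keeps one skipped row from silently lowering a student's score.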

Q 17. How do you track the impact of feedback interventions?

Tracking the impact of feedback interventions requires a systematic approach. It’s not enough to just give feedback; we need to measure whether it actually led to improvement. I typically employ these methods:

- Pre- and Post-Intervention Measurement: Gather data before and after the feedback intervention to assess changes in behavior, performance, or attitudes. This could involve repeated surveys, performance evaluations, or observations.

- Control Groups: Where feasible, comparing the results of a group that received feedback with a control group that didn’t helps isolate the impact of the intervention.

- Qualitative Data Collection: Interviews or focus groups can provide valuable insights into the impact of feedback. These qualitative data can enrich quantitative findings by explaining the ‘why’ behind observed changes.

- Statistical Analysis: Analyzing pre- and post-intervention data with appropriate statistical methods (e.g., t-tests, ANOVA) can determine whether observed changes are statistically significant. This helps assess how likely it is that the changes are due to chance alone.

For example, in a leadership development program, we might measure leadership skills using a pre-program assessment and then again after the program, incorporating feedback sessions as a key part of the program. Comparing the scores can indicate the effectiveness of the feedback integrated into the training.
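When a control group is available, a difference-in-differences estimate is one way to combine the pre/post and control-group ideas above. It takes the change in the group that received feedback and subtracts the change in the control group over the same period. This technique is named here for illustration and is not the only option. It assumes both groups would have changed similarly without the intervention. It also gives a point estimate only, so a significance test or regression would still be needed. The scores below are hypothetical.

```python
from statistics import mean


def difference_in_differences(treat_pre, treat_post, ctrl_pre, ctrl_post) -> float:
    """Change in the feedback group minus change in the control group."""
    treated_change = mean(treat_post) - mean(treat_pre)
    control_change = mean(ctrl_post) - mean(ctrl_pre)
    return treated_change - control_change


# Hypothetical leadership-assessment scores (0-100) before and after the program.
treat_pre = [62, 58, 70, 65]
treat_post = [74, 69, 80, 73]
ctrl_pre = [60, 63, 68, 61]
ctrl_post = [64, 66, 71, 63]

effect = difference_in_differences(treat_pre, treat_post, ctrl_pre, ctrl_post)
print(f"Estimated effect: {effect:+.2f} points")  # Estimated effect: +7.25 points
```

With these numbers, the feedback group improved by 10.25 points and the control group by 3.00. The estimated effect is therefore about 7.25 points, not the naive 10.25.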

Q 18. Explain your understanding of different feedback delivery methods (e.g., written, verbal, digital).

Different feedback delivery methods each have strengths and weaknesses. Choosing the right method depends on the context, the audience, and the nature of the feedback.

- Written Feedback: Provides a permanent record, allows for thoughtful reflection, and can be easily shared and referenced later. However, it can lack the nuance and immediate impact of verbal feedback, and misinterpretations are possible.

- Verbal Feedback: Allows for immediate clarification and interaction, builds rapport, and can be tailored to the individual. It’s more personal but may lack structure, and the content is easily forgotten without a follow-up.

- Digital Feedback: Offers flexibility, convenience, and allows for efficient delivery to multiple recipients. Tools like online platforms and learning management systems facilitate this, but issues like technological barriers or lack of personalization need consideration.

A blended approach often works best. For instance, delivering initial feedback verbally and then following up with written documentation ensures both immediate impact and long-term record-keeping. I adapt my methods to suit the situation – a quick verbal correction might be suitable for a minor issue, while more substantial feedback requires a more structured written format.

Q 19. How do you ensure that feedback is timely and relevant?

Timely and relevant feedback is crucial for its effectiveness. Delays can diminish the impact, and irrelevant feedback is simply ignored. Here’s how I ensure timeliness and relevance:

- Establish Clear Timeframes: Setting expectations regarding when feedback will be provided ensures timeliness. For example, indicating that feedback on a project will be delivered within one week helps manage expectations.

- Link Feedback to Specific Events: Feedback should be directly connected to specific events, behaviors, or performance. For instance, feedback on a presentation should immediately follow the presentation.

- Prioritize Specific, Actionable Points: Rather than offering vague or overwhelming feedback, I focus on a few key areas for improvement. This makes the feedback easier to absorb and act upon. Using the STAR method (Situation, Task, Action, Result) can make feedback more concrete and relevant.

- Regular Check-Ins: Regular, informal check-ins help establish ongoing dialogue and offer opportunities for relevant, timely feedback.

For example, instead of waiting for a formal annual performance review, I’d provide regular feedback after important meetings or project milestones. This allows the individual to adjust their work more promptly and improve their effectiveness.

Q 20. What strategies do you use to encourage open communication and feedback sharing?

Encouraging open communication and feedback sharing requires creating a safe and supportive environment. This involves:

- Building Trust: Establishing a culture of trust is essential for individuals to feel comfortable sharing both positive and negative feedback. This involves demonstrating respect, active listening, and a commitment to acting on feedback.

- Providing a Safe Space: Emphasizing confidentiality and anonymity where necessary helps encourage honest feedback. Clearly communicate the purpose of feedback and how it will be used.

- Active Listening and Validation: Show appreciation for the feedback given, acknowledging its value and importance, even if you don’t agree with everything.

- Two-way Communication: Feedback should be a two-way street. Actively seek feedback from others and share your own thoughts and perspectives.

- Implementing Feedback: Demonstrate that feedback is valued by acting on it and communicating the changes made as a result. This shows that feedback is taken seriously.

For instance, implementing an anonymous suggestion box allows individuals to offer feedback without fear of reprisal. Following up on suggestions and communicating changes made shows that the feedback was heard and valued. This demonstrates a commitment to continuous improvement and creates a more open and collaborative environment.

Q 21. How familiar are you with different statistical methods used in evaluation?

My familiarity with statistical methods used in evaluation is extensive. I’m proficient in applying various techniques depending on the type of data and research questions.

- Descriptive Statistics: Calculating measures of central tendency (mean, median, mode) and dispersion (standard deviation, variance) to summarize data and identify trends.

- Inferential Statistics: Using techniques like t-tests, ANOVA, and regression analysis to test hypotheses and draw inferences from sample data to the population. For example, determining if there’s a statistically significant difference in performance before and after a training program.

- Correlation Analysis: Examining how strongly two variables move together. For instance, determining the correlation between employee satisfaction and productivity. Correlation alone does not show that changes in one variable cause changes in the other.

- Factor Analysis: Reducing a large number of variables into a smaller set of underlying factors. This is useful for analyzing survey data and identifying key themes or dimensions.

I also understand the importance of selecting appropriate statistical tests based on the nature of the data (parametric vs. non-parametric) and of checking that each test's assumptions are met. SPSS and R are tools I routinely use for data analysis and interpretation. My expertise extends to interpreting results and communicating findings clearly and concisely to both technical and non-technical audiences.
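The descriptive, correlation, and paired-comparison ideas above can be sketched with Python's standard library alone. The data are hypothetical. The paired t statistic has n - 1 degrees of freedom. Converting it to a p-value requires the t distribution, which a package such as SciPy provides (`scipy.stats.ttest_rel`).

```python
from math import sqrt
from statistics import mean, median, stdev


def describe(values):
    """Central tendency and dispersion (sample standard deviation)."""
    return {"mean": mean(values), "median": median(values), "sd": stdev(values)}


def pearson_r(x, y):
    """Pearson correlation coefficient; it measures association, not causation."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


def paired_t(pre, post):
    """Paired-samples t statistic for the post - pre differences (df = n - 1)."""
    diffs = [b - a for a, b in zip(pre, post)]
    return mean(diffs) / (stdev(diffs) / sqrt(len(diffs)))


# Hypothetical data for five employees.
satisfaction = [3.2, 4.1, 3.8, 4.5, 2.9]
productivity = [71, 82, 78, 88, 65]
pre = [70, 65, 80, 72, 68]    # scores before a training program
post = [75, 70, 82, 78, 70]   # scores after the same program

print(describe(productivity))
print(f"r = {pearson_r(satisfaction, productivity):.3f}")
print(f"t = {paired_t(pre, post):.2f}, df = {len(pre) - 1}")
```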

Q 22. How do you deal with resistance to feedback?

Resistance to feedback is common, stemming from fear of criticism, defensiveness, or a belief that the feedback is inaccurate or unhelpful. My approach is multifaceted and focuses on building trust and creating a safe space for dialogue.

- Active Listening and Empathy: I begin by actively listening to the individual’s concerns, validating their feelings, and demonstrating empathy. Understanding their perspective is crucial before addressing the feedback itself.

- Framing Feedback Positively: I focus on framing the feedback as an opportunity for growth and development, highlighting the positive aspects of their performance alongside areas for improvement. Instead of saying “You made this mistake,” I might say “I noticed this challenge, and here are some strategies to address it.”

- Collaborative Problem-Solving: I treat the feedback session as a collaborative problem-solving exercise. I encourage the individual to contribute ideas and solutions, empowering them to take ownership of their development.

- Focusing on Behavior, Not Personality: I concentrate on specific behaviors and their impact, avoiding generalizations or personal attacks. For instance, instead of saying “You’re lazy,” I’d say “I’ve noticed that deadlines have been missed recently. Let’s discuss how we can improve time management.”

- Follow-up and Support: After the feedback session, I follow up to check on progress, offer support, and provide resources for further development. This consistent support demonstrates my commitment to their success.

For example, I once worked with a team member resistant to feedback on their communication style. By actively listening to their concerns about perceived criticism, reframing feedback as constructive advice, and collaboratively developing communication strategies, I successfully helped them improve their communication skills and become more receptive to feedback in the future.

Q 23. What is your approach to designing an evaluation plan for a new program or initiative?

Designing an evaluation plan requires a systematic approach, ensuring alignment with program objectives and the use of appropriate methodologies. My process involves these key steps:

- Defining Program Objectives: Clearly outlining the goals and intended outcomes of the new program or initiative is fundamental. This sets the stage for developing relevant evaluation criteria.

- Identifying Key Performance Indicators (KPIs): KPIs provide measurable metrics to track progress towards the program objectives. These should be SMART (Specific, Measurable, Achievable, Relevant, and Time-bound).

- Selecting Evaluation Methods: The choice of methods (e.g., quantitative surveys, qualitative interviews, focus groups, document review) depends on the program’s nature and objectives. A mixed-methods approach often provides the most comprehensive understanding.

- Developing Data Collection Instruments: This includes creating surveys, interview guides, observation checklists, or other tools to gather relevant data. Pilot testing these instruments is crucial to ensure their reliability and validity.

- Establishing a Timeline and Budget: A realistic timeline and budget must account for the resources needed for data collection, analysis, and reporting.

- Data Analysis Plan: Defining how data will be analyzed to answer the evaluation questions is vital. This plan might include statistical analysis, thematic analysis, or other techniques appropriate for the collected data.

- Reporting and Dissemination: A plan for reporting the findings to stakeholders and using the results for program improvement is necessary. The report should be clear, concise, and actionable.
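One common way to check the reliability of a multi-item survey during pilot testing is Cronbach's alpha, a measure of internal consistency. The sketch below assumes every respondent answered every item on the same numeric scale; the pilot responses are hypothetical. Values of roughly 0.7 or higher are often treated as acceptable, though the appropriate threshold depends on the instrument and its purpose.

```python
from statistics import variance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a set of survey items.

    responses: one inner list per respondent, one score per item, same item order.
    """
    k = len(responses[0])  # number of items
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least 2 items and 2 respondents")
    if any(len(row) != k for row in responses):
        raise ValueError("every respondent must answer every item")
    # Sample variance of each item across respondents
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of each respondent's total score
    total_var = variance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total scores do not vary; alpha is undefined")
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical pilot: 5 respondents x 3 items on a 1-5 scale
pilot = [
    [4, 5, 4],
    [3, 3, 4],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 3],
]
print(f"alpha = {cronbach_alpha(pilot):.2f}")
```

Alpha addresses only one aspect of reliability. Validity (whether the items measure what you intend) still needs separate review, for example through cognitive interviews with pilot respondents.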

For instance, when launching a new employee training program, KPIs might include employee satisfaction scores, improvements in job performance metrics, and reduction in error rates. The evaluation plan would specify how these KPIs will be measured and analyzed to determine the program’s effectiveness.
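As an illustration of how such KPIs might be tracked, the sketch below compares a baseline measurement with a follow-up measurement for each KPI. The `Kpi` class and all figures are hypothetical. Because some KPIs improve by going up (satisfaction) and others by going down (error rate), each one records its direction.

```python
from dataclasses import dataclass


@dataclass
class Kpi:
    name: str
    baseline: float         # value measured before the training
    follow_up: float        # value measured after the training
    higher_is_better: bool  # direction in which the KPI improves

    def relative_change(self) -> float:
        """Fractional change from baseline (e.g. -0.25 means a 25% drop)."""
        if self.baseline == 0:
            raise ValueError(f"{self.name}: a baseline of 0 makes relative change undefined")
        return (self.follow_up - self.baseline) / self.baseline

    def improved(self) -> bool:
        change = self.relative_change()
        return change > 0 if self.higher_is_better else change < 0


# Hypothetical before/after measurements for a training program
kpis = [
    Kpi("satisfaction score (1-5)", 3.4, 4.1, higher_is_better=True),
    Kpi("error rate (errors per 100 tasks)", 8.0, 6.0, higher_is_better=False),
]
for k in kpis:
    status = "improved" if k.improved() else "not improved"
    print(f"{k.name}: {k.relative_change():+.0%} ({status})")
```

A simple before/after comparison shows change, not cause. Comparing against a group that has not yet been trained strengthens the case that the program produced the improvement.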

Q 24. How do you utilize technology to enhance the feedback process?

Technology plays a crucial role in enhancing the feedback process, offering increased efficiency, anonymity, and data-driven insights. Here’s how I utilize technology:

- Online Survey Tools: Platforms like SurveyMonkey or Qualtrics allow for the easy creation and distribution of surveys, gathering large amounts of feedback efficiently. These tools offer data analysis features to generate reports and visualizations.

- Feedback Software: Tools like 15Five or TINYpulse facilitate regular check-ins, 360-degree feedback, and performance reviews, streamlining the process and providing a centralized platform for feedback management.

- Collaboration Platforms: Tools like Slack or Microsoft Teams enable real-time feedback and discussions, fostering a culture of open communication and continuous improvement.

- Project Management Software: Platforms such as Asana or Jira can integrate feedback directly into project workflows, allowing for continuous monitoring and improvement of projects based on real-time feedback.

- Data Analytics and Visualization: Using data analytics tools to process feedback data provides valuable insights into trends and patterns, informing decision-making and improving future programs or initiatives.
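When analytics tools summarize anonymous feedback, a common safeguard is to suppress results for groups too small to stay anonymous. The sketch below averages ratings per team and hides any team with fewer responses than a minimum group size. The threshold of 5 and the `summarize` function are assumptions for illustration, not a feature of any particular survey platform.

```python
from collections import defaultdict
from statistics import mean
from typing import Optional

MIN_GROUP_SIZE = 5  # assumed anonymity threshold; set per your organization's policy


def summarize(
    responses: list[tuple[str, int]], min_group: int = MIN_GROUP_SIZE
) -> dict[str, Optional[float]]:
    """Average rating per team, suppressing teams with too few responses.

    responses: (team, rating) pairs that carry no respondent identifiers.
    """
    by_team: dict[str, list[int]] = defaultdict(list)
    for team, rating in responses:
        by_team[team].append(rating)
    # None marks a suppressed group, so small teams cannot be singled out
    return {
        team: round(mean(ratings), 2) if len(ratings) >= min_group else None
        for team, ratings in by_team.items()
    }


# Hypothetical anonymous ratings on a 1-5 scale
responses = [
    ("sales", 4), ("sales", 5), ("sales", 3), ("sales", 4), ("sales", 4), ("sales", 5),
    ("legal", 2), ("legal", 5),
]
print(summarize(responses))
```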

For example, using SurveyMonkey to collect anonymous feedback on a new product launch allows for honest feedback without fear of reprisal, providing insights that might otherwise be overlooked.

Q 25. Describe your experience using different feedback tools and software.

My experience encompasses a range of feedback tools and software, each with its own strengths and weaknesses. I’ve used:

- SurveyMonkey: Excellent for large-scale surveys, offering various question types and robust data analysis features. However, it can lack the flexibility for highly customized feedback processes.

- Qualtrics: A more sophisticated platform with advanced features like branching logic and experimental designs. It’s suitable for complex research and evaluation projects but requires more technical expertise.

- 15Five: A strong choice for regular check-ins and performance management, providing a streamlined process for both employees and managers. It excels in facilitating open communication and continuous feedback.

- TINYpulse: Similar to 15Five, focusing on employee engagement and feedback. Its strengths lie in its ease of use and integration with other HR systems.

- Microsoft Forms: A simpler, more readily accessible option suitable for smaller-scale surveys and quick feedback collection.

The choice of tool depends on the specific needs of the evaluation, considering factors like budget, technical expertise, and the complexity of the feedback process.

Q 26. How do you ensure alignment between individual goals and organizational objectives in performance evaluations?

Aligning individual goals with organizational objectives is crucial for effective performance evaluations. My approach involves:

- Clear Communication of Organizational Goals: Ensuring that employees understand the organization’s strategic direction, values, and key objectives is paramount. This might involve regular communication, workshops, or team meetings.

- Goal Setting Collaboration: I facilitate collaborative goal-setting sessions where employees actively participate in defining their individual goals, ensuring alignment with broader organizational objectives. This process makes employees feel more invested and accountable.

- SMART Goals: Employing the SMART framework (Specific, Measurable, Achievable, Relevant, and Time-bound) helps ensure that individual goals are well-defined and measurable and that they contribute directly to organizational success.

- Regular Check-ins and Progress Monitoring: Regular check-ins provide opportunities for feedback, adjustments to goals, and support to ensure progress towards both individual and organizational objectives.

- Performance Evaluation tied to Goals: Performance evaluations should directly assess the employee’s progress toward their goals, reflecting their contribution to the organization’s overall success.

For example, during the goal-setting process, a sales representative’s individual goal of increasing sales by 15% might be directly linked to the organization’s overall revenue target.
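One simple alignment check is to compare the sum of individual targets with the organizational target they are meant to support. The sketch below assumes each representative's target is last year's sales plus 15%. The rep names, sales figures, and the 1.2M organizational target are all hypothetical.

```python
def coverage(org_target: float, individual_targets: dict[str, float]) -> float:
    """Share of the organizational target covered by the sum of individual targets."""
    if org_target <= 0:
        raise ValueError("organizational target must be positive")
    return sum(individual_targets.values()) / org_target


# Hypothetical: each rep's target is last year's sales plus 15%
last_year = {"rep_a": 400_000, "rep_b": 350_000, "rep_c": 250_000}
targets = {rep: sales * 1.15 for rep, sales in last_year.items()}
org_revenue_target = 1_200_000

share = coverage(org_revenue_target, targets)
print(f"Individual targets cover {share:.0%} of the organizational target")
```

Coverage below 100% signals a gap to resolve during goal-setting, for example by adjusting individual targets or revisiting the organizational figure.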

Q 27. How do you maintain objectivity and professionalism while providing performance feedback?

Maintaining objectivity and professionalism in providing performance feedback requires a conscious effort and a structured approach. I focus on:

- Data-Driven Feedback: Relying on objective data, such as performance metrics, project deliverables, and observable behaviors, minimizes bias and ensures feedback is grounded in evidence.

- Specific and Behavioral Examples: Using concrete examples of behavior rather than generalizations ensures clarity and avoids ambiguity. For instance, instead of saying “You need to improve your teamwork,” I’d say “During the recent project, I observed that you didn’t collaborate effectively with the design team, resulting in delays.”

- Focusing on Improvement, Not Blame: The goal of feedback is improvement, not blame. Framing the feedback constructively, focusing on solutions and future development, cultivates a growth mindset.

- Using a Balanced Approach: Highlighting both strengths and areas for improvement provides a comprehensive and fair assessment. This approach builds confidence and encourages growth.

- Neutral and Respectful Language: Using neutral language, avoiding emotional words or judgments, maintains professionalism and ensures the feedback is received constructively.

- Active Listening and Open Dialogue: Encouraging open dialogue and actively listening to the employee’s perspective creates a safe space for feedback and fosters a collaborative environment.

By following this structured approach, I ensure the feedback is fair, constructive, and promotes growth without compromising objectivity or professionalism.

Q 28. Describe a situation where you had to revise an evaluation method due to unexpected results or challenges.

In one instance, we implemented a new customer satisfaction survey to evaluate a newly launched service. The initial results revealed unexpectedly low satisfaction scores, contradicting our internal assessment. This discrepancy highlighted a flaw in our evaluation method.

After analyzing the data and conducting qualitative interviews, we discovered that the survey questions were poorly worded and didn’t capture the full customer experience. The questions lacked specificity, leading to biased responses and unclear insights.

To address this, we revised the survey, replacing vague items with precise, targeted questions about specific aspects of the service. We added open-ended questions to gather richer qualitative data and refined our sampling technique so that respondents better represented our customer base.

The revised survey provided more accurate and insightful data, aligning better with the qualitative feedback. This experience underscored the importance of pilot testing, rigorous design, and iterative refinement in evaluation methodologies.
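The story does not specify how the sampling was refined. One common way to make a sample more representative is proportional stratified sampling, in which each customer segment contributes respondents in proportion to its size. The sketch below assumes segments are defined by plan tier; the customer list and tiers are hypothetical.

```python
import random
from collections import defaultdict
from typing import Callable, Hashable, List, Sequence, TypeVar

T = TypeVar("T")


def stratified_sample(
    population: Sequence[T],
    stratum_of: Callable[[T], Hashable],
    n: int,
    seed: int = 0,
) -> List[T]:
    """Proportional stratified sample: each stratum contributes in proportion to its size.

    Per-stratum sizes are rounded, so the total can differ slightly from n.
    """
    if not population:
        raise ValueError("population is empty")
    rng = random.Random(seed)  # fixed seed for a reproducible draw
    strata = defaultdict(list)
    for item in population:
        strata[stratum_of(item)].append(item)
    sample: List[T] = []
    for members in strata.values():
        k = round(n * len(members) / len(population))
        sample.extend(rng.sample(members, min(k, len(members))))
    return sample


# Hypothetical customer list: (customer_id, plan tier)
customers = (
    [(i, "basic") for i in range(60)]
    + [(i, "premium") for i in range(60, 90)]
    + [(i, "enterprise") for i in range(90, 100)]
)
picked = stratified_sample(customers, stratum_of=lambda c: c[1], n=20)
```

With a simple random sample, a small segment such as enterprise customers could be missed entirely. Stratifying guarantees that each segment is represented.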

Key Topics to Learn for Evaluation and Feedback Interviews

- Constructive Feedback Delivery: Learn techniques for providing feedback that is both specific and actionable, focusing on behavior rather than personality. Consider models like the SBI (Situation-Behavior-Impact) method.

- Effective Performance Evaluation Methods: Explore various performance appraisal methods (e.g., 360-degree feedback, goal setting, performance management systems) and their strengths and weaknesses. Understand how to choose the most appropriate method for different contexts.

- Bias Mitigation in Evaluation: Address potential biases that can influence evaluations (e.g., confirmation bias, halo effect). Learn strategies for minimizing bias and ensuring fair and objective assessments.

- Feedback Reception and Self-Reflection: Understand how to receive feedback effectively, focusing on learning and growth. Explore techniques for self-reflection and identifying areas for improvement.

- Using Data to Inform Evaluations: Learn how to utilize data and metrics to support performance evaluations and provide concrete evidence for feedback. This includes understanding relevant KPIs and data analysis techniques.

- Developing and Implementing Performance Improvement Plans: Learn the process of creating and implementing effective performance improvement plans, including setting goals, monitoring progress, and providing ongoing support.

- Legal and Ethical Considerations: Understand the legal and ethical implications of performance evaluations and feedback, ensuring compliance with relevant regulations and promoting fairness and respect.
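To practice the SBI (Situation-Behavior-Impact) model mentioned above, it can help to treat each piece of feedback as a record with three required parts. The `SbiFeedback` class below is a hypothetical sketch that refuses to create feedback with a missing part. The example reuses the teamwork feedback from Q 27.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SbiFeedback:
    """One piece of feedback structured with the Situation-Behavior-Impact model."""

    situation: str  # when and where the behavior occurred
    behavior: str   # what the person observably did (not a personality trait)
    impact: str     # the effect that behavior had on people or results

    def __post_init__(self) -> None:
        # Reject feedback that is missing any of the three components
        for name in ("situation", "behavior", "impact"):
            if not getattr(self, name).strip():
                raise ValueError(f"SBI feedback is missing its {name}")

    def render(self) -> str:
        return (
            f"Situation: {self.situation}\n"
            f"Behavior: {self.behavior}\n"
            f"Impact: {self.impact}"
        )


note = SbiFeedback(
    situation="During the recent project",
    behavior="you didn't collaborate effectively with the design team",
    impact="the project was delayed",
)
print(note.render())
```

Requiring a concrete situation and impact makes it harder for vague judgments such as "You need to improve your teamwork" to get through.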

Next Steps

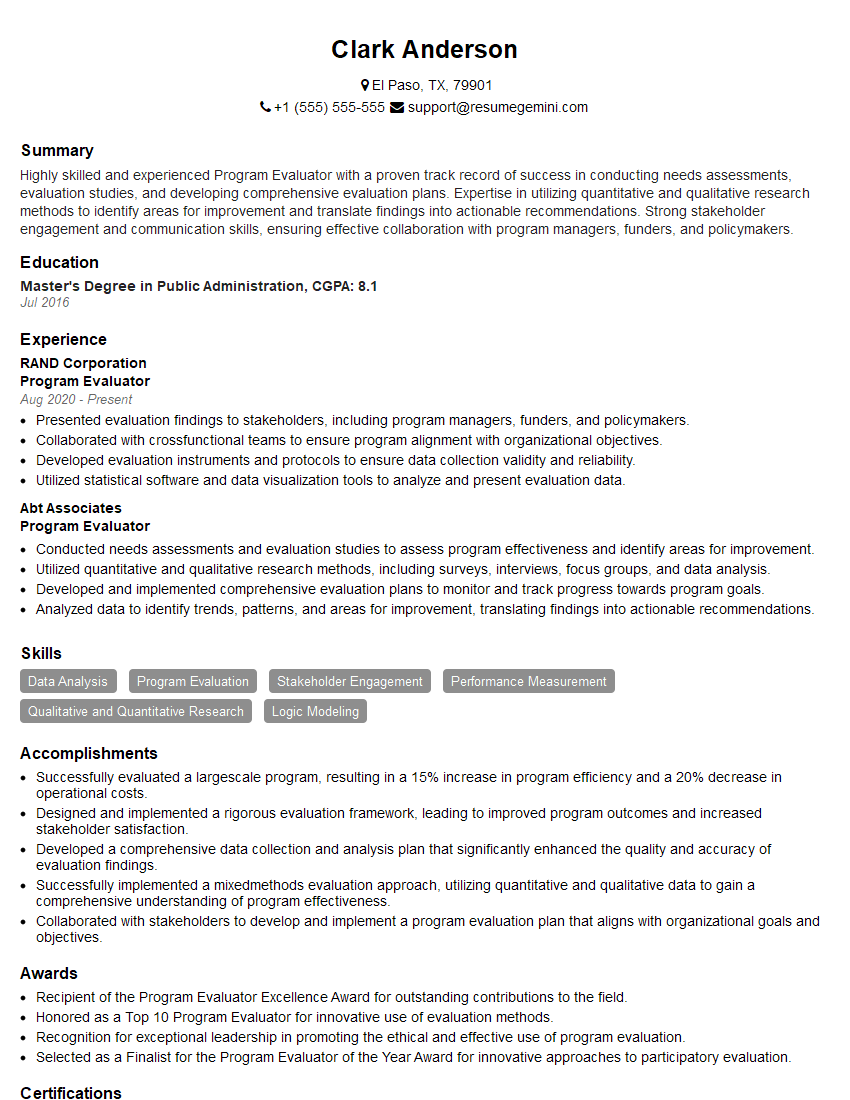

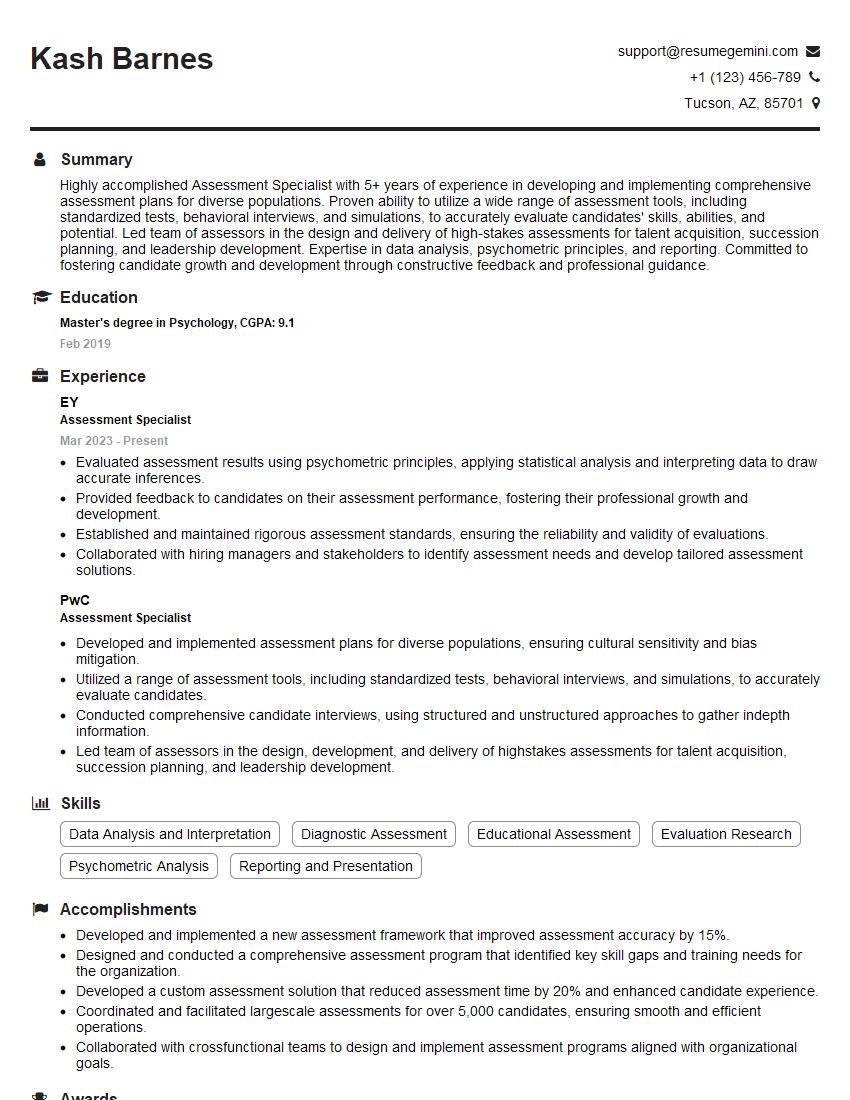

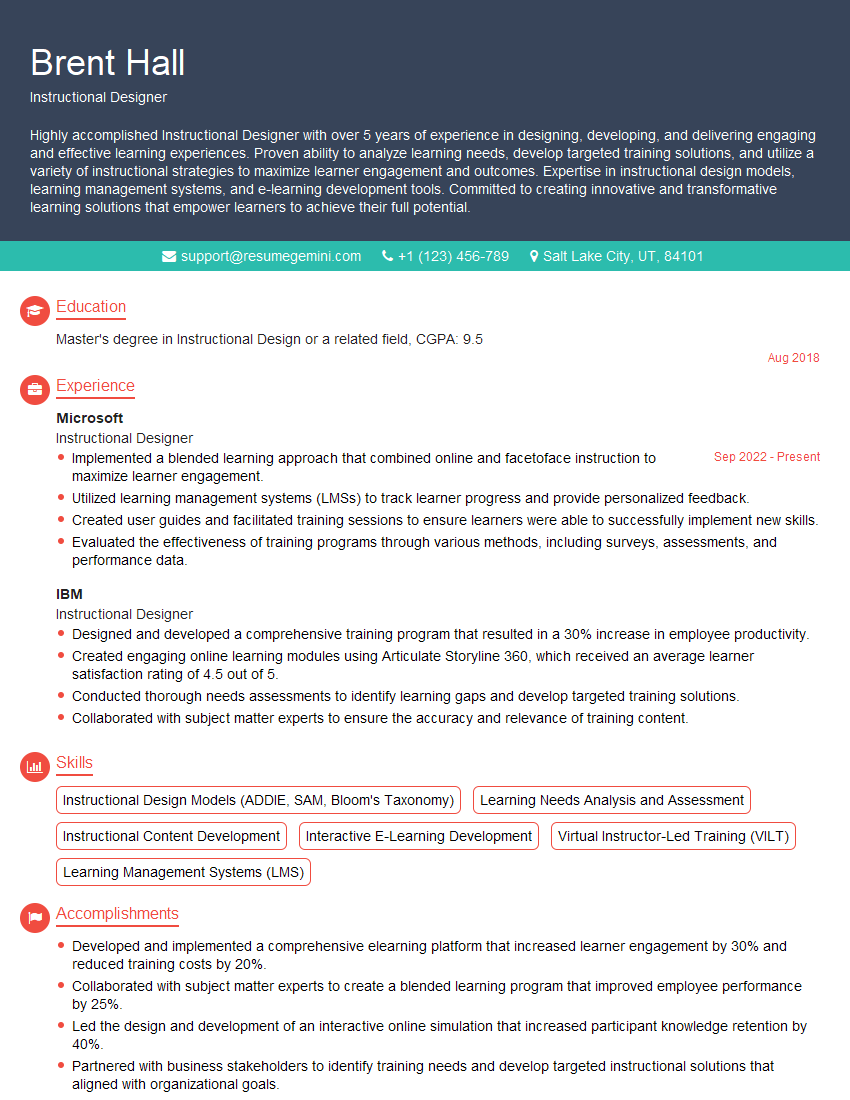

Mastering the art of evaluation and feedback is crucial for career advancement in almost any field. It demonstrates your ability to contribute to team growth, foster positive working relationships, and drive performance improvement. To significantly boost your job prospects, crafting a compelling and ATS-friendly resume is essential. ResumeGemini is a trusted resource that can help you build a professional resume tailored to showcase your expertise in Evaluation and Feedback. Examples of resumes tailored to this field are provided to help you create your perfect application. Take this opportunity to highlight your skills and experience – your future success awaits!
