Cracking a skill-specific interview, such as one focused on virtualization technologies, requires understanding the nuances of the role. In this blog, we present the questions you’re most likely to encounter, along with guidance on how to answer them effectively. Let’s ensure you’re ready to make a strong impression.

Questions Asked in a Virtualization Technologies Interview

Q 1. Explain the difference between Type 1 and Type 2 hypervisors.

The key difference between Type 1 and Type 2 hypervisors lies in how they interact with the host hardware. Think of a hypervisor as a manager of computer resources, allowing multiple virtual machines (VMs) to run concurrently.

Type 1 hypervisors, also known as bare-metal hypervisors, are installed directly onto the host hardware. They run directly on the physical hardware, without an operating system (OS) mediating between the hypervisor and the hardware. This direct access results in better performance and security. Examples include VMware ESXi, Microsoft Hyper-V, and Xen. Imagine it as the building’s foundation directly supporting the apartments (VMs) above.

Type 2 hypervisors, on the other hand, run as software on top of an existing operating system (OS) like Windows or Linux. The host OS acts as an intermediary layer between the hypervisor and the physical hardware. They are easier to install and manage, but generally offer slightly lower performance due to the added layer of abstraction. Examples include VMware Workstation Player and Oracle VirtualBox. Think of this as apartments built on top of an existing building – the OS is like the building’s existing structure.

Q 2. Describe the benefits and drawbacks of virtualization.

Virtualization offers several significant benefits, but also has some drawbacks.

- Benefits:

- Cost Savings: Consolidate multiple physical servers into fewer, more powerful machines, reducing hardware costs and energy consumption.

- Increased Efficiency: Optimize resource utilization by dynamically allocating CPU, memory, and storage to VMs based on demand.

- Improved Disaster Recovery: Create and maintain readily available VM backups for quick restoration in case of hardware failure or other disasters.

- Enhanced Flexibility and Scalability: Quickly deploy and scale VMs to meet changing business needs, adding or removing resources as required.

- Improved Security: Isolate applications and workloads within separate VMs, improving security and minimizing the impact of security breaches.

- Drawbacks:

- Performance Overhead: Although Type 1 hypervisors minimize this, virtualization still introduces some performance overhead compared to running directly on bare metal. The degree of overhead varies depending on the hypervisor and the resources being utilized.

- Complexity: Managing a virtualized environment requires specialized skills and knowledge, which can increase operational costs.

- Licensing Costs: Some hypervisors and virtualization management tools are expensive to license, particularly for large deployments.

- Security Risks: While virtualization can enhance security, misconfigurations or vulnerabilities in the hypervisor or guest OS can still create security risks.

Q 3. How does hardware-assisted virtualization work?

Hardware-assisted virtualization leverages specific instructions built into modern CPUs (like Intel VT-x or AMD-V) to significantly boost performance. Without hardware assistance, the hypervisor must handle privileged guest instructions in software, using techniques such as binary translation or full emulation, which is slow. With hardware assistance, the CPU runs guest code directly and hands control back to the hypervisor only when it needs to intervene. This allows for near-native performance of the virtual machines.

Here’s how it works: The CPU’s virtualization extensions create a privileged mode for the hypervisor, allowing it to control and manage the VMs. This includes tasks like managing memory access, I/O operations, and scheduling. The CPU can switch between the VMs rapidly and efficiently without incurring the performance penalty of full emulation.

Think of it like a high-speed train switching tracks – with hardware assistance, the switch is very fast; without it, the train would have to completely stop and change direction, leading to significant delays.
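
On Linux, one quick way to see whether a CPU advertises these extensions is to look at the flags line in /proc/cpuinfo, where `vmx` corresponds to Intel VT-x and `svm` to AMD-V. The following is a minimal sketch under that assumption; the function name `detect_virt_extension` is illustrative and not part of any library.

```python
from typing import Optional


def detect_virt_extension(cpuinfo_text: str) -> Optional[str]:
    """Return the virtualization extension named in /proc/cpuinfo-style text.

    Looks only at lines beginning with "flags" (the x86 Linux layout).
    """
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags") and ":" in line:
            flags = line.split(":", 1)[1].split()
            if "vmx" in flags:
                return "Intel VT-x"
            if "svm" in flags:
                return "AMD-V"
    return None


# Made-up sample text for illustration; on a real host, read /proc/cpuinfo.
sample = "processor\t: 0\nflags\t\t: fpu vme sse2 vmx\n"
print(detect_virt_extension(sample))  # prints "Intel VT-x"
```

A result of `None` only means the flag was not found in the text supplied; it says nothing about platforms that do not expose /proc/cpuinfo in this layout.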

Q 4. What are the key components of a virtualization infrastructure?

A robust virtualization infrastructure typically comprises several key components:

- Hypervisor: The core software that creates and manages VMs.

- Virtual Machines (VMs): The individual virtualized environments that run applications and operating systems.

- Virtual Switches: Software-defined switches that enable VMs to communicate with each other and the outside network.

- Virtual Storage: Mechanisms for storing and managing VM disk images (e.g., iSCSI, NFS, Fibre Channel).

- Management Tools: Software for managing and monitoring the entire virtualized infrastructure (e.g., vCenter, Hyper-V Manager).

- Host Servers: The physical servers on which the hypervisor and VMs run.

- Network Infrastructure: The underlying physical network that connects the host servers and provides network access to the VMs.

These components work together to provide a flexible and efficient environment for running and managing virtual machines.

Q 5. Explain the concept of virtual machine migration.

Virtual machine migration involves moving a VM from one physical host to another, ideally without interrupting its operation. This is crucial for maintaining uptime, balancing workloads, and performing maintenance tasks. When done live, think of it like moving a house without waking the occupants.

There are two primary types of VM migration:

- Live Migration (or Hot Migration): This moves a running VM without any noticeable downtime. The VM’s state is captured and transferred to the new host, and the VM continues to run seamlessly.

- Cold Migration (or Offline Migration): This involves shutting down the VM before moving it to the new host. This method is simpler but results in downtime.

The process typically involves transferring the VM’s memory, disk images, and configuration data to the target host. Advanced technologies often use sophisticated techniques to minimize disruption during live migration.
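
One widely described technique for live migration is iterative pre-copy: memory is copied while the VM keeps running, then the pages dirtied during each pass are re-sent until the remainder is small enough for a brief final pause. The sketch below is a simplified model of that idea, not a description of any particular product; the function name, the 64 MB stop threshold, and the round limit are all assumptions chosen for illustration.

```python
def precopy_rounds(memory_mb, bandwidth_mb_s, dirty_rate_mb_s,
                   stop_threshold_mb=64.0, max_rounds=30):
    """Model iterative pre-copy; return (rounds, remaining_mb, converged)."""
    remaining = float(memory_mb)
    rounds = 0
    while remaining > stop_threshold_mb and rounds < max_rounds:
        transfer_time = remaining / bandwidth_mb_s  # seconds to send this pass
        # Memory dirtied while the pass was in flight, capped at total memory.
        remaining = min(float(memory_mb), dirty_rate_mb_s * transfer_time)
        rounds += 1
    return rounds, remaining, remaining <= stop_threshold_mb


# 8 GB VM, 1000 MB/s link, 100 MB/s dirty rate: converges in three passes.
print(precopy_rounds(8192, 1000, 100))
```

In this model the copy converges only when the link is faster than the rate at which the guest dirties memory; otherwise the loop stops at `max_rounds` and reports that it did not converge.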

Q 6. Describe different virtual machine storage options.

Several options exist for storing virtual machine data:

- Local Storage: VMs are stored directly on the physical disks of the host server. Simple to set up, but limited scalability and backup/recovery challenges.

- Network Attached Storage (NAS): Centralized storage accessible via the network. Provides better scalability and centralized management but can be affected by network performance.

- Storage Area Network (SAN): High-performance storage connected via a dedicated network. Offers excellent performance and scalability but is typically more expensive.

- Cloud Storage: Utilizes cloud providers for storage (like AWS S3, Azure Blob Storage). Provides excellent scalability, redundancy, and cost efficiency, but can be dependent on network connectivity and cloud provider services.

- iSCSI: A protocol that carries SCSI commands over TCP/IP, so remote storage appears to the host as a local block device. It is often used for shared storage in virtualization environments, typically as the transport for a SAN.

The choice depends on factors such as performance requirements, budget, and the need for scalability and redundancy.

Q 7. How do you manage virtual machine resources (CPU, memory, storage)?

Managing VM resources involves careful allocation and monitoring of CPU, memory, and storage to ensure optimal performance and efficiency. This is often handled through the hypervisor’s management tools.

- CPU: Can be allocated statically (a fixed number of cores) or dynamically (the VM can use more or less CPU based on demand). Over-allocation should be avoided to prevent performance degradation across multiple VMs.

- Memory: Similar to CPU, can be allocated statically or dynamically. Memory ballooning (dynamically reclaiming memory from a VM) and memory swapping (using hard drive space to store inactive memory) are common techniques to optimize memory use.

- Storage: Disk space is allocated to each VM’s virtual disks. Storage management includes capacity planning, performance monitoring, and creating snapshots or backups to protect data.

Many hypervisors offer sophisticated features for resource management, including resource pooling, reservation, and limits, to ensure fairness and prevent resource starvation. Regular monitoring is crucial to identify performance bottlenecks and make adjustments as needed.
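
A simple way to reason about over-allocation is to compare what has been promised to VMs with what the host physically has. The sketch below computes vCPU and memory overcommit ratios for one host; the `VM` class, the function name, and the sample figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class VM:
    name: str
    vcpus: int
    memory_gb: float


def overcommit_ratios(vms, host_cores, host_memory_gb):
    """Return (vCPU:core ratio, allocated:physical memory ratio) for one host."""
    total_vcpus = sum(vm.vcpus for vm in vms)
    total_memory = sum(vm.memory_gb for vm in vms)
    return total_vcpus / host_cores, total_memory / host_memory_gb


vms = [VM("web", 4, 16), VM("db", 8, 64), VM("app", 4, 16)]
cpu_ratio, mem_ratio = overcommit_ratios(vms, host_cores=16, host_memory_gb=128)
print(cpu_ratio, mem_ratio)  # prints 1.0 0.75
```

A ratio above 1.0 means more has been allocated than physically exists. Whether that is acceptable depends on how busy the workloads actually are, which is why monitoring matters alongside the arithmetic.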

Q 8. What are some common virtualization security considerations?

Virtualization, while offering many benefits, introduces unique security challenges. Think of it like this: you’re creating multiple apartments (VMs) within a single building (physical server). Securing this building requires attention to multiple layers.

- VM Isolation: Ensuring VMs cannot access each other’s resources is paramount. Hypervisors provide some isolation, but proper configuration of virtual networks and resource allocation is crucial. A misconfiguration could allow a compromised VM to access sensitive data on another.

- Host Security: The underlying physical server (the building) needs robust security. This includes patching the operating system, implementing strong firewalls, and regular security scans. A compromised host can affect all VMs running on it.

- Guest OS Security: Each VM requires its own security measures, just like each apartment needs its own locks. This involves regularly patching the guest operating systems, using strong passwords, and employing anti-virus software. Neglecting this can expose your VMs to vulnerabilities.

- Network Security: Virtual networks need to be carefully segmented and protected. Consider using virtual firewalls and intrusion detection/prevention systems within the virtualized environment. This is like having a security guard at the entrance of each apartment building.

- Data Security: Protecting data within VMs is essential. This includes encryption both at rest (on storage) and in transit (across networks). Regular data backups are also crucial to ensure business continuity in the event of a compromise.

In my experience, neglecting any one of these areas can significantly increase the risk of a security breach. A holistic approach, combining robust security measures at each layer, is vital.

Q 9. Explain your experience with VMware vSphere.

I have extensive experience with VMware vSphere, spanning over five years. I’ve worked with it in various capacities, from building and managing large-scale vSphere environments to troubleshooting performance issues and implementing high-availability solutions.

My responsibilities have included:

- Designing and deploying vSphere clusters, including resource provisioning and high availability configurations using VMware HA and DRS.

- Managing virtual machine lifecycle, from creation and deployment to maintenance and retirement.

- Implementing and managing VMware vCenter Server, including monitoring, alerting, and reporting.

- Utilizing VMware vSAN for storage virtualization and optimizing storage performance.

- Troubleshooting complex vSphere issues, including performance bottlenecks, storage problems, and network connectivity issues.

For instance, in a previous role, I was instrumental in migrating a large legacy application to a virtualized environment using vSphere. This involved careful planning, performance testing, and collaboration with various teams to ensure a seamless transition with minimal downtime. The project successfully migrated over 500 VMs, resulting in significant cost savings and improved resource utilization.

Q 10. Explain your experience with Microsoft Hyper-V.

My experience with Microsoft Hyper-V is equally substantial. I’ve used it in both standalone and clustered configurations, focusing on its integration with other Microsoft technologies such as System Center and Active Directory.

Specifically, I’ve:

- Deployed and managed Hyper-V clusters for high availability and failover clustering.

- Configured and managed virtual switches and networking policies within Hyper-V.

- Used Hyper-V Replica for disaster recovery and business continuity.

- Leveraged PowerShell scripting extensively for automation tasks such as VM creation, deployment, and management.

- Integrated Hyper-V with Microsoft Azure for hybrid cloud scenarios.

In one project, I used Hyper-V to create a robust and scalable test environment for a large enterprise application. This involved creating numerous VMs with specific configurations, allowing developers to test various scenarios concurrently. The environment was managed using PowerShell scripts, significantly reducing manual effort and improving efficiency.

Q 11. How do you troubleshoot virtual machine performance issues?

Troubleshooting virtual machine performance issues is a systematic process. I typically follow these steps:

- Identify the Symptoms: Begin by understanding the performance problem. Is it slow application response time, high CPU usage, low memory, or slow disk I/O? Collect performance metrics from the VM and the host.

- Gather Data: Use performance monitoring tools (VMware vCenter, Hyper-V Manager, Performance Monitor) to gather detailed information on CPU utilization, memory usage, disk I/O, network activity, and application performance. Look for bottlenecks and anomalies.

- Analyze the Data: Analyze the collected data to pinpoint the root cause. High CPU might indicate a resource-intensive application, while low memory could point to memory leaks or insufficient allocation. Slow disk I/O often indicates storage performance issues.

- Implement Solutions: Based on the analysis, implement appropriate solutions. This could involve increasing VM resources (CPU, memory, disk), optimizing application settings, upgrading storage hardware, or resolving network bottlenecks.

- Monitor and Verify: After implementing the solution, monitor the VM’s performance to ensure the problem is resolved. Repeat the process if necessary.

For example, I once diagnosed a performance bottleneck caused by a misconfigured virtual disk. By switching to a faster storage type and optimizing the virtual disk configuration, I significantly improved the VM’s performance.
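
The analysis step above can be sketched as a first-pass filter that flags which metrics look suspicious. The threshold values and metric names here are illustrative assumptions, not recommendations from any vendor; real limits should come from baselines for the environment in question.

```python
# Illustrative thresholds only; tune them against observed baselines.
THRESHOLDS = {"cpu_pct": 85.0, "memory_pct": 90.0, "disk_latency_ms": 20.0}


def suspected_bottlenecks(metrics):
    """Return the names of metrics that exceed their threshold."""
    return [name for name, limit in THRESHOLDS.items()
            if metrics.get(name, 0.0) > limit]


sample = {"cpu_pct": 40.0, "memory_pct": 95.0, "disk_latency_ms": 35.0}
print(suspected_bottlenecks(sample))  # prints ['memory_pct', 'disk_latency_ms']
```

A filter like this narrows where to look; it does not replace the root-cause analysis described in the steps above.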

Q 12. How do you handle virtual machine backups and recovery?

Virtual machine backups and recovery are crucial for business continuity. My approach involves a combination of strategies and technologies:

- Backup Strategy: I design a comprehensive backup strategy that considers the Recovery Time Objective (RTO) and Recovery Point Objective (RPO). This determines the frequency and methods of backups. Full backups are typically performed less frequently, supplemented by incremental or differential backups for efficiency.

- Backup Tools: I utilize third-party backup solutions (e.g., Veeam, Acronis) alongside vendor replication tools (VMware vSphere Replication, Microsoft Hyper-V Replica), which complement backups rather than replace them. The choice depends on the specific environment and requirements.

- Backup Storage: Backups are stored on dedicated backup storage, often using cloud storage or network-attached storage (NAS) for redundancy and scalability. This storage should be geographically separate for disaster recovery purposes.

- Recovery Process: A well-defined recovery process is essential. This includes detailed steps for restoring VMs from backups, testing the recovery process regularly, and ensuring that the recovery process is easily understandable by other team members.

In a previous role, I implemented a robust backup and recovery solution using Veeam, ensuring rapid VM restoration in the event of hardware failure or data corruption. This significantly reduced downtime and minimized data loss.
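
To make the RPO idea concrete: the worst-case data loss of a schedule is roughly the longest gap between consecutive backups, so a schedule meets its RPO only if that gap does not exceed it. The sketch below checks this for a list of backup timestamps; the function names and sample times are hypothetical, and at least two timestamps are assumed.

```python
from datetime import datetime, timedelta


def worst_case_gap(backup_times):
    """Return the longest interval between consecutive backups."""
    ordered = sorted(backup_times)
    return max(later - earlier for earlier, later in zip(ordered, ordered[1:]))


def meets_rpo(backup_times, rpo):
    """True if no gap between consecutive backups exceeds the RPO."""
    return worst_case_gap(backup_times) <= rpo


times = [datetime(2024, 1, 1, 0), datetime(2024, 1, 1, 6), datetime(2024, 1, 1, 14)]
print(worst_case_gap(times))                    # prints 8:00:00
print(meets_rpo(times, timedelta(hours=4)))     # prints False
```

RTO needs a different kind of evidence: it can only be confirmed by timing an actual test restore.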

Q 13. Describe your experience with virtual networking.

Virtual networking is a cornerstone of virtualization. It allows for the creation of isolated and logically separated networks within a physical environment. I have extensive experience with both VMware’s vSphere Distributed Switch and Microsoft Hyper-V Virtual Switches.

My experience includes:

- Designing and implementing virtual networks: Creating virtual networks, configuring VLANs, and assigning IP addresses and subnets to VMs.

- Network security: Implementing virtual firewalls and network security policies to protect virtual machines and the network infrastructure.

- Network monitoring and troubleshooting: Using network monitoring tools to identify and troubleshoot network performance issues and connectivity problems.

- Load balancing: Configuring virtual load balancers to distribute network traffic across multiple VMs and improve application availability.

- Software Defined Networking (SDN): Experience with SDN solutions that provide centralized control and automation of virtual networks.

For example, I once designed and implemented a virtual network for a large e-commerce platform, ensuring high availability and scalability through the use of VLANs, load balancing, and network segmentation.
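
Assigning subnets to VLANs is easy to get wrong by hand, so it can help to derive the plan programmatically. The sketch below uses Python's standard `ipaddress` module to carve a larger block into equal subnets, one per VLAN; the address range and VLAN IDs are made up for illustration.

```python
import ipaddress


def plan_vlan_subnets(supernet, vlan_ids, new_prefix):
    """Map each VLAN ID to the next free subnet of the given prefix length."""
    subnets = ipaddress.ip_network(supernet).subnets(new_prefix=new_prefix)
    return {vlan: next(subnets) for vlan in vlan_ids}


plan = plan_vlan_subnets("10.0.0.0/22", [10, 20, 30], new_prefix=24)
for vlan, subnet in plan.items():
    print(vlan, subnet)
# prints:
# 10 10.0.0.0/24
# 20 10.0.1.0/24
# 30 10.0.2.0/24
```

If more VLANs are requested than the block can hold, `next()` raises `StopIteration`, which is a reasonable signal that the address plan needs a larger range.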

Q 14. What are your experiences with high availability and disaster recovery in a virtualized environment?

High availability (HA) and disaster recovery (DR) are critical in virtualized environments. HA ensures minimal downtime in case of hardware failure, while DR protects against larger-scale disasters like natural calamities or site outages.

My experience includes:

- VMware HA and DRS: Implementing and managing VMware HA and DRS for automatic failover and resource balancing. This ensures that VMs are automatically restarted on a different host if the original host fails.

- Microsoft Failover Clustering: Configuring and managing Hyper-V failover clusters for high availability.

- Replication technologies: Using replication technologies such as VMware vSphere Replication or Microsoft Hyper-V Replica to create copies of VMs in a different location, ensuring quick recovery in case of a disaster.

- Backup and recovery strategies: Implementing comprehensive backup and recovery strategies for VMs, including offsite backups and disaster recovery drills.

- Cloud-based DR solutions: Leveraging cloud services like Azure or AWS for disaster recovery, providing a geographically distant site for VM restoration.

In a recent project, I designed and implemented a disaster recovery plan for a critical application using a combination of VMware vSphere Replication and a cloud-based DR solution. This ensured minimal downtime in the event of a major disaster.
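
A basic capacity question behind any HA design is whether the remaining hosts can carry the load if one host fails. The sketch below checks this for memory alone, assuming the largest host is the one lost. It is a rough planning aid with hypothetical names and figures, and it is not how any hypervisor's admission control is actually implemented.

```python
def survives_single_host_failure(host_memory_gb, total_vm_memory_gb):
    """True if VM memory still fits after losing the largest host."""
    surviving_capacity = sum(host_memory_gb) - max(host_memory_gb)
    return total_vm_memory_gb <= surviving_capacity


hosts = [256, 256, 256]
print(survives_single_host_failure(hosts, 480))  # prints True
print(survives_single_host_failure(hosts, 600))  # prints False
```

The check ignores CPU, reservations, and fragmentation across hosts, so a `True` result is necessary but not sufficient.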

Q 15. Explain your experience with vCenter Server.

vCenter Server is the central management platform for VMware vSphere, a leading virtualization platform. Think of it as the brain of your virtualized infrastructure. My experience encompasses deploying, configuring, and managing vCenter Server across multiple versions, including vCenter 7 and 8. This includes tasks such as setting up high availability (HA) clusters for redundancy and disaster recovery, configuring role-based access control (RBAC) for enhanced security, managing licenses, and performing regular maintenance tasks. For example, in a previous role, I was responsible for migrating a large enterprise environment to vCenter 7, a process which involved careful planning, phased rollout, and thorough testing to ensure zero downtime during the transition. I’ve also used vCenter’s powerful monitoring and alerting capabilities to proactively address performance bottlenecks and potential issues, leading to improved uptime and reduced troubleshooting time.

I am proficient in using vCenter’s various features including resource management (CPU, memory, storage), VM creation and management, networking configuration (vSwitches, distributed switches, port groups), storage management (datastores, storage vMotion), and template management. I’ve successfully utilized vCenter’s automation capabilities through scripting (PowerCLI) to streamline tasks and improve operational efficiency. One specific instance involved automating the deployment of virtual machines based on pre-defined templates, significantly reducing manual intervention and deployment time.

Q 16. What are snapshots and how are they used in virtualization?

Snapshots in virtualization capture a virtual machine’s state at a specific point in time. Think of it like taking a photo of your VM at a specific moment. A snapshot preserves the disk state and configuration of the VM and, optionally, its memory; most hypervisors implement this by recording subsequent changes separately rather than by copying the whole disk. Snapshots are invaluable for several reasons:

- Testing and Development: Experiment with new software or configurations without risking the primary VM. If something goes wrong, simply revert to the snapshot.

- Backup and Recovery: While not a full replacement for proper backups, snapshots provide a quick way to restore a VM to a previous state in case of minor issues.

- Disaster Recovery: Snapshots can be used as a quick recovery point if a VM suffers from a failure, although they are not recommended as a primary DR solution.

However, it’s important to be mindful that snapshots consume storage and can impact VM performance if not managed properly. Over-reliance on snapshots and neglecting proper backups is a common mistake. I always advise a robust backup strategy in conjunction with using snapshots for temporary recovery or testing purposes. For instance, I’ve used snapshots extensively during the development and testing of critical applications, enabling quick rollbacks without affecting production systems. I also regularly clean up older, unnecessary snapshots to optimize storage and maintain performance.
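
Snapshot cleanup is easier to enforce when stale snapshots are found automatically. The sketch below filters a list of snapshot names and creation times by age; the three-day limit, the function name, and the sample data are illustrative assumptions, and in practice the list would come from the hypervisor's API or CLI.

```python
from datetime import datetime, timedelta


def stale_snapshots(snapshots, now, max_age_days=3):
    """Return names of snapshots created more than max_age_days before now."""
    cutoff = now - timedelta(days=max_age_days)
    return [name for name, created in snapshots if created < cutoff]


now = datetime(2024, 6, 10, 12, 0)
snapshots = [
    ("pre-patch", datetime(2024, 6, 9, 9, 0)),
    ("old-test", datetime(2024, 5, 1, 9, 0)),
]
print(stale_snapshots(snapshots, now))  # prints ['old-test']
```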

Q 17. Describe your experience with virtual machine cloning.

Virtual machine cloning is the process of creating an exact copy of an existing VM. It’s a crucial task for rapid deployment and provisioning of VMs. My experience involves cloning VMs using various methods available in vCenter and other virtualization platforms. I’m proficient in both linked clones (which share storage with the parent VM, saving space) and full clones (which are independent copies). The choice of method depends on the specific requirements of the task. For example, linked clones are ideal for creating multiple development or testing environments quickly, while full clones are better for archiving or migrating VMs to a different location. I have used both vCenter's built-in cloning features and PowerCLI scripting to automate the process, making it more efficient and scalable.

In one instance, I was responsible for deploying 100+ identical VMs for a large-scale testing project. Using automated cloning with PowerCLI, I significantly reduced the deployment time from several days to just a few hours. Careful consideration was given to storage allocation and network configuration during the cloning process to ensure minimal performance impact on the existing infrastructure.
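
The storage trade-off between the two clone types can be estimated with simple arithmetic: full clones each need a complete copy of the parent disk, while linked clones share the parent and store only their own changes. The sketch below compares the two; the function name and the per-clone delta figure are assumptions, and it ignores thin provisioning, deduplication, and the way deltas grow over time.

```python
def clone_storage_gb(clones, parent_disk_gb, delta_per_clone_gb):
    """Estimate storage for full clones versus linked clones."""
    full = clones * parent_disk_gb
    linked = parent_disk_gb + clones * delta_per_clone_gb
    return {"full": full, "linked": linked}


# 100 clones of a 40 GB parent, assuming each linked clone writes about 2 GB.
print(clone_storage_gb(100, 40, 2))  # prints {'full': 4000, 'linked': 240}
```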

Q 18. How do you manage virtual machine templates?

Managing virtual machine templates is critical for maintaining consistency and efficiency. A template is essentially a pre-configured VM that serves as a blueprint for creating new VMs. This ensures that all newly deployed VMs have the same operating system, applications, and configurations. My experience includes creating, updating, and managing templates across multiple virtualization platforms. This includes optimizing templates to reduce their size and improve deployment times. I also focus on best practices, including regularly updating the base OS image in templates to maintain security patches and software updates. This helps in maintaining a standardized and secure environment.

In my previous role, we developed a standardized template library to streamline the provisioning of new virtual machines. Using vCenter’s template management capabilities, we categorized and organized these templates, providing an easy and centralized point of access for developers and system administrators. This improved consistency and reduced errors during VM deployment. Proper tagging and versioning are crucial for managing this efficiently. I’ve also implemented version control for templates, ensuring that changes are tracked and can be easily reverted if needed.

Q 19. Explain your experience with different virtualization platforms (e.g., KVM, Xen).

My experience extends beyond VMware vSphere. I have hands-on experience with other virtualization platforms, including KVM (Kernel-based Virtual Machine) and Xen. KVM, a Linux-based hypervisor, is known for its performance and stability, often employed in cloud environments. I’ve used KVM extensively for building and managing virtualized servers within Linux environments. I understand its command-line interface and the underlying management tools, and have experience in configuring network interfaces, storage, and resource allocation within KVM.

Xen, another popular open-source hypervisor, offers flexibility and robust features. I’ve worked with Xen for deploying and managing VMs on various hardware platforms. My comparative experience with different virtualization platforms allows me to assess the strengths and weaknesses of each platform and make informed decisions based on specific requirements. For example, the choice between VMware, KVM, or Xen often depends on factors such as budget, existing infrastructure, and specific technical requirements. The open-source nature of KVM and Xen often makes them a cost-effective choice for smaller organizations.

Q 20. What is resource pooling in virtualization?

Resource pooling in virtualization is the process of centralizing and managing computing resources, such as CPU, memory, and storage, and making them available to virtual machines on demand. It’s a key element of virtualization that allows for efficient resource utilization and optimized performance. Instead of dedicating specific resources to each VM, resources are pooled together and dynamically allocated as needed. This eliminates resource waste associated with underutilized physical servers. Think of it like a shared pool of resources that VMs can draw from as needed, very similar to how a public utility provides water or electricity to homes.

Resource pooling enhances scalability and flexibility. It allows organizations to easily add or remove VMs as needed without investing in new hardware. I’ve implemented resource pooling in several environments, leveraging features like vSphere DRS (Distributed Resource Scheduler) and vSphere Storage DRS (Storage Distributed Resource Scheduler) in VMware. These tools automatically balance workloads across physical hosts and storage resources, optimizing resource utilization and ensuring high availability. Effective resource pooling requires careful planning and monitoring to ensure consistent performance and prevent resource contention.
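
The balancing idea can be illustrated with a simple greedy placement: take VMs from largest to smallest and put each on the host with the most free capacity. This is a teaching sketch only and makes no claim about how DRS or any other scheduler works internally; the function name, VM names, and capacities are hypothetical.

```python
def place_vms(vm_demands, host_capacity):
    """Greedily place VMs (largest first) on the host with the most free capacity.

    Returns (placement, free) where placement maps VM -> host and free maps
    host -> remaining capacity.
    """
    free = dict(host_capacity)
    placement = {}
    ordered = sorted(vm_demands.items(), key=lambda item: item[1], reverse=True)
    for vm, demand in ordered:
        host = max(free, key=free.get)  # host with the most headroom
        if free[host] < demand:
            raise ValueError(f"no host has room for {vm}")
        free[host] -= demand
        placement[vm] = host
    return placement, free


placement, free = place_vms({"a": 8, "b": 4, "c": 4, "d": 2}, {"h1": 16, "h2": 16})
print(placement)  # prints {'a': 'h1', 'b': 'h2', 'c': 'h2', 'd': 'h1'}
print(free)       # prints {'h1': 6, 'h2': 8}
```

Real schedulers also weigh live migration cost, affinity rules, and multiple resource dimensions, which this one-dimensional sketch leaves out.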

Q 21. Describe your experience with SAN or NAS storage in a virtualized environment.

SAN (Storage Area Network) and NAS (Network Attached Storage) are common storage solutions in virtualized environments. I’ve worked extensively with both. SANs offer high performance and scalability, often using Fibre Channel or iSCSI protocols. They provide block-level storage, allowing direct access to storage devices by the hypervisor. This provides high performance but usually adds complexity and higher cost. NAS, on the other hand, provides file-level storage and is generally simpler to manage, less expensive, and is accessed via standard network protocols (like NFS or CIFS). The choice between SAN and NAS often depends on performance requirements, budget, and the level of management expertise.

My experience includes configuring and managing both SAN and NAS storage in virtualized environments. This includes setting up iSCSI and NFS shares, creating datastores, managing storage capacity and performance, and troubleshooting storage-related issues. I have experience using various storage management tools and monitoring storage performance to prevent performance bottlenecks and ensure high availability. Understanding the difference between block storage (SAN) and file storage (NAS) is crucial for deciding which solution is best suited for a particular virtualized environment.

Q 22. How do you monitor the health and performance of your virtualized infrastructure?

Monitoring the health and performance of a virtualized infrastructure is crucial for maintaining uptime and efficiency. It involves a multi-layered approach, combining proactive monitoring with reactive troubleshooting.

Firstly, I leverage the built-in monitoring tools provided by the hypervisor (e.g., vCenter for VMware vSphere, Hyper-V Manager for Microsoft Hyper-V). These tools offer real-time visibility into CPU utilization, memory consumption, storage I/O, and network performance for both the hypervisor itself and the individual virtual machines (VMs). I establish baselines for these metrics and set up alerts for deviations beyond defined thresholds. For example, I might set an alert if a VM’s CPU usage consistently exceeds 90% for more than 15 minutes.

Secondly, I use dedicated monitoring solutions such as Prometheus with Grafana, Nagios, or Zabbix. These tools offer more granular control, allowing me to define custom metrics, create sophisticated dashboards, and integrate with other systems for comprehensive oversight. For example, I can track application performance alongside VM resource usage to pinpoint bottlenecks. I configure these tools to automatically generate reports, enabling trend analysis and proactive capacity planning.

Finally, I regularly review system logs for errors and warnings. This proactive approach, combined with automated monitoring, allows for early detection of issues, minimizing downtime and preventing performance degradation. Think of it like a doctor’s checkup – regular monitoring helps identify problems before they become critical.
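
An alert such as "CPU above 90% for 15 minutes" is a sustained-threshold rule: a single spike should not fire it, but an unbroken run of high readings should. The sketch below implements that rule over evenly spaced samples. The five-minute sampling interval and the function name are assumptions, and the rule is interpreted as consecutive readings that span at least the stated duration.

```python
def sustained_breach(samples, threshold=90.0, min_duration_min=15, interval_min=5):
    """True if consecutive readings above threshold span at least min_duration_min.

    samples: readings taken every interval_min minutes, oldest first.
    """
    # With 5-minute sampling, 4 readings in a row span 15 minutes.
    needed = min_duration_min // interval_min + 1
    run = 0
    for value in samples:
        run = run + 1 if value > threshold else 0
        if run >= needed:
            return True
    return False


print(sustained_breach([95, 96, 97, 98]))      # prints True
print(sustained_breach([95, 96, 97, 50, 99]))  # prints False
```

Monitoring systems usually offer this kind of duration condition natively, so in practice the rule would be expressed in the tool's own alert configuration rather than in custom code.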

Q 23. What are some best practices for virtual machine security?

Virtual machine security is paramount. It’s not just about securing the VMs themselves, but also the entire virtualization layer and the underlying physical infrastructure. Best practices include:

- Strong passwords and access control: Employ strong, unique passwords for all accounts, including administrative accounts for the hypervisor and individual VMs. Implement role-based access control (RBAC) to limit privileges.

- Regular patching and updates: Keep the hypervisor, host operating system, guest operating systems, and all applications updated with the latest security patches. This is crucial to mitigate known vulnerabilities.

- Network security: Utilize virtual networks (VLANs) to segment traffic and isolate VMs. Implement firewalls, intrusion detection/prevention systems (IDS/IPS), and regularly review network security policies.

- Virtual machine sprawl management: Regularly review and consolidate unused VMs. Reduce the attack surface by minimizing the number of running VMs.

- Regular backups: Implement a robust backup and disaster recovery strategy. This is crucial for quick recovery in case of malware infection or data loss.

- Security hardening: Configure VMs according to security best practices, including disabling unnecessary services and accounts, and enabling strong encryption.

- Regular security audits and penetration testing: Conduct regular security audits and penetration testing to identify vulnerabilities and ensure the effectiveness of security measures. Think of this like a security check-up.

Q 24. Explain your experience with automation tools for virtualization (e.g., Ansible, Puppet).

I have extensive experience using Ansible for automating virtualization tasks. Ansible’s agentless architecture simplifies deployment and management. I’ve used it to automate VM provisioning, configuration management, and deployment of applications across multiple hypervisors.

For example, I’ve created Ansible playbooks to automate the following tasks:

- VM provisioning: Creating VMs with specific configurations (CPU, memory, storage, networking) on VMware vSphere and AWS.

- Guest OS installation: Installing and configuring guest operating systems using Ansible modules.

- Application deployment: Deploying and configuring applications within VMs using Ansible’s extensive module library.

- Patch management: Automating the application of security updates to both the hypervisor and guest operating systems.

Here’s a snippet of an Ansible playbook for creating a VM:

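    # Connection settings (hostname, username, password) and other required
    # options such as datacenter, folder, guest_id, and disk are omitted here.
    - hosts: vcenter
      connection: local
      gather_facts: false
      tasks:
        - name: Create a VM
          community.vmware.vmware_guest:
            name: my_new_vm
            resource_pool: my_resource_pool
            datastore: my_datastore
            # CPU count and memory (in MB) are set under "hardware"
            hardware:
              num_cpus: 2
              memory_mb: 4096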

While I haven’t used Puppet extensively, I am familiar with its declarative approach and its strength in managing large-scale infrastructure. The choice between Ansible and Puppet often depends on project specifics and team preferences.

Q 25. Describe a challenging virtualization project you’ve worked on and how you overcame the challenges.

One challenging project involved migrating a large legacy application from a physical server environment to a virtualized infrastructure with minimal downtime. The application was complex, with numerous dependencies and a tight deadline. The initial challenge was assessing the application’s dependencies. We performed thorough dependency mapping, which involved multiple meetings with the application developers. Then, we developed a phased migration plan, ensuring minimal disruption.

We started by virtualizing non-critical components, allowing us to test the migration process and refine our procedures before tackling the core application. We used VMware vSphere and implemented features like vMotion for live migration to reduce downtime during the cutover. We also developed a detailed rollback plan to mitigate potential issues. Close communication with the application team throughout the entire process was key to success. Regular monitoring and proactive adjustments to our plans allowed for quick mitigation of any issues encountered during the migration.

The project was successfully completed within the deadline and with minimal downtime, demonstrating the effectiveness of our meticulous planning and the robust capabilities of virtualization.
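A dependency map like the one described can also feed a simple ordering tool. The sketch below is one possible approach, not the method used in the project above: it groups components into migration "waves" so that each wave depends only on components in earlier waves, using Python's standard `graphlib` module (Python 3.9+). The component names are hypothetical, and migrating dependencies first is an assumption; real plans also weigh criticality and business constraints.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: component -> components it depends on
dependencies = {
    "web-frontend": {"app-server"},
    "app-server": {"database", "cache"},
    "reporting": {"database"},
    "database": set(),
    "cache": set(),
}


def migration_waves(deps):
    """Group components into waves; each wave depends only on earlier waves."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted for stable output
        waves.append(ready)
        sorter.done(*ready)
    return waves


waves = migration_waves(dependencies)
for number, wave in enumerate(waves, start=1):
    print(f"Wave {number}: {', '.join(wave)}")
```

With this map, the database and cache land in the first wave, the application server and reporting in the second, and the web front end last.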

Q 26. What are the limitations of virtualization?

While virtualization offers numerous advantages, it does have limitations:

- Performance overhead: Virtualization introduces a layer of abstraction, which can result in a slight performance overhead compared to bare-metal deployments. This overhead is often negligible with modern hardware and virtualization technologies.

- Single point of failure: A failure of the hypervisor can affect all VMs hosted on it. This risk can be mitigated through clustering, high availability features, and disaster recovery plans.

- Security concerns: While VMs can be isolated, vulnerabilities in the hypervisor or host operating system can compromise the entire virtual environment. Strong security practices are essential to mitigate these risks.

- Complexity: Managing a virtualized environment can be more complex than managing physical servers, requiring specialized skills and tools.

- Licensing costs: Commercial virtualization software often involves licensing fees, which can be significant for large deployments.
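The single-point-of-failure risk is usually discussed in terms of "N+1" capacity: can the cluster still run every VM if one host is lost? Here is a rough Python sketch of that check for memory only. It is a simplification under stated assumptions: it compares totals, ignores CPU and per-host fragmentation, and the function name and figures are made up for illustration.

```python
def survives_host_failure(host_capacity_gb, vm_memory_gb):
    """Return True if total VM memory still fits after losing the largest host.

    Aggregate check only: it does not verify that each individual VM
    fits on a specific surviving host.
    """
    if len(host_capacity_gb) < 2:
        return False  # a single host has nowhere to fail over to
    remaining = sum(host_capacity_gb) - max(host_capacity_gb)
    return sum(vm_memory_gb) <= remaining


# Three hosts with 256 GB each; VMs need 400 GB in total
print(survives_host_failure([256, 256, 256], [128, 128, 96, 48]))  # True

# Same cluster, but VMs now need 600 GB in total
print(survives_host_failure([256, 256, 256], [200, 200, 200]))  # False
```

Hypervisor HA features apply more detailed admission-control policies, but the underlying question is the same.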

Q 27. How would you approach optimizing resource utilization in a virtualized environment?

Optimizing resource utilization in a virtualized environment is key to maximizing efficiency and minimizing costs. It involves a combination of strategies:

- VM sizing and consolidation: Right-size VMs to their actual requirements. Avoid over-provisioning, as this wastes resources. Consolidate underutilized VMs onto fewer physical hosts.

- Resource allocation and scheduling: Utilize the hypervisor’s resource allocation and scheduling features to efficiently distribute resources among VMs, ensuring that critical VMs receive sufficient resources.

- Storage optimization: Use thin provisioning to allocate only the storage space actually used by VMs, reducing storage capacity requirements. Implement data deduplication and compression techniques.

- Network optimization: Use VLANs to segment traffic and optimize network performance. Implement network virtualization technologies to improve efficiency.

- Monitoring and analysis: Regularly monitor resource utilization and analyze performance data to identify bottlenecks and optimize resource allocation.

- Dynamic resource allocation: Utilize hypervisor features like DRS (Distributed Resource Scheduler in VMware vSphere) to automatically balance the resource utilization across the cluster and migrate VMs as needed.

Imagine a city’s water supply. Efficient resource utilization is like optimizing water distribution—ensuring sufficient water for everyone without wasteful overuse.
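To show what consolidation looks like in practice, the following Python sketch packs right-sized VMs onto as few hosts as a first-fit-decreasing heuristic finds. This is a simplified illustration, not how any specific scheduler such as DRS works: it considers memory only, assumes identical hosts, and uses made-up VM names and sizes.

```python
def consolidate(vm_demands_gb, host_capacity_gb):
    """Place VMs on equal-sized hosts using first-fit decreasing.

    Returns a list of hosts, each a list of the VM names placed on it.
    """
    hosts = []  # VM names per host
    free = []   # remaining capacity per host, parallel to `hosts`
    # Place the largest VMs first; small ones fill the gaps afterwards
    for name, demand in sorted(vm_demands_gb.items(), key=lambda kv: -kv[1]):
        if demand > host_capacity_gb:
            raise ValueError(f"{name} does not fit on any host")
        for i, room in enumerate(free):
            if demand <= room:
                hosts[i].append(name)
                free[i] -= demand
                break
        else:
            # No existing host has room, so bring another host into use
            hosts.append([name])
            free.append(host_capacity_gb - demand)
    return hosts


# Hypothetical right-sized memory demands in GB
demands = {"db": 48, "app": 32, "web": 16, "cache": 16, "batch": 24, "ci": 8}
placement = consolidate(demands, host_capacity_gb=64)
print(placement)  # [['db', 'web'], ['app', 'batch', 'ci'], ['cache']]
```

The six VMs need 144 GB in total, so three 64 GB hosts is the minimum here. First-fit decreasing is a heuristic and does not always find the optimal packing, and a real plan would leave headroom for failover and growth.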

Q 28. Explain your experience with containerization technologies (e.g., Docker, Kubernetes) in relation to virtualization.

Containerization technologies like Docker and Kubernetes are closely related to virtualization, but they operate at a different level. Hardware virtualization abstracts the physical machine, so each virtual machine runs its own complete guest operating system, including its own kernel. Containerization, on the other hand, virtualizes at the operating-system level: containers share the host’s kernel and isolate only the application and its dependencies.

Because they don’t carry a full guest OS, containers are more lightweight and portable than VMs, with less overhead and faster startup times. I’ve used Docker to package and deploy applications in a consistent and portable manner, and Kubernetes to orchestrate those containers at scale, automating tasks like deployment, scaling, and health checks.

In many cases, containers and virtual machines complement each other. For example, you might use VMs to host multiple containers, improving security and isolation. This approach leverages the benefits of both technologies. Imagine VMs as apartments in a building and containers as rooms within those apartments – each offering different levels of isolation and resource management.

Key Topics to Learn for Experience in using Virtualization Technologies Interview

- Hypervisor Types & Architectures: Understanding Type 1 (bare-metal) and Type 2 (hosted) hypervisors, their strengths and weaknesses, and examples like VMware ESXi, Hyper-V, KVM, and Xen.

- Virtual Machine (VM) Management: Creating, configuring, managing, and troubleshooting VMs, including resource allocation (CPU, memory, storage), networking, and guest operating system installation.

- Virtual Networking: Configuring virtual switches, VLANs, and virtual routers; understanding network virtualization technologies like VXLAN and SDN.

- Storage Virtualization: Working with iSCSI, Fibre Channel, and SAN/NAS technologies; understanding concepts like storage pools, snapshots, and replication.

- High Availability and Disaster Recovery: Implementing HA and DR strategies using virtualization technologies, including clustering, failover, and backup/restore procedures.

- Virtualization Platforms: Hands-on experience with at least one major virtualization platform (e.g., VMware vSphere, Microsoft Hyper-V, Citrix XenServer). Be prepared to discuss specific features and functionalities.

- Performance Tuning and Optimization: Identifying and resolving performance bottlenecks in virtualized environments; optimizing resource allocation and utilization.

- Security Best Practices: Implementing security measures in virtualized environments, including access control, encryption, and vulnerability management.

- Cloud Computing and Virtualization: Understanding how virtualization underpins cloud services like IaaS, PaaS, and SaaS; familiarity with cloud providers like AWS, Azure, or GCP.

- Troubleshooting and Problem Solving: Be ready to discuss common virtualization issues and how you approached solving them. Focus on your problem-solving methodology.

Next Steps

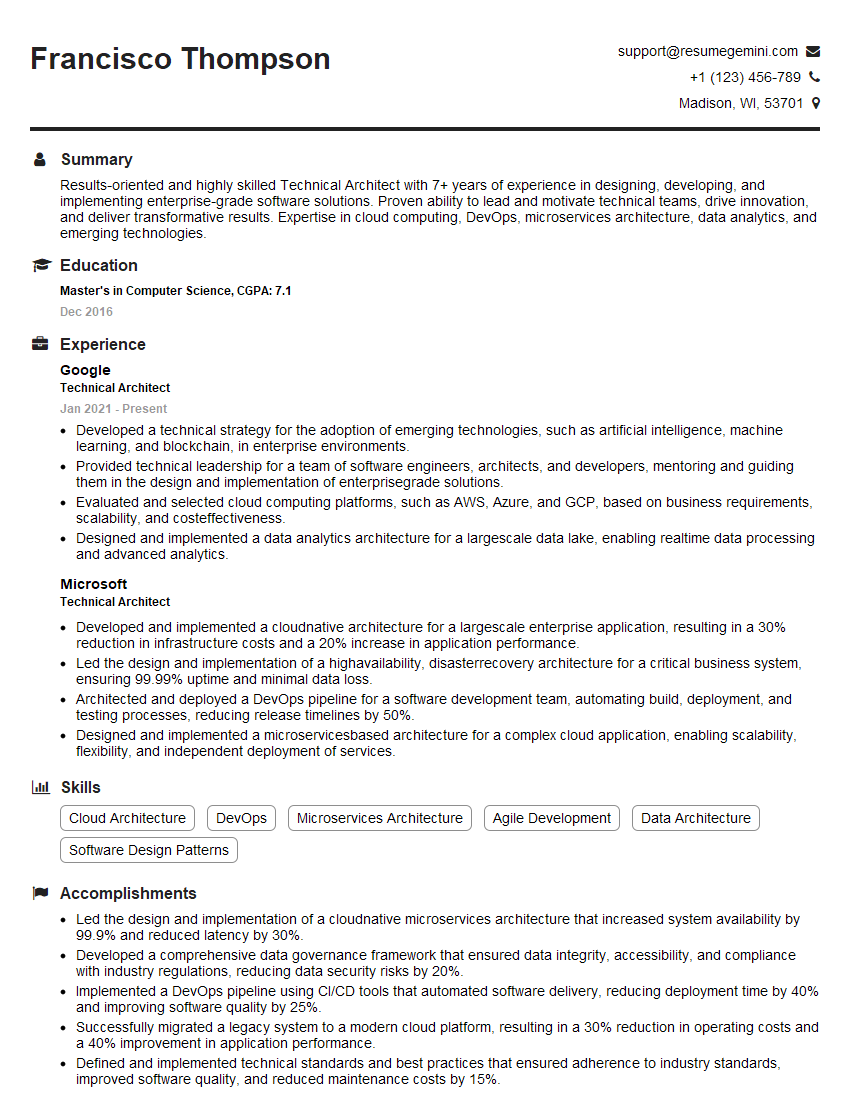

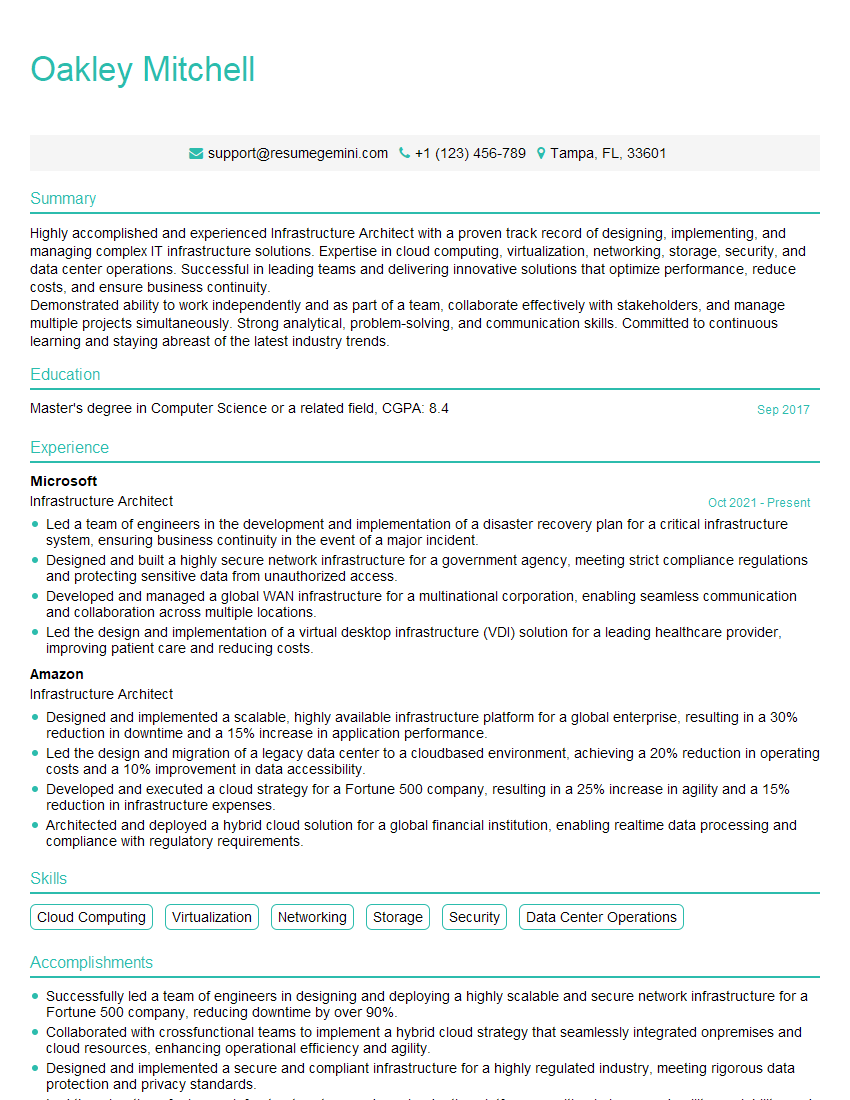

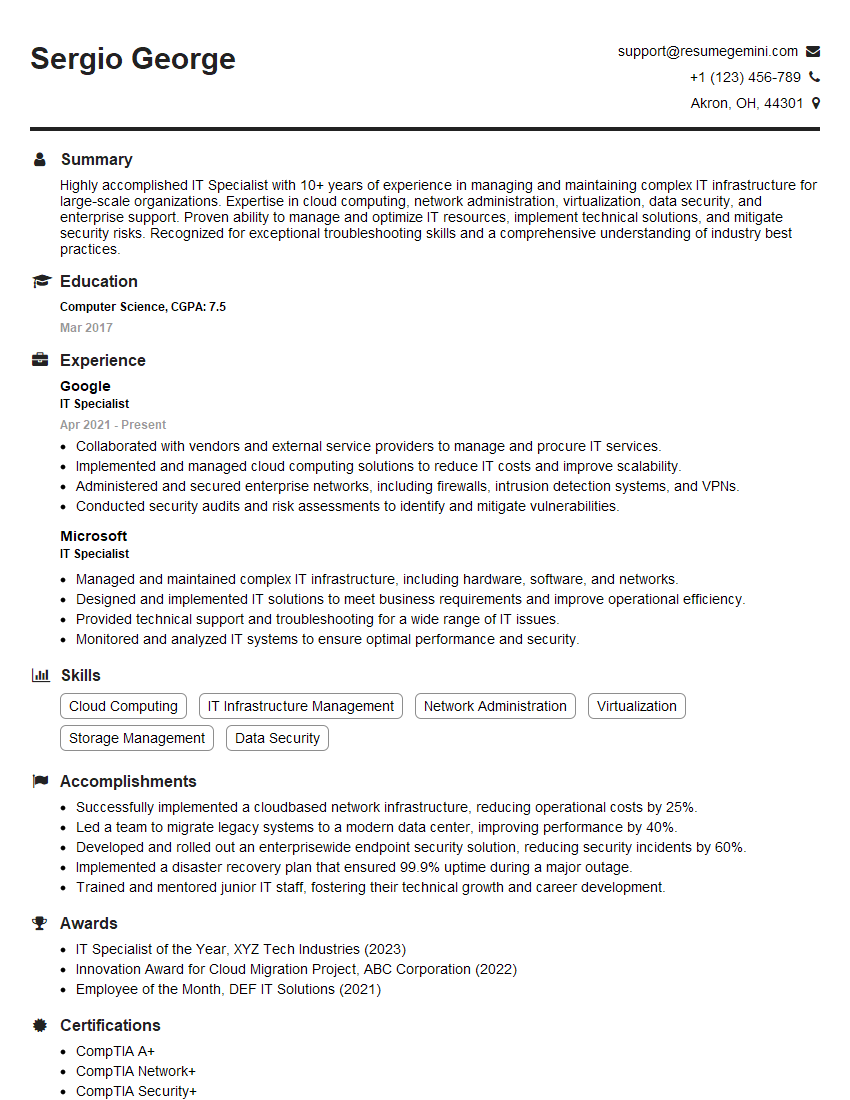

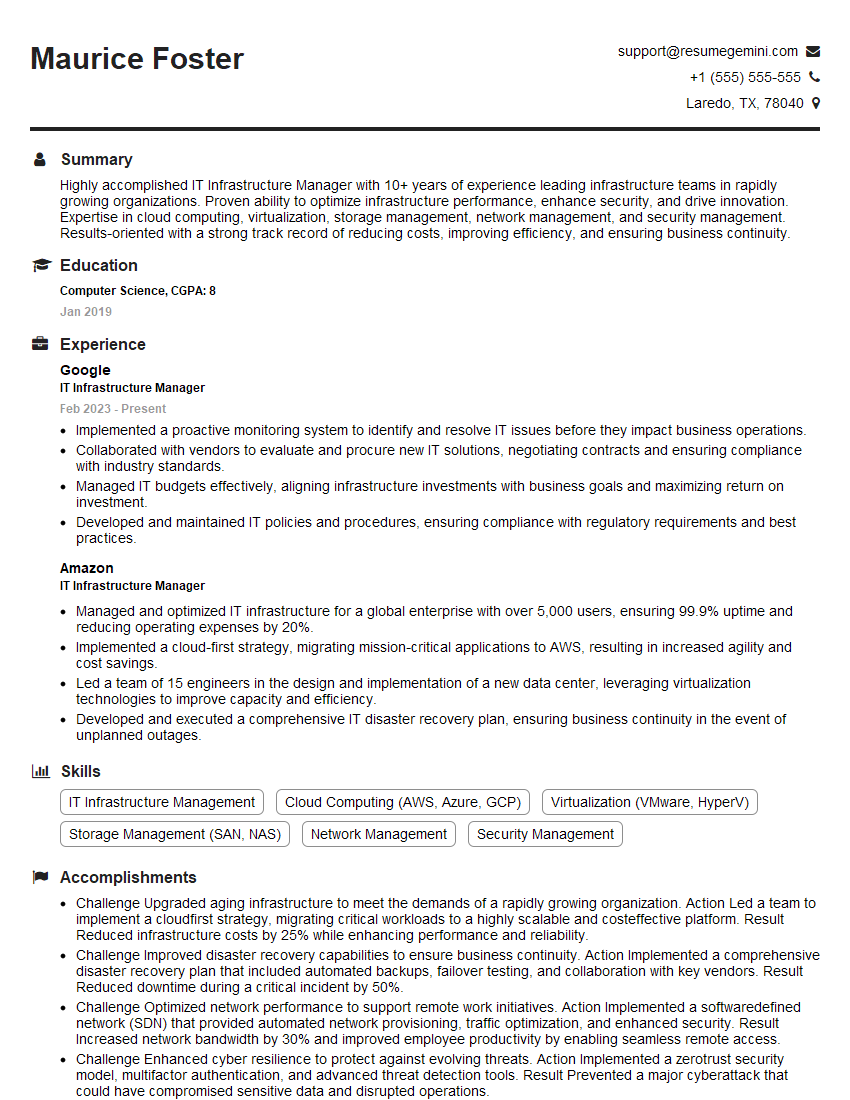

Mastering virtualization technologies significantly enhances your career prospects in IT, opening doors to roles with higher responsibility and compensation. An ATS-friendly resume is crucial for getting your application noticed. To create a compelling resume that showcases your skills effectively, we highly recommend using ResumeGemini. It’s a trusted resource designed to help you build a professional and impactful resume. Examples of resumes tailored to highlight experience in virtualization technologies are available to help guide you.
