Are you ready to stand out in your next interview? Understanding and preparing for Gathering and Numbering interview questions is a game-changer. In this blog, we’ve compiled key questions and expert advice to help you showcase your skills with confidence and precision. Let’s get started on your journey to acing the interview.

Questions Asked in Gathering and Numbering Interview

Q 1. Describe your experience with data entry software.

My experience with data entry software spans over a decade, encompassing a wide range of applications, from simple spreadsheet programs like Microsoft Excel and Google Sheets to sophisticated database management systems such as MySQL and SQL Server, and specialized data entry tools. I’m proficient in using features like data validation, import/export functionalities, and automated data cleaning tools to ensure efficient and accurate data entry. For instance, in a previous role, I utilized a custom-built data entry application to process thousands of survey responses daily, leveraging its automated error checking to minimize manual intervention and maximize throughput.

I am also comfortable working with various data formats, including CSV, TXT, XML, and JSON, and can adapt quickly to new software as needed. My expertise includes not only inputting data but also designing and implementing effective data entry procedures to improve accuracy and efficiency.

Q 2. How do you ensure accuracy in data gathering?

Accuracy in data gathering is paramount. My approach is multi-pronged, focusing on prevention and verification. Prevention begins with meticulously designed data collection instruments – questionnaires, forms, etc. – that are clear, unambiguous, and user-friendly, minimizing the chances of misinterpretations. I also employ rigorous data validation techniques during entry, using software features like drop-down menus, input masks, and range checks to prevent invalid data from being entered.

Verification involves several steps: double-entry of critical data by a second person, automated consistency checks within the dataset (for example, ensuring that sums of subtotals match totals), and random sampling and manual verification of a subset of the data. For example, when working with financial data, I always perform cross-referencing with original source documents to confirm accuracy. This systematic approach dramatically minimizes errors and ensures reliable data.
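The subtotal-versus-total consistency check mentioned above can be sketched in a few lines. This is a minimal illustration, not a prescribed method: the function name `subtotals_match` and the sample invoice values are assumptions made for the example, and `Decimal` is used so that currency amounts are compared without floating-point rounding noise.

```python
from decimal import Decimal

def subtotals_match(subtotals, reported_total, tolerance=Decimal("0.00")):
    """Return True when the subtotals add up to the reported total.

    `tolerance` is a hypothetical parameter for datasets where small
    rounding differences are acceptable.
    """
    computed = sum(subtotals, Decimal("0"))
    return abs(computed - reported_total) <= tolerance

# Illustrative invoice lines (made-up values).
invoice_lines = [Decimal("19.99"), Decimal("5.01"), Decimal("100.00")]
print(subtotals_match(invoice_lines, Decimal("125.00")))  # True
print(subtotals_match(invoice_lines, Decimal("125.10")))  # False
```

Rows that fail the check are the ones worth cross-referencing against the source documents first.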

Q 3. Explain your method for verifying numerical data.

Verifying numerical data requires a combination of automated checks and manual review. Automated checks include range checks (ensuring numbers fall within a reasonable range), consistency checks (comparing numbers across different data points), and plausibility checks (comparing numbers against known benchmarks or expectations). For example, if I’m entering age data, a range check would ensure no negative ages are entered. A consistency check would compare reported ages to birthdates.

Manual review involves a visual inspection of a sample of the data to detect outliers or inconsistencies that might be missed by automated checks. This often includes checking for typing errors or transcription errors. A simple example would be checking a hand-calculated sum against a reported total. Through this dual approach, I strive for high confidence in the accuracy of numerical data.
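As a rough sketch of the automated side, the age example could look like the following. The function names, the upper bound of 120, and the reference date are assumptions chosen for illustration; a real project would take them from its own data dictionary.

```python
from datetime import date

def age_on(birthdate, as_of):
    """Completed years between birthdate and the as_of date."""
    years = as_of.year - birthdate.year
    # Subtract one year if the birthday has not yet occurred in as_of's year.
    if (as_of.month, as_of.day) < (birthdate.month, birthdate.day):
        years -= 1
    return years

def check_age(reported_age, birthdate, as_of, max_age=120):
    """Return a list of problems found; an empty list means the value passed."""
    problems = []
    # Range check: the age must be plausible (max_age is an assumed limit).
    if not 0 <= reported_age <= max_age:
        problems.append("age out of range")
    # Consistency check: the reported age must agree with the birthdate.
    if age_on(birthdate, as_of) != reported_age:
        problems.append("age does not match birthdate")
    return problems

as_of = date(2024, 6, 1)
print(check_age(34, date(1990, 3, 15), as_of))  # []
print(check_age(-2, date(1990, 3, 15), as_of))
# ['age out of range', 'age does not match birthdate']
```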

Q 4. What strategies do you use to maintain data integrity?

Maintaining data integrity involves a proactive and comprehensive strategy, encompassing several key practices. First and foremost is data security – protecting data from unauthorized access, modification, or destruction through secure storage, access controls, and regular backups. Think of this like securing a vault – only authorized personnel with proper credentials can access the contents.

Second, thorough documentation is essential. This includes detailing data collection methods, data cleaning procedures, and any transformations performed on the data. Metadata (data about the data) is crucial for understanding the context and limitations of the dataset. Finally, regular data audits, both manual and automated, help identify and correct any inconsistencies or errors before they become significant problems. This preventative approach is analogous to regularly servicing a car – preventing small issues from escalating into major repairs.

Q 5. How do you handle inconsistencies or errors in data?

When inconsistencies or errors are detected, a systematic approach is crucial. The first step involves identifying the nature and extent of the problem. Is it a single entry, a pattern of errors, or a systemic issue? Once the problem is characterized, I investigate the root cause. Was there an error in data collection, entry, or processing? This investigation might involve reviewing original source documents, interviewing data collectors, or examining the data entry process for flaws.

Depending on the severity and source of the error, various methods of correction are employed. Simple errors can be corrected directly. More complex or systematic errors may require re-entry of data, revision of data collection tools, or even adjustments to the overall data processing workflow. Proper documentation of all corrections and their rationale is crucial for maintaining data integrity and transparency.

Q 6. Describe your experience with different data collection methods.

My experience encompasses a diverse range of data collection methods. I’m proficient in both manual and automated techniques. Manual methods include conducting surveys (both paper-based and online), interviews, and direct observation. Automated methods include web scraping, utilizing APIs (Application Programming Interfaces) to access data from external sources, and employing various data capture technologies such as barcode scanners and RFID readers.

Each method has its strengths and weaknesses, and the optimal choice depends on the specific data being collected, the resources available, and the desired level of accuracy. For example, while web scraping can collect vast amounts of data quickly, it requires careful validation to ensure data quality. Conversely, while interviews offer rich qualitative data, they are time-consuming and potentially prone to interviewer bias.

Q 7. How do you prioritize tasks when dealing with large volumes of data?

Prioritizing tasks with large volumes of data requires a structured approach. I typically begin by assessing the urgency and importance of each task. Urgency relates to deadlines, while importance relates to the overall goals of the project and the potential impact of delays. This can be visualized using a matrix prioritizing tasks based on urgency and importance (urgent/important, important/not urgent, etc.).

Once priorities are established, I often break down large tasks into smaller, manageable subtasks to improve focus and track progress more effectively. I use project management tools and techniques such as Kanban or Scrum to visualize workflow and track progress, helping me stay organized and meet deadlines, even with large datasets.

Q 8. How familiar are you with database management systems?

I’m highly familiar with various database management systems (DBMS), including relational databases like MySQL, PostgreSQL, and SQL Server, as well as NoSQL databases such as MongoDB and Cassandra. My experience spans designing database schemas, writing complex SQL queries for data retrieval and manipulation, optimizing database performance, and ensuring data integrity. I understand the importance of choosing the right DBMS based on the specific needs of a project, considering factors like data volume, structure, and query patterns. For example, in a project involving large-scale geospatial data, I opted for PostgreSQL with the PostGIS extension for its strong spatial querying capabilities.

Q 9. What is your experience with data validation and cleaning?

Data validation and cleaning are crucial steps in any data-centric project. My experience involves employing a multi-step process. Firstly, I identify potential data quality issues through profiling – examining data types, distributions, and identifying anomalies. Then, I implement validation rules using both automated scripts (e.g., using Python with Pandas) and manual checks to ensure data conforms to expected formats and ranges. For example, I’ve used regular expressions to validate email addresses and phone numbers. Cleaning involves handling missing values (imputation or removal), correcting inconsistencies (standardizing addresses), and resolving outliers. I always meticulously document the cleaning process, ensuring reproducibility and traceability.
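A minimal sketch of regex-based validation is shown below. The patterns are deliberately simplified assumptions: they catch obviously malformed values but do not implement the full email or international phone number specifications, so a production system would likely use stricter rules or a dedicated library.

```python
import re

# Simplified patterns, assumed for illustration only.
EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")
PHONE_RE = re.compile(r"\+?\d{7,15}")

def is_valid_email(value):
    """True if the value looks like name@domain.tld."""
    return EMAIL_RE.fullmatch(value.strip()) is not None

def is_valid_phone(value):
    """True if, after removing common separators, 7 to 15 digits remain."""
    digits = re.sub(r"[\s\-().]", "", value)
    return PHONE_RE.fullmatch(digits) is not None

print(is_valid_email("ana@example.com"))    # True
print(is_valid_email("ana@example"))        # False
print(is_valid_phone("+1 (555) 010-9999"))  # True
print(is_valid_phone("12-34"))              # False
```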

Q 10. How do you identify and resolve data entry errors?

Identifying and resolving data entry errors requires a combination of techniques. I start by using data profiling tools to identify outliers and inconsistencies. For instance, a birthdate in the future would immediately flag an error. Statistical methods can also highlight unusual patterns. Next, I visually inspect data through dashboards and reports to identify obvious errors. For example, inconsistent capitalization or spelling errors can be easily spotted. Finally, I leverage data validation rules to prevent errors from entering the system in the first place. These rules can be implemented at the data entry level (e.g., using input masks) or during data import processes. If errors are detected, I investigate their root cause to prevent recurrence. This might involve retraining data entry staff or improving data entry procedures.

Q 11. Explain your experience with data quality control procedures.

Data quality control is paramount to me. My experience includes establishing robust procedures encompassing data profiling, validation, and cleaning, as described earlier. I also employ regular data audits to assess the overall quality and identify areas for improvement. These audits may include comparing data against known reliable sources. I leverage various metrics to measure data quality, such as completeness, accuracy, consistency, and timeliness. I create documentation outlining data quality standards and procedures, training data entry personnel to adhere to these standards. For instance, in a previous role, we implemented a system of regular data quality reports which flagged anomalies, allowing us to proactively address emerging issues.

Q 12. How do you handle missing or incomplete data?

Handling missing or incomplete data depends on the context and the amount of missing data. I employ several strategies:

- Deletion: If the missing data is a small percentage and randomly distributed, I might remove the incomplete rows or columns.

- Imputation: For larger datasets, I might use imputation techniques to fill in missing values. This could involve using the mean, median, or mode for numerical data, or using the most frequent category for categorical data. More sophisticated methods like k-Nearest Neighbors or multiple imputation might be used for more complex scenarios.

- Prediction: In cases where the missing data is predictable based on other variables, I might build a predictive model to estimate the missing values.

The choice of method depends on the characteristics of the data and the potential impact of the missing data on the analysis.
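Simple imputation can be sketched with Python's standard library alone. In this illustration, missing values are represented as `None`, and the `impute` helper and sample data are hypothetical; libraries such as Pandas offer equivalent functionality for real datasets.

```python
from statistics import mean, median, mode

def impute(values, strategy="median"):
    """Replace None entries with the mean, median, or mode of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("no observed values to impute from")
    fill = {"mean": mean, "median": median, "mode": mode}[strategy](observed)
    return [fill if v is None else v for v in values]

ages = [31, None, 45, 29, None, 45]
print(impute(ages, "median"))  # [31, 38.0, 45, 29, 38.0, 45]
print(impute(ages, "mode"))    # [31, 45, 45, 29, 45, 45]

# The most frequent category works the same way for categorical data.
print(impute(["red", None, "blue", "red"], "mode"))  # ['red', 'red', 'blue', 'red']
```

Whichever strategy is chosen, it is worth recording which values were imputed so that later analysis can distinguish them from observed data.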

Q 13. What is your experience with data transformation and manipulation?

Data transformation and manipulation are integral parts of my workflow. I have extensive experience using SQL and programming languages like Python (with libraries such as Pandas and NumPy) to perform various transformations. This includes data cleaning (as discussed above), data aggregation (e.g., calculating sums, averages, and counts), data normalization, data standardization (e.g., scaling variables), creating derived variables, and reshaping data. For example, I’ve used SQL to pivot tables, and Python to perform complex data manipulations, including feature engineering for machine learning models. I always document the transformations performed, to ensure the process is repeatable and understandable.

Q 14. Describe your experience working with spreadsheets and databases.

Spreadsheets (like Excel or Google Sheets) and databases are both essential tools in my toolkit. I use spreadsheets for initial data exploration, cleaning, and simple analysis, especially when dealing with smaller datasets. However, for larger datasets and complex analyses, databases are indispensable for their scalability and ability to handle complex queries efficiently. I frequently transfer data between spreadsheets and databases, often using tools like SQL or scripting languages to automate this process. I’m adept at building efficient database queries to extract and transform data for analysis and reporting. I prefer to leverage databases for long-term data storage and management due to their robustness and features for data integrity.

Q 15. How do you ensure the confidentiality and security of sensitive data?

Confidentiality and security of sensitive data are paramount in my work. I employ a multi-layered approach, starting with robust access controls. This involves using strong passwords, multi-factor authentication, and role-based access, ensuring that only authorized personnel can access specific datasets. Furthermore, I encrypt data both in transit and at rest using industry-standard encryption algorithms. For example, I might use AES-256 encryption for sensitive files stored on cloud servers or during data transfer. Data anonymization and pseudonymization techniques are also critical. Pseudonymization replaces identifying information with artificial identifiers so that records can still be linked for analysis, while anonymization removes or generalizes identifying details so that individuals can no longer be re-identified. Regular security audits and penetration testing help identify vulnerabilities and strengthen our defenses. Finally, I always adhere to relevant data privacy regulations like GDPR or HIPAA, ensuring compliance and minimizing risks.
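One possible way to pseudonymize an identifier is a keyed hash, sketched below with Python's `hmac` and `hashlib` modules. The function name and the key value are hypothetical, and the approach is an assumption for illustration: anyone holding the key can regenerate the mapping, so this is pseudonymization rather than anonymization, and the key would need to be stored separately from the data.

```python
import hashlib
import hmac

def pseudonymize(value, secret_key):
    """Map an identifier to a stable token using HMAC-SHA256."""
    # Normalize so that trivially different spellings map to the same token.
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(secret_key, normalized, hashlib.sha256).hexdigest()

# Hypothetical key; in practice it would come from a secrets manager.
key = b"replace-with-a-key-from-a-secrets-manager"

token = pseudonymize("Jane.Doe@example.com", key)
# The same person always receives the same token, so records stay linkable.
print(token == pseudonymize(" jane.doe@example.com", key))  # True
```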

Q 16. How do you manage your time effectively when working with deadlines?

Effective time management is crucial when facing tight deadlines. I utilize project management techniques such as breaking down large tasks into smaller, manageable steps. This allows for better prioritization and tracking of progress. I rely heavily on task management tools like Trello or Asana to visually organize my workload and set realistic deadlines for each sub-task. Timeboxing is another essential strategy – allotting a specific time block for each task helps maintain focus and prevents task-switching, improving overall efficiency. Regularly reviewing my schedule and adjusting priorities as needed is key to staying on track. For instance, if an unexpected delay occurs, I immediately re-evaluate the schedule and communicate any potential impact to stakeholders. Proactive communication is vital to managing expectations and preventing last-minute rushes.

Q 17. What are some common challenges you encounter in data gathering and how do you overcome them?

Data gathering often presents challenges. Inconsistent data formats from different sources are common, requiring data cleaning and transformation. For example, merging datasets with varying date formats or missing values requires careful attention. Incomplete or inaccurate data is another hurdle. This necessitates verifying data quality through data validation techniques and potentially contacting data sources to clarify inconsistencies. Access limitations to certain datasets can also be a significant challenge. When encountering such issues, I employ collaborative problem-solving, potentially involving data owners or other experts. I use data cleaning and imputation techniques to handle missing or inaccurate data, and I meticulously document any transformations made for reproducibility and transparency. If access is restricted, I look for alternative data sources or negotiate access with the data owners, while prioritizing ethical considerations.

Q 18. Explain your understanding of different data formats.

I’m familiar with a range of data formats, including structured formats like CSV (Comma-Separated Values), which are easily imported into spreadsheets and databases; tabular formats like Excel spreadsheets; and relational database tables, which are queried with SQL. I also work with semi-structured formats like JSON (JavaScript Object Notation) and XML (Extensible Markup Language), common in web applications and APIs. Finally, I have experience with unstructured data such as text documents, images, and audio files, often requiring specialized tools for processing and analysis. Understanding the strengths and weaknesses of each format is critical for selecting the right tools and techniques for a given task. For instance, CSV is great for simple data import, while SQL is needed for complex queries on relational databases. Handling unstructured data often requires natural language processing (NLP) or image recognition techniques.

Q 19. How do you stay organized when dealing with multiple data sources?

Managing multiple data sources requires a systematic approach. I typically begin by creating a comprehensive inventory of all data sources, documenting their characteristics, formats, and potential limitations. This inventory serves as a roadmap for my work. Then, I use a combination of file management systems and database solutions to organize data. For example, cloud-based storage services can be useful for organizing large datasets, while relational databases excel at managing structured data. Metadata management is crucial – meticulously documenting data sources, transformation steps, and any relevant information ensures traceability and reproducibility. A consistent naming convention for files and folders helps avoid confusion and maintain a clear organizational structure. Tools like version control systems (e.g., Git) can help manage different versions of datasets and track changes.

Q 20. What is your experience with data visualization tools?

I have extensive experience using data visualization tools such as Tableau, Power BI, and Python libraries like Matplotlib and Seaborn. These tools are indispensable for communicating data insights effectively. I select the appropriate tool based on the specific data and the desired level of interactivity and complexity. For instance, Tableau is excellent for creating interactive dashboards, while Matplotlib in Python is suited for generating publication-quality static plots. My skills encompass creating a variety of visualizations including bar charts, scatter plots, line graphs, heatmaps, and geographic maps. I understand the importance of selecting the most appropriate visualization type to effectively communicate a specific message, avoiding misleading or unclear representations. Data visualization is not just about aesthetics; it’s about clearly communicating findings to a diverse audience.

Q 21. Describe your experience using number sequences and series effectively.

My experience with number sequences and series is extensive, ranging from simple arithmetic and geometric progressions to more complex Fibonacci sequences and recursive patterns. I understand how to identify patterns in numerical data, extrapolate trends, and use these patterns to make predictions or solve problems. For instance, understanding the characteristics of an arithmetic progression (constant difference between consecutive terms) allows for quick calculation of the nth term or the sum of a series. Similarly, understanding geometric progressions (constant ratio between terms) is crucial for analyzing exponential growth or decay. This knowledge extends to more advanced areas such as time series analysis, where I utilize techniques like autoregressive models (AR) and moving averages (MA) to forecast future values based on past observations. Proficiency in programming languages like Python, with libraries such as NumPy and SciPy, significantly enhances my ability to manipulate and analyze numerical data.
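The closed-form results for arithmetic and geometric progressions can be written out directly. The helper names below are assumptions for illustration; the formulas themselves are the standard ones, with `a1` the first term, `d` the common difference, `r` the common ratio, and `n` the number of terms.

```python
def arithmetic_nth(a1, d, n):
    """n-th term: a1 + (n - 1) * d."""
    return a1 + (n - 1) * d

def arithmetic_sum(a1, d, n):
    """Sum of the first n terms: n * (2 * a1 + (n - 1) * d) / 2."""
    return n * (2 * a1 + (n - 1) * d) / 2

def geometric_nth(a1, r, n):
    """n-th term: a1 * r ** (n - 1)."""
    return a1 * r ** (n - 1)

def geometric_sum(a1, r, n):
    """Sum of the first n terms: a1 * (1 - r ** n) / (1 - r), or a1 * n when r == 1."""
    if r == 1:
        return a1 * n
    return a1 * (1 - r ** n) / (1 - r)

# Arithmetic progression 3, 7, 11, ...
print(arithmetic_nth(3, 4, 10))  # 39
print(arithmetic_sum(3, 4, 10))  # 210.0

# Geometric progression 2, 6, 18, ...
print(geometric_nth(2, 3, 5))    # 162
print(geometric_sum(2, 3, 5))    # 242.0
```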

Q 22. How do you deal with large numerical datasets?

Handling large numerical datasets efficiently requires a multi-pronged approach focusing on data management, processing, and analysis. Think of it like organizing a massive library – you wouldn’t try to manage it all at once!

Data Storage and Management: I leverage SQL or NoSQL databases suited to numerical data. These systems allow for efficient querying and retrieval of specific subsets of the data, preventing the need to load everything into memory at once. For example, using SQL’s WHERE clause allows me to isolate and analyze only the relevant portions of the dataset.

Data Processing: I utilize tools and techniques like parallel processing and distributed computing (like Apache Spark or Hadoop) to break down the dataset into smaller, manageable chunks that can be processed concurrently. This significantly reduces processing time for tasks like aggregation, filtering, and transformation. Imagine dividing a large research paper into sections and assigning each to a different expert for analysis before combining the findings.

Data Analysis: For analysis, I choose appropriate tools based on the size and complexity of the data. This could range from scripting languages like Python with libraries like Pandas and NumPy (great for smaller-to-medium datasets) to specialized analytical platforms like Tableau or Power BI for visualization and exploratory analysis of large datasets. Visualization is critical; identifying patterns and anomalies is much easier with well-designed charts and graphs.
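The idea of filtering in the database and processing the result in chunks can be sketched with Python's built-in `sqlite3` module. The table name, column names, and sample rows are made up for the example, and an in-memory database stands in for a real one; the same pattern applies to larger systems, where the batch size would be tuned to available memory.

```python
import sqlite3

def build_demo_db():
    """Create a small in-memory table of made-up sensor readings."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE readings (sensor TEXT, value REAL)")
    conn.executemany(
        "INSERT INTO readings VALUES (?, ?)",
        [("a", 1.5), ("b", 20.0), ("a", 2.5), ("b", 30.0)],
    )
    return conn

def mean_for_sensor(conn, sensor, batch_size=2):
    """Average one sensor's values, fetching rows in small batches."""
    # The WHERE clause keeps irrelevant rows out of the result entirely.
    cursor = conn.execute(
        "SELECT value FROM readings WHERE sensor = ?", (sensor,)
    )
    total, count = 0.0, 0
    while True:
        batch = cursor.fetchmany(batch_size)  # never loads the full result at once
        if not batch:
            break
        for (value,) in batch:
            total += value
            count += 1
    return total / count if count else None

conn = build_demo_db()
print(mean_for_sensor(conn, "a"))  # 2.0
conn.close()
```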

Q 23. Explain how you would approach quality assurance of numbered data.

Quality assurance for numbered data is paramount. It’s about ensuring accuracy, consistency, and completeness. I approach this systematically, employing several checks and balances:

Data Validation: This involves using rules and checks to verify that the data meets predefined criteria. For instance, I might check for valid ranges (e.g., age must be between 0 and 120), consistent formats (e.g., date formatted as YYYY-MM-DD), and the absence of illogical values. Think of this as proofreading a document for grammatical errors – you want to catch inconsistencies before they become problems.

Data Comparison: Comparing data from multiple sources or against expected values helps identify discrepancies. For example, comparing sales figures from a point-of-sale system with inventory data can reveal potential errors or inconsistencies.

Random Sampling and Auditing: Manually reviewing a subset of the data to spot patterns and inconsistencies not caught by automated checks. This is like quality control in a factory – randomly checking a few products from a batch to ensure quality standards are maintained.

Automated Testing: Implementing automated tests in the data processing pipeline helps catch errors early and consistently. Unit tests, integration tests, and end-to-end tests can all be applied to different stages of the data handling process.
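The validation rules described above, together with a small automated test, might look like the following sketch. The field names `age` and `visit_date` and the sample records are hypothetical; the 0 to 120 range and the YYYY-MM-DD format are the examples given in the text.

```python
import re
from datetime import datetime

def validate_record(record):
    """Return a list of rule violations for one record (empty if it passes)."""
    errors = []

    age = record.get("age")
    if not isinstance(age, int) or not 0 <= age <= 120:
        errors.append("age must be an integer between 0 and 120")

    visit = str(record.get("visit_date", ""))
    if not re.fullmatch(r"\d{4}-\d{2}-\d{2}", visit):
        errors.append("visit_date must be formatted as YYYY-MM-DD")
    else:
        try:
            # Rejects well-formed but impossible dates such as 2023-02-30.
            datetime.strptime(visit, "%Y-%m-%d")
        except ValueError:
            errors.append("visit_date is not a real calendar date")

    return errors

# A tiny automated test that could run as part of a data pipeline.
assert validate_record({"age": 34, "visit_date": "2024-02-29"}) == []
assert len(validate_record({"age": 150, "visit_date": "02/29/2024"})) == 2
print(validate_record({"age": 20, "visit_date": "2023-02-30"}))
# ['visit_date is not a real calendar date']
```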

Q 24. What is your proficiency in using different numbering systems?

My proficiency extends across various numbering systems, including:

Decimal (Base-10): This is the most commonly used system in everyday life and data analysis.

Binary (Base-2): Crucial for understanding computer systems and data representation at a fundamental level.

Hexadecimal (Base-16): Frequently used in computer programming and data representation for its compactness.

Roman Numerals: While less common in data analysis, understanding them is helpful for historical data or specialized applications.

Other Bases: I am familiar with the concepts and can adapt to other bases as needed.

I can easily convert between these systems, and I understand the implications of using different numbering systems in various contexts. For example, in financial reporting, I would use decimal numbers, while analyzing network traffic might involve working with hexadecimal or binary.
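Conversions between these systems are short in Python. The base conversions use built-in functions; the `to_roman` helper is an illustrative implementation written for this example and handles the conventional range 1 to 3999.

```python
# Decimal to binary and hexadecimal, and back again.
print(format(255, "b"))    # 11111111
print(format(255, "X"))    # FF
print(int("11111111", 2))  # 255
print(int("FF", 16))       # 255

ROMAN = [
    (1000, "M"), (900, "CM"), (500, "D"), (400, "CD"),
    (100, "C"), (90, "XC"), (50, "L"), (40, "XL"),
    (10, "X"), (9, "IX"), (5, "V"), (4, "IV"), (1, "I"),
]

def to_roman(number):
    """Convert an integer from 1 to 3999 to a Roman numeral."""
    if not 0 < number < 4000:
        raise ValueError("number must be between 1 and 3999")
    parts = []
    for value, symbol in ROMAN:
        # Take as many copies of this symbol as fit, then continue with the rest.
        count, number = divmod(number, value)
        parts.append(symbol * count)
    return "".join(parts)

print(to_roman(1994))  # MCMXCIV
```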

Q 25. How familiar are you with different data entry standards and guidelines?

I am well-versed in various data entry standards and guidelines, including:

ISO standards: I am familiar with relevant ISO standards pertaining to data quality and metadata, such as ISO 8000 (data quality) and ISO/IEC 11179 (metadata registries). These standards provide a framework for consistent data handling and facilitate interoperability between systems.

Industry-specific guidelines: I adapt my approach based on the specific industry’s regulations and best practices (e.g., healthcare, finance). This ensures compliance and avoids potential issues.

Understanding these standards is essential for ensuring data integrity and preventing errors. Think of these guidelines as a set of best practices for consistent and accurate data entry, like a style guide for writing – they enhance clarity and reliability.

Q 26. Describe your approach to handling conflicting or duplicate data.

Handling conflicting or duplicate data requires a careful and methodical approach. The best solution depends on the context and the nature of the data. My steps include:

Identification: Utilizing data deduplication techniques and algorithms to identify potential duplicates and conflicts. This could involve comparing various fields or using fuzzy matching for similar but not identical entries.

Investigation: Determining the root cause of the conflict or duplication. This might involve examining data sources, processes, and identifying potential errors or omissions.

Resolution: Based on the investigation, I choose an appropriate resolution strategy:

- Manual Correction: Reviewing and correcting the data manually (suitable for small datasets or complex conflicts).

- Automated Correction: Using scripts or tools to automatically resolve conflicts based on predefined rules (appropriate for larger datasets with clear resolution criteria). For example, choosing the most recent entry or the one from a trusted source.

- Data Fusion: Combining data from multiple sources to create a more complete and accurate record (useful when data represents different aspects of the same entity).

- Flagging: Marking conflicting entries for later review and manual resolution if automation is not feasible.

Documentation: Recording the steps taken to resolve conflicts and any decisions made, maintaining a complete audit trail. This ensures transparency and accountability.
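The identification and automated-correction steps can be sketched with fuzzy matching from Python's `difflib` module. Everything specific here is an assumption for illustration: the record fields, the 0.9 similarity threshold, and the "keep the most recent entry" rule (one of the resolution criteria mentioned above). Real deduplication usually compares several fields and needs a threshold tuned on sample data.

```python
from difflib import SequenceMatcher

def normalize(name):
    """Lowercase and collapse whitespace so formatting differences are ignored."""
    return " ".join(name.lower().split())

def is_duplicate(a, b, threshold=0.9):
    """Fuzzy match: True when the normalized names are at least `threshold` similar."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio() >= threshold

def deduplicate(records, threshold=0.9):
    """Keep the most recently updated record from each group of near-duplicates."""
    kept = []
    # Newest first, so the first record seen in each group is the one retained.
    for record in sorted(records, key=lambda r: r["updated"], reverse=True):
        if not any(is_duplicate(record["name"], k["name"], threshold) for k in kept):
            kept.append(record)
    return kept

# Made-up records; ISO dates sort correctly as plain strings.
records = [
    {"name": "ACME  Corp", "updated": "2023-01-10"},
    {"name": "Acme Corp.", "updated": "2024-03-01"},
    {"name": "Globex", "updated": "2022-05-05"},
]
print([r["name"] for r in deduplicate(records)])  # ['Acme Corp.', 'Globex']
```

Pairs that score just below the threshold are natural candidates for the flagging step rather than automatic resolution.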

Q 27. How do you ensure data consistency across different platforms?

Maintaining data consistency across different platforms requires a systematic approach centered around data standardization and integration. The key is ensuring data follows a common format and structure regardless of where it resides.

Data Standardization: Establishing clear data definitions, formats, and validation rules to ensure uniformity across all platforms. This involves defining data types, allowed values, and units of measure. This is like establishing a standard language – everyone speaks the same dialect.

Data Integration: Using tools and techniques to synchronize data across different platforms. This might involve using APIs, ETL (Extract, Transform, Load) processes, or data replication techniques. This is like setting up a bridge to connect different databases.

Data Governance: Implementing policies and procedures for data management, including access control, data quality checks, and change management. This is the overall framework to make sure data stays consistent and accurate.

Metadata Management: Tracking essential information about the data, including its origin, structure, and quality, which helps in troubleshooting inconsistencies.

Q 28. Explain your problem-solving approach when faced with numerical discrepancies.

My approach to solving numerical discrepancies is systematic and data-driven. I follow these steps:

Identify and Document: Carefully define the discrepancy, noting its magnitude, location in the dataset, and any associated context. This ensures I clearly understand the problem.

Investigate Sources: Trace the source of the data and look for potential errors in the data collection, processing, or entry stages. This often involves examining logs, databases, and other relevant documentation.

Analyze Patterns: Look for patterns in the discrepancies. Are they random or systematic? If systematic, this might indicate an error in the data processing pipeline or a flaw in the data collection methodology.

Apply Diagnostic Tests: Run diagnostic tests to validate data integrity and identify potential causes. This could involve checking data validation rules, comparing data against expected values, or performing statistical analysis to identify outliers.

Develop and Implement Solutions: Based on the analysis, formulate a solution, which might involve correcting errors, updating data processes, or revising data collection methods. This could involve writing scripts, modifying data pipelines, or developing new data validation procedures.

Document Resolution and Prevention: Record the resolution steps and any preventative measures taken to avoid similar discrepancies in the future. This ensures that the problem doesn’t recur.

Key Topics to Learn for Gathering and Numbering Interview

- Data Acquisition Strategies: Explore various methods for gathering data, including manual entry, automated systems, and data extraction techniques. Consider the advantages and disadvantages of each method in different contexts.

- Data Validation and Cleaning: Understand the importance of data quality. Learn techniques for identifying and correcting errors, inconsistencies, and missing values. This includes understanding data types and appropriate validation rules.

- Numbering Systems and Conventions: Become familiar with different numbering systems (e.g., sequential, hierarchical, alphanumeric) and their applications in various industries. Understand how to choose and implement the most appropriate system for a given task.

- Data Organization and Structure: Master the principles of organizing gathered data into logical structures, such as databases or spreadsheets. Learn how to design efficient data structures for optimal retrieval and analysis.

- Error Handling and Troubleshooting: Develop strategies for identifying and resolving issues that arise during the gathering and numbering process. Learn how to document and report errors effectively.

- Data Security and Privacy: Understand best practices for protecting sensitive data throughout the gathering and numbering process, adhering to relevant regulations and ethical considerations.

- Automation and Scripting (where applicable): Explore the use of scripting languages or tools to automate repetitive tasks related to data gathering and numbering. This could improve efficiency and reduce manual effort.

Next Steps

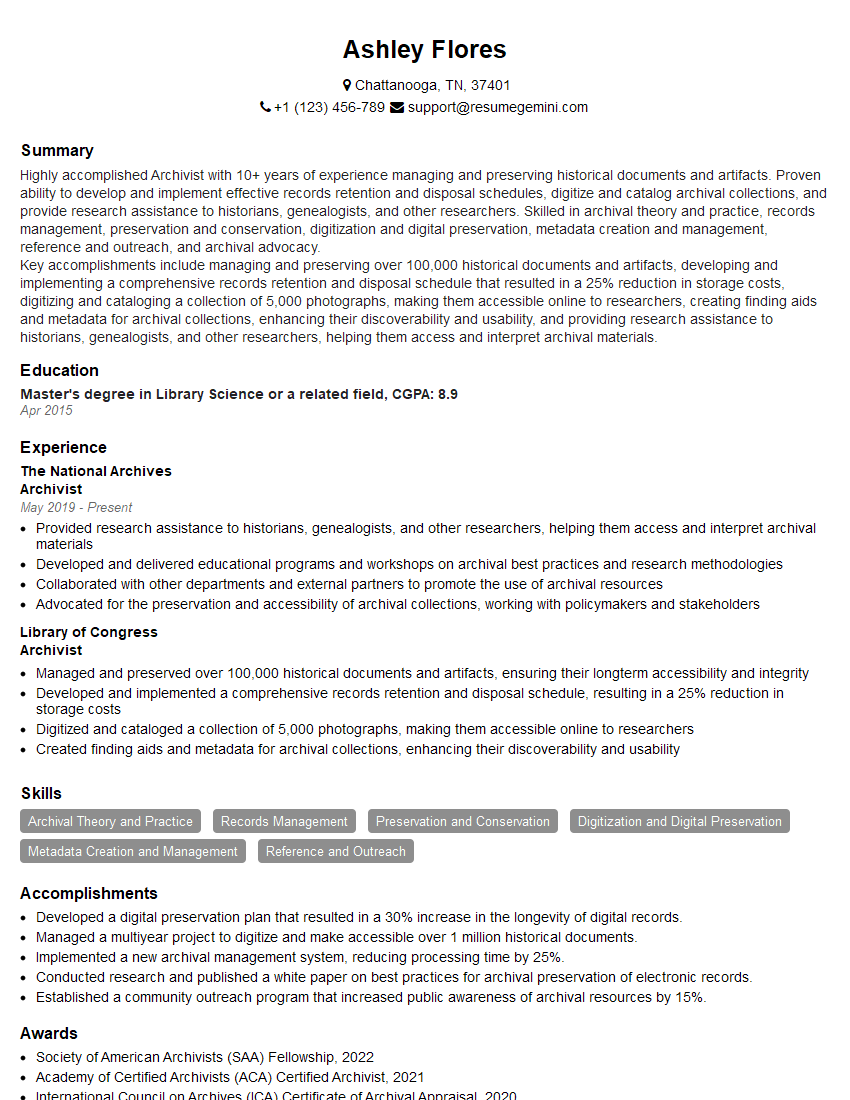

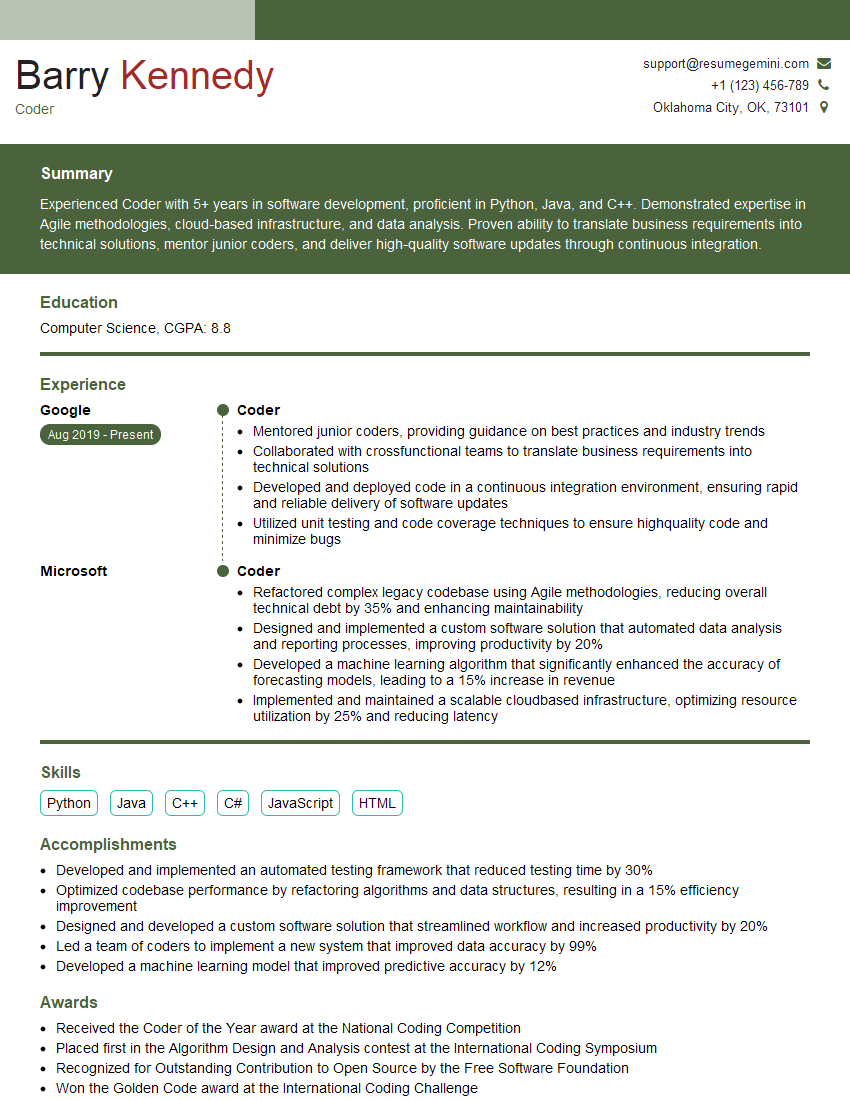

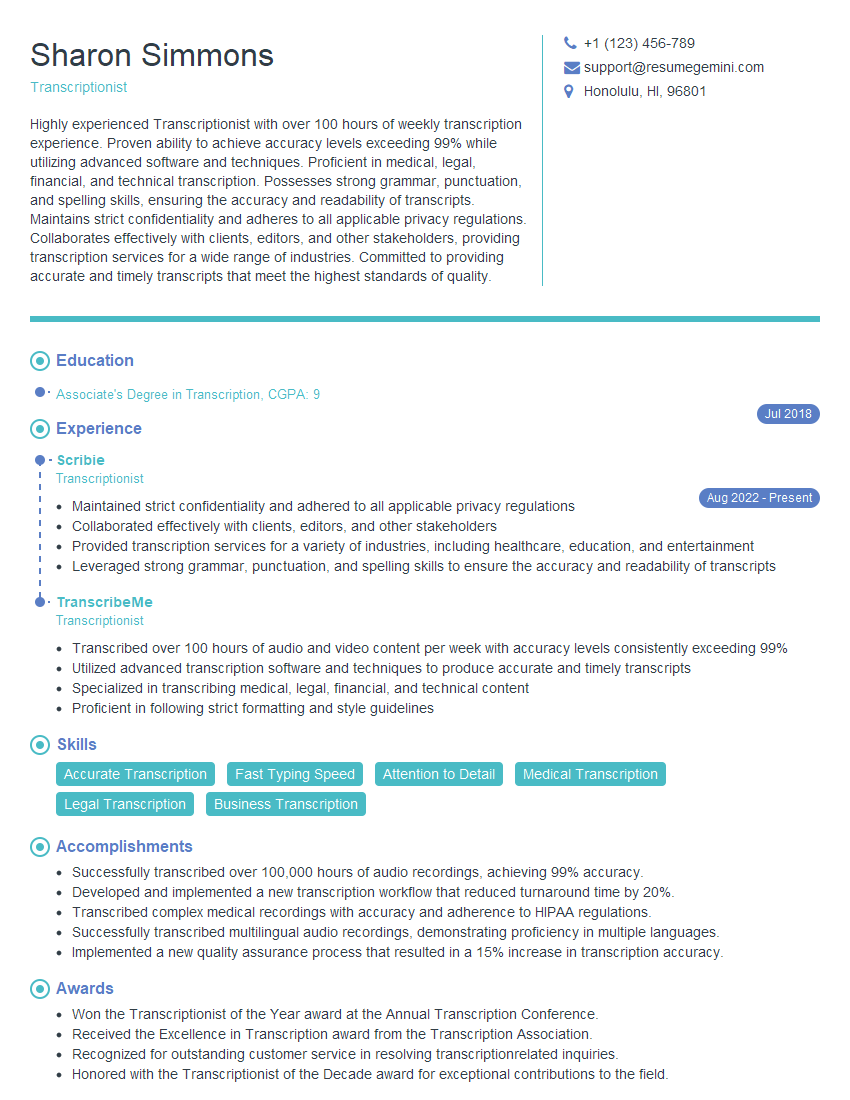

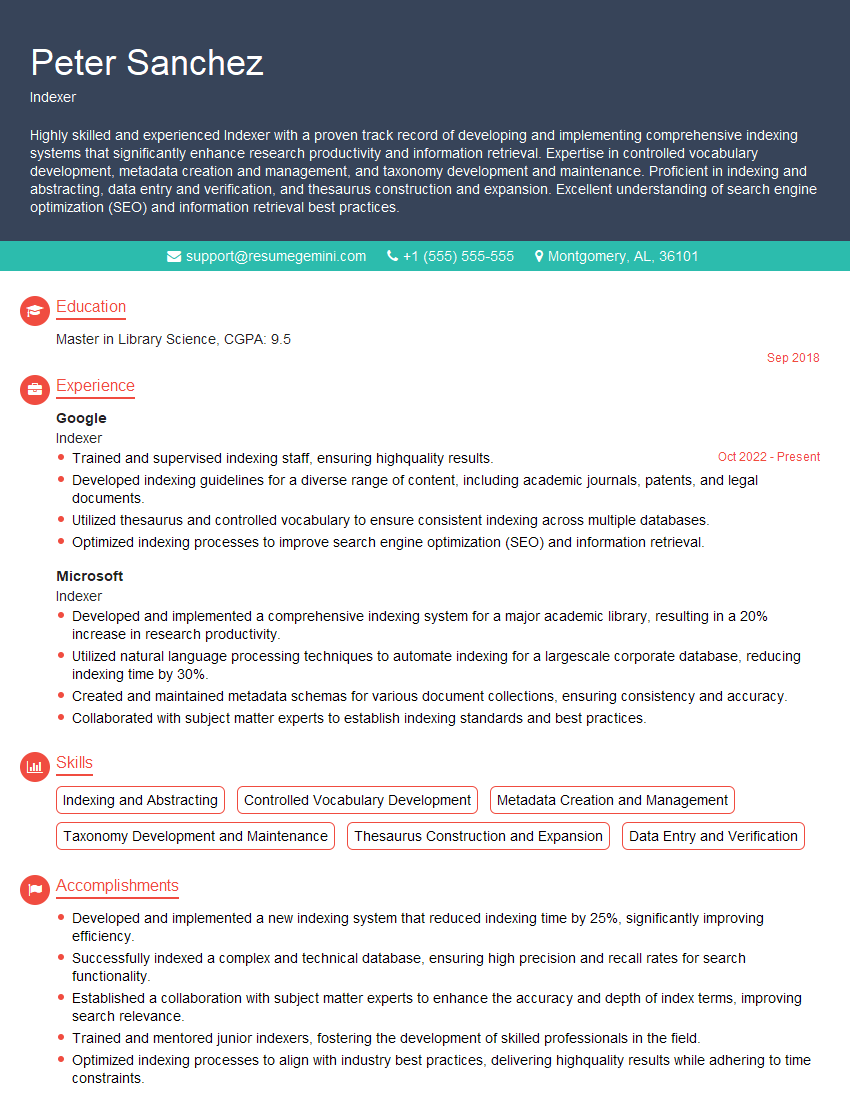

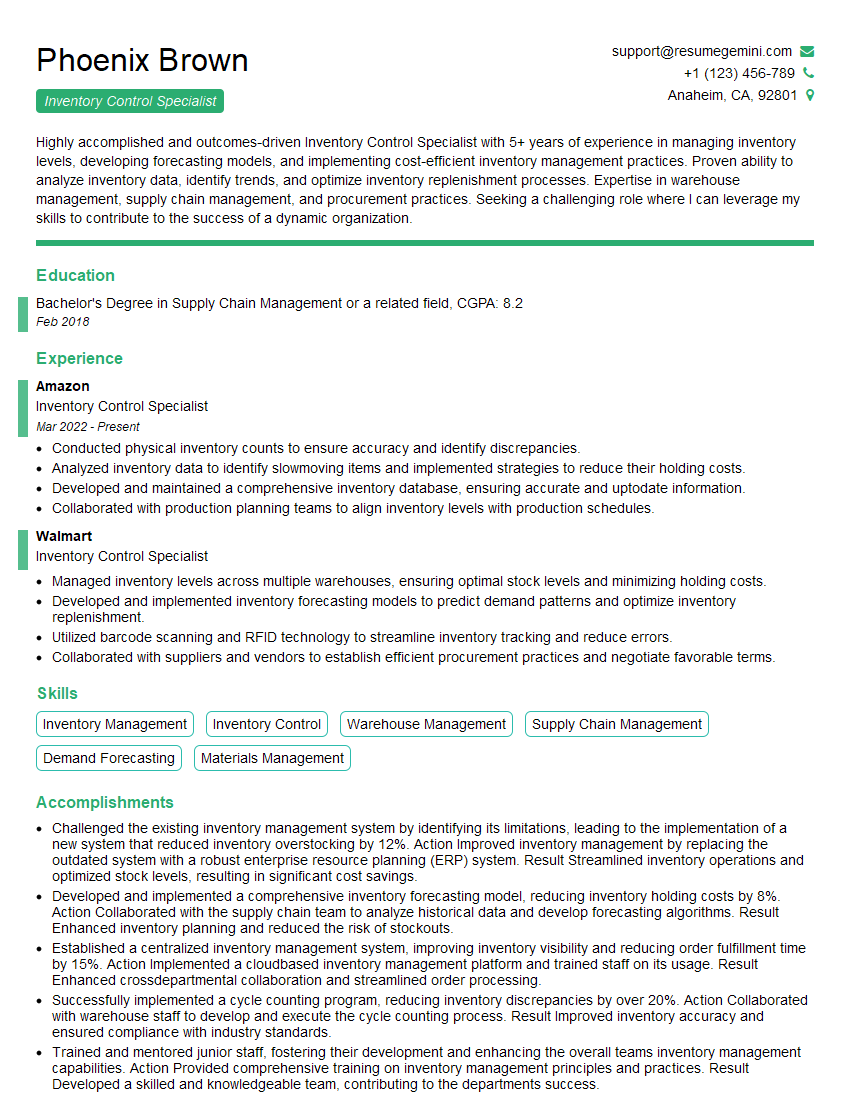

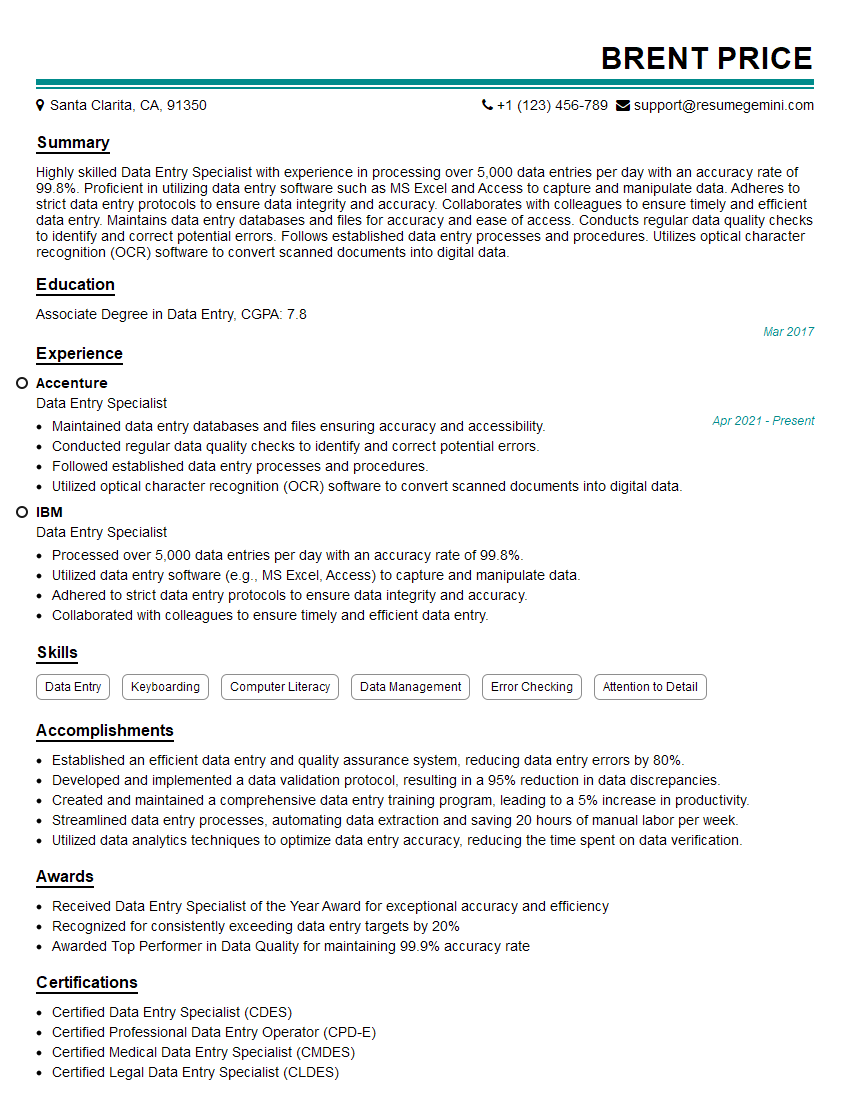

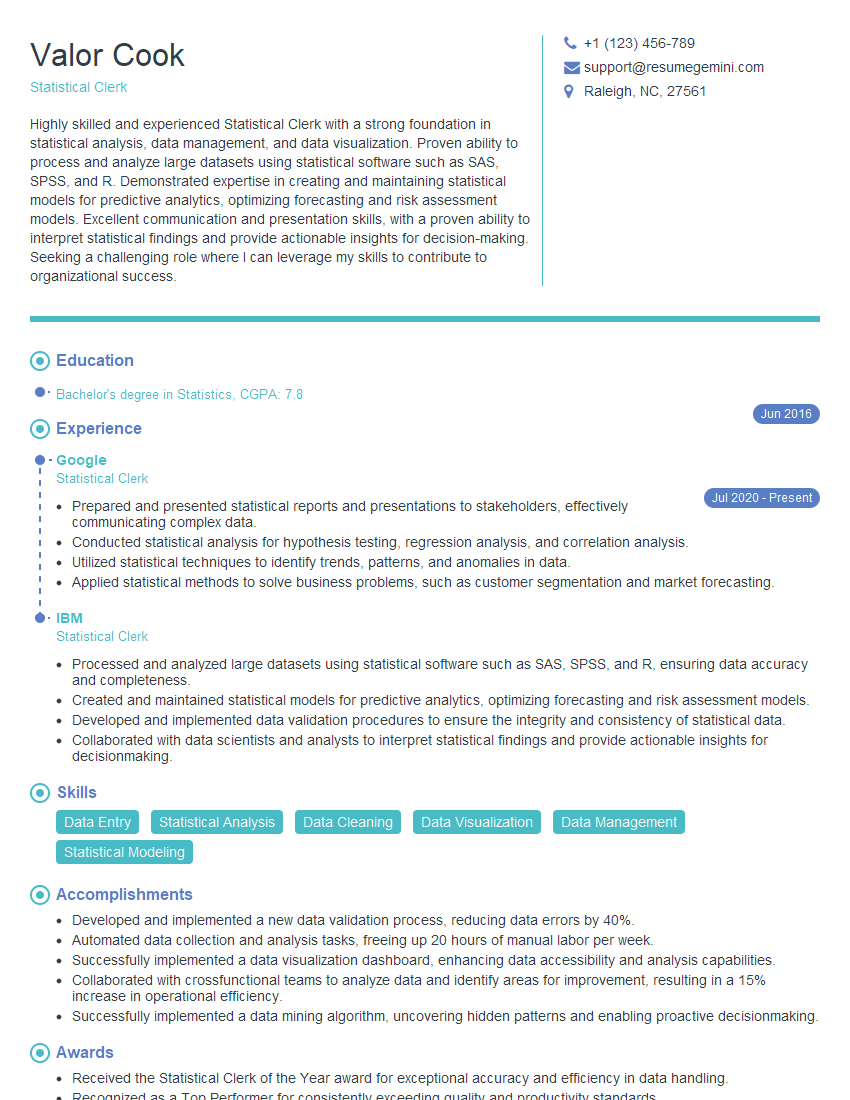

Mastering Gathering and Numbering skills is crucial for success in many data-centric roles, opening doors to exciting career opportunities and advancements. A strong resume is your key to unlocking these opportunities. Create an ATS-friendly resume that highlights your skills and experience effectively. To help you build a compelling resume that showcases your expertise in Gathering and Numbering, we encourage you to utilize ResumeGemini. ResumeGemini provides a user-friendly platform for creating professional resumes, and we even offer examples tailored specifically to Gathering and Numbering roles. Let ResumeGemini help you present yourself in the best possible light to prospective employers.
