Feeling uncertain about what to expect in your upcoming interview? We’ve got you covered! This blog highlights the most important Geographic Data Infrastructure (GDI) interview questions and provides actionable advice to help you stand out as the ideal candidate. Let’s pave the way for your success.

Questions Asked in Geographic Data Infrastructure (GDI) Interview

Q 1. Explain the key components of a Geographic Data Infrastructure (GDI).

A Geographic Data Infrastructure (GDI) is a framework of policies, standards, technologies, and human resources designed to ensure the efficient and effective access, use, and sharing of geographic information within an organization or across a region. Think of it as a well-organized library for spatial data, making it easily findable, understandable, and usable.

- Data: This is the core – the actual geographic information, including both vector (points, lines, polygons) and raster (images, grids) data. Examples include road networks, land parcels, elevation models, and satellite imagery.

- Metadata: This is crucial descriptive information about the data – who created it, when, its accuracy, what it represents, and how to access it. Think of it as the library catalog – helping you find exactly what you need.

- Technology: This includes hardware (servers, workstations), software (GIS applications, databases, web services), and communication networks needed to store, process, and share geographic data. This is the infrastructure that keeps the library running.

- Standards: These are guidelines and specifications that ensure consistency and interoperability of geographic data across different systems and organizations. These are the library’s organization and classification system.

- People: Skilled professionals (geographers, GIS specialists, data managers) are vital in all stages of the GDI lifecycle – from data acquisition and management to analysis and dissemination. These are the librarians and information specialists.

- Policies and Governance: These rules and regulations define how data is acquired, managed, shared, and accessed, ensuring security and data quality. They are the library’s lending and usage rules.

Q 2. Describe the differences between vector and raster data.

Vector and raster data represent geographic information in fundamentally different ways. Imagine you’re drawing a map:

- Vector data uses points, lines, and polygons to represent geographic features. Each feature is defined by coordinates and attributes. Think of drawing a house with precise lines for its walls and roof: those lines are the vector data, tracing the house’s boundary. Vector data is ideal for representing discrete features with defined boundaries, offering high positional accuracy and staying crisp when displayed at any scale.

- Raster data uses a grid of cells (pixels) to represent geographic information. Each cell holds a value for a specific attribute. Think of a satellite image: each pixel is a tiny square recording a color or brightness value. Raster data is ideal for representing continuous phenomena like elevation or temperature.

Here’s a table summarizing the key differences:

| Aspect | Vector | Raster |
|---|---|---|
| Data structure | Points, lines, polygons | Grid of cells (pixels) |
| Best suited to | Discrete features | Continuous phenomena |
| Storage | Usually compact for discrete features | Often large, especially at high resolution |
| Positional accuracy | High | Limited by cell size |
| Display at larger scales | Stays crisp | Becomes pixelated |
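The next sketch makes the "limited by cell size" row concrete. It measures the same rectangular parcel in two ways. The vector measurement is exact and uses the shoelace formula on the coordinates. The raster measurement rasterizes the parcel and counts grid cells whose centers fall inside it. The parcel coordinates and cell sizes are invented for illustration.

```python
import math

def shoelace_area(ring):
    """Exact area of a simple polygon given as a list of (x, y) vertices."""
    total = 0.0
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0

def point_in_polygon(x, y, ring):
    """Ray casting: toggle 'inside' each time a ray toward +x crosses an edge."""
    inside = False
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def raster_area(ring, cell_size):
    """Approximate area: count cells (anchored at the bbox corner) whose centers are inside."""
    xs = [p[0] for p in ring]
    ys = [p[1] for p in ring]
    minx, miny = min(xs), min(ys)
    ncols = math.ceil((max(xs) - minx) / cell_size)
    nrows = math.ceil((max(ys) - miny) / cell_size)
    cells = 0
    for r in range(nrows):
        for c in range(ncols):
            cx = minx + (c + 0.5) * cell_size
            cy = miny + (r + 0.5) * cell_size
            if point_in_polygon(cx, cy, ring):
                cells += 1
    return cells * cell_size ** 2

parcel = [(0.0, 0.0), (3.4, 0.0), (3.4, 2.0), (0.0, 2.0)]  # hypothetical parcel, meters
print(shoelace_area(parcel))     # 6.8 (vector, exact)
print(raster_area(parcel, 1.0))  # 6.0 (1 m cells)
print(raster_area(parcel, 0.5))  # 7.0 (0.5 m cells)
```

Neither raster estimate equals the exact 6.8 m². The error depends on how the cell boundaries line up with the feature's edges.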

Q 3. What are the common data formats used in GDI?

GDIs utilize a variety of data formats, each with its strengths and weaknesses. Selecting the right format depends on the data type, application, and compatibility requirements.

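- Shapefile (.shp): A widely used Esri vector format for points, lines, or polygons, with one geometry type per file. A shapefile is actually a set of files: .shp (geometry), .shx (index), .dbf (attributes), and usually .prj (coordinate system). It is simple and nearly universally supported, but it has limits: field names are capped at 10 characters, each component file is limited to 2 GB, and topology is not stored.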

- GeoJSON (.geojson): A text-based vector format that’s gaining popularity due to its open standard and ease of use in web applications. Lightweight and easily parsed by various programming languages.

- Geodatabase (.gdb): A proprietary Esri format used in ArcGIS that stores vector, raster, and tabular data together. It offers powerful data management capabilities, including geodatabase topology and, in enterprise geodatabases, versioning. Full functionality requires Esri software.

- KML/KMZ (.kml, .kmz): Primarily used for visualizing and exchanging geographic data in Google Earth and other applications. KML is an XML-based OGC standard for vector features and image overlays. KMZ is a zipped KML that can bundle those images and other resources.

- TIFF (.tif, .tiff): A common raster image format, widely used for satellite imagery and aerial photographs. Plain TIFF carries no georeferencing of its own. It is usually paired with a world file (.tfw) or stored as GeoTIFF.

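- GeoTIFF (.tif): An extension of TIFF that embeds georeferencing information as TIFF tags: the coordinate reference system and the mapping from pixels to map coordinates. This makes it easy to integrate into GIS systems.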

The choice often involves a trade-off between ease of use, data integrity, and compatibility with existing systems.
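GeoJSON is plain JSON, so Python's standard library is enough to write and read it. The sketch below is minimal, and the station name, attribute, and coordinates are invented. RFC 7946 GeoJSON uses WGS84 coordinates in [longitude, latitude] order.

```python
import json

# A hypothetical monitoring station as a GeoJSON Feature.
# Coordinate order is [longitude, latitude], per RFC 7946.
station = {
    "type": "Feature",
    "geometry": {"type": "Point", "coordinates": [-122.4194, 37.7749]},
    "properties": {"name": "Station A", "pm25": 12.3},
}
collection = {"type": "FeatureCollection", "features": [station]}

text = json.dumps(collection)  # serialize for a web API or a .geojson file
parsed = json.loads(text)      # any JSON parser can read it back

for feature in parsed["features"]:
    lon, lat = feature["geometry"]["coordinates"]
    print(feature["properties"]["name"], lat, lon)  # Station A 37.7749 -122.4194
```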

Q 4. Explain the concept of spatial referencing and coordinate systems.

Spatial referencing is the process of locating geographic features within a defined coordinate system. It’s like giving each point on a map a unique address. This allows different datasets to be overlaid and analyzed together.

Coordinate systems define the way we represent locations on the Earth’s surface. There are two main types:

- Geographic Coordinate Systems (GCS): These use latitude and longitude to define locations. Think of the lines of latitude and longitude on a globe. Latitude measures north-south position, and longitude measures east-west position.

- Projected Coordinate Systems (PCS): These transform the curved surface of the Earth onto a flat plane. Locations are then expressed in linear units such as meters, so distances and areas can be measured directly. No projection preserves everything: each one distorts some combination of area, shape, distance, and direction. The choice of projection therefore depends on the region of interest and the type of analysis being performed. For example, the Mercator projection is conformal: it preserves local angles, so lines of constant compass bearing are straight. However, it greatly exaggerates area toward the poles. An Albers Equal-Area Conic projection preserves area but distorts shape.

Incorrectly using or defining spatial referencing can lead to inaccurate analysis and map misrepresentations. Ensuring consistent spatial referencing across datasets is crucial for accurate integration and analysis within a GDI.
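The sketch below shows one projection step. It assumes the spherical Web Mercator projection (EPSG:3857) used by most web maps. It converts longitude/latitude to projected meters and quantifies Mercator's area distortion. In production, use a projection library such as PROJ (for example, through pyproj) rather than hand-written formulas.

```python
import math

EARTH_RADIUS_M = 6378137.0  # sphere radius used by Web Mercator (EPSG:3857)

def to_web_mercator(lon_deg, lat_deg):
    """Project WGS84 longitude/latitude in degrees to Web Mercator x/y in meters."""
    x = EARTH_RADIUS_M * math.radians(lon_deg)
    y = EARTH_RADIUS_M * math.log(math.tan(math.pi / 4 + math.radians(lat_deg) / 2))
    return x, y

def mercator_area_scale(lat_deg):
    """Factor by which Mercator inflates areas at a given latitude: sec^2(lat)."""
    return 1.0 / math.cos(math.radians(lat_deg)) ** 2

x, _ = to_web_mercator(180.0, 0.0)
print(round(x, 2))                # 20037508.34, the x extent of the Web Mercator world
print(mercator_area_scale(60.0))  # about 4: areas at 60 degrees appear ~4x too large
```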

Q 5. What are the benefits of using a spatial database?

Spatial databases offer significant advantages over traditional relational databases when managing geographic information.

- Spatial Indexing and Querying: Efficiently locate and retrieve geographic data based on spatial relationships (e.g., finding all points within a polygon, finding the nearest neighbor). This significantly speeds up spatial queries compared with traditional databases, which lack built-in spatial indexes and functions.

- Spatial Data Types: They support specialized data types for points, lines, polygons, and other geographic features. This allows for direct storage and manipulation of spatial information, unlike traditional databases that often require workarounds.

- Spatial Relationships and Analysis: Support spatial analysis functions such as overlay, buffer creation, proximity analysis, and network analysis. This allows complex spatial queries and analysis to be performed directly within the database, rather than requiring data export and processing in separate GIS software.

- Data Integrity: Can enforce topological rules (e.g., ensuring lines don’t overlap or polygons are closed) to maintain data consistency and accuracy. This ensures that data remains reliable and meaningful.

- Scalability: Can handle very large datasets. With appropriate indexing, partitioning, and hardware, spatial databases can manage millions to billions of geographic features.

Examples include PostGIS (a spatial extension to PostgreSQL) and Oracle Spatial.
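Production spatial databases typically use R-tree-style indexes; PostGIS builds them on PostgreSQL's GiST. The sketch below uses a simpler uniform grid to show the same idea: a bounding-box query inspects only nearby buckets instead of every feature. The `GridIndex` class and its parameters are illustrative, not a library API.

```python
import math
import random
from collections import defaultdict

class GridIndex:
    """Uniform-grid spatial index for points (illustrative, not production-grade)."""

    def __init__(self, cell_size):
        self.cell_size = cell_size
        self.cells = defaultdict(list)  # (col, row) -> list of (id, x, y)

    def _key(self, x, y):
        return (math.floor(x / self.cell_size), math.floor(y / self.cell_size))

    def insert(self, fid, x, y):
        self.cells[self._key(x, y)].append((fid, x, y))

    def query_bbox(self, minx, miny, maxx, maxy):
        """Return ids of points inside the box, checking only overlapping cells."""
        col0, row0 = self._key(minx, miny)
        col1, row1 = self._key(maxx, maxy)
        hits = []
        for col in range(col0, col1 + 1):
            for row in range(row0, row1 + 1):
                for fid, x, y in self.cells.get((col, row), ()):
                    if minx <= x <= maxx and miny <= y <= maxy:
                        hits.append(fid)
        return sorted(hits)

rng = random.Random(42)
points = [(i, rng.uniform(0, 1000), rng.uniform(0, 1000)) for i in range(10_000)]
index = GridIndex(cell_size=50)
for fid, x, y in points:
    index.insert(fid, x, y)

result = index.query_bbox(100, 100, 200, 150)
brute_force = sorted(fid for fid, x, y in points if 100 <= x <= 200 and 100 <= y <= 150)
print(len(result), result == brute_force)
```

The query touches only a handful of the 400 grid cells but returns the same ids as a full scan. That gap is the speedup an index buys.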

Q 6. How do you ensure data quality and accuracy within a GDI?

Ensuring data quality and accuracy in a GDI is paramount. It requires a multifaceted approach.

- Data Acquisition Procedures: Establishing clear guidelines and protocols for acquiring data from various sources, including specifications for accuracy, precision, and completeness.

- Data Validation and Verification: Implementing rigorous quality control checks at each stage of the data lifecycle. This could involve visual inspections, automated checks for inconsistencies, and comparisons against known accurate datasets.

- Metadata Standards: Adhering to metadata standards (like FGDC or ISO 19115) ensures that comprehensive and accurate information is available for each dataset, including information on data quality and limitations.

- Data Lineage Tracking: Maintaining a clear record of the origin, processing steps, and modifications made to each dataset. This aids in tracing potential errors and understanding data transformations.

- Regular Data Updates: Establishing a routine for updating datasets to reflect changes over time. This ensures the information remains current and relevant.

- Data Error Correction: Setting up procedures for identifying, documenting, and correcting data errors. This may involve creating workflows and tools for error detection and repair.

- User Training: Providing training and documentation for users on correct data handling procedures, reducing errors caused by misuse or misinterpretation.

A combination of these strategies leads to more reliable and trustworthy data within the GDI.
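Automated checks of this kind are often small rule functions run over every record. The sketch below uses hypothetical field names (`ring`, `area_m2`, `crs`) and rules. A real pipeline would add geometry checks such as self-intersection tests and run against the full dataset.

```python
def validate_parcel(parcel):
    """Return a list of human-readable problems found in one parcel record."""
    problems = []
    ring = parcel.get("ring") or []
    # A closed polygon ring needs at least 4 positions, with first == last.
    if len(ring) < 4:
        problems.append("ring has fewer than 4 positions")
    elif ring[0] != ring[-1]:
        problems.append("ring is not closed")
    area = parcel.get("area_m2")
    if area is None:
        problems.append("area_m2 is missing")
    elif area <= 0:
        problems.append("area_m2 must be positive")
    if not parcel.get("crs"):
        problems.append("coordinate reference system is not recorded")
    return problems

parcels = [
    {"id": 1, "ring": [(0, 0), (10, 0), (10, 5), (0, 0)], "area_m2": 25.0, "crs": "EPSG:32633"},
    {"id": 2, "ring": [(0, 0), (10, 0), (10, 5), (0, 5)], "area_m2": -3.0, "crs": ""},
]
for p in parcels:
    print(p["id"], validate_parcel(p) or "OK")
```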

Q 7. Describe your experience with metadata standards (e.g., FGDC, ISO 19115).

Throughout my career, I’ve extensively used and implemented metadata standards, specifically FGDC (Federal Geographic Data Committee) and ISO 19115. I understand the importance of consistent and comprehensive metadata for data discovery, interoperability, and quality control. My experience includes:

- Creating and managing metadata records: I’ve developed and maintained metadata for various datasets, utilizing both FGDC and ISO 19115 standards. This involves documenting dataset characteristics, such as spatial extent, data quality, and data lineage.

- Implementing metadata schemas and workflows: I’ve helped design and implement metadata workflows in projects using various GIS software packages. This includes automating metadata creation and editing processes to streamline workflows and ensure consistency.

- Metadata validation and quality control: I’ve used metadata validation tools to ensure that metadata records comply with specified standards, identifying and correcting inconsistencies.

- Integrating metadata into data discovery portals: I’ve participated in developing data discovery portals, leveraging metadata standards to make geographic datasets easily searchable and accessible.

My experience with these standards goes beyond simple compliance. I understand their implications for data usability, interoperability, and long-term preservation of valuable geographic information. I’m familiar with the challenges of balancing the need for comprehensive metadata with the practical considerations of time and resources.

Q 8. Explain the process of data integration within a GDI.

Data integration within a Geographic Data Infrastructure (GDI) is the process of combining geospatial data from diverse sources into a unified, consistent, and readily accessible framework. Think of it like assembling a complex jigsaw puzzle – each piece represents a different dataset, and the final picture is the integrated GDI.

This process typically involves several key steps:

- Data Discovery and Assessment: Identifying all relevant datasets, evaluating their quality (completeness, accuracy, consistency), and understanding their formats and coordinate systems.

- Data Transformation: Converting data into a common format and coordinate system. This might involve reprojection (e.g., converting UTM coordinates to WGS84 latitude/longitude), data type conversions, and attribute standardization.

- Data Cleaning and Validation: Identifying and correcting errors, inconsistencies, and redundancies in the data. This includes dealing with spatial inconsistencies like overlapping polygons or gaps in coverage.

- Data Integration: Combining the transformed and cleaned datasets. This could involve merging tables, overlaying spatial features, or using more complex techniques like spatial joins or data fusion.

- Data Loading and Storage: Importing the integrated data into a spatial database or data warehouse for efficient storage and access. This often involves choosing appropriate data models (e.g., relational, object-relational, NoSQL) and indexing strategies for optimal query performance.

- Metadata Management: Maintaining comprehensive metadata (information about the data) to ensure data discoverability, understandability, and usability. This includes information on data sources, processing steps, quality assessments, and contact information.

For example, integrating census data with land use data allows for sophisticated analysis of population density across different land use types, informing urban planning decisions.
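The transformation and integration steps can be sketched with plain Python dictionaries. All schemas, codes, and values below are invented. The join is attribute-based and assumes both sources share a parcel identifier; without one, you would need a spatial join in a GIS or spatial database. The acre conversion uses the exact definition of 1 international acre = 4046.8564224 m².

```python
ACRES_TO_HECTARES = 0.40468564224  # 1 international acre = 4046.8564224 m²

# Two hypothetical sources describing the same parcels with different schemas.
county_parcels = [
    {"PARCEL_NO": "A-001", "LANDUSE": "res", "AREA_AC": 2.5},
    {"PARCEL_NO": "A-002", "LANDUSE": "com", "AREA_AC": 1.0},
]
census_counts = [
    {"parcel_id": "A-001", "pop": 120},
    {"parcel_id": "A-002", "pop": 4},
]
LANDUSE_CODES = {"res": "residential", "com": "commercial"}

def standardize_parcel(rec):
    """Map one county record onto the common schema (names, codes, units)."""
    return {
        "parcel_id": rec["PARCEL_NO"],
        "land_use": LANDUSE_CODES.get(rec["LANDUSE"], "unknown"),
        "area_ha": rec["AREA_AC"] * ACRES_TO_HECTARES,
    }

def integrate(parcels, counts):
    """Attribute join on parcel_id, then derive population density per hectare."""
    pop_by_id = {c["parcel_id"]: c["pop"] for c in counts}
    merged = []
    for rec in map(standardize_parcel, parcels):
        pop = pop_by_id.get(rec["parcel_id"])
        rec["pop"] = pop
        rec["density_per_ha"] = None if pop is None else pop / rec["area_ha"]
        merged.append(rec)
    return merged

for row in integrate(county_parcels, census_counts):
    print(row["parcel_id"], row["land_use"], round(row["density_per_ha"], 1))
```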

Q 9. What are the challenges of managing large geospatial datasets?

Managing large geospatial datasets presents several significant challenges:

- Storage Capacity and Costs: Geospatial data is often voluminous, requiring substantial storage capacity and potentially incurring high storage and maintenance costs.

- Data Processing and Analysis: Processing and analyzing large datasets can be computationally intensive and time-consuming, requiring powerful hardware and efficient algorithms.

- Data Access and Retrieval: Efficiently accessing and retrieving specific subsets of data from massive datasets is crucial for effective analysis and decision-making. Poorly indexed data can lead to significant performance bottlenecks.

- Data Quality Control: Ensuring data accuracy, consistency, and completeness across large datasets is a considerable challenge, often requiring automated quality control mechanisms.

- Data Security and Privacy: Protecting sensitive geospatial data from unauthorized access and misuse is paramount, requiring robust security measures and compliance with relevant regulations.

- Data Interoperability: Ensuring that different datasets, potentially from disparate sources, can be seamlessly integrated and analyzed together requires careful attention to data standards and formats.

Consider a national-level land cover dataset – the sheer size of such data demands specialized hardware, optimized database management systems, and distributed processing techniques for efficient management.
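A common way to process rasters too large for memory is to split them into fixed-size windows. Each window can be read, processed, and written independently, or handed to separate workers. Raster libraries such as rasterio support windowed reads. The sketch below only generates the window layout, and the raster dimensions are hypothetical.

```python
def iter_windows(n_rows, n_cols, block=1024):
    """Yield (row_off, col_off, height, width) blocks that tile a raster exactly."""
    for row_off in range(0, n_rows, block):
        for col_off in range(0, n_cols, block):
            yield (row_off, col_off,
                   min(block, n_rows - row_off),
                   min(block, n_cols - col_off))

# Hypothetical national land-cover raster: 100,000 x 150,000 cells.
windows = list(iter_windows(100_000, 150_000, block=4096))
print(len(windows))  # 925 windows, the last row/column of windows being partial
```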

Q 10. How do you address data inconsistencies in a GDI?

Addressing data inconsistencies in a GDI requires a multifaceted approach:

- Data Standardization: Implementing consistent standards for data formats, coordinate systems, attribute names, and data values is fundamental. This ensures that data from different sources can be meaningfully integrated.

- Data Cleaning and Validation: Employing automated and manual techniques to identify and correct errors, inconsistencies, and redundancies in the data. This can involve spatial data validation (e.g., checking for overlapping polygons), attribute validation (e.g., verifying data ranges), and consistency checks across multiple datasets.

- Data Reconciliation: Using techniques to resolve conflicts between different versions or interpretations of the same data. This might involve prioritizing data sources based on quality or using statistical methods to estimate missing or inconsistent values.

- Metadata Management: Carefully documenting the data sources, processing steps, quality assessments, and any known inconsistencies to ensure transparency and accountability.

- Data Fusion Techniques: Employing advanced techniques like data fusion to combine data from multiple sources, resolving discrepancies and improving data quality.

For example, if two datasets show slightly different boundaries for a particular administrative region, we might use a spatial overlay technique to create a reconciled boundary, documenting the source discrepancies in the metadata.
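The "prioritize sources by quality" approach to reconciliation can be sketched as follows. The source names, their ranking, and the records are all hypothetical. For each region, the function keeps the value from the most trusted source that has one. It also records any disagreement so the conflict can be documented in the metadata.

```python
# Hypothetical source ranking: lower number = more trusted.
SOURCE_PRIORITY = {"survey_2023": 0, "state_portal": 1, "crowdsourced": 2}

def reconcile(records):
    """Pick one value per region from the most trusted source; log disagreements."""
    by_region = {}
    for rec in records:
        by_region.setdefault(rec["region_id"], []).append(rec)

    reconciled, discrepancies = {}, []
    for region_id, candidates in sorted(by_region.items()):
        usable = [r for r in candidates if r["population"] is not None]
        if not usable:
            continue
        usable.sort(key=lambda r: SOURCE_PRIORITY[r["source"]])
        chosen = usable[0]
        reconciled[region_id] = chosen["population"]
        values = {r["population"] for r in usable}
        if len(values) > 1:
            # Keep the conflict so it can be documented in the metadata.
            discrepancies.append((region_id, chosen["source"], sorted(values)))
    return reconciled, discrepancies

records = [
    {"region_id": "R1", "source": "crowdsourced", "population": 5100},
    {"region_id": "R1", "source": "survey_2023", "population": 5000},
    {"region_id": "R2", "source": "state_portal", "population": 800},
    {"region_id": "R2", "source": "survey_2023", "population": None},
]
values, conflicts = reconcile(records)
print(values)     # {'R1': 5000, 'R2': 800}
print(conflicts)  # [('R1', 'survey_2023', [5000, 5100])]
```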

Q 11. Describe your experience with different GIS software (e.g., ArcGIS, QGIS).

I have extensive experience with both ArcGIS and QGIS, having used them for diverse projects ranging from urban planning to environmental monitoring.

ArcGIS: I’m proficient in using ArcGIS Pro and its various extensions for geoprocessing, spatial analysis, and data management. I’ve leveraged its powerful geodatabase capabilities for managing and querying large datasets, along with its advanced visualization tools for creating compelling maps and presentations.

QGIS: QGIS has been invaluable for its open-source nature, flexibility, and the vast array of plugins it offers. I’ve utilized it for tasks such as data processing, analysis, and map production, particularly where budget constraints or the need for open-source solutions were crucial. Its user-friendly interface and Python scripting capabilities have enhanced my workflow substantially.

In essence, my experience with both platforms allows me to choose the most appropriate tool for each project based on the specific requirements, budget, and project scope.

Q 12. Explain the concept of geoprocessing and its applications.

Geoprocessing refers to the manipulation and analysis of geographic data using GIS software. It encompasses a wide range of operations that transform, analyze, and derive new information from spatial data. Think of it as a set of tools for manipulating your geographic data ‘puzzle pieces’ to create a new, more informative picture.

Applications of Geoprocessing:

- Spatial Analysis: Performing operations like buffer creation, overlay analysis (union, intersection, difference), proximity analysis, and network analysis.

- Data Conversion and Transformation: Converting data between different formats, projections, and coordinate systems.

- Data Management: Creating, editing, and managing geospatial datasets, including attribute tables and spatial features.

- Data Modeling: Creating and manipulating spatial data models, defining relationships between different data layers.

- Automation: Creating automated workflows using scripting languages (e.g., Python) to streamline repetitive tasks and improve efficiency.

For instance, geoprocessing can automate the creation of flood risk maps by overlaying elevation data with flood hazard zones, generating a new layer showing areas at different risk levels.
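The flood-risk example can be sketched as a cell-by-cell raster overlay. This minimal version assumes both layers are already on the same grid, meaning the same extent, cell size, and coordinate system. The grid values and classification thresholds are invented. Real workflows run the same logic with GIS raster tools or array libraries.

```python
# Hypothetical inputs on the same 3 x 3 grid.
elevation_m = [
    [2.0, 3.5, 8.0],
    [1.5, 4.0, 12.0],
    [0.5, 6.0, 15.0],
]
in_hazard_zone = [
    [True, True, False],
    [True, False, False],
    [True, True, False],
]

def classify(elev, hazard):
    """Assumed rules: low-lying hazard-zone cells are high risk."""
    if hazard and elev < 3.0:
        return "high"
    if hazard or elev < 5.0:
        return "medium"
    return "low"

def overlay(elev_grid, hazard_grid):
    """Combine two aligned grids cell by cell into a new risk layer."""
    return [
        [classify(e, h) for e, h in zip(elev_row, hazard_row)]
        for elev_row, hazard_row in zip(elev_grid, hazard_grid)
    ]

for row in overlay(elevation_m, in_hazard_zone):
    print(row)
```

Wrapping such steps in functions is what makes them easy to automate and rerun when either input layer is updated.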

Q 13. What are some common geospatial analysis techniques?

Common geospatial analysis techniques include:

- Spatial Overlay: Combining multiple datasets to identify spatial relationships and create new information. Examples include union, intersect, and erase.

- Buffering: Creating zones around geographic features to represent areas of influence or proximity. This is useful for analyzing areas within a certain distance of roads, rivers, or other features.

- Proximity Analysis: Measuring distances and identifying nearest neighbors. Useful for analyzing things like service area accessibility or identifying optimal locations for new facilities.

- Network Analysis: Analyzing networks like roads or pipelines to optimize routes, find shortest paths, or model flow.

- Spatial Interpolation: Estimating values at unsampled locations based on known values at other locations. Used for things like creating contour maps or predicting environmental variables.

- Density Analysis: Calculating the density of points or features within a given area, such as population density or crime density.

- Spatial Statistics: Employing statistical methods to analyze spatial patterns and relationships. Examples include spatial autocorrelation and spatial regression.

For example, overlaying a population density map with a map of air quality monitoring stations allows for correlational analysis to study potential links between population density and air pollution.
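Inverse distance weighting (IDW) is one of the simplest spatial interpolation methods. It estimates a value at an unsampled point as a weighted average of the known samples, with weights proportional to 1/distance^power. The gauge readings below are invented. The power of 2 is a common default but is a tunable assumption.

```python
import math

def idw(samples, x, y, power=2.0):
    """Inverse distance weighted estimate at (x, y) from (sx, sy, value) samples."""
    num = 0.0
    den = 0.0
    for sx, sy, value in samples:
        d = math.hypot(x - sx, y - sy)
        if d == 0.0:
            return value  # exactly at a sample: return the measured value
        w = 1.0 / d ** power
        num += w * value
        den += w
    return num / den

# Hypothetical rain-gauge readings: (x, y, rainfall_mm)
gauges = [(0.0, 0.0, 10.0), (10.0, 0.0, 20.0), (5.0, 8.0, 14.0)]
print(idw(gauges, 0.0, 0.0))            # 10.0 (at a gauge)
print(round(idw(gauges, 5.0, 0.0), 2))  # estimate between the gauges
```

IDW never produces values outside the range of the samples. This makes it predictable, but it cannot reproduce peaks or troughs that lie between measurement points.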

Q 14. Describe your experience with spatial data modeling.

My experience in spatial data modeling spans various approaches, focusing on selecting the most appropriate model for the specific project requirements. I’m adept at using both vector and raster data models and understand the trade-offs between them.

Vector Models: I’ve worked extensively with vector data models representing geographic features as points, lines, and polygons. I’m familiar with different geometric representations (e.g., planar, geographic) and attribute table structures. I understand the importance of topological relationships and how they impact analysis. For example, I’ve worked on projects requiring the creation and maintenance of geodatabases, ensuring data integrity through topological rules and constraints.

Raster Models: My experience includes working with raster data models, representing spatial information as grids or matrices of cells (pixels). This involves understanding different raster formats (e.g., GeoTIFF, ERDAS IMAGINE) and techniques for processing and analyzing raster datasets. I have practical experience with image processing, remote sensing data analysis, and elevation modeling.

Ultimately, I focus on selecting the model best suited to representing the phenomena under study while ensuring data integrity, efficiency, and scalability.

Q 15. How do you ensure data security and access control within a GDI?

Data security and access control are paramount in a GDI. We achieve this through a multi-layered approach, combining technical safeguards with robust administrative policies. Think of it like a castle with multiple defenses.

- Role-Based Access Control (RBAC): This is the foundation. Different users (e.g., data administrators, analysts, public viewers) are assigned specific permissions, limiting their access to only the data they need. For example, a data analyst might have read and write access to specific datasets, while a public user might only have read-only access to a subset of publicly available data.

- Data Encryption: Both data at rest (on servers and storage) and data in transit (during network transmission) should be encrypted with strong, standard protection, such as AES-256 for stored data and TLS for network traffic. Then intercepted or stolen data remains unreadable without the keys.

- Auditing and Logging: Detailed logs track all data access attempts, providing a valuable audit trail for identifying and investigating suspicious activity. This helps to trace back actions and understand how data was utilized.

- Network Security: Firewalls, intrusion detection systems, and regular security assessments are crucial to protect the GDI infrastructure from external threats. It’s like having a moat and guards protecting the castle walls.

- Data Sanitization and Anonymization: Where appropriate, sensitive data should be sanitized or anonymized to protect individual privacy while still allowing for data analysis. This might involve removing personally identifiable information (PII) or using techniques like data masking.

These measures, implemented diligently and reviewed regularly, form a robust security framework for a GDI, minimizing risks and ensuring data integrity and confidentiality.
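The RBAC layer can be sketched as a mapping from roles to allowed actions and dataset classes, with access denied by default. The roles, dataset classes, and permission set below are hypothetical. Real deployments usually enforce this in the database, GIS server, or identity provider rather than in application code.

```python
from enum import Flag, auto

class Permission(Flag):
    READ = auto()
    WRITE = auto()
    ADMIN = auto()

# Hypothetical role definitions and dataset classifications.
ROLE_PERMISSIONS = {
    "public_viewer": Permission.READ,
    "analyst": Permission.READ | Permission.WRITE,
    "data_admin": Permission.READ | Permission.WRITE | Permission.ADMIN,
}
ROLE_DATASETS = {
    "public_viewer": {"public"},
    "analyst": {"public", "internal"},
    "data_admin": {"public", "internal", "restricted"},
}

def is_allowed(role, dataset_class, needed):
    """Grant access only if the role covers both the dataset class and the action."""
    perms = ROLE_PERMISSIONS.get(role, Permission(0))  # unknown role: no permissions
    return dataset_class in ROLE_DATASETS.get(role, set()) and needed in perms

print(is_allowed("public_viewer", "public", Permission.READ))   # True
print(is_allowed("public_viewer", "public", Permission.WRITE))  # False
print(is_allowed("analyst", "restricted", Permission.READ))     # False
print(is_allowed("unknown_role", "public", Permission.READ))    # False: denied by default
```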


Q 16. Explain the importance of data interoperability in a GDI.

Data interoperability is the ability of different systems and software to exchange and use geographic data seamlessly, regardless of their underlying formats or technologies. Imagine trying to build a house with bricks of different sizes and shapes – it would be a chaotic and inefficient process. Similarly, without interoperability, a GDI becomes fragmented and difficult to manage.

- Reduced Data Silos: Interoperability breaks down data silos, allowing different organizations and departments to share and utilize data effectively. This facilitates collaboration and prevents duplicate data entry.

- Enhanced Data Integration: It enables the integration of data from diverse sources, leading to a more comprehensive and accurate understanding of geographic phenomena.

- Improved Efficiency: Data can be reused and repurposed more easily, saving time and resources. No more reinventing the wheel.

- Cost Savings: By avoiding redundant data acquisition and processing, organizations can achieve significant cost savings.

Standardized data formats (like GeoJSON, Shapefile, and GML) and OGC web services (WMS, WFS, WCS) are key to achieving interoperability. Without them, using data from various sources is incredibly time-consuming and expensive, limiting the effectiveness of any GDI.

Q 17. What are some best practices for GDI design and implementation?

Best practices for GDI design and implementation center around creating a robust, scalable, and sustainable system. Think of it as building a strong foundation for a building.

- Clear Data Governance Framework: Establish clear policies and procedures for data acquisition, management, quality control, and access. Who is responsible for what? This prevents confusion and ensures consistent practices.

- Modular Design: Design the GDI in a modular way, enabling future expansion and adaptation without requiring major overhauls. Think of LEGO bricks: you can add and change parts easily.

- Metadata Management: Implement a robust metadata system to effectively document all data aspects – source, accuracy, processing, etc. Think of it as an index for your data, making it easily searchable and understandable.

- Data Quality Control: Establish processes for data validation, verification, and error correction. Regularly check for errors; data quality is key.

- Open Standards and Interoperability: Utilize open standards and technologies (e.g., OGC standards) to maximize data interoperability and system flexibility.

- User-Centric Design: The GDI should be designed with the needs of its users in mind, providing intuitive tools and interfaces for data access and analysis.

- Scalability and Performance: Ensure that the GDI can handle increasing data volumes and user demands. Plan for growth from the outset.

Following these best practices ensures the GDI meets current and future needs, providing a flexible and effective platform for managing and utilizing geographic data.

Q 18. Describe your experience with cloud-based GIS platforms.

My experience with cloud-based GIS platforms is extensive. I’ve worked with the major providers, including Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure. The advantages of cloud-based platforms are significant:

- Scalability: Cloud platforms offer immense scalability, allowing for easy adjustments based on data volumes and user demands. Need more processing power? You get it on demand.

- Cost-Effectiveness: Pay-as-you-go models often prove more cost-effective than maintaining on-premise infrastructure.

- Accessibility: Data and applications are accessible from anywhere with an internet connection, fostering collaboration and efficiency.

- Disaster Recovery: Cloud platforms offer robust disaster recovery capabilities, protecting data from loss or damage.

In a recent project, we migrated a large-scale GDI to AWS, leveraging their services for data storage (S3), processing (EC2), and spatial analysis (using tools like ArcGIS Enterprise on EC2 instances). This resulted in significant improvements in data accessibility and processing speed, with reduced infrastructure costs.

Q 19. How do you handle data versioning and change management in a GDI?

Data versioning and change management are vital for maintaining data integrity and traceability within a GDI. It’s like keeping a detailed history of edits to a document.

- Version Control Systems: Using a version control system (e.g., Git for data files or specialized GIS versioning tools) allows tracking of changes, reverting to previous versions if needed, and understanding who made what changes when.

- Metadata Updates: Whenever data changes, the associated metadata should be updated to reflect the changes. This ensures users are aware of the data’s evolution.

- Workflows and Procedures: Clear workflows should be established for data updates, including review and approval processes to maintain data quality and consistency. Think of it as a formal process for validating changes before they are implemented.

- Change Logs: Maintaining detailed change logs provides a clear audit trail for all data modifications. This helps identify errors and track down the cause of problems.

In practice, we often employ a combination of these methods to manage data changes, ensuring data quality, consistency, and accountability. This allows us to revert to previous versions if necessary and understand the history of our data.
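The versioning and change-log ideas can be sketched together in a small class. It is illustrative only; the class, feature ids, and users are hypothetical. GIS platforms provide their own versioning (for example, enterprise geodatabase versions). This version never overwrites history: a revert is recorded as a new version that copies an old one.

```python
from datetime import datetime, timezone

class VersionedLayer:
    """Keeps every version of each feature plus a change log (illustrative only)."""

    def __init__(self):
        self.history = {}     # feature_id -> list of attribute dicts, oldest first
        self.change_log = []  # (timestamp, user, feature_id, version, note)

    def commit(self, feature_id, attrs, user, note):
        versions = self.history.setdefault(feature_id, [])
        versions.append(dict(attrs))
        self.change_log.append(
            (datetime.now(timezone.utc).isoformat(), user, feature_id, len(versions), note)
        )
        return len(versions)

    def current(self, feature_id):
        return self.history[feature_id][-1]

    def revert(self, feature_id, version, user):
        """Restore an earlier version by committing a copy of it (history is kept)."""
        old = self.history[feature_id][version - 1]
        return self.commit(feature_id, old, user, f"revert to v{version}")

layer = VersionedLayer()
layer.commit("road-17", {"surface": "gravel", "lanes": 2}, "alice", "initial load")
layer.commit("road-17", {"surface": "asphalt", "lanes": 2}, "bob", "resurfaced")
layer.revert("road-17", 1, "carol")
print(layer.current("road-17"))  # {'surface': 'gravel', 'lanes': 2}
for entry in layer.change_log:
    print(entry[1:])             # who changed what, and which version it produced
```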

Q 20. Explain your understanding of spatial statistics.

Spatial statistics involves applying statistical methods to geographically referenced data to identify patterns, relationships, and trends. It’s like using a magnifying glass and statistical tools to examine geographic data to discover interesting relationships.

For example, we might use spatial autocorrelation analysis (like Moran’s I) to determine if nearby locations exhibit similar values (e.g., high crime rates clustered together). Or, we might perform spatial regression to model the relationship between a dependent variable (e.g., house prices) and independent variables (e.g., proximity to schools, crime rates), taking into account the spatial influence of nearby locations. Other common applications include spatial interpolation (estimating values at unsampled locations), point pattern analysis (analyzing the distribution of points in space), and spatial clustering.

My experience includes utilizing spatial statistical techniques in various applications, such as crime mapping, environmental modeling, and public health analysis. I am proficient in using software packages like R, ArcGIS, and GeoDa to perform these analyses.
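Global Moran's I is defined as I = (N / W) · Σᵢ Σⱼ wᵢⱼ zᵢ zⱼ / Σᵢ zᵢ², where zᵢ is each value's deviation from the mean and W is the sum of all weights. The minimal sketch below computes it for a toy example with binary "shares an edge" weights. It omits the significance test (pseudo p-value) that packages such as GeoDa or R report.

```python
def morans_i(values, weights):
    """Global Moran's I for a list of values and a square spatial weights matrix."""
    n = len(values)
    mean = sum(values) / n
    z = [v - mean for v in values]
    w_sum = sum(sum(row) for row in weights)
    cross = sum(weights[i][j] * z[i] * z[j] for i in range(n) for j in range(n))
    variance_term = sum(d * d for d in z)
    return (n / w_sum) * (cross / variance_term)

# Four regions in a row; neighbors share an edge (binary contiguity weights).
chain = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]
print(round(morans_i([1, 1, 0, 0], chain), 4))  # 0.3333: similar values cluster
print(round(morans_i([1, 0, 1, 0], chain), 4))  # -1.0: perfectly alternating pattern
```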

Q 21. Describe your experience with web map services (WMS, WFS, WCS).

OGC web services (WMS, WFS, WCS) are crucial for sharing geographic data and maps across the internet. Think of them as standardized ways of requesting and delivering data.

- WMS (Web Map Service): Provides map images (rasters) in various formats (e.g., PNG, JPEG). It’s like getting a picture of a map; you see it but can’t interact with the underlying data directly.

- WFS (Web Feature Service): Provides vector data features (points, lines, polygons) in formats like GML. You receive the raw data, allowing for more interaction and analysis.

- WCS (Web Coverage Service): Provides raster data (like satellite imagery or elevation models). This allows you to request specific portions of the data and analyze them.

I have extensive experience implementing and using these services. I’ve developed WMS and WFS services for various clients, following OGC (Open Geospatial Consortium) specifications. This allows seamless integration with many GIS applications and ensures interoperability. In one project, we used WMS to publish maps of environmental data and WFS to serve vector data to a mobile application for field workers.
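A WMS request is just an HTTP URL with standard query parameters. This sketch builds a WMS 1.3.0 GetMap request with the standard library. The endpoint, layer name, and bounding box are hypothetical. In WMS 1.3.0, EPSG:4326 uses latitude,longitude axis order in BBOX. That is a frequent source of bugs, and it is why this example uses EPSG:3857.

```python
from urllib.parse import urlencode

def wms_getmap_url(base_url, layer, bbox, width, height, crs="EPSG:3857", fmt="image/png"):
    """Build a WMS 1.3.0 GetMap request URL."""
    params = {
        "SERVICE": "WMS",
        "VERSION": "1.3.0",
        "REQUEST": "GetMap",
        "LAYERS": layer,
        "STYLES": "",  # empty = the server's default style
        "CRS": crs,
        "BBOX": ",".join(str(v) for v in bbox),  # minx,miny,maxx,maxy in CRS units
        "WIDTH": width,
        "HEIGHT": height,
        "FORMAT": fmt,
    }
    return f"{base_url}?{urlencode(params)}"

url = wms_getmap_url(
    "https://example.com/geoserver/wms",  # hypothetical endpoint
    "env:air_quality",                    # hypothetical layer name
    (-13630000, 4540000, -13620000, 4550000),
    width=512,
    height=512,
)
print(url)
```

A WFS GetFeature or WCS GetCoverage request follows the same pattern with different REQUEST values and parameters.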

Q 22. How do you ensure the scalability of a GDI?

Ensuring the scalability of a Geographic Data Infrastructure (GDI) is crucial for its long-term viability. It means the GDI can handle increasing amounts of data, user requests, and functional complexity without significant performance degradation. This requires a multi-pronged approach.

- Database Selection: Choosing a database system designed for spatial data and capable of horizontal scaling (adding more servers) is paramount. Common choices are PostgreSQL/PostGIS, scaled out with read replicas or sharding, and managed cloud databases with spatial support. Consider database sharding to distribute data across multiple servers, for example by geographic region.

- Service-Oriented Architecture (SOA): Implementing an SOA allows different components of the GDI to be developed, deployed, and scaled independently. This modularity makes it easier to handle increased workloads by scaling specific services as needed.

- Caching Strategies: Employing various caching mechanisms at different layers (e.g., database caching, application-level caching, CDN caching) can significantly reduce the load on backend systems, improving response times and scalability. This is like having a readily available supply of frequently-used information close at hand.

- Load Balancing: Distributing incoming requests across multiple servers ensures that no single server becomes overloaded. This involves using load balancers to direct traffic based on factors like server load and availability.

- Cloud-Based Solutions: Cloud platforms such as Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure offer managed databases, storage, and autoscaling tools for GDI components, so resources can be adjusted dynamically based on demand.

For example, a national mapping agency might start with a smaller GDI serving a limited area, but with careful planning, the system can grow to encompass the entire country by scaling its database, adding more servers, and implementing appropriate load balancing strategies.

Q 23. What are some common performance issues encountered in a GDI, and how do you address them?

Performance issues in a GDI can severely impact its usability. Common problems include slow query responses, inadequate data access speeds, and high server latency. Addressing these requires a systematic approach.

- Slow Query Responses: Often caused by inefficient database queries or inadequate indexing. Optimizing queries, adding spatial indexes (like GiST or SP-GiST in PostGIS), and profiling queries with tools such as PostgreSQL’s EXPLAIN ANALYZE can drastically improve performance. We can think of this as improving the route a query takes through the data to find its destination.

- Inadequate Data Access Speeds: This can be due to network bottlenecks, slow storage devices, or inefficient data retrieval methods. Solutions include using high-speed network connections, employing solid-state drives (SSDs) for storage, and optimizing data access patterns.

- High Server Latency: Caused by overloaded servers, network congestion, or inefficient server configurations. Employing load balancing techniques, upgrading server hardware, and optimizing server configurations to reduce overhead can mitigate latency.

- Data Volume: Handling massive datasets efficiently requires data partitioning, tiling, and appropriate data compression techniques. Think of dividing a massive map into manageable smaller tiles.
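To show why spatial indexes speed up queries, here is a minimal Python sketch of a uniform grid index for points. It is a deliberately simplified stand-in: PostGIS GiST indexes use an R-tree-like structure rather than a fixed grid, but the principle is the same, since a bounding-box query only inspects candidates in the cells it overlaps instead of scanning every row. The cell size is an assumption to tune per dataset.

```python
from collections import defaultdict
from typing import DefaultDict, List, Sequence, Tuple

Point = Tuple[float, float]
BBox = Tuple[float, float, float, float]  # (minx, miny, maxx, maxy)
Cell = Tuple[int, int]


class GridIndex:
    """Uniform-grid point index: a simplified stand-in for an R-tree/GiST index."""

    def __init__(self, cell_size: float) -> None:
        self.cell_size = cell_size
        self.points: List[Point] = []
        # Maps each grid cell to the ids of the points that fall inside it.
        self.cells: DefaultDict[Cell, List[int]] = defaultdict(list)

    def _cell(self, x: float, y: float) -> Cell:
        return (int(x // self.cell_size), int(y // self.cell_size))

    def insert(self, pt: Point) -> int:
        """Store a point and return its id."""
        pid = len(self.points)
        self.points.append(pt)
        self.cells[self._cell(*pt)].append(pid)
        return pid

    def query(self, bbox: BBox) -> List[int]:
        """Return ids of points inside bbox, visiting only overlapping cells."""
        minx, miny, maxx, maxy = bbox
        cx0, cy0 = self._cell(minx, miny)
        cx1, cy1 = self._cell(maxx, maxy)
        hits = []
        for cx in range(cx0, cx1 + 1):
            for cy in range(cy0, cy1 + 1):
                # .get avoids creating empty entries for unvisited cells.
                for pid in self.cells.get((cx, cy), []):
                    x, y = self.points[pid]
                    if minx <= x <= maxx and miny <= y <= maxy:
                        hits.append(pid)
        return sorted(hits)


def brute_force(points: Sequence[Point], bbox: BBox) -> List[int]:
    """Full scan for comparison: tests every point, like a sequential scan."""
    minx, miny, maxx, maxy = bbox
    return [i for i, (x, y) in enumerate(points)
            if minx <= x <= maxx and miny <= y <= maxy]
```

Both functions return the same ids, but the index touches only the few cells under the query box. In PostGIS the corresponding step is a single statement such as `CREATE INDEX roads_geom_idx ON roads USING GIST (geom);` (the table and column names here are hypothetical).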

For instance, I once worked on a project where slow query responses were a major problem. Through query optimization and the addition of spatial indexes, we reduced query execution time by over 80%, resulting in a far more responsive application.

Q 24. Explain your experience with different map projections.

Map projections are crucial for representing the three-dimensional Earth on a two-dimensional map. Each projection distorts certain aspects of the Earth (like area, shape, distance, or direction) while preserving others. My experience includes working with various projections, including:

- Mercator Projection: Preserves angles (it is conformal), making it suitable for navigation, but it significantly exaggerates area toward the poles. Its spherical variant, Web Mercator (EPSG:3857), is the standard projection for most web maps.

- Lambert Conformal Conic Projection: A conformal projection that keeps distortion low across mid-latitude regions with a large east-west extent. It’s often used for aeronautical charts and topographic maps.

- Albers Equal-Area Conic Projection: Preserves area, making it suitable for applications where accurate area measurement matters, such as land-cover statistics or comparing the sizes of administrative regions.

- UTM (Universal Transverse Mercator): Divides the Earth into 60 zones, each 6° of longitude wide, and applies a transverse Mercator projection within each zone to minimize distortion. It’s widely used for large-scale mapping because distortion stays small within a zone and coordinates are in metres.

- WGS 84: Not a projection but a geodetic datum and geographic coordinate system (latitude/longitude, EPSG:4326). It is the reference system used by GPS and the basis of many projected coordinate systems, including Web Mercator and the WGS 84 / UTM zones.
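The area distortion of the Mercator projection can be quantified. Below is a minimal Python sketch of the spherical Web Mercator (EPSG:3857) forward formulas, plus the area scale factor of spherical Mercator, sec²φ. It ignores the ellipsoidal form of Mercator and is meant only as an illustration, not a replacement for a projection library such as PROJ.

```python
import math
from typing import Tuple

# Sphere radius used by Web Mercator (EPSG:3857), in metres.
R = 6378137.0


def to_web_mercator(lon_deg: float, lat_deg: float) -> Tuple[float, float]:
    """Project WGS 84 longitude/latitude in degrees to Web Mercator x/y in metres."""
    x = R * math.radians(lon_deg)
    y = R * math.log(math.tan(math.pi / 4 + math.radians(lat_deg) / 2))
    return x, y


def mercator_area_scale(lat_deg: float) -> float:
    """Area exaggeration of spherical Mercator relative to the equator: sec^2(lat)."""
    return 1.0 / math.cos(math.radians(lat_deg)) ** 2
```

For example, `mercator_area_scale(60)` is about 4: a region at 60° latitude is drawn roughly four times larger in area than a region of the same size at the equator, which is why Mercator is a poor choice for comparing areas.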

Selecting the right projection is vital. Choosing an unsuitable projection can lead to inaccurate measurements and misinterpretations of spatial data. My experience involves making informed decisions based on the specific application and desired accuracy requirements.
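One routine part of that decision is picking a local projected coordinate system for distance measurements. As a minimal sketch, assuming standard 6° UTM zones on the WGS 84 datum, this Python function returns the EPSG code of the UTM zone containing a point. It ignores the irregular zones around Norway and Svalbard, so treat it as an approximation.

```python
import math


def utm_epsg(lon_deg: float, lat_deg: float) -> int:
    """Return the EPSG code of the WGS 84 / UTM zone containing a point.

    Uses the regular 6-degree zones; the special zone boundaries around
    Norway and Svalbard are deliberately ignored.
    """
    if not -80.0 <= lat_deg <= 84.0:
        raise ValueError("UTM is defined only between 80°S and 84°N")
    # Zones are numbered 1..60 eastward from 180°W; lon = 180 is clamped to zone 60.
    zone = min(int(math.floor((lon_deg + 180.0) / 6.0)) + 1, 60)
    # EPSG 326xx = northern hemisphere, 327xx = southern hemisphere.
    return (32600 if lat_deg >= 0 else 32700) + zone
```

For a point in London (about 0.13°W, 51.5°N) this gives 32630 (UTM zone 30N). Note that UTM is conformal rather than equal-area, so for area statistics an equal-area projection such as Albers may still be the better choice.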

Q 25. What is your experience with open-source geospatial tools and libraries?

I have extensive experience with various open-source geospatial tools and libraries, including:

- QGIS: A powerful and versatile desktop GIS application used for data visualization, analysis, and management. I have used it extensively for tasks like map creation, data processing, and geospatial analysis.

- PostgreSQL/PostGIS: A robust open-source spatial database system that forms the backbone of many GDIs. My expertise includes designing and optimizing spatial databases, creating and managing spatial indexes, and writing SQL queries for geospatial data analysis.

- GDAL/OGR: A collection of powerful command-line tools and libraries for reading, writing, and translating a wide variety of geospatial data formats. I regularly use these for data conversion and manipulation.

- Python libraries (GeoPandas, Shapely, Fiona): Python provides a very flexible environment for geospatial programming. These libraries allow me to conduct complex spatial analysis, automate geoprocessing tasks, and create custom geospatial applications. For example, `import geopandas as gpd` is a common starting point for many of my Python geospatial projects.

- Leaflet and OpenLayers: I’m proficient in using these JavaScript libraries to create interactive web maps. This enables me to present data in dynamic and visually engaging ways.
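GDAL/OGR’s command-line tools are easy to drive from Python for batch conversion. The sketch below builds `ogr2ogr` calls that convert every shapefile in a folder to GeoPackage, reprojected to EPSG:4326 (`-f` selects the output driver and `-t_srs` the target CRS). The folder layout and the target CRS are assumptions, and by default it only returns the commands (a dry run) instead of executing them.

```python
import shutil
import subprocess
from pathlib import Path
from typing import List


def ogr2ogr_command(src: Path, dst: Path, fmt: str = "GPKG",
                    t_srs: str = "EPSG:4326") -> List[str]:
    """Build an ogr2ogr call converting src to fmt, reprojected to t_srs.

    Note that ogr2ogr takes the destination before the source.
    """
    return ["ogr2ogr", "-f", fmt, "-t_srs", t_srs, str(dst), str(src)]


def convert_folder(folder: Path, out_dir: Path, dry_run: bool = True) -> List[List[str]]:
    """Convert every shapefile in folder to a GeoPackage in out_dir."""
    commands = [
        ogr2ogr_command(shp, out_dir / (shp.stem + ".gpkg"))
        for shp in sorted(folder.glob("*.shp"))
    ]
    if not dry_run:
        if shutil.which("ogr2ogr") is None:
            raise RuntimeError("ogr2ogr (GDAL) is not on PATH")
        out_dir.mkdir(parents=True, exist_ok=True)
        for cmd in commands:
            # check=True stops the batch on the first failed conversion.
            subprocess.run(cmd, check=True)
    return commands
```

Reviewing the dry-run output first, then calling `convert_folder(Path("incoming"), Path("converted"), dry_run=False)` (hypothetical folder names), keeps large batch conversions predictable.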

The open-source nature of these tools has allowed me to develop cost-effective and flexible solutions for a variety of geospatial projects. The collaborative aspect of open source has also proved invaluable in solving problems and learning best practices.

Q 26. How do you ensure the long-term sustainability of a GDI?

The long-term sustainability of a GDI requires a holistic approach that addresses technical, organizational, and financial aspects.

- Data Governance: Establishing clear data standards, metadata schemas, and data quality control procedures ensures data consistency and accuracy over time. This is the cornerstone of long-term data usability.

- Technology Choices: Selecting open standards and well-documented, well-supported technologies helps avoid vendor lock-in, while regularly upgrading software and hardware reduces the risk of obsolescence. Choosing open-source solutions can also significantly improve long-term cost-effectiveness.

- Archiving and Backup: Implementing robust data archiving and backup strategies protects valuable spatial data from loss or corruption. Regular backups and secure offsite storage are crucial. We don’t want to lose years of hard work due to a single technical failure.

- Staff Training and Knowledge Transfer: Investing in training and knowledge transfer ensures that institutional expertise is maintained and not lost due to staff turnover. Documenting procedures and best practices is also essential.

- Community Engagement: Engaging with stakeholders and fostering collaboration within the geospatial community improves the sustainability of a GDI. It ensures that the infrastructure meets the evolving needs of users.

- Financial Planning: Securing ongoing funding for maintenance, upgrades, and expansion is vital for long-term sustainability. Funding strategies can include securing government grants and seeking collaborative partnerships.
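The data-governance point is where automation pays off most over the long term, because quality rules that run on every dataset keep standards from eroding as staff change. Here is a minimal Python sketch of one such rule, a metadata completeness check, assuming a hypothetical set of required fields and a house rule that coordinate reference systems are recorded as EPSG codes.

```python
from typing import List, Mapping, Optional

# Hypothetical minimal profile; a real GDI would enforce the metadata profile
# it has adopted (for example one based on ISO 19115).
REQUIRED_FIELDS = ("title", "abstract", "crs", "lineage", "date", "contact")


def metadata_problems(record: Mapping[str, Optional[str]]) -> List[str]:
    """Return human-readable problems found in one metadata record."""
    problems = []
    for field in REQUIRED_FIELDS:
        value = record.get(field)
        if value is None or not str(value).strip():
            problems.append(f"missing {field}")
    crs = str(record.get("crs") or "").strip()
    # Assumed house rule: coordinate reference systems are given as EPSG codes.
    if crs and not crs.upper().startswith("EPSG:"):
        problems.append(f"crs '{crs}' is not an EPSG code")
    return problems
```

Run over every record in the catalogue, for example as a scheduled job, a check like this turns a written governance policy into something that can be measured and reported.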

Ignoring any of these aspects can lead to a GDI becoming outdated, unusable, or unsustainable. Proactive planning and management are essential for a long-lasting, effective GDI.

Q 27. Describe a challenging project you worked on involving a GDI, and how you overcame the challenges.

One challenging project involved migrating a legacy GDI to a cloud-based platform. The legacy system was based on outdated software and hardware, and the data was poorly structured. The challenges included:

- Data Migration: Translating data from the legacy system to a new, cloud-based environment required careful planning and meticulous data cleaning. Inconsistent data formats and missing metadata presented significant obstacles. We tackled this using a phased approach, migrating data in chunks and rigorously validating each step.

- Software Integration: Integrating the new system with existing applications and workflows required extensive testing and custom development. We employed a modular approach, building and testing individual components before integrating them into the complete system.

- Performance Optimization: The initial cloud deployment suffered from performance bottlenecks. Careful monitoring and analysis showed where they were, and we addressed them by optimizing database queries, implementing caching mechanisms, and scaling the cloud infrastructure.

- User Training: Training users on the new system was essential. We provided comprehensive training materials and hands-on support to ensure a smooth transition, and user feedback played a key role throughout the process.
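The phased data migration described in this answer can be sketched as a loop that copies one chunk at a time and validates it before continuing. This minimal Python version assumes hypothetical `write_chunk` and `read_chunk` hooks for the target system and simplified (id, WKT) records; it checks both the row count and an order-independent SHA-256 checksum of each chunk.

```python
import hashlib
from typing import Callable, Iterator, List, Sequence, Tuple

Row = Tuple[int, str]  # simplified record: (feature id, geometry as WKT)


def chunks(rows: Sequence[Row], size: int) -> Iterator[Sequence[Row]]:
    """Yield consecutive slices of at most `size` rows."""
    for start in range(0, len(rows), size):
        yield rows[start:start + size]


def checksum(rows: Sequence[Row]) -> str:
    """Order-independent SHA-256 fingerprint of a batch of rows."""
    digest = hashlib.sha256()
    for fid, wkt in sorted(rows):
        digest.update(f"{fid}|{wkt}\n".encode())
    return digest.hexdigest()


def migrate(
    rows: Sequence[Row],
    write_chunk: Callable[[Sequence[Row]], None],
    read_chunk: Callable[[List[int]], Sequence[Row]],
    size: int = 1000,
) -> int:
    """Copy rows chunk by chunk, validating each chunk before continuing.

    write_chunk and read_chunk are hypothetical hooks for the target system.
    Returns the number of rows migrated; raises ValueError on the first
    chunk whose row count or checksum does not match the source.
    """
    migrated = 0
    for batch in chunks(rows, size):
        write_chunk(batch)
        copied = read_chunk([fid for fid, _ in batch])
        if len(copied) != len(batch) or checksum(copied) != checksum(batch):
            raise ValueError(f"validation failed for chunk starting at row {migrated}")
        migrated += len(batch)
    return migrated
```

Stopping at the first failing chunk keeps a problem contained to a known range of rows, which makes it easier to fix the source data and resume from that point.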

We overcame these challenges by employing an iterative approach, using agile methodologies, and closely collaborating with stakeholders. Thorough planning, meticulous execution, and continuous monitoring were crucial in ensuring a successful migration. The result was a more scalable, reliable, and user-friendly GDI capable of handling significantly increased data volumes and user requests.

Key Topics to Learn for Geographic Data Infrastructure (GDI) Interview

- Data Models and Standards: Understanding spatial data models (vector, raster, TIN), common data formats (Shapefile, GeoTIFF, GeoJSON), and relevant standards (OGC, ISO 19100 series) is crucial. Consider the strengths and weaknesses of each model and format in various applications.

- Spatial Databases and Data Management: Explore the functionalities of spatial databases (PostGIS, Oracle Spatial, enterprise geodatabases in ArcGIS Enterprise), including data storage, querying (SQL with spatial extensions), and data indexing for efficient retrieval. Practice designing and implementing efficient spatial database schemas.

- Geospatial Data Processing and Analysis: Familiarize yourself with common geoprocessing tasks like spatial joins, buffering, overlay analysis, and interpolation. Understand the underlying algorithms and their implications for accuracy and efficiency. Consider exploring different software platforms for these tasks.

- Web Mapping and GIS Services: Gain a strong understanding of web map services (WMS, WFS, WMTS), REST APIs, and their role in accessing and distributing geospatial data. Explore the architecture of web mapping applications and the technologies used to build them (e.g., Leaflet, OpenLayers).

- Geospatial Metadata and Data Quality: Learn about the importance of metadata for data discovery, understanding and interoperability. Understand the concepts of data quality, accuracy, precision, and completeness, and how these relate to data validation and assessment.

- Cloud-Based GIS and Big Data: Explore cloud platforms (AWS, Azure, Google Cloud) and their offerings for geospatial data storage, processing, and analysis. Understand the challenges and opportunities presented by handling large geospatial datasets.

- Geoprocessing and Automation: Master scripting languages (Python with libraries like GDAL/OGR) to automate geoprocessing workflows and improve efficiency. This demonstrates valuable problem-solving skills.
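For the spatial join topic, it helps to know what a point-in-polygon join does under the hood. Here is a minimal pure-Python sketch using the even-odd ray-casting test, assuming simple polygons without holes and ignoring points that lie exactly on an edge. Libraries such as Shapely and spatial databases handle those cases robustly and use spatial indexes to avoid this naive all-pairs loop.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]
Polygon = Sequence[Point]  # exterior ring as a list of vertices, no holes


def point_in_polygon(pt: Point, poly: Polygon) -> bool:
    """Even-odd ray casting: count edge crossings of a ray going right from pt."""
    x, y = pt
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]  # wrap around to close the ring
        # Does this edge straddle the horizontal line through pt?
        if (y1 > y) != (y2 > y):
            # x coordinate where the edge crosses that line (y1 != y2 here).
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def spatial_join(points: Dict[str, Point],
                 zones: Dict[str, Polygon]) -> Dict[str, List[str]]:
    """Assign each point id the ids of all zones containing it (naive O(n*m) join)."""
    return {
        pid: [zid for zid, poly in zones.items() if point_in_polygon(pt, poly)]
        for pid, pt in points.items()
    }
```

An odd number of crossings means the point is inside, which is why the test also works for concave shapes such as an L-shaped parcel.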

Next Steps

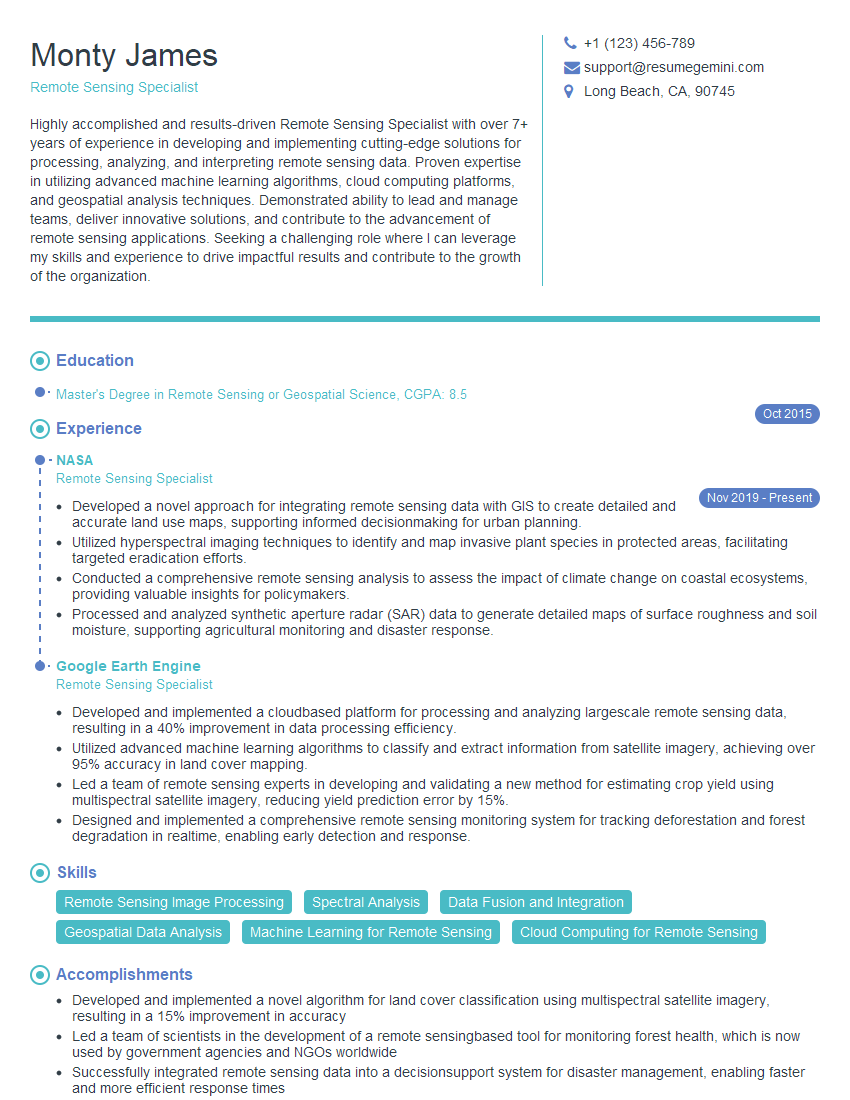

Mastering Geographic Data Infrastructure (GDI) opens doors to exciting and impactful careers in various sectors. A strong understanding of these technologies is highly sought after, leading to greater career advancement opportunities and higher earning potential. To maximize your job prospects, create an ATS-friendly resume that effectively showcases your skills and experience. ResumeGemini is a trusted resource to help you build a professional and impactful resume. Examples of resumes tailored to Geographic Data Infrastructure (GDI) roles are available to help you craft your perfect application.
