The thought of an interview can be nerve-wracking, but the right preparation can make all the difference. Explore this comprehensive guide to Knowledge of Emerging Technologies interview questions and gain the confidence you need to showcase your abilities and secure the role.

Questions Asked in Knowledge of Emerging Technologies Interview

Q 1. Explain the difference between supervised and unsupervised machine learning.

Supervised and unsupervised machine learning differ fundamentally in how they’re trained and what they accomplish. Think of it like teaching a child: supervised learning is like showing them labeled examples (‘This is a cat, this is a dog’), while unsupervised learning is like giving them a box of toys and letting them figure out the categories themselves.

Supervised learning uses labeled datasets. Each data point is tagged with the correct answer. The algorithm learns to map inputs to outputs based on these examples. Common applications include image classification (identifying objects in images), spam detection (classifying emails as spam or not spam), and medical diagnosis (predicting diseases based on patient data). For example, a supervised learning model for image recognition might be trained on thousands of images of cats and dogs, each labeled accordingly. The model learns the features that distinguish cats from dogs and can then classify new, unseen images.

Unsupervised learning, on the other hand, works with unlabeled data. The algorithm identifies patterns, structures, and relationships in the data without explicit guidance. Common applications include clustering (grouping similar data points together), anomaly detection (identifying unusual data points), and dimensionality reduction (simplifying data by reducing the number of variables). For example, a customer segmentation model might use unsupervised learning to group customers with similar purchasing behaviors into distinct segments, enabling targeted marketing campaigns.

In short: supervised learning learns from labeled examples, while unsupervised learning explores data to find inherent structures.
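
The contrast can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the animal weights are made-up toy data, and the helper names (`train_nearest_centroid`, `predict`, `two_means`) are hypothetical. The supervised part uses the labels to learn one centroid per class; the unsupervised part sees only the numbers and groups them with a small k-means loop.

```python
from statistics import mean


def train_nearest_centroid(points, labels):
    """Supervised: use the labels to compute one centroid per class."""
    by_label = {}
    for x, y in zip(points, labels):
        by_label.setdefault(y, []).append(x)
    return {label: mean(xs) for label, xs in by_label.items()}


def predict(centroids, x):
    """Assign x to the class whose centroid is closest."""
    return min(centroids, key=lambda label: abs(centroids[label] - x))


def two_means(points, iterations=10):
    """Unsupervised: split unlabeled 1-D points into two clusters (k-means, k=2)."""
    centers = [min(points), max(points)]  # simple deterministic starting centers
    clusters = [[], []]
    for _ in range(iterations):
        clusters = [[], []]
        for x in points:
            nearest = 0 if abs(x - centers[0]) <= abs(x - centers[1]) else 1
            clusters[nearest].append(x)
        # Keep the old center if a cluster happens to be empty.
        centers = [mean(c) if c else centers[i] for i, c in enumerate(clusters)]
    return clusters


# Hypothetical toy data: animal weights in kilograms.
weights = [3.5, 4.0, 4.2, 20.0, 25.0, 30.0]
labels = ["cat", "cat", "cat", "dog", "dog", "dog"]

# Supervised: labels are available during training.
centroids = train_nearest_centroid(weights, labels)
prediction = predict(centroids, 5.0)

# Unsupervised: only the raw numbers are available.
clusters = two_means(weights)
```

Note that the clustering step recovers two groups but cannot name them; attaching the words "cat" and "dog" requires labels, which is exactly what supervised learning adds.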

Q 2. Describe the concept of blockchain and its potential applications beyond cryptocurrency.

Blockchain is a decentralized, distributed ledger technology designed to be effectively immutable. Imagine a digital record book that’s shared across many computers. Transactions are grouped into ‘blocks,’ and each block is linked cryptographically to the previous one by including its hash, forming a chain. This makes the ledger tamper-evident and transparent: changing one block changes its hash, so every subsequent block would have to be recomputed and then accepted by most of the network, which is impractical on a large network.
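
The linking of blocks can be illustrated with a short Python sketch. It is a simplified, single-machine model, not a real blockchain: there is no network, consensus, or signing, and the block layout and function names (`block_hash`, `build_chain`, `is_valid`) are assumptions made for the example. It only shows why editing an earlier block is detectable.

```python
import hashlib
import json


def block_hash(index, data, previous_hash):
    """Hash the block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "previous_hash": previous_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records):
    """Create a list of blocks, each pointing at the hash of its predecessor."""
    chain = []
    previous_hash = "0" * 64  # placeholder hash for the first block
    for index, data in enumerate(records):
        current_hash = block_hash(index, data, previous_hash)
        chain.append(
            {
                "index": index,
                "data": data,
                "previous_hash": previous_hash,
                "hash": current_hash,
            }
        )
        previous_hash = current_hash
    return chain


def is_valid(chain):
    """Recompute every hash and check that each link matches."""
    previous_hash = "0" * 64
    for block in chain:
        if block["previous_hash"] != previous_hash:
            return False
        expected = block_hash(block["index"], block["data"], block["previous_hash"])
        if block["hash"] != expected:
            return False
        previous_hash = block["hash"]
    return True


chain = build_chain(["Alice pays Bob 5", "Bob pays Carol 2", "Carol pays Dave 1"])
valid_before = is_valid(chain)

# Tamper with the middle block without recomputing any hashes.
chain[1]["data"] = "Bob pays Carol 200"
valid_after = is_valid(chain)
```

After the edit, the stored hash of the middle block no longer matches its contents, so validation fails. An attacker would have to recompute that block and every later one, and on a real network also persuade the other participants to accept the rewritten chain.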

While initially known for its role in cryptocurrencies like Bitcoin, blockchain’s potential extends far beyond. Consider these applications:

- Supply chain management: Tracking goods from origin to consumer, ensuring authenticity and preventing counterfeiting.

- Healthcare: Securely storing and sharing patient medical records, improving data privacy and interoperability.

- Voting systems: Creating a transparent and tamper-proof voting system to enhance election integrity.

- Digital identity: Managing and verifying digital identities securely, reducing fraud and identity theft.

- Intellectual property management: Recording and verifying ownership of intellectual property, preventing infringement.

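The key advantages of blockchain in these applications are tamper resistance, transparency, and shared trust. Because no single party controls the ledger, it reduces reliance on a central authority and with it the risk of unilateral manipulation, although the applications built on top of it still need their own security controls.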

Q 3. What are the key security challenges associated with IoT devices?

The Internet of Things (IoT) presents unique security challenges due to the vast number of interconnected devices, many of which have limited processing power and security features. Key challenges include:

- Lack of robust security protocols: Many IoT devices lack strong encryption, authentication, and authorization mechanisms, making them vulnerable to attacks.

- Software vulnerabilities: Outdated or poorly written software can contain vulnerabilities that attackers can exploit to gain access to the device and its data.

- Data breaches: IoT devices often collect sensitive data, and a breach can expose this data to unauthorized access.

- Denial-of-service attacks: Overwhelming an IoT device or network with traffic can render it unusable.

- Device hijacking: Attackers can gain control of IoT devices, turning them into part of a botnet for malicious purposes (like distributed denial-of-service attacks).

- Lack of standardization: The lack of consistent security standards across IoT devices makes it difficult to ensure a uniform level of protection.

Addressing these challenges requires a multi-faceted approach including strong encryption, regular software updates, robust authentication mechanisms, and industry-wide adoption of security standards.

Q 4. How does cloud computing enhance data security and scalability?

Cloud computing significantly enhances data security and scalability in several ways:

- Enhanced Security Measures: Cloud providers invest heavily in robust security infrastructure, including firewalls, intrusion detection systems, and data encryption. This often surpasses the capabilities of individual organizations. They also employ expertise in security management and threat detection.

- Data Redundancy and Backup: Cloud platforms typically replicate data across multiple data centers, ensuring data availability and resilience in case of hardware failures or disasters. This redundancy provides a crucial layer of protection against data loss.

- Access Control and Authentication: Cloud services provide granular access control mechanisms, allowing organizations to manage who can access their data and what actions they can perform. Strong authentication protocols further enhance security.

- Scalability and Elasticity: Cloud computing enables organizations to easily scale their resources up or down based on demand. This flexibility avoids the need to over-provision resources, optimizing costs and reducing potential security risks associated with managing excess capacity.

- Compliance and Regulations: Cloud providers often comply with various industry regulations and standards, such as HIPAA or GDPR, simplifying compliance for their customers.

For example, a healthcare provider using a cloud platform can leverage its robust security measures to comply with HIPAA regulations while benefiting from scalability to handle fluctuating patient data volumes.

Q 5. Discuss the ethical implications of artificial intelligence.

The ethical implications of artificial intelligence are profound and multifaceted. As AI systems become increasingly sophisticated, concerns arise regarding:

- Bias and Discrimination: AI models trained on biased data can perpetuate and amplify existing societal biases, leading to unfair or discriminatory outcomes. For example, a facial recognition system trained primarily on images of white faces might perform poorly on people of color.

- Privacy and Surveillance: AI-powered surveillance technologies raise concerns about privacy violations and the potential for mass surveillance. Data collection and analysis for AI models need careful ethical consideration.

- Job Displacement: Automation driven by AI could lead to significant job displacement across various sectors, requiring strategies for retraining and adaptation.

- Accountability and Transparency: Determining accountability when AI systems make mistakes or cause harm can be challenging. Transparency in AI algorithms and decision-making processes is crucial.

- Autonomous Weapons Systems: The development of lethal autonomous weapons systems raises serious ethical questions about human control and the potential for unintended consequences.

Addressing these ethical concerns requires a multi-stakeholder approach involving researchers, developers, policymakers, and the public. Developing ethical guidelines, regulations, and standards for AI is crucial to ensure its responsible development and deployment.

Q 6. Explain the role of DevOps in the deployment and maintenance of cloud applications.

DevOps plays a crucial role in streamlining the deployment and maintenance of cloud applications. It’s a set of practices, tools, and a cultural philosophy that emphasizes collaboration and automation between development and operations teams.

In the context of cloud applications, DevOps enables:

- Faster Deployment Cycles: Automation of tasks like code deployment, testing, and infrastructure provisioning significantly speeds up the release process.

- Improved Collaboration: Breaking down silos between development and operations teams fosters better communication and shared responsibility.

- Continuous Integration and Continuous Delivery (CI/CD): DevOps utilizes CI/CD pipelines to automate the building, testing, and deployment of code changes, ensuring frequent and reliable releases.

- Infrastructure as Code (IaC): IaC allows for managing and provisioning infrastructure (servers, networks, etc.) through code, making it easier to replicate and manage environments across different cloud platforms.

- Monitoring and Logging: DevOps emphasizes continuous monitoring of applications and infrastructure to identify and resolve issues quickly. Centralized logging provides valuable insights into application performance and security.

By using tools like Docker, Kubernetes, and cloud-based CI/CD platforms, DevOps teams can automate the entire lifecycle of cloud applications, increasing efficiency, reducing downtime, and improving overall application quality.

Q 7. What are the key characteristics of big data and how is it analyzed?

Big data is characterized by its volume, velocity, variety, veracity, and value (often remembered as the five Vs). Let’s break down each aspect:

- Volume: The sheer amount of data is massive, often exceeding the capacity of traditional data processing tools.

- Velocity: Data arrives at a high speed and needs to be processed quickly to extract insights in a timely manner.

- Variety: Data comes in diverse formats, including structured data (like databases), semi-structured data (like JSON), and unstructured data (like text and images).

- Veracity: Data quality can vary, and ensuring data accuracy and reliability is crucial for reliable analysis.

- Value: The ultimate goal is to extract valuable insights from the data to support better decision-making.

Analyzing big data requires specialized tools and techniques. Common approaches include:

- Hadoop: A distributed storage and processing framework for handling massive datasets.

- Spark: A fast, in-memory data processing engine for large-scale data analysis.

- NoSQL databases: Databases designed to handle large volumes of unstructured or semi-structured data.

- Machine learning algorithms: Algorithms used to identify patterns, make predictions, and extract insights from large datasets.

For example, a social media company might analyze big data from user posts and interactions to understand trends, personalize content recommendations, and improve its services.
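
As a rough illustration of the processing model popularized by Hadoop (MapReduce), the sketch below counts words across a few posts in three phases: map, shuffle, and reduce. It runs in a single Python process, so it only imitates the structure of a distributed job; the sample posts and function names are invented for the example.

```python
from collections import defaultdict


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle_phase(pairs):
    """Shuffle: group all emitted values by key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Reduce: combine the values for each key into a single result."""
    return {key: sum(values) for key, values in grouped.items()}


# Hypothetical input; in a real cluster each document could live on a different node.
posts = ["big data is big", "data arrives fast", "fast data"]

mapped = [pair for post in posts for pair in map_phase(post)]
word_counts = reduce_phase(shuffle_phase(mapped))
```

In a real framework the map and reduce functions run in parallel on many machines and the shuffle moves data across the network, which is where most of the engineering effort goes.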

Q 8. Describe different types of neural networks and their applications.

Neural networks are computing systems inspired by the biological neural networks that constitute animal brains. They are a subset of machine learning and are at the heart of many AI applications. Different types exist, each suited to specific tasks:

- Feedforward Neural Networks (FNNs): The simplest type. Information flows in one direction, from input to output, without loops. Think of it like a one-way street. Used for tasks like classifying simple or tabular data and basic pattern recognition.

- Convolutional Neural Networks (CNNs): Excellent for processing grid-like data like images and videos. They use convolutional layers to identify features at different levels of abstraction. Imagine zooming in on a picture to identify smaller and smaller details—that’s what CNNs do. Applications include image recognition, object detection, and medical image analysis.

- Recurrent Neural Networks (RNNs): Designed to handle sequential data like text and time series. They have loops, allowing information to persist and influence future outputs. Think of it like remembering previous words in a sentence to understand the meaning of the next one. Used in natural language processing (NLP), machine translation, and speech recognition.

- Long Short-Term Memory Networks (LSTMs): A special type of RNN designed to address the vanishing gradient problem (difficulty in learning long-term dependencies) in standard RNNs. LSTMs are particularly effective in handling long sequences of data. Applications include language modeling, machine translation, and sentiment analysis.

- Generative Adversarial Networks (GANs): Consist of two networks: a generator that creates data and a discriminator that tries to distinguish between real and generated data. They are used to generate new data instances, similar to the original data. Examples include generating realistic images, creating new music, and even designing new drugs.

Each type has its strengths and weaknesses, and the choice depends entirely on the specific application and the nature of the data.
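
To make the feedforward idea concrete, here is a minimal sketch of a forward pass through a two-layer network that computes XOR. The weights are chosen by hand for illustration rather than learned through training, and the step activation and names (`dense`, `xor_network`) are assumptions of the example, not part of any library.

```python
def step(x):
    """Step activation: fire (1) when the weighted sum is positive."""
    return 1 if x > 0 else 0


def dense(inputs, weights, biases, activation):
    """One fully connected layer: weighted sum plus bias, then activation."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hand-picked (not trained) parameters.
HIDDEN_W = [[1, 1], [1, 1]]
HIDDEN_B = [-0.5, -1.5]  # hidden neuron 0 acts like OR, neuron 1 like AND
OUT_W = [[1, -1]]
OUT_B = [-0.5]  # output fires for "OR but not AND", which is XOR


def xor_network(a, b):
    """Information flows strictly input -> hidden -> output, with no loops."""
    hidden = dense([a, b], HIDDEN_W, HIDDEN_B, step)
    return dense(hidden, OUT_W, OUT_B, step)[0]


truth_table = {(a, b): xor_network(a, b) for a in (0, 1) for b in (0, 1)}
```

In practice the weights are learned from data with gradient-based training and smooth activation functions; the other architectures in the list add structure (convolutions, recurrence, gating, or a second competing network) on top of this same layer-by-layer computation.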

Q 9. Explain the concept of quantum computing and its potential impact.

Quantum computing leverages the principles of quantum mechanics to perform computations. Unlike classical computers that store information as bits (0 or 1), quantum computers use qubits. A qubit can exist in a superposition, a weighted combination of 0 and 1 that resolves to a single value only when it is measured. Quantum algorithms combine superposition with entanglement and interference so that wrong answers tend to cancel out and correct ones are reinforced, which can make certain problems tractable that are out of reach for even the most powerful classical computers.
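
Superposition can be illustrated by simulating a single qubit classically. The sketch below represents the qubit as a pair of amplitudes and applies a Hadamard gate; it is a toy simulation on an ordinary computer under simplifying assumptions (real amplitudes only, one qubit), and the function names are chosen for the example.

```python
import math


def hadamard(state):
    """Apply the Hadamard gate to a single-qubit state (amp_0, amp_1)."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def probabilities(state):
    """Measurement probabilities are the squared magnitudes of the amplitudes."""
    a, b = state
    return (abs(a) ** 2, abs(b) ** 2)


zero = (1.0, 0.0)  # the qubit starts in the definite state 0

# One Hadamard gate puts the qubit into an equal superposition of 0 and 1.
superposed = hadamard(zero)
p0, p1 = probabilities(superposed)  # both are approximately 0.5

# A second Hadamard makes the amplitudes interfere and returns the qubit to 0.
back = hadamard(superposed)
```

The second step is the important one: the amplitudes for 1 cancel out, which a coin flip could never do. Simulating n qubits this way needs 2^n amplitudes, which is why classical simulation quickly becomes infeasible.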

Potential Impact:

- Drug Discovery and Materials Science: Simulating molecular interactions to design new drugs and materials more efficiently.

- Financial Modeling: Developing more accurate and sophisticated financial models for risk management and portfolio optimization.

- Cryptography: Breaking current encryption methods and developing new, quantum-resistant algorithms.

- Optimization Problems: Solving complex optimization problems across various domains, like logistics and supply chain management.

- Artificial Intelligence: Accelerating machine learning algorithms and developing more powerful AI systems.

While still in its early stages, quantum computing holds immense potential to revolutionize various fields.

Q 10. What are the benefits and drawbacks of using serverless computing?

Serverless computing is a cloud computing execution model where the cloud provider dynamically manages the allocation of computing resources. You only pay for the actual compute time your code consumes, eliminating the need to manage servers.

Benefits:

- Cost-effectiveness: Pay only for the compute time used.

- Scalability: Automatically scales to handle fluctuating demand.

- Increased developer productivity: Focus on code, not infrastructure.

- Improved operational efficiency: Reduced operational overhead and management.

Drawbacks:

- Vendor lock-in: Migrating away from a provider can be challenging.

- Cold starts: Initial execution can be slower due to resource provisioning.

- Debugging complexities: Debugging can be more challenging due to the distributed nature of the environment.

- Limited control: Less control over the underlying infrastructure.

Choosing serverless depends on the application’s characteristics and your priorities. It’s ideal for event-driven applications and microservices, but might not be suitable for applications requiring consistent high performance or precise resource control.

Q 11. How can AI be used to improve customer service?

AI is transforming customer service by automating tasks, personalizing interactions, and providing 24/7 availability.

- Chatbots: AI-powered chatbots handle routine inquiries, freeing up human agents to focus on more complex issues. They can provide instant support, answer FAQs, and guide users through processes.

- Sentiment Analysis: Analyzing customer feedback from surveys, reviews, and social media to identify areas for improvement and gain insights into customer satisfaction.

- Personalized Recommendations: AI algorithms analyze customer data to offer personalized product recommendations and targeted marketing campaigns.

- Predictive Support: Predicting potential customer issues before they arise, allowing proactive intervention and preventing escalations.

- Automated Call Routing: Directing calls to the most appropriate agent based on the customer’s needs and the agent’s expertise.

For example, a bank could use a chatbot to answer basic questions about account balances and transaction history, while human agents handle more sensitive issues like loan applications or fraud reports.

Q 12. Describe the concept of edge computing and its advantages.

Edge computing brings computation and data storage closer to the source of data generation, rather than relying solely on centralized cloud servers. Imagine processing data on a device before sending it to the cloud.

Advantages:

- Reduced Latency: Faster processing and response times, critical for real-time applications like autonomous vehicles and industrial automation.

- Increased Bandwidth Efficiency: Only essential data is transmitted to the cloud, reducing network congestion and costs.

- Improved Reliability: Reduced dependence on network connectivity, ensuring continued operation even in areas with limited or unreliable internet access.

- Enhanced Security: Processing sensitive data locally minimizes the risk of data breaches during transmission.

- Support for IoT devices: Enables efficient processing of data generated by large numbers of IoT devices.

Example: An autonomous vehicle uses edge computing to process sensor data in real-time to make immediate driving decisions, without relying on a cloud connection for every action. This significantly reduces latency and improves safety.
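
The bandwidth advantage can be sketched as follows. In this hypothetical example an edge device processes raw temperature readings locally and forwards only a compact summary plus any readings above an alert threshold; the readings, threshold, and payload fields are assumptions for illustration.

```python
from statistics import mean


def summarize_at_edge(readings, alert_threshold):
    """Reduce a batch of raw readings to a small payload for the cloud."""
    alerts = [r for r in readings if r > alert_threshold]
    return {
        "count": len(readings),
        "mean": round(mean(readings), 2),
        "max": max(readings),
        "alerts": alerts,  # only unusual readings are forwarded in full
    }


# Hypothetical batch of sensor readings (degrees Celsius).
readings = [21.0, 21.5, 22.0, 21.8, 35.2, 21.9]

payload = summarize_at_edge(readings, alert_threshold=30.0)
```

The device can act on the alert immediately, while the cloud receives one small record instead of every raw reading. How much to aggregate locally is a design trade-off, since detail discarded at the edge cannot be recovered later.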

Q 13. Explain the challenges of implementing and managing a blockchain network.

Implementing and managing a blockchain network presents several challenges:

- Scalability: Handling a large number of transactions efficiently can be difficult, leading to slow transaction times and high fees.

- Security: Ensuring the security of the network from attacks like 51% attacks (where a single entity controls more than half the network’s computing power) is crucial.

- Regulation: The lack of clear regulatory frameworks in many jurisdictions creates uncertainty and legal complexities.

- Interoperability: Different blockchain networks often lack interoperability, making it difficult to exchange data or assets between them.

- Energy Consumption: Proof-of-work consensus mechanisms used in some blockchains, such as Bitcoin, consume significant amounts of energy.

- Complexity: Developing, deploying, and managing blockchain networks requires specialized skills and knowledge.

Addressing these challenges often involves exploring solutions like sharding (splitting the blockchain into smaller parts), using more energy-efficient consensus mechanisms (e.g., proof-of-stake), and developing interoperability standards.
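
The energy cost of proof-of-work comes from brute-force search, which a toy example can make visible. The sketch below looks for a nonce whose SHA-256 hash starts with a given number of zero hex digits. It is a simplified stand-in for real mining (Bitcoin, for instance, uses a numeric target and a specific block header format), and the block data string is made up.

```python
import hashlib


def mine(block_data, difficulty):
    """Try nonces in order until the hash starts with `difficulty` zero hex digits."""
    prefix = "0" * difficulty
    nonce = 0
    while True:
        digest = hashlib.sha256(f"{block_data}{nonce}".encode("utf-8")).hexdigest()
        if digest.startswith(prefix):
            return nonce, digest
        nonce += 1


# Each extra zero digit multiplies the expected number of attempts by 16.
nonce_easy, digest_easy = mine("block-42", difficulty=2)
nonce_hard, digest_hard = mine("block-42", difficulty=4)
```

Verifying a result takes a single hash, while finding it takes many attempts on average. Proof-of-stake designs avoid this search entirely, which is the main reason they use far less energy.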

Q 14. How can machine learning improve healthcare outcomes?

Machine learning (ML) has the potential to significantly improve healthcare outcomes in several ways:

- Early Disease Detection: ML algorithms can analyze medical images (X-rays, CT scans) and patient data to detect diseases like cancer at earlier stages, when treatment is more effective.

- Personalized Medicine: ML can tailor treatment plans to individual patients based on their genetic makeup, lifestyle, and medical history, leading to more effective and personalized care.

- Drug Discovery and Development: ML can accelerate the drug discovery process by analyzing large datasets of molecules to identify potential drug candidates and predict their efficacy.

- Improved Diagnostics: ML algorithms can assist doctors in making more accurate diagnoses by analyzing medical images, patient records, and other data.

- Predictive Analytics: ML can predict patient risk factors for various diseases, allowing for proactive interventions and preventive measures.

- Robotic Surgery: ML algorithms can enhance the precision and efficiency of robotic surgery procedures.

For example, an ML model trained on thousands of chest X-rays can identify subtle signs of pneumonia with high accuracy, helping doctors make faster and more informed decisions.

Q 15. What are some common security vulnerabilities in cloud environments?

Cloud environments, while offering scalability and flexibility, introduce unique security vulnerabilities. The most common ones stem from misconfigurations, data breaches, gaps in the customer’s share of security responsibility, insider threats, and third-party risks.

- Misconfigurations: Incorrectly configured security settings, such as overly permissive access controls or inadequate encryption, are surprisingly common. Imagine leaving the front door of your digital house unlocked – that’s essentially what a misconfiguration can do. For example, leaving an S3 bucket (Amazon’s cloud storage) publicly accessible allows anyone to download your sensitive data.

- Data breaches: These occur when unauthorized individuals gain access to sensitive data. This can happen through various methods, including exploiting vulnerabilities in applications, phishing attacks targeting employees, or insider threats. Think of it like a thief breaking into your house and stealing your valuables.

- Lack of control: The shared responsibility model in cloud computing means both the provider and the user share security responsibilities. A lack of proper monitoring, logging, and incident response planning can leave organizations vulnerable. It’s like not having a security system installed in your house and relying solely on luck.

- Insider threats: Malicious or negligent employees with access to cloud resources pose a significant risk. This includes accidental data deletion or intentional data theft.

- Third-party risks: Relying on third-party cloud providers or applications introduces additional security risks, especially if those providers have inadequate security measures in place.

Mitigating these risks requires a multi-layered approach, including robust access controls, encryption at rest and in transit, regular security audits, and employee training.
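
Part of a security audit can be automated with simple rule checks. The sketch below scans storage bucket settings for the kinds of misconfiguration described above. The configuration format and field names (`public_read`, `encrypted_at_rest`, `access_logging`) are hypothetical and do not correspond to any real provider's API; an actual audit would read these settings through the provider's own tooling.

```python
def audit_bucket(config):
    """Return a list of findings for one (hypothetical) bucket configuration."""
    findings = []
    if config.get("public_read", False):
        findings.append("bucket is publicly readable")
    if not config.get("encrypted_at_rest", False):
        findings.append("encryption at rest is disabled")
    if not config.get("access_logging", False):
        findings.append("access logging is disabled")
    return findings


# Hypothetical inventory of bucket settings.
buckets = {
    "customer-exports": {
        "public_read": True,
        "encrypted_at_rest": False,
        "access_logging": True,
    },
    "internal-backups": {
        "public_read": False,
        "encrypted_at_rest": True,
        "access_logging": True,
    },
}

report = {name: audit_bucket(config) for name, config in buckets.items()}
```

Missing settings are treated as unsafe by default, so an incomplete configuration is flagged rather than silently passed.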

Q 16. Describe different types of virtualization technologies.

Virtualization technology allows you to create multiple virtual instances of computing resources – such as operating systems, servers, and storage – on a single physical machine. Think of it like having multiple apartments within a single building. This significantly improves resource utilization and efficiency. There are several types:

- Type 1 (Bare-Metal) Hypervisors: The hypervisor runs directly on the host hardware and manages multiple virtual machines (VMs). This is the usual choice in data centers. Examples include VMware ESXi (the hypervisor in vSphere), Microsoft Hyper-V, and Xen. Imagine the building manager directly managing each apartment.

- Type 2 (Hosted) Hypervisors: The hypervisor runs as an application on top of an existing operating system (like Windows or Linux) on the host machine. It typically offers somewhat lower performance than a Type 1 hypervisor but is easier to set up. Examples include Oracle VirtualBox and VMware Workstation. Think of a property management company handling the apartments, not the building owner directly.

- Full Virtualization and Paravirtualization: With either hypervisor type, a guest can be fully virtualized (an unmodified guest operating system runs as if on real hardware) or paravirtualized (the guest operating system is modified to cooperate with the hypervisor directly, trading compatibility for lower overhead).

- Hardware-Assisted Virtualization: Modern CPUs support specific instructions (like Intel VT-x and AMD-V) that greatly enhance virtualization performance. This speeds up the process and reduces overhead. Imagine having a building specifically designed for apartments, making management much easier.

- Containerization: This is a lightweight form of virtualization that shares the host operating system’s kernel. Containers are isolated processes sharing the same kernel, resulting in a smaller footprint and faster startup times compared to full VMs. Docker is a popular tool for building and running containers, and Kubernetes is widely used to orchestrate them across many machines. This is like having studio apartments within a building – tenants share some common resources, but each one is still individually isolated.

The choice of virtualization technology depends on factors like performance requirements, security needs, and ease of management.

Q 17. What is the role of data analytics in decision-making?

Data analytics plays a crucial role in transforming raw data into actionable insights, leading to improved decision-making. By analyzing data patterns and trends, organizations can make informed choices, optimize processes, and gain a competitive edge.

For example, a retail company can analyze sales data to understand customer preferences, predict demand, and optimize inventory management. A healthcare provider can analyze patient data to identify risk factors for specific diseases, improve treatment plans, and reduce hospital readmissions. A financial institution can analyze market trends and customer behavior to develop targeted investment strategies and manage risk more effectively.

The process typically involves data collection, data cleaning, data analysis (using statistical methods and machine learning), and data visualization (presenting findings in an easily understandable format). Effective data analytics empowers organizations to:

- Reduce uncertainty: Data-driven decisions are less prone to biases and guesswork.

- Improve efficiency: Identify bottlenecks, streamline operations, and optimize resource allocation.

- Gain competitive advantage: Understand market trends and customer preferences better than competitors.

- Increase revenue: Develop targeted marketing campaigns and improve pricing strategies.
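
The collect, clean, and analyze steps described above can be sketched on a tiny scale. The sales records below are invented, and the field names and helpers (`clean`, `revenue_by_product`) are assumptions for the example; real pipelines usually rely on dedicated libraries and much larger datasets.

```python
from collections import defaultdict

# Collection: raw records as they might arrive from a CSV export (hypothetical data).
raw_sales = [
    {"product": "laptop", "units": "3", "price": "900.0"},
    {"product": "mouse", "units": "10", "price": "20.0"},
    {"product": "laptop", "units": "", "price": "900.0"},  # missing value
    {"product": "mouse", "units": "5", "price": "20.0"},
]


def clean(rows):
    """Cleaning: convert types and drop rows that cannot be parsed."""
    cleaned = []
    for row in rows:
        try:
            cleaned.append(
                {
                    "product": row["product"],
                    "units": int(row["units"]),
                    "price": float(row["price"]),
                }
            )
        except (KeyError, ValueError):
            continue  # skip incomplete or malformed records
    return cleaned


def revenue_by_product(rows):
    """Analysis: aggregate revenue per product."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["product"]] += row["units"] * row["price"]
    return dict(totals)


cleaned_sales = clean(raw_sales)
revenue = revenue_by_product(cleaned_sales)
best_seller = max(revenue, key=revenue.get)
```

Dropping the incomplete row is itself a decision that affects the result; in a real project the cleaning rules should be documented and reviewed along with the analysis.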

Q 18. Explain how AR/VR technologies can be applied in different industries.

Augmented Reality (AR) and Virtual Reality (VR) technologies are transforming various industries by providing immersive and interactive experiences.

- Healthcare: AR can be used for surgical planning and guidance, allowing surgeons to visualize internal organs in 3D before surgery. VR can help train medical professionals in simulated environments. Imagine practicing complex procedures without risking patient safety.

- Manufacturing: AR can overlay digital instructions and schematics onto real-world machinery, assisting technicians during maintenance and repair. VR can be used for training employees on complex machinery and procedures in a safe and controlled environment.

- Retail: AR allows customers to virtually try on clothes or furniture in their homes before purchasing, reducing return rates and enhancing the shopping experience. VR can create immersive shopping experiences.

- Education: VR allows students to explore historical sites or distant planets, making learning more engaging and interactive. AR can bring textbook content to life, making learning more interactive and memorable.

- Entertainment: VR is revolutionizing gaming and entertainment, providing immersive experiences that go beyond traditional screens. AR games are becoming increasingly popular, blending the real and virtual worlds.

AR and VR are powerful tools for improving efficiency, training, and the overall customer or user experience across various sectors. The key is to integrate these technologies strategically to solve specific business challenges.

Q 19. What are the potential risks and opportunities of 5G technology?

5G technology offers incredible opportunities, such as faster speeds, lower latency, and greater capacity, but it also presents potential risks.

Opportunities:

- Enhanced connectivity: Enabling faster downloads, smoother streaming, and the rise of IoT devices. Imagine downloading a high-definition movie in seconds.

- Low latency applications: Creating possibilities for real-time applications like autonomous vehicles and remote surgery, where minimal delay is critical.

- Increased capacity: Supporting a massive increase in connected devices, opening up new possibilities for smart cities and industrial IoT.

Risks:

- Security vulnerabilities: The increased connectivity and complexity of 5G networks make them more susceptible to cyberattacks and data breaches. It’s like having a bigger, faster highway – but also a larger target for hackers.

- Privacy concerns: The vast amount of data generated by 5G networks raises concerns about the collection and use of personal information. Think about the implications of constantly tracking location and device usage.

- Cost and deployment challenges: Building and maintaining 5G infrastructure is expensive and requires significant investment. Not everyone will have equal access.

- Health concerns: Some people express concerns about the potential health effects of 5G radio waves, although scientific consensus currently suggests the risks are low.

Addressing these risks requires a focus on robust security measures, privacy regulations, and responsible deployment planning to ensure that the benefits of 5G are realized safely and equitably.

Q 20. Describe the concept of natural language processing (NLP).

Natural Language Processing (NLP) is a branch of artificial intelligence (AI) that focuses on enabling computers to understand, interpret, and generate human language. It’s about bridging the gap between human communication and computer understanding.

Think of it like teaching a computer to read and write like a human. It involves various techniques, including:

- Tokenization: Breaking down text into individual words or units.

- Part-of-speech tagging: Identifying the grammatical role of each word.

- Named entity recognition: Identifying and classifying named entities such as people, organizations, and locations.

- Sentiment analysis: Determining the emotional tone of text (positive, negative, or neutral).

- Machine translation: Automatically translating text from one language to another.

NLP is used in numerous applications, including chatbots, machine translation services, text summarization tools, sentiment analysis for social media monitoring, and voice assistants like Siri and Alexa. The underlying technology often utilizes deep learning models to achieve high accuracy and improve with more training data.
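
Two of the techniques listed above, tokenization and sentiment analysis, can be sketched with the standard library alone. This is a deliberately naive version: the word lists are tiny and hand-picked for the example, and production systems typically use trained models rather than fixed lexicons.

```python
import re

# Hypothetical miniature sentiment lexicons.
POSITIVE = {"great", "love", "excellent", "helpful"}
NEGATIVE = {"slow", "broken", "terrible", "hate"}


def tokenize(text):
    """Tokenization: lowercase the text and split it into word-like units."""
    return re.findall(r"[a-z0-9']+", text.lower())


def sentiment(text):
    """Lexicon-based sentiment: count positive and negative words."""
    tokens = tokenize(text)
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


tokens = tokenize("I love it!")
review_a = sentiment("I love this excellent product")
review_b = sentiment("The app is slow and broken")
review_c = sentiment("The support team was great, but the app is slow!")
```

The third review shows the limits of word counting: one positive and one negative word cancel out to "neutral", even though a human reader would see a mixed opinion. Handling negation, sarcasm, and context is where learned models earn their keep.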

Q 21. How can AI be used to enhance cybersecurity?

AI can significantly enhance cybersecurity by automating tasks, detecting threats more effectively, and responding to attacks faster than humans alone.

- Threat detection: AI algorithms can analyze massive amounts of security data (network logs, system events, etc.) to identify patterns and anomalies that indicate potential threats. This allows for proactive detection of attacks before they cause significant damage – like a security guard constantly monitoring for suspicious activity.

- Vulnerability management: AI can help identify and prioritize security vulnerabilities in software and systems, allowing security teams to focus on the most critical issues first.

- Incident response: AI can automate parts of the incident response process, such as isolating infected systems and containing the spread of malware. This allows for faster containment and reduces the impact of attacks.

- Phishing detection: AI algorithms can analyze emails and websites to identify phishing attempts and other forms of social engineering attacks with high accuracy.

- Security information and event management (SIEM): AI-powered SIEM systems can correlate security events from various sources to provide a comprehensive view of the security posture of an organization.

However, AI is not a silver bullet. Sophisticated adversaries can fool or manipulate AI systems, for example by crafting inputs designed to evade detection. A human-in-the-loop approach is therefore vital: analysts review and validate AI-generated alerts and recommendations to catch false positives and false negatives.
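The threat detection idea above can be illustrated with a small statistical sketch. It assumes a hypothetical feed of failed-login counts per time window and flags windows that sit far above a historical baseline using a z-score. Real products use much richer features and models; the function name, data, and threshold here are illustrative assumptions only.

```python
from statistics import mean, stdev


def flag_anomalies(baseline: list[int], observed: dict[str, int],
                   threshold: float = 3.0) -> list[str]:
    """Return the time windows whose count is unusually high versus the baseline.

    baseline: historical counts assumed to represent normal activity.
    observed: mapping of window label -> count to be checked.
    threshold: how many standard deviations above the mean counts as anomalous.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # Degenerate baseline with no variation: flag anything above it.
        return [label for label, count in observed.items() if count > mu]
    return [label for label, count in observed.items()
            if (count - mu) / sigma > threshold]


# Hypothetical failed-login counts per five-minute window.
history = [3, 4, 2, 5, 3, 4, 3]
today = {"10:00": 4, "10:05": 60, "10:10": 2}
print(flag_anomalies(history, today))
```

An alert produced this way would still go to an analyst for review, in line with the human-in-the-loop point above.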

Q 22. Explain the concept of computer vision and its applications.

Computer vision gives computers the ability to ‘see’: to interpret images and videos in ways that approximate human visual understanding. It uses artificial intelligence (AI) and machine learning (ML) algorithms to analyze visual data, extract information, and make decisions based on that information. Think of it like teaching a computer to understand what’s in a picture or video, not just the pixels. Core tasks include:

- Object Detection: Identifying objects within an image, such as cars, pedestrians, or faces. For example, self-driving cars use this to navigate.

- Image Classification: Categorizing images based on their content, like classifying pictures of cats versus dogs. This is used in medical image analysis to identify diseases.

- Image Segmentation: Partitioning an image into multiple segments, each representing a different object or region. This is critical for medical imaging to isolate tumors from healthy tissue.

- Video Analysis: Understanding the content of videos, including tracking objects over time. This is used in surveillance systems and sports analytics.

Applications span diverse fields, from autonomous vehicles and medical diagnostics to security systems and retail analytics. Imagine a smart security camera that automatically detects intruders, or a medical system that helps diagnose diseases from X-rays. Both are powered by computer vision.
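As a rough illustration of segmentation and object localization, the sketch below separates bright pixels from the background with a fixed threshold and then computes a bounding box around them. Thresholding is a classical technique swapped in for simplicity; modern systems typically use trained neural networks instead. The tiny grayscale grid and the threshold value are made-up assumptions.

```python
def segment(image: list[list[int]], threshold: int = 128) -> list[list[int]]:
    """Return a binary mask: 1 where a pixel is brighter than the threshold."""
    return [[1 if pixel > threshold else 0 for pixel in row] for row in image]


def bounding_box(mask: list[list[int]]):
    """Return (top, left, bottom, right) of the foreground pixels, or None if empty."""
    coords = [(r, c) for r, row in enumerate(mask) for c, value in enumerate(row) if value]
    if not coords:
        return None
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    return (min(rows), min(cols), max(rows), max(cols))


# Hypothetical 4x4 grayscale image (0 = black, 255 = white) with one bright object.
IMAGE = [
    [10, 12, 11, 9],
    [10, 200, 220, 9],
    [11, 210, 230, 8],
    [10, 11, 12, 9],
]

mask = segment(IMAGE)
print(bounding_box(mask))  # (1, 1, 2, 2)
```

The mask corresponds to image segmentation and the box to a very basic form of object detection, which is enough to explain the difference between the two tasks.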

Q 23. Discuss the impact of emerging technologies on the future of work.

Emerging technologies are reshaping the future of work dramatically. Automation, driven by AI and robotics, is increasing efficiency but also displacing some jobs. At the same time, it is creating new roles focused on technology development, maintenance, and data analysis.

- Automation of Routine Tasks: AI and automation are taking over repetitive, manual tasks, freeing human workers for more creative and strategic roles. Think of automated customer service chatbots or robotic process automation (RPA) in finance.

- Rise of the Gig Economy: The increased flexibility offered by technology is leading to a growth in freelance and contract work, offering diverse opportunities but requiring adaptability and self-management.

- Demand for Tech Skills: There’s a growing need for professionals skilled in data science, AI, cybersecurity, and cloud computing. Upskilling and reskilling initiatives are crucial to equip the workforce for these changes.

- Remote Work and Collaboration: Technology facilitates remote work, boosting flexibility but requiring effective communication and collaboration tools.

The future of work will require continuous learning, adaptability, and a focus on skills that complement technology, rather than compete with it. Human skills like critical thinking, creativity, and emotional intelligence will become even more valuable.

Q 24. What are the key considerations when designing a secure IoT system?

Designing a secure IoT system requires a multi-layered approach addressing various vulnerabilities inherent in interconnected devices.

- Device Security: Secure boot processes, strong encryption (both in transit and at rest), regular firmware updates, and secure hardware are paramount. Weak default passwords are a major attack vector.

- Network Security: Use secure network protocols such as TLS (the successor to the now-deprecated SSL), implement firewalls, and employ intrusion detection/prevention systems. Consider network segmentation to isolate sensitive devices.

- Data Security: Encrypt data both during transmission and storage. Implement access controls to limit who can access what data. Regularly audit data access logs.

- Authentication and Authorization: Robust authentication mechanisms (e.g., multi-factor authentication) are needed to verify users and devices. Authorization controls determine what actions authenticated entities are permitted to perform.

- Over-the-Air (OTA) Updates: A secure mechanism for deploying software updates to devices is crucial for patching vulnerabilities and maintaining security.

Imagine a smart home system compromised: an attacker could control lights, appliances, and even security cameras. A robust security design prevents such scenarios.
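One way to picture the secure update requirement above is an integrity check that a device runs before installing firmware. The sketch below uses an HMAC-SHA256 tag with a shared secret key, which is a simplifying assumption: production OTA systems more commonly use asymmetric signatures so that devices never hold a signing key. The function names and sample values are hypothetical.

```python
import hashlib
import hmac


def sign_firmware(image: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the firmware image (done by the vendor)."""
    return hmac.new(key, image, hashlib.sha256).hexdigest()


def verify_firmware(image: bytes, tag: str, key: bytes) -> bool:
    """Recompute the tag on the device and compare it in constant time."""
    expected = sign_firmware(image, key)
    return hmac.compare_digest(expected, tag)


# Hypothetical values for illustration only; never hard-code real keys.
KEY = b"demo-shared-secret"
firmware = b"firmware-v2.1-binary-contents"
tag = sign_firmware(firmware, KEY)

print(verify_firmware(firmware, tag, KEY))                # True
print(verify_firmware(firmware + b"-tampered", tag, KEY))  # False
```

The device refuses the update whenever verification fails, so a modified image is rejected even if an attacker controls the delivery channel.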

Q 25. Explain the importance of data privacy in the age of emerging technologies.

Data privacy is increasingly crucial in the age of emerging technologies because these technologies often involve the collection, processing, and storage of vast amounts of personal data. Protecting this data is not just an ethical imperative; it’s often legally mandated. Key concerns include:

- Data breaches: Leaks of personal information can lead to identity theft, financial loss, and reputational damage for individuals and organizations.

- Surveillance concerns: The use of facial recognition, location tracking, and other technologies raises concerns about mass surveillance and potential abuse of power.

- Algorithmic bias: AI systems trained on biased data can perpetuate and amplify existing inequalities, impacting individuals unfairly.

- Data misuse: Data collected for one purpose may be misused for another, violating user trust and potentially harming individuals.

Regulations like the EU’s General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) highlight the importance of data privacy and require organizations to implement measures to protect personal information. This includes obtaining informed consent where required, providing transparency about data usage, and implementing robust security measures.
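Two common protective measures, data minimization and pseudonymization, can be sketched in a few lines. The example below keeps only the fields an analytics team is assumed to need and replaces the email address with a keyed hash. The field names, the allowed-field list, and the secret are hypothetical, and whether such processing satisfies a given regulation is a legal question this sketch does not answer.

```python
import hashlib
import hmac

# Hypothetical list of fields that downstream analytics is allowed to see.
ALLOWED_FIELDS = {"country", "plan"}


def pseudonymize(record: dict, secret: bytes) -> dict:
    """Drop fields that are not needed and replace the email with a keyed hash."""
    email = record["email"].strip().lower().encode("utf-8")
    token = hmac.new(secret, email, hashlib.sha256).hexdigest()
    reduced = {key: value for key, value in record.items() if key in ALLOWED_FIELDS}
    reduced["user_token"] = token
    return reduced


# Made-up customer record for illustration.
customer = {
    "email": "Alex@example.com",
    "name": "Alex Doe",
    "country": "DE",
    "plan": "premium",
}
print(sorted(pseudonymize(customer, b"demo-secret")))
```

Because the hash is keyed, someone without the secret cannot simply hash a list of known email addresses to re-identify users, while the same user still maps to the same token for analysis.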

Q 26. Describe the role of data governance in managing data security and compliance.

Data governance is a crucial framework for managing data security and compliance. It establishes policies, processes, and responsibilities for data throughout its lifecycle, from creation to disposal.

- Data Security: Data governance helps define security policies, access controls, encryption methods, and data loss prevention strategies, ensuring data is protected from unauthorized access, use, disclosure, disruption, modification, or destruction.

- Compliance: It ensures adherence to relevant regulations such as GDPR, CCPA, and HIPAA by defining processes for data subject requests, data breach notification, and other compliance-related activities.

- Data Quality: Data governance establishes procedures for data quality management, ensuring data accuracy, completeness, consistency, and timeliness. This is vital for making sound business decisions.

- Data Retention: It defines policies for how long data should be retained and the procedures for data archiving and disposal, balancing business needs with legal and regulatory requirements.

Consider a healthcare organization: data governance ensures patient data is protected under HIPAA, maintained with high quality, and properly archived according to regulations. It’s a proactive approach to preventing problems and ensuring compliance.
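A retention policy like the one described above is often enforced by an automated check. The sketch below is one possible shape for it, assuming hypothetical record categories and retention periods; real periods come from legal and regulatory requirements, not from code.

```python
from datetime import date, timedelta

# Hypothetical retention periods in days, keyed by record category.
RETENTION_DAYS = {"access_log": 90, "invoice": 365 * 7}


def records_due_for_disposal(records: list[dict], today: date) -> list[int]:
    """Return the ids of records that have exceeded their retention period."""
    due = []
    for record in records:
        limit = RETENTION_DAYS.get(record["category"])
        if limit is None:
            continue  # No policy defined: leave the record for manual review.
        if today - record["created"] > timedelta(days=limit):
            due.append(record["id"])
    return due


# Made-up records for illustration.
RECORDS = [
    {"id": 1, "category": "access_log", "created": date(2024, 1, 1)},
    {"id": 2, "category": "access_log", "created": date(2024, 5, 1)},
    {"id": 3, "category": "invoice", "created": date(2020, 1, 1)},
    {"id": 4, "category": "unclassified", "created": date(2010, 1, 1)},
]
print(records_due_for_disposal(RECORDS, date(2024, 6, 1)))  # [1]
```

Skipping records without a defined policy, rather than deleting them, reflects the governance principle that disposal decisions should follow an explicit rule.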

Q 27. What are some of the emerging trends in artificial intelligence?

The field of AI is rapidly evolving, with several emerging trends shaping its future.

- Generative AI: Models capable of creating new content, like images, text, and music, are becoming increasingly sophisticated. Think DALL-E 2 or ChatGPT.

- Explainable AI (XAI): The focus is shifting towards building AI models whose decisions are transparent and understandable, addressing concerns about ‘black box’ AI.

- Edge AI: Processing AI tasks directly on devices rather than relying on the cloud, improving efficiency, reducing latency, and enhancing privacy. Think of smart devices processing data locally.

- AI for Science: AI is accelerating scientific discovery through drug discovery, materials science, and climate modeling.

- Reinforcement Learning from Human Feedback (RLHF): This technique combines reinforcement learning with human feedback to train AI models more effectively and align them better with human values.

These trends are not isolated; they often interact, leading to even more powerful and versatile AI systems.
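To show what explainability can mean in practice, here is a minimal sketch that breaks a linear model's score into per-feature contributions. The weights, bias, and feature names are invented for illustration; explaining complex models usually requires dedicated attribution techniques rather than this direct decomposition.

```python
# Hypothetical weights of an already-trained linear scoring model.
WEIGHTS = {"income": 0.5, "debt": -0.8, "years_employed": 0.3}
BIAS = -0.2


def explain(features: dict[str, float]) -> tuple[float, dict[str, float]]:
    """Return the model score and how much each feature contributed to it."""
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    score = BIAS + sum(contributions.values())
    return score, contributions


# Made-up, already-normalized applicant features.
applicant = {"income": 2.0, "debt": 1.0, "years_employed": 4.0}
score, contributions = explain(applicant)

# List features from most to least influential, by absolute contribution.
for name, value in sorted(contributions.items(), key=lambda item: -abs(item[1])):
    print(f"{name}: {value:+.2f}")
print(f"score: {score:.2f}")
```

A breakdown like this lets a reviewer see which inputs pushed a decision up or down, which is the kind of transparency XAI aims for.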

Q 28. How can businesses leverage emerging technologies to gain a competitive advantage?

Businesses can leverage emerging technologies to gain a competitive advantage in several ways.

- Improved Efficiency and Productivity: Automation, AI-powered tools, and data analytics can streamline operations, reduce costs, and increase productivity. Imagine a manufacturing plant using robots to improve production speed.

- Enhanced Customer Experience: Personalized recommendations, AI-powered chatbots, and other technologies can enhance customer satisfaction and loyalty. Think of Netflix’s recommendation engine.

- New Product and Service Development: Emerging technologies can enable the creation of innovative products and services, opening new revenue streams. Examples include autonomous vehicles or personalized medicine.

- Data-Driven Decision Making: Advanced analytics and AI can provide valuable insights from data, helping businesses make more informed decisions. Think of a retail company using data to optimize inventory.

- Improved Risk Management: AI and machine learning can help identify and mitigate risks more effectively, such as fraud detection or supply chain disruptions.

Successful implementation requires a strategic approach, including investment in technology, talent acquisition, and change management. It’s not just about adopting technology; it’s about integrating it effectively into the business strategy.

Key Topics to Learn for Knowledge of Emerging Technologies Interview

- Artificial Intelligence (AI) & Machine Learning (ML): Understand fundamental concepts like supervised/unsupervised learning, deep learning, neural networks, and their applications in various industries. Consider exploring specific AI/ML tools and frameworks.

- Cloud Computing: Familiarize yourself with major cloud providers (AWS, Azure, GCP), their services (IaaS, PaaS, SaaS), and the advantages of cloud-based solutions. Practice explaining cloud deployment models and security considerations.

- Big Data & Data Analytics: Learn about data warehousing, data mining, and various analytical techniques used to extract insights from large datasets. Explore tools like Hadoop, Spark, and visualization platforms.

- Blockchain Technology: Grasp the core principles of blockchain, including decentralization, cryptography, and smart contracts. Understand its potential applications beyond cryptocurrencies.

- Internet of Things (IoT): Explore the concept of interconnected devices, data security challenges in IoT, and the potential impact on various sectors (e.g., healthcare, manufacturing).

- Cybersecurity & Data Privacy: Understand current threats and vulnerabilities, best practices for data protection, and relevant regulations (e.g., GDPR). Discuss approaches to implementing robust security measures.

- Virtual & Augmented Reality (VR/AR): Explore the technologies behind VR/AR, their applications in gaming, training, and other fields, and the future potential of immersive experiences.

- Quantum Computing: Develop a basic understanding of the principles of quantum computing and its potential to revolutionize various industries (though deep expertise is not always expected for entry-level roles).

Next Steps

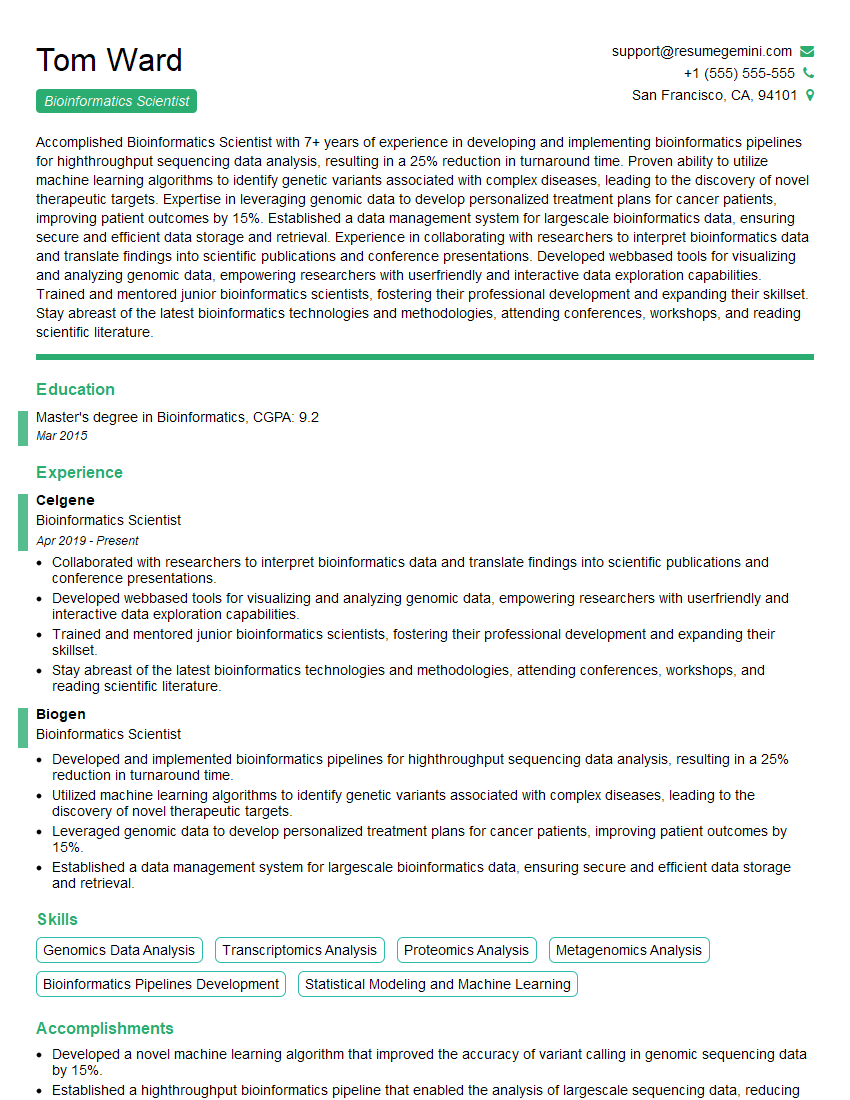

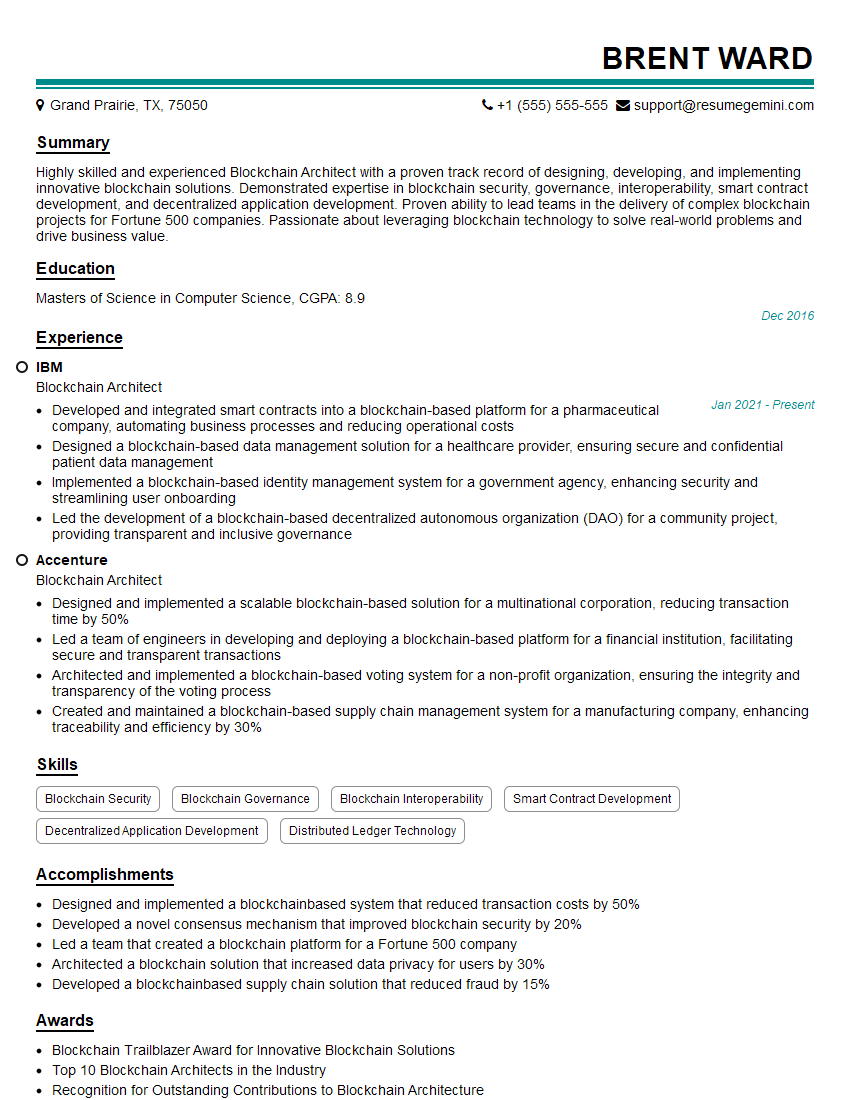

Mastering knowledge of emerging technologies is crucial for career advancement in today’s rapidly evolving landscape. It demonstrates adaptability, future-readiness, and a commitment to continuous learning, all attributes that employers value highly. To significantly improve your job prospects, creating a strong, ATS-friendly resume is essential. ResumeGemini can help you build a professional and impactful resume that showcases your skills and experience effectively. We provide examples of resumes tailored to highlight expertise in Knowledge of Emerging Technologies, allowing you to create a compelling application that stands out from the competition.
