Preparation is the key to success in any interview. In this post, we’ll explore crucial Knowledge of File Structures and Indexing Systems interview questions and equip you with strategies to craft impactful answers. Whether you’re a beginner or a pro, these tips will elevate your preparation.

Questions Asked in Knowledge of File Structures and Indexing Systems Interview

Q 1. Explain the difference between a file and a directory.

In simple terms, a file is a named container that holds data, while a directory is like a folder that organizes those containers. A file stores information such as a document, an image, or a program. A directory, on the other hand, doesn’t hold file contents itself; it stores entries that map names to files (and to other directories), providing a hierarchical way to arrange and manage them. Think of your computer’s file system: you have directories like ‘Documents’, ‘Pictures’, and ‘Downloads’, each containing multiple files. These directories can be nested within one another, creating a tree-like structure for efficient organization.

For example, a file named mydocument.txt contains text data. The Documents directory contains many such files, and the Documents directory itself might reside inside a larger directory like Users/username.

Q 2. Describe different file system types (e.g., FAT32, NTFS, ext4).

Several file system types exist, each with its own strengths and weaknesses. Let’s examine a few:

- FAT32 (File Allocation Table 32): A relatively simple and older file system, known for its broad compatibility with various operating systems. It’s suitable for smaller storage devices like USB drives, but limits a single file to 4 GB minus 1 byte and lacks advanced features like access control lists.

- NTFS (New Technology File System): Microsoft’s primary file system for Windows. NTFS boasts features like journaling (for data recovery), access control lists (for security), and support for much larger files and volumes. It’s more robust and feature-rich than FAT32.

- ext4 (fourth extended file system): The default file system for many Linux distributions. ext4 is known for its performance and scalability, offering features like journaling, advanced metadata handling, and large file support. It’s a strong choice for servers and high-performance systems.

Other file systems include APFS (Apple File System) for macOS and iOS, and Btrfs (B-tree file system), another Linux option focused on data integrity and advanced features.

Q 3. What are the advantages and disadvantages of using different file systems?

The choice of file system depends heavily on the intended use. Here’s a comparison of advantages and disadvantages:

- FAT32: Advantages: Wide compatibility, simple. Disadvantages: Limited file size, no access control lists, less robust.

- NTFS: Advantages: Robust, feature-rich (access control, journaling), supports large files. Disadvantages: Less cross-platform compatibility than FAT32, can be slightly less performant than ext4 in certain scenarios.

- ext4: Advantages: High performance, scalability, good journaling. Disadvantages: Primarily used in Linux environments, less compatible with Windows.

For example, a USB drive intended for use across Windows and macOS systems might use FAT32 for maximum compatibility. A high-performance server would likely utilize ext4. A Windows workstation would benefit from NTFS’s security and robustness.

Q 4. Explain the concept of indexing in databases.

Database indexing is like creating a detailed table of contents for your data. It allows you to quickly locate specific information without having to search through every record. An index is a separate data structure that stores a subset of the database’s columns, along with pointers to the corresponding rows. These pointers allow the database system to efficiently jump directly to the relevant data, drastically reducing search time.

Imagine a library: instead of searching every single book, you use the catalog (the index) to find the book you need based on its title, author, or subject. Similarly, database indexes accelerate data retrieval.

Q 5. Describe different indexing techniques (e.g., B-trees, hash tables, inverted indexes).

Several indexing techniques exist, each with different properties:

- B-trees: Multi-level tree structures optimized for disk-based storage. They are highly efficient for range queries (e.g., finding all records within a specific date range) and are commonly used in relational database systems.

- Hash Tables: Use a hash function to map keys to indices in an array. They are extremely fast for exact-match lookups (e.g., finding a record with a specific ID), but don’t perform well for range queries.

- Inverted Indexes: Specifically designed for text search. They map terms to the documents containing those terms. This is crucial for full-text search capabilities, allowing quick retrieval of documents based on keywords.

For instance, a database of customer information might use a B-tree index on the ‘customer ID’ column for fast retrieval of specific customers, and a separate inverted index for searching customer names or addresses.

Q 6. What are the trade-offs between different indexing methods?

The optimal indexing method depends on the query patterns and data characteristics. Here are some trade-offs:

- B-trees vs. Hash Tables: B-trees excel at range queries but are slower for exact matches compared to hash tables. Hash tables are incredibly fast for exact matches but struggle with range queries.

- B-trees vs. Inverted Indexes: B-trees are general-purpose and suitable for various data types, while inverted indexes are specialized for text search. Inverted indexes are not efficient for numeric or date-based queries.

Choosing the right index is crucial. Over-indexing can slow down data modification operations (inserts, updates, deletes), while under-indexing will negatively impact query performance. Careful analysis of query patterns is key.

Q 7. How does indexing improve database query performance?

Indexing significantly improves database query performance by reducing the amount of data that needs to be scanned during a search. Instead of sequentially reading through every record (a full table scan), the database system utilizes the index to quickly pinpoint the relevant data locations. This is particularly important for large databases where full table scans can be prohibitively slow.

Think of searching a dictionary: without an index (alphabetical order), you would need to check every word. With an index, you instantly go to the right section. Similarly, database indexes dramatically reduce the time needed to answer queries, making applications more responsive and efficient.
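To make the dictionary analogy concrete, here is a minimal Python sketch, assuming a sorted list of keys stands in for the index (real databases typically use B-tree pages rather than a flat array). It counts how many keys a full scan checks versus how many probes a binary search over the sorted index needs. The function names `full_scan` and `indexed_lookup` are hypothetical.

```python
def full_scan(rows, target):
    """Check keys one by one (a 'full table scan'); return (position, comparisons)."""
    comparisons = 0
    for position, key in enumerate(rows):
        comparisons += 1
        if key == target:
            return position, comparisons
    return -1, comparisons

def indexed_lookup(sorted_keys, target):
    """Binary search over a sorted index; return (position, probes)."""
    lo, hi, probes = 0, len(sorted_keys), 0
    while lo < hi:
        mid = (lo + hi) // 2
        probes += 1
        if sorted_keys[mid] < target:
            lo = mid + 1      # target can only be in the upper half
        else:
            hi = mid          # target is at mid or in the lower half
    if lo < len(sorted_keys) and sorted_keys[lo] == target:
        return lo, probes
    return -1, probes

keys = list(range(0, 2_000_000, 2))        # 1,000,000 sorted keys
print(full_scan(keys, 1_999_998))          # (999999, 1000000)
print(indexed_lookup(keys, 1_999_998))     # position 999999 after about 20 probes
```

With one million keys, the scan checks every key while the binary search needs about 20 probes, because each probe halves the remaining range.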

Q 8. Explain the concept of a clustered index vs. a non-clustered index.

Imagine a library. A clustered index is like organizing books by their Dewey Decimal classification number: the physical order of the books reflects the index order. In databases, a clustered index dictates the physical storage order of the data rows. There can only be one clustered index per table because the data can only be physically sorted one way. Range queries and ordered scans on the clustered key are especially fast because matching rows are stored next to each other, so the database doesn’t need to jump around to find them.

A non-clustered index is like having a separate card catalog – it contains pointers to the books but the books themselves aren’t ordered by the card catalog. In databases, a non-clustered index exists separately from the data, containing a key value and a pointer to the row location. You can have multiple non-clustered indexes on a table.

Example: A table of customer orders might have a clustered index on the order_id (because order IDs are likely sequential) and a non-clustered index on customer_id for faster retrieval of all orders by a specific customer. Retrieving orders by order_id is very efficient with the clustered index, while finding orders by customer_id benefits from the non-clustered index.
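The following Python sketch models the orders example under simplifying assumptions: rows live in a list kept sorted by `order_id` (the clustered key), and the secondary index on `customer_id` stores order IDs as row locators, roughly the way MySQL’s InnoDB uses the primary key in its secondary indexes; other engines store a physical row address instead. The class and method names are hypothetical.

```python
import bisect
from collections import defaultdict

class OrdersTable:
    """Toy table whose rows are kept physically sorted by order_id (the clustered
    key), plus a secondary, non-clustered index on customer_id."""

    def __init__(self):
        self.keys = []                        # order_ids in storage order
        self.rows = []                        # (order_id, customer_id, amount), same order
        self.by_customer = defaultdict(list)  # secondary index: customer_id -> [order_id]

    def insert(self, order_id, customer_id, amount):
        pos = bisect.bisect_left(self.keys, order_id)
        self.keys.insert(pos, order_id)       # keep rows physically ordered by the key
        self.rows.insert(pos, (order_id, customer_id, amount))
        self.by_customer[customer_id].append(order_id)

    def orders_in_range(self, lo, hi):
        """Clustered access: matching rows are adjacent, so one slice returns them."""
        start = bisect.bisect_left(self.keys, lo)
        end = bisect.bisect_right(self.keys, hi)
        return self.rows[start:end]

    def orders_for_customer(self, customer_id):
        """Non-clustered access: read locators from the secondary index, then
        look each row up in the clustered storage (one extra lookup per row)."""
        rows = []
        for order_id in self.by_customer.get(customer_id, []):
            pos = bisect.bisect_left(self.keys, order_id)
            rows.append(self.rows[pos])
        return rows

table = OrdersTable()
for order in [(3, "c1", 10.0), (1, "c2", 5.0), (2, "c1", 7.5), (4, "c3", 1.0)]:
    table.insert(*order)
print(table.orders_in_range(2, 3))      # [(2, 'c1', 7.5), (3, 'c1', 10.0)]
print(table.orders_for_customer("c1"))  # [(3, 'c1', 10.0), (2, 'c1', 7.5)]
```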

Q 9. How do you choose the appropriate index for a particular query?

Choosing the right index is crucial for query performance. Consider these factors:

- Query type: WHERE clauses heavily influence index selection. If a query filters by a specific column, an index on that column is beneficial. Queries involving joins need indexes on the join columns.

- Data volume and distribution: Indexes are most useful with large datasets. If a column has few distinct values, or a highly skewed distribution (e.g., one value appearing far more often than others), an index may do little for queries on the common values, because the database still has to read a large fraction of the rows.

- Update frequency: Frequent updates to the indexed column can impact performance because the index needs to be updated as well. Weigh the benefits of fast lookups against the overhead of index maintenance.

- Index type: Consider whether a clustered or non-clustered index is appropriate based on how data is frequently accessed. Clustered indexes are great for sequential access, while non-clustered indexes are versatile for various lookup patterns.

Example: A query selecting all customers from a specific city would benefit from an index on the city column. A query joining orders and customers tables would benefit from indexes on the customer_id column in both tables. Experimentation and profiling are key to finding the optimal index strategy for a given workload.

Q 10. Describe the different types of file organizations (e.g., sequential, indexed sequential, direct).

Different file organizations cater to various data access patterns:

- Sequential: Records are stored sequentially, like in a text file. Access is sequential; to find a specific record, you need to read from the beginning. Simple, but slow for random access.

- Indexed Sequential: Combines sequential storage with an index for faster random access. The index points to the location of records based on a key. Efficient for both sequential and random access, but can be more complex to manage.

- Direct (or Hashing): The location of a record is calculated directly from a key value using a hashing function. Provides very fast random access but can suffer from collisions (multiple keys mapping to the same location), requiring collision resolution techniques. Ideal for applications requiring very fast lookups.

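Example: A log file is typically sequential. Classic ISAM (Indexed Sequential Access Method) files use indexed sequential organization. An in-memory hash table applies the same principle as direct organization, computing a record’s location from its key.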
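Direct organization can be sketched in Python with fixed-length records in a pre-allocated file, assuming a simple scheme: the slot number is a hash of the key modulo the number of slots, and collisions are resolved by linear probing. An in-memory `io.BytesIO` stands in for a real file, the record layout (16-byte key and value) is an arbitrary choice for illustration, and deletions, which would need tombstone markers, are not handled.

```python
import io
import struct
import zlib

RECORD = struct.Struct("<?16s16s")   # occupied flag, key, value; 33 bytes per slot
N_SLOTS = 8

def slot_for(key: bytes) -> int:
    # crc32 is stable across runs, unlike Python's per-process randomized hash()
    return zlib.crc32(key) % N_SLOTS

def read_slot(f, slot):
    f.seek(slot * RECORD.size)           # address computed directly from the slot number
    used, key, value = RECORD.unpack(f.read(RECORD.size))
    return used, key.rstrip(b"\0"), value.rstrip(b"\0")

def put(f, key: bytes, value: bytes) -> None:
    """Store a record. Keys and values must fit in 16 bytes (struct truncates longer ones)."""
    start = slot_for(key)
    for i in range(N_SLOTS):             # linear probing: try the next slot on collision
        slot = (start + i) % N_SLOTS
        used, existing, _ = read_slot(f, slot)
        if not used or existing == key:
            f.seek(slot * RECORD.size)
            f.write(RECORD.pack(True, key, value))
            return
    raise RuntimeError("no free slot")

def get(f, key: bytes):
    start = slot_for(key)
    for i in range(N_SLOTS):
        used, existing, value = read_slot(f, (start + i) % N_SLOTS)
        if not used:
            return None                   # an empty slot ends the probe sequence
        if existing == key:
            return value
    return None

f = io.BytesIO(bytes(RECORD.size * N_SLOTS))   # pre-allocated, zero-filled "file"
put(f, b"emp-101", b"Alice")
put(f, b"emp-202", b"Bob")
print(get(f, b"emp-202"), get(f, b"emp-999"))   # b'Bob' None
```

`zlib.crc32` is used instead of Python’s built-in `hash()` because a record must land in the same slot every time the file is opened, and `hash()` of strings and bytes is randomized per process.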

Q 11. Explain how a B-tree works and its properties.

A B-tree is a self-balancing tree data structure that maintains sorted data and allows searches, sequential access, insertions, and deletions in logarithmic time. It’s designed for efficient disk access, minimizing the number of disk reads and writes. This is crucial for databases where data resides on disk.

Key Properties:

- Balanced: All leaf nodes are at the same level, ensuring efficient search times.

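- Minimum occupancy: Every node except the root must hold at least a minimum number of keys (typically about half its capacity). This guarantees good space utilization and bounds the height of the tree.

- High fan-out: Unlike binary trees, B-tree nodes hold many keys and children (a node is often sized to match a disk page), so the tree stays shallow and a lookup needs only a few disk reads.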

- Self-balancing: It automatically adjusts its structure during insertions and deletions to maintain balance.

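How it works: A search starts at the root node and, at each node, compares the target with the node’s sorted keys to choose which child to descend into. It stops when the key is found (in a B-tree this can be an internal node; in the B+ tree variant used by many databases, all records live in the leaves) or when a leaf is reached without a match. Insertions split nodes that overflow, and deletions merge nodes or borrow keys from a sibling when a node falls below the minimum occupancy, keeping the tree balanced.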
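Here is a minimal in-memory B-tree in Python, following the textbook (CLRS-style) formulation with a minimum degree `t`: insertion splits any full node on the way down, so the leaf that receives the key always has room. In a real database each node would be a disk page and the tree would usually be a B+ tree; deletion is omitted to keep the sketch short, and the class names are hypothetical.

```python
import bisect

class Node:
    def __init__(self, leaf=True):
        self.keys = []        # sorted keys stored in this node
        self.children = []    # child nodes (empty for leaves)
        self.leaf = leaf

class BTree:
    """B-tree with minimum degree t: every node except the root holds between
    t-1 and 2t-1 keys, and all leaves sit at the same depth."""

    def __init__(self, t=2):
        self.t = t
        self.root = Node()

    def search(self, key):
        node = self.root
        while True:
            i = bisect.bisect_left(node.keys, key)
            if i < len(node.keys) and node.keys[i] == key:
                return True                  # may be found in an internal node
            if node.leaf:
                return False
            node = node.children[i]          # descend into the only subtree that could hold key

    def insert(self, key):
        if len(self.root.keys) == 2 * self.t - 1:
            old_root = self.root             # full root: split it and grow one level
            self.root = Node(leaf=False)
            self.root.children.append(old_root)
            self._split_child(self.root, 0)
        node = self.root
        while not node.leaf:                 # split full children on the way down
            i = bisect.bisect_right(node.keys, key)
            if len(node.children[i].keys) == 2 * self.t - 1:
                self._split_child(node, i)
                if key > node.keys[i]:
                    i += 1
            node = node.children[i]
        bisect.insort(node.keys, key)        # this leaf is guaranteed to be non-full

    def _split_child(self, parent, i):
        """Split the full node parent.children[i] around its median key."""
        t = self.t
        full = parent.children[i]
        right = Node(leaf=full.leaf)
        median = full.keys[t - 1]
        right.keys = full.keys[t:]
        full.keys = full.keys[:t - 1]
        if not full.leaf:
            right.children = full.children[t:]
            full.children = full.children[:t]
        parent.keys.insert(i, median)        # median moves up into the parent
        parent.children.insert(i + 1, right)

tree = BTree(t=2)
for k in [10, 20, 5, 6, 12, 30, 7, 17]:
    tree.insert(k)
print(tree.search(6), tree.search(15))  # True False
```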

Q 12. Explain how a hash table works and its properties.

A hash table (or hash map) is a data structure that uses a hash function to map keys to indices within an array. It provides average-case O(1) time complexity for insertion, deletion, and search operations. This makes it incredibly fast for lookups.

Key Properties:

- Hash Function: A function that maps keys to indices in the array. A good hash function minimizes collisions.

- Collision Handling: A strategy to handle cases where multiple keys map to the same index (e.g., separate chaining, open addressing).

- Array (or Bucket Array): The underlying data structure where key-value pairs are stored.

How it works: When you want to insert a key-value pair, the hash function calculates the index, and the pair is stored at that index. To retrieve a value, the hash function calculates the same index, and the value is fetched from that location. Collision handling keeps lookups fast on average, but in the worst case, when many keys land in the same slot, a lookup degrades to O(n).
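Python’s `dict` is already a hash table, but a small separate-chaining version shows the moving parts. This sketch relies on Python’s built-in `hash()`, and the 0.75 load-factor threshold and capacity doubling are assumed choices, not requirements of the technique.

```python
class ChainedHashTable:
    """Hash table with separate chaining: each bucket is a list of (key, value) pairs."""

    def __init__(self, capacity=8):
        self.buckets = [[] for _ in range(capacity)]
        self.size = 0

    def _bucket(self, key):
        # The hash function maps the key to a bucket index.
        return self.buckets[hash(key) % len(self.buckets)]

    def put(self, key, value):
        bucket = self._bucket(key)
        for i, (k, _) in enumerate(bucket):
            if k == key:
                bucket[i] = (key, value)     # overwrite an existing key
                return
        bucket.append((key, value))          # collision? just extend the chain
        self.size += 1
        if self.size / len(self.buckets) > 0.75:
            self._resize(2 * len(self.buckets))  # keep chains short on average

    def get(self, key, default=None):
        for k, v in self._bucket(key):
            if k == key:
                return v
        return default

    def remove(self, key):
        bucket = self._bucket(key)
        for i, (k, _) in enumerate(bucket):
            if k == key:
                del bucket[i]
                self.size -= 1
                return True
        return False

    def _resize(self, new_capacity):
        old = self.buckets
        self.buckets = [[] for _ in range(new_capacity)]
        for bucket in old:
            for k, v in bucket:
                self._bucket(k).append((k, v))  # rehash into the larger array

table = ChainedHashTable()
table.put("apple", 3)
table.put("pear", 5)
table.put("apple", 4)                          # overwrites the existing key
print(table.get("apple"), table.get("plum"))   # 4 None
```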

Q 13. What is an inverted index and how is it used in search engines?

An inverted index is a data structure storing a mapping from content words to documents containing those words. It’s the cornerstone of many modern search engines.

How it works: Instead of storing documents sequentially, an inverted index stores a dictionary of words. For each word, it contains a list of documents where that word appears and optionally the frequency and positions of the word in each document. When a search query comes in, the engine uses the inverted index to quickly find documents containing the specified words.

Example: Consider documents D1 and D2. D1 contains “cat dog”, and D2 contains “dog bird”. The inverted index would look like:

cat: [D1]
dog: [D1, D2]
bird: [D2]

When a user searches for “dog”, the index immediately identifies D1 and D2 as relevant documents. This makes search much faster than scanning through all documents for each search.
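The D1/D2 example can be reproduced with a short Python sketch, assuming whitespace tokenization, lowercase terms, and boolean AND semantics for multi-word queries. Production engines also store term positions and frequencies for phrase search and ranking, which this omits; the function names are hypothetical.

```python
from collections import defaultdict

def build_index(docs):
    """Map each lowercase term to the sorted list of document IDs containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in text.lower().split():
            index[term].add(doc_id)
    return {term: sorted(ids) for term, ids in index.items()}

def search(index, query):
    """Return IDs of documents containing every query term (boolean AND)."""
    postings = [set(index.get(term, [])) for term in query.lower().split()]
    if not postings:
        return []
    return sorted(set.intersection(*postings))

docs = {"D1": "cat dog", "D2": "dog bird"}
index = build_index(docs)
print(index)                      # {'cat': ['D1'], 'dog': ['D1', 'D2'], 'bird': ['D2']}
print(search(index, "dog"))       # ['D1', 'D2']
print(search(index, "cat dog"))   # ['D1']
```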

Q 14. What is fragmentation in a file system and how is it handled?

File system fragmentation occurs when files are stored in non-contiguous locations on a storage device. This happens when files are created, deleted, and modified over time, leading to scattered file fragments.

Impact: Fragmented files slow down file access because the disk head needs to jump between different locations to read or write a single file. This can significantly impact system performance, especially on hard disk drives (HDDs).

Handling Fragmentation:

- Defragmentation (HDDs): This utility reorganizes files on the disk to place them in contiguous locations. It matters far less on solid-state drives (SSDs), which have no moving head and suffer little performance loss from fragmentation.

- Proper Disk Management: Avoid filling the drive to full capacity. Leave some free space for the OS to manage file allocation efficiently.

- Using SSDs: SSDs don’t experience the same performance problems with fragmentation as HDDs.

Example: A hard drive with many small files frequently created and deleted can become severely fragmented, leading to slower loading times for applications and files. Regular defragmentation (for HDDs) can improve performance.

Q 15. Explain the concept of data deduplication.

Data deduplication is a storage optimization technique that eliminates redundant copies of data. Imagine you have several identical copies of the same image file scattered across your hard drive. Deduplication identifies these identical copies and stores only one, replacing the others with pointers or links to that single instance. This can significantly reduce storage space and simplify data management.

There are two main granularities: file-level deduplication, which detects identical copies of entire files across different locations, and block-level (or chunk-level) deduplication, which splits data into chunks and stores each unique chunk once, so it also removes duplicated regions within and between files. Both are widely used in backup systems and cloud storage. Git offers a familiar example of the idea: it stores file contents as objects named by a hash of their content, so a file that is unchanged across many commits is stored only once, and its packfiles additionally delta-compress similar objects.

Benefits include lower storage costs, less data to transfer during backups and replication, and cheaper offsite copies for disaster recovery.
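A block-level deduplication store can be sketched in Python by keying each chunk on its SHA-256 digest. This assumes tiny fixed-size chunks for readability; real systems use much larger chunks, often with content-defined (variable-size) boundaries, and the `DedupStore` class is hypothetical.

```python
import hashlib

CHUNK_SIZE = 4  # tiny for demonstration; real systems use kilobyte-scale chunks

class DedupStore:
    """Each unique chunk is stored once, keyed by its SHA-256 digest;
    a file is just a list of digests (the 'pointers')."""

    def __init__(self):
        self.chunks = {}   # digest -> chunk bytes
        self.files = {}    # file name -> [digest, ...]

    def write(self, name, data: bytes):
        digests = []
        for i in range(0, len(data), CHUNK_SIZE):
            chunk = data[i:i + CHUNK_SIZE]
            digest = hashlib.sha256(chunk).hexdigest()
            self.chunks.setdefault(digest, chunk)   # store the chunk only if it is new
            digests.append(digest)
        self.files[name] = digests

    def read(self, name) -> bytes:
        return b"".join(self.chunks[d] for d in self.files[name])

    def stored_bytes(self) -> int:
        return sum(len(c) for c in self.chunks.values())

store = DedupStore()
store.write("photo.jpg", b"ABCDABCDEFGH")
store.write("photo_copy.jpg", b"ABCDABCDEFGH")   # identical copy
print(store.stored_bytes())   # 8 (for 24 logical bytes)
```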

Q 16. Discuss different techniques for optimizing file I/O.

Optimizing file I/O (Input/Output) is crucial for application performance, especially when dealing with large files. Key techniques include:

- Sequential Access: Read and write data sequentially whenever possible. This significantly reduces seek time, the time it takes for the read/write head to move to a specific location on the disk. Think of reading a book – it’s much faster to read page after page sequentially than to jump randomly between chapters.

- Buffering: Use buffers to reduce the number of disk operations. Buffers hold data temporarily in memory and write it to disk in larger batches, cutting the overhead of many small writes. It is like a courier who collects parcels and delivers them in one trip rather than making a separate trip for each.

- Asynchronous I/O: Overlap I/O operations with computation. While waiting for data to be read from or written to disk, your program can perform other tasks. This improves overall throughput.

- Caching: Employ operating system or application-level caching mechanisms to store frequently accessed data in memory for faster retrieval (discussed further in the next question).

- Data Compression: Compress data before storing it to reduce the amount of data that needs to be read or written, trading some CPU time for less I/O. ZIP files are a good example of this technique in action.

- Using appropriate file systems: Choose a file system suited to your workload. For example, XFS is often chosen for very large files and highly parallel I/O, while Btrfs is chosen for features such as snapshots and data checksumming.
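The effect of buffering is easy to observe in Python by counting how many write calls reach the underlying device. In this sketch a hypothetical `CountingRaw` object stands in for the disk, and the 4096-byte buffer size is an arbitrary choice; in a real program the operating system’s page cache adds another layer of buffering on top of this.

```python
import io

class CountingRaw(io.RawIOBase):
    """Fake raw device that records how many write calls reach it."""

    def __init__(self):
        super().__init__()
        self.calls = 0
        self.data = bytearray()

    def writable(self):
        return True

    def write(self, b):
        self.calls += 1
        self.data += bytes(b)
        return len(b)

def write_records(target, n=1000, record=b"0123456789"):
    for _ in range(n):
        target.write(record)          # many small writes

unbuffered = CountingRaw()
write_records(unbuffered)             # every small write hits the device

raw = CountingRaw()
buffered = io.BufferedWriter(raw, buffer_size=4096)
write_records(buffered)               # small writes collect in memory
buffered.flush()                      # push the final partial buffer to the device

print(unbuffered.calls, raw.calls)    # 1000 versus a handful
```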

Q 17. How does caching improve file access performance?

Caching dramatically accelerates file access by storing frequently accessed data in a faster storage medium, typically RAM (Random Access Memory). When a file or a portion of a file is requested, the system first checks the cache. If the data is present (a ‘cache hit’), it’s returned from memory without touching the slower device. If not (a ‘cache miss’), the data is read from the slower storage (like a hard drive or SSD) and copied into the cache for future use. The fraction of requests served from the cache, the ‘hit ratio’, is a key performance indicator.

Imagine a library – the cache is like a readily accessible collection of frequently borrowed books near the front desk. Searching for these books is much faster than navigating the entire library.

Caching strategies vary; some commonly used techniques are Least Recently Used (LRU) and Least Frequently Used (LFU), algorithms that determine which data to evict from the cache when it becomes full. Modern operating systems and databases employ sophisticated caching mechanisms for optimal performance.
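An LRU cache can be sketched in Python with `collections.OrderedDict`, which remembers access order and can move a key to the end in constant time. The `load` callback is a hypothetical stand-in for a read from slow storage; for memoizing function calls, Python’s standard library already provides `functools.lru_cache`.

```python
from collections import OrderedDict

class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity, load):
        self.capacity = capacity
        self.load = load              # fetches from slow storage on a miss
        self.entries = OrderedDict()  # oldest entry first, most recent last
        self.hits = self.misses = 0

    def get(self, key):
        if key in self.entries:
            self.hits += 1
            self.entries.move_to_end(key)       # mark as most recently used
            return self.entries[key]
        self.misses += 1
        value = self.load(key)
        self.entries[key] = value
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)    # evict the least recently used entry
        return value

    def hit_ratio(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0

cache = LRUCache(capacity=2, load=lambda block: f"contents of block {block}")
for block in [1, 2, 1, 3, 1, 2]:
    cache.get(block)
print(cache.hits, cache.misses, list(cache.entries))  # 2 4 [1, 2]
```

When block 3 arrives, block 2 is evicted because block 1 was used more recently; block 3 is then evicted when block 2 is requested again.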

Q 18. What are the challenges of managing large files and datasets?

Managing large files and datasets presents several challenges:

- Storage Capacity: Requires substantial storage infrastructure, often necessitating cloud storage or specialized storage solutions.

- Processing Power: Processing large datasets demands significant computational resources. Parallel processing and distributed computing techniques often become necessary.

- Data Backup and Recovery: Backup and restoration of large datasets can be time-consuming and resource-intensive. Incremental backups and efficient data replication are crucial.

- Data Integrity: Maintaining data integrity across large datasets is paramount. Techniques such as checksums and error detection codes are vital.

- Data Security: Protecting large datasets from unauthorized access, modification, or deletion requires robust security measures.

- Scalability: The system must be scalable to accommodate future data growth. This often involves using distributed file systems and cloud-based solutions.

- Data Access and Retrieval: Efficiently accessing and retrieving specific information from massive datasets necessitates well-designed indexing and query mechanisms.

Q 19. How do you handle file locking and concurrency issues?

File locking and concurrency control are vital for preventing data corruption when multiple processes or threads access the same file simultaneously. Different locking mechanisms address this:

- Exclusive Locks: Only one process can access the file at a time. This prevents conflicts but can lead to performance bottlenecks if one process holds the lock for an extended period.

- Shared Locks: Multiple processes can read the file concurrently but cannot modify it. This allows for better concurrency for read-only operations.

Operating systems provide APIs for implementing these locks. In databases, transaction management systems handle concurrency automatically, ensuring data consistency. For example, a simple banking application requires meticulous concurrency control to prevent overdraft issues when multiple users access the same account simultaneously. If not managed properly, this can lead to inconsistent and incorrect account balances.
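On Unix-like systems, the shared and exclusive locks described above map onto the `flock()` system call, exposed in Python as `fcntl.flock`. This sketch is POSIX-only (it will not run on Windows), and flock locks are advisory: they only coordinate processes that also call `flock`, so a program that ignores them can still write to the file. The helper names are hypothetical.

```python
import fcntl
import os
import tempfile

def try_lock(f, exclusive):
    """Attempt a non-blocking flock(); return True if the lock was granted."""
    mode = fcntl.LOCK_EX if exclusive else fcntl.LOCK_SH
    try:
        fcntl.flock(f.fileno(), mode | fcntl.LOCK_NB)
        return True
    except OSError:   # typically BlockingIOError: a conflicting lock is held
        return False

def demo():
    fd, path = tempfile.mkstemp()
    os.close(fd)
    results = []
    try:
        with open(path, "rb") as reader1, open(path, "rb") as reader2, \
             open(path, "r+b") as writer:
            results.append(try_lock(reader1, exclusive=False))  # shared lock granted
            results.append(try_lock(reader2, exclusive=False))  # shared locks coexist
            results.append(try_lock(writer, exclusive=True))    # refused: readers hold locks
            fcntl.flock(reader1.fileno(), fcntl.LOCK_UN)
            fcntl.flock(reader2.fileno(), fcntl.LOCK_UN)
            results.append(try_lock(writer, exclusive=True))    # granted after release
    finally:
        os.remove(path)
    return results

print(demo())  # [True, True, False, True]
```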

Q 20. Explain the concept of ACID properties in databases.

ACID properties are a set of criteria for ensuring that database transactions are processed reliably. They guarantee data integrity and consistency, even in the event of failures. The acronym stands for:

- Atomicity: The entire transaction is treated as a single, indivisible unit. Either all changes are applied, or none are. Imagine a bank transfer – either both accounts are updated correctly, or neither is.

- Consistency: The transaction maintains the database’s consistency constraints. The database remains in a valid state before and after the transaction.

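- Isolation: Concurrent transactions are isolated from each other. At the strictest (serializable) level, the result is the same as if the transactions had run one after another; weaker isolation levels relax this guarantee in exchange for more concurrency.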

- Durability: Once a transaction is committed, its changes persist even if a system failure occurs. The data changes survive power outages and crashes.

These properties are crucial for building reliable and trustworthy database applications. Without them, data corruption and inconsistencies could easily arise, leading to significant problems in applications from simple accounting systems to large-scale financial trading platforms.
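Atomicity can be demonstrated with SQLite via Python’s `sqlite3` module, used here as a stand-in for any transactional database. The hypothetical `accounts` table has a `CHECK` constraint that forbids negative balances; the transfer credits the destination first, so when the debit violates the constraint, the connection’s context manager rolls back the already-applied credit.

```python
import sqlite3

def make_bank():
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE accounts (name TEXT PRIMARY KEY, "
        "balance INTEGER NOT NULL CHECK (balance >= 0))"
    )
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])
    conn.commit()
    return conn

def transfer(conn, src, dst, amount):
    with conn:  # commits if the block succeeds, rolls back if it raises
        conn.execute("UPDATE accounts SET balance = balance + ? WHERE name = ?", (amount, dst))
        conn.execute("UPDATE accounts SET balance = balance - ? WHERE name = ?", (amount, src))

def balances(conn):
    return dict(conn.execute("SELECT name, balance FROM accounts ORDER BY name"))

conn = make_bank()
transfer(conn, "alice", "bob", 30)
print(balances(conn))  # {'alice': 70, 'bob': 80}
try:
    transfer(conn, "alice", "bob", 500)   # the debit would make alice negative
except sqlite3.IntegrityError as exc:
    print("rolled back:", exc)
print(balances(conn))  # {'alice': 70, 'bob': 80}
```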

Q 21. What is a transaction log and why is it important?

A transaction log is a sequential record of all database transactions. It acts as a safety net, providing a mechanism to recover the database to a consistent state in case of failures. Each transaction’s changes are recorded in the log before they are applied to the main database. This ensures that even if the database crashes before the changes reach the data files, the log can be used during recovery to redo committed changes and to undo changes from transactions that never committed.

Imagine a detailed notebook where every change to a document is logged step-by-step. If the document is accidentally deleted, the notebook provides a perfect record to reconstruct it completely. Similarly, the transaction log allows the database to recover accurately and reliably.

The transaction log is essential for ensuring data durability (the ‘D’ in ACID) and enables database recovery after various failures, including system crashes, power outages, and media errors. Without it, data loss is a significant risk, especially for mission-critical applications.
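A toy redo log in Python shows why writing to the log first makes recovery possible. The record format and function names are hypothetical, the log is a plain list rather than a file flushed to stable storage, and recovery replays the whole log from an empty state; real systems add checkpoints, undo information, and `fsync`-style flushes.

```python
def apply_with_log(log, db, txid, changes, crash_before_apply=False):
    """Write-ahead: append every change and a COMMIT record to the log
    before touching the database itself."""
    log.append(("BEGIN", txid))
    for key, value in changes.items():
        log.append(("SET", txid, key, value))
    log.append(("COMMIT", txid))
    if crash_before_apply:
        return                      # simulate a crash after logging, before applying
    db.update(changes)

def recover(log):
    """Rebuild state by redoing the changes of committed transactions only."""
    committed = {rec[1] for rec in log if rec[0] == "COMMIT"}
    db = {}
    for rec in log:
        if rec[0] == "SET" and rec[1] in committed:
            _, _, key, value = rec
            db[key] = value
    return db

log, db = [], {}
apply_with_log(log, db, 1, {"alice": 70, "bob": 80})
apply_with_log(log, db, 2, {"alice": 40, "carol": 30}, crash_before_apply=True)
log += [("BEGIN", 3), ("SET", 3, "bob", 0)]   # crash before tx 3 logged its COMMIT
print(db)            # {'alice': 70, 'bob': 80}
print(recover(log))  # {'alice': 40, 'bob': 80, 'carol': 30}
```

Transaction 2 is recovered because its COMMIT record reached the log, while transaction 3 is ignored because it never committed.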

Q 22. Describe different data compression techniques.

Data compression techniques reduce the size of data, either without losing any information (lossless) or by discarding some information in a way that is acceptable for the application (lossy). This improves storage efficiency and transmission speeds. Let’s look at some common methods:

- Run-Length Encoding (RLE): This simple technique replaces consecutive repeating characters with a count and the character itself. For example, ‘AAABBBCC’ becomes ‘3A3B2C’. It’s effective for data with long runs of identical values but less so for complex data.

- Huffman Coding: A variable-length coding scheme that assigns shorter codes to more frequent symbols and longer codes to less frequent ones. Think of it like a specialized dictionary where common words get shorter abbreviations. This is lossless.

- Lempel-Ziv (LZ) Algorithms: These techniques identify repeating patterns in data and replace them with references to their earlier occurrences. Many popular formats build on them; DEFLATE, used by gzip and ZIP, combines LZ77 with Huffman coding. This is lossless.

- Lossy Compression: Unlike lossless methods, these discard some information to achieve higher compression ratios. JPEG image compression and MP3 audio compression are prime examples. They exploit characteristics of human perception to remove less noticeable data.

Choosing the right technique depends on the type of data and the acceptable level of information loss. For archival data, lossless compression is crucial; for multimedia, a lossy approach might be preferred to achieve smaller file sizes.
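The RLE example above can be checked with a short Python sketch (it assumes the input contains no digits, since digits are used for the counts). The second part uses the standard `zlib` module, which implements DEFLATE, to confirm that LZ-based compression is lossless on repetitive input.

```python
import zlib

def rle_encode(text):
    """Run-length encode, e.g. 'AAABBBCC' -> '3A3B2C'. Assumes no digits in text."""
    out, i = [], 0
    while i < len(text):
        j = i
        while j < len(text) and text[j] == text[i]:
            j += 1                    # extend the current run
        out.append(f"{j - i}{text[i]}")
        i = j
    return "".join(out)

def rle_decode(encoded):
    """Invert rle_encode; counts may span several digits (e.g. '12A')."""
    out, count = [], ""
    for ch in encoded:
        if ch.isdigit():
            count += ch
        else:
            out.append(ch * int(count))
            count = ""
    return "".join(out)

print(rle_encode("AAABBBCC"))                   # 3A3B2C

data = b"the quick brown fox " * 100
packed = zlib.compress(data)                    # DEFLATE: LZ77 + Huffman coding
print(zlib.decompress(packed) == data)          # True
print(len(data), len(packed))                   # original vs. compressed size
```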

Q 23. Explain the difference between logical and physical file structures.

The distinction between logical and physical file structures lies in how the data is perceived by the user versus how it’s actually stored on the physical medium.

- Logical File Structure: This describes how the data is organized from the user’s perspective. It concerns the way data is accessed and manipulated. Examples include sequential, indexed sequential, direct, and relative files. A sequential file is like a book; you read it from beginning to end. An indexed sequential file adds an index to speed up access to specific parts, like a book’s table of contents. Direct files allow random access to specific records using a key value, like accessing a specific song on a playlist.

- Physical File Structure: This refers to the actual arrangement of data on the storage device (hard drive, SSD, etc.). This includes details like block allocation, file allocation tables (FAT), and the organization of data within sectors and tracks. The user doesn’t directly interact with these aspects; the operating system handles the low-level details.

Consider a word processor document. Logically, you see paragraphs, sections, and formatting. Physically, the data might be fragmented across multiple disk sectors, and the operating system manages this fragmentation transparently.

Q 24. What are the implications of choosing different data types for indexing?

The choice of data type for indexing significantly impacts performance and storage space. Different data types have varying sizes and require different comparison operations.

- Integer Keys: Efficient for numerical indexing and quick lookups using binary search. They require less storage compared to strings.

- String Keys: More versatile, but they take more storage and are slower to compare than integers. Techniques such as prefix compression within index pages, or indexing a prefix or a hash of very long strings, can reduce this cost.

- Composite Keys: Combining multiple fields (e.g., a combination of last name, first name, and date of birth) improves uniqueness and can optimize querying for specific combinations. However, index entries become larger, and the index helps most when a query constrains the leading column(s) of the key.

For example, indexing a library catalog by book ID (integer) offers faster searches than indexing by book title (string). However, a composite key including author’s name and year of publication might be necessary for efficient retrieval if the library has multiple books with the same title.
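A composite index behaves like a list of tuples sorted column by column, which is why it helps most when a query fixes the leading columns. This Python sketch models a hypothetical index on (last name, first name, birth year) as a sorted list and answers leftmost-prefix lookups with `bisect`; real B-tree indexes follow the same ordering rule.

```python
import bisect

# Hypothetical composite index on (last_name, first_name, birth_year).
# Python compares tuples column by column, just like a composite index key.
index = sorted([
    ("Smith", "Anna", 1990),
    ("Jones", "Bob", 1985),
    ("Smith", "Carl", 1978),
    ("Brown", "Dana", 2001),
    ("Smith", "Anna", 1965),
])

def prefix_lookup(index, *prefix):
    """Return entries whose leading columns equal `prefix` (a leftmost-prefix search)."""
    start = bisect.bisect_left(index, prefix)   # shorter tuple sorts before its extensions
    end = start
    while end < len(index) and index[end][:len(prefix)] == prefix:
        end += 1
    return index[start:end]

print(prefix_lookup(index, "Smith", "Anna"))  # [('Smith', 'Anna', 1965), ('Smith', 'Anna', 1990)]
```

A query on first name alone cannot use this ordering, because first names are not sorted across different last names.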

Q 25. How do you troubleshoot performance issues related to file systems or databases?

Troubleshooting performance issues in file systems or databases involves a systematic approach. Here’s a strategy:

- Identify the Bottleneck: Use system monitoring tools (e.g., top, iostat, database monitoring tools) to pinpoint slow areas. Is it disk I/O, CPU usage, network latency, or memory limitations?

- Analyze Disk Activity: Check for high disk I/O wait times, which might indicate insufficient disk resources, inefficient indexing, or fragmentation. Defragmentation can help in some cases.

- Examine Query Performance: For databases, examine slow-running queries. Optimize queries, add indexes, or tune database settings.

- Check Memory Usage: Insufficient memory can lead to excessive swapping, significantly slowing down operations. Increase memory allocation if possible.

- Review File System Configuration: Verify file system settings, such as block size and allocation strategies. Poorly chosen settings can hurt performance.

- Network Performance: If accessing data across a network, assess network latency and bandwidth. Network issues can create slowdowns.

- Logging and Monitoring: Comprehensive system and application logs are invaluable for tracking down errors and performance issues.

Remember to prioritize the steps based on the observed bottlenecks. Sometimes, simple changes like adding an index to a database table can dramatically improve query speed. Other times, replacing a failing hard drive may be necessary.

Q 26. Explain the concept of RAID and its different levels.

RAID (Redundant Array of Independent Disks) is a technology that combines multiple hard drives to improve performance, reliability, or both. Different RAID levels offer various trade-offs:

- RAID 0 (Striping): Data is split across multiple disks, improving read/write speeds. However, it offers no redundancy, meaning a single disk failure results in complete data loss.

- RAID 1 (Mirroring): Data is mirrored across multiple disks. High redundancy and good read performance, but usable capacity equals that of a single disk (half the raw capacity in a two-disk mirror).

- RAID 5 (Striping with Parity): Data is striped across multiple disks, with parity information distributed across all disks. Provides good performance and redundancy; one disk can fail without data loss. However, writes are slower than with RAID 0 or 1 because each write must also update parity.

- RAID 6 (Striping with Double Parity): Similar to RAID 5, but with double parity, allowing two simultaneous disk failures without data loss. Even better redundancy but more complex and slower writes.

- RAID 10 (Mirroring and Striping): Stripes data across mirrored pairs of disks. Offers both high performance and redundancy, at the cost of half the raw capacity.

The choice of RAID level depends on the application’s requirements for performance, redundancy, and storage capacity. A database server might benefit from RAID 10 for high performance and availability, while a less critical application might opt for RAID 5 for a balance between performance and redundancy.
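The parity idea behind RAID 5 fits in a few lines of Python: the parity block is the byte-wise XOR of the data blocks in a stripe, so any single missing block equals the XOR of all the others. This is a simplification with tiny hypothetical blocks; real RAID 5 works on much larger stripe units and rotates the parity block across disks, and RAID 6’s second parity uses a different calculation not shown here.

```python
from functools import reduce

def xor_blocks(*blocks):
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

# One stripe across four disks: three data blocks plus one parity block.
d0, d1, d2 = b"DATA", b"MORE", b"BITS"
parity = xor_blocks(d0, d1, d2)

# The disk holding d1 fails: XOR of the surviving blocks (including parity) rebuilds it.
rebuilt = xor_blocks(d0, d2, parity)
print(rebuilt)  # b'MORE'
```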

Q 27. Describe different techniques for data backup and recovery.

Data backup and recovery techniques are crucial for data protection. Key methods include:

- Full Backup: A complete copy of all data at a specific point in time. It’s time-consuming but provides a complete recovery point. Think of this like photocopying an entire textbook.

- Incremental Backup: Only backs up data that has changed since the last backup of any kind (full or incremental). Much faster than full backups but requires a full backup as a starting point, and a restore needs the full backup plus every incremental taken since. Like only copying the new pages added to your textbook since the last photocopy.

- Differential Backup: Backs up all data that has changed since the last full backup. Each differential grows until the next full backup, so it is usually larger and slower to create than an incremental, but a restore needs only the full backup plus the latest differential. It’s like photocopying all the pages that are new since the original full photocopy.

- Cloud Backup: Stores backups on remote servers, providing offsite protection from physical disasters. It adds a layer of redundancy and convenience.

- Versioning: Maintaining multiple versions of backups allows restoring data to previous states. It gives you the freedom to ‘undo’ changes in your data.

Effective backup strategies usually employ a combination of methods (e.g., full backup followed by incremental backups). Regular testing of the recovery process is crucial to ensure that backups are usable and the recovery plan works as intended.
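The difference between incremental and differential backups comes down to which reference time you compare against. This Python sketch assumes file modification times are the change signal (real tools may also use change journals or block-level tracking), and the file names and times are made up for illustration.

```python
def changed_since(files, since):
    """Names of files modified after `since`. `files` maps name -> modification time."""
    return sorted(name for name, mtime in files.items() if mtime > since)

files = {"a.txt": 1, "b.txt": 1, "c.txt": 1}
full_time = 2                                    # full backup copies all three files

files["a.txt"] = 3                               # a.txt edited
incr_1 = changed_since(files, full_time)         # since last backup (the full): ['a.txt']
diff_1 = changed_since(files, full_time)         # since last full: ['a.txt']
last_backup_time = 4                             # both kinds of backup taken at t=4

files["b.txt"] = 5                               # b.txt edited
incr_2 = changed_since(files, last_backup_time)  # only the newest change
diff_2 = changed_since(files, full_time)         # everything since the full backup

# Restore: full + incr_1 + incr_2, versus full + diff_2 only.
print(incr_2, diff_2)  # ['b.txt'] ['a.txt', 'b.txt']
```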

Q 28. What are some common security considerations related to file systems and databases?

Security considerations for file systems and databases are paramount. Key aspects include:

- Access Control: Implementing robust access control mechanisms (e.g., user permissions, role-based access control) restricts access to sensitive data based on users’ roles and privileges. This ensures ‘need-to-know’ access only.

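- Data Encryption: Encrypting data at rest (on storage) and in transit (over networks) keeps it unreadable to anyone who steals the storage media or intercepts the traffic without the keys. Encryption at rest generally offers little protection against an attacker who compromises a running system that already has the keys loaded, so it complements access control rather than replacing it. This is like using a secret code to protect the contents of your files.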

- Regular Security Audits: Regularly auditing systems for vulnerabilities and misconfigurations is essential for proactive security. It’s like having a regular check-up to maintain your system’s health.

- Input Validation: Validating user input, and above all using parameterized queries instead of building SQL by string concatenation, prevents SQL injection and similar attacks. This stops malicious users from turning data into executable commands.

- Firewall Protection: Network firewalls prevent unauthorized access to file servers and databases from external sources. They act as gatekeepers to your system.

- Regular Updates and Patching: Timely application of security updates and patches mitigates known vulnerabilities. Staying updated is like getting vaccinations for your system.
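Parameterized queries are the standard defense against SQL injection. The sketch below uses Python's built-in sqlite3 module and an in-memory table (the table and the malicious string are made up for illustration) to contrast splicing input into SQL text with passing it through a `?` placeholder.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", "admin"), ("bob", "viewer")])

user_input = "nobody' OR '1'='1"  # attacker-controlled value

# Unsafe: the input becomes part of the SQL text, so its quotes change the query's logic.
unsafe_sql = f"SELECT name FROM users WHERE name = '{user_input}'"
unsafe_rows = conn.execute(unsafe_sql).fetchall()

# Safe: the ? placeholder binds the input as a plain value, never as SQL.
safe_rows = conn.execute("SELECT name FROM users WHERE name = ?", (user_input,)).fetchall()

print(unsafe_rows)  # [('alice',), ('bob',)]: every row leaks
print(safe_rows)    # []: no user is literally named "nobody' OR '1'='1"
```

Input validation is still worthwhile, but binding parameters removes the injection path even for inputs that validation misses.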

A layered security approach is crucial, combining multiple security measures to create a robust defense against threats.

Key Topics to Learn for Knowledge of File Structures and Indexing Systems Interview

- File Organization: Understand different file organizations like sequential, indexed sequential, direct, and hashed files. Consider their strengths and weaknesses in various applications.

- Indexing Techniques: Explore various indexing methods such as B-trees, B+ trees, inverted indexes, and hash indexes. Be prepared to discuss their performance characteristics and suitability for different data types and query patterns.

- Data Structures: Review fundamental data structures like linked lists, trees, and hash tables, as they form the basis of many file structures and indexing systems. Analyze their time and space complexities.

- Database Systems: Understand how file structures and indexing systems are utilized within database management systems (DBMS) to efficiently store and retrieve data. Consider the role of indexes in optimizing query performance.

- Search Engines: Explore how indexing systems are crucial for efficient searching in large-scale applications like search engines. Discuss concepts like term frequency-inverse document frequency (TF-IDF) and relevance ranking.

- Space Management: Understand techniques for efficient space management in file systems, including file allocation methods and strategies for handling file fragmentation.

- Performance Analysis: Be prepared to discuss how to analyze and optimize the performance of file structures and indexing systems, considering factors like I/O operations, search time, and memory usage.

- Practical Application: Consider real-world examples of how file structures and indexing systems are used in various applications, such as document management, data warehousing, and e-commerce.
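For the direct (relative) file organization in the first item, a record's location is computed rather than searched for: with fixed-length records, record number n starts at byte offset n × record size. The sketch below assumes a hypothetical 24-byte layout (a 4-byte integer id plus a 20-byte name) packed with Python's struct module; names longer than 20 bytes would be truncated.

```python
import struct
import tempfile

# Hypothetical fixed-length layout: little-endian 4-byte unsigned id + 20-byte name field.
RECORD = struct.Struct("<I20s")


def write_record(f, slot: int, rec_id: int, name: str) -> None:
    """Write a record at its computed offset; struct pads short names with NUL bytes."""
    f.seek(slot * RECORD.size)
    f.write(RECORD.pack(rec_id, name.encode("utf-8")))


def read_record(f, slot: int) -> tuple[int, str]:
    """Read a record with a single seek; earlier records are never scanned."""
    f.seek(slot * RECORD.size)
    rec_id, raw = RECORD.unpack(f.read(RECORD.size))
    return rec_id, raw.rstrip(b"\x00").decode("utf-8")


with tempfile.TemporaryFile() as f:
    write_record(f, 0, 101, "alice")
    write_record(f, 2, 103, "carol")  # slot 1 stays an all-zero gap
    carol = read_record(f, 2)
    empty = read_record(f, 1)

print(carol)  # (103, 'carol')
print(empty)  # (0, '')
```

A hashed file applies the same idea, except that the slot number is derived from a hash of the record's key instead of being supplied directly.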
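For the inverted indexes listed under Indexing Techniques, the core idea is a map from each term to the set of documents containing it (its posting list); a multi-term AND query intersects posting lists instead of scanning every document. This is a minimal in-memory Python sketch; the regex tokenizer and sample documents are assumptions, and production indexes add stemming, term positions, and compressed on-disk posting lists.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase and split on anything that is not a letter or digit."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_inverted_index(docs: dict[int, str]) -> dict[str, set[int]]:
    """Map each term to the set of document ids containing it (its posting list)."""
    index: dict[str, set[int]] = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return dict(index)


def search_all(index: dict[str, set[int]], query: str) -> set[int]:
    """AND query: documents that contain every query term."""
    postings = [index.get(term, set()) for term in tokenize(query)]
    return set.intersection(*postings) if postings else set()


docs = {
    1: "B+ trees keep keys sorted",
    2: "Hash indexes support equality lookups",
    3: "B+ trees support range scans",
}
index = build_inverted_index(docs)
print(search_all(index, "trees support"))  # {3}
```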
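For the Database Systems item, SQLite's `EXPLAIN QUERY PLAN` makes the effect of an index visible. The sketch below uses Python's built-in sqlite3 module and a made-up `orders` table to show the plan for the same query before and after creating an index. The exact plan wording varies between SQLite versions, so treat the comments as typical rather than guaranteed.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer, total) VALUES (?, ?)",
    [(f"customer{i % 100}", i * 1.5) for i in range(1000)],
)

QUERY = "SELECT id, total FROM orders WHERE customer = ?"


def plan(sql: str) -> list[str]:
    """Return the human-readable detail column of each EXPLAIN QUERY PLAN row."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, ("customer42",)).fetchall()
    return [row[-1] for row in rows]


before = plan(QUERY)  # typically a full table scan, e.g. ['SCAN orders']
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer)")
after = plan(QUERY)   # typically a SEARCH using idx_orders_customer
print(before)
print(after)
```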
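For the Search Engines item, TF-IDF weights a term by how often it appears in a document, discounted by how many documents contain it. The sketch below uses one common variant, tf = count / document length and idf = ln(N / df); many systems use smoothed or log-scaled variants, so the numbers are illustrative. Documents are ranked by summing the weights of the query terms.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def tf_idf(docs: list[str]) -> list[dict[str, float]]:
    """Return one {term: weight} dict per document."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    df = Counter(term for tokens in tokenized for term in set(tokens))  # document frequency
    scores = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens)
        scores.append({
            term: (count / total) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return scores


def rank(docs: list[str], query: str) -> list[int]:
    """Document indexes ordered by the summed TF-IDF weight of the query terms."""
    scores = tf_idf(docs)
    terms = tokenize(query)
    totals = [sum(s.get(t, 0.0) for t in terms) for s in scores]
    return sorted(range(len(docs)), key=lambda i: totals[i], reverse=True)


docs = ["the b tree index", "the hash index", "the inverted index for search"]
print(rank(docs, "hash index"))  # [1, 0, 2]
```

Because "the" and "index" occur in every document, their idf is ln(1) = 0, so only "hash" contributes to the ranking; this is how TF-IDF suppresses terms that do not distinguish documents.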
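For the Performance Analysis item, a back-of-the-envelope count of block reads is often what an interviewer is looking for. The sketch below compares a linear scan, binary search over a sorted file, and a B+ tree lookup. It assumes one read per node or data block, full nodes with a fanout of 200, and 100 records per block; these numbers are illustrative, not measurements.

```python
def btree_height(n_keys: int, fanout: int) -> int:
    """Smallest h with fanout**h >= n_keys: levels read per lookup, assuming full nodes."""
    height, reach = 1, fanout
    while reach < n_keys:
        reach *= fanout
        height += 1
    return height


def block_reads(n_records: int, records_per_block: int, fanout: int) -> dict[str, int]:
    n_blocks = -(-n_records // records_per_block)  # ceiling division
    return {
        "linear scan (worst case)": n_blocks,
        "binary search on sorted file": (n_blocks - 1).bit_length(),  # ceil(log2(n_blocks))
        "B+ tree lookup": btree_height(n_records, fanout),
    }


for method, reads in block_reads(10_000_000, 100, 200).items():
    print(f"{method}: {reads} block reads")
```

For 10 million records this gives 100,000, 17, and 4 reads respectively. In practice the upper levels of a B+ tree are often cached in memory, so the physical I/O per lookup can be even lower.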

Next Steps

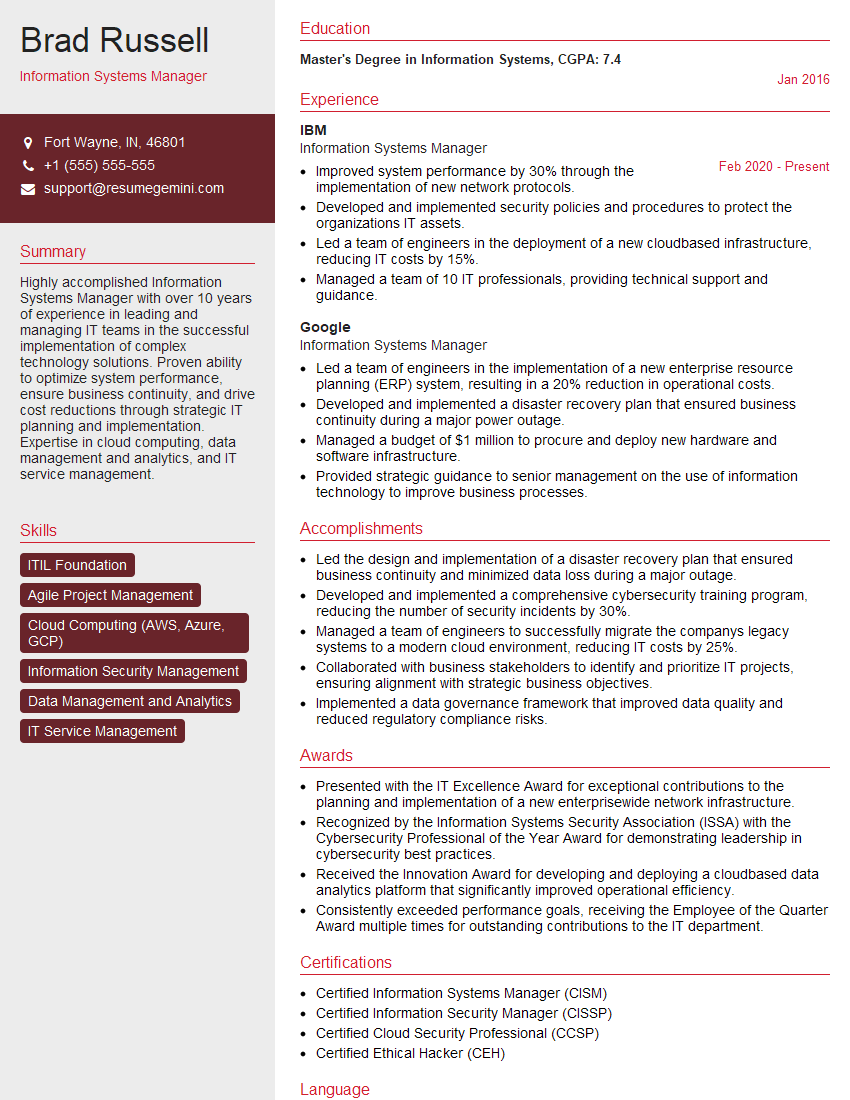

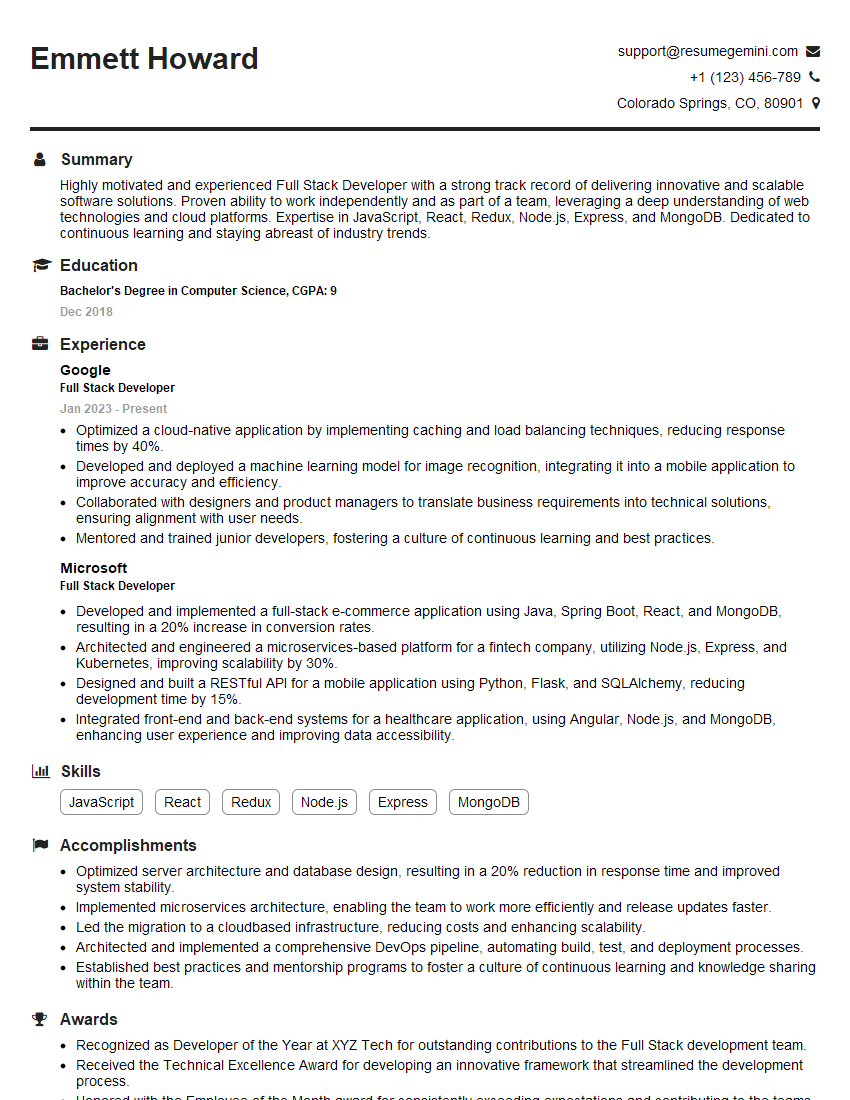

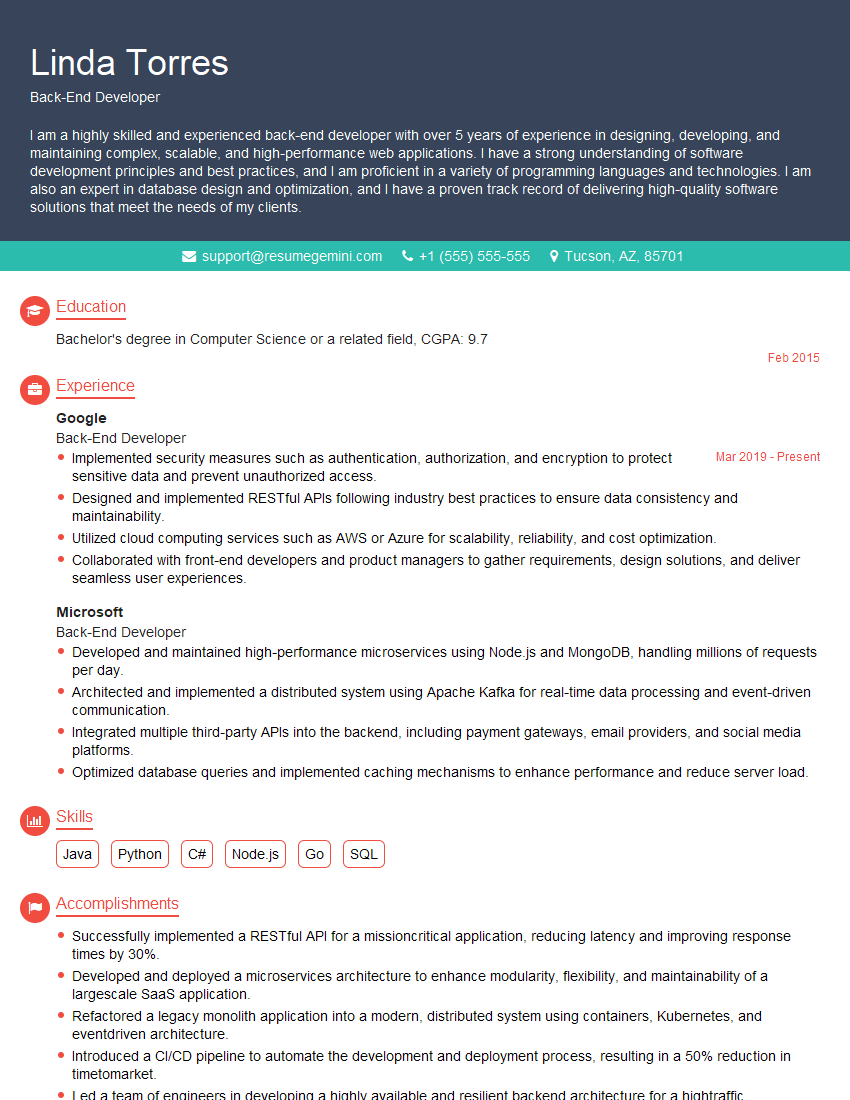

Mastering Knowledge of File Structures and Indexing Systems is crucial for career advancement in areas like database administration, software engineering, and data science. A strong understanding of these concepts demonstrates a solid foundation in computer science and data management, making you a highly desirable candidate. To maximize your job prospects, create an ATS-friendly resume that highlights your skills and experience effectively. ResumeGemini is a trusted resource to help you build a professional and impactful resume tailored to your specific skills and experience. Examples of resumes tailored to Knowledge of File Structures and Indexing Systems are available to guide you. Take the next step towards your dream job – build a compelling resume with ResumeGemini today!
