The thought of an interview can be nerve-wracking, but the right preparation can make all the difference. Explore this comprehensive guide to Knowledge of library automation systems interview questions and gain the confidence you need to showcase your abilities and secure the role.

Questions Asked in Knowledge of library automation systems Interview

Q 1. Describe your experience with library automation systems (ILS).

My experience with Integrated Library Systems (ILS) spans over ten years, encompassing roles from system administrator to trainer and consultant. I’ve been involved in every stage of the ILS lifecycle, from initial needs assessment and vendor selection to implementation, customization, data migration, and ongoing support. This has included hands-on work with various systems, managing large datasets, and collaborating closely with library staff to ensure effective system integration and user adoption. I’ve successfully resolved numerous critical system issues, optimized workflows, and implemented new features to improve overall library operations.

For example, in my previous role, I led the migration of a university library’s ILS to a new platform. This involved careful planning, data cleansing, and extensive staff training. The successful completion of this project resulted in a significant improvement in the library’s efficiency and user experience. I’m adept at not just the technical aspects, but also the change management necessary to ensure a smooth transition for library staff and patrons.

Q 2. What library automation systems are you familiar with (e.g., Koha, Evergreen, Alma, Sierra)?

I’m proficient in several leading ILS platforms, including Koha (an open-source system known for its flexibility), Evergreen (another open-source option with strong community support), Alma (a comprehensive cloud-based system from Ex Libris, favored for its advanced features), and Sierra (a robust system from Innovative Interfaces, known for its stability and extensive functionality). My experience extends beyond just using these systems; I understand their underlying architectures, data models, and the nuances of their different modules, including acquisitions, cataloging, circulation, and serials management. This allows me to efficiently assess the strengths and weaknesses of each platform based on specific library needs.

Q 3. Explain the MARC record structure and its importance in library automation.

The MARC (Machine-Readable Cataloging) record is a standardized format for representing bibliographic information in a machine-readable way. Think of it as a highly structured database record, containing many data fields describing a library item. These fields are organized into Leader, Directory, and Data fields, using specific tags to identify the type of information. For example, tag 245 represents the title statement, 100 the main entry, and 020 the ISBN.

Its importance in library automation is paramount because it ensures interoperability between different ILS platforms and allows for consistent searching and retrieval of library materials across systems and databases worldwide. Without this standardization, searching and sharing bibliographic data would be extremely complex and inefficient. Imagine trying to find a book if every library used a different way of describing it!

Q 4. How do you ensure data integrity in a library automation system?

Ensuring data integrity in a library automation system is crucial for accurate searching, reporting, and overall system reliability. My approach involves a multi-faceted strategy:

- Data validation rules: Implementing robust data validation rules during data entry prevents incorrect or inconsistent information from being entered into the system. For instance, ensuring ISBNs are correctly formatted.

- Regular data backups and recovery procedures: Implementing a reliable backup and disaster recovery plan is essential to protect against data loss due to hardware failure, software errors, or cyberattacks.

- Data cleansing and deduplication: Periodically reviewing and cleaning the database to remove duplicate records and correct inconsistencies is crucial. This could involve using specialized tools or scripts.

- Access control and permissions: Restricting access to the system and implementing appropriate user permissions helps prevent accidental or malicious data modification.

- Automated data quality checks: Utilizing automated tools to regularly monitor and report on data quality issues is a proactive measure.

- Regular system maintenance and updates: Keeping the ILS software up-to-date and performing regular system maintenance is vital to ensuring optimal performance and stability.

By employing this comprehensive approach, I can significantly minimize the risk of data corruption and maintain the accuracy and reliability of the library’s bibliographic data.

Q 5. Describe your experience with metadata schemas (e.g., Dublin Core, MODS).

I have extensive experience working with various metadata schemas, including Dublin Core and MODS (Metadata Object Description Schema). Dublin Core is a simple, widely adopted schema that uses a set of fifteen elements to describe resources, such as title, creator, and subject. It’s ideal for its simplicity and ease of use, especially in less complex situations. MODS, on the other hand, is a more comprehensive and detailed schema providing more granular control over metadata elements, allowing for richer descriptions of resources. I choose the appropriate schema based on the specific needs of the project.

For example, when creating metadata for a digital library, MODS might be preferable due to its capacity for more precise description and linking to related resources. For simpler projects, like a basic library catalog, Dublin Core could suffice.

Q 6. What are the key differences between various ILS platforms?

The key differences between ILS platforms stem from factors like scalability, functionality, cost, and open-source vs. proprietary nature. For instance, Koha and Evergreen, being open-source, offer high customization but might require more technical expertise for implementation and maintenance. Commercial systems like Alma and Sierra provide robust features and extensive support but are often more expensive and less flexible in terms of customization.

Other differences lie in the user interface, reporting capabilities, integration with other library systems, and the level of support provided by the vendor. Some systems excel in specific areas – Alma, for example, is highly regarded for its discovery layer and integration with other library management tools; Sierra is known for its strength in managing large cataloging backlogs.

Q 7. How do you troubleshoot common issues in a library automation system?

Troubleshooting common ILS issues requires a systematic approach. My strategy typically involves:

- Identifying the problem: Clearly define the issue. Is it a user interface problem, a data issue, a server-side problem, or a network connectivity issue?

- Gathering information: Collect relevant information, such as error messages, logs, and user reports. The more details, the better.

- Checking the obvious: Start by checking simple things like network connectivity, server status, and user permissions. These are often the root cause.

- Using diagnostic tools: Utilize the system’s built-in diagnostic tools to identify potential issues and pinpoint the problem’s location.

- Searching for solutions: Consult documentation, online forums, and support resources to find solutions to common problems.

- Escalating the issue: If the problem persists, escalate it to the vendor’s support team or other relevant personnel.

A methodical approach and good record-keeping are essential for effective troubleshooting. I always document the steps taken to resolve an issue for future reference and to aid in preventing similar problems from occurring again.

Q 8. Explain your experience with data migration in a library automation system.

Data migration in a library automation system (ILS) is the process of moving library data from one system to another. This can involve moving bibliographic records, patron data, circulation history, and other crucial information. It’s a complex undertaking requiring meticulous planning and execution to ensure data integrity and minimal disruption to services.

In my experience, I’ve managed several migrations, including one from a legacy system to a cloud-based ILS. This involved:

- Data Assessment: Thoroughly analyzing the source system’s data structure, identifying potential issues like duplicate records or inconsistencies.

- Data Cleaning: Employing scripting and data manipulation techniques to cleanse and standardize data. This included resolving address inconsistencies, standardizing date formats, and handling missing values.

- Mapping & Transformation: Creating a detailed mapping document to align fields between the source and target systems. This is often done using ETL (Extract, Transform, Load) tools. Data transformations might include reformatting dates or concatenating data fields.

- Testing: Rigorous testing, including unit testing, integration testing, and user acceptance testing, is crucial to identify and resolve data errors before the go-live date.

- Go-Live & Post-Migration Support: Supervising the actual migration process, ensuring minimal downtime, and providing post-migration support to address any emerging issues.

For example, in one migration, we discovered inconsistencies in the author’s name fields. We used a combination of automated scripts and manual review to standardize author names according to Library of Congress rules, significantly improving the accuracy and searchability of the data in the new system.

Q 9. What are your experiences with system upgrades and maintenance?

System upgrades and maintenance are critical for ensuring the smooth operation and security of an ILS. They involve updating software, applying security patches, performing database maintenance, and optimizing system performance. This work requires a deep understanding of the ILS’s architecture, its dependencies, and the potential impact of changes on the library’s workflow.

My experience includes both planned upgrades and emergency maintenance. Planned upgrades usually involve:

- Version Research: Investigating the new version’s features, benefits, and potential compatibility issues with existing hardware and software.

- Testing: Setting up a test environment to thoroughly test the upgrade before deploying it to the production system.

- Downtime Planning: Minimizing downtime by scheduling upgrades during off-peak hours, and having a robust rollback plan in place.

- Training: Providing staff training on any new features or changes in the system.

Emergency maintenance often involves quick response to critical issues, such as database corruption or security vulnerabilities. In such situations, rapid problem identification, efficient troubleshooting, and quick resolution are essential to minimize disruption. The effective use of logs and monitoring tools are crucial for this.

Q 10. How do you manage user accounts and permissions within an ILS?

Managing user accounts and permissions in an ILS is crucial for maintaining data security and controlling access to library resources. This involves creating and managing user profiles, assigning roles and permissions, and monitoring user activity. Most ILS allow for different user roles with varying levels of access.

My experience includes creating user accounts for various library staff members and patrons with varying permission levels. This includes:

- Role-based access control: Assigning roles (e.g., librarian, circulation staff, patron) with specific permissions, such as the ability to catalog items, check out materials, or access administrative functions.

- Password management: Enforcing strong password policies, including password complexity requirements and regular password changes.

- Account monitoring: Regularly reviewing user activity to detect and address potential security breaches.

- User training: Educating users about proper login procedures and security best practices.

For example, I set up a system where librarians have full access to the ILS, while circulation staff only have access to functions related to circulation and patron management.

Q 11. Describe your experience with creating and maintaining authority control records.

Authority control is the process of creating and maintaining standardized records for names, subjects, and other descriptive elements used in cataloging. This ensures consistency and accuracy in library records, improving search and retrieval. It’s a critical aspect of library data management.

My experience in creating and maintaining authority control records includes:

- Using standard authority files: Utilizing existing authority files like Library of Congress Subject Headings (LCSH) and Name Authority Records (NAF) to ensure consistency.

- Creating new records: Creating new authority records when necessary, following established standards and best practices. This often involves meticulous research to ensure accuracy.

- Maintaining existing records: Regularly reviewing and updating existing records to reflect changes in terminology or information.

- Implementing authority control in the ILS: Configuring the ILS to enforce the use of authority records during cataloging, thereby ensuring consistency across the library’s catalog.

For example, I’ve worked on a project to standardize the subject headings for our collection of historical fiction, ensuring that similar books are grouped together logically.

Q 12. How familiar are you with Z39.50 or other library protocols?

Z39.50 is a powerful protocol that allows for searching and retrieving information across different library systems. It facilitates resource discovery and interoperability. Other library protocols, such as OAI-PMH (Open Archives Initiative Protocol for Metadata Harvesting) and SRU (Search/Retrieve via URL), provide similar functionality but with different strengths and weaknesses.

My experience involves using Z39.50 to integrate our ILS with other systems. This has allowed patrons to search our catalog alongside other databases, vastly expanding their research capabilities. Understanding Z39.50 involves knowledge of its different query types and the ability to configure it within the ILS to link different systems. I have also worked with OAI-PMH to harvest metadata from external repositories and integrate it into our digital collections. Understanding the strengths of each protocol allows one to build a robust and interconnected library system.

Q 13. Explain your understanding of OPACs (Online Public Access Catalogs).

An OPAC (Online Public Access Catalog) is the library’s online catalog that allows patrons to search for library materials. It’s the public face of the library’s collection and a critical tool for resource discovery.

My experience with OPACs includes:

- Customization: Working with the ILS to tailor the OPAC to meet the needs of our users, including customizing the search interface, refining search options, and adding user-friendly features.

- Troubleshooting: Resolving technical issues and user errors related to OPAC functionality.

- Training: Providing training to library staff and patrons on how to effectively use the OPAC.

- Content management: Ensuring the OPAC displays accurate and up-to-date information about the library’s holdings.

A well-designed OPAC makes it easier for patrons to find the resources they need, increasing user satisfaction and library usage. I’ve worked on projects to improve the user-friendliness of our OPAC by implementing better search functionality and a more intuitive interface. It’s always a focus to make this resource as simple as possible for the average library user.

Q 14. What is your experience with reporting and data analysis from library systems?

Reporting and data analysis from library systems are essential for assessing the effectiveness of library services and making informed decisions about resource allocation. This involves extracting data from the ILS, performing data analysis, and generating reports to track key performance indicators (KPIs).

My experience includes:

- Report generation: Using the ILS’s built-in reporting tools or external tools to create custom reports on circulation statistics, usage patterns, and collection analysis.

- Data analysis: Analyzing data to identify trends and patterns, such as popular items, underutilized resources, and patron demographics.

- Data visualization: Presenting findings using charts, graphs, and other visual aids to make the data more easily understandable. Tools such as Excel and data visualization software are helpful here.

- Performance evaluation: Using data analysis to evaluate the effectiveness of library programs and services.

For instance, I used data analysis to identify a decrease in circulation of ebooks, which led to a review of the library’s ebook collection development policy and prompted a campaign to encourage greater ebook use. Data-driven decision making is essential for ongoing efficiency and success in library management.

Q 15. How do you handle duplicate records in a library automation system?

Duplicate records are a common headache in library automation systems, leading to inefficiencies and inaccurate data. Handling them effectively involves a multi-pronged approach. First, we need robust data entry procedures, including careful checking and validation at each stage. This might involve using controlled vocabularies and standardized fields to minimize inconsistencies. Second, we employ deduplication tools within the system itself. Many systems offer automated deduplication features that compare records based on key fields like ISBN, title, and author. These tools often have adjustable parameters to fine-tune their sensitivity and prevent accidental merging of different editions or works. Third, manual review is crucial. Automated systems are not perfect, and human intervention is necessary to resolve complex cases where the system is uncertain. For instance, two entries may appear different because of minor variations in author names or slight differences in titles but still represent the same item. Finally, establishing clear protocols for handling duplicate records is essential. This might involve assigning a single ‘master’ record, merging data from multiple records into one, or deleting duplicates based on established criteria. Regular audits of the database also aid in identifying and resolving lingering duplicates.

For example, in my previous role, we used a combination of automated deduplication in our Koha system and a weekly review process by library staff to maintain data accuracy. We prioritized automated processes for clear duplicates while leaving more nuanced cases to human judgment. This strategy significantly reduced duplicate records and improved the overall efficiency of our catalog.

Career Expert Tips:

- Ace those interviews! Prepare effectively by reviewing the Top 50 Most Common Interview Questions on ResumeGemini.

- Navigate your job search with confidence! Explore a wide range of Career Tips on ResumeGemini. Learn about common challenges and recommendations to overcome them.

- Craft the perfect resume! Master the Art of Resume Writing with ResumeGemini’s guide. Showcase your unique qualifications and achievements effectively.

- Don’t miss out on holiday savings! Build your dream resume with ResumeGemini’s ATS optimized templates.

Q 16. Explain your experience with RDA (Resource Description and Access).

Resource Description and Access (RDA) is the current standard for cataloging bibliographic resources. My experience with RDA includes not only creating and updating catalog records but also training staff in its application. RDA’s emphasis on flexibility and the use of controlled vocabularies aligns with my belief in creating consistent and findable library resources. I’m familiar with its key elements, including the use of element sets for different types of resources, and the importance of creating descriptive metadata that is both accurate and readily accessible to users. I’ve also had experience dealing with the complexities of RDA’s framework, including resolving issues related to differing interpretations of its guidelines and ensuring consistency across different records. We used RDA-compliant metadata to create rich records within our system, enriching users’ search experience and improving discoverability. Specifically, we used RDA in creating records for our ebooks and databases as part of broader efforts to make our digital collection accessible to patrons.

Q 17. How familiar are you with linked data and its application in libraries?

Linked data is a paradigm shift in how we organize and access information. It involves connecting data across different datasets using common identifiers and ontologies. In a library context, this means connecting library records with external datasets, such as authority files, subject headings, and even other libraries’ catalogs. This interconnectedness drastically improves discoverability. Imagine a user searching for information about a particular author; through linked data, the system could not only provide the library’s own holdings but also related articles, reviews, and biographical information from external sources, all seamlessly integrated within the search results. I’ve been involved in projects exploring the use of linked data in enriching library metadata. This includes familiarizing myself with various ontologies such as BIBFRAME and exploring how to map our existing data to these frameworks. While full-scale implementation of linked data requires significant investment, even small-scale integrations can yield remarkable results by improving the searchability and context of our library resources.

Q 18. Describe your experience with library discovery systems.

Library discovery systems are the user’s gateway to library resources. My experience with these systems covers both their technical aspects and their impact on user experience. I’ve worked with various discovery systems, from large-scale commercial platforms to open-source options. My focus has been on ensuring that the system delivers relevant, accurate, and user-friendly search results. This includes working with administrators to fine-tune search algorithms, mapping metadata correctly, and dealing with the integration of various databases (catalog, journals, ebooks, etc.) to provide a holistic view of the library’s collections. I’ve also worked on customizing the user interface to improve accessibility and usability for diverse user groups. For example, I helped implement a new discovery system in a previous library, carefully mapping metadata to ensure that it fully leveraged the system’s advanced search capabilities. The result was a significant improvement in user satisfaction and a noticeable increase in the usage of library resources.

Q 19. What is your experience with integrating library systems with other systems (e.g., library website, LMS)?

Integrating library systems with other systems is critical for creating a seamless user experience. I’ve extensive experience integrating library management systems (LMS) with library websites, digital repositories, and even learning management systems (LMS). This involves understanding the technical architecture of each system, defining data exchange protocols (like APIs), and managing the data flow. For example, I worked on a project to integrate our library’s catalog with our institution’s learning management system (LMS). This allowed instructors to easily link library resources directly to their course materials. The integration required careful mapping of metadata and designing robust error handling to ensure a smooth and reliable data exchange. Challenges often include dealing with differences in data formats and ensuring data consistency across integrated systems. Careful planning and testing are essential to guarantee a successful integration that enhances the user experience and streamlines workflows.

Q 20. How do you ensure the accessibility of library resources within an automation system?

Accessibility is paramount in library automation. This means making library resources available to users with disabilities. This involves multiple layers within the system. First, metadata needs to be created with accessibility in mind. This includes using descriptive alternative text for images, providing accurate and detailed subject headings for effective searching, and ensuring that metadata conforms to accessibility guidelines (like WCAG). Second, the user interface must be designed with accessibility in mind. This implies following accessibility standards (like WCAG) in the design and development of the website and ensuring the system is compatible with assistive technologies like screen readers. Third, the system itself should provide tools that improve accessibility, such as providing options for adjusting text size, font, and color contrast. For example, we implemented a project to improve the accessibility of our digital collection by ensuring all ebooks were provided with accessible metadata. This included adding alternative text for images and structuring the document’s content in a logical manner for screen readers. Moreover, we tested our website and online catalog with screen readers to ensure usability for blind and visually impaired users. Regular accessibility audits are crucial to identify and rectify any issues.

Q 21. Explain your experience with managing digital collections in a library system.

Managing digital collections presents unique challenges and opportunities. My experience encompasses various aspects, from ingesting digital content into the system to providing access and preservation. Ingestion involves using tools to import different file formats, ensuring metadata is properly captured and assigned, and then organizing the materials within the digital repository. Access management involves providing users with ways to search, browse, and download digital materials, balancing ease of use with protection of intellectual property rights. Preservation is critical and involves establishing long-term strategies for storage and format migration to prevent data loss. For instance, I’ve worked on projects digitizing archives of historical documents, involving both the physical scanning process and the subsequent organization and metadata creation within our digital repository. We employed a combination of open-source tools and commercial platforms to manage the entire lifecycle of the digital collection. Metadata standards, such as Dublin Core, were crucial to ensuring discoverability and long-term access.

Q 22. How would you train staff on a new library automation system?

Training staff on a new library automation system (ILS) requires a multi-faceted approach focusing on both technical skills and practical application. I begin by assessing the staff’s existing computer literacy and familiarity with library processes. The training program should then be tailored to different skill levels.

Phased Approach: I’d start with introductory sessions covering the system’s basic functionalities – logging in, navigating the interface, and performing simple tasks like searching the catalog. Subsequent sessions would delve into more complex modules such as circulation, cataloging, and acquisitions.

Hands-on Training: I firmly believe in hands-on training. Instead of just lectures, I would set up mock scenarios and guided exercises. For example, staff members could practice checking in and out books, adding new items to the catalog, or processing holds.

Modular Training: I’d break down the training into smaller, manageable modules, allowing staff to focus on specific tasks. This is less overwhelming and allows for more targeted practice.

Job Aids & Documentation: Comprehensive documentation, including quick reference guides and tutorials, would be created and made easily accessible. This allows staff to refresh their knowledge or look up processes independently.

Ongoing Support: Training doesn’t end after the initial sessions. I’d establish an ongoing support system through regular Q&A sessions, online forums, or readily available help desk support. This fosters a culture of continuous learning and problem-solving.

For instance, during the training on the circulation module, we would simulate a busy check-out counter environment. Staff would learn how to handle various scenarios, such as dealing with overdue books, placing holds, and managing patron accounts. This real-world practice significantly improves their confidence and competence.

Q 23. Describe your experience with troubleshooting network connectivity issues related to the ILS.

Troubleshooting network connectivity issues related to the ILS requires a systematic approach. My experience involves identifying the root cause, whether it’s a hardware failure, software glitch, or network problem. I usually start with the basics:

Check the Obvious: First, I verify that the ILS server is running, the network cables are properly connected, and the computer is powered on. This may seem rudimentary, but it often solves simple issues.

Ping Test: I utilize a ping test (

ping) to determine if the ILS server is reachable. This helps pinpoint whether the problem lies within the local network or beyond.Traceroute: If the ping test fails, a traceroute (

tracert) identifies potential bottlenecks or points of failure along the network path. This helps isolate the problem area.Network Diagnostics: I use network monitoring tools to check for packet loss, latency, or bandwidth issues. These tools provide a deeper insight into network performance.

Check Firewall Rules: I would verify that the firewall is not blocking access to the ILS server. Improperly configured firewalls are a common cause of connectivity problems.

Consult Network Administrator: For complex network problems, I work closely with the network administrator. Their expertise is crucial in resolving issues that extend beyond the ILS itself.

In one instance, a sudden outage was traced to a faulty network switch. By replacing the switch, we quickly restored connectivity. In another, a misconfigured firewall rule was blocking the ILS client applications; a quick fix ensured seamless operation once the rules were adjusted.

Q 24. What is your experience with RFID technology in libraries?

My experience with RFID technology in libraries is extensive. I’ve been involved in the planning, implementation, and management of RFID systems in several libraries. RFID (Radio-Frequency Identification) technology significantly enhances efficiency in library operations. It enables self-checkout kiosks, rapid inventory management, and streamlined circulation processes.

Self-Checkout: RFID tags allow patrons to check out materials independently, reducing wait times at the circulation desk. This frees up staff to focus on other tasks.

Inventory Management: RFID technology enables rapid and accurate inventory management. The entire collection can be quickly scanned to identify missing or misplaced items, leading to significant improvements in collection accuracy.

Security: RFID tags, when integrated with security gates, can detect unauthorized removal of library materials, deterring theft and improving security.

Integration with ILS: The RFID system integrates seamlessly with the ILS, allowing for real-time updates to the library catalog and patron records.

For example, during the implementation of an RFID system in a large university library, we experienced challenges with tag sensitivity and reader placement. Careful calibration and strategic placement of readers were crucial for optimal performance and prevented read errors.

Q 25. How do you manage system security and data backups?

Managing system security and data backups is paramount in a library environment, where sensitive patron data and valuable collection information are stored. My approach to security involves multiple layers of protection.

Access Control: Implementing robust access controls, including role-based permissions, ensures that only authorized personnel can access sensitive data and system functions.

Regular Security Audits: Conducting regular security audits and vulnerability assessments helps identify and address potential security threats before they can be exploited.

Firewall & Antivirus: A strong firewall and up-to-date antivirus software are essential in protecting the ILS from external threats and malware.

Data Encryption: Encrypting sensitive data, both in transit and at rest, protects it from unauthorized access even if the system is compromised.

Regular Backups: Implementing a comprehensive data backup strategy is crucial. This includes regular backups to offsite locations to ensure data recoverability in case of hardware failure, natural disaster, or cyberattack. The backup strategy should be regularly tested.

For example, we implemented a 3-2-1 backup strategy: 3 copies of the data, on 2 different media types, with 1 copy stored offsite. This ensures data redundancy and protection against various potential data loss scenarios.

Q 26. Describe your experience with the implementation of a new library automation system.

Implementing a new ILS is a complex project requiring meticulous planning and execution. My experience includes leading teams through all phases of the implementation process, from needs assessment to post-implementation support.

Needs Assessment: The process begins with a thorough needs assessment to identify the library’s specific requirements and select the most suitable ILS. This includes evaluating existing workflows, staff training needs, and budget constraints.

Vendor Selection: Careful vendor selection is crucial. This involves evaluating different vendors based on their track record, system features, and customer support.

Data Migration: Migrating data from the old system to the new one requires careful planning and testing. Data cleansing and validation are crucial to ensure data integrity.

System Configuration: The new system needs to be carefully configured to meet the library’s specific needs. This includes customizing workflows, setting up user accounts, and configuring system parameters.

Staff Training: As mentioned previously, extensive staff training is a key element of successful implementation.

Go-Live & Post-Implementation Support: The go-live phase involves a gradual transition to the new system, with ongoing monitoring and support to address any issues.

During one implementation, we used a phased rollout approach, starting with a pilot group before expanding to the entire library. This allowed us to identify and address any problems before a full-scale deployment. This minimized disruption and improved user acceptance.

Q 27. What is your experience with creating custom reports or workflows within an ILS?

Creating custom reports and workflows within an ILS is a critical skill. Many ILSs allow for customization through their reporting tools and workflow engines. My experience includes developing custom reports for various purposes and designing specialized workflows to improve efficiency.

Custom Reports: I have created custom reports for tracking circulation statistics, analyzing collection usage, monitoring overdue items, and generating acquisition reports. This involves selecting relevant data fields, defining report parameters, and formatting the output. Many ILS systems offer report writers with drag-and-drop interfaces to simplify the process.

Workflow Customization: I’ve designed custom workflows to automate tasks like acquisitions processing, interlibrary loan requests, and cataloging procedures. This involves configuring the system to automatically route items based on predefined criteria.

For instance, I created a custom report that identified underutilized materials, helping the library make informed decisions about weeding and collection development. This saved the library both space and resources. I also created a workflow to automate the processing of new acquisitions, significantly reducing the time and effort required to add new materials to the catalog.

Q 28. How familiar are you with the different modules within your experience with ILS (e.g., acquisitions, circulation, cataloging)?

My experience with ILS modules is comprehensive. I’m proficient in all major modules, including acquisitions, circulation, and cataloging, and have a good understanding of other modules such as serials management, electronic resources management, and discovery layers.

Acquisitions: I’m familiar with the entire acquisitions workflow, from fund allocation and order placement to receiving and processing invoices. I’m adept at managing vendor accounts and resolving ordering discrepancies.

Circulation: I have extensive experience with circulation processes, including check-in/check-out, managing overdue items, handling holds, and generating circulation statistics. I’m familiar with various circulation policies and their implementation.

Cataloging: I’m proficient in cataloging using AACR2 or RDA standards, creating bibliographic records, and assigning subject headings. I have experience with both manual and automated cataloging processes.

Other Modules: I’m also familiar with the serials management module for handling periodicals and journals; the electronic resources module for managing access to databases and e-books; and the discovery layer for providing a unified search interface across various library resources.

This broad understanding of ILS modules allows me to effectively troubleshoot problems, optimize workflows, and ensure seamless integration between different aspects of the library system. My expertise enables me to make informed decisions about the library’s technology needs.

Key Topics to Learn for Knowledge of Library Automation Systems Interview

- Cataloging and Classification: Understand the principles of cataloging and classification within library automation systems, including MARC records, subject headings, and authority control. Be prepared to discuss the practical application of these concepts in managing library metadata.

- Circulation Management: Explore the functionalities of automated circulation systems, including member registration, item check-in/check-out, overdue notices, and reporting. Consider how these systems impact user experience and library operations.

- Acquisition and Serials Management: Familiarize yourself with the processes involved in acquiring and managing library materials using automated systems. Discuss the role of these systems in budgeting, ordering, and tracking acquisitions.

- OPAC (Online Public Access Catalog) Functionality and User Experience: Understand the design and functionality of OPACs, including search interfaces, record display, and user-assistance features. Be ready to discuss how to optimize the user experience and improve discoverability of library resources.

- Data Migration and System Integration: Explore the challenges and strategies involved in migrating data between library automation systems or integrating them with other library systems. Consider data integrity and potential compatibility issues.

- Troubleshooting and System Maintenance: Gain familiarity with common problems encountered in library automation systems and the troubleshooting techniques used to resolve them. Discuss the importance of regular system maintenance and backups.

- Security and Access Control: Understand the security measures implemented in library automation systems to protect sensitive data and ensure authorized access. Discuss user authentication, authorization, and data encryption.

Next Steps

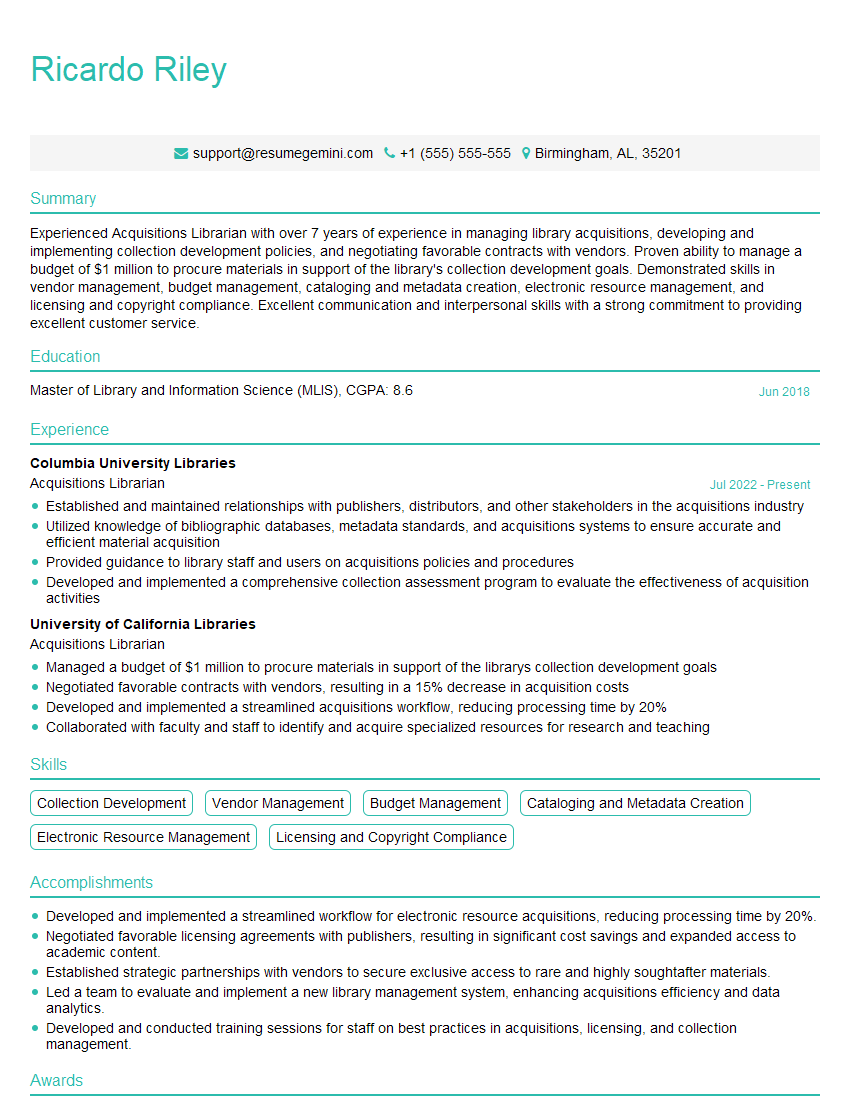

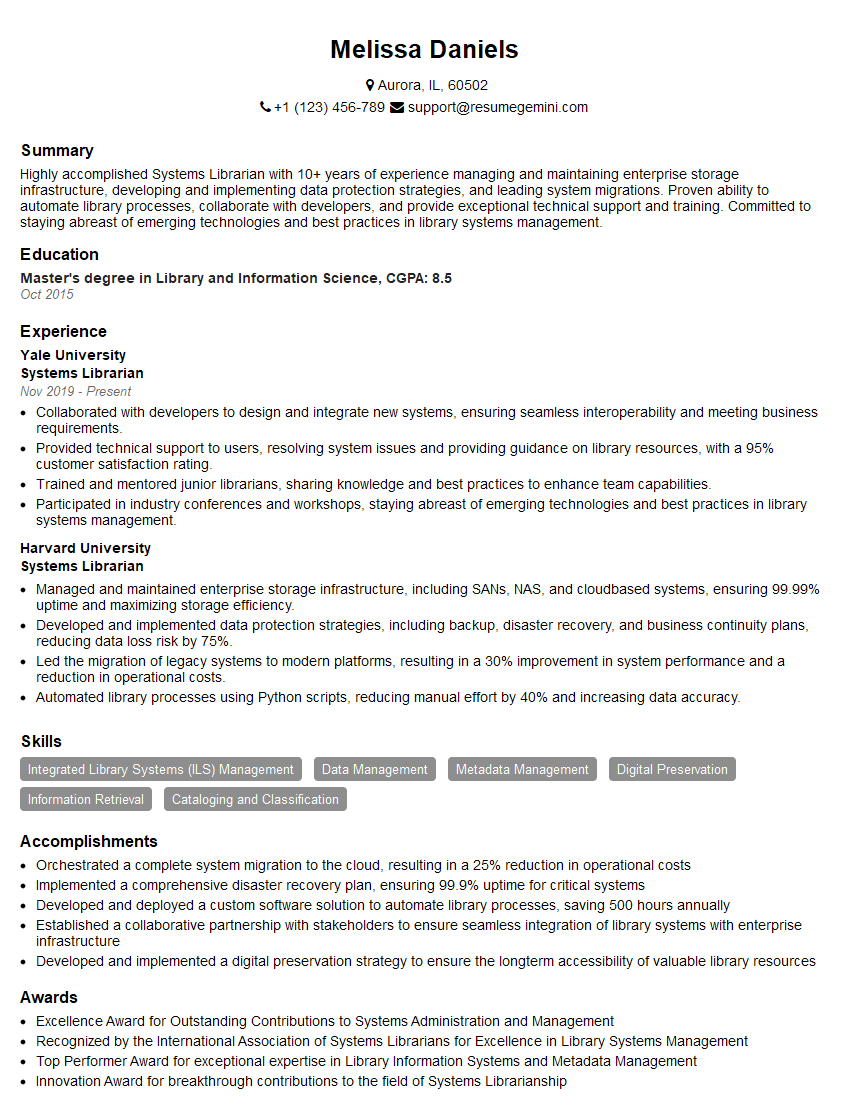

Mastering library automation systems is crucial for career advancement in the library and information science field. A strong understanding of these systems significantly enhances your ability to manage library resources effectively and provide excellent user services. To maximize your job prospects, create an ATS-friendly resume that showcases your skills and experience clearly. ResumeGemini is a trusted resource to help you build a professional and impactful resume. They provide examples of resumes tailored specifically to highlight expertise in library automation systems, giving you a head start in creating a compelling application.

Explore more articles

Users Rating of Our Blogs

Share Your Experience

We value your feedback! Please rate our content and share your thoughts (optional).

What Readers Say About Our Blog

Hi, I’m Jay, we have a few potential clients that are interested in your services, thought you might be a good fit. I’d love to talk about the details, when do you have time to talk?

Best,

Jay

Founder | CEO