The thought of an interview can be nerve-wracking, but the right preparation can make all the difference. Explore this comprehensive guide to Linear Regression interview questions and gain the confidence you need to showcase your abilities and secure the role.

Questions Asked in Linear Regression Interview

Q 1. Explain the concept of Linear Regression.

Linear Regression is a fundamental statistical method used to model the relationship between a dependent variable and one or more independent variables. Imagine you’re trying to predict house prices (dependent variable) based on their size (independent variable). Linear regression finds the best-fitting straight line through the data points, allowing us to estimate the price based on the size. This line represents the linear relationship, showing how much the price changes for each unit increase in size. Mathematically, it’s represented by an equation like: y = mx + c, where ‘y’ is the dependent variable, ‘x’ is the independent variable, ‘m’ is the slope (representing the change in y for a unit change in x), and ‘c’ is the y-intercept (the value of y when x is 0).

In essence, linear regression helps us understand and predict how changes in one or more variables impact another.
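As a minimal sketch of the idea above, the slope and intercept of the best-fitting line can be computed directly from the data. The function name and the house-price numbers below are made up for illustration; they are not taken from a real dataset.

```python
def fit_simple_linear_regression(x, y):
    """Return (slope, intercept) of the least-squares line y = m*x + c."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Slope: co-variation of x and y divided by the variation of x.
    s_xy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    s_xx = sum((xi - mean_x) ** 2 for xi in x)
    slope = s_xy / s_xx
    # The fitted line always passes through the point (mean_x, mean_y).
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: house size (square feet) and price (thousands of dollars).
sizes = [1000, 1500, 2000, 2500, 3000]
prices = [200, 290, 410, 500, 600]

m, c = fit_simple_linear_regression(sizes, prices)
predicted_price = m * 1800 + c  # estimated price of an 1800 sq ft house
print(f"slope={m:.3f}, intercept={c:.1f}, prediction={predicted_price:.1f}")
```

Here the slope is the estimated price change per additional square foot, which is exactly the ‘m’ in y = mx + c.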

Q 2. What are the assumptions of Linear Regression?

Several assumptions underpin the validity and reliability of linear regression results. Violating these assumptions can lead to inaccurate or misleading conclusions. These crucial assumptions are:

- Linearity: The relationship between the dependent and independent variables should be linear. A scatter plot can visually check this.

- Independence: Observations should be independent of each other. For instance, if analyzing student test scores, each student’s score should not influence another’s.

- Homoscedasticity: The variance of the errors (residuals) should be constant across all levels of the independent variable. This means the spread of the data points around the regression line should be roughly uniform.

- Normality: The residuals should be normally distributed. This matters mainly for confidence intervals and hypothesis tests, and it can be checked using histograms, Q-Q plots, or normality tests.

- No Multicollinearity (for multiple linear regression): Independent variables should not be highly correlated with each other. High correlation leads to unstable estimates.

It’s important to note that minor deviations from these assumptions might not always invalidate the model, but significant violations can seriously compromise its reliability.

Q 3. How do you handle outliers in Linear Regression?

Outliers are data points that significantly deviate from the overall pattern in the data. They can disproportionately influence the regression line, leading to inaccurate predictions. Handling outliers requires careful consideration:

- Identify Outliers: Visual inspection using scatter plots and box plots, coupled with statistical methods like calculating standardized residuals (z-scores), can help identify potential outliers.

- Investigate the Cause: Before removing an outlier, understand why it exists. Is it a data entry error? Does it represent a genuine, albeit extreme, case?

- Transformation: Applying transformations to the data, like logarithmic transformation, can sometimes reduce the influence of outliers.

- Robust Regression Methods: Methods like quantile regression or Huber regression penalize large residuals less severely than squared error does, so they are less sensitive to outliers than ordinary least squares.

- Removal (with caution): As a last resort, outliers can be removed, but only after careful investigation and justification. Always document the reason for removal.

The best approach depends on the context and the nature of the outliers. Blindly removing data without understanding the cause is generally discouraged.
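The identification step can be sketched as follows: fit a line, then flag points whose standardized residual is unusually large. The function name, the data, and the threshold of 2.0 are assumptions for illustration. With very small samples a z-score cannot exceed the square root of (n - 1), so a stricter cut-off such as 3 may never trigger.

```python
import statistics


def flag_outliers(x, y, threshold=2.0):
    """Return indices of points whose standardized residual exceeds threshold."""
    mean_x, mean_y = statistics.fmean(x), statistics.fmean(y)
    slope = (sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
             / sum((a - mean_x) ** 2 for a in x))
    intercept = mean_y - slope * mean_x
    # Residual = observed value minus the value predicted by the fitted line.
    residuals = [b - (slope * a + intercept) for a, b in zip(x, y)]
    spread = statistics.pstdev(residuals)
    return [i for i, r in enumerate(residuals) if abs(r / spread) > threshold]


# Hypothetical data following y = 2x, except for one suspicious value at x = 5.
x = [1, 2, 3, 4, 5, 6, 7, 8, 9]
y = [2, 4, 6, 8, 30, 12, 14, 16, 18]

flagged = flag_outliers(x, y)
print(flagged)  # indices to investigate, not to delete automatically
```

A flagged index is only a prompt to investigate the cause, in line with the caution above about removing data.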

Q 4. What is the difference between simple and multiple linear regression?

The core difference lies in the number of independent variables:

- Simple Linear Regression: Uses only one independent variable to predict the dependent variable. For example, predicting house prices based solely on their size.

- Multiple Linear Regression: Uses two or more independent variables to predict the dependent variable. For example, predicting house prices based on size, location, number of bedrooms, and age.

Multiple linear regression provides a more comprehensive model when several factors influence the outcome, offering a more nuanced prediction compared to a simple model that only considers one factor. However, it also increases model complexity and raises concerns about multicollinearity.

Q 5. Explain R-squared and its limitations.

R-squared (R²) is a statistical measure that represents the proportion of the variance for a dependent variable that’s predictable from the independent variables in a regression model. It essentially tells us how well the model fits the data. An R² of 0.8 means 80% of the variation in the dependent variable is explained by the independent variables.

Limitations:

- Doesn’t indicate causality: A high R² doesn’t imply a causal relationship between variables. Correlation doesn’t equal causation.

- Sensitive to the number of predictors: R² never decreases when an independent variable is added, even an irrelevant one, so adding predictors can artificially inflate it.

- Doesn’t assess model validity: A high R² doesn’t guarantee the model’s validity or generalizability to new data.

Therefore, while R² provides valuable information about model fit, it shouldn’t be the sole criterion for model evaluation.

Q 6. What is Adjusted R-squared and why is it preferred over R-squared in some cases?

Adjusted R-squared (Adjusted R²) is a modified version of R² that adjusts for the number of predictors in the model. It penalizes the addition of irrelevant variables, preventing the artificial inflation of R² seen when including many predictors. Adjusted R² is always less than or equal to R².

Why it’s preferred: When comparing models with different numbers of independent variables, Adjusted R² provides a fairer comparison. A model with a higher Adjusted R² suggests a better fit after accounting for the number of predictors. It’s particularly useful when evaluating models with a large number of variables or when comparing models with differing numbers of predictors.
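To make the penalty concrete, here is a small sketch that computes R² and Adjusted R² from scratch. The predictions are invented, and the predictor counts (1 versus 3) are hypothetical; the point is only that the same R² is adjusted downward more when more predictors were used to obtain it.

```python
def r_squared(y_true, y_pred):
    """Proportion of the variance in y_true explained by the predictions."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((a - p) ** 2 for a, p in zip(y_true, y_pred))
    ss_tot = sum((a - mean_y) ** 2 for a in y_true)
    return 1 - ss_res / ss_tot


def adjusted_r_squared(r2, n_samples, n_predictors):
    """R² penalized for the number of predictors in the model."""
    return 1 - (1 - r2) * (n_samples - 1) / (n_samples - n_predictors - 1)


# Hypothetical observed values and model predictions.
y_true = [3.0, 5.0, 7.0, 9.0, 11.0, 13.0]
y_pred = [3.5, 4.5, 7.5, 8.5, 11.5, 12.5]

r2 = r_squared(y_true, y_pred)
adj_one = adjusted_r_squared(r2, n_samples=6, n_predictors=1)
adj_three = adjusted_r_squared(r2, n_samples=6, n_predictors=3)
print(f"R2={r2:.4f}, adjusted (1 predictor)={adj_one:.4f}, "
      f"adjusted (3 predictors)={adj_three:.4f}")
```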

Q 7. Explain the concept of multicollinearity and how to detect it.

Multicollinearity refers to a high correlation between two or more independent variables in a multiple regression model. This high correlation makes it difficult to isolate the individual effect of each variable on the dependent variable. Imagine trying to predict a student’s exam score using both ‘hours studied’ and ‘number of practice tests taken’ – these are likely highly correlated.

Detecting Multicollinearity:

- Correlation Matrix: Examine the correlation matrix of independent variables. High correlation coefficients (e.g., above 0.7 or 0.8) indicate potential multicollinearity.

- Variance Inflation Factor (VIF): VIF measures how much the variance of a regression coefficient is inflated due to multicollinearity. A VIF above 5 or 10 generally suggests a problem.

- Eigenvalues and Condition Index: Analyzing the eigenvalues of the correlation matrix and the condition index can also help detect multicollinearity. A high condition index indicates multicollinearity.

Handling Multicollinearity: Strategies include removing one of the correlated variables, combining correlated variables into a single index, or using regularization techniques like Ridge or Lasso regression.
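For a model with exactly two predictors, the VIF of each predictor reduces to 1 / (1 - r²), where r is the correlation between them. The sketch below uses that special case; the function names and the study data are illustrative assumptions. With more predictors, each VIF instead comes from regressing that predictor on all the others.

```python
import math


def pearson_correlation(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    cov = sum((u - mean_a) * (v - mean_b) for u, v in zip(a, b))
    var_a = sum((u - mean_a) ** 2 for u in a)
    var_b = sum((v - mean_b) ** 2 for v in b)
    return cov / math.sqrt(var_a * var_b)


def vif_two_predictors(a, b):
    """VIF shared by both predictors when the model has exactly two."""
    r = pearson_correlation(a, b)
    return 1.0 / (1.0 - r ** 2)


# Hypothetical predictors from the exam-score example.
hours_studied = [2, 4, 5, 7, 9, 10]
practice_tests = [1, 2, 2, 4, 4, 5]

r = pearson_correlation(hours_studied, practice_tests)
vif = vif_two_predictors(hours_studied, practice_tests)
print(f"correlation={r:.3f}, VIF={vif:.1f}")
```

In this made-up data both the correlation and the VIF exceed the rule-of-thumb thresholds mentioned above.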

Q 8. How do you handle multicollinearity?

Multicollinearity occurs when two or more predictor variables in a multiple regression model are highly correlated. This means they provide essentially the same information, making it difficult for the model to isolate the independent effect of each variable on the outcome. Imagine trying to determine the effect of fertilizer A and fertilizer B on crop yield when A and B are always applied together in the same ratio – you can’t tell which one is doing what!

Handling multicollinearity involves several strategies:

- Feature Selection: Removing one or more of the highly correlated variables. This is the simplest approach but could lead to information loss if the removed variables contain some unique explanatory power.

- Principal Component Analysis (PCA): Transforming the original correlated variables into a smaller set of uncorrelated variables (principal components) that capture most of the variance. This reduces dimensionality while retaining crucial information.

- Regularization techniques (Ridge and Lasso Regression): These methods shrink the coefficients of correlated predictors, reducing their influence and stabilizing the model. We’ll discuss these in more detail later.

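- Dimensionality Reduction Techniques: Other feature extraction methods, such as Partial Least Squares (PLS), can reduce the number of features while retaining the important information. Linear Discriminant Analysis (LDA) is sometimes mentioned in this context, but it needs class labels and therefore applies to classification rather than regression.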

Choosing the best approach depends on the specific dataset and the goals of the analysis. If you can remove a variable without significantly impacting model performance, that’s often the preferred route for its simplicity and interpretability.

Q 9. What is heteroscedasticity and how does it affect linear regression?

Heteroscedasticity refers to the violation of the assumption of constant variance (homoscedasticity) in the errors of a regression model. In simpler terms, the spread of the residuals (the differences between the observed and predicted values) is not consistent across the range of predictor variables. Imagine shooting arrows at targets placed at different distances: homoscedasticity means your arrows scatter equally widely at every distance; heteroscedasticity means they scatter more widely at some distances than at others.

Heteroscedasticity does not bias the OLS coefficient estimates themselves, but it makes them less efficient and makes their usual standard errors unreliable. This leads to inaccurate confidence intervals and p-values, potentially leading to incorrect inferences about the significance of predictors. In essence, the fitted line may look reasonable while the conclusions about which predictors matter are wrong.

Q 10. How do you detect and address heteroscedasticity?

Detecting heteroscedasticity can be done visually through residual plots (plotting residuals against predicted values or predictor variables). A classic sign is a cone or funnel shape, showing increasing or decreasing variance. Formally, statistical tests like the Breusch-Pagan test or White test can assess heteroscedasticity.

Addressing heteroscedasticity involves:

- Data Transformation: Transforming the dependent or independent variables (e.g., using logarithmic, square root, or Box-Cox transformations) can often stabilize the variance. This is a popular and often effective first step.

- Weighted Least Squares (WLS): This method assigns weights to observations, giving more weight to those with smaller variances. This effectively down-weights the influence of the high-variance points, making the model less sensitive to them.

- Robust Standard Errors and Robust Regression: Heteroscedasticity-consistent (robust) standard errors correct the inference without changing the coefficients, while robust regression methods use different estimation methods to mitigate the influence of extreme values.

The choice of method depends on the nature of the heteroscedasticity. Data transformation is usually attempted first due to its simplicity, and if that fails then WLS or robust techniques can be applied.
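Weighted Least Squares for a single predictor can be sketched with weighted means in place of ordinary ones. The function name and data are illustrative, and the weights 1 / x² rest on an assumption that the error standard deviation grows in proportion to x; in practice the weights have to be justified from the data.

```python
def fit_weighted_least_squares(x, y, weights):
    """Return (slope, intercept) minimizing sum(w * (y - m*x - c)^2)."""
    total = sum(weights)
    mean_x = sum(w * a for w, a in zip(weights, x)) / total
    mean_y = sum(w * b for w, b in zip(weights, y)) / total
    s_xy = sum(w * (a - mean_x) * (b - mean_y)
               for w, a, b in zip(weights, x, y))
    s_xx = sum(w * (a - mean_x) ** 2 for w, a in zip(weights, x))
    slope = s_xy / s_xx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data whose noise grows with x.
x = [1, 2, 3, 4, 5]
y = [3.1, 4.8, 7.6, 8.2, 12.5]

# Assumed variance structure: error variance proportional to x^2,
# so each observation is weighted by 1 / x^2.
weights = [1 / a ** 2 for a in x]

wls_slope, wls_intercept = fit_weighted_least_squares(x, y, weights)
ols_slope, ols_intercept = fit_weighted_least_squares(x, y, [1.0] * len(x))
print(f"WLS: {wls_slope:.3f}x + {wls_intercept:.3f}")
print(f"OLS: {ols_slope:.3f}x + {ols_intercept:.3f}")
```

Passing equal weights recovers ordinary least squares, which makes it easy to compare the two fits.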

Q 11. Explain the difference between Ridge and Lasso Regression.

Both Ridge and Lasso regression are regularization techniques used to address multicollinearity and overfitting. They work by adding a penalty term to the ordinary least squares (OLS) cost function. The key difference lies in the type of penalty:

- Ridge Regression (L2 regularization): Adds a penalty proportional to the square of the magnitude of the coefficients. This shrinks the coefficients towards zero but doesn’t force them to be exactly zero.

- Lasso Regression (L1 regularization): Adds a penalty proportional to the absolute value of the coefficients. This can shrink some coefficients to exactly zero, effectively performing feature selection.

Think of it like this: Ridge regression gently nudges the coefficients towards zero, while Lasso regression can aggressively push some coefficients all the way to zero, essentially removing those features from the model.
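The difference is easiest to see with a single centered feature and no intercept, where both penalized problems have closed-form solutions. This is a simplified sketch under that assumption; the function names, data, and λ values are chosen for illustration, and real problems with many features are solved iteratively.

```python
import math


def ridge_coefficient(x, y, lam):
    """Minimize sum((y - b*x)^2) + lam * b^2 for one feature, no intercept."""
    s_xy = sum(a * b for a, b in zip(x, y))
    s_xx = sum(a * a for a in x)
    # The penalty enlarges the denominator: b shrinks but never reaches zero.
    return s_xy / (s_xx + lam)


def lasso_coefficient(x, y, lam):
    """Minimize sum((y - b*x)^2) + lam * |b| for one feature, no intercept."""
    s_xy = sum(a * b for a, b in zip(x, y))
    s_xx = sum(a * a for a in x)
    # Soft-thresholding: b becomes exactly zero once lam / 2 >= |s_xy|.
    shrunk = max(abs(s_xy) - lam / 2, 0.0)
    return math.copysign(shrunk, s_xy) / s_xx


# Hypothetical centered data (both x and y have mean zero).
x = [-2, -1, 0, 1, 2]
y = [-0.9, -0.6, 0.1, 0.4, 1.0]

for lam in (0, 4, 10):
    print(lam, ridge_coefficient(x, y, lam), lasso_coefficient(x, y, lam))
```

With λ = 0 both functions return the ordinary least squares coefficient; as λ grows, the Ridge coefficient keeps shrinking while the Lasso coefficient hits exactly zero.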

Q 12. When would you use Ridge Regression over Lasso Regression, or vice versa?

The choice between Ridge and Lasso regression depends on the context:

- Use Ridge Regression when: You have many correlated predictors, and you want to shrink their coefficients but not necessarily eliminate any. Ridge is better at handling multicollinearity, as it doesn’t completely discard variables that might still be contributing useful information.

- Use Lasso Regression when: You suspect that only a small subset of predictors are truly important, and you want to perform feature selection by eliminating the less important ones. Lasso can lead to more interpretable models by simplifying the feature set.

In practice, it’s often helpful to try both and compare their performance. Cross-validation is crucial to select the best regularization parameter (lambda) and the best model overall.

Q 13. Explain Elastic Net Regression.

Elastic Net regression combines the penalty terms of both Ridge and Lasso regression. It uses a weighted average of the L1 and L2 penalties. This allows it to benefit from both the shrinkage properties of Ridge (handling multicollinearity) and the feature selection capabilities of Lasso (improving interpretability).

The model is formulated as:

minimize ||y - Xβ||² + λ[(1 - α)||β||² + α||β||₁]

where:

- λ is the overall regularization strength.

- α is the mixing parameter (0 ≤ α ≤ 1). α = 0 corresponds to Ridge, α = 1 corresponds to Lasso, and values in between combine both penalties.

Elastic Net often offers a good compromise between the two methods, particularly when dealing with datasets containing high multicollinearity and a potential for feature selection.
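The penalty term from the formulation above can be written out directly to see how α blends the two penalties. The function name and coefficient values are illustrative. Libraries often scale these terms differently (for example with extra factors of 1/2 or 1/n), so their parameters may not map one-to-one onto λ and α as written here.

```python
def elastic_net_penalty(beta, lam, alpha):
    """Return lam * ((1 - alpha) * ||beta||^2 + alpha * ||beta||_1)."""
    l1 = sum(abs(b) for b in beta)          # Lasso part: sum of absolute values
    l2_squared = sum(b * b for b in beta)   # Ridge part: sum of squares
    return lam * ((1 - alpha) * l2_squared + alpha * l1)


# Hypothetical coefficient vector.
beta = [3.0, -4.0, 0.0]

ridge_only = elastic_net_penalty(beta, lam=0.5, alpha=0.0)
lasso_only = elastic_net_penalty(beta, lam=0.5, alpha=1.0)
blended = elastic_net_penalty(beta, lam=0.5, alpha=0.5)
print(ridge_only, lasso_only, blended)
```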

Q 14. How do you interpret the coefficients in a linear regression model?

In a linear regression model, the coefficients represent the change in the dependent variable associated with a one-unit change in the corresponding independent variable, holding all other variables constant. This ‘holding all other variables constant’ is crucial; it’s the effect of that specific variable in isolation.

For example, if we have a model predicting house prices (dependent variable) using size (in square feet) and number of bedrooms as predictors, a coefficient of 100 for ‘size’ means that, for every additional square foot, the predicted house price increases by $100, assuming the number of bedrooms remains the same. A positive coefficient indicates a positive relationship (e.g., larger houses are more expensive), while a negative coefficient indicates a negative relationship. The magnitude of the coefficient reflects the size of the effect per unit of that predictor, so magnitudes are only directly comparable across predictors measured on the same scale.

Interpreting coefficients requires careful consideration of the units of measurement for both dependent and independent variables and awareness of potential confounding effects from other predictors in the model. It’s important to focus on the effect size and significance (p-value) to understand the strength and reliability of the relationship, rather than just the raw coefficient value.

Q 15. How do you evaluate the performance of a linear regression model?

Evaluating a linear regression model involves assessing how well it fits the data and predicts unseen values. We want to understand if the model is capturing the underlying relationship between our independent and dependent variables effectively, or if it’s oversimplifying or overfitting the data.

This involves looking at both the model’s performance on the training data (used to build the model) and its performance on a separate test data set (data the model hasn’t seen before). This helps distinguish between good generalization and overfitting.

Career Expert Tips:

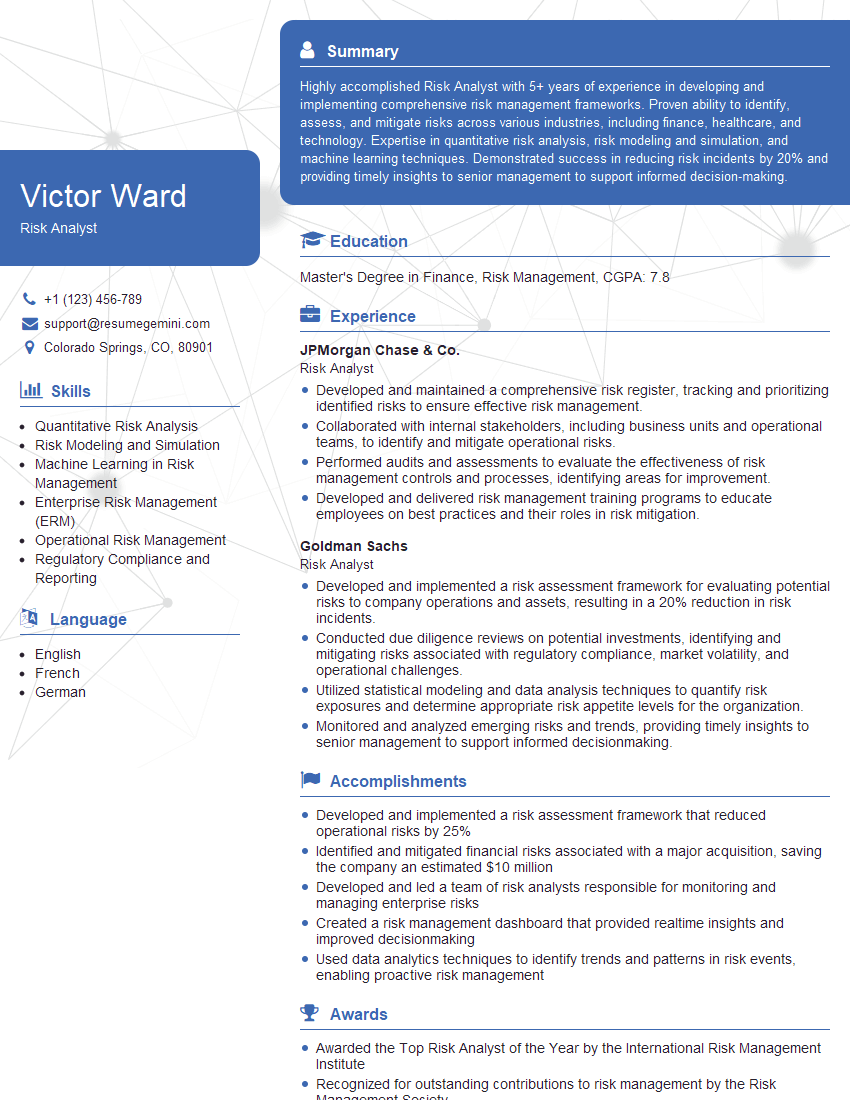

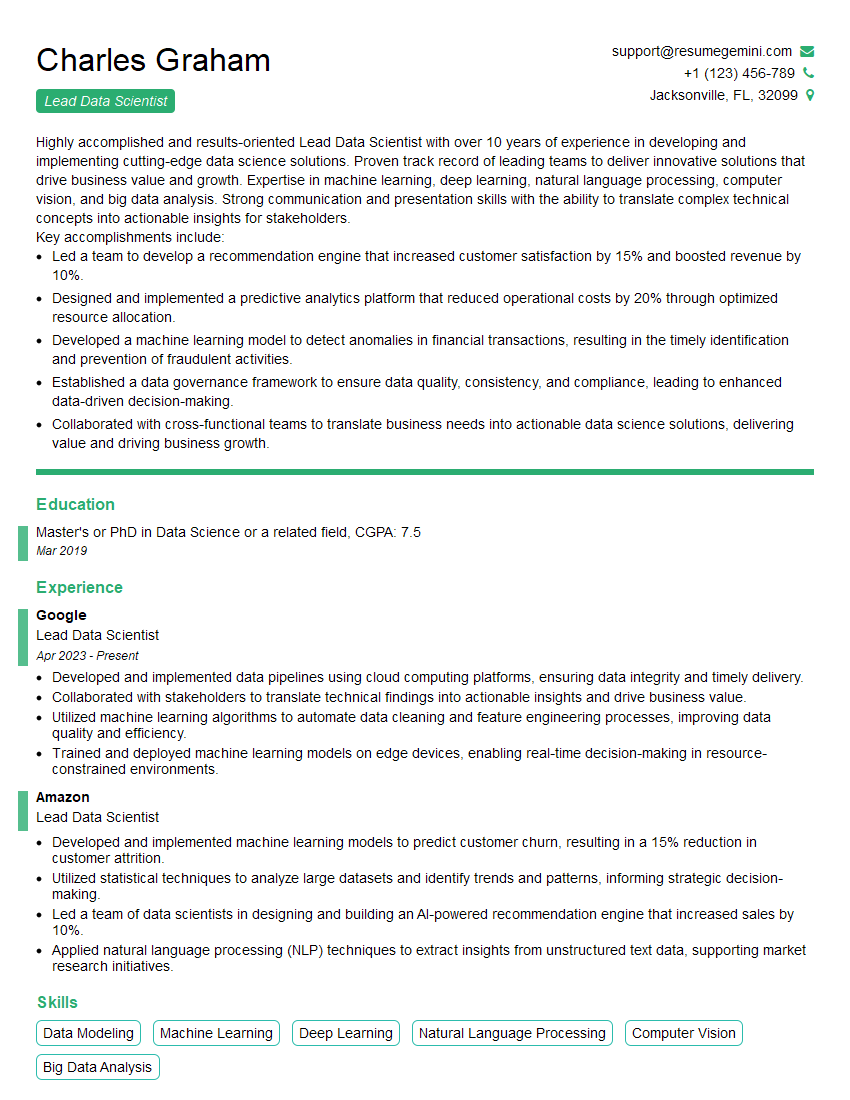

- Ace those interviews! Prepare effectively by reviewing the Top 50 Most Common Interview Questions on ResumeGemini.

- Navigate your job search with confidence! Explore a wide range of Career Tips on ResumeGemini. Learn about common challenges and recommendations to overcome them.

- Craft the perfect resume! Master the Art of Resume Writing with ResumeGemini’s guide. Showcase your unique qualifications and achievements effectively.

- Don’t miss out on holiday savings! Build your dream resume with ResumeGemini’s ATS optimized templates.

Q 16. What are the common metrics used to evaluate a linear regression model?

Several common metrics evaluate linear regression models. They broadly fall into two categories: those based on the residuals (differences between actual and predicted values), and those assessing the overall model fit.

- R-squared (R²): Represents the proportion of variance in the dependent variable explained by the independent variables. A higher R² (closer to 1) indicates a better fit, but be wary of overfitting; a high R² on training data doesn’t guarantee good performance on unseen data.

- Adjusted R²: A modified version of R² that adjusts for the number of predictors in the model. It penalizes the inclusion of irrelevant variables, which is crucial to avoid overfitting.

- Mean Squared Error (MSE): The average of the squared differences between actual and predicted values. It penalizes larger errors more heavily than smaller ones.

- Root Mean Squared Error (RMSE): The square root of MSE. It’s useful because it’s in the same units as the dependent variable, making it easier to interpret.

- Mean Absolute Error (MAE): The average of the absolute differences between actual and predicted values. Less sensitive to outliers than MSE or RMSE.

Q 17. What is the difference between RMSE and MAE?

Both RMSE and MAE measure the average prediction error, but they differ in how they handle errors. RMSE squares the errors before averaging, while MAE uses absolute values. This means RMSE gives more weight to larger errors.

Imagine predicting house prices. A large error on a very expensive house will significantly impact the RMSE, while the MAE would be less affected. Therefore, choosing between them depends on the context and whether you want to be more sensitive to large errors (RMSE) or treat all errors equally (MAE).
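A small sketch makes the contrast visible. The two hypothetical sets of predictions below have the same total absolute error, but in one it is spread evenly and in the other it is concentrated in a single large miss.

```python
import math


def mae(y_true, y_pred):
    """Mean Absolute Error."""
    return sum(abs(a - p) for a, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root Mean Squared Error."""
    mse = sum((a - p) ** 2 for a, p in zip(y_true, y_pred)) / len(y_true)
    return math.sqrt(mse)


# Hypothetical house prices (thousands of dollars).
actual = [200, 250, 300, 350, 400]
small_errors = [210, 240, 310, 340, 410]   # every prediction is off by 10
one_big_error = [200, 250, 300, 350, 450]  # one prediction is off by 50

print(mae(actual, small_errors), rmse(actual, small_errors))
print(mae(actual, one_big_error), rmse(actual, one_big_error))
```

MAE is identical for both sets of predictions, while RMSE is more than twice as large for the set containing the single big miss.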

Q 18. Explain the bias-variance tradeoff in the context of linear regression.

The bias-variance tradeoff is a fundamental concept in machine learning, including linear regression. It describes the balance between model simplicity (low variance, high bias) and model complexity (high variance, low bias).

- High Bias (Underfitting): A model with high bias is too simple to capture the complexity of the data. It consistently misses the target (high systematic error). Imagine trying to fit a straight line to a clearly curved dataset.

- High Variance (Overfitting): A model with high variance is too complex and fits the training data too closely, including its noise. It performs well on the training data but poorly on unseen data (high random error). Think of a highly wiggly line that perfectly captures every point in the training data but is far too specific to generalize well.

The goal is to find the ‘sweet spot’ with a balance between bias and variance—a model complex enough to capture the data’s underlying structure but not so complex that it fits noise.

Q 19. How can you improve the accuracy of a linear regression model?

Improving the accuracy of a linear regression model involves several strategies:

- Feature Engineering: Creating new features from existing ones can capture non-linear relationships. For example, combining two variables multiplicatively might reveal a previously hidden pattern.

- Feature Selection: Removing irrelevant or redundant features can improve the model’s performance and reduce overfitting. Techniques like stepwise regression help identify the most important features.

- Regularization (L1 or L2): Adding penalties to the model’s complexity reduces overfitting by shrinking the coefficients of less important variables towards zero. L1 regularization (LASSO) can also perform feature selection.

- Polynomial Regression: If the relationship is not linear, transforming the features (e.g., creating squared or cubed terms) can fit a more complex curve.

- Data Transformation: Transforming the dependent or independent variables (e.g., using log transformation) can improve linearity and address heteroscedasticity (unequal variance of residuals).

- Addressing Outliers: Outliers disproportionately influence the model. They should be investigated to understand their cause, and if appropriate, removed or transformed.

Q 20. How do you handle missing values in your dataset before applying linear regression?

Handling missing values is crucial before applying linear regression. Ignoring them can lead to biased and inaccurate results.

- Deletion: Removing rows or columns with missing values is simple but can lead to significant data loss, especially if the missing data isn’t randomly distributed.

- Imputation: Replacing missing values with estimated values. Common methods include:

  - Mean/Median/Mode Imputation: Replacing missing values with the mean, median, or mode of the respective feature. Simple, but can distort the data’s distribution.

  - K-Nearest Neighbors (KNN) Imputation: Predicting missing values based on the values of similar data points.

  - Multiple Imputation: Generating multiple imputed datasets and combining the results to account for the uncertainty in imputation.

The best method depends on the nature of the data, the amount of missing data, and the potential impact on the analysis.
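Mean and median imputation can be sketched in a few lines. The function name, the use of `None` as the missing-value marker, and the billing data are assumptions for illustration.

```python
import statistics


def impute(values, strategy="mean"):
    """Replace None entries with the mean or median of the observed values."""
    observed = [v for v in values if v is not None]
    if strategy == "mean":
        fill = statistics.fmean(observed)
    elif strategy == "median":
        fill = statistics.median(observed)
    else:
        raise ValueError(f"unknown strategy: {strategy!r}")
    return [fill if v is None else v for v in values]


# Hypothetical feature with two missing entries and one extreme value.
monthly_bill = [40.0, None, 55.0, 60.0, None, 500.0]

mean_filled = impute(monthly_bill, "mean")
median_filled = impute(monthly_bill, "median")
print(mean_filled)
print(median_filled)
```

Because of the extreme value, the mean-based fill is pulled far above the typical bill, which is one reason the median is often preferred for skewed features.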

Q 21. Describe the process of feature scaling and its importance in linear regression.

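Feature scaling transforms the features to a similar scale. Plain OLS does not strictly need it: rescaling a feature only rescales its coefficient and leaves the predictions unchanged. Scaling matters when the model is fit by gradient descent, which converges slowly when features have very different magnitudes, and when regularization is used, because the penalty then depends on the units in which each feature is measured.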

Common scaling methods include:

- Standardization (Z-score normalization): Centers the data around zero with a standard deviation of one.

z = (x - μ) / σ

where x is the feature value, μ is the mean, and σ is the standard deviation.

- Min-Max scaling: Scales the data to a range between 0 and 1.

x' = (x - min) / (max - min)

where x' is the scaled value, min is the minimum value, and max is the maximum value.

Scaling prevents features with larger values from dominating the penalty in regularized models and improves the efficiency and performance of gradient descent-based optimization algorithms.
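Both scaling methods can be sketched in plain Python. The function names and the feature values are illustrative, and the standardization below uses the population standard deviation, which is one common convention.

```python
import statistics


def standardize(values):
    """Z-score scaling: subtract the mean, divide by the standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)  # population standard deviation
    return [(v - mean) / std for v in values]


def min_max_scale(values):
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical feature: house sizes in square feet.
sizes = [1000, 1500, 2000, 2500, 3000]

z_scores = standardize(sizes)
scaled = min_max_scale(sizes)
print(z_scores)
print(scaled)
```

In a real workflow, the mean, standard deviation, minimum, and maximum should be computed on the training data only and then reused to transform the test data.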

Q 22. Explain regularization techniques in linear regression.

Regularization techniques in linear regression help prevent overfitting, a situation where the model performs well on training data but poorly on unseen data. This happens when the model learns the noise in the training data instead of the underlying patterns. Regularization adds a penalty to the model’s complexity, discouraging it from fitting the training data too closely.

Two common regularization techniques are:

- L1 Regularization (LASSO): Adds a penalty proportional to the absolute value of the model’s coefficients. This can lead to some coefficients becoming exactly zero, effectively performing feature selection. The penalty term is added to the loss function:

Loss = MSE + λ * Σ|βi|

where λ is the regularization parameter and βi are the coefficients.

- L2 Regularization (Ridge): Adds a penalty proportional to the square of the model’s coefficients. This shrinks the coefficients towards zero, but doesn’t force them to be exactly zero. The penalty term is added to the loss function:

Loss = MSE + λ * Σβi²

Choosing the right λ is crucial. Too small a λ leads to overfitting, while too large a λ leads to underfitting. Techniques like cross-validation are used to find the optimal λ.

Q 23. What are some common pitfalls to avoid when using linear regression?

Several pitfalls can hinder the effectiveness of linear regression. Here are some common ones:

- Non-linearity: Linear regression assumes a linear relationship between variables. If the relationship is non-linear, the model will be inaccurate. Consider transforming variables (e.g., logarithmic transformation) or using a non-linear model.

- Multicollinearity: High correlation between predictor variables makes it difficult to isolate the individual effect of each variable on the response. Techniques like principal component analysis (PCA) or removing one of the correlated variables can help.

- Outliers: Outliers can significantly influence the regression line. Identifying and handling outliers (e.g., removing them or using robust regression techniques) is crucial.

- Heteroscedasticity: This refers to unequal variance in the error terms. It violates one of the assumptions of linear regression and can be addressed by transformations or using weighted least squares.

- Ignoring Interactions: Failing to consider interactions between predictor variables can lead to an incomplete and inaccurate model. Include interaction terms in the model if there’s evidence of interaction effects.

Q 24. How do you interpret the p-values in linear regression?

P-values in linear regression represent the probability of observing a coefficient estimate at least as extreme as the one obtained if there were no relationship between the predictor variable and the response variable (i.e., if the null hypothesis were true). A low p-value (typically below a significance level of 0.05) is evidence against the null hypothesis, indicating a statistically significant relationship. However, a high p-value doesn’t necessarily mean there’s no relationship; it just means there isn’t enough evidence to reject the null hypothesis.

It’s important to remember that p-values don’t indicate the strength or importance of the relationship, only its statistical significance. Consider effect sizes and confidence intervals for a more complete understanding.

Q 25. Explain the concept of ordinary least squares (OLS).

Ordinary Least Squares (OLS) is a method for estimating the parameters in a linear regression model. It aims to find the line that minimizes the sum of the squared differences between the observed values and the values predicted by the model. In simpler terms, it finds the line that’s closest to all the data points, where ‘closest’ is measured by the sum of squared vertical distances.

Mathematically, OLS seeks to minimize the sum of squared residuals: Σ(yi - ŷi)², where yi are the observed values and ŷi are the predicted values.

If the assumptions of linear regression are met, OLS gives unbiased estimates of the regression coefficients with the lowest variance among all linear unbiased estimators (the Gauss-Markov theorem).

Q 26. What is Gradient Descent and how is it used in linear regression?

Gradient Descent is an iterative optimization algorithm used to find the best fit line in linear regression by minimizing the loss function (often the Mean Squared Error). It works by repeatedly adjusting the model’s parameters (coefficients) in the direction of the steepest descent of the loss function.

Imagine walking down a hill; Gradient Descent is like taking small steps downhill, always choosing the direction of steepest descent until you reach the lowest point (the minimum of the loss function). The ‘step size’ is controlled by a parameter called the learning rate. A smaller learning rate leads to slower but more stable convergence, while a larger learning rate can speed things up but risks overshooting the minimum or even diverging.

The process involves calculating the gradient (slope) of the loss function with respect to each parameter, and updating the parameters using the formula: θj = θj - α * ∂J(θ)/∂θj, where θj is the j-th parameter, α is the learning rate, and ∂J(θ)/∂θj is the partial derivative of the loss function with respect to θj.
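The update rule can be sketched for a single predictor with MSE as the loss J. The function name, the learning rate, the iteration count, and the data are illustrative assumptions; the learning rate in particular was picked to be small enough for this specific dataset.

```python
def gradient_descent(x, y, learning_rate=0.05, n_iterations=2000):
    """Fit y = slope * x + intercept by gradient descent on the MSE."""
    slope, intercept = 0.0, 0.0
    n = len(x)
    for _ in range(n_iterations):
        # Prediction error for every point under the current parameters.
        errors = [slope * a + intercept - b for a, b in zip(x, y)]
        # Partial derivatives of the MSE with respect to each parameter.
        grad_slope = (2 / n) * sum(e * a for e, a in zip(errors, x))
        grad_intercept = (2 / n) * sum(errors)
        # Step against the gradient: theta_j = theta_j - alpha * dJ/dtheta_j.
        slope -= learning_rate * grad_slope
        intercept -= learning_rate * grad_intercept
    return slope, intercept


# Hypothetical noise-free data generated from y = 2x + 1.
x = [0.0, 1.0, 2.0, 3.0, 4.0]
y = [1.0, 3.0, 5.0, 7.0, 9.0]

gd_slope, gd_intercept = gradient_descent(x, y)
print(f"slope={gd_slope:.4f}, intercept={gd_intercept:.4f}")
```

Both gradients are computed from the same set of errors before either parameter changes, so the parameters are updated simultaneously rather than one after the other.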

Q 27. How does the choice of loss function affect linear regression?

The choice of loss function significantly impacts the linear regression model. The most common loss function is the Mean Squared Error (MSE), which calculates the average squared difference between the observed and predicted values. MSE is sensitive to outliers because squaring large errors amplifies their effect.

Alternatives include the Mean Absolute Error (MAE), which calculates the average absolute difference between observed and predicted values. MAE is less sensitive to outliers than MSE. The choice depends on the specific problem and the nature of the data. If outliers are a significant concern, MAE might be preferred. If the goal is to minimize the overall squared error, MSE is a suitable choice.

Q 28. Describe a situation where you used linear regression to solve a real-world problem.

In a previous project, I used linear regression to predict customer churn for a telecommunications company. We had a dataset containing various customer features (e.g., age, contract length, monthly bill, customer service calls) and a binary churn variable (1 for churned, 0 for not churned).

First, I cleaned and preprocessed the data, handling missing values and converting categorical variables into numerical representations (using one-hot encoding). Then, I used linear regression to build a model predicting the probability of churn based on the customer features. While logistic regression is more appropriate for binary classification, linear regression provided a good baseline model and insights into the relative importance of features in predicting churn. I evaluated the model’s performance using metrics like RMSE and R-squared, and the results showed that contract length and the number of customer service calls were significant predictors of churn. The model provided valuable insights into customer retention strategies, enabling the company to identify and proactively target at-risk customers.

Key Topics to Learn for Linear Regression Interview

- Simple Linear Regression: Understanding the fundamental concepts, assumptions, and limitations. Practical application: Predicting sales based on advertising spend.

- Multiple Linear Regression: Extending the model to incorporate multiple predictor variables. Practical application: Predicting house prices based on size, location, and age.

- Model Evaluation Metrics: Mastering R-squared, Adjusted R-squared, RMSE, and MAE to assess model performance. Practical application: Choosing the best model among several candidates.

- Assumptions of Linear Regression: Understanding and diagnosing issues like linearity, independence of errors, homoscedasticity, and normality of residuals. Practical application: Identifying and addressing violations of assumptions to improve model accuracy.

- Feature Engineering and Selection: Techniques for creating new features and selecting the most relevant ones to improve model performance. Practical application: Transforming categorical variables into numerical representations for use in the model.

- Regularization Techniques (Ridge and Lasso): Understanding and applying techniques to prevent overfitting and improve model generalization. Practical application: Handling high-dimensional datasets with many predictor variables.

- Interpreting Regression Coefficients: Understanding the meaning and significance of coefficients in the context of the problem. Practical application: Explaining the impact of each predictor variable on the outcome.

- Model Diagnostics and Residual Analysis: Identifying outliers, influential points, and potential violations of assumptions through residual plots and other diagnostic tools. Practical application: Detecting and handling anomalies in the data.

Next Steps

Mastering linear regression is crucial for a successful career in data science, machine learning, and related fields. A strong understanding of these concepts will significantly enhance your interview performance and open doors to exciting opportunities. To maximize your job prospects, it’s essential to create a compelling and ATS-friendly resume that highlights your skills and experience. We highly recommend using ResumeGemini to build a professional resume that showcases your expertise in linear regression. ResumeGemini provides helpful resources and examples of resumes tailored to highlight skills in Linear Regression, making the process easier and more effective. Take the next step towards your dream job today!
