Interviews are opportunities to demonstrate your expertise, and this guide is here to help you shine. Explore the essential Linux/Unix Server Administration interview questions that employers frequently ask, paired with strategies for crafting responses that set you apart from the competition.

Questions Asked in Linux/Unix Server Administration Interview

Q 1. Explain the differences between hard links and symbolic links.

Hard links and symbolic links are both ways to create references to files, but they differ significantly in how they work. Think of it like this: a hard link is like having multiple identical copies of a key that open the same door (the file), while a symbolic link is like having a note that says “This key is located at…” pointing to the original key’s location.

Hard Links: A hard link is a directory entry that points to the same inode (index node) as the original file. Multiple hard links can exist to the same file. Deleting one hard link doesn’t affect the others, as long as at least one link remains. Hard links cannot point to directories, only regular files.

- Advantage: No extra storage is used, because every hard link refers to the same data; deleting a hard link simply decreases the link count, and the data is freed only when the count reaches zero.

- Disadvantage: Cannot link across file systems; cannot link to directories.

Symbolic Links (or Symlinks): A symbolic link, or soft link, is a file that contains a path to another file or directory. It’s essentially a shortcut. Deleting a symbolic link does not affect the target file or directory. Symlinks can point to directories and even to files across file systems.

- Advantage: More flexible, can point to directories and across file systems.

- Disadvantage: If the target file is deleted, the symlink becomes broken (dead link).

Example: Let’s say you have a large file named mydata.txt. You could create a hard link to it named mydata_link. Both names would point to the same data. If you deleted mydata.txt, mydata_link would still exist and work. A symbolic link, on the other hand, would simply point to the location of mydata.txt; if mydata.txt is removed, accessing the symbolic link would result in an error.
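The behavior described above can also be observed programmatically. The following is a minimal sketch in Python, assuming a Linux filesystem that supports both link types; the function name `link_demo` is hypothetical, and the file names simply mirror the example.

```python
import os
import tempfile

def link_demo():
    """Create a file, a hard link and a symlink, then delete the original."""
    with tempfile.TemporaryDirectory() as d:
        original = os.path.join(d, "mydata.txt")
        hard = os.path.join(d, "mydata_link")
        soft = os.path.join(d, "mydata_symlink")
        with open(original, "w") as f:
            f.write("payload")

        os.link(original, hard)      # equivalent to: ln mydata.txt mydata_link
        os.symlink(original, soft)   # equivalent to: ln -s mydata.txt mydata_symlink

        # Both names resolve to the same inode, and the link count is now 2.
        same_inode = os.stat(original).st_ino == os.stat(hard).st_ino
        link_count = os.stat(original).st_nlink

        os.remove(original)

        # The data is still reachable through the hard link...
        with open(hard) as f:
            hard_still_readable = f.read() == "payload"
        # ...but the symlink now points at a path that no longer exists.
        symlink_broken = os.path.islink(soft) and not os.path.exists(soft)
        return same_inode, link_count, hard_still_readable, symlink_broken
```

Calling `link_demo()` returns four values: whether the inodes matched, the link count before deletion, whether the hard link still held the data, and whether the symlink ended up broken.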

ln mydata.txt mydata_link # Creates a hard link

ln -s mydata.txt mydata_symlink # Creates a symbolic link

Q 2. Describe the Linux boot process.

The Linux boot process is a multi-stage procedure that starts from powering on the system and ends with a fully functional operating system. It involves several crucial steps:

- BIOS/UEFI Initialization: The system’s Basic Input/Output System (BIOS) or Unified Extensible Firmware Interface (UEFI) performs a POST (Power-On Self-Test), identifies hardware components, and loads the boot loader.

- Boot Loader: The boot loader (like GRUB or systemd-boot) is a small program that loads the Linux kernel.

- Kernel Loading: The kernel is the core of the operating system. It initializes hardware drivers, mounts the root filesystem, and starts the init process.

- Init Process: Traditionally, init (process ID 1) manages system initialization. Systemd is the modern standard init system. It starts essential services and daemons that run in the background.

- System Services: The init system starts system services such as networking, logging, and user management.

- Login/Runlevel: Once initialization is complete, the system reaches its default target (the systemd equivalent of a runlevel) and presents a login prompt, either graphical or on the command line. After you authenticate, your shell starts and your Linux session begins.

In a nutshell: BIOS/UEFI wakes up the hardware → Boot Loader finds the kernel → Kernel starts up the system → Init system starts services → You get to your desktop or shell.

Q 3. How do you troubleshoot network connectivity issues on a Linux server?

Troubleshooting network connectivity problems on a Linux server requires a systematic approach. Here’s a step-by-step process:

- Check the basics: Verify the network cable is properly connected, the network interface card (NIC) is working, and the server’s IP address, subnet mask, and gateway are correctly configured (using `ifconfig` or `ip addr show`).

- Ping the gateway: Try pinging the default gateway (`ping` followed by the gateway’s IP address). If it fails, there’s a problem between the server and the network infrastructure.

- Ping an external host: Ping a known working external host (e.g., `ping google.com` or `ping 8.8.8.8`) to see if you have internet access. If the IP address responds but the hostname does not, the problem is likely DNS resolution.

- Check network services: Ensure network services (like SSH, HTTP, DNS) are running (using `systemctl status sshd`, `systemctl status apache2`, etc.). If not, start them or troubleshoot the service itself.

- Check firewall rules: Examine firewall rules using `iptables -L` (or `firewall-cmd --list-all` for firewalld) to see if they are blocking incoming or outgoing connections on the required ports.

- Examine network configuration files: Inspect the files (usually found in `/etc/sysconfig/network-scripts/` for older systems, or use `nmcli` for NetworkManager) for any errors or misconfigurations.

- Check the network logs: Look at relevant logs (like `/var/log/syslog` or specific logs for your network manager) to identify any network-related errors.

- Use network tools: Tools such as `traceroute` or `tcpdump` can provide additional information about the network path and packet traffic.

Remember to replace any placeholders (such as the gateway address) with the actual values for your environment.
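When ping succeeds but an application still cannot connect, it helps to test the specific TCP port. The sketch below is one way to do that in Python using only the standard library; the function name `tcp_port_open` and the default timeout are assumptions made for illustration.

```python
import socket

def tcp_port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the name and attempts the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False
```

For example, `tcp_port_open("127.0.0.1", 22)` reports whether something is listening on the local SSH port. A False result for a service that is running points toward a firewall rule or a wrong listen address.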

Q 4. What are different ways to monitor system performance in Linux?

Linux offers a plethora of tools for system performance monitoring. The choice depends on your specific needs and the level of detail you require:

- `top`: A dynamic real-time display of system processes. Shows CPU usage, memory usage, and more. Pressing keys such as `1` (toggle the per-CPU view), `M` (sort by memory), or `P` (sort by CPU) changes what is displayed and how processes are ordered.

- `htop`: An enhanced interactive version of `top` with a more user-friendly interface. It provides a better visual representation of processes and resource usage.

- `ps`: A powerful tool that displays information about running processes. Provides a snapshot of processes at a point in time. Different options provide different levels of detail (e.g., `ps aux`).

- `iostat`: Monitors disk I/O statistics. Shows disk transfer rates, average wait times, and more.

- `vmstat`: Shows various virtual memory statistics. Useful for assessing memory usage and swapping activity.

- `mpstat`: Provides CPU statistics per core or processor. Helpful for identifying CPU bottlenecks.

- Monitoring tools: More sophisticated monitoring tools like Nagios, Zabbix, or Prometheus offer centralized dashboards, automated alerts, and long-term trend analysis. These are particularly useful for large-scale deployments.

Example: To quickly assess CPU and memory usage, you might start with top or htop. If you see a process consuming excessive resources, ps can help identify the process ID, after which you can investigate further.
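Many of these tools read their numbers from the `/proc` filesystem, so the same data is available to scripts. Below is a small Python sketch that parses text in the format of `/proc/meminfo`. The helper names are hypothetical, and it assumes a kernel that reports the `MemAvailable` field (3.14 or later).

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a {field: value} dictionary."""
    values = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        fields = rest.split()
        # Lines look like "MemTotal:       8000000 kB".
        if sep and fields and fields[0].isdigit():
            values[name.strip()] = int(fields[0])
    return values

def memory_used_percent(text):
    """Percentage of memory in use, based on MemTotal and MemAvailable."""
    info = parse_meminfo(text)
    total = info["MemTotal"]
    available = info["MemAvailable"]
    return round(100.0 * (total - available) / total, 1)
```

On a live system the input would come from `open("/proc/meminfo").read()`. Using `MemAvailable` instead of `MemFree` avoids counting reclaimable page cache as used memory.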

Q 5. How do you manage user accounts and permissions in Linux?

User account and permission management is critical for Linux system security. Here’s how it works:

- User creation and deletion: The primary commands are `useradd` (for creating) and `userdel` (for deleting). You can specify options like the user’s home directory, shell, and group membership (e.g., `useradd -m -g users newuser` creates a user named ‘newuser’, gives them a home directory and adds them to the ‘users’ group).

- Password management: Use `passwd` to change passwords for users (`passwd newuser`).

- Group management: Use `groupadd` and `groupdel` to manage user groups, and `gpasswd` to modify group membership. Groups allow you to assign permissions to sets of users collectively.

- Permissions: Linux uses a three-part permission system (owner, group, others) using read (r), write (w), and execute (x) permissions. These permissions are typically set using the `chmod` command (e.g., `chmod 755 myfile` sets read, write, and execute for the owner, and read and execute permissions for group and others).

- Ownership: The `chown` command modifies the owner of a file or directory, and `chgrp` changes its group ownership.

- `sudo`: Allows specific users to execute commands with elevated privileges (as root) without needing to log in as root directly, which is best practice for security.
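The octal notation used with chmod maps directly onto the nine r/w/x bits: each digit is the sum of 4 (read), 2 (write), and 1 (execute). The short Python sketch below makes that mapping explicit; `symbolic` is a hypothetical helper written for this illustration.

```python
def symbolic(mode):
    """Convert an octal permission value such as 0o755 to 'rwxr-xr-x'."""
    letters = "rwxrwxrwx"  # owner, group, others
    return "".join(
        letter if mode & (1 << (8 - position)) else "-"
        for position, letter in enumerate(letters)
    )
```

With this helper, `symbolic(0o755)` gives the owner all three bits (7 = 4 + 2 + 1) and gives group and others read and execute (5 = 4 + 1), matching the chmod example above.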

Example: To create a new user named ‘john’ belonging to the ‘developers’ group, with a home directory, and set their password, you’d use:

useradd -m -g developers john

passwd john

Q 6. Explain the concept of process management in Linux (using tools like top, htop, ps).

Process management in Linux involves monitoring, controlling, and managing the various processes running on the system. Tools like top, htop, and ps are instrumental in this:

- `top`: Provides a dynamic, real-time view of running processes, sorted by CPU usage, memory usage, etc. It’s ideal for quickly identifying resource-intensive processes.

- `htop`: An enhanced version of `top` with a more user-friendly interface. It makes it easier to visually identify and manage processes.

- `ps`: A more static tool that provides a snapshot of processes at a given point in time. Offers various options to show different levels of information about processes (e.g., process ID, memory usage, command line). `ps aux` is a commonly used variant that shows a comprehensive list of processes.

Beyond monitoring, these tools often provide basic management features. In htop, you can typically kill or stop processes directly via the interface. Using the process ID obtained from ps or top, you can send signals to processes using kill: kill followed by the PID sends SIGTERM and asks the process to exit cleanly, while kill -9 followed by the PID sends SIGKILL and forcefully terminates it, so it is best kept as a last resort.

Example: If your server is experiencing slow performance, top or htop would quickly show you which process is consuming most of the CPU or memory. You could then use ps to get the process ID and investigate further to fix the cause.
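The usual pattern when stopping a process is to request a clean exit first and only force termination if that fails. Here is a hedged Python sketch of that pattern; the function names and the five-second grace period are assumptions, and the child process is a throwaway created purely for demonstration.

```python
import signal
import subprocess
import sys

def stop_process(proc, grace_seconds=5.0):
    """Ask a child process to exit with SIGTERM; fall back to SIGKILL."""
    proc.send_signal(signal.SIGTERM)      # like: kill <pid>
    try:
        return proc.wait(timeout=grace_seconds)
    except subprocess.TimeoutExpired:
        proc.kill()                       # like: kill -9 <pid>
        return proc.wait()

def spawn_sleeper():
    """Start a throwaway child process that would otherwise run for a minute."""
    return subprocess.Popen([sys.executable, "-c", "import time; time.sleep(60)"])
```

On Linux, the return code of a process ended by a signal is the negated signal number, so a clean SIGTERM stop reports -15 and a forced SIGKILL reports -9.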

Q 7. How do you handle disk space issues on a Linux server?

Handling disk space issues on a Linux server involves identifying the cause of the problem and then implementing the appropriate solution. Here’s a systematic approach:

- Identify the problem: Use the `df -h` command to view disk space usage. It shows the disk partitions, their mount points, the amount of used and available space, and the percentage used. This highlights which partition is running low on space.

- Locate large files or directories: Once you’ve identified the partition, use `du -sh *` (in the root directory of the partition) or `du -sh` followed by a directory path to find which files or directories are consuming the most space. `du -h --max-depth=1` shows the size of directories at the current level.

- Remove unnecessary files: Delete unnecessary log files, temporary files, or old backups. Always be cautious when deleting files. It’s always wise to back up files before deleting them!

- Clean up log files: Regularly rotate log files using `logrotate` to avoid them growing indefinitely.

- Optimize databases: If you have databases, optimize them to remove redundant data and unused indexes.

- Archive old data: Archive old data to external storage to free up space on the server. Consider using compression (e.g., `tar -czvf archive.tar.gz` followed by the files or directories to archive).

- Increase disk space: If you’ve exhausted all other options, consider increasing disk space either by adding a new physical drive or expanding the current partition (this should be done carefully and requires understanding of partitioning tools like `fdisk` or `gparted`). Use appropriate tools for your system. There are risks to improper use!

Example: If df -h shows your /var partition is full, you would use du -sh /var/* to locate the largest directories within /var. You might find that /var/log is consuming excessive space and then proceed to cleanup or rotate those log files.
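The "find the biggest directories" step can be scripted when it needs to run regularly. The following Python sketch approximates `du -sh *` for one directory. The function names are hypothetical, and it sums apparent file sizes, so results can differ slightly from `du`, which counts allocated disk blocks.

```python
import os

def dir_size(path):
    """Total apparent size in bytes of all regular files under path."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            full = os.path.join(root, name)
            if not os.path.islink(full):  # do not follow symlinks
                total += os.path.getsize(full)
    return total

def largest_children(path, top=5):
    """Return the `top` largest entries directly under path as (bytes, name)."""
    sizes = []
    for entry in os.scandir(path):
        if entry.is_dir(follow_symlinks=False):
            sizes.append((dir_size(entry.path), entry.name))
        elif entry.is_file(follow_symlinks=False):
            sizes.append((entry.stat().st_size, entry.name))
    return sorted(sizes, reverse=True)[:top]
```

Running `largest_children("/var")` as a user with sufficient read permissions would list the heaviest entries first, which is usually enough to decide where to look next.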

Q 8. Describe different file systems used in Linux (ext4, xfs, etc.).

Linux offers a variety of file systems, each with its strengths and weaknesses. The choice depends heavily on the specific needs of the server and its applications. Here are a few popular examples:

- ext4 (Fourth Extended File System): This is the default file system for many modern Linux distributions. It’s a journaling file system, meaning it logs changes before they’re written to the disk, improving data integrity and recovery. Ext4 offers good performance, scalability, and robust features like advanced metadata handling and large file support. It’s a safe and reliable choice for most general-purpose servers.

- XFS (X Filesystem): Designed for very large filesystems and high performance, XFS excels in environments with massive datasets, like database servers or media storage. It handles large files and directories exceptionally well and boasts excellent performance under heavy I/O load. Its journaling capabilities offer similar data integrity benefits to ext4. However, an XFS filesystem can be grown but not shrunk with the standard tools, and some older distributions do not install its utilities by default.

- Btrfs (B-tree file system): A relatively newer file system focused on advanced features like data integrity, copy-on-write snapshots, and built-in RAID capabilities. Btrfs aims to provide enhanced data safety and flexibility. While promising, some of its features (notably the RAID 5/6 modes) are still considered unstable, so it warrants more caution than mature options like ext4 and XFS.

- Other File Systems: While less common for general server deployments, you might encounter others such as FAT32 (for compatibility with Windows systems), NTFS (also for Windows compatibility; read/write access on Linux is available through the ntfs-3g driver or the newer in-kernel ntfs3 driver), and ReiserFS (an older option with less widespread use).

Choosing the right file system involves considering factors like the size of your data, the expected I/O load, the importance of data integrity, and the level of support available.

Q 9. What are the advantages and disadvantages of using virtualization?

Virtualization offers significant advantages in server management, but also has some drawbacks. Let’s explore both:

Advantages:

- Resource Optimization: Virtualization allows you to run multiple operating systems and applications on a single physical server, maximizing hardware utilization and reducing costs. Think of it as having several apartments within one building – far more efficient than having separate buildings for each tenant.

- Improved Disaster Recovery: Creating virtual machine (VM) snapshots enables quick and easy recovery from failures. You can revert to a previous state within minutes, limiting downtime. It’s like having an automatic backup of your entire apartment, allowing for immediate restoration after a problem.

- Simplified Management: Virtual machines are easily created, copied, moved, and managed, leading to simplified server administration. You can easily clone a VM to rapidly provision new servers.

- Increased Flexibility and Scalability: VMs can be easily migrated between different physical servers, allowing for flexibility and scalability. If one server fails, you can easily move the VMs to another.

- Cost Savings: Reduced hardware costs, power consumption, and cooling needs compared to using separate physical servers.

Disadvantages:

- Performance Overhead: Hypervisors (the software that manages the VMs) introduce some performance overhead. However, modern hypervisors minimize this impact.

- Complexity: Managing a virtualized environment can be more complex than managing individual physical servers, and it requires learning the specific hypervisor software in use.

- Security Concerns: Proper security measures are critical in virtualized environments to prevent VM escape, malicious VM activity, and hypervisor vulnerabilities. This requires careful configuration and security policies.

- Single Point of Failure: If the hypervisor fails, all VMs hosted on that physical server can be affected. Redundancy is essential.

In conclusion, virtualization is a powerful tool, but a well-planned and carefully managed virtual environment is key to realizing its benefits while mitigating potential challenges.

Q 10. Explain the concept of SSH and its secure configurations.

SSH (Secure Shell) is a cryptographic network protocol that allows for secure remote login and other secure network services over an unsecured network. In simpler terms, it’s a much safer alternative to telnet. Think of it as using a secure, encrypted tunnel to communicate with a server, protecting your login credentials and all data transmitted.

Secure SSH Configurations: Securing SSH involves several critical steps:

- Disable Password Authentication: This is the most crucial step. Instead of passwords, use public-key authentication. This involves generating an SSH key pair (public and private key) on your client machine, and placing the public key on the server. This prevents brute-force attacks targeting passwords.

- Restrict SSH Access: Configure your firewall to only allow SSH connections from specific IP addresses or networks. This prevents unauthorized access from untrusted sources.

- Change the Default SSH Port: Move SSH from the default port (22) to a less common port. This reduces noise from automated scans, but it does not stop a determined attacker, so treat it as a supplement to the other measures and not a replacement.

- Regularly Update SSH: Keep your SSH server software updated to patch security vulnerabilities. This is critical for keeping the SSH service secure.

- Enable SSH Logging: Log all SSH connections, both successful and failed, to monitor for suspicious activity. You can then audit this data to ensure security.

- Fail2ban: Implement a tool like Fail2ban, which automatically blocks IP addresses that attempt multiple failed SSH logins. This acts as an automated defense against brute-force attacks.

Example of disabling password authentication (requires root privileges):

sudo nano /etc/ssh/sshd_config

Find the lines PasswordAuthentication and PubkeyAuthentication and modify them to:

PasswordAuthentication no

PubkeyAuthentication yes

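Restart the SSH service with sudo systemctl restart sshd (the unit is named ssh on Debian and Ubuntu). Running sudo sshd -t beforehand checks the configuration file for syntax errors, and keeping your current session open until you have confirmed that key-based login works avoids locking yourself out.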

Implementing these configurations will make your SSH server significantly more secure.
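A quick audit script can confirm that a configuration file actually disables password logins. The Python sketch below is a simplified reader for `sshd_config`-style text. The function names are hypothetical, and it deliberately ignores `Include` files and stops at the first `Match` block, so it is only an approximation of how sshd itself evaluates the file.

```python
def sshd_settings(config_text):
    """Return {keyword: value} for the global section of sshd_config text."""
    settings = {}
    for raw in config_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        parts = line.split(None, 1)
        key = parts[0].lower()  # keywords are case-insensitive
        if key == "match":
            break  # conditional blocks are out of scope for this sketch
        value = parts[1].strip() if len(parts) > 1 else ""
        # sshd uses the first value it obtains for each keyword.
        settings.setdefault(key, value)
    return settings

def password_login_disabled(config_text):
    """True only if PasswordAuthentication is explicitly set to 'no'."""
    value = sshd_settings(config_text).get("passwordauthentication", "yes")
    return value.lower() == "no"
```

Because the first occurrence of a keyword wins, a later `PasswordAuthentication yes` line does not override an earlier `no`. An absent keyword is treated as `yes` here, which matches the OpenSSH default.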

Q 11. How do you secure a Linux server against common attacks?

Securing a Linux server involves a multi-layered approach. Here’s a breakdown of key strategies:

- Regular Updates: Keep the operating system and all installed software updated with the latest security patches. This addresses known vulnerabilities before attackers can exploit them. Think of this like regularly servicing your car to prevent breakdowns.

- Strong Passwords and Access Control: Use strong, unique passwords for all user accounts, and implement the principle of least privilege (only grant users the permissions they absolutely need). Use tools like `sudo` to manage elevated privileges.

- Firewall Configuration: Configure a firewall (like iptables or firewalld) to only allow necessary network traffic. This prevents unauthorized access from outside the network.

- Intrusion Detection/Prevention System (IDS/IPS): Implement an IDS/IPS to monitor network traffic for malicious activity. These systems can alert you to potential attacks and even block them automatically.

- Regular Security Audits: Conduct regular security audits to identify vulnerabilities and ensure security measures are effective. This is a proactive way to find and fix problems before attackers do.

- Vulnerability Scanning: Use vulnerability scanners (such as OpenVAS) to automatically identify potential security weaknesses in your server’s configuration and software.

- Security Hardening: Disable unnecessary services, remove unnecessary accounts, and regularly check for potentially compromised accounts or files. Removing unnecessary components is like cleaning your home regularly.

- Log Monitoring: Regularly monitor server logs for suspicious activity. This can help you detect and respond to attacks early.

- SSH Hardening (as discussed in previous question): Secure SSH access to prevent unauthorized login attempts.

- Regular Backups: Regularly back up important data to protect against data loss due to attacks or other incidents. This is like having insurance for your server.

A robust security posture requires a combination of these measures and ongoing vigilance. No single solution is foolproof, but a layered approach dramatically improves security.

Q 12. What are different types of Linux log files and how are they used for troubleshooting?

Linux systems generate various log files to record system events, application activity, and security information. These logs are vital for troubleshooting and security analysis. Here are a few key examples:

- `/var/log/syslog` or `/var/log/messages` (system log): Contains a wide range of system messages, including kernel messages, boot-up information, and messages from various system daemons. This log provides a comprehensive overview of system events. Similar to a diary for your server.

- `/var/log/auth.log`: Records authentication attempts (successful and failed logins) and other security-related events. Essential for security analysis. It’s like a security camera recording every login attempt.

- `/var/log/secure`: (Similar to auth.log, depending on the distribution) contains additional security-related logs, particularly relevant for SSH and other security-sensitive actions.

- `/var/log/kern.log`: Focuses on kernel messages, often related to hardware and driver issues. This is where you would find errors from kernel modules or drivers.

- Application-specific logs: Many applications create their own log files. These logs provide details about application activity, errors, and performance issues. The locations vary by application but typically reside under `/var/log`.

- `/var/log/dmesg` (kernel ring buffer): A snapshot of recent kernel messages, extremely helpful during boot issues or hardware problems.

Troubleshooting with Logs: When troubleshooting, examine relevant log files to identify error messages, warnings, and unusual activity. The location of errors within a log file can aid in determining the cause of a problem. For example, repeated failed SSH login attempts in `/var/log/auth.log` suggest a potential brute-force attack.

Tools like grep, awk, and logrotate (for managing log file sizes) are invaluable for managing and analyzing log files.
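The brute-force example above can be turned into a small log summary. This Python sketch counts failed SSH password attempts per source address. It assumes the message format that OpenSSH commonly writes ("Failed password for ... from ADDRESS port N"), and the function name is hypothetical.

```python
import re
from collections import Counter

# Matches both "Failed password for root from ..." and
# "Failed password for invalid user admin from ...".
FAILED_LOGIN = re.compile(
    r"Failed password for (?:invalid user )?\S+ from (\S+) port \d+"
)

def failed_logins_by_ip(lines):
    """Count failed SSH password attempts per source address."""
    counts = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts
```

Feeding it the lines of `/var/log/auth.log` (or `/var/log/secure`) and calling `.most_common(10)` on the result gives a ranked list of offending addresses, similar to what a `grep | awk | sort | uniq -c` pipeline would produce.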

Q 13. Explain the differences between cron and anacron jobs.

Both cron and anacron are used to schedule tasks in Linux, but they differ in their approach:

- Cron: Cron is designed for systems that run continuously. The daemon wakes every minute and checks its configuration (`/etc/crontab`, files in `/etc/cron.d/`, and per-user crontabs) to determine which tasks are due. If the system is off at the scheduled time, the job is simply skipped. Think of it as a punctual, time-based scheduler.

- Anacron: Anacron is designed for systems that aren’t always on, like laptops, desktops, or servers that are sometimes shut down. It schedules jobs with a granularity of days instead of minutes and records when each job last ran; if a job’s period has elapsed because the system was off, anacron runs it shortly after the system comes back up. It’s the backup scheduler for situations when cron might miss scheduled jobs.

Key Differences Summarized:

- Online Requirement: Cron requires a continuously running system; Anacron is for systems with periods of downtime.

- Execution Frequency: Cron checks its schedule every minute; Anacron runs periodically, often daily, to check for missed jobs.

- Job Handling: Cron executes tasks based on a strict schedule; Anacron executes tasks that cron might have missed.

In essence, anacron is a supplementary tool to cron, ensuring tasks are executed even when the system has experienced periods of inactivity. They often work together to guarantee scheduled tasks are run.
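The core decision anacron makes can be stated in a few lines. The Python sketch below is a simplified model of that logic, not anacron's actual implementation: a job is due when it has never run or when at least its period, measured in days, has passed since the last recorded run. The function name and parameters are assumptions.

```python
from datetime import date

def job_is_due(last_run, period_days, today):
    """Simplified anacron-style check with day granularity."""
    if last_run is None:
        return True  # no timestamp recorded yet, so run the job
    return (today - last_run).days >= period_days
```

Cron, by contrast, only asks "does the current minute match the schedule?", which is why a job scheduled for a time when the machine was off never runs under cron alone.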

Q 14. How do you configure and manage network interfaces in Linux?

Network interface configuration in Linux is typically managed through configuration files (often located in `/etc/sysconfig/network-scripts/` or similar directories, depending on the distribution) and command-line tools.

Methods of Configuration:

- Configuration Files: Most distributions use text-based configuration files (e.g., `ifcfg-eth0`, `ifcfg-ens33` for Ethernet interfaces) to specify settings like IP address, netmask, gateway, and DNS servers. These files are then used by the networking services to bring up the network interfaces.

- Command-Line Tools: Tools like `ip` and `ifconfig` (though `ip` is now preferred) are used to manage network interfaces directly from the command line. They allow dynamic changes, useful for troubleshooting or temporary configuration adjustments.

Example using ip (requires root privileges):

To configure an Ethernet interface named `eth0`:

sudo ip addr add 192.168.1.100/24 dev eth0

sudo ip link set eth0 up

sudo ip route add default via 192.168.1.1

This sets the IP address, brings the interface up, and adds a default route. Replace values with your actual network configuration details.

Example using a configuration file (specific structure varies by distribution):

A sample configuration file might look like:

DEVICE=eth0

BOOTPROTO=static

IPADDR=192.168.1.100

NETMASK=255.255.255.0

GATEWAY=192.168.1.1

DNS1=8.8.8.8

DNS2=8.8.4.4

After modifying the file, you typically need to restart the networking service (sudo systemctl restart networking or a similar command depending on your distribution) for changes to take effect.

Modern systemd-based systems often use higher-level tools instead, such as NetworkManager (`nmcli`), systemd-networkd (`.network` files under `/etc/systemd/network/`), or Netplan on Ubuntu, which allow for more declarative and manageable configuration.
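The two examples above express the same subnet in different notations (`/24` versus `255.255.255.0`), and a common mistake is a gateway that lies outside the configured network. The Python sketch below uses the standard `ipaddress` module to check both points; `check_static_config` is a hypothetical helper.

```python
import ipaddress

def check_static_config(ip, netmask, gateway):
    """Summarize a static IPv4 configuration and sanity-check the gateway."""
    # ip_interface accepts both prefix length and dotted netmask notation.
    iface = ipaddress.ip_interface(f"{ip}/{netmask}")
    gateway_addr = ipaddress.ip_address(gateway)
    return {
        "cidr": iface.with_prefixlen,
        "network": str(iface.network),
        "gateway_on_link": gateway_addr in iface.network,
    }
```

For the sample values, the result confirms that `192.168.1.100` with netmask `255.255.255.0` is the same as `192.168.1.100/24` and that `192.168.1.1` is reachable on that network.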

Q 15. What is the difference between iptables and firewalld?

Both iptables and firewalld are Linux firewall tools, but they differ significantly in their approach and complexity. iptables is a low-level command-line utility that directly manipulates the Linux kernel’s netfilter framework. It’s powerful and flexible, allowing for intricate firewall rules, but requires a deeper understanding of networking concepts and can be quite complex to manage. Think of it like working directly with the engine of a car – you have ultimate control but it’s more difficult to learn.

firewalld, on the other hand, is a more user-friendly daemon (a background process) that provides a higher-level interface to manage firewalls. It simplifies the process of creating and managing firewall rules, using zones (like ‘public’, ‘internal’, ‘dmz’) to group rules logically. It’s easier to learn and use, making it ideal for administrators who need a simpler, more intuitive experience. Think of it as using the car’s dashboard – easier to control, but you have less direct control over the engine’s inner workings.

In short: iptables offers fine-grained control but is complex; firewalld provides a user-friendly interface with less direct control.

Q 16. Describe your experience with scripting (Bash, Python, etc.) for automation tasks.

I have extensive experience with both Bash and Python scripting for server automation. Bash is my go-to for quick tasks and system administration due to its ubiquity and integration with the Linux shell. For example, I frequently use Bash to automate log analysis, user account management, and cron job scheduling. A typical example might be a script to check disk space and send an email alert if it falls below a certain threshold.

#!/bin/bash

# Alert when usage of the root filesystem exceeds this percentage
THRESHOLD=90

# Placeholder address (the original was lost in extraction); replace with your own
ALERT_EMAIL="admin@example.com"

# Used percentage of / with the trailing % stripped (e.g. 85)
used=$(df -P / | awk 'NR==2 {gsub("%", "", $5); print $5}')

if (( used > THRESHOLD )); then
  # Send email alert (requires mailutils)
  echo "Disk space low: ${used}% used on /" | mail -s "Low Disk Space Alert" "$ALERT_EMAIL"
fi

Python, however, offers more power and structure for larger, more complex automation projects. I've used it extensively for tasks involving interacting with APIs, data processing, and building robust applications for server monitoring and management. For instance, I developed a Python script that monitors multiple servers, collects performance metrics, and generates insightful reports.

My scripting skills contribute significantly to my efficiency and help me ensure consistent, repeatable tasks across multiple servers. I prioritize writing clean, well-documented code that's easily maintainable and adaptable to future needs.

Q 17. How do you manage system updates and patches on a Linux server?

Managing system updates and patches is crucial for server security and stability. My approach is multi-faceted and emphasizes a secure and controlled rollout. I primarily use the built-in package managers like apt (Debian/Ubuntu) or yum/dnf (Red Hat/CentOS/Fedora). Before applying updates, I always back up the system, just in case something goes wrong. I then perform a thorough review of the available updates, prioritizing security patches first.

For crucial servers, I often update them in a staged approach: first, testing the updates in a non-production environment (e.g., a staging server or a virtual machine) to ensure compatibility and stability. Only after successful testing do I roll them out to production servers. This ensures minimal disruption and reduces the risk of service interruptions. After updates are applied, I reboot the server when the update requires it (for example, a new kernel or core library) and restart the affected services otherwise, then verify that everything is running as expected.

Regularly reviewing security advisories from vendors and utilizing a vulnerability scanner adds another layer of security to the update process. This allows for proactive patching and minimizes the window of vulnerability. Automated update mechanisms can also be employed, but must be carefully monitored and configured to avoid unintended consequences.

Q 18. Explain your experience with different backup and recovery strategies.

Backup and recovery strategies are fundamental to system resilience. My experience encompasses a variety of techniques tailored to the specific needs of the system being protected. I avoid relying on a single backup solution, and instead implement a multi-layered approach which combines local and remote backups. This includes utilizing tools like rsync for incremental backups, ensuring only changed files are copied, thus saving space and time.

Local backups might involve using tar to create compressed archives stored on a separate disk or partition. Remote backups utilize services such as cloud storage (AWS S3, Azure Blob Storage, Google Cloud Storage) or a dedicated backup server. I also regularly test restorations to ensure data recoverability. The frequency of backups varies depending on the criticality of the data and rate of change, with some servers backed up daily, others weekly or monthly.

I tailor the backup strategy based on factors like Recovery Time Objective (RTO) and Recovery Point Objective (RPO). For mission-critical systems, a very short RTO and RPO are essential and necessitate more frequent backups and faster restoration methods. Different backup software or scripts provide different functionality, enabling me to carefully choose tools to meet the needs of specific applications and data.
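Testing a restoration is easier to trust when it is checked mechanically. One possible approach, sketched below in Python, compares SHA-256 checksums of every file in the source tree against the restored copy. The function names are hypothetical, and the sketch ignores permissions, ownership, and files that exist only in the restored tree.

```python
import hashlib
import os

def sha256_of(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def restore_mismatches(source_dir, restored_dir):
    """Return relative paths that are missing or differ in restored_dir."""
    problems = []
    for root, _dirs, files in os.walk(source_dir):
        for name in files:
            src = os.path.join(root, name)
            rel = os.path.relpath(src, source_dir)
            dst = os.path.join(restored_dir, rel)
            if not os.path.isfile(dst) or sha256_of(src) != sha256_of(dst):
                problems.append(rel)
    return sorted(problems)
```

An empty list means every source file was found in the restored tree with identical content; anything else is a concrete list of files to investigate.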

Q 19. How do you troubleshoot a Linux server experiencing high CPU utilization?

High CPU utilization can indicate various issues, so systematic troubleshooting is crucial. My approach involves a series of steps to identify the root cause. I start by using top or htop to identify the processes consuming the most CPU resources. This gives a quick overview of what’s using the most CPU.

Next, I examine the processes' characteristics: Is it a known process (like a database or web server)? Is it a system process or a user-level process? If it's a known process, checking its logs might reveal bottlenecks or errors. If it's an unknown process, I’d investigate further using commands like ps aux | grep followed by the process name for more detailed information, or looking it up online to determine its legitimacy.

Tools like iostat, vmstat, and iotop can reveal if disk I/O or memory limitations are contributing to the CPU problem. Memory leaks, inefficient code, runaway processes, or malware are also potential culprits. Profiling tools can help pinpoint the specific lines of code that are creating bottlenecks in an application. Once the problem is identified, solutions range from resource allocation adjustments, code optimization, killing runaway processes, or addressing software bugs.
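Tools such as top derive CPU utilization by sampling the counters in `/proc/stat` twice and comparing them. The Python sketch below shows that calculation on two text samples. The function names are hypothetical, and time spent in idle and iowait is treated as "not busy", which is one common convention.

```python
def cpu_times(stat_text):
    """Return (busy, total) jiffies from the aggregate 'cpu' line of /proc/stat."""
    for line in stat_text.splitlines():
        fields = line.split()
        if fields and fields[0] == "cpu":
            # Field order: user nice system idle iowait irq softirq steal
            values = [int(v) for v in fields[1:9]]
            total = sum(values)
            idle = values[3] + (values[4] if len(values) > 4 else 0)
            return total - idle, total
    raise ValueError("no aggregate cpu line found")

def cpu_busy_percent(first_sample, second_sample):
    """CPU utilization between two /proc/stat samples, as a percentage."""
    busy1, total1 = cpu_times(first_sample)
    busy2, total2 = cpu_times(second_sample)
    elapsed = total2 - total1
    if elapsed <= 0:
        return 0.0
    return round(100.0 * (busy2 - busy1) / elapsed, 1)
```

In practice the two samples would be read from `/proc/stat` a second or so apart. A high iowait share alongside a low busy percentage suggests the bottleneck is disk I/O and not the CPU itself.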

Q 20. How do you handle a system crash or reboot?

Handling system crashes or reboots requires a systematic approach, focusing on data integrity and service restoration. Immediately after a crash or reboot, my first step is to check the system logs (/var/log/syslog, /var/log/messages, etc.) for clues about the cause of the failure. This log analysis often identifies errors or warnings that preceded the crash.

Next, I verify the integrity of the file system with fsck. Because fsck should only be run on a file system that is unmounted or mounted read-only, this may mean booting into single-user mode or a rescue environment. I then examine the system’s overall health by checking CPU, memory, and disk usage. I might also run a memory test (memtest86+) if I suspect RAM issues. Once I've stabilized the system, I investigate any applications or services that failed to start and take appropriate steps to restart or recover them. Depending on the severity, this may involve restoring from a backup.

Finally, I investigate the root cause of the crash to prevent recurrence. This could involve software updates, hardware repairs, or configuration changes. Regular backups and a well-defined recovery plan are crucial in minimizing downtime during such events. Documenting the incident and the resolution steps ensures future incidents can be addressed more efficiently.
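The log review in the first step can be partly automated. The sketch below filters log text for a handful of crash-related keywords; the keyword list and the sample log lines are assumptions chosen for illustration and would need tuning for a real system.

```python
import re

# Assumed keyword list; tune it for the workload and log format in question
PATTERN = re.compile(
    r"kernel panic|out of memory|oom-killer|segfault|i/o error",
    re.IGNORECASE,
)


def suspicious_lines(log_text: str) -> list[str]:
    """Return log lines that match any of the crash-related keywords."""
    return [line for line in log_text.splitlines() if PATTERN.search(line)]


# Invented log excerpt for illustration
SAMPLE_LOG = """\
Mar  3 02:14:01 web01 CRON[2211]: (root) CMD (run-parts /etc/cron.hourly)
Mar  3 02:14:37 web01 kernel: Out of memory: Killed process 812 (java)
Mar  3 02:14:38 web01 kernel: oom-killer invoked
Mar  3 02:15:02 web01 systemd[1]: Started Session 42 of user deploy.
"""

for line in suspicious_lines(SAMPLE_LOG):
    print(line)
```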

Q 21. What are different ways to monitor and manage system resources (memory, CPU, disk I/O)?

Monitoring and managing system resources (memory, CPU, disk I/O) is essential for maintaining server performance and stability. I employ a combination of tools and techniques. For real-time monitoring, top, htop, iostat, vmstat, and iotop are invaluable. These command-line utilities provide detailed, up-to-the-minute information on CPU usage, memory consumption, and disk activity. htop adds an interactive, color-coded text interface that is easier to navigate than top.

For long-term monitoring and historical data analysis, I utilize monitoring tools such as Nagios, Zabbix, or Prometheus. These tools collect data from the server over time, providing detailed graphs and alerts for unusual behavior. They can be set to trigger alerts via email, SMS, or other methods when resource thresholds are exceeded. This allows for proactive problem resolution.
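The threshold-based alerting described above can be illustrated with a small sketch. It parses text in the /proc/meminfo format and flags usage above a limit; the 90% threshold, the function names, and the sample values are assumptions. Tools like Nagios, Zabbix, or Prometheus apply the same idea with far more machinery around it.

```python
def parse_meminfo(text: str) -> dict[str, int]:
    """Map each /proc/meminfo field name to its numeric value (kB for most fields)."""
    values = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        if sep and rest.split():
            values[key.strip()] = int(rest.split()[0])
    return values


def memory_used_percent(meminfo: dict[str, int]) -> float:
    """Share of RAM not available for new workloads, based on MemAvailable."""
    return 100.0 * (1 - meminfo["MemAvailable"] / meminfo["MemTotal"])


def check_threshold(used_percent: float, warn_at: float = 90.0) -> str:
    """Return a simple status string; warn_at is an assumed threshold."""
    return "ALERT" if used_percent >= warn_at else "OK"


# Invented values in the /proc/meminfo layout
SAMPLE = """\
MemTotal:       16000000 kB
MemFree:          500000 kB
MemAvailable:    1200000 kB
"""

used = memory_used_percent(parse_meminfo(SAMPLE))
print(f"{used:.1f}% used -> {check_threshold(used)}")
```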

Beyond these, dedicated resource management mechanisms (for example, Linux control groups, or cgroups, which underpin containers and systemd resource controls) can be used to set limits on individual processes or groups of processes to prevent resource exhaustion by any single application. Regular log analysis also plays a part in resource management, allowing identification of persistent problems or resource inefficiencies that aren't readily apparent from direct system monitoring.

Q 22. Explain your experience with different Linux distributions (Red Hat, CentOS, Ubuntu, etc.).

My experience spans several prominent Linux distributions, each with its strengths and weaknesses. Red Hat Enterprise Linux (RHEL) and its CentOS counterpart are known for their stability and robust security features, making them well suited to mission-critical applications and enterprise environments. I've used RHEL extensively to set up and manage highly available clusters with Pacemaker and to build advanced networking configurations. CentOS, as a community-supported rebuild of RHEL, offered a cost-effective alternative for similar deployments. Ubuntu, with its large community and extensive apt package repositories, is a favorite for its ease of use and frequent releases; I've used it to build and deploy web applications. My experience also includes Debian, the foundation for many distributions (Ubuntu among them), which provides a stable and predictable environment. Selecting a distribution usually comes down to balancing stability, cost, and ease of package management.

For example, in a recent project involving a highly sensitive database server, RHEL's stringent security model and robust support infrastructure were crucial. Conversely, for a rapid prototyping project involving a web application, the ease of use and extensive package selection of Ubuntu proved much more beneficial. My choice of distribution always depends on the specific requirements of the project and the long-term maintenance considerations.

Q 23. How do you manage user authentication and authorization?

User authentication and authorization are cornerstones of secure server administration. I typically manage these using a combination of tools and techniques depending on the specific needs of the system. The foundational approach involves leveraging the Linux system's built-in user management tools. This usually involves creating user accounts using the useradd command, setting passwords with passwd, and assigning users to groups using gpasswd or usermod to define access privileges. These groups are then used to control file access through ownership (chown, chgrp) and permission bits (chmod).

For more sophisticated scenarios, I leverage centralized directory services like LDAP (Lightweight Directory Access Protocol) or Active Directory. LDAP offers a centralized repository for user accounts and group memberships, so users keep one set of credentials across multiple systems. This significantly simplifies user management and ensures consistency in authentication policies. In larger environments, integration with Kerberos adds ticket-based single sign-on and mutual authentication, which protects against replay attacks.

Authorization is managed through access control lists (ACLs) and file permissions. By carefully controlling who can read, write, or execute specific files and directories, system integrity and data security are maintained. Regular auditing and logging of user activity are essential to identify and prevent security breaches. For example, a web server might use Apache's configuration to map users to specific directories, leveraging Apache's authentication modules for secure access control.
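A permission audit of the kind mentioned above can be sketched with Python's standard os and stat modules. The helper names are illustrative, and the check covers only the traditional mode bits, not POSIX ACLs, which would need a tool such as getfacl.

```python
import os
import stat
import tempfile


def describe_mode(path: str) -> str:
    """Return the ls-style permission string for path, e.g. '-rw-r-----'."""
    return stat.filemode(os.stat(path).st_mode)


def is_world_writable(path: str) -> bool:
    """True if the 'other' write bit is set, which is often worth auditing."""
    return bool(os.stat(path).st_mode & stat.S_IWOTH)


with tempfile.NamedTemporaryFile() as tmp:
    os.chmod(tmp.name, 0o640)  # owner read/write, group read, others nothing
    print(describe_mode(tmp.name), is_world_writable(tmp.name))
    os.chmod(tmp.name, 0o666)  # deliberately too permissive
    print(describe_mode(tmp.name), is_world_writable(tmp.name))
```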

Q 24. What is your experience with configuring and managing databases (MySQL, PostgreSQL, etc.)?

My experience with database administration encompasses both MySQL and PostgreSQL. I've worked extensively with MySQL in numerous web application deployments, managing everything from database design and schema creation to performance tuning and replication. For example, I've optimized query performance using indexing strategies, query analysis tools, and database caching mechanisms. I'm also proficient in MySQL replication, setting up master-slave configurations for high availability and disaster recovery.

PostgreSQL, with its advanced features and robust security, is my preferred choice for enterprise-level applications demanding data integrity and complex data modeling. I've used it for projects requiring robust transaction management, spatial data handling, and advanced data types. My experience includes managing PostgreSQL clusters, configuring replication, and ensuring data backups using tools like pg_dump and pg_basebackup. Understanding database optimization strategies, including query optimization, indexing, and efficient data modeling, is key to managing databases effectively. I regularly monitor database performance metrics, using tools like mysqladmin (MySQL) and statistics views such as pg_stat_activity queried through psql (PostgreSQL), to proactively identify and resolve performance bottlenecks.
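To illustrate how an index changes a query plan, the sketch below uses SQLite as a stand-in, only because it ships with Python. MySQL and PostgreSQL expose the same idea through their own EXPLAIN statements, with different output. The table, index name, and data are invented for the example.

```python
import sqlite3


def query_plan(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> str:
    """Return the planner's description of how it would run the query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return "; ".join(row[-1] for row in rows)  # last column holds the detail text


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1000)],
)

QUERY = "SELECT * FROM orders WHERE customer_id = ?"
plan_before = query_plan(conn, QUERY, (42,))  # no index yet: full table scan

conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(conn, QUERY, (42,))  # planner can now use the index

print("before:", plan_before)
print("after: ", plan_after)
```

Comparing the plan before and after adding an index is a quick way to confirm that the index is actually used, before measuring real query latency.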

Q 25. Explain your experience with containerization technologies (Docker, Kubernetes).

Containerization technologies like Docker and Kubernetes are integral parts of my modern DevOps workflow. Docker allows me to create isolated, portable environments for applications, which simplifies deployment and ensures consistency across different environments. I frequently use Dockerfiles to automate the creation of container images and Docker Compose to manage multi-container applications. This ensures that applications run reliably, regardless of the underlying infrastructure.

Kubernetes takes container orchestration to the next level. I leverage Kubernetes to manage and scale containerized applications across clusters of machines. I've worked extensively with Kubernetes concepts like deployments, services, and namespaces to automate application deployment, scaling, and management. I also have experience with Kubernetes' self-healing capabilities and its built-in mechanisms for monitoring and logging. For example, managing a microservice architecture with Kubernetes allows for independent scaling of individual components, which leads to efficient resource usage and improved resilience. In a recent project, I used Kubernetes to deploy and manage a highly available web application comprising multiple microservices, each running in its own Docker container.

Q 26. Describe your experience with cloud platforms (AWS, Azure, GCP).

I have significant experience with AWS, Azure, and GCP, each offering a distinct set of services and strengths. On AWS, I've deployed and managed virtual machines (EC2), databases (RDS), load balancers (ELB), and other services to build scalable and highly available applications. I'm familiar with AWS's various security features, including IAM (Identity and Access Management) for controlling access to resources.

Azure offers a similar suite of services, and I've used it for building and deploying applications leveraging Azure Virtual Machines, Azure SQL Database, and Azure App Service. I appreciate Azure's integration capabilities with other Microsoft products. GCP provides a strong alternative, and I have hands-on experience with Compute Engine, Cloud SQL, and Cloud Storage. I find GCP's focus on data analytics and machine learning compelling. My cloud experience includes architecting solutions for high availability, disaster recovery, and cost optimization. Selecting the appropriate cloud provider is often a business decision, considering cost, existing infrastructure, and specific service requirements.

Q 27. How do you approach troubleshooting complex server issues?

Troubleshooting complex server issues requires a systematic and methodical approach. My strategy typically starts with gathering information – checking system logs (/var/log), monitoring system resource utilization (top, htop, iostat), and reviewing network connectivity (ping, traceroute, netstat). I meticulously analyze error messages, looking for clues to pinpoint the root cause.

Once I have a better understanding of the problem, I isolate the affected components to narrow down the search area. If the issue involves a specific service, I'll examine its configuration files and logs. Sometimes, reproducing the issue in a controlled environment (a staging or test server) can aid in diagnosis. The right tool depends on the type of problem: for network connectivity issues, packet capture tools like tcpdump can be invaluable; for a suspected memory leak, Valgrind's Memcheck tool can analyze memory usage patterns; and for slow database queries, database-specific profiling tools are the better fit. A thorough understanding of the system's architecture, dependencies, and the involved software is essential for effective troubleshooting.
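When isolating a service, a quick first check is whether its TCP port accepts connections at all. The following is a minimal sketch using Python's standard socket module; the function name and the default timeout are assumptions, and a successful connection only shows that something is listening, not that the service is healthy.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures
        return False
```

For example, port_is_open("127.0.0.1", 22) would indicate whether an SSH daemon (or anything else) is listening locally on port 22.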

Collaboration and seeking assistance are also key to effective troubleshooting. If I’m stumped, I will consult online resources, communities, or colleagues for advice. Documenting the troubleshooting process is equally important, both for future reference and for sharing solutions with others. The key is to be systematic, patient, and persistent in investigating and resolving complex issues.

Key Topics to Learn for Your Linux/Unix Server Administration Interview

Landing your dream Linux/Unix Server Administration role requires a solid understanding of both theory and practice. Focus your preparation on these key areas:

- Core System Administration Tasks: User and group management, file system management (including LVM), package management (apt, yum, dnf, pacman), and basic shell scripting (Bash, Zsh).

- Networking Fundamentals: IP addressing, DNS configuration, network services (SSH, HTTP, FTP), firewall management (iptables, firewalld), and basic network troubleshooting.

- Security Best Practices: Understanding security vulnerabilities, implementing security hardening techniques, user authentication and authorization, and log management and analysis.

- Server Monitoring and Troubleshooting: Utilizing monitoring tools (e.g., Nagios, Zabbix), analyzing system logs for errors, and employing effective problem-solving methodologies for resolving server issues.

- Virtualization and Containerization: Familiarity with virtualization technologies (e.g., VMware, VirtualBox, KVM) and containerization platforms (e.g., Docker, Kubernetes) is increasingly important.

- Automation and Configuration Management: Understanding and applying tools like Ansible, Puppet, or Chef to automate repetitive tasks and manage server configurations efficiently.

- Cloud Computing Concepts (Optional but beneficial): Basic understanding of cloud platforms (AWS, Azure, GCP) and their services relevant to server administration.

- Practical Application: Think about how you've applied these concepts in past projects. Be ready to discuss your experience in detail, emphasizing problem-solving and results.

Next Steps: Unlock Your Career Potential

Mastering Linux/Unix Server Administration opens doors to exciting and high-demand careers. To make the most of your skills and experience, a well-crafted resume is essential. An ATS-friendly resume, optimized for Applicant Tracking Systems, dramatically increases your chances of landing an interview.

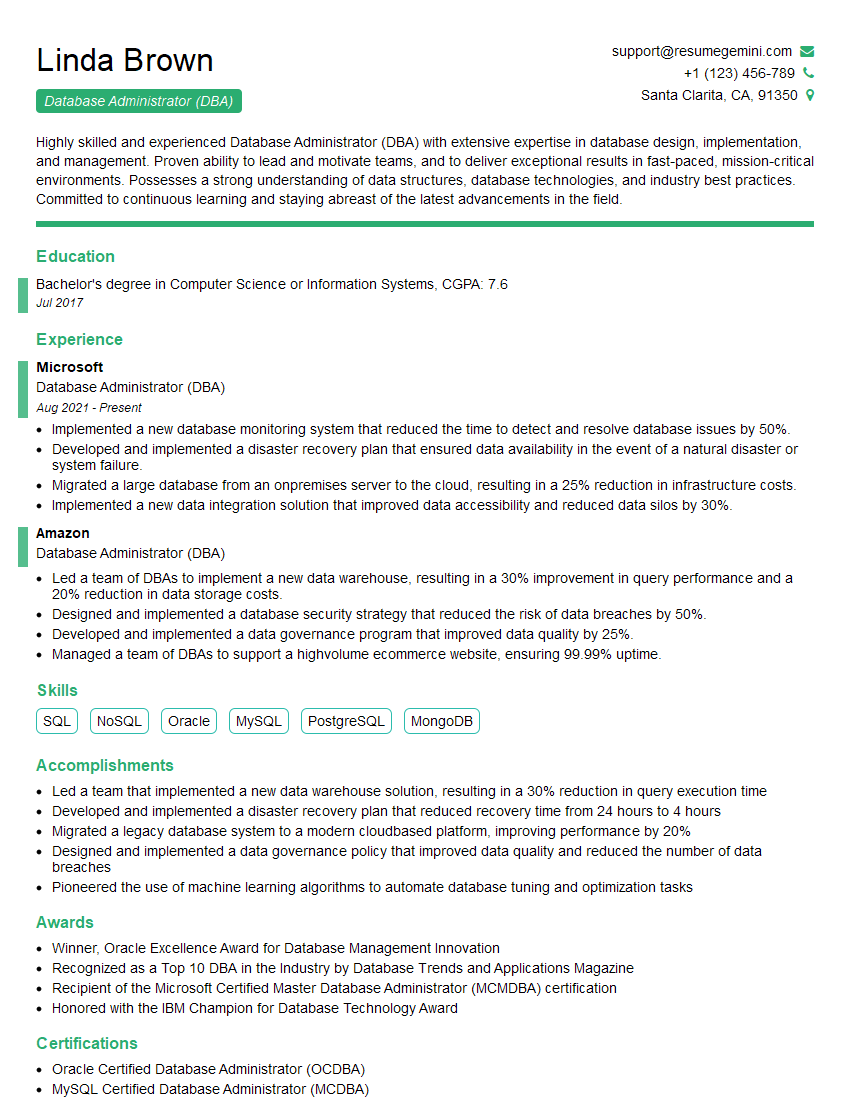

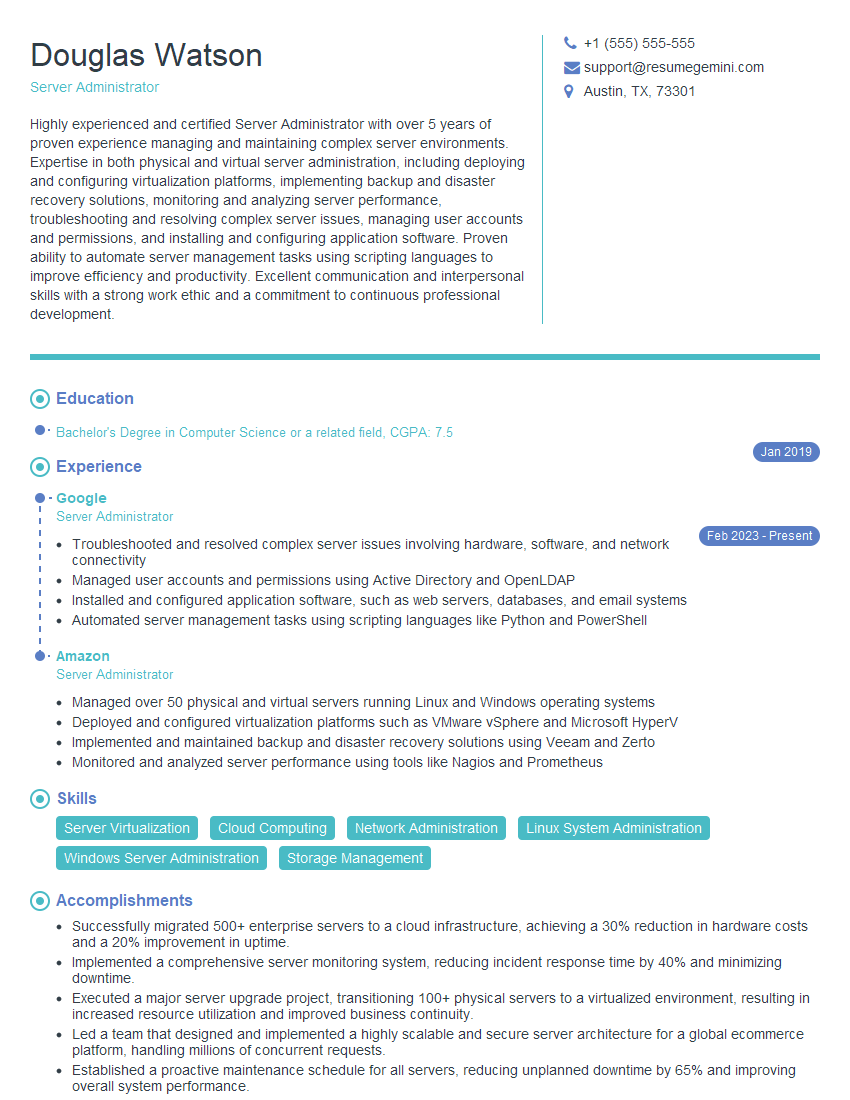

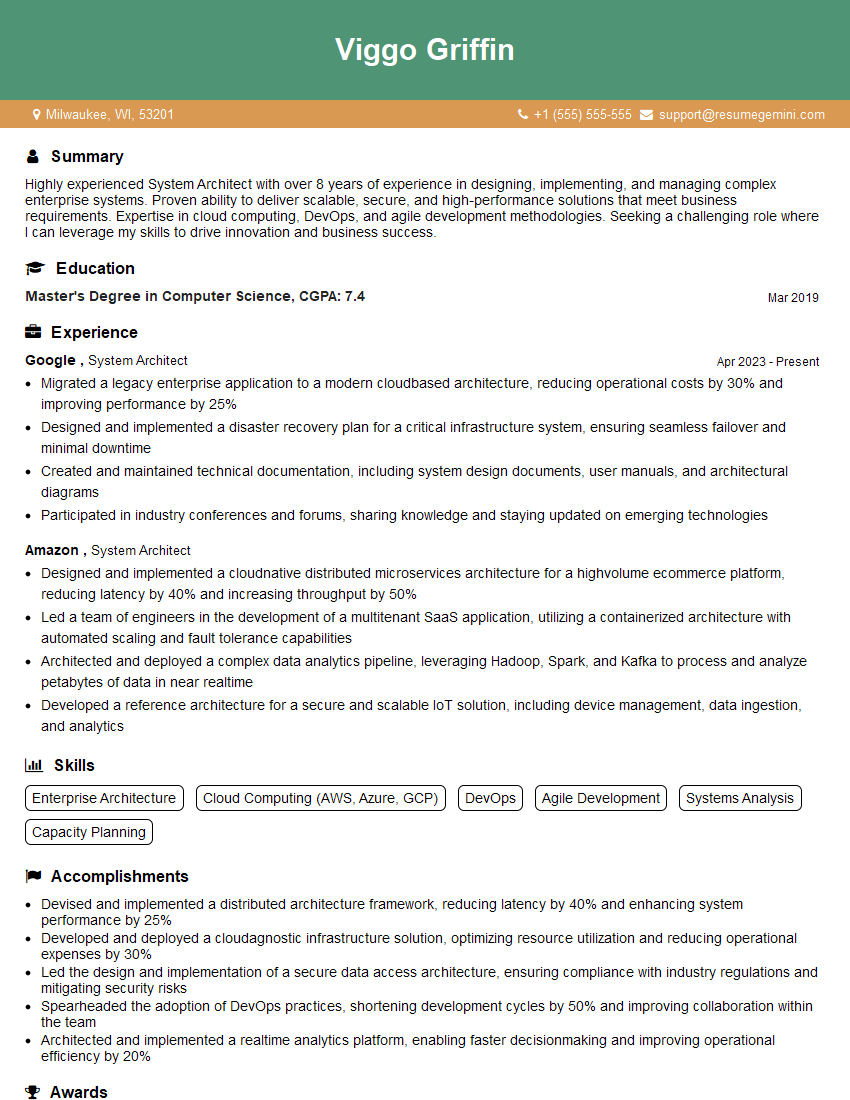

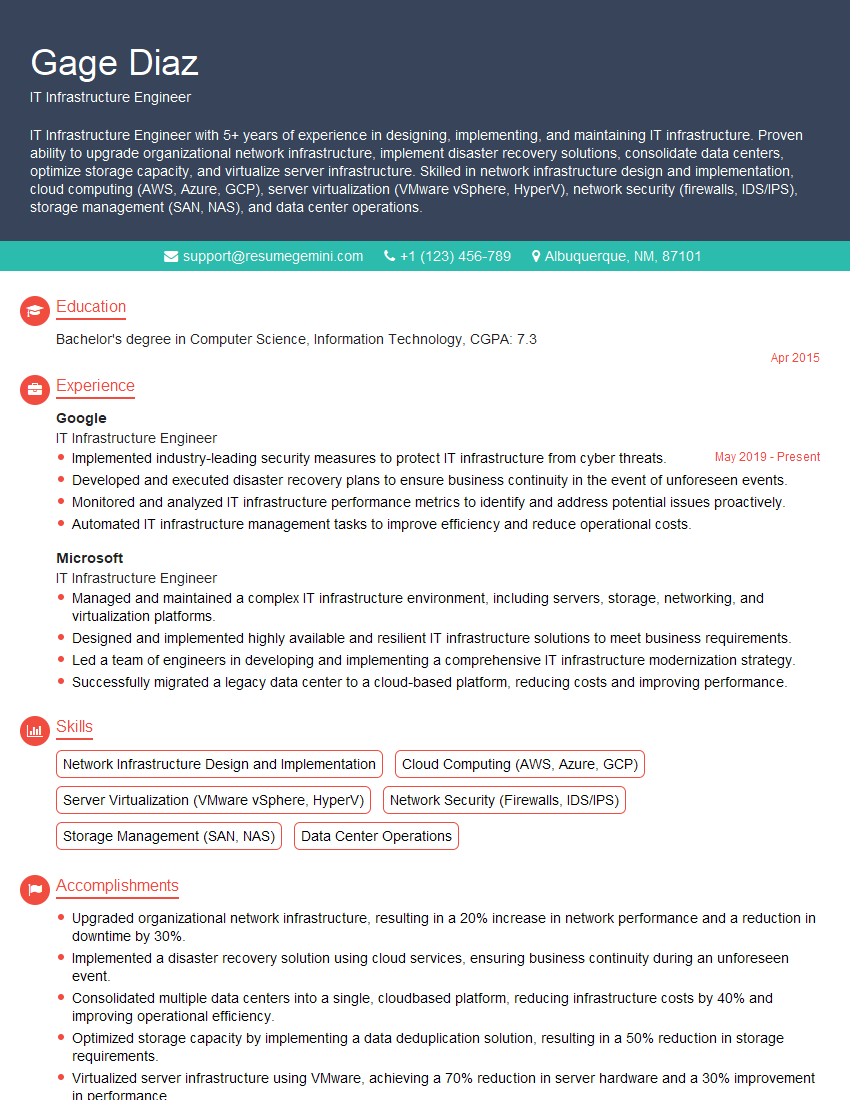

We recommend using ResumeGemini to build a powerful, ATS-optimized resume that showcases your expertise. ResumeGemini provides tools and resources to create a professional document that highlights your accomplishments. Examples of resumes tailored to Linux/Unix Server Administration are available to guide you.
