Preparation is the key to success in any interview. In this post, we’ll explore crucial Metrology and Dimensional Measurement interview questions and equip you with strategies to craft impactful answers. Whether you’re a beginner or a pro, these tips will elevate your preparation.

Questions Asked in Metrology and Dimensional Measurement Interviews

Q 1. Explain the difference between accuracy and precision in measurement.

Accuracy and precision are often confused, but they represent distinct aspects of measurement quality. Think of it like archery. Accuracy refers to how close your measurements are to the true value: an accurate archer’s arrows land on or around the bullseye. Precision refers to how close your measurements are to each other, regardless of whether they’re close to the true value. A precise archer might consistently hit the same spot on the target, but if that spot is far from the bullseye, their accuracy is low.

For example, imagine measuring the length of a 10 cm rod. An accurate measurement might be 10.01 cm, very close to the true value. A precise but inaccurate instrument might consistently yield 10.5 cm, demonstrating good repeatability but a significant deviation (bias) from the true value. In metrology, we strive for both high accuracy and high precision.
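To make the distinction concrete, here is a minimal sketch that separates the two ideas numerically: bias (mean offset from the reference) stands in for accuracy, and the sample standard deviation stands in for precision. The readings and the function name `bias_and_spread` are hypothetical, chosen only to mirror the rod example.

```python
from statistics import mean, stdev

def bias_and_spread(readings, reference):
    """Return (bias, spread): mean offset from the reference and sample standard deviation."""
    return mean(readings) - reference, stdev(readings)

# Hypothetical repeated readings (cm) of a rod whose reference length is 10 cm.
accurate_and_precise = [10.01, 9.99, 10.00, 10.01, 9.99]
precise_but_biased = [10.50, 10.51, 10.49, 10.50, 10.50]

for name, data in [("accurate and precise", accurate_and_precise),
                   ("precise but biased", precise_but_biased)]:
    bias, spread = bias_and_spread(data, reference=10.0)
    print(f"{name}: bias = {bias:+.3f} cm, spread = {spread:.3f} cm")
```

Both data sets have a small spread, but only the second shows a large bias, which is exactly the "precise but inaccurate" case.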

Q 2. Describe various types of measurement uncertainties and their sources.

Every measurement carries some uncertainty. By behavior, contributions are either random or systematic; by origin, they typically come from the environment, the observer, or the instrument. The main categories are:

- Random uncertainties: These are unpredictable variations caused by factors like environmental fluctuations (temperature, humidity), instrument noise, or human error in reading the instrument. They follow a statistical distribution, often normal.

- Systematic uncertainties: These are consistent deviations from the true value, stemming from a bias in the measurement process. For instance, an improperly calibrated instrument will introduce a systematic error. Unlike random effects, they do not average out over repeated readings, so they must be identified (for example, by calibration against a reference) and corrected.

- Environmental uncertainties: Variations in temperature, pressure, humidity, and vibration can significantly influence measurements, particularly for precision instruments. Proper environmental control is essential.

- Observer uncertainties: Human error, such as parallax error (incorrectly reading a scale due to viewing angle), can introduce significant uncertainties. Using appropriate measurement techniques and multiple observers can mitigate this.

- Instrument uncertainties: The instrument itself introduces uncertainty due to its inherent limitations, such as resolution, linearity, and drift. Manufacturer specifications provide information on these uncertainties.

Understanding and quantifying these sources of uncertainty is crucial for reporting measurement results with confidence. We often use statistical methods to estimate and propagate these uncertainties to obtain an overall uncertainty value.
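As an illustration of how individual contributions are combined, the sketch below builds a small uncertainty budget and combines it by root-sum-of-squares, then applies a coverage factor of k = 2. It assumes the contributions are uncorrelated and have sensitivity coefficients of 1; every number in the budget is hypothetical.

```python
import math

def rectangular(half_width):
    """Standard uncertainty of a rectangular distribution with the given half-width."""
    return half_width / math.sqrt(3)

def combined_uncertainty(components):
    """Root-sum-of-squares of uncorrelated standard uncertainties (sensitivity of 1 assumed)."""
    return math.sqrt(sum(u ** 2 for u in components.values()))

# Hypothetical budget for a micrometer reading, all values in micrometres.
budget = {
    "repeatability": 0.8,                # standard deviation of the mean of repeated readings
    "calibration": 1.0 / 2,              # certificate quotes U = 1.0 at k = 2
    "resolution": rectangular(1.0 / 2),  # 1 um resolution, so +/-0.5 um half-width
    "temperature": rectangular(0.6),     # estimated +/-0.6 um thermal effect
}

u_c = combined_uncertainty(budget)
U = 2 * u_c  # coverage factor k = 2, roughly 95 % for a near-normal distribution
print(f"u_c = {u_c:.2f} um, U (k=2) = {U:.2f} um")
```

In an interview, walking through a budget like this shows that you know where each term comes from, not just the final number.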

Q 3. How do you select appropriate measuring instruments for a specific task?

Selecting the right measuring instrument depends heavily on the specific task requirements. Key factors to consider are:

- Accuracy and precision required: The tolerance limits of the part dictate the needed accuracy and precision of the instrument. A high-precision part necessitates a highly accurate and precise measurement system.

- Range of measurement: The instrument’s range must encompass the expected dimensions of the part.

- Resolution: The smallest increment the instrument can measure should be appropriate for the required precision. A higher resolution provides more detail but may not always be necessary or cost-effective.

- Measurement method: Different methods are suitable for different geometries and materials. For example, optical comparators are useful for 2D measurements, while CMMs are preferred for complex 3D geometries.

- Cost and availability: The budget and availability of instruments are practical considerations.

- Ease of use and operator training: The chosen instrument should be easy for the operator to use correctly.

For instance, measuring the diameter of a wire requires a micrometer for high precision, whereas measuring the dimensions of a large machine component might require a CMM.

Q 4. What are the key principles of dimensional metrology?

Dimensional metrology’s key principles center around obtaining accurate and reliable measurements of physical dimensions. These include:

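- Traceability: All measurements must be traceable to the SI units through national or international measurement standards, such as those maintained by national metrology institutes (e.g., NIST) and coordinated internationally through the BIPM.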

- Uncertainty quantification: Accurately determining and reporting the uncertainty associated with each measurement.

- Calibration and verification: Regularly calibrating measuring instruments against traceable standards to ensure accuracy and reliability.

- Standard operating procedures (SOPs): Establishing clear procedures for measurement to minimize variability and ensure consistency.

- Statistical methods: Applying statistical techniques for data analysis, uncertainty evaluation, and process capability assessment.

- Proper handling and environmental control: Safeguarding instruments from damage and maintaining a stable measurement environment.

Adherence to these principles ensures the quality and reliability of dimensional measurements, which are crucial for manufacturing, quality control, and research.

Q 5. Explain the concept of traceability in measurement.

Traceability in measurement is the ability to demonstrate an unbroken chain of comparisons that link a measurement result to a recognized standard. It ensures the reliability and comparability of measurements across different locations, times, and laboratories. Imagine a hierarchy: your instrument is calibrated against a secondary standard, which is calibrated against a national standard, which is ultimately linked to the definitions of the SI units. Each link in the chain is a documented calibration with a stated uncertainty, and this unbroken chain provides the traceability.

Without traceability, measurement results lack credibility and comparability. For example, if two manufacturers use differently calibrated instruments to measure the same part, their results may not agree, leading to inconsistencies in manufacturing and quality control. Traceability documents provide evidence of the calibration history and ensure that measurements are reliable and consistent.
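One way to picture the chain is as a linked list that must end at an SI realization. The sketch below is purely illustrative: the `Standard` class, its fields, and the example names are hypothetical, and a real system would also store certificate numbers, dates, and uncertainties for each link.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Standard:
    name: str
    calibrated_against: Optional["Standard"] = None
    realizes_si: bool = False  # True only for the top of the chain

def trace(standard):
    """Walk up the calibration chain; return (names, unbroken)."""
    chain, seen, node = [], set(), standard
    while node is not None and id(node) not in seen:
        seen.add(id(node))
        chain.append(node.name)
        if node.realizes_si:
            return chain, True
        node = node.calibrated_against
    return chain, False  # chain ended (or looped) before reaching the SI

si = Standard("SI metre realization", realizes_si=True)
national = Standard("national length standard", calibrated_against=si)
reference = Standard("reference gauge blocks", calibrated_against=national)
micrometer = Standard("shop-floor micrometer", calibrated_against=reference)

print(trace(micrometer))
print(trace(Standard("uncalibrated caliper")))
```

The second call returns `False` for the unbroken flag, which is the situation the paragraph above warns about.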

Q 6. Describe your experience with different types of Coordinate Measuring Machines (CMMs).

My experience encompasses various CMM types, including:

- Bridge-type CMMs: These are the most widely used type, offering good rigidity and accuracy for small to medium-sized parts. I’ve worked extensively with these in automotive component inspections, verifying part dimensions and geometric tolerances.

- Gantry-type CMMs: These are built for very large or heavy parts, with the measuring bridge travelling on raised rails above the workpiece. I’ve used these in aerospace applications to inspect large, intricate parts with high precision requirements.

- Horizontal-arm CMMs: These are particularly useful for measuring large parts or those with complex shapes that are difficult to access with traditional bridge or gantry types. I utilized these in the inspection of large castings and molds, ensuring dimensional conformity.

My experience also includes using various probing systems, such as touch-trigger probes, scanning probes, and optical probes, each suited for different measurement needs. Proficiency in operating and programming these different CMM types is essential for optimal measurement efficiency and accuracy.

Q 7. How do you perform a gauge R&R study?

A Gauge R&R (repeatability and reproducibility) study assesses the variability in measurement due to the gauge (measuring instrument) and the operator. This is crucial to determine if the measurement system is suitable for its intended purpose. It quantifies the proportion of total variation attributed to the gauge and operator versus the actual part-to-part variation.

The study typically involves:

- Selecting parts: Choose a representative sample of parts spanning the expected range of variation.

- Selecting operators: Include all operators who will use the gauge.

- Measurement process: Each operator measures each part multiple times (typically 2-3 trials), ideally in randomized order and without seeing earlier readings, so that results are not influenced by memory.

- Data analysis: Statistical methods (ANOVA is commonly used) analyze the data to estimate the variance components due to repeatability (variation within an operator), reproducibility (variation between operators), and part-to-part variation.

- %Contribution calculation: The percentages of variation are calculated to evaluate the measurement system’s capability.

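- Interpretation: The results are interpreted using guidelines like those from the AIAG (Automotive Industry Action Group) to determine if the measurement system is acceptable. A common rule of thumb from the AIAG guidance is that a gauge R&R below 10% of total variation is acceptable, 10% to 30% may be acceptable depending on the application, and above 30% is unacceptable. An acceptable system shows that most of the variation is due to the part-to-part difference, not the measurement system.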

A well-conducted Gauge R&R study ensures that the measurement system doesn’t introduce unacceptable variability, leading to confident decision making regarding part quality.
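The analysis step can be sketched in a few lines. The example below estimates variance components from a crossed study using the two-way ANOVA (with interaction) method. It is a simplified sketch: the data are synthetic, the function name `gauge_rr` is hypothetical, negative variance estimates are simply clipped to zero, and the interaction term is not pooled into the error even when it is negligible.

```python
import math
from statistics import mean

def gauge_rr(data):
    """Variance components for a crossed study; data[i][j] = readings of part i by operator j."""
    p, o, r = len(data), len(data[0]), len(data[0][0])
    grand = mean(x for part in data for cell in part for x in cell)
    part_means = [mean(x for cell in part for x in cell) for part in data]
    oper_means = [mean(x for part in data for x in part[j]) for j in range(o)]
    cell_means = [[mean(cell) for cell in part] for part in data]

    # Sums of squares for parts, operators, interaction, and repeat error.
    ss_part = o * r * sum((m - grand) ** 2 for m in part_means)
    ss_oper = p * r * sum((m - grand) ** 2 for m in oper_means)
    ss_int = r * sum((cell_means[i][j] - part_means[i] - oper_means[j] + grand) ** 2
                     for i in range(p) for j in range(o))
    ss_err = sum((x - cell_means[i][j]) ** 2
                 for i in range(p) for j in range(o) for x in data[i][j])

    ms_part = ss_part / (p - 1)
    ms_oper = ss_oper / (o - 1)
    ms_int = ss_int / ((p - 1) * (o - 1))
    ms_err = ss_err / (p * o * (r - 1))

    # Variance components from expected mean squares, clipped at zero.
    repeatability = ms_err
    interaction = max(0.0, (ms_int - ms_err) / r)
    operator = max(0.0, (ms_oper - ms_int) / (p * r))
    var_part = max(0.0, (ms_part - ms_int) / (o * r))
    grr = repeatability + operator + interaction
    total = grr + var_part
    return {
        "repeatability": repeatability,
        "reproducibility": operator + interaction,
        "part": var_part,
        "pct_contribution": 100 * grr / total,
        "pct_study_var": 100 * math.sqrt(grr / total),
    }

# Synthetic study: 5 parts, 2 operators, 2 repeats (mm).
part_sizes = [10.0, 10.2, 10.4, 10.6, 10.8]
operator_offsets = [0.0, 0.01]
repeat_noise = [-0.005, 0.005]
data = [[[size + off + n for n in repeat_noise] for off in operator_offsets]
        for size in part_sizes]

result = gauge_rr(data)
print({k: round(v, 6) for k, v in result.items()})
```

In this synthetic case almost all of the variation comes from the parts themselves, which is the pattern an acceptable measurement system should show. In practice a statistics package would normally do this calculation.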

Q 8. Explain the importance of calibration in metrology.

Calibration in metrology is paramount; it’s the process of comparing a measuring instrument’s readings to a known standard of higher accuracy. Think of it like checking your watch against an atomic clock – you’re verifying its accuracy. Without calibration, measurement uncertainties grow, leading to flawed data and potentially costly errors in manufacturing, quality control, or research.

For example, if a micrometer used to measure the diameter of a precision shaft isn’t calibrated regularly, its readings might drift, resulting in shafts being manufactured outside the specified tolerances. This could cause malfunctions, rejects, and wasted materials. Regular calibration ensures that our measurement tools remain accurate and reliable, providing trustworthy data for informed decision-making.

Q 9. What are the different types of calibration standards?

Calibration standards fall into several categories, ranging from international standards to working standards used on the shop floor.

- International and National Standards: These are the most accurate standards, often maintained by national metrology institutes. They’re the top of the traceability chain, ensuring consistency across measurements globally.

- Reference Standards: These are highly accurate standards used to calibrate working standards. They provide a level of accuracy between the international standard and everyday use.

- Working Standards: These are used daily in the lab or on the production floor to calibrate measuring instruments. They are checked periodically against the reference standards.

The choice of standard depends on the required accuracy and the application. For instance, measuring the dimensions of a microchip requires a much higher level of accuracy than measuring the length of a piece of lumber, so different standards would be used.

Q 10. How do you handle measurement discrepancies?

Measurement discrepancies demand a systematic approach. The first step is to identify the source of the discrepancy. This often involves checking the measuring instrument’s calibration status, examining the measurement process for errors (operator error, environmental factors, instrument malfunction), and verifying the sample’s condition.

Let’s say we have a discrepancy in measuring the thickness of a metal sheet. We’d start by verifying the micrometer: checking its zero, confirming its calibration is current, and measuring a gauge block of known size. If the discrepancy persists, we’d investigate the measurement technique – was the sheet properly supported? Was the measurement taken at the same location each time? We’d also check the environment for factors like temperature fluctuations, which might influence the sheet’s dimensions. If the problem persists, the metal sheet itself could be non-uniform. A thorough investigation helps pinpoint the root cause and implement corrective actions.

Q 11. Describe your experience with statistical process control (SPC) in metrology.

Statistical Process Control (SPC) is integral to metrology, allowing us to monitor measurement processes for variability and identify trends indicating potential issues. I’ve extensively used control charts, such as X-bar and R charts, to analyze measurement data from various manufacturing processes. For example, during a project involving the machining of precision parts, we monitored the diameter of the parts using SPC. By tracking the data on control charts, we identified a pattern of increasing variability which pointed towards a problem with the machine tool’s wear.

This allowed for timely preventative maintenance, preventing the production of defective parts. SPC helps minimize waste and maximize efficiency by proactively identifying potential issues before they lead to significant problems.
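For reference, the control limits on X-bar and R charts come from a simple calculation. The sketch below uses the standard Shewhart constants for subgroups of five (A2 = 0.577, D3 = 0, D4 = 2.114); the diameter readings and the function name are hypothetical.

```python
from statistics import mean

# Standard Shewhart chart constants for subgroups of size 5.
A2, D3, D4 = 0.577, 0.0, 2.114

def xbar_r_limits(subgroups):
    """Return (LCL, center line, UCL) for the X-bar chart and the R chart."""
    if any(len(s) != 5 for s in subgroups):
        raise ValueError("constants in this sketch are only valid for subgroups of 5")
    xbars = [mean(s) for s in subgroups]
    ranges = [max(s) - min(s) for s in subgroups]
    xbarbar, rbar = mean(xbars), mean(ranges)
    return {
        "xbar": (xbarbar - A2 * rbar, xbarbar, xbarbar + A2 * rbar),
        "range": (D3 * rbar, rbar, D4 * rbar),
    }

# Hypothetical shaft diameters (mm), five parts sampled per subgroup.
subgroups = [
    [10.01, 9.99, 10.00, 10.02, 9.98],
    [10.00, 10.01, 9.99, 10.00, 10.01],
    [9.99, 10.02, 10.00, 9.98, 10.01],
]
limits = xbar_r_limits(subgroups)
print("X-bar limits:", tuple(round(v, 4) for v in limits["xbar"]))
print("R limits:    ", tuple(round(v, 4) for v in limits["range"]))
```

A growing range (R chart) signals increasing variability, while a drifting subgroup mean (X-bar chart) signals a shift in the process center.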

Q 12. Explain the use of different measurement units (e.g., inches, millimeters, microns).

The choice of measurement units (inches, millimeters, or microns) depends on the scale of the object being measured, the required precision, and the conventions of the industry or region. Inches remain common in some engineering applications, millimeters are more widespread internationally, and microns (micrometers, µm) are essential for measuring very small dimensions.

For example, we use inches when measuring the length of a wooden plank, millimeters for measuring the size of a screw, and microns for inspecting the surface roughness of a microchip. It’s crucial to maintain consistency within a project and to correctly convert units when necessary to avoid errors and misunderstandings. Using the wrong unit of measurement can have significant consequences.
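Unit conversion is a frequent source of avoidable mistakes, so it helps to keep the factors in one place. This minimal sketch relies on the fact that one inch is exactly 25.4 mm by definition; the helper names are hypothetical.

```python
MM_PER_INCH = 25.4   # exact by definition
UM_PER_MM = 1000.0   # 1 mm = 1000 micrometres (microns)

def inch_to_mm(value_in):
    return value_in * MM_PER_INCH

def mm_to_inch(value_mm):
    return value_mm / MM_PER_INCH

def mm_to_um(value_mm):
    return value_mm * UM_PER_MM

def inch_to_um(value_in):
    return mm_to_um(inch_to_mm(value_in))

# One thousandth of an inch ("one thou") expressed in microns.
print(f"0.001 in = {inch_to_um(0.001):.1f} um")
```

Knowing that one thousandth of an inch is about 25 microns is a handy sanity check when drawings and instruments use different units.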

Q 13. How do you interpret measurement data and draw conclusions?

Interpreting measurement data involves more than simply looking at the numbers. It requires understanding the context – the measurement method, the instrument used, the environmental conditions, and the potential sources of error. Statistical analysis is often needed to assess the data’s reliability and identify trends. I typically use descriptive statistics (mean, standard deviation, range) and graphical representations (histograms, scatter plots) to understand data distribution and look for patterns.

For example, if I find a high standard deviation in a set of measurements, it suggests significant variability that needs to be addressed. Conclusions are drawn by comparing the measured values to the specifications or tolerances and considering the uncertainties involved. This process demands careful consideration to ensure accurate and reliable conclusions.
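Comparing a result to its tolerance while accounting for uncertainty can be sketched as a simple guard-banded decision rule, similar in spirit to the rules described in ISO 14253-1. The function name, limits, and readings below are hypothetical, and the expanded uncertainty `U` is assumed to be known already.

```python
from statistics import mean, stdev

def assess(readings, lower, upper, expanded_uncertainty):
    """Summarize readings and classify them against tolerance limits, allowing for uncertainty."""
    m, s, U = mean(readings), stdev(readings), expanded_uncertainty
    if lower + U <= m <= upper - U:
        verdict = "conforming"        # inside the limits even allowing for U
    elif m < lower - U or m > upper + U:
        verdict = "nonconforming"     # outside the limits even allowing for U
    else:
        verdict = "inconclusive"      # too close to a limit to decide either way
    return {"mean": m, "stdev": s, "verdict": verdict}

# Hypothetical shaft diameter: 10.00 mm +/- 0.05 mm, U = 0.01 mm.
print(assess([10.00, 10.01, 9.99], 9.95, 10.05, 0.01))
print(assess([10.045, 10.045], 9.95, 10.05, 0.01))
print(assess([10.08, 10.08], 9.95, 10.05, 0.01))
```

The middle case is the interesting one: the mean is inside the tolerance, but not by enough to claim conformance with confidence.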

Q 14. What software packages are you familiar with for dimensional measurement?

My experience encompasses several software packages used in dimensional measurement. I’m proficient in using CMM (Coordinate Measuring Machine) software such as PC-DMIS and QUINDOS for programming inspection routines and analyzing results. I’m also familiar with statistical software like Minitab for data analysis and SPC charting. Furthermore, I’ve worked with CAD software (e.g., SolidWorks) to create 3D models for comparison with measured data and other specialized software for specific measurement tasks.

Q 15. Describe your experience with geometric dimensioning and tolerancing (GD&T).

Geometric Dimensioning and Tolerancing (GD&T) is a symbolic language used on engineering drawings to define the size, shape, orientation, location, and runout of features. It’s crucial for ensuring parts fit together correctly and function as intended. My experience with GD&T spans over [Number] years, encompassing the design, manufacturing, and inspection phases. I’ve worked extensively with ASME Y14.5 standards, using various GD&T symbols such as position, perpendicularity, flatness, and circularity to specify tolerances. For example, I’ve used position tolerances to ensure that holes on a printed circuit board are located within specific limits, preventing incorrect component placement. I’ve also used form tolerances, like flatness, to ensure the surface of a precision machined part meets stringent requirements for proper sealing. I understand that effective GD&T implementation requires close collaboration between design, manufacturing, and quality control teams, and I have a strong track record of leading such collaborations to successful project outcomes.
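To show how a position tolerance is checked in practice, here is a minimal sketch of the usual calculation for a hole axis in a plane: the position deviation is reported as a diameter, twice the radial distance from the nominal location. It assumes the measured coordinates are already expressed in the datum reference frame and ignores material condition modifiers; the function names and coordinates are hypothetical.

```python
import math

def position_deviation(measured_xy, nominal_xy):
    """Diametral position deviation: twice the radial offset from the nominal location."""
    dx = measured_xy[0] - nominal_xy[0]
    dy = measured_xy[1] - nominal_xy[1]
    return 2 * math.hypot(dx, dy)

def within_position_tolerance(measured_xy, nominal_xy, tolerance_diameter):
    """True if the measured center lies inside the cylindrical tolerance zone."""
    return position_deviation(measured_xy, nominal_xy) <= tolerance_diameter

# Hypothetical hole: nominal (10.00, 20.00) mm, measured (10.03, 20.04) mm.
dev = position_deviation((10.03, 20.04), (10.00, 20.00))
print(f"position deviation = {dev:.3f} mm")
print("within 0.12 mm zone:", within_position_tolerance((10.03, 20.04), (10.00, 20.00), 0.12))
```

Being able to explain why the result is doubled (the tolerance zone is a diameter, not a radius) is a common follow-up question.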

Q 16. Explain the concept of tolerance stacking.

Tolerance stacking, also known as tolerance accumulation, refers to the compounding effect of individual tolerances in a system. Imagine building a tower of LEGO bricks: each brick has a slight tolerance in its size. If you stack many bricks, the total height variation becomes much larger than the individual brick’s variation. Similarly, in manufacturing, the cumulative effect of tolerances in multiple parts can lead to a significant deviation from the intended assembly dimensions. This can cause problems like interference fits, incorrect clearances, or even functional failure. Understanding tolerance stacking is crucial for design and manufacturing, and it requires meticulous analysis of the entire assembly. Techniques like statistical tolerance analysis can be used to predict the overall tolerance and identify potential problem areas. For instance, when designing a complex assembly like an engine, I might use Monte Carlo simulations to model the variations in individual components and determine the likelihood of the assembly exceeding specified tolerances.
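The three common ways of evaluating a stack can be compared side by side. The sketch below computes the worst-case and root-sum-square (RSS) stack for a one-dimensional chain and then runs a small Monte Carlo simulation. It assumes each dimension is normally distributed with its tolerance equal to three standard deviations, which is a modelling assumption rather than a rule; the dimensions and function names are hypothetical.

```python
import math
import random

def worst_case(tolerances):
    """Worst-case stack: every dimension at its limit simultaneously."""
    return sum(tolerances)

def rss_stack(tolerances):
    """Statistical (root-sum-square) stack for independent dimensions."""
    return math.sqrt(sum(t ** 2 for t in tolerances))

def out_of_tolerance_fraction(nominals, tolerances, lower, upper, trials=20000, seed=1):
    """Monte Carlo estimate of how often the assembled length falls outside [lower, upper]."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    failures = 0
    for _ in range(trials):
        # Assumption: each dimension ~ Normal(nominal, tolerance / 3).
        total = sum(rng.gauss(n, t / 3) for n, t in zip(nominals, tolerances))
        if not lower <= total <= upper:
            failures += 1
    return failures / trials

# Hypothetical stack of four 10 mm spacers, each +/-0.1 mm.
nominals, tolerances = [10.0] * 4, [0.1] * 4
print(f"worst case: +/-{worst_case(tolerances):.2f} mm")
print(f"RSS:        +/-{rss_stack(tolerances):.2f} mm")
frac = out_of_tolerance_fraction(nominals, tolerances, 39.8, 40.2)
print(f"fraction outside 40 +/-0.2 mm: {frac:.4f}")
```

The worst-case stack is twice the RSS stack here, which illustrates the trade-off: worst-case analysis guarantees fit but demands tighter part tolerances, while statistical analysis accepts a small predicted reject rate.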

Q 17. How do you ensure the integrity and security of measurement data?

Ensuring the integrity and security of measurement data is paramount, and it involves a multi-faceted approach:

- Calibrated, traceable equipment: We use calibrated and traceable measurement equipment, ensuring all instruments are regularly checked against national or international standards.

- Data acquisition protocols: We employ strict data acquisition protocols, including standardized procedures for data logging, handling, and storage. This typically involves using robust software systems with audit trails that track any modifications made to the data.

- Cybersecurity: We implement robust cybersecurity measures to protect the data from unauthorized access, modification, or deletion. This includes secure network infrastructure, access control mechanisms, and regular security audits.

- Chain of custody: We establish clear chain of custody procedures to document the handling of samples and the associated data, tracing their movement from acquisition through analysis.

- Record keeping: We maintain detailed records, including instrument calibration certificates, software validation reports, and operator qualifications. A system of regular backups and version control is also crucial for data integrity.
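One way an audit trail can make tampering detectable is to chain records together with cryptographic hashes, so that changing any earlier record invalidates everything after it. The sketch below is an illustration of that idea only, not a description of any particular metrology software; the record fields and function names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first record

def _digest(prev_hash, payload):
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()

def append_record(log, payload):
    """Append a measurement record whose hash covers the previous record's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"prev": prev_hash, "payload": payload, "hash": _digest(prev_hash, payload)})

def verify_log(log):
    """Recompute every hash; any edited or reordered record breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True

log = []
append_record(log, {"part": "A-001", "diameter_mm": 10.002, "operator": "op1"})
append_record(log, {"part": "A-002", "diameter_mm": 9.998, "operator": "op1"})
print("intact log verifies:", verify_log(log))

log[0]["payload"]["diameter_mm"] = 10.000  # simulate a silent edit
print("edited log verifies:", verify_log(log))
```

A hash chain detects changes but does not prevent them, so it complements access control and backups rather than replacing them.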

Q 18. What are some common measurement errors and how to avoid them?

Common measurement errors can stem from various sources, including instrument errors (e.g., miscalibration, drift), environmental factors (e.g., temperature, humidity), operator errors (e.g., improper setup, incorrect reading), and workpiece factors (e.g., surface finish, distortion). To minimize errors, we employ several strategies. We use calibrated instruments and regularly check their accuracy. Environmental control, like maintaining a stable temperature and humidity, is also vital. We train operators rigorously, focusing on proper techniques and procedures, and establish standardized operating procedures (SOPs). To address workpiece errors, careful fixturing, appropriate surface preparation, and the use of appropriate measurement techniques are crucial. For example, measuring the diameter of a soft material might require specialized techniques to avoid deformation during measurement. By carefully addressing these factors, we reduce uncertainties and obtain accurate and reliable measurement data.
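Temperature is a good example of an error that can be estimated rather than just avoided. Dimensional measurements are referenced to 20 °C, and a part measured at another temperature has grown or shrunk by roughly α·L·ΔT. The sketch below applies that linear model; the expansion coefficient used for steel (11.5 × 10⁻⁶ per °C) is a typical handbook value taken as an assumption, and the sketch ignores any expansion of the instrument itself.

```python
REFERENCE_TEMP_C = 20.0  # standard reference temperature for dimensional measurement

def thermal_growth(length_mm, temp_c, alpha_per_c):
    """Change in length relative to 20 degC using the linear expansion model."""
    return alpha_per_c * length_mm * (temp_c - REFERENCE_TEMP_C)

def length_at_reference(measured_mm, temp_c, alpha_per_c):
    """Correct a length measured at temp_c back to its value at 20 degC."""
    return measured_mm / (1 + alpha_per_c * (temp_c - REFERENCE_TEMP_C))

ALPHA_STEEL = 11.5e-6  # per degC, assumed typical value for steel

growth_um = thermal_growth(100.0, 25.0, ALPHA_STEEL) * 1000
print(f"100 mm steel part at 25 degC grows by about {growth_um:.2f} um")
print(f"corrected length: {length_at_reference(100.00575, 25.0, ALPHA_STEEL):.5f} mm")
```

A few microns over 100 mm is negligible for lumber but can consume a large share of a tight machining tolerance, which is why precision measurement is done in temperature-controlled rooms.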

Q 19. Explain your understanding of different measurement techniques (e.g., optical, tactile, laser).

Different measurement techniques offer varying advantages and are chosen based on the specific application and required accuracy.

- Optical measurement: Techniques like microscopy, interferometry, and image analysis offer high precision and non-contact measurement. This is ideal for delicate or fragile components. For example, interferometry can measure surface roughness with nanometer precision.

- Tactile measurement: This involves physical contact between the probe and the workpiece, such as using coordinate measuring machines (CMMs) or dial indicators. Tactile methods are versatile and can measure a wide range of features, though they are sometimes unsuitable for delicate surfaces.

- Laser scanning: This uses laser beams to scan an object’s surface, creating a 3D point cloud. Laser scanning is quick and efficient, providing a complete 3D model of the part, ideal for complex geometries. It’s particularly useful for reverse engineering and rapid prototyping.

Q 20. Describe your experience with laser scanning technology.

My experience with laser scanning technology encompasses several applications, including reverse engineering, quality control, and inspection. I’ve worked with various types of scanners, from handheld devices for smaller parts to large-scale systems for scanning entire vehicle bodies. I’m proficient in processing and analyzing point cloud data, using software like [Mention Specific Software] to generate 3D models, identify deviations from CAD models, and perform dimensional analysis. For instance, I used laser scanning to create a 3D model of a heritage artifact for preservation purposes, accurately capturing its complex details. In manufacturing, I utilized laser scanning to quickly inspect large batches of parts for surface defects or dimensional inaccuracies, enhancing production efficiency and quality control. I understand the importance of proper scanning parameters, data registration, and post-processing techniques for accurate and reliable results.

Q 21. How do you troubleshoot measurement equipment issues?

Troubleshooting measurement equipment issues follows a systematic approach. I begin by identifying the problem: Is it an inaccurate measurement, a malfunctioning component, or a software issue? Then, I review the instrument’s calibration records and operating log to see if any anomalies are present. For instance, a sudden temperature change might cause drift in some instruments. If the problem persists, I systematically check the instrument’s various components, such as power supply, sensors, and cabling, for any signs of damage or malfunction. I also check the surrounding environment for factors that could affect the measurements, such as vibrations, electromagnetic interference, or improper lighting. If the issue is software-related, I review error logs and attempt standard troubleshooting steps, such as restarting the software or updating drivers. If all else fails, I consult the manufacturer’s documentation or seek assistance from specialized technical support. Throughout the process, I maintain detailed records of the troubleshooting steps taken, including dates, times, and solutions implemented. This ensures the efficient resolution of problems and aids in the prevention of future issues.

Q 22. Describe a challenging measurement problem you solved and how you approached it.

One of the most challenging measurement problems I encountered involved verifying the dimensional accuracy of micro-fabricated components for a high-precision sensor. These components were incredibly small, with features measured in micrometers, and the material was highly susceptible to deformation during handling. Traditional contact measurement methods were unsuitable due to the risk of damaging the delicate structures.

My approach involved a multi-faceted strategy:

- Selecting the Right Technique: I opted for non-contact optical metrology using a high-resolution confocal microscope. This method allowed for precise three-dimensional surface mapping without physical contact, minimizing the risk of damage.

- Environmental Control: We established a controlled environment to mitigate the effects of temperature and humidity fluctuations that could affect the dimensions of the components. This involved using a climate-controlled chamber during the measurement process.

- Data Acquisition and Processing: The confocal microscope generated a vast amount of data. To manage this, I utilized specialized software for image processing and data analysis to extract the necessary dimensional information with high accuracy and repeatability. This included implementing algorithms to correct for optical distortions and other systematic errors.

- Uncertainty Analysis: A critical aspect was a rigorous uncertainty analysis to quantify the overall uncertainty associated with the measurement process. This involved considering the uncertainties inherent in the measurement equipment, the measurement process itself, and environmental factors.

By combining these approaches, we were able to successfully verify the dimensional accuracy of the micro-fabricated components within the required tolerances, enabling the successful completion of the project.

Q 23. What are your preferred methods for documenting measurement procedures?

My preferred methods for documenting measurement procedures emphasize clarity, traceability, and ease of replication. I typically use a combination of approaches:

- Standard Operating Procedures (SOPs): I develop detailed SOPs that encompass all steps of the measurement process, including equipment setup, calibration, sample preparation, data acquisition, analysis, and reporting. These are written in a clear and concise manner, utilizing diagrams and flowcharts where appropriate. For example, an SOP for measuring the diameter of a cylindrical part would include details about the use of a micrometer, the number of measurements, and the proper handling of the instrument.

- Measurement Traceability Matrix: To ensure traceability, I create a matrix that links each measurement to the relevant standards, calibration certificates, and equipment used. This documentation is crucial for demonstrating the validity and reliability of the measurement results. Think of it as a family tree for each measurement, showing its lineage back to nationally recognized standards.

- Digital Data Management: Raw data, processed data, and reports are stored in a secure digital database. This allows for efficient data retrieval and analysis, and facilitates collaboration among team members. Data is time-stamped, and access is controlled to ensure data integrity.

This multi-layered approach ensures complete documentation of measurement procedures, facilitating consistency, repeatability, and ease of audit.

Q 24. Explain the importance of proper handling and storage of measurement equipment.

Proper handling and storage of measurement equipment are paramount to maintaining accuracy and extending the lifespan of the instruments. Neglect in this area can lead to costly repairs, inaccurate measurements, and compromised product quality.

Key aspects include:

- Following Manufacturer’s Instructions: Always adhere to the manufacturer’s recommendations regarding handling, cleaning, and storage. This often includes specific procedures for calibration, environmental conditions, and the use of appropriate cleaning solutions.

- Cleanliness: Maintaining cleanliness is crucial. Dust, debris, and fingerprints can introduce errors, especially in precision measurement. Regular cleaning using appropriate methods is essential. For example, optical instruments often require specialized cleaning solutions to avoid scratching delicate lenses.

- Environmental Conditions: Temperature and humidity fluctuations can affect the accuracy of many measuring instruments. Equipment should be stored in stable environmental conditions to minimize these effects. This often involves climate-controlled storage areas.

- Calibration: Regular calibration against traceable standards is vital to ensure the accuracy of measurements. A calibration schedule should be established and adhered to, and calibration certificates should be meticulously maintained.

- Secure Storage: Equipment should be stored securely to prevent damage or theft. This may involve using protective cases, specialized racks, or locked storage areas.

Imagine a situation where a micrometer is dropped and damaged, leading to inaccurate measurements throughout a production run. This would necessitate scrapping faulty products, resulting in significant financial losses. Diligent care of equipment is a fundamental aspect of maintaining measurement integrity and business efficiency.

Q 25. What are the common standards and regulations relevant to metrology in your industry?

The common standards and regulations relevant to metrology vary depending on the specific industry, but some overarching standards are widely applicable. In many industries, including manufacturing and aerospace, standards such as ISO/IEC 17025 (General requirements for the competence of testing and calibration laboratories) are paramount. This standard provides a framework for demonstrating technical competence and ensuring the quality of calibration and testing services.

Other relevant standards include:

- NIST (National Institute of Standards and Technology) Traceability: Many metrology practices rely on traceability to national or international standards, often through NIST in the United States or equivalent organizations in other countries. This ensures consistency and comparability of measurements.

- Industry-Specific Standards: Industries often have their own specific standards and regulations governing dimensional measurements. For example, the automotive industry has stringent standards regarding the dimensional accuracy of parts. Aerospace has even stricter requirements due to safety considerations.

- Regulatory Compliance: Depending on the industry and the products being manufactured, various regulatory bodies may have specific requirements regarding measurement methods and documentation. These could include environmental regulations or safety standards.

Adherence to these standards is essential for ensuring the accuracy, reliability, and legal compliance of measurement processes. Failure to comply can lead to product recalls, legal actions, and damage to reputation.

Q 26. Describe your experience with quality management systems (e.g., ISO 9001).

My experience with quality management systems, specifically ISO 9001, is extensive. I’ve been involved in implementing and maintaining ISO 9001 compliant systems in several organizations. This involves a deep understanding of the requirements related to measurement management, calibration, and traceability.

My contributions typically include:

- Developing and Maintaining Measurement Procedures: Ensuring all measurement procedures are documented, validated, and regularly reviewed in accordance with ISO 9001 guidelines.

- Calibration Management: Establishing and managing the calibration schedule for all measurement equipment, ensuring traceability to national or international standards.

- Internal Audits: Conducting internal audits to assess compliance with ISO 9001 requirements and identify areas for improvement.

- Corrective and Preventive Actions (CAPA): Contributing to the investigation and resolution of nonconformances, implementing corrective and preventive actions to prevent recurrence.

- Training: Training personnel on proper measurement techniques, the importance of quality control, and the requirements of the ISO 9001 quality management system.

Working within an ISO 9001 framework emphasizes a systematic approach to quality control, leading to improved accuracy, efficiency, and customer satisfaction. It is a framework that encourages continuous improvement, something I deeply value in my work.
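
To make the calibration management point concrete, here is a minimal sketch of how a calibration schedule could be checked. The function name, the instrument IDs, the 365-day interval, and the 30-day warning window are all illustrative assumptions, not requirements taken from ISO 9001.

```python
from datetime import date, timedelta


def calibration_status(last_calibrated, interval_days, today, warn_days=30):
    """Classify an instrument as 'overdue', 'due soon', or 'ok'.

    All parameters are hypothetical: real intervals come from your own
    calibration procedure and the instrument's history.
    """
    due = last_calibrated + timedelta(days=interval_days)
    if today > due:
        return "overdue", due
    if (due - today).days <= warn_days:
        return "due soon", due
    return "ok", due


# Hypothetical equipment register: ID -> (last calibration date, interval in days)
register = {
    "MIC-001": (date(2024, 1, 1), 365),
    "CMM-002": (date(2024, 6, 1), 365),
    "CAL-003": (date(2025, 1, 1), 365),
}

today = date(2025, 5, 15)
for instrument_id, (last, interval) in register.items():
    status, due = calibration_status(last, interval, today)
    print(f"{instrument_id}: {status} (due {due.isoformat()})")
```

In practice this logic usually lives in calibration management software, but walking through it in an interview shows you understand what the schedule is actually tracking.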

Q 27. How do you stay updated with the latest advancements in metrology?

Staying updated in the rapidly evolving field of metrology requires a multifaceted approach:

- Professional Organizations: I actively participate in professional organizations such as the American Society of Mechanical Engineers (ASME), and I follow the conferences, workshops, and training sessions offered by national metrology institutes such as the National Institute of Standards and Technology (NIST). These events provide opportunities to learn about the latest advancements from leading experts.

- Peer-Reviewed Publications: I regularly read peer-reviewed journals and technical publications in the field to keep abreast of new techniques, technologies, and research findings. This includes publications focusing on advancements in optical metrology, coordinate measuring machines, and other relevant technologies.

- Industry Trade Shows and Conferences: Attending trade shows and conferences offers firsthand exposure to new measurement equipment and technologies, allowing for direct interaction with manufacturers and other professionals.

- Online Resources and Webinars: I utilize online resources, webinars, and e-learning platforms to access information about the latest developments and best practices in the field.

- Networking: Building and maintaining a network of contacts within the metrology community provides opportunities for knowledge sharing and collaboration.

Continuous learning is essential to remain competitive and provide high-quality metrology services, which is why I combine all of these channels rather than relying on any single one.

Q 28. Explain your understanding of surface roughness measurement.

Surface roughness measurement quantifies the texture of a surface, indicating its deviation from an ideal smooth surface. This is crucial in many industries because surface roughness impacts functionality, durability, and aesthetic appeal. A rough surface might have poor lubricity, increased wear, or an undesirable appearance. Conversely, a very smooth surface is not always better: it may retain lubricant poorly, give coatings or adhesives little to grip, or show scratches more readily.

Several methods are used for surface roughness measurement:

- Profilometry: This involves tracing a stylus across the surface to record a profile of the surface texture. Parameters such as Ra (arithmetic mean roughness), Rz (historically the ten-point height; more recent ISO editions define it as a peak-to-valley height within a sampling length, so state which standard you are using), and Rmax (maximum height) are then calculated from this profile. Think of it like using a needle to trace the contours of a landscape, then mathematically analyzing the elevation differences.

- Optical Methods: These methods use optical techniques, such as interferometry or confocal microscopy, to create a three-dimensional map of the surface without touching it. This areal data gives more comprehensive information about the surface texture than a single stylus trace.

- Focus Variation Microscopy: This is also an optical, non-contact technique. It moves optics with a shallow depth of field vertically and uses the change in image sharpness at each point to reconstruct the surface height at high resolution.

The choice of method depends on factors such as the material, the required accuracy, and the scale of the surface features being measured. The data obtained from surface roughness measurements is crucial for quality control, process optimization, and ensuring that the surface texture meets the required specifications.
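
To show how roughness parameters come out of a measured profile, here is a minimal sketch that computes Ra, Rq, and a total peak-to-valley height from a list of height samples. It assumes evenly spaced samples in micrometres and skips the filtering and sampling-length rules that the roughness standards require, so treat it as an illustration of the arithmetic only. The function name and the sample data are made up for this example.

```python
import math


def roughness_parameters(heights_um):
    """Return (Ra, Rq, Rt) in micrometres for a raw height profile.

    Simplified sketch: no form removal beyond subtracting the mean line,
    no cutoff filter, and the whole trace is treated as one evaluation length.
    """
    n = len(heights_um)
    mean_line = sum(heights_um) / n
    # Deviations of each sample from the mean line
    z = [h - mean_line for h in heights_um]
    ra = sum(abs(v) for v in z) / n             # arithmetic mean deviation
    rq = math.sqrt(sum(v * v for v in z) / n)   # root-mean-square deviation
    rt = max(z) - min(z)                        # highest peak to lowest valley
    return ra, rq, rt


# Hypothetical profile heights in micrometres
profile_um = [0.0, 1.0, 0.0, -1.0, 0.0, 2.0, 0.0, -2.0]
ra, rq, rt = roughness_parameters(profile_um)
print(f"Ra = {ra:.3f} um, Rq = {rq:.3f} um, Rt = {rt:.3f} um")
# prints "Ra = 0.750 um, Rq = 1.118 um, Rt = 4.000 um"
```

Note that two surfaces with the same Ra can look very different, which is why a peak-to-valley parameter is often specified alongside it.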

Key Topics to Learn for Metrology and Dimensional Measurement Interview

- Fundamental Measurement Principles: Understanding accuracy, precision, uncertainty, traceability, and calibration methods. This forms the bedrock of all metrology practices.

- Dimensional Measurement Techniques: Become proficient in various techniques like coordinate measuring machines (CMMs), optical comparators, laser scanners, and other relevant instruments. Be prepared to discuss their applications and limitations.

- Statistical Process Control (SPC) in Metrology: Understand how SPC charts, control limits, and capability analysis are used to monitor and improve measurement processes. This is crucial for quality control.

- Geometric Dimensioning and Tolerancing (GD&T): Mastering GD&T symbols and their application in engineering drawings is essential for interpreting and communicating dimensional requirements.

- Calibration and Traceability: Know the importance of traceable calibration standards and the procedures involved in ensuring the accuracy of measuring instruments. Understanding ISO/IEC 17025, the standard for testing and calibration laboratories, is beneficial.

- Data Acquisition and Analysis: Familiarize yourself with software used for data acquisition, analysis, and reporting. Discuss your experience with data interpretation and problem-solving using metrology data.

- Specific Metrology Applications: Depending on the job description, prepare for questions on specific applications relevant to the industry, such as automotive, aerospace, or manufacturing.

- Problem-Solving & Troubleshooting: Prepare examples showcasing your ability to identify and resolve measurement inconsistencies, instrument malfunctions, or data discrepancies.
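
For the SPC topic above, it helps to be able to work through a capability calculation by hand. The sketch below computes Cp and Cpk from a small sample using the usual textbook formulas. The measurements and specification limits are invented for illustration, and a real study would use far more data and check that the process is stable first.

```python
import statistics


def process_capability(samples, lsl, usl):
    """Return (Cp, Cpk) using the sample standard deviation.

    lsl and usl are the lower and upper specification limits.
    """
    mean = statistics.mean(samples)
    sigma = statistics.stdev(samples)  # sample (n - 1) standard deviation
    cp = (usl - lsl) / (6 * sigma)                    # potential capability
    cpk = min(usl - mean, mean - lsl) / (3 * sigma)   # accounts for centering
    return cp, cpk


# Hypothetical diameter measurements in mm, with a 10.00 +/- 0.06 mm tolerance
diameters_mm = [9.98, 10.00, 10.02, 10.00, 10.00]
cp, cpk = process_capability(diameters_mm, lsl=9.94, usl=10.06)
print(f"Cp = {cp:.2f}, Cpk = {cpk:.2f}")
```

When Cpk is noticeably lower than Cp, the process is off-center, which is a useful talking point in an interview.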

Next Steps

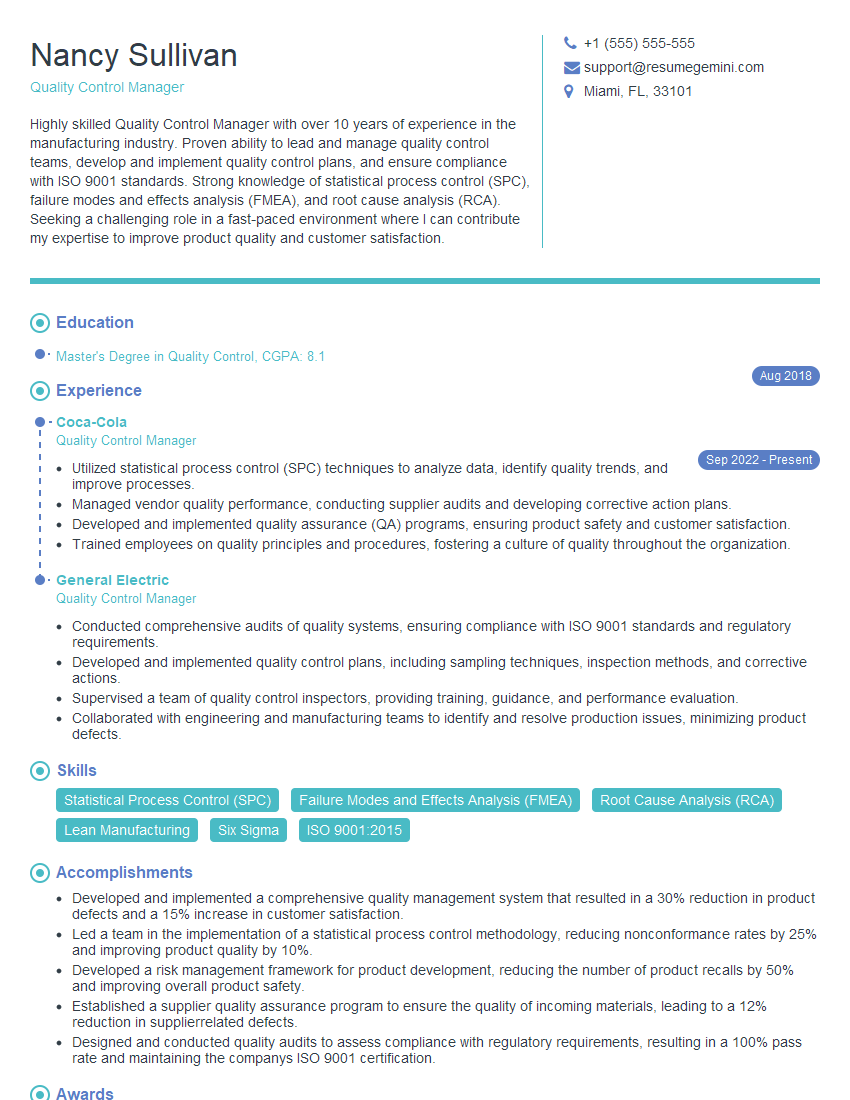

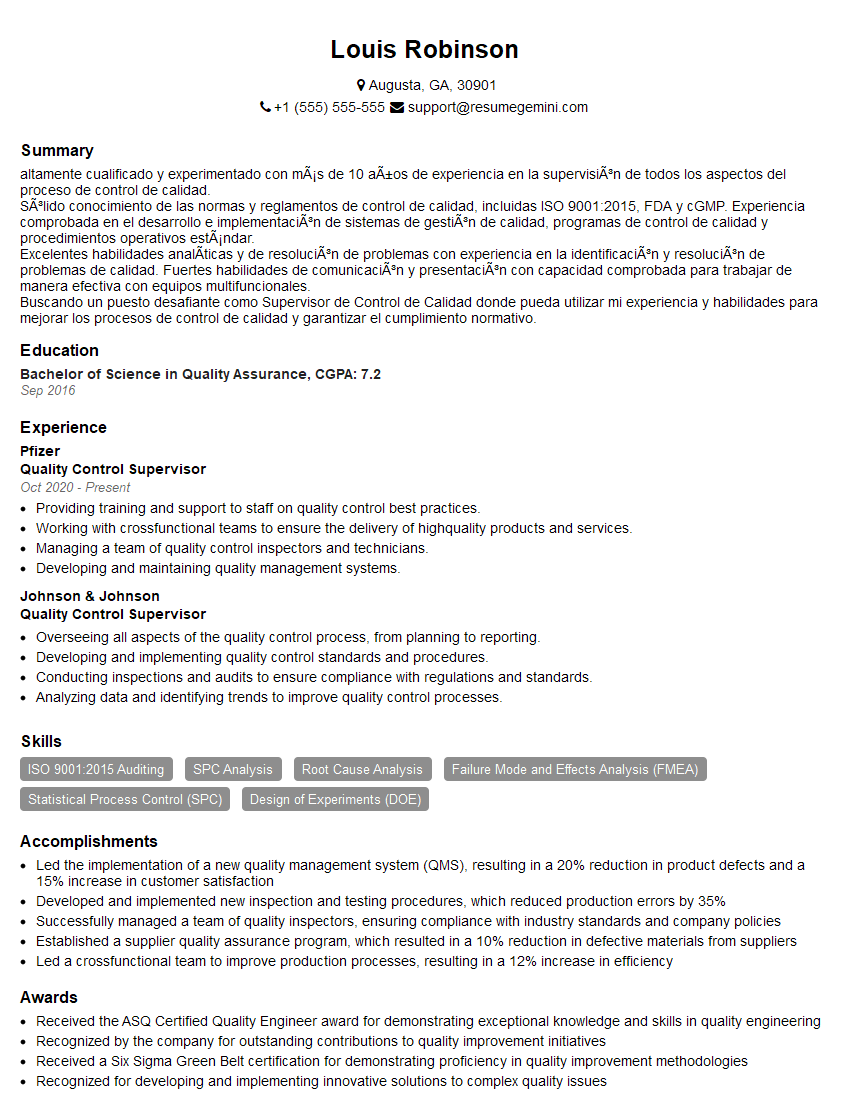

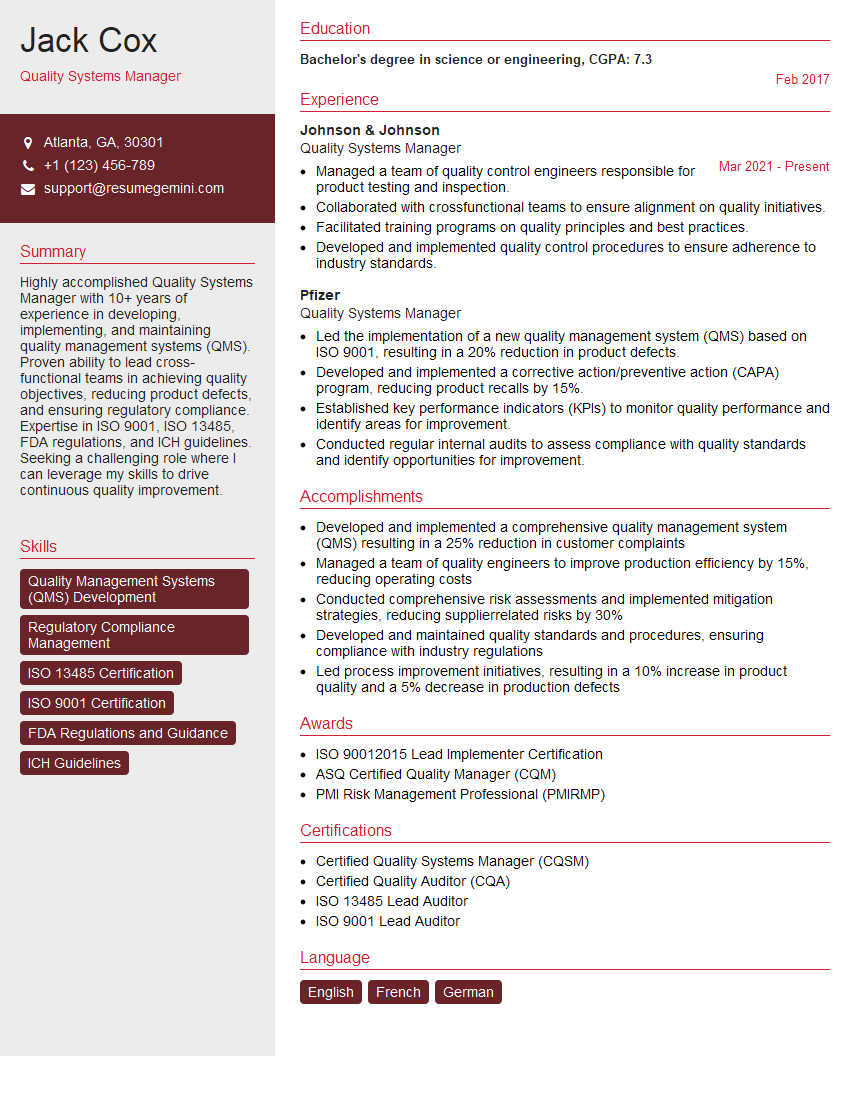

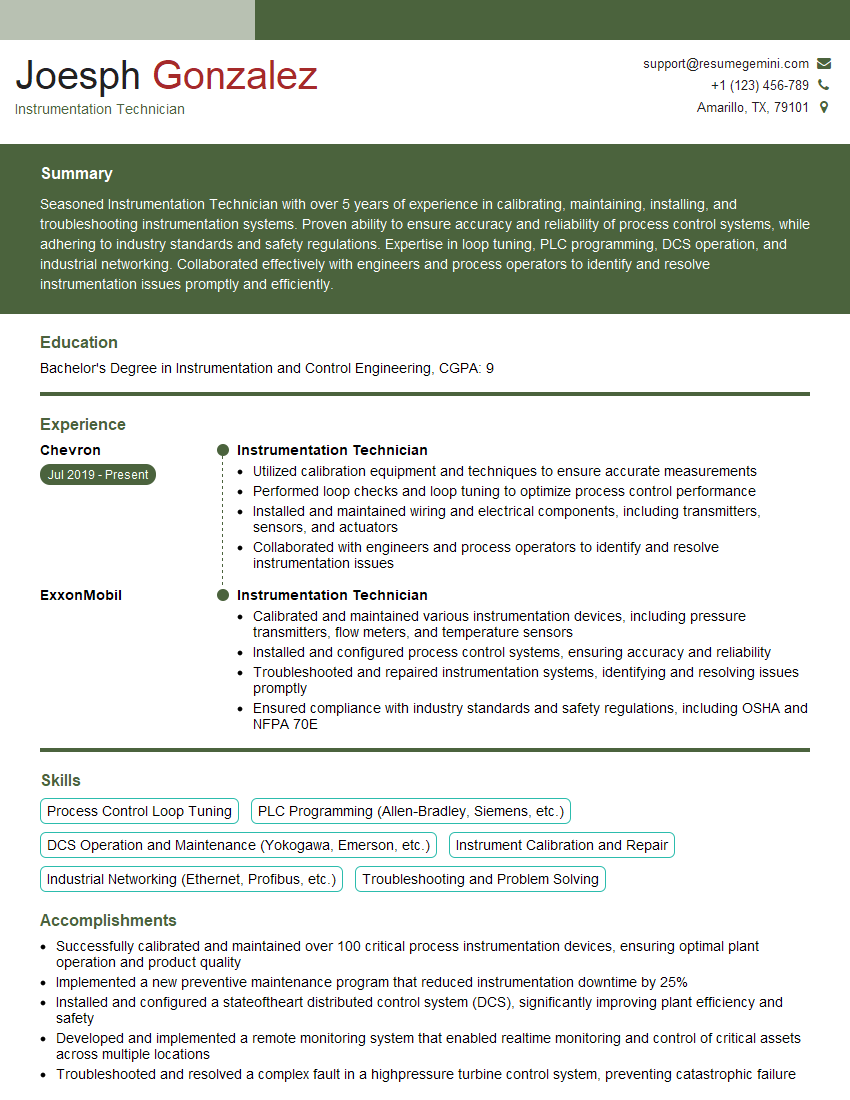

Mastering Metrology and Dimensional Measurement opens doors to exciting career opportunities in various industries demanding high precision and quality control. A strong understanding of these principles significantly enhances your value as a skilled professional and increases your earning potential. To maximize your job prospects, crafting a well-structured, ATS-friendly resume is crucial. ResumeGemini offers a valuable resource for building a professional and impactful resume that highlights your skills and experience effectively. Take advantage of their tools and resources, including examples of resumes tailored specifically to Metrology and Dimensional Measurement, to present yourself as the ideal candidate.
