Cracking a skill-specific interview, like one for Platform Operation, requires understanding the nuances of the role. In this blog, we present the questions you’re most likely to encounter, along with insights into how to answer them effectively. Let’s ensure you’re ready to make a strong impression.

Questions Asked in Platform Operation Interview

Q 1. Explain your experience with CI/CD pipelines.

CI/CD, or Continuous Integration/Continuous Delivery, is a set of practices that automates the process of building, testing, and deploying software. Think of it as an assembly line for software, ensuring consistent and reliable releases. My experience spans various CI/CD tools, including Jenkins, GitLab CI, and Azure DevOps.

In a previous role, we transitioned from a manual deployment process, prone to human error and delays, to a fully automated CI/CD pipeline. This involved integrating several tools: Git for version control, Jenkins for orchestration, automated testing frameworks such as Selenium and JUnit, and deployment tools tailored to our cloud infrastructure (AWS in this case). Deployment time dropped from days to hours, and release stability improved markedly. We used a branching strategy (Gitflow) to manage versions and features, which kept deployments controlled. A key metric was the number of deployment failures, which fell by 75% after implementation.

Another project involved implementing canary deployments, where a new version is initially released to a small subset of users before a full rollout. This allowed us to identify and address potential issues early on, mitigating the risk of widespread service disruption.
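
To make the canary idea concrete, here is a minimal Python sketch of percentage-based routing. It assumes every request carries a stable user ID; the function names (`canary_bucket`, `route_version`) and the hashing scheme are illustrative assumptions, not the mechanism used in the project described above.

```python
import hashlib


def canary_bucket(user_id: str) -> int:
    """Map a user ID to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def route_version(user_id: str, canary_percent: int) -> str:
    """Return "canary" for users whose bucket falls below the rollout percentage."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    return "canary" if canary_bucket(user_id) < canary_percent else "stable"
```

Because the bucket comes from a hash of the user ID, a user who is already on the canary stays on it when the percentage is raised, which keeps the experience consistent during a gradual rollout.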

Q 2. Describe your experience with monitoring and alerting systems.

Monitoring and alerting systems are crucial for maintaining the health and performance of any platform. They provide real-time visibility into system behavior and alert us to potential problems before they impact users. My experience encompasses a range of tools, including Prometheus, Grafana, Datadog, and the ELK stack (Elasticsearch, Logstash, Kibana).

For instance, in a past project, we implemented a comprehensive monitoring system using Prometheus and Grafana. We defined key performance indicators (KPIs) like CPU utilization, memory usage, request latency, and error rates. Grafana dashboards provided visually appealing representations of these metrics, facilitating quick identification of anomalies. We configured alerts based on predefined thresholds, notifying the on-call team via PagerDuty whenever critical metrics deviated from the norm. This proactive approach significantly reduced our mean time to resolution (MTTR) for incidents.

Alerting strategies are crucial. We implemented tiered alerts, prioritizing critical issues over less severe ones to avoid alert fatigue. We also invested heavily in automated root cause analysis tools, which helped us quickly pinpoint the source of problems, saving valuable time during incident response.
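
A tiered alerting policy can be sketched in a few lines of Python. The metric names, threshold values, and the "page"/"ticket" tiers below are hypothetical, chosen only to illustrate the idea; a real setup would express this in the alerting tool's own rule format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    warning: float
    critical: float


# Hypothetical thresholds; real values depend on the service being monitored.
THRESHOLDS = {
    "cpu_percent": Threshold(warning=75.0, critical=90.0),
    "error_rate_percent": Threshold(warning=1.0, critical=5.0),
}


def classify(metric: str, value: float) -> str:
    """Return the alert tier for a metric reading."""
    threshold = THRESHOLDS[metric]
    if value >= threshold.critical:
        return "page"    # critical: notify the on-call engineer immediately
    if value >= threshold.warning:
        return "ticket"  # warning: handle during working hours
    return "ok"
```

Keeping only the critical tier as a page is one way to limit alert fatigue: lower-severity readings are still recorded, but they do not interrupt anyone.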

Q 3. How do you handle incidents and outages?

Handling incidents and outages requires a structured and methodical approach. I adhere to incident management best practices, typically following the Incident Command System (ICS) or a similar framework. This involves clear communication, swift escalation, and meticulous documentation.

My approach typically follows these steps:

1. Acknowledge: Quickly acknowledge the incident and its impact.

2. Investigate: Gather information to understand the root cause.

3. Mitigate: Implement immediate actions to reduce the impact.

4. Recover: Restore the system to its normal state.

5. Review: Conduct a post-incident review to identify improvements for future prevention.

For example, during a recent outage caused by a database replication issue, we followed this framework. We immediately acknowledged the issue to affected users, then investigated the problem using logs and monitoring tools, identifying the replication lag as the culprit. We mitigated the impact by rerouting traffic to a healthy database replica. After recovery, we conducted a thorough post-incident review, identifying weaknesses in our replication monitoring and implementing improvements to prevent similar incidents in the future. The key is collaboration and clear communication within the team and to stakeholders.

Q 4. What are your preferred methods for capacity planning?

Capacity planning involves predicting future resource needs to ensure the platform can handle anticipated workloads. It’s a balance between optimizing costs and guaranteeing performance. I employ various methods, including historical data analysis, load testing, and forecasting models.

I typically start by analyzing historical performance data to identify trends and patterns in resource consumption. This involves examining metrics like CPU usage, memory consumption, network traffic, and storage utilization. I then use this data to project future needs, considering factors like seasonality, growth trends, and planned feature releases. Load testing, using tools like JMeter or k6, is crucial to validate our capacity predictions under simulated peak loads. This helps identify bottlenecks and potential scaling issues early on. Finally, I often employ forecasting techniques, such as exponential smoothing or ARIMA models, to refine our capacity projections and anticipate unexpected spikes in demand. The goal is to ensure we have sufficient resources to handle peak loads without compromising performance, while avoiding over-provisioning that leads to unnecessary costs.
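
As an illustration of the forecasting step, here is a minimal sketch of simple exponential smoothing in Python. The smoothing factor `alpha`, the 30% headroom default, and the function names are assumptions made for the example; a production forecast would normally also model trend and seasonality.

```python
from typing import Sequence


def exponential_smoothing(series: Sequence[float], alpha: float) -> float:
    """Return the smoothed level after the last observation (a one-step forecast)."""
    if not series:
        raise ValueError("series must not be empty")
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in the range (0, 1]")
    level = series[0]
    for observation in series[1:]:
        # Blend the newest observation with the previous smoothed level.
        level = alpha * observation + (1.0 - alpha) * level
    return level


def required_capacity(forecast: float, headroom: float = 0.3) -> float:
    """Add a safety margin on top of the forecast (30% by default, an assumed value)."""
    return forecast * (1.0 + headroom)
```

A higher `alpha` reacts faster to recent changes in demand, while a lower one smooths out short-lived spikes; load testing is still needed to confirm that the provisioned capacity actually holds.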

Q 5. Explain your experience with automation and scripting.

Automation and scripting are fundamental to efficient platform operation. I’m proficient in various scripting languages including Python, Bash, and PowerShell. I utilize these skills for tasks such as automating deployments, configuring servers, monitoring systems, and responding to incidents.

For example, I developed a Python script to automate the provisioning of new servers on AWS, significantly reducing the time and effort required for onboarding new infrastructure. This script handles tasks such as creating EC2 instances, configuring security groups, installing necessary software, and deploying applications. Another script I created automates the process of backing up critical database instances. Automation not only saves time but also reduces human error, enhancing the reliability and consistency of our operations. This is vital for efficient scaling and maintenance.

Q 6. How do you ensure platform security?

Ensuring platform security is paramount. My approach involves implementing a multi-layered security strategy encompassing various measures. This includes access control, network security, data encryption, vulnerability management, and security monitoring.

For example, I implemented a robust access control system using role-based access control (RBAC) to restrict access to sensitive resources. We leverage strong password policies, multi-factor authentication (MFA), and regular security audits to reduce the risk of unauthorized access. Network security measures include firewalls, intrusion detection/prevention systems (IDS/IPS), and regular penetration testing to identify and address vulnerabilities. Data encryption, both in transit and at rest, is critical, protecting sensitive information from unauthorized access. We utilize a vulnerability scanning tool to identify security weaknesses and prioritize their remediation. Continuous security monitoring involves actively monitoring logs and security alerts to detect suspicious activities and respond quickly to potential threats.
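
The core of a role-based access check can be sketched as follows. The roles and permissions are hypothetical, and real platforms usually delegate this to an identity provider or the cloud provider's IAM service rather than application code.

```python
from typing import Dict, Iterable, Set

# Hypothetical role-to-permission mapping, for illustration only.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "viewer": {"read"},
    "operator": {"read", "restart"},
    "admin": {"read", "restart", "delete"},
}


def is_allowed(roles: Iterable[str], action: str) -> bool:
    """Allow an action only if at least one of the user's roles grants it."""
    # Unknown roles map to an empty permission set, so access is denied by default.
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in roles)
```

Denying by default when a role is unknown or missing is the design choice that matters most here: a configuration mistake then results in too little access rather than too much.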

Q 7. Describe your experience with performance tuning and optimization.

Performance tuning and optimization are ongoing processes to enhance the speed, efficiency, and scalability of the platform. This involves identifying bottlenecks, optimizing code, and improving resource utilization.

In one project, we improved the performance of a web application by optimizing database queries. Through query analysis and indexing, we reduced query execution time by 50%, resulting in faster response times for users. We also implemented caching strategies to reduce the load on the database and improve response times. Profiling code using tools such as YourKit or JProfiler helped identify performance bottlenecks in the application code, enabling us to optimize specific areas and improve overall efficiency. Load testing played a key role in validating these optimizations and ensuring that performance improvements were sustainable under various load conditions. Continuous performance monitoring allowed us to track the effects of our changes and make further optimizations over time.
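
The caching strategy mentioned above can be illustrated with a small time-to-live (TTL) cache. This is a sketch under assumptions: the class name `TTLCache`, the `loader` callback that stands in for a database query, and the injectable `clock` are all illustrative, and a shared cache such as Redis or Memcached would be the more typical choice across multiple application servers.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class TTLCache:
    """Cache loader results in memory and reload them after they expire."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[Hashable, Tuple[float, Any]] = {}

    def get_or_load(self, key: Hashable, loader: Callable[[Hashable], Any]) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # still fresh: skip the expensive call
        value = loader(key)  # expired or missing: hit the backing store
        self._entries[key] = (now + self._ttl, value)
        return value
```

The TTL is the trade-off knob: a longer value takes more load off the database but serves staler data.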

Q 8. How do you manage and prioritize technical debt?

Managing technical debt is like managing any debt – you need a strategy to pay it down without crippling your current operations. It’s about prioritizing which issues to address first based on their impact and risk. We typically use a combination of methods:

- Prioritization Matrix: We categorize technical debt based on impact (e.g., low, medium, high) and risk (e.g., low, medium, high). High-impact, high-risk debt gets immediate attention. A critical security vulnerability is a typical example: it is a high-impact, high-risk item that needs immediate remediation.

- Cost of Delay Analysis: We estimate the cost of *not* addressing a piece of debt, factoring in potential future issues, maintenance overhead, and lost opportunities. This helps in prioritizing issues that will become significantly more expensive to fix later.

- Regular Audits: We conduct regular code reviews, security assessments, and performance testing to identify and document technical debt. Think of it as a financial audit for your codebase.

- Dedicated Time Allocation: We allocate a specific percentage of sprint capacity or project time to tackle technical debt. This ensures that it doesn’t get completely neglected.

For instance, in a past project, we identified a poorly documented API causing frequent integration issues. Using the cost of delay analysis, we prioritized refactoring it, leading to a significant reduction in support tickets and faster development cycles.
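
One simple way to turn the prioritization matrix into a ranked backlog is to score each item. The sketch below multiplies impact by risk, which is an assumed scoring rule rather than a standard; the item fields and names are also illustrative.

```python
from typing import Dict, List

LEVELS = {"low": 1, "medium": 2, "high": 3}


def priority_score(impact: str, risk: str) -> int:
    """Score a debt item; multiplying the two levels is an assumed convention."""
    return LEVELS[impact] * LEVELS[risk]


def prioritize(items: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Return debt items ordered from highest to lowest priority score."""
    return sorted(
        items,
        key=lambda item: priority_score(item["impact"], item["risk"]),
        reverse=True,
    )
```

A score like this is only a starting point for discussion; cost-of-delay estimates can still move an item up or down the list.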

Q 9. What is your experience with containerization technologies (e.g., Docker, Kubernetes)?

I have extensive experience with Docker and Kubernetes. Docker provides lightweight, portable containers for applications, ensuring consistent execution across environments. Kubernetes takes this further, automating deployment, scaling, and management of containerized applications at scale.

I’ve used Docker to create consistent development and production environments, eliminating the ‘it works on my machine’ problem. I’ve leveraged Kubernetes to orchestrate complex deployments across multiple nodes, ensuring high availability and automatic scaling based on demand. This includes experience with managing deployments using YAML manifests, setting up ingress controllers for external access, and utilizing persistent volumes for data storage.

For example, in a recent project, we migrated a monolithic application to microservices using Docker and Kubernetes. This significantly improved scalability, resilience, and deployment speed. We used Kubernetes’ built-in auto-scaling features to handle traffic spikes during peak hours, ensuring optimal performance and resource utilization. We also employed various monitoring tools to track resource usage and application health within the Kubernetes cluster.

Q 10. Describe your experience with cloud platforms (e.g., AWS, Azure, GCP).

My cloud experience spans AWS, Azure, and GCP. I’m comfortable designing, deploying, and managing applications on all three platforms. Each has its strengths: AWS is known for its extensive services, Azure integrates tightly with Windows environments, and GCP excels in big data processing and machine learning.

My experience includes managing virtual machines, databases, storage services, networking infrastructure, and security configurations across these platforms. I’m proficient in using their respective command-line interfaces (CLIs) and management consoles. I understand the importance of security best practices like IAM roles, access control lists (ACLs), and network segmentation.

For example, I recently led a migration from on-premises servers to AWS. This involved migrating databases, web servers, and application logic using tools like AWS Database Migration Service and Elastic Beanstalk. We implemented robust security measures, including encryption at rest and in transit and multi-factor authentication, to ensure the migrated systems were secure and compliant.

Q 11. How do you troubleshoot complex technical problems?

Troubleshooting complex problems involves a systematic approach. I typically follow these steps:

- Gather Information: Start by collecting logs, metrics, and error messages. Understand the symptoms of the problem.

- Reproduce the Problem: If possible, try to reproduce the issue in a controlled environment to isolate the root cause. This helps in ruling out intermittent issues.

- Isolate the Source: Use tracing and debugging tools to pinpoint the specific component causing the problem. Consider network traffic, database queries, and application logs.

- Formulate Hypotheses: Develop potential explanations for the issue based on the information gathered. This requires deep technical knowledge of the system.

- Test Hypotheses: Systematically test each hypothesis to validate or refute it. Make small, incremental changes to observe the effects.

- Document Findings: Record the troubleshooting steps, root cause, and solution to prevent future occurrences. This is critical for knowledge sharing and team collaboration.

For example, I once encountered a performance bottleneck in a high-traffic application. By analyzing application logs, database queries, and network traffic, we identified a specific database query as the culprit. We optimized the query and implemented caching, which drastically improved the application’s performance.

Q 12. What is your experience with infrastructure as code (IaC)?

Infrastructure as Code (IaC) is essential for automating and managing infrastructure. It treats infrastructure as code, allowing version control, testing, and repeatable deployments. I have experience using tools like Terraform and Ansible.

Terraform lets you define infrastructure declaratively by specifying the desired state. Ansible uses an agentless architecture for configuration management and automation, which makes it efficient for managing servers and applications. I’ve used both to provision cloud resources, configure servers, and deploy applications in a consistent and reliable manner.

In a previous role, we used Terraform to manage our entire AWS infrastructure. This allowed us to automate the creation of EC2 instances, VPCs, S3 buckets, and other resources. Version control enabled us to track infrastructure changes and roll back to previous versions if needed. This approach greatly reduced the risk of human error and improved deployment speed and reliability.
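
The declarative model is easier to explain with a small example. The Python sketch below compares a desired state with the actual state and lists what would need to change. It is a conceptual illustration only, not how Terraform is implemented, and the resource names and attributes are hypothetical.

```python
from typing import Any, Dict, List


def plan(desired: Dict[str, Any], actual: Dict[str, Any]) -> Dict[str, List[str]]:
    """Compare desired and actual resources and report the required changes."""
    return {
        "create": sorted(name for name in desired if name not in actual),
        "update": sorted(
            name for name in desired
            if name in actual and desired[name] != actual[name]
        ),
        "delete": sorted(name for name in actual if name not in desired),
    }
```

The point of the declarative approach is that the operator describes the end result and the tool works out the steps, which is what makes reviews and rollbacks through version control practical.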

Q 13. Explain your understanding of different logging and tracing systems.

Logging and tracing systems are crucial for monitoring and troubleshooting applications. Logging provides structured records of events, while tracing follows requests through the entire system, providing end-to-end visibility.

I have experience using various logging systems, including the ELK stack (Elasticsearch, Logstash, and Kibana) and its Fluentd-based variant (EFK), as well as centralized logging solutions like Splunk and Datadog. For tracing, I’ve used tools like Jaeger, Zipkin, and distributed tracing capabilities within cloud platforms.

For example, in a recent project, we implemented the ELK stack to collect, analyze, and visualize logs from various microservices. This provided invaluable insights into application behavior, enabling quicker identification and resolution of issues. We also used Jaeger for distributed tracing to track requests as they flowed through multiple services, helping us pinpoint performance bottlenecks and failures within complex application architectures.
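
The link between logging and tracing can be shown with a minimal sketch: each service writes structured JSON log lines that carry the same trace ID. The function names and field names are assumptions for the example; real tracers such as Jaeger propagate richer context (spans, parent IDs) through request headers.

```python
import json
import uuid
from typing import Any


def new_trace_id() -> str:
    """Generate an ID at the edge of the system, once per incoming request."""
    return uuid.uuid4().hex


def log_event(service: str, message: str, trace_id: str, **fields: Any) -> str:
    """Build one structured log line that can be indexed and searched by trace ID."""
    record = {"service": service, "message": message, "trace_id": trace_id, **fields}
    return json.dumps(record, sort_keys=True)
```

With every service logging the same `trace_id`, a single search in the log store returns the full path of one request across services.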

Q 14. How do you ensure high availability and scalability of a platform?

Ensuring high availability and scalability requires a multi-faceted approach. Key strategies include:

- Redundancy: Deploying multiple instances of components across multiple availability zones or regions ensures that if one instance fails, others can take over seamlessly. Load balancers distribute traffic across these instances.

- Auto-Scaling: Automatically scaling resources up or down based on demand ensures optimal resource utilization and handles traffic spikes effectively. This typically involves cloud-based auto-scaling services.

- Database Replication: Replicating databases across multiple regions ensures data availability even if one region experiences an outage.

- Caching: Caching frequently accessed data closer to users reduces the load on backend systems and improves response times. CDNs (Content Delivery Networks) can also be employed.

- Load Balancing: Distributing traffic across multiple servers prevents overload on any single instance.

- Health Checks and Monitoring: Continuous monitoring of system health and performance is critical for detecting and responding to issues proactively.

For example, in a previous project, we designed a highly available web application using multiple EC2 instances across multiple availability zones in AWS. We used Elastic Load Balancing to distribute traffic and Auto Scaling to automatically add or remove instances based on demand. This ensured the application remained available even during significant traffic surges.
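
The benefit of redundancy can be estimated with a short calculation. The sketch below assumes that instances fail independently, which is an optimistic simplification because shared dependencies often cause correlated failures; the function name is illustrative.

```python
def combined_availability(instance_availability: float, instances: int) -> float:
    """Availability of a group that stays up as long as one instance is up."""
    if not 0.0 <= instance_availability <= 1.0:
        raise ValueError("instance_availability must be between 0 and 1")
    if instances < 1:
        raise ValueError("instances must be at least 1")
    # The group is down only when every instance is down at the same time.
    return 1.0 - (1.0 - instance_availability) ** instances
```

Under the independence assumption, two instances that are each available 99% of the time give a combined availability of about 99.99%, which is why spreading instances across availability zones pays off.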

Q 15. Describe your experience with database administration and management.

My experience with database administration and management spans more than eight years and covers both relational and NoSQL databases. I’ve worked extensively with MySQL, PostgreSQL, MongoDB, and Cassandra, managing everything from database design and implementation to performance tuning, security, and backup/recovery strategies. For instance, in my previous role, I led the migration of our company’s primary database from MySQL to PostgreSQL to handle increasing data volume and improve query performance. This involved careful planning, data migration scripting, thorough testing, and ongoing monitoring. I’m proficient in SQL and NoSQL query languages, schema design best practices, and database monitoring with tools like Prometheus and Grafana. I also have strong experience in optimizing database queries and resolving performance bottlenecks, often using techniques like indexing, query rewriting, and connection pooling.

Furthermore, I understand the importance of database security, incorporating measures such as access control lists, encryption, and regular security audits. I also have a deep understanding of database replication and high availability strategies to ensure business continuity.

Q 16. What are your preferred methods for disaster recovery planning?

My preferred methods for disaster recovery planning hinge on a multi-layered approach emphasizing redundancy and automation. This includes a combination of hot/warm standby systems, automated backups to geographically diverse locations (cloud-based solutions preferred), and robust failover mechanisms. I advocate for regular disaster recovery drills to test the effectiveness of the plan and identify any weaknesses.

For example, in a past project, we implemented a hot standby setup using a geographically separate data center with automated failover, utilizing tools like Terraform and Ansible for infrastructure orchestration. This minimized downtime in case of a primary data center outage. We also implemented a robust version control system for our infrastructure-as-code to maintain reproducibility and track changes.

A critical aspect of disaster recovery is not just the technical implementation, but also clear communication protocols and well-defined roles and responsibilities. This ensures a coordinated response during an actual disaster.

Q 17. How do you measure and improve platform performance?

Measuring and improving platform performance is an iterative process that combines proactive monitoring with reactive troubleshooting. I start by defining key performance indicators (KPIs), which range from response times and throughput to error rates and resource utilization. I then use monitoring tools such as Prometheus, Grafana, and Datadog to collect real-time data on these KPIs and build dashboards that give a clear overview of the platform’s health.

Once potential bottlenecks are identified, I employ a combination of techniques to address them, ranging from optimizing code and database queries to scaling infrastructure (horizontal or vertical). Profiling tools help pinpoint performance issues within the application code. After implementing changes, I rigorously monitor the impact of those changes to ensure improvements and avoid unintended consequences. A/B testing can be invaluable here.

Lastly, continuous performance improvement is key. I regularly review and refine our monitoring strategy, incorporating new metrics as needed and adapting to evolving system requirements. This is crucial for staying ahead of potential problems and ensuring optimal performance.

Q 18. What is your experience with different load balancing strategies?

I have experience with various load balancing strategies, including round-robin, least connections, IP hash, and more advanced techniques like weighted round-robin and consistent hashing. The choice of strategy depends heavily on the application and its specific requirements. For example, in a scenario with heterogeneous server capabilities, a weighted round-robin algorithm is superior to a simple round-robin, distributing load proportionally to each server’s capacity. Similarly, if session persistence is crucial, an IP hash approach might be preferred to maintain client-server affinity.

I’ve worked with both hardware and software-based load balancers, including popular solutions like HAProxy, Nginx, and AWS Elastic Load Balancing. My experience also includes configuring and managing these load balancers, including health checks, SSL termination, and traffic shaping. Effective load balancing necessitates not just choosing the right algorithm but also careful monitoring and adjustment to ensure optimal performance and high availability.
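
Two of the strategies above are easy to sketch in Python. The server names and weights are hypothetical, and the weighted round-robin shown here is the simplest possible form (it repeats each server according to its weight); load balancers such as Nginx use smoother variants that interleave servers more evenly.

```python
import hashlib
import itertools
from typing import Dict, Iterator, List


def weighted_round_robin(weights: Dict[str, int]) -> Iterator[str]:
    """Cycle through servers, repeating each one in proportion to its weight."""
    pool = [server for server, weight in weights.items() for _ in range(weight)]
    if not pool:
        raise ValueError("at least one server needs a positive weight")
    return itertools.cycle(pool)


def ip_hash(client_ip: str, servers: List[str]) -> str:
    """Pick a server from a hash of the client IP so repeat requests land on the same one."""
    if not servers:
        raise ValueError("servers must not be empty")
    digest = hashlib.sha256(client_ip.encode("utf-8")).hexdigest()
    return servers[int(digest, 16) % len(servers)]
```

Note that plain modulo hashing remaps most clients whenever the server list changes; consistent hashing exists to limit that reshuffling.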

Q 19. Describe your experience with implementing platform observability.

Implementing platform observability involves establishing a comprehensive system for monitoring and analyzing the platform’s behavior. This goes beyond basic monitoring by providing deep insights into the system’s inner workings and enabling proactive identification of potential issues. Key components include:

- Logging: Centralized logging with the ELK stack (Elasticsearch, Logstash, and Kibana), its Fluentd-based variant (EFK), or a similar solution allows efficient searching and analysis of log data.

- Metrics: Collecting metrics on various aspects of the system (CPU utilization, memory usage, network traffic, etc.) using tools like Prometheus and Grafana.

- Tracing: Employing distributed tracing systems like Jaeger or Zipkin to track requests as they flow through the entire system, helping pinpoint performance bottlenecks and identifying the root cause of errors.

In a recent project, we implemented a comprehensive observability solution utilizing the ELK stack for logging, Prometheus for metrics, and Jaeger for tracing. This allowed us to effectively monitor and troubleshoot our microservices-based architecture, leading to significant improvements in our response time and overall system stability.

Q 20. How do you handle communication during critical incidents?

During critical incidents, effective communication is paramount. I utilize a well-defined communication plan that includes clearly defined roles, escalation paths, and communication channels. This usually involves a combination of tools like Slack or similar chat platforms for rapid updates, and conference calls or video conferences for more detailed discussions.

Maintaining transparency is crucial. Keeping all stakeholders informed of the situation, the steps being taken, and the estimated time to resolution helps build trust and minimizes panic. Regular updates, even if they contain only limited progress, are vital. Post-incident reviews are also key to identifying areas for improvement in our response procedures and overall communication effectiveness.

A well-structured incident management process, often following the Incident Command System (ICS) principles, further enhances communication and coordination.

Q 21. What is your experience with implementing and managing backups?

My experience with implementing and managing backups encompasses a variety of strategies and technologies. I emphasize the 3-2-1 rule: maintaining three copies of data, on two different media types, with one copy offsite. This ensures data redundancy and protection against various failure scenarios. I have experience with both physical and cloud-based backup solutions.

For example, I have implemented and managed backup strategies using tools like Bacula, rsync, and cloud-based services such as AWS S3 and Azure Blob Storage. I always prioritize testing the backup and restore process regularly to ensure its effectiveness and identify any potential problems before an actual disaster strikes. This includes performing full and incremental backups, verifying the integrity of backups, and practicing restores to ensure data can be recovered quickly and accurately. Automation plays a significant role in ensuring backups are performed reliably and efficiently.
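
The 3-2-1 rule is simple enough to check automatically. The sketch below assumes a backup inventory is available as a list of records; the `BackupCopy` fields and the function name are hypothetical, and the list is assumed to include the primary copy of the data.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class BackupCopy:
    location: str  # e.g. a hostname or bucket name
    media: str     # e.g. "disk", "tape", "object-storage"
    offsite: bool  # True if stored away from the primary site


def satisfies_3_2_1(copies: List[BackupCopy]) -> bool:
    """Check for three copies, two media types, and at least one offsite copy."""
    enough_copies = len(copies) >= 3
    enough_media = len({copy.media for copy in copies}) >= 2
    has_offsite = any(copy.offsite for copy in copies)
    return enough_copies and enough_media and has_offsite
```

A check like this only confirms that the copies exist; regular restore tests are still what prove that the data can actually be recovered.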

Q 22. What metrics do you use to measure platform health?

Measuring platform health involves monitoring a variety of key performance indicators (KPIs) and metrics, categorized for better understanding and actionable insights. We don’t rely on a single metric, but rather a holistic view.

- Availability: This measures uptime and the percentage of time the platform is operational. Tools like Nagios or Prometheus are essential for tracking this, with specific alerts triggered at pre-defined thresholds (e.g., 99.99% uptime target). A drop below this triggers immediate investigation.

- Performance: We monitor response times, latency, and throughput. For instance, we might track average API response time, database query execution time, and overall system load. Slowdowns often indicate bottlenecks requiring optimization or scaling.

- Error Rates: Tracking the frequency and types of errors is vital. Tools like Sentry or the ELK stack allow for detailed error analysis. High error rates suggest underlying issues that need to be addressed immediately to prevent cascading failures.

- Resource Utilization: Monitoring CPU, memory, disk I/O, and network bandwidth utilization helps to proactively identify potential resource constraints. Tools like Datadog or Grafana provide visual dashboards for easy identification of resource bottlenecks.

- Security: This includes monitoring for security breaches, unauthorized access attempts, and successful logins. Security Information and Event Management (SIEM) systems are crucial for this, providing real-time alerts and detailed logs for incident response.

In one project, we identified a performance bottleneck by monitoring database query times. Our investigation revealed an inefficient query that was slowing down the entire system. Optimizing this query resulted in a significant improvement in overall platform performance.
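
An availability target such as the 99.99% mentioned above is easier to reason about when converted into a downtime budget. A minimal sketch, assuming a 30-day measurement window (the window length and function name are assumptions for the example):

```python
def downtime_budget_minutes(target_percent: float, window_days: int = 30) -> float:
    """Return how many minutes of downtime a target allows within the window."""
    if not 0.0 < target_percent <= 100.0:
        raise ValueError("target_percent must be in the range (0, 100]")
    total_minutes = window_days * 24 * 60
    return total_minutes * (1.0 - target_percent / 100.0)
```

Under these assumptions, a 99.99% target allows roughly 4.3 minutes of downtime in a 30-day window, while 99.9% allows about 43 minutes, which shows how much each extra "nine" tightens the budget.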

Q 23. Describe your experience with different deployment strategies.

My experience encompasses various deployment strategies, each with its own strengths and weaknesses, and the choice often depends on the application’s complexity and the required level of downtime.

- Blue/Green Deployments: This involves maintaining two identical production-grade environments: blue (currently serving live traffic) and green (idle, receiving the new release). New code is deployed to the green environment, thoroughly tested, and then traffic is switched from blue to green. This minimizes downtime and allows a quick rollback by switching traffic back to blue. We used this strategy for a high-traffic e-commerce platform.

- Canary Deployments: A subset of users is directed to the new version while the majority remains on the old version. This allows for gradual rollout and immediate feedback, minimizing the risk of widespread issues. We successfully implemented this for a new feature release, allowing us to address minor bugs before a full deployment.

- Rolling Deployments: New versions are gradually rolled out across multiple servers, one at a time. This approach minimizes downtime and allows for continuous monitoring of the new version’s performance. It’s ideal for microservices architectures.

- Immutable Infrastructure: Instead of updating existing servers, new servers are provisioned with the updated code and configurations. This approach improves consistency and reduces the risk of configuration drift. We’ve adopted this approach increasingly in our cloud-native projects.

Choosing the right strategy requires careful consideration of factors such as application complexity, traffic volume, and risk tolerance. Each project demands a tailored approach.
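
The control flow of a rolling deployment can be sketched as follows. The `deploy` and `is_healthy` callbacks are placeholders for whatever the real tooling provides, and the batch size is an assumed parameter; orchestrators such as Kubernetes implement this logic for you.

```python
from typing import Callable, List, Tuple


def rolling_batches(servers: List[str], batch_size: int) -> List[List[str]]:
    """Split the server list into consecutive batches."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return [servers[i:i + batch_size] for i in range(0, len(servers), batch_size)]


def rolling_deploy(
    servers: List[str],
    batch_size: int,
    deploy: Callable[[str], None],
    is_healthy: Callable[[str], bool],
) -> Tuple[List[str], bool]:
    """Update one batch at a time and stop at the first unhealthy batch."""
    updated: List[str] = []
    for batch in rolling_batches(servers, batch_size):
        for server in batch:
            deploy(server)
            updated.append(server)
        if not all(is_healthy(server) for server in batch):
            return updated, False  # halt so the remaining servers keep the old version
    return updated, True
```

Stopping at the first unhealthy batch limits the blast radius: servers that were not yet touched keep serving the previous version while the problem is investigated.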

Q 24. How do you ensure platform compliance with security and regulatory requirements?

Ensuring platform compliance involves a multi-layered approach that combines technical safeguards with robust operational processes.

- Security Audits and Penetration Testing: Regular security audits and penetration testing are crucial to identify vulnerabilities and ensure compliance with relevant standards such as ISO 27001 or SOC 2. Findings are documented and addressed promptly.

- Access Control and Identity Management: We employ strict access control policies, using Identity and Access Management (IAM) systems to manage user permissions and access levels. The principle of least privilege is strictly enforced.

- Data Encryption and Protection: Sensitive data is encrypted both in transit and at rest, following industry best practices. Regular data backups and disaster recovery plans are in place.

- Vulnerability Management: Automated vulnerability scanning tools are used to identify and remediate security vulnerabilities on a regular basis. We use vulnerability databases and follow security advisories.

- Compliance Documentation and Reporting: Detailed documentation of security policies, procedures, and incident response plans is maintained. Regular compliance reports are generated to demonstrate ongoing compliance.

In one instance, we discovered a vulnerability during a penetration test that could have allowed unauthorized access to sensitive data. We immediately remediated the vulnerability and implemented additional security controls to prevent future occurrences, updating our documentation accordingly.

Q 25. Explain your experience with different service mesh technologies.

My experience with service mesh technologies centers around enhancing the communication and management of microservices. Service meshes provide critical functionalities for managing complex deployments.

- Istio: I have extensive experience with Istio, leveraging its features for service discovery, traffic management (including routing and load balancing), security (mTLS, authorization), and observability. Istio allows for fine-grained control over service-to-service communication.

- Linkerd: I’ve worked with Linkerd, appreciating its simplicity and focus on reliability. Its lightweight architecture is well-suited for deployments where minimal overhead is desired. We successfully implemented Linkerd in a project to improve the observability of a critical microservice.

- Consul Connect: I have experience using Consul Connect for service discovery and secure communication. Its integration with the HashiCorp ecosystem makes it a strong option for organizations already using Consul.

The choice between these technologies depends on specific project requirements. Istio provides a rich feature set, but Linkerd’s simplicity might be preferred in certain contexts. Consul Connect excels when integrated with existing HashiCorp infrastructure. Understanding these tradeoffs is crucial for selecting the right service mesh.
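
The traffic management mentioned above often comes down to weighted routing, where a small share of requests goes to a new version. The following is a minimal sketch of that idea in plain Python, not how Istio, Linkerd, or Consul Connect implement it: the `pick_version` function, the version names, and the 90/10 split are assumptions made for illustration.

```python
import hashlib


def pick_version(request_id: str, weights: dict[str, int]) -> str:
    """Deterministically map a request to a version using percentage weights."""
    if sum(weights.values()) != 100:
        raise ValueError("weights must sum to 100")
    # Hash the request id into a stable bucket in the range [0, 100).
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    upper = 0
    for version, weight in weights.items():
        upper += weight
        if bucket < upper:
            return version
    raise AssertionError("unreachable when weights sum to 100")


# Hypothetical split: 90% of traffic stays on v1, 10% goes to v2.
weights = {"v1": 90, "v2": 10}
counts = {"v1": 0, "v2": 0}
for i in range(10_000):
    counts[pick_version(f"req-{i}", weights)] += 1

print(counts)
```

Hashing the request id keeps a given request on the same version across retries. A real mesh expresses the same intent declaratively in its routing configuration.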

Q 26. Describe your experience with different configuration management tools.

Configuration management tools are essential for automating and managing infrastructure and application configurations consistently across different environments. My experience spans several popular tools.

- Ansible: I’ve used Ansible extensively for automating infrastructure provisioning, configuration management, and application deployments. Its agentless architecture and simplicity make it ideal for managing diverse environments.

- Chef: I have experience with Chef, particularly its infrastructure-as-code capabilities. Chef’s robust features are well-suited for managing complex and large-scale deployments.

- Puppet: I’ve worked with Puppet, appreciating its declarative approach to configuration management. Puppet excels in managing complex infrastructure configurations with a focus on compliance and consistency.

- Terraform: While not strictly a configuration management tool, Terraform is essential for managing infrastructure as code. I’ve used it extensively to provision and manage cloud infrastructure.

The selection of a configuration management tool depends on the specific project needs and team expertise. Ansible’s agentless nature is attractive for simplicity, while Chef and Puppet offer more robust features for complex environments. Terraform’s role in defining and managing infrastructure remains critical in any modern deployment pipeline.
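
What these tools share is a declarative, idempotent model: you describe the desired state, the tool works out the difference from the actual state, and a second run changes nothing. The sketch below illustrates that model in plain Python. It does not use the API of Ansible, Chef, Puppet, or Terraform, and the setting names are hypothetical.

```python
def plan_changes(desired: dict[str, str], actual: dict[str, str]) -> dict[str, dict[str, str]]:
    """Compare desired and actual settings and report what must change."""
    return {
        # Keys that are missing or hold a different value.
        "set": {k: v for k, v in desired.items() if actual.get(k) != v},
        # Keys that exist today but are not part of the desired state.
        "remove": {k: v for k, v in actual.items() if k not in desired},
    }


def apply_changes(actual: dict[str, str], plan: dict[str, dict[str, str]]) -> dict[str, str]:
    """Return a new state with the plan applied; the input is left untouched."""
    updated = dict(actual)
    updated.update(plan["set"])
    for key in plan["remove"]:
        updated.pop(key, None)
    return updated


# Hypothetical settings for one service.
desired = {"max_connections": "200", "log_level": "info"}
actual = {"max_connections": "100", "debug": "true"}

first_plan = plan_changes(desired, actual)
converged = apply_changes(actual, first_plan)
second_plan = plan_changes(desired, converged)  # nothing left to do

print(first_plan)
print(second_plan)
```

The empty second plan is the idempotency property: rerunning the same configuration against a converged system is safe.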

Q 27. What is your experience with implementing and managing API gateways?

API gateways act as a central point of entry for all API requests, providing essential functionalities for managing, securing, and monitoring APIs.

- Kong: I’ve extensively used Kong, a highly scalable and versatile API gateway. Its plugin architecture allows for easy customization and extension of its functionalities, addressing various needs such as authentication, rate limiting, and request transformation.

- Apigee: I have experience with Apigee, a comprehensive API management platform offering advanced features for analytics, security, and developer portals. Its enterprise-grade capabilities make it ideal for large-scale deployments.

- Amazon API Gateway: I’ve leveraged Amazon API Gateway for managing APIs deployed on AWS. Its seamless integration with other AWS services makes it a convenient choice for cloud-native architectures.

Implementing and managing API gateways involves careful planning around security, scalability, and performance. Choosing the right gateway means evaluating its feature set and how well it integrates with existing infrastructure. In one project, implementing Kong significantly improved the security and performance of our API ecosystem.
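
Rate limiting, mentioned above as a typical gateway function, is worth being able to explain at the algorithm level. The sketch below shows a token bucket, one common approach. It is not a description of how Kong, Apigee, or Amazon API Gateway implement their limits; the `TokenBucket` class and its parameters are hypothetical names chosen for this example.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts up to `capacity` and a sustained `rate` of requests per second."""

    def __init__(self, rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock  # injectable so the behavior can be tested without sleeping
        self.tokens = float(capacity)
        self.updated_at = clock()

    def allow(self) -> bool:
        """Refill based on elapsed time, then spend one token if available."""
        now = self.clock()
        elapsed = now - self.updated_at
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated_at = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Simulated clock: a burst of three requests, then one more a second later.
fake_now = [0.0]
bucket = TokenBucket(rate=1.0, capacity=2, clock=lambda: fake_now[0])
burst = [bucket.allow() for _ in range(3)]
fake_now[0] += 1.0
after_wait = bucket.allow()

print(burst, after_wait)
```

In a gateway, one bucket would typically be kept per API key or client address, with rejected requests answered by HTTP 429.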

Q 28. How do you stay up-to-date with the latest technologies in platform operations?

Staying current in the rapidly evolving field of platform operations requires a multi-pronged approach.

- Industry Conferences and Events: Attending conferences like KubeCon, AWS re:Invent, and DockerCon provides valuable insights into the latest technologies and best practices.

- Online Courses and Tutorials: Platforms like Coursera, Udemy, and A Cloud Guru offer excellent courses on cloud computing, DevOps, and related topics. These provide structured learning opportunities.

- Technical Blogs and Publications: Following industry blogs and publications, such as those from CNCF (Cloud Native Computing Foundation), keeps me abreast of the latest developments and trends.

- Open Source Contributions: Contributing to open-source projects provides hands-on experience with the latest technologies and fosters collaboration with other experts.

- Community Engagement: Actively participating in online communities and forums allows for knowledge sharing and problem-solving with peers.

Continuous learning is not just a job requirement; it’s a passion. Engaging with the community and experimenting with new technologies keeps me excited and ensures I can apply the best solutions to the challenges we face.

Key Topics to Learn for Platform Operation Interview

- Platform Architecture: Understanding the underlying infrastructure, including hardware, software, and network components. Consider exploring different architectural patterns and their trade-offs.

- Monitoring and Alerting: Practical application of monitoring tools to identify and resolve performance issues. Focus on proactive monitoring strategies and effective alert management to minimize downtime.

- Incident Management: Mastering incident response methodologies, including root cause analysis, and effective communication during critical situations. Practice applying incident management frameworks.

- Capacity Planning and Scaling: Theoretical concepts of capacity planning and practical application of scaling techniques to handle fluctuating workloads. Explore different scaling strategies (vertical, horizontal).

- Automation and Scripting: Demonstrate proficiency in automating routine tasks and troubleshooting using scripting languages. Highlight experience with relevant tools and technologies.

- Security Best Practices: Understanding and applying security principles relevant to platform operation, including access control, vulnerability management, and incident response procedures.

- High Availability and Disaster Recovery: Theoretical understanding of HA and DR strategies and their practical implementation to ensure business continuity. Explore different recovery strategies and their implications.

- Performance Optimization: Practical techniques for identifying and resolving performance bottlenecks. Demonstrate an understanding of performance metrics and optimization strategies.

- Cloud Technologies (if applicable): If relevant to the role, showcase your understanding of cloud platforms (AWS, Azure, GCP) and their services related to platform operations.
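
For the capacity planning and scaling topic, it helps to be able to work through a horizontal scaling calculation. The sketch below uses the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current replicas × current metric / target metric). The function name, the replica bounds, and the sample numbers are assumptions for illustration.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Scale replica count in proportion to how far the metric is from its target."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds so a metric spike cannot scale without limit.
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas averaging 90% CPU against a 60% target: scale out.
scale_out = desired_replicas(4, 90.0, 60.0)
# 4 replicas averaging 30% CPU against a 60% target: scale in.
scale_in = desired_replicas(4, 30.0, 60.0)
# 8 replicas at 95% against a 50% target would need 16, capped at max_replicas.
capped = desired_replicas(8, 95.0, 50.0)

print(scale_out, scale_in, capped)
```

Vertical scaling changes the size of each instance instead; the same utilization figures can inform that decision, but it usually requires a restart.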

Next Steps

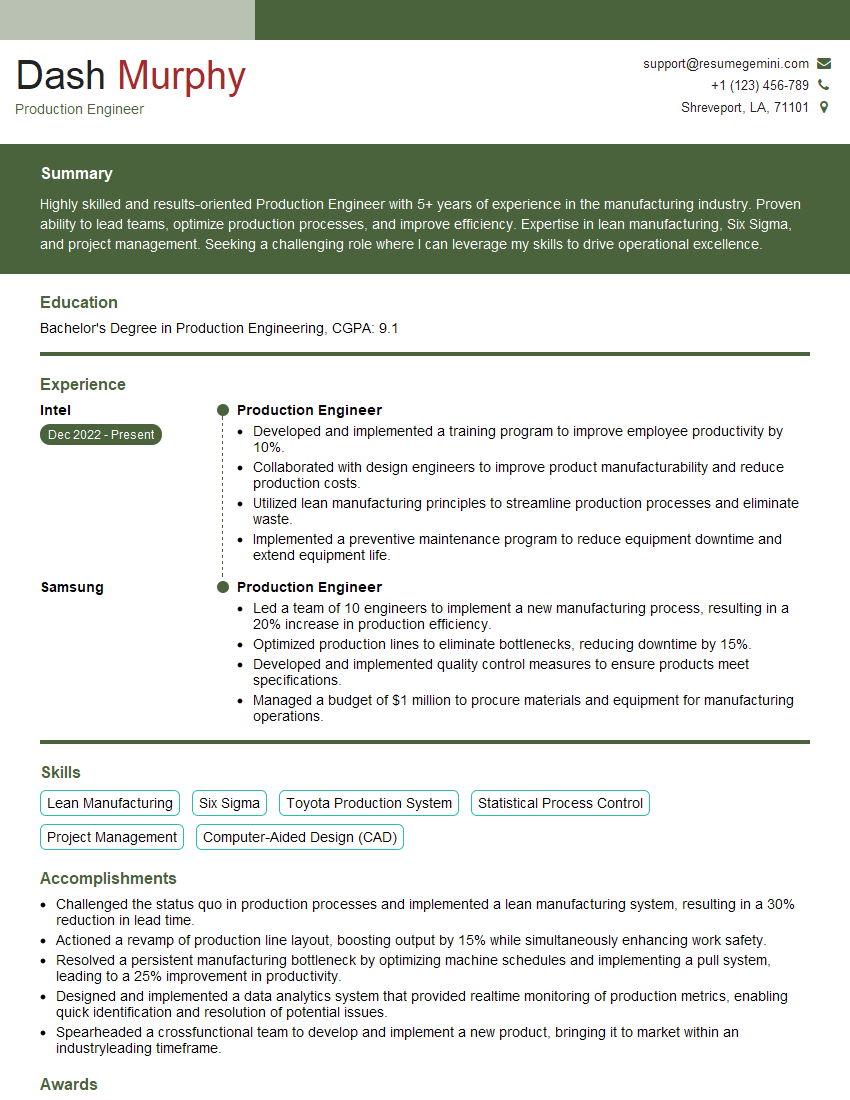

Mastering Platform Operation is crucial for a thriving career in technology, opening doors to leadership roles and high-impact projects. A strong resume is your key to unlocking these opportunities. Create an ATS-friendly resume that highlights your skills and experience effectively. Use ResumeGemini to build a professional and impactful resume tailored to your specific experience. Examples of resumes tailored to Platform Operation roles are available within ResumeGemini to help guide you.
