Preparation is the key to success in any interview. In this post, we’ll explore crucial Salesforce or SAP interview questions and equip you with strategies to craft impactful answers. Whether you’re a beginner or a pro, these tips will elevate your preparation.

Questions Asked in Salesforce or SAP Interview

Q 1. Explain the difference between a trigger and a workflow rule in Salesforce.

Both triggers and workflow rules automate actions in Salesforce, but they differ significantly in their capabilities and execution.

Workflow Rules: These are simpler, point-and-click tools primarily used for automating tasks like sending email alerts, updating fields, or creating tasks based on record changes. They operate on a single record at a time and are less powerful than triggers. Think of them as simple ‘if-then’ statements that react to record changes.

Example: A workflow rule could automatically send an email alert to a sales manager when a new lead is created with a high revenue potential.

Triggers: Triggers are more powerful, code-driven automation tools written in Apex. They are executed before or after records are inserted, updated, or deleted. They can process multiple records simultaneously and perform complex operations, including data validation, bulk updates, and calculations. Triggers provide much greater flexibility and control over automation.

Example: A trigger could automatically update the account’s total revenue whenever a new opportunity is closed-won. It could also perform more complex calculations or validations across related records.

In short: Workflow rules are easy to set up for simple tasks, while triggers are essential for complex automation requiring custom logic and bulk operations. They are not mutually exclusive; in many implementations, workflow rules handle simpler actions, while triggers address more demanding automation needs. It is also worth mentioning that Salesforce now steers new declarative automation toward Flow, so workflow rules are mostly encountered in existing orgs.
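
To make the trigger example concrete, here is a minimal sketch of the roll-up logic, written in plain Python rather than Apex so it can be run anywhere. The record shape (dicts with `account_id`, `stage`, `amount`) and the function name are assumptions for illustration; a real trigger would receive the batch through `Trigger.new` and write the totals back in a single update.

```python
from collections import defaultdict

def closed_won_totals(opportunities):
    """Sum closed-won amounts per account for a whole batch of records."""
    totals = defaultdict(float)
    for opp in opportunities:
        if opp["stage"] == "Closed Won":
            totals[opp["account_id"]] += opp["amount"]
    return dict(totals)

# Hypothetical batch, as a trigger might receive it in one invocation.
batch = [
    {"account_id": "A1", "stage": "Closed Won", "amount": 1000.0},
    {"account_id": "A1", "stage": "Prospecting", "amount": 500.0},
    {"account_id": "A2", "stage": "Closed Won", "amount": 250.0},
    {"account_id": "A1", "stage": "Closed Won", "amount": 750.0},
]
print(closed_won_totals(batch))  # {'A1': 1750.0, 'A2': 250.0}
```

The point to bring out in an interview is that the logic handles the whole batch in one pass instead of assuming a single record.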

Q 2. Describe your experience with Apex and Visualforce.

I have extensive experience developing custom Salesforce applications using Apex and Visualforce. Apex is Salesforce’s proprietary programming language, similar to Java, used for building complex business logic and integrations. Visualforce is a framework for creating custom user interfaces in Salesforce, similar to JSP in Java.

I’ve used Apex to build triggers, batch processes (for handling large datasets efficiently), and custom controllers for Visualforce pages. For instance, I developed an Apex trigger to enforce business rules during record creation, preventing inaccurate data entry. This trigger automatically populated certain fields based on values in other fields, ensuring data consistency across the system.

My Visualforce experience includes building custom dashboards, reports, and data entry forms with improved user experience compared to standard Salesforce pages. I leveraged Visualforce to create a customized data entry form for a client, reducing data entry time by approximately 30% by simplifying the form and using visual cues. I’ve also used Visualforce to create highly customized interfaces for specific business processes.

My combined experience with Apex and Visualforce has helped me build robust and efficient solutions that significantly improve productivity and reporting capabilities within Salesforce.

Q 3. How do you handle data security in Salesforce?

Data security is paramount in Salesforce. My approach is multi-faceted and involves leveraging various Salesforce security features:

Profile and Permission Sets: I carefully define profiles and permission sets to grant users only the necessary access to specific data and functionality. This is the foundation of granular security.

Sharing Rules: Sharing rules extend the base-level sharing model (private, public read-only, public read/write) to define more fine-grained access controls between records and users, groups, or roles. This allows, for example, sharing account information between team members automatically.

Organization-Wide Defaults (OWDs): OWDs determine the base-level access that all users have to different types of records.

Apex Security: When building custom Apex code, I strictly adhere to security best practices, such as avoiding hard-coded credentials, declaring classes with sharing so that record-level access is respected, validating input, and enforcing object- and field-level permissions before reading or writing data (e.g., checking Schema.sObjectType.Account.isAccessible() or adding WITH SECURITY_ENFORCED to a SOQL query).

Data Masking and Encryption: For sensitive data, I utilize Salesforce’s data masking and encryption features where appropriate to ensure confidentiality and privacy compliance. This involves masking or encrypting fields containing sensitive information, such as credit card details.

Regular Security Reviews: I advocate for regular security reviews and audits to identify and address potential vulnerabilities. This includes evaluating user access, sharing rules, and Apex code for any security gaps.

My strategy focuses on minimizing the surface area of exposure, applying the principle of least privilege, and continuously monitoring and improving security measures to adapt to evolving threats.
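
The least-privilege idea can be sketched in a few lines. This is a simplified Python model, not Salesforce code: the permission tuples and the names `minimal_profile` and `case_agent` are hypothetical. It relies on one fact about the platform, namely that permission sets are additive and can grant access on top of a profile but never take it away.

```python
def effective_permissions(profile, permission_sets):
    """Union the profile with every assigned permission set (grants only)."""
    granted = set(profile)
    for perms in permission_sets:
        granted |= set(perms)
    return granted

def can(user_perms, obj, action):
    """Check a single object/action pair against the effective permissions."""
    return (obj, action) in user_perms

# Hypothetical setup: a minimal profile plus one task-specific permission set.
minimal_profile = {("Account", "read"), ("Contact", "read")}
case_agent = {("Case", "read"), ("Case", "edit")}

perms = effective_permissions(minimal_profile, [case_agent])
print(can(perms, "Case", "edit"))       # True
print(can(perms, "Account", "delete"))  # False
```

Starting from a minimal profile and layering permission sets keeps each grant explicit and easy to review.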

Q 4. What are the different types of sharing rules in Salesforce?

Strictly speaking, Salesforce sharing rules come in two main types, and both open up access beyond the organization-wide defaults:

Owner-based sharing rules: These share records owned by particular users (members of a public group, a role, or a role and its subordinates) with other groups or roles. For example, records owned by one regional sales role can be shared with the matching support role.

Criteria-based sharing rules: These share records based on field values rather than on who owns them. They allow more flexible sharing based on specific business requirements. For instance, a sharing rule could grant access to all opportunities with a specific stage.

Two related mechanisms are often mentioned in the same answer, although they are not sharing rules themselves:

Role hierarchy: This grants access based on the user’s position within the hierarchy (e.g., a manager automatically having access to their subordinates’ data).

Manual sharing: This lets a record owner or administrator grant access to a specific record to specific users or groups.

Together, these mechanisms create a layered yet controlled access system, ensuring that data is accessible to those who need it while remaining secure.
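
The difference between sharing by owner and sharing by criteria is easy to show with a toy model. The sketch below is plain Python with hypothetical record fields and role names; it only illustrates which records each kind of rule would select, not how Salesforce evaluates sharing internally.

```python
from dataclasses import dataclass

@dataclass
class Opportunity:
    name: str
    owner_role: str
    stage: str

def owner_based_rule(record, owner_role):
    """Select records owned by users in a given role."""
    return record.owner_role == owner_role

def criteria_based_rule(record, stage):
    """Select records whose field value matches, regardless of owner."""
    return record.stage == stage

records = [
    Opportunity("Deal A", "EMEA Sales", "Negotiation"),
    Opportunity("Deal B", "APAC Sales", "Negotiation"),
    Opportunity("Deal C", "EMEA Sales", "Prospecting"),
]

shared_by_owner = [r.name for r in records if owner_based_rule(r, "EMEA Sales")]
shared_by_stage = [r.name for r in records if criteria_based_rule(r, "Negotiation")]
print(shared_by_owner)  # ['Deal A', 'Deal C']
print(shared_by_stage)  # ['Deal A', 'Deal B']
```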

Q 5. Explain your understanding of Salesforce governor limits.

Salesforce governor limits are restrictions on the amount of resources a single transaction or process can consume. These limits exist to prevent a single process from monopolizing resources and impacting the stability and performance of the platform for other users. Understanding these limits is crucial for developing robust and scalable applications.

Key governor limits include:

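SOQL Queries: Limits on the number of SOQL queries within a transaction (100 in synchronous Apex, 200 in asynchronous Apex).

DML Operations (Insert, Update, Delete): Limits on the number of DML statements per transaction (150).

CPU Time: Limits on the amount of CPU time a transaction can consume (10 seconds synchronous, 60 seconds asynchronous).

Heap Size: Limits on the amount of memory allocated to a transaction (6 MB synchronous, 12 MB asynchronous).

Asynchronous Apex: Limits on how many asynchronous jobs can be queued or run, such as 50 Queueable jobs enqueued from one synchronous transaction and 5 Batch Apex jobs processing concurrently.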

Exceeding these limits will result in an error, preventing the transaction from completing. Effective Apex coding requires careful planning and optimization to stay within these limits, often by utilizing techniques such as bulkification, efficient query design (using subqueries, aggregations), and asynchronous processing (using Queueable, Batchable interfaces) for large datasets.

Ignoring governor limits can result in unpredictable errors and performance issues. Hence, developers should always account for these limits in their code.
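
Bulkification is easier to explain with a counter in hand. The following is a rough Python simulation, not Apex: `FakeDatabase` and its default limit of 100 are stand-ins for the synchronous SOQL query limit, and all names are hypothetical. It contrasts one query per record with one query per batch for 200 records, the size of a typical trigger batch.

```python
class QueryLimitExceeded(Exception):
    pass

class FakeDatabase:
    """Counts queries and fails once a per-transaction limit is passed."""
    def __init__(self, contacts, query_limit=100):
        self.contacts = contacts
        self.query_limit = query_limit
        self.queries = 0

    def query_contacts(self, account_ids):
        self.queries += 1
        if self.queries > self.query_limit:
            raise QueryLimitExceeded(f"Too many queries: {self.queries}")
        wanted = set(account_ids)
        return [c for c in self.contacts if c["account_id"] in wanted]

def count_contacts_in_loop(db, account_ids):
    # Anti-pattern: one query per record.
    return {a: len(db.query_contacts([a])) for a in account_ids}

def count_contacts_bulkified(db, account_ids):
    # Bulkified: one query for the whole batch, grouped in memory.
    counts = {a: 0 for a in account_ids}
    for c in db.query_contacts(account_ids):
        counts[c["account_id"]] += 1
    return counts

account_ids = [f"A{i}" for i in range(200)]
contacts = [{"account_id": a} for a in account_ids]

db = FakeDatabase(contacts)
print(count_contacts_bulkified(db, account_ids)["A0"], db.queries)  # 1 1

db = FakeDatabase(contacts)
try:
    count_contacts_in_loop(db, account_ids)
except QueryLimitExceeded as exc:
    print(exc)  # Too many queries: 101
```

The looped version fails on the 101st record, while the bulkified version uses a single query no matter how large the batch is.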

Q 6. How do you troubleshoot performance issues in Salesforce?

Troubleshooting performance issues in Salesforce requires a systematic approach. My process typically involves:

Identifying the Problem: Start by clearly defining the performance issue. Is it slow page load times, long report generation, or inefficient Apex code?

Using Salesforce Monitoring Tools: Leverage Salesforce’s built-in tools like the Developer Console to analyze query performance, identify slow queries, and pinpoint bottlenecks in Apex code. The Salesforce Debug Log is invaluable for tracing the execution flow and resource usage.

Analyzing Logs: Carefully examine the debug logs for clues such as excessive SOQL queries, inefficient DML operations, or long-running processes. Look for patterns and areas of high resource consumption.

Optimizing Queries: Review SOQL queries for unnecessary data retrieval or inefficient filtering. Filter on indexed fields where possible, and select only the fields you need rather than pulling every field (SOQL has no SELECT *; the closest equivalent is FIELDS(ALL)).

Bulkifying Apex Code: If performance problems are related to Apex, optimize the code to process multiple records simultaneously. Avoid issuing SOQL queries or DML statements inside a loop.

Asynchronous Processing: For long-running processes, consider using asynchronous Apex (Queueable or Batchable) so the work runs outside the user’s synchronous transaction and under the higher asynchronous limits.

Reviewing Custom Code: Thoroughly check for inefficient algorithms or unnecessary computations within your custom code. Test extensively.

Profiling: If necessary, use the Developer Console’s Log Inspector views (such as the Execution Overview and its Timeline tab) to dive into granular code execution details and pinpoint the most CPU-intensive parts.

Addressing performance issues requires a deep understanding of the Salesforce platform and its limitations. A systematic approach, combined with detailed analysis and effective optimization techniques, is key to delivering efficient and scalable Salesforce solutions.

Q 7. Describe your experience with Salesforce integrations.

I have extensive experience with Salesforce integrations using various methods, including:

REST APIs: I’ve used REST APIs to integrate Salesforce with various external systems, including ERP systems, marketing automation platforms, and custom applications. This involves building custom Apex classes to handle API calls and data transformations.

SOAP APIs: While less common now, I’m also familiar with SOAP APIs for integrating with legacy systems or systems that require this particular communication protocol.

Outbound Messaging: I’ve used outbound messaging to trigger events in external systems based on changes in Salesforce data. This is useful for real-time integration scenarios, allowing for seamless data synchronization.

Data Loader and other ETL Tools: I’ve used data loaders and ETL (Extract, Transform, Load) tools for bulk data migration and synchronization between Salesforce and other systems. For example, migrating data from an older system into a new Salesforce org.

Third-Party Integration Tools: I have experience integrating with various third-party tools such as MuleSoft, Informatica Cloud, and Jitterbit for more complex integration requirements involving message queues, transformations, and orchestration.

My approach prioritizes choosing the right integration method based on the specific needs of the integration, taking factors such as real-time requirements, data volume, and complexity into account. Security is always a key consideration, and I ensure all integrations adhere to best practices for secure data exchange.

Q 8. What are the benefits of using Salesforce Lightning?

Salesforce Lightning is a modern, user-friendly interface that significantly enhances the Salesforce experience. Think of it as a complete overhaul of the classic Salesforce interface, offering a more intuitive and efficient way to work. Its benefits stem from improved productivity, enhanced collaboration, and a more engaging user experience.

- Improved User Experience: Lightning’s responsive design adapts seamlessly to various devices (desktops, tablets, smartphones), making it accessible and efficient regardless of the platform. The interface is cleaner, more visually appealing, and easier to navigate than the classic interface.

- Increased Productivity: Features like the Lightning App Builder allow for quick customization of the interface. You can create highly personalized dashboards and apps tailored to specific user roles and responsibilities, dramatically reducing time spent searching for information. Pre-built components and drag-and-drop functionality further simplify the process. For instance, a sales rep can quickly access all their key accounts and opportunities on a single dashboard.

- Enhanced Collaboration: Lightning Experience facilitates better teamwork through features like Chatter (Salesforce’s internal communication tool), allowing for real-time updates, discussions, and file sharing. This streamlined communication improves team coordination and project completion.

- Mobile-First Approach: The design prioritizes mobile accessibility, allowing users to manage their Salesforce data on the go. This is crucial for sales representatives or field service technicians who need constant access to their data.

For example, in a previous role, migrating a team from the Classic interface to Lightning resulted in a 20% increase in daily task completion due to improved navigation and streamlined workflows.

Q 9. Explain your experience with different Salesforce clouds (Sales, Service, Marketing, etc.).

My experience encompasses several Salesforce clouds, providing a holistic view of the platform’s capabilities. I’ve worked extensively with:

- Sales Cloud: I’ve built and customized sales processes using Sales Cloud, leveraging features like Opportunity Management, Lead Management, and Account Management. I’ve implemented custom workflows and automated processes to optimize sales cycles and improve forecasting accuracy. For example, I implemented a lead scoring system that prioritized high-potential leads, resulting in a 15% increase in sales conversion rates.

- Service Cloud: My expertise extends to designing and implementing customer service solutions using Service Cloud. I’ve worked with case management, knowledge bases, and community portals to enhance customer support. I’ve used features like Omni-Channel routing to distribute cases effectively among agents, minimizing customer wait times. In one project, I integrated Service Cloud with a chatbot, reducing agent workload by 20%.

- Marketing Cloud: I have experience crafting and executing marketing campaigns using Marketing Cloud. I’ve managed email marketing, created automated journeys, and integrated Marketing Cloud with other Salesforce clouds to create a unified customer view. This allowed for more targeted and personalized marketing communications, improving engagement rates.

- Community Cloud (now called Experience Cloud): I’ve developed and managed customer communities, providing a platform for users to interact, share knowledge, and solve problems independently. This reduces the workload on support teams and fosters a strong sense of community among users.

This broad experience allows me to seamlessly integrate various clouds to create comprehensive solutions that address clients’ business needs.

Q 10. How do you manage data migrations in Salesforce?

Data migration in Salesforce is a critical process requiring careful planning and execution. The approach depends on the source and target systems, data volume, and complexity. My strategy generally involves these steps:

- Data Assessment: Thoroughly analyze the source data to identify data quality issues, inconsistencies, and data transformations required. This often involves data profiling and cleansing.

- Data Mapping: Define the mapping between the source and target Salesforce objects and fields. This is crucial to ensure data integrity after the migration.

- Data Transformation: Implement necessary data cleansing and transformation rules to address data quality issues and format the data appropriately for the target system. This may involve using ETL (Extract, Transform, Load) tools.

- Migration Strategy Selection: Choose the appropriate migration method based on factors like data volume and downtime tolerance. Options include the Data Import Wizard for smaller loads, Data Loader (formerly called Apex Data Loader) for larger ones, or third-party migration tools.

- Testing and Validation: Thoroughly test the migrated data to ensure accuracy and completeness. This involves comparing the source and target data to identify any discrepancies.

- Deployment and Rollback Plan: Deploy the migrated data to the target system, ensuring a rollback plan is in place in case of issues.

- Post-Migration Monitoring: Monitor the system after migration to detect any issues and ensure data integrity.

I’ve used various tools, including Data Loader and Informatica, depending on project requirements. For example, in a recent project involving a large-scale data migration, we used Informatica to handle the massive data volume and ensure a smooth and efficient process.
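
The mapping, transformation, and validation steps can be sketched as follows. This is a minimal Python illustration under stated assumptions: the source column names (`cust_name`, `phone_no`, `country`) are hypothetical, the targets are standard Account fields, and the single validation rule stands in for whatever rules the target org enforces.

```python
# Hypothetical source-to-target field mapping.
FIELD_MAP = {"cust_name": "Name", "phone_no": "Phone", "country": "BillingCountry"}

def transform(source_row):
    """Rename fields per the mapping and trim whitespace; missing values become ''."""
    target = {}
    for src, dst in FIELD_MAP.items():
        target[dst] = (source_row.get(src) or "").strip()
    return target

def validate(target_row):
    """Return a list of problems; an empty list means the row is loadable."""
    problems = []
    if not target_row["Name"]:
        problems.append("Name is required")
    return problems

source = [
    {"cust_name": " Acme Corp ", "phone_no": "555-0100", "country": "US"},
    {"cust_name": "", "phone_no": None, "country": "DE"},
]

loadable, rejected = [], []
for row in source:
    out = transform(row)
    (rejected if validate(out) else loadable).append(out)

print(len(loadable), len(rejected))  # 1 1
```

Rejected rows go back to the data owners for cleansing instead of failing halfway through the load.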

Q 11. What is your experience with SOQL and SOSL?

SOQL (Salesforce Object Query Language) and SOSL (Salesforce Object Search Language) are crucial for retrieving data within Salesforce. SOQL is used for querying data from specific objects, while SOSL allows for searching across multiple objects.

- SOQL: Think of SOQL as SQL for Salesforce. It’s used to retrieve records based on specific criteria. For example, SELECT Name, Email FROM Contact WHERE AccountId = '001...' retrieves the Name and Email of all Contacts associated with a particular Account.

- SOSL: SOSL is broader, enabling you to search across multiple objects simultaneously using keywords. For example, FIND {John Doe} RETURNING Contact(Name), Account(Name) searches for ‘John Doe’ across Contact and Account objects, returning relevant Names.

I have extensive experience with both languages and have used them to build custom reports, dashboards, and integrations. I’m adept at optimizing queries for performance and understanding complex data relationships.
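
When SOQL is sent from an external system, the query is typically passed to the REST API’s query resource as a URL parameter. The sketch below builds such a URL in Python without making any network call. The instance URL and API version are placeholders, and `soql_quote` is a hypothetical helper that escapes backslashes and single quotes so user-supplied values cannot break out of the string literal.

```python
from urllib.parse import urlencode

def soql_quote(value):
    """Escape backslashes and single quotes for use inside a SOQL string literal."""
    return "'" + value.replace("\\", "\\\\").replace("'", "\\'") + "'"

def query_url(instance_url, api_version, soql):
    """Build the REST query URL; urlencode handles spaces and special characters."""
    return f"{instance_url}/services/data/{api_version}/query?{urlencode({'q': soql})}"

# Placeholder values for illustration only.
INSTANCE_URL = "https://example.my.salesforce.com"
API_VERSION = "v59.0"

soql = "SELECT Name, Email FROM Contact WHERE LastName = " + soql_quote("O'Brien")
url = query_url(INSTANCE_URL, API_VERSION, soql)

print(soql)  # SELECT Name, Email FROM Contact WHERE LastName = 'O\'Brien'
print(url)
```

Inside Apex, bind variables (for example `:lastName`) are the usual way to achieve the same protection.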

Q 12. Explain your understanding of data modeling in Salesforce.

Data modeling in Salesforce involves designing the structure of your data, defining objects, fields, and their relationships. It’s crucial for ensuring data integrity, scalability, and efficient data retrieval. Effective data modeling involves understanding business requirements and translating them into a logical and physical data model.

- Object Definition: Choosing the appropriate objects to represent key business entities, such as Accounts, Contacts, Opportunities, and custom objects.

- Field Design: Defining the fields within each object, ensuring data types are appropriate and data is consistently captured.

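- Relationship Definition: Establishing relationships between objects, such as master-detail or lookup relationships. For instance, in a master-detail relationship, deleting the master record also deletes its detail records, and the detail records inherit ownership and sharing from the master. A lookup relationship is looser: the related records remain independent by default. Note that the standard Account-Contact relationship is a lookup, not a master-detail.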

- Data Governance: Implementing data validation rules, workflow rules, and other mechanisms to ensure data quality and consistency.

A well-designed data model is vital for a healthy Salesforce org. Poor data modeling can lead to performance issues, data inconsistencies, and difficulty in reporting and analysis. For example, in a previous project, we redesigned the data model, resulting in a 30% improvement in report generation time and enhanced data accuracy.
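
The practical difference between the two relationship types shows up on delete. Here is a toy Python model with hypothetical record IDs. It assumes the default behavior for an optional lookup field, which is to clear the reference when the parent is deleted; it is not a description of how the platform stores relationships.

```python
class Org:
    """Tiny in-memory store that models delete behavior for related records."""
    def __init__(self):
        self.records = {}  # record id -> {"parent_id", "relationship"}

    def insert(self, rec_id, parent_id=None, relationship=None):
        self.records[rec_id] = {"parent_id": parent_id, "relationship": relationship}

    def delete(self, rec_id):
        del self.records[rec_id]
        for child_id, child in list(self.records.items()):
            if child["parent_id"] != rec_id:
                continue
            if child["relationship"] == "master-detail":
                self.delete(child_id)       # detail records are deleted with the master
            else:
                child["parent_id"] = None   # lookup: the reference is cleared

org = Org()
org.insert("invoice-1")
org.insert("line-1", parent_id="invoice-1", relationship="master-detail")
org.insert("note-1", parent_id="invoice-1", relationship="lookup")

org.delete("invoice-1")
print(sorted(org.records))                # ['note-1']
print(org.records["note-1"]["parent_id"]) # None
```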

Q 13. Describe your experience with different Salesforce development methodologies (Agile, Waterfall, etc.).

I have experience with both Agile and Waterfall methodologies in Salesforce development. The choice depends on project complexity, client requirements, and team dynamics.

- Agile: I often prefer Agile for its iterative approach, allowing for flexibility and quick adjustments based on feedback. This methodology is ideal for projects with evolving requirements or where rapid prototyping is beneficial. We typically work in Scrum sprints and track the backlog in tools like Jira.

- Waterfall: Waterfall is suitable for projects with clearly defined requirements and minimal anticipated changes. It offers a more structured approach with distinct phases. While less flexible, it can be effective for projects where a comprehensive plan is needed upfront.

In practice, I frequently blend aspects of both approaches, adopting a hybrid model that balances the structure of Waterfall with the flexibility of Agile. This allows us to adapt to changing priorities while maintaining a well-organized development process.

Q 14. Explain the concept of object-oriented programming in the context of Salesforce.

Object-oriented programming (OOP) is a programming paradigm that organizes software design around data, or objects, rather than functions and logic. This approach is central to Salesforce development using Apex.

- Objects: Objects represent real-world entities, like Accounts or Contacts. They encapsulate data (fields) and behavior (methods).

- Classes: Classes are blueprints for creating objects. They define the fields and methods an object will have.

- Methods: Methods are functions that operate on the object’s data.

- Inheritance: Allows creating new classes (child classes) based on existing classes (parent classes), inheriting their properties and methods.

- Polymorphism: Enables objects of different classes to respond to the same method call in their own specific way.

- Encapsulation: Hides internal details of an object, protecting data integrity and simplifying interaction.

OOP principles in Apex lead to modular, reusable, and maintainable code. For instance, creating a custom class for handling lead processing allows for cleaner and more organized code, easier debugging, and simpler future modifications. This contrasts with procedural programming, which can lead to more complex and less scalable applications.
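
The lead-processing example can be sketched to show inheritance, polymorphism, and encapsulation together. The code is Python rather than Apex, and the class names and scoring rules are invented for illustration; Apex expresses the same ideas with `abstract` or `virtual` classes and `override` methods.

```python
from abc import ABC, abstractmethod

class LeadProcessor(ABC):
    """Base class: shared behavior lives here, scoring is left to subclasses."""
    def __init__(self):
        self._processed = 0  # encapsulated: changed only through process()

    @property
    def processed(self):
        return self._processed

    def process(self, lead):
        self._processed += 1
        return self.score(lead)

    @abstractmethod
    def score(self, lead):
        ...

class WebLeadProcessor(LeadProcessor):      # inheritance
    def score(self, lead):
        return 10 if lead.get("email") else 0

class ReferralLeadProcessor(LeadProcessor):
    def score(self, lead):
        return 50

lead = {"email": "jane@example.com"}
processors = [WebLeadProcessor(), ReferralLeadProcessor()]

# Polymorphism: the same call produces class-specific results.
print([p.process(lead) for p in processors])  # [10, 50]
print(processors[0].processed)                # 1
```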

Q 15. What are your experiences with different testing methodologies in Salesforce?

My experience with Salesforce testing methodologies encompasses a wide range, from unit testing to end-to-end system testing. I’m proficient in various approaches, adapting my strategy to the specific needs of the project.

- Unit Testing: I leverage Apex unit tests to ensure individual components, like triggers and controllers, function correctly in isolation. For example, I’ve written unit tests to verify that a custom trigger accurately updates related records after a specific field change.

- Integration Testing: This involves testing the interaction between different Salesforce components, such as custom objects, Apex classes, and Visualforce pages. A recent project required integration testing to confirm seamless data flow between our custom application and Salesforce’s standard features like Accounts and Contacts.

- System Testing: This broad approach ensures the entire Salesforce system meets requirements. This often includes testing data migration, user acceptance testing (UAT), and performance testing to identify bottlenecks under realistic load. In one project, we used JMeter to simulate high user traffic and identify performance issues before launch.

- Regression Testing: After making code changes or deployments, regression testing is crucial to ensure existing functionalities remain unaffected. I utilize automated testing tools and frameworks, alongside manual checks, to maintain system stability.

Furthermore, I’m familiar with browser automation frameworks such as Selenium for UI and end-to-end testing of Salesforce pages, and I have worked with Protractor on older projects.
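
The shape of the unit test described above (change a field, then assert that related records were updated) is shown in this small sketch. It uses Python’s `unittest` as a stand-in; in Apex the equivalent would be an `@isTest` class with `System.assertEquals` calls. The function and field names are hypothetical.

```python
import unittest

def sync_contact_city(account, contacts):
    """Copy the account's city to each related contact; return how many changed."""
    changed = 0
    for c in contacts:
        if c["account_id"] == account["id"] and c.get("city") != account["city"]:
            c["city"] = account["city"]
            changed += 1
    return changed

class SyncContactCityTest(unittest.TestCase):
    def setUp(self):
        # Each test builds its own data instead of relying on existing records.
        self.account = {"id": "A1", "city": "Berlin"}
        self.contacts = [
            {"account_id": "A1", "city": "Munich"},
            {"account_id": "A1", "city": "Berlin"},
            {"account_id": "A2", "city": "Paris"},
        ]

    def test_updates_only_related_contacts(self):
        changed = sync_contact_city(self.account, self.contacts)
        self.assertEqual(changed, 1)
        self.assertEqual(self.contacts[0]["city"], "Berlin")
        self.assertEqual(self.contacts[2]["city"], "Paris")

suite = unittest.defaultTestLoader.loadTestsFromTestCase(SyncContactCityTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
print(result.wasSuccessful())
```

The test asserts both the positive case (the related contact changed) and the negative case (the unrelated contact did not), which is what interviewers usually look for.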

Q 16. How familiar are you with the different ABAP data types?

ABAP data types are fundamental to ABAP programming, determining how data is stored and processed. Understanding them is critical for writing efficient and reliable code.

- Elementary Data Types: These are the building blocks, including c (character), n (numeric text), i (integer), p (packed number), f (floating point), and d (date).

- String Types: string is a variable-length string, while c defines a fixed-length character field. I often use string for flexibility, especially when dealing with user input.

- Numeric Types: i, p, and f offer varying precision and storage space. Choosing the right type depends on the range and precision needed. For example, p is well suited to monetary values because it stores decimal digits exactly.

- Reference Types: Data references (REF TO data) and object references (REF TO object) point to data objects or class instances. The generic types data and any are used to type parameters and field symbols that must accept any data object.

- Internal Tables: These are crucial for handling collections of data, similar to arrays or lists in other programming languages. They can be defined with various table kinds, like standard table or sorted table, depending on the access pattern.

The correct selection of data types is paramount for correctness and efficiency. For instance, using p instead of f for currency avoids the rounding errors of binary floating-point arithmetic.
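
The rounding issue behind the p versus f advice is easy to demonstrate. The snippet below uses Python, with `float` standing in for ABAP type f and `decimal.Decimal` standing in for the exact decimal arithmetic of type p; it is an analogy, not ABAP behavior reproduced bit for bit.

```python
from decimal import Decimal

# Binary floating point cannot represent 0.1 or 0.2 exactly.
float_total = 0.1 + 0.2

# Decimal arithmetic keeps the digits exactly as written.
decimal_total = Decimal("0.10") + Decimal("0.20")

print(float_total == 0.3)                # False
print(decimal_total == Decimal("0.30"))  # True
print(decimal_total)                     # 0.30
```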

Q 17. Explain your experience with SAP ABAP development.

My experience in SAP ABAP development spans over [Number] years, covering a range of projects from simple report development to complex custom application implementations. I’m adept at various aspects of the development lifecycle, from requirements gathering to deployment and maintenance.

- Report Development: I’ve developed numerous ALV reports (ABAP List Viewer) to provide insightful data analysis for business users. One project involved creating a dynamic report that allowed users to filter and sort data based on various criteria, significantly improving their reporting efficiency.

- BAPI (Business Application Programming Interface) Integration: I’ve extensively worked with BAPIs to integrate SAP systems with other applications. This includes both consuming and creating BAPIs for seamless data exchange. This has been instrumental in many of my projects involving third-party systems.

- Enhancement Techniques: I’m well-versed in various ABAP enhancement techniques, like implicit enhancements, explicit enhancements, and BADIs (Business Add-Ins), enabling customization without altering core SAP code. This is crucial for maintaining system stability during upgrades.

- Module Pool Programming: I’ve used module pool programming to develop complex, interactive user interfaces, improving user experience within the SAP system.

My proficiency extends to using debugging tools effectively to resolve complex issues, ensuring timely delivery of high-quality applications. I prioritize creating clean, maintainable, and efficient code that aligns with SAP best practices.

Q 18. What is your experience with SAP Fiori development?

My experience with SAP Fiori development focuses on building user-friendly and responsive applications that improve user engagement within SAP systems. This involves both front-end and back-end development, utilizing various tools and technologies.

- UI5 (User Interface for HTML5): I’m proficient in UI5 development, which allows for the creation of modern and responsive Fiori apps. I’ve built various Fiori apps using XML views, JavaScript controllers, and OData services.

- OData Services: I understand how to create and consume OData services, which act as the data layer for many Fiori apps. This ensures efficient data access and consistent data handling.

- Fiori Elements: Leveraging Fiori elements for rapid app development simplifies building common Fiori application patterns. I’ve effectively used this to accelerate project timelines.

- SAP Web IDE and Eclipse: I’m comfortable using both SAP Web IDE and Eclipse for Fiori development, adapting my workflow to the project requirements.

A recent project involved building a custom Fiori app for expense management, significantly improving the user experience compared to the previous transaction-based approach. This showcased my ability to translate complex business processes into intuitive user interfaces.

Q 19. Describe your experience with SAP HANA.

My experience with SAP HANA encompasses both its in-memory database capabilities and its role in modern SAP solutions. I understand its architecture, data modeling, and performance optimization techniques.

- Data Modeling: I’m experienced in designing and implementing data models optimized for HANA’s in-memory architecture. This includes understanding the use of columnar storage and leveraging HANA’s specific data types for improved performance.

- SQLScript: I am proficient in writing SQLScript procedures and functions, which are essential for extending HANA’s functionality. I’ve used this to create custom business logic within the database itself, enhancing performance.

- Performance Tuning: I’ve addressed performance issues in HANA, using tools like the HANA Studio to analyze query execution plans and identify optimization opportunities. This often involves optimizing queries, adjusting indexes, and using appropriate data types.

- Integration with other SAP systems: I understand how HANA integrates with other SAP systems, such as S/4HANA and BW/4HANA, enabling seamless data flow and analysis.

For example, I worked on a project that migrated a large relational database to HANA, resulting in significant improvements in query performance and overall system responsiveness.

Q 20. Explain your understanding of SAP security concepts.

SAP security is a critical aspect of any SAP implementation, encompassing various measures to protect data and system integrity. My understanding covers various layers and concepts.

- Role-Based Authorization (RBAC): This fundamental concept restricts access to data and transactions based on user roles and authorizations. I’ve configured numerous roles to ensure segregation of duties and least privilege access.

- User Management: I’m experienced in managing users, profiles, and authorizations in SAP, ensuring appropriate access levels are assigned based on job functions. I have a deep understanding of the complexities and risks involved in maintaining security within the system.

- Authorization Objects and Profiles: I understand how to create and manage authorization objects and profiles to control access to specific data or functions within SAP. I can tailor these to meet specific business needs.

- Security Audits: I understand the importance of regular security audits and have participated in implementing necessary controls to ensure ongoing compliance and system security.

- Data Encryption: I’m aware of the different encryption methods available in SAP and their application to protect sensitive data, both in transit and at rest.

Security is a priority in all my projects. I actively participate in security reviews and implement robust measures to prevent unauthorized access and data breaches.
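
The way an authorization check evaluates an authorization object against field values can be modeled in a few lines. This Python sketch is a simplification of what ABAP’s AUTHORITY-CHECK statement does. The user’s authorization data is invented, while F_BKPF_BUK with its fields BUKRS (company code) and ACTVT (activity, where 03 means display and 02 means change) is used as a familiar example object.

```python
def authority_check(user_auths, auth_object, **required):
    """Pass if a single authorization for the object covers every requested field value."""
    for auth in user_auths.get(auth_object, []):
        if all(
            "*" in auth.get(field, ()) or value in auth.get(field, ())
            for field, value in required.items()
        ):
            return True
    return False

# Hypothetical user: may display (03) documents in company code 1000 only.
user_auths = {
    "F_BKPF_BUK": [{"BUKRS": {"1000"}, "ACTVT": {"03"}}],
}

print(authority_check(user_auths, "F_BKPF_BUK", BUKRS="1000", ACTVT="03"))  # True
print(authority_check(user_auths, "F_BKPF_BUK", BUKRS="1000", ACTVT="02"))  # False
print(authority_check(user_auths, "F_BKPF_BUK", BUKRS="2000", ACTVT="03"))  # False
```

All requested field values must be covered by the same authorization, which is why the display-only user cannot change documents even in their own company code.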

Q 21. How do you troubleshoot performance issues in SAP?

Troubleshooting performance issues in SAP requires a systematic approach, combining technical expertise with problem-solving skills. My strategy involves a multi-step process.

- Identifying the Bottleneck: The first step is to pinpoint the source of the problem. This involves analyzing system logs, using monitoring tools like ST04 (Database Performance Monitor), and using transaction SM50 (Work Process Overview) to identify overloaded processes. For example, a slow report might indicate a poorly optimized database query.

- Analyzing System Resources: Examining CPU usage, memory consumption, and disk I/O can reveal resource constraints causing performance bottlenecks. Tools like ST06 (Operating System Monitor) and ST02 (buffer and memory tune summary) are invaluable in this stage.

- Database Optimization: If the issue resides in the database layer, optimizations include analyzing query execution plans, creating or adjusting indexes, and ensuring appropriate data types are used.

- Code Optimization: Poorly written ABAP code can lead to significant performance degradation. This requires code review, refactoring, and efficient use of database access methods.

- Performance Testing: Using tools like JMeter or LoadRunner to simulate realistic workloads can identify performance issues before they impact real users. This is crucial for proactive performance management.

A recent performance issue I resolved involved optimizing a complex batch job. By analyzing the SQL queries within the job, refactoring inefficient code sections, and adding indexes to relevant database tables, I significantly reduced the job’s runtime.
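The effect of adding an index can be illustrated outside SAP. The following is a minimal sketch that uses Python's built-in `sqlite3` module as a stand-in database; the `orders` table, its columns, and the index name are hypothetical, and a real SAP analysis would rely on ST05 traces and the execution plans of the underlying database instead.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return the 'detail' column of EXPLAIN QUERY PLAN as one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[3] for row in rows)


# Hypothetical table standing in for a large application table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
    [(i % 500, i * 1.5) for i in range(10_000)],
)

sql = "SELECT id, amount FROM orders WHERE customer_id = ?"

# Without an index on customer_id the database has to scan the whole table.
plan_before = query_plan(conn, sql, (42,))

# With the index it can jump straight to the matching rows.
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(conn, sql, (42,))

print("before:", plan_before)
print("after: ", plan_after)
```

Comparing the two plans shows the switch from a full scan to an index search, which is the same kind of evidence to look for when deciding whether a new index on an SAP table is justified.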

Q 22. Describe your experience with SAP data migration projects.

My experience with SAP data migration projects spans more than eight years and covers a range of methodologies and project sizes. I’ve led and participated in migrations from legacy systems to SAP ECC and, more recently, S/4HANA. A crucial aspect of these projects is thorough planning and risk mitigation. We typically follow a phased approach:

- Assessment & Planning: This initial phase involves a detailed analysis of the source system, target system, and data volume. We identify data quality issues, potential conflicts, and develop a comprehensive migration strategy.

- Data Cleansing & Transformation: This is often the most time-consuming stage. We use ETL (Extract, Transform, Load) tools such as SAP Data Services or Informatica PowerCenter to cleanse, transform, and standardize data according to the target system’s requirements, and SAP Landscape Transformation (SLT) Replication Server when data must be replicated continuously rather than loaded in batches. For instance, I once worked on a project where we had to reconcile conflicting address data from multiple legacy systems using a custom cleansing script before migrating it to SAP.

- Data Loading & Validation: The transformed data is loaded into the SAP system. We use techniques like initial load, delta load, and reconciliation to ensure data integrity and accuracy. Post-load validation is crucial to verify data accuracy and completeness.

- Testing & Go-Live: Rigorous testing is critical to identify and resolve any data inconsistencies or errors before going live. We conduct unit, integration, and user acceptance testing (UAT) before the final cutover.

For example, in a recent project migrating a large manufacturing company to S/4HANA, we utilized SLT for real-time replication of critical data, significantly reducing downtime and ensuring business continuity. We also employed a phased approach, starting with a pilot project to validate the migration process before migrating the entire organization.
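The post-load reconciliation step described above can be sketched in a few lines. This is an illustrative example only: the record layout, the field names, and the `reconcile` helper are assumptions, and a real project would read from the legacy extract and the SAP target tables rather than from in-memory lists.

```python
import hashlib


def row_fingerprint(row, fields):
    """Hash the selected fields of one record in a stable order."""
    joined = "|".join(str(row[f]).strip() for f in fields)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


def reconcile(source_rows, target_rows, key, fields):
    """Compare source and target record sets by key and field fingerprint."""
    src = {r[key]: row_fingerprint(r, fields) for r in source_rows}
    tgt = {r[key]: row_fingerprint(r, fields) for r in target_rows}
    return {
        "source_count": len(src),
        "target_count": len(tgt),
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


# Hypothetical sample data: one record was not loaded, one was altered.
legacy = [
    {"customer_id": "C001", "name": "Acme GmbH", "city": "Berlin"},
    {"customer_id": "C002", "name": "Globex", "city": "Munich"},
    {"customer_id": "C003", "name": "Initech", "city": "Hamburg"},
]
loaded = [
    {"customer_id": "C001", "name": "Acme GmbH", "city": "Berlin"},
    {"customer_id": "C002", "name": "Globex", "city": "Muenchen"},
]

report = reconcile(legacy, loaded, key="customer_id", fields=["name", "city"])
print(report)
```

Record counts alone would only reveal the missing customer; comparing fingerprints per key also catches records that arrived but were changed along the way.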

Q 23. What is your experience with SAP integration technologies?

My experience with SAP integration technologies is extensive, covering various approaches such as APIs (REST, SOAP), middleware (like SAP PI/PO), and other integration platforms. I understand the importance of choosing the right technology based on the project’s complexity, performance requirements, and budget.

- SAP Process Integration (PI)/Process Orchestration (PO): I’ve extensively used PI/PO for complex B2B and internal system integrations. This involves designing interfaces, mapping data structures, and configuring message flows to ensure seamless data exchange between SAP and non-SAP systems. For example, I integrated SAP with an external CRM system using PI/PO, enabling real-time customer data synchronization.

- REST/SOAP APIs: I’ve leveraged REST and SOAP APIs for simpler integrations, especially when integrating with cloud-based services or applications. This often involves building custom integration solutions using programming languages like ABAP or Java.

- ALE/IDoc: I’m familiar with the legacy ALE/IDoc integration technology and often encounter it when working with older SAP systems. Understanding its limitations and the options for migrating to more modern technologies is crucial for ongoing system maintenance and scalability.

Selecting the right integration technology is key. For instance, while PI/PO is robust for complex integrations, REST APIs are often more efficient and cost-effective for simpler use cases. My approach always prioritizes choosing the most suitable solution that aligns with business requirements and system architecture.
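For the REST-style integrations mentioned above, SAP systems commonly expose data through OData services. The sketch below only builds a query URL using the standard OData system query options (`$filter`, `$select`, `$top`, `$format`); it makes no network call. The host is hypothetical, and the service name, entity set, and field names are assumed for illustration and should be checked against the service metadata of the actual system.

```python
from urllib.parse import quote, urlencode


def build_odata_url(base_url, service, entity_set, **options):
    """Build an OData query URL; keyword names become $-prefixed query options."""
    query = urlencode(
        {f"${name}": value for name, value in options.items()},
        quote_via=quote,  # encode spaces as %20 rather than '+'
        safe="$',",       # keep OData punctuation readable
    )
    return f"{base_url.rstrip('/')}/{service}/{entity_set}?{query}"


url = build_odata_url(
    "https://sap.example.com/sap/opu/odata/sap",  # hypothetical host
    "API_BUSINESS_PARTNER",                       # assumed service name
    "A_BusinessPartner",                          # assumed entity set
    filter="BusinessPartnerCategory eq '1'",      # assumed field name
    select="BusinessPartner,BusinessPartnerFullName",
    top=5,
    format="json",
)
print(url)
```

Keeping URL construction in one small function makes it easier to unit test the request logic separately from authentication and error handling, which differ from landscape to landscape.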

Q 24. Explain your understanding of SAP data modeling.

SAP data modeling involves designing the structure of databases and data flows within an SAP system. It’s a crucial process that ensures data integrity, accuracy, and efficient processing. Effective data modeling leads to a well-functioning and scalable SAP landscape.

Key aspects of SAP data modeling include:

- Understanding Business Processes: A deep understanding of the business processes is paramount. The data model needs to accurately reflect how data is created, used, and updated within these processes.

- Data Structures: This involves defining tables, fields, data types, and relationships between tables. We use the ABAP Dictionary (transaction SE11) to create and manage these data structures.

- Data Integrity: Constraints and validation rules are implemented to ensure data accuracy and consistency. This includes primary keys, foreign key relationships (maintained in SAP through check tables), and restricted value ranges defined on domains.

- Performance Optimization: Data models need to be optimized for performance. This includes choosing appropriate data types, indexing tables correctly, and avoiding redundant data.

For instance, during a project involving the implementation of a new production planning module, I was responsible for designing the data model that accurately captured production orders, material requirements, and capacity planning data. Careful consideration was given to ensure efficient data retrieval and reporting capabilities.
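The integrity rules listed above can be demonstrated with a generic relational example. This sketch uses SQLite through Python's `sqlite3` module purely as a stand-in: the `material` and `production_order` tables are hypothetical, and in an SAP system the equivalent rules would be defined in the ABAP Dictionary and largely enforced at the application layer rather than by database constraints.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript("""
CREATE TABLE material (
    material_id TEXT PRIMARY KEY,
    description TEXT NOT NULL
);
CREATE TABLE production_order (
    order_id    INTEGER PRIMARY KEY,
    material_id TEXT NOT NULL REFERENCES material(material_id),
    quantity    INTEGER NOT NULL CHECK (quantity > 0)
);
""")

conn.execute("INSERT INTO material VALUES ('M-100', 'Steel bracket')")
conn.execute("INSERT INTO production_order VALUES (1, 'M-100', 250)")


def try_insert(row):
    """Attempt an insert and report whether the constraints accepted it."""
    try:
        conn.execute("INSERT INTO production_order VALUES (?, ?, ?)", row)
        return "accepted"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"


results = {
    "unknown material": try_insert((2, "M-999", 10)),  # violates the foreign key
    "zero quantity": try_insert((3, "M-100", 0)),      # violates the CHECK rule
    "duplicate key": try_insert((1, "M-100", 5)),      # violates the primary key
    "valid order": try_insert((4, "M-100", 5)),
}
for case, outcome in results.items():
    print(f"{case}: {outcome}")
```

Each rejected row corresponds to one of the integrity mechanisms named in the list, so the data model itself prevents inconsistent records from being stored.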

Q 25. What are the key differences between SAP ECC and S/4HANA?

SAP ECC (ERP Central Component) and S/4HANA (short for SAP Business Suite 4 SAP HANA) are both ERP systems from SAP, but S/4HANA represents a significant evolution. The key differences lie in:

- Database: ECC runs on a range of databases such as Oracle, DB2, SQL Server, or SAP HANA itself, whereas S/4HANA runs only on SAP HANA, an in-memory, column-oriented database. This fundamental change significantly improves processing speed and analytic capabilities.

- Architecture: S/4HANA uses a simplified data model that removes many of the aggregate and index tables ECC maintains. For example, the Universal Journal (table ACDOCA) consolidates financial line items that ECC spreads across several tables. This reduces data redundancy and improves performance and scalability.

- Functionality: S/4HANA offers enhanced functionalities in areas such as real-time analytics, advanced planning, and improved user experience. Many features have been simplified or enhanced compared to ECC.

- Deployment: S/4HANA is available as both on-premise and cloud solutions, providing more deployment flexibility than ECC.

Migrating from ECC to S/4HANA is a major undertaking, often involving a comprehensive system transformation. It presents both opportunities (enhanced performance, simplification) and challenges (significant cost and complexity). The choice between ECC and S/4HANA depends on various factors, such as the company’s size, budget, and specific requirements.

Q 26. Describe your experience working with SAP modules (e.g., FI, CO, MM, SD).

My experience encompasses several key SAP modules, including:

- FI (Financial Accounting): I’ve worked extensively with FI, configuring the chart of accounts and general ledger accounts and managing financial transactions. I understand the importance of accurate financial reporting and regulatory compliance.

- CO (Controlling): My experience includes cost center accounting, profit center accounting, and internal order management. I’ve helped companies improve their cost control and profitability through effective CO configurations.

- MM (Materials Management): I’m familiar with material master data management, purchasing, inventory management, and warehouse management. I’ve assisted companies in optimizing their procurement processes and reducing inventory costs.

- SD (Sales and Distribution): My experience includes sales order processing, pricing, delivery, and billing. I’ve helped companies improve their sales processes and enhance customer satisfaction.

For example, in one project, I helped a client optimize their inventory management using MM by implementing ABC analysis and improving their demand forecasting. This resulted in significant cost savings by reducing excess inventory while maintaining sufficient stock levels to meet customer demand.
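ABC analysis ranks materials by their share of total consumption value so that attention goes to the few items that matter most. The sketch below is a simplified illustration: the 80% and 95% cumulative cut-offs are a common convention rather than a fixed rule, and the material numbers and values are made up.

```python
def abc_classify(annual_value, a_cutoff=0.80, b_cutoff=0.95):
    """Classify items by cumulative share of total annual consumption value."""
    total = sum(annual_value.values())
    if total == 0:
        return {}
    classes, running = {}, 0
    # Walk through items from highest to lowest value, tracking the running share.
    for item, value in sorted(annual_value.items(), key=lambda kv: kv[1], reverse=True):
        running += value
        share = running / total
        if share <= a_cutoff:
            classes[item] = "A"
        elif share <= b_cutoff:
            classes[item] = "B"
        else:
            classes[item] = "C"
    return classes


# Hypothetical annual consumption values per material.
consumption = {"M1": 6000, "M2": 1500, "M3": 1200, "M4": 700, "M5": 600}
classes = abc_classify(consumption)
print(classes)
```

In this sample, two materials account for 75% of the value and land in class A, which is where tighter stock control and more frequent review usually pay off first.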

Q 27. How familiar are you with SAP’s reporting and analytics tools?

I’m highly familiar with SAP’s reporting and analytics tools, including:

- SAP Business Warehouse (BW): I’ve built data warehouses, ETL processes, and reports using BW to provide comprehensive business intelligence and support strategic decision-making. This involved designing and implementing data models, creating queries, and developing dashboards.

- SAP Analytics Cloud (SAC): I have experience using SAC for building interactive dashboards and visualizations and for conducting ad-hoc analysis. Its cloud-based nature and user-friendly interface make it suitable for diverse business users.

- SAP Crystal Reports: I’m proficient in using Crystal Reports for creating various reports for operational and managerial purposes. This often involves connecting to multiple data sources and generating customizable reports.

- ABAP Reporting: I possess the skills to create custom reports using ABAP programming. This is crucial for developing tailored reporting solutions that are not readily available through standard SAP tools.

In a previous role, I developed a comprehensive dashboard in SAC that provided real-time insights into sales performance, inventory levels, and production efficiency, enabling management to make data-driven decisions promptly.

Key Topics to Learn for Salesforce or SAP Interview

Landing your dream Salesforce or SAP role requires a strategic approach to your preparation. Focus on mastering these key areas to showcase your expertise and problem-solving skills:

- Salesforce:

  - Sales Cloud Fundamentals: Understanding Sales processes, lead management, opportunity management, and reporting within the Sales Cloud.

  - Service Cloud Concepts: Case management, knowledge bases, customer portals, and service level agreements (SLAs).

  - Data Modeling and Architecture: Designing efficient data structures and understanding the relationships between objects.

  - Workflow Rules and Approvals: Automating processes and enforcing business rules.

  - Apex and Visualforce (Advanced): Developing custom applications and extending Salesforce functionality. (Focus on conceptual understanding for entry-level roles).

- SAP:

  - SAP ERP Modules (choose relevant ones based on your target role): Understanding the core functionalities of specific modules like FI (Financial Accounting), CO (Controlling), MM (Materials Management), SD (Sales and Distribution), or PP (Production Planning).

  - SAP Business Processes: Familiarize yourself with the end-to-end business processes supported by the chosen modules.

  - Data Structures and Tables: Understanding the underlying database structure and key tables within the SAP system.

  - ABAP Programming (Advanced): Developing custom reports and enhancements within the SAP system. (Focus on conceptual understanding for entry-level roles).

  - SAP Fiori/UI5 (Advanced): Understanding modern user interfaces and their development.

- Common to Both:

  - Data Integration and Migration: Understanding how data moves between systems.

  - Security and Access Control: Implementing security measures to protect sensitive data.

  - Problem-solving and Analytical Skills: Demonstrate your ability to analyze complex situations and propose solutions.

Next Steps

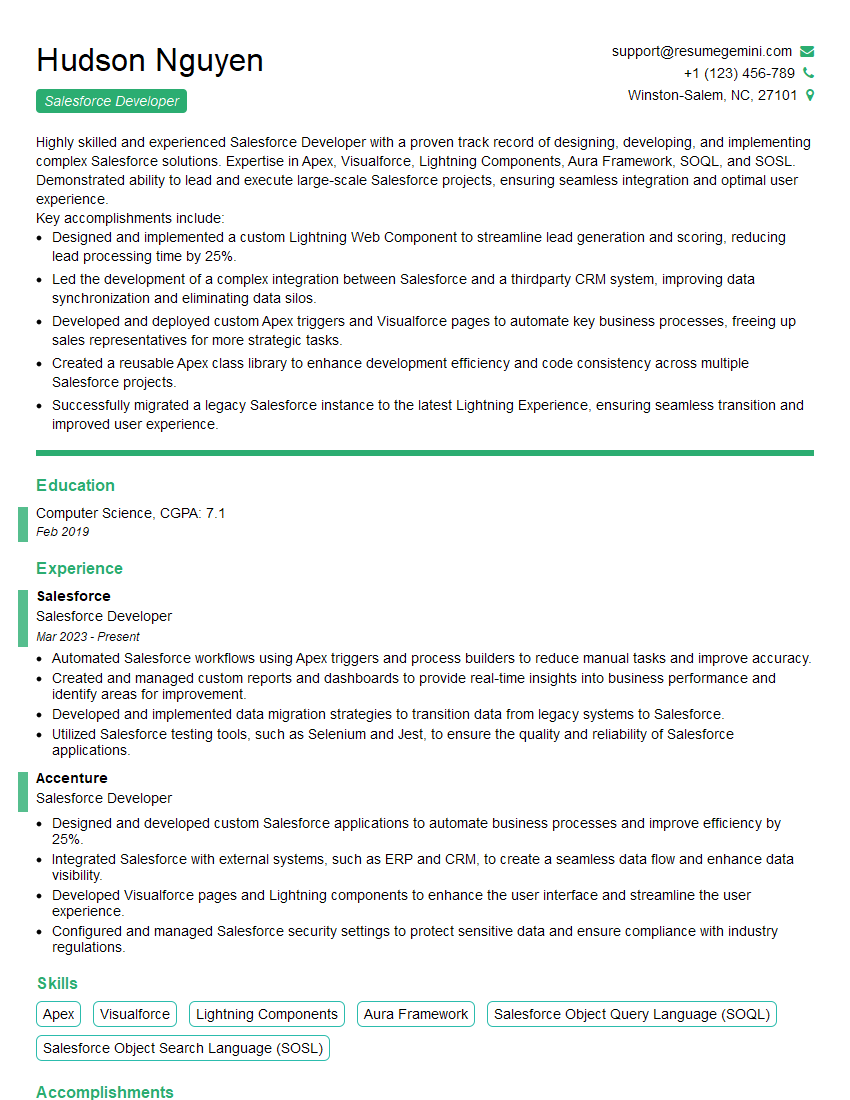

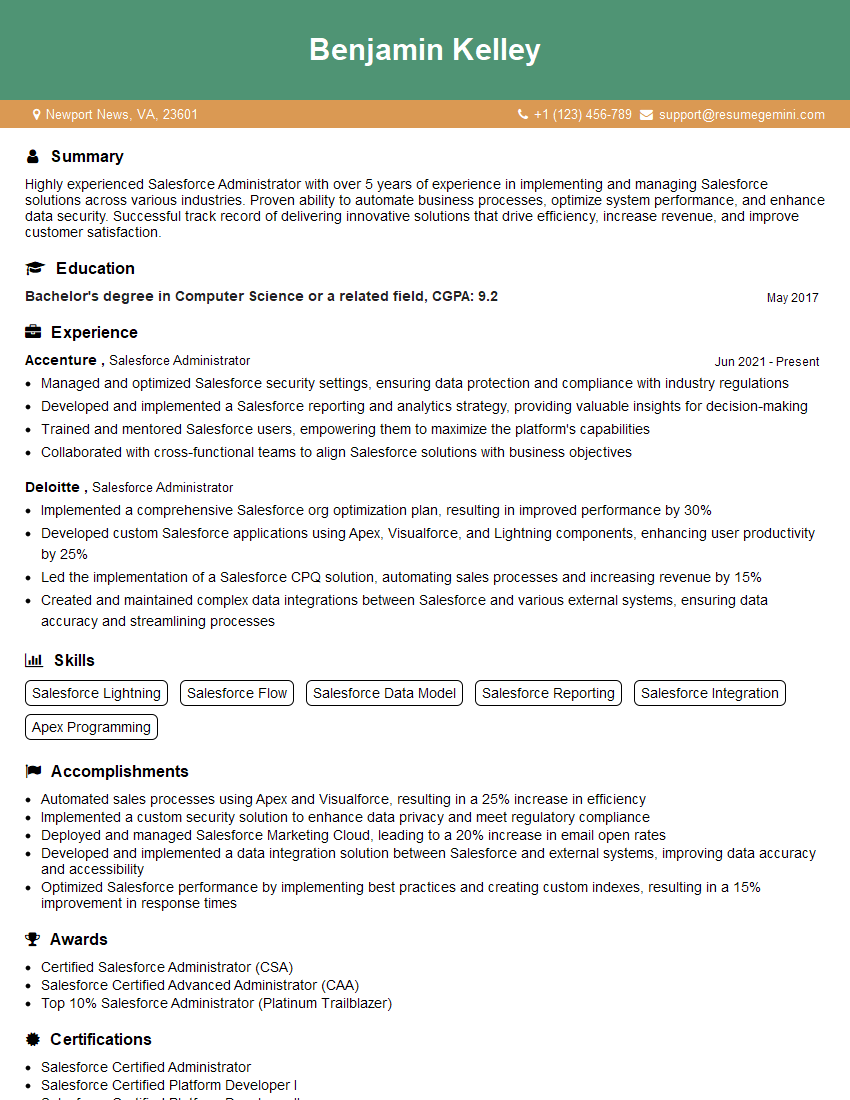

Mastering Salesforce or SAP opens doors to exciting and rewarding career opportunities in a rapidly growing technology sector. To maximize your chances of landing your dream job, create an ATS-friendly resume that effectively highlights your skills and experience. ResumeGemini is a trusted resource that can help you build a professional and impactful resume. We offer examples of resumes tailored specifically to Salesforce and SAP roles to give you a head start. Invest time in crafting a compelling resume – it’s your first impression!
