Interviews are more than just a Q&A session—they’re a chance to prove your worth. This blog dives into essential Software Development and Deployment interview questions and expert tips to help you align your answers with what hiring managers are looking for. Start preparing to shine!

Questions Asked in Software Development and Deployment Interview

Q 1. Explain the difference between CI and CD.

CI/CD stands for Continuous Integration and Continuous Delivery/Deployment. While often used together, they represent distinct but related processes in software development.

Continuous Integration (CI) focuses on automating the integration of code changes from multiple developers into a shared repository. This involves frequent commits, automated builds, and automated testing to quickly identify and fix integration issues. Think of it as a constantly running quality control check for your codebase. A developer commits their code, CI runs the build and tests, and immediately reports back on any failures, preventing bugs from propagating far into the development process.

Continuous Delivery (CD) extends CI by automating the release process. This means that once code passes CI’s checks, it’s automatically packaged and prepared for deployment to a staging or production environment. While it automates the *ability* to deploy frequently, it doesn’t necessarily *deploy* automatically to production.

Continuous Deployment (also CD) goes a step further: every change that passes the full pipeline is deployed to production automatically, with no manual approval step. This requires a high degree of confidence in automated testing and monitoring.

In short: CI is about automating the integration process; CD is about automating the release process; and Continuous Deployment is automatically deploying every change that passes all automated tests.
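
As a rough illustration, the three terms can be sketched as stages of one pipeline. The `Change` class and `run_pipeline` function below are hypothetical names invented for this example and do not belong to any real CI tool.

```python
from dataclasses import dataclass


@dataclass
class Change:
    """A hypothetical code change moving through the pipeline."""
    builds: bool
    tests_pass: bool


def run_pipeline(change, continuous_deployment, manual_approval=False):
    """Return the stages that ran for one change, in order."""
    stages = ["build"]
    if not change.builds:
        return stages
    stages.append("test")  # CI: every commit is built and tested
    if not change.tests_pass:
        return stages
    # Continuous Delivery: a passing change is packaged and staged,
    # so it is always ready to release.
    stages += ["package", "deploy_staging"]
    # Continuous Deployment releases automatically; Continuous Delivery
    # waits for a person to approve the production release.
    if continuous_deployment or manual_approval:
        stages.append("deploy_production")
    return stages
```

With `continuous_deployment=False` and no approval, a passing change stops at staging. That is the practical difference an interviewer is usually probing for.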

Q 2. Describe your experience with different deployment strategies (e.g., blue-green, canary).

I have extensive experience with various deployment strategies, including blue-green, canary, and rolling deployments.

Blue-Green Deployment: This involves maintaining two identical environments: ‘blue’ (live) and ‘green’ (staging). New code is deployed to the ‘green’ environment, thoroughly tested, and then traffic is switched from ‘blue’ to ‘green’. If issues arise, traffic can quickly be switched back to ‘blue’. This minimizes downtime and risk.

Canary Deployment: A more gradual approach. A small subset of users are routed to the new version of the application. This allows for monitoring the new release’s performance and stability in a real-world setting before rolling it out to the entire user base. If problems arise, the rollout can be stopped immediately, limiting the impact.

Rolling Deployment: In this method, the new version is deployed incrementally, one server or batch of servers at a time. A load balancer typically takes each server out of rotation while it is updated. As each server is updated, it’s health-checked before it receives traffic again; if any issues occur, the process is halted until they are resolved.

Example: In a recent project, we opted for a blue-green deployment for a critical e-commerce platform update. This ensured minimal disruption to sales during the upgrade.
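
To make the canary strategy above more concrete, here is a minimal sketch of percentage-based routing. It assumes users are identified by a string ID and hashes that ID into one of 100 buckets; `bucket_for` and `route` are hypothetical helper names, and in a real system a load balancer or service mesh usually does this routing.

```python
import hashlib


def bucket_for(user_id: str) -> int:
    """Map a user ID to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def route(user_id: str, canary_percent: int) -> str:
    """Send roughly `canary_percent` percent of users to the canary."""
    return "canary" if bucket_for(user_id) < canary_percent else "stable"
```

Because the bucket depends only on the user ID, a given user keeps seeing the same version, and raising `canary_percent` only ever moves users from stable to canary, never back.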

Q 3. What are some common challenges in software deployment, and how have you overcome them?

Common challenges in software deployment include:

- Downtime: Unexpected outages caused by poorly planned deployments.

- Data loss or corruption: Issues during database migrations or updates.

- Rollback complexity: Difficulty reverting to previous versions in case of failures.

- Testing gaps: Not adequately testing the application in a production-like environment.

- Configuration issues: Problems with environment variables or server settings.

To overcome these, I leverage robust CI/CD pipelines with automated testing, thorough rollback strategies (including automated rollback procedures), comprehensive monitoring, and detailed deployment checklists. I also advocate for rigorous testing in staging environments that closely mirror production, employing techniques like Infrastructure-as-Code (IaC) to maintain consistency across environments. For example, to prevent data loss, we implemented a robust database migration process using schema versioning and automated backups, alongside a solid rollback plan.
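
The schema-versioning idea mentioned above can be sketched with Python’s built-in `sqlite3` module. The table names and the `MIGRATIONS` list are assumptions made up for this example; dedicated tools such as Alembic or Flyway handle many more cases (down-migrations, locking, checksums).

```python
import sqlite3

# Hypothetical ordered migrations: (version, SQL statement).
MIGRATIONS = [
    (1, "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"),
    (2, "ALTER TABLE customers ADD COLUMN email TEXT"),
]


def current_version(conn):
    """Return the highest applied migration version, or 0 if none."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS schema_version (version INTEGER NOT NULL)"
    )
    row = conn.execute("SELECT MAX(version) FROM schema_version").fetchone()
    return row[0] or 0


def migrate(conn):
    """Apply every migration newer than the recorded version."""
    applied = []
    for version, statement in MIGRATIONS:
        if version <= current_version(conn):
            continue  # already applied, so reruns are safe
        # The connection context manager commits on success and
        # rolls back the open transaction if an exception is raised.
        with conn:
            conn.execute(statement)
            conn.execute(
                "INSERT INTO schema_version (version) VALUES (?)", (version,)
            )
        applied.append(version)
    return applied
```

Running `migrate` twice applies nothing the second time, which is what makes it safe to call on every deployment.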

Q 4. How do you ensure the security of your deployments?

Deployment security is paramount. My approach involves several key strategies:

- Secure infrastructure: Utilizing secure servers, firewalls, and intrusion detection systems.

- Secure code practices: Following secure coding guidelines, conducting regular security audits, and incorporating security testing into the CI/CD pipeline (SAST/DAST).

- Secrets management: Utilizing tools like HashiCorp Vault or AWS Secrets Manager to securely store and manage sensitive information, preventing hardcoding of credentials.

- Access control: Implementing role-based access control (RBAC) to restrict access to sensitive resources.

- Vulnerability scanning: Regularly scanning the application and infrastructure for vulnerabilities using automated tools.

- Encryption: Encrypting data both in transit and at rest.

I also prioritize following security best practices throughout the software development lifecycle (SDLC), from design to deployment.
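
As a small illustration of avoiding hardcoded credentials, the sketch below reads a secret from the process environment and fails loudly when it is missing. It assumes the secrets manager (or the deployment platform) injects secrets as environment variables; `get_secret`, `redact`, and the variable name `DB_PASSWORD` are hypothetical.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret is not available."""


def get_secret(name, environ=None):
    """Fetch a secret by name instead of hardcoding it in source."""
    env = os.environ if environ is None else environ
    value = env.get(name)
    if not value:
        raise MissingSecretError(f"secret {name!r} is not set")
    return value


def redact(value, visible=4):
    """Mask a secret for log output, keeping only the last few characters."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]
```

Failing at startup when a secret is absent is usually preferable to falling back to a default, since a default credential is itself a vulnerability.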

Q 5. What monitoring tools are you familiar with, and how do you use them to track deployment health?

I’m familiar with a range of monitoring tools including:

- Datadog: For comprehensive application and infrastructure monitoring.

- Prometheus and Grafana: A powerful open-source monitoring stack.

- New Relic: Application performance monitoring (APM).

- CloudWatch (AWS): For monitoring AWS services.

- Splunk: For log management and analysis.

My approach involves setting up comprehensive monitoring from the beginning of the development process. Key metrics I track include:

- Application performance: Response times, error rates, throughput.

- Server health: CPU utilization, memory usage, disk I/O.

- Network performance: Latency, packet loss.

- Log analysis: Identifying errors and exceptions.

By leveraging alerts and dashboards, we can proactively identify and address potential issues before they impact users.
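
To show how such metrics turn into alerts, here is a minimal sketch that computes an error rate and a 95th-percentile latency and compares them with thresholds. The function names and the default thresholds are assumptions for illustration; in practice tools like Prometheus or Datadog evaluate these rules.

```python
import math


def error_rate(status_codes):
    """Fraction of responses that were server errors (HTTP 5xx)."""
    if not status_codes:
        return 0.0
    errors = sum(1 for code in status_codes if code >= 500)
    return errors / len(status_codes)


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list; pct is 1..100."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def alerts(status_codes, latencies_ms, max_error_rate=0.01, max_p95_ms=500):
    """Return the names of the alert rules that fired."""
    fired = []
    if error_rate(status_codes) > max_error_rate:
        fired.append("error_rate")
    if latencies_ms and percentile(latencies_ms, 95) > max_p95_ms:
        fired.append("p95_latency")
    return fired
```

Percentiles are used instead of averages because a handful of very slow requests can hide behind a healthy-looking mean.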

Q 6. Describe your experience with Infrastructure as Code (IaC).

Infrastructure as Code (IaC) is a crucial part of my workflow. I have extensive experience using tools like Terraform and Ansible to manage and provision infrastructure. IaC allows for defining infrastructure in code, enabling automation, version control, and consistency across environments (Dev, Staging, Production). This drastically reduces human error and improves deployment speed and repeatability.

Example: In a recent project, we used Terraform to define and manage our entire AWS infrastructure, including EC2 instances, databases, load balancers, and security groups. This ensured that our infrastructure was consistent across all environments and that changes could be easily managed and tracked using version control (Git).

Q 7. What are your preferred scripting languages for automation tasks?

My preferred scripting languages for automation tasks include Python and Bash. Python is a versatile language suitable for complex automation tasks, leveraging its extensive libraries for various purposes. Bash is ideal for quick scripting and interacting directly with the operating system, particularly in Linux environments. The choice of language often depends on the specific task and environment.

Example: I often use Python with libraries like `requests` for API interactions during automated testing and deployment. I also utilize Bash for quick tasks like managing files or running commands on servers.
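
A typical automation task of this kind is waiting for a service to become healthy after a deployment. The sketch below uses only the standard library so that it stays self-contained; the `check` callable is an assumption and could, for instance, wrap an HTTP call made with `requests`.

```python
import time


def wait_until_healthy(check, attempts=5, delay_seconds=2.0, sleep=time.sleep):
    """Call `check` until it returns True; return the attempt number.

    `check` is any zero-argument callable. Exceptions it raises are
    treated as "not healthy yet" so that transient errors are retried.
    """
    for attempt in range(1, attempts + 1):
        try:
            if check():
                return attempt
        except Exception:
            pass  # treat errors as a failed health check and retry
        if attempt < attempts:
            sleep(delay_seconds)
    raise TimeoutError(f"service not healthy after {attempts} attempts")
```

Passing `sleep` as a parameter keeps the function easy to test, because a test can substitute a no-op instead of really waiting.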

Q 8. Explain your understanding of containerization (e.g., Docker, Kubernetes).

Containerization, in essence, packages an application and its dependencies into a single unit, called a container. Think of it like a self-contained apartment—everything the application needs to run is included, preventing conflicts with other applications on the same system. Docker is the most popular containerization technology, creating these isolated environments. Kubernetes, on the other hand, orchestrates and manages these containers at scale, automating deployment, scaling, and management across clusters of machines.

Imagine you have a web application that requires a specific version of Python and a particular database. With Docker, you create a Dockerfile that specifies these dependencies. This file acts as a blueprint to build a container image. This image contains the application code, Python, the database, and all necessary libraries. This ensures consistency across different environments – development, testing, and production. Kubernetes then takes over, deploying many instances of this container across multiple servers, automatically handling load balancing and scaling to meet demand. It’s like having a building manager (Kubernetes) overseeing a building (cluster) full of self-contained apartments (containers).

In a real-world scenario, we used Docker and Kubernetes to deploy a microservice-based e-commerce platform. Each microservice (e.g., product catalog, shopping cart, payment gateway) ran in its own container, making updates and scaling individual services much simpler and less risky.

Q 9. How do you handle rollback in case of a failed deployment?

Rollback strategies are critical for mitigating deployment failures. My approach involves a multi-layered strategy combining automated mechanisms and manual intervention as needed.

- Automated Rollbacks: I leverage Kubernetes’ rollout and rollback features. A Deployment keeps a revision history, so `kubectl rollout undo` can quickly revert to a previous, known-good version. Kubernetes does not roll back on application metrics by itself, so metric-driven rollbacks (e.g., when error rates or latency exceed defined thresholds) are triggered by the pipeline or by a progressive-delivery tool such as Argo Rollouts or Flagger. For example, if a new deployment causes a significant spike in error rates, that tooling can automatically revert to the previous version.

- Blue/Green Deployments: This strategy involves having two identical environments, ‘blue’ (live) and ‘green’ (staging). The new version is deployed to the ‘green’ environment. Once testing and validation are complete, traffic is switched from ‘blue’ to ‘green’. If the new version fails validation, traffic simply remains on ‘blue’; if problems appear after the switch, traffic is routed back to ‘blue’, minimizing downtime.

- Canary Deployments: This is a phased rollout where the new version is deployed to a small subset of users. This allows for monitoring its performance in a real-world setting before a full release. If issues emerge, the rollout can be halted before affecting a large number of users.

- Manual Intervention: In cases of complex or unforeseen issues, manual intervention is sometimes necessary. This might involve using deployment tools to revert to a previous version or using scripts to manually restore data.

Choosing the right strategy depends on the application’s criticality, infrastructure, and development process. For high-availability systems, blue/green or canary deployments are generally preferred. For less critical systems, automated rollbacks might suffice.
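
The threshold-based decision behind an automated rollback can be sketched as a comparison between the previous version’s metrics and the new one’s. The `Metrics` class, the function name, and the default thresholds are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metrics:
    """Hypothetical health snapshot for one deployed version."""
    error_rate: float       # fraction of failed requests, 0.0 to 1.0
    p95_latency_ms: float   # 95th-percentile latency in milliseconds


def should_roll_back(baseline, candidate,
                     max_error_rate_increase=0.02,
                     max_latency_ratio=1.5):
    """Decide whether the candidate is unhealthy relative to the baseline."""
    if candidate.error_rate - baseline.error_rate > max_error_rate_increase:
        return True
    if (baseline.p95_latency_ms > 0
            and candidate.p95_latency_ms / baseline.p95_latency_ms > max_latency_ratio):
        return True
    return False
```

Comparing against a baseline rather than fixed numbers avoids rolling back a release for problems that were already present before it shipped.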

Q 10. What are your experiences with different cloud platforms (e.g., AWS, Azure, GCP)?

I have extensive experience with AWS, Azure, and GCP, each having strengths and weaknesses. My choice of platform depends on the project’s specific requirements and constraints.

- AWS (Amazon Web Services): I’ve used AWS extensively for its mature ecosystem, comprehensive services (e.g., EC2, S3, Lambda), and vast community support. A recent project involved using AWS ECS (Elastic Container Service) for container orchestration and S3 for storage.

- Azure (Microsoft Azure): Azure’s strong integration with Microsoft technologies makes it ideal for businesses heavily invested in the Microsoft stack. I’ve utilized Azure App Service for deploying web applications and Azure DevOps for CI/CD.

- GCP (Google Cloud Platform): GCP’s strengths lie in its powerful data analytics capabilities and serverless computing options (Cloud Functions). I’ve used GCP for projects requiring large-scale data processing and analysis.

Beyond specific services, I’m proficient in managing cloud resources, including networking, security, and cost optimization across all three platforms. The key is understanding the strengths of each and leveraging them effectively based on the project needs.

Q 11. Describe your experience with version control systems (e.g., Git).

Git is my primary version control system. I am highly proficient in using Git for branching strategies (e.g., Gitflow), merging, rebasing, resolving conflicts, and managing remote repositories. I’m also comfortable using Git for collaboration in large teams, understanding the importance of clear commit messages and regular code reviews.

In a recent project, we used Gitflow branching to manage feature development, bug fixes, and releases. This allowed developers to work independently on features without interfering with each other’s code. Pull requests with code reviews ensured code quality and consistency before merging into the main branch. I utilize command-line Git for efficiency and deeper understanding, but am also comfortable with GUI clients like Sourcetree when needed.

Beyond basic Git usage, I understand concepts like cherry-picking, stashing, and using Git hooks for automation (e.g., pre-commit hooks for code linting).

Q 12. Explain the concept of immutable infrastructure.

Immutable infrastructure is a concept where servers and other infrastructure components are treated as immutable entities. Instead of updating existing servers in place (which can lead to configuration drift and inconsistencies), you create entirely new instances with the desired configurations whenever a change is needed. Once an instance is deployed, it is never modified.

Think of it like this: imagine building a LEGO castle. Instead of changing individual bricks, you tear down the entire castle and rebuild it with the new design. This eliminates many configuration management challenges. If a problem arises, rolling back is as simple as reverting to the previous version of the image.

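In practice, this is often achieved through containerization and automation tools like Terraform or Ansible. These tools can automatically build and deploy new instances, ensuring consistency and repeatability. A configuration tool like Ansible fits this model when it is used to build images rather than to patch running servers.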

Q 13. How do you manage dependencies in your projects?

Dependency management is crucial for ensuring project stability and reproducibility. My approach combines using package managers and version control to specify exact versions of dependencies.

- Package Managers: For various programming languages, I use appropriate package managers such as npm for JavaScript, pip for Python, Maven for Java, and NuGet for .NET. These managers track dependencies and their versions, making it easy to install and manage them.

- Dependency Locking: I always utilize dependency locking mechanisms (`package-lock.json` for npm, a fully pinned `requirements.txt` for pip) to ensure that the exact versions used during development are consistently reproduced across different environments (developer machines, testing servers, production). This prevents unexpected behaviour due to dependency updates.

- Virtual Environments: I consistently use virtual environments (e.g., `venv` for Python, or a project-local `node_modules` directory for Node.js) to isolate project dependencies from the global environment. This avoids conflicts and ensures clean builds.

- Dependency Graphs: I regularly review dependency graphs to identify potential conflicts or outdated packages. Tools like `npm ls` or `pipdeptree` can be helpful in visualizing and managing dependencies.

By meticulously managing dependencies, I ensure consistent build processes and avoid many common deployment issues.
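
A `requirements.txt` only behaves like a lock file when every entry is pinned to an exact version. The sketch below flags entries that are not; `unpinned_requirements` is a hypothetical helper, and the parsing is deliberately simplified (it ignores URLs, environment markers, and hashes).

```python
def unpinned_requirements(text):
    """Return requirement lines that lack an exact `==` version pin."""
    unpinned = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if not line or line.startswith("-"):
            continue  # skip blanks and pip options such as "-r other.txt"
        if "==" not in line:
            unpinned.append(line)
    return unpinned
```

A check like this can run in CI so that a loose specifier such as `flask>=2.0` is caught before it causes a surprise upgrade in production.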

Q 14. How do you ensure code quality and maintainability?

Ensuring code quality and maintainability is a continuous effort encompassing several key practices.

- Code Reviews: Code reviews are essential for catching bugs early, improving code style, and sharing knowledge. I actively participate in code reviews, providing constructive feedback and ensuring adherence to coding standards.

- Static Code Analysis: I use linters (e.g., ESLint for JavaScript, Pylint for Python) and static analysis tools to automatically detect potential issues such as bugs, style violations, and security vulnerabilities before runtime.

- Unit Testing: Writing comprehensive unit tests is crucial for ensuring that individual components of the code work as expected. I follow Test-Driven Development (TDD) principles where possible, writing tests before writing code.

- Integration Testing: I use integration tests to verify that different components of the system work together correctly.

- Automated Testing: I integrate automated tests into the CI/CD pipeline to ensure that new code doesn’t break existing functionality. This includes unit, integration, and potentially end-to-end tests.

- Code Style Guidelines: I adhere to consistent code style guidelines (e.g., PEP 8 for Python, Airbnb style guide for JavaScript) to improve readability and maintainability. This helps ensure that the codebase is easy to understand and modify for different developers.

By consistently applying these practices, we can create a more robust, reliable, and maintainable codebase.

Q 15. Explain your experience with testing frameworks and methodologies.

My experience with testing frameworks and methodologies spans a wide range, encompassing both unit and integration testing. I’ve extensively used frameworks like JUnit (Java), pytest (Python), and Jest (JavaScript), depending on the project’s technology stack. My approach to testing aligns with best practices, including Test-Driven Development (TDD) where applicable. In TDD, tests are written *before* the code, guiding development and ensuring functionality from the outset. I’m also proficient in various testing methodologies:

- Unit Testing: Isolating individual components to verify their functionality. For example, in a banking application, I’d test a function calculating interest independently of the database interaction.

- Integration Testing: Verifying the interaction between different components. Continuing the banking example, I’d test the interaction between the interest calculation function and the database update function.

- System Testing: Testing the entire system as a whole to ensure all components work together correctly. This might involve end-to-end testing of the banking application, simulating real-world user scenarios.

- Regression Testing: Running tests after code changes to ensure that new features haven’t broken existing functionality. This is crucial for maintaining software quality over time.

Beyond these core methods, I also incorporate techniques like mocking and stubbing to isolate units during testing and simplify complex interactions. My experience includes working with both manual and automated testing processes, with a strong emphasis on automating as much as possible to ensure efficiency and reduce human error. For example, using CI/CD pipelines to automatically run tests upon code commits.
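
The banking example above can be sketched as follows, using `unittest.mock.Mock` to stand in for the database layer. The function names and the repository interface (`get_balance`, `update_balance`) are assumptions invented for this illustration. Floats are used only to keep it short; real financial code would normally use `decimal.Decimal`.

```python
from unittest.mock import Mock


def monthly_interest(balance, annual_rate):
    """Interest earned in one month, rounded to cents."""
    return round(balance * annual_rate / 12, 2)


def apply_interest(account_id, annual_rate, repository):
    """Read the balance, add one month of interest, and store the result."""
    balance = repository.get_balance(account_id)
    interest = monthly_interest(balance, annual_rate)
    repository.update_balance(account_id, balance + interest)
    return interest


def test_monthly_interest():
    # Unit test: pure calculation, no database involved.
    assert monthly_interest(1200.0, 0.05) == 5.0


def test_apply_interest_updates_repository():
    # The mock replaces the real repository, so only the
    # interaction with it is verified.
    repository = Mock()
    repository.get_balance.return_value = 1200.0
    assert apply_interest("acct-1", 0.05, repository) == 5.0
    repository.get_balance.assert_called_once_with("acct-1")
    repository.update_balance.assert_called_once_with("acct-1", 1205.0)
```

Both functions follow the `test_` naming convention, so a runner such as pytest would collect them automatically.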

Q 16. What is your experience with logging and monitoring solutions?

Robust logging and monitoring are critical for maintaining and troubleshooting applications. My experience includes using a variety of tools, from simple file-based logging to sophisticated centralized logging systems like ELK stack (Elasticsearch, Logstash, Kibana) and the Splunk platform. I’m familiar with various logging levels (DEBUG, INFO, WARN, ERROR, FATAL) and understand the importance of providing detailed, contextual information in log messages. This allows for effective debugging and rapid identification of issues.
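
As a small illustration of levelled, contextual log messages, here is a sketch using Python’s standard `logging` module, where `CRITICAL` plays the role of FATAL. The logger name, message format, and the `log_deploy_event` helper are assumptions for this example.

```python
import logging


def build_logger(name, level=logging.INFO):
    """Create a logger that writes timestamped, levelled messages."""
    logger = logging.getLogger(name)
    logger.setLevel(level)
    if not logger.handlers:  # avoid adding duplicate handlers on reuse
        handler = logging.StreamHandler()
        handler.setFormatter(
            logging.Formatter("%(asctime)s %(levelname)s %(name)s %(message)s")
        )
        logger.addHandler(handler)
    return logger


def log_deploy_event(logger, service, version, succeeded):
    """Log a deployment result with enough context to search for later."""
    if succeeded:
        logger.info("deploy succeeded service=%s version=%s", service, version)
    else:
        logger.error("deploy failed service=%s version=%s", service, version)
```

Consistent `key=value` pairs in the message make the logs easier to filter once they reach a centralized system such as the ELK stack or Splunk.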

For monitoring, I’ve worked with tools such as Prometheus and Grafana for metrics visualization and alerting. These tools allow us to track key performance indicators (KPIs) like response times, error rates, and resource utilization. I’ve used these insights to proactively identify performance bottlenecks and potential issues *before* they impact users. For example, a sudden spike in error rates might indicate a failing component, prompting immediate investigation. Alerting systems are configured to notify the relevant teams immediately when critical thresholds are breached.

Q 17. Describe your understanding of different database systems.

My understanding of database systems covers a range of relational and NoSQL databases. I have extensive experience with relational databases like PostgreSQL and MySQL, including designing schemas, writing optimized SQL queries, and managing database performance. I’m also familiar with various NoSQL databases like MongoDB and Cassandra, understanding their strengths and weaknesses, and when to choose one over the other.

For instance, PostgreSQL’s ACID properties make it ideal for applications requiring high data integrity, such as financial systems. Conversely, MongoDB’s flexible schema is well-suited for applications with rapidly evolving data structures, like social media platforms. The choice of database depends entirely on the specific application requirements, including scalability needs, data structure complexity, and performance demands. My experience includes database replication, sharding, and optimization techniques to ensure high availability and performance.

Q 18. How do you approach debugging and troubleshooting deployment issues?

Debugging and troubleshooting deployment issues requires a systematic approach. My process typically involves the following steps:

- Reproduce the issue: First, I try to reproduce the problem consistently. This often involves carefully examining logs and collecting relevant data.

- Isolate the root cause: Once I can reproduce the issue, I use various tools and techniques (e.g., debuggers, logging, monitoring dashboards) to pinpoint the root cause. This might involve examining network traffic, analyzing database queries, or reviewing application logs.

- Implement a solution: Based on my understanding of the root cause, I develop and implement a solution. This may involve code changes, configuration tweaks, or infrastructure adjustments.

- Verify the fix: After implementing a solution, I thoroughly test it to ensure that it addresses the problem without introducing new issues.

- Document the issue and resolution: I document both the problem and the solution to prevent similar issues from occurring in the future. This includes adding to the knowledge base or updating relevant documentation.

For example, a recent deployment issue involved a slow database query. By analyzing the query execution plan and optimizing the database schema, we were able to significantly improve performance and resolve the deployment bottleneck.

Q 19. What is your experience with automated testing (e.g., unit, integration, system)?

Automated testing is a cornerstone of my development workflow. I have significant experience with unit, integration, and system-level automated testing. My approach prioritizes writing clear, concise, and maintainable tests using appropriate frameworks (as mentioned in Q 15). I follow best practices like using test-driven development (TDD) when feasible and aiming for high test coverage.

Unit testing focuses on individual functions or modules. I’ll use mocking to simulate external dependencies, ensuring that only the specific unit is tested. Integration testing verifies the interactions between various units or components. I might use a testing framework like pytest to orchestrate these tests. Finally, system testing involves testing the entire system as a complete entity, typically using end-to-end tests that mirror real-world scenarios.

I leverage CI/CD pipelines to automatically execute tests as part of the build and deployment process. This ensures early detection of bugs and reduces the risk of releasing faulty code into production. This automated approach is critical for maintaining high quality and accelerating development cycles.

Q 20. Explain your experience with performance tuning and optimization.

Performance tuning and optimization are crucial for ensuring applications run efficiently and scale effectively. My approach involves a multi-pronged strategy:

- Profiling: Identifying performance bottlenecks using profiling tools. These tools help to pinpoint slow functions or inefficient algorithms.

- Code Optimization: Refactoring code to improve efficiency. This may involve optimizing algorithms, reducing database queries, or improving caching strategies.

- Database Optimization: Optimizing database queries and schema design. This can involve indexing tables, using efficient query patterns, and optimizing database configurations.

- Caching: Implementing caching mechanisms to reduce the number of database calls or API requests.

- Load Testing: Simulating realistic user loads to identify performance bottlenecks under stress.

For example, I once optimized a slow web application by caching frequently accessed data in memory, reducing database load and improving response times by a factor of five. Load testing revealed the need for this optimization and allowed me to measure the effectiveness of the changes.
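
The in-memory caching described above can be sketched with `functools.lru_cache` from the standard library. `slow_lookup` is a hypothetical stand-in for a database query, and the call counter exists only to make the effect visible.

```python
from functools import lru_cache

CALLS = {"count": 0}  # counts how often the slow path actually runs


def slow_lookup(product_id):
    """Stand-in for an expensive database query."""
    CALLS["count"] += 1
    return f"product-{product_id}"


@lru_cache(maxsize=1024)
def cached_lookup(product_id):
    """Return the cached result when the same ID is requested again."""
    return slow_lookup(product_id)
```

Repeated calls with the same ID hit the cache instead of the database. The trade-off is staleness: when the underlying data changes, the cache has to be invalidated, for example with `cached_lookup.cache_clear()`.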

Q 21. How do you handle scaling issues in your deployments?

Handling scaling issues involves a combination of architectural choices and deployment strategies. My approach is guided by the specific needs of the application and the expected growth trajectory.

Techniques I’ve used include:

- Horizontal Scaling: Adding more servers to distribute the load. This approach leverages the power of multiple machines to handle increased traffic.

- Vertical Scaling: Upgrading existing servers with more powerful hardware. This approach increases the capacity of individual machines.

- Load Balancing: Distributing traffic across multiple servers to prevent overload. This ensures that no single server becomes a bottleneck.

- Microservices Architecture: Breaking down the application into smaller, independent services that can be scaled individually. This allows for granular control over resource allocation.

- Database Sharding: Distributing data across multiple database servers. This approach improves database scalability and performance.

- Caching: Caching frequently accessed data to reduce the load on servers and databases.

The choice of scaling strategy depends on various factors, including cost, complexity, and the nature of the application. For instance, a highly trafficked e-commerce platform might benefit from a microservices architecture with horizontal scaling and database sharding, whereas a less demanding application might only require vertical scaling.
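
Two of the techniques above, load balancing and database sharding, come down to simple selection rules. The sketch below shows a round-robin balancer and a hash-based shard picker; the class and function names are hypothetical, and production systems rely on dedicated load balancers and the database’s own sharding support.

```python
import hashlib
import itertools


class RoundRobinBalancer:
    """Hand out servers in a fixed rotation."""

    def __init__(self, servers):
        self._servers = list(servers)
        if not self._servers:
            raise ValueError("at least one server is required")
        self._cycle = itertools.cycle(self._servers)

    def next_server(self):
        """Return the server that should receive the next request."""
        return next(self._cycle)


def shard_for(key, shard_count):
    """Map a record key to a shard index in the range 0..shard_count-1."""
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    return int(digest, 16) % shard_count
```

One caveat with simple modulo sharding is that changing `shard_count` remaps most keys, which is why larger systems often use consistent hashing instead.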

Q 22. Describe your experience with different build tools (e.g., Maven, Gradle).

My experience encompasses a wide range of build tools, primarily Maven and Gradle, both essential for managing dependencies and automating the build process. Maven, with its XML-based configuration, offers a structured approach, particularly beneficial for larger projects needing strict dependency management. Its repository system simplifies dependency resolution. However, its XML configuration can become verbose and cumbersome for complex projects.

Gradle, on the other hand, uses a more flexible Groovy- or Kotlin-based DSL for configuration, providing greater power and customization. Its support for incremental builds significantly reduces build times, which is especially important in CI/CD pipelines. I’ve found Gradle’s build caching and parallel task execution particularly advantageous for large, multi-module projects. For instance, in a recent project involving microservices, Gradle’s ability to build individual services concurrently drastically shortened our build times, improving our overall delivery speed.

In essence, my choice between Maven and Gradle depends on the project’s complexity and requirements. For simpler projects, Maven’s structure offers sufficient control, while for complex, large-scale projects, Gradle’s flexibility and performance enhancements prove invaluable.

Q 23. Explain your understanding of Agile methodologies.

Agile methodologies, such as Scrum and Kanban, are at the core of my development philosophy. They emphasize iterative development, collaboration, and rapid adaptation to change. I’m particularly adept at working within Scrum frameworks, participating in sprint planning, daily stand-ups, sprint reviews, and retrospectives. The iterative nature of sprints allows for continuous feedback and improvement, ensuring the product aligns with evolving requirements. For example, in a past project, we initially underestimated the complexity of a specific feature. However, using Scrum’s iterative approach, we identified this during the first sprint and made adjustments accordingly, avoiding a major setback later in the project.

Beyond the specific frameworks, the core principles of Agile – collaboration, flexibility, and customer focus – are what I value most. They foster a dynamic and responsive development environment, resulting in higher-quality software delivered more efficiently.

Q 24. How do you collaborate with other teams (e.g., QA, operations) during the deployment process?

Collaboration with QA and operations teams is paramount for successful deployments. With QA, I ensure early involvement in the process, with regular testing throughout the development lifecycle, not just at the end. This proactive approach helps identify and resolve issues much earlier. I facilitate this by providing QA teams with access to staging environments that mirror production, ensuring that their testing closely replicates real-world scenarios.

My collaboration with operations typically involves joint planning sessions for deployments. This includes discussions on rollback strategies, monitoring plans, and defining success criteria. I also participate in post-deployment reviews with the operations team to identify areas for improvement in our deployment processes. For instance, by working closely with the operations team, we improved our deployment process by incorporating automated rollback mechanisms, significantly reducing downtime in case of unexpected issues.

Communication is key throughout; I make use of collaborative tools like Slack and Jira to maintain open communication, promptly addressing any concerns or roadblocks.

Q 25. What are some best practices for software deployment?

Several best practices guide successful software deployments. These include:

- Automated Deployments: Automating the deployment process reduces human error and increases consistency. Tools like Jenkins or GitLab CI/CD are invaluable here.

- Infrastructure as Code (IaC): Managing infrastructure using code (e.g., Terraform, Ansible) allows for reproducible and version-controlled infrastructure setups.

- Blue/Green Deployments or Canary Deployments: These strategies minimize downtime and risk. Blue/Green deploys a new version alongside the existing one, switching traffic once the new version is validated. Canary releases gradually roll out the new version to a subset of users.

- Rollback Strategy: Always have a plan to quickly revert to a previous stable version if problems arise.

- Comprehensive Monitoring: Real-time monitoring of key metrics is essential for early detection of issues.

- Thorough Testing: Rigorous testing in environments that closely mimic production ensures the application functions as expected.

Following these best practices enhances reliability, reduces risks, and increases the overall efficiency of the deployment process.
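To make the canary and rollback practices above concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a production tool: `run_canary`, `set_traffic`, and `error_rate` are hypothetical names, and in a real pipeline the two callbacks would be backed by your load balancer and monitoring system.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class CanaryResult:
    completed: bool        # True if the new version ended up with 100% of traffic
    final_percent: int     # share of traffic on the new version when the rollout stopped
    attempted: List[int]   # traffic percentages that were tried, in order


def run_canary(
    steps: List[int],
    set_traffic: Callable[[int], None],   # hypothetical hook: route N% to the new version
    error_rate: Callable[[], float],      # hypothetical hook: current error rate (0.0 to 1.0)
    max_error_rate: float = 0.01,
) -> CanaryResult:
    """Shift traffic to the new version step by step; roll back if errors spike."""
    if not steps or steps[-1] != 100:
        raise ValueError("steps must be non-empty and end at 100")

    attempted: List[int] = []
    for percent in steps:
        set_traffic(percent)
        attempted.append(percent)
        # A real rollout would wait here for a soak period before checking metrics.
        if error_rate() > max_error_rate:
            set_traffic(0)  # rollback: send all traffic back to the stable version
            return CanaryResult(False, 0, attempted)
    return CanaryResult(True, 100, attempted)
```

A call such as `run_canary([10, 50, 100], set_traffic, error_rate)` would widen exposure in three stages and revert as soon as the observed error rate exceeds the threshold. The step sizes and the 1% threshold are placeholder values to tune for your own system.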

Q 26. How do you stay up-to-date with the latest trends in software development and deployment?

Staying current is crucial in the rapidly evolving field of software development and deployment. I actively engage in several strategies to achieve this:

- Following industry blogs and publications: I regularly read sites like InfoQ and other dedicated publications to stay abreast of new technologies and best practices.

- Attending conferences and webinars: Participation in industry events provides valuable insights and networking opportunities.

- Contributing to open-source projects: This provides hands-on experience with new technologies and allows me to learn from others.

- Taking online courses and certifications: Platforms like Coursera and Udemy offer courses on the latest technologies and methodologies.

- Active participation in online communities: Engaging in online forums and discussions allows me to learn from peers and experts.

This multi-faceted approach keeps me informed about the latest trends, enabling me to adapt and adopt the best approaches for my work.

Q 27. Describe a time you had to troubleshoot a complex deployment issue. What was the problem, and how did you solve it?

During a recent deployment of a large e-commerce application, we encountered an unexpected issue where database connections were intermittently failing after the deployment. Initial investigation pointed towards a configuration problem, but after hours of debugging, we found that the problem stemmed from a subtle incompatibility between the new application version and the database driver. The new version used a slightly different connection string format, which wasn’t properly handled by the database connection pool.

The solution involved a three-step process:

- Identifying the root cause: Through careful log analysis and network monitoring, we pinpointed the communication failure between the application and the database.

- Rolling back: We rolled back the deployment to the previous stable version to mitigate further disruption while the hotfix was prepared.

- Implementing a hotfix and re-deploying: We developed a hotfix that corrected the connection string format in the application code, tested it thoroughly, and then re-deployed.

This incident highlighted the importance of thorough regression testing and the need for robust monitoring and rollback strategies. We improved our testing procedures by adding specific tests for database connection compatibility to prevent similar incidents in the future.
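A compatibility test of the kind mentioned above might look like the following sketch. It is not the check used in the incident: the answer does not name the database or the driver, so the URL-style format, the `SUPPORTED_SCHEMES` set, and the `validate_connection_string` helper are all assumptions made for illustration.

```python
from typing import List
from urllib.parse import urlsplit

# Assumption: the (hypothetical) connection pool only accepts this URL scheme.
SUPPORTED_SCHEMES = {"postgresql"}


def validate_connection_string(conn_str: str) -> List[str]:
    """Return a list of problems; an empty list means the string looks compatible."""
    problems: List[str] = []
    parts = urlsplit(conn_str)
    if parts.scheme not in SUPPORTED_SCHEMES:
        problems.append(f"unsupported scheme: {parts.scheme!r}")
    if not parts.hostname:
        problems.append("missing host")
    if not parts.path.strip("/"):
        problems.append("missing database name")
    return problems


def test_connection_string_is_pool_compatible() -> None:
    # In a real suite, the string would come from the new release's configuration.
    assert validate_connection_string("postgresql://app@db.internal:5432/shop") == []
```

Running a check like this in CI against the connection string produced by each release candidate could catch a format change before it reaches production, rather than after connections start failing.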

Q 28. What are some metrics you use to assess the success of a deployment?

Several key metrics help assess deployment success. These include:

- Deployment time: How long did the deployment take?

- Downtime: Was there any downtime, and if so, how long?

- Error rate: Did the proportion of failed requests rise during or after the deployment compared with the pre-deployment baseline?

- Rollback frequency: How often was a rollback necessary?

- User experience metrics: Were there any significant changes in key performance indicators (KPIs) such as page load times or conversion rates after the deployment?

- Resource utilization: Did resource consumption (CPU, memory, network) change significantly after deployment?

By carefully tracking these metrics, we can identify areas for improvement in our deployment processes and continuously enhance our efficiency and reliability.
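As a rough illustration of how several of these metrics could be computed, the sketch below aggregates a list of deployment records. The `Deployment` record and its field names are hypothetical; in practice the numbers would come from your CI/CD system and monitoring stack.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Deployment:
    duration_s: float    # how long the deployment took, in seconds
    downtime_s: float    # user-visible downtime, in seconds
    rolled_back: bool    # whether the deployment had to be reverted
    requests: int        # requests served in the post-deployment window
    errors: int          # failed requests in the same window


def summarize(deployments: List[Deployment]) -> Dict[str, float]:
    """Aggregate deployment time, downtime, rollback frequency, and error rate."""
    if not deployments:
        raise ValueError("need at least one deployment record")

    count = len(deployments)
    total_requests = sum(d.requests for d in deployments)
    total_errors = sum(d.errors for d in deployments)
    return {
        "mean_duration_s": sum(d.duration_s for d in deployments) / count,
        "total_downtime_s": sum(d.downtime_s for d in deployments),
        "rollback_rate": sum(1 for d in deployments if d.rolled_back) / count,
        # Guard against division by zero when no traffic was recorded.
        "error_rate": total_errors / total_requests if total_requests else 0.0,
    }
```

Tracking these values per release makes trends visible, for example a rollback rate that creeps upward over several sprints.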

Key Topics to Learn for Software Development and Deployment Interview

- Version Control Systems (e.g., Git): Understanding branching strategies, merging, conflict resolution, and best practices for collaborative development is crucial. Practical application: Explain how you’ve used Git to manage a complex project with multiple developers.

- Software Development Methodologies (e.g., Agile, Waterfall): Knowing the strengths and weaknesses of different methodologies and how they impact development and deployment processes is key. Practical application: Describe your experience working within an Agile framework, highlighting your contributions to sprint planning and execution.

- Continuous Integration/Continuous Deployment (CI/CD): Demonstrate understanding of automating build, test, and deployment pipelines. Practical application: Explain how you’ve implemented or improved a CI/CD pipeline in a past project.

- Cloud Platforms (e.g., AWS, Azure, GCP): Familiarity with at least one major cloud platform, including its services for deployment and infrastructure management, is highly beneficial. Practical application: Discuss your experience deploying applications to a cloud environment, addressing scalability and security considerations.

- Containerization and Orchestration (e.g., Docker, Kubernetes): Understanding container technologies and their role in simplifying deployment and scaling applications is important. Practical application: Explain how you’ve used Docker to create and manage containerized applications.

- Testing and Debugging: Showcase your proficiency in writing unit tests, integration tests, and debugging techniques. Practical application: Describe your approach to identifying and resolving bugs in a complex system.

- Security Best Practices: Demonstrate awareness of security vulnerabilities and best practices for securing applications throughout the development lifecycle. Practical application: Explain how you incorporated security considerations into a past project.

Next Steps

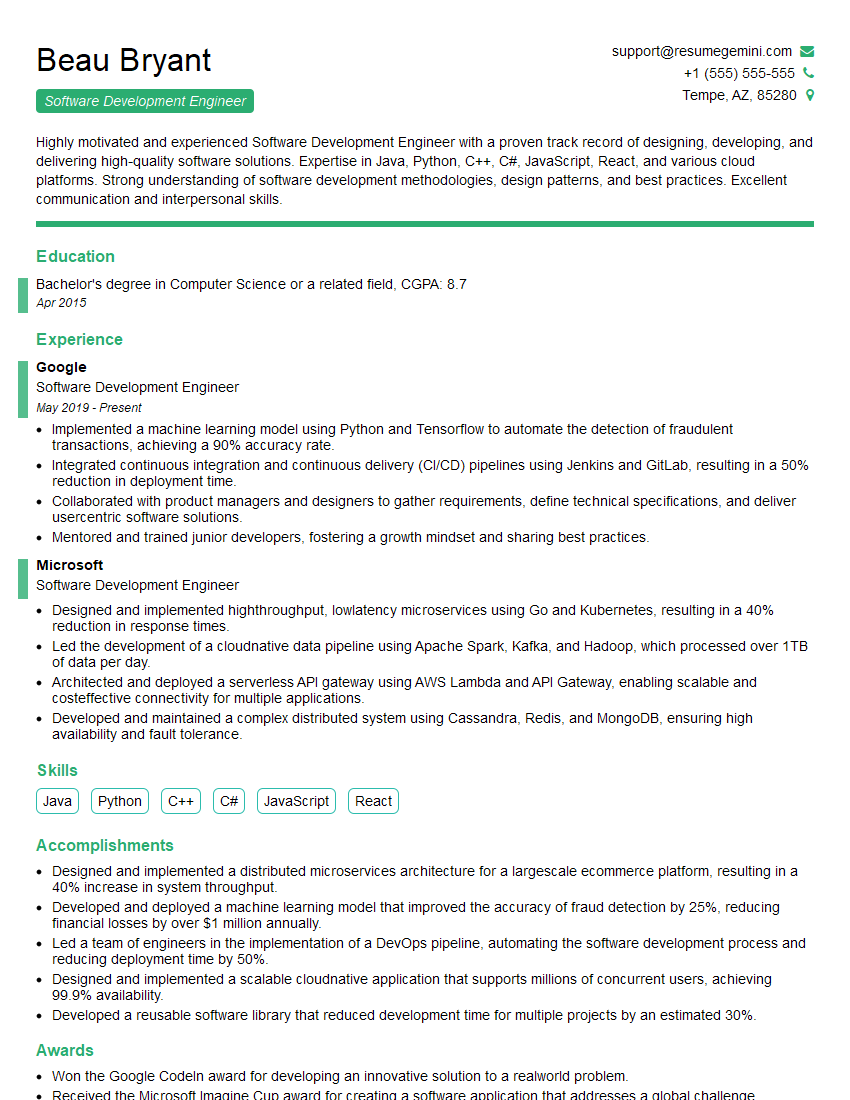

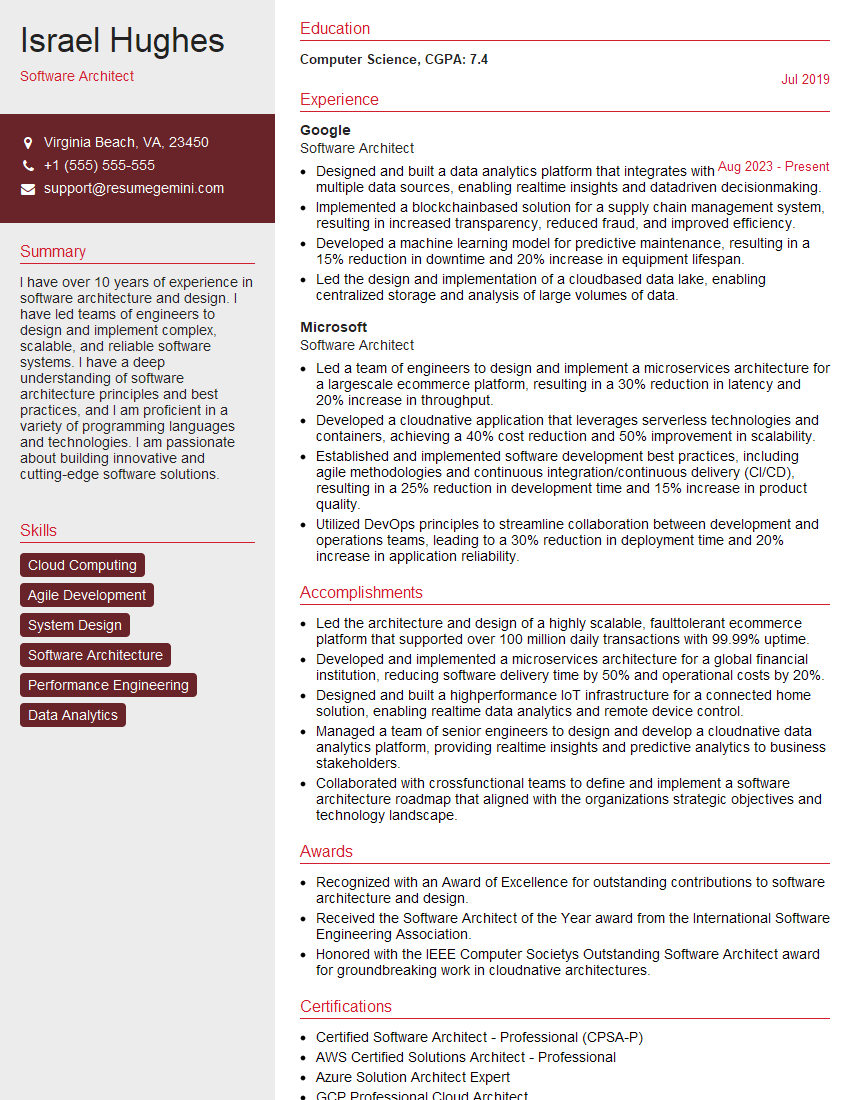

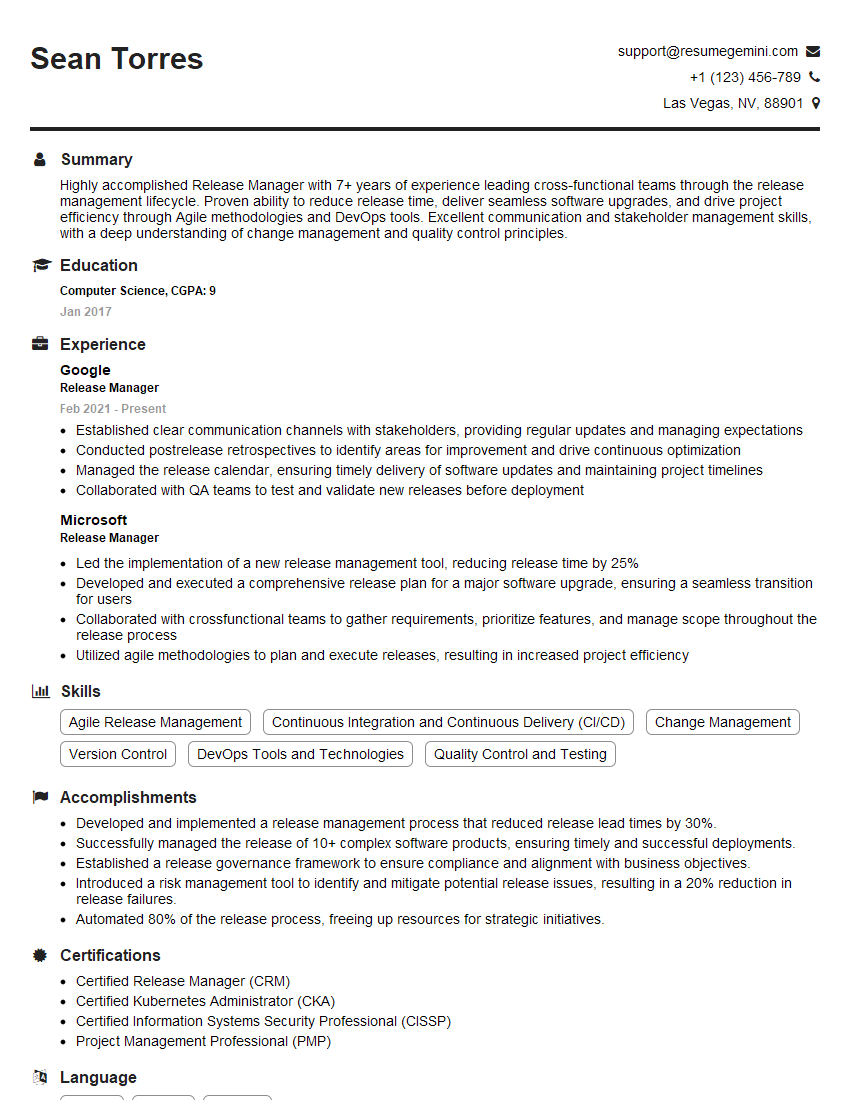

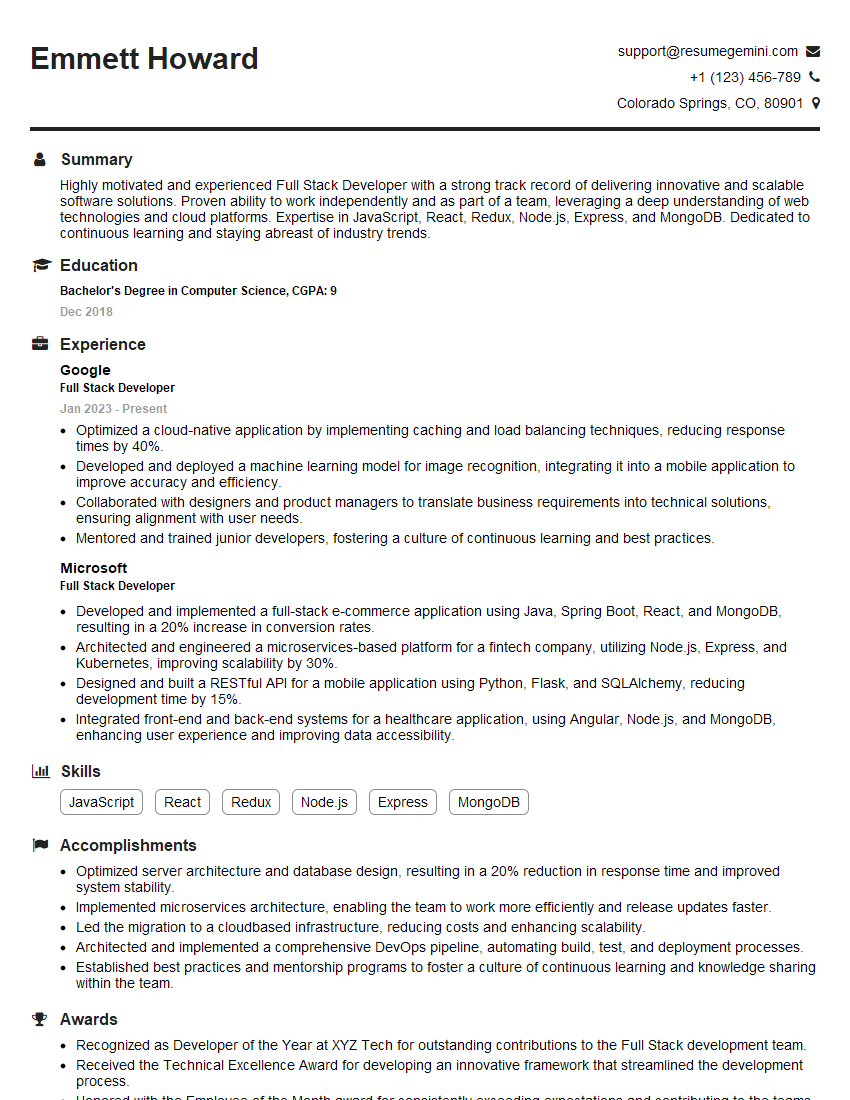

Mastering Software Development and Deployment is paramount for career advancement in this rapidly evolving field. A strong understanding of these concepts significantly enhances your marketability and opens doors to exciting opportunities. To further boost your job prospects, creating an ATS-friendly resume is crucial. ResumeGemini is a trusted resource to help you build a professional and impactful resume that will catch the eye of recruiters. We provide examples of resumes tailored to Software Development and Deployment to help you get started. Take the next step towards your dream career today!
