Interviews are opportunities to demonstrate your expertise, and this guide is here to help you shine. Explore the essential Strong understanding of data structures and algorithms interview questions that employers frequently ask, paired with strategies for crafting responses that set you apart from the competition.

Questions Asked in Strong understanding of data structures and algorithms Interview

Q 1. Explain the difference between a stack and a queue.

Stacks and queues are both linear data structures used to store and manage collections of elements, but they differ significantly in how elements are added and removed. Think of a stack like a stack of plates: you can only add (push) a new plate to the top, and you can only remove (pop) a plate from the top – this is known as Last-In, First-Out (LIFO). A queue, on the other hand, is like a line at a store: you add (enqueue) elements to the rear and remove (dequeue) them from the front – First-In, First-Out (FIFO).

- Stack: LIFO. Imagine a call stack in a programming language, tracking function calls; the last function called is the first to return.

- Queue: FIFO. Think of a printer queue; the first job submitted is the first to be printed.

Q 2. What is the time complexity of searching in a sorted array?

Searching a sorted array can be done very efficiently using binary search. Binary search repeatedly divides the search interval in half. If the target value is less than the middle element, the search continues in the lower half; otherwise, it continues in the upper half. This halving process continues until the target value is found or the search interval is empty.

The time complexity of binary search is O(log n), where n is the number of elements in the array. This is significantly faster than linear search (O(n)), which checks each element one by one. For example, searching a million-element sorted array would take approximately 20 steps with binary search instead of a million steps with linear search.

Q 3. Describe different types of tree data structures.

Trees are hierarchical data structures with a root node and branches connecting nodes. Several types of tree data structures exist, each optimized for different purposes:

- Binary Tree: Each node has at most two children (left and right). Binary search trees are a special type of binary tree where the left subtree contains only nodes with smaller values than the parent, and the right subtree contains only nodes with larger values.

- Binary Search Tree (BST): A binary tree where the value of every node in the left subtree is less than the value of its parent, and the value of every node in the right subtree is greater than the value of its parent. This makes searching, insertion, and deletion efficient.

- AVL Tree: A self-balancing BST, ensuring that the height of the tree remains balanced to maintain efficient search, insertion, and deletion operations (O(log n)).

- Red-Black Tree: Another self-balancing BST with slightly looser balance constraints than AVL trees. It offers a good balance between performance and the complexity of maintaining balance.

- B-Tree: Designed for disk-based data storage, where efficient retrieval is crucial. Nodes can have multiple children.

- Trie (Prefix Tree): Used for efficient retrieval of strings based on prefixes. Each node represents a character, and paths represent strings.

Q 4. What are the advantages and disadvantages of using linked lists?

Linked lists are linear data structures where elements are stored in nodes, and each node points to the next node in the sequence.

- Advantages:

- Dynamic size: Linked lists can grow or shrink easily during runtime.

- Efficient insertions and deletions: Inserting or deleting a node only requires changing a few pointers, making these operations O(1) if you have a pointer to the node before the insertion/deletion point.

- Memory efficient for frequent insertions/deletions: Unlike arrays, linked lists don’t require contiguous memory allocation, reducing memory waste.

- Disadvantages:

- Random access is not possible: You need to traverse the list from the beginning to reach a specific node, making access time O(n).

- Extra memory overhead: Each node requires extra memory to store the pointer to the next node.

- Traversal is slower compared to arrays.

Q 5. Explain the concept of Big O notation.

Big O notation is a way to describe the performance or complexity of an algorithm. It focuses on how the runtime or space requirements of an algorithm grow as the input size (n) increases. It doesn’t give the exact runtime, but rather describes the growth rate.

- O(1): Constant time. The runtime is independent of the input size (e.g., accessing an array element).

- O(log n): Logarithmic time. The runtime increases logarithmically with the input size (e.g., binary search).

- O(n): Linear time. The runtime increases linearly with the input size (e.g., linear search).

- O(n log n): Linearithmic time. The runtime increases proportionally to n multiplied by the logarithm of n (e.g., merge sort).

- O(n2): Quadratic time. The runtime increases proportionally to the square of the input size (e.g., bubble sort).

- O(2n): Exponential time. The runtime doubles with each addition to the input size (e.g., finding all subsets of a set).

Understanding Big O notation is critical for choosing the right algorithm for a given task, especially when dealing with large datasets.

Q 6. How would you implement a binary search tree?

A binary search tree (BST) is a binary tree where the value of each node is greater than all values in its left subtree and less than all values in its right subtree. This property allows for efficient searching, insertion, and deletion operations. Here’s a Python implementation:

class Node:

def __init__(self, data):

self.data = data

self.left = None

self.right = None

class BST:

def __init__(self):

self.root = None

def insert(self, data):

if self.root is None:

self.root = Node(data)

else:

self._insert_recursive(self.root, data)

def _insert_recursive(self, node, data):

if data < node.data:

if node.left is None:

node.left = Node(data)

else:

self._insert_recursive(node.left, data)

else:

if node.right is None:

node.right = Node(data)

else:

self._insert_recursive(node.right, data)

# ... (search, delete methods would go here)This code provides a basic framework. Complete BST implementation would include methods for searching, deleting nodes, and potentially balancing the tree to maintain efficient performance.

Q 7. Describe different sorting algorithms and their time complexities.

Many sorting algorithms exist, each with different time complexities. Here are a few examples:

- Bubble Sort: Repeatedly steps through the list, compares adjacent elements and swaps them if they are in the wrong order. Simple but inefficient, with a time complexity of O(n2).

- Insertion Sort: Builds the final sorted array one item at a time. Efficient for small datasets or nearly sorted data, with a time complexity of O(n2) in the worst case, but O(n) in the best case.

- Selection Sort: Repeatedly finds the minimum element from the unsorted part and puts it at the beginning. Also O(n2) time complexity.

- Merge Sort: A divide-and-conquer algorithm that recursively divides the list into smaller sublists until each sublist contains only one element, then repeatedly merges the sublists to produce new sorted sublists until there is only one sorted list remaining. Efficient, with a time complexity of O(n log n).

- Quick Sort: Another divide-and-conquer algorithm that picks an element as a pivot and partitions the other elements into two sub-arrays, according to whether they are less than or greater than the pivot. Average time complexity is O(n log n), but can degrade to O(n2) in the worst case (though this is rare with good pivot selection).

- Heap Sort: Uses a heap data structure to sort an array. Guaranteed O(n log n) time complexity.

The choice of sorting algorithm depends on factors like the size of the data, whether the data is nearly sorted, and memory constraints.

Q 8. What is a hash table, and how does it work?

A hash table, also known as a hash map, is a data structure that implements an associative array abstract data type, a structure that can map keys to values. It uses a hash function to compute an index into an array of buckets or slots, from which the desired value can be found.

Imagine a library catalog. Instead of searching through every book, you use the book's title (the key) to quickly find its location (the value) on the shelf. The hash function is like the cataloging system; it takes the title and determines the shelf location.

Here's how it works:

- Hash Function: This function takes a key as input and returns an integer (the hash code), which is used as an index into the array.

- Collision Handling: Because multiple keys might map to the same index (a collision), hash tables employ strategies like chaining (storing colliding keys in a linked list at that index) or open addressing (probing for the next available slot).

- Lookup, Insertion, Deletion: Operations are generally O(1) on average, making hash tables incredibly efficient for these tasks, provided there aren't excessive collisions.

Example (Python):

Python

dictionary = {"apple": 1, "banana": 2, "cherry": 3}

print(dictionary["banana"]) # Output: 2Hash tables are used extensively in databases, caches, symbol tables in compilers, and any application requiring fast key-value lookups.

Q 9. Explain the concept of graph traversal (BFS and DFS).

Graph traversal refers to the systematic exploration of all the vertices and edges in a graph. Breadth-First Search (BFS) and Depth-First Search (DFS) are two common algorithms for this task.

Breadth-First Search (BFS): Think of BFS as exploring a graph level by level. It starts at a root node and visits all its neighbors before moving on to their neighbors. It uses a queue data structure to manage the order of visits.

Depth-First Search (DFS): DFS explores a graph by going as deep as possible along each branch before backtracking. It uses a stack (implicitly through recursion or explicitly) to keep track of the nodes to visit.

Example (Conceptual): Imagine you're exploring a maze. BFS is like systematically checking each room in a given floor before going to the next floor. DFS is like exploring one corridor completely until you hit a dead end, then going back and trying another corridor.

Code Snippet (Python - DFS using recursion):

Python

def dfs(graph, node, visited):

visited[node] = True

print(node)

for neighbor in graph[node]:

if not visited[neighbor]:

dfs(graph, neighbor, visited)BFS is useful for finding the shortest path in unweighted graphs, while DFS is used in topological sorting, cycle detection, and finding connected components.

Q 10. How would you detect a cycle in a linked list?

To detect a cycle in a linked list, we can use the Floyd's Tortoise and Hare algorithm (also known as the fast and slow pointer approach).

Floyd's Algorithm:

- Two Pointers: Maintain two pointers, one (the tortoise) moving one node at a time, and the other (the hare) moving two nodes at a time.

- Iteration: Move both pointers simultaneously through the linked list.

- Cycle Detection: If there's a cycle, the hare will eventually lap the tortoise (they will meet).

- Cycle Point: Once the pointers meet, reset the tortoise to the head of the list. Move both pointers one node at a time. The point where they meet again is the beginning of the cycle.

Code Snippet (Python):

Python

def has_cycle(head):

tortoise = head

hare = head

while hare and hare.next:

tortoise = tortoise.next

hare = hare.next.next

if tortoise == hare:

return True

return FalseThis algorithm has a time complexity of O(n) and space complexity of O(1), making it highly efficient for detecting cycles in linked lists.

Q 11. What is dynamic programming, and when is it applicable?

Dynamic programming is an algorithmic technique that solves optimization problems by breaking them down into smaller overlapping subproblems, solving each subproblem only once, and storing their solutions to avoid redundant computations.

When is it applicable? Dynamic programming is applicable when a problem exhibits two key characteristics:

- Overlapping Subproblems: The problem can be broken down into smaller subproblems that are reused multiple times.

- Optimal Substructure: The optimal solution to the problem can be constructed from the optimal solutions of its subproblems.

Example (Fibonacci Sequence): Calculating the nth Fibonacci number using recursion involves redundant calculations. Dynamic programming avoids this by storing previously calculated Fibonacci numbers.

Code Snippet (Python - Dynamic Programming):

Python

def fibonacci_dp(n):

fib = [0, 1]

for i in range(2, n + 1):

fib.append(fib[i - 1] + fib[i - 2])

return fib[n]Dynamic programming is used in various applications, such as shortest path algorithms (e.g., Dijkstra's algorithm), sequence alignment in bioinformatics, and resource allocation problems.

Q 12. Explain different graph representations (adjacency matrix, adjacency list).

Graphs can be represented in several ways. Two common representations are the adjacency matrix and the adjacency list.

Adjacency Matrix: An adjacency matrix is a two-dimensional array where each element matrix[i][j] represents the edge between vertex i and vertex j. A value of 1 indicates an edge, and 0 indicates no edge. This representation is suitable for dense graphs (graphs with many edges).

Adjacency List: An adjacency list represents a graph as an array of linked lists or other dynamic sets. Each element in the array corresponds to a vertex, and the linked list contains all vertices adjacent to that vertex. This representation is suitable for sparse graphs (graphs with relatively few edges).

Example: Consider a graph with vertices A, B, C, and edges A-B, A-C, B-C.

Adjacency Matrix:

A B C

A 0 1 1

B 1 0 1

C 1 1 0Adjacency List:

A: [B, C]

B: [A, C]

C: [A, B]The choice of representation depends on the specific application and the characteristics of the graph. For example, checking if an edge exists between two vertices is faster with an adjacency matrix (O(1)), while iterating through all neighbors of a vertex is faster with an adjacency list (O(degree of the vertex)).

Q 13. How would you implement a priority queue?

A priority queue is an abstract data type similar to a regular queue, but where each element has an associated priority. Elements are dequeued in order of priority, with the highest-priority element dequeued first.

Implementation: Priority queues can be implemented using various data structures. A common and efficient choice is a min-heap (or max-heap, depending on the priority definition) data structure.

Min-Heap Implementation: A min-heap is a binary tree where the value of each node is less than or equal to the values of its children. This property ensures that the root node always holds the minimum element, enabling efficient retrieval of the highest priority element.

Code Snippet (Conceptual Python - using heapq module): The Python `heapq` module provides efficient min-heap operations.

Python

import heapq

heap = []

heapq.heappush(heap, (5, 'task5'))

heapq.heappush(heap, (2, 'task2'))

heapq.heappush(heap, (8, 'task8'))

print(heapq.heappop(heap)) #Output: (2, 'task2')Priority queues are used in various applications, including task scheduling, event-driven simulations, Dijkstra's algorithm (for finding shortest paths), and Huffman coding (data compression).

Q 14. What are heaps, and what are their applications?

Heaps are specialized tree-based data structures that satisfy the heap property. A min-heap satisfies the property that the value of each node is less than or equal to the value of its children. A max-heap satisfies the opposite property.

Types of Heaps: Common types include min-heaps and max-heaps, as mentioned above. Binary heaps are the most common type, using a binary tree structure.

Applications:

- Priority Queues: As discussed earlier, heaps are an efficient implementation for priority queues.

- Heap Sort: Heap sort is an efficient sorting algorithm that uses a heap to sort elements in O(n log n) time.

- Finding the kth smallest (or largest) element: Heaps are efficient for finding the kth smallest or largest element in a collection without fully sorting the data.

- Graph Algorithms: Heaps are used in graph algorithms like Dijkstra's algorithm and Prim's algorithm.

Example (Min-Heap): Imagine a hospital emergency room. Patients are prioritized based on the severity of their condition. A min-heap could be used to efficiently manage patients, ensuring that the patient with the highest priority is treated first. The root of the heap would always represent the patient with the least critical condition (lowest priority) which needs to be treated last.

Q 15. What are self-balancing trees (AVL, Red-Black), and why are they used?

Self-balancing trees, like AVL and Red-Black trees, are special types of binary search trees that automatically maintain a balanced structure during insertions and deletions. This balance ensures that the tree's height remains relatively small, preventing worst-case scenarios where search, insertion, and deletion operations could take O(n) time (linear time, where n is the number of nodes). In a balanced tree, these operations typically have a time complexity of O(log n) (logarithmic time), making them much more efficient.

AVL Trees: These trees maintain balance by ensuring that for every node, the height difference between its left and right subtrees is at most 1. This is achieved through rotations (single or double) performed during insertions and deletions to rebalance the tree.

Red-Black Trees: These trees use a more relaxed balancing approach compared to AVL trees. Each node is colored either red or black, and the coloring scheme ensures that the tree remains relatively balanced. The rules for coloring help prevent the tree from becoming too skewed. Insertions and deletions require recoloring and rotations.

Why are they used? Self-balancing trees are crucial when efficiency is paramount. Imagine a database system storing millions of records. Using a regular binary search tree, insertions and deletions could lead to a highly unbalanced tree, drastically slowing down searches. Self-balancing trees prevent this degradation in performance, guaranteeing efficient operations even with large datasets. They're commonly found in databases, operating systems, and other performance-critical applications.

Career Expert Tips:

- Ace those interviews! Prepare effectively by reviewing the Top 50 Most Common Interview Questions on ResumeGemini.

- Navigate your job search with confidence! Explore a wide range of Career Tips on ResumeGemini. Learn about common challenges and recommendations to overcome them.

- Craft the perfect resume! Master the Art of Resume Writing with ResumeGemini's guide. Showcase your unique qualifications and achievements effectively.

- Don't miss out on holiday savings! Build your dream resume with ResumeGemini's ATS optimized templates.

Q 16. Describe the difference between depth-first search and breadth-first search.

Depth-First Search (DFS) and Breadth-First Search (BFS) are two fundamental graph traversal algorithms. They differ primarily in the order they explore nodes.

Depth-First Search (DFS): Think of DFS as exploring a maze by going as deep as possible along one path before backtracking. It uses a stack (implicitly through recursion or explicitly) to keep track of the nodes to visit. It explores all the descendants of a node before moving to the siblings.

Example (using recursion):

function dfs(node) {

visit(node);

for each neighbor in node.neighbors {

dfs(neighbor);

}

}

Breadth-First Search (BFS): BFS is like exploring a maze level by level. It uses a queue to keep track of nodes. It visits all the neighbors of a node before moving to their neighbors. It explores all nodes at the same depth before moving to nodes at the next depth.

Example (using a queue):

function bfs(startNode) {

let queue = [startNode];

while (queue.length > 0) {

let node = queue.shift();

visit(node);

for each neighbor in node.neighbors {

queue.push(neighbor);

}

}

}

Difference Summarized:

- DFS uses a stack (recursion or explicit), BFS uses a queue.

- DFS explores depth first, BFS explores breadth first.

- DFS is generally better for finding paths (e.g., finding a specific item in a directory), while BFS is useful for finding the shortest path (e.g., in navigation systems).

Q 17. Explain the concept of recursion and its applications.

Recursion is a powerful programming technique where a function calls itself within its own definition. Think of it as a set of Russian nesting dolls; each doll contains a smaller version of itself. The process continues until a base case is reached, which stops the recursion and allows the function calls to unwind, returning values back up the chain.

How it works: A recursive function has two essential parts:

- Base Case: This is the condition that stops the recursion. Without a base case, the function would call itself indefinitely, leading to a stack overflow error.

- Recursive Step: This is where the function calls itself, typically with a smaller or modified version of the input.

Applications:

- Tree Traversal: DFS and other tree algorithms are naturally recursive.

- Divide and Conquer Algorithms: Algorithms like merge sort and quicksort use recursion to break down a problem into smaller subproblems.

- Factorial Calculation: Calculating the factorial of a number (n!) is a classic recursive example.

- Graph Algorithms: Many graph algorithms like DFS and topological sort use recursion.

Example (Factorial):

function factorial(n) {

if (n === 0) { // Base case

return 1;

} else {

return n * factorial(n - 1); // Recursive step

}

}

Careful consideration is needed when implementing recursion to avoid stack overflow issues, especially with large inputs. Iteration can sometimes provide a more efficient and memory-friendly alternative.

Q 18. How do you handle memory leaks in your code?

Memory leaks occur when your program allocates memory but fails to release it when it's no longer needed. Over time, this leads to increased memory consumption, potentially causing the program to crash or slow down significantly. Handling memory leaks requires careful attention to resource management.

Strategies to prevent memory leaks:

- Explicit deallocation: In languages like C and C++, use

free()ordeleteto explicitly release dynamically allocated memory. Failure to do so leads directly to leaks. - Garbage collection: Languages like Java, Python, and JavaScript have automatic garbage collection, which reclaims memory that is no longer referenced. While this greatly reduces the risk of memory leaks, it's still possible to create circular references or hold onto large objects unnecessarily.

- Reference counting: Some languages and libraries use reference counting to track the number of references to an object. When the count drops to zero, the object is automatically deallocated.

- Smart pointers (C++): Smart pointers automatically manage the lifetime of dynamically allocated objects, preventing leaks by automatically releasing the memory when the smart pointer goes out of scope.

- Resource management classes: Create custom classes that manage resources (like files or network connections) and ensure proper cleanup in destructors or

finallyblocks (in languages like Java or Python). - Regular memory profiling: Use memory profiling tools to identify memory leaks. These tools analyze your program's memory usage to pinpoint areas where memory isn't being released.

Example (C++ with smart pointer):

#include

int main() {

std::unique_ptr ptr = std::make_unique(10); // Memory automatically released when ptr goes out of scope

// ... use ptr ...

return 0;

}

By using appropriate techniques for your programming language, you can minimize the risks of memory leaks and build robust, reliable applications.

Q 19. What are the different ways to implement a cache?

Caches are used to store frequently accessed data in a faster, smaller storage location closer to the processor than main memory. This dramatically speeds up access times. There are several ways to implement a cache:

1. Direct Mapped Cache: The simplest implementation. Each memory location maps to only one cache location. If there's a collision (multiple memory locations mapping to the same cache location), a replacement policy (like FIFO or LRU) is needed.

2. Fully Associative Cache: Any memory location can be placed in any cache location. This offers greater flexibility but requires more complex hardware for address translation.

3. Set Associative Cache: A compromise between direct-mapped and fully associative. Memory locations are mapped to a set of cache locations. Within a set, any location can hold any memory block. This balances the cost and efficiency.

Cache Replacement Policies: When a cache is full and a new block needs to be stored, a replacement policy determines which existing block to evict. Common policies include:

- First-In, First-Out (FIFO): Replaces the oldest block.

- Least Recently Used (LRU): Replaces the block that hasn't been accessed in the longest time. Generally provides better performance.

- Least Frequently Used (LFU): Replaces the block accessed least frequently.

The choice of cache implementation and replacement policy depends on factors like cost, performance requirements, and available hardware.

Q 20. Explain different searching algorithms (linear, binary, etc.)

Searching algorithms are used to find a specific element within a collection of data. The efficiency of the algorithm often depends on whether the data is sorted.

1. Linear Search: This is the simplest algorithm. It sequentially checks each element in the collection until the target element is found or the end of the collection is reached. It has a time complexity of O(n) (linear time), where n is the number of elements.

2. Binary Search: This algorithm works only on sorted data. It repeatedly divides the search interval in half. If the target element is less than the middle element, the search continues in the lower half; otherwise, it continues in the upper half. This continues until the target element is found or the search interval is empty. Binary search has a time complexity of O(log n) (logarithmic time), making it significantly faster than linear search for large datasets.

3. Jump Search: An improvement over linear search, particularly for large arrays. It jumps ahead by a fixed step size and then performs a linear search in the subarray. It offers a time complexity of O(√n).

4. Interpolation Search: Similar to binary search but instead of dividing the array into halves, it estimates the position of the target element based on its value relative to the minimum and maximum values in the search interval. It works well for uniformly distributed data and has an average time complexity of O(log log n).

5. Hash Table Search: Hash tables use a hash function to map keys to indices in an array. Searching involves computing the hash of the key and directly accessing the corresponding array index. In the best case, this offers O(1) (constant time) search complexity, but collisions (multiple keys mapping to the same index) can degrade performance.

The choice of searching algorithm depends on the size of the dataset, whether it's sorted, and the frequency of searches.

Q 21. Design a data structure for a specific problem (e.g., LRU cache).

Let's design a data structure for an LRU (Least Recently Used) cache. The key requirement is to efficiently track the least recently used item for eviction when the cache is full. A common approach uses a combination of a doubly linked list and a hash map (dictionary).

Doubly Linked List: The linked list maintains the order of elements based on recency. The most recently used item is at the head, and the least recently used item is at the tail.

Hash Map: The hash map maps keys to nodes in the doubly linked list. This allows for O(1) lookup of an item's location in the list.

Operations:

- get(key):

- Look up the key in the hash map.

- If found, move the corresponding node to the head of the linked list (making it the most recently used).

- Return the value associated with the key.

- If not found, return -1 (or some appropriate indicator).

- put(key, value):

- If the cache is full, remove the tail node (LRU) from the linked list and remove its corresponding entry from the hash map.

- Create a new node with the key and value.

- Add the new node to the head of the linked list.

- Update the hash map with the key and the new node.

Example (Conceptual Python):

class Node:

def __init__(self, key, value):

self.key = key

self.value = value

self.prev = None

self.next = None

class LRUCache:

def __init__(self, capacity):

self.capacity = capacity

self.cache = {} # Hash map

self.head = Node(0, 0) # Dummy head

self.tail = Node(0, 0) # Dummy tail

self.head.next = self.tail

self.tail.prev = self.head

# ... (Implementation of get and put methods would follow here) ...

This combined data structure provides efficient O(1) time complexity for both get and put operations, crucial for a well-performing LRU cache.

Q 22. What is the time and space complexity of merge sort?

Merge Sort is a divide-and-conquer algorithm that works by recursively breaking down a list into smaller sublists until each sublist contains only one element. Then, it repeatedly merges the sublists to produce new sorted sublists until there is only one sorted list remaining.

Time Complexity: O(n log n) in all cases (best, average, and worst). This is because the algorithm divides the problem in half at each step (log n) and performs linear work (n) during the merge step. Think of it like efficiently sorting a deck of cards by repeatedly splitting it in half and merging the sorted halves.

Space Complexity: O(n). Merge sort requires linear extra space because it needs to create auxiliary arrays to store the sublists during the merging process. This is because it's not an in-place sorting algorithm.

Q 23. What is the time and space complexity of quicksort?

Quicksort, another divide-and-conquer algorithm, selects a 'pivot' element and partitions the other elements into two sub-arrays, according to whether they are less than or greater than the pivot. The sub-arrays are then recursively sorted.

Time Complexity: Average and best case: O(n log n). Worst case: O(n2). The worst-case scenario happens when the pivot selection consistently results in highly unbalanced partitions (e.g., always picking the smallest or largest element). Imagine trying to sort a deck of cards where you always pick the lowest card as the pivot – that's terribly inefficient!

Space Complexity: Average case: O(log n) due to the recursive calls. Worst case: O(n) if the recursion depth becomes linear (highly unbalanced partitions). Quicksort is generally considered an in-place algorithm, meaning it doesn't require significant extra memory.

Q 24. How would you reverse a linked list?

Reversing a linked list involves iteratively changing the 'next' pointers of each node. There are a few approaches, but a common and efficient one is iterative reversal using three pointers.

Algorithm:

- Initialize three pointers:

prev(initiallyNULL),curr(points to the head), andnext(temporary). - Iterate through the list:

- Store the

nextnode innext. - Reverse the

currnode's pointer:curr->next = prev. - Move the pointers forward:

prev = currandcurr = next. - Repeat until

currisNULL.

Example (C++):

struct Node { int data; Node *next; };

Node* reverseList(Node* head) {

Node* prev = nullptr;

Node* curr = head;

Node* next;

while (curr != nullptr) {

next = curr->next;

curr->next = prev;

prev = curr;

curr = next;

}

return prev; // prev now points to the new head

}Q 25. How would you implement a LRU cache?

An LRU (Least Recently Used) cache is a data structure that stores a fixed number of items. When the cache is full and a new item needs to be added, the least recently used item is evicted. This is commonly implemented using a combination of a doubly linked list and a hash map.

Implementation:

- Doubly Linked List: Maintains the order of items, with the most recently used at the head and the least recently used at the tail.

- Hash Map: Provides constant-time (O(1)) lookup for each item, mapping keys to nodes in the doubly linked list.

When an item is accessed, it's moved to the head of the list. When the cache is full, the tail node is removed.

Example (Conceptual): Imagine a coffee shop with limited counter space (cache size). The most recently ordered coffees are placed at the front, while the oldest are removed to make space for new orders.

Q 26. Given two sorted arrays, how would you merge them efficiently?

Merging two sorted arrays efficiently can be done using a technique similar to the merging step in merge sort. We iterate through both arrays, comparing elements and placing the smaller one into a new merged array.

Algorithm:

- Create a new array to store the merged result.

- Use two pointers, one for each input array, initially pointing to the beginning.

- Compare the elements at the pointers. Add the smaller element to the merged array and advance the corresponding pointer.

- Repeat until one of the input arrays is exhausted.

- Copy the remaining elements from the non-exhausted array to the merged array.

Example (Python):

def merge_sorted_arrays(arr1, arr2):

merged = []

i = j = 0

while i < len(arr1) and j < len(arr2):

if arr1[i] < arr2[j]:

merged.append(arr1[i])

i += 1

else:

merged.append(arr2[j])

j += 1

merged.extend(arr1[i:])

merged.extend(arr2[j:])

return mergedQ 27. Write a function to find the kth largest element in an array.

Finding the kth largest element can be done efficiently using a min-heap data structure. A min-heap keeps track of the smallest k elements encountered so far.

Algorithm:

- Create a min-heap of size k.

- Iterate through the array:

- If the current element is greater than the root of the min-heap (the smallest element currently in the heap), replace the root with the current element and heapify (re-establish the min-heap property).

- After processing the entire array, the root of the min-heap will be the kth largest element.

Example (Python using heapq module):

import heapq

def find_kth_largest(nums, k):

return heapq.nlargest(k, nums)[-1]This leverages Python's built-in `heapq` module for efficient heap operations. The `nlargest` function finds the k largest elements, and we take the last one (the kth largest).

Key Topics to Learn for Strong Understanding of Data Structures and Algorithms Interviews

- Arrays and Strings: Understanding manipulation, searching, and sorting techniques. Practical applications include optimizing database queries and processing large datasets.

- Linked Lists: Mastering singly, doubly, and circular linked lists. Practical applications include implementing caches and managing dynamic memory allocation.

- Trees (Binary Trees, Binary Search Trees, Heaps, Tries): Understanding traversal algorithms, balancing techniques, and their use in efficient searching and sorting. Practical applications include building hierarchical data structures and implementing priority queues.

- Graphs: Understanding graph representations (adjacency matrix, adjacency list), traversal algorithms (BFS, DFS), and shortest path algorithms (Dijkstra's, Bellman-Ford). Practical applications include network routing and social network analysis.

- Hash Tables: Understanding hash functions, collision handling, and their applications in fast data retrieval. Practical applications include implementing caches and dictionaries.

- Sorting Algorithms: Understanding the time and space complexities of various sorting algorithms (Merge Sort, Quick Sort, Heap Sort). Practical applications include database indexing and data visualization.

- Searching Algorithms: Understanding linear search, binary search, and their applications in efficient data retrieval. Practical applications include finding specific elements in large datasets.

- Algorithm Design Techniques: Familiarize yourself with common algorithm design paradigms like Divide and Conquer, Dynamic Programming, Greedy Algorithms, and Backtracking. This will help you approach and solve problems more effectively.

- Big O Notation: Understanding how to analyze the time and space complexity of algorithms is crucial for optimizing performance. This is essential for discussing algorithm efficiency during interviews.

Next Steps

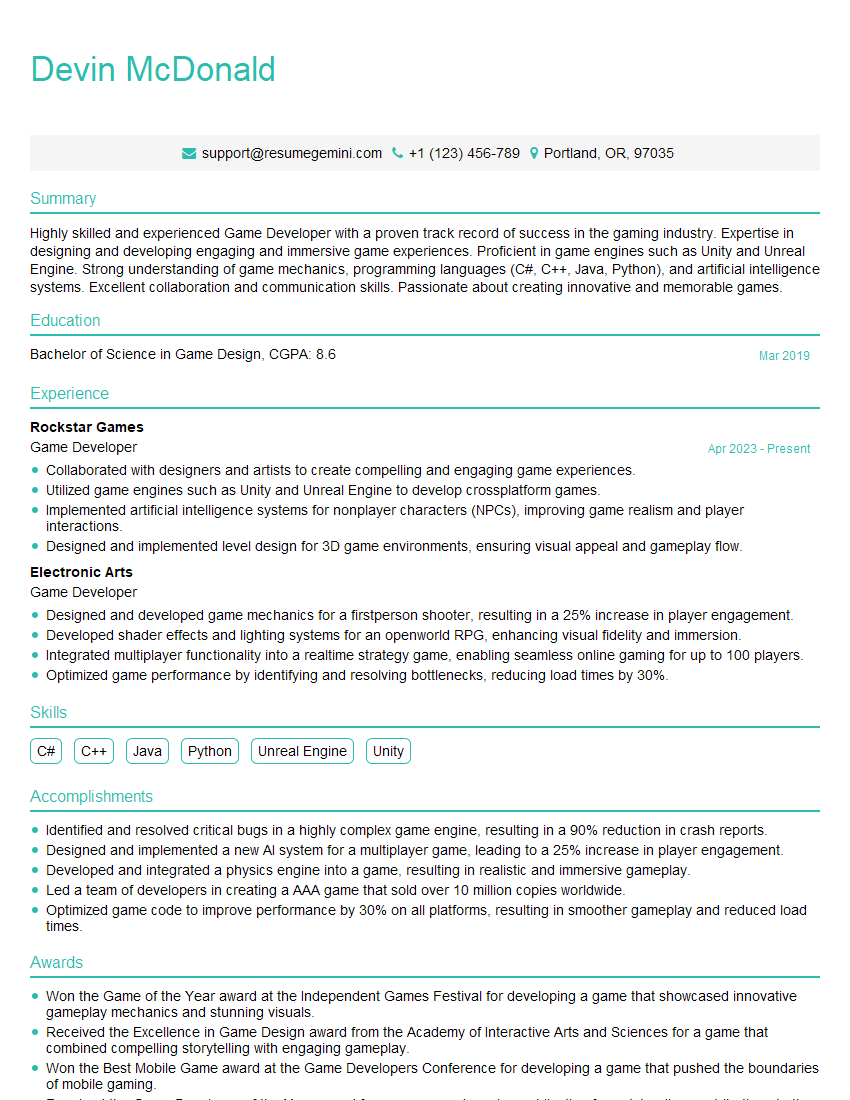

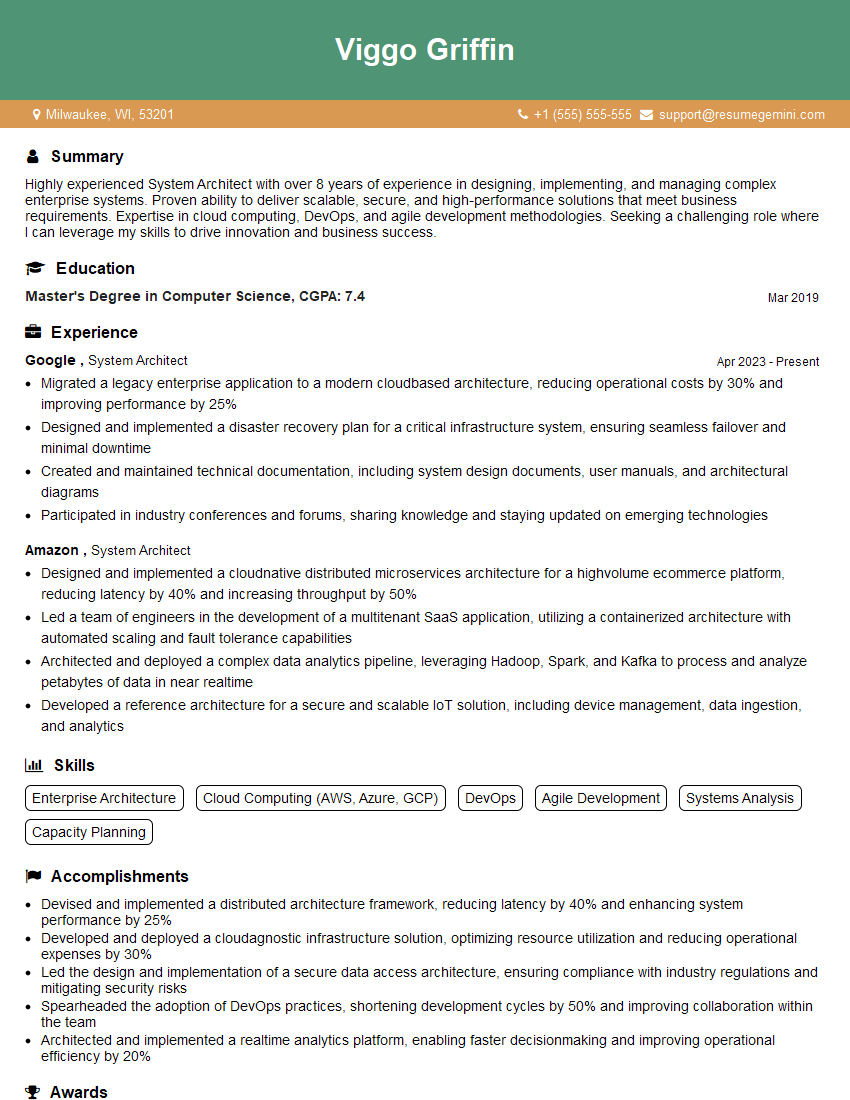

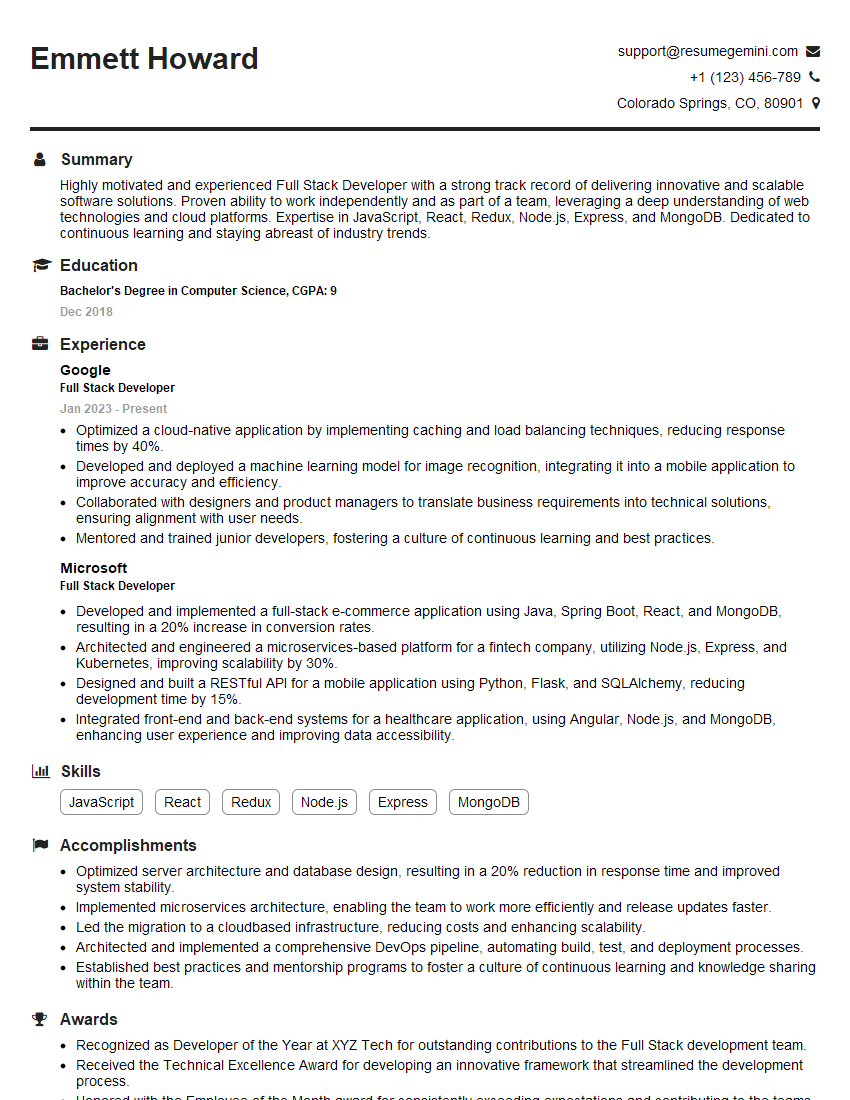

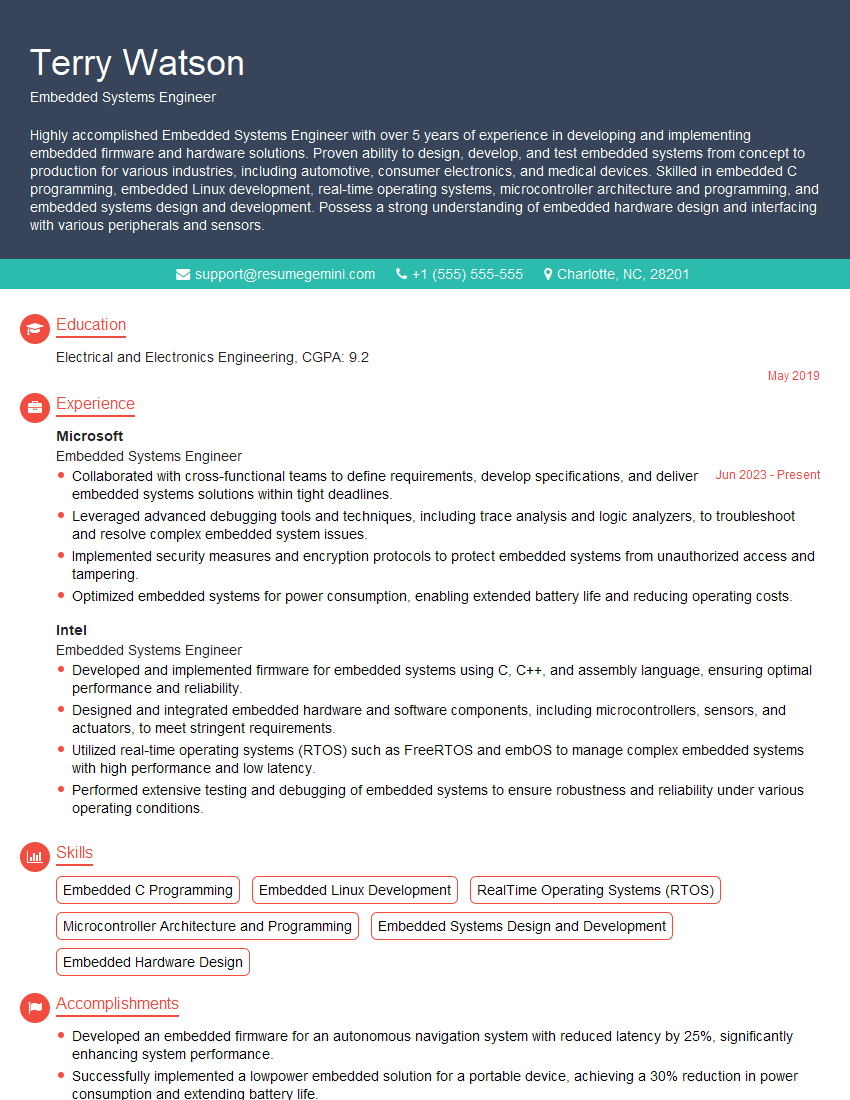

Mastering data structures and algorithms is paramount for a successful career in software development. It demonstrates a strong foundation in computer science and enables you to solve complex problems efficiently. To increase your job prospects, crafting an ATS-friendly resume that highlights these skills is crucial. ResumeGemini is a trusted resource to help you build a professional and impactful resume that catches the recruiter's eye. We offer examples of resumes tailored to candidates with a strong understanding of data structures and algorithms to guide you in creating your own.

Explore more articles

Users Rating of Our Blogs

Share Your Experience

We value your feedback! Please rate our content and share your thoughts (optional).

What Readers Say About Our Blog

Hi, I’m Jay, we have a few potential clients that are interested in your services, thought you might be a good fit. I’d love to talk about the details, when do you have time to talk?

Best,

Jay

Founder | CEO