Feeling uncertain about what to expect in your upcoming interview? We’ve got you covered! This blog highlights the most important Terrain Analysis and Visualization interview questions and provides actionable advice to help you stand out as the ideal candidate. Let’s pave the way for your success.

Questions Asked in Terrain Analysis and Visualization Interview

Q 1. Explain the difference between a Digital Elevation Model (DEM) and a Digital Terrain Model (DTM).

While the terms ‘Digital Elevation Model’ (DEM) and ‘Digital Terrain Model’ (DTM) are often used interchangeably, there’s a subtle but important distinction, and usage varies between agencies and countries. DEM is the broad, generic term for any digital representation of elevation, most often a regular grid of bare-earth heights. A DTM specifically describes the bare-earth terrain surface, with buildings and vegetation removed, and is often enriched with terrain-specific features such as breaklines along ridges, stream channels, and road edges, so that it captures the shape of the land more faithfully than a plain elevation grid. Buildings, trees, and other above-ground objects belong to a third product, the Digital Surface Model (DSM), which records the height of whatever surface is visible from above. Think of a DTM as the landscape with everything above the ground stripped away, and a DSM as the same landscape with its roofs and tree canopies still in place.

For example, if you’re planning a road construction project, a coarse DEM may be sufficient for initial route planning based on elevation. However, a high-accuracy DTM (ideally with breaklines) would be critical for determining precise earthworks and optimizing the road’s alignment, while a DSM or building footprints would help avoid existing structures.

Q 2. Describe various interpolation methods used in creating DEMs and their strengths and weaknesses.

Several interpolation methods are used to create DEMs from scattered elevation points, each with its strengths and weaknesses. Here are a few common ones:

- Inverse Distance Weighting (IDW): This method assigns weights to elevation points based on their distance from the interpolation point. Closer points get higher weights. It’s simple and computationally efficient, but can produce ‘bull’s-eye’ artifacts around data points if not carefully parameterized; in particular, the power parameter (the exponent applied to distance) needs careful tuning. IDW also never predicts values above the highest or below the lowest input point, so it cannot recreate unsampled peaks or valley floors.

- Kriging: A geostatistical method that considers both distance and spatial autocorrelation in the data. It provides an estimate of the interpolation error, making it valuable for assessing uncertainty. It’s more complex than IDW but often yields more accurate results, particularly for spatially correlated data. However, it is computationally expensive.

- Spline Interpolation: This method fits a smooth surface through the data points, using mathematical functions called splines. It produces smooth surfaces but might not always accurately represent sharp elevation changes. Different types of splines (e.g., cubic splines) offer varying degrees of smoothness and accuracy.

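- Triangulated Irregular Networks (TINs): This method connects data points, typically by Delaunay triangulation, into a network of triangles and interpolates linearly within each triangle. It’s well-suited for areas with complex terrain because it can use many points where elevation changes abruptly and few where it doesn’t, accurately representing abrupt changes in elevation. However, this advantage largely disappears where data are uniformly spaced and the terrain is smooth, and there a regular grid is simpler to store and process.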

The choice of method depends on factors like the distribution of data points, the complexity of the terrain, and the desired level of accuracy. For instance, IDW is a good starting point for quick visualizations, while Kriging might be preferred for high-accuracy applications like hydrological modeling.
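To make the IDW weighting concrete, here is a minimal pure-Python sketch that estimates the elevation at one location from scattered points. The function name `idw`, the default `power=2`, and the sample points are illustrative assumptions; GIS implementations typically also restrict the search to nearby points and interpolate a whole grid at once.

```python
import math

def idw(points, x, y, power=2.0):
    """Inverse Distance Weighting estimate at (x, y).

    points: iterable of (px, py, z) tuples with known elevations.
    power: exponent applied to distance; larger values favor nearby points.
    """
    num = 0.0
    den = 0.0
    for px, py, z in points:
        d = math.hypot(x - px, y - py)
        if d == 0.0:
            # Exactly on a sample point: IDW is an exact interpolator.
            return z
        w = 1.0 / d ** power
        num += w * z
        den += w
    return num / den

# Hypothetical survey points (x, y in meters; z = elevation in meters).
samples = [(0.0, 0.0, 100.0), (10.0, 0.0, 120.0), (0.0, 10.0, 110.0)]
print(round(idw(samples, 2.0, 2.0), 2))
```

Raising `power` makes each estimate more local, which is one way the bull’s-eye effect becomes more or less pronounced.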

Q 3. How do you handle data inconsistencies or errors in elevation data?

Handling inconsistencies and errors in elevation data is crucial for reliable terrain analysis. Strategies include:

- Data Cleaning and Editing: This involves visually inspecting the data using GIS software and identifying outliers or erroneous values. These might be corrected manually or automatically using filters that identify improbable elevation changes based on context.

- Spatial Filtering: Techniques like median filtering or moving average filtering can smooth out noise and inconsistencies by replacing each data point with the median or average of its neighboring values. This can be useful for handling random errors but may blur subtle features.

- Interpolation with Error Handling: Using interpolation methods that explicitly consider and quantify uncertainty, such as Kriging, helps to create a DEM that reflects the uncertainty inherent in the input data.

- Gap Filling: For missing data, interpolation techniques can fill the gaps using the surrounding data. However, extrapolation should be avoided as it can introduce significant errors.

- Data Source Validation: Whenever possible, using multiple data sources and comparing the results can help detect and correct inconsistencies.

The approach chosen often depends on the type and extent of the errors. For instance, gross errors (clearly wrong elevation values) are best addressed with manual editing, while minor inconsistencies might be handled with spatial filtering.
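One simple way to automate the ‘improbable elevation change’ check is to compare each cell with the median of its 3×3 neighbors and replace it only when the difference exceeds a threshold. This is a minimal sketch: the 5 m `threshold`, the list-of-lists grid, and the function name `remove_spikes` are assumptions, and a real workflow would tune the threshold to the terrain and data source and handle NoData cells.

```python
from statistics import median

def remove_spikes(grid, threshold=5.0):
    """Replace cells that differ from the median of their neighbors by more than threshold.

    grid: list of equal-length rows of elevations (NoData handling omitted).
    Returns (cleaned_grid, replaced_cells); the input grid is not modified.
    """
    rows, cols = len(grid), len(grid[0])
    cleaned = [row[:] for row in grid]
    replaced = []
    for r in range(rows):
        for c in range(cols):
            # Up to 8 neighbors (fewer on edges), always read from the original grid.
            neighbors = [
                grid[rr][cc]
                for rr in range(max(0, r - 1), min(rows, r + 2))
                for cc in range(max(0, c - 1), min(cols, c + 2))
                if (rr, cc) != (r, c)
            ]
            local_median = median(neighbors)
            if abs(grid[r][c] - local_median) > threshold:
                cleaned[r][c] = local_median
                replaced.append((r, c))
    return cleaned, replaced

dem = [
    [100.0, 101.0, 102.0],
    [100.5, 250.0, 102.5],  # 250 m is an obvious gross error
    [101.0, 102.0, 103.0],
]
cleaned, fixed = remove_spikes(dem)
print(fixed, cleaned[1][1])
```

Using the median rather than the mean keeps a single spike from dragging its neighbors’ reference values upward.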

Q 4. What are the common file formats used for storing terrain data?

Several common file formats store terrain data. The choice depends on the application and software used.

- ASCII Grid: A simple text-based format representing elevation data as a matrix of rows and columns. It’s easy to read and manipulate but can be inefficient for large datasets.

- GeoTIFF: A widely used tagged image file format that incorporates georeferencing information, ensuring the data is properly spatially located. Supports various compression methods.

- ESRI Grid: A proprietary format used in ArcGIS software, optimized for handling raster data efficiently.

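- Other DEM formats: Agency- and sensor-specific formats, such as the USGS DEM format or DTED (Digital Terrain Elevation Data), are also used depending on the data source and application; these sometimes require specialized software or a conversion step before use.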

For instance, GeoTIFF is a good choice for sharing data among different GIS software packages because of its wide compatibility and georeferencing capability.
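To show why ASCII Grid is easy to read, here is a minimal reader for the Esri ASCII raster layout: a header of `ncols`, `nrows`, `xllcorner`, `yllcorner`, `cellsize`, and an optional `NODATA_value`, followed by rows of values from north to south. It is a sketch under those assumptions: it does not distinguish the `xllcenter`/`yllcenter` variants and does little validation; in practice a library such as GDAL or Rasterio would read this format directly.

```python
def read_ascii_grid(text):
    """Parse Esri ASCII Grid text into (header dict, list of rows); NoData becomes None."""
    lines = [ln for ln in text.splitlines() if ln.strip()]
    header = {}
    i = 0
    # Header lines start with a keyword; the data block starts at the first numeric line.
    while i < len(lines) and lines[i].split()[0][0].isalpha():
        key, value = lines[i].split()[:2]
        header[key.lower()] = float(value)
        i += 1
    nodata = header.get("nodata_value")
    values = [float(tok) for line in lines[i:] for tok in line.split()]
    ncols, nrows = int(header["ncols"]), int(header["nrows"])
    if len(values) != ncols * nrows:
        raise ValueError("cell count does not match ncols * nrows")
    # Rows are stored top (north) to bottom (south).
    grid = [
        [None if v == nodata else v for v in values[r * ncols:(r + 1) * ncols]]
        for r in range(nrows)
    ]
    return header, grid

# Hypothetical 3 x 2 grid with 30 m cells.
sample = """ncols 3
nrows 2
xllcorner 500000
yllcorner 4100000
cellsize 30
NODATA_value -9999
101.0 102.5 -9999
99.0 100.0 101.5
"""
header, grid = read_ascii_grid(sample)
print(header["cellsize"], grid)
```

The plain-text layout is what makes the format easy to inspect and edit, and also why it becomes bulky for large rasters compared with compressed GeoTIFF.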

Q 5. Explain the concept of spatial resolution and its impact on terrain analysis.

Spatial resolution refers to the size of the individual cells (pixels) in a DEM. It directly impacts the level of detail and accuracy of the terrain representation. A higher spatial resolution (smaller cell size) means more detailed terrain features can be captured, leading to more accurate analyses. Conversely, lower resolution (larger cells) results in a smoother, less detailed representation, potentially losing important information.

For example, a DEM with a 1-meter resolution can accurately model small changes in elevation, like those found in a steep gully. A DEM with a 30-meter resolution might smooth this gully out, making it appear shallower and less steep and distorting any analysis that relies on precise elevation data. The choice of spatial resolution depends on the application; high-resolution DEMs are valuable for detailed analysis like landslide susceptibility mapping, while lower-resolution DEMs may suffice for regional-scale analyses, such as mapping broad landform patterns or delineating large watersheds, where fine detail is not as critical. It’s important to consider the scale and the specific features of interest when selecting or interpreting a DEM.
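The gully example can be illustrated with a one-dimensional sketch: a hypothetical transect sampled every 1 m is resampled to 30 m cells by block averaging, and the apparent depth and steepest slope are compared. The profile values and the block-mean resampling are assumptions chosen for clarity; other resampling methods, and where the gully falls relative to cell boundaries, would change the exact numbers.

```python
import math

def aggregate(profile, factor):
    """Average consecutive blocks of `factor` samples (simple block-mean resampling)."""
    return [
        sum(profile[i:i + factor]) / factor
        for i in range(0, len(profile) - factor + 1, factor)
    ]

def max_slope_deg(profile, spacing):
    """Steepest slope (degrees) between adjacent samples spaced `spacing` meters apart."""
    return max(
        math.degrees(math.atan(abs(b - a) / spacing))
        for a, b in zip(profile, profile[1:])
    )

# Hypothetical 90 m transect at 1 m spacing: flat ground with a 6 m wide, 3 m deep gully.
fine = [100.0] * 42 + [97.0] * 6 + [100.0] * 42
coarse = aggregate(fine, 30)  # 30 m cells

print(f"1 m:  depth {max(fine) - min(fine):.1f} m, max slope {max_slope_deg(fine, 1.0):.1f} deg")
print(f"30 m: depth {max(coarse) - min(coarse):.1f} m, max slope {max_slope_deg(coarse, 30.0):.1f} deg")
```

At 30 m the gully survives only as a shallow dip, and its steep walls are no longer visible in the slope values.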

Q 6. Describe different types of terrain features and how they are represented in a DEM.

DEMs represent various terrain features through elevation variations. Here are some examples:

- Peaks: Represented by local maxima in elevation.

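- Valleys: Elongated low areas, where elevation is lowest across the valley but keeps falling along the valley axis. A single cell lower than all of its neighbors is a pit, not a valley.

- Ridges: Linear high areas, represented by lines of locally maximum elevation across the ridge, often connecting peaks.

- Channels: Linear low features within valleys along which water concentrates, typically traced as lines of locally minimum elevation or high flow accumulation.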

- Slopes: Areas with a gradual or steep change in elevation. Steepness is indicated by the rate of elevation change.

- Hillsides: Areas of relatively uniform slope.

- Depressions: Closed areas of lower elevation with no outlet. They may represent real ponds or sinkholes, or data artifacts that are usually filled before hydrological analysis.

These features can be identified and quantified using terrain analysis tools such as those available in GIS software. For example, algorithms can automatically detect peaks, valleys, and ridges using elevation data and define their characteristics such as height or length.
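As a minimal illustration of automatic feature detection, the sketch below flags interior cells that are strictly higher (peaks) or strictly lower (pits) than all eight neighbors. The function name and the sample grid are hypothetical; production tools also handle flat plateaus, grid edges, and larger search windows, and ridges and channels generally need curvature or flow-accumulation analysis rather than a simple 3×3 test.

```python
def local_extrema(grid):
    """Return (peaks, pits): interior cells strictly higher / lower than all 8 neighbors."""
    peaks, pits = [], []
    for r in range(1, len(grid) - 1):
        for c in range(1, len(grid[0]) - 1):
            z = grid[r][c]
            neighbors = [
                grid[r + dr][c + dc]
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0)
            ]
            if all(z > n for n in neighbors):
                peaks.append((r, c))
            elif all(z < n for n in neighbors):
                pits.append((r, c))
    return peaks, pits

# Hypothetical 5 x 5 DEM with one summit and one closed depression.
dem = [
    [10, 11, 10, 10, 10],
    [11, 15, 11, 10, 10],
    [10, 11, 10, 10, 10],
    [10, 10, 10, 6, 10],
    [10, 10, 10, 10, 10],
]
peaks, pits = local_extrema(dem)
print(peaks, pits)
```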

Q 7. How do you perform slope and aspect calculations from a DEM?

Slope and aspect are crucial terrain attributes derived from a DEM. They are calculated using the elevation values of neighboring cells.

Slope represents the steepness of the terrain, typically measured in degrees or percent. The most common method uses a finite difference approximation of the spatial gradient of the elevation surface. For each cell, the slope is computed from the elevation differences with its surrounding neighbors. Many GIS software packages provide built-in tools to perform this calculation, usually over a 3×3 neighborhood; Horn’s weighted finite-difference method is a widely used scheme and the default in GDAL’s gdaldem.

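Example Calculation (simplified): slope = arctan(√((Δz/Δx)² + (Δz/Δy)²)), where Δz/Δx and Δz/Δy are the rates of elevation change in the x (east-west) and y (north-south) directions, estimated from neighboring cells. The formula needs adapting to the chosen cell size and neighborhood: elevation differences are divided by the horizontal distance between the cells used, which must be in the same units as elevation. Percent slope is 100 × tan(slope).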

Aspect represents the compass direction the slope faces, that is, the direction of steepest descent. It’s typically measured in degrees (0-360), where 0 represents north, 90 represents east, and so on. Similar to slope calculation, aspect is derived from the elevation differences between a central cell and its surrounding neighbors. Flat areas have no defined aspect and are usually assigned a special value or NoData.
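The calculation can be sketched for a single 3×3 window using Horn’s weighted differences, in which the row and column through the center count double. This is a minimal sketch, assuming the window’s first row is north, its first column is west, and `cellsize` is in the same units as elevation; the function name is hypothetical and edge handling and NoData are omitted.

```python
import math

def horn_slope_aspect(window, cellsize):
    """Slope (degrees) and aspect (compass degrees) for the center of a 3x3 window.

    window: 3 rows of 3 elevations labelled a b c / d e f / g h i
            (first row = north, first column = west). The center e is not used.
    Returns (slope_deg, aspect_deg); aspect is None on perfectly flat cells.
    """
    (a, b, c), (d, e, f), (g, h, i) = window
    dz_dx = ((c + 2 * f + i) - (a + 2 * d + g)) / (8 * cellsize)  # rise toward east
    dz_dy = ((a + 2 * b + c) - (g + 2 * h + i)) / (8 * cellsize)  # rise toward north
    slope = math.degrees(math.atan(math.hypot(dz_dx, dz_dy)))
    if dz_dx == 0 and dz_dy == 0:
        return slope, None
    # Downslope direction = negative gradient, expressed as a bearing
    # clockwise from north (0 = N, 90 = E, 180 = S, 270 = W).
    aspect = math.degrees(math.atan2(-dz_dx, -dz_dy)) % 360.0
    return slope, aspect

# A plane rising 1 m per 1 m cell toward the east: 45 degrees, facing west.
slope, aspect = horn_slope_aspect([[0, 1, 2], [0, 1, 2], [0, 1, 2]], 1.0)
percent = 100 * math.tan(math.radians(slope))
print(slope, aspect, percent)
```

If the DEM is in geographic coordinates (degrees) while elevations are in meters, `cellsize` would be in the wrong units, which is why reprojection or a z-factor is needed first.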

Both slope and aspect are fundamental for many applications, including hydrological modeling (determining flow direction), erosion analysis (identifying areas prone to erosion), and habitat suitability modeling (understanding sunlight exposure and water availability).

Q 8. Explain the concept of viewshed analysis and its applications.

Viewshed analysis determines the visible areas from a specific point or points on a terrain surface. Imagine standing on a hill; a viewshed analysis would show you exactly what you could see, considering the effects of terrain elevation. It’s like creating a 3D ‘line of sight’ map.

This is crucial for many applications:

- Urban planning: Assessing the visual impact of new buildings or infrastructure on surrounding landscapes.

- Military applications: Identifying strategic vantage points and potential areas of observation.

- Forestry: Planning forest management activities and assessing fire risks, considering visibility for early detection.

- Renewable energy: Assessing from where proposed wind turbines or solar farms would be visible, as part of the visual impact assessment used when selecting sites.

- Telecommunication: Finding suitable locations for cell towers or antennas, ensuring adequate signal coverage based on line of sight.

The analysis uses a Digital Elevation Model (DEM) and considers factors like observer height, target height, Earth curvature, and atmospheric refraction to model visibility.
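At its core, a viewshed repeats a line-of-sight test along rays from the observer to every cell. The sketch below shows that test along a single equally spaced profile: a point is visible if the sight line to it is at least as steep as the steepest sight line to any terrain in between. The 1.7 m default observer height, the function name, and the sample profile are assumptions, and Earth curvature and refraction are ignored, so this only holds over short distances.

```python
def visible_along_profile(elevations, spacing, observer_height=1.7, target_height=0.0):
    """Flag which samples along a straight profile are visible from sample 0.

    elevations: ground heights at equal spacing, starting at the observer.
    Returns a list of booleans (the observer's own position is always visible).
    """
    eye = elevations[0] + observer_height
    visible = [True]
    max_gradient = float("-inf")  # steepest sight line to intervening ground so far
    for k in range(1, len(elevations)):
        dist = k * spacing
        # Sight line to the target (ground plus target height) at this sample.
        target_gradient = (elevations[k] + target_height - eye) / dist
        visible.append(target_gradient >= max_gradient)
        # The ground at this sample may block everything farther away.
        max_gradient = max(max_gradient, (elevations[k] - eye) / dist)
    return visible

# Hypothetical profile: a 5 m knoll hides the low ground behind it, but not the hill beyond.
print(visible_along_profile([100, 100, 105, 100, 100, 120], 10.0))
```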

Q 9. Describe the process of creating a hillshade from a DEM.

Creating a hillshade from a DEM involves simulating how light would illuminate the terrain surface. Think of it like taking a photograph of the terrain with a virtual sun shining on it. The result is a shaded-relief map emphasizing terrain features.

The process generally involves these steps:

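- Choose a light source: Define the azimuth (direction) and altitude (angle above the horizon) of the light source. Azimuth is typically measured clockwise from north (e.g., 90 degrees for east); a common default is an azimuth of 315 degrees (northwest) with an altitude of 45 degrees.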

- Calculate surface slopes and aspects: The DEM is used to calculate the slope (steepness) and aspect (direction of slope) for each cell in the DEM.

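- Apply a shading algorithm: A common approach is a Lambertian (cosine) model, which computes the brightness of each cell from the angle between the surface, described by its slope and aspect, and the defined light source position; the slope and aspect themselves are often estimated with Horn’s method. Areas facing the light source are brighter, and areas facing away are darker.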

- Render the image: The calculated light intensities are assigned to grey-scale or color values to create the final hillshade image.

Many GIS software packages (e.g., ArcGIS, QGIS) have built-in tools to automate this process. You typically just need to specify the DEM, the azimuth, and altitude of the light source, and the software does the rest.
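For a single cell, the Lambertian shading step can be sketched as follows: brightness is proportional to the cosine of the angle between the surface normal and the direction to the sun, scaled to 0-255. This is a minimal sketch, assuming slope and aspect have already been computed (aspect as a compass bearing); the function name is hypothetical, and negative values for cells facing away from the sun are clamped to 0.

```python
import math

def hillshade_value(slope_deg, aspect_deg, azimuth_deg=315.0, altitude_deg=45.0):
    """Lambertian hillshade brightness (0-255) for one cell.

    slope_deg / aspect_deg: terrain slope and compass aspect of the cell.
    azimuth_deg / altitude_deg: sun direction (clockwise from north) and elevation angle.
    """
    zenith = math.radians(90.0 - altitude_deg)
    slope = math.radians(slope_deg)
    # Cosine of the angle between the surface normal and the direction to the sun.
    cos_i = (math.cos(zenith) * math.cos(slope)
             + math.sin(zenith) * math.sin(slope)
             * math.cos(math.radians(azimuth_deg - aspect_deg)))
    # Faces turned away from the sun get 0 rather than a negative brightness.
    return round(255 * max(0.0, cos_i))

print(hillshade_value(0.0, 0.0))      # flat ground
print(hillshade_value(45.0, 315.0))   # slope facing the sun
print(hillshade_value(45.0, 135.0))   # slope facing away
```

Applying this to every cell of a slope and aspect grid produces the grayscale hillshade image described in the last step.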

Q 10. How do you classify terrain using a DEM?

Classifying terrain from a DEM involves categorizing areas based on their elevation, slope, and aspect. Imagine sorting pebbles by size – we’re doing something similar but with landforms.

Methods include:

- Elevation-based classification: Dividing the terrain into zones based on elevation ranges (e.g., low elevation, mid-elevation, high elevation). This is simple but may not capture detailed topographic information.

- Slope-based classification: Categorizing terrain into classes based on slope gradients (e.g., flat, gently sloping, steep). This is useful for understanding the difficulty of terrain traversal or susceptibility to erosion.

- Aspect-based classification: Grouping areas based on the direction they face (e.g., north-facing slopes, south-facing slopes). This impacts sunlight exposure and vegetation growth.

- Combined classification: The most comprehensive approach. It uses combinations of elevation, slope, and aspect to create more detailed terrain classes. For example, you might have a class for ‘steep, south-facing slopes above 1000 meters’.

The specific thresholds for each class are chosen based on the application. For instance, a study on avalanche risk would require finer distinctions in slope classification than one examining broad landform patterns. GIS software usually provides tools for reclassification based on custom thresholds.
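A reclassification step can be sketched with sorted class breaks and a binary search. The slope breaks (5, 15, and 30 degrees), the 135-225 degree ‘south-facing’ range, the 1000 m elevation split, and the function names are hypothetical choices for illustration; a real study would set them for its own application, as noted above.

```python
from bisect import bisect_right

# Hypothetical slope class breaks in degrees.
BREAKS = [5.0, 15.0, 30.0]
LABELS = ["flat", "gentle", "moderate", "steep"]

def classify_slope(slope_deg):
    """[0, 5) flat, [5, 15) gentle, [15, 30) moderate, >= 30 steep."""
    return LABELS[bisect_right(BREAKS, slope_deg)]

def classify_cell(elevation_m, slope_deg, aspect_deg):
    """Combined class, e.g. ('steep', 'south', 'high') for a steep south-facing slope above 1000 m."""
    south_facing = aspect_deg is not None and 135.0 <= aspect_deg <= 225.0
    return (
        classify_slope(slope_deg),
        "south" if south_facing else "other",
        "high" if elevation_m > 1000.0 else "low",
    )

print(classify_cell(1200.0, 35.0, 180.0))
```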

Q 11. What are the applications of LiDAR data in terrain analysis?

LiDAR (Light Detection and Ranging) data provides highly accurate elevation data with exceptional detail. Think of it as a super-powered laser rangefinder that creates a detailed 3D point cloud of the terrain.

Its applications in terrain analysis include:

- High-resolution DEM generation: LiDAR provides the data for creating very detailed DEMs with high vertical accuracy.

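- Forest canopy height modeling: Because some laser pulses pass through gaps in the canopy, LiDAR records returns from both the treetops and the ground, so tree heights can be measured by subtracting the bare-earth surface from the canopy surface. This aids forest inventory and biodiversity studies.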

- Floodplain mapping: Because LiDAR captures ground returns beneath vegetation, it yields accurate bare-earth models that are ideal for mapping floodplains and identifying areas at risk.

- Slope and aspect analysis: The high resolution of LiDAR enables more accurate measurements of slope and aspect, crucial for applications such as landslide hazard assessment.

- Change detection: By comparing LiDAR data collected at different times, changes in terrain elevation (e.g., due to erosion or construction) can be accurately tracked.

LiDAR data’s accuracy and detail surpass those of most traditional elevation data sources, making it essential for many high-precision terrain analysis tasks.
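The canopy height modeling described above boils down to a cell-by-cell difference: a surface model built from first returns minus a bare-earth model built from ground-classified returns. This minimal sketch assumes both grids are already aligned (same extent, cell size, and vertical datum), uses `None` for NoData, and clamps small negative differences from noise to zero; the function name and values are hypothetical.

```python
def canopy_height_model(dsm, dtm):
    """Canopy height = first-return surface (DSM) minus bare-earth terrain (DTM)."""
    chm = []
    for dsm_row, dtm_row in zip(dsm, dtm):
        chm.append([
            None if top is None or ground is None else max(0.0, top - ground)
            for top, ground in zip(dsm_row, dtm_row)
        ])
    return chm

# Hypothetical 2 x 2 tiles: a 20 m tree, open ground with slight noise, a gap, a 30 m tree.
dsm = [[120.0, 101.0], [None, 130.5]]
dtm = [[100.0, 101.2], [99.0, 100.5]]
print(canopy_height_model(dsm, dtm))
```

The same differencing of two aligned elevation grids, taken from different dates instead of different surfaces, is the basis of the change detection mentioned above.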

Q 12. Explain the process of orthorectification of aerial imagery.

Orthorectification corrects geometric distortions in aerial imagery caused by sensor tilt, lens distortion, and terrain relief (and, over large areas, Earth curvature). Imagine straightening a slightly skewed photograph and removing the stretching caused by hills and valleys: that’s essentially what orthorectification does.

The process involves:

- Gathering ground control points (GCPs): These are points with known coordinates on the ground that are identifiable in the aerial imagery. They act as reference points for correction.

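- Using a DEM: A DEM provides the elevation information needed to remove relief displacement. On a vertical photograph, points above the reference datum are displaced radially outward from the image’s nadir point, and points below it inward, by an amount that grows with their height and their distance from the nadir.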

- Applying geometric corrections: Software uses the GCPs and the DEM to perform a mathematical transformation that removes distortions. This involves a complex process of warping and resampling the image pixels.

- Producing an orthophoto: The output is a geometrically accurate image, an orthophoto, where all features are in their correct map projection, as if the image was taken from directly above.

Orthorectification is crucial for accurate measurements and analysis using aerial imagery, ensuring the data can be reliably integrated with other geospatial datasets.
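The size of the relief displacement that the DEM corrects can be estimated, for a truly vertical photograph, with the standard photogrammetric relation d = r × h / H, where r is the radial distance on the photo from the nadir to the displaced image point, h is the point’s height above the datum, and H is the flying height above the same datum. The function name and the numbers below are hypothetical; real orthorectification uses a full sensor model rather than this single-point approximation.

```python
def relief_displacement(radial_distance, object_height, flying_height):
    """Relief displacement d = r * h / H on a truly vertical photograph.

    radial_distance: photo distance from the nadir to the displaced image point.
    object_height, flying_height: heights above the same datum, in the same units.
    The result has the units of radial_distance and points away from the nadir.
    """
    return radial_distance * object_height / flying_height

# Hypothetical case: a hilltop 500 m above datum, imaged 50 mm from nadir, flown at 2000 m.
print(relief_displacement(50.0, 500.0, 2000.0))  # 12.5 (mm on the photo)
```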

Q 13. How do you integrate terrain data with other geospatial datasets?

Integrating terrain data with other geospatial datasets is fundamental to many GIS analyses. Imagine layering information about elevation with data on soil type or population density to understand their relationships.

Common methods include:

- Spatial overlay: Combining terrain data (DEM) with other raster datasets (e.g., land cover, soil type, rainfall) using cell-by-cell map algebra, or with vector layers using operations like intersection or union.

- Spatial joins: Linking attributes from vector datasets (e.g., roads, buildings) to terrain characteristics using spatial relationships (e.g., proximity, containment). For example, we could determine the average slope of areas adjacent to rivers.

- 3D visualization: Combining terrain data with other datasets in 3D environments to visualize spatial relationships more intuitively, providing insights that are hard to glean from 2D maps.

- Surface modeling: Using terrain data as a base for modeling other phenomena. For example, combining elevation data with hydrological information can help predict flood inundation zones.

The success of integration depends on accurate data alignment, proper projection systems, and the choice of appropriate analytical techniques. GIS software provides many tools to streamline this process.
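The ‘average slope of areas adjacent to rivers’ example is essentially a zonal statistic: a slope grid summarized by a zone grid (for instance, a rasterized river buffer). This minimal sketch assumes the two grids are already aligned in projection, extent, and cell size, which is exactly the alignment requirement mentioned above; the zone names and values are hypothetical and `None` marks NoData.

```python
from collections import defaultdict

def zonal_mean(values, zones):
    """Mean of `values` cells grouped by the zone id in the aligned `zones` grid."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for value_row, zone_row in zip(values, zones):
        for v, z in zip(value_row, zone_row):
            if v is None or z is None:
                continue  # skip NoData in either layer
            sums[z] += v
            counts[z] += 1
    return {z: sums[z] / counts[z] for z in sums}

# Hypothetical slope grid (degrees) and a zone grid from a rasterized river buffer.
slope = [[2.0, 4.0, 30.0], [3.0, None, 40.0]]
zones = [["river", "river", "upland"], ["river", "river", "upland"]]
print(zonal_mean(slope, zones))
```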

Q 14. What are the challenges of visualizing large terrain datasets?

Visualizing large terrain datasets presents significant challenges. Imagine trying to display a detailed image of a mountain range on a small phone screen – it would likely be too cluttered and difficult to interpret.

Challenges include:

- Data volume: Large datasets require significant processing power and memory, which can lead to slow rendering speeds and crashes.

- Level of detail (LOD): Balancing detail with performance is critical. Full-resolution data is rarely needed everywhere on screen, so we need methods to manage detail at different zoom levels and viewing distances to avoid slowdowns.

- Data simplification: Techniques like generalizing or creating lower-resolution versions of the data are crucial for efficient visualization.

- Visualization methods: Selecting appropriate visualization techniques (e.g., hillshades, 3D models, contour lines) based on the dataset size and the intended analysis is key.

- Hardware limitations: The available computing resources (RAM, GPU) directly impact the ability to visualize large datasets smoothly.

Addressing these challenges often involves using specialized software, employing data reduction strategies, or utilizing cloud computing resources for processing and rendering.
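A common data reduction strategy behind level-of-detail rendering is a resolution pyramid: each level halves the resolution of the one before, and the renderer picks the coarsest level that still looks sharp at the current zoom. This is a minimal sketch using 2×2 block means; the function name is hypothetical, and dropping an odd last row or column is a simplification that real tiling schemes avoid.

```python
def build_pyramid(grid):
    """Return [full-res, 1/2, 1/4, ...] grids, each coarser cell the mean of a 2x2 block."""
    levels = [grid]
    while len(levels[-1]) >= 2 and len(levels[-1][0]) >= 2:
        g = levels[-1]
        coarser = [
            [(g[r][c] + g[r][c + 1] + g[r + 1][c] + g[r + 1][c + 1]) / 4.0
             for c in range(0, len(g[0]) - 1, 2)]
            for r in range(0, len(g) - 1, 2)
        ]
        levels.append(coarser)
    return levels

# Hypothetical 4 x 4 DEM tile.
tile = [[float(4 * r + c) for c in range(4)] for r in range(4)]
for level in build_pyramid(tile):
    print(level)
```

Because each level has a quarter of the cells of the one below, the whole pyramid adds only about a third to the storage of the full-resolution grid.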

Q 15. Describe various techniques for visualizing terrain in 3D.

3D terrain visualization techniques aim to represent the Earth’s surface realistically, aiding in understanding its features and complexities. Several methods exist, each with its strengths and weaknesses:

- Wireframe Models: These are the simplest, showing only the edges of the grid or triangle mesh that approximates the terrain. They are computationally inexpensive but lack detail. Imagine a skeletal structure of a mountain range: you get the overall shape but not the surface texture.

- Surface Models: These display the surface using a mesh of polygons, offering significantly more detail than wireframes. Think of a finely textured 3D map, showing hills, valleys, and plateaus.

- Draped Imagery: Aerial or satellite imagery is draped over a digital elevation model (DEM), giving a photorealistic representation. This is what you commonly see in Google Earth; it blends elevation data with real-world images for a very visual experience.

- Perspective Views: These involve adjusting the viewpoint and camera parameters to simulate a real-world perspective. Different angles and distances can highlight specific features and provide different interpretations of the landscape.

- Shadowing and Lighting: Applying realistic lighting and shadow effects dramatically enhances the visual realism and allows for better interpretation of slope and aspect. The shadows help emphasize the three-dimensionality and give a better sense of depth.

- Exaggeration: Vertically exaggerating the terrain (increasing the vertical scale relative to the horizontal scale) can highlight subtle changes in elevation that might be difficult to discern otherwise. This is often used to emphasize variations in a relatively flat area.

- Contour Lines: While not strictly 3D, contour lines overlaid on a 3D model provide additional context and let viewers read elevation values directly.

The best technique depends on the project’s specific goals, the data’s resolution, and the desired level of visual fidelity. For example, a quick overview might use a wireframe or draped imagery, while detailed analysis would leverage surface models with lighting and shadowing.
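Surface models, wireframes, and vertical exaggeration all start from the same step: turning a DEM grid into vertices and triangles that a 3D renderer can draw. This is a minimal sketch under stated assumptions: row 0 is the north edge, each grid square is split into two triangles wound counter-clockwise when seen from above (so normals point up), and the function name and sample values are hypothetical. Drawing only the triangle edges gives a wireframe; filling them gives a surface model.

```python
def grid_to_mesh(grid, cellsize, exaggeration=1.0):
    """Turn a DEM grid into (vertices, triangles) for a 3D surface model.

    Vertices are (x, y, z) with row 0 at the north edge (largest y); z is
    multiplied by `exaggeration`. Triangles are index triples into vertices.
    """
    rows, cols = len(grid), len(grid[0])
    vertices = [
        (c * cellsize, (rows - 1 - r) * cellsize, grid[r][c] * exaggeration)
        for r in range(rows)
        for c in range(cols)
    ]
    triangles = []
    for r in range(rows - 1):
        for c in range(cols - 1):
            top_left = r * cols + c
            top_right = top_left + 1
            bottom_left = top_left + cols
            bottom_right = bottom_left + 1
            # Two triangles per square, counter-clockwise seen from above.
            triangles.append((top_left, bottom_left, top_right))
            triangles.append((top_right, bottom_left, bottom_right))
    return vertices, triangles

# Hypothetical 2 x 3 DEM with 30 m cells, exaggerated 2x vertically.
vertices, triangles = grid_to_mesh([[10.0, 12.0, 11.0], [9.0, 10.0, 10.5]], 30.0, exaggeration=2.0)
print(len(vertices), len(triangles), vertices[0])
```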


Q 16. What software packages are you familiar with for terrain analysis and visualization?

My experience encompasses a wide range of software packages used for terrain analysis and visualization. I am proficient in:

- ArcGIS Pro: A comprehensive GIS platform offering powerful tools for DEM creation, analysis, and 3D visualization. I regularly utilize its 3D Analyst extension for surface analysis and visualization.

- QGIS: A free and open-source GIS software that provides a solid foundation for terrain analysis. I often use it for its flexibility and extensibility through plugins.

- Global Mapper: A specialized software for terrain modeling and visualization, excellent for handling massive datasets and performing advanced analysis.

- ENVI: A robust image processing and analysis software often used in conjunction with other GIS software to enhance the quality and analysis capabilities of remotely sensed data used for terrain analysis.

- Cloud-based platforms such as Google Earth Engine: I’m also experienced in utilizing cloud-based platforms for processing large terrain datasets and performing analysis using cloud computing resources, enabling efficient handling of high-resolution data.

My familiarity with these packages allows me to select the most appropriate tool based on the project requirements, considering factors such as data size, computational resources, and the specific analyses needed.

Q 17. How do you assess the accuracy of terrain data?

Assessing the accuracy of terrain data involves a multifaceted approach, combining quantitative and qualitative methods. Accuracy is typically evaluated in terms of both vertical and horizontal accuracy.

- Root Mean Square Error (RMSE): This statistical measure compares the elevation values of the DEM with a highly accurate reference dataset (e.g., ground survey data or LiDAR) as the square root of the mean squared difference. A lower RMSE indicates higher accuracy. For instance, an RMSE of 0.5 meters means the typical vertical error is about 0.5 meters; if errors are unbiased and normally distributed, roughly two-thirds of points fall within ±0.5 meters and about 95% within ±0.98 meters (1.96 × RMSE).

- Comparison with other datasets: Comparing the DEM with other independent datasets of similar resolution (e.g., another DEM from a different source) can highlight discrepancies and potential errors.

- Visual inspection: Visual inspection of the DEM and its derivatives (e.g., slope maps, hillshade) can reveal obvious errors or inconsistencies. This is particularly helpful for identifying localized errors or artifacts.

- Data source metadata: Checking the metadata provided with the dataset is crucial. This includes information on the data acquisition method, accuracy specifications, and potential limitations.

- Accuracy Assessment Points: A common practice involves selecting well-distributed check points that were not used to create the DEM, collecting highly accurate ground truth data for those points, and then comparing the two to calculate accuracy.

The chosen method depends on the data source, the required accuracy level, and the available resources. A multi-pronged approach, combining quantitative metrics and visual inspection, provides the most robust accuracy assessment.
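The quantitative part of this assessment can be sketched as a comparison between DEM values and independent check points, reporting the mean error (bias), the RMSE, and the 1.96 × RMSE figure used to express vertical accuracy at 95% confidence in standards such as NSSDA. That factor assumes normally distributed, unbiased errors. The function name and the values below are hypothetical.

```python
import math

def vertical_accuracy(dem_values, reference_values):
    """Return (mean error, RMSE, 1.96 * RMSE) for DEM elevations vs. check points."""
    if len(dem_values) != len(reference_values) or not dem_values:
        raise ValueError("need matching, non-empty lists of check points")
    errors = [d - r for d, r in zip(dem_values, reference_values)]
    n = len(errors)
    bias = sum(errors) / n  # systematic offset (e.g., a datum mismatch)
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    return bias, rmse, 1.96 * rmse

# Hypothetical check points: DEM elevations vs. surveyed elevations (meters).
bias, rmse, acc95 = vertical_accuracy([10.5, 9.5, 10.5, 9.5], [10.0, 10.0, 10.0, 10.0])
print(bias, rmse, acc95)
```

Reporting the bias alongside the RMSE is useful because a large mean error points to a systematic problem, such as a vertical datum mismatch, rather than random noise.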

Q 18. Explain your experience with different coordinate systems and projections.

Understanding coordinate systems and projections is fundamental to terrain analysis. Coordinate systems define the location of points on the Earth’s surface, while map projections transform the 3D Earth onto a 2D plane.

- Geographic Coordinate System (GCS): Uses latitude and longitude to define locations on the Earth’s surface, based on a spherical or ellipsoidal model. WGS84 is a commonly used GCS.

- Projected Coordinate System (PCS): Transforms the angular latitude/longitude coordinates of a GCS into a planar (x, y) coordinate system, which is necessary for many types of analysis and mapping. Examples include UTM (Universal Transverse Mercator) and State Plane Coordinate Systems. Each projection distorts the Earth’s surface in different ways, some preserving area, others preserving shape, and some attempting a compromise.

- Datum: A reference surface that serves as a basis for measuring coordinates. Different datums (e.g., NAD83, NAD27) use different ellipsoidal models of the Earth, leading to positional differences.

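In a terrain analysis project, it’s crucial to select the appropriate coordinate system and projection. Using incompatible coordinate systems can lead to significant errors in calculations and analyses. For example, latitude and longitude are angles, not distances: a degree of longitude spans about 111 km at the equator but shrinks toward the poles, so measuring distances or areas directly in degrees gives wrong results unless geodesic calculations are used. Similarly, computing slope on a DEM whose horizontal units are degrees but whose elevations are in meters gives meaningless values unless the data are reprojected or a suitable z-factor is applied. Therefore, choosing a suitable projected coordinate system that minimizes distortion in the area of interest is critical.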
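The shrinking degree of longitude is easy to demonstrate with the haversine formula. This sketch assumes a spherical Earth with a mean radius of 6,371,008.8 m, which is an approximation; an ellipsoidal geodesic calculation (for example with pyproj’s `Geod` class on WGS84) would be more accurate for real measurements.

```python
import math

EARTH_RADIUS_M = 6_371_008.8  # mean Earth radius; spherical approximation

def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/lon points given in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dlat = p2 - p1
    dlon = math.radians(lon2 - lon1)
    a = math.sin(dlat / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

# The same "1 degree of longitude" at two latitudes.
at_equator = haversine_m(0.0, 0.0, 0.0, 1.0)
at_60n = haversine_m(60.0, 0.0, 60.0, 1.0)
print(f"equator: {at_equator / 1000:.1f} km, 60N: {at_60n / 1000:.1f} km")
```

A naive planar calculation would report both distances as the same "1 degree", even though the second is only about half as long on the ground.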

My experience includes working with various datums and projections, including WGS84, UTM, State Plane, and others, and I’m adept at transforming data between different coordinate systems to ensure consistent and accurate analyses.

Q 19. Describe your understanding of geostatistics and its application in terrain analysis.

Geostatistics is a branch of statistics that deals with spatially correlated data, making it invaluable in terrain analysis. It allows us to model and predict spatial variability in terrain characteristics.

- Kriging: A powerful geostatistical technique used to interpolate values at unsampled locations. Different types of kriging exist (e.g., simple, ordinary, and universal kriging), each making different assumptions about the mean of the data and its spatial autocorrelation. Ordinary kriging is a common choice.

- Semivariogram Analysis: This helps determine the spatial dependence structure of the data, providing insights into how the values correlate with distance. The semivariogram helps in selecting appropriate parameters for kriging and other geostatistical methods.

- Applications in Terrain Analysis: Geostatistics is used to interpolate elevation data from scattered points (e.g., LiDAR points) to create a continuous DEM. It’s also used to predict soil properties, vegetation cover, or other spatially variable characteristics from point samples.

For example, in a hydrological modeling project, geostatistics could be used to interpolate rainfall data from sparse rain gauge measurements across a watershed. This improved spatial coverage is crucial for accurate flood prediction and water resource management. I have extensively applied geostatistics in such applications and regularly utilize tools within ArcGIS Pro and other software for such tasks.
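The semivariogram analysis described above starts from the classical empirical estimator, γ(h) = Σ(zᵢ − zⱼ)² / (2·N(h)), computed over all point pairs whose separation falls in lag bin h. This is a minimal sketch; the function name, the binning rule (each pair goes to the nearest multiple of `lag_width`), and the sample points are assumptions. Fitting a model (spherical, exponential, and so on) and solving the kriging system would normally be left to a package such as gstat.

```python
import math
from collections import defaultdict

def empirical_semivariogram(points, lag_width):
    """Classical estimator gamma(h) = sum((z_i - z_j)^2) / (2 * N(h)).

    points: list of (x, y, z). Returns {lag_center: gamma} for each non-empty bin.
    """
    sq_sums = defaultdict(float)
    counts = defaultdict(int)
    for i in range(len(points)):
        xi, yi, zi = points[i]
        for j in range(i + 1, len(points)):
            xj, yj, zj = points[j]
            # Assign the pair to the lag bin whose center is closest to its distance.
            k = int(math.hypot(xi - xj, yi - yj) / lag_width + 0.5)
            sq_sums[k] += (zi - zj) ** 2
            counts[k] += 1
    return {k * lag_width: sq_sums[k] / (2 * counts[k]) for k in sorted(counts)}

# Hypothetical transect with a steady trend: gamma grows with lag distance.
pts = [(0.0, 0.0, 0.0), (1.0, 0.0, 1.0), (2.0, 0.0, 2.0), (3.0, 0.0, 3.0)]
print(empirical_semivariogram(pts, 1.0))
```

A semivariogram that keeps rising without leveling off, as in this toy example, is a hint that the data contain a trend, which is one reason to consider universal rather than ordinary kriging.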

Q 20. How do you handle different data formats in a terrain analysis project?

Terrain analysis often involves working with diverse data formats. Efficient data handling is essential for a successful project. I’m experienced in handling various formats, including:

- Raster formats (e.g., GeoTIFF, ERDAS IMAGINE .img): These are commonly used for elevation data (DEMs), imagery, and other gridded data. I can readily process and analyze these formats using GIS software.

- Vector formats (e.g., Shapefile, GeoJSON): These are used for representing points, lines, and polygons, such as contour lines, rivers, and boundaries. I use these formats for incorporating ancillary data into my analyses.

- Point cloud data (e.g., LAS, XYZ): These formats are often generated from LiDAR surveys and contain millions of 3D points. I use specialized software to process and filter these massive datasets efficiently.

- Proprietary formats: I have experience working with various proprietary formats and can usually convert them to more common, open formats when needed.

Data conversion and format handling are typically a crucial initial step. The choice of software and the conversion approach depend on the specific format, data volume, and the overall project workflow. For instance, processing a large point cloud dataset might require specialized software and optimized processing techniques.

Q 21. What is your experience with scripting or programming languages for geospatial data processing?

Scripting and programming are crucial for automating tasks and performing complex analyses in terrain analysis. My expertise includes:

- Python with geospatial libraries (e.g., GDAL, Rasterio, GeoPandas): I use Python extensively for automating geoprocessing tasks, such as converting data formats, performing spatial analysis, and creating custom visualizations. For example, I’ve used Python to automate the extraction of elevation profiles along specified lines, a task that would be tedious to perform manually.

- R with geostatistical packages (e.g., gstat): R provides robust tools for geostatistical analysis, which I use to model spatial patterns and interpolate missing data. I’ve developed scripts in R to perform kriging interpolation and assess the accuracy of spatial predictions.

- ModelBuilder in ArcGIS Pro: I leverage ModelBuilder to automate repetitive workflows and build customizable models for various terrain analysis tasks.

These scripting and programming skills enable efficient processing of large datasets, custom analysis development, and reproducible research. The choice of language often depends on the specific task and the available libraries and tools. For instance, I might use Python for handling raster data due to the powerful GDAL library, while R might be chosen for advanced geostatistical analysis.
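As an example of the profile-extraction task mentioned above, the core logic can be sketched in plain Python: sample evenly spaced points along a line and bilinearly interpolate the DEM at each one. The function names are hypothetical, the line endpoints are given as fractional (row, col) positions, and at least two samples are required. In a real script the grid would be read with Rasterio or GDAL, and map coordinates would be converted to row/column positions using the dataset’s geotransform (Rasterio’s `dataset.index(x, y)`, for instance, returns integer row/column indices).

```python
def bilinear(grid, row, col):
    """Bilinearly interpolate `grid` at fractional (row, col) inside the grid."""
    r0 = min(int(row), len(grid) - 2)
    c0 = min(int(col), len(grid[0]) - 2)
    fr, fc = row - r0, col - c0
    top = grid[r0][c0] * (1 - fc) + grid[r0][c0 + 1] * fc
    bottom = grid[r0 + 1][c0] * (1 - fc) + grid[r0 + 1][c0 + 1] * fc
    return top * (1 - fr) + bottom * fr

def elevation_profile(grid, start, end, n_samples):
    """Sample n_samples (>= 2) evenly spaced elevations from start to end, both (row, col)."""
    (r1, c1), (r2, c2) = start, end
    profile = []
    for k in range(n_samples):
        t = k / (n_samples - 1)
        profile.append(bilinear(grid, r1 + t * (r2 - r1), c1 + t * (c2 - c1)))
    return profile

# Hypothetical DEM rising 10 m per column toward the east.
dem = [[0.0, 10.0, 20.0], [0.0, 10.0, 20.0], [0.0, 10.0, 20.0]]
print(elevation_profile(dem, (1.0, 0.0), (1.0, 2.0), 5))
```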

Q 22. Describe a project where you had to overcome challenges in terrain data processing.

In a recent project involving landslide susceptibility mapping in a mountainous region, we faced significant challenges in processing the terrain data. The primary hurdle was the sheer volume and heterogeneity of the data sources. We had LiDAR data, aerial photography, and ground survey data, all with varying resolutions, coordinate systems, and levels of accuracy.

To overcome this, we implemented a multi-step approach. First, we rigorously checked and cleaned the data, identifying and rectifying inconsistencies and errors in individual datasets. This involved using geoprocessing tools to perform tasks like coordinate system transformation, data projection, and outlier removal. Second, we developed a robust data fusion strategy, combining the strengths of different data sources to create a comprehensive digital elevation model (DEM). We leveraged techniques such as weighted averaging and interpolation to handle data gaps and inconsistencies. Finally, we employed parallel processing techniques to speed up the computationally intensive tasks associated with large datasets, allowing us to meet project deadlines.

Q 23. How do you ensure the quality and accuracy of your terrain analysis results?

Ensuring the quality and accuracy of terrain analysis results requires a multifaceted approach that begins even before data acquisition. This starts with careful selection of appropriate data sources, considering their resolution, accuracy, and coverage.

- Data Validation: We always perform thorough data validation checks. This involves examining data for outliers, inconsistencies, and errors using visual inspection, statistical analysis, and cross-referencing with other datasets.

- Accuracy Assessment: We assess the accuracy of our DEMs and derived products by comparing them against independent ground truth data (such as surveyed checkpoints), analyzing the elevation differences, and summarizing them with statistics such as root mean square error (RMSE).

- Methodological Rigor: Choosing appropriate analytical techniques is critical. Different methods work better for different types of data and terrain features. We select methods based on the specific research question and data characteristics.

- Sensitivity Analysis: To ensure our results aren’t overly sensitive to minor data variations or parameter choices, we carry out a sensitivity analysis, systematically varying input parameters and measuring the effect on the final output.

- Peer Review: We always include a critical review, either internal or external, to independently verify the accuracy and soundness of our methods and conclusions.
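The accuracy assessment step reduces to a few summary statistics of the differences between DEM elevations and independent checkpoints. The plain-Python sketch below computes mean error (bias), RMSE, and the largest absolute error. It assumes the DEM has already been sampled at the checkpoint locations; the function name and the checkpoint values are hypothetical.

```python
import math

def vertical_accuracy(dem_z, checkpoint_z):
    """Summarize DEM-minus-checkpoint elevation differences (same units as the inputs)."""
    if not dem_z or len(dem_z) != len(checkpoint_z):
        raise ValueError("need two non-empty sequences of equal length")
    diffs = [d - c for d, c in zip(dem_z, checkpoint_z)]
    n = len(diffs)
    return {
        "mean_error": sum(diffs) / n,                       # systematic bias
        "rmse": math.sqrt(sum(e * e for e in diffs) / n),   # overall error magnitude
        "max_abs_error": max(abs(e) for e in diffs),        # worst single checkpoint
    }

# Illustrative values only: DEM heights vs. GNSS-surveyed checkpoints (m)
stats = vertical_accuracy([101.2, 98.7, 105.3, 110.0], [101.0, 99.0, 105.0, 110.4])
print(stats)   # RMSE is roughly 0.31 m here
```

Reporting the mean error alongside RMSE helps separate a systematic vertical offset from random noise.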

Q 24. What are some common errors to avoid when working with terrain data?

Common errors when working with terrain data can lead to inaccurate and misleading results. Some of the most frequent pitfalls include:

- Ignoring data quality issues: Failing to thoroughly check for errors, inconsistencies, and inaccuracies in the source data can propagate throughout the analysis, leading to flawed conclusions.

- Inappropriate data processing: Applying incorrect techniques for interpolation, resampling, or data transformation can introduce artifacts and bias into the results.

- Misinterpreting analytical results: Overlooking the limitations of the chosen analytical methods, neglecting statistical significance, or drawing unsupported conclusions from the data are common issues.

- Incorrect coordinate systems and projections: Working with data that has different coordinate reference systems without properly transforming them will lead to significant positional inaccuracies.

- Neglecting uncertainty: Not considering uncertainty in the data or in the analysis methods can lead to overconfidence in the results.

For instance, applying linear interpolation across a heavily forested region, where ground points are sparse and the underlying terrain may change abruptly, can smooth out critical features and mislead the interpretation of slope and aspect.
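This pitfall can be reproduced on a synthetic profile. The NumPy sketch below assumes a hypothetical 1 m-spaced transect crossing a 10 m scarp, keeps only one ground point every 20 m (standing in for sparse returns under canopy), and linearly interpolates back to 1 m spacing with `np.interp`. Comparing the maximum slopes shows how much of the feature is lost.

```python
import numpy as np

# Hypothetical 1 m-spaced profile with a 10 m near-vertical scarp at x = 50 m
x = np.arange(0.0, 101.0)                    # 0 .. 100 m
true_z = np.where(x < 50, 200.0, 210.0)

# Sparse ground points: one every 20 m (x = 0, 20, 40, 60, 80, 100)
sample_x = x[::20]
sample_z = true_z[::20]

# Linear interpolation back onto the 1 m grid
interp_z = np.interp(x, sample_x, sample_z)

def max_slope_deg(z, spacing=1.0):
    """Steepest slope (degrees) between neighbouring profile points."""
    return np.degrees(np.arctan(np.abs(np.diff(z)) / spacing)).max()

print(f"true max slope:         {max_slope_deg(true_z):.1f} deg")    # 84.3 deg
print(f"interpolated max slope: {max_slope_deg(interp_z):.1f} deg")  # 26.6 deg
```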

Q 25. Explain the difference between raster and vector data models in the context of terrain analysis.

Raster and vector data models represent terrain data in fundamentally different ways. Think of it like painting a picture: raster is like a mosaic of tiny tiles (pixels), each with a single value, while vector is like drawing individual lines and polygons to represent features.

- Raster data: Represents terrain as a grid of cells, each containing an attribute value (e.g., elevation, slope). Examples include DEMs derived from LiDAR or photogrammetry. Raster data is excellent for continuous surface analysis like slope and aspect calculations, but can be less efficient for storing discrete features like roads or buildings.

- Vector data: Represents terrain features as points, lines, and polygons, each with associated attributes (e.g., elevation for points, length for lines, area for polygons). Examples include contour lines or digitized features from maps. Vector data is better for representing discrete features and is more efficient for storing attribute information for specific features but can be less efficient for surface analysis.

The choice between raster and vector depends heavily on the specific application. For example, hydrological modeling often utilizes raster data, whereas a road network analysis would benefit from a vector-based approach. Often, data needs to be converted between formats during the analysis process.
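Raster suits continuous surface analysis because derivatives such as slope and aspect fall out of simple differences between neighboring cells. The minimal NumPy sketch below assumes a north-up grid with square cells (rows run north to south, columns west to east). `slope_aspect` is a hypothetical helper, and the central differences from `np.gradient` are a simplification of the 3×3 neighborhood methods (e.g., Horn's method) that many GIS tools use.

```python
import numpy as np

def slope_aspect(dem, cellsize):
    """Slope (degrees) and aspect (compass degrees, 0 = north, clockwise) of a north-up DEM."""
    dz_drow, dz_dcol = np.gradient(np.asarray(dem, dtype=float), cellsize)
    dz_dx = dz_dcol      # elevation change toward the east
    dz_dy = -dz_drow     # elevation change toward the north (row index grows southward)
    slope = np.degrees(np.arctan(np.hypot(dz_dx, dz_dy)))
    # Aspect is the bearing of steepest descent, i.e. the direction of the negative gradient
    aspect = np.degrees(np.arctan2(-dz_dx, -dz_dy)) % 360.0
    aspect = np.where(slope == 0, np.nan, aspect)   # flat cells have no defined aspect
    return slope, aspect

# Hypothetical plane dropping 10 m per 10 m cell toward the east
dem = np.tile([30.0, 20.0, 10.0], (3, 1))
slope, aspect = slope_aspect(dem, cellsize=10.0)
print(slope[1, 1], aspect[1, 1])   # about 45 degrees, facing east (90)
```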

Q 26. How do you use terrain analysis to inform decision-making in a specific application (e.g., urban planning, environmental management)?

In urban planning, terrain analysis plays a crucial role in guiding infrastructure development and mitigating risks. For example, we can use terrain data to identify areas prone to flooding, landslides, or high winds. This information is vital in determining appropriate locations for residential areas, schools, and hospitals, ensuring the safety and resilience of the urban environment.

Specifically, we can use slope analysis to identify areas unsuitable for construction because of high landslide risk, or use hydrological modeling on a DEM to simulate flood inundation zones and guide the design of drainage systems. Similarly, we can use viewshed analysis to determine the visual impact of proposed developments, factoring in the natural terrain to preserve scenic vistas or minimize visual obstructions. This allows planners to make informed decisions that promote sustainable and hazard-resilient urban development.
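Viewshed analysis is built from repeated line-of-sight tests. The plain-Python sketch below checks a single terrain profile: a point is visible from the observer if the sight line to it is at least as steep as the steepest sight line to any nearer point. It is a simplification that ignores Earth curvature, atmospheric refraction, and target height; `visible_along_profile`, the 1.7 m eye height, and the sample profile are hypothetical. A full viewshed would repeat this test along rays from the observer across the DEM.

```python
def visible_along_profile(elevations, spacing, observer_height=1.7):
    """Return one boolean per profile point: is the ground there visible from point 0?

    elevations      : ground elevations (m) sampled every `spacing` metres from the observer
    observer_height : eye height above the ground at point 0 (hypothetical default)
    """
    eye = elevations[0] + observer_height
    visible = [True]                  # the observer's own location
    max_tan = float("-inf")           # steepest sight line to any nearer point so far
    for i in range(1, len(elevations)):
        tan_angle = (elevations[i] - eye) / (i * spacing)   # tangent of the vertical angle
        visible.append(tan_angle >= max_tan)
        max_tan = max(max_tan, tan_angle)
    return visible

# Illustrative profile sampled every 10 m: a ridge at 20 m hides the next two points
print(visible_along_profile([100, 100, 105, 102, 101, 112], spacing=10.0))
# [True, True, True, False, False, True]
```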

Q 27. Describe your experience with cloud-based geospatial platforms for terrain analysis.

I have extensive experience using cloud-based geospatial platforms such as Google Earth Engine, AWS (Amazon Web Services), and ArcGIS Online for terrain analysis. These platforms offer several advantages. Firstly, they provide access to massive amounts of geospatial data, including global DEMs, satellite imagery, and climate data. Secondly, they have powerful processing capabilities, allowing for efficient handling of large datasets. Thirdly, cloud platforms offer collaborative tools, enabling teamwork on complex projects.

For example, I used Google Earth Engine to analyze global deforestation patterns using satellite imagery and a high-resolution DEM. Being able to run these analyses on massive datasets, which would have been impractical on a local workstation, was invaluable. Cloud platforms offer scalability and can reduce computational costs compared to in-house solutions, making them well suited to complex, large-scale projects.

Q 28. How do you stay current with advancements in terrain analysis and visualization techniques?

Keeping abreast of the rapid advancements in terrain analysis and visualization is crucial. I actively engage in several strategies to achieve this:

- Reading peer-reviewed journals and conference proceedings: I regularly read publications in GIScience, remote sensing, and related fields to stay informed about the latest research.

- Attending conferences and workshops: Participating in these events provides opportunities to learn from experts, network with colleagues, and discover new techniques and technologies.

- Online courses and webinars: Many online platforms offer courses and webinars on advanced geospatial analysis methods, allowing for continuous learning.

- Engaging with online communities: Participating in online forums and discussion groups allows me to exchange ideas and learn from others’ experiences.

- Experimenting with new software and tools: I actively explore and test new software and tools to become proficient in utilizing them for terrain analysis projects.

By combining these strategies, I maintain a strong understanding of current best practices and technological advancements in the field.

Key Topics to Learn for Terrain Analysis and Visualization Interview

- Digital Elevation Models (DEMs): Understanding different DEM formats (e.g., raster, TIN), data sources, and processing techniques. Practical application: Analyzing slope, aspect, and curvature for hydrological modeling.

- Terrain Classification and Feature Extraction: Identifying and classifying landforms (e.g., mountains, valleys, ridges) using algorithms and image processing techniques. Practical application: Creating habitat suitability maps for wildlife conservation.

- Spatial Analysis Techniques: Applying techniques like interpolation, surface analysis, and proximity analysis to solve real-world problems. Practical application: Determining optimal locations for infrastructure development based on terrain constraints.

- Visualization Techniques: Mastering various 2D and 3D visualization methods (e.g., shaded relief maps, contour lines, 3D models) and selecting appropriate techniques based on the data and objectives. Practical application: Communicating complex terrain information effectively to stakeholders.

- Data Processing and Management: Efficiently handling large geospatial datasets, including data cleaning, pre-processing, and post-processing. Practical application: Ensuring data accuracy and reliability for analysis.

- Geographic Information Systems (GIS) Software Proficiency: Demonstrating hands-on experience with relevant GIS software (e.g., ArcGIS, QGIS) and their functionalities for terrain analysis. Practical application: Performing spatial analysis and creating visualizations within a GIS environment.

- Remote Sensing Principles: Understanding how remote sensing data contributes to terrain analysis and visualization. Practical application: Extracting elevation information from LiDAR or satellite imagery.
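Shaded relief, one of the visualization methods listed above, is derived from slope, aspect, and a chosen sun position. The NumPy sketch below uses the common illumination formula (the cosine of the angle between the surface normal and the sun direction, scaled to 0 to 255). It assumes a north-up grid with square cells; the default sun azimuth of 315° and altitude of 45° follow a widespread convention, and `hillshade` is a hypothetical helper rather than any particular GIS tool's implementation.

```python
import numpy as np

def hillshade(dem, cellsize, azimuth=315.0, altitude=45.0):
    """Shaded relief (0-255) for a north-up DEM lit from a compass azimuth and sun altitude."""
    dz_drow, dz_dcol = np.gradient(np.asarray(dem, dtype=float), cellsize)
    dz_dx, dz_dy = dz_dcol, -dz_drow              # east and north components of the gradient
    slope = np.arctan(np.hypot(dz_dx, dz_dy))
    aspect = np.arctan2(-dz_dx, -dz_dy)           # downslope bearing, radians clockwise from north
    zenith = np.radians(90.0 - altitude)
    az = np.radians(azimuth)
    # Cosine of the angle between the surface normal and the direction to the sun
    shade = (np.cos(zenith) * np.cos(slope)
             + np.sin(zenith) * np.sin(slope) * np.cos(az - aspect))
    return np.clip(shade, 0.0, 1.0) * 255.0       # slopes facing away from the sun go black

flat = hillshade(np.full((3, 3), 100.0), cellsize=1.0)
print(flat[1, 1])   # about 180.3, i.e. 255 * cos(45 degrees)
```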

Next Steps

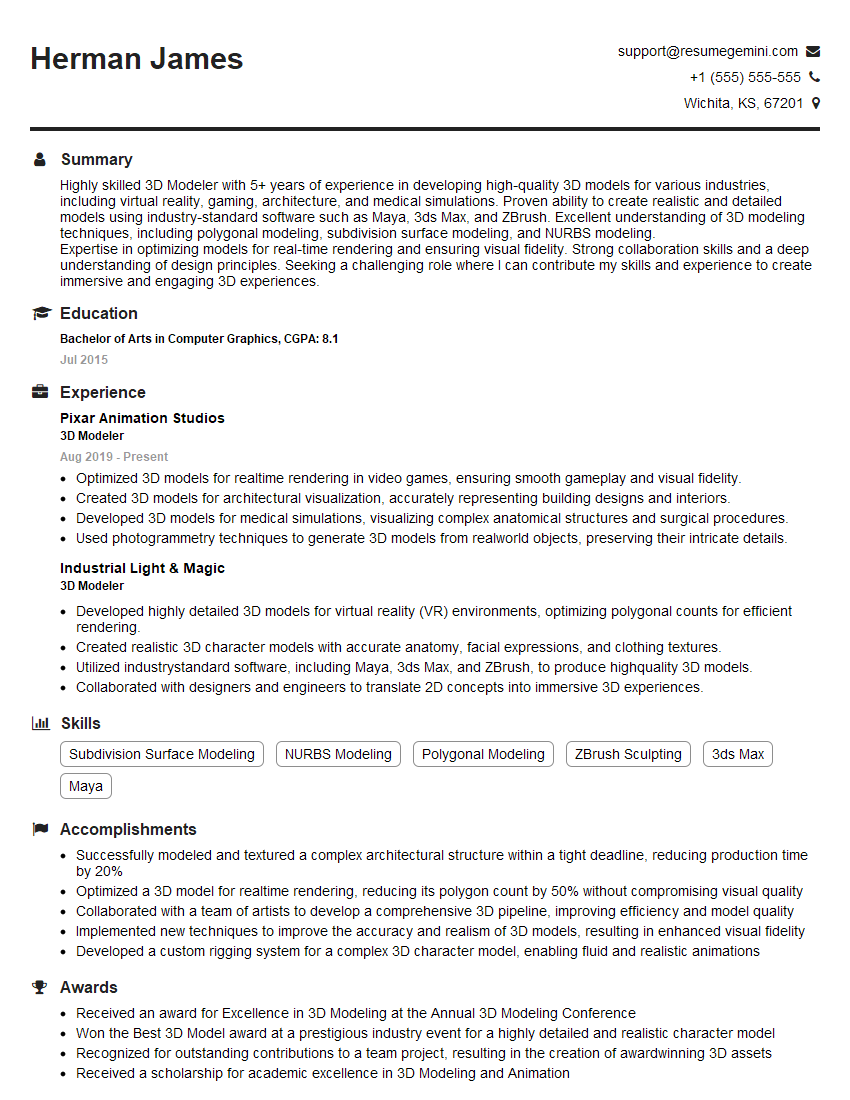

Mastering Terrain Analysis and Visualization opens doors to exciting careers in fields like environmental science, urban planning, and engineering. A strong understanding of these concepts is highly valuable and demonstrates a sought-after skillset. To maximize your job prospects, crafting a compelling and ATS-friendly resume is crucial. ResumeGemini is a trusted resource to help you build a professional and impactful resume that highlights your skills and experience. Examples of resumes tailored to Terrain Analysis and Visualization are available to help guide you. Invest time in building a strong resume; it’s your first impression to potential employers.
