Cracking a skill-specific interview, like one for Tesseract OCR, requires understanding the nuances of the role. In this blog, we present the questions you’re most likely to encounter, along with insights into how to answer them effectively. Let’s ensure you’re ready to make a strong impression.

Questions Asked in Tesseract OCR Interview

Q 1. Explain the architecture of Tesseract OCR.

Tesseract’s architecture is a sophisticated pipeline processing an image to produce text. It can be broken down into several key stages:

- Image Preprocessing: This initial step involves cleaning and enhancing the input image to improve OCR accuracy. This might include noise reduction, binarization, and skew correction. Think of it as preparing the raw ingredients before cooking.

- Layout Analysis (Page Segmentation): The preprocessed image is analyzed to identify text blocks, lines, and words. This stage is crucial because it determines how the text is structured on the page. It’s like organizing ingredients into different categories before starting a recipe.

- Text Recognition (Character Recognition): This is the core of Tesseract, where the segmented text is converted into characters. The legacy engine does this with feature extraction and pattern matching on each character’s shape and structure, while Tesseract 4 and later rely mainly on an LSTM neural network that recognizes a whole line at a time. This is the actual “cooking” of the recipe.

- Post-processing: After character recognition, the output undergoes post-processing steps like spell checking and contextual analysis to improve accuracy and readability. Think of this as the final taste test and presentation of the dish.

These stages work in a sequential manner, with the output of one stage becoming the input for the next. The efficiency and accuracy of each stage significantly impact the overall performance of the OCR system.

Q 2. Describe the different image preprocessing techniques used in Tesseract OCR.

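Tesseract performs some image processing internally through the Leptonica library, but results usually improve when the input is cleaned up beforehand. Common preprocessing techniques, each addressing a specific image imperfection, include: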

- Noise Reduction: Techniques like median filtering or Gaussian blurring smooth out random noise in the image, making the text clearer. Imagine removing stray crumbs from your kitchen counter before cooking.

- Binarization: This converts the grayscale or color image into a black-and-white image (binary image), simplifying the subsequent text recognition process. It is similar to separating ingredients into “usable” and “discarded” items.

- Skew Detection: Many scanned documents have a slight angle or skew. Algorithms like the Hough transform are used to estimate that angle. Think of checking whether the ingredients are lined up neatly in a bowl.

- Deskewing: Rotates the image by the detected angle so that text lines are aligned properly. This is applied after skew detection.

- Contrast Enhancement: Improves the contrast between text and background, making the text more prominent. It is analogous to adjusting the light in the kitchen to see the ingredients better.

The choice of preprocessing techniques depends on the quality and characteristics of the input image. For example, a highly noisy image would benefit from stronger noise reduction techniques.
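To make the binarization step concrete, here is a minimal sketch of Otsu's method, one common way to choose a single global threshold. It is written in plain Python on a list-of-rows image purely for illustration; the function names are hypothetical, and in practice an image library such as OpenCV or Leptonica would do this work.

```python
def otsu_threshold(pixels):
    """Return the 0-255 threshold that maximizes between-class variance."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(value * count for value, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w_bg, sum_bg = 0, 0
    for t in range(256):
        w_bg += hist[t]              # pixels at or below t (dark class)
        if w_bg == 0:
            continue
        w_fg = total - w_bg          # pixels above t (light class)
        if w_fg == 0:
            break
        sum_bg += t * hist[t]
        mean_bg = sum_bg / w_bg
        mean_fg = (sum_all - sum_bg) / w_fg
        var_between = w_bg * w_fg * (mean_bg - mean_fg) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


def binarize(image, threshold=None):
    """image: rows of 0-255 ints. Returns rows of 0 (dark) and 1 (light)."""
    if threshold is None:
        threshold = otsu_threshold([p for row in image for p in row])
    return [[1 if p > threshold else 0 for p in row] for row in image]
```

A single global threshold like this works well on evenly lit scans; unevenly lit images are better served by a local method.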

Q 3. What are the various page segmentation methods in Tesseract?

Tesseract’s layout analysis stage breaks the image down into meaningful text blocks, lines, and words at several levels. The process is automatic by default, but it can be steered through configuration, most directly the page segmentation mode (the `--psm` option), which tells Tesseract what kind of layout to expect.

- Block Segmentation: This divides the image into logical blocks of text (paragraphs, columns, etc.). Think of this like separating chapters or sections in a book.

- Line Segmentation: This divides blocks into individual lines of text. This is akin to identifying the separate lines within a paragraph.

- Word Segmentation: This isolates individual words within each line. This would be like picking out individual words within a sentence.

- Character Segmentation: This separates individual characters within words. This is the finest level of segmentation, analogous to individual letters in a word.

The specific algorithm used for page segmentation can be adjusted based on the document type and layout. For instance, a document with complex layouts might require a more sophisticated segmentation approach compared to a simple, well-formatted document.
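In practice, the segmentation approach is usually chosen through the page segmentation mode. The sketch below maps a few readable labels to the numeric modes printed by `tesseract --help-psm` and builds a command line from them. The label names and the helper function are illustrative assumptions; only the numbers and the `--psm` flag come from Tesseract.

```python
# Numeric values follow `tesseract --help-psm`; the dictionary keys are
# hypothetical labels chosen for this example.
PSM_MODES = {
    "osd_only": 0,        # orientation and script detection only
    "auto_osd": 1,        # automatic segmentation with OSD
    "auto": 3,            # fully automatic, no OSD (the default)
    "single_column": 4,   # one column of text of variable sizes
    "single_block": 6,    # one uniform block of text
    "single_line": 7,     # treat the image as a single text line
    "single_word": 8,     # treat the image as a single word
    "single_char": 10,    # treat the image as a single character
    "sparse_text": 11,    # find as much text as possible, in no order
    "raw_line": 13,       # single line, bypassing layout heuristics
}


def tesseract_command(image_path, output_base, layout="auto"):
    """Build the argument list for the tesseract CLI with a chosen PSM."""
    if layout not in PSM_MODES:
        raise ValueError(f"unknown layout label: {layout!r}")
    return ["tesseract", image_path, output_base,
            "--psm", str(PSM_MODES[layout])]
```

For example, a cropped single-line field would use `tesseract_command("field.png", "out", "single_line")`, while a full page would normally stay on the default.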

Q 4. How does Tesseract handle different languages and character sets?

Tesseract’s ability to handle multiple languages and character sets is a key strength. This is achieved through the use of language data files (traineddata files). Each language has a corresponding traineddata file that contains:

- Character Sets: A definition of the characters present in the language.

- Character Patterns: Statistical models representing how characters appear visually.

- Language-Specific Rules: Rules for word breaking and other language-specific aspects of text recognition.

To support a new language, a traineddata file specific to that language needs to be added. This file is typically created by training Tesseract on a large corpus of text in the target language. More, and more representative, training data generally improves accuracy. For example, the eng.traineddata file enables English processing and fra.traineddata enables French processing; the language is selected at run time with the `-l` option (for example `-l eng`).
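As a small illustration of language selection, the helper below builds the `-l` argument for one or more languages (Tesseract accepts several codes joined with `+`) and reports which requested languages are not installed. The function names are hypothetical; the list of installed codes is assumed to come from something like `tesseract --list-langs`.

```python
def language_flag(languages):
    """Build the -l argument, e.g. ["eng", "fra"] -> ["-l", "eng+fra"]."""
    codes = [code.strip() for code in languages if code.strip()]
    if not codes:
        raise ValueError("at least one language code is required")
    return ["-l", "+".join(codes)]


def missing_languages(requested, installed):
    """Return requested codes with no matching traineddata installed."""
    return [code for code in requested if code not in installed]
```

Checking for missing language data up front gives a clearer error than a failed OCR run later in the pipeline.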

Q 5. Explain the concept of a Tesseract language data file.

A Tesseract language data file (often with the extension .traineddata) is a crucial component that enables Tesseract to recognize text in a specific language. It’s essentially a model containing all the information Tesseract needs to accurately identify and interpret the characters of that language. Think of it as a dictionary and grammar book combined.

These files are not simple text files but highly structured data files created through a process called training. This training involves feeding Tesseract with a large dataset of text images in the target language. The training process teaches Tesseract to recognize the patterns and variations of characters, words, and even sentence structures specific to that language. This is why accuracy drastically improves with the correct language data file. For example, attempting to OCR a French document with an English traineddata file will yield poor results.

Q 6. How do you improve the accuracy of Tesseract OCR on noisy images?

Improving Tesseract’s accuracy on noisy images requires a multi-pronged approach, combining preprocessing techniques and configuration adjustments:

- Improved Preprocessing: Experiment with different noise reduction techniques (e.g., adaptive thresholding, median filtering), and potentially employ more advanced image enhancement methods. More aggressive noise reduction might be needed for heavily degraded images.

- Adaptive Thresholding: Adaptive thresholding is particularly useful for unevenly lit images. It dynamically adjusts the threshold based on local image properties.

- Parameter Tuning: Tesseract has many configurable parameters that influence its behavior. Experimenting with these parameters, such as those controlling noise removal, can significantly impact accuracy. This involves careful testing and iteration.

- Pre-processing using external tools: Before feeding an image into Tesseract, you could use image editing tools (GIMP, Photoshop) or libraries (OpenCV) to further enhance or clean it.

- Using a better quality image: If possible, obtain a higher-resolution scan, or use an image capture device with higher contrast.

The ideal approach often involves a combination of these strategies; there is no one-size-fits-all solution. The best settings will depend heavily on the type and level of noise present in the image. For example, an image with heavy background noise will require stronger noise reduction.

Q 7. Describe the role of adaptive thresholding in Tesseract.

Adaptive thresholding is a crucial image preprocessing technique used to convert a grayscale image to a binary image (black and white). Unlike global thresholding, which uses a single threshold value for the entire image, adaptive thresholding dynamically adjusts the threshold based on the local characteristics of the image. This is particularly important for images with uneven lighting, where a global threshold may not effectively separate text from the background.

Imagine trying to separate dark and light colored stones using a single size sieve. Some stones would slip through, while others would get stuck. Adaptive thresholding is like using a range of sieves of various sizes to better sort those stones based on their local differences.

In the context of Tesseract, adaptive thresholding helps to improve the quality of the binarized image, making it easier for the OCR engine to recognize the text. It results in cleaner character segmentation and reduces the likelihood of errors caused by uneven illumination.
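The idea can be sketched in a few lines of plain Python: each pixel is compared with the mean of its own neighborhood minus a small offset, rather than with one global value. This mirrors the mean-based variant that OpenCV exposes as `cv2.adaptiveThreshold`, but the function below is an illustrative stand-in with hypothetical parameter names, not a library API.

```python
def adaptive_threshold(image, window=3, offset=5):
    """Mean adaptive threshold on rows of 0-255 ints.

    A pixel becomes 255 (background) if it is brighter than the mean of
    its window minus `offset`, otherwise 0 (text).
    """
    if window % 2 == 0:
        raise ValueError("window must be odd")
    height, width = len(image), len(image[0])
    half = window // 2
    result = []
    for y in range(height):
        row = []
        for x in range(width):
            # Clip the window at the image borders.
            y0, y1 = max(0, y - half), min(height, y + half + 1)
            x0, x1 = max(0, x - half), min(width, x + half + 1)
            neighbours = [image[j][i]
                          for j in range(y0, y1) for i in range(x0, x1)]
            local_mean = sum(neighbours) / len(neighbours)
            row.append(255 if image[y][x] > local_mean - offset else 0)
        result.append(row)
    return result
```

On a strip that fades from bright to dim, such as `[200, 120, 200, 180, 160, 140, 120, 100, 20, 100]`, this marks only the two dark strokes as text, whereas a global cut-off of 110 would miss the first stroke and swallow the dim end of the strip.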

Q 8. Explain the difference between connected component analysis and text line detection.

Connected component analysis (CCA) and text line detection are crucial steps in Tesseract’s OCR pipeline, but they serve distinct purposes. Think of it like building a house: CCA lays the foundation, while text line detection constructs the walls.

Connected Component Analysis (CCA): This stage identifies individual components within an image based on pixel connectivity. Essentially, it groups together pixels that are adjacent and share similar characteristics (e.g., color, intensity). The result is a set of individual ‘blobs’, which might represent characters, punctuation marks, or even noise. Imagine separating individual letters from a word – CCA does the initial grouping.

Text Line Detection: This follows CCA and aims to group these individual components (the ‘blobs’ from CCA) into meaningful lines of text. It considers spatial relationships between components – proximity, vertical alignment – to identify which blobs belong to the same line. This is like arranging the bricks (individual characters) into rows (text lines) to build the wall of text.

In short: CCA identifies individual elements, while text line detection arranges them into meaningful text lines. CCA is a lower-level operation focusing on individual pixels, while text line detection is a higher-level operation focusing on spatial relationships between components.
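The two stages can be sketched on a tiny binary image. This is a simplified illustration, not Tesseract's actual implementation: blobs are found with a breadth-first search over 8-connected foreground pixels, and lines are formed by grouping blobs whose vertical extents overlap. All names are hypothetical.

```python
from collections import deque


def connected_components(binary):
    """Return (top, left, bottom, right) boxes of 8-connected blobs of 1s."""
    height, width = len(binary), len(binary[0])
    seen = [[False] * width for _ in range(height)]
    boxes = []
    for y in range(height):
        for x in range(width):
            if binary[y][x] != 1 or seen[y][x]:
                continue
            queue = deque([(y, x)])
            seen[y][x] = True
            top, left, bottom, right = y, x, y, x
            while queue:
                cy, cx = queue.popleft()
                top, bottom = min(top, cy), max(bottom, cy)
                left, right = min(left, cx), max(right, cx)
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = cy + dy, cx + dx
                        if (0 <= ny < height and 0 <= nx < width
                                and binary[ny][nx] == 1 and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            boxes.append((top, left, bottom, right))
    return boxes


def group_into_lines(boxes):
    """Group blobs with overlapping vertical extents; sort each line by x."""
    lines = []
    for box in sorted(boxes, key=lambda b: b[0]):
        for line in lines:
            if box[0] <= line["bottom"] and box[2] >= line["top"]:
                line["boxes"].append(box)
                line["top"] = min(line["top"], box[0])
                line["bottom"] = max(line["bottom"], box[2])
                break
        else:
            lines.append({"top": box[0], "bottom": box[2], "boxes": [box]})
    return [sorted(line["boxes"], key=lambda b: b[1]) for line in lines]
```

The first function works purely on pixel connectivity; the second never looks at pixels and reasons only about the positions of the resulting boxes, which is the lower-level versus higher-level split described above.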

Q 9. How does Tesseract handle different image formats?

Tesseract boasts impressive versatility, supporting a wide range of image formats. It leverages the Leptonica image processing library, which provides excellent format handling capabilities. While Tesseract itself doesn’t directly ‘decode’ the image format (that’s Leptonica’s job), it receives the image data in a format it understands – typically a pixel array. Popular formats like JPEG, PNG, TIFF, and even BMP are seamlessly handled because Leptonica efficiently converts them into the necessary internal representation. The process is transparent to the user; you just specify the image file path, and Tesseract takes care of the rest. The key is the underlying power of Leptonica and its robust support for image I/O.

Q 10. What are the common challenges faced during OCR implementation?

OCR implementation is rarely a smooth sail; several challenges often crop up.

- Image Quality: Poor resolution, noise, blur, skewed angles, uneven lighting, and artifacts significantly impact accuracy. Think of trying to read text on a crumpled, faded photograph.

- Font Variations: Unusual fonts, stylized text, or handwriting can confuse the system. Imagine trying to decipher a handwritten note compared to a cleanly printed document.

- Layout Complexity: Complex layouts with tables, columns, or overlapping text can make it difficult for the system to correctly segment and recognize text. Think about a newspaper page versus a simple paragraph.

- Language Support: While Tesseract supports many languages, accuracy can vary. It needs appropriate trained data for optimal performance in each language.

- Background Noise and Interference: Background patterns, watermarks, or other visual distractions can interfere with text recognition.

Addressing these requires pre-processing techniques like image enhancement, noise reduction, and layout analysis, along with careful selection of language data and possibly even custom training of the OCR engine.

Q 11. Explain how you would evaluate the performance of a Tesseract-based OCR system.

Evaluating Tesseract’s performance requires a multifaceted approach. We need quantitative metrics and qualitative assessments.

- Character Error Rate (CER): The number of character-level edits (substitutions, insertions, and deletions) needed to turn the OCR output into the ground truth, divided by the number of characters in the ground truth. Lower CER indicates higher accuracy.

- Word Error Rate (WER): The same calculation at the word level. A lower WER suggests better overall text recognition.

- Mean Reciprocal Rank (MRR): Useful when dealing with multiple possible OCR outputs per image, providing an average ranking of the correct output.

- Precision and Recall: Useful for evaluating the accuracy of specific components of the system, such as text line detection or character segmentation.

- Visual Inspection: Manually examining a sample of the outputs to identify systematic errors or unexpected behavior. This helps diagnose issues not readily apparent from quantitative metrics.

To perform the evaluation, you would use a test set of images – ideally, a representative sample of the type of images your system will encounter in real-world scenarios. You compare the OCR output to the ground truth (the correct text) to calculate the error rates and other metrics.
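A minimal sketch of the error-rate calculation, assuming CER and WER are defined as Levenshtein edit distance divided by the length of the ground truth. The function names are illustrative, and a production evaluation would usually also normalize whitespace and Unicode before comparing.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (strings or lists)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def cer(truth, ocr):
    """Character error rate relative to the ground-truth length."""
    if not truth:
        raise ValueError("ground truth must not be empty")
    return edit_distance(truth, ocr) / len(truth)


def wer(truth, ocr):
    """Word error rate relative to the ground-truth word count."""
    truth_words = truth.split()
    if not truth_words:
        raise ValueError("ground truth must not be empty")
    return edit_distance(truth_words, ocr.split()) / len(truth_words)
```

For the ground truth `invoice total 100` and the OCR output `invoice tota1 1O0`, two characters out of 17 are wrong, but two of the three words are affected, which is why WER is typically much higher than CER for the same output.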

Q 12. What are the different types of errors encountered in OCR, and how do you address them?

OCR errors can be broadly categorized into:

- Substitution Errors: Incorrect characters are substituted for correct ones (e.g., ‘o’ instead of ‘0’).

- Insertion Errors: Extra characters are added to the recognized text.

- Deletion Errors: Characters are omitted from the recognized text.

Addressing these errors: Error correction often involves a combination of techniques.

- Pre-processing: Improving image quality reduces errors at the source. Noise reduction, skew correction, and binarization are examples.

- Post-processing: Using techniques like spell checking, contextual analysis, and language models can help correct errors after OCR. Dictionaries and n-gram models are common tools for this.

- Training Data: Using high-quality training data specific to the document types being processed is vital. Custom training data can significantly improve accuracy for specific fonts, layouts or writing styles.

- Parameter Tuning: Optimizing Tesseract’s configuration parameters (e.g., page segmentation mode) based on the nature of your documents can significantly boost accuracy.
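To see which error type dominates in a given output, the ground truth and the OCR text can be aligned and the differences counted. The sketch below uses Python's standard `difflib.SequenceMatcher` for the alignment; it is a convenient approximation rather than a guaranteed minimum-edit alignment, and the function name is hypothetical.

```python
from difflib import SequenceMatcher


def classify_errors(truth, ocr):
    """Count substitutions, insertions, and deletions in the OCR text."""
    counts = {"substitutions": 0, "insertions": 0, "deletions": 0}
    matcher = SequenceMatcher(None, truth, ocr, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "replace":
            # Pair characters up as substitutions; any surplus on either
            # side counts as a deletion or an insertion.
            paired = min(i2 - i1, j2 - j1)
            counts["substitutions"] += paired
            counts["deletions"] += (i2 - i1) - paired
            counts["insertions"] += (j2 - j1) - paired
        elif tag == "delete":
            counts["deletions"] += i2 - i1
        elif tag == "insert":
            counts["insertions"] += j2 - j1
    return counts
```

A result dominated by substitutions tends to point at look-alike characters or the wrong language data, while many insertions or deletions more often indicate segmentation or noise problems.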

Q 13. How can you optimize Tesseract for speed and performance?

Optimizing Tesseract for speed and performance involves several strategies:

- Choosing the Right Page Segmentation Mode: Select a mode that suits your document type; avoid overly complex modes unless necessary. For simple layouts, a simpler mode will be faster.

- Image Pre-processing: Efficient pre-processing steps minimize the workload on the OCR engine. Focus on essential pre-processing steps only, avoiding unnecessary image manipulations.

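- Multi-threading: Make use of multiple CPU cores. Tesseract builds with OpenMP support can use a few threads per page, but for batches of images it is often faster to run several Tesseract processes in parallel, each limited to a single thread (for example by setting the OMP_THREAD_LIMIT environment variable to 1). Measure both on your own workload.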

- Using the appropriate tessdata: Ensure you are using trained data for the appropriate language(s). Using an unnecessarily large set of language data will increase processing time.

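- Hardware Acceleration (e.g., GPU): For very large tasks, GPU acceleration is sometimes considered. Tesseract’s own GPU support has been limited to an experimental OpenCL build option, so verify that your Tesseract version and build actually support it, and benchmark before relying on it.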

- Using appropriate Tesseract versions: Newer versions often include performance improvements. Keeping your version updated is advisable.

Remember, the optimal balance between speed and accuracy is crucial. Excessive optimization for speed might negatively impact accuracy.
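One way to use several cores on a batch of images is to run multiple `tesseract` processes side by side, each capped at one OpenMP thread. The sketch below assumes the `tesseract` executable is on the PATH; the helper names, the default of four workers, and the choice of `--psm 6` are illustrative assumptions to tune for your own documents.

```python
import os
import subprocess
from concurrent.futures import ThreadPoolExecutor


def single_thread_env(base_env=None):
    """Copy the environment and cap OpenMP at one thread per process."""
    env = dict(os.environ if base_env is None else base_env)
    env["OMP_THREAD_LIMIT"] = "1"
    return env


def ocr_command(image_path, lang="eng", psm=6):
    """Command that writes recognized text to standard output."""
    return ["tesseract", image_path, "stdout", "-l", lang, "--psm", str(psm)]


def ocr_one(image_path):
    """Run tesseract on one image and return (path, text)."""
    result = subprocess.run(ocr_command(image_path), env=single_thread_env(),
                            capture_output=True, text=True, check=True)
    return image_path, result.stdout


def ocr_batch(image_paths, workers=4):
    """OCR many images concurrently; each worker waits on a child process."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(pool.map(ocr_one, image_paths))
```

Threads are sufficient here because the heavy work happens in the child processes; the Python side only waits for them to finish.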

Q 14. Discuss the advantages and disadvantages of using Tesseract compared to other OCR engines.

Tesseract holds a strong position in the OCR landscape, but like any tool, it has strengths and weaknesses.

Advantages:

- Open-source and free: This makes it accessible to a broad user base and allows customization.

- Wide language support: It supports a vast number of languages, although accuracy can vary.

- Active community and development: Constant updates and improvements ensure it remains competitive.

- High accuracy for many use cases: Its accuracy is often comparable to commercial solutions, especially with proper configuration and training.

Disadvantages:

- Can struggle with complex layouts: It can be challenged by documents with intricate tables, overlapping text, or unusual layouts.

- Accuracy can be sensitive to image quality: Poor image quality will inevitably impact accuracy.

- May require pre- and post-processing: Achieving high accuracy often needs additional processing steps.

- Learning curve: While easy to use for basic tasks, achieving optimal performance requires understanding its parameters and intricacies.

Compared to commercial OCR engines, Tesseract offers a compelling balance of performance and cost-effectiveness. Commercial solutions often have better handling of complex layouts and advanced features, but come with a price tag.

Q 15. Describe your experience with training Tesseract on custom datasets.

Training Tesseract on custom datasets is crucial for achieving high accuracy on specific document types or handwriting styles. It involves creating a training data set consisting of images and their corresponding ground truth transcriptions. This dataset is then used to fine-tune Tesseract’s internal language models. The process typically involves several steps:

- Data Collection and Preparation: Gather a representative sample of images that reflect the characteristics of the documents you want to process. This may involve scanning documents, taking photographs, or using digital copies. Ensure the images are of high quality and clearly readable. You’ll also need to accurately transcribe each image. Tools like ABBYY FineReader can help with this, but manual verification is usually necessary.

- Data Formatting: Tesseract uses a specific format for its training data, typically involving boxed text regions (using a bounding box to specify the location of each word or character). Tools like `tesseract-trainer` can assist with this, though creating the training data in the correct format requires care and attention to detail.

- Training: Use the command-line tools provided with Tesseract (like `tesseract` and `unicharset_extractor`) to generate the necessary training data files. This process involves multiple iterations, adjusting parameters as needed to optimize performance. This phase can be computationally intensive and may require a powerful machine.

- Testing and Iteration: After training, thoroughly test the model on a separate validation set. This helps identify areas for improvement. Iteratively refine the training dataset, adjust training parameters, and retrain until you achieve a satisfactory accuracy level.

For example, if I needed to improve Tesseract’s performance on handwritten historical receipts, I would gather a large dataset of such receipts, meticulously transcribe them, format the data correctly, and then train a custom Tesseract model. The key is having a high-quality, representative dataset. A poorly prepared dataset will lead to poor results regardless of the training process.

Career Expert Tips:

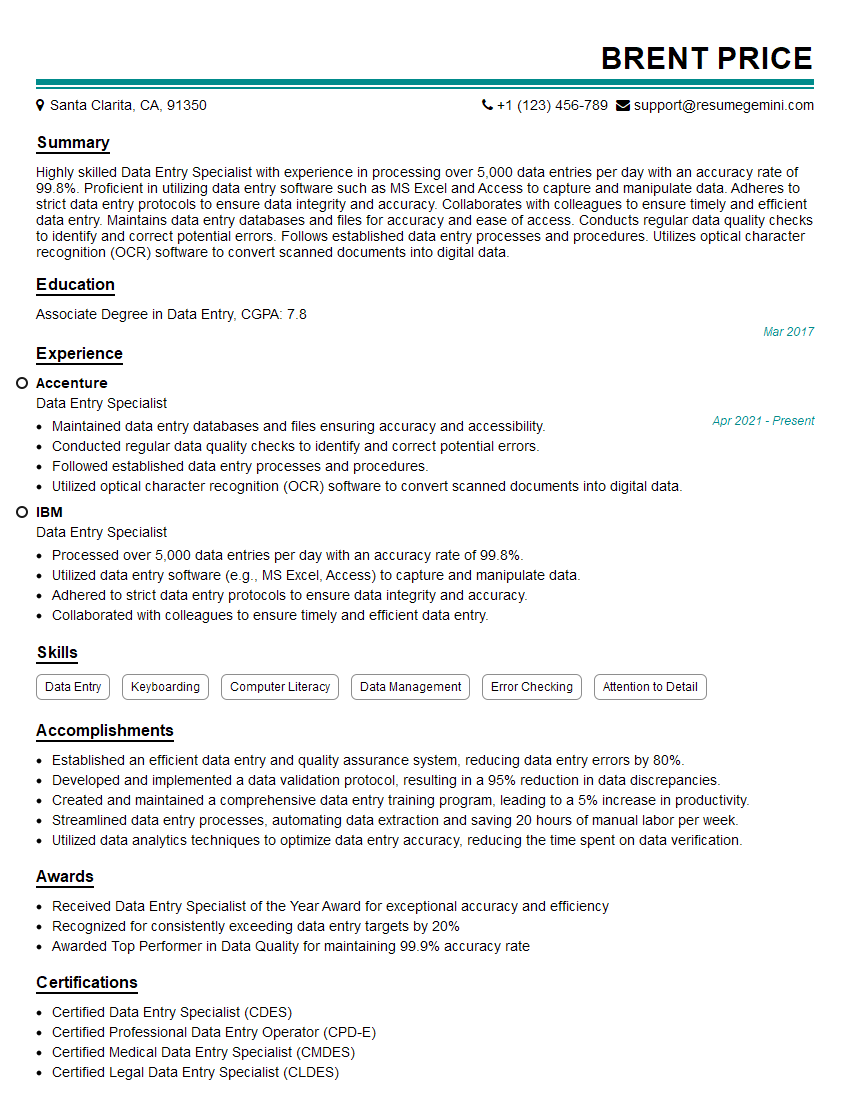

- Ace those interviews! Prepare effectively by reviewing the Top 50 Most Common Interview Questions on ResumeGemini.

- Navigate your job search with confidence! Explore a wide range of Career Tips on ResumeGemini. Learn about common challenges and recommendations to overcome them.

- Craft the perfect resume! Master the Art of Resume Writing with ResumeGemini’s guide. Showcase your unique qualifications and achievements effectively.

- Don’t miss out on holiday savings! Build your dream resume with ResumeGemini’s ATS optimized templates.

Q 16. Explain your understanding of LSTM networks in the context of Tesseract.

Long Short-Term Memory (LSTM) networks are a type of recurrent neural network (RNN) used in Tesseract’s newer versions (Tesseract 4 and later) for improved recognition accuracy. Unlike traditional RNNs, LSTMs are designed to mitigate the vanishing gradient problem, which allows them to learn long-range dependencies in sequential data, such as the characters in a line of text. In Tesseract, the LSTM is part of the recognition engine and works on one text line at a time, which helps it model the relationships between neighboring characters and words more effectively. Layout analysis remains a separate stage.

Specifically, the LSTM network in Tesseract takes the feature vectors extracted from the image as input. These feature vectors represent the visual characteristics of the characters. The LSTM processes these vectors sequentially, capturing contextual information from neighboring characters and words. This contextual information is crucial for disambiguating ambiguous characters or correcting errors based on the surrounding text.

Think of it like reading a sentence: you don’t process each word in isolation. You understand the meaning based on the context of previous and subsequent words. The LSTM network in Tesseract does something similar, allowing it to make more informed decisions about character recognition.

Q 17. How do you handle skew detection and correction in Tesseract?

Skew detection and correction are critical preprocessing steps in OCR, as even a slight rotation can significantly impair Tesseract’s accuracy. Common methods for handling skew include:

- Projection Profile Analysis: This method sums the text pixels along each row of the image (the horizontal projection profile). When text lines are level, the profile shows sharp peaks at the lines and deep valleys between them; when the page is skewed, the peaks smear out. The skew angle is estimated by trying a range of candidate angles and keeping the one that gives the sharpest profile.

- Hough Transform: A more robust method, the Hough transform can detect lines in the image, even those that are partially obscured or fragmented. By analyzing the orientation of the detected lines, the skew angle can be estimated more accurately.

- Adaptive Thresholding and Binarization: Proper preprocessing, including adaptive thresholding, improves the accuracy of skew detection algorithms because they operate more effectively on binary images.

Once the skew angle is determined, the image is rotated using image processing techniques to correct the skew. Libraries like OpenCV are commonly used for this step. In this workflow the rotation is a pre-processing step done before feeding the image to the OCR engine, rather than something left to Tesseract. The quality of the skew correction is crucial; an incorrect correction can lead to further errors. Therefore, careful tuning and selection of appropriate algorithms are essential.

In my experience, combining multiple methods, such as projection profile analysis and the Hough transform, often provides more robust skew detection, especially when dealing with noisy or complex images.
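The projection-profile idea can be sketched without any image library. The function below takes the coordinates of text pixels, tries candidate angles, shears the points by each angle, and keeps the angle whose row histogram is most concentrated (measured by the sum of squared row counts). The search range, step size, and scoring function are illustrative assumptions, and the returned angle is the slope of the text lines in image coordinates with y pointing down.

```python
import math
from collections import Counter


def estimate_skew_degrees(points, max_angle=10.0, step=0.5):
    """Estimate text-line slope in degrees from (x, y) text pixels."""
    best_angle, best_score = 0.0, -1.0
    candidates = int(round(2 * max_angle / step))
    for i in range(candidates + 1):
        angle = -max_angle + i * step
        slope = math.tan(math.radians(angle))
        # Undo the candidate slope; level lines then collapse onto a few rows.
        rows = Counter(int(round(y - x * slope)) for x, y in points)
        score = sum(count * count for count in rows.values())
        if score > best_score:
            best_angle, best_score = angle, score
    return best_angle
```

The estimate is only as fine as the step size, so a coarse pass followed by a finer search around the best candidate is a common refinement. The image would then be rotated by the estimated angle with an image library before OCR.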

Q 18. Describe your experience with integrating Tesseract with other systems or programming languages.

I’ve extensively integrated Tesseract with various systems and programming languages. The most common approach is using Tesseract’s command-line interface or its APIs (Application Programming Interfaces).

- Command-line Interface: This is straightforward for simple tasks. You can execute Tesseract commands directly from the terminal or script them. For example, the following command extracts text from an image named `image.png`:

`tesseract image.png output`

- Programming Languages: Tesseract’s APIs offer more control and flexibility. Wrappers exist for numerous languages, including Python (using libraries like `pytesseract`), Java, C++, and C#. These wrappers simplify interactions, enabling you to integrate Tesseract seamlessly into your applications. For instance, in Python, using `pytesseract`, you can easily extract text from an image within a larger application.

In past projects, I have used Python’s pytesseract to build OCR pipelines for processing large volumes of scanned documents. This involved integrating Tesseract with other libraries for image preprocessing (like OpenCV), post-processing (using regular expressions or natural language processing techniques), and database management. Understanding the strengths and limitations of Tesseract’s API and its interaction with other systems is key to building effective OCR solutions.
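A minimal sketch of such an integration is shown below. It assumes the `pytesseract` and Pillow packages and the Tesseract binary are installed; they are imported lazily so the surrounding pipeline can be exercised without them. The `extract_text` wrapper and its injectable `ocr` argument are design choices made for this example, not part of any library.

```python
import re


def pytesseract_ocr(image_path, lang="eng"):
    """Run Tesseract through pytesseract (third-party packages required)."""
    import pytesseract            # imported lazily: optional dependency
    from PIL import Image
    with Image.open(image_path) as img:
        return pytesseract.image_to_string(img, lang=lang)


def extract_text(image_path, ocr=pytesseract_ocr):
    """OCR one image and return tidy text with blank lines removed."""
    raw = ocr(image_path)
    lines = [re.sub(r"[ \t]+", " ", line).strip() for line in raw.splitlines()]
    return "\n".join(line for line in lines if line)
```

Passing the OCR function as a parameter keeps the clean-up logic testable with a stub, for example `extract_text("scan.png", ocr=lambda path: "Hello   world\n")`, and makes it easy to swap in a preprocessing step or a different engine later.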

Q 19. How would you debug a Tesseract OCR system that is producing inaccurate results?

Debugging a Tesseract OCR system that produces inaccurate results requires a systematic approach. It’s crucial to consider that poor results often stem from a combination of factors, rather than a single cause.

- Image Quality Assessment: The first step is to examine the quality of the input images. Low resolution, poor lighting, noise, or artifacts can dramatically affect Tesseract’s accuracy. Preprocessing steps like noise reduction, binarization (converting to black and white), and deskewing can significantly improve results.

- Preprocessing Evaluation: Carefully evaluate your preprocessing steps. Issues in this stage (e.g., improper thresholding, aggressive noise reduction) can introduce artifacts that mislead Tesseract.

- Language Data Check: Ensure you’ve specified the correct language data file (e.g., `eng.traineddata` for English). Using the wrong language data will inevitably produce inaccurate results.

- Configuration Parameters: Tesseract offers various configuration parameters that influence its performance. Experiment with different parameters (e.g., page segmentation mode) to find settings optimized for your specific type of documents.

- Test with Subsets: If you’re processing a large dataset, test Tesseract on smaller subsets to isolate the source of errors. This might reveal problems with particular document types, handwriting styles, or image quality issues.

- Post-processing Analysis: Incorrect recognition is often due to errors in specific characters or words. Analyze the output to identify patterns of errors, which can guide adjustments to preprocessing or configuration parameters.

For example, if Tesseract consistently misreads a particular character, it might indicate a need for more focused preprocessing (e.g., enhancing contrast around that character) or additional training data for that specific character.

Q 20. Explain the concept of confidence scores in Tesseract and how to utilize them.

Confidence scores in Tesseract represent the likelihood that a recognized character or word is correct. They are typically expressed as a percentage (e.g., 95%). Higher confidence scores indicate greater certainty, while lower scores suggest potential errors. These scores are invaluable for evaluating and improving the accuracy of Tesseract’s output.

Utilizing confidence scores effectively requires several strategies:

- Filtering Output: You can filter the OCR results, retaining only those with confidence scores above a certain threshold (e.g., keeping only words with confidence above 80%). This reduces the number of errors in the final output.

- Error Correction: Confidence scores can guide post-processing steps. For instance, words with low confidence scores can be flagged for manual review or passed to a more sophisticated error correction module.

- Dynamic Confidence Thresholds: You can adjust the confidence threshold based on the characteristics of the input documents. For example, a lower threshold might be acceptable for noisy images, while a higher threshold is appropriate for clean, high-quality images.

- Performance Metrics: Confidence scores make it possible to trade precision against recall. Measuring both at several thresholds on a labeled test set shows how many correct words are lost and how many errors are removed at each setting, which pinpoints a sensible operating point.

I’ve used confidence scores in many projects to improve efficiency. By automatically filtering out low-confidence results, I significantly reduced the need for manual review, accelerating the entire process. The key is selecting an appropriate confidence threshold based on the application requirements and balancing accuracy with efficiency.
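The filtering strategy might look like the sketch below. It assumes word-level results in the dictionary form returned by `pytesseract.image_to_data(..., output_type=pytesseract.Output.DICT)`, which has parallel `text` and `conf` lists and uses a confidence of -1 for non-word layout rows. The 80-point threshold and the function names are illustrative.

```python
def split_by_confidence(data, threshold=80.0):
    """Split recognized words into (kept, flagged) by confidence."""
    kept, flagged = [], []
    for text, conf in zip(data["text"], data["conf"]):
        conf = float(conf)        # values may arrive as str, int, or float
        if conf < 0 or not text.strip():
            continue              # skip block/line rows that carry no word
        target = kept if conf >= threshold else flagged
        target.append((text, conf))
    return kept, flagged


def ocr_word_data(image_path, lang="eng"):
    """Fetch word-level data from Tesseract (third-party packages required)."""
    import pytesseract            # imported lazily: optional dependency
    from PIL import Image
    with Image.open(image_path) as img:
        return pytesseract.image_to_data(
            img, lang=lang, output_type=pytesseract.Output.DICT)
```

Words in the `flagged` list can be routed to manual review or a correction step instead of being silently dropped.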

Q 21. What is the role of post-processing in improving the accuracy of Tesseract results?

Post-processing plays a crucial role in enhancing the accuracy of Tesseract results. Raw OCR output often contains errors, inconsistencies, or artifacts. Post-processing aims to refine this output, correcting errors and improving overall readability.

Common post-processing techniques include:

- Spell Checking: Employing a spell checker to identify and correct misspelled words.

- Contextual Correction: Using natural language processing (NLP) techniques to identify and correct errors based on the context of surrounding words or sentences.

- Regular Expressions: Utilizing regular expressions to standardize or clean the text (e.g., removing extra whitespace, normalizing formatting).

- Noise Removal: Removing irrelevant characters or symbols that might have been incorrectly recognized by Tesseract.

- Layout Analysis: Analyzing the layout of the text to improve understanding of tables, columns, and other structural elements. This often involves using additional tools or libraries.

For instance, if Tesseract misinterprets the number ‘1’ as ‘l’ (lowercase L), a simple regular expression could identify and correct this common error. Similarly, spell checking can rectify words that are incorrectly recognized due to character misidentification or ambiguity. In practice, a combination of these techniques is usually employed. The choice of post-processing methods will depend on the specific requirements of the application and the nature of the input documents.
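A regular-expression clean-up of this kind can be sketched as follows. The rule used here, replacing letter look-alikes only inside tokens that consist of digits and look-alikes and are at least half digits, is an illustrative heuristic and would need tuning against real output; the function names are hypothetical.

```python
import re

# Letters that OCR commonly confuses with digits.
_LOOKALIKES = str.maketrans({"l": "1", "I": "1", "O": "0", "o": "0"})


def fix_numeric_tokens(text):
    """Repair look-alike letters inside tokens that are mostly digits."""
    def repair(match):
        token = match.group(0)
        digits = sum(ch.isdigit() for ch in token)
        if digits * 2 >= len(token):
            return token.translate(_LOOKALIKES)
        return token              # probably a real word such as "lol"
    return re.sub(r"\b[0-9lIOo]+\b", repair, text)


def clean_ocr_text(text):
    """Collapse runs of spaces, trim lines, and repair numeric tokens."""
    lines = [re.sub(r"[ \t]+", " ", line).strip() for line in text.splitlines()]
    return fix_numeric_tokens("\n".join(line for line in lines if line))
```

Restricting the substitution to mostly numeric tokens avoids corrupting ordinary words, which a blanket replacement of every `l` or `O` would do.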

Q 22. Describe different methods for handling tables in Tesseract.

Tesseract doesn’t inherently understand tables; it sees them as blocks of text. Extracting tabular data requires additional processing before or after OCR. Several methods exist:

- Layout Analysis: Pre-processing the image to identify table structures using libraries like OpenCV. This involves detecting lines, identifying cells based on their boundaries, and ordering them. This is often the most robust approach, especially for complex tables.

- Regular Expressions (Regex): If the table has a consistent structure, regex can be used to parse the extracted text. This method is less robust and struggles with inconsistent formatting.

- Machine Learning (ML): Advanced techniques involve training ML models (like those built with TensorFlow or PyTorch) to recognize table structures and extract data directly from the image. This is the most powerful but also the most complex approach, requiring significant labeled data for training.

- Tesseract’s built-in features (with limitations): While Tesseract doesn’t directly offer table extraction, its page segmentation might provide some level of structural information that can be further processed. However, this is often unreliable.

Example: Imagine an invoice. Using OpenCV, we could detect horizontal and vertical lines defining the cells. Then, Tesseract could extract text from each identified cell, resulting in a structured representation of the invoice data. A CSV file could then be generated.
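When ruling lines are absent or hard to detect, a simpler alternative is to rebuild rows from word positions. The sketch below assumes each word comes with its left and top pixel coordinates (for instance from Tesseract's word-level output), groups words whose top coordinates are within a tolerance into one row, and writes the result as CSV. The tolerance, the one-word-per-cell simplification, and the function names are assumptions of this example.

```python
import csv
import io


def words_to_rows(words, row_tolerance=10):
    """Group (left, top, text) words into rows ordered left to right."""
    rows = []
    for left, top, text in sorted(words, key=lambda w: (w[1], w[0])):
        if rows and abs(top - rows[-1]["top"]) <= row_tolerance:
            rows[-1]["cells"].append((left, text))
        else:
            rows.append({"top": top, "cells": [(left, text)]})
    return [[text for _, text in sorted(row["cells"])] for row in rows]


def rows_to_csv(rows):
    """Serialize rows to CSV text."""
    buffer = io.StringIO()
    csv.writer(buffer, lineterminator="\n").writerows(rows)
    return buffer.getvalue()
```

Real tables with multi-word cells or merged columns need an extra step that clusters words by horizontal position, which is where the line-detection approach described above becomes more reliable.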

Q 23. How do you deal with images containing both text and graphics?

Images with mixed text and graphics require a multi-step approach. The key is to separate the text from the graphics before OCR processing. Here’s how:

- Image Preprocessing: Employ image processing techniques to isolate text regions. This might involve thresholding, noise reduction, and potentially more sophisticated methods like connected component analysis to distinguish text from graphic elements.

- Layout Analysis (Again!): Tools like OpenCV are vital here to identify regions of interest (ROIs) containing text. These ROIs can then be processed separately by Tesseract.

- Selective OCR: After identifying text regions, only these are fed to Tesseract. This prevents graphic elements from interfering with the OCR process, increasing accuracy.

Example: Consider a marketing flyer with text overlaid on a picture. We’d use OpenCV to segment the image, isolating text blocks. Then, only these isolated blocks would be passed to Tesseract for OCR.
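A minimal sketch of the selective OCR step, assuming candidate region boxes have already been found (for example by contour detection). The geometric thresholds in `looks_like_text` are illustrative guesses, and both function names are hypothetical.

```python
from typing import Callable, List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, width, height)

def looks_like_text(box: Box, min_height: int = 8, max_height: int = 80,
                    min_aspect: float = 1.5) -> bool:
    """Heuristic: text lines tend to be short and wide; photos and specks are not."""
    _, _, width, height = box
    return min_height <= height <= max_height and width / height >= min_aspect

def ocr_text_regions(image, boxes: List[Box], ocr: Callable) -> List[str]:
    """Crop each text-like box from a PIL-style image and OCR only those crops."""
    results = []
    for left, top, width, height in filter(looks_like_text, boxes):
        # Pillow's crop expects (left, upper, right, lower).
        crop = image.crop((left, top, left + width, top + height))
        results.append(ocr(crop))
    return results

# A text line, a full-page photo, and a noise speck.
candidates = [(20, 30, 300, 24), (0, 0, 640, 480), (50, 400, 6, 6)]
print([b for b in candidates if looks_like_text(b)])
# prints: [(20, 30, 300, 24)]
```

With Pillow and pytesseract installed, `ocr_text_regions(Image.open(path), boxes, pytesseract.image_to_string)` would run Tesseract on the surviving regions only.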

Q 24. How would you optimize Tesseract for a specific type of document (e.g., invoices, forms)?

Optimizing Tesseract for a specific document type like invoices or forms demands a tailored approach:

- Training Data: Create a custom training dataset reflecting the characteristics of your target document (fonts, layout, styles). Fine-tuning on document-specific data can improve accuracy noticeably when the stock models struggle with those characteristics.

- Preprocessing: Develop a preprocessing pipeline specifically suited to the document type. This might include deskewing, noise reduction, or binarization techniques tailored to the document’s image quality.

- Configuration Parameters: Fine-tune Tesseract’s configuration parameters (tessedit_char_whitelist, page segmentation modes, etc.) based on experimental results. This often requires iterative testing.

- Post-processing: Implement post-processing routines to correct common errors, such as using regular expressions or rule-based systems to standardize data formats.

Example (Invoices): Fine-tune Tesseract on a dataset of invoices so it copes with their fonts and number formats, then locate common invoice fields (invoice number, date, amounts, etc.) in post-processing. Develop preprocessing to handle variations in font sizes and layouts.
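To make the configuration step concrete, here is a small sketch that assembles a config string from a page segmentation mode and a character whitelist. The helper `build_tesseract_config` is hypothetical; `--psm` and `tessedit_char_whitelist` are real Tesseract options, though it is worth checking how your Tesseract version honors the whitelist.

```python
def build_tesseract_config(psm: int = 6, whitelist: str = "") -> str:
    """Assemble a Tesseract command-line config string."""
    if not 0 <= psm <= 13:
        raise ValueError("page segmentation mode must be between 0 and 13")
    parts = [f"--psm {psm}"]
    if whitelist:
        # Restrict recognition to the given characters (no spaces handled here).
        parts.append(f"-c tessedit_char_whitelist={whitelist}")
    return " ".join(parts)

# PSM 7 treats the image as a single text line, which suits a cropped amount field.
amount_config = build_tesseract_config(psm=7, whitelist="0123456789.,")
print(amount_config)
# prints: --psm 7 -c tessedit_char_whitelist=0123456789.,
```

With pytesseract, the string would be passed as `pytesseract.image_to_string(image, config=amount_config)`. Comparing a few PSM and whitelist combinations on a held-out sample is the iterative testing mentioned above.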

Q 25. What are some of the limitations of Tesseract OCR?

Despite its strengths, Tesseract has limitations:

- Complex Layouts: Struggles with intricate layouts, heavily formatted documents, or documents with overlapping text.

- Low-Quality Images: Poor image quality (blurry, noisy, low resolution) significantly impacts accuracy.

- Handwriting Recognition: Performance on handwritten text is generally lower than on printed text.

- Non-Latin Scripts: While support exists for numerous languages, accuracy can vary, particularly for less commonly used scripts.

- Tables and Complex Graphics: As mentioned earlier, handling tables and graphics effectively often requires additional preprocessing and post-processing.

These limitations highlight the need for careful preprocessing, post-processing, and potentially the use of supplementary techniques for improved results.

Q 26. How would you improve Tesseract’s performance on handwritten text?

Improving Tesseract’s performance on handwritten text is challenging but achievable:

- Training Data: The most impactful improvement comes from training Tesseract on a large dataset of handwritten text. This dataset should be representative of the type of handwriting the system will encounter (cursive, print, etc.).

- Preprocessing: Advanced preprocessing steps like noise reduction, skew correction, and binarization are crucial to clean up the image before OCR. Consider using techniques that enhance the contrast between characters and background.

- LSTM-based Models: Tesseract 4 and later include a recognition engine based on LSTM neural networks. Using it, rather than the legacy engine, generally gives better results and is the starting point for fine-tuning on handwriting.

- Alternative OCR Engines: Consider using OCR engines specifically designed for handwriting recognition; many offer superior performance in this area, although they might require more setup and configuration.

Example: If you need to process historical documents with handwritten notes, gathering a sample of similar handwriting and fine-tuning Tesseract on this data can improve results considerably, although a dedicated handwriting engine may still perform better.

Q 27. What are some alternative OCR engines and their strengths compared to Tesseract?

Several alternative OCR engines exist, each with its strengths and weaknesses:

- Google Cloud Vision API: A cloud-based service offering excellent accuracy, particularly on modern, clean documents. It handles various languages well and integrates easily into applications but has costs associated with usage.

- Amazon Textract: Like Google Cloud Vision, it is a cloud-based service, and it is known for its strong table and form extraction capabilities. It is also billed by usage.

- EasyOCR: A popular open-source option supporting many languages and offering good accuracy. It does not build on Tesseract; it uses its own deep learning models on top of PyTorch, and its simple Python API makes it easy to try on straightforward use cases.

- ABBYY FineReader: A commercial product with a long history, often praised for its accuracy and advanced features, including handling complex layouts and languages. However, it is expensive.

The choice of engine depends on factors like budget, required accuracy, document types, and ease of integration into your workflow.

Q 28. How do you ensure the security and privacy of data processed by Tesseract OCR?

Ensuring data security and privacy when using Tesseract OCR is paramount:

- Data Minimization: Process only the necessary data. Avoid uploading or processing sensitive information beyond what’s required for OCR.

- Secure Storage: Store processed data securely using encryption and access control mechanisms. Avoid storing sensitive information on insecure systems.

- Compliance: Adhere to relevant data privacy regulations (GDPR, CCPA, etc.).

- Data Anonymization: Consider techniques like data masking or pseudonymization to protect individual identities when dealing with sensitive personal data.

- On-Premise Deployment: Tesseract runs locally, so documents never have to leave your environment. Deploying it on your own servers or on cloud instances under your control gives you more control over the data than sending images to a third-party cloud OCR API.

It’s essential to treat OCR-processed data like any other sensitive information, applying best practices for data security and privacy.
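As one possible form of data masking, the sketch below redacts e-mail addresses and card-like digit runs from OCR output before it is stored. The patterns and the `mask_sensitive` helper are illustrative assumptions; real deployments need patterns matched to their own data and regulations.

```python
import re

# Illustrative patterns only, applied in the order listed.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "CARD": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}

def mask_sensitive(text: str) -> str:
    """Replace each match with a bracketed label such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

print(mask_sensitive("Contact jane.doe@example.com, card 4111 1111 1111 1111."))
# prints: Contact [EMAIL], card [CARD].
```

Masking at this point means the unredacted text only exists in memory during processing, which supports the data minimization goal above.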

Key Topics to Learn for Tesseract OCR Interview

- Tesseract Architecture: Understand the core stages of Tesseract, including image preprocessing, layout analysis (page segmentation), text recognition, and post-processing.

- Training Data and Language Models: Learn how Tesseract uses training data to improve accuracy and adapt to different languages and writing styles. Explore the concept of language models and their impact on OCR performance.

- Preprocessing Techniques: Master image enhancement techniques like noise reduction, binarization, and skew correction, and understand their impact on OCR accuracy.

- Post-processing Techniques: Familiarize yourself with methods to improve the output of Tesseract, such as spell checking, context-based correction, and rule-based cleanup with regular expressions.

- Performance Optimization: Explore techniques to optimize Tesseract’s speed and accuracy, such as using different page segmentation modes and adjusting configuration parameters.

- Practical Applications: Discuss real-world applications of Tesseract, such as document digitization, automated data entry, and information extraction from images. Be prepared to discuss specific use cases and challenges.

- Error Handling and Debugging: Understand common errors encountered during OCR and methods for debugging and improving accuracy. This includes analyzing error logs and identifying sources of inaccuracy.

- Integration with other technologies: Discuss how Tesseract can be integrated into larger systems and workflows, such as using it as a component within a larger application or pipeline.

Next Steps

Mastering Tesseract OCR opens doors to exciting career opportunities in fields like data science, software engineering, and document processing. A strong understanding of this technology significantly enhances your value to potential employers. To maximize your job prospects, crafting an ATS-friendly resume is crucial. ResumeGemini is a trusted resource to help you build a professional and impactful resume that highlights your Tesseract skills effectively. Examples of resumes tailored to Tesseract OCR are available within ResumeGemini to guide your creation process, ensuring your qualifications shine through.
