Cracking a skill-specific interview, such as one focused on troubleshooting and resolving production issues, requires understanding the nuances of the role. In this blog, we present the questions you’re most likely to encounter, along with insights into how to answer them effectively. Let’s ensure you’re ready to make a strong impression.

Questions Asked in a Troubleshooting and Resolving Production Issues Interview

Q 1. Describe your experience troubleshooting production issues in a high-pressure environment.

Troubleshooting production issues in high-pressure environments demands a calm, methodical approach combined with quick thinking. I’ve worked in situations where a critical system failure impacted thousands of users, leading to immediate revenue loss and reputational damage. My experience in these scenarios involved prioritizing immediate impact mitigation while concurrently investigating the root cause. For instance, during a recent incident involving a database outage, my team and I immediately implemented a failover mechanism to restore partial functionality. This prevented further damage while we worked to identify the source of the problem – a misconfigured replication setting.

Maintaining composure under pressure is crucial. I rely on clear communication with the team, stakeholders, and impacted users to ensure everyone is informed and working collaboratively. Effective use of communication tools, regular updates, and transparent reporting help manage expectations and alleviate stress during these critical situations.

Q 2. Explain your process for identifying the root cause of a production issue.

My process for identifying the root cause follows a structured approach, often using a variation of the 5 Whys technique. I start by clearly defining the problem: What exactly is broken? Then I gather data from various sources – logs, monitoring dashboards, user reports – to understand the symptoms. This often involves a systematic examination of logs to identify error messages, unusual patterns, and timestamps correlated to the issue’s onset.

Next, I systematically investigate potential causes. This might involve checking server resource utilization (CPU, memory, disk I/O), network connectivity, application code, or database performance. I use tools like debuggers, network sniffers, and database query analyzers to pinpoint the exact location and nature of the problem. The ‘5 Whys’ technique helps drill down from the surface symptoms to the underlying cause. For example, if a website is slow (Why 1?), it’s because the database is slow (Why 2?), which is because there are too many concurrent connections (Why 3?), which is because of a concurrency bug in the application code (Why 4?), which was caused by a missed testing phase (Why 5?). Once the root cause is identified, I document all findings and steps taken to aid in preventing future occurrences.
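To make the log-examination step concrete, here is a minimal Python sketch that counts error lines per minute and reports the first minute in which errors spike. The log layout (`<ISO timestamp> <LEVEL> <message>`), the sample lines, and the threshold of 3 are all assumptions made for illustration; real logs would need their own parsing.

```python
from collections import Counter
from datetime import datetime

# Hypothetical log lines in an assumed "<ISO timestamp> <LEVEL> <message>" layout.
SAMPLE_LOG = """\
2024-05-01T10:00:12 INFO request served
2024-05-01T10:01:03 ERROR db timeout
2024-05-01T10:01:40 INFO request served
2024-05-01T10:02:05 ERROR db timeout
2024-05-01T10:02:17 ERROR db timeout
2024-05-01T10:02:48 ERROR connection pool exhausted
"""


def errors_per_minute(log_text):
    """Count ERROR lines, keyed by the minute in which they occurred."""
    counts = Counter()
    for line in log_text.splitlines():
        parts = line.split(" ", 2)
        if len(parts) < 3 or parts[1] != "ERROR":
            continue  # skip malformed lines and non-error levels
        timestamp = datetime.fromisoformat(parts[0])
        counts[timestamp.replace(second=0)] += 1
    return counts


def first_spike(counts, threshold):
    """Return the earliest minute whose error count reaches the threshold, or None."""
    for minute in sorted(counts):
        if counts[minute] >= threshold:
            return minute
    return None


counts = errors_per_minute(SAMPLE_LOG)
onset = first_spike(counts, threshold=3)
print("Errors first spiked at:", onset)
```

The minute returned by `first_spike` gives a starting timestamp to line up against deployments, configuration changes, or traffic graphs.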

Q 3. What tools and techniques do you use for monitoring production systems?

Monitoring is the cornerstone of proactive production issue management. I utilize a combination of tools and techniques, tailored to the specific needs of the system. This typically includes system-level monitoring tools like Nagios or Zabbix for server health and resource usage. For application performance, I often rely on application performance monitoring (APM) tools such as New Relic or Dynatrace. These tools offer insights into application-level metrics, including response times, error rates, and transaction traces. I also employ log aggregation and analysis tools like Splunk or the ELK stack (Elasticsearch, Logstash, Kibana) to sift through vast quantities of log data and detect anomalies or error patterns. Real-time dashboards provide a centralized view of critical metrics, allowing for rapid identification of potential issues. Finally, synthetic monitoring through tools like Pingdom or Datadog helps assess the user experience from an external perspective, ensuring our monitoring is holistic.

Q 4. How do you prioritize multiple production issues simultaneously?

Prioritizing multiple simultaneous production issues requires a systematic approach. I employ a risk-based prioritization model, considering the impact and urgency of each issue. The impact is measured by the number of users affected, the potential for data loss, and the financial implications. Urgency refers to the immediacy of the problem and potential for further damage if not addressed quickly. I use a matrix to categorize issues based on these factors – high impact/high urgency, high impact/low urgency, low impact/high urgency, and low impact/low urgency.

High impact/high urgency issues are addressed immediately. Lower-priority issues are tackled sequentially based on their ranking. Effective communication is crucial here, keeping stakeholders updated on the status of various issues and providing realistic time estimates for resolution. Sometimes, it’s necessary to temporarily work around an issue to stabilize the system while the root cause of a more significant problem is being addressed.
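The impact/urgency matrix can be sketched in a few lines of Python. The issue names are hypothetical, and ranking high impact/low urgency ahead of low impact/high urgency is an assumption of this sketch; a team may reasonably order those two quadrants the other way.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    name: str
    high_impact: bool
    high_urgency: bool


def priority(issue):
    """Map the four matrix quadrants to a rank; rank 1 is handled first."""
    if issue.high_impact and issue.high_urgency:
        return 1
    if issue.high_impact:
        return 2  # assumed ordering: impact outranks urgency
    if issue.high_urgency:
        return 3
    return 4


# Hypothetical open issues, in the order they were reported.
issues = [
    Issue("typo on help page", high_impact=False, high_urgency=False),
    Issue("checkout failing", high_impact=True, high_urgency=True),
    Issue("certificate expiring tonight on internal tool", high_impact=False, high_urgency=True),
    Issue("nightly report job degraded", high_impact=True, high_urgency=False),
]

queue = sorted(issues, key=priority)
for issue in queue:
    print(priority(issue), issue.name)
```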

Q 5. Describe a time you had to escalate a production issue. What was your approach?

One time, we experienced a significant surge in database query times impacting our primary e-commerce platform. Initial troubleshooting steps didn’t yield a clear solution. I escalated the issue to the database administrator team after documenting my findings, including specific queries exhibiting slow performance and relevant system logs. My approach involved a clear, concise summary of the problem, the steps taken so far, and my hypotheses about potential root causes. I also proactively provided access to relevant logs and monitoring data to expedite the investigation.

Effective communication was essential throughout the escalation. Regular updates were given to both internal teams and stakeholders. The collaboration between the application and database teams led to the identification of a poorly optimized query that was causing the bottleneck. Once the query was optimized, the system returned to normal operation. This experience highlighted the importance of clear escalation protocols and cross-team collaboration in handling complex production issues.

Q 6. How do you handle situations where you don’t have all the information needed to resolve a problem?

When facing a production issue without complete information, I begin by clearly defining what information is missing and its potential impact on the troubleshooting process. I then actively seek out the missing data from various sources: relevant team members, documentation, monitoring tools, and potentially external services if applicable. While searching for information, I focus on steps that are safe and won’t exacerbate the problem. This might involve temporarily disabling non-critical features, implementing workarounds to mitigate the immediate impact, or isolating potential problem areas. I focus on gathering all available evidence, even if incomplete. This aids in formulating educated hypotheses and prioritizing investigation efforts towards the most likely causes.

Q 7. What is your experience with logging and monitoring systems (e.g., Splunk, ELK, Prometheus)?

I have extensive experience with various logging and monitoring systems, including Splunk, ELK, and Prometheus. Splunk excels at search and visualization, allowing me to easily correlate events across different data sources. The ELK stack, with its open-source nature and scalability, is valuable for building custom dashboards and analyzing large volumes of log data. Prometheus, with its time-series database and pull-based architecture, is a powerful tool for monitoring system metrics. I’m proficient in querying these systems using their respective query languages (SPL for Splunk, KQL or Lucene query syntax in Kibana for ELK, PromQL for Prometheus) to extract meaningful insights from data. I’ve used these tools to build automated alerting systems, identify performance bottlenecks, and troubleshoot security issues by correlating events across multiple log sources. My experience extends to designing and implementing logging strategies that ensure data is collected comprehensively, efficiently, and securely.

Q 8. Explain your experience with incident management processes and frameworks (e.g., ITIL).

My experience with incident management revolves heavily around ITIL principles, specifically focusing on the Incident, Problem, and Change Management processes. I’ve been involved in numerous incident response cycles, from initial detection and logging to resolution and post-incident review. This includes prioritizing incidents based on impact and urgency, assigning ownership, coordinating with various teams (development, operations, security), and tracking progress toward resolution using tools like Jira or ServiceNow.

For instance, in a previous role, we experienced a significant spike in database errors during peak hours. Following ITIL guidelines, we quickly opened an incident, diagnosed the root cause (a poorly optimized query), applied a temporary workaround (query optimization), and delivered a permanent fix (a database schema change after thorough testing). Post-incident review involved analyzing the cause, identifying gaps in monitoring, and updating our runbooks to prevent recurrence. The key here is the structured approach, minimizing disruption and ensuring continuous learning from each incident. The stages of that cycle were:

- Incident logging and categorization

- Root cause analysis

- Workaround implementation

- Permanent fix implementation and testing

- Post-incident review and knowledge sharing

Q 9. How do you ensure that fixes for production issues don’t introduce new problems?

Preventing the introduction of new problems during production fixes requires a rigorous and multi-layered approach. This starts with thorough testing in a staging environment that closely mirrors production. We use techniques such as regression testing to ensure that the fix doesn’t negatively impact existing functionalities. Furthermore, we employ canary deployments or blue-green deployments, releasing the fix to a small subset of users or a separate environment before full rollout. This allows us to monitor the impact in real-time and quickly roll back if any issues arise.

For example, when fixing a bug in a payment gateway, we wouldn’t deploy the fix directly to production. Instead, we would first deploy it to a staging environment mirroring the production setup and conduct extensive tests. Then, we would use a canary deployment, rolling it out to a small percentage of users. By monitoring key metrics like transaction success rate and error rates, we can confidently proceed with a full deployment after verifying the fix’s stability and lack of side effects.
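The canary decision described above can be reduced to a small comparison of error rates. The sketch below is illustrative only: the function name, the 1.5x tolerance, and the minimum of 100 canary requests are assumptions, not values from any particular deployment tool.

```python
def canary_verdict(baseline_errors, baseline_total, canary_errors, canary_total,
                   max_ratio=1.5, min_requests=100):
    """Return 'wait', 'rollback', or 'promote' for a canary release.

    max_ratio and min_requests are illustrative defaults, not recommendations.
    """
    if baseline_total <= 0:
        raise ValueError("baseline_total must be positive")
    if canary_total < min_requests:
        return "wait"  # too little canary traffic to judge yet
    baseline_rate = baseline_errors / baseline_total
    canary_rate = canary_errors / canary_total
    # With a zero baseline error rate, any canary error triggers a rollback.
    if canary_rate > baseline_rate * max_ratio:
        return "rollback"
    return "promote"


print(canary_verdict(10, 10_000, 1, 50))     # too few canary requests
print(canary_verdict(10, 10_000, 5, 1_000))  # canary error rate far above baseline
print(canary_verdict(10, 10_000, 1, 1_000))  # canary in line with baseline
```

In practice the same check would usually cover more than one metric, such as transaction success rate and latency alongside error rate.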

Q 10. Describe your experience with debugging code in a production environment.

Debugging in production is a delicate balance between resolving the issue quickly and avoiding further disruption. My approach emphasizes minimizing impact on users while effectively diagnosing the root cause. This often involves leveraging logging and monitoring tools to gather information about the error, including timestamps, error messages, and relevant system metrics. Remote debugging tools are also invaluable, allowing for code inspection and execution analysis without requiring downtime.

In one case, we had a critical production service failing intermittently. Using detailed logs and monitoring dashboards, we identified a memory leak. We employed a remote debugging tool to analyze the memory usage pattern of the service, pinpointing the specific code responsible for the leak. A temporary workaround involved restarting the service periodically, buying time while a permanent fix was developed and deployed.

It’s crucial to remember safety and responsibility when debugging in production. We always have a rollback plan in place and strictly follow best practices to prevent accidental damage. The ability to analyze logs, metrics, and utilize remote debugging skills is paramount for effective and safe resolution.

Q 11. What are some common causes of production issues in your area of expertise?

Common causes of production issues vary depending on the system’s complexity and architecture but often fall under these categories:

- Software bugs: These can range from simple logic errors to complex concurrency issues.

- Infrastructure failures: Hardware malfunctions, network outages, or database issues can significantly impact services.

- Configuration errors: Incorrect settings in servers, databases, or application configurations can lead to unexpected behavior.

- Third-party dependencies: Issues with external APIs or services can propagate into the production environment.

- Deployment issues: Problems during the deployment process, such as incomplete updates or rollbacks, can introduce new bugs or disrupt services.

- Security vulnerabilities: Exploits and attacks can compromise system integrity and lead to outages.

For example, a poorly written SQL query could cause a database lock, leading to application slowdowns or crashes. A misconfiguration in a load balancer could result in requests being dropped or redirected incorrectly. Understanding these common causes helps us proactively design more robust and resilient systems.

Q 12. How do you document and share knowledge about resolved production issues?

Documenting and sharing knowledge about resolved production issues is crucial for preventing future incidents. We use a combination of methods to ensure information is readily accessible and easily searchable. This includes detailed incident reports with comprehensive steps to reproduce the problem, the root cause analysis, and the implemented solution. We also utilize a knowledge base (wiki or similar) where solutions are categorized and tagged, making it easier to find relevant information.

For instance, after resolving a particular database issue, we would document the specific error message, database query responsible, the steps taken to diagnose the problem, and the code changes made to resolve it. We would also tag it with keywords like ‘database error’, ‘slow query’, and ‘deadlock’. This ensures that if a similar issue arises in the future, the solution is readily available, reducing the time required for resolution and enhancing the efficiency of our team.

Regular post-incident reviews with the team ensure that lessons learned are shared and knowledge is transferred effectively.

Q 13. How do you communicate technical issues to non-technical stakeholders?

Communicating technical issues to non-technical stakeholders requires clear, concise, and jargon-free language. I avoid technical terms whenever possible, focusing on the impact of the issue and the steps being taken to resolve it. Using analogies and metaphors can help illustrate complex concepts in a simpler way. Visual aids such as charts and graphs can also improve understanding. It’s also important to set realistic expectations and provide regular updates.

For instance, instead of saying ‘The microservice experienced a deadlock due to contention on the shared resource lock’, I’d explain, ‘A part of our system got temporarily jammed, making some parts of the website slow or unavailable. We’re working to clear the jam and expect things to be back to normal within the next hour.’

Transparency and empathy are crucial. Keep stakeholders informed even if a solution isn’t immediately available. Regular updates and a clear communication plan can ease anxieties and build confidence.

Q 14. What is your experience with automated testing and its role in preventing production issues?

Automated testing plays a vital role in preventing production issues by catching bugs early in the development lifecycle. I have extensive experience with various testing methodologies, including unit tests, integration tests, end-to-end tests, and performance tests. These tests are automated and integrated into our Continuous Integration/Continuous Delivery (CI/CD) pipeline, ensuring that code changes are thoroughly validated before deployment.

For example, we use automated unit tests to ensure individual components of our code function correctly, integration tests to verify interactions between different components, and end-to-end tests to simulate real-world user scenarios. Automated performance tests help identify potential bottlenecks and ensure the application can handle expected traffic loads. By automating these tests, we are able to identify and fix bugs quickly and efficiently, reducing the likelihood of them making it into production.
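As a small illustration of the unit-test layer, the sketch below uses Python's built-in `unittest` module. The `apply_discount` function is a hypothetical stand-in for a real component, and `run_suite` is a helper invented here so the tests can be run from a script or a CI step.

```python
import unittest


def apply_discount(total, percent):
    """Hypothetical function under test: reduce total by percent."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(total * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_rejects_out_of_range_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(200.0, 120)


def run_suite():
    """Load and run the test case, returning the unittest result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)


result = run_suite()
print("All tests passed:", result.wasSuccessful())
```

A CI pipeline would typically fail the build whenever `wasSuccessful()` is false, which is what stops a regression from reaching production.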

The more comprehensive the automated test suite, the higher the confidence in the code quality and the lower the risk of production issues. This is a proactive measure compared to reactive incident management.

Q 15. How do you use metrics to track the effectiveness of your troubleshooting efforts?

Tracking the effectiveness of troubleshooting relies heavily on metrics. Before starting, I define Key Performance Indicators (KPIs) relevant to the issue. For example, if the issue is slow response times, my KPIs might be average response time, 95th percentile response time, and error rate. I then collect baseline metrics *before* any intervention. This establishes a benchmark against which to measure improvement. During troubleshooting, I continuously monitor these metrics to see if my actions are having a positive impact. If the KPIs aren’t improving, it signals I need to adjust my approach. After resolution, I compare post-resolution metrics to the baseline to quantify the success of my efforts. I also document the entire process, including the metrics, the steps taken, and the outcomes, to facilitate future troubleshooting and continuous improvement.

For instance, if a web server was experiencing high latency, I’d track metrics like CPU utilization, memory usage, disk I/O, and network traffic before and after optimizing the database queries or scaling the server resources. A significant reduction in latency coupled with improved resource utilization would confirm the effectiveness of my actions. This data is invaluable for reporting and demonstrating the impact of my work.
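The baseline-versus-after comparison can be sketched as follows. The latency samples are made up for illustration, and the percentile uses the simple nearest-rank method, which is one of several common definitions; monitoring tools may compute it differently.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: smallest sample with at least pct% of values at or below it."""
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def improvement(before, after):
    """Relative reduction of `after` compared with `before`, as a fraction."""
    return (before - after) / before


# Hypothetical response times in milliseconds, before and after the fix.
baseline_ms = [120, 130, 125, 140, 900, 135, 128, 132, 138, 950]
after_ms = [118, 122, 119, 125, 160, 121, 120, 123, 124, 170]

p95_before = percentile(baseline_ms, 95)
p95_after = percentile(after_ms, 95)
print(f"p95 before: {p95_before} ms, after: {p95_after} ms")
print(f"p95 reduction: {improvement(p95_before, p95_after):.0%}")
```

Tracking a high percentile alongside the average matters because a handful of very slow requests can hide behind a healthy-looking mean.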

Q 16. Explain your experience with different debugging techniques (e.g., binary search, log analysis).

I utilize a variety of debugging techniques depending on the complexity and nature of the problem. Log analysis is my first line of defense – examining error messages, timestamps, and other information within logs to pinpoint the source of the issue. This is often supplemented by binary search, where I systematically eliminate halves of the problem space. For example, if a large codebase is causing a crash, I’d comment out half of the code and see if the issue persists. If it does, I know the problem lies in the remaining half, and I repeat the process until I isolate the faulty component.
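The same halving idea is often applied to an ordered list of changes rather than to commented-out code. The sketch below assumes the changes are ordered oldest to newest, that the failure persists once introduced, and that the newest change is known to be bad; the change names and the `is_bad` check are hypothetical.

```python
def first_bad(changes, is_bad):
    """Return the first change for which is_bad(change) is True.

    Assumes changes are ordered oldest to newest, the failure persists once
    introduced, and the last change is known to be bad.
    """
    lo, hi = 0, len(changes) - 1
    while lo < hi:
        mid = (lo + hi) // 2
        if is_bad(changes[mid]):
            hi = mid        # failure already present: culprit is here or earlier
        else:
            lo = mid + 1    # still healthy: culprit is later
    return changes[lo]


# Hypothetical history of eight changes; the failure first appears in "c6".
changes = ["c1", "c2", "c3", "c4", "c5", "c6", "c7", "c8"]
checked = []


def is_bad(change):
    checked.append(change)  # record each check to show how few are needed
    return changes.index(change) >= changes.index("c6")


culprit = first_bad(changes, is_bad)
print("First bad change:", culprit, "after", len(checked), "checks")
```

Each check halves the remaining search space, so eight candidates need only three checks instead of up to eight.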

Beyond these, I use other techniques like setting breakpoints in the code (using debuggers like gdb or Visual Studio), inspecting memory dumps (to analyze memory leaks or segmentation faults), and utilizing network tracing tools (like tcpdump or Wireshark) to analyze network communication. I also leverage profiling tools to identify performance bottlenecks within applications.

For example, I recently debugged a production issue where an application was crashing intermittently. Log analysis revealed frequent memory allocation errors. Using a memory debugger, I discovered a memory leak in a specific function, which was ultimately resolved by modifying the memory management strategy within that function. Through a combination of these techniques, I effectively narrowed down the problem and implemented a fix.

Q 17. Describe your experience working with different operating systems (e.g., Linux, Windows).

My experience spans both Linux and Windows operating systems, and I’m proficient in administering and troubleshooting issues across both. On Linux systems, I’m comfortable working with the command line, using tools such as ps, top, netstat, tcpdump, and strace for monitoring system processes, network connections, and resolving various system-level issues. I understand systemd, init scripts, and other crucial components of the Linux ecosystem. My experience includes managing user accounts, permissions, and configuring various services like web servers (Apache, Nginx), databases (MySQL, PostgreSQL), and message queues (RabbitMQ, Kafka).

On Windows, I’m familiar with using PowerShell and the Command Prompt for similar tasks. I understand Windows services, registry settings, event logs, and troubleshooting techniques specific to the Windows environment. I have experience working with Active Directory and troubleshooting issues related to domain controllers and user authentication. My experience also extends to working with virtual machines (VMs) on both platforms, utilizing tools like VMware and Hyper-V. I find myself constantly adapting to the nuances of each OS, but the fundamental principles of troubleshooting remain consistent.

Q 18. What is your experience with database troubleshooting?

Database troubleshooting is a significant part of my experience. I’ve worked extensively with relational databases (like MySQL, PostgreSQL, Oracle, and SQL Server) and NoSQL databases (like MongoDB and Cassandra). My approach involves understanding the database architecture, query performance, and potential bottlenecks. I’m proficient in analyzing query plans, identifying slow queries, and optimizing database performance through indexing, query rewriting, and schema design improvements.

I utilize database monitoring tools to track key metrics such as query execution time, transaction throughput, and disk I/O. I use these tools to identify areas for optimization and to diagnose issues such as deadlocks, table locks, and connection pool exhaustion. I’m also skilled at recovering from database failures, including restoring from backups and troubleshooting replication issues.

For example, I once resolved a significant performance bottleneck in an e-commerce application by identifying a poorly performing query that lacked proper indexing. Adding the correct index dramatically improved the query’s speed, resulting in a significant performance boost for the entire application.
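The effect of a missing index can be reproduced on a small scale with SQLite, which ships with Python. This is a sketch with a made-up `orders` table and index name, not the schema from the incident above; the exact wording of the plan text varies between SQLite versions, and other databases expose query plans through their own `EXPLAIN` variants.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1000)],
)


def query_plan(connection, sql, params=()):
    """Return SQLite's plan description for a query as a single string."""
    rows = connection.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[3] for row in rows)  # column 3 holds the plan detail


QUERY = "SELECT * FROM orders WHERE customer_id = ?"

plan_before = query_plan(conn, QUERY, (42,))
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(conn, QUERY, (42,))

print("Before index:", plan_before)  # expect a full table scan
print("After index: ", plan_after)   # expect a search using idx_orders_customer
```

Comparing the plan before and after a change is a quick way to confirm the database is actually using the new index rather than assuming it will.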

Q 19. How do you ensure the security of production systems during troubleshooting?

Security is paramount during troubleshooting, especially in production. My approach centers around the principle of least privilege. I only access the systems and data necessary to resolve the issue, utilizing temporary accounts with restricted permissions instead of using administrative accounts unless absolutely necessary. I meticulously document every action and change made, enabling rollback if necessary. Before making any changes, I thoroughly assess the potential security risks and mitigate them proactively.

This includes utilizing secure remote access methods (SSH with key-based or multi-factor authentication), carefully reviewing any code changes before deploying them, and ensuring all systems are up-to-date with the latest security patches. If I discover any security vulnerabilities during troubleshooting, I immediately report them to the appropriate team for remediation. I follow strict procedures for handling sensitive data, adhering to company policies and relevant regulations.

Q 20. What is your experience with cloud-based infrastructure troubleshooting (e.g., AWS, Azure, GCP)?

I have experience troubleshooting cloud-based infrastructure on AWS, Azure, and GCP. My approach involves leveraging the cloud provider’s monitoring and logging services to identify the root cause of issues. For example, on AWS, I utilize CloudWatch for monitoring metrics and logs, while on Azure, I leverage Azure Monitor. GCP offers similar functionalities with Cloud Monitoring and Logging. I’m adept at using the cloud provider’s command-line interfaces (CLIs) and APIs for managing and troubleshooting infrastructure components.

I understand concepts like auto-scaling, load balancing, and container orchestration (like Kubernetes) and how they impact troubleshooting. I’ve resolved issues related to network connectivity, storage problems, and resource limitations in cloud environments. I can effectively diagnose issues related to virtual machines, databases, and other cloud services. My approach also involves understanding and utilizing the cloud provider’s security features to ensure that troubleshooting is conducted securely.

Q 21. Explain your approach to resolving performance bottlenecks in production applications.

Resolving performance bottlenecks involves a systematic approach. I start by identifying the bottleneck using profiling tools and performance monitoring. This might involve examining CPU utilization, memory usage, disk I/O, network traffic, and database query performance. Once the bottleneck is identified, I analyze the root cause. This might involve reviewing code, examining database queries, or investigating network configurations.

My solutions might include code optimization, database query optimization, caching strategies, load balancing, scaling up resources (e.g., adding more CPUs, memory, or network bandwidth), or optimizing algorithms. After implementing a solution, I carefully monitor performance metrics to verify the effectiveness of the changes and ensure the problem is resolved. Iterative testing and adjustments are often needed until optimal performance is achieved. Thorough documentation throughout the process is crucial for future reference and to facilitate collaboration with other team members.

For example, I once resolved a performance bottleneck in an application by identifying a poorly performing algorithm. By replacing it with a more efficient algorithm, I significantly reduced processing time and improved overall performance. The entire process involved profiling, code analysis, implementation of a new algorithm, and thorough testing to confirm improvement.
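A scaled-down version of that workflow might look like the sketch below: profile a slow function with Python's `cProfile`, then check that a faster replacement returns the same answer. The duplicate-detection functions are hypothetical examples chosen for illustration, not the algorithm from the incident.

```python
import cProfile
import io
import pstats


def has_duplicates_slow(items):
    """Quadratic check: compares every pair of items."""
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False


def has_duplicates_fast(items):
    """Linear check using a set; assumes the items are hashable."""
    return len(set(items)) != len(items)


def profile_report(func, *args):
    """Run func under cProfile; return its result and the top of the report."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func, *args)
    buffer = io.StringIO()
    pstats.Stats(profiler, stream=buffer).sort_stats("cumulative").print_stats(5)
    return result, buffer.getvalue()


data = list(range(2000))  # no duplicates, so the slow version does all comparisons
slow_result, report = profile_report(has_duplicates_slow, data)
print(report)

# Confirm the replacement agrees with the original before swapping it in.
fast_result = has_duplicates_fast(data)
print("Results match:", slow_result == fast_result)
```

Checking that both versions agree on the same input is the "thorough testing" step in miniature; a real change would also compare timings under realistic load.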

Q 22. Describe your experience with capacity planning and its role in preventing production issues.

Capacity planning is the process of determining the resources – servers, network bandwidth, storage, etc. – a system needs to handle projected workloads. It’s crucial for preventing production issues because inadequate resources lead to performance bottlenecks, slowdowns, and ultimately, outages. Think of it like planning a party: you wouldn’t invite 100 people to a space that only fits 20.

My experience involves using various tools and methodologies to forecast future demands based on historical data, growth projections, and anticipated events (e.g., seasonal spikes in traffic). This includes analyzing metrics like CPU utilization, memory usage, database query times, and network throughput. For instance, in a previous role, we used historical web server logs to predict peak traffic during holiday shopping seasons. This allowed us to proactively scale up our infrastructure, preventing performance degradation and ensuring a smooth user experience.

Beyond forecasting, capacity planning involves implementing auto-scaling features in cloud environments (like AWS or Azure) to dynamically adjust resources based on real-time demand. This allows for efficient resource utilization while guaranteeing system stability. We also develop and implement strategies to handle unexpected traffic surges – a ‘fail-safe’ mechanism to prevent complete system collapse even if initial predictions are inaccurate.
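A very simple forecast of this kind fits a straight line through historical peaks and adds headroom. The sketch below assumes roughly linear growth, at least two data points, a made-up per-server capacity, and 30% headroom; real planning would also account for seasonality and one-off events.

```python
import math


def linear_forecast(history, periods_ahead):
    """Least-squares straight-line fit over history, extrapolated forward.

    Assumes at least two data points, evenly spaced in time.
    """
    n = len(history)
    xs = range(n)
    mean_x = sum(xs) / n
    mean_y = sum(history) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, history))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return intercept + slope * (n - 1 + periods_ahead)


def servers_needed(peak_rps, rps_per_server, headroom=0.3):
    """Servers required to serve peak_rps with spare capacity (headroom is assumed)."""
    return math.ceil(peak_rps * (1 + headroom) / rps_per_server)


# Hypothetical monthly peak requests per second for the last four months.
monthly_peak_rps = [1000, 1100, 1200, 1300]

forecast = linear_forecast(monthly_peak_rps, periods_ahead=3)
print(f"Forecast peak in 3 months: {forecast:.0f} rps")
print("Servers needed:", servers_needed(forecast, rps_per_server=250))
```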

Q 23. What is your experience with version control systems (e.g., Git) in a production environment?

Version control systems, primarily Git, are fundamental to managing code changes in a production environment. They ensure traceability, collaboration, and the ability to roll back to previous versions if necessary. Imagine building with LEGOs – Git is like saving your progress at each step, allowing you to revert to an earlier, stable build if something goes wrong.

My experience spans using Git for branching strategies (like Gitflow), creating pull requests for code review, and utilizing merging techniques to integrate code changes smoothly. We employ strict branching policies to avoid conflicts and maintain a clean codebase. For example, we use feature branches for developing new features, ensuring that unstable code doesn’t affect the production branch. We also regularly tag releases to easily identify specific versions deployed to production. This aids in debugging and rapid rollbacks during incidents.

Furthermore, I’m experienced with utilizing Git alongside continuous integration/continuous deployment (CI/CD) pipelines. These pipelines automate the build, test, and deployment processes, minimizing manual errors and ensuring consistent deployments.

Q 24. Describe your experience with different types of monitoring tools (e.g., APM, infrastructure monitoring).

I have extensive experience using various monitoring tools, spanning Application Performance Monitoring (APM) and infrastructure monitoring. APM tools provide insights into application-level performance, such as identifying slow database queries or inefficient code. Infrastructure monitoring tools, on the other hand, focus on the health and performance of the underlying infrastructure, including servers, networks, and storage. These tools are crucial for proactive identification and resolution of issues.

Examples of APM tools I’ve used include New Relic and Dynatrace. These tools offer detailed insights into application behavior, allowing us to pinpoint performance bottlenecks and identify root causes of issues. For infrastructure monitoring, I have experience with tools like Prometheus, Grafana, and Datadog, which provide real-time dashboards for tracking key metrics like CPU usage, memory consumption, disk I/O, and network latency.

The combination of APM and infrastructure monitoring provides a comprehensive view of the entire system, allowing for faster diagnosis and resolution of problems. For example, a sudden spike in database latency identified by APM might point towards an underlying infrastructure issue (e.g., disk space exhaustion) which is then confirmed through infrastructure monitoring. This integrated approach is crucial for efficient troubleshooting.

Q 25. How do you stay updated on the latest technologies and best practices in your field?

Staying updated is paramount in this rapidly evolving field. I leverage several strategies to ensure I remain current on the latest technologies and best practices. These include:

- Following industry blogs and publications: I regularly read blogs and publications from leading tech companies and industry experts to stay abreast of new trends and best practices.

- Attending conferences and webinars: Participating in conferences and webinars allows for networking with peers and learning from industry leaders.

- Engaging in online communities: Active participation in online forums and communities provides opportunities to discuss challenges and solutions with other professionals.

- Taking online courses: Continuous learning through online courses keeps me updated on specific technologies and skills.

- Hands-on experimentation: I frequently experiment with new technologies and tools to gain practical experience.

This multi-faceted approach ensures I am constantly expanding my knowledge and skillset, enabling me to address evolving challenges in production environments effectively.

Q 26. Describe a challenging production issue you faced and how you overcame it.

One challenging production issue involved a significant performance degradation of our e-commerce platform during a major promotional sale. Initially, we suspected a coding error or database bottleneck. However, after thorough investigation using APM and infrastructure monitoring tools, we discovered the root cause was an unexpected surge in traffic overwhelming our CDN (Content Delivery Network).

Our initial response involved scaling up our application servers, but this provided only temporary relief. The CDN was the true bottleneck. We systematically worked through the following steps:

- Identified the bottleneck: APM and infrastructure monitoring pinpointed the high latency originating from the CDN.

- Investigated the CDN logs: We analyzed CDN logs to identify specific regions experiencing high latency and errors.

- Collaborated with the CDN provider: We worked closely with our CDN provider to troubleshoot the issue and implement temporary mitigations, such as adding more servers in affected regions.

- Implemented long-term solutions: Post-incident, we re-evaluated our CDN configuration, opting for a more robust setup with enhanced capacity and better geographic distribution.

This experience highlighted the importance of comprehensive monitoring, swift collaboration, and proactive planning to handle unforeseen circumstances. The successful resolution demonstrated the value of a systematic approach to resolving complex production issues.
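The CDN log analysis step can be sketched roughly as follows. This is an illustration only: it assumes the logs have already been parsed into dictionaries with hypothetical `region`, `status`, and `latency_ms` fields, which will differ from the format any real CDN provider exports.

```python
import math
from collections import defaultdict


def nearest_rank_percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize_by_region(records):
    """Group parsed log records by region and compute error rate and p95 latency."""
    by_region = defaultdict(list)
    for rec in records:
        by_region[rec["region"]].append(rec)

    summary = {}
    for region, recs in by_region.items():
        errors = sum(1 for r in recs if r["status"] >= 500)
        summary[region] = {
            "requests": len(recs),
            "error_rate": errors / len(recs),
            "p95_latency_ms": nearest_rank_percentile(
                [r["latency_ms"] for r in recs], 95
            ),
        }
    return summary


# Hypothetical parsed records
records = [
    {"region": "eu-west", "status": 200, "latency_ms": 40},
    {"region": "eu-west", "status": 503, "latency_ms": 900},
    {"region": "us-east", "status": 200, "latency_ms": 30},
]
for region, stats in sorted(summarize_by_region(records).items()):
    print(region, stats)
```

Sorting regions by error rate or p95 latency gives a short list to raise with the CDN provider.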

Q 27. What are some common pitfalls to avoid when troubleshooting production issues?

Common pitfalls when troubleshooting production issues include:

- Jumping to conclusions: Avoid assuming the cause of the problem without sufficient evidence. Thorough investigation is critical.

- Lack of proper monitoring: Inadequate monitoring hinders identifying the root cause promptly. Comprehensive monitoring is essential.

- Ignoring logs and metrics: Logs and metrics provide crucial clues; overlooking them can lead to ineffective troubleshooting.

- Insufficient collaboration: Effective troubleshooting often requires collaboration with various teams (e.g., development, operations, database administrators).

- Poor version control: Without reliable version control, it is difficult to revert to a previous stable version if necessary.

- Insufficient testing of solutions: Always thoroughly test solutions in a controlled environment before deploying them to production.

Avoiding these pitfalls ensures a more efficient and effective troubleshooting process.

Q 28. How do you balance speed and thoroughness when resolving production issues?

Balancing speed and thoroughness is crucial when resolving production issues. While speed is essential to minimize downtime and user impact, thoroughness prevents hasty fixes that could lead to further problems or mask underlying issues. Think of a firefighter: you need to act quickly to contain the immediate threat (speed), but you also need to investigate the cause of the fire to prevent it from recurring (thoroughness).

My approach involves a structured process:

- Immediate containment: First, focus on immediate actions to mitigate the impact of the issue – perhaps rolling back a deployment or temporarily disabling a feature.

- Rapid diagnosis: In parallel, start investigating with monitoring tools and logs to determine the root cause.

- Prioritize fixes: Address the most critical issues first, while continuing the deeper investigation.

- Thorough testing: Before deploying any solution to production, conduct thorough testing in a staging or development environment.

- Post-mortem analysis: After resolution, conduct a thorough post-mortem analysis to understand what went wrong and implement preventative measures.

This balanced approach ensures a rapid response while maintaining a focus on long-term stability and preventing recurrence.
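The containment step of temporarily disabling a feature is often done with a feature flag acting as a kill switch. The sketch below is a simplified, in-memory illustration; the `FeatureFlags` class, the `recommendations` flag, and `fetch_recommendations` are all hypothetical names, and a real setup would read flags from shared configuration so every server sees the change.

```python
class FeatureFlags:
    """In-memory flag store; a real system would back this with shared config."""

    def __init__(self, defaults):
        self._flags = dict(defaults)

    def is_enabled(self, name):
        # Unknown flags are treated as off, the safer default during an incident.
        return self._flags.get(name, False)

    def disable(self, name):
        self._flags[name] = False


def fetch_recommendations(product_id):
    """Hypothetical stand-in for a call to a slow or failing dependency."""
    return [f"related-to-{product_id}"]


def render_product_page(flags, product_id):
    """Build the page, skipping the optional feature when its flag is off."""
    page = {"product_id": product_id}
    if flags.is_enabled("recommendations"):
        page["recommendations"] = fetch_recommendations(product_id)
    return page


flags = FeatureFlags({"recommendations": True})
flags.disable("recommendations")  # containment step taken during the incident
print(render_product_page(flags, 42))
```

The core page keeps working while the problematic feature is off, which buys time for the deeper investigation without a redeploy.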

Key Topics to Learn for Troubleshoot and Resolve Production Issues Interview

- System Monitoring and Logging: Understanding various monitoring tools and interpreting log files to identify the root cause of issues. Practical application: Analyzing error logs from a web server to pinpoint a database connection problem.

- Debugging Techniques: Mastering debugging methodologies like binary search (bisecting changes to find the one that introduced a fault), rubber duck debugging, and using debuggers effectively. Practical application: Systematically isolating a performance bottleneck in a complex application.

- Incident Management: Following established incident management procedures, including prioritization, escalation, and communication. Practical application: Effectively coordinating a team response to a critical production outage.

- Problem Diagnosis & Root Cause Analysis (RCA): Moving beyond symptom identification to uncover the underlying cause of recurring issues. Practical application: Using RCA techniques to prevent a similar production error from happening again.

- Understanding System Architecture: Possessing a strong grasp of the system’s infrastructure, dependencies, and interactions. Practical application: Quickly identifying the impact of a change on dependent services.

- Version Control & Rollbacks: Working with version control systems (like Git) and implementing rollback strategies to mitigate the impact of faulty deployments. Practical application: Recovering from a failed software release by reverting to a previous stable version.

- Communication and Collaboration: Effectively communicating technical issues to both technical and non-technical stakeholders. Practical application: Clearly explaining a complex technical problem to a client in understandable terms.
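As an illustration of the binary search technique listed under Debugging Techniques, here is a minimal sketch that finds the first bad release in an ordered list. It assumes that once a release is bad, every later release is bad too; `first_bad` and the `is_bad` check are hypothetical names, and in practice the check would deploy or test each candidate version.

```python
def first_bad(versions, is_bad):
    """Return the earliest version for which is_bad is true, or None.

    Assumes versions are ordered oldest to newest and that every version
    after the first bad one is also bad.
    """
    if not versions or not is_bad(versions[-1]):
        return None  # nothing to search, or the newest version is fine

    lo, hi = 0, len(versions) - 1
    while lo < hi:
        mid = (lo + hi) // 2
        if is_bad(versions[mid]):
            hi = mid  # the first bad version is at mid or earlier
        else:
            lo = mid + 1  # the first bad version is after mid
    return versions[lo]


# Hypothetical release history where the regression landed in v6
releases = [f"v{n}" for n in range(1, 9)]
broken = {"v6", "v7", "v8"}
print(first_bad(releases, lambda v: v in broken))
```

Each check halves the remaining range, so even a long release history needs only a handful of tests.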

Next Steps

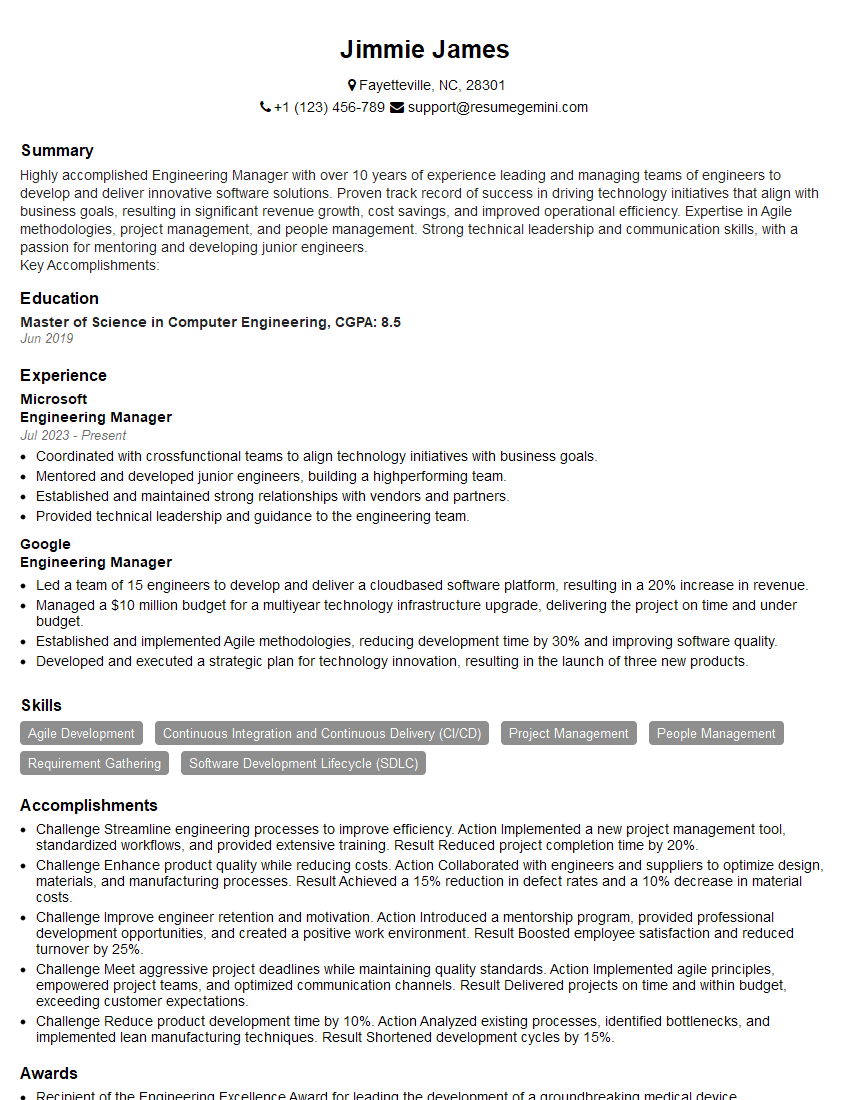

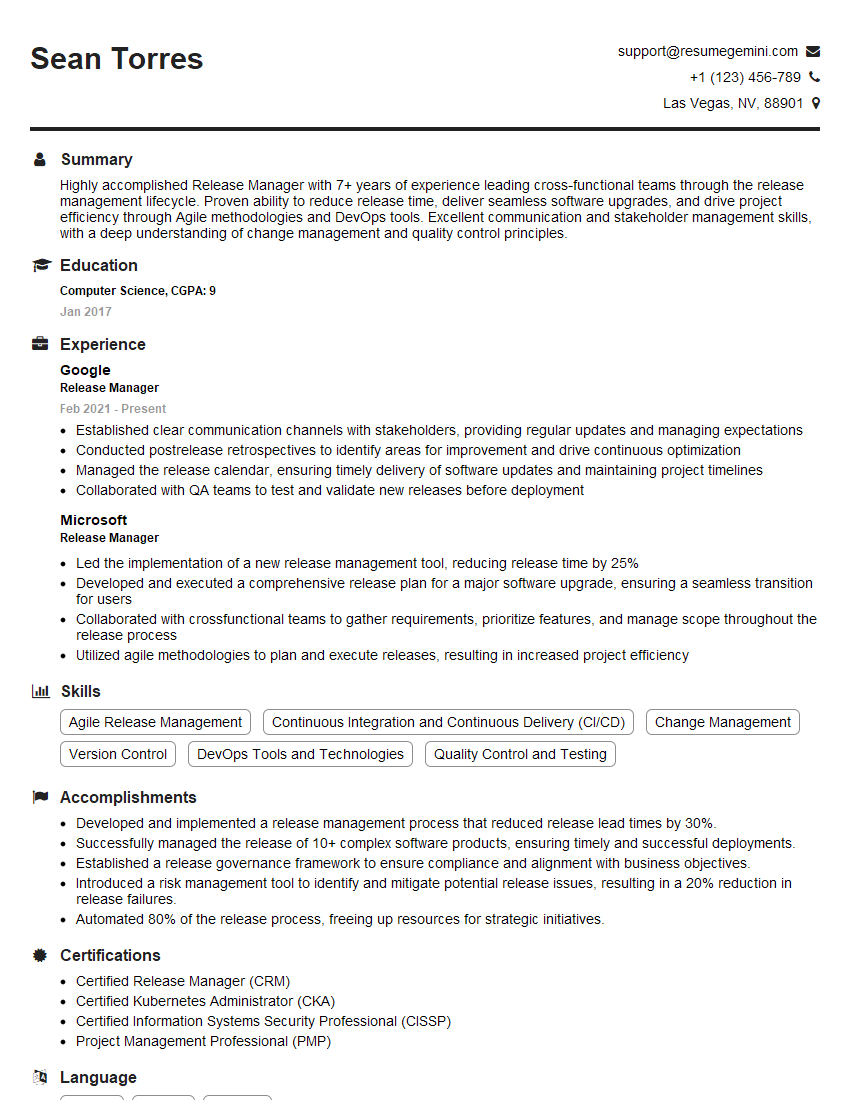

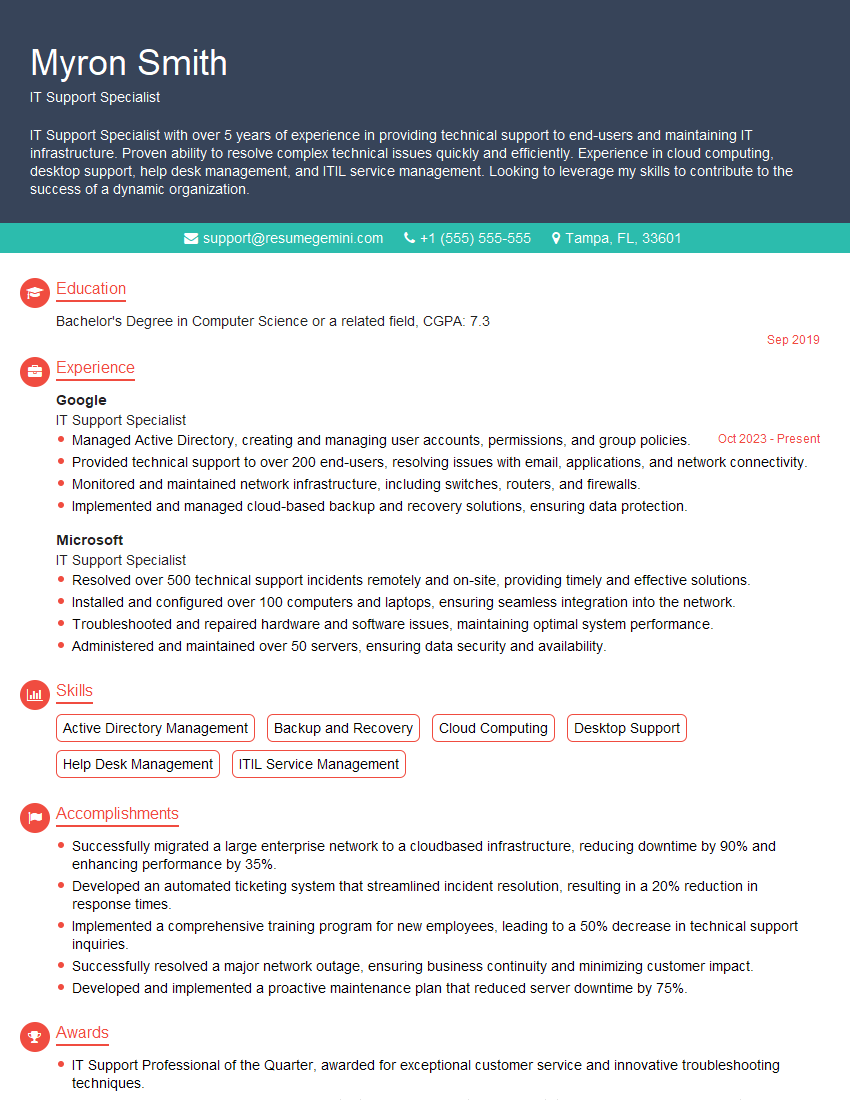

Mastering the art of troubleshooting and resolving production issues is crucial for career advancement in any technical field. It demonstrates critical thinking, problem-solving skills, and a proactive approach to maintaining system stability. To significantly boost your job prospects, create a resume that is both ATS-friendly and showcases your expertise effectively. ResumeGemini is a trusted resource that can help you build a professional and impactful resume tailored to highlight your abilities in troubleshooting and resolving production issues. Examples of resumes specifically designed for this skillset are available within ResumeGemini to guide your process. Invest the time – it will pay off in a successful job search.
