Interviews are opportunities to demonstrate your expertise, and this guide is here to help you shine. Explore the essential Uncertainty and Error Analysis interview questions that employers frequently ask, paired with strategies for crafting responses that set you apart from the competition.

Questions Asked in Uncertainty and Error Analysis Interviews

Q 1. Explain the difference between Type I and Type II errors.

Type I and Type II errors are two types of errors that can occur in hypothesis testing. Think of it like this: you’re a detective investigating a crime. You have a suspect, and you need to decide if they’re guilty or innocent.

A Type I error, also known as a false positive, occurs when you incorrectly reject a true null hypothesis. In our detective analogy, this is like arresting an innocent person. You concluded they were guilty (rejected the null hypothesis of innocence), but they were actually innocent.

A Type II error, also known as a false negative, occurs when you fail to reject a false null hypothesis. In our detective case, this is like letting a guilty person go free. You concluded they were innocent (failed to reject the null hypothesis of innocence), but they were actually guilty.

The probability of committing a Type I error is denoted by α (alpha) and is often set at 0.05 (5%). The probability of committing a Type II error is denoted by β (beta), and 1 − β is the test’s power. Minimizing both types of errors is crucial, but for a fixed sample size there is a trade-off: making the test stricter (lowering α) increases β. Collecting more data is the usual way to reduce both.
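Both error rates can be estimated by simulation. The sketch below assumes a one-sample, two-sided z-test with known σ = 1, n = 25, α = 0.05, and a hypothetical true mean of 0.5 when the null hypothesis (mean = 0) is false; these settings are illustrative, not from any particular study. For these settings the theoretical β is about 0.29.

```python
import random
from statistics import NormalDist, fmean

ALPHA = 0.05
Z_CRIT = NormalDist().inv_cdf(1 - ALPHA / 2)  # two-sided critical value, about 1.96

def rejects_null(sample, mu0=0.0, sigma=1.0):
    """Two-sided z-test of H0: mean == mu0, assuming sigma is known."""
    z = (fmean(sample) - mu0) / (sigma / len(sample) ** 0.5)
    return abs(z) > Z_CRIT

def error_rates(true_mean_h1=0.5, n=25, trials=20_000, seed=1):
    rng = random.Random(seed)
    false_positives = false_negatives = 0
    for _ in range(trials):
        # H0 is true here (mean 0): rejecting it is a Type I error.
        if rejects_null([rng.gauss(0.0, 1.0) for _ in range(n)]):
            false_positives += 1
        # H0 is false here (mean = true_mean_h1): failing to reject is a Type II error.
        if not rejects_null([rng.gauss(true_mean_h1, 1.0) for _ in range(n)]):
            false_negatives += 1
    return false_positives / trials, false_negatives / trials

alpha_hat, beta_hat = error_rates()
print(f"estimated alpha = {alpha_hat:.3f}, estimated beta = {beta_hat:.3f}")
```

Re-running with a larger `n` lowers β while α stays near 0.05; lowering `ALPHA` to 0.01 raises β for the same data, which is the trade-off described above.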

Q 2. Describe the concept of propagation of uncertainty.

Propagation of uncertainty describes how uncertainties in individual measurements contribute to the uncertainty in a calculated result. Imagine you’re baking a cake. The recipe calls for precise amounts of flour, sugar, and eggs. If your measurements of each ingredient are slightly off, the final cake will also deviate slightly from the expected result. How large that deviation could plausibly be, given the imprecision of each measurement, is the propagated uncertainty.

Mathematically, we use partial derivatives to quantify this propagation. If we have a function z = f(x, y), where x and y are measured quantities with uncertainties σx and σy respectively, the uncertainty in z (σz) can be approximated using the formula:

σz ≈ √[(∂f/∂x)²σx² + (∂f/∂y)²σy²]

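This formula shows that the uncertainty in z depends on the uncertainties in x and y, as well as how sensitive z is to changes in x and y (represented by the partial derivatives). It is a first-order (linear) approximation and assumes that the errors in x and y are uncorrelated; correlated inputs require an additional covariance term.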
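The formula above can be applied directly in code. This minimal sketch approximates the partial derivatives with central finite differences (an implementation choice, not the only option) and, like the formula, assumes x and y are uncorrelated. The function name `propagate` and the input values are hypothetical.

```python
import math

def propagate(f, x, y, sigma_x, sigma_y, h=1e-6):
    """First-order (linearized) uncertainty of z = f(x, y).

    Partial derivatives are approximated with central differences;
    x and y are assumed to be uncorrelated.
    """
    dfdx = (f(x + h, y) - f(x - h, y)) / (2 * h)
    dfdy = (f(x, y + h) - f(x, y - h)) / (2 * h)
    return math.sqrt((dfdx * sigma_x) ** 2 + (dfdy * sigma_y) ** 2)

# Example: z = x * y with x = 10 ± 0.2 and y = 5 ± 0.1 (illustrative values).
sigma_z = propagate(lambda x, y: x * y, 10.0, 5.0, 0.2, 0.1)
# Analytic check: sqrt((5 * 0.2)**2 + (10 * 0.1)**2) = sqrt(2)
print(f"z = 50.0 ± {sigma_z:.2f}")
```

For a product, the two terms here contribute equally, so neither input dominates the result; with other values, the larger term shows which input to measure more carefully.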

Q 3. How do you calculate the uncertainty associated with a measurement?

Calculating the uncertainty associated with a measurement involves considering various sources of error. Let’s say you’re measuring the length of a table using a ruler.

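- Random Error: This is due to variations in readings. Repeated measurements will give slightly different results. We quantify this using the standard deviation s of the repeated readings; when the mean of n readings is reported, its uncertainty is the standard error of the mean, s/√n.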

- Systematic Error: This is a consistent bias in your measurements, perhaps due to a poorly calibrated ruler. This error is more difficult to quantify and may require recalibration or using a different instrument.

- Resolution Error: This is the limitation of the measuring instrument. A ruler marked in millimeters has a resolution of 1 mm; you can’t measure to a finer degree. We often assign half the resolution as the uncertainty due to resolution.

The overall uncertainty is usually expressed as a combination of these components, often using the root-sum-square method: the random, systematic, and resolution uncertainties are combined in quadrature, which assumes they are independent of one another.
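As a sketch of the root-sum-square combination for the table example, the snippet below uses five hypothetical ruler readings in millimetres. It takes the standard error of the mean as the random component, half of the 1 mm resolution as the resolution component, and an assumed 0.5 mm calibration uncertainty as the systematic component.

```python
import math
import statistics

readings_mm = [1523, 1525, 1522, 1524, 1526]  # hypothetical repeated readings

mean = statistics.fmean(readings_mm)
# Random component: standard error of the mean, s / sqrt(n).
u_random = statistics.stdev(readings_mm) / math.sqrt(len(readings_mm))
u_resolution = 1.0 / 2   # half of the 1 mm ruler resolution
u_systematic = 0.5       # assumed calibration uncertainty of the ruler (hypothetical)

# Root-sum-square (quadrature) combination, assuming independent components.
u_combined = math.sqrt(u_random**2 + u_resolution**2 + u_systematic**2)
print(f"length = {mean:.0f} mm ± {u_combined:.1f} mm")
```

Some guides, including the GUM, instead treat the resolution as a rectangular distribution and use the half-width divided by √3 as its standard uncertainty, which gives a slightly smaller resolution term.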

Q 4. What are some common sources of uncertainty in experimental data?

Uncertainty in experimental data arises from various sources. They can be broadly categorized as:

- Measurement Instrument Limitations: Every instrument has a limited precision and accuracy. A worn-out scale will give consistently inaccurate readings (systematic error), while a simple stopwatch might produce slightly different readings each time (random error).

- Environmental Factors: Temperature fluctuations, humidity, vibrations, and even lighting conditions can influence measurements, especially in sensitive experiments.

- Human Error: Mistakes in reading instruments, incorrect data recording, or biases in observation are all human-induced sources of uncertainty. Double-checking measurements and using standardized procedures can minimize this.

- Model Limitations: If the experiment involves using a theoretical model to interpret the data, the limitations of that model introduce uncertainty.

- Data Sampling: If a small sample is used to represent a larger population, there’s sampling error which introduces uncertainty in extrapolating to the population.

Thorough planning and meticulous execution are key to minimizing these sources of uncertainty.

Q 5. Explain the concept of confidence intervals.

A confidence interval is a range of values that is likely to contain the true value of a population parameter with a certain level of confidence. Imagine you’re trying to estimate the average height of all students in a university. You measure a sample of students, and calculate the average height of this sample. The confidence interval provides a range of values where you’re confident the true average height of all students lies.

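For example, a 95% confidence interval of 170 cm to 180 cm means that the procedure used to build it captures the true mean in 95% of repeated samples; informally, we say we’re 95% confident that the true average height of all university students lies in this range. It does not mean there is a 95% probability that this particular, already-computed interval contains the true value. The width of the confidence interval reflects the uncertainty in our estimate; a wider interval indicates greater uncertainty.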

Confidence intervals are widely used in various fields to present estimates along with their associated uncertainties. The choice of confidence level depends on the context; for a given sample, a higher confidence level gives a wider interval in exchange for a higher chance that the interval captures the true value.
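The repeated-sampling meaning of "95%" can be checked by simulation: build many intervals from independent samples and count how many contain the true mean. This sketch assumes normally distributed heights with a known population standard deviation, so a z-interval applies; the true mean of 175 cm and σ of 7 cm are made-up values.

```python
import random
from statistics import NormalDist, fmean

def z_interval(sample, sigma, confidence=0.95):
    """Confidence interval for the mean when sigma is known (z-interval)."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    half_width = z * sigma / len(sample) ** 0.5
    m = fmean(sample)
    return m - half_width, m + half_width

def coverage(true_mean=175.0, sigma=7.0, n=30, trials=10_000, seed=0):
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, sigma) for _ in range(n)]
        lo, hi = z_interval(sample, sigma)
        hits += lo <= true_mean <= hi
    return hits / trials

cov = coverage()
print(f"fraction of intervals containing the true mean: {cov:.3f}")
```

When σ is unknown and estimated from the sample, the critical value comes from the t-distribution with n − 1 degrees of freedom instead of the normal distribution.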

Q 6. What is a probability density function, and how is it used in uncertainty analysis?

A probability density function (PDF) is a function that describes the likelihood of a continuous random variable taking on a given value. Instead of giving a probability at a specific point (like a discrete probability distribution), it gives a probability *density* at a specific point. The probability of the variable falling within a certain range is given by the integral of the PDF over that range.

In uncertainty analysis, PDFs are crucial for representing uncertainties associated with continuous measurements or parameters. For instance, the uncertainty in the length of a component might be represented by a normal (Gaussian) PDF. The PDF allows for calculating the probability of the length falling within specific bounds. Different PDFs (e.g., uniform, triangular, log-normal) can model various distributions of uncertainty depending on the nature of the source of error.

Using simulation techniques (like Monte Carlo methods), we can use the PDFs of input parameters to generate numerous simulated values and calculate the resulting PDF for the output variable. This gives a detailed picture of the uncertainty in the output.
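To make "the integral of the PDF over a range" concrete, the sketch below assumes a component length that is normally distributed with mean 100 mm and standard deviation 0.5 mm (illustrative values). It computes P(99 mm < L < 101 mm) two ways: from the cumulative distribution function, and by integrating the PDF numerically with the trapezoidal rule.

```python
from statistics import NormalDist

length = NormalDist(mu=100.0, sigma=0.5)  # assumed distribution of the length (mm)
a, b = 99.0, 101.0

# Exact: difference of the cumulative distribution function.
p_cdf = length.cdf(b) - length.cdf(a)

# Numerical: trapezoidal integration of the PDF over [a, b].
steps = 10_000
h = (b - a) / steps
p_trapz = h * (
    0.5 * length.pdf(a)
    + sum(length.pdf(a + i * h) for i in range(1, steps))
    + 0.5 * length.pdf(b)
)

# Both are about 0.9545, the probability of falling within ±2 sigma.
print(f"P(99 < L < 101): cdf = {p_cdf:.4f}, trapezoid = {p_trapz:.4f}")
```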

Q 7. How do you assess the validity of a measurement instrument?

Assessing the validity of a measurement instrument involves checking its accuracy and precision. Accuracy refers to how close the measured value is to the true value, while precision refers to the repeatability of measurements. Several methods exist:

- Calibration: Comparing the instrument’s readings against a known standard (a traceable standard of known accuracy). This reveals any systematic errors.

- Repeatability and Reproducibility Studies: Repeatedly measuring the same quantity under identical conditions (repeatability) and different conditions (reproducibility) to assess the random error and identify potential sources of variability.

- Linearity Check: Verifying the instrument’s response is linear across its operating range. A non-linear response indicates potential bias.

- Drift Check: Monitoring the instrument’s readings over time to see if it exhibits drift due to aging or environmental factors.

- Comparison with other instruments: Comparing measurements from the instrument with readings from another, well-validated instrument.

The specific methods employed depend on the type of instrument and the required level of certainty. Proper instrument validation and maintenance are crucial for reliable measurement results and reducing uncertainties.

Q 8. Describe different methods for uncertainty quantification.

Uncertainty quantification (UQ) is the process of identifying and estimating the uncertainty associated with a measurement, model, or prediction. Different methods exist depending on the nature of the uncertainty and available information.

- Probabilistic methods: These treat uncertainty as a random variable and use probability distributions to represent it. Examples include Monte Carlo simulations (discussed in the next question), Bayesian methods (which incorporate prior knowledge about the uncertainty), and various statistical inference techniques.

- Interval methods: These methods provide bounds or intervals within which the true value is likely to lie. They are particularly useful when probabilistic information is limited. An example is interval arithmetic, where calculations are performed on intervals instead of single values.

- Fuzzy logic methods: These handle uncertainty by using fuzzy sets, which represent imprecise concepts or values. They are helpful when dealing with linguistic uncertainties or subjective judgments.

- Sensitivity analysis methods: These quantify the influence of different input uncertainties on the output uncertainty, thereby identifying the most crucial sources of uncertainty. We’ll discuss this in more detail later.

The choice of method depends on factors like the availability of data, the nature of the uncertainties (random vs. systematic), and the computational resources available.
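Interval arithmetic is straightforward to sketch: each quantity is carried as a [low, high] pair, and each operation returns the tightest interval containing every possible result. The minimal `Interval` class below is hypothetical (not a specific library) and supports only addition, subtraction, and multiplication; the resistor and current values are illustrative.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Interval:
    low: float
    high: float

    def __add__(self, other):
        return Interval(self.low + other.low, self.high + other.high)

    def __sub__(self, other):
        # Smallest result: own minimum minus the other's maximum, and vice versa.
        return Interval(self.low - other.high, self.high - other.low)

    def __mul__(self, other):
        # The extremes of a product lie among the four endpoint products.
        products = [
            self.low * other.low, self.low * other.high,
            self.high * other.low, self.high * other.high,
        ]
        return Interval(min(products), max(products))

# Example: a resistance of 9.5 to 10.5 ohm carrying a current of 1.9 to 2.1 A.
r = Interval(9.5, 10.5)
i = Interval(1.9, 2.1)
v = r * i  # Ohm's law, V = I * R
print(f"voltage lies in [{v.low:.2f}, {v.high:.2f}] V")
```

Interval results are guaranteed bounds, but they can be wider than necessary when the same variable appears more than once in an expression (the so-called dependency problem).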

Q 9. Explain the Monte Carlo method and its applications in uncertainty analysis.

The Monte Carlo method is a computational technique that uses repeated random sampling to obtain numerical results. In uncertainty analysis, it’s used to propagate uncertainties through a model or calculation. Imagine you’re trying to estimate the area of a circle by randomly throwing darts at a square that encloses the circle. The fraction of darts landing inside the circle, multiplied by the area of the square, approximates the circle’s area. Similarly, in UQ, we:

- Define probability distributions for each uncertain input parameter of the model.

- Randomly sample values from these distributions.

- Run the model using these sampled values.

- Repeat the sampling and model-run steps many times.

- Analyze the distribution of the model outputs to quantify the uncertainty.

Applications of the Monte Carlo method are widespread, including:

- Financial modeling: Estimating the risk associated with investment portfolios.

- Engineering design: Assessing the reliability of structures or components under uncertain loads and material properties.

- Climate modeling: Predicting future climate scenarios given uncertainties in greenhouse gas emissions and climate feedbacks.

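```python
# Python example (simplified)
import numpy as np

# Define distributions for uncertain inputs
mean_x, std_x = 10, 2
mean_y, std_y = 5, 1

# Number of simulations
n_simulations = 10_000

# Sample from the distributions (seeded generator for reproducibility)
rng = np.random.default_rng(seed=42)
x_samples = rng.normal(mean_x, std_x, n_simulations)
y_samples = rng.normal(mean_y, std_y, n_simulations)

# Calculate the output (example: z = x + y)
z_samples = x_samples + y_samples

# Analyze the distribution of z_samples
print(f"mean of z: {z_samples.mean():.2f}")       # should be close to 15
print(f"std of z:  {z_samples.std(ddof=1):.2f}")  # close to sqrt(2**2 + 1**2) ≈ 2.24 for independent x, y
low, high = np.percentile(z_samples, [2.5, 97.5])
print(f"95% of simulated z values lie in [{low:.2f}, {high:.2f}]")
```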
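The dart-throwing analogy can itself be run as a tiny Monte Carlo estimate. This standard-library sketch throws random points into the square [-1, 1] × [-1, 1], which encloses a circle of radius 1; the fraction landing inside, times the square’s area of 4, approximates the circle’s area π. The number of darts and the seed are arbitrary choices.

```python
import math
import random

def estimate_circle_area(n_darts=200_000, seed=123):
    rng = random.Random(seed)
    inside = 0
    for _ in range(n_darts):
        x, y = rng.uniform(-1, 1), rng.uniform(-1, 1)
        inside += x * x + y * y <= 1.0
    square_area = 4.0
    # Fraction of darts inside the circle, scaled by the square's area.
    return square_area * inside / n_darts

area = estimate_circle_area()
print(f"estimated area = {area:.4f}, exact = {math.pi:.4f}")
```

The statistical error of a Monte Carlo estimate shrinks roughly as 1/√N, so quadrupling the number of darts (or model runs) only halves it.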

Q 10. How do you handle correlated uncertainties in a calculation?

Correlated uncertainties occur when the uncertainties of different input parameters are not independent; their values are related. Ignoring correlation can lead to underestimating or overestimating the total uncertainty, depending on the sign of the correlation and on how the inputs enter the calculation. To handle correlated uncertainties, we need to account for the relationships between the variables. This is often done by using:

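- Covariance matrices: These matrices describe how pairs of uncertain parameters vary together. A positive covariance indicates a positive correlation (when one is high, the other tends to be high as well), and a negative covariance indicates a negative correlation. Because covariance depends on the units and scales of the variables, the strength of the relationship is better judged from the correlation coefficient (the covariance divided by the product of the two standard deviations).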

- Copulas: These are functions that link marginal probability distributions of individual variables to their joint distribution, allowing for the specification of complex dependencies.

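- Latin Hypercube Sampling (LHS): This stratified sampling method explores the input parameter space efficiently by ensuring good coverage of each input’s full range. On its own, LHS produces approximately independent inputs; to represent correlations it is combined with a technique that induces the desired rank correlation between inputs (for example, the Iman-Conover method).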

In practice, obtaining correlation information may require careful analysis of the underlying processes and potentially involve expert judgment. For example, in a structural analysis, the strength of different materials used might be positively correlated due to factors like the origin of the material or manufacturing techniques. A Monte Carlo simulation that samples the inputs jointly, for example from a multivariate distribution with the appropriate covariance matrix, or with LHS combined with a rank-correlation method, would capture this dependence.
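For a sum z = x + y, first-order propagation with correlation adds a covariance term: σz² = σx² + σy² + 2ρσxσy, where ρ is the correlation coefficient. The sketch below uses illustrative values (σx = 2, σy = 1, ρ = 0.8) and compares that formula with a Monte Carlo run in which correlated normal samples are generated by the standard two-variable Cholesky construction.

```python
import math
import random
import statistics

mu_x, sigma_x = 10.0, 2.0
mu_y, sigma_y = 5.0, 1.0
rho = 0.8  # assumed correlation between x and y

# Analytic first-order result for z = x + y, with and without the covariance term.
sigma_z_corr = math.sqrt(sigma_x**2 + sigma_y**2 + 2 * rho * sigma_x * sigma_y)
sigma_z_indep = math.sqrt(sigma_x**2 + sigma_y**2)

# Monte Carlo with correlated inputs: y reuses part of x's standard-normal draw.
rng = random.Random(7)
z_samples = []
for _ in range(100_000):
    u1, u2 = rng.gauss(0, 1), rng.gauss(0, 1)
    x = mu_x + sigma_x * u1
    y = mu_y + sigma_y * (rho * u1 + math.sqrt(1 - rho**2) * u2)
    z_samples.append(x + y)
sigma_z_mc = statistics.stdev(z_samples)

print(f"ignoring correlation: {sigma_z_indep:.3f}")  # sqrt(5) ≈ 2.236
print(f"with correlation:     {sigma_z_corr:.3f}")   # sqrt(8.2) ≈ 2.864
print(f"Monte Carlo:          {sigma_z_mc:.3f}")
```

With ρ = −0.8 the same formula gives √1.8 ≈ 1.34, smaller than the independent result, which is why ignoring correlation can err in either direction.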

Q 11. What is sensitivity analysis, and why is it important in uncertainty analysis?

Sensitivity analysis identifies which input parameters most significantly affect the output uncertainty. This is crucial for efficient resource allocation and risk management. By pinpointing the most influential factors, we can focus on reducing uncertainties associated with those parameters and improve model accuracy without needing to consider less important variables. For example, if a model’s output is very sensitive to one particular parameter, efforts should be made to improve its measurement or reduce its variability. There are various sensitivity analysis methods including:

- Local sensitivity analysis: Analyzes the sensitivity of the model to small changes in input parameters around a specific point.

- Global sensitivity analysis: Considers the entire range of variation of the input parameters, which is particularly useful in the presence of non-linearity.

- Variance-based methods (e.g., Sobol indices): Quantify the contribution of individual input parameters and their interactions to the output variance.

Imagine designing a bridge. A sensitivity analysis might reveal that the strength of the concrete is a far more critical parameter than the precise dimensions of some minor bracing elements. This information lets engineers focus resources on obtaining more accurate measurements for concrete strength.
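Variance-based indices can be estimated with plain Monte Carlo. The sketch below uses a basic "pick-freeze" estimator for first-order Sobol indices: two independent input samples A and B are drawn, and for input i a hybrid sample keeps x_i from A and takes every other input from B, so the covariance between the two sets of outputs estimates the variance explained by x_i alone. The model z = 3x + y with independent standard-normal inputs is hypothetical; its exact indices are 0.9 and 0.1. Real studies would typically use a dedicated library and a more efficient estimator.

```python
import random
from statistics import fmean

def model(x, y):
    return 3.0 * x + y  # hypothetical model; exact first-order indices are 0.9 and 0.1

def first_order_sobol(n=50_000, seed=3):
    rng = random.Random(seed)
    a = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(n)]
    b = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(n)]
    f_a = [model(x, y) for x, y in a]
    mean_a = fmean(f_a)
    var_a = fmean([(f - mean_a) ** 2 for f in f_a])

    indices = []
    for i in range(2):
        # Hybrid sample: input i copied ("frozen") from A, the other input taken from B.
        f_c = [model(*[ai[k] if k == i else bi[k] for k in range(2)]) for ai, bi in zip(a, b)]
        mean_c = fmean(f_c)
        cov = fmean([(fa - mean_a) * (fc - mean_c) for fa, fc in zip(f_a, f_c)])
        indices.append(cov / var_a)
    return indices

s_x, s_y = first_order_sobol()
print(f"S_x ≈ {s_x:.3f}, S_y ≈ {s_y:.3f}")
```

Here x explains about 90% of the output variance, so in the bridge analogy it would be the "concrete strength" worth measuring more precisely.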

Q 12. Discuss the difference between systematic and random errors.

Systematic errors (or biases) are consistent, repeatable errors that always occur in the same direction. They’re often caused by flaws in the measuring instrument or the experimental procedure. Examples include:

- A balance that’s not properly calibrated, consistently giving readings that are 0.1 grams too high.

- A thermometer that is consistently 2 degrees off due to a manufacturing defect.

Random errors are unpredictable variations that fluctuate randomly during measurement. They are typically due to uncontrollable factors such as environmental noise. Examples include:

- Small variations in the reading of a scale due to vibrations in the environment.

- Uncertainties in timing an event due to reaction time.

Systematic errors are generally more difficult to detect and correct than random errors because they are consistent and do not average out. Reducing systematic errors requires careful calibration of instruments and designing experiments to minimize biases. Random errors can be reduced by averaging multiple measurements: for n independent readings, the standard error of the mean falls in proportion to 1/√n.
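A short simulation shows why averaging helps with one kind of error but not the other. The sketch assumes a hypothetical balance with the +0.1 g calibration bias from the example above, random noise with a standard deviation of 0.05 g, and a made-up true mass of 50 g.

```python
import random
from statistics import fmean, stdev

TRUE_MASS = 50.0  # g, hypothetical
BIAS = 0.1        # g, systematic error from miscalibration (assumed)
NOISE_SD = 0.05   # g, random error per reading (assumed)

rng = random.Random(11)

def mean_of_readings(n):
    return fmean(TRUE_MASS + BIAS + rng.gauss(0, NOISE_SD) for _ in range(n))

for n in (1, 10, 100, 1000):
    # Repeat the whole n-reading experiment 200 times to see how the average behaves.
    means = [mean_of_readings(n) for _ in range(200)]
    print(f"n={n:5d}  average error = {fmean(means) - TRUE_MASS:+.3f} g  "
          f"spread of the mean = {stdev(means):.4f} g")
```

The spread shrinks roughly as 0.05/√n, while the average error stays near +0.1 g for every n: only calibration removes it.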

Q 13. How do you determine the appropriate number of significant figures in a result?

Determining the appropriate number of significant figures is crucial for accurate representation of data and results. The rule of thumb is to retain only the number of digits that reflects the uncertainty in the measurement. Consider these points:

- Uncertainty in measurements: The number of significant figures should reflect the uncertainty associated with the measurement. For example, if a measurement has an uncertainty of ±0.1, it’s inappropriate to report the result with more than one decimal place.

- Calculations: For multiplication and division, the result should have no more significant figures than the least precise factor. For example, 2.5 × 3.14159 = 7.853975, which should be rounded to 7.9 (two significant figures) because 2.5 has only two significant figures. For addition and subtraction, the rule applies to decimal places rather than significant figures.

- Rounding rules: Standard rounding rules should be followed when reducing the number of significant figures. If the digit to be dropped is 5 or greater, round up; otherwise, round down.

In scientific notation, only the significant figures are reported. For example, 123000 can be written as 1.23 × 10⁵ if only the first three digits are significant (the uncertainty is in the thousands place). If the uncertainty is larger, it might be written as 1.2 × 10⁵.
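Rounding to significant figures is easy to get wrong by hand. The small helper below (`round_sig` is a hypothetical name, standard library only) rounds to a given number of significant figures, and Python’s `e` format specifier produces the scientific-notation form used in the examples above.

```python
import math

def round_sig(x, sig):
    """Round x to `sig` significant figures."""
    if x == 0:
        return 0.0
    # Position of the leading digit, e.g. 0 for 7.85, 5 for 123000.
    exponent = math.floor(math.log10(abs(x)))
    return round(x, sig - 1 - exponent)

product = 2.5 * 3.14159
print(round_sig(product, 2))  # 7.9
print(f"{123000:.2e}")        # 1.23e+05 (three significant figures)
print(f"{123000:.1e}")        # 1.2e+05  (two significant figures)
```

Note that Python’s built-in `round()` rounds exact ties to the nearest even digit and works on binary floating-point values, so it can differ from the "5 rounds up" rule; `decimal.Decimal` with `ROUND_HALF_UP` follows that rule exactly.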

Q 14. Explain the concept of degrees of freedom in statistical analysis.

Degrees of freedom (df) in statistical analysis represent the number of independent pieces of information available to estimate a parameter. It’s essentially the number of values that are free to vary after certain constraints have been imposed. Here’s a simple explanation:

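- Sample variance: If you have a sample of n measurements and you know the sample mean, only n-1 of the measurements are free to vary. Once you know n-1 measurements and the sample mean, the nth measurement is determined. Thus, the sample variance (and standard deviation), which is computed from deviations about the sample mean, has n-1 degrees of freedom; this is why it divides by n-1 rather than n.

- Linear regression: In a linear regression with p parameters (e.g., slope and intercept), the residual degrees of freedom are n-p, where n is the number of data points. Estimating the p parameters imposes p constraints on the residuals, leaving n-p independent pieces of information for estimating the error variance.

- Chi-squared test: The degrees of freedom in a chi-squared test depend on the number of categories and the constraints imposed on the data. For example, a goodness-of-fit test with k categories has k-1 degrees of freedom (minus one more for each parameter estimated from the data), and a test of independence in an r × c contingency table has (r-1)(c-1).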

The concept of degrees of freedom is important because it affects the choice of statistical distributions (e.g., t-distribution instead of a normal distribution when the degrees of freedom are low) and impacts the accuracy of statistical inferences. In essence, degrees of freedom reflect the amount of information we have available relative to the complexity of the model we are trying to fit to the data.
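The n − 1 divisor in the sample variance follows directly from the lost degree of freedom. The simulation below assumes normal data with σ = 1 and averages many variance estimates from samples of size 5: dividing by n underestimates σ² by the factor (n − 1)/n = 0.8, while dividing by n − 1 is unbiased. In Python’s `statistics` module, `pvariance` divides by n and `variance` by n − 1.

```python
import random
from statistics import fmean, pvariance, variance

rng = random.Random(5)
n, trials = 5, 20_000
biased, unbiased = [], []
for _ in range(trials):
    sample = [rng.gauss(0.0, 1.0) for _ in range(n)]  # true variance is 1
    biased.append(pvariance(sample))   # sum of squared deviations / n
    unbiased.append(variance(sample))  # sum of squared deviations / (n - 1)

print(f"mean of /n estimates:     {fmean(biased):.3f}")    # close to (n - 1) / n = 0.8
print(f"mean of /(n-1) estimates: {fmean(unbiased):.3f}")  # close to 1.0
```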

Q 15. What are some common statistical distributions used in uncertainty analysis?

Uncertainty analysis often relies on probability distributions to model the variability and uncertainty in data. Several common distributions are particularly useful depending on the nature of the data and the type of uncertainty being modeled.

Normal (Gaussian) Distribution: This is the bell-shaped curve, ubiquitous in statistics. It’s used when the uncertainty is symmetric around a mean value and follows a pattern of random variations. Think of the height of adult women – it’s roughly normally distributed.

Uniform Distribution: This distribution assigns equal probability density to all values within a specified range. It’s used when we know the minimum and maximum values, but have no reason to believe any value within that range is more likely than another. Imagine picking a random number between 1 and 10 with no preference for any part of the range: equal-width subintervals, such as 2 to 3 and 7 to 8, are equally likely to contain it.

Lognormal Distribution: This distribution is used when the logarithm of a variable follows a normal distribution. It’s often appropriate for variables that cannot be negative and tend to have a skewed distribution, such as the size of particles in a suspension or the lifespan of electronic components.

Triangular Distribution: This distribution is defined by its minimum, maximum, and most likely values. It’s useful when we have limited data, but can make educated guesses about the bounds and the most probable outcome. This might be used to estimate project duration when historical data is scarce.

Beta Distribution: This is a flexible distribution defined on the interval [0, 1], often used to model probabilities or proportions. It’s particularly valuable in Bayesian analysis where prior knowledge is incorporated into the model.

The choice of distribution depends critically on the context and available information. Often, a careful assessment of the data and the underlying process is needed to select the most appropriate distribution.
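Python’s `random` module can draw from every distribution listed above, which is often enough to feed a Monte Carlo study. The parameters below are illustrative assumptions; each sample mean is printed next to its textbook value as a sanity check.

```python
import math
import random
from statistics import fmean

rng = random.Random(2024)
N = 100_000

# name: (function drawing one value, theoretical mean)
samplers = {
    "normal(170, 7)": (lambda: rng.gauss(170, 7), 170.0),
    "uniform(0, 10)": (lambda: rng.uniform(0, 10), 5.0),
    "lognormal(0, 0.5)": (lambda: rng.lognormvariate(0, 0.5), math.exp(0.5**2 / 2)),
    "triangular(0, 10, mode=2)": (lambda: rng.triangular(0, 10, 2), (0 + 10 + 2) / 3),
    "beta(2, 5)": (lambda: rng.betavariate(2, 5), 2 / (2 + 5)),
}

sample_means = {}
for name, (draw, expected) in samplers.items():
    sample_means[name] = fmean(draw() for _ in range(N))
    print(f"{name:26s} sample mean {sample_means[name]:8.3f}   expected {expected:8.3f}")
```

For the lognormal case, the arguments are the mean and standard deviation of the underlying normal distribution (the logarithm of the variable), not of the variable itself.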

Career Expert Tips:

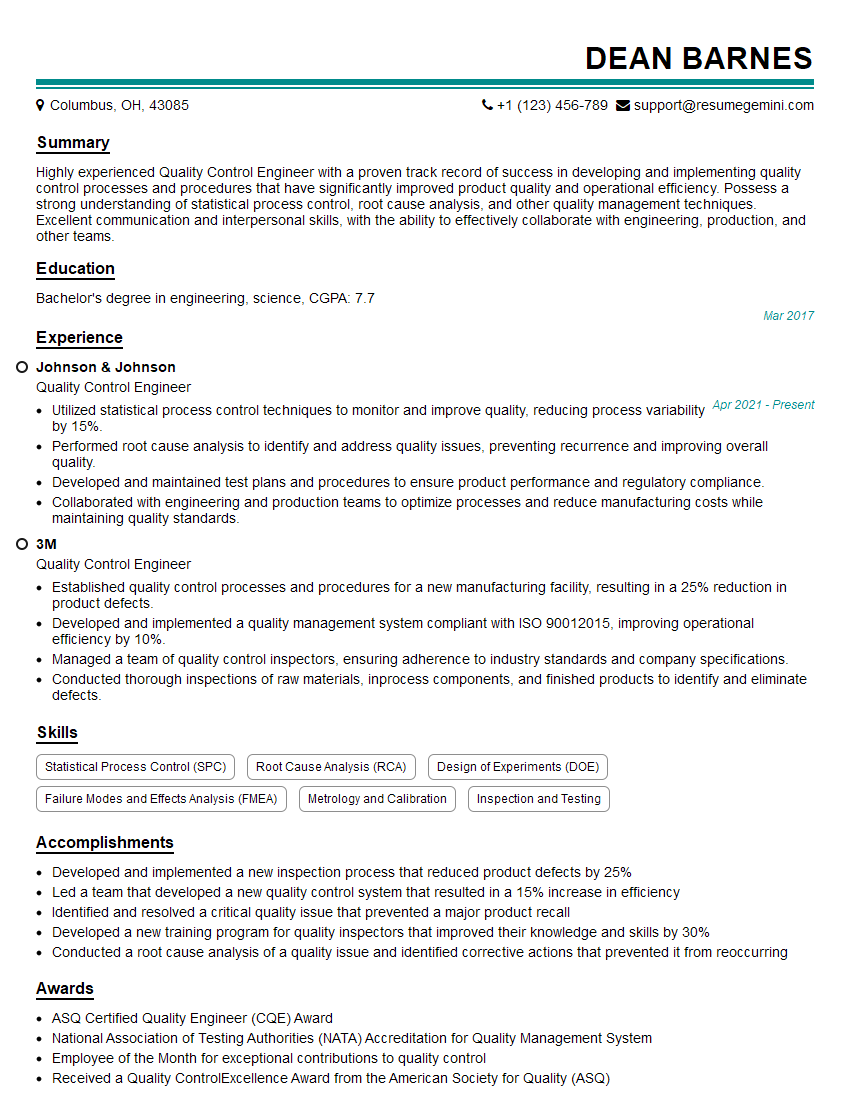

- Ace those interviews! Prepare effectively by reviewing the Top 50 Most Common Interview Questions on ResumeGemini.

- Navigate your job search with confidence! Explore a wide range of Career Tips on ResumeGemini. Learn about common challenges and recommendations to overcome them.

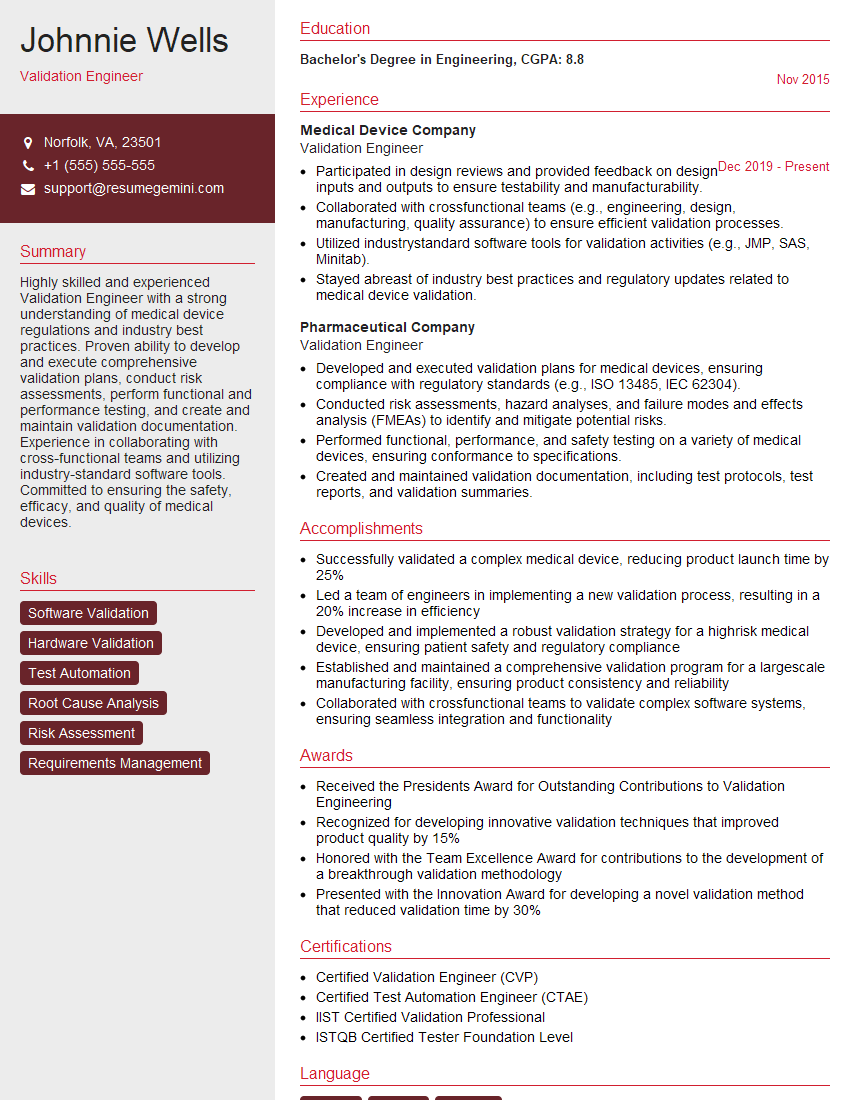

- Craft the perfect resume! Master the Art of Resume Writing with ResumeGemini’s guide. Showcase your unique qualifications and achievements effectively.

- Don’t miss out on holiday savings! Build your dream resume with ResumeGemini’s ATS optimized templates.

Q 16. Describe the method of least squares and its application in data analysis.

The method of least squares is a fundamental technique in data analysis used to find the best-fitting curve to a set of data points. It works by minimizing the sum of the squares of the differences between the observed values and the values predicted by the curve. In simpler terms, it aims to find the line (or curve) that is closest to all the data points simultaneously.

Let’s consider a linear model, where we want to find the line y = mx + c that best fits the data. The least squares method finds the values of m (slope) and c (intercept) that minimize the sum of squared residuals, S(m, c) = Σ (y_i − (m·x_i + c))², where a residual is the difference between the observed value y_i and the predicted value m·x_i + c for each data point (x_i, y_i). This minimization is often done using calculus (taking the partial derivatives with respect to m and c and setting them to zero), or through numerical optimization techniques.
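Setting both partial derivatives of the sum of squared residuals to zero gives closed-form estimates: m = Σ(x_i − x̄)(y_i − ȳ) / Σ(x_i − x̄)² and c = ȳ − m·x̄. The sketch below applies them to five made-up data points and also estimates the slope’s standard error from the residual scatter, using n − 2 degrees of freedom because two parameters were fitted.

```python
import math
from statistics import fmean

x = [0.0, 1.0, 2.0, 3.0, 4.0]  # hypothetical data
y = [1.1, 2.9, 5.2, 6.8, 9.0]

x_bar, y_bar = fmean(x), fmean(y)
s_xx = sum((xi - x_bar) ** 2 for xi in x)
s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))

m = s_xy / s_xx        # slope minimizing the sum of squared residuals
c = y_bar - m * x_bar  # intercept

residuals = [yi - (m * xi + c) for xi, yi in zip(x, y)]
s2 = sum(r ** 2 for r in residuals) / (len(x) - 2)  # residual variance, n - 2 degrees of freedom
se_m = math.sqrt(s2 / s_xx)                         # standard error of the slope

print(f"m = {m:.3f} ± {se_m:.3f}, c = {c:.3f}")
```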

Applications:

Regression Analysis: To model relationships between variables. For example, predicting house prices based on size and location.

Curve Fitting: Finding the best-fitting curve to experimental data. This is common in scientific fields to derive mathematical models from experimental observations.

Parameter Estimation: Estimating unknown parameters in models using observed data. For instance, estimating the speed of light using experimental measurements.

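While widely used, least squares is sensitive to outliers, and ordinary linear least squares assumes a model that is linear in its parameters; its usual uncertainty estimates also assume independent errors with constant variance. More robust or more general methods (e.g., robust regression, weighted or nonlinear least squares) exist for situations that violate these assumptions.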

Q 17. Explain the difference between precision and accuracy.

Precision and accuracy are both crucial aspects of measurement, but they represent different things. Think of it like aiming at a target:

Accuracy refers to how close a measurement is to the true or accepted value. High accuracy means your measurements are clustered around the bullseye.

Precision refers to how close repeated measurements are to each other. High precision means your measurements are tightly clustered, regardless of whether they hit the bullseye.

You can have high precision but low accuracy (e.g., consistently hitting the same spot, but far from the bullseye), high accuracy but low precision (e.g., hitting around the bullseye, but with a wide spread), high accuracy and high precision (ideal), or low accuracy and low precision.

Example: Imagine measuring the length of a table. If you get repeated measurements of 1.50m, 1.51m, and 1.52m, but the actual length is 2.00m, you have high precision (measurements are close together) but low accuracy (far from the true value).
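The table example can be quantified directly: the difference between the mean reading and the true value (the bias) measures accuracy, and the standard deviation of the readings measures precision.

```python
from statistics import fmean, stdev

readings_m = [1.50, 1.51, 1.52]  # repeated measurements from the example
true_length_m = 2.00

bias = fmean(readings_m) - true_length_m  # accuracy: large bias, about -0.49 m
spread = stdev(readings_m)                # precision: small spread, about 0.01 m
print(f"bias = {bias:+.2f} m, spread = {spread:.2f} m")
```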

Q 18. How do you handle outliers in a dataset?

Outliers are data points that significantly deviate from the rest of the data. Handling them requires careful consideration and depends on the reason for their existence. Simply removing them is generally discouraged without a valid justification.

Identify Potential Outliers: Use box plots, scatter plots, or statistical methods (e.g., Z-scores) to identify data points that fall significantly outside the expected range.

Investigate the Cause: Try to understand why the outlier occurred. Was it a measurement error, a data entry mistake, or does it represent a genuinely unusual event?

Robust Methods: Employ statistical methods that are less sensitive to outliers. For instance, median instead of mean, robust regression techniques, or trimmed means.

Transformation: Sometimes, transforming the data (e.g., using logarithms) can reduce the influence of outliers.

Winsorizing: Replace values beyond chosen percentiles (for example, the 5th and 95th) with the values at those percentiles, so extreme points are pulled in rather than discarded.

Careful Removal: Only remove outliers if you have a strong justification for believing they are errors, after careful investigation. Document your reasons clearly.

The choice of approach depends on the specific context and the implications of the outlier on the analysis. It’s crucial to document the handling of outliers transparently in any analysis report.
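The sketch below contrasts the ordinary Z-score with the modified Z-score of Iglewicz and Hoaglin, which replaces the mean and standard deviation with the median and the median absolute deviation (MAD). With only six hypothetical readings, the single outlier inflates the standard deviation so much that its ordinary Z-score stays near 2 (a masking effect), while the robust version flags it. The thresholds of 3 and 3.5 are common conventions, not fixed rules.

```python
from statistics import fmean, median, stdev

data = [9.8, 10.1, 10.0, 9.9, 10.2, 14.7]  # hypothetical readings with one suspect value

# Ordinary Z-scores: distorted by the outlier itself.
mean, sd = fmean(data), stdev(data)
z_scores = [(v - mean) / sd for v in data]

# Modified Z-scores: based on the median and the median absolute deviation (MAD).
med = median(data)
mad = median(abs(v - med) for v in data)
modified_z = [0.6745 * (v - med) / mad for v in data]

flag_z = [v for v, z in zip(data, z_scores) if abs(z) > 3]
flag_mod = [v for v, mz in zip(data, modified_z) if abs(mz) > 3.5]
print("flagged by |z| > 3:", flag_z)  # [] (masking)
print("flagged by modified |z| > 3.5:", flag_mod)
print(f"mean = {mean:.2f}, median = {med:.2f}")
```

A flagged point is only a candidate for investigation; as described above, it should be removed only with a documented justification.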

Q 19. What is the role of uncertainty analysis in risk assessment?

Uncertainty analysis plays a vital role in risk assessment by quantifying the uncertainty associated with different risk factors. It helps to move beyond simple point estimates of risk and provides a more nuanced understanding of the potential range of outcomes.

How it’s used:

Quantifying Uncertainties: Uncertainty analysis identifies the sources of uncertainty (e.g., data limitations, model simplifications, future projections) and quantifies their impact on the risk assessment.

Sensitivity Analysis: It determines how sensitive the risk assessment is to changes in the inputs. This helps to identify the most critical factors that contribute to the overall uncertainty.

Probability Distributions: Instead of relying on single values, uncertainty analysis uses probability distributions to represent the range of possible values for each risk factor. This enables a more comprehensive representation of risk.

Risk Communication: Uncertainty analysis helps in communicating the inherent limitations and uncertainties associated with the risk assessment, leading to more informed decision-making.

By incorporating uncertainty analysis into risk assessment, we can make more robust and informed decisions and be better prepared to handle unexpected events or outcomes.

Q 20. How do you communicate uncertainty results effectively to a non-technical audience?

Communicating uncertainty to a non-technical audience requires careful consideration and a focus on clarity and simplicity. Avoid jargon and technical terms whenever possible. Effective communication might involve:

Visualizations: Use charts and graphs, like bar charts showing the range of potential outcomes, or probability distributions to illustrate the uncertainty visually.

Analogies and Metaphors: Use simple analogies to explain complex concepts. For instance, comparing uncertainty to the spread of darts on a dartboard.

Scenario Planning: Present different scenarios and their associated probabilities to illustrate the range of possible outcomes. For example, “There’s a 70% chance we’ll see X, a 20% chance of Y, and a 10% chance of Z.”

Focus on Implications: Explain the implications of the uncertainty for decision-making. What are the potential consequences of different outcomes? What are the potential risks?

Transparency: Clearly state the limitations and assumptions of the analysis.

Plain Language: Use everyday language, avoid technical terms unless absolutely necessary, and always define them if used.

The key is to make the uncertainty understandable and relevant to the audience, allowing them to make informed decisions based on the information presented.

Q 21. Describe a situation where you had to deal with significant uncertainty in your work.

During a project assessing the environmental impact of a proposed offshore wind farm, we faced considerable uncertainty. The primary challenge stemmed from predicting long-term sediment transport patterns in the area. Existing data was limited, and the models available had significant limitations in accurately representing the complex hydrodynamic processes.

To address this, we employed a combination of techniques:

Multiple Models: We used several different sediment transport models, each with its own assumptions and strengths, to generate a range of predictions.

Sensitivity Analysis: We conducted sensitivity analyses to identify the model parameters that had the greatest influence on the predictions. This helped us focus our efforts on reducing uncertainty in those key areas.

Expert Elicitation: We engaged with leading experts in sediment transport modeling to gather their insights and judgments on the likelihood of different outcomes.

Uncertainty Quantification: We used probabilistic methods to quantify the uncertainty in our predictions, expressing the results as probability distributions rather than single point estimates.

The final report clearly communicated the uncertainties associated with our predictions, allowing stakeholders to make informed decisions based on a realistic understanding of the potential environmental impacts of the project.

Q 22. How do you validate a model used for uncertainty quantification?

Validating a model for uncertainty quantification involves rigorously assessing its accuracy and reliability in capturing the inherent uncertainties within a system. This isn’t a single test, but a multi-faceted process. Think of it like testing a new recipe – you wouldn’t just try it once!

Firstly, we perform verification, ensuring the model correctly implements the intended mathematical and physical principles. This often involves code reviews, unit tests, and comparison against simplified analytical solutions where available.

Next, we focus on validation, comparing the model’s predictions to real-world data. This requires a robust dataset that ideally includes a range of conditions and potential sources of uncertainty. We use statistical methods to assess the model’s predictive capability. For example, we might calculate the root mean square error (RMSE) or use a goodness-of-fit test such as the chi-squared test. A low RMSE, together with a reduced chi-squared close to 1 (residuals consistent with the stated uncertainties), indicates good agreement with the data; a large chi-squared statistic signals a poor fit.
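Both metrics are short calculations. The sketch assumes hypothetical observations with known one-sigma measurement uncertainties and predictions from a model with no parameters fitted to these particular points, so the degrees of freedom equal the number of points.

```python
import math

observed = [1.0, 2.1, 2.9, 4.2]   # hypothetical measurements
predicted = [1.1, 2.0, 3.0, 4.0]  # hypothetical model predictions
sigma = [0.1, 0.1, 0.1, 0.2]      # assumed 1-sigma uncertainty of each measurement
n_fitted_params = 0               # parameters fitted to these same points

residuals = [o - p for o, p in zip(observed, predicted)]
rmse = math.sqrt(sum(r ** 2 for r in residuals) / len(residuals))

chi2 = sum((r / s) ** 2 for r, s in zip(residuals, sigma))
dof = len(observed) - n_fitted_params
reduced_chi2 = chi2 / dof

print(f"RMSE = {rmse:.3f}, chi-squared = {chi2:.2f}, reduced chi-squared = {reduced_chi2:.2f}")
```

A reduced chi-squared well above 1 suggests misfit or underestimated uncertainties; a value well below 1 suggests the stated uncertainties may be too large.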

Finally, we consider sensitivity analysis. This helps identify which input parameters have the largest influence on the model’s output uncertainty. This information is critical for refining the model and focusing efforts on reducing uncertainties in the most influential parameters. For instance, if a small change in one parameter significantly alters the prediction, we know to focus our efforts on obtaining a more accurate measurement for that parameter.

The entire process should be meticulously documented, including all assumptions, data sources, and validation metrics, ensuring transparency and reproducibility.

Q 23. Explain the concept of Bayesian inference in the context of uncertainty analysis.

Bayesian inference provides a powerful framework for incorporating prior knowledge and updating our beliefs about a system’s parameters in light of new data. Unlike frequentist methods that treat parameters as fixed but unknown values, Bayesian inference considers them as random variables with probability distributions.

Imagine you’re trying to estimate the average height of students in a university. A prior distribution represents your initial belief about the average height before collecting any data – perhaps based on national averages. As you collect height measurements (data), you update your belief using Bayes’ theorem. The result is the posterior distribution, which represents your updated belief about the average height after considering the data.

In uncertainty analysis, we use Bayesian inference to quantify the uncertainty in model parameters by expressing them as probability distributions. This allows us to obtain not only point estimates but also credible intervals, which indicate the range within which the true parameter value likely lies with a specified probability. This provides a richer and more informative representation of uncertainty compared to just reporting a point estimate and a standard deviation.
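For the height example, the update has a closed form if we assume a normal prior on the mean height and normally distributed measurements with a known standard deviation (the conjugate normal-normal model). All numbers below are hypothetical.

```python
import math
from statistics import NormalDist

# Prior belief about the mean height (cm), e.g. from national averages (hypothetical values).
prior_mean, prior_sd = 170.0, 5.0
# Data: n measurements with sample mean x_bar; sigma is the assumed known spread of individual heights.
n, x_bar, sigma = 25, 174.0, 10.0

# Conjugate update: precisions (1 / variance) add,
# and the posterior mean is a precision-weighted average.
prior_precision = 1 / prior_sd**2
data_precision = n / sigma**2
post_precision = prior_precision + data_precision
post_mean = (prior_precision * prior_mean + data_precision * x_bar) / post_precision
post_sd = math.sqrt(1 / post_precision)

# 95% central credible interval from the normal posterior.
posterior = NormalDist(post_mean, post_sd)
lo, hi = posterior.inv_cdf(0.025), posterior.inv_cdf(0.975)
print(f"posterior mean = {post_mean:.2f} cm, sd = {post_sd:.2f} cm, 95% CrI = ({lo:.1f}, {hi:.1f})")
```

The posterior mean lies between the prior mean and the sample mean, pulled toward whichever carries more precision; here the 25 measurements dominate the prior.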

Q 24. What are some software packages you have experience using for uncertainty analysis?

My experience encompasses several software packages commonly used for uncertainty analysis. These include:

- MATLAB: Excellent for both numerical computation and visualization. I’ve extensively used MATLAB’s built-in statistical functions and toolboxes for Monte Carlo simulations, sensitivity analysis, and Bayesian inference.

- Python (with libraries like SciPy, NumPy, and PyMC3, now developed as PyMC): A highly versatile language with extensive libraries for statistical computing. PyMC3 is particularly valuable for Bayesian inference, providing efficient tools for implementing complex Bayesian models.

- R: Another powerful statistical computing language with a wide range of packages dedicated to uncertainty quantification and visualization. I’ve utilized R for developing customized analysis pipelines and exploring different uncertainty propagation methods.

- GUM workbench software: Specifically designed for performing uncertainty analysis according to the Guide to the Expression of Uncertainty in Measurement (GUM) method. This is especially useful in metrology applications.

The choice of software often depends on the specific problem, the available data, and personal preference. However, proficiency in at least one or two of these packages is crucial for effectively handling uncertainty analysis in various contexts.

Q 25. How do you determine the appropriate level of uncertainty to report?

Determining the appropriate level of uncertainty to report is a crucial aspect of responsible uncertainty analysis and hinges on several factors. It’s not a matter of arbitrarily choosing a precision, but rather a careful consideration of the context and consequences of the results.

- Intended use of the results: A high level of precision is needed if the results inform critical decisions with potentially significant consequences (e.g., safety-critical applications). Less precision might suffice for exploratory studies or preliminary assessments.

- Cost of further reduction: Reducing uncertainty often requires additional resources (time, money, expertise). We should weigh the cost against the potential benefits of increased precision. If reducing uncertainty further is prohibitively expensive or time-consuming, it might be more sensible to report the existing level of uncertainty transparently.

- Available resources: The complexity of the system, the quality and quantity of the available data, and the resources dedicated to uncertainty analysis all influence the attainable level of precision. It’s vital to be realistic and transparent about the limitations.

Finally, always communicate the uncertainty estimate together with its confidence level (coverage probability) or coverage factor, so that readers get a complete picture of the reliability of the results. For example, report ‘10 ± 2 with 95% confidence’ rather than just ‘10 ± 2’.
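One common reporting convention can be sketched as follows: multiply the combined standard uncertainty u by a coverage factor k to get the expanded uncertainty U = k·u. Then round U to two significant figures and round the value to the same decimal place. The function `format_result` is hypothetical. Following the GUM, k = 2 corresponds to roughly 95% coverage only when the output distribution is approximately normal.

```python
import math


def format_result(value, std_uncertainty, k=2.0, sig_figs=2):
    """Report value ± U, where U = k * u is the expanded uncertainty.

    U is rounded to `sig_figs` significant figures and the value is rounded
    to the same decimal place, so the two are quoted consistently."""
    if std_uncertainty <= 0:
        raise ValueError("standard uncertainty must be positive")
    expanded = k * std_uncertainty
    # Decimal place of the last significant figure retained in U.
    decimals = sig_figs - 1 - math.floor(math.log10(expanded))
    shown = max(decimals, 0)  # digits printed after the decimal point
    return (f"{round(value, decimals):.{shown}f} ± "
            f"{round(expanded, decimals):.{shown}f} (k = {k:g})")


print(format_result(10.0312, 0.0123))  # 10.031 ± 0.025 (k = 2)
print(format_result(1234.5, 56.0))     # 1230 ± 110 (k = 2)
```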

Q 26. Describe your experience with different uncertainty analysis methods (e.g., GUM, Monte Carlo).

I have extensive experience with various uncertainty analysis methods, including the Guide to the Expression of Uncertainty in Measurement (GUM) and Monte Carlo methods.

GUM: This method is particularly suitable for measurements with well-defined mathematical models. It propagates uncertainties through the model using a first-order Taylor series approximation. I have used GUM in calibration laboratories and for measuring physical quantities where the measurement model is precisely understood. Its strength is in its rigor and clarity, ideal for establishing traceability and reporting uncertainty in conformity with international standards.

Monte Carlo methods: These methods use random sampling to estimate uncertainty. I’ve used Monte Carlo simulations to model complex systems and account for uncertainty arising from various sources, including input parameter variability and model structural uncertainty. Monte Carlo methods excel in handling non-linear relationships and high-dimensional systems where GUM’s assumptions might be violated. I’ve employed Monte Carlo simulations in environmental modeling, risk assessment, and reliability engineering.

The choice between GUM and Monte Carlo methods often depends on the characteristics of the system and the availability of a well-defined measurement model. For simple, well-understood systems, GUM might be sufficient. For complex systems with non-linearity and multiple uncertainty sources, Monte Carlo simulation is typically more appropriate.
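The contrast between the two methods shows up clearly on a deliberately non-linear toy model. The sketch below assumes y = x² with x = 1.0 ± 0.5 (standard uncertainty). It compares the GUM first-order propagation with a Monte Carlo propagation in the spirit of GUM Supplement 1. The function names, the model, and the normal input distribution are assumptions for illustration, and inputs are treated as uncorrelated.

```python
import random


def gum_uncertainty(f, x, u, rel_step=1e-6):
    """GUM law of propagation of uncertainty (first-order Taylor series) for
    uncorrelated inputs; sensitivity coefficients come from central differences."""
    total = 0.0
    for i, (xi, ui) in enumerate(zip(x, u)):
        h = rel_step * abs(xi) if xi != 0 else rel_step
        up = list(x)
        up[i] = xi + h
        down = list(x)
        down[i] = xi - h
        c_i = (f(*up) - f(*down)) / (2 * h)  # sensitivity coefficient df/dx_i
        total += (c_i * ui) ** 2
    return total ** 0.5


def monte_carlo(f, x, u, n=100_000, seed=0):
    """Sample each input from a normal distribution, evaluate the model,
    and return the mean and standard deviation of the output sample."""
    rng = random.Random(seed)
    samples = [f(*[rng.gauss(xi, ui) for xi, ui in zip(x, u)]) for _ in range(n)]
    mean = sum(samples) / n
    sd = (sum((s - mean) ** 2 for s in samples) / (n - 1)) ** 0.5
    return mean, sd


def square(v):
    return v ** 2


u_gum = gum_uncertainty(square, [1.0], [0.5])       # linearised: 2 * 1.0 * 0.5
mc_mean, mc_sd = monte_carlo(square, [1.0], [0.5])  # analytic: mean 1.25, SD = sqrt(1.125)
print(u_gum, mc_mean, mc_sd)
```

The linearised GUM result gives u(y) = 1.0 around y = f(1.0) = 1.0. The sampled output instead has a mean near 1.25 and an SD near 1.06, along with a skewed distribution that a single ± value cannot describe. This is the kind of case where Monte Carlo is the safer choice.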

Q 27. How do you manage uncertainty in complex systems?

Managing uncertainty in complex systems presents a significant challenge, as the interactions between components can amplify or mitigate individual uncertainties. My approach involves a multi-pronged strategy:

- Decomposition: Breaking down the complex system into smaller, more manageable subsystems. This allows for analyzing uncertainty within individual subsystems and then propagating the uncertainties upwards to the system level.

- Sensitivity analysis: Identifying the most influential input parameters on the overall system uncertainty. This allows us to prioritize efforts in reducing uncertainty in the most critical parameters.

- Model simplification and validation: Simplifying the system model to improve computational efficiency, while checking that the simplification does not unduly compromise accuracy. The simplified model is then validated against available data to ensure it remains representative of the real system.

- Hierarchical Bayesian modeling: For highly complex systems, Bayesian methods can be particularly useful, allowing for the incorporation of prior knowledge and the efficient propagation of uncertainties across multiple hierarchical levels.

- Ensemble methods: Combining predictions from multiple models to generate a more robust estimate and quantify model uncertainty.

Careful consideration should be given to the propagation of uncertainty between subsystems, acknowledging that uncertainty from one subsystem can significantly impact others. Visualization tools are crucial for understanding the interplay of uncertainties within the entire system.
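The ensemble idea from the list above can be sketched with equal model weights and the law of total variance. The total variance is the average of each model’s own variance plus the variance of the model predictions around their mean. The between-model part serves as a rough measure of structural uncertainty. The function `ensemble_combine`, the equal weighting, and the numbers are hypothetical. The spread only captures model-form uncertainty to the extent the ensemble covers plausible alternative models.

```python
from statistics import fmean, pvariance


def ensemble_combine(means, sds):
    """Combine equally weighted model predictions via the law of total variance.

    means: each model's prediction of the same quantity.
    sds: each model's own standard uncertainty for that prediction.
    Returns (ensemble mean, total SD, between-model SD)."""
    if not means or len(means) != len(sds):
        raise ValueError("need one standard deviation per model prediction")
    within = fmean([s ** 2 for s in sds])  # average of the models' own variances
    between = pvariance(means)             # spread between models: structural uncertainty
    return fmean(means), (within + between) ** 0.5, between ** 0.5


# Hypothetical predictions of the same output from three alternative models.
ens_mean, total_sd, between_sd = ensemble_combine([10.0, 12.0, 14.0], [1.0, 1.0, 1.0])
print(ens_mean, total_sd, between_sd)
```

Here the disagreement between models (SD about 1.6) outweighs each model’s own stated uncertainty (SD 1.0). Quoting any single model’s uncertainty would therefore understate the real uncertainty.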

Q 28. Explain the importance of traceability in measurement uncertainty.

Traceability in measurement uncertainty is paramount for ensuring the reliability and comparability of results. It establishes a documented, unbroken chain of calibrations linking a measurement result to national or international standards. This is analogous to a family tree – tracing the lineage back to a known and reliable source.

Traceability allows us to verify the accuracy of instruments and methods by referring them to higher-order standards. Without traceability, a measurement’s uncertainty is essentially meaningless, as there’s no objective basis for assessing its accuracy. Imagine comparing measurements from two different laboratories using instruments that haven’t been calibrated against common standards – the results would be difficult to compare.

In practice, traceability is achieved through a series of calibrations and validations. Each instrument used in a measurement chain should be calibrated against a more accurate standard, and the uncertainty associated with each calibration step should be documented. This allows the uncertainty to be propagated through the entire measurement chain, ultimately relating the final measurement uncertainty to a national or international standard. This process is crucial for ensuring the quality and reliability of measurements across different laboratories and organizations, fostering confidence in scientific results.
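The way uncertainty accumulates down such a chain can be illustrated with a small sketch. It assumes each calibration step contributes an independent standard uncertainty with unit sensitivity, so the contributions combine as a root sum of squares. The function name and the three-level chain are hypothetical, and correlated steps would need covariance terms.

```python
import math


def chain_uncertainty(step_uncertainties):
    """Cumulative standard uncertainty at each level of a calibration chain,
    assuming independent steps with unit sensitivity (root sum of squares)."""
    cumulative = []
    total_sq = 0.0
    for u in step_uncertainties:
        total_sq += u ** 2
        cumulative.append(math.sqrt(total_sq))
    return cumulative


# Hypothetical chain in consistent units: national standard,
# reference laboratory, in-house working standard.
levels = chain_uncertainty([0.3, 0.4, 1.2])
print(levels)
```

The uncertainty can only grow at each step down the chain. This is why every instrument should be calibrated against a standard that is more accurate than itself.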

Key Topics to Learn for Uncertainty and Error Analysis Interview

- Types of Uncertainty: Understanding and differentiating between random and systematic errors, including their sources and propagation.

- Error Propagation: Mastering techniques for calculating uncertainties in derived quantities from measurements with uncertainties (e.g., using partial derivatives).

- Statistical Methods: Familiarity with descriptive statistics (mean, standard deviation, variance), confidence intervals, and hypothesis testing as applied to uncertainty analysis.

- Calibration and Validation: Understanding the processes involved in instrument calibration and experimental validation to minimize systematic errors.

- Data Analysis and Visualization: Effectively presenting uncertainty analysis results through appropriate graphs and visualizations (e.g., error bars, box plots).

- Least Squares Regression and Curve Fitting: Applying these techniques to experimental data and quantifying the uncertainties associated with the fitted parameters.

- Practical Applications: Discussing real-world examples of uncertainty analysis in your field of study or work experience (e.g., engineering, scientific research, finance).

- Advanced Topics (Consider based on Job Description): Explore topics like Monte Carlo simulations, Bayesian inference, or specific methods relevant to your target roles.
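The least-squares topic above can be illustrated with a weighted straight-line fit that also reports parameter uncertainties. The sketch uses the standard closed-form solution and assumes independent y-errors with known standard uncertainties σᵢ and no errors in x. The function name and data are hypothetical. If the σᵢ are only relative weights, the uncertainties are usually scaled by the square root of the reduced chi-squared.

```python
import math


def weighted_line_fit(x, y, sigma):
    """Fit y = a + b*x by weighted least squares with weights 1/sigma_i**2.

    Returns (a, b, u_a, u_b, cov_ab) taken from the parameter covariance matrix."""
    w = [1.0 / s ** 2 for s in sigma]
    S = sum(w)
    Sx = sum(wi * xi for wi, xi in zip(w, x))
    Sy = sum(wi * yi for wi, yi in zip(w, y))
    Sxx = sum(wi * xi * xi for wi, xi in zip(w, x))
    Sxy = sum(wi * xi * yi for wi, xi, yi in zip(w, x, y))
    delta = S * Sxx - Sx ** 2
    if delta == 0:
        raise ValueError("x values must not all be identical")
    a = (Sxx * Sy - Sx * Sxy) / delta  # intercept
    b = (S * Sxy - Sx * Sy) / delta    # slope
    # Variances Sxx/delta and S/delta; covariance -Sx/delta (slope and intercept correlate).
    return a, b, math.sqrt(Sxx / delta), math.sqrt(S / delta), -Sx / delta


# Hypothetical data lying exactly on y = 1 + 2x, each point with sigma = 1.
a, b, u_a, u_b, cov_ab = weighted_line_fit([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0], [1.0] * 4)
print(a, b, u_a, u_b, cov_ab)
```

The negative covariance between slope and intercept matters when propagating both into a prediction. Ignoring it typically overstates the uncertainty of the fitted line near the centre of the data.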

Next Steps

Mastering Uncertainty and Error Analysis is crucial for success in many analytical and quantitative fields. A strong understanding demonstrates a commitment to accuracy and rigorous methodology, highly valued by employers. To maximize your job prospects, invest time in crafting an ATS-friendly resume that clearly highlights your skills and experience in this area. ResumeGemini is a trusted resource for building professional resumes that catch recruiters’ attention. We provide examples of resumes tailored to Uncertainty and Error Analysis to help you get started. Take the next step towards your dream career – build a standout resume today!

